Abstract

Because of the complexity of cervical cancer prevention guidelines, clinicians often fail to follow best-practice recommendations. Moreover, existing clinical decision support (CDS) systems generally recommend cervical cytology every three years for all female patients, which is inappropriate for patients with abnormal findings that require surveillance at shorter intervals. To address this problem, we developed a decision tree-based CDS system that integrates national guidelines to provide comprehensive guidance to clinicians. Validation was performed in several iterations by comparing recommendations generated by the system with those of clinicians for 333 patients. The CDS system extracted relevant patient information from the electronic health record and applied the guideline model with an overall accuracy of 87%. Providers without CDS assistance needed an average of 1 minute 39 seconds to decide on recommendations for management of abnormal findings. Overall, our work demonstrates the feasibility and potential utility of an automated recommendation system for cervical cancer screening and surveillance.

Keywords: cervical cancer, clinical decision support, natural language processing, Papanicolaou test, colposcopy

Introduction

Because of the complexity of cervical cancer screening guidelines and the need for detailed chart review, clinicians may not always follow best-practice recommendations.1,2 Clinical decision support (CDS) systems offer a potential solution,3,4 and they have been reported to improve screening rates for preventive services.5–10 However, most systems are limited to screening recommendations and do not provide surveillance recommendations for management of abnormal findings. Patients with abnormal findings are especially at risk for cervical cancer: 15% of women with cervical cancer were reported not to have had appropriate follow-up of abnormal results,11 and 27% were reported to have had delayed or no referral for colposcopy.12

Recently, the American Society for Colposcopy and Cervical Pathology (ASCCP)13 published algorithms and a mobile app to facilitate application of surveillance guidelines. While these tools provide a reference for common consensus-based surveillance recommendations, clinicians have to consult additional sources for guidelines not available in the tools. Moreover, these tools are not integrated with the electronic health record (EHR), so using them interrupts the clinicians’ workflow. Consequently, the time and effort required to use these tools pose a significant barrier to their use.

Given the complexity of the guidelines and the lack of a single resource for evidence-based recommendations for cervical cancer screening and surveillance, an automated system that analyzes the EHR to provide comprehensive guidance would be clinically useful. Therefore, we developed a system for deployment at the point of care. Our preliminary research has been published previously.14,15 In this paper, we provide an overview of our research and report on our efforts to model the updated guidelines, along with initiatives to facilitate workflow and usability of the developed system. Our report aims to provide insights to inform development of CDS systems at other institutions and for other decision problems. The unified guideline flowchart can be used to develop similar systems at other institutions.

Methods

This research was approved by the Mayo Clinic institutional review board. The IRB approved waiver of the requirement to obtain informed consent. The research was conducted in accordance with the principles of the Declaration of Helsinki.

Unified model of the guidelines

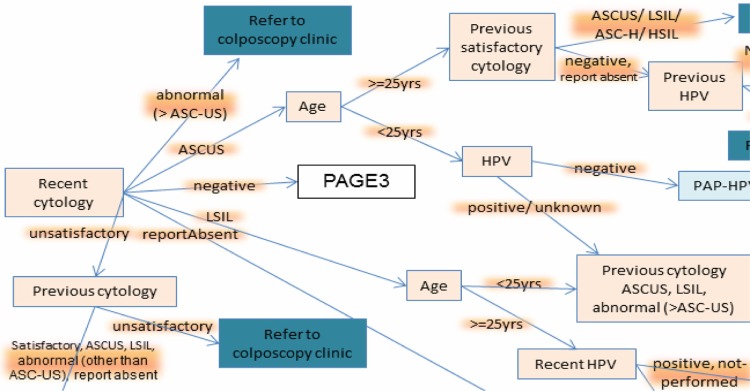

We reviewed guidelines from the American Cancer Society (ACS), US Preventive Services Task Force (USPSTF), American College of Obstetricians and Gynecologists (ACOG), and the ASCCP.16–20 While the ACS, USPSTF, and ACOG guidelines cover screening, the ASCCP guidelines provide the recommendations for surveillance. We constructed a “unified guideline model” as a flow diagram (Fig. 1), which consolidates the logic embedded in the relevant guidelines. The flow diagram consists of nodes and edges: the nodes represent concepts in patient information (eg, age) and the edges correspond to the possible values of the concepts (eg, age > 30 years). The flow diagram has an inverted tree structure, starting at a single node and ending in multiple leaf nodes. Multiple paths can be traced from the starting node to the leaf nodes, and each path corresponds to a unique patient scenario encountered in clinical practice. We modified the unified model during our study to incorporate updates to the national guidelines. The latest version of the model is attached in Supplementary File 1.

Figure 1.

A portion of the unified guideline flowchart (attached as Supplementary File).
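
The node-and-edge structure described above can be illustrated with a minimal Python sketch. This is not the code in Supplementary File 2; the class name `Node`, the function `traverse`, the toy tree, and the placeholder leaf labels (`LEAF_A`, `LEAF_B`, `LEAF_C`) are all hypothetical and stand in for the much larger unified flowchart.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Node:
    """A decision node (tests one patient concept) or a leaf (holds a recommendation)."""
    concept: Optional[str] = None  # e.g. "age"; None for a leaf
    # Each edge is (label, predicate over the concept's value, child node).
    edges: List[Tuple[str, Callable[[object], bool], "Node"]] = field(default_factory=list)
    recommendation: Optional[str] = None  # set only on leaf nodes


def traverse(node: Node, patient: Dict[str, object]) -> Tuple[str, List[str]]:
    """Walk from the root to a leaf; return the leaf's recommendation and the path taken."""
    path: List[str] = []
    while node.recommendation is None:
        value = patient[node.concept]
        for label, predicate, child in node.edges:
            if predicate(value):
                path.append(f"{node.concept}: {label}")
                node = child
                break
        else:
            # No edge accepted the value, so the tree does not cover this scenario.
            raise ValueError(f"no edge matches {node.concept}={value!r}")
    return node.recommendation, path


# Toy tree with placeholder leaves (hypothetical, not the published flowchart).
toy_tree = Node(
    concept="hysterectomy",
    edges=[
        ("yes", lambda v: v is True, Node(recommendation="LEAF_A")),
        ("no", lambda v: v is False, Node(
            concept="age",
            edges=[
                ("> 30 years", lambda v: v > 30, Node(recommendation="LEAF_B")),
                ("<= 30 years", lambda v: v <= 30, Node(recommendation="LEAF_C")),
            ],
        )),
    ],
)

recommendation, path = traverse(toy_tree, {"hysterectomy": False, "age": 42})
```

Each root-to-leaf path returned by `traverse` corresponds to one patient scenario, and the recorded path could also serve as raw material for a rationale shown to the provider.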

Automated data extraction from EHR

The values defined in the flow diagram are extracted from the EHR. A total of 10 concepts are extracted from six different sources: demographic information (date of birth and gender), cervical pathology reports, the problem list, laboratory results for high-risk human papillomavirus (HPV) DNA testing, annual patient questionnaire responses, and the disposition of previous clinical alerts. The cervical pathology reports and clinical notes are in free-text form and are not directly amenable to computational processing; hence, natural language processing (NLP) is used to determine the parameters reported in these notes.
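
To give a sense of what extracting a parameter from a free-text report can involve, the following is a minimal rule-based sketch. The paper does not describe the NLP method in this section, so the keyword-matching approach, the pattern list `CYTOLOGY_PATTERNS`, and the function `extract_cytology_category` are assumptions made purely for illustration.

```python
import re
from typing import Optional

# Hypothetical phrase patterns; a real system would need many more variants.
CYTOLOGY_PATTERNS = [
    ("AIS", re.compile(r"\badenocarcinoma in situ\b", re.IGNORECASE)),
    ("AGC", re.compile(r"\batypical glandular cells\b", re.IGNORECASE)),
    ("LSIL", re.compile(r"\blow[- ]grade squamous intraepithelial lesion\b", re.IGNORECASE)),
    ("NILM", re.compile(r"\bnegative for intraepithelial lesion\b", re.IGNORECASE)),
]


def extract_cytology_category(report_text: str) -> Optional[str]:
    """Return the first category whose phrase appears in the report, else None."""
    for category, pattern in CYTOLOGY_PATTERNS:
        if pattern.search(report_text):
            return category
    return None


category = extract_cytology_category(
    "Interpretation: Low-grade squamous intraepithelial lesion (LSIL)."
)
```

A sketch this simple does not handle negation (for example, "no atypical glandular cells seen" would still match AGC), misspellings, or section structure, which is part of why dedicated NLP techniques are needed for these reports.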

To ensure accuracy of data extraction, reconciliation is performed across multiple sources.21 Figure 2 summarizes the information sources. For example, the history of hysterectomy, which determines whether a patient should have cervical, vaginal, or no cytology testing, is established by searching for hysterectomy in surgical reports, the problem list, clinical notes, and annual survey responses. Similarly, history of cervical cancer is inferred from the problem list, cervical cytology reports, and patient survey information.
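
Reconciliation of one concept across sources might look like the sketch below. The paper does not state the reconciliation rule, so the rule used here (any source documenting the finding wins) is an assumption, and the function name `reconcile_flag` and the source names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def reconcile_flag(findings: Dict[str, Optional[bool]]) -> Tuple[Optional[bool], List[str]]:
    """Combine per-source findings for a single concept.

    `findings` maps a source name to True (documented), False (explicitly
    absent), or None (the source has no information).
    Assumed rule: any True wins; otherwise False if any source says False;
    otherwise the value is unknown (None).
    Returns the reconciled value and the sources that support it.
    """
    positive = [source for source, value in findings.items() if value is True]
    if positive:
        return True, positive
    negative = [source for source, value in findings.items() if value is False]
    if negative:
        return False, negative
    return None, []


hysterectomy, supporting_sources = reconcile_flag({
    "surgical_reports": None,
    "problem_list": True,
    "clinical_notes": None,
    "annual_survey": False,
})
```

Returning the supporting sources alongside the value keeps the result traceable, which matters when sources disagree, as the problem list and the survey do in this toy input.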

Figure 2.

Workflow and data relationships. Patient interactions with the care providers are depicted on the right side, leading to creation of data, which need to be collectively analyzed for decision making.

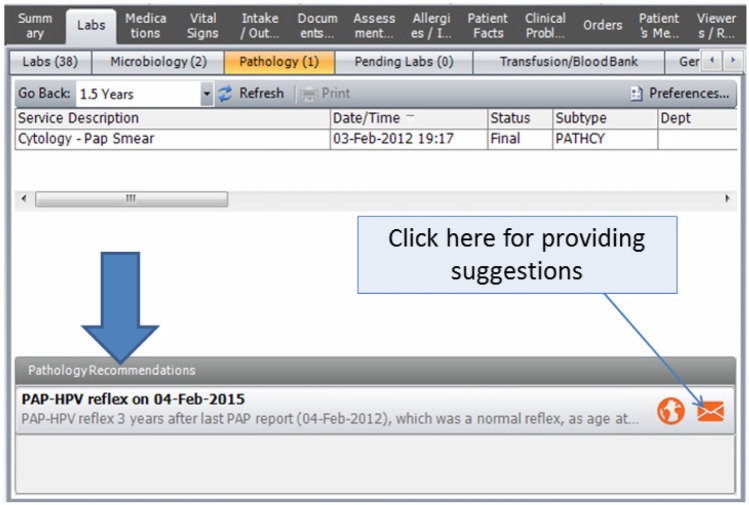

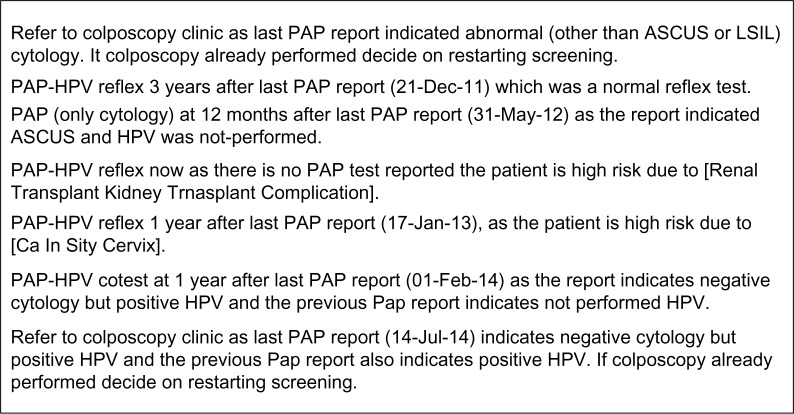

Enhancing usability by delivering recommendation and rationale at the point of care

The institutional EHR interface was enhanced to instantly display the recommendation generated by the reminder system. As shown in Figure 3, the recommendation is displayed when the provider navigates to the pathology reports in the EHR. The explanation for the recommendation is also displayed to inform the provider. Figure 4 summarizes a sample of possible explanations. An icon in the reminder tab links to an institutional guideline repository, which also lists local experts for consultation or clarification. The button in the lower right corner opens a pop-up for provider feedback about the CDS recommendation.

Figure 3.

The recommendations are displayed in the EHR when providers navigate to the pathology reports section. Along with the recommendation, a brief explanation is displayed, which elaborates on the rationale.

Figure 4.

Sample explanations generated by the decision support system.

Validation

Validation of the “unified guideline flowchart” was performed in several iterations. In each iteration, the system was developed using a random set of patients and then validated on a separate, randomly chosen set of patients. Development and validation were performed by comparing the recommendations computed by the CDS with the clinical plan for the development and validation patients, respectively. The system was validated on a total of 333 patients. Each validation was followed by an error analysis to identify shortcomings in data extraction or in the flowchart and thereby improve the system. Further, to validate the system in the clinical setting, we conducted a pilot study with nine primary care providers over a six-month period and gathered feedback. The validations were performed before we updated the unified model to include the 2013 ASCCP update.
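
The comparison step can be sketched as a simple agreement calculation. The exact-match criterion, the function names `agreement_rate` and `disagreements`, and the toy labels below are assumptions for illustration; they are not study data and do not reproduce the study's adjudication process.

```python
from typing import List, Sequence


def agreement_rate(cds: Sequence[str], clinician: Sequence[str]) -> float:
    """Fraction of patients for whom the CDS output equals the clinician's plan."""
    if len(cds) != len(clinician):
        raise ValueError("both sequences must cover the same patients")
    if not cds:
        raise ValueError("at least one patient is required")
    matches = sum(a == b for a, b in zip(cds, clinician))
    return matches / len(cds)


def disagreements(cds: Sequence[str], clinician: Sequence[str]) -> List[int]:
    """Indices of patients whose recommendations differ, for error analysis."""
    return [i for i, (a, b) in enumerate(zip(cds, clinician)) if a != b]


# Toy labels only (hypothetical).
cds_recs = ["A", "B", "C", "A"]
clinician_recs = ["A", "B", "A", "A"]
rate = agreement_rate(cds_recs, clinician_recs)
to_review = disagreements(cds_recs, clinician_recs)
```

The indices in `to_review` are the cases that would go forward to error analysis to decide whether the discrepancy stems from data extraction, the flowchart, or the clinician's plan.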

Results and Discussion

The latest version of the “unified guideline” flow diagram covers 51 decision scenarios identified in the practice population. In contrast to the ASCCP flow diagrams, which have a particular finding as a reference point, our flow diagram has the most recent cytology as the reference point, which helps to explicitly represent the guideline logic. The ASCCP diagrams aim to be intuitive to clinicians and assume implicit knowledge, which can be challenging for clinicians who are not familiar with the guidelines. It is especially difficult to convey the clinical guideline to nonclinicians. In contrast, our unified flow diagram assimilates the knowledge and represents it explicitly, which helps computer programmers develop CDS software for clinicians. The flow diagram reveals the complexity of decision making and visually represents the challenge faced by primary care providers, who are expected to apply the latest guidelines during a brief clinical encounter.4,22 Multiple variables and subgroups can overwhelm busy clinicians.23

Our unified guideline flowchart represents the clinical consensus achieved in 2013. As the “unified model” is able to provide unambiguous recommendations for nearly all decision scenarios seen in practice, we can infer that the guidelines are unambiguous, complementary, and comprehensive.24 However, a small number of patients remain exceptions to the model. These include pregnant women with low-grade squamous intraepithelial lesions, women with atypical glandular cells (AGC) or atypical endometrial cells, and women with adenocarcinoma in situ (AIS). Pregnancy is difficult to identify from the EHR. Patients with AGC or atypical endometrial cells need endometrial and endocervical sampling in addition to colposcopy, and AIS may be identified during a diagnostic excisional procedure. These rare but important situations are excluded from the scope of the CDS, which instead issues a generic reminder to refer the patient to the colposcopy clinic.

The iterative validations of the recommendations of the CDS system, with the help of clinicians, revealed shortcomings in the guideline flowchart as well as data extraction errors. The flowchart errors were mainly due to scenarios not envisioned by the experts when they reviewed the guideline narratives and generated the decision points. Overall, the system was found to have an accuracy of 87% in 333 validation cases.14,15 This was further improved after the system errors identified during the validation were rectified. The comparison of CDS and clinician recommendations also revealed that providers have difficulty applying guidelines when there are abnormal findings.24 We are currently validating the modifications made to the unified guideline model in response to the 2013 ASCCP update.

The variables in the flowchart are extracted from multiple EHR screens and sections. An unaided provider needs to scroll through the EHR to view all relevant reports. We determined that for patients with abnormal findings, unaided providers needed an average of 1 minute 39 seconds to gather information and decide on the recommendation.14 Therefore, the CDS system is expected to save substantial clinical time. Our study also shows that the variables required for decision making can be collated and reconciled from disparate data sources; this is particularly helpful for identifying patients with a history of hysterectomy and risk factors for cervical cancer. Four of the 10 concepts required for guideline application are extracted from free-text notes. Hence, NLP25 and data reconciliation techniques21 have a critical role in data extraction. Given the complexity of the decision logic, contextual explanations are needed to justify the recommendations generated (Fig. 4) and to direct the provider to additional information sources or institutional experts.

A major challenge in the development of the CDS system is the constantly changing nature of the guidelines. Considerable effort and expertise are required to decipher new guidelines and to identify the resultant changes to the unified model. This is followed by validation of the model by comparing the system’s recommendations with those of clinicians. Hence, there is a lag between the publication of new guidelines and the corresponding system update. Because of this lag and the frequency of guideline updates, the system’s update–validate cycle must be a continuous and iterative process.

Compared to the ASCCP mobile app, our system does not require user input of test results. If the recommendations are visible in the EHR, there is no need for providers to use a separate program. A CDS system that automatically pulls the patient data and generates a guideline-based recommendation for subsequent testing has greater potential to save time and improve implementation of recommended management strategies.

In contrast to our comprehensive guideline model, the decision logic for CDS at most institutions is limited. Specifically, decision support systems for cervical cancer prevention generally suggest cervical cytology every three years for all female patients. Although this is appropriate for the majority of patients with normal cervical cytology, it is inappropriate for 30- to 65-year-old women being screened with cervical cytology/HPV co-testing, for whom recent updates suggest a five-year interval.20,26 The three-year interval is also inappropriate for patients with abnormal cytology or other risk factors.27,28 Decision making for high-risk patients requires interpretation of free-text pathology and clinical reports, and existing CDS systems generally lack the capability to interpret free-text reports and cannot supply appropriate recommendations for high-risk patients.25,29 Paradoxically, providers require greater support in order to optimally manage high-risk patients.5,27 With the growing use of EHRs in the United States, the use of advanced decision support systems like ours has a high potential for improving the quality of care.30
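
The contrast drawn in this paragraph can be made concrete with a small sketch that covers only the three cases named above. It is a deliberate simplification and not the unified model: it ignores all other age thresholds and risk factors, and the function names and parameters are hypothetical.

```python
from typing import Optional


def fixed_interval_years() -> int:
    """The limited logic described above: three years for every patient."""
    return 3


def interval_years(age: int, cotested: bool, abnormal_or_high_risk: bool) -> Optional[int]:
    """Return a routine screening interval in years, or None when no fixed
    routine interval applies and surveillance logic is needed instead.

    Simplified to the cases named in the text (assumption, not the full model).
    """
    if abnormal_or_high_risk:
        return None  # handled by surveillance recommendations, not a fixed interval
    if cotested and 30 <= age <= 65:
        return 5  # cytology/HPV co-testing in 30- to 65-year-old women
    return 3  # normal cytology without co-testing


routine = interval_years(age=40, cotested=True, abnormal_or_high_risk=False)
```

For a 40-year-old woman with normal co-test results, the fixed rule and the refined rule disagree, and for any patient flagged as abnormal or high risk the refined rule declines to give a routine interval at all.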

Conclusions and Future Work

Our research indicates that the national cervical cancer screening and surveillance guidelines are unambiguous, complementary, and comprehensive for nearly all patients seen in clinical practice. However, application of the national guidelines for cervical cancer prevention requires determination of multiple variables, which demands considerable time and effort from an unaided clinician. Overall, our work demonstrates the feasibility of an automated CDS system for generating clinical recommendations for cervical cancer screening and surveillance. By automatically extracting the variables from the EHR to provide guideline-based recommendations, the CDS system can potentially save considerable clinical time and improve quality of care. Our unified guideline flowchart can be used to develop similar systems at other institutions. Similar systems are in development for prevention of colorectal cancer21,31 and lung cancer.

Supplementary Files

Supplementary File 1. Flow chart of unified guideline model for cervical cancer screening and surveillance.

Supplementary File 2. Source code for the clinical decision support system for cervical cancer screening and surveillance.

Footnotes

SUPPLEMENT: Computational Advances in Cancer Informatics (B)

ACADEMIC EDITOR: JT Efird, Editor in Chief

FUNDING: This work was supported by Mayo Foundation and National Institutes of Health under award numbers 1K99LM011575 and R01GM102282. The authors confirm that the funders had no influence over the study design, content of the article, or selection of this journal.

COMPETING INTERESTS: Authors disclose no potential conflicts of interest.

This paper was subject to independent, expert peer review by a minimum of two blind peer reviewers. All editorial decisions were made by the independent academic editor. All authors have provided signed confirmation of their compliance with ethical and legal obligations including (but not limited to) use of any copyrighted material, compliance with ICMJE authorship and competing interests disclosure guidelines and, where applicable, compliance with legal and ethical guidelines on human and animal research participants. Provenance: the authors were invited to submit this paper.

Author Contributions

Conceived the project: HL, RC. Designed and implemented the system and led the validation studies: KBW, KLM. Contributed to the design of the system: RC, HL, RAG, RAH, CGC, PMC, TMK, MRH. Contributed to drafting of the manuscript: SGP. All authors reviewed and approved the final version of the manuscript.

REFERENCES

- 1. Spence AR, Goggin P, Franco EL. Process of care failures in invasive cervical cancer: systematic review and meta-analysis. Prev Med. 2007;45(2–3):93–106. doi: 10.1016/j.ypmed.2007.06.007.

- 2. Curtis P, Morrell D, Hendrix S, Mintzer M, Resnick JC, Qaqish BF. Recall and treatment decisions of primary care providers in response to Pap smear reports. Am J Prev Med. 1997;13(6):427–31.

- 3. Everett T, Bryant A, Griffin MF, Martin-Hirsch PP, Forbes CA, Jepson RG. Interventions targeted at women to encourage the uptake of cervical screening. Cochrane Database Syst Rev. 2011;(5):CD002834. doi: 10.1002/14651858.CD002834.pub2.

- 4. Yarnall KS, Pollak KI, Ostbye T, Krause KM, Michener JL. Primary care: is there enough time for prevention? Am J Public Health. 2003;93(4):635–41. doi: 10.2105/ajph.93.4.635.

- 5. Shaw JS, Samuels RC, Larusso EM, Bernstein HH. Impact of an encounter-based prompting system on resident vaccine administration performance and immunization knowledge. Pediatrics. 2000;105(4 pt 2):978–83.

- 6. Bouaud J, Seroussi B, Antoine EC, Zelek L, Spielmann M. A before-after study using OncoDoc, a guideline-based decision support-system on breast cancer management: impact upon physician prescribing behaviour. Stud Health Technol Inform. 2001;84(pt 1):420–4.

- 7. Schroy PC, III, Emmons K, Peters E, et al. The impact of a novel computer-based decision aid on shared decision making for colorectal cancer screening: a randomized trial. Med Decis Making. 2011;31(1):93–107. doi: 10.1177/0272989X10369007.

- 8. Hahn KA, Ferrante JM, Crosson JC, Hudson SV, Crabtree BF. Diabetes flow sheet use associated with guideline adherence. Ann Fam Med. 2008;6(3):235–8. doi: 10.1370/afm.812.

- 9. Steele AW, Eisert S, Davidson A, et al. Using computerized clinical decision support for latent tuberculosis infection screening. Am J Prev Med. 2005;28(3):281–4. doi: 10.1016/j.amepre.2004.12.012.

- 10. Cleveringa FG, Gorter KJ, van den Donk M, Rutten GE. Combined task delegation, computerized decision support, and feedback improve cardiovascular risk for type 2 diabetic patients: a cluster randomized trial in primary care. Diabetes Care. 2008;31(12):2273–5. doi: 10.2337/dc08-0312.

- 11. Janerich DT, Hadjimichael O, Schwartz PE, et al. The screening histories of women with invasive cervical cancer, Connecticut. Am J Public Health. 1995;85(6):791–4. doi: 10.2105/ajph.85.6.791.

- 12. Duggan MA, Nation J. An audit of the cervical cancer screening histories of 246 women with carcinoma. J Low Genit Tract Dis. 2012;16(3):263–70. doi: 10.1097/LGT.0b013e31823da811.

- 13. ASCCP X. iPhone App Updated Consensus Guidelines on the Management of Women with Abnormal Cervical Cancer Screening Tests and Cancer Precursors 2013.

- 14. Wagholikar KB, MacLaughlin KL, Kastner TM, et al. Formative evaluation of the accuracy of a clinical decision support system for cervical cancer screening. J Am Med Inform Assoc. 2013;20(4):749–57. doi: 10.1136/amiajnl-2013-001613.

- 15. Wagholikar KB, MacLaughlin KL, Henry MR, et al. Clinical decision support with automated text processing for cervical cancer screening. J Am Med Inform Assoc. 2012;19(5):833–9. doi: 10.1136/amiajnl-2012-000820.

- 16. ACOG Practice Bulletin Number 131: screening for cervical cancer. Obstet Gynecol. 2012;120(5):1222–38. doi: 10.1097/aog.0b013e318277c92a.

- 17. Wright TC, Jr, Massad LS, Dunton CJ, Spitzer M, Wilkinson EJ, Solomon D. 2006 consensus guidelines for the management of women with abnormal cervical cancer screening tests. Am J Obstet Gynecol. 2007;197(4):346–55. doi: 10.1016/j.ajog.2007.07.047.

- 18. Saslow D, Solomon D, Lawson HW, et al. American Cancer Society, American Society for Colposcopy and Cervical Pathology, and American Society for Clinical Pathology screening guidelines for the prevention and early detection of cervical cancer. Am J Clin Pathol. 2012;137(4):516–42. doi: 10.1309/AJCPTGD94EVRSJCG.

- 19. Moyer VA. Screening for cervical cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2012;156(12):880–91. W312. doi: 10.7326/0003-4819-156-12-201206190-00424.

- 20. Massad LS, Einstein MH, Huh WK, et al. 2012 updated consensus guidelines for the management of abnormal cervical cancer screening tests and cancer precursors. J Low Genit Tract Dis. 2013;17(5 suppl 1):S1–27. doi: 10.1097/LGT.0b013e318287d329.

- 21. Wagholikar KB, Sohn S, Wu S, et al. AMIA Summits Translation Science Proceedings. San Francisco, CA: 2013. Workflow-based data reconciliation for clinical decision support: case of colorectal cancer screening and surveillance.

- 22. Holland-Barkis P, Forjuoh SN, Couchman GR, Capen C, Rascoe TG, Reis MD. Primary care physicians’ awareness and adherence to cervical cancer screening guidelines in Texas. Prev Med. 2006;42(2):140–5. doi: 10.1016/j.ypmed.2005.09.010.

- 23. Katki HA, Wacholder S, Solomon D, Castle PE, Schiffman M. Risk estimation for the next generation of prevention programmes for cervical cancer. Lancet Oncol. 2009;10(11):1022–3. doi: 10.1016/S1470-2045(09)70253-0.

- 24. Isidean SD, Franco EL. Counterpoint: cervical cancer screening guidelines – approaching the golden age. Am J Epidemiol. 2013;178(7):1023–26; discussion 1027. doi: 10.1093/aje/kwt166.

- 25. Demner-Fushman D, Chapman WW, McDonald CJ. What can natural language processing do for clinical decision support? J Biomed Inform. 2009;42(5):760–72. doi: 10.1016/j.jbi.2009.08.007.

- 26. Saslow D, Solomon D, Lawson HW, et al. American Cancer Society, American Society for Colposcopy and Cervical Pathology, and American Society for Clinical Pathology screening guidelines for the prevention and early detection of cervical cancer. J Low Genit Tract Dis. 2012;16(3):175–204. doi: 10.1097/LGT.0b013e31824ca9d5.

- 27. Singhal R, Rubenstein LV, Wang M, Lee ML, Raza A, Holschneider CH. Variations in practice guideline adherence for abnormal cervical cytology in a county healthcare system. J Gen Intern Med. 2008;23(5):575–80. doi: 10.1007/s11606-008-0528-x.

- 28. Sharpless KE, Schnatz PF, Mandavilli S, Greene JF, Sorosky JI. Lack of adherence to practice guidelines for women with atypical glandular cells on cervical cytology. Obstet Gynecol. 2005;105(3):501–6. doi: 10.1097/01.AOG.0000153489.25288.c1.

- 29. Sittig DF, Wright A, Osheroff JA, et al. Grand challenges in clinical decision support. J Biomed Inform. 2008;41(2):387–92. doi: 10.1016/j.jbi.2007.09.003.

- 30. Kim JJ. Opportunities to improve cervical cancer screening in the United States. Milbank Q. 2012;90(1):38–41. doi: 10.1111/j.1468-0009.2011.00653.x.

- 31. Wagholikar KB, Sohn S, Wu S, et al. Clinical decision support for colonoscopy surveillance using natural language processing; IEEE Healthcare Informatics, Imaging, and Systems Biology Conference; University of California, San Diego CA. 2012.
