Abstract

Perception studies have shown similarities between humans and other animals in a wide array of language-related processes. However, the components of language that make it uniquely human have not been fully identified. Here we show that nonhuman animals extract rules over speech sequences that are difficult for humans. Specifically, animals easily learn rules over both consonants and vowels, whereas humans do so only over vowels. In Experiment 1, rats learned a rule implemented over the vowels of CVCVCV nonsense words. In Experiment 2, rats learned the rule when it was implemented over the consonants. In both experiments, rats generalized this knowledge to novel words they had not heard before. Using the same stimuli, human adults learned the rule over the vowels but not over the consonants. These results suggest that differences between humans and animals in speech processing might lie in the constraints they face while extracting information from the signal.

Keywords: Speech perception, Rule-learning, Consonants and vowels, Animal Cognition

1. Introduction

The linguistic capacity to express and comprehend an unlimited number of ideas by combining a limited number of elements has been observed only in humans. Nevertheless, research has not fully identified the components of language that make it uniquely human. Extensive research in comparative cognition suggests that humans and other species share basic perceptual abilities used for language processing (Yip, 2006). However, humans display remarkable linguistic abilities that other animals do not possess. One possibility is that these differences emerge because humans outperform other species in processing the complex regularities that are the hallmark of the linguistic signal (e.g., Fitch & Hauser, 2004). But recent research exploring this hypothesis has not found conclusive evidence of computational differences between humans and other animals (for experiments with humans see Hochmann, Azadpour, & Mehler, 2008; Perruchet & Rey, 2005; for experiments with animals see Gentner, Fenn, Margoliash, & Nusbaum, 2006; van Heijningen, de Visser, Zuidema, & ten Cate, 2009). Another possibility is that some differences between humans and other species do not lie in the extent to which nonhuman animals can process progressively complex structures. Rather, they might reflect the different constraints that human and nonhuman animals face while processing speech information.

For example, humans tend to extract different types of information from consonants and vowels in both the written (Duñabeitia & Carreiras, 2011; New, Araujo, & Nazzi, 2008) and the spoken modality (Cutler, Sebastián-Gallés, Soler-Vilageliu, & van Ooijen, 2000; Owren & Cardillo, 2006). Moreover, these phonetic categories are the preferred targets of separate computations involved in language processing. Studies have found that distributional dependencies are predominantly computed over consonants (Bonatti, Peña, Nespor, & Mehler, 2005), whereas simple rules are preferentially extracted from vowels (Toro, Nespor, Mehler, & Bonatti, 2008; Toro, Shukla, Nespor, & Endress, 2008). But what is the source of these functional differences? Vowels and consonants differ in a number of acoustic parameters. Notably, vowels are longer, louder, and more constant over their duration than consonants (Ladefoged, 2001). Thus, the distinct physical correlates of consonants and vowels in the speech signal might drive auditory systems to process them differently. If human and nonhuman animals share processing mechanisms triggered by such acoustic differences between consonants and vowels, we might expect to observe similar patterns of results across species. Moreover, if differences between humans and other animals are due to stronger processing capabilities in humans, we might expect humans to outperform animals, since humans should be better suited than other species to processing speech stimuli. In contrast, if the observed differences in how humans process speech result from language-specific constraints, we should not observe such functional differences in other species.

There is a long-standing research tradition demonstrating that mammals that do not communicate using elaborate vocalizations, such as rats, extract complex distributional regularities from the environment (Rescorla & Wagner, 1972) and share with humans the ability to generalize rules over acoustic stimuli (Murphy, Mondragón, & Murphy, 2008). Most mammals (including humans and rats) share many features of the auditory system that allow them to process sounds in a similar manner (e.g., Malmierca, 2003). These commonalities extend beyond the perception of simple sounds to the processing of complex speech stimuli (Yip, 2006). For example, rats have been shown to discriminate across vowel categories (Eriksson & Villa, 2006), to discriminate among consonants along the affricate-fricative continuum (Reed et al., 2003), and to organize consonant sounds into categories based on their frequency distribution (Pons, 2006). That is, there are consistent behavioral indicators that rodents can process consonants and vowels. More directly related to the present work, different neural responses to consonants and vowels have been observed in the rat auditory cortex (Perez et al., 2012), suggesting that rats are indeed able to process these segments of the speech signal separately. Thus, in the present study, we test the above-mentioned hypotheses by exploring the capacity of a nonhuman mammal to extract and generalize rules implemented over vowels (Experiment 1) and consonants (Experiment 2). We then compare rats’ performance with that of human adults using the same stimuli (Experiment 3).

2. Experiment 1. Rules over vowels

2.1. Methods

2.1.1. Subjects

Subjects were nine 6-month-old Long-Evans rats (six males). They were food-deprived until they reached 80% of their free-feeding weight and had access to water ad libitum. Food was administered after each training session.

2.1.2. Stimuli

Forty CVCVCV nonsense words (hereafter, words) were created for the training phase by combining three consonants (k, b, s) and three vowels (a, i, oe). In half of the words, the vowels followed an AAB structure (the first two vowels were the same, while the third vowel was different). In the other half, the vowels followed an ABC structure (all three vowels were different). Consonants, however, were combined randomly, although repetitions within a word were avoided (forming words such as bakasi, kisiboe or soeboeka for AAB and bakisoe, kisaboe or soebika for ABC). For the test phase, we combined three new consonants (f, l, p) and three new vowels (e, o, u) to form eight words. Half of these words implemented an AAB structure over the vowels (e.g., felepu, lepefo), while the other half implemented an ABC structure (e.g., felopu, lepufo). Consonants were again combined randomly, avoiding repetitions within words. Words were synthesized using the MBROLA software with a female diphone database (fr2). Phoneme duration was set to 150 ms and pitch to 250 Hz, so each six-phoneme word lasted 900 ms.
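The stimulus design can be made concrete with a short Python sketch that enumerates every candidate word for a given rule. It assumes that "oe" is treated as a single vowel symbol and that the 20 AAB and 20 ABC training words were drawn from the full candidate sets; the text does not say how that subset was chosen, so the seeded random sample and all function names are hypothetical. With three non-repeating consonants there are 3! = 6 consonant orders, and there are 6 AAB and 6 ABC vowel triples, so each rule admits 36 candidate words.

```python
from itertools import permutations
import random

# Training inventories of Experiment 1; "oe" is one vowel symbol, as written in the text.
CONSONANTS = ["k", "b", "s"]
VOWELS = ["a", "i", "oe"]
PHONEME_MS = 150  # duration of every phoneme, as stated in the text


def vowel_patterns(vowels, structure):
    """All vowel triples matching AAB (x, x, y with x != y) or ABC (all different)."""
    if structure == "AAB":
        return [(a, a, b) for a in vowels for b in vowels if a != b]
    if structure == "ABC":
        return list(permutations(vowels, 3))
    raise ValueError(structure)


def build_words(consonants, vowels, structure):
    """Interleave every non-repeating consonant order with every vowel triple."""
    words = []
    for cs in permutations(consonants, 3):  # consonants never repeat within a word
        for vs in vowel_patterns(vowels, structure):
            words.append("".join(c + v for c, v in zip(cs, vs)))
    return words


aab = build_words(CONSONANTS, VOWELS, "AAB")
abc = build_words(CONSONANTS, VOWELS, "ABC")
print(len(aab), len(abc))  # 36 36

# Hypothetical selection step: a seeded sample stands in for the unreported choice.
rng = random.Random(0)
training = rng.sample(aab, 20) + rng.sample(abc, 20)
print(len(training))    # 40
print(6 * PHONEME_MS)   # 900 ms per CVCVCV word
```

Calling `build_words(["f", "l", "p"], ["e", "o", "u"], "AAB")` in the same way yields test-phase candidates such as felepu and lepefo.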

2.1.3. Apparatus

Rats were placed in Letica L830-C Skinner boxes (Panlab S. L., Barcelona, Spain) while a PC recorded lever-press responses and delivered reinforcement. A Pioneer A-445 stereo amplifier and two E. V. (S-40) loudspeakers (frequency response from 85 Hz to 20 kHz), located beside the boxes, were used to present the stimuli at 68 dB.

2.1.4. Procedure

Rats were first trained to press a lever until they reached a stable response rate on a variable-ratio 10 schedule (VR-10; that is, food was delivered after a variable number of 5 to 15 lever presses). Discrimination training then consisted of 36 sessions, one session per day. During each training session, rats were placed individually in the Skinner box, and twenty words (10 with vowels following an AAB structure and 10 with an ABC structure) were presented with a 1-min inter-stimulus interval (ISI). Presentation of words was balanced within and across sessions, so that all words were presented the same number of times over the course of training. After the presentation of each AAB word, food was delivered on a VR-7 schedule (after a variable number of 4 to 10 lever presses). After the presentation of each ABC word, no food was delivered, regardless of lever pressing. Lever-pressing responses were recorded during each 1-min ISI.
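Two elements of the training procedure, a variable-ratio schedule and a session plan in which every word is heard equally often, can be sketched in Python as follows. The uniform draw of the response requirement, the half-list rotation used for balancing, and all names are assumptions for illustration; the text states only the ranges (5 to 15 presses for VR-10, 4 to 10 for VR-7) and that presentation was balanced.

```python
import random


class VariableRatio:
    """Hypothetical VR schedule: food after a random number of presses in [low, high]."""

    def __init__(self, low, high, rng):
        self.low, self.high, self.rng = low, high, rng
        self._new_requirement()

    def _new_requirement(self):
        # randint is inclusive, so VR-10 uses (5, 15) and VR-7 uses (4, 10)
        self.required = self.rng.randint(self.low, self.high)
        self.count = 0

    def press(self):
        """Register one lever press; return True when food is delivered."""
        self.count += 1
        if self.count >= self.required:
            self._new_requirement()
            return True
        return False


def session_plan(aab_words, abc_words, n_sessions=36, per_type=10, seed=0):
    """Alternate the two halves of each 20-word list across sessions and shuffle
    within a session, so every word is presented equally often over training."""
    rng = random.Random(seed)
    plan = []
    for s in range(n_sessions):
        start = (s % 2) * per_type
        words = aab_words[start:start + per_type] + abc_words[start:start + per_type]
        rng.shuffle(words)
        plan.append(words)
    return plan


rng = random.Random(1)
vr7 = VariableRatio(4, 10, rng)
presses = 7000
rewards = sum(vr7.press() for _ in range(presses))
print(presses / rewards)  # close to 7, the mean of 4..10

aab = [f"aab{i}" for i in range(20)]  # placeholder labels, not real stimuli
abc = [f"abc{i}" for i in range(20)]
plan = session_plan(aab, abc)
counts = {w: sum(w in session for session in plan) for w in aab + abc}
print(set(counts.values()))  # {18}: each word heard 18 times over 36 sessions
```

Drawing uniformly from 5 to 15 (or 4 to 10) makes the mean requirement equal to the schedule's nominal value, which is the defining property of a VR-10 (or VR-7) schedule.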

After the 36 training sessions, a test session was run. As is often found in training experiments (e.g., Gentner et al., 2006), the rate of learning can vary among rats. Therefore, to be included in the test phase, rats had to reach a criterion of 80% correct responses (that is, at least 80% of their responses had to be made to AAB stimuli in the last sessions of training). The test session was similar to the training sessions, but used eight new test words (four with an AAB structure and four with an ABC structure implemented over the vowels) that rats had not heard before. Each test item was followed by a 1-min ISI during which lever-pressing responses were recorded. Importantly, food was delivered during this interval after the presentation of both AAB and ABC words. Thus, any differences observed in lever-pressing responses could not be explained by artifacts of the reinforcement schedule.
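The criterion can be expressed as a discrimination score: the percentage of all lever presses that fall in the intervals following AAB words, so that 50% means equal responding to AAB and ABC words. The Python sketch below assumes this definition and, because the text does not specify how many final sessions enter the criterion, exposes that number as a hypothetical `last_n` parameter; the press counts in the usage lines are invented for illustration.

```python
def discrimination_score(aab_presses, abc_presses):
    """Percentage of presses made after AAB words; 50.0 means no discrimination."""
    total = aab_presses + abc_presses
    if total == 0:
        return None  # no responding at all: the score is undefined
    return 100.0 * aab_presses / total


def meets_criterion(sessions, threshold=80.0, last_n=1):
    """sessions: list of (aab_presses, abc_presses), one tuple per training session.
    A rat qualifies for the test if each of its last_n sessions scores >= threshold."""
    recent = sessions[-last_n:]
    scores = [discrimination_score(a, b) for a, b in recent]
    return all(s is not None and s >= threshold for s in scores)


# Invented counts for two rats over their final three sessions.
rat_a = [(120, 60), (150, 40), (170, 30)]
rat_b = [(100, 90), (110, 80), (130, 70)]
print(meets_criterion(rat_a))  # True: 170 / 200 = 85% in the last session
print(meets_criterion(rat_b))  # False: 130 / 200 = 65%
```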

2.2. Results and Discussion

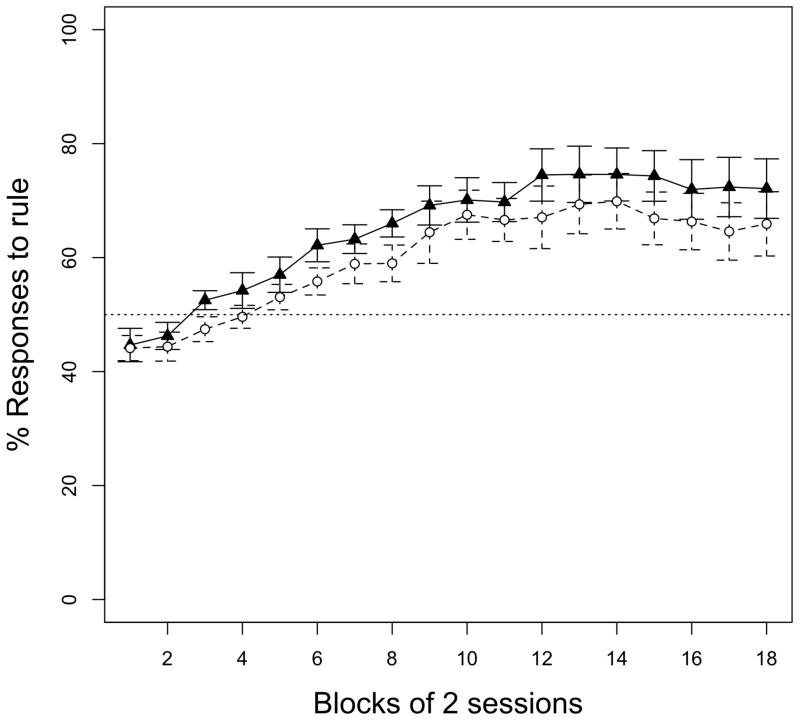

During training, rats increasingly responded to words implementing the AAB rule over the vowels (Fig. 1). A repeated-measures ANOVA over the percentage of lever-pressing responses to AAB words, with session (1 to 36) as the within-subjects factor, yielded a significant effect of session (F(35, 140) = 11.215, p < 0.001). Five of the nine rats reached the criterion of 80% of responses to AAB stimuli in the last training session. During the test, these rats responded to new AAB words significantly more than expected by chance (M = 56.78%, SD = 5.05; t(4) = 2.999, p < 0.05). The drop in performance between the last training session and the generalization test is not surprising, as two factors distinguish them. First, the test phase used completely new items, composed of syllables not presented during training. Second, the reinforcement schedule differed between training and test trials to avoid extinction of responses, which makes it all the more remarkable that the rats’ performance remained above chance during the test phase. These results show that rats, like humans, extract rule-like structures implemented over vowels and generalize them to new items.
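The generalization test compares the group mean against the 50% expected by chance with a one-sample t-test. The standard-library Python sketch below recomputes the statistic from the summary values reported above (n = 5 rats that reached criterion). It is an arithmetic check under that assumption, not the authors' analysis script; the small gap from the published t(4) = 2.999 is consistent with rounding of the reported mean and SD.

```python
import math


def one_sample_t(mean, sd, n, chance=50.0):
    """t statistic and degrees of freedom for H0: population mean == chance."""
    standard_error = sd / math.sqrt(n)
    return (mean - chance) / standard_error, n - 1


t, df = one_sample_t(mean=56.78, sd=5.05, n=5)
print(f"t({df}) = {t:.3f}")  # t(4) = 3.002
```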

Figure 1.

Mean percentage (and standard error bars) of rats’ responses to the AAB words during 36 training sessions, presented in blocks of two sessions. White circles correspond to Experiment 1 (rule over vowels). Black triangles correspond to Experiment 2 (rule over consonants). A performance of 50% indicates rats responded equally to AAB and ABC words. Rats in both groups learned to discriminate AAB from ABC words independently of whether the rule was implemented over the vowels or over the consonants.

3. Experiment 2. Rules over consonants

3.1. Methods

3.1.1. Subjects

Subjects were nine new 6-month-old Long-Evans rats (six males). They were food-deprived until they reached 80% of their free-feeding weight and had access to water ad libitum. Food was administered after each training session.

3.1.2. Stimuli

Forty new CVCVCV nonsense words were created for the training phase by combining the same three consonants (k, b, s) and three vowels (a, i, oe) as in Experiment 1. However, in Experiment 2 the structures were implemented over the consonants, while vowels were combined randomly, although repetitions within a word were avoided (forming words such as koekiba, sasoeki or bibasoe for AAB, and sikaboe, bisoeka or kabisoe for ABC). As in Experiment 1, we combined three new consonants (f, l, p) and three new vowels (e, o, u) to form eight new words for the test phase. In half of these words, consonants followed an AAB structure (e.g., fefulo, pupofe), whereas in the other half they followed an ABC structure (e.g., fepulo, pulofe). Vowels were again combined randomly, avoiding repetitions within words. Words were synthesized as in Experiment 1.
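Because the rule now lives on the consonant tier, it helps to separate each CVCVCV word into its consonant and vowel tiers before classifying it. The Python sketch below does this for example words given above, assuming one-letter consonants and treating "oe" as a single vowel (matched before "o"); the function names are hypothetical.

```python
VOWELS = ("oe", "a", "e", "i", "o", "u")  # "oe" first so the digraph wins over "o"


def split_tiers(word):
    """Split a CV-syllable string into (consonant tier, vowel tier)."""
    consonants, vowels = [], []
    i = 0
    while i < len(word):
        consonants.append(word[i])  # each syllable starts with one consonant letter
        i += 1
        for v in VOWELS:
            if word.startswith(v, i):
                vowels.append(v)
                i += len(v)
                break
        else:
            raise ValueError(f"no vowel after {consonants[-1]!r} in {word!r}")
    return consonants, vowels


def structure(tier):
    """Label a three-element tier as AAB, ABC, or other."""
    a, b, c = tier
    if a == b != c:
        return "AAB"
    if len({a, b, c}) == 3:
        return "ABC"
    return "other"


for word in ["koekiba", "sasoeki", "bibasoe", "sikaboe", "fefulo", "pulofe"]:
    cons, vows = split_tiers(word)
    print(word, structure(cons), structure(vows))
```

Every word in this list comes out with an ABC vowel tier, reflecting the constraint that vowels did not repeat within a word; AAB and ABC items therefore differ only on the consonant tier.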

3.1.3. Apparatus and Procedure

The apparatus and the procedure were the same as in Experiment 1.

3.2. Results and Discussion

As in Experiment 1, rats increasingly responded during training to words implementing the AAB rule, in this case over the consonants (F(35, 140) = 9.862, p < 0.001; Fig. 1). Five rats reached the criterion of 80% correct responses in the last training session. During the test phase, these rats performed significantly above chance (M = 56.68%, SD = 3.41; t(4) = 4.385, p < 0.05). These results show that, unlike humans, rats extract rule-like structures implemented over consonants and generalize them to new items.

More importantly, a comparison between the two experiments showed that rats’ generalization performance did not differ between rules implemented over vowels (Experiment 1) and rules implemented over consonants (Experiment 2) (t(8) = 0.034, p = 0.974). Together, these experiments show that rats can learn simple rules over non-adjacent phonemes, regardless of whether vowels or consonants instantiate them.
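The between-experiment comparison is an independent-samples t-test on the two groups of five tested rats. The standard-library Python sketch below recomputes it from the reported summary statistics using a pooled variance; pooling is an assumption, since the text does not say, although with equal group sizes the pooled and unpooled t statistics coincide. It yields t(8) ≈ 0.037, which matches the published 0.034 up to rounding of the reported means.

```python
import math


def independent_t(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / standard_error, df


# Experiment 1 (vowels) vs. Experiment 2 (consonants), test-phase percentages
t, df = independent_t(56.78, 5.05, 5, 56.68, 3.41, 5)
print(f"t({df}) = {t:.3f}")
```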

Still, human adults might also learn the rule when it is instantiated over the specific consonants used in Experiment 2. We therefore ran an experiment with human adults to assess rule learning over consonants and vowels using the same stimuli as in the previous experiments with rats. To compare performance across species as closely as possible, we used a novel paradigm with human adults that followed a procedure similar to that used with rats.

4. Experiment 3. A comparison across species

4.1. Methods

4.1.1. Participants

Thirty-six students from the Universitat Pompeu Fabra took part in this experiment. They received monetary compensation for their participation.

4.1.2. Stimuli

Stimuli were the same as in Experiments 1 and 2.

4.1.3. Procedure

The procedure in this experiment was designed to replicate some of the conditions under which the rats were tested. It consisted of a discrimination training phase and a test phase. During training, forty CVCVCV words (20 with an AAB structure and 20 with an ABC structure, implemented either over the vowels or over the consonants) were presented with a 2-s ISI. The order of presentation was randomized for each participant. Half of the participants (n = 18) were presented with the words implementing the rule over the vowels (as in Experiment 1), and the other half with the words implementing the rule over the consonants (as in Experiment 2). Participants were informed that they would listen to meaningless words, some of which gave a prize while others did not, and that their task was to find the words that gave a prize. To find out which words were rewarded, participants were instructed to press the “B” key after the presentation of a word. Pressing the “B” key after a rewarded word (AAB words) triggered the feedback message “you have won a prize” on the screen. Pressing the key after a non-rewarded word (ABC words) triggered the message “you have lost a prize”. Participants were instructed to avoid responding to non-rewarded words. Once participants reached a learning criterion of three consecutive correct answers (responding to an AAB word or withholding a response to an ABC word), the test phase started. Participants were allowed a maximum of 200 training trials, but all participants reached the learning criterion before this limit. The test phase consisted of a two-alternative forced-choice (2AFC) test using eight new words (four with an AAB structure and four with an ABC structure, implemented either over the vowels or over the consonants; these were the same items used in the tests with the animals). Each word appeared twice, so there were eight trials during the test phase.
Participants were instructed to choose the word that they considered more similar to the words rewarded during the training phase (AAB words). All participants were tested in a quiet room, wearing headphones (Sennheiser HD 555; frequency response from 30 Hz to 28 kHz). The experiment was run on a Mac OS X laptop using the experimental software PsyScope X B57.
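The training criterion for the human participants (three consecutive correct answers, at most 200 trials) can be stated precisely as a short Python sketch. A trial counts as correct when the participant pressed “B” after an AAB word or withheld the press after an ABC word; the function names and the example sequence are hypothetical.

```python
def is_correct(word_is_aab, pressed_b):
    """Correct = press after a rewarded (AAB) word, no press after an ABC word."""
    return pressed_b == word_is_aab


def trials_to_criterion(outcomes, run_length=3, max_trials=200):
    """Return the 1-based trial on which run_length consecutive correct answers
    were first reached, or None if this did not happen within max_trials."""
    streak = 0
    for trial, correct in enumerate(outcomes[:max_trials], start=1):
        streak = streak + 1 if correct else 0
        if streak == run_length:
            return trial
    return None


# (word is AAB?, participant pressed "B"?) for six invented trials
trials = [(True, True), (False, True), (True, True), (False, False), (True, True), (False, True)]
outcomes = [is_correct(aab, pressed) for aab, pressed in trials]
print(outcomes)                       # [True, False, True, True, True, False]
print(trials_to_criterion(outcomes))  # 5
```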

4.2. Results and Discussion

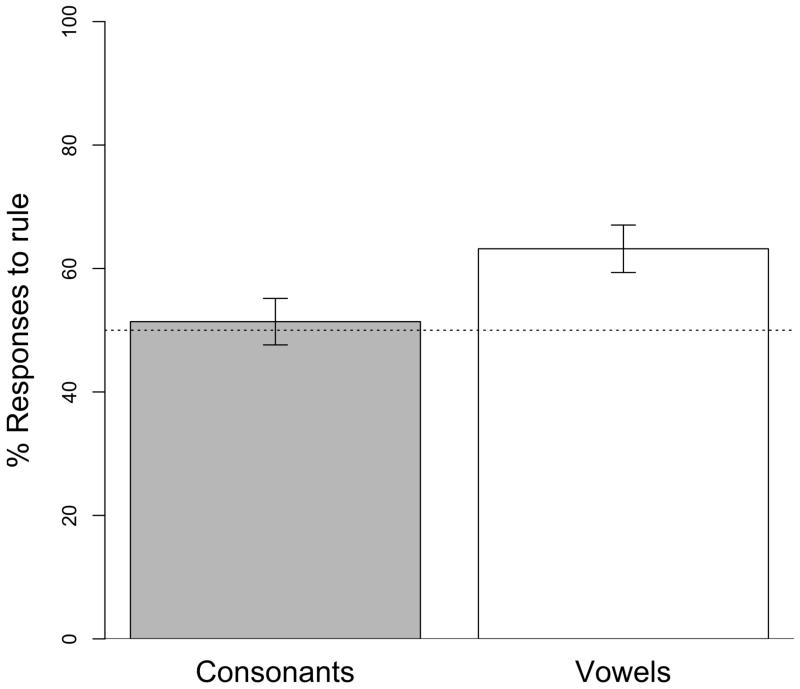

During training, participants required fewer trials to reach the learning criterion of three consecutive correct answers when the rule was implemented over the vowels (M = 54.05 trials, SD = 13.57) than over the consonants (M = 66.22 trials, SD = 27.13), although this difference only approached significance (t(34) = −1.701, p = 0.098). One-sample t-tests over the percentage of responses to AAB words during the test phase showed above-chance performance when the rule was instantiated over new vowels (M = 63.19%, SE = 3.84; t(17) = 3.432, p < 0.005), but not over new consonants (M = 51.39%, SE = 3.77; t(17) = 0.369, p = 0.717). Importantly, the difference in performance between conditions was significant (t(34) = 2.194, p < 0.05; Fig. 2). This pattern replicates previous results suggesting that humans find it difficult to generalize a rule to new instances when it is implemented over the consonants (e.g., Toro et al., 2008 for results with adults; Pons & Toro, 2010 for results with infants). Moreover, the results observed here and those reported in the above-mentioned studies contrast with those observed in nonhuman animals (Experiments 1 and 2): in our experiments, rats generalized the rule over both consonants and vowels.
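As an arithmetic check on the statistics above, the Python sketch below recomputes the t values from the reported summary statistics (n = 18 per group). The test-phase dispersion values reproduce the published t(17) and t(34) only when treated as standard errors of the mean, whereas the training-phase values reproduce t(34) = −1.701 when treated as standard deviations. For equal group sizes, dividing the mean difference by the root of the summed squared standard errors gives the same t as a pooled-variance test. The helper name is hypothetical, and this is not the authors' analysis code.

```python
import math

N = 18  # participants per condition


def t_from_se(m1, se1, m2=50.0, se2=0.0):
    """t for a mean vs. chance (se2 = 0) or for two independent means."""
    return (m1 - m2) / math.sqrt(se1 ** 2 + se2 ** 2)


# Test phase: dispersion values treated as standard errors
t_vowels = t_from_se(63.19, 3.84)                # reported t(17) = 3.432
t_consonants = t_from_se(51.39, 3.77)            # reported t(17) = 0.369
t_between = t_from_se(63.19, 3.84, 51.39, 3.77)  # reported t(34) = 2.194

# Training phase: dispersion values treated as standard deviations
se_vowel_trials = 13.57 / math.sqrt(N)
se_consonant_trials = 27.13 / math.sqrt(N)
t_trials = t_from_se(54.05, se_vowel_trials, 66.22, se_consonant_trials)  # reported t(34) = -1.701

for name, t in [("vowels", t_vowels), ("consonants", t_consonants),
                ("between", t_between), ("training trials", t_trials)]:
    print(f"{name}: t = {t:.3f}")
```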

Figure 2.

Mean percentage (and standard error bars) of human participants’ responses to AAB words during test. Participants generalized the rule to new tokens when it was implemented over the vowels, but not over the consonants.

Could differences in sensitivity across frequency regions between the two species explain the observed results? Rats are sensitive to frequency regions much higher than humans are, and consonants such as the ones used in our experiments concentrate energy in high-frequency regions. Thus, differences between consonants might be more salient to rats than to humans. Nevertheless, previous studies suggest that acoustic differences between consonants and vowels are not responsible for the observed differences in rule learning over one or the other: even when perceptual salience is equated, humans still find it easier to learn rules over vowels than over consonants (Toro, Shukla et al., 2008). Moreover, the present results show that rats learn rules over consonants and vowels with equal ease, regardless of acoustic differences in high-frequency regions. Thus, acoustic differences between consonants and vowels do not seem to play a direct role in how different species extract rules over them.

5. General discussion

Differences in performance observed across species in the present study could be related to how consonants and vowels are represented at the neural level in humans (Caramazza, Chialant, Capasso, & Miceli, 2000), and to the roles they take during language processing (Nespor, Peña, & Mehler, 2003). Such roles might even constrain the operation of statistical (Saffran, Aslin, & Newport, 1996) and rule-learning mechanisms (Marcus, Vijayan, Bandi Rao, & Vishton, 1999). While statistical computations are preferentially performed over consonants rather than vowels (Bonatti et al., 2005), structural generalizations seem to be preferentially made over vowels rather than consonants (Toro et al., 2008), even before the lexicon is developed (Pons & Toro, 2010; see also Hochmann, Benavides, Nespor, & Mehler, 2011). Given that consonants and vowels differ in their acoustic correlates (Ladefoged, 2001), one could expect these acoustic differences to trigger, in a nonhuman animal, functional differences similar to those observed in humans (i.e., that vowels mainly support the rule generalization mechanism, whereas statistical computations are better performed over consonants). Nevertheless, our results show that this is not the case.

Rats’ ability to learn rule-like structures over both vowels and consonants shows that they can track such regularities over non-adjacent elements (the stimuli consisted of CVCVCV sequences). In fact, different neural responses have been observed in the rat auditory cortex for consonant and vowel sounds (Perez et al., 2012), suggesting that rats might acoustically process them as separate sounds. More importantly, the present results suggest that the acoustic difference between consonants and vowels is insufficient to trigger the functional differences observed in humans. This might be explained by the fact that, unlike humans (Caramazza et al., 2000), rats lack a linguistic system in which phonemic categories may be represented separately, which makes both categories equally valid for extracting rule-like structures. Humans and other animals would thus use different cues to extract information from the signal. While humans would rely on abstract representations of relevant categories, other animals would process these categories as equivalent to each other (as suggested by the present results) or rely on purely acoustic differences between them. Evidence for the latter is provided by results demonstrating that tamarin monkeys display the opposite pattern to that shown by humans when computing statistical dependencies over consonants and vowels (Newport, Hauser, Spaepen, & Aslin, 2004; see also Bonatti et al., 2005).

Humans and other animals perceive phonemes categorically (Kuhl & Miller, 1975), can normalize speech to recognize highly degraded input (Heimbauer, Beran, & Owren, 2011), and extract enough rhythmic information from speech to discriminate among different languages (Ramus, Hauser, Miller, Morris, & Mehler, 2000). In fact, humans and other animals might focus on the same features of the signal while processing linguistic stimuli, as suggested by results showing that zebra finches and humans use the same formants when categorizing vowels (Ohms, Escudero, Lammers, & ten Cate, 2011). In contrast to these results, the present study suggests that other species might outperform humans in extracting some rules from speech precisely because they are not constrained by a linguistic system that assigns different roles to phonetic categories.

Taken together, the present results support the hypothesis that language relies on mechanisms that could be triggered by specific cues guiding the search for different types of information in the signal (e.g., Peña, Bonatti, Nespor, & Mehler, 2002). That is, consonants and vowels would modulate the application of both statistical and structural computational mechanisms through the different roles they play in the linguistic system. How these roles are assigned to various phonological representations remains an open question for further research. One possibility is that experience with language allows learners to detect differences in informational value between consonants and vowels (Keidel, Jenison, Kluender, & Seidenberg, 2007). However, in the absence of a linguistic frame, consonants and vowels neither play different roles nor convey distinct information. They both fit equally well as inputs for extracting rule-like structures through a learning mechanism present in nonhuman animals.

Acknowledgements

This research was supported by grants Consolider Ingenio CSD2007-00012 and PSI2010-20029, and by a Ramón y Cajal fellowship (RYC-2008-02909) and ERC Starting Grant agreement n. 312519 to JMT. We thank L. Bonatti, M. Nespor and N. Sebastián-Gallés for discussions regarding this study, Gisela Pi for her help with the human experiment, and Tere Rodrigo and the staff of the Laboratorio de Psicología Animal for their help with the animal experiments.

References

- Bonatti L, Peña M, Nespor M, Mehler J. Linguistic constraints on statistical computations: The role of consonants and vowels in continuous speech processing. Psychological Science. 2005;16:451–459. doi: 10.1111/j.0956-7976.2005.01556.x.

- Caramazza A, Chialant D, Capasso R, Miceli G. Separable processing of consonants and vowels. Nature. 2000;403:428–430. doi: 10.1038/35000206.

- Cutler A, Sebastián-Gallés N, Soler-Vilageliu O, van Ooijen B. Constraints of vowels and consonants on lexical selection: Cross-linguistic comparisons. Memory & Cognition. 2000;28:746–755. doi: 10.3758/bf03198409.

- Duñabeitia J, Carreiras M. The relative position priming effect depends on whether letters are vowels or consonants. Journal of Experimental Psychology: Learning, Memory & Cognition. 2011;37:1143–1163. doi: 10.1037/a0023577.

- Eriksson J, Villa A. Learning of auditory equivalence classes for vowels by rats. Behavioural Processes. 2006;73:348–359. doi: 10.1016/j.beproc.2006.08.005.

- Fitch T, Hauser M. Computational constraints on syntactic processing in a nonhuman primate. Science. 2004;303:377–380. doi: 10.1126/science.1089401.

- Gentner T, Fenn K, Margoliash D, Nusbaum H. Recursive syntactic pattern learning by songbirds. Nature. 2006;440:1204–1207. doi: 10.1038/nature04675.

- Heimbauer L, Beran M, Owren M. A chimpanzee recognizes synthetic speech with significantly reduced acoustic cues to phonetic content. Current Biology. 2011;21:1210–1214. doi: 10.1016/j.cub.2011.06.007.

- Hochmann J, Azadpour M, Mehler J. Do humans really learn AnBn artificial grammars from exemplars? Cognitive Science. 2008;32:1021–1036. doi: 10.1080/03640210801897849.

- Hochmann J, Benavides S, Nespor M, Mehler J. Consonants and vowels: Different roles in early language acquisition. Developmental Science. 2011;14:1445–1458. doi: 10.1111/j.1467-7687.2011.01089.x.

- Keidel J, Jenison R, Kluender K, Seidenberg M. Does grammar constrain statistical learning? Commentary on Bonatti et al. (2005). Psychological Science. 2007;18:922–923. doi: 10.1111/j.1467-9280.2007.02001.x.

- Kuhl P, Miller J. Speech perception by the chinchilla: Voiced-voiceless distinction in alveolar plosive consonants. Science. 1975;190:69–72. doi: 10.1126/science.1166301.

- Ladefoged P. Vowels and consonants: An introduction to the sounds of language. Blackwell; Oxford: 2001.

- Malmierca M. The structure and physiology of the rat auditory system. International Review of Neurobiology. 2003;56:147–211. doi: 10.1016/s0074-7742(03)56005-6.

- Marcus G, Vijayan S, Bandi Rao S, Vishton P. Rule learning by seven-month-old infants. Science. 1999;283:77–80. doi: 10.1126/science.283.5398.77.

- Murphy R, Mondragón E, Murphy V. Rule learning by rats. Science. 2008;319:1849–1851. doi: 10.1126/science.1151564.

- Nespor M, Peña M, Mehler J. On the different roles of vowels and consonants in speech processing and language acquisition. Lingue e Linguaggio. 2003;2:201–227.

- New B, Araujo V, Nazzi T. Differential processing of consonants and vowels in lexical access through reading. Psychological Science. 2008;19:1223–1227. doi: 10.1111/j.1467-9280.2008.02228.x.

- Newport E, Hauser M, Spaepen G, Aslin R. Learning at a distance II: Statistical learning of nonadjacent dependencies in a nonhuman primate. Cognitive Psychology. 2004;49:85–117. doi: 10.1016/j.cogpsych.2003.12.002.

- Ohms V, Escudero P, Lammers K, ten Cate C. Zebra finches and Dutch adults exhibit the same cue weighting bias in vowel perception. Animal Cognition. 2011. In press. doi: 10.1007/s10071-011-0441-2.

- Owren M, Cardillo G. The relative roles of vowels and consonants in discriminating talker identity versus word meaning. Journal of the Acoustical Society of America. 2006;119:1727–1739. doi: 10.1121/1.2161431.

- Peña M, Bonatti L, Nespor M, Mehler J. Signal-driven computations in speech processing. Science. 2002;298:604–607. doi: 10.1126/science.1072901.

- Perez C, Engineer C, Jakkamsetti V, Carraway R, Perry M, Kilgard M. Different timescales for the neural coding of consonant and vowel sounds. Cerebral Cortex. 2012. In press. doi: 10.1093/cercor/bhs045.

- Perruchet P, Rey A. Does the mastery of center-embedded linguistic structures distinguish humans from nonhuman primates? Psychonomic Bulletin & Review. 2005;12:307–313. doi: 10.3758/bf03196377.

- Pons F. The effects of distributional learning on rats’ sensitivity to phonetic information. Journal of Experimental Psychology: Animal Behavior Processes. 2006;32:97–101. doi: 10.1037/0097-7403.32.1.97.

- Pons F, Toro JM. Structural generalizations over consonants and vowels in 11-month-old infants. Cognition. 2010;116:361–367. doi: 10.1016/j.cognition.2010.05.013.

- Ramus F, Hauser M, Miller D, Morris D, Mehler J. Language discrimination by human newborns and cotton-top tamarin monkeys. Science. 2000;288:349–351. doi: 10.1126/science.288.5464.349.

- Reed P, Howell P, Sackin S, Pizzimenti L, Rosen S. Speech perception in rats: Use of duration and rise time cues in labeling of affricate/fricative sounds. Journal of the Experimental Analysis of Behavior. 2003;80:205–215. doi: 10.1901/jeab.2003.80-205.

- Rescorla R, Wagner A. In: Classical conditioning II. Black H, Prokasy W, editors. Appleton; New York: 1972. pp. 64–99.

- Saffran J, Aslin R, Newport E. Statistical learning by 8-month-old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926.

- Toro JM, Nespor M, Mehler J, Bonatti L. Finding words and rules in a speech stream: Functional differences between vowels and consonants. Psychological Science. 2008;19:137–144. doi: 10.1111/j.1467-9280.2008.02059.x.

- Toro JM, Shukla M, Nespor M, Endress A. The quest for generalizations over consonants: Asymmetries between consonants and vowels are not the by-product of acoustic differences. Perception & Psychophysics. 2008;70:1515–1525. doi: 10.3758/PP.70.8.1515.

- van Heijningen C, de Visser J, Zuidema W, ten Cate C. Simple rules can explain discrimination of putative recursive syntactic structures by a songbird species. Proceedings of the National Academy of Sciences, USA. 2009;106:20538–20543. doi: 10.1073/pnas.0908113106.

- Yip M. The search for phonology in other species. Trends in Cognitive Sciences. 2006;10:442–446. doi: 10.1016/j.tics.2006.08.001.