Abstract

Studies over several decades have identified many of the neuronal substrates of music perception by pursuing pitch and rhythm perception separately. Here, we address the question of how these mechanisms interact, starting with the observation that the peripheral pathways of the so-called “Core” and “Matrix” thalamocortical system provide the anatomical bases for tone and rhythm channels. We then examine the hypothesis that these specialized inputs integrate tonal content within rhythm context in auditory cortex using classical types of “driving” and “modulatory” mechanisms. This hypothesis provides a framework for deriving testable predictions about the early stages of music processing. Furthermore, because thalamocortical circuits are shared by speech and music processing, such a model provides concrete implications for how music experience contributes to the development of robust speech encoding mechanisms.

Keywords: music, neuronal oscillations, thalamocortical projections, rhythm, auditory cortex

Introduction

Both speech and music rely on pitch transitions whose production is controlled over time. In everyday listening, the pitch and rhythm of speech and music blend together to create a seamless perception of pitch fluctuations organized in time. The organization of pitch in rhythm aids cognitive processing in both domains (Weener, 1971; Staples, 1968; Quene & Port, 2005; Dilley & Pitt, 2010; Shields, McHugh, & Martin, 1974; Shepard & Ascher, 1973; Devergie, Grimault, Tillmann, & Berthommier, 2010). An extensive body of research has linked music training to enhanced perception and processing of pitch (Besson, Schon, Moreno, Santos, & Magne, 2007; Tervaniemi, Just, Koelsch, Widmann, & Schroger, 2005; Fujioka, Trainor, Ross, Kakigi, & Pantev, 2004; Pantev et al., 2003; Pantev, Roberts, Schulz, Engelien, & Ross, 2001; Munte, Nager, Beiss, Schroeder, & Altenmuller, 2003; Musacchia, Sams, Skoe, & Kraus, 2007) and rhythm (Bailey & Penhune, 2010; Dawe, Platt, & Racine, 1995), as well as spoken (Musacchia, Strait, & Kraus, 2008; Musacchia et al., 2007; Magne, Schon, & Besson, 2006; Marques, Moreno, Luis, & Besson, 2007; Parbery-Clark, Strait, & Kraus, 2011; Meinz & Hambrick, 2010) and written (Strait, Hornickel, & Kraus, 2011) language. The neuronal mechanisms of pitch and rhythm perception are often investigated separately, and while the interrelationship between the two is a longstanding question, it remains poorly understood. Recent findings show that entrained brain rhythms facilitate neuronal processing and perceptual selection of rhythmic event streams (for review, (Schroeder, Wilson, Radman, Scharfman, & Lakatos, 2010; Schroeder, Lakatos, Kajikawa, Partan, & Puce, 2008a)). 
These findings join with others - specifically relating neuronal entrainment to speech (Luo & Poeppel, 2012) and music (Large & Snyder, 2009) - to prompt renewed interest in the neuronal mechanisms that underlie the brain’s ability to form a representation of rhythm and to use this to support the processing of tone.

There are several compelling reasons to explore the brain’s representation of musical structure. One feature that differentiates most music from speech is that it is organized around a beat, a perceived pulse that is temporally regular, or periodic. While periodicity is a ubiquitous aspect of spoken language, it is much less regular and is more often patterned on an alternation of stressed versus unstressed syllables (Patel & Daniele, 2003) rather than organized a priori around a particular rhythm. The beat organization of music is particularly tractable from an experimental point of view because the rhythmic structure can be decomposed into simple (e.g. isochronous, equally timed intervals) or complex (e.g. syncopated or irregularly timed intervals) patterns for understanding how the brain integrates tonal content at different levels of complexity. Another heuristic feature of music is that the organization of musical rhythm is well matched, and perhaps coupled, to the neurophysiology of the brain. It is worthwhile to note that the nesting of faster rhythms into slower rhythms in music (e.g., in Habanera) parallels the nesting of higher frequencies in lower frequencies in the brain; there is a similar parallel in speech, where the formant scale (25 ms) is nested within the syllabic scale (theta), which in turn is nested within the phrasal scale (delta). Thus, investigations of brain rhythm response changes to musical stimulus permutations may help us to understand fundamental aspects of neuronal information processing in ecologically relevant paradigms. While both music and speech are periodic, the simpler structure of music could act to foster plasticity at a more basic level than the complex periodicity of speech.

In this paper, we advance the hypothesis that the peripheral pathways carry relatively segregated representations of tone and rhythmic features that feed into separate and specialized thalamocortical projection channels. The so-called thalamic “Core” and “Matrix” systems, which are thalamocortical networks of chemically differentiated projection neurons (Jones, 1998; Jones, 2001) that generally correspond to “specific/non-specific” and “lemniscal/extralemniscal” dichotomies found elsewhere, provide the anatomical bases for these channels. In this model, the classical types of “driving” and “modulatory” input mechanisms (Schroeder & Lakatos, 2009a; Lee & Sherman, 2008; Sherman & Guillery, 1998; Viane, Petrof & Sherman, 2011) are hypothesized to integrate tone content within rhythm context in auditory cortex. This hypothesis provides a framework for generating testable predictions about the early stages of music processing in the human brain, and may also be useful for understanding how the neuronal context of rhythm can facilitate music-related plasticity. Although music-related plasticity is not a focus of this paper, our hypothesis generally predicts an overlap of plasticity-related brain changes in speech and music domains, with rhythm-related plasticity over multiple timescales particularly enhanced with music training.

Integrating Tone and Rhythm

The proposed mechanism of tone and rhythm integration entails a specific subset of thalamocortical projections (i.e., the matrix) that organizes activity around a beat and facilitates cortical processing of tones that fall within the rhythmic stream; the tones themselves are conveyed into cortex by another subset of the thalamocortical projection system (i.e., the core). This notion is based on five main conclusions stemming from earlier research: 1) neuronal oscillations in thalamocortical systems entrain to repeated stimulation; 2) music and lower frequency neuronal oscillations operate on similar timescales and have a similar hierarchical organization; 3) different ascending projection systems from the thalamus convey tonal content and modulate cortical rhythmic context; 4) these input systems converge and interact in cortex; and 5) neuronal oscillations generating the relevant cortical population rhythms have both optimal and non-optimal excitability phases, during which stimulus processing is generally facilitated or suppressed, respectively. In this way, the modulatory influences of oscillations in the matrix thalamocortical circuit, coupled to the rhythm of a musical stimulus, impose a coordinated “context” for the “content” (e.g. tonal information) carried by networks of core thalamocortical nuclei, much like interactions between hippocampal interneurons and principal cells (Chrobak & Buzsaki, 1998). Support for these conclusions, along with other important considerations and caveats to our hypothesis, is described below.

1: Oscillatory entrainment to periodic stimulation

The neuronal oscillations that give rise to the cortical population rhythms (indexed by the electroencephalogram or EEG) are cyclical fluctuations of baseline activity that manifest throughout the brain (for review (Buzsaki & Draguhn, 2004)), including both neocortical and thalamic regions (Slezia, Hangya, Ulbert, & Acsady, 2011; Steriade, McCormick, & Sejnowski, 1993). Oscillations generally have a 1/f frequency spectrum with peaks of activity at particular frequency bands [e.g. delta ~1–3 Hz, theta ~4–9 Hz, alpha ~8–12 Hz, beta ~13–30 Hz, gamma ~30–70 Hz (Berger, 1929); for recent review, see (Buzsaki, 2006)] and interact strongly with stimulus-related responses (Lakatos et al., 2005; Schroeder, Lakatos, Kajikawa, Partan, & Puce, 2008b; Jacobs, Kahana, Ekstrom, & Fried, 2007). For example, when stimuli are presented in a periodic pattern, ambient neuronal oscillations entrain (phase-lock) to the structure of the attended stimulus stream (e.g. (Schroeder & Lakatos, 2009c; Will & Berg, 2007)). Neuronal entrainment to salient periodic stimulation can emerge rapidly without conscious control, and can still facilitate behavioral responses (Stefanics et al., 2010). However, such entrainment can also be attentionally modulated to favor a specific sensory stream when several streams are presented concurrently (Lakatos, Karmos, Mehta, Ulbert, & Schroeder, 2008). Previous work has shown that low-frequency (<1 Hz), periodic sound stimulation promotes regular up/down transitions of activity in non-lemniscal (Matrix) thalamic nuclei (Gao et al., 2009). Interestingly, this entrainment effect emerges rapidly and can persist, modulating the efficacy of synaptic transmission at the entrained interval for some time (at least 10 minutes) after stimulation is discontinued. These findings suggest that entrainment of neuronal oscillations may also help to capture and retain the contextual structure of rhythmic stimulus intervals.
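The phase-locking described in this section can be illustrated with a toy phase-oscillator model. The sketch below is not taken from the cited studies: it assumes a sine circle map in which each stimulus onset nudges the oscillator phase, and the function names (`phase_at_onsets`, `mean_resultant_length`) and parameter values (a 2.2 Hz oscillator, a 2 Hz stimulus train, coupling strength `k`) are hypothetical choices made only for illustration.

```python
import cmath
import math


def phase_at_onsets(f_osc_hz, stim_period_s, k, n_stimuli, phase0=1.0):
    """Oscillator phase (radians) sampled at each stimulus onset.

    Toy sine circle map (an assumption, not a model from the cited work):
    between onsets the phase advances by 2*pi*f_osc*T, and each onset
    shifts the phase by -k*sin(phase).
    """
    advance = 2.0 * math.pi * f_osc_hz * stim_period_s
    phase = phase0
    phases = []
    for _ in range(n_stimuli):
        phases.append(phase)
        phase = (phase + advance - k * math.sin(phase)) % (2.0 * math.pi)
    return phases


def mean_resultant_length(phases):
    """Phase concentration: 1.0 = identical phases, near 0 = evenly spread."""
    vector_sum = sum(cmath.exp(1j * p) for p in phases)
    return abs(vector_sum) / len(phases)


if __name__ == "__main__":
    # 2.2 Hz oscillator, 2 Hz stimulus train (period 0.5 s); illustrative values.
    coupled = phase_at_onsets(2.2, 0.5, k=1.0, n_stimuli=200)[-50:]
    uncoupled = phase_at_onsets(2.2, 0.5, k=0.0, n_stimuli=200)[-50:]
    print("coupled R:", round(mean_resultant_length(coupled), 3))
    print("uncoupled R:", round(mean_resultant_length(uncoupled), 3))
```

With coupling, the phase at successive onsets settles to a fixed value, so phase concentration approaches 1. Without coupling, the slightly detuned oscillator drifts through all phases and concentration stays near 0. Real entrainment is attention-dependent and far richer than this one-parameter map.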

2: Mirroring of neuronal and musical rhythms

There is an uncanny resemblance between the organization of neuronal rhythms and the organization of musical rhythms. In temporal cortex, ongoing neural rhythms display 1/f structure, such that log power decreases as log frequency increases (Freeman, Rogers, Holmes, & Silbergeld, 2000). In naturally performed musical rhythms, temporal fluctuations display 1/f structure across musical styles (Hennig et al., 2011; Rankin, Large, & Fink, 2009), and such structured temporal fluctuations have been shown to communicate emotion in music (Bhatara, Tirovolas, Duan, Levy, & Levitin, 2011). In auditory cortex, brain rhythms nest hierarchically: delta (1–4 Hz) phase modulates theta (4–10 Hz) amplitude, and theta phase modulates gamma (30–50 Hz) amplitude (Lakatos et al., 2005). In perceptual experiments, pulse occupies the range from about 0.5–4 Hz (London, 2004), matching the delta band rather well. Like neuronal rhythm frequencies, musical beats nest hierarchically: faster metrical frequencies subdivide the pulse into 2’s and 3’s (London, 2004). Perceptual and behavioral experiments with periodic auditory stimuli put the border between pulse frequencies and fast metrical frequencies at about 2.5–4 Hz (Large, 2008), with the upper limit for fast metrical frequencies at about 10 Hz (Repp, 2005). Content is also nested within rhythm context; for example, the onset of events within acoustic rhythms evokes gamma band responses (Pantev, 1995; Snyder & Large, 2005). Furthermore, auditory cortical rhythms synchronize to acoustic stimulation in the range of musical pulse (Fujioka, Trainor, Large, & Ross, 2012; Lakatos et al., 2008; Snyder & Large, 2005; Stefanics et al., 2010; Will & Berg, 2007), and delta and theta rhythms typically emerge with engagement of motor cortex (Saleh, Reimer, Penn, Ojakangas, & Hatsopoulos, 2010), which is a central nexus of rhythm-related brain activity (Besle et al., 2011; Saleh et al., 2010; Chen, Penhune, & Zatorre, 2008). 
Experiments have confirmed that synchronization with auditory rhythms matches predictions of entrained oscillations (Large, 2008) and have demonstrated qualitative shifts in coordination mode at the border of pulse and fast metrical frequencies (Kelso, deGuzman, & Holroyd, 1990).
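The correspondence between musical tempo and the frequency ranges quoted in this section is simple arithmetic, sketched below. The range constants are the approximate values cited above (pulse at about 0.5–4 Hz, fast metrical frequencies up to about 10 Hz); the function names and the hard 4 Hz cut-off are illustrative assumptions, since the text places that border anywhere between about 2.5 and 4 Hz.

```python
PULSE_RANGE_HZ = (0.5, 4.0)     # perceptual pulse range quoted in the text
FAST_METRICAL_LIMIT_HZ = 10.0   # approximate upper limit quoted in the text


def bpm_to_hz(bpm):
    """Convert a tempo in beats per minute to a frequency in Hz."""
    return bpm / 60.0


def metrical_levels(bpm, subdivisions=(1, 2, 3, 4, 6)):
    """Map each subdivision of the beat to (frequency_hz, label)."""
    pulse_hz = bpm_to_hz(bpm)
    levels = {}
    for n in subdivisions:
        freq = pulse_hz * n
        if PULSE_RANGE_HZ[0] <= freq <= PULSE_RANGE_HZ[1]:
            label = "pulse range"
        elif PULSE_RANGE_HZ[1] < freq <= FAST_METRICAL_LIMIT_HZ:
            label = "fast metrical range"
        else:
            label = "outside quoted ranges"
        levels[n] = (freq, label)
    return levels


if __name__ == "__main__":
    for n, (freq, label) in metrical_levels(120).items():
        print(f"beat / {n}: {freq:.1f} Hz ({label})")
```

At 120 beats per minute the pulse is 2 Hz, within the delta band. Its triple subdivision at 6 Hz falls in the fast metrical range, which overlaps the theta band.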

3: Transmission of tonal and rhythmic information via separate thalamocortical circuits

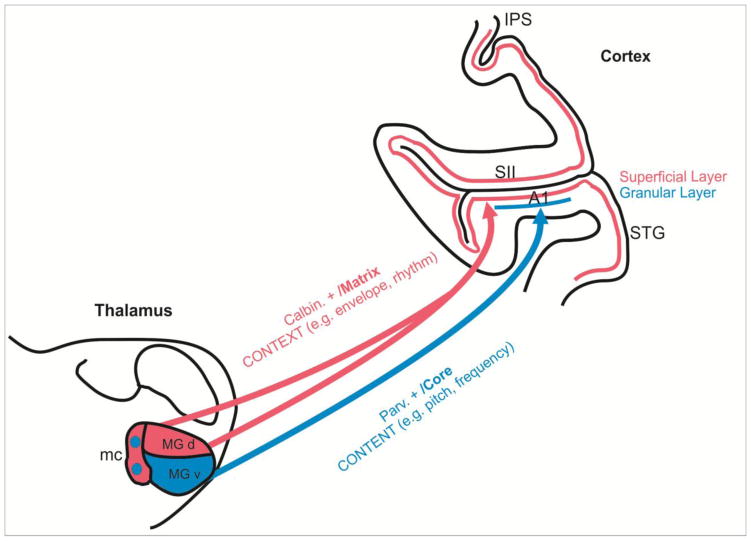

The mechanism we propose for integrating pitch and rhythm processing involves the contrasting physiology and anatomy of the so-called “Core” and “Matrix” systems described by Jones (Jones, 1998; Jones, 2001) (Figure 1). Note that the Core/Matrix dichotomy corresponds generally to the “specific/non-specific” and “lemniscal/extralemniscal” terms found elsewhere in the literature (Kaas & Hackett, 1999; Kraus & Nicol, 2005; Lomber & Malhotra, 2008; Abrams, Nicol, Zecker, & Kraus, 2011). In this model, Core and Matrix thalamocortical projection neurons are chemically differentiated by the calcium binding proteins they express, with Core neurons expressing parvalbumin (Pa) and Matrix neurons expressing calbindin (Cb).

Figure 1. A model for integrating content and context cues in music based on “Core” and “Matrix” divisions of the auditory thalamocortical projection pathways.

Parvalbumin-positive (Parv. +) “Core” thalamocortical neurons (blue) lying mostly in the ventral division of the Medial Geniculate body (MGv) receive frequency-tuned inputs from the tonotopically organized internal nuclear portions of the inferior colliculus. These neurons terminate in the granular cortical layers of Core regions of primary auditory cortex (A1), giving rise to the relatively precise tonotopic representation observed there (Kimura et al., 2003; Hashikawa et al., 1995; Pandya et al., 1994). In contrast, calbindin-positive (Calbin. +) “Matrix” thalamocortical neurons (red) lying mainly in the Magnocellular (mc) and dorsal (d) Medial Geniculate divisions receive broadly-tuned (diffuse) inputs from the non-tonotopic external nuclear portions of the inferior colliculus. These neurons project broadly to secondary/tertiary (e.g., auditory belt and parabelt), as well as primary regions, terminating in the superficial cortical layers (Kimura et al., 2003; Hashikawa et al., 1995; Pandya et al., 1994). As detailed in the text, we propose that activity conveyed by thalamocortical Matrix projections, and entrained to the accent/beat pattern of a musical rhythm, dynamically resets and “modulates” the phase of ongoing cortical rhythms, so that the cortical rhythms themselves synchronize with the rhythmic “contextual” pattern of the music. Because oscillatory phase translates to excitability state in a neuronal ensemble, a potential consequence of the modulatory phase resetting that underlies synchrony induction and maintenance is that responses “driven” by specific “content” that is associated with contextual musical accents (e.g., notes or words) will be amplified, relative to content that occurs off the beat. Abbreviations: IPS: Intraparietal Sulcus; 3b: Area 3b, Primary somatosensory cortex; SII: Secondary somatosensory cortex; STG: Superior Temporal Gyrus

In the auditory system, Pa-positive core thalamic neurons lie mostly within the ventral division of the medial geniculate nucleus (de Venecia, Smelser, Lossman, & McMullen, 1995; Jones, 2003), receive ascending inputs from the internal nucleus of the inferior colliculus (Winer & Schreiner, 2005; Oliver & Huerta, 1992), and exhibit narrow frequency tuning (Anderson, Wallace, & Palmer, 2007; Calford, 1983). They project mainly to Layer 4 and deep layer 3 of the auditory cortical “Core” regions including A1, and the more rostral regions (R and Rt) (Hashikawa, Molinari, Rausell, & Jones, 1995; McMullen & de Venecia, 1993; Pandya, Rosene, & Doolittle, 1994; Kimura, Donishi, Sakoda, Hazama, & Tamai, 2003), where their pure tone preferences give rise to the well-known tonotopic maps of these regions. Cb-positive Matrix thalamic neurons comprise much of the dorsal and Magnocellular divisions of the medial geniculate complex, but are also scattered throughout the ventral division of the MGB (de Venecia et al., 1995; Jones, 2003). They receive input from the external nuclear division of inferior colliculus (Winer & Schreiner, 2005; Oliver & Huerta, 1992), and thus exhibit broad frequency tuning, as well as strong sensitivity to acoustical transients (Anderson et al., 2007; Calford, 1983). Matrix neurons project broadly to Layer 2 of all the auditory cortices, including both the core (A1, R and Rt) and the surrounding belt regions (Jones, 1998; Jones, 2001; Jones, 2003). Their notably poor frequency tuning is consistent with the tuning properties of neurons in auditory belt regions, though it probably does not completely account for these properties.

Based on their proclivities for pure tones and acoustic transients, the Core and Matrix provide potential substrates for encoding and central projection of music’s pitch- and rhythm-related cues, respectively. This proposed division of labor, and in particular, the role of the Matrix in using entrained neuronal oscillations to represent and convey rhythm-related influences is more intriguing in light of the proposition that these oscillations represent the “context” for the processing of specific sensory “content” (Buzsaki, 2006); pitch components of music would equate to specific tonal “content,” while rhythmic components would equate to “context.”

4: Convergence and interaction of Core and Matrix circuits

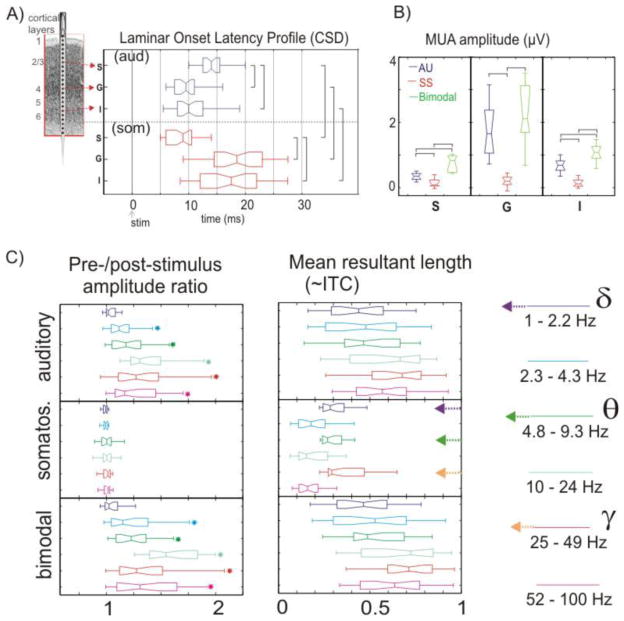

The separation of thalamocortical music processing into tonal and rhythmic components mediated by the core and matrix systems would also have predictable downstream effects in the neocortex. As mentioned above, Pa-positive thalamic Core inputs target the middle layers of primary cortical regions (A1, R and Rt), whereas Cb-positive thalamic Matrix afferents project over a much wider range of territory, targeting the most superficial layers of these areas, as well as the surrounding belt cortical regions (Jones, 1998; Jones, 2001), as illustrated in Figure 1. Core thalamic inputs clearly drive action potentials in the target neurons, and thus are reasonably considered as “driving” inputs, (e.g., (Sherman & Guillery, 2002) in auditory cortex). Matrix inputs, through their upper layer terminations, are in a position to function as “modulatory” inputs, which would not elicit action potentials directly, but rather, control the likelihood that appropriate driving inputs would do this. This idea is admittedly speculative, but fits with the notion that matrix afferents control cortical synchrony in general (Jones, 2001). One area where this has been extensively explored is in multisensory investigations. To make the proposition more concrete, we illustrate the contrasting physiology of driving and modulatory inputs on the laminar activity profile in A1 (Figure 2), by comparing the effects of auditory (driving) versus somatosensory (modulatory) inputs into A1 (Lakatos, Chen, O’Connell, Mills, & Schroeder, 2007).

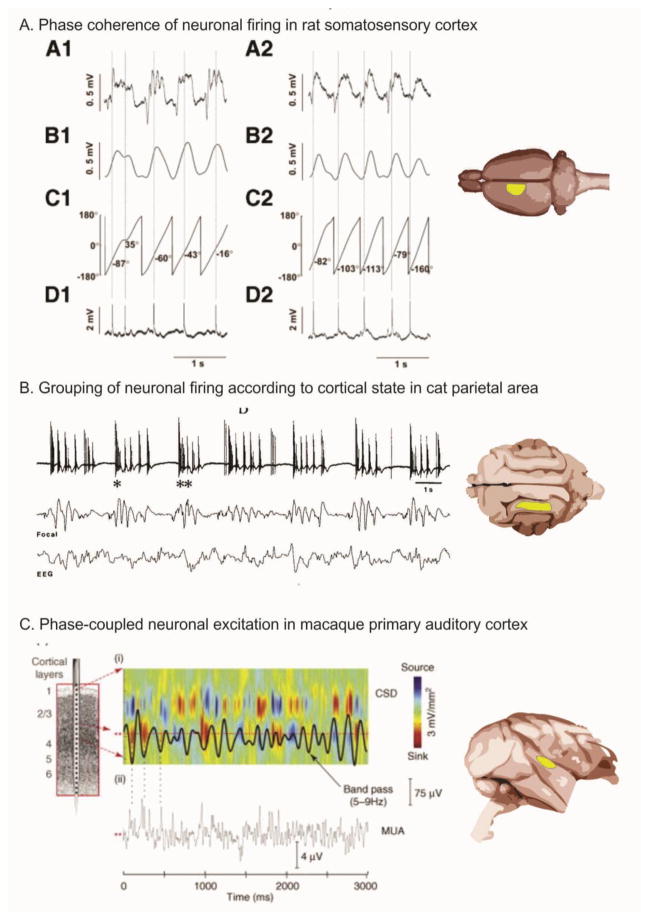

Figure 2. Oscillations control neuronal excitability.

Panel A. On the left, four aspects of neural activity are shown: the low pass filtered local field potential (LFP) (A1), the amplitude activity (B1), phase activity (C1) and multiunit activity (MUA) (D1) (Slezia et al., 2011). A2-D2 show the same indices for an oscillation that increases in frequency. Panel B. MUA bursting of cat parietal corticothalamic neurons (top trace) occurs in the Delta frequency range and co-occurs with slower (0.3–0.4 Hz) LFP rhythms (middle trace) and scalp recorded electroencephalographic (EEG) activity (bottom trace) (Steriade et al., 1993). Panel C. In macaque (Lakatos et al., 2005), a current source density (CSD) profile for the supragranular layers of auditory cortex (i) sampled using a multielectrode (left) shows Theta-band (5–9 Hz) oscillatory activity (superimposed black line). Drop lines from ii show coincidence of simultaneously recorded MUA. Reproduced, with permission, from [33] (A), [38] (B), [77] (C).

In this illustration, laminar current source density (CSD) onset latency profiles (Fig. 2a) index net local synaptic current flow. Patterns of activity show that auditory input initiates a classic feedforward synaptic activation profile, beginning in Layer 4, while synaptic activation stemming from somatosensory input begins in the supragranular layers. Quantified multiunit activity amplitude (MUA – Fig. 2c) for the same laminar locations shows robust neuronal firing with auditory input, but no detectable change in local neuronal firing with somatosensory input. The combination of synaptic currents (CSD) with neuronal firing (MUA), plus the initiation of the auditory response profile in Layer 4, identifies the auditory input as a ‘driving’ (core) input, while the CSD input profile beginning outside of Layer 4, in the absence of an MUA response, identifies the somatosensory input as a subthreshold ‘modulatory’ input (Lakatos et al., 2007). Importantly, both the extremely short latency of the somatosensory input and its high supragranular target zone suggest that it represents a matrix input. In the tone-rhythm integration mechanism we propose, tonal content carried by core afferents would form the driving input to Layer 4, while rhythmic context information borne by matrix afferents would form the modulatory input to the supragranular site.

How would the hypothesized tone-rhythm interaction look in this system? Notice first that interaction of the modulatory and driving inputs during bimodal stimulation in our example produces significant enhancement of neuronal firing (MUA, Figure 2c). We predict that modulatory rhythmic input would similarly enhance the neural representation of tonal inputs. It is further noteworthy that the modulatory somatosensory inputs appear to use phase reset of ongoing neuronal oscillations as an instrument for enhancement of auditory processing. Figure 2c (left) depicts pre- to post-stimulus amplitude ratios for single trial oscillatory activity in six frequency bands extending from delta to gamma. As expected of a pure modulatory input (Shah et al., 2004; Makeig et al., 2004), somatosensory input triggers no detectable change in the power of ongoing oscillations, but produces phase concentration (reset) and coherence over trials (intertrial coherence or ITC) in the low delta, theta and gamma bands (Figure 2c-right). This is how we predict that rhythmic inputs will behave. In contrast, the auditory (driving) input provokes a broadband increase in both oscillatory power and ITC across the spectrum, as expected for an ‘evoked’ type of response, and this is how we predict tonal inputs will function. Thus, rhythmic input would act mainly by resetting the phase of ongoing oscillations and ‘sculpting’ the temporal structure of ambient oscillatory activity. Rhythm would ‘modulate’ the dynamics of activity in A1, but would not ‘drive’ auditory neurons to fire to any great extent.
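The two signatures contrasted above (phase reset without a power change versus an evoked response with a power increase) can be made concrete with a toy simulation. This is a minimal sketch under stated assumptions, not the analysis of Lakatos et al. (2007): each trial is reduced to a single phasor, the ongoing oscillation has unit amplitude and a random pre-stimulus phase, and the names `itc` and `simulate` and the values `evoked_amplitude` and `reset_jitter` are hypothetical.

```python
import cmath
import math
import random


def itc(phases):
    """Intertrial coherence as the mean resultant length of single-trial phases."""
    return abs(sum(cmath.exp(1j * p) for p in phases)) / len(phases)


def simulate(n_trials=500, evoked_amplitude=2.0, reset_jitter=0.2, seed=0):
    """Return {input type: (post/pre amplitude ratio, ITC)} for toy trials.

    Each trial's ongoing oscillation is a unit-amplitude phasor with a
    random pre-stimulus phase (all values are illustrative assumptions).
    """
    rng = random.Random(seed)
    pre = [cmath.rect(1.0, rng.uniform(-math.pi, math.pi)) for _ in range(n_trials)]

    # Modulatory input: phase is reset near 0, amplitude is left untouched.
    modulatory = [cmath.rect(abs(z), rng.gauss(0.0, reset_jitter)) for z in pre]
    # Driving input: an evoked component (phase 0) adds to the ongoing activity.
    driving = [z + evoked_amplitude for z in pre]

    pre_amp = sum(abs(z) for z in pre) / n_trials
    results = {}
    for name, post in (("modulatory", modulatory), ("driving", driving)):
        ratio = (sum(abs(z) for z in post) / n_trials) / pre_amp
        results[name] = (ratio, itc([cmath.phase(z) for z in post]))
    return results


if __name__ == "__main__":
    for name, (ratio, coherence) in simulate().items():
        print(f"{name}: amplitude ratio {ratio:.2f}, ITC {coherence:.2f}")
```

In this sketch the modulatory input leaves the amplitude ratio at 1 while raising ITC, whereas the driving input raises both. That is the qualitative pattern the hypothesis predicts for rhythmic and tonal inputs, respectively.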

5: Thalamocortical mediation of cortical excitability control at rhythmic intervals

Several lines of research provide a conceptually and empirically appealing explanation for the way that entrained, rhythmic input to the cortex modulates cortical excitability at beat intervals (Large & Snyder, 2009). One of the central tenets of our hypothesis is rhythmic oscillatory modulation of driving tonal input. As mentioned above, neuronal oscillations reflect cyclical variations in neuronal excitability, are used as instruments of brain operation, and have been shown to relate to a wide range of cognitive and behavioral operations (Fries, Nikolic, & Singer, 2007; Klimesch, Freunberger, & Sauseng, 2009; Chrobak & Buzsaki, 1998; Fan et al., 2007; Schroeder & Lakatos, 2009b). Previous work has clarified the basis of the relationship between neuronal excitability and ongoing oscillations evident in the electroencephalogram (EEG) measured at the brain surface or scalp, as well as in similar signals measured within the brain as “local field potentials” (LFP). Specifically, findings across species and brain regions show a tight coupling between the phase of ongoing EEG/LFP oscillations and neuronal firing (Slezia et al., 2011; Steriade, Nunez, & Amzica, 1993; Lakatos et al., 2005; Buzsaki, 2006) (Fig 2). These data show that neuronal firing is coupled to a “preferred phase” of the oscillatory EEG activity and that the final output of neuronal excitability modulation (i.e., firing) is controlled by ongoing oscillatory fluctuations. The coupling between oscillations and neuronal excitability is illustrated here in three animal species and sensory cortices (Fig. 3). 
In this example, spontaneous multi-unit neuronal activity (MUA) in rat somatosensory cortex occurs at a consistent phase of the ongoing EEG (Slezia et al., 2011). In this study, a neuron’s firing exhibited phase coupling, over successive cycles of LFP oscillation. This coupling shed light on earlier observations that delta band bursting of corticothalamic neurons in cat parietal cortex was grouped according to slow (0.3–0.4 Hz) rhythms (Steriade et al., 1993). The coupling of neuronal excitability with ongoing EEG phase is preserved in higher primates, with theta-band (5–9 Hz) oscillatory activity in the supragranular layers of macaque primary auditory cortex coincident with MUA recorded from the same site (Lakatos et al., 2005). These findings, together with those discussed above (re: Fig. 3), suggest that thalamocortical rhythmic input to cortical supragranular layers would organize the excitability state around a beat, providing rhythm-related time windows of enhanced firing probability.
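The idea of rhythm-related time windows of enhanced firing probability can be sketched as a phase-dependent gain applied to a driving input. The code below is a deliberately simplified illustration: the cosine-shaped excitability cycle, the `depth` parameter, and the function names are assumptions introduced here, and the oscillation is simply assumed to be already entrained so that its high-excitability phase falls on each beat.

```python
import math


def excitability_gain(t_s, beat_hz, depth=0.5):
    """Gain applied to a driving input arriving at time t_s (seconds).

    Assumes (for illustration only) a cosine-shaped excitability cycle that
    is entrained so its high-excitability phase falls on each beat.
    """
    return 1.0 + depth * math.cos(2.0 * math.pi * beat_hz * t_s)


def response(drive, t_s, beat_hz, depth=0.5):
    """Toy firing response: driving input scaled by the phase-dependent gain."""
    return drive * excitability_gain(t_s, beat_hz, depth)


if __name__ == "__main__":
    beat_hz = 2.0  # 120 beats per minute
    for label, t in (("on beat", 1.0), ("quarter cycle", 1.125), ("off beat", 1.25)):
        print(label, round(response(1.0, t, beat_hz), 3))
```

Identical tonal inputs produce a larger response when they arrive on the beat than when they arrive half a cycle later. Shifting the oscillation by half a cycle would reverse the pattern, which corresponds to the counterphase case considered among the predictions.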

Figure 3. Contrasting laminar profiles and physiology of “driving” and “modulatory” inputs.

A) A schematic of the multi-contact electrode positioned astride the cortical laminae is shown on the right. Box-plots show quantified onset latencies of the best-frequency pure tone (blue) and somatosensory stimulus (red) related current source density (CSD) response in supragranular (S), granular (G) and infragranular (I) layers across experiments. Lines in the boxes depict the lower quartile, median, and upper quartile values. Notches in boxes depict the 95% confidence interval for the median of each distribution. Brackets indicate the significant differences (Games-Howell post-hoc test, p<0.01). B) Quantified multi-unit activity (MUA) amplitudes (over 38 cases) from the representative supragranular, granular and infragranular channels (S, G, and I) over the 15–60 ms time interval for the same conditions as in A, along with a bimodal stimulation condition. Brackets indicate the significant differences (Games-Howell, p<0.01). C) left. Quantified (n=38) post-/pre-stimulus single trial oscillatory amplitude ratios (0 – 250 ms / −500 – −250 ms) for different frequency bands (different colors) of the auditory, somatosensory and bimodal supragranular responses. Stars denote the amplitude ratios significantly different from 1 (one-sample t-tests, p<0.01). right. Quantified (n=38) intertrial coherence (ITC) expressed as a vector quantity (mean resultant length), measured at 15 ms post-stimulus (i.e., at the initial peak response). For somatosensory events, increase in phase concentration only occurs in the low-delta (1–2.2 Hz), theta (4.8–9.3 Hz) and gamma (25–49 Hz) bands, indicated by colored arrows on the right. Adapted, with permission, from (Lakatos et al., 2007).

A caveat to the rhythm-tone processing and integration hypothesis

Despite the evidence in support of the hypothesis we advance here, it is incomplete for the following reason: If the system operated as simply as we have described it here, we would likely hear tonal content that occurs “on the beat” very well, and by the same token, tonal content occurring “off the beat” very poorly. This proves to be the case for simple transient stimuli (Large & Jones, 1999), but given the smooth melodic flow that we perceive in music, it is likely that the neuronal entrainment process we describe also orchestrates more subtle and complex processes. Lacking any firm information on this matter, we can nonetheless offer a speculation that is amenable to empirical examination, and may thus stimulate future studies on the topic.

We conjecture that entrainment is but one mechanism in a set of dynamic oscillatory mechanisms that operate at longer timescales, are sensitive to specific stimulus features, and form a recurrent loop with attentional mechanisms that together form a complex network of attention-controlled enhancement and suppression. Thus, different elements of the neuronal population responsive to tones may encode additional information such as tonal movements and progressions. Empirical evidence supports this notion. For example, the spiking of auditory cortical neurons in monkeys listening to extended duration naturalistic stimuli was investigated with specific attention to the precise phase of the ongoing local slow oscillation at which spiking occurred (Kayser, Montemurro, Logothetis, & Panzeri, 2009). The authors found that in these circumstances, individual neurons tended to fire characteristically at different phases of the local slow (reference) oscillation. They concluded that such a nested code combining spike-train patterns with the phase of firing optimized the information carrying capacity of the cortical ensemble, and provided the best reconstruction of the input signal. This representation of frequency-based information in spike-phase coding is strongly reminiscent of the encoding of spatial (movement) information by theta rhythm phase precession in rodent hippocampal place cells (Huxter, Burgess, & O’Keefe, 2003). Spike phase coding is an appealing mechanism for overcoming the limitations in our model, but further experimentation will be necessary to flesh out this idea.
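To make the notion of spike-phase coding more tangible, the sketch below simulates neurons that fire around different preferred phases of a reference oscillation and then recovers those phases with a circular mean. It is a toy illustration only, not the information-theoretic analysis of Kayser et al. (2009): the von Mises spike-phase distribution, the concentration `kappa`, the neuron labels, and all function names are assumptions introduced here.

```python
import math
import random


def circular_mean(phases):
    """Circular mean of phases in radians, returned in [0, 2*pi)."""
    s = sum(math.sin(p) for p in phases)
    c = sum(math.cos(p) for p in phases)
    return math.atan2(s, c) % (2.0 * math.pi)


def circular_distance(a, b):
    """Smallest absolute angular difference between two phases."""
    d = (a - b) % (2.0 * math.pi)
    return min(d, 2.0 * math.pi - d)


def simulate_spike_phases(preferred_phase, n_spikes=500, kappa=4.0, seed=0):
    """Draw spike phases clustered around a neuron's preferred phase."""
    rng = random.Random(seed)
    return [rng.vonmisesvariate(preferred_phase, kappa) for _ in range(n_spikes)]


def decode_neuron(spike_phase, preferred_phases):
    """Guess which neuron fired: the one whose preferred phase is nearest."""
    return min(preferred_phases,
               key=lambda name: circular_distance(spike_phase, preferred_phases[name]))


if __name__ == "__main__":
    true_phases = {"neuron_a": 1.0, "neuron_b": 4.0}  # hypothetical values (radians)
    for name, phase in true_phases.items():
        estimate = circular_mean(simulate_spike_phases(phase))
        print(name, "estimated preferred phase:", round(estimate, 2))
    print("spike at 0.9 rad attributed to:", decode_neuron(0.9, true_phases))
```

If different neurons reliably fire at different phases, the phase of a spike carries information beyond the spike count. That is the property that could allow off-beat tonal content to remain well represented.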

Outstanding Issues

A number of testable predictions based on the proposed model are outlined below. We also highlight some questions that remain to be addressed.

Neurophysiological Predictions

Selective rhythmic stimulation of matrix neurons (e.g. electrically or optogenetically) should have purely modulatory effects on neocortex, most prominent in supragranular layers, while stimulation of core neurons should produce stereotypic driving responses primarily in Layer 4.

Co-stimulation of core and matrix neurons should produce enhancement of cortical driving responses and stronger transmission of rhythmic driving patterns to the extragranular layers and to higher cortical regions.

Counterphase rhythmic stimulation of core and matrix should suppress driving responses, especially outside of Layer 4.

Disruption of matrix functioning should disrupt the emergent perception of the beat/pulse in music.

Matrix nuclei should have a wider interval range of accurate phase-locking to sound onset than Core neurons (e.g. can more accurately encode faster temporal intervals).

Open Questions

Does music-related plasticity have differential effects on core and matrix thalamocortical circuits? What about rhythm training vs. tonal content training?

Do disorders having specific sensory deficits of tone processing (e.g. amusia) or rhythmic processing (e.g. central pattern generation) show specific core vs matrix thalamocortical impact?

What is the role of pitch contour and consonance in rhythmic perception and processing?

Can the developmental trajectory of core and matrix circuit development in humans help explain acquisition of tonal (e.g. segmental) vs. rhythmic (e.g. suprasegmental) linguistic features?

Concluding Remarks

We have outlined a physiological hypothesis for an integration of tone and rhythm in which thalamic activity around accent/beat pattern of a musical rhythm, conveyed by thalamocortical Matrix projections, dynamically modulates the phase of ongoing cortical oscillatory rhythms. Because oscillatory phase translates to excitability state in a neuronal ensemble, a consequence of the modulatory phase resetting that underlies synchrony induction and maintenance is that specific content associated with musical accents (e.g., notes or words) will be physiologically amplified, in a way that is similar to that believed to occur in language perception (Schroeder et al., 2008b; Zion Golumbic, Poeppel, & Schroeder, 2012). Whether or not rhythm-based enhancement of neuronal firing confers perceptual accents, however, may be a more complex issue. For example, it is known that perceptual accents of sound does not always coincide with an established meter (Repp, 2010). It is likely that entrainment mechanisms function as a base operation to modulate attentionally-mediated mechanisms that, together with intrinsic state properties, enhance perceptual sensitivity and selectivity. Thus, not only are there reasonably obvious neuronal substrates for the encoding and representation of tonal and rhythmic components of music, but clear means of integrating the component representations, using neuronal systems that are themselves modifiable by the experiencing of music. This model posits a concrete mechanism that may help to explain how rhythm information is represented and transmitted to orgaize neural activity in a wide network of areas (Alluri et al., 2012) including multiple sensory (Chen, Zatorre, & Penhune, 2006), motor (Konoike et al., 2012) and frontal cortices. 
As noted in the preceding section, the hypothesis is rudimentary and does not yet account for all aspects of the neural encoding of music; direct empirical support for the precise mechanism we propose is not yet in hand. However, the hypothesis makes clear physiological predictions that are amenable to empirical validation (preceding section), and can help to guide additional experimentation.
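The phase-dependent amplification described above can be illustrated with a toy calculation. The following is a minimal sketch, not a fitted or validated model: it assumes a single cosine-shaped excitability cycle that a Matrix-like modulatory input resets to its high-excitability phase, and a Core-like driving input whose response is scaled by that excitability. The function names, the 2 Hz oscillation frequency and the modulation depth of 0.5 are hypothetical values chosen only for illustration.

```python
import math


def oscillation_phase(t, freq_hz, last_reset_time):
    """Phase (radians) of an ongoing oscillation at time t (seconds),
    assuming it was reset to phase 0 (high excitability) at last_reset_time."""
    return (2.0 * math.pi * freq_hz * (t - last_reset_time)) % (2.0 * math.pi)


def excitability_gain(phase, depth=0.5):
    """Multiplicative gain: 1 + depth at phase 0, 1 - depth at phase pi.
    The cosine shape and the depth value are illustrative assumptions."""
    return 1.0 + depth * math.cos(phase)


def driven_response(drive, t, freq_hz=2.0, last_reset_time=0.0, depth=0.5):
    """Response to a driving (Core-like) input of strength `drive`, scaled by
    the excitability state set by a modulatory (Matrix-like) phase reset."""
    phase = oscillation_phase(t, freq_hz, last_reset_time)
    return drive * excitability_gain(phase, depth)


if __name__ == "__main__":
    # Same tonal input arriving one full cycle vs. half a cycle after the reset.
    on_beat = driven_response(drive=1.0, t=0.5)
    off_beat = driven_response(drive=1.0, t=0.25)
    print("on-beat response: ", on_beat)
    print("off-beat response:", off_beat)
```

In this sketch, an identical driving input yields a larger response when it arrives at the high-excitability phase (on the beat) than when it arrives half a cycle later, which is the qualitative prediction of the hypothesis; the numerical values carry no empirical weight.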

Our hypothesis may provide a physiological explanation for left-right differences observed at the level of the BOLD signal and scalp measurements. For example, multiple lines of evidence have supported a spectrotemporal theory of auditory processing that posits better temporal resolution in left auditory cortical areas and better spectral resolution in right-hemisphere homologues (Zatorre, Belin, & Penhune, 2002). If this idea holds, the biasing of rhythm and tone within the Matrix and Core thalamic nuclei and projections may show similar hemispheric asymmetries. Specifically, we suggest that a difference in the physiology of the left and right non-primary, Matrix thalamic pathways may give rise to asymmetry, with primary “Core” nuclei on the left specialized for complex sound processing, and non-primary “Matrix” nuclei on the right specialized for repeated stimulation and oscillatory phase reset mechanisms. It may be that the thalamic non-primary system, encoding rhythm, is particularly sensitive to changes in context and that activity in the right thalamus preferentially processes rhythmic “contextual” cues. According to our hypothesis, this would be explained by oscillatory phase reset mechanisms or by termination differences between the left and right Matrix systems. Evidence consistent with this idea has been reported in animal models, showing that the non-primary thalamic pathway may be specifically tuned to code low-frequency temporal information present in acoustic signals (Abrams et al., 2011). However, there may be an additional level of complexity to consider, as leftward asymmetries for speech processing have been observed in both primary and non-primary thalamic structures (King, Nicol, McGee, & Kraus, 1999). Therefore, the relationship between the Core/Matrix and spectrotemporal hypotheses remains speculative, and more investigation is needed to understand the contributions of left/right specializations of the subcortical primary and non-primary pathways.

Further relationships between the current hypothesis and theories of learning can also be speculated upon. Integration of tone and rhythm through thalamocortical oscillatory control is likely built on innate mechanisms that guide a listener’s “sensitivity” and is modifiable by learning. Considerable evidence supports this notion, showing that oscillations are innate brain mechanisms and exhibit developmental and music training-related changes. First, in animal models, changes in the frequency and synchronization of neural oscillations are markers of certain stages of development. Maturation of neural synchrony in each stage is compatible with changes in the myelination of cortico-cortical connections and with development of GABAergic neurotransmission (Uhlhaas, Roux, Rodriguez, Rotarska-Jagiela, & Singer, 2010; Singer, 1995). In children 16 to 36 months of age, gamma oscillation power is associated with language, cognitive and attention measures and can predict cognitive and linguistic outcomes through five years of age (Gou, Choudhury, & Benasich, 2011; Benasich, Gou, Choudhury, & Harris, 2008). In addition, induced gamma band activity becomes prominent around 4.5 years of age in children who have had 1 year of music training, but not in children of this age who have not been trained (Trainor, Shahin, & Roberts, 2009). Second, musicians also show evidence of training-related changes in oscillatory activity. Extensive musical training is associated with higher gamma band evoked synchrony when listening to music (Bhattacharya, Petsche, & Pereda, 2001) or to notes of the instruments that they themselves play (Shahin, Roberts, Chau, Trainor, & Miller, 2008).
A tantalizing piece of evidence for our prediction also shows that musicians have stronger interactions between the thalamus and premotor cortex (PMC) at the beta frequency during a motor synchronization task, and drummers in particular exhibit stronger interactions between thalamus and posterior parietal cortex (PPC) at alpha and beta frequencies (Krause, Schnitzler, & Pollok, 2010; Fujioka et al., 2012).

A mechanism for tone and rhythm integration also has strong implications for clinical populations who lose the ability to generate or continue rhythmic motion. While this paper focused on externally driven entrainment, a similar mechanism is likely to operate in accordance with internally generated periodicity. In the auditory domain, the perception of a regular pulse in a sequence of temporal intervals is associated with activity in the basal ganglia (Grahn & Brett, 2007; Grahn & Brett, 2009). A further study suggests that the striatum is a locus of internal beat generation, with the generation and prediction of a perceived beat associated with greater striatal activity than initial beat perception or adjustment (Grahn & Rowe, 2009). The striatum receives inputs from all cortical areas and, through the thalamus, projects principally to frontal lobe areas (prefrontal, premotor and supplementary motor areas) that are concerned with motor planning (Herrero, Barcia, & Navarro, 2002). According to our hypothesis, anatomical or physiological disturbances that result in stereotypies or a decrease in motor pattern generation could be associated not only with basal ganglia deficits, but also with dysfunction of the thalamocortical Matrix mechanism that we believe transmits this information.

Highlights

Core and Matrix are anatomically/physiologically distinct thalamocortical pathways.

Musical tone and rhythm may diverge into Core and Matrix systems respectively.

Matrix inputs to A1 modulate oscillatory (excitability) phase in A1 neuron ensembles.

Core inputs encode tonal content and directly drive neuronal firing in A1.

Matrix-Core interactions physiologically blend context and content in music.

Contributor Information

Gabriella Musacchia, Email: gm377@rutgers.edu, Center for Molecular & Behavioral Neuroscience, Rutgers University, 197 University Avenue, Newark, NJ 07102, Tel: (973) 353-3277, Fax: (973) 353-1760

Edward Large, Email: large@ccs.fau.edu, Center for Complex Systems and Brain Sciences, Florida Atlantic University, 777 Glades Rd, BS-12, Room 308, Tel: 561.297.0106, Fax: 561.297.3634

Charles E. Schroeder, Email: Cs2388@columbia.edu, schrod@nki.rfmh.org, Cognitive Neuroscience and Schizophrenia Program, Nathan S. Kline Institute for Psychiatric Research, 140 Old Orangeburg Rd., Orangeburg, NY 10962, Professor, Department of Psychiatry, Columbia University College of Physicians and Surgeons, Tel: (845) 398-6539

Reference List

- Abrams DA, Nicol T, Zecker S, Kraus N. A possible role for a paralemniscal auditory pathway in the coding of slow temporal information. Hear Res. 2011;272:125–134. doi: 10.1016/j.heares.2010.10.009.

- Alluri V, Toiviainen P, Jaaskelainen IP, Glerean E, Sams M, Brattico E. Large-scale brain networks emerge from dynamic processing of musical timbre, key and rhythm. Neuroimage. 2012;59:3677–3689. doi: 10.1016/j.neuroimage.2011.11.019.

- Anderson LA, Wallace MN, Palmer AR. Identification of subdivisions in the medial geniculate body of the guinea pig. Hear Res. 2007;228:156–167. doi: 10.1016/j.heares.2007.02.005.

- Bailey JA, Penhune VB. Rhythm synchronization performance and auditory working memory in early- and late-trained musicians. Exp Brain Res. 2010;204:91–101. doi: 10.1007/s00221-010-2299-y.

- Benasich AA, Gou Z, Choudhury N, Harris KD. Early cognitive and language skills are linked to resting frontal gamma power across the first 3 years. Behav Brain Res. 2008;195:215–222. doi: 10.1016/j.bbr.2008.08.049.

- Berger ED. Uber das Elektrenkephalogramm des Menschen. Arch Psychiat Nervenkr. 1929:527–570.

- Besson M, Schon D, Moreno S, Santos A, Magne C. Influence of musical expertise and musical training on pitch processing in music and language. Restor Neurol Neurosci. 2007;25:399–410.

- Bhatara A, Tirovolas AK, Duan LM, Levy B, Levitin DJ. Perception of emotional expression in musical performance. J Exp Psychol Hum Percept Perform. 2011;37:921–934. doi: 10.1037/a0021922.

- Bhattacharya J, Petsche H, Pereda E. Long-range synchrony in the gamma band: role in music perception. J Neurosci. 2001;21:6329–6337. doi: 10.1523/JNEUROSCI.21-16-06329.2001.

- Buzsaki G. Rhythms of the Brain. New York: Oxford University Press; 2006.

- Buzsaki G, Draguhn A. Neuronal oscillations in cortical networks. Science. 2004;304:1926–1929. doi: 10.1126/science.1099745.

- Calford MB. The parcellation of the medial geniculate body of the cat defined by the auditory response properties of single units. J Neurosci. 1983;3:2350–2364. doi: 10.1523/JNEUROSCI.03-11-02350.1983.

- Chen JL, Penhune VB, Zatorre RJ. Moving on time: brain network for auditory-motor synchronization is modulated by rhythm complexity and musical training. J Cogn Neurosci. 2008;20:226–239. doi: 10.1162/jocn.2008.20018.

- Chen JL, Zatorre RJ, Penhune VB. Interactions between auditory and dorsal premotor cortex during synchronization to musical rhythms. Neuroimage. 2006;32:1771–1781. doi: 10.1016/j.neuroimage.2006.04.207.

- Chrobak JJ, Buzsaki G. Gamma oscillations in the entorhinal cortex of the freely behaving rat. J Neurosci. 1998;18:388–398. doi: 10.1523/JNEUROSCI.18-01-00388.1998.

- Dawe LA, Platt JR, Racine RJ. Rhythm perception and differences in accent weights for musicians and nonmusicians. Percept Psychophys. 1995;57:905–914. doi: 10.3758/bf03206805.

- de Venecia RK, Smelser CB, Lossman SD, McMullen NT. Complementary expression of parvalbumin and calbindin D-28k delineates subdivisions of the rabbit medial geniculate body. J Comp Neurol. 1995;359:595–612. doi: 10.1002/cne.903590407.

- Devergie A, Grimault N, Tillmann B, Berthommier F. Effect of rhythmic attention on the segregation of interleaved melodies. J Acoust Soc Am. 2010;128:EL1–EL7. doi: 10.1121/1.3436498.

- Dilley LC, Pitt MA. Altering context speech rate can cause words to appear or disappear. Psychol Sci. 2010;21:1664–1670. doi: 10.1177/0956797610384743.

- Fan J, Byrne J, Worden MS, Guise KG, McCandliss BD, Fossella J, et al. The relation of brain oscillations to attentional networks. J Neurosci. 2007;27:6197–6206. doi: 10.1523/JNEUROSCI.1833-07.2007.

- Freeman WJ, Rogers LJ, Holmes MD, Silbergeld DL. Spatial spectral analysis of human electrocorticograms including the alpha and gamma bands. J Neurosci Methods. 2000;95:111–121. doi: 10.1016/s0165-0270(99)00160-0.

- Fries P, Nikolic D, Singer W. The gamma cycle. Trends Neurosci. 2007;30:309–316. doi: 10.1016/j.tins.2007.05.005.

- Fujioka T, Trainor LJ, Large EW, Ross B. Internalized timing of isochronous sounds is represented in neuromagnetic beta oscillations. J Neurosci. 2012;32:1791–1802. doi: 10.1523/JNEUROSCI.4107-11.2012.

- Fujioka T, Trainor LJ, Ross B, Kakigi R, Pantev C. Musical training enhances automatic encoding of melodic contour and interval structure. J Cogn Neurosci. 2004;16:1010–1021. doi: 10.1162/0898929041502706.

- Gao L, Meng X, Ye C, Zhang H, Liu C, Dan Y, et al. Entrainment of slow oscillations of auditory thalamic neurons by repetitive sound stimuli. J Neurosci. 2009;29:6013–6021. doi: 10.1523/JNEUROSCI.5733-08.2009.

- Gou Z, Choudhury N, Benasich AA. Resting frontal gamma power at 16, 24 and 36 months predicts individual differences in language and cognition at 4 and 5 years. Behav Brain Res. 2011;220:263–270. doi: 10.1016/j.bbr.2011.01.048.

- Grahn JA, Brett M. Rhythm and beat perception in motor areas of the brain. J Cogn Neurosci. 2007;19:893–906. doi: 10.1162/jocn.2007.19.5.893.

- Grahn JA, Brett M. Impairment of beat-based rhythm discrimination in Parkinson’s disease. Cortex. 2009;45:54–61. doi: 10.1016/j.cortex.2008.01.005.

- Grahn JA, Rowe JB. Feeling the beat: premotor and striatal interactions in musicians and nonmusicians during beat perception. J Neurosci. 2009;29:7540–7548. doi: 10.1523/JNEUROSCI.2018-08.2009.

- Hashikawa T, Molinari M, Rausell E, Jones EG. Patchy and laminar terminations of medial geniculate axons in monkey auditory cortex. J Comp Neurol. 1995;362:195–208. doi: 10.1002/cne.903620204.

- Hennig H, Fleischmann R, Fredebohm A, Hagmayer Y, Nagler J, Witt A, et al. The nature and perception of fluctuations in human musical rhythms. PLoS One. 2011;6:e26457. doi: 10.1371/journal.pone.0026457.

- Herrero MT, Barcia C, Navarro JM. Functional anatomy of thalamus and basal ganglia. Childs Nerv Syst. 2002;18:386–404. doi: 10.1007/s00381-002-0604-1.

- Huxter J, Burgess N, O’Keefe J. Independent rate and temporal coding in hippocampal pyramidal cells. Nature. 2003;425:828–832. doi: 10.1038/nature02058.

- Jacobs J, Kahana MJ, Ekstrom AD, Fried I. Brain oscillations control timing of single-neuron activity in humans. J Neurosci. 2007;27:3839–3844. doi: 10.1523/JNEUROSCI.4636-06.2007.

- Jones EG. A new view of specific and nonspecific thalamocortical connections. Adv Neurol. 1998;77:49–71.

- Jones EG. The thalamic matrix and thalamocortical synchrony. Trends Neurosci. 2001;24:595–601. doi: 10.1016/s0166-2236(00)01922-6.

- Jones EG. Chemically defined parallel pathways in the monkey auditory system. Ann NY Acad Sci. 2003;999:218–233. doi: 10.1196/annals.1284.033.

- Kaas JH, Hackett TA. ‘What’ and ‘where’ processing in auditory cortex. Nat Neurosci. 1999;2:1045–1047. doi: 10.1038/15967.

- Kayser C, Montemurro MA, Logothetis NK, Panzeri S. Spike-phase coding boosts and stabilizes information carried by spatial and temporal spike patterns. Neuron. 2009;61:597–608. doi: 10.1016/j.neuron.2009.01.008.

- Kelso JAS, deGuzman GC, Holroyd T. The self-organized phase attractive dynamics of coordination. In: Babloyantz A, editor. Self-Organization, Emerging Properties, and Learning. 1990. pp. 41–62.

- Kimura A, Donishi T, Sakoda T, Hazama M, Tamai Y. Auditory thalamic nuclei projections to the temporal cortex in the rat. Neuroscience. 2003;117:1003–1016. doi: 10.1016/s0306-4522(02)00949-1.

- Klimesch W, Freunberger R, Sauseng P. Oscillatory mechanisms of process binding in memory. Neurosci Biobehav Rev. 2009 doi: 10.1016/j.neubiorev.2009.10.004.

- Konoike N, Kotozaki Y, Miyachi S, Miyauchi CM, Yomogida Y, Akimoto Y, et al. Rhythm information represented in the fronto-parieto-cerebellar motor system. Neuroimage. 2012;63:328–338. doi: 10.1016/j.neuroimage.2012.07.002.

- Kraus N, Nicol T. Brainstem origins for cortical ‘what’ and ‘where’ pathways in the auditory system. Trends Neurosci. 2005;28:176–181. doi: 10.1016/j.tins.2005.02.003.

- Krause V, Schnitzler A, Pollok B. Functional network interactions during sensorimotor synchronization in musicians and non-musicians. Neuroimage. 2010;52:245–251. doi: 10.1016/j.neuroimage.2010.03.081.

- Lakatos P, Chen CM, O’Connell MN, Mills A, Schroeder CE. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron. 2007;53:279–292. doi: 10.1016/j.neuron.2006.12.011.

- Lakatos P, Karmos G, Mehta AD, Ulbert I, Schroeder CE. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science. 2008;320:110–113. doi: 10.1126/science.1154735.

- Lakatos P, Shah AS, Knuth KH, Ulbert I, Karmos G, Schroeder CE. An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J Neurophysiol. 2005;94:1904–1911. doi: 10.1152/jn.00263.2005.

- Large EW. Resonating to musical rhythm: Theory and experiment. In: Grondin S, editor. The Psychology of Time. West Yorkshire: Emerald; 2008. pp. 189–213.

- Large EW, Jones MR. The dynamics of attending: How we track time varying events. Psychological Review. 1999;106:119–159.

- Large EW, Snyder JS. Pulse and meter as neural resonance. Ann NY Acad Sci. 2009;1169:46–57. doi: 10.1111/j.1749-6632.2009.04550.x.

- Lomber SG, Malhotra S. Double dissociation of ‘what’ and ‘where’ processing in auditory cortex. Nat Neurosci. 2008;11:609–616. doi: 10.1038/nn.2108.

- London JM. Hearing in Time: Psychological aspects of musical meter. New York, NY: Oxford University Press; 2004.

- Luo H, Poeppel D. Cortical oscillations in auditory perception and speech: evidence for two temporal windows in human auditory cortex. Front Psychol. 2012;3:170. doi: 10.3389/fpsyg.2012.00170.

- Magne C, Schon D, Besson M. Musician children detect pitch violations in both music and language better than nonmusician children: behavioral and electrophysiological approaches. J Cogn Neurosci. 2006;18:199–211. doi: 10.1162/089892906775783660.

- Makeig S, Delorme A, Westerfield M, Jung TP, Townsend J, Courchesne E, et al. Electroencephalographic brain dynamics following manually responded visual targets. PLoS Biol. 2004;2:e176. doi: 10.1371/journal.pbio.0020176.

- Marques C, Moreno S, Luis CS, Besson M. Musicians detect pitch violation in a foreign language better than nonmusicians: behavioral and electrophysiological evidence. J Cogn Neurosci. 2007;19:1453–1463. doi: 10.1162/jocn.2007.19.9.1453.

- McMullen NT, de Venecia RK. Thalamocortical patches in auditory neocortex. Brain Res. 1993;620:317–322. doi: 10.1016/0006-8993(93)90173-k.

- Meinz EJ, Hambrick DZ. Deliberate practice is necessary but not sufficient to explain individual differences in piano sight-reading skill: the role of working memory capacity. Psychol Sci. 2010;21:914–919. doi: 10.1177/0956797610373933.

- Munte TF, Nager W, Beiss T, Schroeder C, Altenmuller E. Specialization of the specialized: electrophysiological investigations in professional musicians. Ann NY Acad Sci. 2003;999:131–139. doi: 10.1196/annals.1284.014.

- Musacchia G, Sams M, Skoe E, Kraus N. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc Natl Acad Sci USA. 2007;104:15894–15898. doi: 10.1073/pnas.0701498104.

- Musacchia G, Strait D, Kraus N. Relationships between behavior, brainstem and cortical encoding of seen and heard speech in musicians and non-musicians. Hear Res. 2008;241:34–42. doi: 10.1016/j.heares.2008.04.013.

- Oliver DL, Huerta MF. Inferior and superior colliculi. In: Webster DB, Popper AN, Fay RR, editors. The Mammalian Auditory Pathway. New York: Springer-Verlag; 1992. pp. 168–221.

- Pandya DN, Rosene DL, Doolittle AM. Corticothalamic connections of auditory-related areas of the temporal lobe in the rhesus monkey. J Comp Neurol. 1994;345:447–471. doi: 10.1002/cne.903450311.

- Pantev C. Evoked and induced gamma-band activity of the human cortex. Brain Topogr. 1995;7:321–330. doi: 10.1007/BF01195258.

- Pantev C, Roberts LE, Schulz M, Engelien A, Ross B. Timbre-specific enhancement of auditory cortical representations in musicians. Neuroreport. 2001;12:169–174. doi: 10.1097/00001756-200101220-00041.

- Pantev C, Ross B, Fujioka T, Trainor LJ, Schulte M, Schulz M. Music and learning-induced cortical plasticity. Ann NY Acad Sci. 2003;999:438–450. doi: 10.1196/annals.1284.054.

- Parbery-Clark A, Strait DL, Kraus N. Context-dependent encoding in the auditory brainstem subserves enhanced speech-in-noise perception in musicians. Neuropsychologia. 2011;49:3338–3345. doi: 10.1016/j.neuropsychologia.2011.08.007.

- Patel AD, Daniele JR. An empirical comparison of rhythm in language and music. Cognition. 2003;87:B35–B45. doi: 10.1016/s0010-0277(02)00187-7.

- Quene H, Port RF. Effects of timing regularity and metrical expectancy on spoken-word perception. Phonetica. 2005;62:1–13. doi: 10.1159/000087222.

- Rankin SK, Large EW, Fink PW. Fractal tempo fluctuation and pulse prediction. Music Perception. 2009;26:401–403. doi: 10.1525/mp.2009.26.5.401.

- Repp BH. Sensorimotor synchronization: a review of the tapping literature. Psychon Bull Rev. 2005;12:969–992. doi: 10.3758/bf03206433.

- Saleh M, Reimer J, Penn R, Ojakangas CL, Hatsopoulos NG. Fast and slow oscillations in human primary motor cortex predict oncoming behaviorally relevant cues. Neuron. 2010;65:461–471. doi: 10.1016/j.neuron.2010.02.001.

- Schroeder CE, Lakatos P. Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 2009a;32:9–18. doi: 10.1016/j.tins.2008.09.012.

- Schroeder CE, Lakatos P. Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 2009b;32:9–18. doi: 10.1016/j.tins.2008.09.012.

- Schroeder CE, Lakatos P. The gamma oscillation: master or slave? Brain Topogr. 2009c;22:24–26. doi: 10.1007/s10548-009-0080-y.

- Schroeder CE, Lakatos P, Kajikawa Y, Partan S, Puce A. Neuronal oscillations and visual amplification of speech. Trends Cogn Sci. 2008a;12:106–113. doi: 10.1016/j.tics.2008.01.002.

- Schroeder CE, Lakatos P, Kajikawa Y, Partan S, Puce A. Neuronal oscillations and visual amplification of speech. Trends Cogn Sci. 2008b;12:106–113. doi: 10.1016/j.tics.2008.01.002.

- Schroeder CE, Wilson DA, Radman T, Scharfman H, Lakatos P. Dynamics of active sensing and perceptual selection. Curr Opin Neurobiol. 2010;20:172–176. doi: 10.1016/j.conb.2010.02.010.

- Shah AS, Bressler SL, Knuth KH, Ding M, Mehta AD, Ulbert I, et al. Neural dynamics and the fundamental mechanisms of event-related brain potentials. Cereb Cortex. 2004;14:476–483. doi: 10.1093/cercor/bhh009.

- Shahin AJ, Roberts LE, Chau W, Trainor LJ, Miller LM. Music training leads to the development of timbre-specific gamma band activity. Neuroimage. 2008;41:113–122. doi: 10.1016/j.neuroimage.2008.01.067.

- Shepard W, Ascher L. Effects of linguistic rule conformity on free recall in children and adults. Developmental Psychology. 1973;8:139.

- Sherman SM, Guillery RW. The role of the thalamus in the flow of information to the cortex. Philos Trans R Soc Lond B Biol Sci. 2002;357:1695–1708. doi: 10.1098/rstb.2002.1161.

- Shields JL, McHugh A, Martin JG. Reaction time to phoneme target as a function of rhythmic cues in continuous speech. Journal of Experimental Psychology. 1974;10:250–255.

- Singer W. Development and plasticity of cortical processing architectures. Science. 1995;270:758–764. doi: 10.1126/science.270.5237.758.

- Slezia A, Hangya B, Ulbert I, Acsady L. Phase advancement and nucleus-specific timing of thalamocortical activity during slow cortical oscillation. J Neurosci. 2011;31:607–617. doi: 10.1523/JNEUROSCI.3375-10.2011.

- Snyder JS, Large EW. Gamma-band activity reflects the metric structure of rhythmic tone sequences. Brain Res Cogn Brain Res. 2005;24:117–126. doi: 10.1016/j.cogbrainres.2004.12.014.

- Staples S. A paired-associates learning task utilizing music as the mediator: An exploratory study. Journal of Music Therapy. 1968;5:53–57.

- Stefanics G, Hangya B, Hernadi I, Winkler I, Lakatos P, Ulbert I. Phase entrainment of human delta oscillations can mediate the effects of expectation on reaction speed. J Neurosci. 2010;30:13578–13585. doi: 10.1523/JNEUROSCI.0703-10.2010.

- Steriade M, McCormick DA, Sejnowski TJ. Thalamocortical oscillations in the sleeping and aroused brain. Science. 1993;262:679–685. doi: 10.1126/science.8235588.

- Steriade M, Nunez A, Amzica F. A novel slow (< 1 Hz) oscillation of neocortical neurons in vivo: depolarizing and hyperpolarizing components. J Neurosci. 1993;13:3252–3265. doi: 10.1523/JNEUROSCI.13-08-03252.1993.

- Strait DL, Hornickel J, Kraus N. Subcortical processing of speech regularities underlies reading and music aptitude in children. Behav Brain Funct. 2011;7:44. doi: 10.1186/1744-9081-7-44.

- Tervaniemi M, Just V, Koelsch S, Widmann A, Schroger E. Pitch discrimination accuracy in musicians vs nonmusicians: an event-related potential and behavioral study. Exp Brain Res. 2005;161:1–10. doi: 10.1007/s00221-004-2044-5.

- Trainor LJ, Shahin AJ, Roberts LE. Understanding the benefits of musical training: effects on oscillatory brain activity. Ann NY Acad Sci. 2009;1169:133–142. doi: 10.1111/j.1749-6632.2009.04589.x.

- Uhlhaas PJ, Roux F, Rodriguez E, Rotarska-Jagiela A, Singer W. Neural synchrony and the development of cortical networks. Trends Cogn Sci. 2010;14:72–80. doi: 10.1016/j.tics.2009.12.002.

- Weener P. Language structure and free recall of verbal messages by children. Developmental Psychology. 1971;5:237–243.

- Will U, Berg E. Brain wave synchronization and entrainment to periodic acoustic stimuli. Neurosci Lett. 2007;424:55–60. doi: 10.1016/j.neulet.2007.07.036.

- Winer JA, Schreiner CE. The inferior colliculus. New York: Springer Science+Business Media; 2005. Projections from the Cochlear Nuclear Complex to the Inferior Colliculus; pp. 115–131.

- Zion Golumbic EM, Poeppel D, Schroeder CE. Temporal context in speech processing and attentional stream selection: A behavioral and neural perspective. Brain Lang. 2012 doi: 10.1016/j.bandl.2011.12.010.