Abstract

The relationship between nonverbal behavior and severity of depression was investigated by following depressed participants over the course of treatment and video recording a series of clinical interviews. Facial expressions and head pose were analyzed from video using manual and automatic systems. Both systems were highly consistent for FACS action units (AUs) and showed similar effects for change over time in depression severity. When symptom severity was high, participants made fewer affiliative facial expressions (AUs 12 and 15) and more non-affiliative facial expressions (AU 14). Participants also exhibited diminished head motion (i.e., amplitude and velocity) when symptom severity was high. These results are consistent with the Social Withdrawal hypothesis: that depressed individuals use nonverbal behavior to maintain or increase interpersonal distance. As individuals recover, they send more signals indicating a willingness to affiliate. The finding that automatic facial expression analysis was both consistent with manual coding and revealed the same pattern of findings suggests that automatic facial expression analysis may be ready to relieve the burden of manual coding in behavioral and clinical science.

Keywords: Depression, Multimodal, FACS, Facial Expression, Head Motion

1. Introduction

Unipolar depression is a common psychological disorder and one of the leading causes of disease burden worldwide [56]; its symptoms are broad and pervasive, impacting affect, cognition, and behavior [2]. As a channel of both emotional expression and interpersonal communication, nonverbal behavior is central to how depression presents and is maintained [1, 51, 76]. Several theories of depression address nonverbal behavior directly and make predictions about what patterns should be indicative of the depressed state.

The Affective Dysregulation hypothesis [15] interprets depression in terms of valence, which captures the positivity or negativity (i.e., pleasantness or aversiveness) of an emotional state. This hypothesis argues that depression is marked by deficient positivity and excessive negativity. Deficient positivity is consistent with many neurobiological theories of depression [22, 42] and highlights the symptom of anhedonia. Excessive negativity is consistent with many cognitive theories of depression [5, 8] and highlights the symptom of rumination. In support of the Affective Dysregulation hypothesis, with few exceptions [61], observational studies have found that depression is marked by reduced positive expressions, such as smiling and laughter [7, 13, 26, 38, 39, 47, 49, 62, 64, 66, 67, 75, 78, 80, 83]. Several studies also found that depression is marked by increased negative expressions [9, 26, 61, 74]. However, other studies found the opposite effect: that depression is marked by reduced negative expressions [38, 47, 62]. These studies, combined with findings of reduced emotional reactivity using self-report and physiological measures (see Bylsma et al. [10] for a review), led to the development of an alternative hypothesis.

The Emotion Context Insensitivity hypothesis [63] interprets depression in terms of deficient appetitive motivation, which directs behavior towards exploration and the pursuit of potential rewards. This hypothesis argues that depression is marked by a reduction of both positive and negative emotion reactivity. Reduced reactivity is consistent with many evolutionary theories of depression [34, 52, 60] and highlights the symptoms of apathy and psychomotor retardation. In support of this hypothesis, some studies found that depression is marked by reductions in general facial expressiveness [26, 38, 47, 62, 69, 79] and head movement [30, 48]. However, it is unclear how much of this general reduction is accounted for by reduced positive expressions, and it is problematic for the hypothesis that negative expressions are sometimes increased in depression.

As both hypotheses predict reductions in positive expressions, their main distinguishing feature is their treatment of negative facial expressions. The lack of a clear result on negative expressions is thus vexing. Three limitations of previous studies likely contributed to these mixed results.

First, most studies compared the behavior of depressed participants with that of non-depressed controls. This type of comparison is problematic because depression is highly correlated with numerous personality traits that influence nonverbal behavior. For instance, people with psychological disorders in general are more likely to have high neuroticism (more anxious, moody, and jealous) and people with depression in particular are more likely to have low extraversion (less outgoing, talkative, and energetic) [54]. Thus, many previous studies confounded depression with the stable personality traits that are correlated with it.

Second, many studies used experimental contexts of low sociality, such as viewing emotional stimuli while alone. However, many nonverbal behaviors, especially those involved in social signaling, are typically rare in such contexts [36]. Thus, many previous studies may have introduced a “floor effect” by using contexts in which relevant expressions were unlikely to occur in any of the experimental groups.

Finally, most studies examined a limited range of nonverbal behavior. Many aggregated multiple facial expressions into single categories such as “positive expressions” and “negative expressions,” while others used single facial expressions to represent each category (e.g., smiles for positive affect and brow-lowering for negative affect). These approaches assume that all facial expressions in each category are equivalent and will be affected by depression in the same way. However, in addition to expressing a person’s affective state, nonverbal behavior is also capable of regulating social interactions and communicating behavioral intentions [36, 37]. Perhaps individual nonverbal behaviors are increased or decreased in depression based on their social-communicative function. Thus, we propose an alternative hypothesis to explain the nonverbal behavior of depressed individuals, which we refer to as the Social Withdrawal hypothesis.

The Social Withdrawal hypothesis interprets depression in terms of affiliation: the motivation to cooperate, comfort, and request aid. This hypothesis argues that depression is marked by reduced affiliative behavior and increased non-affiliative behavior, which combine to maintain or increase interpersonal distance. This pattern is consistent with several evolutionary theories [1, 76] and highlights the symptom of social withdrawal. It also accounts for variability within the group of negative facial expressions, as each varies in its social-communicative function. Specifically, expressions that signal approachability should be decreased in depression and expressions that signal hostility should be increased. In support of this hypothesis, several ethological studies of depressed inpatients found that their nonverbal behavior was marked by hostility and a lack of social engagement [23, 33, 67, 79]. Findings of decreased smiling in depression also support this interpretation, as smiling is often an affiliative signal [43, 44, 53].

1.1. The Current Study

We address many of the limitations of previous work by: (1) following depressed participants over the course of treatment; (2) observing them during a clinical interview; and (3) measuring multiple, objectively-defined nonverbal behaviors. By comparing participants to themselves over time, we control for stable personality traits; by using a clinical interview, we provide a context that is both social and representative of typical clinician-patient interactions; and by examining head motion and multiple facial movements individually, we explore the possibility that depression may affect different aspects of nonverbal behavior in different ways.

We measure nonverbal behavior with both manual and automatic coding. In an effort to alleviate the substantial time burden of manual coding, interdisciplinary researchers have begun to develop automatic systems to supplement (and perhaps one day replace) human coders [84]. Initial applications to the study of depression are also beginning to appear [17, 48, 49, 59, 68, 69]. However, many of these applications are “black-box” procedures that are difficult to interpret, some require the use of invasive recording equipment, and most have not been validated against expert human coders.

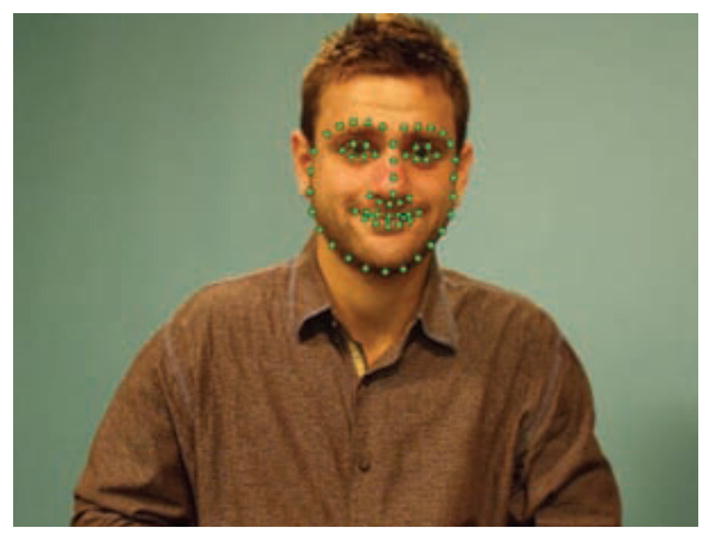

We examine patterns of head motion and the occurrence of four facial expressions that have been implicated as affiliative or non-affiliative signals (Figure 1). These expressions are defined by the Facial Action Coding System (FACS) [24] in terms of individual muscle movements called action units (AUs).

Figure 1.

Example images of AU 12, AU 14, AU 15, and AU 24

First, we examine the lip corner puller or smile expression (AU 12). As part of the prototypical expression of happiness, the smile is implicated in positive affect [25] and may signal affiliative intent [43, 44, 53]. Second, we examine the dimpler expression (AU 14). As part of the prototypical expression of contempt, the dimpler is implicated in negative affect [25] and may signal non-affiliative intent [43]. The dimpler can also counteract and obscure an underlying smile, potentially attenuating its affiliative signal and expressing embarrassment or ambivalence [26, 50]. Third, we examine the lip corner depressor expression (AU 15). As part of the prototypical expression of sadness, the lip corner depressor is implicated in negative affect [25] and may signal affiliative intent (especially the desire to show or receive empathy) [44]. Fourth, we examine the lip presser expression (AU 24). As part of the prototypical expression of anger, the lip presser is implicated in negative affect [25] and may signal non-affiliative intent [43, 44, 53]. Finally, we also examine the amplitude and velocity of head motion. Freedman [35] has argued that movement behavior should be seen as part of an effort to communicate. As such, head motion may signal affiliative intent.

We hypothesize that the depressed state will be marked by reduced amplitude and velocity of head motion, reduced activity of affiliative expressions (AUs 12 and 15), and increased activity of non-affiliative expressions (AUs 14 and 24). We also test the predictions of two established hypotheses. The Affective Dysregulation hypothesis is that depression will be marked by increased negativity (AUs 14, 15, and 24) and decreased positivity (AU 12), and the Emotion Context Insensitivity hypothesis is that the depressed state will be marked by reduced activity of all behavior (AUs 12, 14, 15, 24, and head motion).

2. Methods

2.1. Participants

The current study analyzed video of 33 adults from a clinical trial for treatment of depression [17]. At the time of study intake, all participants met DSM-IV [2] criteria for major depressive disorder [29]. In the clinical trial, participants were randomly assigned to receive either antidepressant medication (i.e., SSRI) or interpersonal psychotherapy (IPT); both treatments are empirically validated for use in depression [45].

Symptom severity was evaluated on up to four occasions, at weeks 1, 7, 13, and 21, by clinical interviewers (n=9, all female). Interviewers were not assigned to specific participants and varied in the number of interviews they conducted. Interviews were conducted using the Hamilton Rating Scale for Depression (HRSD) [40], which is a criterion measure for assessing severity of depression. All interviewers were expert in the HRSD, and inter-rater reliability was maintained above 0.90. HRSD scores of 15 or higher generally indicate moderate to severe depression, and scores of 7 or lower generally indicate a return to normal [65].

Interviews were recorded using four hardware-synchronized analogue cameras. The video data used in the current study came from a camera positioned roughly 15 degrees to the participant’s right; its images were digitized into 640×480 pixel arrays at a frame rate of 29.97 frames per second. Only the first three interview questions (about depressed mood, feelings of guilt, and suicidal ideation) were analyzed. These segments ranged in length from 28 to 242 seconds with an average of 100 seconds.

The automatic facial expression coding system was trained using data from all 33 participants. However, not all participants were included in the longitudinal depression analyses. In order to compare participants’ nonverbal behavior during high and low symptom severity, we only analyzed participants whose symptoms improved over the course of treatment. All participants had high symptom severity (HRSD ≥ 15) during their initial interview. “Responders” were those participants who had low symptom severity (HRSD ≤ 7) during a subsequent interview. Although 21 participants met this criterion, data from 2 participants could not be processed due to camera occlusion and gum-chewing. The final group was thus composed of 38 interviews from 19 participants; this group’s demographics were similar to those of the database (Table 1).
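The responder criterion above amounts to a simple filter over each participant's HRSD scores. The Python sketch below assumes scores are stored as hypothetical (week, score) pairs and, where more than one later interview falls at or below 7, picks the first such interview; which low-severity interview the study actually used in that case is not specified here, so that rule is an assumption.

```python
from typing import Dict, List, Optional, Tuple

HIGH_SEVERITY = 15  # HRSD >= 15: moderate to severe depression
LOW_SEVERITY = 7    # HRSD <= 7: return to normal


def select_responder_pair(scores: List[Tuple[int, float]]) -> Optional[Tuple[int, int]]:
    """Return (high-severity week, low-severity week) for one participant,
    or None if the participant does not meet the responder criterion."""
    ordered = sorted(scores)  # chronological order by week
    if not ordered or ordered[0][1] < HIGH_SEVERITY:
        return None  # the initial interview must show high severity
    first_week = ordered[0][0]
    for week, score in ordered[1:]:
        if score <= LOW_SEVERITY:
            return (first_week, week)  # assumption: first low-severity interview
    return None


def select_responders(
    data: Dict[str, List[Tuple[int, float]]]
) -> Dict[str, Tuple[int, int]]:
    """Map each responder's (hypothetical) ID to its pair of interview weeks."""
    pairs = {}
    for participant, scores in data.items():
        pair = select_responder_pair(scores)
        if pair is not None:
            pairs[participant] = pair
    return pairs
```

Each responder contributes exactly two interviews, which is why 19 responders yield 38 interviews.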

Table 1.

Participant groups and demographic data

| | Database | Responders |
|---|---|---|
| Number of Subjects | 33 | 19 |
| Number of Sessions | 69 | 38 |
| Average Age (years) | 42.3 | 42.1 |
| Gender (% female) | 66.7% | 63.2% |
| Ethnicity (% white) | 87.9% | 89.5% |
| Medicated (% SSRI) | 45.5% | 42.1% |

2.2. Manual FACS Coding

The Facial Action Coding System (FACS) [24] is the current gold standard for facial expression annotation. FACS decomposes facial expressions into component parts called action units (AUs). Action units are anatomically-based facial movements that correspond to the contraction of specific muscles. For example, AU 12 indicates contractions of the zygomatic major muscle and AU 14 indicates contractions of the buccinator muscle. AUs may occur alone or in combination to form more complex expressions.

The facial behavior of all participants was FACS coded from video by certified and experienced coders. Expression onset, apex, and offset were coded for 17 commonly occurring AUs. Overall inter-observer agreement for AU occurrence, quantified by Cohen’s Kappa [16], was 0.75; according to convention, this can be considered good agreement [31]. In order to minimize the number of analyses required, the current study analyzed four of these AUs that are conceptually related to emotion and social signaling (Figure 1).
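Cohen's kappa corrects the observed agreement between two coders for the agreement expected by chance from each coder's marginal rates. A minimal sketch for a single AU, assuming each coder's output is a frame-by-frame 0/1 occurrence series (how agreement was pooled across the 17 coded AUs is not described here):

```python
import numpy as np


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two binary (AU present/absent) frame-level codings."""
    a = np.asarray(coder_a, dtype=bool)
    b = np.asarray(coder_b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("codings must cover the same frames")
    p_observed = np.mean(a == b)             # proportion of frames in agreement
    p_a, p_b = a.mean(), b.mean()            # each coder's base rate
    p_expected = p_a * p_b + (1 - p_a) * (1 - p_b)  # chance agreement
    if p_expected == 1.0:
        return float("nan")  # kappa is undefined when both codings are constant
    return float((p_observed - p_expected) / (1 - p_expected))
```

For example, two coders who agree on 6 of 8 frames and each mark the AU present in half the frames obtain kappa = (0.75 - 0.5) / (1 - 0.5) = 0.5.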

2.3. Automatic FACS Coding

2.3.1. Face Registration

In order to register two facial images (i.e., the “reference image” and the “sensed image”), a set of facial landmark points is utilized. Facial landmark points indicate the location of important facial components (e.g., eye corners, nose tip, jaw line). Active Appearance Models (AAM) were used to track sixty-six facial landmark points (Figure 2) in the video. AAM combines the shape and texture variation of an image into a single statistical model that can be fitted to a new facial image [19]. Approximately 3% of video frames were manually annotated for each subject to train the AAMs. The tracked images were automatically aligned using a gradient-descent AAM fitting algorithm [57]. In order to enable comparison between images, differences in scale, translation, and rotation were removed using a two-dimensional similarity transformation.
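The final alignment step can be illustrated with a least-squares similarity transformation (Umeyama's closed-form solution) that maps a sensed set of 66 landmarks onto a reference shape. This sketch covers only the similarity step, not AAM fitting; the use of Umeyama's method and the function name `similarity_align` are assumptions for illustration.

```python
import numpy as np


def similarity_align(sensed, reference):
    """Align sensed (N, 2) landmarks to reference (N, 2) landmarks with the
    least-squares scale, rotation, and translation (Umeyama's method)."""
    src = np.asarray(sensed, dtype=float)
    dst = np.asarray(reference, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst      # remove translation
    cov = dst_c.T @ src_c / len(src)               # 2x2 cross-covariance
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[1, 1] = -1.0                             # avoid a reflection
    R = U @ D @ Vt                                 # optimal rotation
    var_src = np.sum(src_c ** 2) / len(src)
    scale = np.trace(np.diag(S) @ D) / var_src     # optimal isotropic scale
    t = mu_dst - scale * R @ mu_src                # optimal translation
    aligned = scale * src @ R.T + t
    return aligned, scale, R, t
```

Because the transformation has only four degrees of freedom, it removes head size, position, and in-plane rotation while preserving the shape changes produced by facial expressions.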

Figure 2.

AAM Facial Landmark Tracking [19]

2.3.2. Feature Extraction

Facial expressions appear as changes in facial shape (e.g., curvedness of the mouth) and appearance (e.g., wrinkles and furrows). In order to describe facial expression efficiently, it would be helpful to utilize a representation that can simultaneously describe the shape and appearance of an image. Local Binary Pattern Histogram (LBPH), Histogram of Oriented Gradients (HOG), and Localized Gabor are some well-known features that can be used for this purpose [77]. We used Localized Gabor features for texture representation and discrimination as they have been found to be relatively robust to alignment error [14]. A bank of 40 Gabor filters (i.e., 5 scales and 8 orientations) was applied to regions defined around the 66 landmark points, which resulted in 2640 Gabor features per frame.
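A bank of 40 filters evaluated at 66 points yields 40 × 66 = 2640 values per frame. The sketch below assumes complex Gabor kernels with hypothetical wavelengths, bandwidth, and kernel size, and takes the response magnitude centered at each landmark rather than over a larger region; the parameters used in the study are not reported here.

```python
import numpy as np


def gabor_kernel(wavelength, theta, sigma, size):
    """Complex Gabor kernel: Gaussian envelope times an oriented complex sinusoid."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + y_rot ** 2) / (2 * sigma ** 2))
    carrier = np.exp(1j * 2 * np.pi * x_rot / wavelength)
    return envelope * carrier


def gabor_bank(n_scales=5, n_orientations=8, size=41):
    """5 scales x 8 orientations = 40 kernels (hypothetical scale spacing)."""
    bank = []
    for s in range(n_scales):
        wavelength = 4.0 * np.sqrt(2) ** s   # hypothetical: half-octave steps
        sigma = 0.56 * wavelength            # hypothetical: ~1-octave bandwidth
        for o in range(n_orientations):
            bank.append(gabor_kernel(wavelength, o * np.pi / n_orientations, sigma, size))
    return bank


def gabor_features(image, landmarks, bank):
    """Magnitude of each kernel's response centered at each (x, y) landmark."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    half = bank[0].shape[0] // 2
    padded = np.pad(img, half, mode="edge")
    pts = np.rint(np.asarray(landmarks, dtype=float)).astype(int)
    xs = np.clip(pts[:, 0], 0, w - 1)
    ys = np.clip(pts[:, 1], 0, h - 1)
    feats = []
    for x, y in zip(xs, ys):
        # Pixel (x, y) of the original image sits at (x + half, y + half) after padding.
        patch = padded[y:y + 2 * half + 1, x:x + 2 * half + 1]
        for kernel in bank:
            feats.append(np.abs(np.sum(patch * kernel)))
    return np.asarray(feats)
```

Using the magnitude of the complex response makes each feature insensitive to the exact phase of local texture, which is one reason Gabor magnitudes tolerate small alignment errors.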

2.3.3. Dimensionality Reduction

In many pattern classification applications, the number of features is extremely large. Their high dimensionality makes analysis and classification a complex task. In order to extract the most important or discriminant features of the data, several linear techniques, such as Principal Component Analysis (PCA) and Independent Component Analysis (ICA), and nonlinear techniques, such as Kernel PCA and Manifold Learning, have been proposed [32]. We used manifold learning to reduce the dimensionality of the Gabor features. The idea behind this technique is that the data points lie on a low-dimensional manifold that is embedded in a high-dimensional space [11]. Specifically, we utilized Laplacian Eigenmap [6] to extract the low-dimensional features and then, similar to Mahoor et al. [55], we applied spectral regression to calculate the corresponding projection function for each AU. Through this step, the dimensionality of the Gabor data was reduced to 29 features per frame.
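A sketch of the two steps, assuming an unsupervised heat-kernel k-nearest-neighbor graph for the Laplacian Eigenmap and ridge-regularized least squares for the spectral regression. The per-AU projections described above presumably incorporate label information, which this sketch omits; the neighborhood size, kernel-width heuristic, and ridge penalty are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh


def laplacian_eigenmap(X, n_components=29, n_neighbors=10, t=None):
    """Embed rows of X (frames x features) using a heat-kernel k-NN graph."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    sq = np.sum(X ** 2, axis=1)
    # Pairwise squared Euclidean distances, clipped at 0 against round-off.
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    np.fill_diagonal(dist2, 0.0)
    if t is None:
        t = float(np.median(dist2[dist2 > 0]))  # hypothetical width heuristic
    masked = dist2.copy()
    np.fill_diagonal(masked, np.inf)            # a frame is not its own neighbor
    neighbors = np.argsort(masked, axis=1)[:, :n_neighbors]
    rows = np.repeat(np.arange(n), n_neighbors)
    cols = neighbors.ravel()
    W = np.zeros((n, n))
    W[rows, cols] = np.exp(-dist2[rows, cols] / t)
    W = np.maximum(W, W.T)                      # symmetrize the graph
    D = np.diag(W.sum(axis=1))
    L = D - W                                   # graph Laplacian
    # Generalized problem L y = lambda D y; eigenvalues are returned ascending.
    _, vecs = eigh(L, D)
    return vecs[:, 1:n_components + 1]          # skip the trivial constant vector


def spectral_regression(X, Y, alpha=1.0):
    """Ridge-regress the embedding Y onto features X to get a linear projection."""
    X = np.asarray(X, dtype=float)
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias column
    A = Xb.T @ Xb + alpha * np.eye(Xb.shape[1])
    return np.linalg.solve(A, Xb.T @ Y)


def project(X_new, P):
    """Apply the learned projection to new frames (e.g., test data)."""
    X_new = np.asarray(X_new, dtype=float)
    return np.hstack([X_new, np.ones((X_new.shape[0], 1))]) @ P
```

The regression step matters because the eigenmap alone only embeds the training frames; the linear projection extends the mapping to unseen frames. For full-length videos, a sparse graph and sparse eigensolver would be needed in place of these dense matrices.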

2.3.4. Classifier Training

In machine learning, feature classification aims to assign each input value to one of a given set of classes. A well-known classification technique is the Support Vector Machine (SVM). The SVM classifier applies the “kernel trick,” which evaluates dot products in a transformed feature space through a kernel function rather than mapping the data explicitly, keeping computational loads reasonable. The kernel functions (e.g., linear, polynomial, and radial basis function) enable the SVM algorithm to fit a maximum-margin hyperplane in the transformed high-dimensional feature space. Radial basis function kernels were used in the current study. To build a training set, we randomly sampled positive and negative frames from each subject. To train and test the SVM classifiers, we used the LIBSVM library [12]. To find the best classifier and kernel parameters (i.e., C and γ, respectively), we used a “grid-search” procedure during leave-one-out cross-validation [46].
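The training procedure can be sketched with scikit-learn, whose `SVC` class is built on LIBSVM. The sketch assumes that "leave-one-out" refers to holding out one subject at a time, scores each fold by AUC, and uses a hypothetical parameter grid and sample size; the study's actual grid and sampling sizes are not given here.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, LeaveOneGroupOut
from sklearn.svm import SVC


def sample_training_frames(labels, subjects, n_per_class, seed=0):
    """Randomly pick up to n_per_class negative and positive frames per subject."""
    labels = np.asarray(labels)
    subjects = np.asarray(subjects)
    rng = np.random.default_rng(seed)
    chosen = []
    for subject in np.unique(subjects):
        for cls in (0, 1):
            idx = np.flatnonzero((subjects == subject) & (labels == cls))
            take = min(n_per_class, idx.size)
            if take > 0:
                chosen.extend(rng.choice(idx, size=take, replace=False).tolist())
    return np.sort(np.asarray(chosen, dtype=int))


def train_au_classifier(features, labels, subjects,
                        c_grid=(0.1, 1.0, 10.0), gamma_grid=(0.01, 0.1, 1.0)):
    """Grid-search C and gamma of an RBF-kernel SVM, holding out one subject per fold."""
    search = GridSearchCV(
        SVC(kernel="rbf"),
        param_grid={"C": list(c_grid), "gamma": list(gamma_grid)},
        cv=LeaveOneGroupOut(),   # each fold tests on one unseen subject
        scoring="roc_auc",
    )
    search.fit(features, labels, groups=subjects)
    return search
```

Holding out whole subjects, rather than individual frames, keeps highly correlated frames from the same person out of both the training and test folds, so the selected parameters reflect generalization to new people.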

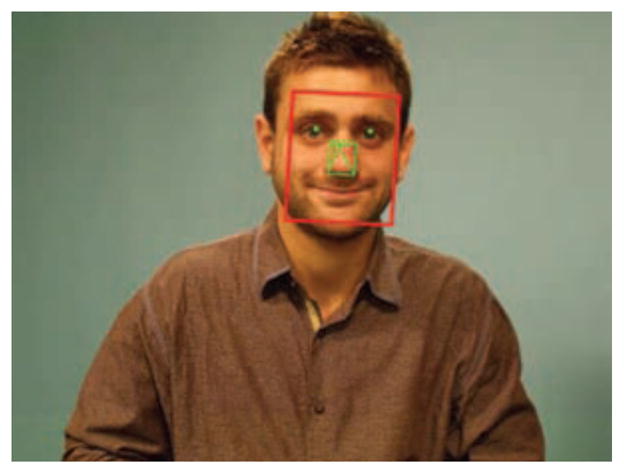

2.4. Automatic Head Pose Analysis

A cylinder-based 3D head tracker (CSIRO tracker) [20] was used to model rigid head motion (Figure 3). Angles of the head in the horizontal and vertical directions were selected to measure head pose and motion. These directions correspond to the meaningful motion of head nods and head turns. In a recent behavioral study, the CSIRO tracker demonstrated high concurrent validity with alternative methods for head tracking and revealed differences in head motion between distressed and non-distressed intimate partners [41].

Figure 3.

CSIRO Head Pose Tracking [20]

For each video frame, the tracker output six degrees of freedom of rigid head motion or an error message that the frame could not be tracked. Overall, 4.61% of frames could not be tracked. To evaluate the quality of the tracking, we visually reviewed the tracking results overlaid on the video. Visual review indicated poor tracking in 11.37% of frames; these frames were excluded from further analysis. To prevent biased measurements due to missing data, head motion measures were computed for the longest segment of continuously valid frames in each interview. The mean duration of these segments was 55.00 and 37.31 seconds for high and low severity interviews, respectively.
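Selecting the longest run of valid frames is a single scan over the interview. A sketch, assuming a frame counts as valid when the tracker returned a pose and the visual review did not flag it; the frame rate of 29.97 frames per second comes from the recording description above, and the function names are hypothetical.

```python
FPS = 29.97  # frame rate of the digitized video


def valid_frames(tracked, flagged_poor):
    """A frame is valid if it was tracked and not flagged in visual review."""
    return [t and not p for t, p in zip(tracked, flagged_poor)]


def longest_valid_segment(valid):
    """Half-open (start, stop) indices of the longest run of valid frames."""
    best_start, best_len = 0, 0
    run_start = None
    for i, ok in enumerate(valid):
        if ok:
            if run_start is None:
                run_start = i          # a new run begins here
            length = i - run_start + 1
            if length > best_len:
                best_start, best_len = run_start, length
        else:
            run_start = None           # an invalid frame ends the current run
    return best_start, best_start + best_len


def segment_duration(start, stop, fps=FPS):
    """Duration of a segment in seconds."""
    return (stop - start) / fps
```

Restricting each measure to one unbroken segment avoids spurious velocity spikes at the boundaries of dropped frames.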

For the measurement of head motion, head angles were converted into angular amplitude and angular velocity. Angular amplitude corresponds to the amount of displacement and was obtained by subtracting the mean head angle across the segment from the head angle for each frame; angular velocity (i.e., the derivative of displacement) corresponds to the speed of displacement for each head angle during the segment. Because the duration of each segment was variable, the obtained scores were normalized by the duration of the corresponding segment.
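A sketch of these measures for one head angle (pitch or yaw), combined with the root mean square summary described under Measures. It assumes that velocity is the frame-to-frame difference scaled to units per second and that normalization means dividing each root mean square value by segment duration in seconds; both readings are assumptions, since the exact formulas are not given in the text.

```python
import numpy as np

FPS = 29.97  # frame rate of the digitized video


def rms(x):
    """Root mean square of a varying quantity."""
    x = np.asarray(x, dtype=float)
    return float(np.sqrt(np.mean(x ** 2)))


def head_motion_measures(angles, fps=FPS):
    """Duration-normalized RMS amplitude and velocity for one head angle
    over the longest valid segment (assumed formulas)."""
    angles = np.asarray(angles, dtype=float)
    if angles.size < 2:
        raise ValueError("need at least two frames")
    duration = angles.size / fps              # segment length in seconds
    amplitude = angles - angles.mean()        # displacement around the segment mean
    velocity = np.diff(angles) * fps          # frame-to-frame derivative, per second
    return {
        "amplitude": rms(amplitude) / duration,
        "velocity": rms(velocity) / duration,
    }
```

Centering on the segment mean removes each participant's habitual head orientation, so the amplitude reflects movement rather than posture.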

2.5. Measures

To capture how often different facial expressions occurred, we calculated the base rate for each AU. Base rate was calculated as the number of frames for which the AU was present divided by the total number of frames. This measure was calculated for each AU as determined by both manual and automatic FACS coding. To represent head motion, average angular amplitude and velocity were calculated using root mean square, which is a measure of the magnitude of a varying quantity.
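Base rate is the proportion of frames in a session in which an AU is coded as present. A minimal sketch, assuming per-frame 0/1 occurrence codes (manual or automatic) for each AU, keyed by hypothetical AU numbers:

```python
import numpy as np


def base_rate(au_present):
    """Proportion of frames in which the AU was coded as present."""
    present = np.asarray(au_present, dtype=bool)
    if present.size == 0:
        raise ValueError("session contains no frames")
    return float(present.mean())


def session_base_rates(frames_by_au):
    """Base rate for every AU in one session, e.g. {12: [...], 14: [...]}."""
    return {au: base_rate(frames) for au, frames in frames_by_au.items()}
```

Because base rate is a proportion, sessions of different lengths (here, 28 to 242 seconds) can be compared directly.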

2.6. Data Analysis

The primary analyses compared the nonverbal behavior of participants at two time points: when they were severely depressed and when they had recovered. Because base rates for the AUs were highly skewed (each p<0.05 by Shapiro-Wilk test [72]), differences with severity were tested using a non-parametric test, the Wilcoxon signed-rank test [82]. Results using parametric tests (i.e., paired t-tests) were comparable.
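With SciPy, the normality check and the paired comparisons described above can be sketched as follows. The function name is hypothetical, and the values in the usage check are invented base rates for eight participants, not data from this study.

```python
import numpy as np
from scipy.stats import shapiro, ttest_rel, wilcoxon


def compare_severity(high, low):
    """Paired comparison of one measure between each participant's
    high-severity and low-severity interviews."""
    high = np.asarray(high, dtype=float)
    low = np.asarray(low, dtype=float)
    if high.shape != low.shape or high.size < 3:
        raise ValueError("need matched pairs from at least three participants")
    _, p_shapiro_high = shapiro(high)     # small p suggests non-normality
    _, p_shapiro_low = shapiro(low)
    w_stat, p_wilcoxon = wilcoxon(high, low)  # non-parametric, used for reporting
    t_stat, p_ttest = ttest_rel(high, low)    # parametric check
    return {
        "shapiro_p_high": float(p_shapiro_high),
        "shapiro_p_low": float(p_shapiro_low),
        "wilcoxon_stat": float(w_stat),
        "wilcoxon_p": float(p_wilcoxon),
        "t_stat": float(t_stat),
        "ttest_p": float(p_ttest),
    }
```

Because each participant serves as their own control, both tests operate on within-person differences, which is what removes stable personality traits from the comparison.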

We compared manual and automatic FACS coding in two ways. First, we measured the degree to which both coding sources detected the same AUs in each frame. This frame-level reliability was quantified using area under the ROC curve (AUC) [28], which corresponds to the automatic system’s discrimination (the likelihood that it will correctly discriminate between a randomly-selected positive example and a randomly-selected negative example). Additionally, we measured the degree to which the two methods were consistent in measuring base rate for each session. This session-level reliability was quantified using intraclass correlation (ICC) [73], which describes the resemblance of units in a group.
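Both reliability indices can be sketched in a few lines. AUC is computed here through its rank (Mann-Whitney) formulation, which equals the probability that a randomly selected positive frame receives a higher classifier score than a randomly selected negative frame. The text does not specify which ICC form was used; the sketch assumes ICC(3,1) (two-way mixed, consistency, single measures), with sessions as rows and coding methods as columns.

```python
import numpy as np
from scipy.stats import rankdata


def auc(scores, labels):
    """Area under the ROC curve via the Mann-Whitney rank formulation."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    n_pos = int(labels.sum())
    n_neg = labels.size - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both positive and negative frames")
    ranks = rankdata(scores)  # average ranks handle tied scores
    return float((ranks[labels].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg))


def icc_3_1(ratings):
    """ICC(3,1) for an (n sessions x k methods) array of base rates (assumed form)."""
    Y = np.asarray(ratings, dtype=float)
    n, k = Y.shape
    grand = Y.mean()
    ss_rows = k * np.sum((Y.mean(axis=1) - grand) ** 2)  # between sessions
    ss_cols = n * np.sum((Y.mean(axis=0) - grand) ** 2)  # between methods
    ss_total = np.sum((Y - grand) ** 2)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return float((ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error))
```

The two indices answer different questions: AUC asks whether the automatic system ranks individual frames correctly, while the ICC asks whether the two methods order sessions by base rate consistently, which is the level at which the severity comparisons were made.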

3. Results

Manual and automatic FACS coding demonstrated high frame-level and session-level reliability (Table 2) and revealed similar patterns between high and low severity interviews (Table 3). Manual analysis found that two AUs were reduced during high severity interviews (AUs 12 and 15) and one AU was increased (AU 14); automatic analysis replicated these results except that the difference for AU 12 did not reach statistical significance. Automatic head pose analysis also revealed significant differences between interviews. Head amplitude and velocity were decreased in high severity interviews (Table 4).

Table 2.

Reliability between manual and automatic FACS coding

| Facial Expression | Frame-level | Session-level |
|---|---|---|
| AU 12 | AUC = 0.82 | ICC = 0.93 |
| AU 14 | AUC = 0.88 | ICC = 0.88 |
| AU 15 | AUC = 0.78 | ICC = 0.90 |
| AU 24 | AUC = 0.95 | ICC = 0.94 |

Table 3.

Facial expression during high and low severity interviews (average base rate)

| Facial Expression | Manual: High Severity | Manual: Low Severity | Automatic: High Severity | Automatic: Low Severity |
|---|---|---|---|---|
| AU 12 | 19.1% | 39.2% * | 22.3% | 31.2% |
| AU 14 | 24.7% | 13.9% * | 27.8% | 17.0% * |
| AU 15 | 5.9% | 11.9% * | 8.5% | 16.5% * |
| AU 24 | 12.3% | 14.2% | 18.4% | 16.9% |

Note. * p<0.05 vs. high severity interview by Wilcoxon signed-rank test.

Table 4.

Head motion during high and low severity interviews (average root mean square)

| Head Motion | High Severity | Low Severity |
|---|---|---|
| Vertical Amplitude | 0.0013 | 0.0029 * |
| Vertical Velocity | 0.0001 | 0.0005 ** |
| Horizontal Amplitude | 0.0014 | 0.0034 ** |
| Horizontal Velocity | 0.0002 | 0.0005 ** |

Note. * p<0.05, ** p<0.01 vs. high severity interviews by Wilcoxon signed-rank test.

4. Discussion

Facial expression and head motion tracked changes in symptom severity. They systematically differed between times when participants were severely depressed (Hamilton score of 15 or greater) and those during which depression abated (Hamilton score of 7 or less). While some specific findings were consistent with all three alternative hypotheses, support was strongest for Social Withdrawal.

According to the Affective Dysregulation hypothesis, depression entails a deficit in the ability to experience positive emotion and enhanced potential for negative emotion. Consistent with this hypothesis, when symptom severity was high, AU 12 occurred less often and AU 14 occurred more often. Participants smiled less and showed expressions related to contempt and embarrassment more often when severity was high. When depression abated, smiling increased and expressions related to contempt and embarrassment decreased.

There were, however, two findings contrary to Affective Dysregulation. One, AU 24, which is associated with anger, failed to vary with depression severity. Because Affective Dysregulation makes no predictions about specific emotions, this negative finding might be discounted. Anger may not be among the negative emotions experienced in interviews. A second contrary finding is not so easily dismissed. AU 15, which is associated with both sadness and positive affect suppression, was decreased when depression was severe and increased when depression abated. An increase in negative emotion when depression abates runs directly counter to Affective Dysregulation. Thus, while the findings for AU 12 and AU 14 were supportive of Affective Dysregulation, the findings for AU 15 were not. Moreover, the findings for head motion are not readily interpreted in terms of this hypothesis. Because head motion could be related to either positive or negative emotion, this finding is left unaccounted for. Thus, while some findings were consistent with the hypothesis, others importantly were not.

According to the Emotion Context Insensitivity hypothesis, depression entails a curtailment of both positive and negative emotion reactivity. Consistent with this hypothesis, when symptom severity was high, AU 12 and AU 15 occurred less often. Participants smiled less and showed expressions related to sadness less often when severity was high. The decreased head motion in depression was consistent with the lack of reactivity assumed by the hypothesis. When depression abated, smiling, expressions related to sadness, and head motion all increased. These findings are consistent with Emotion Context Insensitivity.

However, two findings ran contrary to this hypothesis. AU 24 failed to vary with depression level, and AU 14 actually increased when participants were depressed. While the lack of findings for AU 24 might be dismissed, for reasons noted above, the results for AU 14 are difficult to explain from the perspective of Emotion Context Insensitivity. In previous research, support for this hypothesis has come primarily from self-report and physiological measures [10]. The divergence between those findings and the present behavioral findings could reflect a lack of coherence among self-report, physiological, and expressive measures of emotion. Dissociations between expressive behavior and physiology have been observed in other contexts [58]; depression may be another. Further research will be needed to explore this question.

The Social Withdrawal hypothesis emphasizes behavioral intention to affiliate rather than affective valence. According to this hypothesis, affiliative behavior decreases and non-affiliative behavior increases when severity is high. Decreased head motion together with decreased occurrence of AUs 12 and 15 and increased occurrence of AU 14 all were consistent with this hypothesis. When depression severity was high, participants smiled less, showed expressions related to sadness less often, showed expressions related to contempt and embarrassment more often, and showed smaller and slower head movements. When depression abated, smiling, expressions related to sadness, and head motion increased, while expressions related to contempt and embarrassment decreased. The only mark against this hypothesis is the failure to find a change in AU 24. As a smile control, AU 24 may occur less often than AU 14 and other smile controls. In a variety of contexts, AU 14 has been the most noticed smile control [27, 61]. Little is known, however, about the relative occurrence of different smile controls.

These results suggest that social signaling is critical to understanding the link between nonverbal behavior and depression. When depressed, participants signaled intention to avoid or minimize affiliation. Displays of this sort may contribute to the persistence of depression. It has long been known that depressed behavior is experienced as aversive by non-depressed persons [21]. Expressive behavior in depression may discourage social interaction and support that are important to recovery. Ours may be the first study to reveal specific displays responsible for the relative isolation that depression achieves.

Previous work called attention to social skills deficits [70] as the source of interpersonal rejection in depression [71]. According to this view, depressed persons are less adept at communicating their wishes, needs, and intentions with others. This view is not mutually exclusive with the Social Withdrawal hypothesis. Depressed persons might be less effective in communicating their wants and desires and less effective in resolving conflict, yet also signal lack of intention to socially engage with others to accomplish such goals. From a treatment perspective, it would be important to assess which of these factors were contributing to the social isolation experienced in depression.

The automatic facial expression analysis system was consistent with manual coding in terms of frame-level discrimination. The average AUC score across all AUs was 0.86, which is comparable with or better than other studies that detected AUs in spontaneous video [4, 18, 81, 85]. The automatic and manual systems were also highly consistent in terms of measuring the base rate of each AU in the interview session; the average ICC score across all AUs was 0.91. Furthermore, the significance tests using automatic coding were very similar to those using manual coding. The only difference was that the test of AU 12 base rate was significant for manual but not automatic coding, although the findings were in the same direction. To our knowledge, this is the first study to demonstrate that automatic and manual FACS coding are fungible; automatic and manual coding reveal the same patterns. These findings suggest automatic coding may be a valid alternative to manual coding. If so, this would free research from the severe constraints of manual coding. Manual coding can require hours of a coder's time to label a minute or so of video, and coders must first complete a lengthy training period of about 100 hours to gain competence in FACS.

The current study represents a step towards comprehensive description of nonverbal behavior in depression (see also [26, 38]). Analyzing individual AUs allowed us to identify a new pattern of results with theoretical and clinical implications. However, this fine-grained analysis also makes interpretation challenging because we cannot definitively say what an individual AU means. We address this issue by emphasizing the relation of AUs to broad dimensions of behavior (i.e., valence and affiliation). We also selected AUs that are relatively unambiguous. For example, we did not include the brow lowerer (AU 4) because it is implicated in fear, sadness, and anger [25] as well as concentration [3] and is thus ambiguous.

In conclusion, we found that high depression severity was marked by a decrease in specific nonverbal behaviors (AU 12, AU 15, and head motion) and an increase in at least one other (AU 14). These results help to parse the ambiguity of previous findings regarding negative facial expressions in depression. Rather than a global increase or decrease in such expressions, these results suggest that depression affects them differentially based on their social-communicative value: affiliative expressions are reduced in depression and non-affiliative expressions are increased. This pattern supports the Social Withdrawal hypothesis: that nonverbal behavior in depression serves to maintain or increase interpersonal distance, thus facilitating social withdrawal. Additionally, we found that automatic coding was highly consistent with manual coding, yielded convergent findings, and appears ready for independent use in clinical research.

Highlights.

We investigated the relation between nonverbal behavior and severity of depression

When symptoms were severe, participants showed less AU 12 and AU 15 and more AU 14

When symptoms were severe, participants' head motion was reduced in size and speed

The pattern of findings was highly consistent for automated and manual measurements

The findings support the hypothesis of nonverbal social withdrawal in depression

Acknowledgments

The authors wish to thank Nicole Siverling and Shawn Zuratovic for their generous assistance. This work was supported in part by US National Institutes of Health grants MH51435, MH65376, and MH096951 to the University of Pittsburgh. Any opinions, conclusions, or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Institutes of Health.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Jeffrey M. Girard, Email: jmg174@pitt.edu.

Jeffrey F. Cohn, Email: jeffcohn@cs.cmu.edu.

Mohammad H. Mahoor, Email: mohammad.mahoor@du.edu.

S. Mohammad Mavadati, Email: seyedmohammad.mavadati@du.edu.

Zakia Hammal, Email: hammal@yahoo.fr.

Dean P. Rosenwald, Email: dpr10@pitt.edu.

References

- 1. Allen NB, Badcock PBT. The Social Risk Hypothesis of Depressed Mood: Evolutionary, Psychosocial, and Neurobiological Perspectives. Psychological Bulletin. 2003;129:887–913. doi: 10.1037/0033-2909.129.6.887.

- 2. American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 4th ed. Washington, DC: 1994.

- 3. Baron-Cohen S. Mind reading: The interactive guide to emotion. Jessica Kingsley Publishers; London: 2003.

- 4. Bartlett MS, Littlewort G, Frank MG, Lainscsek C, Fasel IR, Movellan JR. Automatic Recognition of Facial Actions in Spontaneous Expressions. Journal of Multimedia. 2006;1:22–35.

- 5. Beck AT. Depression: Clinical, experimental, and theoretical aspects. Harper and Row; New York: 1967.

- 6. Belkin M, Niyogi P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Computation. 2003;15:1373–1396.

- 7. Berenbaum H, Oltmanns TF. Emotional experience and expression in schizophrenia and depression. Journal of Abnormal Psychology. 1992;101:37–44. doi: 10.1037//0021-843x.101.1.37.

- 8. Bower GH. Mood and memory. American Psychologist. 1981;36:129. doi: 10.1037//0003-066x.36.2.129.

- 9. Brozgold AZ, Borod JC, Martin CC, Pick LH, Alpert M, Welkowitz J. Social functioning and facial emotional expression in neurological and psychiatric disorders. Applied Neuropsychology. 1998;5:15–23. doi: 10.1207/s15324826an0501_2.

- 10. Bylsma LM, Morris BH, Rottenberg J. A meta-analysis of emotional reactivity in major depressive disorder. Clinical Psychology Review. 2008;28:676–691. doi: 10.1016/j.cpr.2007.10.001.

- 11. Cayton L. Algorithms for manifold learning. Technical Report CS2008-0923. 2005.

- 12. Chang CC, Lin CJ. LIBSVM: a library for support vector machines. ACM Transactions on Intelligent Systems and Technology. 2011;2:27.

- 13. Chentsova-Dutton YE, Tsai JL, Gotlib IH. Further evidence for the cultural norm hypothesis: positive emotion in depressed and control European American and Asian American women. Cultural Diversity and Ethnic Minority Psychology. 2010;16:284–295. doi: 10.1037/a0017562.

- 14. Chew SW, Lucey P, Lucey S, Saragih J, Cohn JF, Matthews I, Sridharan S. In the Pursuit of Effective Affective Computing: The Relationship Between Features and Registration. IEEE Transactions on Systems, Man, and Cybernetics, Part B. 2012;42:1006–1016. doi: 10.1109/TSMCB.2012.2194485.

- 15. Clark LA, Watson D. Tripartite model of anxiety and depression: psychometric evidence and taxonomic implications. Journal of Abnormal Psychology. 1991;100:316. doi: 10.1037//0021-843x.100.3.316.

- 16. Cohen J. A coefficient of agreement for nominal scales. Educational and Psychological Measurement. 1960;20:37–46.

- 17. Cohn JF, Kruez TS, Matthews I, Yang Y, Nguyen MH, Padilla MT, Zhou F, De La Torre F. Detecting depression from facial actions and vocal prosody. International Conference on Affective Computing and Intelligent Interaction; Amsterdam. pp. 1–7.

- 18. Cohn JF, Sayette MA. Spontaneous facial expression in a small group can be automatically measured: An initial demonstration. Behavior Research Methods. 2010;42:1079–1086. doi: 10.3758/BRM.42.4.1079.

- 19. Cootes TF, Edwards GJ, Taylor CJ. Active appearance models. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2001;23:681–685.

- 20. Cox M, Nuevo-Chiquero J, Saragih JM, Lucey S. CSIRO Face Analysis SDK. 2013.

- 21. Coyne JC. Toward an interactional description of depression. Psychiatry: Journal for the Study of Interpersonal Processes. 1976. doi: 10.1080/00332747.1976.11023874.

- 22. Depue R, Iacono W. Neurobehavioral aspects of affective disorders. Annual Review of Psychology. 1989;40:457–492. doi: 10.1146/annurev.ps.40.020189.002325.

- 23. Dixon AK, Fisch HU, Huber C, Walser A. Ethological Studies in Animals and Man, Their Use in Psychiatry. Pharmacopsychiatry. 1989;22:44–50. doi: 10.1055/s-2007-1014624.

- 24. Ekman P, Friesen WV, Hager J. Facial Action Coding System (FACS): A technique for the measurement of facial movement. Research Nexus; Salt Lake City, UT: 2002.

- 25. Ekman P, Matsumoto D. Facial expression analysis. Scholarpedia. 2008;3.

- 26. Ellgring H. Nonverbal communication in depression. Cambridge University Press; Cambridge: 1989.

- 27. Fairbairn CE, Sayette MA, Levine JM, Cohn JF, Creswell KG. The Effects of Alcohol on the Emotional Displays of Whites in Interracial Groups. Emotion. 2013;12. doi: 10.1037/a0030934.

- 28. Fawcett T. An introduction to ROC analysis. Pattern Recognition Letters. 2006;27:861–874.

- 29. First MB, Gibbon M, Spitzer RL, Williams JB. User's guide for the structured clinical interview for DSM-IV Axis I disorders (SCID-I, Version 2.0, October 1995 Final Version). Biometric Research; New York: 1995.

- 30. Fisch HU, Frey S, Hirsbrunner HP. Analyzing nonverbal behavior in depression. Journal of Abnormal Psychology. 1983;92:307–318. doi: 10.1037//0021-843x.92.3.307.

- 31. Fleiss JL. Statistical methods for rates and proportions. 2nd ed. John Wiley & Sons; New York: 1981.

- 32. Fodor IK. A survey of dimension reduction techniques. Technical Report. 2002.

- 33. Fossi L, Faravelli C, Paoli M. The ethological approach to the assessment of depressive disorders. Journal of Nervous and Mental Disease. 1984. doi: 10.1097/00005053-198406000-00004.

- 34. Fowles DC. A Motivational View of Psychopathology. In: Spaulding WD, Simon HA, editors. Integrative Views of Motivation, Cognition, and Emotion. University of Nebraska Press; 1994. pp. 181–238.

- 35. Freedman N. The analysis of movement behavior during the clinical interview. In: Siegman AW, Pope B, editors. Studies in dyadic communication. Pergamon Press; New York: 1972.

- 36. Fridlund AJ. Human facial expression: An evolutionary view. Academic Press; 1994.

- 37. Frijda NH. Expression, emotion, neither, or both? Cognition and Emotion. 1995;9:617–635.

- 38. Gaebel W, Wolwer W. Facial expressivity in the course of schizophrenia and depression. European Archives of Psychiatry and Clinical Neuroscience. 2004;254:335–342. doi: 10.1007/s00406-004-0510-5.

- 39. Gehricke JG, Shapiro D. Reduced facial expression and social context in major depression: discrepancies between facial muscle activity and self-reported emotion. Psychiatry Research. 2000;95:157–167. doi: 10.1016/s0165-1781(00)00168-2.

- 40. Hamilton M. Development of a rating scale for primary depressive illness. British Journal of Social and Clinical Psychology. 1967;6:278–296. doi: 10.1111/j.2044-8260.1967.tb00530.x.

- 41. Hammal Z, Bailie TE, Cohn JF, George DT, Lucey S. Temporal Coordination of Head Motion in Couples with History of Interpersonal Violence. International Conference on Automatic Face and Gesture Recognition; Shanghai, China.

- 42. Henriques JB, Davidson RJ. Decreased responsiveness to reward in depression. Cognition & Emotion. 2000;14:711–724.

- 43. Hess U, Adams RB, Jr, Kleck RE. Who may frown and who should smile? Dominance, affiliation, and the display of happiness and anger. Cognition and Emotion. 2005;19:515–536.

- 44. Hess U, Blairy S, Kleck RE. The influence of facial emotion displays, gender, and ethnicity on judgments of dominance and affiliation. Journal of Nonverbal Behavior. 2000;24:265–283.

- 45. Hollon SD, Thase ME, Markowitz JC. Treatment and Prevention of Depression. Psychological Science in the Public Interest. 2002;3:39–77. doi: 10.1111/1529-1006.00008.

- 46. Hsu CW, Chang CC, Lin CJ. A practical guide to support vector classification. Technical Report. 2003.

- 47. Jones IH, Pansa M. Some nonverbal aspects of depression and schizophrenia occurring during the interview. Journal of Nervous and Mental Disease. 1979;167:402–409. doi: 10.1097/00005053-197907000-00002.

- 48. Joshi J, Goecke R, Parker G, Breakspear M. Can Body Expressions Contribute to Automatic Depression Analysis? International Conference on Automatic Face and Gesture Recognition.

- 49. Katsikitis M, Pilowsky I. A controlled quantitative study of facial expression in Parkinson's disease and depression. Journal of Nervous and Mental Disease. 1991;179:683–688. doi: 10.1097/00005053-199111000-00006.

- 50. Keltner D, Buswell BN. Embarrassment: its distinct form and appeasement functions. Psychological Bulletin. 1997;122:250. doi: 10.1037/0033-2909.122.3.250.

- 51. Klerman GL, Weissman MM, Rounsaville BJ, Chevron E. Interpersonal psychotherapy of depression. Basic Books; New York: 1984.

- 52. Klinger E. Consequences of Commitment to and Disengagement from Incentives. Psychological Review. 1975;82:1–25.

- 53. Knutson B. Facial expressions of emotion influence interpersonal trait inferences. Journal of Nonverbal Behavior. 1996;20:165–182.

- 54. Kotov R, Gamez W, Schmidt F, Watson D. Linking big personality traits to anxiety, depressive, and substance use disorders: A meta-analysis. Psychological Bulletin. 2010;136:768. doi: 10.1037/a0020327.

- 55. Mahoor MH, Cadavid S, Messinger DS, Cohn JF. A framework for automated measurement of the intensity of non-posed Facial Action Units. Computer Vision and Pattern Recognition Workshops; Miami. pp. 74–80.

- 56. Mathers CD, Loncar D. Projections of global mortality and burden of disease from 2002 to 2030. PLoS Medicine. 2006;3:e442. doi: 10.1371/journal.pmed.0030442.

- 57. Matthews I, Baker S. Active Appearance Models Revisited. International Journal of Computer Vision. 2004;60:135–164.

- 58. Mauss IB, Levenson RW, McCarter L, Wilhelm FH, Gross JJ. The tie that binds? Coherence among emotion experience, behavior, and physiology. Emotion. 2005;5:175–190. doi: 10.1037/1528-3542.5.2.175.

- 59. Mergl R, Mavrogiorgou P, Hegerl U, Juckel G. Kinematical analysis of emotionally induced facial expressions: a novel tool to investigate hypomimia in patients suffering from depression. Journal of Neurology, Neurosurgery, and Psychiatry. 2005;76:138–140. doi: 10.1136/jnnp.2004.037127.

- 60. Nesse RM. Is Depression an Adaptation? Archives of General Psychiatry. 2000;57:14–20. doi: 10.1001/archpsyc.57.1.14.

- 61. Reed LI, Sayette MA, Cohn JF. Impact of depression on response to comedy: a dynamic facial coding analysis. Journal of Abnormal Psychology. 2007;116:804–809. doi: 10.1037/0021-843X.116.4.804.

- 62. Renneberg B, Heyn K, Gebhard R, Bachmann S. Facial expression of emotions in borderline personality disorder and depression. Journal of Behavior Therapy and Experimental Psychiatry. 2005;36:183–196. doi: 10.1016/j.jbtep.2005.05.002.

- 63. Rottenberg J, Gross JJ, Gotlib IH. Emotion context insensitivity in major depressive disorder. Journal of Abnormal Psychology. 2005;114:627–639. doi: 10.1037/0021-843X.114.4.627.

- 64. Rottenberg J, Kasch KL, Gross JJ, Gotlib IH. Sadness and amusement reactivity differentially predict concurrent and prospective functioning in major depressive disorder. Emotion. 2002;2:135–146. doi: 10.1037/1528-3542.2.2.135.

- 65. Rush AJ, Jr, First MB, Blacker D, editors. Handbook of Psychiatric Measures. 2nd ed. American Psychiatric Publishing, Inc; Washington, DC: 2008.

- 66. Sakamoto S, Nameta K, Kawasaki T, Yamashita K, Shimizu A. Polygraphic evaluation of laughing and smiling in schizophrenic and depressive patients. Perceptual and Motor Skills. 1997;85:1291–1302. doi: 10.2466/pms.1997.85.3f.1291.

- 67. Schelde JT. Major depression: behavioral markers of depression and recovery. The Journal of Nervous and Mental Disease. 1998;186:133–140. doi: 10.1097/00005053-199803000-00001.

- 68. Scherer S, Stratou G, Mahmoud M, Boberg J, Gratch J, Rizzo AS, Morency LP. Automatic Behavior Descriptors for Psychological Disorder Analysis. International Conference on Automatic Face and Gesture Recognition.

- 69. Schneider F, Heimann H, Himer W, Huss D, Mattes R, Adam B. Computer-based analysis of facial action in schizophrenic and depressed patients. European Archives of Psychiatry and Clinical Neuroscience. 1990;240:67–76. doi: 10.1007/BF02189974.

- 70. Segrin C. Social skills deficits associated with depression. Clinical Psychology Review. 2000;20:379–403. doi: 10.1016/s0272-7358(98)00104-4.

- 71. Segrin C, Dillard JP. The interactional theory of depression: A meta-analysis of the research literature. Journal of Social and Clinical Psychology. 1992;11:43–70.

- 72. Shapiro SS, Wilk MB. An Analysis of Variance Test for Normality (Complete Samples). Biometrika. 1965;52:591–611.

- 73. Shrout PE, Fleiss JL. Intraclass correlations: uses in assessing rater reliability. Psychological Bulletin. 1979;86:420. doi: 10.1037//0033-2909.86.2.420.

- 74. Sloan DM, Strauss ME, Quirk SW, Sajatovic M. Subjective and expressive emotional responses in depression. Journal of Affective Disorders. 1997;46:135–141. doi: 10.1016/s0165-0327(97)00097-9.

- 75. Sloan DM, Strauss ME, Wisner KL. Diminished response to pleasant stimuli by depressed women. Journal of Abnormal Psychology. 2001;110:488–493. doi: 10.1037//0021-843x.110.3.488.

- 76. Sloman L. Evolved mechanisms in depression: the role and interaction of attachment and social rank in depression. Journal of Affective Disorders. 2003;74:107–121. doi: 10.1016/s0165-0327(02)00116-7.

- 77. Tian YL, Kanade T, Cohn JF. Facial expression analysis. Handbook of Face Recognition. 2005:247–275.

- 78. Tremeau F, Malaspina D, Duval F, Correa H, Hager-Budny M, Coin-Bariou L, Macher JP, Gorman JM. Facial expressiveness in patients with schizophrenia compared to depressed patients and nonpatient comparison subjects. American Journal of Psychiatry. 2005;162:92–101. doi: 10.1176/appi.ajp.162.1.92.

- 79. Troisi A, Moles A. Gender differences in depression: an ethological study of nonverbal behavior during interviews. Journal of Psychiatric Research. 1999;33:243–250. doi: 10.1016/s0022-3956(98)00064-8.

- 80. Tsai JL, Pole N, Levenson RW, Munoz RF. The effects of depression on the emotional responses of Spanish-speaking Latinas. Cultural Diversity and Ethnic Minority Psychology. 2003;9:49–63. doi: 10.1037/1099-9809.9.1.49.

- 81. Whitehill J, Littlewort G, Fasel IR, Bartlett MS, Movellan JR. Toward Practical Smile Detection. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2009;31:2106–2111. doi: 10.1109/TPAMI.2009.42.

- 82. Wilcoxon F. Individual Comparisons by Ranking Methods. Biometrics Bulletin. 1945;1:80–83.

- 83. Youngren MA, Lewinsohn PM. The functional relation between depression and problematic interpersonal behavior. Journal of Abnormal Psychology. 1980;89:333. doi: 10.1037//0021-843x.89.3.333.

- 84. Zeng Z, Pantic M, Roisman GI, Huang TS. A survey of affect recognition methods: audio, visual, and spontaneous expressions. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2009;31:39–58. doi: 10.1109/TPAMI.2008.52.

- 85. Zhu Y, De La Torre F, Cohn JF, Zhang YJ. Dynamic cascades with bidirectional bootstrapping for spontaneous facial action unit detection. International Conference on Affective Computing and Intelligent Interaction and Workshops; pp. 1–8.