Supplemental Digital Content is available in the text.

Key Words: risk prediction model, emergency hospital admission, community-dwelling adults

Abstract

Background:

Risk prediction models have been developed to identify those at increased risk for emergency admissions, which could facilitate targeted interventions in primary care to prevent these events.

Objective:

To systematically review validated risk prediction models for predicting emergency hospital admissions in community-dwelling adults.

Methods:

A systematic literature review and narrative analysis were conducted. Inclusion criteria were as follows: Population: community-dwelling adults (aged 18 years and above); Risk: risk prediction models, not contingent on an index hospital admission, with a derivation and ≥1 validation cohort; Primary outcome: emergency hospital admission (defined as unplanned overnight stay in hospital); Study design: retrospective or prospective cohort studies.

Results:

Of 18,983 records reviewed, 27 unique risk prediction models met the inclusion criteria. Eleven were developed in the United States, 11 in the United Kingdom, 3 in Italy, 1 in Spain, and 1 in Canada. Nine models were derived using self-report data, and the remainder (n=18) used routine administrative or clinical record data. Total study sample sizes ranged from 96 to 4.7 million participants. Predictor variables most frequently included in models were: (1) named medical diagnoses (n=23); (2) age (n=23); (3) prior emergency admission (n=22); and (4) sex (n=18). Eleven models included nonmedical factors, such as functional status and social supports. Regarding predictive accuracy, models developed using administrative or clinical record data tended to perform better than those developed using self-report data (c statistics 0.63–0.83 vs. 0.61–0.74, respectively). Six models reported c statistics of >0.8, indicating good performance. All 6 included variables for prior health care utilization, multimorbidity or polypharmacy, and named medical diagnoses or prescribed medications. Three of these 6 predicted admissions for ambulatory care sensitive conditions.

Conclusions:

This study suggests that risk models developed using administrative or clinical record data tend to perform better. In applying a risk prediction model to a new population, careful consideration needs to be given to the purpose of its use and local factors.

In the United States, rehospitalizations alone are estimated to cost $12 billion each year.1 Emergency or unplanned admissions account for approximately 35% of all hospitalizations in the United Kingdom (UK), costing an average of £11 billion annually.2 As a result of this escalating expenditure, reducing emergency admissions is a priority for health care policy-makers.3 For patients, unplanned hospitalizations may be distressing, and older people in particular are at risk of related adverse events such as hospital-acquired infections, loss of functional independence, and falls.4

One way of reducing emergency admissions is to identify people at higher risk who can then be prioritized for an intervention, such as case management.5 Risk prediction models developed for this purpose and not contingent on recent hospitalization have the advantage of broader applicability and can include a wider range of predictor variables. It has also been argued that focusing on specific high-risk groups, such as those discharged from a hospital, may not be the best approach to take in targeting emergency admissions. This is due, in part, to the concept of “regression to the mean,” which means that patients with a history of multiple admissions will on average have fewer admissions in the future than they had in the past.6,7
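Regression to the mean can be illustrated with a short simulation. The sketch below is a hypothetical illustration, not an analysis from any included study: it assumes each person has a stable underlying admission rate (drawn from a gamma distribution with arbitrarily chosen parameters) and that yearly admission counts are Poisson draws around that rate. People selected because they had 2 or more admissions in year 1 have, on average, fewer admissions in year 2, although nobody's underlying risk has changed.

```python
import math
import random


def poisson_draw(rate: float, rng: random.Random) -> int:
    """Draw a Poisson count with Knuth's multiplication method (adequate for small rates)."""
    threshold = math.exp(-rate)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate(n_people: int = 50_000, seed: int = 1) -> tuple[float, float]:
    """Return mean admissions in year 1 and year 2 for people with >= 2 admissions in year 1."""
    rng = random.Random(seed)
    year1, year2 = [], []
    for _ in range(n_people):
        # Assumed stable underlying rate: gamma(shape=0.5, scale=0.6), mean 0.3 admissions/year
        rate = rng.gammavariate(0.5, 0.6)
        first, second = poisson_draw(rate, rng), poisson_draw(rate, rng)
        # Select on past utilization only, as a history-based case-finding rule would
        if first >= 2:
            year1.append(first)
            year2.append(second)
    return sum(year1) / len(year1), sum(year2) / len(year2)


if __name__ == "__main__":
    before, after = simulate()
    print(f"Selected group: {before:.2f} admissions in year 1, {after:.2f} in year 2")
```

Because people are selected on a noisy measure of their risk, the selected group's year 2 mean falls back toward the population mean; this is why a history of admissions alone can be a misleading basis for targeting.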

Three main types of data source are used to derive risk models for predicting emergency admission.3 The first is self-report data collected through patient questionnaires or interviews, which has the advantage of allowing the inclusion of nonmedical variables such as functional status and social supports. The second is routine data collected for administrative databases or population registries. The third incorporates data collated from the clinical record or other primary data sources, which has the advantage of allowing a larger number of variables to be tested without the response biases associated with self-report.

The aim of this study was to perform a systematic review of validated risk prediction models for predicting emergency hospital admission in community-dwelling adults. The specific objectives were: (1) to examine the variables included in risk prediction models; (2) to summarize the performance of risk prediction models in derivation and validation cohorts; and (3) to compare the predictive accuracy of risk models externally validated in the same setting.

METHODS

The protocol for this systematic review has been published on PROSPERO (PROSPERO2013:CRD42013004390) and is available at http://www.crd.york.ac.uk/PROSPERO/display_record.asp?ID=CRD42013004390.

The PRISMA guidelines were followed in conducting and reporting this systematic review.8

Search Strategy

A systematic literature search of the following databases was carried out in September 2013 and updated in February 2014: PubMed, EMBASE, CINAHL, the Cochrane Library, and Google Scholar. The following additional sources were also searched: the US Agency for Healthcare Research and Quality (AHRQ), the Johns Hopkins Adjusted Clinical Groups (ACG) publications, the UK Nuffield Trust, and the King’s Fund. The search was supplemented by hand-searching the references of relevant articles and by contacting study authors when necessary. No restrictions were placed on language or year of publication.

A combination of MeSH terms and keywords was used to capture studies of interest (Appendix 1, Supplemental Digital Content 1, http://links.lww.com/MLR/A747).

Study Selection

Studies were included if they met the following criteria:

Population: Community-dwelling adults (aged ≥18 y).

Risk: Risk prediction models not contingent on an index hospital admission, with a derivation cohort and at least 1 validation cohort (internal or external). Models were subdivided according to the data used to develop them: (i) self-report; (ii) administrative or clinical record data.

Outcome: Primary outcome of emergency hospital admission (defined as an unplanned overnight stay in hospital). Studies in which emergency admission formed part of the outcome of interest (e.g., combined endpoints) were also included.

Study design: Retrospective or prospective cohort studies.

The following studies were excluded:

Studies focused primarily on pediatric, obstetric, surgical, or mental illness populations, or on patients enrolled in managed care programs; readmission risk prediction models (models contingent on an index hospital admission); models whose primary outcome of interest was elective hospital admission or a different outcome (e.g., mortality); models developed for use in emergency rooms (ERs) or for specific diagnoses (e.g., congestive heart failure); and risk adjustment models (models used to compare provider performance or to inform payment and health care financing). Studies that reported risk factors only and did not develop a model were also excluded.
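The primary outcome definition used in the inclusion criteria (an unplanned overnight stay in hospital) can be turned into a binary label for each person in a cohort. The sketch below is hypothetical: the `Admission` record, its `planned` flag, the index date, and the 365-day follow-up window are assumptions for illustration, and it treats a stay as overnight when discharge falls on a later calendar day than admission.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Admission:
    """Hypothetical minimal hospital episode record."""
    admit_date: date
    discharge_date: date
    planned: bool  # True for elective (planned) admissions


def is_emergency_admission(episode: Admission) -> bool:
    # Unplanned, and the patient stayed at least one night (discharged on a later day)
    return not episode.planned and episode.discharge_date > episode.admit_date


def outcome_label(episodes: list[Admission], index_date: date, follow_up_days: int = 365) -> int:
    """1 if any emergency admission starts within the follow-up window after index_date, else 0."""
    window_end = index_date + timedelta(days=follow_up_days)
    return int(any(
        is_emergency_admission(e) and index_date < e.admit_date <= window_end
        for e in episodes
    ))


index = date(2013, 1, 1)
history = [
    Admission(date(2013, 3, 1), date(2013, 3, 1), planned=False),   # same-day stay: not overnight
    Admission(date(2013, 5, 10), date(2013, 5, 14), planned=True),  # elective admission
]
print(outcome_label(history, index))  # 0
history.append(Admission(date(2013, 11, 2), date(2013, 11, 5), planned=False))
print(outcome_label(history, index))  # 1
```

Real studies differ in how they code "unplanned" and in their follow-up windows (from 90 days to 4 years in this review), so both would be parameters of any such labelling step.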

Data Extraction

Two reviewers (E.W., E.S.) independently screened the titles and/or abstracts of the identified records and eliminated irrelevant studies. Studies considered potentially eligible were read in full by both reviewers to determine their suitability for inclusion. Disagreements were resolved by consensus or, if consensus could not be reached, by a third reviewer (S.M.S.). Additional data were sought from authors when necessary. Data were extracted using a standardized data extraction form.

Statistical Analysis

Meta-analysis was not possible because of heterogeneity among the risk prediction models, so we summarized each unique model narratively under the following headings:

Setting, participants, and population of the model’s derivation cohort.

Type of validation cohort, that is, internal or external.

Type of data used to derive the model.

Model discrimination, assessed using the c statistic with 95% confidence intervals when available. The c statistic is the probability that a randomly selected person who had the outcome was assigned a higher predicted risk than a randomly selected person who did not. A c statistic of 0.5 indicates that the model performs no better than chance, a value of 0.7–0.8 indicates acceptable discrimination, and a value of >0.8 indicates good discrimination.9 Where the c statistic was not reported, we present positive predictive values, sensitivity, and specificity.

Variables evaluated and considered for inclusion.

Variables included in the final model.
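The discrimination measures described above can be computed directly from predicted risks and observed outcomes. The sketch below is illustrative rather than the method of any included study: the scores, outcomes, and 0.7 risk cut-off are made up, the c statistic is computed by comparing every admitted/non-admitted pair (ties count one half), and the labels follow the bands given above.

```python
from itertools import product


def c_statistic(scores, outcomes):
    """Probability that a randomly chosen admitted person has a higher predicted risk
    than a randomly chosen non-admitted person (ties count 0.5); equals the ROC AUC."""
    cases = [s for s, y in zip(scores, outcomes) if y == 1]
    controls = [s for s, y in zip(scores, outcomes) if y == 0]
    if not cases or not controls:
        raise ValueError("need at least one admitted and one non-admitted person")
    wins = 0.0
    for case, control in product(cases, controls):
        if case > control:
            wins += 1.0
        elif case == control:
            wins += 0.5
    return wins / (len(cases) * len(controls))


def describe(c):
    # Bands used in this review: 0.7-0.8 acceptable, >0.8 good, 0.5 = no better than chance
    if c > 0.8:
        return "good"
    if c >= 0.7:
        return "acceptable"
    return "below acceptable"


def threshold_metrics(scores, outcomes, cutoff):
    """Sensitivity, specificity, and PPV when everyone with risk >= cutoff is flagged."""
    pairs = list(zip(scores, outcomes))
    tp = sum(1 for s, y in pairs if s >= cutoff and y == 1)
    fp = sum(1 for s, y in pairs if s >= cutoff and y == 0)
    fn = sum(1 for s, y in pairs if s < cutoff and y == 1)
    tn = sum(1 for s, y in pairs if s < cutoff and y == 0)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp) if tp + fp else float("nan"),
    }


# Made-up predicted 12-month risks and observed emergency admissions (1 = admitted)
risk = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
admitted = [1, 1, 0, 1, 0, 0, 1, 0]
c = c_statistic(risk, admitted)
print(c, describe(c))  # 0.75 acceptable
print(threshold_metrics(risk, admitted, cutoff=0.7))  # sensitivity 0.5, specificity 0.75, PPV about 0.67
```

The pairwise loop grows quadratically with cohort size and is only meant to make the definition concrete; for cohorts of the size seen in this review, a rank-based (Mann-Whitney) formula or a statistics library would be the practical choice.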

Methodological Quality Assessment

Methodological quality assessment of included studies was performed independently by 2 reviewers (E.S., N.V.) using the McGinn checklist for the methodological assessment of clinical prediction rule studies10 (Appendix 2, Supplemental Digital Content 2, http://links.lww.com/MLR/A748). The McGinn checklist includes 8 criteria to assess the internal and external validity of derivation studies; 5 criteria were used for validation studies. Detailed guidance notes were also developed in-house to accompany the derivation and validation criteria. Disagreements were resolved by consensus or by adjudication by a third reviewer (E.W.).

RESULTS

Study Identification

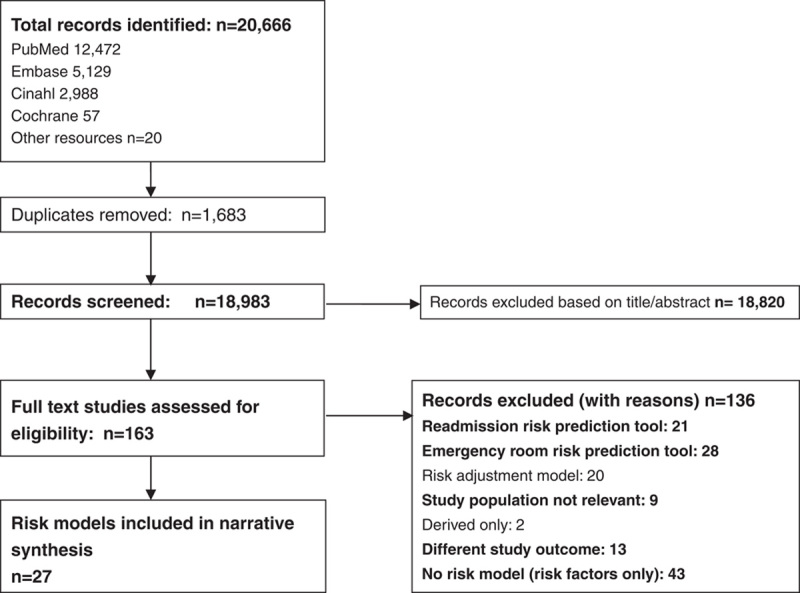

A flow diagram of the search strategy is presented in Figure 1. The electronic database searches yielded 20,666 records, and a further 20 were retrieved from other sources. After removal of duplicates, 18,983 records were screened by title and abstract, of which 163 were reviewed in full text; 27 unique risk prediction models met all inclusion criteria.

FIGURE 1.

PRISMA flow diagram of included risk prediction models.

Description of Included Risk Prediction Models

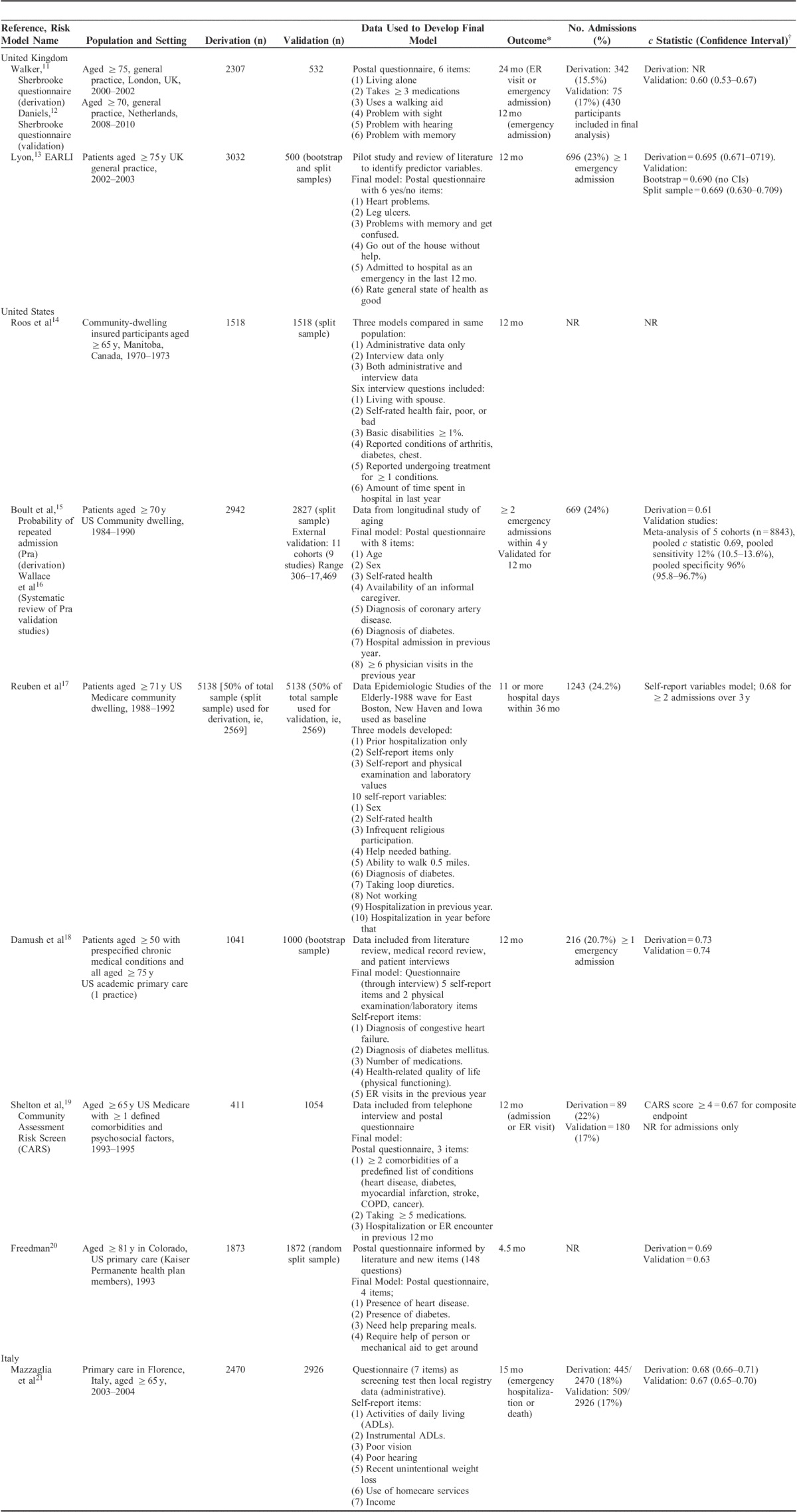

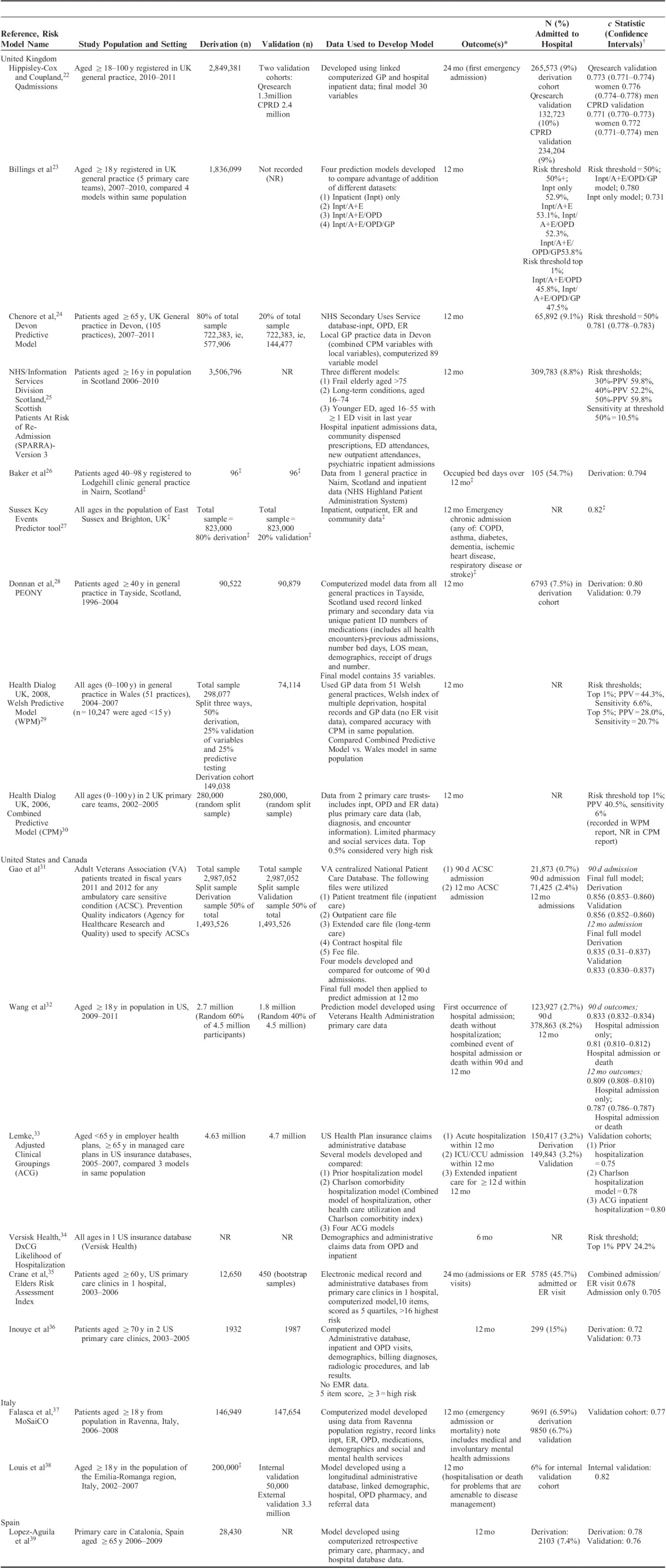

Of the 27 unique models included, 11 were developed in the UK, 11 in the US, 3 in Italy, 1 in Spain, and 1 in Canada. Nine models were developed using self-report data or a combination of self-report and administrative or routine data (Table 1), and the remainder (n=18) used routine or primary data alone (Table 2). Thirteen models were developed specifically for use in older people (aged 60 y and above). Total sample sizes ranged from 96 to 4.7 million participants. Most models (18 of 27) were developed to predict emergency hospital admission over 12 months of follow-up (follow-up periods across all models ranged from 90 d to 4 y). Of these, 3 models used emergency admissions for chronic disease or conditions amenable to primary care management as the primary outcome measure.27,31,38 Two models predicted any hospitalization and 2 predicted occupied bed days over specific time periods.17,26,32,38 A further 3 models used the endpoint of emergency admission or ER visit, and 2 used combined hospitalization/death.11,19,21,35,37

TABLE 1.

Risk Prediction Models Developed Using Self-report Data Primarily (n=9)

TABLE 2.

Risk Prediction Models Developed Using Administrative or Clinical Record Data (n=18)

Data Sources Used to Develop Risk Prediction Models

The 9 models developed with self-report data used literature reviews, medical record review, and questionnaire piloting in their development (Table 1). Of the 18 models developed using routine or clinical record data, 10 were developed using a combination of administrative and clinical record data.22–24,26–30,35,39 A further 8 were developed using administrative data alone.17,25,32–34,36–38 Eleven models included general practice (GP)/family practice clinical record data in their final model.22–24,26–30,35,39

Risk Prediction Model Variables

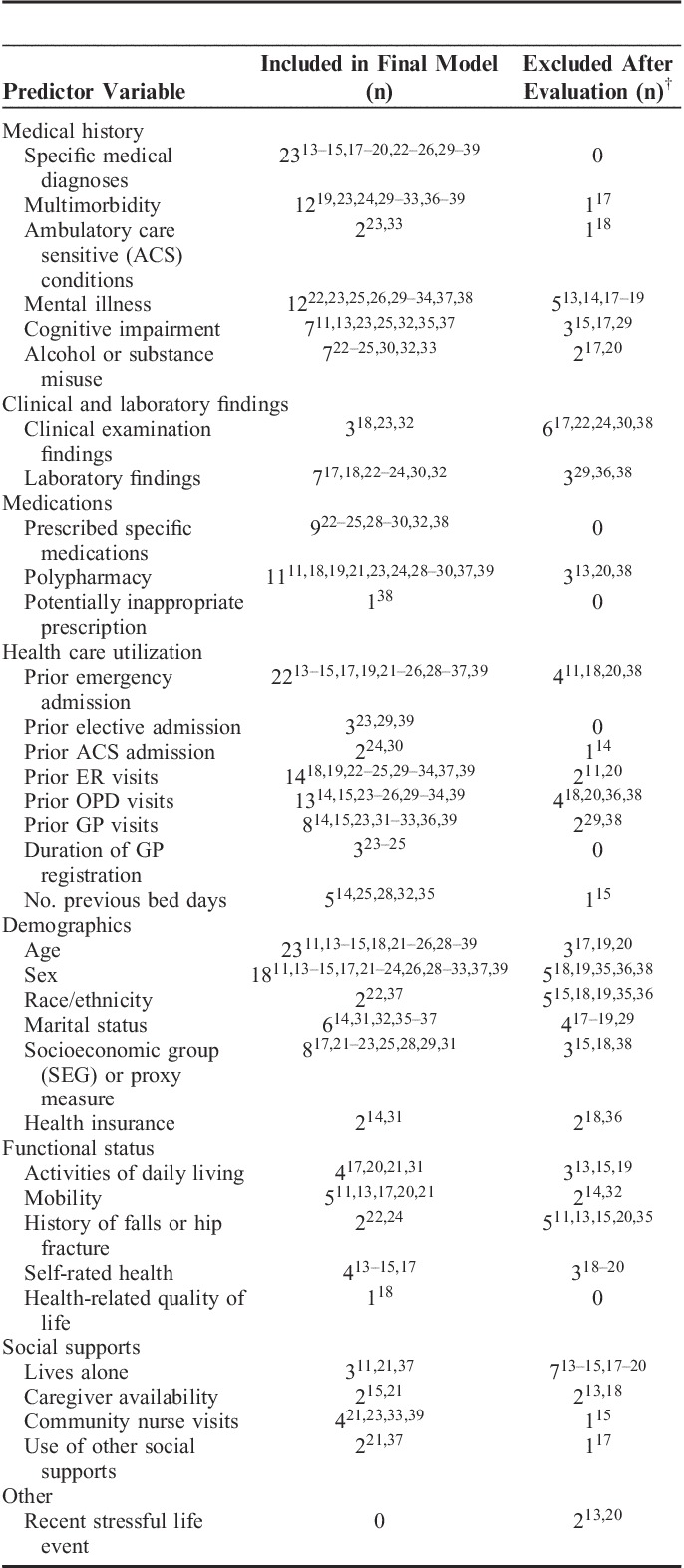

Table 3 presents the variables considered for, and included in, each of the 27 models. Seven studies presented only their final risk model rather than all variables considered for inclusion, and 1 study used locally available data to create a risk prediction model specifically for a named population, so the variables it considered vary.23–25,27,28,31,34,37 The most frequently included predictor variables in final risk models were: (1) named medical diagnoses (23 models); (2) age (23 models); (3) prior emergency admission (22 models); and (4) sex (18 models). Other health care utilization variables commonly included were prior ER and outpatient department (OPD) visits (14 and 13 models, respectively). Twelve models included measures of multimorbidity (the presence of 2 or more chronic medical conditions in an individual), most commonly the Charlson index and simple disease counts.19,23,24,29–33,36–39 One model considered multimorbidity for inclusion but excluded it after evaluation.17 Polypharmacy was considered as a predictor variable in 14 models and included in 11 final models.11,18,19,21,23,24,28–30,37,39 Five models included a specific measure of socioeconomic group (SEG), and a further 3 used either employment history or income as a proxy measure for SEG.17,21–23,25,28,29,31
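To make the multimorbidity and polypharmacy predictors concrete, the sketch below derives a few of the commonly included variables from a single patient record. It is hypothetical: the chronic condition list and the cut-off of 5 or more medications for polypharmacy are assumptions chosen for illustration (they are not taken from any included model), and a simple disease count stands in for weighted indices such as the Charlson index.

```python
from dataclasses import dataclass, field

# Hypothetical chronic condition list; real models use their own coded condition sets
CHRONIC_CONDITIONS = frozenset(
    {"diabetes", "copd", "heart_failure", "chronic_kidney_disease", "dementia", "cancer", "stroke"}
)
POLYPHARMACY_CUTOFF = 5  # assumption: flag 5 or more distinct medications


@dataclass
class PatientRecord:
    age: int
    sex: str
    diagnoses: set[str] = field(default_factory=set)
    medications: set[str] = field(default_factory=set)
    prior_emergency_admissions: int = 0


def derive_predictors(patient: PatientRecord) -> dict:
    """Map a record to age, sex, utilization, multimorbidity, and polypharmacy predictors."""
    disease_count = len(patient.diagnoses & CHRONIC_CONDITIONS)
    return {
        "age": patient.age,
        "sex": patient.sex,
        "prior_emergency_admission": patient.prior_emergency_admissions > 0,
        "disease_count": disease_count,
        # Multimorbidity as defined in this review: 2 or more chronic conditions
        "multimorbidity": disease_count >= 2,
        "medication_count": len(patient.medications),
        "polypharmacy": len(patient.medications) >= POLYPHARMACY_CUTOFF,
    }


example = PatientRecord(
    age=78,
    sex="F",
    diagnoses={"diabetes", "copd", "osteoarthritis"},  # osteoarthritis is not on the list above
    medications={"metformin", "salbutamol", "tiotropium", "ramipril", "atorvastatin", "aspirin"},
    prior_emergency_admissions=1,
)
print(derive_predictors(example))
```

Keeping both the raw counts and the binary flags lets a model developer test either form, which mirrors the variation across reviewed models between disease counts and weighted indices.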

TABLE 3.

Predictor Variables in Risk Prediction Models (n=26*) for Predicting Emergency Hospital Admissions

Overall, only 11 models included nonmedical factors.11,13–15,17,20–22,24,31,37 These variables were largely included in self-report data models (Table 1). Of the models that included functional status as a predictor variable, most considered activities of daily living, mobility, and/or a history of falls.11,13,17,20–22,24,31 Four questionnaires included measures of self-rated health and 1 included health-related quality of life.13–15,17,18 Two questionnaires included the social support measure of caregiver availability.15,21 Three models developed using administrative or clinical record data included nonmedical variables: a history of falls, social supports and living arrangements, and a disability rating, respectively.22,31,37

Predictive Accuracy of Risk Prediction Models

Eighteen models presented c statistics for the outcome of emergency admission, ranging from 0.61 to 0.83. Six models reported c statistics of >0.8, indicating good model discrimination.27,28,31–33,38 These models shared several features: all included prior health care utilization variables, multimorbidity or polypharmacy measures, and named medical diagnoses or named prescribed medications. Three of these 6 models used emergency admissions for chronic disease or conditions amenable to primary care management as the primary outcome measure.27,31,38 A further 7 risk prediction models reported c statistics between 0.7 and 0.8, representing acceptable model performance.18,22–24,35–37,39 Of the 9 models developed primarily using self-report data, 8 were designed for use in older people. In contrast, only 5 of the 18 models developed using administrative or clinical record data were derived specifically for use in older people; the remainder were developed for use in general adult populations aged 18 years and above. Overall, models developed primarily using administrative or clinical record data performed better than those developed using self-report data, with reported c statistics ranging from 0.68 to 0.83 versus 0.61 to 0.74, respectively.

Comparison of Performance of Risk Prediction Models Within and Across Populations

Three studies developed several prediction models in a single population using different data sets and then compared their performance. Billings et al23 developed 4 models in the United Kingdom using: (1) inpatient data alone; (2) combined inpatient and ER data; (3) combined inpatient, ER, and OPD data; and (4) combined inpatient/ER/OPD/GP/family practice data. The aim was to determine whether adding GP/family practice data improved overall model performance. In the test sample of >1.8 million people, the model combining inpatient, ER, OPD, and GP data performed best (c statistic 0.78 vs. 0.73 for the inpatient-only model).23 Similarly, using a data source of 4.7 million medical insurance claims, Lemke and colleagues in the United States compared various models based on the ACG classification with models based on prior hospitalization only. The model using ACG groupings plus prior health care utilization performed best overall (c statistic 0.8 vs. 0.75).33 Reuben and colleagues compared models developed using prior admission only, self-report data only, and a combination of self-report variables and laboratory values. The model combining self-report and laboratory variables had the greatest predictive accuracy (c statistic 0.69).17
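The logic of these nested comparisons (add a data source, then check whether discrimination improves) can be shown on simulated data. The sketch is purely synthetic and does not reproduce the data or methods of Billings et al, Lemke and colleagues, or Reuben and colleagues: it assumes admission risk depends on one signal visible in hospital data and a second visible only in GP records, and it scores people with known linear predictors instead of fitted models, which is sufficient here because the c statistic depends only on how the scores rank people.

```python
import math
import random


def c_statistic(scores, outcomes):
    """Share of admitted/non-admitted pairs ranked correctly (ties count 0.5)."""
    cases = [s for s, y in zip(scores, outcomes) if y]
    controls = [s for s, y in zip(scores, outcomes) if not y]
    wins = sum((a > b) + 0.5 * (a == b) for a in cases for b in controls)
    return wins / (len(cases) * len(controls))


def simulate_cohort(n: int = 1500, seed: int = 7):
    rng = random.Random(seed)
    hospital, gp, admitted = [], [], []
    for _ in range(n):
        h = rng.gauss(0.0, 1.0)  # hypothetical signal recorded in hospital (inpatient/ER/OPD) data
        g = rng.gauss(0.0, 1.0)  # hypothetical signal recorded only in GP/family practice data
        log_odds = -1.5 + 0.8 * h + 0.8 * g  # assumed true risk model
        admitted.append(rng.random() < 1.0 / (1.0 + math.exp(-log_odds)))
        hospital.append(h)
        gp.append(g)
    return hospital, gp, admitted


hospital, gp, admitted = simulate_cohort()
hospital_only = hospital  # ranking by h alone equals ranking by 0.8 * h
hospital_plus_gp = [0.8 * h + 0.8 * g for h, g in zip(hospital, gp)]
c_small = c_statistic(hospital_only, admitted)
c_large = c_statistic(hospital_plus_gp, admitted)
print(f"hospital data only: c = {c_small:.2f}; hospital + GP data: c = {c_large:.2f}")
```

In this setup the GP-only signal is invisible to the first score, so its c statistic is lower by construction; in real data the size of any gain depends on what the extra source actually contributes, which is why the included studies measured it empirically.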

Two studies directly compared different validated models in the same population. The UK Combined Predictive Model (CPM) was developed to be nationally representative.30 It was compared with 2 other UK risk models, the Wales predictive model and the Devon predictive model.24,29 In primary care, the Wales model identified those who were subsequently admitted more accurately than the CPM. The Devon predictive model included many of the same variables as the CPM together with local data variables, and it also had greater predictive accuracy than the CPM. The authors argued that the addition of local factors, for example, the participant’s duration of registration with their family practitioner as a proxy for continuity of care, was integral to its improved performance.

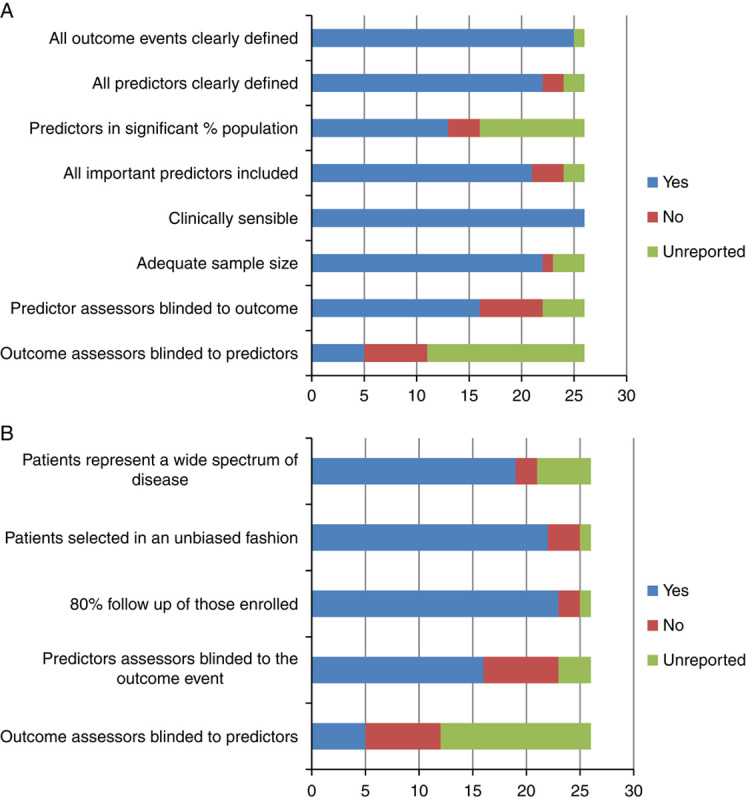

Methodological Quality Assessment of Included Studies

Overall, the methodological quality of included studies was good. For derivation, most studies reported all checklist items except those pertaining to blinding of outcome assessors, blinding of those assessing the presence of predictors, and reporting of the proportion of the population with important predictors. For validation, most studies reported all checklist items, although blinding of those assessing the outcome event was underreported (Figs. 2A, B).

FIGURE 2.

Methodological quality assessment of included risk prediction models (n=26; the remaining model is customized depending on the population for which it is intended). A, Derivation studies. B, Validation studies. Color code: blue, item done and reported; red, item reported as not done; green, item unreported.

DISCUSSION

Summary of Findings

This systematic review identified 27 unique risk models for predicting emergency hospital admission. Fewer than half were developed specifically for older people; the rest were designed for use in general adult populations. Overall, models developed using administrative or clinical record data and derived from large datasets tended to have greater predictive ability than self-report questionnaires. Studies that examined the added value of GP/family practice clinical record data reported improved predictive accuracy when this data source was included.

Variables Included in Risk Prediction Models

Overall, almost all risk models in this review included age, prior hospitalization, and specified medical diagnoses, and most included sex. However, fewer than half considered a specific measure of multimorbidity, which is surprising given the impact that multiple conditions have been shown to have on health care utilization.40,41 Similarly, fewer than half of models included polypharmacy, and only 8 included a measure of SEG. In this review, the 6 risk prediction models with the greatest predictive accuracy (based on reported c statistics) included similar variables, namely prior health care utilization, multimorbidity or polypharmacy measures, and named medical diagnoses or named prescribed medications. Three of the 6 focused on admissions for ambulatory care sensitive conditions (ACSCs).

Overall, nonmedical factors such as functional status, social supports, and self-rated health were included in 11 of the 27 risk models (approximately 40%). These factors have been highlighted as potential contributors to emergency hospitalization. One US qualitative interview study of patients identified by a risk prediction model as high risk found that most had poor self-rated health and precarious housing, lived alone, and reported high levels of social isolation.42

Performance of Risk Prediction Models in New Settings

In 2 studies a nationally developed risk prediction model was applied to new populations in the same country and its performance compared with adapted models, which included local factors.24,29 In both studies the locally adapted models performed better at predicting future emergency hospitalization. One UK risk score developer designs customized risk models for a specified population using locally available data to ensure that the model created is fit for purpose.27 This approach seems sensible as local factors may well differ within countries and differences in population demographics may mean that a risk model should be applied differently.

Comparison With Previous Research

To our knowledge this is the first systematic review of risk prediction models for emergency admission in community-dwelling adults. Previous systematic reviews have focused on readmission risk models and risk factors for emergency admission. Kansagara et al43 found that of 26 retrieved readmission risk models only 6 reported a c statistic >0.7. They concluded that most readmission models perform poorly and suggested that the additional variables available through the medical record or patient self-report may improve performance. Our review supports this suggestion with models developed using clinical record data demonstrating improved predictive accuracy overall.

García-Pérez et al44 reported that chronic disease status and functional disability were the most important risk factors, followed by prior health care utilization. Whereas medical diagnoses and prior health care utilization were included in almost all risk prediction models in this review, far fewer included functional status. This may reflect the type of data available during model development, especially for models that used administrative or clinical record data only. Functional status variables have tended to be included in self-report questionnaires, which may be more prone to response bias in the reporting of other important predictors such as medical diagnoses and previous health care utilization. Future research should consider how best to capture nonmedical factors and whether including them improves model performance.

Clinical and Research Implications

In 2011, a US-based provider group, the Heritage Provider Network, offered a $3 million prize to any team that could develop a risk prediction model to identify people at higher risk of admission so that resources could be directed at reducing their risk.45 However, to date, the evidence for case management in higher-risk community-dwelling people is mixed, and such interventions have not been shown to reduce emergency admissions.46 For instance, the Guided Care model aims to provide primary care that includes comprehensive geriatric assessment, case management, self-management support, and caregiver support, delivered by a team that includes a specially trained nurse who acts as care coordinator. Patients were targeted using age and multimorbidity as risk stratification criteria. In a 32-center randomized controlled trial, this intervention improved participants’ chronic care, reduced caregiver strain, and resulted in high levels of health care professional satisfaction.47 However, compared with usual care, participants used similar levels of health care at 20-month follow-up, with the exception of 1 subgroup and of home health care, which was significantly reduced.48

Overall, it is difficult to know whether case management has failed to achieve the anticipated reductions in emergency admissions because of the intervention itself or because of the case-finding mechanism used. Studies to date have used relatively blunt risk stratification measures to target patients for their interventions.48,49 Greater attention to the choice of risk stratification model may pay dividends in future studies. Further, case management interventions that prioritize components relating to multimorbidity and polypharmacy may have a role to play.50

Another consideration is the choice of outcome measure. Most risk models in this review used emergency admission for any cause as their primary outcome. Only 3 used emergency admissions due to ACSCs as an endpoint, and a further 3 considered ACSCs in their development process. This matters because a proportion of emergency admissions will not be preventable even with intensified care.51 ACSCs are chronic conditions for which effective management in primary care can prevent acute exacerbations and thereby reduce the need for hospital admission.52,53 In the United Kingdom, approximately 16% of all emergency admissions across all age groups, and up to 30% of admissions for those aged over 75 years, are estimated to result from these conditions.52 Community-based interventions should target conditions for which scaling up primary care management is most likely to prevent subsequent admissions. In the United States, risk prediction model developers are testing models that aim to focus resources not necessarily on patients at highest risk of emergency admission but on those with conditions or characteristics (such as prior treatment adherence) most likely to benefit from increased preventive care.54 In this way, resources can be focused where they are more likely to have an impact.

Strengths and Limitations

This review is timely given the increased interest in risk stratification to identify community-dwelling people at higher risk of future admission. However, it has some limitations. Risk prediction models developed in one population or health care setting may not be transferable to another, and care must be taken in comparing models. Further, risk prediction models need frequent updating to remain relevant, and some of the older models described in this review are now obsolete. Seven of the included models were presented as final models only, without all the variables considered for inclusion, so the data presented in Table 3 are incomplete for these models.

CONCLUSIONS

Choosing a robust method of risk stratification is an essential first step in attempting to reduce emergency hospital admissions. This review identified 27 validated risk prediction models developed for use in the community. Local factors and choice of outcome are important considerations in choosing a model. Capturing nonmedical factors may have a role in improving predictive accuracy.

Supplementary Material

ACKNOWLEDGMENTS

The authors thank the following authors who provided additional data: Dr Adrian Baker, Dr Paul Shelton, Prof. Peter Donnan, and Colin Styles.

Footnotes

Supplemental Digital Content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal's Website, www.lww-medicalcare.com.

The authors declare no conflict of interest.

Funded by the Health Research Board (HRB) of Ireland by the Research Training Fellowship for Healthcare Professionals award under Grant HPF/2012/20 and conducted as part of the HRB PhD Scholars Programme in Health Services Research Grant No. PHD/2007/16.

REFERENCES

- 1. Medicare Payment Advisory Commission. A path to bundled payment around a rehospitalization. In: Report to the Congress: Reforming the Delivery System. Chapter 4. Washington, DC: Medicare Payment Advisory Commission; 2005:83–103.

- 2. Blunt I, Bardsley M, Dixon J. Trends in Emergency Admissions in England 2004-2009: Is Greater Efficiency Breeding Inefficiency? London: The Nuffield Trust; 2010.

- 3. Purdy S. Avoiding hospital admission: what does the research evidence say? London: The King’s Fund; December 2010. Available at: http://www.kingsfund.org.uk/sites/files/kf/Avoiding-Hospital-Admissions-Sarah-Purdy-December2010.pdf. Accessed September 14, 2013.

- 4. de Vries EN, Ramrattan MA, Smorenburg SM, et al. The incidence and nature of in-hospital adverse events: a systematic review. Qual Saf Health Care. 2008;17:216–223.

- 5. Lewis G, Curry N, Bardsley M. Choosing a Predictive Risk Model: A Guide for Commissioners in England. London: The Nuffield Trust; 2011.

- 6. Roland M, Dusheiko M, Gravelle H, et al. Follow up of people aged 65 and over with a history of emergency admissions: analysis of routine admission data. BMJ. 2005;330:289–292.

- 7. Lewis G, Curry N, Bardsley M. How predictive modelling can help reduce risk, and hospital admissions. Health Service Journal (HSJ). October 17, 2011. Available at: www.hsj.co.uk/how-predictive-modelling-can-help-reduce-risk-and-hospital-admission/5035587.article. Accessed September 16, 2013.

- 8. Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700.

- 9. Iezzoni LI. Risk Adjustment for Measuring Health Care Outcomes. 3rd ed. Chicago: Health Administration Press; 2003.

- 10. McGinn TG, Guyatt GH, Wyer PC, et al. Users’ guides to the medical literature: XXII: how to use articles about clinical decision rules. Evidence-Based Medicine Working Group. JAMA. 2000;284:79–84.

- 11.Walker L, Jamrozik K, Wingfield D.The Sherbrooke Questionnaire predicts use of emergency services.Age Ageing. 2005;34:233–237. [DOI] [PubMed] [Google Scholar]

- 12.Daniels R, van Rossum E, Beurskens A, et al. The predictive validity of three self-report screening instruments for identifying frail older people in the community.BMC Public Health. 2012;12:69. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Lyon D, Lancaster GA, Taylor S, et al. Predicting the likelihood of emergency admission to hospital of older people: development and validation of the Emergency Admission Risk Likelihood Index (EARLI).Fam Pract. 2007;24:158–167. [DOI] [PubMed] [Google Scholar]

- 14.Roos NP, Roos LL, Mossey J, et al. Using administrative data to predict important health outcomes. Entry to hospital, nursing home, and death.Med Care. 1988;26:221–239. [DOI] [PubMed] [Google Scholar]

- 15.Boult C, Dowd B, McCaffrey D, et al. Screening elders for risk of hospital admission.J Am Geriatr Soc. 1993;41:811–817. [DOI] [PubMed] [Google Scholar]

- 16.Wallace E, Hinchey T, Dimitrov BD, et al. A systematic review of the probability of repeated admission score in community-dwelling adults.J Am Geriatr Soc. 2013;61:357–364. [DOI] [PubMed] [Google Scholar]

- 17.Reuben DB, Keeler E, Seeman TE, et al. Development of a method to identify seniors at high risk for high hospital utilization.Med Care. 2002;40:782–793. [DOI] [PubMed] [Google Scholar]

- 18.Damush TM, Smith DM, Perkins AJ, et al. Risk factors for nonelective hospitalization in frail and older adult, inner-city outpatients.Gerontologist. 2004;44:68–75. [DOI] [PubMed] [Google Scholar]

- 19.Shelton P, Sager MA, Schraeder C.The community assessment risk screen (CARS): identifying elderly persons at risk for hospitalization or emergency department visit.Am J Manag Care. 2000;6:925–933. [PubMed] [Google Scholar]

- 20.Freedman JD, Beck A, Robertson B, et al. Using a mailed survey to predict hospital admission among patients older than 80.J Am Geriatr Soc. 1996;44:689–692. [DOI] [PubMed] [Google Scholar]

- 21. Mazzaglia G, Roti L, Corsini G, et al. Screening of older community-dwelling people at risk for death and hospitalization: the Assistenza Socio-Sanitaria in Italia project. J Am Geriatr Soc. 2007;55:1955–1960.

- 22. Hippisley-Cox J, Coupland C. Predicting risk of emergency admission to hospital using primary care data: derivation and validation of QAdmissions score. BMJ Open. 2013;3:e003482.

- 23. Billings J, Georghiou T, Blunt I, et al. Choosing a model to predict hospital admission: an observational study of new variants of predictive models for case finding. BMJ Open. 2013;3:e003352.

- 24. Chenore T, Pereira Gray DJ, Forrer J, et al. Emergency hospital admissions for the elderly: insights from the Devon Predictive Model. J Public Health (Oxf). 2013;35:616–623.

- 25. NHS National Services Scotland. Scottish Patients at Risk of Readmission (SPARRA). Version 3; 2011. Available at: http://www.isdscotland.org/Health-Topics/Healthand-Social-Community-Care/SPARRA/SPARRA_Version_3_October 2011.pdf. Accessed September 18, 2013.

- 26. Baker A, Leak P, Ritchie LD, et al. Anticipatory care planning and integration: a primary care pilot study aimed at reducing unplanned hospitalisation. Br J Gen Pract. 2012;62:e113–e120.

- 27. Sussex Health Informatics Service. Sussex Combined Predictive Model (Sussex CPM). Available at: http://www.sussexhis.nhs.uk/sussexcpm. Accessed November 21, 2013.

- 28. Donnan PT, Dorward DW, Mutch B, et al. Development and validation of a model for predicting emergency admissions over the next year (PEONY): a UK historical cohort study. Arch Intern Med. 2008;168:1416–1422.

- 29. Wales predictive model final report and technical documentation. Prepared for NHS Wales, Informing Healthcare by Health Dialog UK. 2008. Available at: http://www.nliah.com/portal/microsites/Uploads/Resources/k5cma8PPy.pdf. Accessed September 18, 2013.

- 30. Combined predictive model: final report. NHS, Department of Health, UK, New York University-Robert E Wagner Graduate School of Public Health, King’s Fund and Health Dialog UK; 2006. Available at: http://tna.europarchive.org/20091005114006/http://networks.nhs.uk/uploads/06/12/combined_predictive_model_final_report.pdf. Accessed October 2, 2013.

- 31. Gao J, Moran E, Li YF, et al. Predicting potentially avoidable hospitalizations. Med Care. 2014;52:164–171.

- 32. Wang L, Porter B, Maynard C, et al. Predicting risk of hospitalization or death among patients receiving primary care in the Veterans Health Administration. Med Care. 2013;51:368–373.

- 33. Lemke KW, Weiner JP, Clark JM. Development and validation of a model for predicting inpatient hospitalization. Med Care. 2012;50:131–139.

- 34. Verisk Health. DxCG Likelihood of Hospitalization white paper; 2012. Available at: http://www.veriskhealth.com/likelihood-hospitalization-loh. Accessed October 1, 2013.

- 35. Crane SJ, Tung EE, Hanson GJ, et al. Use of an electronic administrative database to identify older community dwelling adults at high-risk for hospitalization or emergency department visits: the elders risk assessment index. BMC Health Serv Res. 2010;10:338.

- 36. Inouye SK, Zhang Y, Jones RN, et al. Risk factors for hospitalization among community-dwelling primary care older patients: development and validation of a predictive model. Med Care. 2008;46:726–731.

- 37. Falasca P, Berardo A, Di Tommaso F. Development and validation of predictive MoSaiCo (Modello Statistico Combinato) on emergency admissions: can it also identify patients at high risk of frailty? Ann Ist Super Sanita. 2011;47:220–228.

- 38. Louis D, Bobeson M, Herrera K, et al. Who is most likely to require a higher level of care: predicting risk of hospitalisation. BMC Health Serv Res. 2010;10(Suppl 2):A2.

- 39. Lopez-Aguila S, Contel JC, Farre J, et al. Predictive model for emergency hospital admission and 6-month readmission. Am J Manag Care. 2011;17:e348–e357.

- 40. Salisbury C, Johnson L, Purdy S, et al. Epidemiology and impact of multimorbidity in primary care: a retrospective cohort study. Br J Gen Pract. 2011;61:e12–e21.

- 41. Barnett K, Mercer SW, Norbury M, et al. The epidemiology of multimorbidity in a large cross-sectional dataset: implications for health care, research and medical education. Lancet. 2012;380:37–43.

- 42. Raven M, Billings J, Goldfrank L, et al. Medicaid patients at high risk for frequent hospital admission: real-time identification and remediable risks. J Urban Health. 2009;86:230–241.

- 43. Kansagara D, Englander H, Salanitro A, et al. Risk prediction models for hospital readmission: a systematic review. JAMA. 2011;306:1688–1698.

- 44. García-Pérez L, Linertová R, Lorenzo-Riera A, et al. Risk factors for hospital readmissions in elderly patients: a systematic review. QJM. 2011;104:639–651.

- 45. X prize to tackle hospital admissions. Available at: http://www.ehi.co.uk/news/acute-care/6606/x-prize_to_tackle_hospital_admissions. Accessed February 5, 2014.

- 46. Huntley AL, Thomas R, Mann M, et al. Is case management effective in reducing the risk of unplanned hospital admissions for older people? A systematic review and meta-analysis. Fam Pract. 2013;30:266–275.

- 47. Boyd CM, Reider L, Frey K, et al. The effects of guided care on the perceived quality of health care for multi-morbid older persons: 18-month outcomes from a cluster-randomized controlled trial. J Gen Intern Med. 2010;25:235–242.

- 48. Boult C, Reider L, Leff B, et al. The effect of guided care teams on the use of health services: results from a cluster-randomized controlled trial. Arch Intern Med. 2011;171:460–466.

- 49. Gravelle H, Dusheiko M, Sheaff R, et al. Impact of case management (Evercare) on frail elderly patients: controlled before and after analysis of quantitative outcome data. BMJ. 2007;334:31.

- 50. Smith SM, Soubhi H, Fortin M, et al. Managing patients with multimorbidity: systematic review of interventions in primary care and community settings. BMJ. 2012;345:e5205.

- 51. Weber C, Neeser K. Using individualized predictive disease modeling to identify patients with the potential to benefit from a disease management program for diabetes mellitus. Dis Manag. 2006;9:242–256.

- 52. Tian Y, Dixon A, Gao H. Emergency hospital admissions for ambulatory care sensitive conditions: identifying the potential for reductions. The King’s Fund. 2012.

- 53. AHRQ. ‘Prevention Quality Indicators overview.’ Agency for Healthcare Research and Quality (AHRQ). Available at: http://www.qualityindicators.ahrq.gov/Modules/pqi_resources.aspx. Accessed April 2, 2014.

- 54. Lewis GH. “Impactibility models”: identifying the subgroup of high-risk patients most amenable to hospital-avoidance programs. Milbank Q. 2010;88:240–255.
