Abstract

Odors are surprisingly difficult to name, but the mechanism underlying this phenomenon is poorly understood. In experiments using event-related potentials (ERPs) and functional magnetic resonance imaging (fMRI), we investigated the physiological basis of odor naming with a paradigm where olfactory and visual object cues were followed by target words that either matched or mismatched the cue. We hypothesized that word processing would not only be affected by its semantic congruency with the preceding cue, but would also depend on the cue modality (olfactory or visual). Performance was slower and less precise when linking a word to its corresponding odor than to its picture. The ERP index of semantic incongruity (N400), reflected in the comparison of nonmatching versus matching target words, was more constrained to posterior electrode sites and lasted longer on odor-cue (vs picture-cue) trials. In parallel, fMRI cross-adaptation in the right orbitofrontal cortex (OFC) and the left anterior temporal lobe (ATL) was observed in response to words when preceded by matching olfactory cues, but not by matching visual cues. Time-series plots demonstrated increased fMRI activity in OFC and ATL at the onset of the odor cue itself, followed by response habituation after processing of a matching (vs nonmatching) target word, suggesting that predictive perceptual representations in these regions are already established before delivery and deliberation of the target word. Together, our findings underscore the modality-specific anatomy and physiology of object identification in the human brain.

Keywords: evoked potentials, functional MRI, human olfactory system, language, lexical–semantic system, orbitofrontal cortex

Introduction

The ability to recognize and identify odors has a long evolutionary precedent: rudimentary forms of chemosensory perception that govern behavior can be identified in single-cell organisms (Adler, 1969; Gottfried and Wilson, 2011). In contrast, the ability to name odors was undoubtedly established in recent phylogenetic history, well after neocortical development elevated primitive audiovisual communication (gesturing, imitation, and vocalization) to the language faculty of modern humans (Arbib, 2005). It has been suggested that the evolutionary asynchrony between early emergence of the olfactory system and recent emergence of the language system (Herz, 2005) accounts for the stark fact that humans are surprisingly poor at naming even highly familiar smells (Lawless and Engen, 1977; Cain, 1979; Cain et al., 1998; Larsson, 2002; Stevenson et al., 2007). Whatever the original basis for this cognitive shortcoming, there remains a marked gap in our neuroscientific understanding of the cortical interface between olfaction and language.

Lesion-based studies in epilepsy patients provided initial insights into the neural substrates of odor naming, highlighting the involvement of the anterior temporal lobe (ATL) and orbitofrontal cortex (OFC) (Eskenazi et al., 1986; Jones-Gotman and Zatorre, 1988; Jones-Gotman et al., 1997), though the large and variable extent of these lesions precluded a more precise functional localization. Subsequent neuroimaging studies have shown that these brain regions, as well as other olfactory, visual, and language-based areas, are active during odor identification, naming, and semantic processing (Royet et al., 1999; Savic et al., 2000; Kareken et al., 2003; Zelano et al., 2009; Kjelvik et al., 2012). Recent work from our group showed that in patients with primary progressive aphasia, deficits in the ability to match odors to words and pictures correlated strongly with the degree of atrophy in the temporal pole and adjacent ATL (Olofsson et al., 2013).

Thus, while some consensus is emerging regarding the roles of OFC and ATL in linking odor percepts to their names, a more systematic understanding of their contributions is lacking, given the challenges of isolating semantic integrative processing from verbal, perceptual, and mnemonic operations that likely co-occur during successful odor identification and naming. In two independent experiments, we used event-related potential (ERP) recordings and functional magnetic resonance imaging (fMRI) techniques to investigate the neural substrates of olfactory lexical–semantic integration with high temporal and spatial resolution. Participants took part in a cross-modal priming paradigm in which they judged whether a target word semantically matched a preceding olfactory or visual cue. Because successful performance on this task relies on extracting meaning from the initial cue, we hypothesized that the degree of semantic overlap between cues and word targets would help delineate the brain areas involved in mapping odor percepts to their lexical representations. Specific comparison between odor-cued and picture-cued trials enabled us to examine the temporal profiles and cortical networks selectively mediating the interface between odors and language, with a specific focus on the contributions of the OFC and ATL.

Materials and Methods

Semantic priming paradigm.

Semantic priming occurs when the contextual “meaning” of a cue stimulus facilitates the encoding of a subsequent target stimulus. Effects of semantic priming on brain responses are reliably measured by ERPs (N400 effect) and fMRI (cross-adaptation effect; for review, see Kutas and Federmeier, 2011; Henson and Rugg, 2003). Here we adapted a priming procedure previously used in our group to study object–word interactions in the visual modality (Hurley et al., 2009, 2012), but with the inclusion of an olfactory-cued condition. Although previous ERP studies have used odor cues to assess priming effects in response to target pictures (Grigor et al., 1999; Sarfarazi et al., 1999; Castle et al., 2000; Kowalewski and Murphy, 2012), the absence of any direct comparison to nonolfactory primes made it difficult to infer whether the observed findings were specific to the olfactory modality or merely a general property of semantic priming per se. Moreover, participants were not asked to make trial-specific decisions regarding cue–target congruency, and thus ERP results were not related to simultaneous cognitive performance.

In an initial ERP study, we measured the N400 effect, an electrographic signature that occurs around 400 ms after the onset of semantically nonmatching, compared with matching, stimuli (Kutas and Hillyard, 1980; Van Petten and Rheinfelder, 1995). The N400 is thought to reflect the semantic integration of stimuli based on contextual predictive coding (Chwilla et al., 1995; Kutas and Federmeier, 2000) and can be elicited by semantically incongruent (vs congruent) odors (Grigor et al., 1999; Kowalewski and Murphy, 2012). We hypothesized that the amplitude of the N400 response to target words would depend not only on their semantic congruency, but also on the sensory system by which the cues were delivered (i.e., olfactory or visual), implying a specific odor–language interface at this processing stage.

In a complementary study, we implemented an fMRI paradigm of cross-adaptation that is centered on the idea that repeated exposure to a particular stimulus feature (e.g., object size or identity) elicits a response decrease in brain regions encoding that feature (Henson and Rugg, 2003). Such methods have been used successfully with visual (Buckner et al., 1998; Dehaene et al., 2001; Vuilleumier et al., 2005), cross-linguistic (Holloway et al., 2013), and olfactory (Gottfried et al., 2006; Li et al., 2010) stimuli. In the context of this experiment, if the semantic meaning of an object cue is similar to the subsequent target word, then word-evoked fMRI responses should be attenuated in brain areas mediating odor–lexical integration compared with when the cue and target are semantically dissimilar.

Participants.

Participants were healthy young adult volunteers who received monetary compensation for their participation in this study, which was approved by the Northwestern University Institutional Review Board. All participants reported normal or corrected-to-normal vision, no smell or taste impairment, and no neurological or psychiatric conditions, and all were fluent speakers of English. The ERP experiment included 15 participants (10 women; mean age, 25.1 years; 14 right-handed). The fMRI experiment included 14 participants, none of whom had participated in the ERP experiment. fMRI data from two participants were lost because of equipment failure, and one participant was unable to participate because of unexpected claustrophobia, resulting in an effective sample of 11 participants (seven women; mean age, 25.6 years; all right-handed).

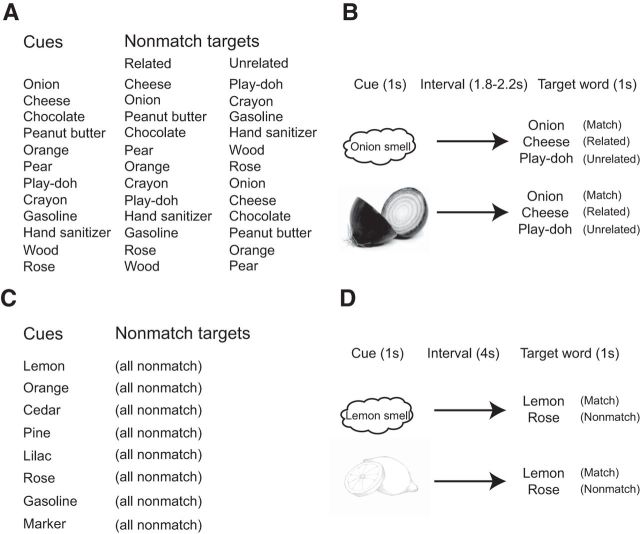

ERP stimuli and task.

Twelve common household objects (six food objects and six nonfood objects) were selected for the ERP experiment (Fig. 1A). Picture-cue stimuli were composed of colored photographs of each object. For odor-cue stimuli, natural odor sources were used, with the exception of orange, pear, rose, and cedarwood, which were perfume-essential oils. Four foil odors and pictures (coconut, coffee, rubber band, and pine) were also used in filler trials to control for response bias (see below). Target words (nouns) were presented in written form in the center of the screen. Cues and targets were paired together in three levels of semantic congruency: on matching trials, the object cue was paired with its referential noun; on related nonmatching trials, the cue was paired with a word from the same category or class of objects; and on unrelated nonmatching trials, food object cues were paired with nonfood noun labels, and vice versa (Fig. 1A). In a prior pilot study, nine healthy participants (five women; mean age, 28.1 years) rated the perceptual similarity between the odors from related pairings (e.g., chocolate and peanut butter odors) and between the odors from unrelated pairings (e.g., chocolate and hand sanitizer) on a 10-point scale. Similarity ratings were higher for odors from related pairings (M, 5.36; SD, 1.99) compared with unrelated pairings (M, 3.03; SD, 1.95; F(1,16) = 37.5, p < 0.001), validating our choice of object category memberships.

Figure 1.

Stimuli and trial timings. A, Object cues used in the ERP experiment and their target words on nonmatching trials. On matching trials, targets were the names of each object. B, Trial timing in the ERP experiment. Olfactory or visual object cues were followed by written word targets, each with a duration of 1 s with a variable interstimulus interval. C, Object cues used in the fMRI experiment. On matching trials, the object was paired with its name, and on nonmatching trials, the object was paired (one time each) with the names of the other seven objects. D, Trial timing in the fMRI experiment. Olfactory or visual object cues were followed by auditory word targets, each with an approximate duration of 1 s and a fixed 4 s interstimulus interval.

Before the start of the ERP experiment, participants were familiarized with the stimulus object cues and their corresponding target words, and they received a brief practice session to optimize subsequent task performance. The ERP sessions were divided into three odor–word blocks and three picture–word blocks, which alternated during the experiment. Each block consisted of 48 trials. It is worth emphasizing that odor-cued and picture-cued trials were never intermingled but always occurred in separate blocks. On picture blocks, participants viewed a computer screen where the text “get ready!” was shown for 3 s, followed by a picture cue that was presented for 1 s (Fig. 1B). After a jittered interstimulus interval of 1.8–2.2 s, the participants viewed a written word target on the screen for 1 s and pressed one of two buttons to indicate whether the word was a match or nonmatch to the preceding cue. A cross-hair was presented for 2–4 s between trials to maintain central gaze fixation. On odor blocks, the procedure was identical to picture blocks, with the exception that participants received a cue to sniff (green cross-hair, 1 s duration). A bottle containing the odor was placed directly under the participant's nose for the duration of the sniff cue. In a pre-experimental session, participants were trained to remain still while sniffing, to maintain fixation on the visual sniff cue and to produce a uniform sniff for the full duration of the cue to minimize EEG muscle artifact.

Per block, each of the 12 object cues was presented a total of three times, paired once each with matching, related nonmatching, and unrelated nonmatching target words (Fig. 1B). This created a potential response bias as there were two nonmatching trials for every matching trial. Therefore, as in previous studies using this design (Hurley et al., 2009), we introduced filler trials to balance the proportion of match and nonmatch responses. Odor and picture stimuli used in filler trials (coconut, coffee, rubber band, and pine) were intentionally different from those used as test stimuli and were always followed by a matching word target. Because filler stimuli could not be used to assess the N400 effect, behavioral and ERP data from filler trials were discarded before analysis. Each of the six blocks consisted of 36 relevant trials (plus 12 discarded foil trials) and was 8 min in length. Participants were given a short break between blocks, resulting in an ERP session that included 288 trials, and lasted for ∼50 min.
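The trial counts described above can be checked with a short bookkeeping sketch. It uses only the numbers stated in the text (12 test cues, three congruency levels, 12 filler trials per block, six blocks); the variable names are illustrative and are not taken from the original analysis scripts.

```python
# Trial bookkeeping for the ERP session, using the counts given in the text.
N_CUES = 12      # test object cues per block
N_FILLERS = 12   # filler (foil) trials per block, always followed by a matching word
N_BLOCKS = 6     # three odor-word blocks and three picture-word blocks

# Each test cue is paired once with each congruency level within a block.
test_trials = {
    "match": N_CUES,
    "related_nonmatch": N_CUES,
    "unrelated_nonmatch": N_CUES,
}

# Correct "match" and "nonmatch" responses per block, fillers included.
match_responses = test_trials["match"] + N_FILLERS
nonmatch_responses = test_trials["related_nonmatch"] + test_trials["unrelated_nonmatch"]

relevant_per_block = sum(test_trials.values())
trials_per_block = relevant_per_block + N_FILLERS
total_trials = trials_per_block * N_BLOCKS

print(match_responses, nonmatch_responses)   # 24 24
print(relevant_per_block, trials_per_block)  # 36 48
print(total_trials)                          # 288
```

With the fillers included, the two response options are equally likely within a block, which is the balance the filler trials were introduced to achieve.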

Percent accuracy on the cue-word matching task was determined by averaging across trials for each condition and each participant. Data were then submitted to a three-by-two repeated-measures ANOVA, with factors of cue–target congruency (three levels: matching, related nonmatching, unrelated nonmatching) and cue modality (two levels: olfactory, visual). Condition-specific response times (RTs), measured from the onset of the target word, were similarly analyzed using repeated-measures ANOVA, with follow-up pairwise post hoc t tests as appropriate. Statistical significance was set at p < 0.05 (two-tailed; except where noted).

ERP data acquisition and analysis.

EEG data were recorded from 32 scalp electrodes in an elastic cap, using a BioSemi ActiveTwo high-impedance amplifier (BioSemi Instrumentation). EEG was acquired at a sampling rate of 512 Hz, re-referenced off-line to averaged mastoid channels, and high-pass filtered at 0.01 Hz. Data were epoched from −100 to 800 ms relative to word onset and baseline corrected to the 100 ms prestimulus interval. Electro-ocular artifacts were monitored using electrodes placed below and lateral to the eyes. An eyeblink-correction algorithm was implemented using EMSE software (Source Signal Imaging) to remove blinks from the EEG trace. Epochs with remaining electro-ocular and other muscle artifacts were excluded from analysis, as were trials with inaccurate behavioral responses. This resulted in an average of 30.1 (SD, 5.2) trials per condition (84% of trials) contributing to odor ERPs and 31.2 (SD, 4.3) trials per condition (87% of trials) contributing to picture ERPs.
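As a rough illustration of the epoching and baseline-correction step, the following sketch cuts a single-channel epoch from −100 to 800 ms around a word onset and subtracts the mean of the 100 ms prestimulus interval. The function name and the list-based signal representation are assumptions made for illustration; the original processing was carried out in EMSE, and this is not a reproduction of that pipeline.

```python
def epoch_and_baseline(signal, onset_idx, srate=512.0, tmin=-0.1, tmax=0.8):
    """Cut one epoch around a word onset and subtract the prestimulus mean.

    signal    : list of samples from one EEG channel (hypothetical input format)
    onset_idx : sample index of word onset
    """
    n_pre = round(-tmin * srate)   # prestimulus samples (51 at 512 Hz)
    n_post = round(tmax * srate)   # poststimulus samples (410 at 512 Hz)
    start, stop = onset_idx - n_pre, onset_idx + n_post
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    epoch = signal[start:stop]
    # Baseline is the mean over the prestimulus portion of the epoch.
    baseline = sum(epoch[:n_pre]) / n_pre
    return [v - baseline for v in epoch]


# Toy signal: a constant offset of 2.0 that steps to 3.0 at word onset.
toy_signal = [2.0] * 600 + [3.0] * 600
toy_epoch = epoch_and_baseline(toy_signal, onset_idx=600)
```

In the toy example, the prestimulus samples become 0 after correction and the poststimulus samples retain only the 1.0 step relative to baseline.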

Inferential analyses focused on the N400 component, which was measured as the mean EEG amplitude from 300 to 450 ms after word target onset in 15 electrodes where N400 effects were found to be maximal in previous studies (Hurley et al., 2009, 2012). To compare the spatial distribution of N400 effects across cue modality (odor-cued vs picture-cued), electrodes were grouped into anterior, central, and posterior scalp regions, each containing five electrodes (anterior cluster: AF3, AF4, F3, Fz, F4; central cluster: Fc1, Fc2, C3, Cz, C4; posterior cluster: Cp1, Cp2, P3, Pz, P4). Significance of N400 effects was examined via a three-factor repeated-measures ANOVA, with factors of cue–target congruency (three levels: match, related nonmatch, unrelated nonmatch), cue modality (two levels: olfactory, visual), and electrode region (three levels: anterior, central, posterior), collapsed across the five electrodes contained within each regional cluster. The N400 effect is characterized as greater negative amplitude on nonmatching compared with matching trials, so the congruency factor was examined with contrast weights of −1 to both the related nonmatching and the unrelated nonmatching conditions and a contrast weight of +2 to the matching condition, following our prior methods (Hurley et al., 2009, 2012).
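The N400 measure and the congruency contrast can be sketched as follows. The 300–450 ms window and the contrast weights (+2, −1, −1) come from the text; the function names, the data layout, and the example amplitudes are hypothetical and serve only to show the sign convention.

```python
def mean_amplitude(epoch, times, t_start=0.300, t_end=0.450):
    """Mean amplitude of an epoch within the N400 window (times in seconds)."""
    window = [v for v, t in zip(epoch, times) if t_start <= t <= t_end]
    return sum(window) / len(window)


# Contrast weights stated in the text: +2 for matching, -1 for each nonmatching level.
CONTRAST = {"match": 2.0, "related_nonmatch": -1.0, "unrelated_nonmatch": -1.0}


def n400_contrast(cond_means):
    """Weighted sum of condition means; positive when nonmatching trials are more negative."""
    return sum(CONTRAST[c] * cond_means[c] for c in CONTRAST)


# Hypothetical condition means in microvolts (not data from the study).
example_means = {"match": -1.0, "related_nonmatch": -3.0, "unrelated_nonmatch": -3.5}
example_effect = n400_contrast(example_means)  # 2*(-1.0) + 3.0 + 3.5 = 4.5
```

Because the weights sum to zero, the contrast is insensitive to an amplitude offset shared by all three conditions and reflects only the difference between matching and nonmatching words.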

For visualization purposes, both grand-averaged ERP plots and topographic plots were created. Grand-averaged ERPs were low-pass filtered at 25 Hz for visualization (but not for inferential analysis). Topographic plots were created using EMSE Suite software. To visualize the distribution of N400 effects, the N400 amplitude from matching trials was subtracted from the amplitude on nonmatching trials (average of related nonmatching and unrelated nonmatching), and the resulting values were plotted across the scalp surface.

fMRI stimuli and task.

Eight common household objects were selected as stimuli for the fMRI experiment (Fig. 1C). Odors were obtained from essential oils, with two exceptions. Gasoline odor was obtained from the natural product, and marker pen odor was obtained from the monomolecular compound n-butanol. In the fMRI scanner, odor stimulus delivery was accomplished by means of a computer-controlled olfactometer, following our prior techniques (Bowman et al., 2012). Picture-cue stimuli were colored line drawings. Target spoken words were presented through noise-cancelling headphones in the fMRI scanner. Stimulus presentation and response monitoring were performed using Presentation software.

Before the start of the fMRI experiment, participants were familiarized with the odors, pictures, and word stimuli, and they underwent a brief practice session to optimize subsequent task performance. Scan sessions were divided into two odor-cued blocks and two picture-cued blocks, which alternated during the experiment. Each block consisted of 56 trials. As with the ERP experiment, odor-cued and picture-cued trials always occurred in separate blocks. On picture-cued blocks, participants viewed a computer screen where the text “get ready!” was shown for 3 s, followed by a picture cue that was presented for 1 s (Fig. 1D). After an interstimulus interval of 4 s, the participants heard a target word through headphones and pressed one of two buttons to indicate whether the word was a match or nonmatch to the preceding cue. A black cross-hair was then presented for 12 s between trials to maintain central gaze fixation. On nonmatch trials, each object cue was paired once with each of the other seven target words to equate word item frequencies across match and nonmatch trials and across cue modalities.

On odor-cued blocks, the procedure was identical to picture blocks, with the exception that participants received a cue to sniff (green cross-hair, 1 s duration). During the presniff (get ready) period, participants were instructed to slowly exhale through their nose to enable effective odor sampling after presentation of the sniff cue. A computer-controlled flow-dilution olfactometer was used to deliver odor stimuli in synchrony with sniff cues (Bowman et al., 2012). Participants were instructed to remain as still as possible while sniffing to minimize movement artifact. Each of the four blocks included 28 match trials and 28 nonmatch trials, delivered in pseudorandom sequence order, for a total of 224 trials. Each block took 14 min, and participants were given a short break between blocks, resulting in a total fMRI session of ∼60 min.

fMRI data acquisition and analysis.

Functional imaging was performed using a Siemens Trio Tims 3T scanner to acquire gradient-echo T2*-weighted echoplanar images (EPIs) with blood oxygen level-dependent contrast. A 12-channel head coil and an integrated parallel acquisition technique, GRAPPA (GeneRalized Autocalibrating Partially Parallel Acquisition), were used to improve signal recovery in medial temporal and basal frontal regions. Image acquisition was tilted 30° from the horizontal axis to reduce susceptibility artifact in olfactory areas. Four runs of ∼350 volumes each were collected in an interleaved ascending sequence (40 slices per volume). Imaging parameters were as follows: repetition time, 2.51 s; echo time, 20 ms; slice thickness, 2 mm; gap, 1 mm; in-plane resolution, 1.72 × 1.72 mm; field of view, 220 × 206 mm; matrix size, 128 × 120 voxels.

Data preprocessing was performed in SPM5 (http://www.fil.ion.ucl.ac.uk/spm/). Images were spatially realigned to the first volume of the first session and slice-time adjusted. This was followed by spatial normalization to a standard EPI template, resulting in a functional voxel size of 2 mm, and smoothing with a 6 mm (full-width half-maximum) Gaussian kernel. Participant-specific T1-weighted anatomical scans (1 mm³) were also acquired and coregistered to the mean normalized EPI to aid in the localization of regional fMRI activations.

After image preprocessing, the event-related fMRI data were analyzed in SPM5 using a general linear model (GLM) in combination with established temporal convolution procedures. Eight onset vectors, consisting of the 4 conditions (match/nonmatch odors and pictures) × 2 blocks, were time-locked to the onset of the target word and entered into the model as stick (δ) functions. These were then convolved with a canonical hemodynamic response function (HRF) to generate condition-specific regressors. Temporal and dispersion derivatives of the HRF were also included as regressors to allow for variations in latency and width of the canonical function. Nuisance regressors included the six affine movement-related vectors derived from spatial realignment. A high-pass filter, with a cutoff frequency of 1/128 Hz, was used to eliminate slow drifts in the hemodynamic signal. Temporal autocorrelations were modeled using an AR(1) process.
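The construction of a condition-specific regressor can be illustrated with a minimal sketch: stick functions at the target-word onsets are convolved with a hemodynamic response function and then sampled once per scan. The double-gamma shape and its parameters below are an assumption chosen to resemble commonly used defaults; they are not confirmed to be the exact SPM5 canonical HRF, and the temporal and dispersion derivatives, nuisance regressors, filtering, and AR(1) modeling are omitted. The function names are illustrative.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, defined as 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (math.gamma(shape) * scale ** shape)


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Assumed HRF shape: a positive gamma response minus a scaled, later undershoot."""
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, under_shape)


def build_regressor(onsets_s, n_scans, tr=2.51, dt=0.1, hrf_len=32.0):
    """Convolve stick functions at the given onsets (s) with the HRF; sample once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    sticks = [0.0] * n_fine
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += 1.0
    kernel = [double_gamma_hrf(i * dt) for i in range(int(round(hrf_len / dt)))]
    conv = [0.0] * n_fine
    for i, s in enumerate(sticks):
        if s:
            for j, k in enumerate(kernel):
                if i + j < n_fine:
                    conv[i + j] += s * k
    # Take the value of the fine-grained prediction at the start of each scan.
    return [conv[min(int(round(scan * tr / dt)), n_fine - 1)] for scan in range(n_scans)]


# Predicted response to a single event at time 0 over 20 scans.
single_event = build_regressor([0.0], n_scans=20)
```

For a single event, the sampled prediction rises to a peak about 5 s after onset and then dips below baseline, which is the shape the GLM scales by each condition's β value.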

Model estimation based on the GLM yielded condition-specific regression coefficients (β values) in a voxel-wise manner for each participant. In a second step (random-effects analysis), participant-specific linear contrasts of these β values were entered into a series of one-sample t tests or ANOVAs, each constituting a group-level SPM (statistical parametric map) of the T statistic. To investigate olfactory and visual cross-adaptation effects, we conducted repeated-measures ANOVAs to compare magnitudes of fMRI activation evoked at the onset of the target word in (1) olfactory-cued nonmatch versus match conditions, (2) visual-cued nonmatch versus match conditions, and (3) the interaction of cue (olfactory/visual) and congruency (nonmatch/match). In all comparisons, the prediction was that word-evoked responses would be selectively diminished in match conditions, reflecting cross-adaptation induced by the preceding, semantically related cue.

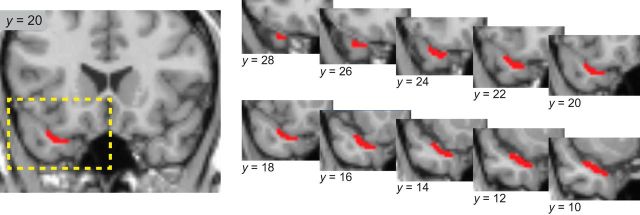

Regions of interest (ROIs) included the OFC and ATL. For statistical analysis of reported fMRI activations, we used small-volume correction for multiple comparisons. In the case of OFC, this was based on a sphere of 12 mm radius centered on a previously published coordinate [x = 32, y = 40, z = −16; MNI (Montreal Neurological Institute) space] identified in a prior fMRI cross-adaptation study of olfactory semantic quality (Gottfried et al., 2006). In the case of ATL, there are few prior reports of odor-evoked fMRI activity in this region. Therefore, we drew an anatomical ROI of “olfactory” ATL on the participant-averaged, coregistered T1-weighted MRI scan using MRIcron (http://www.nitrc.org/projects/mricron), based on anatomical tracer studies (Morán et al., 1987) showing that piriform (olfactory) cortex projects to the dorsal medial surface of the ATL. This ROI included the anterior-most dorsal extent of ATL (i.e., temporal pole) and extended posteriorly to the limen insula, where the dorsal temporal and basal frontal lobes first join (see Fig. 4). Statistical significance was set at p < 0.05, corrected for small volume.
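The spherical OFC search volume can be expressed as a simple distance test. The center coordinate and the 12 mm radius are taken from the text; the function name and the example coordinates are illustrative only.

```python
import math

OFC_CENTER_MNI = (32.0, 40.0, -16.0)  # coordinate from Gottfried et al. (2006), as cited in the text
RADIUS_MM = 12.0


def in_sphere(coord, center=OFC_CENTER_MNI, radius=RADIUS_MM):
    """True if an MNI coordinate (mm) lies within the spherical search volume."""
    return math.dist(coord, center) <= radius


inside = in_sphere((30.0, 44.0, -14.0))   # a few millimeters from the center
outside = in_sphere((32.0, 54.0, -16.0))  # 14 mm anterior to the center
```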

Figure 4.

Anatomical mask of the dorsomedial ATL, corresponding to the area receiving direct projections from primary olfactory cortex. Left, Boundaries of the ATL mask are shown superimposed on a single coronal T1-weighted section of the normalized, single-participant canonical MRI scan from SPM5 [y = 20 in MNI (Montreal Neurological Institute) coordinate space]. Right, The dashed yellow rectangle corresponds to the area of ATL displayed in serial coronal sections of the mask, spanning its full extent from y = 28 (anterior) to y = 10 (posterior). See Materials and Methods for more details.

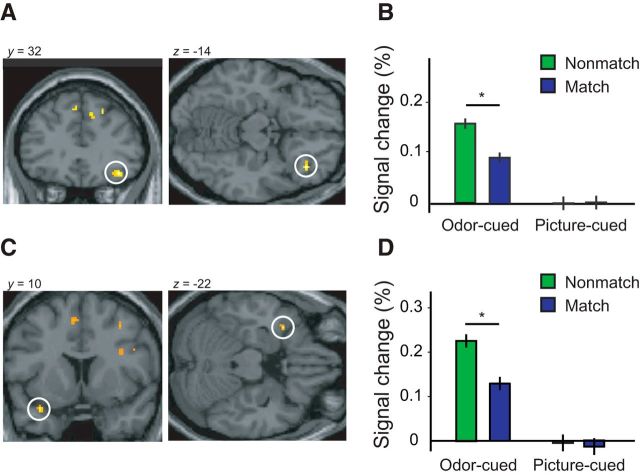

To prevent the possibility of fMRI statistical biases arising from circular inference (Kriegeskorte et al., 2009), we conducted a “leave-one-subject-out” cross-validation analysis for regions identified in the GLM. In this method, condition-specific regression coefficients from 10 participants (i.e., leaving out the 11th participant) were entered into an ANOVA, as in the previous model. Identification of the voxel most significant for the olfactory-specific, cross-adaptation (interaction) effect was then used to extract percentage signal change values from the 11th participant, thereby maintaining participant independence between voxel selection and voxel plotting. This procedure was iterated 11 times, with each participant “left out” once from the voxel selection step. Cross-validated interaction effects are shown in Figure 5, B and D.
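The logic of the leave-one-subject-out procedure can be sketched as follows. For simplicity, the voxel-selection statistic here is the mean interaction effect across the retained participants, which stands in for the ANOVA used in the actual analysis; the function name, the data layout, and the toy values are hypothetical.

```python
def loso_extract(effect_maps, signal_maps):
    """Leave-one-subject-out voxel selection.

    effect_maps[s][v] : interaction effect for participant s at voxel v (used for selection)
    signal_maps[s][v] : percent signal change for participant s at voxel v (used for plotting)
    Returns one held-out value per participant.
    """
    n_subj = len(effect_maps)
    n_vox = len(effect_maps[0])
    held_out_values = []
    for left_out in range(n_subj):
        training = [effect_maps[s] for s in range(n_subj) if s != left_out]
        # Simplified group statistic: mean effect over the retained participants.
        group_mean = [sum(m[v] for m in training) / len(training) for v in range(n_vox)]
        peak_voxel = max(range(n_vox), key=group_mean.__getitem__)
        # Data are read out only from the participant who played no part in selection.
        held_out_values.append(signal_maps[left_out][peak_voxel])
    return held_out_values


# Toy example with three participants and three voxels (not data from the study).
toy_effects = [[0, 1, 5], [0, 2, 1], [0, 3, 1]]
toy_signal = [[10, 11, 12], [20, 21, 22], [30, 31, 32]]
toy_result = loso_extract(toy_effects, toy_signal)  # [11, 22, 32]
```

In the toy example, participant 1's large effect at the third voxel does not influence which voxel is used to read out participant 1's own signal, which is the independence the procedure is meant to guarantee.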

Figure 5.

Results from the fMRI experiment. A, Right OFC activation for nonmatching > matching odor-cued trials. B, Right OFC interaction effect of cue modality × target word congruency (mean ± SE; condition-specific error bars adjusted for between-participants differences), indicating that cross-adaptation for matching trials was present in odor-cued but not in picture-cued conditions. C, Left ATL activation for nonmatching > matching odor-cued conditions. D, Left ATL interaction effect of cue modality × target word congruency, indicating that cross-adaptation for matching trials was present in odor-cued but not in picture-cued trials.

Finally, to characterize the fMRI time courses for cross-adapting regions identified in the primary GLM model, the preprocessed event-related fMRI data were analyzed using a finite impulse response (FIR) model. The FIR analysis was completely unconstrained (unfitted) and made no assumptions about the shape of the temporal response. Time courses for each condition type were characterized using a set of 10 basis functions (bins), each 2.5 s in duration, with the first bin aligned to the initial onset of each event. Condition-specific responses from each of the 10 bins were estimated in a voxel-wise manner per participant. Data were then extracted from the peak voxels of interest identified in the GLM, converted to percentage signal change, and assembled into group-averaged time series for each critical condition. For graphical depiction of within-participant variability (see Figs. 5B,D, 6A,B), condition-specific error bars were adjusted for between-participants differences by subtracting the mean across each modality for each participant (Cousineau et al., 2005; Antony et al., 2012).
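The error-bar adjustment can be illustrated with a small sketch: each participant's values are centered on that participant's own mean across the conditions of one modality, and the standard error is then computed across participants. This follows the description in the text; the function name and the example values are hypothetical, and any further correction factors are not included.

```python
import statistics


def within_subject_se(data):
    """Standard error per condition after removing between-participant offsets.

    data[p][c] : value for participant p in condition c (conditions within one modality)
    """
    n = len(data)
    n_cond = len(data[0])
    # Subtract each participant's own mean across conditions.
    centered = [[x - statistics.fmean(row) for x in row] for row in data]
    return [
        statistics.stdev([centered[p][c] for p in range(n)]) / n ** 0.5
        for c in range(n_cond)
    ]


# Three hypothetical participants with very different overall signal levels
# but an identical match/nonmatch difference.
toy_data = [[1.0, 2.0], [11.0, 12.0], [21.0, 22.0]]
toy_se = within_subject_se(toy_data)  # [0.0, 0.0]
```

The toy example shows the intent of the adjustment: large differences in overall signal level between participants no longer inflate the error bars when the condition effect itself is consistent.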

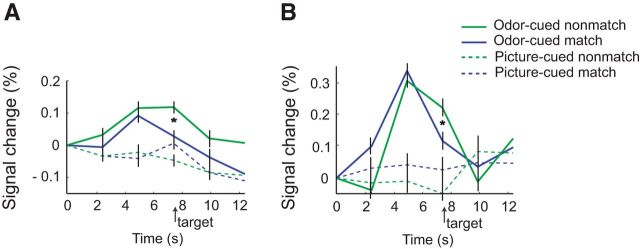

Figure 6.

Time-series profiles of fMRI signal change, aligned to trial onset and normalized across conditions at time 0. A, Time-course peaks in right OFC show little difference between match and nonmatch trials in response to the odor cue itself (∼5 s) but diverge in response to subsequent presentation of the target word (arrow, ∼7.5 s). Minimal reactivity is seen in association with the picture-cued trials. *p < 0.05, one-tailed, significant interaction between modality and congruency. B, In left ATL, odor cue-evoked fMRI response peaks (∼5 s) give way to cross-adapting profiles for matching versus nonmatching conditions (*; ∼7.5 s), whereas fMRI response profiles for picture-cued conditions remain relatively unreactive.

Respiratory recordings.

Nasal airflow was monitored continuously during fMRI scanning with a spirometer affixed to a nasal cannula positioned at the participant's nostril. The respiratory signal was analyzed off-line in Matlab. First, the respiratory time series from each run was smoothed using a low-pass FIR filter (cutoff frequency at 1 Hz) to remove high-frequency noise and then scaled to have a SD of 1. Next, participant-specific respiratory epochs were time-locked to the event onsets for each of the four conditions (odor cue; odor-associated word target; picture cue; and picture-associated word target), divided further into match and nonmatch conditions. For the odor-cue condition, the mean sniff-aligned respiratory waveform for each condition was calculated by averaging across all trials, from which the mean inhalation volume, peak, and duration were computed. For the other three conditions, there was no explicit instruction to sniff, so in these instances, the volume, peak, and duration of the first inhalation after event onset, as well as mean amplitude of respiration during cue and target presentations, were computed for all conditions on a trial-by-trial basis and then averaged across trials, following our prior methods (Gottfried et al., 2004). Because of technical problems in two participants, respiratory results are based on a total of nine participants. Paired t tests comparing match and nonmatch trials were used to assess statistical significance, independently for each of the four respiratory parameters, with a criterion of p < 0.05 (two-tailed).

Results

ERP study

Behavior

During the ERP experiment, participants were presented with either an olfactory cue or a visual cue, followed by one of three types of target words: matching, related nonmatching, or unrelated nonmatching. On each trial, participants indicated whether the presented word matched the preceding cue, the prediction being that responses would be faster and more accurate to words preceded by a matching, semantically congruent cue. Comparison of matching and nonmatching word conditions enabled us to characterize semantic effects between olfactory and visual modalities. Based on the imprecision with which humans map odor percepts onto lexical representations (Lawless and Engen, 1977; Cain, 1979; Cain et al., 1998; Larsson, 2002; Stevenson et al., 2007), we hypothesized that participants would exhibit weaker or less efficient olfactory priming at the behavioral level and that olfactory-cued N400 responses would exhibit regional and temporal differences compared with visual-cued responses.

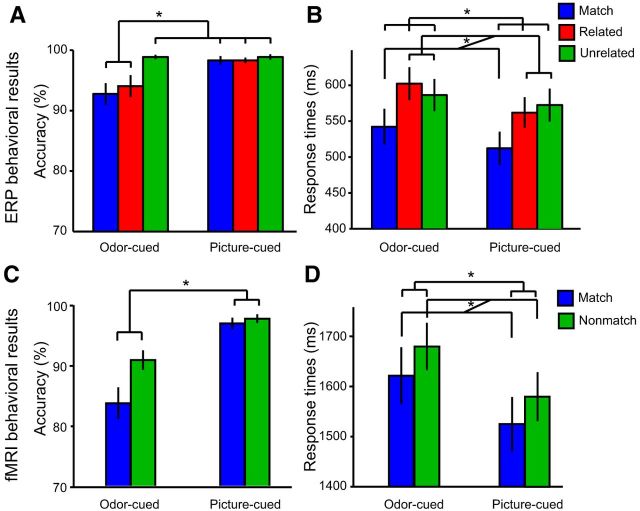

Analysis of response accuracy demonstrated significant main effects of both cue–target congruency (F(2,28) = 5.5, p = 0.01) and cue modality (F(1,14) = 14.0, p = 0.002), but there was also a significant congruency-by-modality interaction (F(2,28) = 3.5, p = 0.044; Fig. 2A). Post hoc t tests revealed that accuracy was lower on matching odor trials versus picture trials (t(14) = 3.1, p = 0.009), indicating fewer “hits” for matching words. Accuracy was also lower on related odor trials compared with related picture trials (t(14) = 2.2, p = 0.041), indicating more frequent “false alarms” to words from the same category as the cue. Accuracy did not significantly differ by cue modality on unrelated trials (t(14) = 0, p = 1.0), being near ceiling for both modalities. Analysis of response times (RTs) showed a slightly different profile; participants were significantly faster at identifying matching versus nonmatching words (related or unrelated), regardless of modality (repeated-measures ANOVA; main effect of cue–target congruency: F(2,28) = 15.5, p < 0.001; Fig. 2B). At the same time, responses were significantly faster for visual-cued versus olfactory-cued words, regardless of congruency (main effect of cue modality: F(1,14) = 6.0, p = 0.028), though there was no interaction between congruency and modality (F(2,28) = 1.6, p = 0.23).

Figure 2.

Behavioral results in ERP and fMRI experiments. Cue modality (olfactory, visual) is labeled below each set of bars, and cue–target congruency is indicated by color. Significant comparisons (p < 0.05) are indicated by an asterisk. A, Accuracy (mean ± SE) during the ERP experiment. Lower accuracy on matching and related odor trials, compared with other trial types, showed that participants were more likely to incorrectly reject the odors' names, and to falsely accept the names of other odors from the same semantic category, compared with picture trials. B, Response times (mean ± SE) in the ERP experiment were slower on odor trials than on picture trials and on nonmatching (related and unrelated) versus matching trials. C, In the fMRI experiment, accuracy was also lower on odor-cued compared with picture-cued trials. D, RTs in the fMRI experiment were slower on odor than picture trials and on nonmatching versus matching trials.

Together, these behavioral results suggest that participants can effectively use both olfactory and visual cues to establish linkages with their lexical referents. However, these processes are slower and less precise in the olfactory modality, largely because of difficulty in differentiating between veridical word targets and categorically related lures.

N400 effect

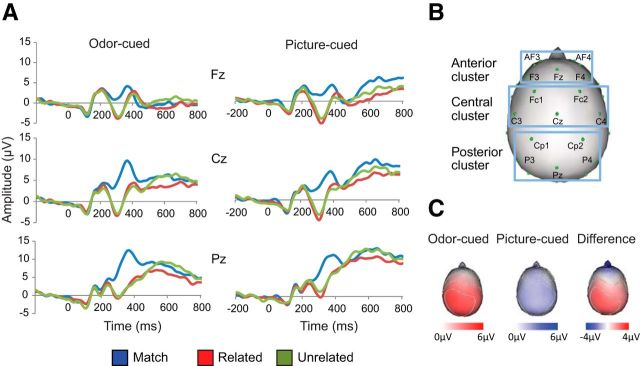

As a rapid online measure of brain activity, the N400 represents a unique window into how sensory context and predictive information shape processing of an upcoming word (Kutas and Federmeier, 2011). ERP analysis confirmed that the topographic distribution of the N400 component differed according to whether the target word was preceded by an odor cue or a picture cue (Fig. 3), with a significant three-way interaction between congruency, modality, and region (F(1,14) = 17.6, p = 0.001). Follow-up analyses revealed that the N400 effect on picture-cued trials was significant at all three topographic regions (anterior: F(1,14) = 4.8, p = 0.046; central: F(1,14) = 9.4, p = 0.008; posterior: F(1,14) = 15.0, p = 0.002) but was restricted to central and posterior sites on odor-cued trials (anterior: F(1,14) = 0.93, p = 0.35; central: F(1,14) = 19.7, p = 0.001; posterior: F(1,14) = 61.5, p < 0.001). The interaction between modalities revealed that the N400 effect at the posterior electrode region was of higher amplitude on odor-cued trials than on picture-cued trials (F(1,14) = 4.6, p = 0.05). N400 responses in more difficult (related nonmatch) trials did not differ from those in easier (unrelated nonmatch) trials, and this was true for both odor-cued (F(1,14) = 0.033, p = 0.86) and picture-cued (F(1,14) = 0.035, p = 0.85) conditions, consistent with previous data (Hurley et al., 2009, 2012). These findings indicate that N400 topography was affected by the sensory modality of the cue, suggesting dissociable neural generators.

Figure 3.

Results from the ERP experiment. A, Grand-averaged ERP waveforms for the experimental conditions, from midline electrodes in anterior, central, and posterior regions of the scalp. The N400 was of significantly higher amplitude to nonmatching versus matching targets in all regions, except in anterior electrodes on olfactory-cued trials (top left). B, Electrode clusters used to examine significance in each scalp region. C, Scalp topography of the N400 effect (nonmatch minus match amplitude) for odor-cued trials (left), picture-cued trials (middle), and the difference between the two (right). N400 effects were relatively larger in posterior locations on odor-cued trials and relatively larger in anterior locations on picture-cued trials.

Based on the behavioral finding of slower RTs on odor trials, we performed a follow-up analysis to investigate whether there were modal differences in the latency and duration of N400 effects. We extracted N400 amplitudes separately in 25 ms epochs from each of the five central and five posterior electrode sites where N400 effects were significant for both cue modalities. The significance of the N400 effect (using the same contrast of match trials vs related and unrelated nonmatch trials) was evaluated separately at each 25 ms epoch. Odor-cued N400 effects had a duration of ∼175 ms, first reaching significance at the 250–275 ms epoch (F(1,14) = 8.9, p = 0.01), and remained significant (at α ≤ 0.05) until the 400–425 ms epoch (F(1,14) = 5.0, p = 0.043). In contrast, the picture-cued N400 effects had a duration of ∼125 ms, achieving significance from the 275–300 ms epoch (F(1,14) = 17.0, p = 0.001) until the 375–400 ms epoch (F(1,14) = 8.2, p = 0.013).
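The window arithmetic behind these durations can be sketched as follows. This is only an illustrative sketch: the function names are hypothetical, and it assumes (as the text implies with "remained significant until") that every 25 ms epoch between the first and last significant epoch was also significant. Only the epoch boundaries reported above are used; no per-epoch statistics are reproduced.

```python
EPOCH_MS = 25  # epoch width reported in the text


def epoch_edges(first_start_ms, last_start_ms, width_ms=EPOCH_MS):
    """Return (start, end) pairs for consecutive epochs, including the last one."""
    return [(s, s + width_ms)
            for s in range(first_start_ms, last_start_ms + 1, width_ms)]


def window_duration(epochs):
    """Duration in ms from the start of the first epoch to the end of the last."""
    return epochs[-1][1] - epochs[0][0]


# Reported boundaries: odor-cued 250-275 ms through 400-425 ms,
# picture-cued 275-300 ms through 375-400 ms.
odor = epoch_edges(250, 400)
picture = epoch_edges(275, 375)

odor_ms = window_duration(odor)            # 175
picture_ms = window_duration(picture)      # 125
extra_ms = odor_ms - picture_ms            # 50 ms longer for odor cues
lead_ms = picture[0][0] - odor[0][0]       # odor effect starts 25 ms earlier
print(odor_ms, picture_ms, extra_ms, lead_ms)
```

Under that contiguity assumption, the odor-cued window spans seven epochs and the picture-cued window five, which gives the ∼175 ms and ∼125 ms durations stated above.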

Finally, we observed that the olfactory task was slightly more difficult than the visual task, as evidenced by longer RTs and lower accuracy, raising the possibility that task difficulty could have contributed to the between-modality N400 differences. To address this issue, we compared ERP responses between the easier unrelated/nonmatching trials (e.g., orange odor cue followed by “wood” word target) with the harder related/nonmatching trials (e.g., orange odor cue followed by “pear” word target). This analysis did not reveal significant N400 differences between these trial types (p = 0.86), even though behavioral task difficulty was greater on related/nonmatching trials than unrelated/nonmatching trials, in terms of accuracy (p = 0.015) and reaction times (p = 0.05). This dissociation between behavioral performance and N400 effects for related and unrelated nonmatching trials suggests that difficulty per se was unlikely to account for the differences between odor-cued and picture-cued N400 effects.

fMRI study

Behavior

A complementary fMRI study was conducted in a separate group of participants to identify the brain regions involved in linking odor percepts to their names. This study also enabled us to validate the modality-specific behavioral differences observed in the ERP experiment. As in the ERP study, participants were presented with an olfactory or visual cue, followed several seconds later by a target word whose meaning matched or did not match the preceding cue. Accuracy on the matching task (Fig. 2C) showed a significant effect of cue modality (F(1,10) = 48.3, p < 0.001), reflecting overall lower performance on odor-cued trials. This was true for both matching trials (olfactory vs visual; p = 0.003) and nonmatching trials (olfactory vs visual; p = 0.001). On the other hand, there were no effects of cue–target congruency (F(1,10) = 2.5, p = 0.148) or congruency-by-modality interaction (F(1,10) = 1.4, p = 0.266). Analysis of RTs (Fig. 2D) showed that participants responded more quickly on matching versus nonmatching trials (main effect of cue–target congruency: F(1,10) = 3.873, p = 0.039, one-tailed), with no effect of cue modality (F(1,10) = 2.059, p = 0.182) or congruency-by-modality interaction (F(1,10) = 0.247, p = 0.630). These findings are in general agreement with the behavioral profiles observed in the ERP experiment, such that participants were faster in identifying matches than nonmatches and had greater difficulty (lower performance accuracy) linking lexical representations to olfactory compared with visual cues.

Cross-adaptation effect

Our main focus in the fMRI experiment was to characterize the differential influence of olfactory and visual cues on the neural substrates of lexical-semantic processing. On odor-cued trials, the comparison of semantically matching with nonmatching word targets revealed significant fMRI cross-adaptation in the right central OFC (x = 38, y = 32, z = −14; Z = 4.20, p = 0.008 corrected for small volume; see Fig. 5A). The same comparison for picture-cued trials did not identify cross-adapting responses in right OFC, even at a liberal threshold of p < 0.05 uncorrected, suggesting that the intersection of cue–target meaning in OFC is selective for the olfactory modality. To assess the modal specificity of this effect, we tested the interaction between cue–target congruency and cue modality and found that semantic cross-adaptation in OFC was more pronounced when words were preceded by olfactory cues than by visual cues (x = 30, y = 36, z = −10; Z = 3.19, p < 0.001). The robustness of these OFC profiles was confirmed using an independent method of statistical verification (“leave-one-participant-out” cross-validation analysis; Kriegeskorte et al., 2009), highlighting a significant cue-by-congruency interaction (F(1,10) = 4.66, p = 0.028, one-tailed), such that match versus nonmatch trials differed for odor-cued conditions (p = 0.01) but not for picture-cued conditions (p = 0.94; see Fig. 5B).

Significant cross-adapting responses were also identified in left dorsomedial ATL (x = −34, y = 10, z = −22; Z = 3.63, p = 0.008 corrected for small volume; Fig. 4) when a target word was preceded by a matching olfactory cue compared with a nonmatching olfactory cue (Fig. 5C). No such effects were observed when target words were preceded by visual cues, even at a liberal threshold of p < 0.05 uncorrected. Condition-specific plots of mean activity from the left ATL revealed that cue–target cross-adaptation was selective for olfactory cues (Fig. 5D), with a significant two-way interaction between cue–target congruency and cue modality (x = −34, y = 10, z = −22; Z = 3.15, p < 0.001). This interaction effect also survived cross-validation statistical testing (interaction of cue and congruency: F(1,10) = 4.23, p = 0.033, one-tailed), whereby responses on nonmatch trials were significantly higher than on match trials for odor-cued conditions (p = 0.009) but not for picture-cued conditions (p = 0.84). At the whole-brain level, no other region exhibited evidence of fMRI cross-adaptation when corrected for multiple comparisons (at a family-wise error of p < 0.05).
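The general logic of a leave-one-participant-out analysis can be sketched as below. This is a generic illustration of the technique rather than the pipeline used in this study: the function names, the dictionary layout, the peak-selection rule, and the toy values are all assumptions introduced for the example. The key property is that the location used to read out a participant's effect is chosen without that participant's data, which avoids the circularity described by Kriegeskorte et al. (2009).

```python
from statistics import mean


def leave_one_out_splits(ids):
    """Yield (held_out, training_ids) so that each participant is held out once."""
    for i, held_out in enumerate(ids):
        yield held_out, ids[:i] + ids[i + 1:]


def peak_voxel(data, training):
    """Hypothetical selection rule: voxel with the largest mean effect in the training group."""
    voxels = data[training[0]].keys()
    return max(voxels, key=lambda v: mean(data[p][v] for p in training))


def cross_validated_effects(data, ids, select_voxel=peak_voxel):
    """Read out each participant's effect at a voxel chosen from the others only.

    data[pid][voxel] holds one effect value per participant and voxel,
    for example a nonmatch-minus-match contrast estimate.
    """
    effects = {}
    for held_out, training in leave_one_out_splits(ids):
        voxel = select_voxel(data, training)         # independent of held_out
        effects[held_out] = data[held_out][voxel]    # unbiased readout
    return effects


# Toy values for illustration only (not data from the study).
toy = {
    "s1": {"a": 1.0, "b": 0.2},
    "s2": {"a": 0.8, "b": 0.1},
    "s3": {"a": 0.1, "b": 0.7},
}
toy_effects = cross_validated_effects(toy, ["s1", "s2", "s3"])
print(toy_effects)
```

The held-out values collected this way can then be entered into a conventional group test, such as the cue-by-congruency ANOVA reported above.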

The above findings were modeled based on the magnitude of fMRI activity evoked at the time of word target onset. However, to the extent that cross-adaptation implies a selective response decrease from the first stimulus (cue) to the second stimulus (word), the current approach is unable to confirm such a profile. For example, it is possible that differences between match and nonmatch conditions (at the time of word onset) could simply reflect differences already present at the time of cue presentation, in the absence of a selective cross-adapting response. Therefore, we implemented a finite impulse response (FIR) model to illustrate the fMRI time courses in OFC and ATL, spanning the entire trial duration from onset of the cue through appearance of the target word (Fig. 6). Importantly, the fact that each of the eight odor cues appeared equal numbers of times in match and nonmatch trials rules out the possibility that time course differences could be attributed to odor stimulus variations between trial types.
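For readers unfamiliar with FIR modeling, the idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the model specification used here: the function names are hypothetical, and the bin width (2.5 s), number of bins, event onsets, and signal values are placeholders chosen for the example. An FIR model assigns one regressor to each post-onset time bin, so the estimated response at each lag is free to take any shape instead of following a canonical hemodynamic function.

```python
def fir_design_matrix(onsets_s, n_scans, tr_s, n_bins):
    """Build a scans x bins FIR design matrix as a list of lists.

    Column k is 1 at the scan that falls k bins after an event onset.
    """
    X = [[0] * n_bins for _ in range(n_scans)]
    for onset in onsets_s:
        first_scan = int(round(onset / tr_s))
        for k in range(n_bins):
            scan = first_scan + k
            if scan < n_scans:
                X[scan][k] = 1
    return X


def fir_betas_nonoverlapping(signal, X):
    """Per-bin response estimates when event windows do not overlap.

    With disjoint windows and no other regressors, the least-squares
    estimate for bin k reduces to the mean signal at lag k across events.
    """
    betas = []
    for k in range(len(X[0])):
        rows = [i for i in range(len(X)) if X[i][k] == 1]
        betas.append(sum(signal[i] for i in rows) / len(rows))
    return betas


# Placeholder design: two events, 20 scans, 2.5 s bins, 6 bins per event.
X = fir_design_matrix(onsets_s=[0.0, 25.0], n_scans=20, tr_s=2.5, n_bins=6)

# Toy signal for illustration only: a response two bins after each onset.
signal = [0.0] * 20
signal[2] = 1.0
signal[12] = 3.0
betas = fir_betas_nonoverlapping(signal, X)
print(betas)
```

Plotting the per-bin estimates separately for each condition yields a time course of the kind shown in Fig. 6. A full analysis would fit all conditions and nuisance regressors jointly by least squares, which this sketch omits.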

Effect sizes were considered at the time point corresponding to cue-evoked activity (∼5 s) and also at the time point corresponding to target word-evoked activity (∼7.5 s). After onset of an odor cue, time-series plots of OFC responses showed similar levels of fMRI activity for both match and nonmatch trials (p = 0.65; Fig. 6A). At the same time, OFC responses were significantly higher when evoked by olfactory cues than by visual cues (main effect of modality: F(1,10) = 3.64, p < 0.043, one-tailed), in the absence of a main effect of congruency or cue-by-congruency interaction (p values >0.29). These findings imply that this region of OFC is selectively responsive to odor processing at the time of the cue. Of note is that after onset of the target word, OFC activity was sustained on olfactory nonmatch trials but was reduced, or cross-adapted, on olfactory match trials (p = 0.01), in accord with its involvement in extracting semantic meaning from odor input. No such change in word-evoked OFC activity was observed for visual nonmatch and match trials (p = 0.1); if anything, match trials were nominally higher than nonmatch trials.

Time-series fMRI profiles of cue–target cross-adaptation were also characterized in the left ATL (Fig. 6B). As demonstrated in OFC, similar increases in ATL activity were observed for nonmatch and match trials evoked at the time of the olfactory cue (p = 0.75), and these responses were significantly higher than those evoked at the time of the visual cue (main effect of modality: F(1,10) = 3.51, p < 0.046, one-tailed), without a main effect of congruency or cue-by-congruency interaction (p values >0.52). After delivery of the word target, ATL responses were sustained for nonmatch versus match trials during the olfactory cue conditions (p = 0.001), consistent with cross-adaptation, but there were no significant differences between nonmatch and match trials during the visual cue conditions (p = 0.18).

Finally, because the odor-cued trials were associated with sniffing, participants may have been relatively aligned in their respiratory cycle at the time of target word presentation. Given that the sniff cue appeared for 1 s and the word target appeared ∼3 s later, it is possible that participants were exhaling when the word was presented, which could have introduced word-evoked fMRI differences between the odor and picture blocks. Online measurements of nasal breathing enabled us to compare participants' respiratory patterns during cue and target presentations as a function of congruency. There were no significant differences between nonmatch and match trials for the odor-cue condition (inspiratory volume, p = 0.96; inspiratory peak, p = 0.97; inspiratory duration, p = 0.84) or the odor-associated word target condition (volume, p = 0.81; peak, p = 0.41; duration, p = 0.98; mean amplitude, p = 0.12). For completeness, we also compared nonmatch and match trials on picture blocks and found no differences for the picture-cue condition (volume, p = 0.46; peak, p = 0.38; duration, p = 0.18; mean amplitude, p = 0.25) or the picture-associated word target condition (volume, p = 0.30; peak, p = 0.67; duration, p = 0.24; mean amplitude, p = 0.65). We can, therefore, conclude that breathing differences were unlikely to contribute to the fMRI findings and that any respiratory impact on the temporal precision of the FIR plots was probably negligible.

Discussion

A long-standing issue in olfactory perception is how the human brain integrates odor objects with lexical representations to support identification and naming. Although previous behavioral evidence suggests that lexical integration might be fundamentally different for olfaction compared with other sensory modalities such as vision (for review, see Jönsson and Stevenson, 2014; Herz, 2005), only limited evidence has been available to inform theoretical views of the underlying neural mechanisms. In this study, converging results from ERP and fMRI experiments show that the neural integration of target words was critically dependent on the sensory modality (olfactory or visual) of preceding sensory cues. Our findings suggest that the human brain possesses an odor-specific lexical-integration system, which may encode and maintain predictive semantic aspects of odor input to guide subsequent word choice and thus influence olfactory naming.

Our ERP results revealed a spatiotemporally distinct neural network for linking words with olfactory semantic content. Odor-specific processing, as indexed via the N400 response, generated a more posterior amplitude distribution than comparable picture-cued effects. Importantly, the modality-specific differences in N400 temporal and topographical properties were observed despite the fact that the same sets of target words were used in all conditions. We also found that the N400 time window from onset to closure was 50 ms wider for odor-cued (vs picture-cued) processing. Interestingly, whereas the odor-cued N400 emerged 25 ms earlier than the picture-cued N400, olfactory trials were associated with longer RTs. This apparently paradoxical effect may relate to the inherent difficulties of mapping odor stimuli onto their lexical referents (Olofsson et al., 2013; Olofsson, 2014). That is, given the relative challenges of extracting semantic content from odor cues, the processes of odor-word integration may translate to an overall prolonged N400 temporal window that begins earlier for olfactory (vs visual) semantic elaboration, even though behavioral responses are slower. It is also plausible that the unique anatomical organization of the olfactory system, with very few synapses connecting the odor periphery with object-naming areas, engages cortical mechanisms that are initiated quickly (with early N400 onset) but are ultimately inefficient (with longer RTs).

In a separate experiment, we used an fMRI cross-adaptation paradigm to specify the cortical substrates of odor–language integration. Sequential presentation of semantically matching olfactory cues and words elicited a reduced (cross-adapting) fMRI response in both OFC and dorsal ATL, when compared with nonmatching olfactory cues and also when compared with visual cue conditions (Fig. 5), implying that these brain areas are actively involved in linking odor percepts to their names. Interestingly, time-series plots demonstrated increased activity in OFC and ATL at the onset of the odor cue itself (Fig. 6), consistent with the idea that predictive representations in these regions are already established before delivery and deliberation of the target word. Such profiles are reminiscent of olfactory predictive coding mechanisms that emerge in OFC in response to a visual cue but before arrival of odor (Zelano et al., 2011). Importantly, fMRI cross-adaptation in OFC was observed specifically in response to odor-cued word targets, but not to picture-cued word targets, even though the identical set of words appeared in olfactory and visual blocks. These observations imply that the OFC responses are not driven by general (modality-independent) breaches of expectation per se and underscore the advantages of testing both olfactory and visual modalities within the same experimental design.

Of note is that both OFC and ATL are recognized as sensory convergence zones for olfactory and nonolfactory projections (Markowitsch et al., 1985; Morán et al., 1987; Carmichael and Price, 1994) and would thus be well positioned to mediate cross-modal interactions between different sensory sources. Human OFC is also known to participate in multisensory integration between olfactory and visual sources of information (de Araujo et al., 2003, 2005; Gottfried and Dolan, 2004; Osterbauer et al., 2005), and ATL plays a key role in multimodal semantic processing (Patterson et al., 2007; Visser et al., 2010; Mesulam et al., 2013). On the basis of nonhuman primate data (Morán et al., 1987; Morecraft et al., 1992), the OFC and ATL are reciprocally connected but also receive independent projections from piriform cortex, suggesting that odor inputs have several path opportunities for interfacing with the language system.

The specific contributions of the ATL to olfactory processing are poorly characterized in both human and nonhuman species. In the human brain, the dorsomedial sector of ATL has been referred to as the temporal insular area or “area TI” by Beck (1934) and is characterized as agranular cortex innervated by olfactory fibers in both the monkey and the human brain (Morán et al., 1987; Ding et al., 2009). Furthermore, dorsal ATL is functionally coherent with olfactory cortical regions, including the olfactory tubercle, piriform cortex, and OFC, in studies using task-free fMRI (Pascual et al., 2013). Atrophy in the temporal pole and adjacent ATL is associated with deficits of odor matching and identification (Olofsson et al., 2013), and left ATL, in particular, has been shown to be critical for linking object representations with their word associations in the temporosylvian language network (Mesulam et al., 2013). In the context of the current experiments, these combined higher-level odor representations in OFC and dorsomedial ATL may serve as optimal links to knowledge-based and even hedonic-based representations that support odor–word mapping.

Some methodological aspects require further discussion. First, given the low sample size in the fMRI experiment, statistical robustness of the behavioral and imaging data might have been enhanced with additional participants, though the strong behavioral correspondence between the ERP and fMRI data sets indicates that these findings were nevertheless reproducible across independent groups of participants. Second, although breathing differences between nonmatching and matching trials were not observed in the fMRI study, respiratory data were not collected during the ERP study, which might have confounded the N400 effects. However, it is important to emphasize that after delivery of the odor cue, participants had no way of predicting whether the upcoming word target would be semantically congruent. Thus, they had no information that could be used to modulate their breathing in a differential manner. Even after presentation of the target word, it is unlikely that conscious access to semantic congruity would have been available within the brief N400 window, such that participants could have modulated their respiratory patterns. Indeed, the absence of cue-related and target-related breathing effects was fully borne out in the fMRI study, which closely mirrored the ERP study, yet showed no significant respiratory differences between matching and nonmatching conditions.

One implication of our findings is that key anatomical components of object naming are modality specific rather than amodal. With the identification of a dedicated neural interface between olfaction and language, it follows that such a network likely plays a critical role in the human sense of smell. Whereas humans can arguably discriminate 1 trillion odors (Bushdid et al., 2014), olfaction is unique among the senses in its reliance on verbal translation for efficient working memory maintenance and episodic memory encoding (Rabin and Cain, 1984; Jehl et al., 1997). A lexical semantic pathway to odor objects within the ATL and OFC would help endow olfactory percepts with enriched conceptual content, adding to our enjoyment of food odors and fragrances and enhancing our olfactory behavioral expertise to categorize and discriminate smells.

Although language may promote a more flexible and adaptive array of olfactory behaviors, it is clear that verbally mediated information shapes odor object perception to a larger extent than it shapes perception of other sensory objects (Dalton et al., 1997; Distel and Hudson, 2001; Herz, 2003; Djordjevic et al., 2008). The domineering influence of verbal context on odor identification may have its root in fundamental synaptic differences between the visual and olfactory information streams, and it may help account for why odors remain so hard to name. The extensive multisynaptic pathways from visual striate areas to inferotemporal cortex enable the encoding of fine-grained representations of visual objects. These highly differentiated visual percepts are then in a position to establish precise linkages to their lexical referents, with rapid and robust naming.

In contrast, as shown here, odors trigger lexical associations through caudal OFC and dorsomedial ATL, two areas shown in the macaque brain to be only one synapse away from piriform cortex (Morán et al., 1987; Morecraft et al., 1992). Olfactory percepts are, therefore, likely to be less differentiated or elaborated at the stage where lexical associations are triggered. The relative unavailability of synaptic links between odors and their lexical referents generates an impoverished store of olfactory concepts and may ultimately account for why it takes longer to elicit word meaning from an odor and why the process is less precise. The net effect would be to make olfactory perceptual representations much more susceptible to modulation by language concepts (Yeshurun et al., 2008), especially those that are contemporaneous with an odor encounter. The dynamic interplay between the olfactory and lexical systems, and their interface with higher-order centers for retrieval and verbalization, such as the IFG (Olofsson et al., 2013), may be collectively responsible for the elusive nature of olfactory language.

Footnotes

This work was supported by a grant from The Swedish Research Council (421-2012-806) and a Pro Futura Scientia VII fellowship from The Swedish Foundation for Humanities and Social Sciences to J.K.O., National Institute on Aging (NIA) Grant T32AG20506 (predoctoral and postdoctoral training program) to R.S.H. and N.E.B., National Institute on Deafness and Other Communication Disorders (NIDCD) Grant R01DC008552 to M.-M.M., NIDCD Grants R01DC010014 and K08DC007653 to J.A.G., and Alzheimer's Disease Core Center Grant P30AG013854 to M.-M.M. from the NIA. We thank Joseph Boyle and Katherine Khatibi for technical assistance and Keng Nei Wu for the pen-and-ink drawings that were used as stimuli in the fMRI experiment.

References

- Adler J. Chemoreceptors in bacteria. Science. 1969;166:1588–1597. doi: 10.1126/science.166.3913.1588. [DOI] [PubMed] [Google Scholar]

- Antony JW, Gobel EW, O'Hare JK, Reber PJ, Paller KA. Cued memory reactivation during sleep influences skill learning. Nat Neurosci. 2012;15:1114–1116. doi: 10.1038/nn.3152. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arbib MA. From monkey-like action recognition to human language: an evolutionary framework for neurolinguistics. Behav Brain Sci. 2005;28:105–167. doi: 10.1017/s0140525x05000038. [DOI] [PubMed] [Google Scholar]

- Beck E. Die myeloarchitektonik der dorsalen Schlafenlappenrinde beim Menschen. J Psychol Neurol. 1934;41:129–264. [Google Scholar]

- Bowman NE, Kording KP, Gottfried JA. Temporal integration of olfactory perceptual evidence in human orbitofrontal cortex. Neuron. 2012;75:916–927. doi: 10.1016/j.neuron.2012.06.035. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buckner RL, Goodman J, Burock M, Rotte M, Koutstaal W, Schacter D, Rosen B, Dale AM. Functional-anatomic correlates of object priming in humans revealed by rapid presentation event-related fMRI. Neuron. 1998;20:285–296. doi: 10.1016/S0896-6273(00)80456-0. [DOI] [PubMed] [Google Scholar]

- Bushdid C, Magnasco MO, Vosshall LB, Keller A. Humans can discriminate more than 1 trillion olfactory stimuli. Science. 2014;343:1370–1372. doi: 10.1126/science.1249168. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cain WS. Know with the nose–keys to odor identification. Science. 1979;203:467–470. doi: 10.1126/science.760202. [DOI] [PubMed] [Google Scholar]

- Cain WS, de Wijk R, Lulejian C, Schiet F, See LC. Odor identification: perceptual and semantic dimensions. Chem Senses. 1998;23:309–326. doi: 10.1093/chemse/23.3.309. [DOI] [PubMed] [Google Scholar]

- Carmichael ST, Price JL. Architectonic subdivision of the orbital and medial prefrontal cortex in the macaque monkey. J Comp Neurol. 1994;346:366–402. doi: 10.1002/cne.903460305. [DOI] [PubMed] [Google Scholar]

- Castle PC, Van Toller S, Milligan GJ. The effect of odour priming on cortical EEG and visual ERP responses. Int J Psychophysiol. 2000;36:123–131. doi: 10.1016/S0167-8760(99)00106-3. [DOI] [PubMed] [Google Scholar]

- Chwilla DJ, Brown CM, Hagoort P. The N400 as a function of the level of processing. Psychophysiology. 1995;32:274–285. doi: 10.1111/j.1469-8986.1995.tb02956.x. [DOI] [PubMed] [Google Scholar]

- Cousineau D. Confidence intervals in within-subject designs: a simpler solution to Loftus and Masson's method. Tutorials Quant Methods Psychol. 2005;1:42–45. [Google Scholar]

- Dalton P, Wysocki CJ, Brody MJ, Lawley HJ. The influence of cognitive bias on the perceived odor, irritation and health symptoms from chemical exposure. Int Arch Occup Environ Health. 1997;69:407–417. doi: 10.1007/s004200050168. [DOI] [PubMed] [Google Scholar]

- de Araujo IE, Rolls ET, Kringelbach ML, McGlone F, Phillips N. Taste-olfactory convergence, and the representation of the pleasantness of flavour, in the human brain. Eur J Neurosci. 2003;18:2059–2068. doi: 10.1046/j.1460-9568.2003.02915.x. [DOI] [PubMed] [Google Scholar]

- de Araujo IE, Rolls ET, Velazco MI, Margot C, Cayeux I. Cognitive modulation of olfactory processing. Neuron. 2005;46:671–679. doi: 10.1016/j.neuron.2005.04.021. [DOI] [PubMed] [Google Scholar]

- Dehaene S, Naccache L, Cohen L, Le Bihan DL, Mangin JF, Poline JB, Rivière D. Cerebral mechanisms of word masking and unconscious repetition priming. Nat Neurosci. 2001;4:752–758. doi: 10.1038/89551. [DOI] [PubMed] [Google Scholar]

- Ding SL, Van Hoesen GW, Cassell MD, Poremba A. Parcellation of human temporal polar cortex: a combined analysis of multiple cytoarchitectonic and pathological markers. J Comp Neurol. 2009;514:595–623. doi: 10.1002/cne.22053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Distel H, Hudson R. Judgement of odor intensity is influenced by subjects' knowledge of the odor source. Chem Senses. 2001;26:247–251. doi: 10.1093/chemse/26.3.247. [DOI] [PubMed] [Google Scholar]

- Djordjevic J, Lundstrom JN, Clément F, Boyle JA, Pouliot S, Jones-Gotman M. A rose by any other name: would it smell as sweet? J Neurophysiol. 2008;99:386–393. doi: 10.1152/jn.00896.2007. [DOI] [PubMed] [Google Scholar]

- Eskenazi B, Cain WS, Novelly RA, Mattson R. Odor perception in temporal-lobe patients with and without temporal lobectomy. Neuropsychologia. 1986;24:553–562. doi: 10.1016/0028-3932(86)90099-0. [DOI] [PubMed] [Google Scholar]

- Gottfried JA, Dolan RJ. Human orbitofrontal cortex mediates extinction learning while accessing conditioned representations of value. Nat Neurosci. 2004;7:1145–1152. doi: 10.1038/nn1314. [DOI] [PubMed] [Google Scholar]

- Gottfried JA, Wilson DA. Smell. In: Gottfried JA, editor. Neurobiology of sensation and reward. Boca Raton, FL: CRC; 2011. pp. 99–125. [Google Scholar]

- Gottfried JA, Smith AP, Rugg MD, Dolan RJ. Remembrance of odors past: human olfactory cortex in cross-modal recognition memory. Neuron. 2004;42:687–695. doi: 10.1016/S0896-6273(04)00270-3. [DOI] [PubMed] [Google Scholar]

- Gottfried JA, Winston JS, Dolan RJ. Dissociable codes of odor quality and odorant structure in human piriform cortex. Neuron. 2006;49:467–479. doi: 10.1016/j.neuron.2006.01.007. [DOI] [PubMed] [Google Scholar]

- Grigor J, Van Toller S, Behan J, Richardson A. The effect of odour priming on long latency visual evoked potentials of matching and non-matching objects. Chem Senses. 1999;24:137–144. doi: 10.1093/chemse/24.2.137. [DOI] [PubMed] [Google Scholar]

- Henson RN, Rugg MD. Neural response suppression, haemodynamic effects, and behavioural priming. Neuropsychologia. 2003;41:263–270. doi: 10.1016/S0028-3932(02)00159-8. [DOI] [PubMed] [Google Scholar]

- Herz RS. The effect of verbal context on olfactory perception. J Exp Psychol Gen. 2003;132:595–606. doi: 10.1037/0096-3445.132.4.595. [DOI] [PubMed] [Google Scholar]

- Herz RS. The unique interaction between language and olfactory perception and cognition. In: Rosen DT, editor. Trends in experimental research. London: Nova Publishing; 2005. pp. 91–99. [Google Scholar]

- Holloway ID, Battista C, Vogel SE, Ansari D. Semantic and perceptual processing of number symbols: evidence from a cross-linguistic fMRI adaptation study. J Cogn Neurosci. 2013;25:388–400. doi: 10.1162/jocn_a_00323. [DOI] [PubMed] [Google Scholar]

- Hurley RS, Paller KA, Wieneke CA, Weintraub S, Thompson CK, Federmeier KD, Mesulam MM. Electrophysiology of object naming in primary progressive aphasia. J Neurosci. 2009;29:15762–15769. doi: 10.1523/JNEUROSCI.2912-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hurley RS, Paller KA, Rogalski EJ, Mesulam MM. Neural mechanisms of object naming and word comprehension in primary progressive aphasia. J Neurosci. 2012;32:4848–4855. doi: 10.1523/JNEUROSCI.5984-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jehl C, Royet JP, Holley A. Role of verbal encoding in short- and long-term odor recognition. Percept Psychophys. 1997;59:100–110. doi: 10.3758/BF03206852.

- Jones-Gotman M, Zatorre RJ. Olfactory identification deficits in patients with focal cerebral excision. Neuropsychologia. 1988;26:387–400. doi: 10.1016/0028-3932(88)90093-0.

- Jones-Gotman M, Zatorre RJ, Cendes F, Olivier A, Andermann F, McMackin D, Staunton H, Siegel AM, Wieser HG. Contribution of medial versus lateral temporal-lobe structures to human odour identification. Brain. 1997;120:1845–1856. doi: 10.1093/brain/120.10.1845.

- Jönsson FU, Stevenson RJ. Odor knowledge, odor naming and the “tip of the nose” experience. In: Schwartz BL, Brown AS, editors. Tip of the tongue states and related phenomena. Cambridge, UK: Cambridge UP; 2014. pp. 305–326.

- Kareken DA, Mosnik DM, Doty RL, Dzemidzic M, Hutchins GD. Functional anatomy of human odor sensation, discrimination, and identification in health and aging. Neuropsychology. 2003;17:482–495. doi: 10.1037/0894-4105.17.3.482.

- Kjelvik G, Evensmoen HR, Brezova V, Håberg AK. The human brain representation of odor identification. J Neurophysiol. 2012;108:645–657. doi: 10.1152/jn.01036.2010.

- Kowalewski J, Murphy C. Olfactory ERPs in an odor/visual congruency task differentiate ApoE epsilon 4 carriers from non-carriers. Brain Res. 2012;1442:55–65. doi: 10.1016/j.brainres.2011.12.030.

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI. Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci. 2009;12:535–540. doi: 10.1038/nn.2303.

- Kutas M, Federmeier KD. Electrophysiology reveals semantic memory use in language comprehension. Trends Cogn Sci. 2000;4:463–470. doi: 10.1016/S1364-6613(00)01560-6.

- Kutas M, Federmeier KD. Thirty years and counting: finding meaning in the N400 component of the event-related brain potential. Annu Rev Psychol. 2011;62:621–647. doi: 10.1146/annurev.psych.093008.131123.

- Kutas M, Hillyard SA. Reading senseless sentences: brain potentials reflect semantic incongruity. Science. 1980;207:203–205. doi: 10.1126/science.7350657.

- Lawless H, Engen T. Associations to odors: interference, mnemonics, and verbal labeling. J Exp Psychol Hum Learn. 1977;3:52–59. doi: 10.1037/0278-7393.3.1.52.

- Li W, Howard JD, Gottfried JA. Disruption of odour quality coding in piriform cortex mediates olfactory deficits in Alzheimer's disease. Brain. 2010;133:2714–2726. doi: 10.1093/brain/awq209.

- Mesulam MM, Wieneke C, Hurley R, Rademaker A, Thompson CK, Weintraub S, Rogalski EJ. Words and objects at the tip of the left temporal lobe in primary progressive aphasia. Brain. 2013;136:601–618. doi: 10.1093/brain/aws336.

- Morán MA, Mufson EJ, Mesulam MM. Neural inputs into the temporopolar cortex of the rhesus monkey. J Comp Neurol. 1987;256:88–103. doi: 10.1002/cne.902560108.

- Morecraft RJ, Geula C, Mesulam MM. Cytoarchitecture and neural afferents of orbitofrontal cortex in the brain of the monkey. J Comp Neurol. 1992;323:341–358. doi: 10.1002/cne.903230304.

- Olofsson JK. Time to smell: a cascade model of olfactory perception based on response-time (RT) measurement. Front Psychol. 2014;5:33. doi: 10.3389/fpsyg.2014.00033.

- Olofsson JK, Rogalski E, Harrison T, Mesulam MM, Gottfried JA. A cortical pathway to olfactory naming. Evidence from primary progressive aphasia. Brain. 2013;136:1245–1259. doi: 10.1093/brain/awt019.

- Osterbauer RA, Matthews PM, Jenkinson M, Beckmann CF, Hansen PC, Calvert GA. Color of scents: chromatic stimuli modulate odor responses in the human brain. J Neurophysiol. 2005;93:3434–3441. doi: 10.1152/jn.00555.2004.

- Pascual B, Masdeu JC, Hollenbeck M, Makris N, Insausti R, Ding SL, Dickerson BC. Large-scale brain networks of the human left temporal pole: a functional connectivity MRI study. Cereb Cortex. 2013. doi: 10.1093/cercor/bht260. Advance online publication. Retrieved May 1, 2014.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 2007;8:976–987. doi: 10.1038/nrn2277.

- Rabin MD, Cain WS. Odor recognition: familiarity, identifiability, and encoding consistency. J Exp Psychol Learn Mem Cogn. 1984;10:316–325. doi: 10.1037/0278-7393.10.2.316.

- Royet JP, Koenig O, Gregoire MC, Cinotti L, Lavenne F, Le Bars D, Costes N, Vigouroux M, Farget V, Sicard G, Holley A, Mauguière F, Comar D, Froment JC. Functional anatomy of perceptual and semantic processing for odors. J Cogn Neurosci. 1999;11:94–109. doi: 10.1162/089892999563166.

- Sarfarazi M, Cave B, Richardson A, Behan J, Sedgwick EM. Visual event related potentials modulated by contextually relevant and irrelevant olfactory primes. Chem Senses. 1999;24:145–154. doi: 10.1093/chemse/24.2.145.

- Savic I, Gulyas B, Larsson M, Roland P. Olfactory functions are mediated by hierarchical and parallel processing. Neuron. 2000;26:735–745. doi: 10.1016/S0896-6273(00)81209-X.

- Stevenson RJ, Case TI, Mahmut M. Difficulty in evoking odor images: the role of odor naming. Mem Cognit. 2007;35:578–589. doi: 10.3758/BF03193296.

- Van Petten C, Rheinfelder H. Conceptual relationships between spoken words and environmental sounds: event-related brain potential measures. Neuropsychologia. 1995;33:485–508. doi: 10.1016/0028-3932(94)00133-A.

- Visser M, Jefferies E, Ralph MAL. Semantic processing in the anterior temporal lobes: a meta-analysis of the functional neuroimaging literature. J Cogn Neurosci. 2010;22:1083–1094. doi: 10.1162/jocn.2009.21309.

- Vuilleumier P, Schwartz S, Duhoux S, Dolan RJ, Driver J. Selective attention modulates neural substrates of repetition priming and “implicit” visual memory: suppressions and enhancements revealed by FMRI. J Cogn Neurosci. 2005;17:1245–1260. doi: 10.1162/0898929055002409.

- Yeshurun Y, Dudai Y, Sobel N. Working memory across nostrils. Behav Neurosci. 2008;122:1031–1037. doi: 10.1037/a0012806.

- Zelano C, Montag J, Khan R, Sobel N. A specialized odor memory buffer in primary olfactory cortex. PLoS One. 2009;4:e4965. doi: 10.1371/journal.pone.0004965.

- Zelano C, Mohanty A, Gottfried JA. Olfactory predictive codes and stimulus templates in piriform cortex. Neuron. 2011;72:178–187. doi: 10.1016/j.neuron.2011.08.010.