Abstract

The amygdala is known to play an important role in the response to facial expressions that convey fear. However, it remains unclear whether the amygdala’s response to fear reflects its role in the interpretation of danger and threat, or whether it is to some extent activated by all facial expressions of emotion. Previous attempts to address this issue using neuroimaging have been confounded by differences in the use of control stimuli across studies. Here, we address this issue using a block design functional magnetic resonance imaging paradigm, in which we compared the response to face images posing expressions of fear, anger, happiness, disgust and sadness with a range of control conditions. The responses in the amygdala to different facial expressions were compared with the responses to a non-face condition (buildings), to mildly happy faces and to neutral faces. Results showed that only fear and anger elicited significantly greater responses compared with the control conditions involving faces. Overall, these findings are consistent with the role of the amygdala in processing threat, rather than in the processing of all facial expressions of emotion, and demonstrate the critical importance of the choice of comparison condition to the pattern of results.

Keywords: amygdala, facial expressions, emotion

INTRODUCTION

Facial expressions convey important information in human communication, and the correct and efficient decoding of emotions from faces is a critical ability for adaptive social behaviour (Adolphs, 1999; Izard et al., 2001). Neuropsychological studies have demonstrated impairments in emotion recognition in patients with amygdala damage (Adolphs et al., 1994; Young et al., 1995, 1996; Anderson et al., 2000). The deficits in emotion recognition have been reported to be particularly severe for the perception of fear (Adolphs et al., 1994; Calder et al., 1996; Broks et al., 1998) and are often accompanied by an attenuated experience of fear and a reduced reaction to potential threats (Broks et al., 1998; Sprengelmeyer et al., 1999; Feinstein et al., 2010). Functional neuroimaging studies have provided support for the hypothesis that the amygdala is involved in processing fearful expressions and threatening stimuli (Calder et al., 2001). In particular, greater amygdala activation has been found for fearful faces than for happy (Morris et al., 1996, 1998) or mildly happy faces (Phillips et al., 1998), and there are also reports of greater amygdala activation to expressions of fear than to anger (Whalen et al., 2001).

Despite this converging evidence from neuropsychology and functional brain imaging, it is still a matter of debate whether the amygdala’s role in evaluating emotional expressions is in some way specific to fear. For example, in patients with amygdala lesions, the deficit is not usually restricted to fear; most patients show impaired recognition of more than one emotion, even though the deficit in fear recognition tends to be the most severe (Adolphs et al., 1999). Likewise, neuroimaging studies have reported amygdala activation for emotions other than fear, including sadness (Blair et al., 1999) and happiness (Breiter et al., 1996). Indeed, some neuroimaging studies report amygdala responses to several facial expressions without any specific effect of emotion type (Winston et al., 2003; Fitzgerald et al., 2006), a pattern that might be consistent with the idea that the amygdala is activated by emotionally salient stimuli and is involved in detecting relevant stimuli regardless of their positive or negative valence (Sander et al., 2003).

The debate has not been resolved by looking at the pattern of findings across studies, as different meta-analyses of functional neuroimaging studies investigating emotional processing have reached inconsistent conclusions concerning whether the amygdala plays a specific role in fear recognition (Phan et al., 2002; Vytal and Hamann, 2010) or shows a more general activation in response to emotional faces (Sergerie et al., 2008). What these meta-analyses do agree upon, though, is that interpretation is limited by the fact that few studies compare several expressions within a single experiment (Vytal and Hamann, 2010). Moreover, variable results in the literature may be due to the use of different non-emotional stimuli (e.g. neutral faces or non-face images) as the comparison condition whose activation is subtracted from the emotion conditions (Sergerie et al., 2008).

The importance of the control or comparison condition is clearly illustrated by the study of Fitzgerald et al. (2006). That study used six facial expressions as stimuli (fear, disgust, anger, sadness, happiness and neutral) and measured amygdala activation to each expression by comparing it with a baseline condition involving pictures of radios. Amygdala activation was found for all the facial expressions. However, this face vs non-face contrast leaves open the possibility that the observed amygdala activation is to faces, rather than to facially expressed emotion per se. To demonstrate a response to facially expressed emotion, one type of face (e.g. an emotional face) must be contrasted with another (such as a neutral face). When Fitzgerald et al. (2006) compared overall activation across the different facial expressions, they found no significant differences. However, in their histogram of this null effect (Fitzgerald et al., 2006; Figure 1B), the parameter estimate for the amygdala response to fear was higher than for any other emotion, and almost double that for anger and disgust. Both the choice of comparison condition and statistical power are therefore critical.

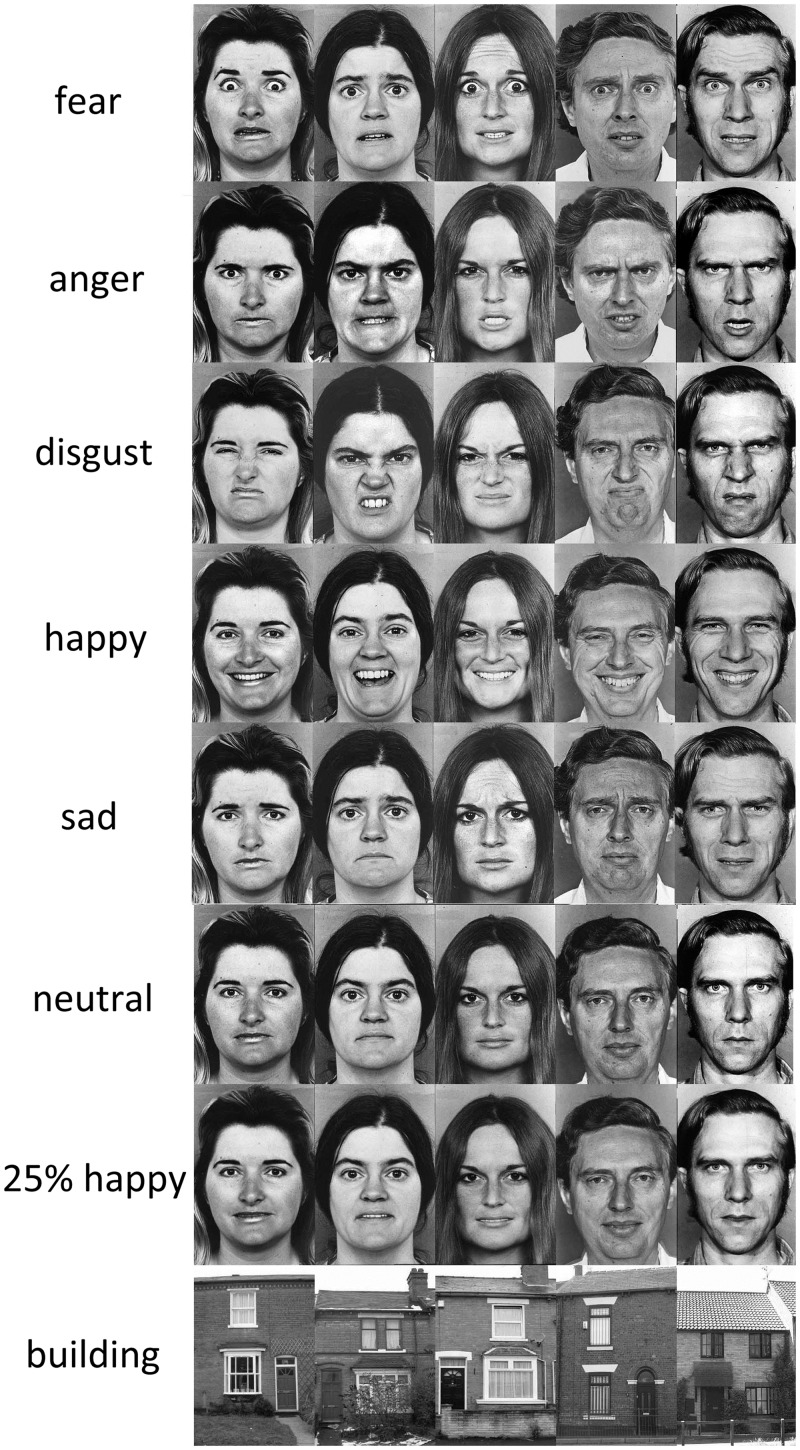

Fig. 1.

Stimuli used in the main experiment. Each row depicts images presented in the eight conditions of the fMRI scan.

In light of the above, this study aimed to clarify amygdala responses to faces displaying different emotions. We followed Sergerie et al.’s (2008) advice to take advantage of the greater statistical power of a block design compared with an event-related paradigm, and we examined whether the adoption of different comparison conditions affected the pattern of results. Our functional magnetic resonance imaging (fMRI) block design experiment included expressions of five basic emotions (fear, anger, disgust, sadness and happiness) and three different comparison conditions. Pictures of houses were used as a non-face comparison, to identify amygdala responses to faces in general. Neutral and mildly happy (a 25% morph along the neutral to happy continuum) expressions were used to identify emotion-specific activations. The mildly happy face was used in addition to the neutral face because neutral faces can appear slightly cold and hostile (Ekman and Rosenberg, 1997), and a mildly happy baseline has therefore been used in some previous fMRI studies (e.g. Phillips et al., 1998, 1999).

MATERIALS AND METHODS

Participants

Twenty-four healthy volunteers (12 male, 12 female, mean age = 24.3 years, range 19–35) took part in the experiment. All participants were right-handed, had a western cultural background, and had normal or corrected-to-normal vision with no history of neurological illness. The study was approved by the Ethics Committee of the York Neuroimaging Centre, University of York, and conducted following its guidelines. All participants gave written consent prior to their participation.

Stimuli

Figure 1 shows the stimuli for the eight conditions used in the experiment. Face stimuli were greyscale images from the FEEST set (Young et al., 2002). Five models (F5, F6, F8, M1 and M6) were selected on the basis of the visual similarity of the posed expression across different models, the per cent recognition rate for each model’s expression, and the similarity of the action units (muscle groups) used to pose the expressions. For each model, the neutral pose and the expressions of fear, anger, disgust, sadness and happiness were used. An additional condition was created with faces with a 25% happy expression produced with computer manipulation by morphing the images along the neutral-happy continuum for each model (Calder et al., 1997). Stimuli for the building condition were greyscale pictures of houses matched for luminance, size and resolution.

fMRI data acquisition

Scanning was performed at the York Neuroimaging Centre at the University of York with a 3 T HD MRI system with an eight-channel phased array head coil (GE Signa Excite). Axial images were acquired for functional and structural MRI scans. For fMRI scanning, echo-planar images were acquired using a T2*-weighted gradient echo sequence with blood oxygen level-dependent contrast [repetition time (TR) = 3 s, echo time (TE) = 32.7 ms, flip angle = 90°, acquisition matrix 128 × 128, field of view = 288 mm × 288 mm]. Whole-head volumes were acquired with 38 contiguous axial slices, each with an in-plane resolution of 2.25 mm × 2.25 mm and a slice thickness of 3 mm. The slices were positioned for each participant to ensure optimal imaging of the temporal lobe regions where the amygdala is situated. T1-weighted images were acquired for each participant to provide high-resolution structural images using an inversion recovery (IR = 450 ms) prepared 3D-FSPGR (fast spoiled gradient echo) pulse sequence (TR = 7.8 ms, TE = 3 ms, flip angle = 20°, acquisition matrix = 256 × 256, field of view = 290 mm × 290 mm, in-plane resolution = 1.1 mm × 1.1 mm, slice thickness = 1 mm). To improve co-registration between the fMRI data and the 3D-FSPGR structural image, a high-resolution T1 FLAIR was acquired in the same orientation planes as the fMRI protocol (TR = 2850 ms, TE = 10 ms, acquisition matrix 256 × 224 interpolated to 512, giving an effective in-plane resolution of 0.56 mm).
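As a consistency check on these acquisition parameters, each in-plane voxel size is the field of view divided by the acquisition matrix. The sketch below is illustrative only: the function name is ours, and it assumes that the FLAIR shared the 288 mm field of view of the EPI protocol, which the text does not state.

```python
def in_plane_mm(fov_mm: float, matrix: int) -> float:
    """In-plane voxel edge length (mm) = field of view / acquisition matrix."""
    return fov_mm / matrix


# Values taken from the acquisition description.
epi_mm = in_plane_mm(288, 128)    # T2*-weighted EPI
t1_mm = in_plane_mm(290, 256)     # 3D-FSPGR structural
# Assumption: the FLAIR used the same 288 mm field of view as the EPI.
flair_mm = in_plane_mm(288, 512)  # after interpolation to a 512 matrix
coverage_mm = 38 * 3              # 38 contiguous 3 mm axial slices

print(f"EPI {epi_mm:.2f} mm, FSPGR {t1_mm:.2f} mm, "
      f"FLAIR {flair_mm:.2f} mm, slab {coverage_mm} mm")
```

The FSPGR ratio (290/256) is about 1.13 mm, which the text reports rounded to 1.1 mm, and the 38 slices cover a 114 mm slab.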

fMRI design

To identify the face-selective region of the amygdala, a localizer scan was conducted after the main experimental scan. There were four conditions: faces, objects, places and scrambled faces. Images from each condition were presented in a blocked design with five images in each block. Each image was presented for 1 s followed by a 200 ms fixation cross. Blocks were separated by a 9 s grey screen. Each condition was repeated five times in a counterbalanced design. The participants’ task was to detect the presence of a red dot that was superimposed on 18% of the images. Face images were taken from the Radboud Faces Database (Langner et al., 2010) and varied in expression, identity and viewpoint. Images of objects and places came from a variety of Internet sources.
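The localizer timing follows directly from these parameters. A short sketch, assuming that the 18% target rate applies to the full set of localizer images and that a 9 s gap follows every block (lead-in and lead-out periods are not described and are ignored); the constant names are illustrative:

```python
# Localizer parameters as described in the text (times in milliseconds).
N_CONDITIONS = 4          # faces, objects, places, scrambled faces
N_REPEATS = 5             # blocks per condition
IMAGES_PER_BLOCK = 5
IMAGE_MS, FIX_MS, GAP_MS = 1000, 200, 9000
TARGET_PERCENT = 18       # images carrying the red dot

n_blocks = N_CONDITIONS * N_REPEATS
n_images = n_blocks * IMAGES_PER_BLOCK
n_targets = n_images * TARGET_PERCENT // 100
block_ms = IMAGES_PER_BLOCK * (IMAGE_MS + FIX_MS)
# Assumption: one 9 s gap follows every block, including the last.
run_ms = n_blocks * (block_ms + GAP_MS)

print(n_blocks, n_images, n_targets, block_ms / 1000, run_ms / 60000)
```

Under these assumptions the localizer comprises 20 blocks of 6 s, 100 images with 18 red-dot targets, and about 5 min of stimulation.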

In the main experiment, we investigated brain responses to different basic emotions and the corresponding comparison conditions. A block design was used with eight conditions comprising five basic emotions (fear, anger, disgust, sadness and happiness), a non-face condition (buildings), a mildly happy face condition and a neutral face condition. Within each block, five images from a single condition were presented in a pseudorandom order, each for 1 s followed by a 200 ms fixation cross, giving a block duration of 6 s; blocks were interleaved with a 9 s fixation cross on a grey screen. Blocks for each of the eight conditions were repeated eight times in a counterbalanced order, resulting in a total of 64 blocks and a scan duration of 16 min. As in many previous studies of amygdala responses to facial expressions, participants were not asked to classify the emotions of the faces explicitly; instead, a red spot detection task was used to monitor attention during the fMRI session. In one or two images per block a small red spot appeared; participants were instructed to look at the stimuli and press a response button whenever they saw the red spot. The red spot could appear near the eye or mouth region of the faces, or in corresponding locations in the building images, and its location was counterbalanced across conditions. Participants correctly detected almost all red spots (mean accuracy = 98.2%, s.d. = 2%), showing that they were alert and attentive to the stimuli.
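The stated block and scan durations can be checked with simple arithmetic. This sketch uses only the timing parameters given in the text plus the 3 s TR from the acquisition section; the volume count is our own derivation and ignores the discarded initial volumes and any lead-in period:

```python
IMAGES_PER_BLOCK = 5
IMAGE_MS, FIX_MS, GAP_MS = 1000, 200, 9000
N_CONDITIONS, N_REPEATS = 8, 8
TR_MS = 3000  # repetition time from the acquisition section

block_ms = IMAGES_PER_BLOCK * (IMAGE_MS + FIX_MS)  # stimulation per block
cycle_ms = block_ms + GAP_MS                       # block plus fixation
n_blocks = N_CONDITIONS * N_REPEATS
run_ms = n_blocks * cycle_ms
n_volumes = run_ms // TR_MS  # ignores discarded volumes and any lead-in

print(block_ms / 1000, cycle_ms / 1000, n_blocks, run_ms / 60000, n_volumes)
# 6.0 15.0 64 16.0 320
```

Each 15 s block cycle therefore spans five TRs, and 64 cycles give the 16 min scan reported above.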

After the MRI scans, participants completed a behavioural task to check that they could correctly recognize the facial expressions. The face stimuli used in the experiment were presented one at a time on a computer screen, and participants sorted each into one of six categories (fear, anger, disgust, sadness, happiness and neutral).

fMRI data analysis

Statistical analysis of the fMRI data was carried out using FEAT in the FSL toolbox (http://www.fmrib.ox.ac.uk/fsl). The first three volumes (9 s) of each scan were removed to minimize the effects of magnetic saturation, and slice-timing correction was applied. Motion correction was followed by spatial smoothing (Gaussian, full width at half maximum 6 mm) and temporal high-pass filtering (cut-off, 0.01 Hz). Regressors for each condition in the general linear model were convolved with a gamma hemodynamic response function. Individual participant data were then entered into a higher-level, whole-brain group analysis using a mixed-effects model (FLAME, http://www.fmrib.ox.ac.uk/fsl). One participant was excluded from the subsequent analyses because of excessive movement during the experimental scan, leaving 23 participants.
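To make the regressor construction concrete, the sketch below builds a 6 s boxcar for one condition, convolves it with a gamma-density hemodynamic response function, and samples it once per 3 s TR. It is a simplified stand-in for what FEAT does internally, not FSL code: the mean lag of 6 s and standard deviation of 3 s are assumed values (the text does not report the HRF parameters), the onsets are hypothetical because the counterbalanced block order is not given, and the high-pass filtering step is omitted.

```python
import numpy as np
from scipy.stats import gamma

DT_S = 0.1     # fine time grid used before sampling at the TR
TR_S = 3.0     # repetition time (acquisition section)
BLOCK_S = 6.0  # block duration (design section)


def gamma_hrf(mean_lag_s=6.0, sd_s=3.0, length_s=30.0, dt=DT_S):
    """Gamma-density HRF parameterised by mean lag and SD (assumed values)."""
    shape = (mean_lag_s / sd_s) ** 2   # k = mu^2 / sigma^2
    scale = sd_s ** 2 / mean_lag_s     # theta = sigma^2 / mu
    t = np.arange(0.0, length_s, dt)
    h = gamma.pdf(t, a=shape, scale=scale)
    return h / h.sum()                 # unit sum: a sustained boxcar plateaus at 1


def block_regressor(onsets_s, n_volumes, dur_s=BLOCK_S, tr=TR_S, dt=DT_S):
    """Boxcar for one condition convolved with the HRF, sampled once per TR."""
    n_fine = int(round(n_volumes * tr / dt))
    box = np.zeros(n_fine)
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = int(round((onset + dur_s) / dt))
        box[start:stop] = 1.0
    response = np.convolve(box, gamma_hrf(dt=dt))[:n_fine]
    return response[:: int(round(tr / dt))]


# Hypothetical onsets for one condition; the real counterbalanced order is not given.
reg = block_regressor([0.0, 120.0, 240.0], n_volumes=120)
print(int(reg.argmax()), round(float(reg.max()), 2))
```

With these assumed parameters, the modelled response to each 6 s block peaks around 9 s after block onset and, because the block is short relative to the HRF, stays below the plateau value of 1.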

The response to each of the five facial expression conditions was contrasted with each of the three control conditions. Since the amygdala was our a priori region of interest, we anatomically masked the right and left amygdala at group level using the Harvard-Oxford sub-cortical probability atlas. This atlas represents each structure as a standard-space image with values from 0 to 100, giving the cross-population probability that a given voxel belongs to that structure. The number of significant voxels was then determined using both relatively liberal 5–100% amygdala masks and more conservative 50–100% amygdala masks, to take into account any possible difference between amygdala responses and activations in peri-amygdalar regions.
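The masking and counting step can be sketched as follows, assuming that the group Z map and the amygdala probability map have already been resampled to the same standard-space grid and flattened into arrays (loading the NIfTI images is omitted). The function name and the five toy voxel values are hypothetical, chosen only to show how the two probability cut-offs change both the mask size and the count:

```python
import numpy as np


def count_active_in_mask(z_map, prob_map, z_thresh=2.6, prob_min=5.0):
    """Count voxels with Z > z_thresh inside the mask prob_map >= prob_min.

    Returns (active voxels, mask voxels, active as % of mask).
    """
    mask = prob_map >= prob_min
    active = (z_map > z_thresh) & mask
    n_mask = int(mask.sum())
    n_active = int(active.sum())
    return n_active, n_mask, 100.0 * n_active / n_mask


# Toy 1-D arrays standing in for flattened volumes (hypothetical values).
prob = np.array([0.0, 10.0, 40.0, 60.0, 90.0])   # atlas probability (0-100)
z = np.array([3.0, 2.7, 1.0, 3.1, 2.9])           # group Z-statistic map

print(count_active_in_mask(z, prob, prob_min=5))   # liberal 5-100% mask
print(count_active_in_mask(z, prob, prob_min=50))  # conservative 50-100% mask
```

The first voxel has a high Z value but zero atlas probability, so neither mask counts it; the conservative mask keeps fewer voxels, which is why the percentage of the mask that is active is the fairer basis for comparison.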

To complement these analyses of the anatomically defined amygdala, a functional mask derived from the localizer scan was used to examine the per cent signal change for each condition within the face-responsive region of the amygdala. This mask was based on averaged contrasts of the face condition vs each of the non-face conditions in the independent localizer scan, thresholded at Z > 2.3 (P < 0.01). Within this region, the per cent signal change was extracted for each participant and condition using Featquery (FSL toolbox, http://www.fmrib.ox.ac.uk/fsl), and a two-way repeated measures ANOVA with factors hemisphere × stimulus was carried out to test for significant differences between conditions in the left and right amygdala.
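To clarify what "per cent signal change within a region" means, the sketch below averages the signal over ROI voxels and expresses the mean during condition volumes relative to the mean during baseline volumes. This is a deliberately simplified, hypothetical alternative: as we understand it, Featquery derives per cent change from the scaled GLM parameter estimates rather than from raw block averages, and this sketch also ignores hemodynamic lag. The toy data are invented for illustration.

```python
import numpy as np


def roi_timecourse(data, roi_mask):
    """Mean signal over ROI voxels at each time point; data is (time, voxels)."""
    return data[:, roi_mask].mean(axis=1)


def percent_signal_change(ts, cond, baseline):
    """100 * (mean during condition - mean during baseline) / mean during baseline."""
    base = ts[baseline].mean()
    return 100.0 * (ts[cond].mean() - base) / base


# Hypothetical data: 5 time points x 3 voxels; only voxels 0 and 1 are in the ROI.
data = np.array([[100.0, 100.0, 50.0],
                 [100.0, 100.0, 50.0],
                 [102.0, 102.0, 50.0],
                 [102.0, 102.0, 50.0],
                 [100.0, 100.0, 50.0]])
roi = np.array([True, True, False])
cond = np.array([False, False, True, True, False])  # condition-block volumes
ts = roi_timecourse(data, roi)
print(percent_signal_change(ts, cond, ~cond))  # 2.0
```

One such value per participant, condition and hemisphere is what enters the hemisphere × stimulus ANOVA.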

RESULTS

Behavioural data

A post-scan behavioural task was used to check that participants in the fMRI experiment could correctly categorize the facial expressions. Results confirmed that participants recognized the different expressions with the high accuracy expected for this image set (fear = 95.4% ± 7.8, anger = 93.7% ± 11.7, disgust = 77.9% ± 21.7, happiness = 98.3% ± 3.8, sadness = 80.4% ± 18.5). The neutral faces were also classified as neutral (96.25% ± 8.2), giving a mean accuracy of 90.3% ± 6.0 across all six categories. The 25% happy faces were mainly categorized as neutral (63.3%) or happy (32.9%), consistent with their position on the neutral-happy continuum (Young et al., 1997).
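The overall accuracy of 90.3% matches the unweighted mean of the six per-category accuracies, including neutral; the five emotional expressions alone average 89.1%. The check below assumes that every category contributed the same number of trials (one image per model per category), which the design implies but the text does not state:

```python
# Per-category accuracies (%) from the behavioural results.
accuracy = {"fear": 95.4, "anger": 93.7, "disgust": 77.9,
            "happiness": 98.3, "sadness": 80.4, "neutral": 96.25}

emotional = [v for k, v in accuracy.items() if k != "neutral"]
mean_emotional = sum(emotional) / len(emotional)
mean_all = sum(accuracy.values()) / len(accuracy)

# 25% happy faces: share of responses that were neither neutral nor happy.
other_25 = 100 - 63.3 - 32.9

print(f"five expressions: {mean_emotional:.1f}%, six categories: {mean_all:.1f}%, "
      f"25% happy labelled as other: {other_25:.1f}%")
```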

fMRI analysis

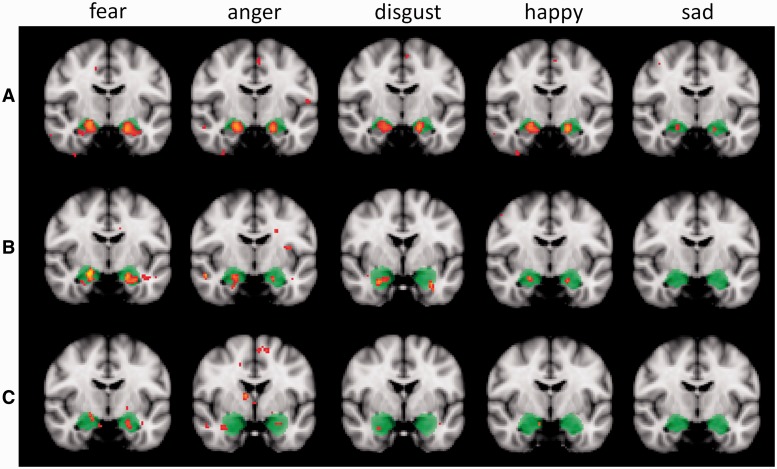

Figure 2 shows the spatial extent of voxels in the amygdala that were more active when viewing faces posing emotional expressions than in each of the control conditions, at a significance threshold of Z > 2.6 (P < 0.005, uncorrected; or P < 0.02, small volume correction). The anatomically defined amygdala region from the 5–100% amygdala mask of the Harvard-Oxford atlas is superimposed on each image. The top row of Figure 2 shows amygdala voxels that were significantly more active for each expression than for buildings. The middle row shows voxels that were significantly more active in the emotion conditions than for the 25% happy faces. Finally, the bottom row shows voxels that were significantly more active in the emotion conditions than for the neutral faces.

Fig. 2.

Statistical significance maps thresholded at Z > 2.6 (P < 0.005) depicting amygdala activation in each facial expression condition vs the three comparison conditions: buildings (A), 25% happy faces (B) and neutral faces (C). The amygdala region, anatomically defined with the 5–100% masks from the Harvard-Oxford sub-cortical probability atlas, is shown in green. Images follow the radiological convention, with the right hemisphere represented on the left side. For each contrast, the cursor was positioned on the peak voxel in the left amygdala. Fear and anger showed a significantly higher response than all control conditions.
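The voxelwise thresholds are expressed as standard-normal Z statistics. As a minimal check, assuming one-tailed tests (the text does not say), the tail probability for Z = 2.6 can be computed with SciPy's normal distribution; it comes to about 0.0047, consistent with the stated P < 0.005. The small-volume-corrected value depends on the search volume and is not reproduced here.

```python
from scipy.stats import norm


def z_to_p_one_tailed(z: float) -> float:
    """Upper-tail probability P(Z > z) for a standard normal deviate."""
    return float(norm.sf(z))


p = z_to_p_one_tailed(2.6)
print(f"Z > 2.6 -> one-tailed p = {p:.4f}")
```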

As can be seen in Figure 2, a substantial region of activation appeared in the left and right amygdala when fearful, angry, disgusted and happy facial expressions were contrasted with buildings, and significant activation was also observed for sad faces but to a smaller extent. When contrasted with the 25% happy faces all conditions except sadness showed some significant activation, although the extent was less than in the building comparisons. In contrasts with neutral faces the number of active voxels was further reduced for all expressions, particularly for disgust, happiness and sadness.

To explore this pattern in detail, the numbers of active voxels and peak Z-values for each contrast are reported in Table 1. These data were calculated at a statistical threshold of Z > 2.6 (P < 0.005) using both the 5–100% amygdala mask and the more restricted 50–100% mask. Taking into account the differing extent of the masks (830 voxels in the left amygdala and 950 voxels in the right amygdala in the 5–100% masks; 227 voxels in the left amygdala and 278 in the right amygdala in the 50–100% masks), the proportion of active voxels was generally higher in the more restricted masks, indicating that the observed activations mostly occurred within the amygdala rather than in peri-amygdalar regions. For the critical contrasts of expressions of basic emotions against other face stimuli (25% happy or neutral), the numbers of active voxels were consistently highest for expressions of fear and anger.

Table 1.

Significantly active voxels in the amygdala

| Comparison condition | Expression | 5–100% mask: left | 5–100% mask: right | 5–100% mask: Z max | 50–100% mask: left | 50–100% mask: right | 50–100% mask: Z max |
|---|---|---|---|---|---|---|---|
| Building | Fear | 201 (24.2) | 248 (26.1) | 4.92 | 84 (37.0) | 130 (46.8) | 4.89 |
| | Anger | 150 (18.1) | 240 (25.3) | 5.23 | 82 (36.1) | 129 (46.4) | 5.25 |
| | Disgust | 89 (10.7) | 199 (20.9) | 4.98 | 33 (14.5) | 121 (43.5) | 4.75 |
| | Happy | 106 (12.8) | 182 (19.2) | 4.98 | 55 (24.2) | 106 (38.1) | 4.85 |
| | Sad | 21 (2.5) | 18 (1.9) | 3.42 | 10 (4.4) | 12 (4.3) | 3.31 |
| 25% happy | Fear | 122 (14.7) | 115 (12.1) | 4.08 | 45 (19.8) | 63 (22.7) | 4.08 |
| | Anger | 37 (4.5) | 111 (11.7) | 3.39 | 32 (14.1) | 56 (20.1) | 3.16 |
| | Disgust | 36 (4.3) | 83 (8.7) | 3.44 | 12 (5.3) | 56 (20.1) | 3.44 |
| | Happy | 35 (4.2) | 63 (6.6) | 3.70 | 21 (9.2) | 35 (12.6) | 3.20 |
| | Sad | 0 | 0 | – | 0 | 0 | – |
| Neutral | Fear | 46 (5.5) | 38 (4.0) | 3.15 | 22 (9.7) | 18 (6.5) | 3.15 |
| | Anger | 9 (1.1) | 71 (7.5) | 3.66 | 7 (3.1) | 24 (8.6) | 3.41 |
| | Disgust | 0 | 25 (2.6) | 3.29 | 0 | 13 (4.7) | 3.29 |
| | Happy | 0 | 5 (0.5) | 2.92 | 0 | 0 | – |
| | Sad | 0 | 0 | – | 0 | 0 | – |

Numbers of active voxels passing the threshold of Z > 2.6 (P < 0.005) and peak Z-values in the left and right amygdala for each expression vs each control condition. The left-hand columns refer to the 5–100% amygdala masks from the Harvard-Oxford atlas, which comprised a total of 830 voxels in the left amygdala and 950 voxels in the right amygdala. The right-hand columns refer to the 50–100% amygdala masks from the Harvard-Oxford atlas, which comprised a total of 227 voxels in the left amygdala and 278 voxels in the right amygdala. Numbers in parentheses give the active voxels as a percentage of the total voxels in each mask.
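The percentages in Table 1 are the active-voxel counts divided by the mask sizes given in the caption. A short sketch reproducing the first row (fear vs buildings); the dictionary and function names are illustrative:

```python
# Total voxels in each Harvard-Oxford amygdala mask (from the table caption).
MASK_VOXELS = {
    ("5-100", "left"): 830, ("5-100", "right"): 950,
    ("50-100", "left"): 227, ("50-100", "right"): 278,
}


def percent_of_mask(n_active: int, mask: str, hemisphere: str) -> float:
    """Active voxels as a percentage of the mask, rounded to one decimal place."""
    return round(100.0 * n_active / MASK_VOXELS[(mask, hemisphere)], 1)


# Fear vs buildings, the first row of Table 1.
print(percent_of_mask(201, "5-100", "left"),    # 24.2
      percent_of_mask(248, "5-100", "right"),   # 26.1
      percent_of_mask(84, "50-100", "left"),    # 37.0
      percent_of_mask(130, "50-100", "right"))  # 46.8
```

Normalizing by mask size in this way is what allows counts from the larger 5–100% masks to be compared with those from the smaller 50–100% masks.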

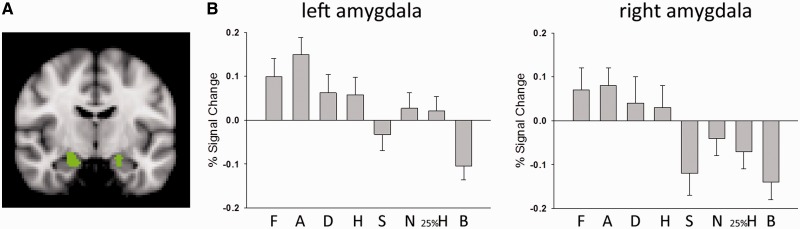

Having looked at responses within the anatomically defined amygdala region, we next examined the response to each condition in the face-responsive sub-regions of the left and right amygdala, defined functionally with masks from the independent localizer. This functionally defined face-responsive region of the amygdala is shown in Figure 3A, with per cent signal change in the region for each condition shown in Figure 3B. A repeated measures ANOVA of the per cent signal change was carried out with the two factors of hemisphere (left, right) and stimulus type (fearful, angry, disgusted, happy, sad, neutral, 25% happy, building). This analysis revealed a significant main effect of stimulus type [F(3.9, 86.1) = 7.5, P < 0.001], but no significant hemisphere × stimulus type interaction (P = 0.29). To determine the difference in response between each facial expression and each control condition, paired t-tests were carried out on the responses combined across hemispheres.

Fig. 3.

(A) Face-responsive regions in the left and right amygdala (shown in green) based on the functional localizer scan which comprised a total of 29 voxels in the left amygdala and 151 voxels in the right amygdala; the image was taken at the MNI coordinate x = 20, y = −10, z = −20 and follows the radiological convention with the right hemisphere represented on the left side. (B) Per cent signal change for each condition in left and right amygdala defined with the functional localizer masks (F = fear expressions, A = anger, D = disgust, H = happy, S = sad, N = neutral, 25%H = 25% happy along neutral-happy continuum, B = buildings). The response to fear and anger was significantly greater than the response to all of the control conditions. Bars represent standard errors of the means.
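The non-integer degrees of freedom in F(3.9, 86.1) suggest that a sphericity correction was applied to the stimulus-type effect. The text does not say which correction was used, so Greenhouse–Geisser is assumed here. With 23 participants and eight stimulus types, the uncorrected degrees of freedom are 7 and 154, and an epsilon of about 0.56 would give the reported values. The sketch estimates epsilon from a participants × levels matrix; the data in it are random numbers, not study data.

```python
import numpy as np


def gg_epsilon(data):
    """Greenhouse-Geisser epsilon for an (n_subjects, k_levels) array."""
    k = data.shape[1]
    centre = np.eye(k) - np.ones((k, k)) / k          # centring matrix
    s = centre @ np.cov(data, rowvar=False) @ centre  # double-centred covariance
    return np.trace(s) ** 2 / ((k - 1) * np.trace(s @ s))


def corrected_dfs(eps, k, n):
    """Epsilon-scaled numerator and denominator df for a one-way within effect."""
    return eps * (k - 1), eps * (k - 1) * (n - 1)


# Synthetic illustration only: 23 participants x 2 hemispheres x 8 stimulus types,
# averaged over hemisphere to assess the stimulus-type main effect.
rng = np.random.default_rng(0)
psc = rng.normal(size=(23, 2, 8)).mean(axis=1)
eps = gg_epsilon(psc)
print(round(float(eps), 3),
      tuple(round(d, 1) for d in corrected_dfs(0.559, k=8, n=23)))
```

Epsilon always lies between 1/(k − 1) and 1; the lower the value, the more strongly the degrees of freedom are shrunk to compensate for unequal variances of the differences between levels.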

The response to fear was significantly greater than the response to buildings [t(22) = 4.16, P < 0.001], 25% happy faces [t(22) = 2.12, P = 0.046] and neutral faces [t(22) = 2.61, P = 0.016]. The response to anger was also significantly greater than the response to buildings [t(22) = 8.96, P < 0.001], 25% happy faces [t(22) = 5.43, P < 0.001] and neutral faces [t(22) = 3.17, P = 0.004]. The responses to disgust and happiness were significantly greater only than the response to buildings [t(22) = 4.53, P < 0.001 and t(22) = 4.84, P < 0.001, respectively]. None of the other comparisons reached statistical significance (all P > 0.05). This overall pattern is therefore consistent with that based on the anatomically defined regions: for the critical contrasts of expressions of basic emotions against other face stimuli (25% happy or neutral), only expressions of fear and anger reached statistical significance.
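Each comparison above is a paired t-test on the hemisphere-averaged responses of the 23 remaining participants, hence df = 23 − 1 = 22. The sketch below shows that computation with `scipy.stats.ttest_rel` on synthetic numbers (not the study's data). It also checks that the reported values are consistent with two-tailed tests: t(22) = 2.12 gives a two-tailed P of about 0.046, matching the value reported for fear vs 25% happy faces. The function name is illustrative.

```python
import numpy as np
from scipy.stats import t as t_dist
from scipy.stats import ttest_rel


def paired_contrast(expr_lr, control_lr):
    """Paired t-test on per cent signal change averaged over hemispheres.

    Inputs are (n_participants, 2) arrays with columns (left, right).
    Returns (t statistic, degrees of freedom, two-tailed p).
    """
    expr = np.asarray(expr_lr, dtype=float).mean(axis=1)
    control = np.asarray(control_lr, dtype=float).mean(axis=1)
    res = ttest_rel(expr, control)  # two-sided by default
    return float(res.statistic), len(expr) - 1, float(res.pvalue)


# Synthetic numbers for illustration only; these are not the study's data.
rng = np.random.default_rng(1)
fear = rng.normal(0.3, 0.2, size=(23, 2))
neutral = rng.normal(0.2, 0.2, size=(23, 2))
t_stat, df, p = paired_contrast(fear, neutral)
print(f"t({df}) = {t_stat:.2f}, P = {p:.3f}")

# Consistency check on a reported value: two-tailed P for t(22) = 2.12.
p_reported = 2 * t_dist.sf(2.12, df=22)
print(f"{p_reported:.3f}")
```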

DISCUSSION

The aim of this study was to determine whether the amygdala is responsive to emotional expressions in general, or is selective for particular expressions, such as fear, that signal the presence of potential threat. To address a possible source of conflicting conclusions in the previous literature (Sergerie et al., 2008), we looked systematically at the consequences of using different contrasts to evaluate amygdala responses. Specifically, the responses to face images posing different emotional expressions were contrasted with a non-face (buildings) condition, and with face conditions involving mildly happy or neutral expressions. We also compared a tightly defined anatomical mask restricted to the amygdala itself with a more liberal anatomical mask that included some peri-amygdalar regions, and we complemented these anatomically based procedures with a functional mask of face-responsive voxels in the amygdala, based on an independent functional localizer scan.

The results showed that different procedural choices can have a substantial effect on the apparent pattern of amygdala responsiveness, and this may help resolve some previous controversies. For contrasts of face conditions vs buildings, the amygdala responded to some extent to most expressions, with significantly activated voxels for all five basic emotions (fear, anger, disgust, happiness and sadness) with both anatomical masks (Figure 2A; Table 1), and higher per cent signal change in the face-responsive part of the amygdala for fear, anger, disgust and happiness than for buildings. This pattern bears out the widely cited findings of Fitzgerald et al. (2006), who concluded from a contrast with pictures of radios that the amygdala responds to all facial expressions. However, although our pattern of results matches theirs, we question their interpretation. A contrast between emotional faces and any non-face condition can only show that a region is face responsive; it does not establish that it is involved in the perception of emotion per se.

To demonstrate that a region is responsive to facial emotion, it is essential to use a comparison that eliminates other aspects of face perception (such as perception of age, sex and identity). The most direct way to achieve this is with non-emotional faces. For this reason, we compared the amygdala’s responses to five basic emotions with its responses to neutral or to 25% happy faces. Two points of comparison (neutral and 25% happy) were used because, although neutral expressions might initially seem the obvious choice, it is at present unclear whether they have a status akin to that of an additional basic emotion, and because the somewhat ‘poker-faced’ neutral expression can be seen as slightly formal or hostile. For these reasons, some previous studies have used a 25% morph along a neutral to happy continuum as a more socially acceptable looking variant of a relatively unemotional face (Phillips et al., 1998, 1999), leading us also to include it here.

As Figure 2 and Table 1 show, these contrasts between specific expressions of basic emotions and neutral or 25% happy faces substantially reduced the numbers of active voxels in the anatomically defined amygdala, with no significantly activated voxels for sadness in either contrast, only five activated voxels for happiness in the neutral contrast (all in the right amygdala and none within the 50–100% mask), and no activated voxels for disgust in the left amygdala in the neutral contrast. However, activation of voxels in both the left and the right amygdala was consistently found for expressions of fear and anger in both contrasts (neutral or 25% happy), whether the anatomical amygdala was more liberally defined (the 5–100% mask from the Harvard-Oxford atlas) or relatively tightly defined (the 50–100% mask). When the face-responsive region of the amygdala was functionally identified from the independent localizer scan (Figure 3), statistically significant differences in per cent signal change relative to the neutral or the 25% happy conditions were found only for fear and anger.

Previous neuroimaging studies have provided mixed evidence, taken to support either a specific role for the amygdala in processing fearful faces (Calder et al., 2001) or a more general amygdala activation for a number of expressions (Winston et al., 2003; Fitzgerald et al., 2006). Our results show clearly why each position has some merit. The amygdala responded to all facial expressions to some extent, as evidenced by contrasting each expression with buildings. As noted earlier, however, this face vs non-face contrast does not rule out the possibility that the activation is to faces per se, rather than more specifically to facial expressions. The more easily interpreted contrasts are therefore those between facial expression conditions and the neutral or 25% happy face comparison conditions, since any differences found for these will reflect the processing of expression. Sergerie et al.’s (2008) meta-analysis has already noted the importance of the control condition, reporting stronger amygdala activations when a low-level baseline, such as scrambled images or a grey screen, is used than with control conditions involving neutral faces or other pictures. Our results support this conclusion and directly demonstrate that using a control condition with stimuli from the face category (faces with a neutral or a mildly happy expression) or from a non-face category (e.g. buildings) can change the results and therefore point towards different conclusions.

Several studies in the affective neuroscience literature have identified the amygdala as a neural correlate of threat processing (Dalgleish, 2004; Vytal and Hamann, 2010), and its consistent response to fearful and angry faces in the present results fits this general conception. On the other hand, Sander et al. (2003) pointed out that amygdala involvement in processing fear-related stimuli does not necessarily imply that its role is restricted to fear; instead, they proposed a role for the amygdala in detecting relevant stimuli regardless of their valence. To test for specific effects of emotion type on amygdala activation, we extracted the per cent signal change from face-responsive sub-regions within the left and right amygdala and compared the signal for each expression with each control condition. This analysis showed that only fear and anger produced significantly higher activation than neutral and mildly happy faces, which is consistent with the hypothesis that the amygdala is involved in processing threat-related expressions. However, the pattern was more complex than a pure response to physical threat per se: both fear and anger differed significantly from the face control conditions, but disgust and happy expressions also activated some voxels in the anatomically defined amygdala and showed a change in per cent signal in the functionally defined region that might have reached statistical significance in a study with greater power. There are two main possible reasons for this, each worth investigating further. One is that, because the amygdala contains multiple nuclei (Aggleton and Young, 1999), only some of these may be involved in evaluating specific emotions while others serve more general social purposes. This is difficult to rule out with the current spatial resolution of fMRI.
The alternative is that the amygdala has a more general role in emotional appraisal for which fear and, as our data suggest, anger are the most effective elicitors (Sander et al., 2003). Our stimuli were selected from a widely used set, and previous studies reported that angry and fearful faces did not differ significantly in rated intensity, valence and arousal or in the skin conductance responses they elicited (Ekman et al., 1987; Johnsen et al., 1995; Matsumoto et al., 1999). Nonetheless, an important question for future studies will be to determine whether amygdala responses to fearful and angry faces are driven by shared perceptual properties of these expressions or by common semantic characteristics (such as signalling threat). To address this, it may be useful to take additional measures such as participants’ eye-movement scanpaths.

It is also worth noting that, in within-category (face vs face) contrasts, activation was smaller when emotional faces were contrasted with neutral faces than when they were contrasted with 25% happy faces. Previous studies have also reported amygdala activation to neutral faces (Somerville et al., 2004; Fitzgerald et al., 2006). Indeed, other fMRI experiments have preferred a mildly happy expression as a control condition (Phillips et al., 1998, 1999) to avoid the slightly cold appearance that completely neutral faces can have. Our results support this view and directly demonstrate how the control condition can affect results even for within-category contrasts.

In summary, this study helps clarify conflicting results in the literature about amygdala responses to facial expressions. Our findings show that the amygdala is highly responsive to fearful faces, but this activation was not specific to fear, because increased fMRI signal was also observed for angry faces and, to a lesser extent, for disgusted expressions. Overall, these findings are consistent with a role for the amygdala in processing threatening and aversive stimuli rather than with a more general role in processing all emotions. They also demonstrate the critical importance of the choice of comparison condition in understanding the selectivity of responses in this region of the brain.

REFERENCES

- Adolphs R. Social cognition and the human brain. Trends in Cognitive Sciences. 1999;3:469–79. doi: 10.1016/s1364-6613(99)01399-6.

- Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372:669–72. doi: 10.1038/372669a0.

- Adolphs R, Tranel D, Hamann S, et al. Recognition of facial emotion in nine individuals with bilateral amygdala damage. Neuropsychologia. 1999;37:1111–7. doi: 10.1016/s0028-3932(99)00039-1.

- Aggleton JP, Young AW. The enigma of the amygdala: on its contribution to human emotion. In: Nadel L, Lane RD, editors. Emotion and Cognitive Neuroscience. New York: Oxford University Press; 1999. pp. 106–28.

- Anderson AK, Spencer DD, Fulbright RK, Phelps EA. Contribution of the anteromedial temporal lobes to the evaluation of facial emotion. Neuropsychology. 2000;14:526–36. doi: 10.1037//0894-4105.14.4.526.

- Blair RJR, Morris JS, Frith CD, Perrett DI, Dolan RJ. Dissociable neural responses to facial expressions of sadness and anger. Brain. 1999;122:883–93. doi: 10.1093/brain/122.5.883.

- Breiter HC, Etcoff NL, Whalen PJ, et al. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17:875–87. doi: 10.1016/s0896-6273(00)80219-6.

- Broks P, Young AW, Maratos EJ, et al. Face processing impairments after encephalitis: amygdala damage and recognition of fear. Neuropsychologia. 1998;36(1):59–70. doi: 10.1016/s0028-3932(97)00105-x.

- Calder AJ, Lawrence AD, Young AW. Neuropsychology of fear and loathing. Nature Reviews Neuroscience. 2001;2:352–62. doi: 10.1038/35072584.

- Calder AJ, Young AW, Rowland D, Perrett DI. Computer-enhanced emotion in facial expressions. Proceedings of the Royal Society B. 1997;264:919–25. doi: 10.1098/rspb.1997.0127.

- Calder AJ, Young AW, Rowland D, Perrett DI, Hodges JR, Etcoff NL. Facial emotion recognition after bilateral amygdala damage: differentially severe impairment of fear. Cognitive Neuropsychology. 1996;13:699–745.

- Dalgleish T. The emotional brain. Nature Reviews Neuroscience. 2004;5:582–9. doi: 10.1038/nrn1432.

- Ekman P, Friesen WV, O'Sullivan M, Chan A. Universals and cultural differences in the judgments of facial expressions of emotion. Journal of Personality and Social Psychology. 1987;53:712–7. doi: 10.1037//0022-3514.53.4.712.

- Ekman P, Rosenberg E. What the Face Reveals. New York: Oxford University Press; 1997.

- Feinstein JS, Adolphs R, Damasio A, Tranel D. The human amygdala and the induction and experience of fear. Current Biology. 2010;21:34–8. doi: 10.1016/j.cub.2010.11.042.

- Fitzgerald DA, Angstadt M, Jelsone LM, Nathan PJ, Phan KL. Beyond threat: amygdala reactivity across multiple expressions of facial affect. NeuroImage. 2006;30:1441–8. doi: 10.1016/j.neuroimage.2005.11.003.

- Izard C, Fine S, Schultz D, Mostow A, Ackerman B, Youngstrom E. Emotion knowledge as a predictor of social behaviour and academic competence in children at risk. Psychological Science. 2001;12:18–23. doi: 10.1111/1467-9280.00304.

- Johnsen BH, Thayer JF, Hugdahl K. Affective judgment of the Ekman faces: a dimensional approach. Journal of Psychophysiology. 1995;9:193–202.

- Langner O, Dotsch R, Bijlstra G, Wigboldus DHJ, Hawk ST, van Knippenberg A. Presentation and validation of the Radboud Faces Database. Cognition and Emotion. 2010;24(8):1377–88.

- Matsumoto D, Kasri F, Kooken K. American-Japanese cultural differences in judgments of expression intensity and subjective experience. Cognition and Emotion. 1999;13:201–18.

- Morris JS, Frith CD, Perrett DI, et al. A differential neural response in the human amygdala to fearful and happy facial expressions. Nature. 1996;383:812–5. doi: 10.1038/383812a0.

- Morris JS, Friston KJ, Büchel C, et al. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- Phan KL, Wager T, Taylor SF, Liberzon I. Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. NeuroImage. 2002;16:331–48. doi: 10.1006/nimg.2002.1087.

- Phillips ML, Williams L, Senior C, et al. A differential neural response to threatening and non-threatening negative facial expressions in paranoid and non-paranoid schizophrenics. Psychiatry Research: Neuroimaging Section. 1999;92:11–31. doi: 10.1016/s0925-4927(99)00031-1.

- Phillips ML, Young AW, Scott SK, et al. Neural responses to facial and vocal expressions of fear and disgust. Proceedings of the Royal Society B. 1998;265:1809–17. doi: 10.1098/rspb.1998.0506.

- Sander D, Grafman J, Zalla T. The human amygdala: an evolved system for relevance detection. Reviews in the Neurosciences. 2003;14:303–16. doi: 10.1515/revneuro.2003.14.4.303.

- Sergerie K, Chochol C, Armony JL. The role of the amygdala in emotional processing: a quantitative meta-analysis of functional neuroimaging studies. Neuroscience and Biobehavioral Reviews. 2008;32:811–30. doi: 10.1016/j.neubiorev.2007.12.002.

- Somerville LH, Kim H, Johnstone T, Alexander AL, Whalen PJ. Human amygdala responses during presentation of happy and neutral faces: correlations with state anxiety. Biological Psychiatry. 2004;55:897–903. doi: 10.1016/j.biopsych.2004.01.007.

- Sprengelmeyer R, Young AW, Schroeder U, et al. Knowing no fear. Proceedings of the Royal Society B. 1999;266:2451–6. doi: 10.1098/rspb.1999.0945.

- Vytal K, Hamann S. Neuroimaging support for discrete neural correlates of basic emotion: a voxel-based meta-analysis. Journal of Cognitive Neuroscience. 2010;22:2864–85. doi: 10.1162/jocn.2009.21366.

- Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, Rauch SL. A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion. 2001;1:70–83. doi: 10.1037/1528-3542.1.1.70.

- Winston JS, O’Doherty J, Dolan RJ. Common and distinct neural responses during direct and incidental processing of multiple facial emotions. NeuroImage. 2003;20:84–97. doi: 10.1016/s1053-8119(03)00303-3.

- Young AW, Aggleton JP, Hellawell DJ, Johnson M, Broks P, Hanley JR. Face processing impairments after amygdalotomy. Brain. 1995;118:15–24. doi: 10.1093/brain/118.1.15.

- Young AW, Hellawell DJ, Van De Wal C, Johnson M. Facial expression processing after amygdalotomy. Neuropsychologia. 1996;34:31–9. doi: 10.1016/0028-3932(95)00062-3.

- Young AW, Perrett DI, Calder AJ, Sprengelmeyer R, Ekman P. Facial Expressions of Emotion: Stimuli and Tests (FEEST). Bury St. Edmunds: Thames Valley Test Company; 2002.

- Young AW, Rowland D, Calder AJ, Etcoff NL, Seth A, Perrett DI. Facial expression megamix: tests of dimensional and category accounts of emotion recognition. Cognition. 1997;63:271–313. doi: 10.1016/s0010-0277(97)00003-6.