Abstract

The different temporal dynamics of emotions are critical to understanding their evolutionary role in regulating interactions with the surrounding environment. Here, we investigated the temporal dynamics underlying the perception of four basic emotions (fear, disgust, happiness and sadness) from complex scenes varying in valence and arousal, taking advantage of the millisecond time resolution of electroencephalography (EEG). Event-related potentials were computed, and each emotion showed a specific temporal profile, as revealed by distinct time segments of significant differences from neutral scenes. Fear perception elicited significant activity at the earliest time segments, followed by disgust, happiness and sadness. Moreover, fear, disgust and happiness were characterized by two time segments of significant activity, whereas sadness showed only one long-latency time segment. Multidimensional scaling was used to assess the correspondence between neural temporal dynamics and the subjective experience elicited by the four emotions in a subsequent behavioral task. We found high coherence between these two classes of data, indicating that the psychological categories defining emotions have a close correspondence at the brain level in terms of neural temporal dynamics. Finally, we localized the brain regions of time-dependent activity for each emotion and time segment with low-resolution brain electromagnetic tomography (LORETA). Fear and disgust showed widely distributed activations, predominantly in the right hemisphere. Happiness activated a number of areas mostly in the left hemisphere, whereas sadness showed a limited number of active areas at late latency. The present findings indicate that, as investigated with millisecond-range resolution, the neural signature of basic emotions can emerge as the byproduct of dynamic spatiotemporal brain networks, rather than from time-independent areas uniquely involved in processing one specific emotion.

Keywords: basic emotions, EEG, LORETA, ERP, IAPS, time, rapid perception

INTRODUCTION

A longstanding and yet unresolved issue in the study of emotions concerns the best way to characterize their underlying structure. So-called discrete theories posit that a limited set of basic emotion categories (e.g. happiness, fear, sadness, anger or disgust) have unique physiological and neural profiles (Panksepp, 1998; Ekman, 1999). Other approaches conceptualize emotions as the byproduct of more fundamental dimensions such as valence (positive vs negative) and arousal (high vs low) (Russell, 1980; Kuppens et al., 2013), or action tendency (approach vs withdrawal) (Davidson, 1993). Still other perspectives, such as the constructionist account, assume that emotions emerge out of psychological primitives such as raw somatic feelings, perception, conceptualization, or attention, none of which are specific to any discrete emotion (Lindquist et al., 2012).

Recent years have seen a resurgence of this debate in the field of social and affective neuroscience, which has primarily focused on two complementary aspects. The first issue concerns whether affective states can be grouped meaningfully into discrete psychological categories, such as fear and sadness (Barrett, 2006). The second concerns whether it is possible to characterize a neural signature that consistently and univocally defines each emotion and differentiates it from the others (i.e. whether each and every instance of, for example, fear is associated with a specific pattern of neural activity that is not shared by other emotions) (Phan et al., 2002; Murphy et al., 2003; Kober et al., 2008; Vytal and Hamann, 2010; Said et al., 2011). In neuroscience research, evidence supporting a discrete account of emotions has traditionally come from neuropsychological studies of patients with focal brain damage. Classic examples are the role of the amygdala in fear perception and experience (Whalen et al., 2001, 2004; Adolphs et al., 2005; Whalen and Phelps, 2009), that of the orbitofrontal cortex (OFC) in anger (Berlin et al., 2004), and that of the insula in disgust (Calder et al., 2000; Keysers et al., 2004; Caruana et al., 2011). Conversely, the neuroimaging literature [functional MRI (fMRI) and PET] has provided mixed results, with some studies and meta-analyses supporting the existence of discrete and non-overlapping neural correlates for basic emotions (Phan et al., 2002; Fusar-Poli et al., 2009b; Vytal and Hamann, 2010), whereas others found little evidence that discrete emotion categories can be consistently and specifically localized in distinct brain areas (Murphy et al., 2003; Kober et al., 2008; Lindquist et al., 2012).

Whichever account one may wish to endorse, temporal dynamics are crucial to understanding the neural architecture of emotions. Indeed, emotions unfold over time, and their different temporal profiles are thought to be functionally coherent with their evolutionary role in regulating interactions with the surrounding environment (Frijda, 2007). For example, fear, anger or disgust provide the organism with reflex-like reactions to impending environmental danger, which are adaptive and critical for survival only if they occur rapidly (Tamietto and de Gelder, 2010). Conversely, promptness of reactions, and of their underlying neural activity, is possibly a less critical component of other emotions such as sadness or happiness. These latter emotions typically involve aspects related to the evaluation of self-relevance and have a more pronounced social dimension, as their expression is linked to affiliative or approach responses. Therefore, the neural signature of sadness and happiness may unfold more slowly over time than that of fear or disgust (Fredrickson, 1998; Baumeister et al., 2001).

Aside from its theoretical relevance, including the time element in our current understanding of emotions can also yield new discoveries about how emotions are represented in the brain and can help reconcile seemingly contradictory neuroimaging findings. In fact, the function of a given brain area is partly determined by the network of other regions with which it is co-activated, as well as by the specific time range at which these regions connect and synchronize. Therefore, the neural network involved in emotion processing could, in principle, overlap spatially across emotions, with the unique temporal profile of connectivity and synchrony, rather than necessarily the involvement of dedicated areas, being the critical neural signature that differentiates one specific emotion from the others (Scarantino, 2012).

Despite the obvious importance of the time component for understanding how emotions are represented in the brain, and the emphasis placed on this aspect by emotion theories, relatively little is known about the neural temporal dynamics of emotion processing (Linden et al., 2012; Waugh and Schirillo, 2012). Indeed, the overwhelming majority of neuroimaging studies have used fMRI or PET, which offer good spatial resolution but poor temporal resolution, on the order of several seconds. Moreover, fMRI analyses typically model the data with canonical hemodynamic response functions that assume invariant parameters, such as delay, envelope and dispersion (Friston et al., 1994). Conversely, EEG offers excellent temporal resolution, in the range of milliseconds (Olofsson et al., 2008), and can be combined with functional brain imaging methods such as low-resolution brain electromagnetic tomography (LORETA), which uses inverse-problem techniques to localize electric neuronal activity with a spatial resolution of up to 7 mm (Pascual-Marqui et al., 1994; Esslen et al., 2004).

In this study, we first investigated the temporal dynamics of neural activity associated with viewing complex pictures of fear, disgust, happiness and sadness, drawn from the International Affective Picture System (IAPS) dataset and equated for arousal (Lang et al., 1997). These four emotions are probably the most investigated in past neuroimaging studies and offer the opportunity to cover a wide range of the emotional space according to different theoretical perspectives. Indeed, they are typical examples of basic emotions according to discrete theories, and they occupy opposite endpoints of the dimensional spectrum of emotions, with happiness opposed to sadness, fear and disgust along the valence dimension, and sadness opposed to disgust, fear and happiness along the arousal dimension. We then evaluated the correspondence between the subjective emotional experience induced by the pictures, on the one hand, and the neural signature derived from the temporal profiles associated with their perception, on the other. Finally, we used LORETA to estimate the neural generators of this event-related potential (ERP) activity, with the aim of assessing time-dependent changes in the activation of cortical networks involved in emotion processing. We hypothesized that the neural signature of emotion processing lies in a profile of temporal dynamics specific to each basic emotion, rather than in brain areas uniquely involved in processing each emotion.

METHODS

Participants

Twenty-nine right-handed volunteers (17 males, 12 females; mean age = 24.6 years) took part in the study. None of the participants had a personal history of neurological or psychiatric illness or of drug or alcohol abuse, none was taking medication, and all had normal or corrected-to-normal vision. Handedness was assessed with the ‘measurement of handedness’ questionnaire (Chapman and Chapman, 1987). All participants were informed about the aim and design of the study and gave written informed consent approved by the local ethics committee. The study was performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki.

Stimuli and validation

One hundred and eighty standardized stimuli were preselected from the IAPS dataset (Lang et al., 1997). Stimuli were presented in color, equated for luminance and contrast, and selected to have similar ratings along the arousal dimension, based on the values reported in the original validation. This ensures that possible differences in arousal across stimulus categories cannot account for the present results. IAPS stimuli included unpleasant (e.g. scenes of violence, threat and injuries), pleasant (e.g. sporting events, erotic scenes) and neutral scenes (e.g. household objects, landscapes). The broad range of stimulus types adds an important dimension of ecological validity, as the same valence or emotion can be induced by pictures displaying facial or bodily expressions as well as by complex events and landscapes, thereby extending generalizability beyond facial expressions, the stimuli most commonly used in emotion research.

The preselected IAPS stimuli were then sorted into four basic emotion categories (fear, disgust, happiness and sadness) and a fifth, emotionally neutral, category, with each category including 36 images. Stimulus categorization was then validated in a study including 30 participants (half females; M = 24.7 years; s.d. = 4.3; age range = 18–34 years) who served as judges and did not participate in the main experiment. For this purpose, the stimuli were presented one by one on a touch-screen and shown until response, with the five labels corresponding to the four emotion categories and the neutral one displayed below the pictures. The left-to-right order of the five labels was randomized across trials. Participants were instructed to categorize each stimulus in a five-alternative forced-choice procedure, as quickly and accurately as possible, by touching one of the five labels on the touch-screen. Here, correct categorization means that a picture assigned to a given emotion category was judged by participants in the validation experiment as expressing that emotion. The percentage of correct categorizations was 93.5% for fear, 88% for disgust, 93.1% for happiness, 84.2% for sadness and 80.4% for neutral images. Overall, there was highly significant consistency between the intended and the judged emotional content of the stimuli (Cohen's κ = 0.89, P ≤ 0.001). The 20 best-recognized pictures in each of the five categories (all correctly recognized above 93%) were finally selected and used in the main EEG experiment.
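The agreement statistic reported above can be illustrated with a short sketch of Cohen's κ, which compares the observed proportion of agreement with the agreement expected by chance from each rater's label frequencies. It assumes one intended and one judged label per picture; how the responses of the 30 judges were pooled before computing κ is not stated, and the function name `cohens_kappa` is ours.

```python
from collections import Counter


def cohens_kappa(intended, judged):
    """Cohen's kappa between two equal-length label sequences.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of
    agreement and p_e the chance agreement implied by the marginal label
    frequencies of each rater.
    """
    if len(intended) != len(judged) or not intended:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(intended)
    p_o = sum(a == b for a, b in zip(intended, judged)) / n
    freq_intended = Counter(intended)
    freq_judged = Counter(judged)
    p_e = sum(freq_intended[c] * freq_judged[c] for c in freq_intended) / (n * n)
    if p_e == 1.0:
        return 1.0  # degenerate case: both raters used one and the same label
    return (p_o - p_e) / (1 - p_e)
```

For example, with intended labels `["a", "a", "b", "b"]` and judged labels `["a", "b", "b", "b"]`, observed agreement is 0.75 and chance agreement 0.5, giving κ = 0.5.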

To ensure that there was no luminance difference across the final images in the five categories, we measured the luminance of each image with a photometer on the screen used for the main experiment. The luminance value of each image was the mean of measurements taken at three different points. The mean luminance of each of the four emotion categories and of the neutral category was then obtained by averaging the luminance values of the 20 images in that category. Finally, we compared the five categories pairwise with independent-samples t-tests, for a total of ten t-tests. None of the comparisons was significant (P > 0.05, corrected for multiple comparisons), so that we can rule out the possibility that differences in the EEG data reflect luminance differences in the stimuli rather than their emotional content.
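A minimal sketch of the ten pairwise comparisons follows. It assumes Student's pooled-variance t-test and a Bonferroni correction (the text specifies only "corrected for multiple comparisons"); the function names and any luminance values passed in are hypothetical. P-values would then be read from Student's t distribution with `df` degrees of freedom, which is what `scipy.stats.ttest_ind` computes with its default `equal_var=True`.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, variance


def independent_t(x, y):
    """Student's t for two independent samples (pooled variance) and its df."""
    nx, ny = len(x), len(y)
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    t = (mean(x) - mean(y)) / sqrt(pooled * (1 / nx + 1 / ny))
    return t, nx + ny - 2


def pairwise_luminance_tests(luminance_by_category, alpha=0.05):
    """All pairwise t-tests between categories, with a Bonferroni-corrected alpha.

    luminance_by_category maps a category name to its per-image mean
    luminance values (20 per category in the study).
    """
    pairs = list(combinations(sorted(luminance_by_category), 2))  # 5 categories -> 10 pairs
    alpha_corrected = alpha / len(pairs)  # assumed Bonferroni correction
    results = {
        (a, b): independent_t(luminance_by_category[a], luminance_by_category[b])
        for a, b in pairs
    }
    return results, alpha_corrected
```

With 20 images per category each test has 38 degrees of freedom, and the corrected threshold is 0.05 / 10 = 0.005.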

Procedure

The stimuli were presented on a 21-inch computer screen. Participants were seated 1 m from the screen with their head comfortably positioned in a chin and forehead rest. They took part in a passive viewing paradigm in which they were simply required to look at the pictures. This procedure reduced movement artifacts and avoided possible confounds related to cognitive demands or motor execution, to which EEG is very sensitive.

Each picture was displayed for 500 ms, followed by a 1 s inter-stimulus interval consisting of a black screen. The stimuli in the four emotion categories were presented in a block design, each block consisting of 20 pictures. The experiment started with a neutral block, and each emotion block was always interleaved with a neutral block. This is a standard procedure to allow neurophysiological activity to return to baseline after exposure to emotional stimuli, and to ensure that neural activity triggered by a given emotion block does not interfere with the activity recorded in the next, different, emotion block (Decety and Cacioppo, 2011). Moreover, the order of the four emotion blocks was randomized across subjects to prevent any bias due to a fixed sequence of presentation of the different emotions. Within each block, the 20 neutral or 20 emotional pictures were presented in random order. An additional 5 min rest break followed each block.
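The block structure can be sketched as a trial-list generator. The sketch assumes that the session ends with the last emotion block (whether a final neutral block followed is not stated), that the same 20 neutral pictures are reshuffled for every neutral block, and that each subject gets an independent random seed; `build_session`, `block_duration_s` and the picture identifiers are hypothetical.

```python
import random

EMOTIONS = ["fear", "disgust", "happiness", "sadness"]
STIM_MS, ISI_MS = 500, 1000  # 500 ms picture followed by a 1 s black screen


def block_duration_s(n_pictures=20):
    """Duration of one block of pictures in seconds, excluding the rest break."""
    return n_pictures * (STIM_MS + ISI_MS) / 1000


def build_session(pictures, seed):
    """Hypothetical trial list for one subject.

    pictures maps each category ("neutral" plus the four emotions) to its
    picture identifiers. Returns the block order and a list of
    (block_index, category, picture) trials. Assumes a neutral block
    precedes every emotion block and none follows the last one.
    """
    rng = random.Random(seed)  # per-subject seed -> per-subject emotion order
    emotion_order = EMOTIONS[:]
    rng.shuffle(emotion_order)
    blocks = []
    for emotion in emotion_order:
        blocks += ["neutral", emotion]
    trials = []
    for block_index, category in enumerate(blocks):
        order = list(pictures[category])
        rng.shuffle(order)  # random picture order within each block
        trials += [(block_index, category, pic) for pic in order]
    return blocks, trials
```

Under these assumptions each subject sees eight blocks of 20 pictures (160 trials), and each block lasts 20 × 1.5 s = 30 s before its rest break.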

After the EEG recordings, participants took part in a self-report session to assess their subjective categorization and rating of the pictures. They viewed the same pictures presented in the main EEG experiment again and performed two independent tasks in succession. First, they sorted the pictures into the four emotion categories and the neutral category, as in the categorization task performed by the judges in the validation experiment. Second, they rated the valence and arousal of their own emotional experience, as triggered by each picture, on two independent 9-point Likert scales ranging from 1 (very unpleasant/not at all arousing) to 9 (very pleasant/very arousing). Neither task had a time limit, and reaction times were not recorded; instead, accuracy was emphasized to ensure reliable responses and maximal attention by participants to their own feelings. The categorization data were used to assess the agreement between the categorization from the validation experiment and that performed by participants in the main experiment. The valence and arousal ratings were entered into the representational similarity analysis and the multidimensional scaling (MDS) to investigate the correspondence between subjective emotional experience and neural temporal dynamics (see below).

EEG recording

EEG was recorded from 19 sites (Fp1, Fp2, F7, F8, F3, F4, Fz, C3, C4, Cz, T3, T4, T5, T6, P3, P4, Pz, O1 and O2). The electro-oculogram (EOG) was recorded to facilitate the identification and removal of eye-movement artifacts from the EEG. EEG and EOG signals were amplified by a multichannel biosignal amplifier (band pass 0.3–70 Hz) and A/D converted at 256 Hz per channel with 12-bit resolution and 1/8–2 μV/bit accuracy. Electrode impedance was checked for each subject before data collection and kept below 5 kΩ. Recordings were performed in a dimly lit, electrically shielded room.

EEG preprocessing

EEG epochs of 1 s duration were identified off-line on a computer display. Epochs containing eye movements, eye blinks, or muscle or head-movement artifacts were excluded from further analyses. Artifact-free epochs were re-referenced to the average reference and digitally band-pass filtered at 1–45 Hz. Average ERPs were computed separately for each subject and condition, and the waveforms were transformed into topographic maps of the ERP potential distribution. At the sampling rate of 256 Hz, each topographic ERP map corresponds to one sample of EEG activity (1/256 s ≈ 3.9 ms), for a total of 128 maps covering the 500 ms of stimulus exposure.
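The re-referencing and averaging steps, and the sample-to-latency arithmetic behind the 128 maps, can be sketched in plain Python. Band-pass filtering and artifact rejection are omitted, epochs are assumed to be nested lists indexed as `epoch[sample][channel]`, and the function names are ours.

```python
SAMPLING_HZ = 256
SAMPLE_MS = 1000 / SAMPLING_HZ  # 3.90625 ms between consecutive ERP maps


def average_reference(epoch):
    """Subtract, at each sample, the mean potential across all electrodes."""
    referenced = []
    for sample in epoch:
        mu = sum(sample) / len(sample)
        referenced.append([v - mu for v in sample])
    return referenced


def erp(epochs):
    """Average artifact-free epochs sample by sample and channel by channel."""
    n = len(epochs)
    return [
        [sum(channel_values) / n for channel_values in zip(*samples)]
        for samples in zip(*epochs)
    ]


def n_maps(duration_ms):
    """Number of ERP maps (one per sample) covering duration_ms."""
    return round(duration_ms / SAMPLE_MS)
```

Since 500 / 3.90625 = 128 exactly, the 500 ms of stimulus exposure map onto 128 topographic maps, and each map of the resulting ERP sums to zero across electrodes.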

Time-dependent cortical localization of EEG activity and limitations

The LORETA software was used to localize the neural activity underlying the EEG recordings. LORETA is an inverse solution method that computes the three-dimensional (3D) distribution of current density from the EEG recordings, using a linear constraint requiring that the current density distribution be the smoothest of all possible solutions. LORETA uses a three-shell spherical head model registered to the digitized Talairach and Tournoux atlas, with a spatial resolution of up to 7 mm. The result is a 3D image consisting of 2394 voxels (see Pascual-Marqui et al., 1994, for a detailed description of the method).
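For reference, the smoothness constraint can be written as a constrained minimization, following the formulation of Pascual-Marqui et al. (1994). The notation is ours: Φ is the vector of scalp potentials at the electrodes, J the current density vector over all voxels, K the lead-field matrix, B a discrete spatial Laplacian operator, W a diagonal weighting matrix built from the norms of the lead-field columns, and + denotes the Moore–Penrose pseudoinverse.

$$\hat{\mathbf{J}} = \arg\min_{\mathbf{J}} \left\lVert \mathbf{B}\mathbf{W}\mathbf{J} \right\rVert^{2} \quad \text{subject to} \quad \boldsymbol{\Phi} = \mathbf{K}\mathbf{J}$$

$$\hat{\mathbf{J}} = \mathbf{T}\boldsymbol{\Phi}, \qquad \mathbf{T} = \left(\mathbf{W}\mathbf{B}^{\top}\mathbf{B}\mathbf{W}\right)^{-1}\mathbf{K}^{\top}\left[\mathbf{K}\left(\mathbf{W}\mathbf{B}^{\top}\mathbf{B}\mathbf{W}\right)^{-1}\mathbf{K}^{\top}\right]^{+}$$

Minimizing the squared Laplacian of the weighted current density is what selects, among all current distributions that reproduce the recorded potentials, the spatially smoothest one.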

Admittedly, LORETA localization has several intrinsic limitations, especially given the small number of recording sites (19) used in the present experiment. First, the three-shell head model, which represents the space where the inverse problem is solved, is restricted to cortical areas and only approximates the real geometry of grey and white matter regions. Second, the use of an average brain template does not take into account the anatomical specificities of individual subjects. Third, because of the smoothness assumption used to solve the inverse problem, LORETA cannot resolve activity from closely spaced sources; in these cases, it finds a single source of neural activity located between the two original sources (Pizzagalli, 2007). Therefore, although the resulting activation maps resemble those obtained from fMRI analysis, they are based on different principles and cannot attain a comparable spatial resolution.

Nevertheless, a number of cross-modal validation studies combining LORETA with fMRI or PET have been published in recent years (Worrell et al., 2000; Pascual-Marqui et al., 2002; Vitacco et al., 2002; Pizzagalli et al., 2003; Mulert et al., 2004). In general, these studies reported substantial consistency between the spatial localization obtained with LORETA and that found with fMRI methods, with discrepancies of about 1.5 cm. Therefore, bearing these limitations in mind and within this average error range, the time-dependent cortical localization obtained with LORETA can be considered reasonably reliable.

Statistical analyses

Differences in the temporal dynamics of ERP maps

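To test for possible differences between the ERP scalp potential maps of each of the four emotions and those of the neutral condition, we computed a global measure of dissimilarity between two scalp potential maps (topographic analysis of variance, TANOVA) (Strik et al., 1998). The dissimilarity, d, is defined as

$$d = \sqrt{\sum_{e=1}^{N_E}\left(\frac{\frac{1}{N_S}\sum_{s=1}^{N_S} V_{s1e}}{A_1} - \frac{\frac{1}{N_S}\sum_{s=1}^{N_S} V_{s2e}}{A_2}\right)^{2}}$$

where V_{s1e} and V_{s2e} denote the average reference potential for the same subject s at the same electrode e under conditions 1 and 2, respectively, N_S is the number of subjects and N_E the number of electrodes, whereas A_1 and A_2 are the global field power over all subjects for conditions 1 and 2, defined for condition c as

$$A_c = \sqrt{\sum_{e=1}^{N_E}\left(\frac{1}{N_S}\sum_{s=1}^{N_S} V_{sce}\right)^{2}}$$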
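The dissimilarity can be sketched directly in code: each condition's grand-mean map (the average over subjects of the average-referenced maps) is divided by its global field power, taken as the root of the summed squared grand-mean potentials across electrodes, and d is the Euclidean distance between the two normalized maps, so it ranges from 0 (identical topographies) to 2 (inverted topographies). The randomization test described in the next paragraph is sketched here as a within-subject permutation of condition labels, a common scheme for TANOVA; the number of permutations is our assumption, and no correction across the 128 maps is applied in this sketch.

```python
import math
import random


def _grand_mean(maps):
    """Average per-subject maps (lists of average-referenced potentials)."""
    return [sum(col) / len(maps) for col in zip(*maps)]


def global_field_power(v):
    """A_c: root of the summed squared grand-mean potentials over electrodes."""
    return math.sqrt(sum(x * x for x in v))


def dissimilarity(maps1, maps2):
    """Global dissimilarity d between the GFP-normalized grand-mean maps."""
    v1, v2 = _grand_mean(maps1), _grand_mean(maps2)
    a1, a2 = global_field_power(v1), global_field_power(v2)
    return math.sqrt(sum((x / a1 - y / a2) ** 2 for x, y in zip(v1, v2)))


def tanova_p(maps1, maps2, n_perm=1000, seed=0):
    """Randomization test for one pair of maps: swap labels within subjects."""
    rng = random.Random(seed)
    observed = dissimilarity(maps1, maps2)
    hits = 1  # the observed labelling counts as one permutation
    for _ in range(n_perm):
        perm1, perm2 = [], []
        for m1, m2 in zip(maps1, maps2):
            if rng.random() < 0.5:  # exchange this subject's condition labels
                m1, m2 = m2, m1
            perm1.append(m1)
            perm2.append(m2)
        if dissimilarity(perm1, perm2) >= observed:
            hits += 1
    return observed, hits / (n_perm + 1)
```

Because both grand-mean maps are normalized by their global field power, d is sensitive to differences in topography (and hence in the configuration of active generators) rather than to differences in overall response strength.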

Statistical significance for each pair of maps was assessed non-parametrically with a randomization test corrected for multiple comparisons. Because each of the 128 ERP maps for a given emotion was contrasted with the corresponding map for the neutral condition, differences in temporal dynamics between each emotion and the neutral condition were assessed at the high temporal resolution of 3.9 ms. Following Esslen et al. (2004), a period of one or more consecutive ERP maps that differed significantly between a given emotion and the neutral condition is defined as a ‘time segment’.
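Grouping significant maps into time segments is simple bookkeeping. The sketch below assumes one already-corrected p-value per map, map 0 at stimulus onset, and a latency of i × 1000/256 ms for map i; the exact rounding used to report segment boundaries in milliseconds is not stated, and `time_segments` is a hypothetical name.

```python
SAMPLE_MS = 1000 / 256  # latency step between consecutive ERP maps (~3.9 ms)


def time_segments(p_values, alpha=0.05):
    """Group runs of consecutive significant maps into time segments.

    p_values[i] is the (already corrected) p-value of map i, with map 0 at
    stimulus onset. Returns (first_map, last_map, start_ms, end_ms) tuples.
    """
    segments = []
    start = None
    for i, p in enumerate(list(p_values) + [1.0]):  # sentinel closes a final run
        if p < alpha:
            if start is None:
                start = i
        elif start is not None:
            segments.append((start, i - 1))
            start = None
    return [(a, b, a * SAMPLE_MS, b * SAMPLE_MS) for a, b in segments]
```

For instance, p-values of `[0.5, 0.01, 0.02, 0.3, 0.04]` yield two segments: maps 1 to 2 (3.9 to 7.8 ms) and the single map 4 (15.6 ms).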

Time-dependent localization of significant differences in temporal dynamics

When the TANOVA identified one or more time segments in which a given emotion condition differed significantly from the neutral condition (P < 0.05), an averaged LORETA image for those time segments was computed for each voxel as the average current density magnitude over all instantaneous images. Time-dependent localization was based on voxel-by-voxel t-tests of LORETA images comparing each emotion with the neutral condition. The method used for testing statistical significance is non-parametric and therefore does not require the data to be normally distributed. It estimates, via randomization, the empirical probability distribution of the max-statistic (the maximum of the t-statistics) under the null hypothesis of no difference between conditions, and thereby corrects for multiple comparisons (i.e. for the collection of t-tests performed for all voxels and all time samples) (Nichols and Holmes, 2002).
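The max-statistic correction can be sketched for a paired design in which each subject contributes one difference image (emotion minus neutral). Under the null hypothesis the sign of each subject's difference is exchangeable, so signs are flipped at random for whole images, which preserves spatial correlations; the maximum |t| across voxels in each permutation forms the null distribution. Whether the authors flipped signs or permuted labels, used one- or two-sided statistics, or how many permutations they ran is not stated; those choices, and the function names, are assumptions.

```python
import math
import random


def paired_t_map(diffs):
    """One-sample t per voxel on paired differences (emotion - neutral).

    diffs is a list over subjects, each a list over voxels.
    """
    n = len(diffs)
    t_map = []
    for voxel in zip(*diffs):
        m = sum(voxel) / n
        var = sum((x - m) ** 2 for x in voxel) / (n - 1)
        se = math.sqrt(var / n)
        t_map.append(m / se if se > 0 else 0.0)  # guard against zero variance
    return t_map


def max_t_pvalues(diffs, n_perm=1000, seed=0):
    """Voxel-wise p-values corrected with the permutation max-|t| distribution."""
    rng = random.Random(seed)
    observed = paired_t_map(diffs)
    max_null = []
    for _ in range(n_perm):
        # Flip the sign of each subject's whole difference image at random.
        flipped = []
        for subject in diffs:
            sign = rng.choice((-1.0, 1.0))
            flipped.append([sign * x for x in subject])
        max_null.append(max(abs(t) for t in paired_t_map(flipped)))
    return [
        (1 + sum(m >= abs(t) for m in max_null)) / (n_perm + 1)
        for t in observed
    ]
```

Because every voxel is compared against the same distribution of maxima, a voxel is declared significant only if its |t| exceeds what the largest voxel would typically reach by chance, which is what controls the family-wise error across all voxels.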

RESULTS

Temporal dynamics

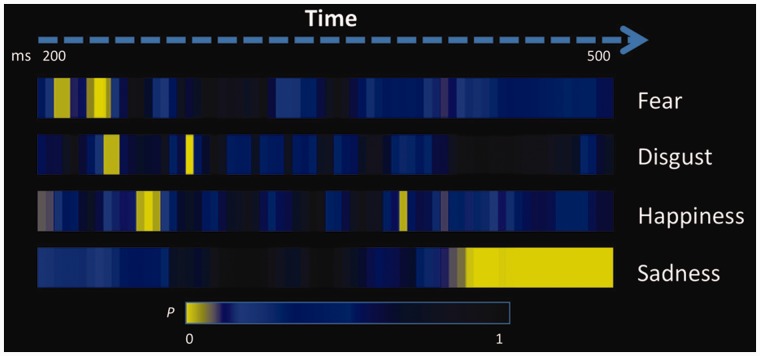

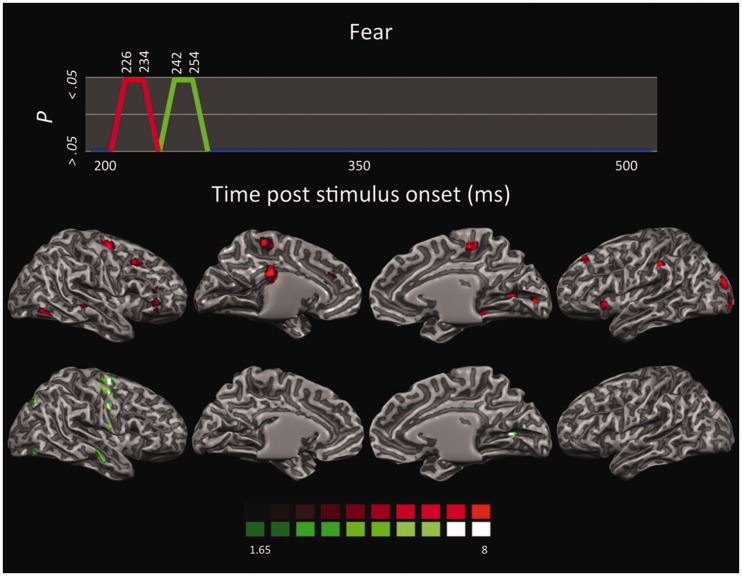

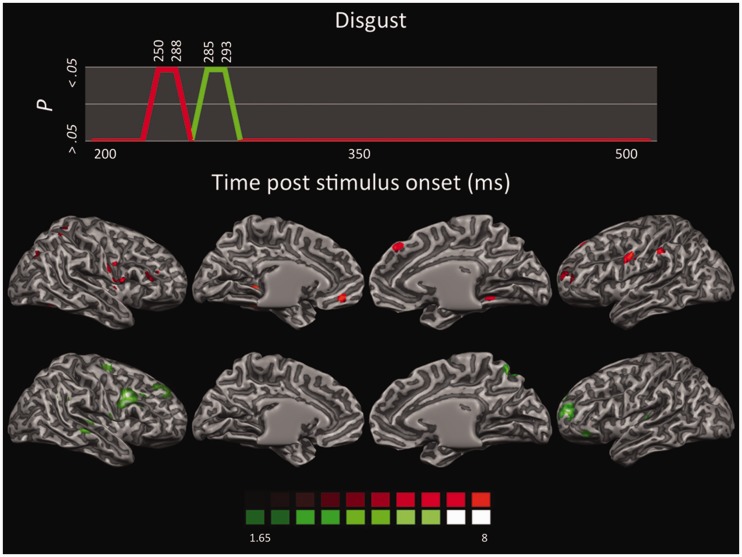

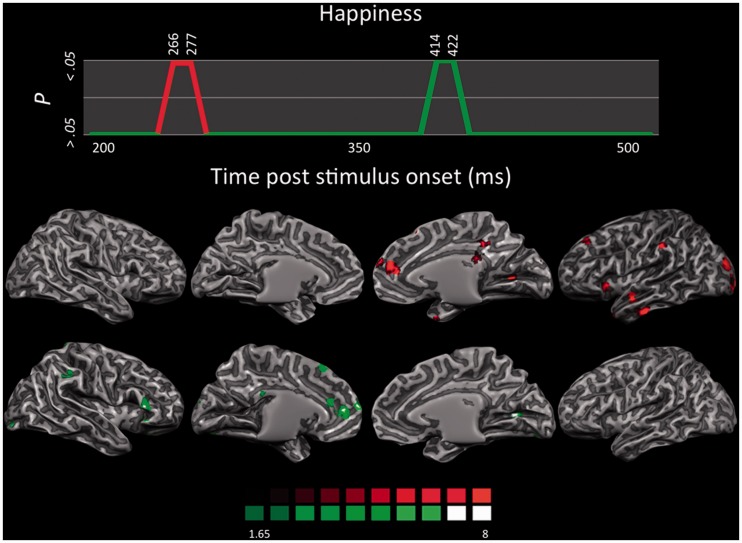

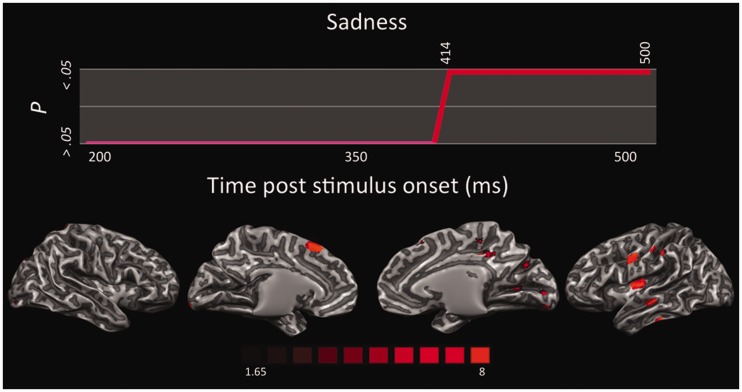

The TANOVA of ERP maps comparing each of the four emotions with the neutral condition showed that the observation of each basic emotion was accompanied by specific temporal dynamics, with a clear-cut sequence across emotions as well as differences in the onset, duration and number of significant time segments (Figure 1). No time segment of any emotion differed significantly from the neutral condition before 200 ms post-stimulus onset. Fear elicited the earliest significant effect, in the time segment from 226 to 234 ms and again from 242 to 254 ms post-stimulus onset. Disgust was the next emotion to induce significant ERP differences from the neutral condition, in the time segments from 250 to 258 ms and from 285 to 293 ms. Happiness was the third emotion, with a first significant time segment from 266 to 277 ms and a later one from 414 to 422 ms post-stimulus onset. The last emotion to trigger significant ERP responses compared with neutral stimuli was sadness, with a single long time segment of contiguous significant maps from 414 to 500 ms post-stimulus onset. Thus, among the four emotions investigated, fear triggered the fastest significant neural response, followed shortly thereafter by disgust, and then by happiness and sadness.

Fig. 1.

Time segments of significant differences for each emotion condition compared with the neutral condition. Yellow segments indicate significant differences (P < 0.05) between ERP maps, as resulting from the TANOVA.

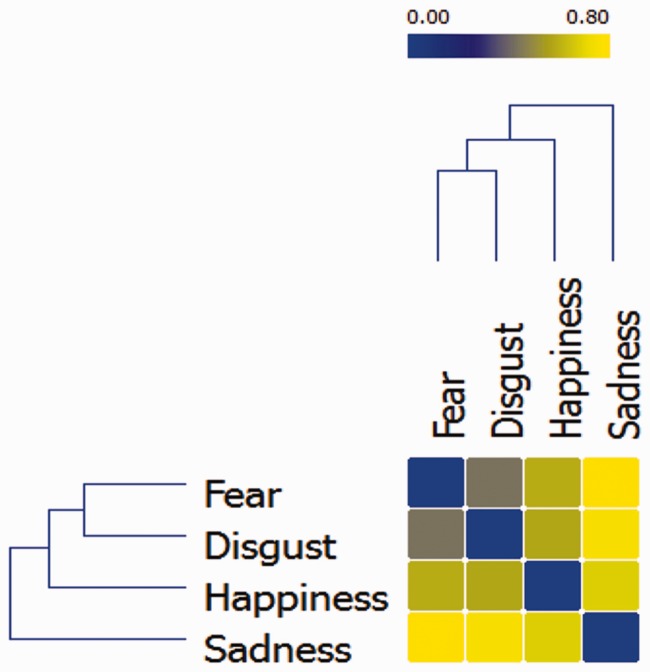

The similarity/dissimilarity between the temporal profiles of the four emotions can be quantified and represented spatially by computing a distance matrix in which the distance between emotions decreases as the similarity of their neural temporal dynamics increases (i.e. distance = 1 − similarity). The matrix was reordered to minimize the cross-correlation values of the diagonal and submitted to a hierarchical clustering algorithm to obtain a dendrogram of the task-related networks (Johansen-Berg et al., 2004). This procedure groups data over a variety of scales by creating a cluster tree that represents a multilevel hierarchy, in which clusters at one level are joined to clusters at the next level, thereby enabling us to choose the most appropriate level for each emotion. The dendrogram was built with Ward's method, which uses an analysis-of-variance approach to evaluate the distances between clusters (Ward, 1963) (Figure 2). Results indicate that fear and disgust had similar temporal dynamics, as they were grouped at the same level. Conversely, happiness and sadness were each positioned at a different level, indicating temporal profiles dissimilar from each other as well as from those of fear and disgust.
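Ward clustering on a dissimilarity matrix can be sketched with the Lance–Williams update, which recomputes the distance between a newly merged cluster and every other cluster from the previous distances. Ward's criterion is strictly defined for squared Euclidean distances, so applying it directly to 1 − r values, as here, is an assumption; the function name and the toy matrix used to exercise it are hypothetical, not the data behind Figure 2.

```python
def ward_linkage(labels, dist):
    """Agglomerative Ward clustering via the Lance-Williams update.

    dist maps (a, b) label pairs (either order) to a dissimilarity such as
    1 - r. Returns the merges as (cluster_i, cluster_j, merge_distance).
    """
    sizes = {lab: 1 for lab in labels}  # current clusters and their sizes
    d = {}
    for a in labels:
        for b in labels:
            if a != b:
                d[frozenset((a, b))] = dist[(a, b)] if (a, b) in dist else dist[(b, a)]
    merges = []
    while len(sizes) > 1:
        pair = min(d, key=d.get)  # closest pair of current clusters
        i, j = sorted(pair)
        d_ij = d.pop(pair)
        n_i, n_j = sizes.pop(i), sizes.pop(j)
        new = f"({i}+{j})"
        for k, n_k in sizes.items():
            d_ki = d.pop(frozenset((k, i)))
            d_kj = d.pop(frozenset((k, j)))
            # Lance-Williams coefficients for Ward's method
            d[frozenset((k, new))] = (
                (n_i + n_k) * d_ki + (n_j + n_k) * d_kj - n_k * d_ij
            ) / (n_i + n_j + n_k)
        sizes[new] = n_i + n_j
        merges.append((i, j, d_ij))
    return merges
```

With a toy matrix in which A–B = 0.1 and C–D = 0.3 while all cross distances are 0.8 to 0.95, the sketch first merges A with B, then C with D, and finally joins the two pairs at a Ward distance of 1.55; the merge distances are the heights at which branches join in a dendrogram.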

Fig. 2.

Hierarchical dendrogram displaying the similarity/dissimilarity between the neural temporal dynamics of the four emotions. Similarity is represented as the inverse correlation, from blue (highest similarity; r = 1) to yellow (highest dissimilarity; r = 0).

Correspondence between subjective emotion experience and neural temporal dynamics of emotion perception

Participants labeled the emotional pictures in the expected emotion categories, with high concordance between the intended emotion, as defined in the validation experiment, and the self-reported categorization (Cohen's κ = 0.77, P < 0.001). Moreover, participants’ evaluations of their own subjective experience along the valence and arousal dimensions were in good agreement with the emotional content of the pictures, indicating that they felt the expected emotion when presented with the stimuli (Table 1).

Table 1.

Mean (M) and standard deviation (s.d.) of valence and arousal of the different emotional stimuli as judged by participants in the behavioural experiment following the EEG experiment

| Emotion | Valence M | Valence s.d. | Arousal M | Arousal s.d. |
|---|---|---|---|---|
| Happiness | 7.28 | 1.66 | 5.05 | 2.21 |
| Sadness | 2.65 | 1.53 | 4.88 | 2.11 |
| Disgust | 3.14 | 1.68 | 5.15 | 2.25 |
| Fear | 2.95 | 1.72 | 5.46 | 2.14 |

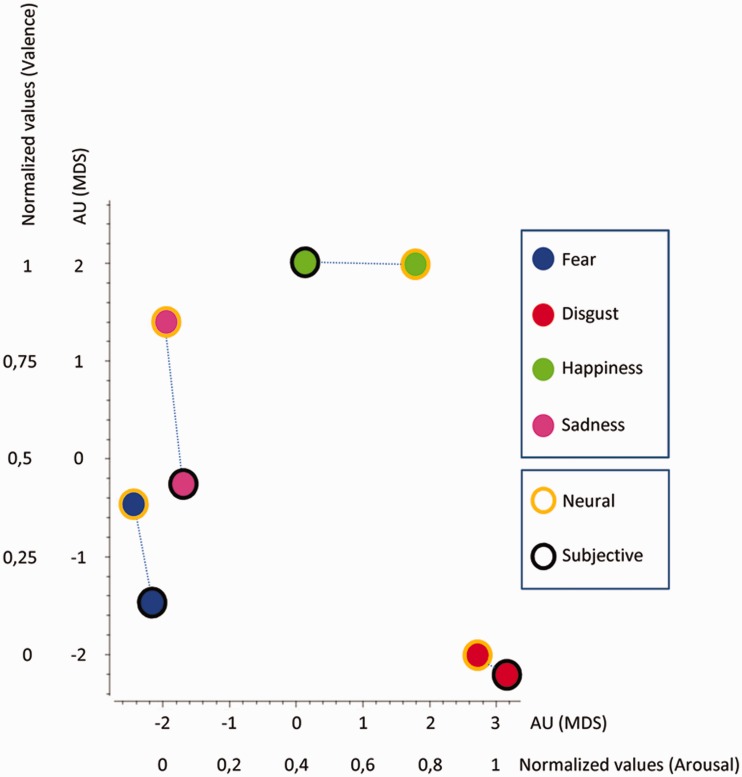

To investigate the correspondence between the neural temporal dynamics of passive emotion perception, derived from the EEG data, and the psychological representation of emotions, derived from the subsequent subjective ratings of emotional experience, we performed a representational similarity analysis (Kriegeskorte et al., 2008).

As the two datasets have different dimensions, we reduced the dimensionality of the neural temporal dynamics data with MDS to make the EEG data comparable with the dimensionality of the subjective experience ratings. We thus transformed the neural temporal dynamics data of each emotion category into a vector containing the values at each time point. A representational similarity matrix was then built by computing the correlation (r) between each pair of vectors, and the distance matrix (or representational dissimilarity matrix) was set up as 1 − r. Finally, MDS was applied to the distance matrix to provide a two-dimensional geometrical representation of the EEG results, comparable to the two-dimensional representation of subjective emotional experience given by the ratings of valence and arousal. EEG data of emotions separated by shorter distances were represented closer to each other. The final MDS graph results from superimposing the graph displaying the two-dimensional representation of the neural temporal dynamics of the four emotions on the graph reporting the two-dimensional representation of the subjective evaluation of arousal and valence. In this final graph, neural temporal dynamics data are expressed in arbitrary units (AU), whereas subjective scores of emotional experience are reported as normalized values (Figure 3).
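The pipeline just described can be sketched as a representational dissimilarity matrix of 1 − Pearson r between the emotions' time courses, followed by classical (Torgerson) MDS, which double-centers the squared dissimilarities and scales the two leading eigenvectors by the square roots of their eigenvalues. The text does not say which MDS variant was used, so classical MDS is an assumption; eigenvectors are found by power iteration with deflation only to keep the sketch dependency-free, and it assumes the two leading eigenvalues are positive and distinct.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def rdm(vectors):
    """Representational dissimilarity matrix: 1 - r between time courses."""
    return [[1 - pearson(u, v) for v in vectors] for u in vectors]


def classical_mds(D, dims=2, iters=500, seed=0):
    """Torgerson MDS of a symmetric dissimilarity matrix D."""
    n = len(D)
    sq = [[d * d for d in row] for row in D]
    row_mean = [sum(row) / n for row in sq]
    grand = sum(row_mean) / n
    # Double centering: B = -1/2 * J D^2 J
    B = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + grand) for j in range(n)]
         for i in range(n)]
    rng = random.Random(seed)
    coords = [[0.0] * dims for _ in range(n)]
    for k in range(dims):
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):  # power iteration for the leading eigenvector
            w = [sum(B[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        Bv = [sum(B[i][j] * v[j] for j in range(n)) for i in range(n)]
        lam = sum(vi * bvi for vi, bvi in zip(v, Bv))  # Rayleigh quotient
        scale = math.sqrt(max(lam, 0.0))
        for i in range(n):
            coords[i][k] = v[i] * scale
        # Deflate so that the next pass finds the next eigenvector
        B = [[B[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords
```

Given a genuinely two-dimensional set of Euclidean distances, the recovered coordinates reproduce those distances up to rotation and reflection, which is why only relative positions, not axes, are interpretable in an MDS plot.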

Fig. 3.

MDS displaying the relations between neural temporal dynamics and subjective experience for all four emotions. EEG values are reported in AU, whereas subjective ratings of valence and arousal are reported as normalized values to make the two datasets comparable.

We found a similar distribution of neural temporal dynamics and subjective experience for all four emotions: for each emotion, the two classes of data tended to cluster together. This indicates that the psychological categories that typically define emotions, such as fear, disgust or happiness, have a rather close correspondence at the brain level, at least in terms of neural temporal dynamics.

Time-dependent spatial localization

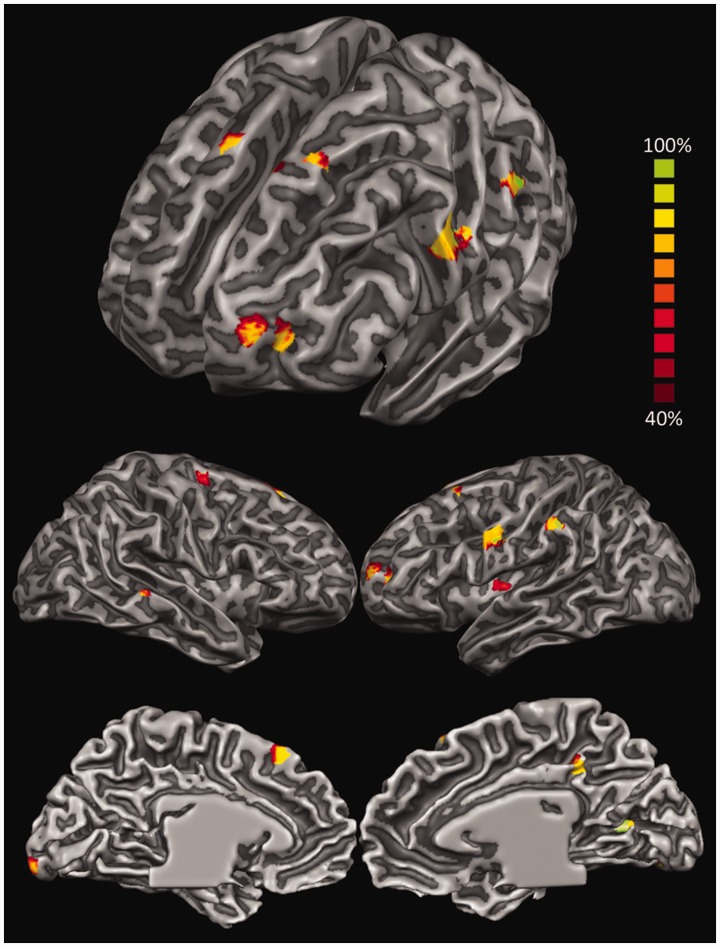

LORETA analysis was applied to all time segments for which the TANOVA returned a significant difference in the comparison between each of the four emotion conditions and the neutral condition. As there were two significant time segments for fear, disgust and happiness, and one significant time segment for sadness, this resulted in a total of seven activation maps.

Initially, all maps resulting from the contrasts between each emotion and the neutral condition were pooled to investigate the neural space common to the processing of all four emotions. We thus built a probabilistic map reporting the inferred location of the neural generators active in at least 40% of the activation maps (Figure 4 and Table 2). The analysis highlighted activity in brain areas previously found to be involved in emotion perception as well as in several related functions (Adolphs, 2002; Phan et al., 2002; Murphy et al., 2003; de Gelder et al., 2006; Kober et al., 2008; Pessoa, 2008; Fusar-Poli et al., 2009b; Sabatini et al., 2009; de Gelder et al., 2010; Tamietto and de Gelder, 2010; Vytal and Hamann, 2010; Said et al., 2011; Lindquist et al., 2012). Indeed, from posterior to anterior regions, these activations encompass occipitotemporal areas involved in low- and high-order visual perception of socially and emotionally salient aspects of the stimuli (Said et al., 2011); lateral parietal areas important in the bottom-up attentional modulation of visual perception as well as in motor resonance and empathic processing (Corbetta and Shulman, 2002; Keysers, 2011; Borgomaneri et al., 2012, 2013), especially in association with frontal motor and supplementary motor areas (SMA) (Keysers et al., 2010); insular regions (Ins) relevant to interoception (Craig, 2009); the posterior cingulate cortex (PCC), which is involved in the retrieval and representation of contextual information (Maddock, 1999) as well as in self-generated thoughts during emotion processing (Greicius et al., 2003); and, finally, lateral prefrontal areas involved in executive functions, goal-directed attentional control, response selection and theory of mind (Miller, 2000; Corbetta and Shulman, 2002; Wood and Grafman, 2003; Amodio and Frith, 2006; Pessoa, 2008).
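The 40% criterion is easiest to read as a count: with seven activation maps, 40% corresponds to 2.8 maps, so a voxel enters the probabilistic map only if it is significant in at least three of them. A minimal sketch, assuming each activation map has already been thresholded into a boolean vector over voxels (the function name is ours):

```python
def probabilistic_map(activation_maps, min_percent=40):
    """Voxels significant in at least min_percent% of the activation maps.

    activation_maps is a list of maps, each a list of booleans over voxels.
    Returns the selected voxel indices and the minimum number of maps needed.
    """
    n_maps = len(activation_maps)
    # Integer ceiling of min_percent% of n_maps (avoids float rounding issues)
    min_count = -(-min_percent * n_maps // 100)
    counts = [sum(col) for col in zip(*activation_maps)]  # maps per voxel
    return [v for v, c in enumerate(counts) if c >= min_count], min_count
```

With the seven maps of the present study (two each for fear, disgust and happiness, one for sadness), `min_count` evaluates to 3.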

Fig. 4.

LORETA-based statistical non-parametric maps (SnMP) displaying the areas significantly activated in at least 40% of the activation maps and during the entire 500 ms after stimulus onset for all emotions pooled together and contrasted against the neutral condition.

Table 2.

Significant differences in brain areas activated in at least 40% of the activation maps during the entire 500 ms after stimulus onset for all emotions pooled together and contrasted against the neutral condition

| Lobe | Surface | Area | Hemisphere | Talairach X | Talairach Y | Talairach Z |
|---|---|---|---|---|---|---|
| Frontal | Lateral | alPFC/SFG | L | −20 | 55 | 16 |
| | | dlPFC/SFG | L/R | −27/12 | 16/24 | 55/55 |
| | | MI/PCG | L | −56 | −1 | 32 |
| | | SMA/MFG | R | 27 | −8 | 58 |
| | Medial | dmPFC/SFG | L | −6 | 22 | 48 |
| Insular | | midIns | L | −37 | −6 | 7 |
| Limbic | | PCC | R | 6 | −38 | 40 |
| Parietal | Lateral | IPL/SMG | L | −57 | −31 | 40 |
| Temporal | Lateral | STS | R | 59 | −32 | 5 |
| Occipital | Medial | V1–V2/OP | L | −13 | −98 | 3 |
| | | V1/CS | R | 23 | −68 | 10 |

alPFC, anterolateral prefrontal cortex; dlPFC, dorsolateral prefrontal cortex; SFG, superior frontal gyrus; MI, primary motor cortex; PCG, precentral gyrus; SMA, supplementary motor area; MFG, middle frontal gyrus; dmPFC, dorsomedial prefrontal cortex; midIns, mid-insula; PCC, posterior cingulate cortex; IPL, inferior parietal lobule; SMG, supramarginal gyrus; STS, superior temporal sulcus; V1, primary visual cortex; V2, secondary visual cortex; OP, occipital pole; CS, calcarine sulcus.

Subsequently, we investigated the time-dependent activity elicited by each emotion condition. Figures 5–8 display the statistical LORETA maps for each significant time segment and emotion compared with the neutral condition, and all activated areas are summarized in Table 3 as a function of emotion. All contrasts yielded significant activations relative to the neutral condition, and no significant deactivations were found.

Fig. 5.

LORETA-based SnMP for the contrast fear vs neutral as a function of the two time segments showing significant activations (first time segment in red; second time segment in green).

Fig. 6.

LORETA-based SnMP for the contrast disgust vs neutral as a function of the two time segments showing significant activations (first time segment in red; second time segment in green).

Fig. 7.

LORETA-based SnMP for the contrast happiness vs neutral as a function of the two time segments showing significant activations (first time segment in red; second time segment in green).

Fig. 8.

LORETA-based SnMP for the contrast sadness vs neutral in the continuous time segment showing significant activations.

Table 3.

Significant differences in brain areas as a function of each emotion contrasted against the neutral condition

| Lobe | Surface | Area | Hemisphere | Talairach Coordinates |

Emotion |

|||||

|---|---|---|---|---|---|---|---|---|---|---|

| X | Y | Z | Fear | Disgust | Happiness | Sadness | ||||

| Frontal | ||||||||||

| Lateral | ||||||||||

| alPFC/SFG | L | −24 | 48 | 15 | • | |||||

| dlPFC/SFG | L | −18 | 34 | 33 | • | • | • | |||

| dlPFC/MFG | R | 20 | 23 | 38 | • | • | ||||

| dlPFC/MFG | R | 16 | 30 | 45 | • | • | ||||

| vlPFC/IFG | L | −39 | 36 | 4 | • | |||||

| vlPFC/IFG | R | 50 | 25 | 13 | • | • | • | |||

| vPMC/IFG | R | 45 | 3 | 30 | • | • | ||||

| MI/PCG | L | −38 | −27 | 54 | • | |||||

| MI/PCG | L | −55 | −4 | 27 | • | • | • | |||

| MI/PCG | R | 44 | −9 | 49 | • | • | ||||

| Medial | ||||||||||

| preSMA/SFG | L | −20 | 16 | 56 | • | • | • | |||

| dmPFC/MFG | L | −12 | 45 | 11 | • | |||||

| dmPFC/MFG | R | 6 | 43 | 21 | • | • | ||||

| PCL | L | −3 | −29 | 55 | • | |||||

| PCL | R | 7 | −32 | 48 | • | • | • | |||

| vmPFC/MFG | L | −8 | 43 | −7 | • | |||||

| Orbital | ||||||||||

| OFC/IFG | L | −33 | 33 | −7 | • | • | ||||

| OFC/IFG | R | 16 | 31 | −17 | • | |||||

| Insular | ||||||||||

| antIns | L | −30 | 23 | 1 | • | • | ||||

| midIns | L | −37 | −7 | 7 | • | |||||

| midIns | R | 35 | −5 | 8 | • | |||||

| postIns | L | −33 | −18 | 7 | • | |||||

| Limbic | ||||||||||

| ACC | L | −13 | 39 | 13 | • | • | ||||

| PCC | L | −4 | −25 | 32 | • | • | ||||

| PCC | R | 3 | −27 | 29 | • | • | ||||

| Parietal | ||||||||||

| Lateral | ||||||||||

| SI/PoG | L | −45 | −20 | 39 | • | • | ||||

| SI/PoG | R | 58 | −11 | 26 | • | • | ||||

| SPL | R | 27 | −45 | 60 | • | • | ||||

| IPL/SMG | L | −57 | −31 | 40 | • | • | • | • | ||

| IPL/SMG | R | 47 | −37 | 31 | • | • | ||||

| Temporal | ||||||||||

| Lateral | ||||||||||

| TP | L | −33 | 17 | −28 | • | • | ||||

| TP | R | 28 | 12 | −28 | • | |||||

| ITS | L | −53 | −12 | 21 | • | • | ||||

| STS | L | −48 | −20 | −4 | • | • | ||||

| STS | R | 65 | −24 | 0 | • | • | ||||

| Orbital | ||||||||||

| PHG | L | −37 | −23 | −19 | • | • | ||||

| FG | L | −35 | −38 | −17 | • | |||||

| Occipital | ||||||||||

| Lateral | ||||||||||

| V3d/MOG | L | −23 | −88 | 16 | • | |||||

| V3d/MOG | L | −32 | −75 | 18 | • | |||||

| V3d/PCUN | R | −23 | −74 | 32 | • | • | • | |||

| IOG | R | 37 | −65 | −6 | • | • | ||||

| Medial | ||||||||||

| V1−V2/OP | L | −13 | −97 | 5 | • | • | ||||

| V1/CS | R | 22 | −71 | 10 | • | • | • | |||

| V4v/LG | R | 21 | −88 | 12 | • | • | ||||

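ACC, anterior cingulate cortex; alPFC, anterolateral prefrontal cortex; antIns, anterior insula; CS, calcarine sulcus; dlPFC, dorsolateral prefrontal cortex; dmPFC, dorsomedial prefrontal cortex; FG, fusiform gyrus; IFG, inferior frontal gyrus; IOG, inferior occipital gyrus; IPL, inferior parietal lobule; ITS, inferior temporal sulcus; LG, lingual gyrus; MFG, middle frontal gyrus; MI, primary motor cortex; midIns, mid-insula; MOG, middle occipital gyrus; OFC, orbitofrontal cortex; OP, occipital pole; PCC, posterior cingulate cortex; PCG, precentral gyrus; PCL, paracentral lobule; PCUN, precuneus; PHG, parahippocampal gyrus; PoG, postcentral gyrus; postIns, posterior insula; preSMA, pre-supplementary motor area; SFG, superior frontal gyrus; SI, primary somatosensory cortex; SMG, supramarginal gyrus; SPL, superior parietal lobule; STS, superior temporal sulcus; TP, temporal pole; V1, primary visual cortex; V2, secondary visual cortex; V3d, dorsal visual area V3; V4v, ventral visual area V4; vlPFC, ventrolateral prefrontal cortex; vmPFC, ventromedial prefrontal cortex; vPMC, ventral premotor cortex.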

Time-dependent localization of fear

Overall, fear perception activated a host of neural structures located primarily in the right hemisphere (Figure 5). The first time segment (226–234 ms) revealed significant activations in striate and extrastriate visual areas along the ventral stream, including the right superior temporal sulcus (STS) and left temporal pole, which are functionally and anatomically connected with the amygdala (Vuilleumier et al., 2004; Bach et al., 2011; Tamietto et al., 2012). The left anterior insula and the bilateral paracentral lobule (PCL) were also activated; these areas are involved in interoception (Craig, 2009) and in the emotion-related modulation of interoceptive feelings (Roy et al., 2009), respectively. Moreover, significant activations were found in the left inferior parietal lobule (IPL), which is implicated in the representation of high-level features of emotional visual stimuli (Becker et al., 2012; Sarkheil et al., 2012; Mihov et al., 2013), and in the ventrolateral prefrontal cortex, which is involved in object categorization and in feature-based representation of stimuli. Together, these two regions form part of the ventral frontoparietal network that directs attention toward salient stimuli in the environment (Corbetta and Shulman, 2002; Vuilleumier, 2005). The left cingulate cortex was also active in both its posterior and anterior portions, although the posterior activation was much more extensive. Whereas the anterior cingulate cortex (ACC) appears to be involved in evaluating the emotional and motivational value of environmental signals and is anatomically connected with the amygdala (Vogt et al., 1987), the PCC is associated with evaluative functions and the conceptualization of core affective feelings, two functions often performed in synergy with the prefrontal cortex. Indeed, the right dorsolateral prefrontal cortex (dlPFC) was also active; this area additionally plays a role in executive and goal-directed control of attention as well as in emotion regulation (Ochsner et al., 2004).

The second time segment (242–254 ms) showed a later sweep of activity in the left striate and right extrastriate occipitotemporal areas, in the right primary motor and somatosensory areas, and in right premotor areas. These premotor areas are involved in action planning and execution and in motor resonance (Rizzolatti et al., 2001), including during the perception of emotions from bodily expressions and from IAPS stimuli, as in the present case (Borgomaneri et al., 2012, 2013).

Time-dependent localization of disgust

Perception of disgust showed a predominant involvement of the right hemisphere, similar to that observed for fear (Figure 6). In the first time segment (250–288 ms), there were significant activations in extrastriate areas, such as the right precuneus and the left fusiform gyrus (FG), although these were generally less extensive than the occipitotemporal activations reported for fear. The right superior parietal lobule (SPL) and left IPL were also activated, as were the right primary somatosensory cortex (SI) and the Ins. In the frontal lobe, the primary motor cortices (MI) were activated bilaterally, as were the left pre-supplementary motor area (preSMA), the right ventral premotor cortex (vPMC), the right dorsomedial prefrontal cortex (dmPFC), the left ventromedial prefrontal cortex (vmPFC) and, bilaterally, the ventrolateral prefrontal cortex (vlPFC). The activity in the dorsal and ventral regions of the medial prefrontal cortex is particularly interesting, as these cortical midline areas are associated with self-relevant processing: dorsal regions are more involved in assessing whether a stimulus is self-relevant, and ventral regions in elaborating on this self-relevance (Ochsner et al., 2004; Northoff et al., 2006; Waugh et al., 2010).

The second time segment (285–293 ms) showed small activations along the superior and inferior banks of the right STS and more sustained frontal activations, including large clusters in the left dlPFC and vlPFC, and in the left anterolateral prefrontal cortex and OFC. Although activity in the anterolateral prefrontal cortex and OFC has traditionally been associated with anger and aggressive behaviors (Grafman et al., 1996; Murphy et al., 2003; Vytal and Hamann, 2010), a recent meta-analysis found that the OFC is more consistently activated during the perception and experience of disgust (Lindquist et al., 2012). This is in keeping with physiological data indicating that the OFC is, on the one hand, a site of convergence between polymodal exteroceptive (i.e. from the external sensory modalities) and interoceptive (i.e. visceral) information (Barbas and Pandya, 1989; Barbas et al., 2003) and, on the other, involved in reversal learning and decision making (Rolls, 1996; Rolls et al., 1996). The OFC therefore seems well suited to integrate sensory information from the environment and from the internal milieu to guide behavior.

Time-dependent localization of happiness

Happiness activated fewer areas than fear and disgust, with a clear lateralization to the left hemisphere (Figure 7). In the first time segment (266–277 ms), occipitotemporal visual areas were activated, including the bilateral temporal pole and the left inferior temporal sulcus (ITS), as was the left IPL at the supramarginal gyrus, at locations also activated by fear and disgust. The left anterior Ins was also significantly active during this initial time segment. Finally, the left dlPFC, right dmPFC and right PCC were activated as well.

Areas activated in the second time segment (414–422 ms) again encompassed the posterior occipitotemporal visual areas, as well as the left parahippocampal gyrus (PHG), the right vlPFC, the left dlPFC and the left preSMA. Activation in the dmPFC extended more caudally to include the left ACC. The OFC was also activated bilaterally. Finally, a few active voxels were found at the border between the splenium of the corpus callosum and the PCC.

In both time segments, the processing of happy stimuli seems to be characterized by higher activity in the medial prefrontal cortex and in the ACC than that observed for fear and disgust. These two regions are closely interconnected (Petrides and Pandya, 1999), and activity in the ACC, for example, increases when depressed patients respond positively to pharmacological treatment (Mayberg et al., 2000). Moreover, activity in the vmPFC was found to be consistently associated with happiness across neuroimaging studies (Lindquist et al., 2012). Together with the IPL, both regions are also implicated in imitative behaviors, forming a network involved in emotional contagion (Lee et al., 2006).

Time-dependent localization of sadness

Sadness was associated with a single, long, continuous time segment, for which the putative neural generators were located in occipitotemporal visual areas, including the left STS and ITS; the left IPL, SI and right SPL; the left Ins; the right PCL; the left preSMA and MI; and the right dlPFC (Figure 8). The role of the Ins in the perception and experience of sadness has recently been emphasized by several meta-analyses. In addition, improvement of depressive symptoms after antidepressant treatment was strongly associated with greater grey matter volume in the Ins (Chen et al., 2007). On the basis of such findings, the selectivity of the insula for disgust has been questioned in favor of a more general role in tasks that involve awareness of bodily states (Vytal and Hamann, 2010; Lindquist et al., 2012). Moreover, higher activity in the mPFC and medial temporal gyrus has been reported in patients with major depression and anhedonia (Beauregard et al., 1998; Mitterschiffthaler et al., 2003).

Winner-take-all comparison across emotions

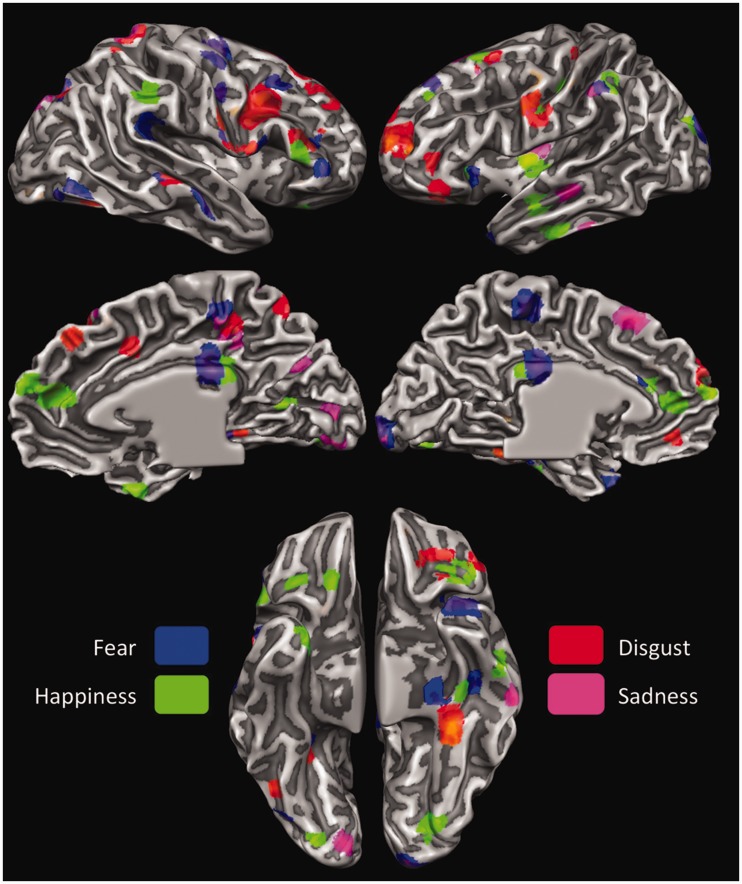

Finally, to provide a comparative assessment of the areas involved in processing the four basic emotions, and to highlight the possible selectivity of brain areas for specific emotions, we performed a winner-take-all analysis on the activation maps for each emotion. The activation maps for the four emotions were contrasted against each other, and each active voxel was attributed only to the emotion for which the correlation was strongest. As shown in Figure 9, the results are highly consistent with those obtained from the LORETA maps of the different time segments. The main differences concern more extensive activation of the bilateral PCC for fear and happiness, and more widespread activity in the bilateral OFC and the left posterior Ins for happiness.
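The voxel-wise assignment rule of a winner-take-all analysis can be sketched as follows. This is a minimal illustration, assuming a per-voxel score for each emotion (for instance, the statistic from its contrast against neutral) and a boolean mask of voxels that are active for at least one emotion; the function name, the inputs and the tie-breaking rule are assumptions, not the authors' implementation.

```python
from typing import Dict, List, Optional, Sequence


def winner_take_all(
    scores: Dict[str, Sequence[float]], active: Sequence[bool]
) -> List[Optional[str]]:
    """Assign each active voxel to the emotion with the highest score.

    `scores` maps an emotion label to one (hypothetical) score per voxel;
    inactive voxels are labeled None. Ties go to the emotion listed first.
    """
    emotions = list(scores)
    for name in emotions:
        if len(scores[name]) != len(active):
            raise ValueError(f"score map for {name!r} has the wrong length")
    labels: List[Optional[str]] = []
    for voxel, is_active in enumerate(active):
        if not is_active:
            labels.append(None)
            continue
        # max() returns the first maximal item, so insertion order breaks ties.
        labels.append(max(emotions, key=lambda name: scores[name][voxel]))
    return labels
```

Because each voxel receives exactly one label, the resulting map shows preferential rather than exclusive selectivity: a voxel labeled "fear" may still be active for other emotions, only less strongly.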

Fig. 9.

LORETA-based winner-take-all maps displaying areas most responsive to each of the four emotions contrasted against each other.

DISCUSSION

This study investigated the neural temporal dynamics associated with the perception of fear, disgust, happiness and sadness, their relation to subjective experience and self-report, and the time-dependent spatial localization of the ERP neural generators. Our novel findings complement and extend current knowledge of the signature patterns of neural activity that characterize emotion perception.

First, the high temporal resolution of EEG reveals that emotions are not continuously represented in the brain; rather, significant activations occur during only a few short time segments. This emphasizes the nature of emotions as dynamic processes, an aspect central to many emotion theories but largely overlooked in current emotion research. Many prior studies have used EEG/ERP to investigate emotion processing and have reported a number of interesting findings (Meeren et al., 2005; Righart and de Gelder, 2006; Olofsson et al., 2008). However, most of these studies examined predetermined time windows and analyzed preselected peaks and reference-dependent waveform components, including early components induced by IAPS stimuli (Smith et al., 2003; Delplanque et al., 2004; Carretie et al., 2006; Groen et al., 2013). In contrast, we used a continuous and global analysis approach that tested, in a data-driven fashion, all possible significant differences among all reference-independent topographic maps for the four emotion conditions vs the neutral condition. The few studies that used an approach similar to ours reported earlier effects, in ERP epochs around 150 ms after stimulus onset (Eger et al., 2003; Esslen et al., 2004). However, several methodological differences prevent any simple or direct comparison with the present study. Most notably, we used complex and varied images from the IAPS database, whereas the previous studies used facial expressions. There is evidence that the very rapid effects of emotion processing in visually responsive areas are driven by modulatory influences of subcortical emotion-sensitive structures, such as the amygdala or the pulvinar, on occipitotemporal areas along the ventral stream (Vuilleumier et al., 2004; Garrido et al., 2012). These subcortical structures perform a fast but coarse processing of the emotional aspects of the stimulus via magnocellular pathways (Morris et al., 1999; Vuilleumier et al., 2003; Whalen et al., 2004). Such coarse processing is possible for facial expressions and body postures, which remain recognizable at low spatial frequencies (Tamietto et al., 2009; Tamietto and de Gelder, 2010; Van den Stock et al., 2011). Yet, when emotions are conveyed by complex scenes whose relevant visual information also lies in high spatial frequencies, these structures may fail to process the stimulus and to enhance the early activity of cortical visual areas (de Gelder et al., 2002). Moreover, we used a passive exposure design and asked participants to evaluate the stimuli only in a dedicated behavioral session after the EEG experiment, whereas prior studies required participants to categorize or evaluate the facial expressions explicitly during recording, thereby combining perceptual functions with additional processes related to response selection and execution that may have influenced the EEG measurements.

Second, the neural temporal dynamics of emotion processing showed specific patterns of unfolding over different time windows that were characteristic of each of the four emotions studied. Here, too, the temporal features inherent in processing specific emotions have rarely been addressed with a bottom-up and continuous approach and, to our knowledge, have never been investigated with a passive exposure design and complex emotional scenes. These temporal dynamics display a profile that is biologically plausible and consistent with the evolutionary properties of each emotion. Indeed, negative emotions such as fear or disgust evolved to provide the organism with fast reflexive reactions to impending danger, such as flight, revulsion or the gag reflex (de Gelder et al., 2004; Tamietto et al., 2009; Caruana et al., 2011; Garrido et al., 2012; Adolphs, 2013). Consistent with this, these two emotions displayed the earliest significant differences from the neutral condition. Happiness and sadness, conversely, are less tied to immediate danger than fear or disgust, are less directly associated with instrumental reactions, and induce broader and more self-focused thinking (Fredrickson, 1998; Baumeister et al., 2001; Waugh et al., 2010). These latter emotions can also be considered to have a more pronounced social dimension, as their expressions are linked to affiliative or approach responses. These differences were reflected in the temporal dynamics of happiness and sadness, whose associated neural activity unfolded significantly later than that of fear and disgust. More specifically, the later onset and prolonged activation for sadness agree with clinical evidence showing sustained activation of emotion-sensitive structures in depressed patients (Siegle et al., 2002).
Notably, when the different temporal dynamics were directly compared using a multilevel hierarchical clustering that enabled us to assess their similarity/dissimilarity, the emotions grouped according to their predicted biological and evolutionary significance, with fear and disgust clustered together and separated from happiness and sadness. These distances between neural temporal profiles mirror distances measured in the subjective categorization of emotional facial expressions, as previous behavioral studies reported maximal distances from sadness to fear and from disgust to happiness (Russell and Bullock, 1985; Posner et al., 2005).
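Agglomerative clustering of the emotions can be sketched with average linkage on a dissimilarity matrix between temporal profiles. The linkage rule, the dictionary-of-pairs input layout and the function name are assumptions made for illustration; the section does not specify the exact algorithm behind the multilevel hierarchical clustering.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Tuple

Cluster = FrozenSet[str]


def average_linkage(
    labels: List[str], dist: Dict[FrozenSet[str], float]
) -> List[Tuple[Cluster, Cluster, float]]:
    """Agglomerative clustering with average linkage.

    `dist` maps frozenset({a, b}) to the dissimilarity between items a and b.
    Returns the merges in order as (cluster_a, cluster_b, linkage_distance).
    """

    def linkage(a: Cluster, b: Cluster) -> float:
        # Average of all pairwise item distances between the two clusters.
        total = sum(dist[frozenset((x, y))] for x in a for y in b)
        return total / (len(a) * len(b))

    clusters: List[Cluster] = [frozenset([label]) for label in labels]
    merges: List[Tuple[Cluster, Cluster, float]] = []
    while len(clusters) > 1:
        # Merge the closest pair of current clusters.
        a, b = min(combinations(clusters, 2), key=lambda pair: linkage(*pair))
        merges.append((a, b, linkage(a, b)))
        clusters = [c for c in clusters if c != a and c != b] + [a | b]
    return merges
```

The list of merges, read together with their linkage distances, is the information a dendrogram displays: the earlier and lower a merge, the more similar the grouped items.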

Third, we directly investigated the correspondence between the neural temporal dynamics, as emerging from EEG data during passive emotion perception, and the psychological representation of emotions, as emerging from the subjective ratings of the same stimuli in the subsequent behavioral task. Our goal was to assess, with a fully data-driven procedure, the extent to which psychological concepts of individual emotions, along with their valence and arousal attributes, pick out distinguishable neural states with consistent and specific neurophysiological temporal properties. The results showed that the four emotions clustered differently and, most importantly, revealed a close correspondence between the neural temporal dynamics of emotion perception and the subsequent, independent subjective ratings of the same stimuli: changes in the neural profile entailed a shift of the corresponding point in the spatial representation of subjective experience. This association suggests that neural dynamics carve out a representational space of basic emotions that is psychologically consistent with discrete emotion categories and that, in turn, seems to reflect distinguishable neural states. Because neural temporal dynamics were recorded during passive perception, there is no direct evidence that the participants actually felt the intended emotion. This is, however, a reasonable assumption, considering that the data on emotional experience were gathered from the same participants in response to the same stimuli presented during the EEG session. The high correspondence between neural temporal dynamics and subjective experience is all the more notable given that the two datasets were gathered in different sessions and under different task demands. The present results thus extend prior studies that also used MDS to map the psychological and physiological representations of emotions into a common space, reporting good agreement between the two domains (Sokolov and Boucsein, 2000).
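A simple way to quantify agreement between a neural and a subjective dissimilarity matrix is to correlate their off-diagonal entries. Note that this is not the MDS procedure used in the study but a swapped-in, complementary check of the kind used in representational similarity analysis; the function names and the pair-keyed input format are hypothetical.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, FrozenSet, List, Sequence


def off_diagonal(labels: Sequence[str], dist: Dict[FrozenSet[str], float]) -> List[float]:
    """Pairwise dissimilarities, listed in the order defined by `labels`."""
    return [dist[frozenset(pair)] for pair in combinations(labels, 2)]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / sqrt(sxx * syy)


def matrix_agreement(
    labels: Sequence[str],
    neural: Dict[FrozenSet[str], float],
    subjective: Dict[FrozenSet[str], float],
) -> float:
    """Correlate neural and subjective dissimilarities over all emotion pairs."""
    return pearson(off_diagonal(labels, neural), off_diagonal(labels, subjective))
```

With only four emotions there are just six pairs, so such a coefficient is descriptive at best; a rank correlation or a permutation test over label orderings would be natural refinements.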

Fourth, a critical aspect of our method was the three-dimensional, time-dependent localization of neural activity during the 3.9 ms time segments that significantly discriminated each of the four emotion categories from the neutral category. We found a hemispheric laterality effect, with increased activity in the left hemisphere for emotions with a positive valence, such as happiness, and in the right hemisphere for emotions with a negative valence, such as fear and disgust. These results are in line with the valence hypothesis of hemispheric lateralization in emotion processing, which postulates a preferential engagement of the left hemisphere for positive emotions and of the right hemisphere for negative emotions (Fusar-Poli et al., 2009a). A number of previous EEG studies reported similar laterality effects as a function of emotional valence, but did not localize the electrical sources of this activity (Hugdahl and Davidson, 2004). The study by Esslen et al. (2004) is a notable exception in that it used LORETA, and its authors reported results similar to ours in response to facial expressions.
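Turning significant time frames into time segments can be sketched as a run-length grouping. The sketch assumes, as the 3.9 ms figure suggests, one frame per sample at roughly 256 Hz (1000/256 ≈ 3.9 ms), frame 0 at stimulus onset, and an optional minimum run length; these choices and the function name are illustrative assumptions rather than the study's exact criteria.

```python
from typing import List, Optional, Sequence, Tuple


def significant_segments(
    significant: Sequence[bool],
    frame_ms: float = 1000 / 256,
    min_frames: int = 1,
) -> List[Tuple[float, float]]:
    """Group consecutive significant frames into (start_ms, end_ms) segments.

    Assumptions: frame 0 is stimulus onset and frames are frame_ms apart
    (1000/256 ms, about 3.9 ms, by default). Runs shorter than min_frames
    are dropped.
    """
    segments: List[Tuple[float, float]] = []
    start: Optional[int] = None
    # Append a non-significant sentinel so a run reaching the last frame is closed.
    for i, is_sig in enumerate(list(significant) + [False]):
        if is_sig and start is None:
            start = i
        elif not is_sig and start is not None:
            if i - start >= min_frames:
                segments.append((start * frame_ms, (i - 1) * frame_ms))
            start = None
    return segments
```

Raising `min_frames` is one common way to discard isolated significant frames that are more likely to reflect noise than a sustained effect.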

Our results showed that different cortical areas were involved in the perception of the four basic emotions studied (see Table 3 and Figure 9 for an overview). The emotion-sensitive areas identified by the LORETA analysis agree with prior findings on emotion processing obtained with neuroimaging techniques that have better spatial but worse temporal resolution, such as fMRI (Adolphs, 2002; Phan et al., 2002; Murphy et al., 2003; de Gelder et al., 2006, 2010; Kober et al., 2008; Pessoa, 2008; Fusar-Poli et al., 2009b; Sabatini et al., 2009; Tamietto and de Gelder, 2010; Vytal and Hamann, 2010; Said et al., 2011; Lindquist et al., 2012). For fear, disgust and happiness, the first time segment showed a more widespread activation, including low- and high-level visual areas as well as sensory-motor and prefrontal areas. Conversely, the second time segment showed fewer active areas, located predominantly anteriorly in sensory-motor and prefrontal areas, suggesting that this second time window may implement more reflective processes related to motor resonance and the appraisal of self-relevance.

Interestingly, our analysis did not reveal specific brain areas uniquely involved in the perception of one distinct basic emotion, showing instead a remarkable spatial overlap of neural activity across emotions. The lack of evidence that discrete emotions consistently and specifically correspond to spatially distinct brain areas has previously been interpreted as suggesting that psychological concepts such as fear, happiness or disgust do not have a characteristic physiological or neural signature (Lindquist et al., 2012). However, such an interpretation implies a one-to-one mapping between basic emotion categories and single brain regions, and tends to underestimate two aspects of neural functioning (Scarantino, 2012). First, brain regions do not have functions in isolation, but can fulfill multiple functions depending on the networks to which they belong. Second, a given brain region can serve a function within a network in a specific time window without being exclusively involved in that function at all times. In other words, the functional specialization of a neural structure for a specific emotion does not require that the structure be activated exclusively for that emotion. Instead, this specialization can take the form of preferential activation of a brain area within the neural network co-activated in that specific time window.

These theoretical considerations find direct and novel empirical support in the present findings. Each emotion showed a specific temporal profile of neural activity in segregated time windows, each characterized by a unique spatial profile of co-activations. Moreover, as discussed earlier, there was a high correspondence between neural temporal dynamics and subjective evaluations. Finally, as shown by our winner-take-all analysis, brain regions can be preferentially, rather than exclusively, selective for a particular emotion within different time windows. This underscores the pivotal role of timing in the investigation of the neural correlates of basic emotions and suggests that the neural signature of basic emotions can emerge as the byproduct of dynamic spatiotemporal networks.

Acknowledgments

T.C. and F.C. were supported by the Fondazione Carlo Molo, Torino, Italy. M.T. was supported by a Vidi grant from the Netherlands Organization for Scientific Research (NWO) (grant 452-11-015) and by a FIRB—Futuro in Ricerca 2012—grant from the Italian Ministry of Education, University and Research (MIUR) (grant RBFR12F0BD). B.d.G. was supported by the project ‘TANGO—Emotional interaction grounded in realistic context’ under the Future and Emerging Technologies (FET) program from the European Commission (FP7-ICT-249858) and by the European Research Council under the European Union’s Seventh Framework Programme, ERC Advanced Grant.

REFERENCES

- Adolphs R. Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behavioral and Cognitive Neuroscience Reviews. 2002;1(1):21–62. doi: 10.1177/1534582302001001003.

- Adolphs R. The biology of fear. Current Biology. 2013;23(2):R79–93. doi: 10.1016/j.cub.2012.11.055.

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433(7021):68–72. doi: 10.1038/nature03086.

- Amodio DM, Frith CD. Meeting of minds: the medial frontal cortex and social cognition. Nature Reviews Neuroscience. 2006;7(4):268–77. doi: 10.1038/nrn1884.

- Bach DR, Behrens TE, Garrido L, Weiskopf N, Dolan RJ. Deep and superficial amygdala nuclei projections revealed in vivo by probabilistic tractography. Journal of Neuroscience. 2011;31(2):618–23. doi: 10.1523/JNEUROSCI.2744-10.2011.

- Barbas H, Pandya DN. Architecture and intrinsic connections of the prefrontal cortex in the rhesus monkey. The Journal of Comparative Neurology. 1989;286(3):353–75. doi: 10.1002/cne.902860306.

- Barbas H, Saha S, Rempel-Clower N, Ghashghaei T. Serial pathways from primate prefrontal cortex to autonomic areas may influence emotional expression. BMC Neuroscience. 2003;4:25. doi: 10.1186/1471-2202-4-25.

- Barrett LF. Are emotions natural kinds? Perspectives on Psychological Science. 2006;1(1):28–58. doi: 10.1111/j.1745-6916.2006.00003.x.

- Baumeister RF, Bratslavsky E, Finkenauer C, Vohs KD. Bad is stronger than good. Review of General Psychology. 2001;5:323–70.

- Beauregard M, Leroux JM, Bergman S, et al. The functional neuroanatomy of major depression: an fMRI study using an emotional activation paradigm. Neuroreport. 1998;9(14):3253–8. doi: 10.1097/00001756-199810050-00022. [DOI] [PubMed] [Google Scholar]

- Becker B, Mihov Y, Scheele D, et al. Fear processing and social networking in the absence of a functional amygdala. Biological Psychiatry. 2012;72(1):70–7. doi: 10.1016/j.biopsych.2011.11.024. [DOI] [PubMed] [Google Scholar]

- Berlin HA, Rolls ET, Kischka U. Impulsivity, time perception, emotion and reinforcement sensitivity in patients with orbitofrontal cortex lesions. Brain. 2004;127(Pt 5):1108–26. doi: 10.1093/brain/awh135. [DOI] [PubMed] [Google Scholar]

- Borgomaneri S, Gazzola V, Avenanti A. Motor mapping of implied actions during perception of emotional body language. Brain Stimulation. 2012;5(2):70–6. doi: 10.1016/j.brs.2012.03.011. [DOI] [PubMed] [Google Scholar]

- Borgomaneri S, Gazzola V, Avenanti A. Temporal dynamics of motor cortex excitability during perception of natural emotional scenes. Social Cognitive and Affective Neuroscience. 2014;9(10):1451–7. doi: 10.1093/scan/nst139. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Calder AJ, Keane J, Manes F, Antoun N, Young AW. Impaired recognition and experience of disgust following brain injury. Nature Neuroscience. 2000;3(11):1077–8. doi: 10.1038/80586. [DOI] [PubMed] [Google Scholar]

- Carretie L, Hinojosa JA, Albert J, Mercado F. Neural response to sustained affective visual stimulation using an indirect task. Experimental Brain Research. 2006;174(4):630–7. doi: 10.1007/s00221-006-0510-y. [DOI] [PubMed] [Google Scholar]

- Caruana F, Jezzini A, Sbriscia-Fioretti B, Rizzolatti G, Gallese V. Emotional and social behaviors elicited by electrical stimulation of the insula in the macaque monkey. Current Biology. 2011;21(3):195–9. doi: 10.1016/j.cub.2010.12.042.

- Chapman LJ, Chapman JP. The measurement of handedness. Brain and Cognition. 1987;6(2):175–83. doi: 10.1016/0278-2626(87)90118-7.

- Chen CH, Ridler K, Suckling J, et al. Brain imaging correlates of depressive symptom severity and predictors of symptom improvement after antidepressant treatment. Biological Psychiatry. 2007;62(5):407–14. doi: 10.1016/j.biopsych.2006.09.018.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3(3):201–15. doi: 10.1038/nrn755.

- Craig AD. How do you feel—now? The anterior insula and human awareness. Nature Reviews Neuroscience. 2009;10(1):59–70. doi: 10.1038/nrn2555.

- Davidson RJ. Cerebral asymmetry and emotion: conceptual and methodological conundrums. Cognition & Emotion. 1993;7:115–38.

- de Gelder B, Meeren HK, Righart R, van den Stock J, van de Riet WA, Tamietto M. Beyond the face: exploring rapid influences of context on face processing. Progress in Brain Research. 2006;155:37–48. doi: 10.1016/S0079-6123(06)55003-4.

- de Gelder B, Pourtois G, Weiskrantz L. Fear recognition in the voice is modulated by unconsciously recognized facial expressions but not by unconsciously recognized affective pictures. Proceedings of the National Academy of Sciences of the United States of America. 2002;99(6):4121–6. doi: 10.1073/pnas.062018499.

- de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proceedings of the National Academy of Sciences of the United States of America. 2004;101(47):16701–6. doi: 10.1073/pnas.0407042101.

- de Gelder B, Van den Stock J, Meeren HK, Sinke CB, Kret ME, Tamietto M. Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neuroscience and Biobehavioral Reviews. 2010;34(4):513–27. doi: 10.1016/j.neubiorev.2009.10.008.

- Decety J, Cacioppo JT, editors. The Oxford Handbook of Social Neuroscience. New York: Oxford University Press; 2011.

- Delplanque S, Lavoie ME, Hot P, Silvert L, Sequeira H. Modulation of cognitive processing by emotional valence studied through event-related potentials in humans. Neuroscience Letters. 2004;356(1):1–4. doi: 10.1016/j.neulet.2003.10.014.

- Eger E, Jedynak A, Iwaki T, Skrandies W. Rapid extraction of emotional expression: evidence from evoked potential fields during brief presentation of face stimuli. Neuropsychologia. 2003;41(7):808–17. doi: 10.1016/s0028-3932(02)00287-7.

- Ekman P. Basic emotions. In: Dalgleish T, Power MJ, editors. Handbook of Cognition and Emotion. New York, NY: John Wiley & Sons Ltd; 1999. pp. 45–60.

- Esslen M, Pascual-Marqui RD, Hell D, Kochi K, Lehmann D. Brain areas and time course of emotional processing. Neuroimage. 2004;21(4):1189–203. doi: 10.1016/j.neuroimage.2003.10.001.

- Fredrickson BL. What good are positive emotions? Review of General Psychology. 1998;2(3):300–19. doi: 10.1037/1089-2680.2.3.300.

- Frijda NH. The Laws of Emotion. Mahwah, NJ: Lawrence Erlbaum Associates; 2007.

- Friston KJ, Jezzard P, Turner R. Analysis of functional MRI time-series. Human Brain Mapping. 1994;1(2):153–71.

- Fusar-Poli P, Placentino A, Carletti F, et al. Laterality effect on emotional faces processing: ALE meta-analysis of evidence. Neuroscience Letters. 2009a;452(3):262–7. doi: 10.1016/j.neulet.2009.01.065.

- Fusar-Poli P, Placentino A, Carletti F, et al. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. Journal of Psychiatry & Neuroscience. 2009b;34(6):418–32.

- Garrido MI, Barnes GR, Sahani M, Dolan RJ. Functional evidence for a dual route to amygdala. Current Biology. 2012;22:129–34. doi: 10.1016/j.cub.2011.11.056.

- Grafman J, Schwab K, Warden D, Pridgen A, Brown HR, Salazar AM. Frontal lobe injuries, violence, and aggression: a report of the Vietnam Head Injury Study. Neurology. 1996;46(5):1231–8. doi: 10.1212/wnl.46.5.1231.

- Greicius MD, Krasnow B, Reiss AL, Menon V. Functional connectivity in the resting brain: a network analysis of the default mode hypothesis. Proceedings of the National Academy of Sciences of the United States of America. 2003;100(1):253–8. doi: 10.1073/pnas.0135058100.

- Groen Y, Wijers AA, Tucha O, Althaus M. Are there sex differences in ERPs related to processing empathy-evoking pictures? Neuropsychologia. 2013;51(1):142–55. doi: 10.1016/j.neuropsychologia.2012.11.012.

- Hugdahl K, Davidson RJ, editors. The Asymmetrical Brain. Cambridge, MA: The MIT Press; 2004.

- Johansen-Berg H, Behrens TE, Robson MD, et al. Changes in connectivity profiles define functionally distinct regions in human medial frontal cortex. Proceedings of the National Academy of Sciences of the United States of America. 2004;101(36):13335–40. doi: 10.1073/pnas.0403743101.

- Keysers C. The Empathic Brain. New York, NY: Dana Press; 2009.

- Keysers C, Kaas JH, Gazzola V. Somatosensation in social perception. Nature Reviews Neuroscience. 2010;11(6):417–28. doi: 10.1038/nrn2833.

- Keysers C, Wicker B, Gazzola V, Anton JL, Fogassi L, Gallese V. A touching sight: SII/PV activation during the observation and experience of touch. Neuron. 2004;42(2):335–46. doi: 10.1016/s0896-6273(04)00156-4.

- Kober H, Barrett LF, Joseph J, Bliss-Moreau E, Lindquist K, Wager TD. Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. Neuroimage. 2008;42(2):998–1031. doi: 10.1016/j.neuroimage.2008.03.059.

- Kriegeskorte N, Mur M, Bandettini P. Representational similarity analysis—connecting the branches of systems neuroscience. Frontiers in Systems Neuroscience. 2008;2:4. doi: 10.3389/neuro.06.004.2008.

- Kuppens P, Tuerlinckx F, Russell JA, Barrett LF. The relation between valence and arousal in subjective experience. Psychological Bulletin. 2013;139:917–40. doi: 10.1037/a0030811.

- Lang PJ, Bradley MM, Cuthbert BN. International Affective Picture System (IAPS): Technical Manual and Affective Ratings. Gainesville, FL: NIMH Center for the Study of Emotion and Attention, University of Florida; 1997.

- Lee TW, Josephs O, Dolan RJ, Critchley HD. Imitating expressions: emotion-specific neural substrates in facial mimicry. Social Cognitive and Affective Neuroscience. 2006;1(2):122–35. doi: 10.1093/scan/nsl012.

- Linden DE, Habes I, Johnston SJ, et al. Real-time self-regulation of emotion networks in patients with depression. PLoS One. 2012;7(6):e38115. doi: 10.1371/journal.pone.0038115.

- Lindquist KA, Wager TD, Kober H, Bliss-Moreau E, Barrett LF. The brain basis of emotion: a meta-analytic review. The Behavioral and Brain Sciences. 2012;35(3):121–43. doi: 10.1017/S0140525X11000446.

- Maddock RJ. The retrosplenial cortex and emotion: new insights from functional neuroimaging of the human brain. Trends in Neurosciences. 1999;22(7):310–6. doi: 10.1016/s0166-2236(98)01374-5.

- Mayberg HS, Brannan SK, Tekell JL, et al. Regional metabolic effects of fluoxetine in major depression: serial changes and relationship to clinical response. Biological Psychiatry. 2000;48(8):830–43. doi: 10.1016/s0006-3223(00)01036-2.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(45):16518–23. doi: 10.1073/pnas.0507650102.

- Mihov Y, Kendrick KM, Becker B, et al. Mirroring fear in the absence of a functional amygdala. Biological Psychiatry. 2013;73(7):e9–11. doi: 10.1016/j.biopsych.2012.10.029.

- Miller EK. The prefrontal cortex and cognitive control. Nature Reviews Neuroscience. 2000;1(1):59–65. doi: 10.1038/35036228.

- Mitterschiffthaler MT, Kumari V, Malhi GS, et al. Neural response to pleasant stimuli in anhedonia: an fMRI study. Neuroreport. 2003;14(2):177–82. doi: 10.1097/00001756-200302100-00003.

- Morris JS, Öhman A, Dolan RJ. A subcortical pathway to the right amygdala mediating “unseen” fear. Proceedings of the National Academy of Sciences of the United States of America. 1999;96(4):1680–5. doi: 10.1073/pnas.96.4.1680.

- Mulert C, Jäger L, Schmitt R, et al. Integration of fMRI and simultaneous EEG: towards a comprehensive understanding of localization and time-course of brain activity in target detection. Neuroimage. 2004;22(1):83–94. doi: 10.1016/j.neuroimage.2003.10.051.

- Murphy FC, Nimmo-Smith I, Lawrence AD. Functional neuroanatomy of emotions: a meta-analysis. Cognitive, Affective & Behavioral Neuroscience. 2003;3(3):207–33. doi: 10.3758/cabn.3.3.207.

- Nichols TE, Holmes AP. Nonparametric permutation tests for functional neuroimaging: a primer with examples. Human Brain Mapping. 2002;15(1):1–25. doi: 10.1002/hbm.1058.

- Northoff G, Heinzel A, de Greck M, Bermpohl F, Dobrowolny H, Panksepp J. Self-referential processing in our brain—a meta-analysis of imaging studies on the self. Neuroimage. 2006;31(1):440–57. doi: 10.1016/j.neuroimage.2005.12.002.

- Ochsner KN, Ray RD, Cooper JC, et al. For better or for worse: neural systems supporting the cognitive down- and up-regulation of negative emotion. Neuroimage. 2004;23(2):483–99. doi: 10.1016/j.neuroimage.2004.06.030.

- Olofsson JK, Nordin S, Sequeira H, Polich J. Affective picture processing: an integrative review of ERP findings. Biological Psychology. 2008;77(3):247–65. doi: 10.1016/j.biopsycho.2007.11.006.

- Panksepp J. Affective Neuroscience: The Foundation of Human and Animal Emotions. New York: Oxford University Press; 1998.

- Pascual-Marqui RD, Esslen M, Kochi K, Lehmann D. Functional imaging with low-resolution brain electromagnetic tomography (LORETA): a review. Methods and Findings in Experimental and Clinical Pharmacology. 2002;24(Suppl. C):91–5.

- Pascual-Marqui RD, Michel CM, Lehmann D. Low resolution electromagnetic tomography: a new method for localizing electrical activity in the brain. International Journal of Psychophysiology. 1994;18(1):49–65. doi: 10.1016/0167-8760(84)90014-x.

- Pessoa L. On the relationship between emotion and cognition. Nature Reviews Neuroscience. 2008;9(2):148–58. doi: 10.1038/nrn2317.

- Petrides M, Pandya DN. Dorsolateral prefrontal cortex: comparative cytoarchitectonic analysis in the human and the macaque brain and corticocortical connection patterns. The European Journal of Neuroscience. 1999;11(3):1011–36. doi: 10.1046/j.1460-9568.1999.00518.x.

- Phan KL, Wager T, Taylor SF, Liberzon I. Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. Neuroimage. 2002;16(2):331–48. doi: 10.1006/nimg.2002.1087.

- Pizzagalli DA. Electroencephalography and high-density electrophysiological source localization. In: Cacioppo JT, Tassinary LG, Berntson GG, editors. The Handbook of Psychophysiology. 3rd edn. Cambridge: Cambridge University Press; 2007. pp. 56–84.

- Pizzagalli DA, Oakes TR, Davidson RJ. Coupling of theta activity and glucose metabolism in the human rostral anterior cingulate cortex: an EEG/PET study of normal and depressed subjects. Psychophysiology. 2003;40(6):939–49. doi: 10.1111/1469-8986.00112.

- Posner J, Russell JA, Peterson BS. The circumplex model of affect: an integrative approach to affective neuroscience, cognitive development, and psychopathology. Development and Psychopathology. 2005;17(3):715–34. doi: 10.1017/S0954579405050340.

- Righart R, de Gelder B. Context influences early perceptual analysis of faces—an electrophysiological study. Cerebral Cortex. 2006;16(9):1249–57. doi: 10.1093/cercor/bhj066.

- Rizzolatti G, Fogassi L, Gallese V. Neurophysiological mechanisms underlying the understanding and imitation of action. Nature Reviews Neuroscience. 2001;2(9):661–70. doi: 10.1038/35090060.

- Rolls ET. The orbitofrontal cortex. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 1996;351(1346):1433–43; discussion 1443–44. doi: 10.1098/rstb.1996.0128.

- Rolls ET, Critchley HD, Mason R, Wakeman EA. Orbitofrontal cortex neurons: role in olfactory and visual association learning. Journal of Neurophysiology. 1996;75(5):1970–81. doi: 10.1152/jn.1996.75.5.1970.

- Roy M, Piché M, Chen JI, Peretz I, Rainville P. Cerebral and spinal modulation of pain by emotions. Proceedings of the National Academy of Sciences of the United States of America. 2009;106(49):20900–5. doi: 10.1073/pnas.0904706106.

- Russell JA. A circumplex model of affect. Journal of Personality and Social Psychology. 1980;39(6):1161–78.

- Russell JA, Bullock M. Multidimensional scaling of emotional facial expressions: Similarity from preschoolers to adults. Journal of Personality and Social Psychology. 1985;48:1290–8.

- Sabatini E, Della Penna S, Franciotti R, et al. Brain structures activated by overt and covert emotional visual stimuli. Brain Research Bulletin. 2009;79(5):258–64. doi: 10.1016/j.brainresbull.2009.03.001.

- Said CP, Haxby JV, Todorov A. Brain systems for assessing the affective value of faces. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2011;366(1571):1660–70. doi: 10.1098/rstb.2010.0351.

- Sarkheil P, Goebel R, Schneider F, Mathiak K. Emotion unfolded by motion: a role for parietal lobe in decoding dynamic facial expressions. Social Cognitive and Affective Neuroscience. 2013;8(8):950–7. doi: 10.1093/scan/nss092.

- Scarantino A. Functional specialization does not require a one-to-one mapping between brain regions and emotions. The Behavioral and Brain Sciences. 2012;35(3):161–2. doi: 10.1017/S0140525X11001749.