Abstract

Chemical reaction networks (CRNs) formally model chemistry in a well-mixed solution. CRNs are widely used to describe information processing occurring in natural cellular regulatory networks, and with upcoming advances in synthetic biology, CRNs are a promising language for the design of artificial molecular control circuitry. Nonetheless, despite the widespread use of CRNs in the natural sciences, the range of computational behaviors exhibited by CRNs is not well understood.

CRNs have been shown to be efficiently Turing-universal (i.e., able to simulate arbitrary algorithms) when allowing for a small probability of error. CRNs that are guaranteed to converge on a correct answer, on the other hand, have been shown to decide only the semilinear predicates (a multi-dimensional generalization of “eventually periodic” sets). We introduce the notion of function, rather than predicate, computation by representing the output of a function f : ℕk → ℕl by a count of some molecular species, i.e., if the CRN starts with x1, …, xk molecules of some “input” species X1, …, Xk, the CRN is guaranteed to converge to having f(x1, …, xk) molecules of the “output” species Y1, …, Yl. We show that a function f : ℕk → ℕl is deterministically computed by a CRN if and only if its graph {(x, y) ∈ ℕk × ℕl ∣ f(x) = y} is a semilinear set.

Finally, we show that each semilinear function f (a function whose graph is a semilinear set) can be computed by a CRN on input x in expected time O(polylog ∥x∥1).

1 Introduction

The engineering of complex artificial molecular systems will require a sophisticated understanding of how to program chemistry. A natural language for describing the interactions of molecular species in a well-mixed solution is that of (finite) chemical reaction networks (CRNs), i.e., finite sets of chemical reactions such as A + B → A + C. When the behavior of individual molecules is modeled, CRNs are assigned semantics through stochastic chemical kinetics [13], in which reactions occur probabilistically with rate proportional to the product of the molecular count of their reactants and inversely proportional to the volume of the reaction vessel.

Traditionally CRNs have been used as a descriptive language to analyze naturally occurring chemical reactions (as well as numerous other systems with a large number of interacting components such as gene regulatory networks and animal populations). However, recent investigations have viewed CRNs as a programming language for engineering artificial systems. These works have shown CRNs to have eclectic computational abilities. Researchers have investigated the power of CRNs to simulate Boolean circuits [18], neural networks [14], and digital signal processing [15]. CRNs can simulate a bounded-space Turing machine efficiently, if the number of reactions is allowed to scale polynomially with the Turing machine’s space usage [26]. Other work has shown CRNs can efficiently simulate a bounded-space Turing machine, with the number of reactions independent of the space bound, albeit with an arbitrarily small, non-zero probability of error [3].1 Even Turing universal computation is possible with an arbitrarily small, non-zero probability of error over all time [24]. The computational power of CRNs also provides insight on why it can be computationally difficult to simulate them [23], and why certain questions are frustratingly difficult to answer (or even undecidable)[11, 27]. For example, it is PSPACE-hard to predict whether a particular species is producible [26]. The programming approach to CRNs has also, in turn, resulted in novel insights regarding natural cellular regulatory networks [7]. The importance of the model is underscored by the fact that equivalent models repeatedly arise in theoretical computer science under different guises: e.g. vector addition systems [16], petri nets [20], population protocols [1].

Recent work proposes concrete chemical implementations of arbitrary CRNs, particularly using nucleic-acid strand-displacement cascades as the physical reaction primitive [25, 6]. Thus, since in principle any CRN can be built, hypothetical CRNs with interesting behaviors are becoming of more than theoretical interest. One day artificial CRNs may underlie embedded controllers for biochemical, nanotechnological, or medical applications, where environments are inherently incompatible with traditional electronic controllers.

One of the best-characterized computational abilities of CRNs is the deterministic computation of predicates (decision problems) as investigated by Angluin, Aspnes and Eisenstat [2]. (They considered the equivalent distributed computing model of population protocols motivated by sensor networks.) Some CRNs, when started in an initial configuration assigning nonnegative integer counts to each of k different input species, are guaranteed to converge on a single “true” or “false” answer, in the sense that there are two special “voting” species T and F so that eventually either T is present and F absent to indicate “true”, or vice versa to indicate “false.” The set of inputs S ⊆ ℕk that cause the system to answer “true” is then a representation of the decision problem solved by the CRN. Angluin, Aspnes and Eisenstat showed that the input sets S decidable by some CRN are precisely the semilinear subsets of ℕk (see below).

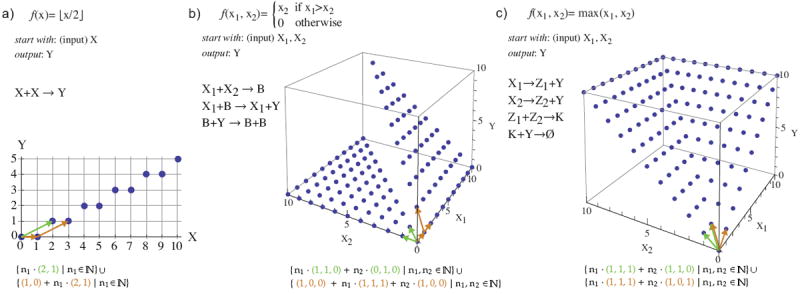

We extend these prior investigations of decision problems or predicate computation to study deterministic function computation. Consider the three examples in Fig. 1(top). These CRNs have the property that they converge to the right answer no matter the order in which the reactions happen to occur, and are thus insensitive to stochastic effects as well as reaction rate constants. Formally, we say a function f : ℕk → ℕl is computed by a CRN C if the following is true. There are “input” species X1, …, Xk and “output” species Y1, …, Yl such that, if C is initialized with x1, …, xk copies of X1, …, Xk, then it is guaranteed to reach a configuration in which the counts of Y1, …, Yl are described by the vector f(x1, …, xk), and these counts never again change. For example, the CRN C with the single reaction X → 2Y computes the function f(x) = 2x in the sense that, if C starts in an initial configuration with x copies of X and 0 copies of Y, then C is guaranteed to stabilize to a configuration with 2x copies of Y. Similarly, the function f(x) = ⌊x/2⌋ is computed by the single reaction 2X → Y (Fig. 1(a)), in that the final configuration is guaranteed to have exactly ⌊x/2⌋ copies of Y (and 0 or 1 copies of X, depending on whether x is even or odd).

Figure 1.

Examples of deterministically computable functions. (Top) Three functions and examples of CRNs deterministically computing them. The input is represented in the molecular count of X (for (a)), and moleculer counts of X1, X2 (for (b) and (c)). The output is represented by the molecular count of Y. Example (a) computes via the relative stoichiometry of reactants and products of a single reaction. In example (b), the second and third reactions convert B to Y and vice versa, catalyzed by X1 and B, respectively. Thus, if there are any X1 remaining after the first reaction finishes (and thus x1 > x2), all of B can get converted to Y permanently (since some B is required to convert Y back to B). Since in this case the first reaction produces x2 molecules of B, x2 molecules of the output Y are eventually produced. If the first reaction consumes all of X1 (and thus x1 ≤ x2), eventually any Y that was produced in the second reaction gets converted to B by the third reaction. To see that the CRN in (c) correctly computes the maximum, note that the first two reactions eventually produce x1 + x2 molecules of Y, while the third reaction eventually produces min(x1, x2) molecules of K. Thus the last reaction eventually consumes min(x1, x2) molecules of Y leaving x1 + x2 − min(x1, x2) = max(x1, x2) Y ’s. (Bottom) Graphs of the three functions. The set of points belonging to the graph of each of these functions is a semilinear set. Under each plot this semilinear set is written in the form of a union of linear sets corresponding to Equation 1.1. The defining vectors are shown as colored arrows in the graph.

It is illuminating to compare the computation of division by 2 shown in Fig. 1(a) with another reasonable alternative: reactions X → Y and Y → X (i.e. the reversible reaction X ⇌ Y). If the rate constants of the two reactions are equal, the system equilibrium is at half of the initial amount of X transformed to Y. There are two stark differences between this implementation and that of Fig. 1(a). First, this CRN would not have an exact output count of Y, but rather a distribution around the equilibrium. (However, in the limit of large numbers, the error as a fraction of the total would converge to zero.) Second, the equilibrium amount of Y for any initial amount of X would depend on the relative rate constants of the two reactions. In contrast, the deterministic computation discussed in this paper relies on the identity and stoichiometry of the reactants and products rather than the rate constants. While the rates of reactions are analog quantities, the identity and stoichiometry of the reactants and products are naturally digital. Methods for physically implementing CRNs naturally yield systems with digital stoichiometry that can be set exactly [25, 6]. While rate constants can be tuned, being analog quantities, it cannot be expected that they can be controlled precisely.

A few general properties of this type of deterministic computation can be inferred. The first property is that a deterministic CRN is able to handle input molecules added at any time, and not just initially. Otherwise, if the CRN could reach a state after which it no longer “accepts input”, then there would be a sequence of reactions that would lead to an incorrect output even if all input is present initially. (It is always possible that some input molecules remain unreacted for arbitrarily long.)

The second general property of deterministic computation relates to composition. As any bona fide computation must be composable, it is important to ask: can the output of one deterministic CRN be the input to another? The problem is that deterministic CRNs have, in general, no way of knowing when they are done computing, or whether they will change their answer in the future. This is essentially because a CRN cannot deterministically detect the absence of a species, and thus, for example, cannot discern when all input has been read. Moreover, simply concatenating two deterministic CRNs (renaming species to avoid conflict) does not always yield a deterministic CRN. For example, consider computing the function f(x1, x2) = ⌊max(x1, x2)/2⌋ by composing the CRNs in Fig. 1(c) and (a). The new CRN is:

where W is the output species of the max computation, that acts as the input to the division by 2 computation. Note that if W happens to be converted to Y by the last reaction before it reacts with K, then the system can converge to a final output value of Y that is larger than expected. In other words, because the first CRN needs to consume its output W, the second CRN can interfere by consuming W itself (in the process of reading it out).

In contrast to the above example, two deterministic CRNs can be simply concatenated to make a new deterministic CRN if the first CRN never consumes its output species (i.e. it produces its output “monotonically”). Since it doesn’t matter when the input to the second CRN is produced (the first property, above), the overall computation will be correct. Yet deterministically computing a non-monotonic function without consuming output species is impossible (see Section 4). In a number of places in this paper, we convert a non-monotonic function into a monotonic one over more outputs, to allow the result to be used by a downstream CRN.

What do the functions in Fig. 1(top) have in common such that the CRNs computing them can inevitably progress to the right answer no matter what order the reactions occur in? What other functions can be computed similarly? Answering these questions may seem difficult because it appears like the three examples, although all deterministic, operate on different principles and seem to use different ideas.

We show that the functions deterministically computable by CRNs are precisely the semilinear functions, where we define a function to be semilinear if its graph {(x, y) ∈ ℕk × ℕl ∣ f(x) = y} is a semilinear subset of ℕk × ℕl. This means that the graph of the function is a union of a finite number of linear sets – i.e. sets that can be written in the form

| (1.1) |

for some fixed vectors b, u1, …, up ∈ ℕk+l. Fig. 1 (bottom) shows the graphs of the three example functions expressed as a union of sets of this form. Informally, semilinear functions can be thought of as “piecewise linear functions” with a finite number of pieces, and linear domains of each piece.2

This characterization implies, for example, that such functions as f(x1, x2) = x1x2, f(x) = x2, or f(x) = 2x are not deterministically computable. For instance, the graph of the function f(x1, x2) = x1x2 consists of infinitely many lines of different slopes, and thus, while each line is a linear set, the graph is not a finite union of linear sets. Our result employs the predicate computation characterization of Angluin, Aspnes and Eisenstat [2], together with some nontrivial additional technical machinery.

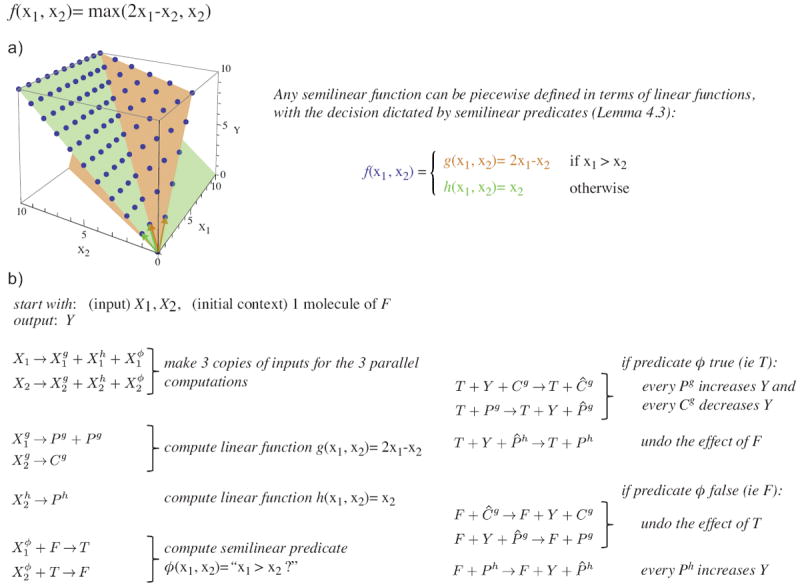

While the example CRNs in Fig. 1 all seem to use different “tricks”, in Section 4 we develop a systematic construction for any semilinear function. To get the gist of this construction see the example in Fig. 2. To obtain a CRN computing the example semilinear function f(x1, x2) = max(2x1 − x2, x2), we decompose the function into “linear” pieces: f1(x1, x2) = 2x1 − x2 and f2(x1, x2) = x2 (formally partial affine functions, see Section 2). Then semilinear predicate computation (per [2]) is used to decide which linear function should be applied to a given input. A decomposition compatible with this approach is always possible by Lemma 4.3. Linear functions such as f1 and f2 are easy for CRNs to deterministically compute by the relative stoichiometry of the reactants and products (analogously to the example in Fig. 1(a)). However, note that to correctly compose the computation of f1 with the downstream computation (Fig. 1(b), right column) we convert f1 from a non-monotonic function with one output, to a monotonic function with two outputs such that the original output is encoded by their difference.

Figure 2.

An example capturing the essential elements of our systematic construction for computing semilinear functions (Lemma 4.4). To compute the target semilinear function, we recast it as a piecewise function defined in terms of linear functions, such that semilinear predicates can decide which of the linear functions is applicable for a given input (this recasting is possible by Lemma 4.3). (a) The graph of the target function visualizing the decomposition into linear functions. (b) A CRN deterministically computing the target function with intuitive explanations of the reactions. We use tri-molecular reactions for simplicity of exposition; however, these can be converted into a sequence of bimolecular reactions. Note that we allow an “initial context”: a fixed set of molecules that are always present in the initial state in addition to the input. The linear functions g and h are computed monotonically by representing the output as the difference of P (“produce”) minus C (“consume”) species. Thus although Pg − Cg could be changing non-monotonically, Pg and Cg do not decrease over time, allowing them to be used as inputs for downstream computation. To compute the semilinear predicate ϕ(x1, x2) = “x1 > x2?”, a single molecule, converted between F (ϕ = “false”) and T (ϕ = “true”) forms, goes back and forth consuming and . Whether it gets stuck in the F or T forms indicates the excess of or . The reactions in the right column use the output of this predicate computation to set the count of Y (the global output) to either the value computed by g or h. Note that the CRN cannot “know” when the predicate computation has finished since the absence of or cannot be detected. Thus the reactions in the right column must be capable of responding to a change in F/T. Species P̂g, P̂h, and Ĉg are used to backup the values of Pg, Ph, and Cg, enabling the switch in output.

In the last part of this paper, we turn our attention to optimizing the time required for CRNs to converge to the answer. While the construction of Section 4 uses O(∥x∥ log ∥x∥) time, in Section 5, we show that every semilinear function can be deterministically computed on input x in expected time polylog(∥x∥). This is done by a similar technique used by Angluin, Aspnes, and Eisenstat [2] to show the equivalent result for predicate computation. They run a slow deterministic computation in parallel with a fast randomized computation, allowing the deterministic computation to compare the two answers and update the randomized answer only if it is incorrect, which happens with low probability. However, novel techniques are required since it is not as simple to “nondestructively compare” two integers (so that the counts are only changed if they are unequal) as to compare two Boolean values.

2 Preliminaries

Given a vector x ∈ ℕk, let , where x(i) denotes the ith coordinate of x. We abuse notation and consider the sets ℕk × ℕl and ℕk+l to be the same, because it is sometimes convenient to treat an ordered pair of vectors as being concatenated into a single longer vector. A set A ⊆ ℕk is linear if there exist vectors b, u1, …, up ∈ ℕk such that

Set A is semilinear if it is a finite union of linear sets. If f : ℕk → ℕl is a function, define the graph of f to be the set {(x, y) ∈ ℕk × ℕl ∣ f(x) = y}. A function is semilinear if its graph is a semilinear set.

We say a partial function f : ℕk ⤏ ℕl is affine if there exist kl rational numbers a1,1, …, ak,l ∈ ℚ and l + k nonnegative integers b1, …, bl, c1, …, ck ∈ ℕ such that, if y = f(x), then for each j ∈ {1, …, l}, , and for each i ∈ {1, …, k}, x(i) − ci ≥ 0. (In matrix notation, there exist a k × l rational matrix A and vectors b ∈ ℕl and c ∈ ℕk such that f(x) = A(x − c) + b.) In other words, the graph of f, when projected onto the (k+1)-dimensional space defined by the k coordinates corresponding to x and the single coordinate corresponding to y(j), is a subset of a k-dimensional hyperplane.

Four aspects of the definition of affine functions invite explanation.

First, we allow partial functions because Lemma 4.3 characterizes the semilinear functions as finite combinations of affine functions, where the union of the domains of the functions is the entire input space ℕk. The value of an affine function on an input outside of its domain is irrelevant (and in fact may be non-integer).

Second, we have two separate “constant offsets” bj and ci. Affine functions over the reals are typically defined with only one of these, bj. Our definition captures the form that is directly computable with molecular counts: we can take x, subtract c, multiply by A, and subtract b, with every intermediate result being integer-valued. If we try to incorporate the c offset into the b offset, we could end up with fractional intermediate computations.

Third, it may seem overly restrictive to require bj and ci to be nonnegative. In fact, our proof of Lemma 4.2 is easily modified to show how to construct a CRN to compute an affine function that allows negative values for bj and ci. However, Lemma 4.3 shows that, when the function is such that its graph is a nonnegative linear set, then we may freely assume that bj and ci to be nonnegative. Since this simplifies some of our definitions, we use this convention.

Fourth, the requirement that x(i) − ci ≥ 0 seems artificial. When we prove that every semilinear function can be written as a finite union of partial affine functions with linear graphs (Lemma 4.3), however, this will follow from the fact that the “offset vector” in the definition of a linear set is required to be nonnegative.

Note that by appropriate integer arithmetic, a partial function f : ℕk ⤏ ℕl is affine if and only if there exist kl integers n1,1, …, nk,l ∈ ℤ and 2l+k nonnegative integers b1, …, bl, c1, …, ck, d1, …, dl ∈ ℕ such that, if y = f(x), then for each j ∈ {1, …, l}, , and for each i ∈ {1, …, k}, x(i) − ci ≥ 0. Each dj may be taken to be the least common multiple of the denominators of the rational coefficients in the original definition. We will employ this latter definition when convenient.

2.1 Chemical reaction networks

If Λ is a finite set (in this paper, of chemical species), we write ℕΛ to denote the set of functions f : Λ → ℕ. Equivalently, we view an element c ∈ ℕΛ as a vector of |Λ| nonnegative integers, with each coordinate “labeled” by an element of Λ. Given X ∈ Λ and c ∈ ℕΛ, we refer to c(X) as the count of X in c. We write c ≤ c′ to denote that c(X) ≤ c′ (X) for all X ∈ Λ. Given c, c′ ∈ ℕΛ, we define the vector component-wise operations of addition c + c′, subtraction c − c′, and scalar multiplication nc for n ∈ ℕ. If Δ ⊂ Λ, we view a vector c ∈ ℕΔ equivalently as a vector c ∈ ℕΛ by assuming c(X) = 0 for all X ∈ Λ \ Δ.

Given a finite set of chemical species Λ, a reaction over Λ is a triple α = 〈r, p, k〉 ∈ ℕΛ × ℕΛ × ℝ+, specifying the stoichiometry of the reactants and products, respectively, and the rate constant k. If not specified, assume that k = 1 (this is the case for all reactions in this paper), so that the reaction α = 〈r, p, 1〉 is also represented by the pair 〈r, p〉. For instance, given Λ = {A, B, C}, the reaction A + 2B → A + 3C is the pair 〈(1, 2, 0), (1, 0, 3)〉. A (finite) chemical reaction network (CRN) is a pair C = (Λ, R), where Λ is a finite set of chemical species, and R is a finite set of reactions over Λ. A configuration of a CRN C = (Λ, R) is a vector c ∈ ℕΛ. We also write #cX to denote c(X), the count of species X in configuration c, or simply #X when c is clear from context.

Given a configuration c and reaction α = 〈r, p〉, we say that α is applicable to c if r ≤ c (i.e., c contains enough of each of the reactants for the reaction to occur). If α is applicable to c, then write α(c) to denote the configuration c + p − r (i.e., the configuration that results from applying reaction α to c). If c′ = α(c) for some reaction α ∈ R, we write c →C c′, or merely c → c′ when C is clear from context. An execution (a.k.a., execution sequence) ε is a finite or infinite sequence of one or more configurations ε = (c0, c1, c2, …) such that, for all i ∈ {1, …, |ε| − 1}, ci−1 → ci. If a finite execution sequence starts with c and ends with c′, we write , or merely c →* c′ when the CRN C is clear from context. In this case, we say that c′ is reachable from c.

Turing machines, for example, have different semantic interpretations depending on the computational task under study (deciding a language, computing a function, etc.). Similarly, in this paper we use CRNs to decide subsets of ℕk and to compute functions f : ℕk → ℕl. In the next two subsections we define two semantic interpretations of CRNs that correspond to these two tasks.

2.2 Stable decidability of predicates

We now review the definition of stable decidability of predicates introduced by Angluin, Aspnes, and Eisenstat [2].3 Intuitively, some species “vote” for a true/false answer and the system stabilizes to an output when a consensus is reached and it can no longer change its mind. The determinism of the system is captured in that it is impossible to stabilize to an incorrect answer, and the correct stable output is always reachable.

A chemical reaction decider (CRD) is a tuple D = (Λ, R, Σ, ϒ, ϕ, σ), where (Λ, R) is a CRN, Σ ⊆ Λ is the set of input species, ϒ ⊆ Λ is the set of voters4, ϕ : ϒ → {0, 1} is the (Boolean) output function, and σ ∈ ℕΛ\Σ is the initial context. An input to D will be a vector i0 ∈ ℕΣ (equivalently, i0 ∈ ℕk if we write Σ = {X1, …, Xk} and assign Xi to represent the i’th coordinate). Thus a CRD together with an input vector defines an initial configuration i defined by i(X) = i0(X) if X ∈ Σ, and i(X) = σ(X) otherwise. We say that such a configuration is a valid initial configuration, i.e., i ↾ (Λ \ Σ) = σ. If we are discussing a CRN understood from context to have a certain initial configuration i, we write #0 X to denote i(X).

We extend ϕ to a partial function Φ : ℕΛ ⤏ {0, 1} as follows. Φ(c) is undefined if either c(X) = 0 for all X ∈ ϒ, or if there exist X0, X1 ∈ ϒ such that c(X0) > 0, c(X1) > 0, ϕ(X0) = 0 and ϕ(X1) = 1. Otherwise, there exists b ∈ {0, 1} such that (∀X ∈ ϒ)(c(X) > 0 ⇒ ϕ(X) = b); in this case, the output Φ(c) of configuration c is b.

A configuration c is output stable if Φ(c) is defined and, for all c′ such that c →* c′, Φ(c′) = Φ(c).5 We say a CRD D stably decides the predicate ψ : ℕΣ → {0, 1} if, for any valid initial configuration i ∈ ℕΛ with i ↾ Σ = i0, for all configurations c ∈ ℕΛ, i →* c implies c →* c′ such that c′ is output stable and Φ(c′) = ψ(i0). Note that this condition implies that no incorrect output stable configuration is reachable from i. We say that D stably decides a set A ∈ ℕk if it stably decides its indicator function.

The following theorem is due to Angluin, Aspenes, and Eisenstat [2]:

Theorem 2.1 ([2])

A set A ⊆ ℕk is stably decidable by a CRD if and only if it is semilinear.

The model they use is defined in a slightly different way. They study population protocols, a distributed computing model in which a fixed-size set of agents, each having a state from a finite set, undergo successive pairwise interactions, the two agents updating their states upon interacting. This is equivalent to chemical reaction networks in which all reactions have exactly two reactants and two products. However, the result carries over to our more general model, as we now explain.

The reverse direction, that every semilinear predicate is decided by some CRD, follows directly from the result on population protocols, since population protocols are a subset of the set of all CRNs. The forward direction of Theorem 2.1, that every stably decidable set is semilinear, holds even if stable decidability is defined with respect to any relation →* on ℕk that is reflexive, transitive, and “respects addition”, i.e., [(∀c1, c2, x ∈ ℕk) (c1 →* c2) ⇒ (c1 + x →* c2 + x)]. These properties can easily be shown to hold for the CRN reachability relation. The third property, in particular, means that if some molecules c1 can react to form molecules c2, then it is possible for them to react in the presence of some extra molecules x, such that no molecules from x react at all.

2.3 Stable computation of functions

Aspnes and Ruppert [4] describe an extension from Boolean predicates to functions, by generalizing the output function ϕ to a larger range ϕ : ϒ → {0, …, l}. Equivalently, one can consider multiple voting species V0, … Vl; if the CRN converges to only Vj votes, then then output is j. However, this output encoding handles only bounded-range functions: the size of ϒ must be at least the range of the function. By contrast our results concern functions with unbounded range.

We now define a notion of stable computation of functions in which the output and input are encoded identically — in molecular counts of certain species. Intuitively, the inputs to the function are the initial counts of input species X1, …, Xk, and the outputs are the counts of output species Y1, …, Yl. The system stabilizes to an output when the counts of the output species can no longer change. Again determinism is captured in that it is impossible to stabilize to an incorrect answer and the correct stable output is always reachable.

Let k, l ∈ ℤ+. A chemical reaction computer (CRC) is a tuple C = (Λ, R, Σ, Γ, σ), where (Λ, R) is a CRN, Σ ⊂ Λ is the set of input species, Γ ⊂ Λ is the set of output species, such that Σ ∩ Γ = ∅, |Σ| = k, |Γ| = l, and σ ∈ ℕΛ\Σ is the initial context. Write Σ = {X1, X2, …, Xk} and Γ = {Y1, Y2, …, Yl}. We say that a configuration c is output count stable if, for every c′ such that c →* c′ and every Yi ∈ Γ, c(Yi) = c′(Yi) (i.e., the counts of species in Γ will never change if c is reached). As with CRD’s, we require initial configurations i of C with input i0 ∈ ℕΣ to obey i(X) = i0(X) if X ∈ Σ and i(X) = σ(X) otherwise, calling them valid initial configurations. We say that C stably computes a function f : ℕk → ℕl if for any valid initial configuration i ∈ ℕΛ, i →* c implies c →* c′ such that c′ is an output count stable configuration with f(i(X1), i(X2), …, i(Xk)) = (c′(Y1), c′(Y2), …, c′(Yl)). Note that this condition implies that no incorrect output stable configuration is reachable from i.

As an example of a formally defined CRC consider the function f(x) = ⌊x/2⌋ shown in Fig. 1(a). This function is stably computed by the CRC (Λ, R, Σ, Γ, σ) where (Λ, R) is the CRN consisting of a single reaction 2X → Y, Σ = {X} is the set of input species, Γ = {Y} is the set of output species, and the initial context σ is zero for all species in Λ \ Σ. In Fig. 2(b) the initial context σ(F) = 1, and is zero for all other species in Λ \ Σ. In Fig. 1(a) there is at most one reaction that can happen in any reachable configuration. In contrast, different reactions may occur next in Fig. 1(b) and (c). However, from any reachable state, we can reach the output count stable configuration with the correct amount of Y, satisfying our definition of stable computation.

In Sections 4–5 we will describe systematic (but much more complex) constructions for these and all functions with semilinear graphs.

2.4 Fair execution sequences

Note that by defining deterministic computation in terms of certain states being reachable and others not, we cannot guarantee the system will get to the correct output for any possible execution sequence. For example suppose an adversary controls the execution sequence. Then {X → 2Y, A → B, B → A} will not reach the intended output state y = 2x if the adversary simply does not let the first reaction occur, always preferring the second or third.

Intuitively, in a real chemical mixture, the reactions are chosen randomly and not adversarially, and the CRN will get to the correct output. In this section we follow Angluin, Aspnes, and Eisenstat [2] and define a combinatorial condition called fairness on execution sequences that captures what is minimally required of the execution sequence to be guaranteed that a stably deciding/computing CRD/CRC will reach the output stable state. In the next section we consider the kinetic model, which ascribes probabilities to execution sequences. The kinetic model also defines the time of reactions, allowing us to study the computational complexity of the CRN computation. Note that in the kinetic model, if the reachable configuration space is bounded for any start configuration (i.e. if from any starting configuration there are finitely many configurations reachable) then any observed execution sequence will be fair with probability 1. This will be the case for our constructions in Sections 4 and 5.

Let Δ ⊆ Λ. We say that p ∈ ℕΔ is a partial configuration (with respect to Δ). We write p = c ↾ Δ for any configuration c such that c(X) = p(X) for all X ∈ Δ, and we say that p is the restriction of c to Δ. Say that a partial configuration p with respect to Δ is reachable from configuration c′ if there is a configuration c reachable from c′ and p = c ↾ Δ. In this case, we write c′ →* p.

An infinite execution ε = (c0, c1, c2, …) is fair if, for all partial configurations p, if p is infinitely often reachable then it is infinitely often reached.6 In other words, no reachable partial configuration is “starved”.7 This definition, applied to finite executions, deems all of them fair vacuously. We wish to distinguish between finite executions that can be extended by applying another reaction and those that cannot. Say that a configuration is terminal if no reaction is applicable to it. We say that a finite execution is fair if and only if it ends in a terminal configuration. For any species A ∈ Λ, we write #∞A to denote the eventual convergent count of A if #A is guaranteed to stabilize on any fair execution sequence; otherwise, #∞A is undefined.

The next lemma characterizes stable computation of functions by CRCs in terms of fair execution sequences, showing that the counts of output species will converge to the correct output values on any fair execution sequence. An analogous lemma holds for CRDs.

Lemma 2.2

A CRC stably computes a function f : ℕk → ℕl if and only if for every valid initial configuration i ∈ ℕΛ, every fair execution E = (i, c1, c2, …) contains an output count stable configuration c such that f(i(X1), i(X2), …, i(Xk)) = (c(Y1), c(Y2), …, c(Yl)).

Proof

The “if” direction follows because every finite execution sequence can be extended to be fair, and thus an output count stable configuration with the correct output is always reachable. The “only if” direction is shown as follows. We know that from any reachable configuration c, some correct output stable configuration c′ is reachable (but possibly different c′ for different c). We will argue that in any infinite fair execution sequence there is some partial configuration that is reachable infinitely often, and that any state with this partial configuration is the correct stable output state. Consider an infinite fair execution sequence c1, c2, …, and the corresponding reachable correct output stable configurations , , …. As in Lemma 11 of [2], there is some integer k ≥ 1 such that a configuration is output count stable if and only if it is output count stable when each coordinate that is larger than k is set to exactly k (k-truncation). The infinite sequence , , … must have an infinite subsequence sharing the same k-truncation. Let p be the partial configuration consisting of the correct output and all the coordinates less than k in the shared truncation. This partial configuration is reachable infinitely often, and no matter what the counts of the other species outside of p are, the resulting configuration is output count stable.

2.5 Kinetic model

The following model of stochastic chemical kinetics is widely used in quantitative biology and other fields dealing with chemical reactions between species present in small counts [13]. It ascribes probabilities to execution sequences, and also defines the time of reactions, allowing us to study the computational complexity of the CRN computation in Sections 4 and 5.

In this paper, the rate constants of all reactions are 1, and we define the kinetic model with this assumption. A reaction is unimolecular if it has one reactant and bimolecular if it has two reactants. We use no higher-order reactions in this paper when using the kinetic model.

The kinetics of a CRN is described by a continuous-time Markov process as follows. Given a fixed volume υ and current configuration c, the propensity of a unimolecular reaction α : X → … in configuration c is ρ(c, α) = #cX. The propensity of a bimolecular reaction α : X + Y → …, where X ≠ Y, is . The propensity of a bimolecular reaction α : X + X → … is . The propensity function determines the evolution of the system as follows. The time until the next reaction occurs is an exponential random variable with rate ρ(c) = Σα ∈ R ρ(c, α) (note that ρ(c) = 0 if no reactions are applicable to c). The probability that next reaction will be a particular αnext is .

The kinetic model is based on the physical assumption of well-mixedness that is valid in a dilute solution. Thus, we assume the finite density constraint, which stipulates that a volume required to execute a CRN must be proportional to the maximum molecular count obtained during execution [24]. In other words, the total concentration (molecular count per volume) is bounded. This realistically constrains the speed of the computation achievable by CRNs. Note, however, that it is problematic to define the kinetic model for CRNs in which the reachable configuration space is unbounded for some start configurations, because this means that arbitrarily large molecular counts are reachable.8 We apply the kinetic model only to CRNs with configuration spaces that are bounded for each start configuration.

We now prove two lemmas about the complexity of certain common sequences of reactions. Besides providing simple examples of the kinetic model, they capture patterns that will be used throughout Section 4 and 5. These lemmas are implicit or explicit in many earlier papers on stochastic CRNs.

Lemma 2.3

Let {A1, …, Am} be a set of species, such that the count of each is O(n). Then the expected time for i unimolecular reactions Ai → …, in which none of the Ai appear as products, to consume all Ai’s is O(log n).

Proof

In any configuration c, the propensity of the ith reaction is #c Ai. Let k = Σi #c Ai. The time until next reaction is an exponential random variable with propensity k. Thus the expected time until the next reaction occurs is 1/k. Every time one of the reactions occurs, one of the Ai’s is consumed, and so k decreases by 1. Thus, by linearity of expectation, the expected time to consume all the Ai molecules is .

Lemma 2.4

Let L be a species with count 1, and A a species of count n. Then, if the volume is υ = O(n), the expected time for reaction L + A → L + B to convert all A’s to B’s is O(n log n).

Proof

When exactly k molecules of species A remain, the propensity of the reaction is k/υ. Thus the expected time until the next reaction is υ/k. Therefore by linearity of expectation, the expected time for L to react with every A is .

3 Exactly the semilinear functions can be deterministically computed

In this section we use Theorem 2.1 to show that only “simple” functions can be stably computed by CRCs. This is done by showing how to reduce the computation of a function by a CRC to the decidability of its graph by a CRD, and vice versa. In this section we do not concern ourselves with kinetics. Thus the volume is left unspecified, and we consider the combinatorial-only condition of fairness on execution sequences for our positive result (Lemma 3.2) and direct reachability arguments for the negative result (Lemma 3.1).

The next lemma shows that every function computable by a chemical reaction network is semilinear by reducing stably deciding a set that is the graph of a function to stably computing that function. It turns out that the reduction technique of introducing “production” and “consumption” indicator species will be a general technique, used repeatedly in this paper.

Lemma 3.1

Every function stably computable by a CRC is semilinear.

Proof

Suppose there is a CRC C stably computing f. We will construct a CRD D that stably decides the graph of f. By Theorem 2.1, this implies that the graph of f is semilinear. Intuitively, the difficulty lies in checking whether the amount of the outputs Yi produced by C matches the value given to the decider D as input. What makes this non-trivial is that D does not know whether C has finished computing, and thus must compare Yi while Yi is potentially being changed by C. In particular, D cannot consume Yi or that could interfere with the operation of C.

Let C = (Λ, R, Σ, Γ, σ) be the CRC that stably computes f : ℕk → ℕl, with input species Σ = {X1, …, Xk} and output species Γ = {Y1, …, Yl}. We will modify C to obtain the following CRD D = (Λ′, R′, Σ′, ϒ′, ϕ′, σ′). Let and , where each , are new species. Intuitively, represents the number of Yi’s produced by C and the number of Yi’s consumed by C. The goal is for D to stably decide the predicate . In other words, the initial configuration of D will be the same as that of C except for some copies of , equal to the purported output of f to be tested by D.

Let Λ′ = Λ ∪ YC ∪ YP ∪ {F, T}. Let Σ′ = Σ ∪ YC. Let ϒ′ = {F, T}, with ϕ(F) = 0 and ϕ(T) = 1. Let σ′(T) = 1 and σ′(S) = 0 for all S ∈ Λ′\ (Σ′ ∪ {T}). We will modify R to obtain R′ as follows. For each reaction α that consumes a net number n of Yi molecules, append n products to α. For each reaction α that produces a net number n of Yi molecules, append n products to α. For example, the reaction A + 2B + Y1 + 3Y3 → Z + 3Y1 + 2Y3 becomes .

Then add the following additional reactions to R′, for each i ∈ {1, …, l},

| (3.1) |

| (3.2) |

| (3.3) |

| (3.4) |

Observe that if , then from any reachable configuration we can reach a configuration without any or for all i, and such that no more of either kind can be produced. (The CRC stabilizes and all of and is consumed by reaction 3.1.) In this configuration we must have #T > 0 because the last instance of reaction 3.1 produced it (or if no output was ever produced, T comes from the initial context σ′), and T can no longer be consumed in reactions 3.2–3.3. Thus, since all of F can be consumed in reaction 3.4, a configuration with #T > 0 and #F = 0 is always reachable, and this configuration is output stable.

Now suppose for some output coordinate i* ∈ {1, …, l}. This means that from any reachable configuration we can reach a configuration with either or but not both, and such that for all i, no more of and can be produced. (This happens when the CRC stabilizes and reaction 3.1 consumes the smaller of or .) From this configuration, we can reach a configuration with #F > 0 and #T = 0 through reactions 3.2–3.3. This is an output stable configuration since reactions 3.2–3.4 require T.

The next lemma shows the converse of Lemma 3.1. Intuitively, it uses a random search of the output space to look for the correct answer to the function and uses a predicate decider to check whether the correct solution has been found.

Lemma 3.2

Every semilinear function is stably computable by a CRC.

Proof

Let f : ℕk → ℕl be a semilinear function, and let

denote the graph of f. We then consider the set

Intuitively, Ĝ defines the same function as G, but with each output variable expressed as the difference between two other variables. Note that Ĝ is not the graph of a function since for each y ∈ ℕl there are many pairs (yP, yC) such that yP − yC = y. However, we only care that Ĝ is a semilinear set so long as G is a semilinear set, by Lemma 3.3, proven below.

Then by Theorem 2.1, Ĝ is stably decidable by a CRD D = (Λ, R, Σ, ϒ, ϕ, σ), where

and we assume that ϒ contains only species T and F such that for any output-stable configuration of D, exactly one of #T or #F is positive to indicate a true or false answer, respectively.

Define the CRC C = (Λ′, R′, Σ′, Γ′, σ′) as follows. Let Σ′ = {X1, …, Xk}. Let Γ′ = {Y1, …, Yl}. Let Λ′ = Λ ∪ Γ′. Let σ′(S) = σ(S) for all S ∈ Λ \ Σ, and let σ′(S) = 0 for all S ∈ Λ′ \ (Λ \ Σ). Intuitively, we will have F change the value of y (by producing either or molecules), since F’s presence indicates that D has not yet decided that the predicate is satisfied. It essentially searches for new values of y that do satisfy the predicate. This indirect way of representing the value y is useful because yP and yC can both be increased monotonically to change y in either direction. If D had Yj as a species directly, and if we wanted to test a lower value of yj, then this would require consuming a copy of Yj, but this may not be possible if D has already consumed all of them.

Let R′ be R plus the following reactions for each j ∈ {1, …, l}:

| (3.5) |

| (3.6) |

It is clear that reactions (3.5) and (3.6) enforce that at any time, #Yj is equal to the total number of produced by reaction (3.5) minus the total number of produced by reaction (3.6) (although some of each of or may have been produced or consumed by other reactions in R).

Suppose that f(x) ≠ (#Y1, …, #Yl). Then if there are no F molecules present, the counts of and are not changed by reactions (3.5) and (3.6). Therefore only reactions in R proceed, and by the correctness of D, eventually an F molecule is produced (since eventually D must reach an output-stable configuration answering “false”, although F may appear before D reaches an output-stable configuration, if some T are still present). Once F is present, by the fairness condition (choosing Δ = {Y1, …. Yl}), eventually the value of (#Y1, …, #Yl) will change by reaction (3.5) or (3.6). In fact, every value of (#Y1, …, #Yl) is possible to explore by the fairness condition.

Suppose then that f(x) = (#Y1, …, #Yl). Perhaps F is present because the reactions in R have not yet reached an output-stable “true” configuration. Then perhaps the value of (#Y1, …, #Yl) will change so that f(x) ≠ (#Y1, …, #Yl). But by the fairness condition, a correct value of (#Y1, …, #Yl) must be present infinitely many times, so again by the fairness condition, since from such a configuration it is possible to eliminate all F molecules before producing or molecules, this must eventually happen. When all F molecules are gone while f(x) = (#Y1, …, #Yl) and D is in an output-stable configuration (thus no F can ever again be produced), then it is no longer possible to change the value of (#Y1, …, #Yl), whence C has reached a count-stable configuration with the correct answer. Therefore C stably computes f.

Note that the total molecular count (hence the required volume) of the CRC in Lemma 3.2 is unbounded. In Section 4 we discuss an alternative construction that avoids this problem.

Lemma 3.3

Let k, l ∈ ℤ+, and suppose G ⊆ ℕk × ℕl is semilinear. Define

Then Ĝ is semilinear.

Proof

Let G1, …, Gt be linear sets such that . For each i ∈ {1, …, t}, define

It suffices to show that each Ĝi is linear since . Let i ∈ {1, …, t} and let b, u1, …, ur ∈ ℕk × ℕl be such that

Define the vectors v1, …, vr ∈ ℕk × ℕl × ℕl as vj = (uj, 0l). Here, 0l denotes the vector in ℕl consisting of all zeros. In other words, let vj be uj on its first k + l coordinates and 0 on its last l coordinates. Similarly define b′ = (b, 0l).

Also, for each j ∈ {1, …, l} define vr+j = (0k, 0j−1 10l−j, 0j−1 10l−j). (i.e., a single 1 in the position corresponding to the jth output coordinate, one for yP and one for yC). Without the vectors vr+j, the set of points defined by b′, v1, …, vr would be simply Gi with l 0’s appended to the end of each vector. By adding the vectors vr+j, for each (x, y) ∈ Gi and each yP, yC ∈ ℕl such that y = yP − yC, we have that for some n1, …, nr+l ∈ ℕ; in particular, for n1, …, nr chosen such that and nr+j = yC(j) for each j ∈ {1, …, l}.

Thus , whence Ĝi is linear.

Lemmas 3.1 and 3.2 immediately imply the following theorem.

Theorem 3.4

A function f : ℕk → ℕl is stably computable by a CRC if and only if it is semilinear.

One unsatisfactory aspect of Lemma 3.2 is that we do not reduce the computation of f directly to a CRD deciding the graph G of f, but rather to D deciding a related set Ĝ. It is not clear how to directly reduce to a CRD deciding G since it is not obvious how to modify such a CRD to monotonically produce extra species that could be processed by the CRC computing f. Lemma 3.1, on the other hand, directly uses C as a black-box. Although we know that C, being a chemical reaction computer, is only capable of computing semilinear functions, if we imagine that some external powerful “oracle” controlled the reactions of C to allow it to stably compute a non-semilinear function, then D would decide that function’s graph. Thus Lemma 3.1 is more like the black-box oracle Turing machine reductions employed in computability and complexity theory, which work no matter what mythical device is hypothesized to be responsible for answering the oracle queries.

4 Deterministic computation of semilinear functions in O(∥x∥ log ∥x∥) time

Lemma 3.2 describes how a CRC can deterministically compute any semilinear function. However, there are problems with this construction if we attempt to use it to evaluate the speed of semilinear function computation in the kinetic model. First, the configuration space is unbounded for any input since the construction searches over outputs without setting bounds. Thus, more care must be taken to ensure that any infinite execution sequence will be fair with probability 1 in the kinetic model. What is more, since the maximum molecular count is unbounded, it is not clear how to set the volume for the time analysis. Even if we attempt to properly define kinetics, it seems like any reasonable time analysis of the random search process will result in expected time at least exponential in the size of the output.9

For our asymptotic time analysis, let the input size n = ∥x∥ be the number of input molecules. The total molecular count attainable will always be O(n); thus, by the finite density constraint, we assume the volume υ = Θ(n). We now describe a direct construction for computing semilinear functions in O(n log n) time that does not rely on the search technique explored in the previous section, but rather uses the mathematical structure of the semilinear graph.

For the asymptotic running time analysis, we will repeatedly assume that reactions complete “sequentially”: upstream reactions complete before downstream ones start. Although this is unrealistic, it provides an upper bound on the computation time that is easy to calculate. Note that in proving the correctness of our CRN algorithms we cannot make this assumption, because we must show that the computation is correct no matter in what order the reaction occur.

We use the technique of “running multiple CRNs in parallel” on the same input. To accomplish this it is necessary to split the inputs X1, …, Xk into separate molecules using a reaction , which will add only O(log n) to the time complexity by Lemma 2.3, so that each of the p separate parallel CRNs do not interfere with one another. For brevity we omit stating this formally when the technique is used.

We require the following theorem, due to Angluin, Aspnes, Diamadi, Fischer, and René [1], which states that any semilinear predicate can be decided by a CRD in expected time O(n log n). (This was subsequently reduced to O(n) by Angluin, Aspnes, and Eisenstat [3], but O(n log n) suffices for our purpose.)

Theorem 4.1 ([1])

Let ϕ : ℕk → {0, 1} be a semilinear predicate. Then there is a stable CRD D that decides ϕ, and the expected time to reach an output-stable state is O(n log n), where n is the number of input molecules.

The next lemma shows that affine partial functions can be computed in expected time O(n log n) by a CRC. For its use in proving Theorem 4.4, we require that the output molecules be produced monotonically. Unfortunately, this is impossible for general affine partial functions. For example, consider the function f(x1, x2) = x1 − x2 where the domain of f is dom f = {(x1, x2) ∣ x1 ≥ x2}. By withholding a single copy of X2 and letting the CRC stabilize to the output value #Y = x1 − x2 + 1, then allowing the extra copy of X2 to interact, the only way to stabilize to the correct output value x1 − x2 is to consume a copy of the output species Y. Therefore Lemma 4.2 is stated in terms of an encoding of affine partial functions that allows monotonic production of outputs, encoding the output value y(j) as the difference between the counts of two monotonically produced species and , using the same technique used in the proofs of Lemmas 3.1 and 3.2.

Let f : ℕk ⤏ ℕl be an affine partial function, where, letting y = f(x), for all j ∈ {1, …, l}, for integer ni,j and nonnegative integer bj, ci, and dj. Define f̂ : ℕk ⤏ ℕl × ℕl as follows. For each x ∈ dom f, define yC ∈ ℕl for each j ∈ {1, …, l} as . That is, yC(j) is the negation of the j’th coordinate of the output if taking the weighted sum of the inputs on only those coordinates with a negative coefficient ni,j. The value yP(j) is then similarly defined for all the positive coefficients and the bj offset: for each x ∈ dom f, define yP ∈ ℕl for each j ∈ {1, …, l} as . Because x(i) − ci ≥ 0, yP and yC are always nonnegative. Then if y = f(x), we have that y = yP − yC. Define f̂ as f̂(x) = (yP, yC).

Lemma 4.2

Let f : ℕk ⤏ ℕl be an affine partial function. Then there is a CRC that computes f̂ : ℕk ⤏ ℕl × ℕl in expected time O(n log n), where n is the number of input molecules, such that the output molecules monotonically increase with time (i.e. none are ever consumed), and at most O(n) molecules are ever produced.

Proof

If (yP, yC) = f̂(x), then there exist kl integers n1,1, …, nk,l ∈ ℤ and 2l + k nonnegative integers b1, …, bl, c1, …, ck, d1, …, dl ∈ ℕ such that, for each j ∈ {1, …, l}, and . Define the CRC as follows. It has input species Σ = {X1, …, Xk} and output species .

For each j ∈ {1, …, l}, start with bj copies of . This accounts for the bj offsets.

For each i ∈ {1, …, k}, start with a single molecule , and for each m ∈ {0, …, ci − 1}, add the reactions

| (4.1) |

| (4.2) |

This accounts for the ci offsets by eventually producing x(i) − ci copies of . Reaction (4.1) takes expected time O(n) to complete because each reaction instance takes expected time at most O(n) (since this is the slowest time for any reaction in volume O(n)) and a constant number, ci, of such reaction instances must take place. Once is produced (hence there are now x(i) − ci copies of Xi), reaction (4.2) takes time O(n log n) to complete by Lemma 2.4.

For each i ∈ {1, …, k}, add the reaction

| (4.3) |

This allows each output to be associated with its own copy of the input. Reaction (4.3) takes time O(log n) to complete by Lemma 2.3.

For each i ∈ {1, …, k} and j ∈ {1, …, l}, if ni,j > 0, add the reaction

| (4.4) |

and if ni,j < 0, add the reaction

| (4.5) |

Reaction (4.4) produces dj(yP (j) − bj) copies of , and reaction (4.5) produces djyC(j) copies of . Each takes time O(log n) to complete by Lemma 2.3.

Finally, to produce the correct number of and output molecules, we must divide the count of each and by dj. For each j ∈ {1, …, l}, start with a single copy of a molecule and another . For each j ∈ {1, …, l} and each m ∈ {0, …, dj − 1}, add the reactions

By Lemma 2.4, each of these reactions requires time O(n log n) to complete.

The next lemma characterizes semilinear functions as finite piecewise linear functions, where each of the pieces is defined over an input domain that is a linear set. This will enable us to use CRCs as constructed in Lemma 4.2 to compute semilinear functions in Lemma 4.4.

Lemma 4.3

Let f : ℕk → ℕl be a semilinear function. Then there is a finite set {f1 : ℕk ⤏ ℕl, …, fm : ℕk ⤏ ℕl} of affine partial functions, where each dom fi is a linear set, such that, for each x ∈ ℕk, if fi(x) is defined, then f(x) = fi(x), and .

We split the semilinear function into partial functions, each with a graph that is a linear set. The non-trivial aspect of our argument is showing that (straightforward) linear algebra over the reals can be used to solve our problem about integer arithmetic. For example, consider a partial function defined by the following linear graph: b = 0, u1 = (1, 1, 1), u2 = (2, 0, 1), u3 = (0, 2, 1) (where the first two coordinates are inputs and the last coordinate is the output). Note that the set of points where this function is defined is where x1 + x2 is even. Given an input point x, the natural approach to evaluating the function is to solve for the coefficients n1, n2, n3 such that x can be expressed as a linear combination of u1, u2, u3 restricted to the first two coordinates. Then the linear combination of the last coordinate of u1, u2, u3 with coefficients n1, n2, n3 would give the output. However, the vectors u1, u2, u3 are not linearly independent (yet this linear set cannot be expressed with less than three basis vectors — illustrating the difference between real spaces and integer-valued linear sets), so there are infinitely many real-valued solutions for the coefficients. We show that ui must span a real subspace with at most one output value for any input coordinates. Then we can throw out a vector (say u1) to obtain a set of linearly independent vectors (u2, u3) and solve for n2, n3 ∈ ℝ, and let n1 = 0. In this example, the resulting partial affine function is f(x1, x2) = (x1 + x2)/2.

Proof of Lemma 4.3

Let G = {(x, y) ∈ ℕk × ℕl | f(x) = y} be the graph of f. Since G is semilinear, it is a finite union of linear sets {L1, …, Ln}. It suffices to show that each of these linear sets Lm is the graph of an affine partial function. Since Lm is linear, its projection onto any subset of its coordinates is linear. Therefore dom fm (the projection of Lm onto its first k coordinates) is linear.

We consider each output coordinate separately, since if we can show that each y(j) is an affine function of x, then it follows that y is an affine function of x. Fix j ∈ {1, …, l}. Let be the (k + 1)-dimensional projection of Lm onto the coordinates defined by x and y(j), which is linear because Lm is. Since is linear, there exist vectors b, u1, …, up ∈ ℕk+1 such that .

Consider the real-vector subspace spanned by u1, …, up. It cannot contain the vector j = (0, …, 0, 1)T. Suppose it does. Take a subset of linearly independent vectors spanning this subspace from the above list (we possibly remove some linearly dependent vectors); say u1, …, up′. The unique solution to the coefficients ξ1, …, ξp′ ∈ ℝ such that j = ξ1u1 + … + ξp′up′ can be obtained by using the left-inverse of the matrix with columns u1, …, up′ (the left inverse exists because the matrix is full-rank). Since the elements of the left-inverse matrix are rational functions of the matrix elements, and vectors u1, …, up′ consist of numbers in ℕ, the coefficients ξ1, …, ξp′ are rational. We can multiply all the coefficients by the least common multiple of their denominators c yielding cj = m1u1 + … + mp′up′ where m1, …, mp′ ∈ ℤ. Now consider a point a in defined as b + n1u1 + … + np′up′, where ni ∈ ℕ. Define . We choose a such that ni are large enough that . Since , we have that both a and are in . This is a contradiction because is the graph of a partial function and cannot contain two different points that agree on their first k coordinates. Therefore j is not contained in the span of u1, …, up.

Consider again the real-vector subspace spanned by u1, …, up. Again, let u1, …, up′ be a subset of linearly independent vectors spanning this subspace. Since j is not in it, the subspace must be at most k dimensional. If it is strictly less than k dimensional, add enough vectors in ℕk+1 to the basis set for the spanned subspace to be exactly k-dimensional but not include j. Call this new set of k linearly independent vectors w1, …, wk, where wi = ui for i ∈ {1, …, p′}. Let v1, …, vk ∈ ℕk be w1, …, wk restricted to the first k coordinates. The fact that w1, …, wk are linearly independent, but j is not in the subspace spanned by them, implies that v1, …, vk are linearly independent as well. This can be seen as follows. If v1, …, vk were not linearly independent, then we could write vk = ξ1v1 + … + ξk−1vk−1 for some ξi ∈ ℝ. However, . Since j is proportional to , we obtain a contradiction. Therefore v1, …, vk are linearly independent.

We now describe how to construct an affine function y(j) = f(x) for from w1, …, wk. Let matrix V be the square matrix with v1, …, vk as columns. Let b′ be b restricted to its first k coordinates. We claim that y(j) = b(k + 1) + (w1(k + 1), …, wk(k + 1)) · V−1 · (x − b′). Below we’ll show that this expression computes the correct value y(j). But first we show that it defines a partial affine function f (x). Because v1, …, vk are linearly independent, the inverse V−1 is well-defined. We need to show for integer ni,j and nonnegative integer bj, ci, and dj, and that on the domain of f, x(i) − ci ≥ 0. The offset bj = b(k + 1), which is a non-negative integer because b is a vector of non-negative integers. Since the offset vector b′ is the same for each output dimension, and it is likewise non-negative, we obtain the offset ci = b′(i). Further, since V−1 consists of rational elements (because V consists of elements in ℕ), we can define dj and ni,j as needed. Finally, note that the least value of x(i) that could be in is b′(i) = ci, and thus on the domain of f, x(i) − ci ≥ 0.

Finally, we show that this expression computes the correct value y(j). Let (ξ1, …, ξk)T ≜ V−1 · (x − b′), which implies that . If our value of y(j) is incorrect, then ∃n1, …, np ∈ ℕ such that and agree on the first k coordinates but not on the k + 1st. Recall that the real-vector subspace spanned by w1, …, wk includes the subspace spanned by u1, …, up but does not include j. But is proportional to j and lies in the subspace spanned by w1, …, wk. Therefore we obtain a contradiction, implying that our value of y(j) is computed correctly.

The next lemma shows that every semilinear function f can be computed by a CRC in O(n log n) time. It uses a systematic construction based on breaking down f into a finite number of partial affine functions f1, …, fm, in which deciding which fi to apply is itself a semilinear predicate. Intuitively, the construction proceeds by running many CRCs and CRDs in parallel on input x, computing all fi’s and all predicates of the form ϕi = “x ∈ dom fi?” The ϕi predicate computation is used to activate (in the case of a “true” answer) or deactivate (in case of “false”) the outputs of fi. Since eventually one CRD stabilizes to “true” and the remainder to “false”, eventually the outputs of one fi are activated and the remainder deactivated, so that the value f(x) is properly computed.

Lemma 4.4

Let f : ℕk → ℕl be semilinear. Then there is a CRC C that stably computes f, and the expected time for C to reach a count-stable configuration on input x is O(n log n), where n is the number of input molecules (the O() constant depends on f but not on n).

Proof

The CRC will have input species Σ = {X1, …, Xk} and output species Γ = {Y1, …, Yl}.

By Lemma 4.3, there is a finite set {f1 : ℕk ⤏ ℕl, …, fm : ℕk ⤏ ℕl} of affine partial functions, where each dom fi is a linear set, such that, for each x ∈ ℕk, if fi(x) is defined, then f(x) = fi(x). We compute f on input x as follows. Since each dom fi is a linear (and therefore semilinear) set, we compute each predicate ϕi = “x ∈ dom fi and (∀i′ ∈ {1, …, i − 1}) x ∉ dom fi′?” by separate parallel CRD’s. The latter condition ensures that for each x, precisely one of the predicates is true, in case the domains of the partial functions have nonempty intersection.

By Lemma 4.2, we can compute each f̂i by parallel CRC’s. Assume that for each i ∈ {1, …, m} and each j ∈ {1, …, l}, the jth pair of outputs y P (j) and y C (j) of the ith function is represented by species and . We interpret each and as an “inactive” version of “active” output species and .

For each i ∈ {1, …, m}, we assume that the CRD computing the predicate ϕi represents its output by voting species Ti to represent “true” and Fi to represent “false”. Then add the following reactions for each i ∈ {1, …, m} and each j ∈ {1, …, l}:

| (4.6) |

| (4.7) |

| (4.8) |

The latter two reactions implement the reverse direction of the first reaction using only bimolecular reactions. Also add the reactions

| (4.9) |

| (4.10) |

and

| (4.11) |

| (4.12) |

That is, a “true” answer for function i activates the ith output and a “false” answer deactivates the ith output. Eventually each CRD stabilizes so that precisely one i has Ti present, and for all i′ ≠ i, Fi′ is present. At this point, all outputs for the correct function f̂i are activated and all other outputs are deactivated. The reactions enforce that at any time, . In particular, #Yj ≥ #Kj and #Yj ≥ #Mi,j at all times, so there will never be a Kj or Mi,j molecule that cannot participate in the reaction of which it is a reactant. Eventually and stabilize to 0 for all but one value of i (by reaction (4.10)), and for this value of i, stabilizes to y(j) and stabilizes to 0 (by reaction (4.11)). Eventually #Kj stabilizes to 0 by reaction (4.12). Eventually #Mi,j stabilizes to 0 since Fi is absent for the correct function f̂i. This ensures that #Yj stabilizes to y(j).

It remains to analyze the expected time to stabilization. Recall n = ∥x∥. By Lemma 4.2, the expected time for each affine function computation to complete is O(n log n). Since we have m parallel computations, and m depends on f but not n, the expected time for all of the computations to complete is O(n log n). We must also wait for each predicate computation to complete. By Theorem 2.1, each of these predicates takes expected time at most O(n log n) to complete, so again all of them complete in expected time O(n log n).

Eventually, the Ti leaders must convert inactive output species to active, and Fi′ (for i′ ≠ i) must convert active output species to inactive. By Lemma 2.4, each of these requires at most O(n log n) expected time, and therefore they all complete in expected time at most O(n log n). Finally, reactions (4.11) and (4.12) are at least as fast as the process described in Lemma 2.4. Thus it takes O(n log n) expected time for reactions (4.11) and (4.12) to consume all and Kj molecules, at which point the system has stabilized.

5 Optimization to polylog(∥x∥) time

Angluin, Aspnes, and Eisenstat combined the slow, deterministic predicate-deciding results of [2] with a fast, error-prone simulation of a bounded-space Turing machine to show that semilinear predicates can be computed without error in expected polylogarithmic time [3]. We show that a similar technique implies that semilinear functions can be computed by CRNs without error in expected polylogarithmic time in the kinetic model, combining the same Turing machine simulation with our O(n log n) construction described in Lemma 4.4.

We in fact use the construction of [3] in order to conduct the fast, error-prone computation in our proof of Theorem 5.2. The next theorem formalizes the properties of that construction that we require.

Theorem 5.1 ([3])

Let f : ℕk → ℕl be a function by a t(m)-time-bounded, s(m)-space-bounded Turing machine, where m ≈ log n is the input length in binary, and let c ∈ ℕ. Then there is a CRC C that computes f correctly with probability at least 1 − n−c, and the expected time for C to reach a count-stable configuration is O(t(m)5). Furthermore, the total molecular count never exceeds O(2s(m)).

Semilinear functions on an m-bit input can be computed in time O(m) and space O(m) on a Turing machine. Therefore the bounds on CRC expected time and molecular count stated in Theorem 5.1 are O(log5n) and O(n), respectively, when expressed in terms of the number of input molecules n.

Theorem 5.1 is a rephrasing of the main result of Angluin, Aspnes, and Eisenstat [3]. However, a modification of their construction is required to achieve “uniformity” with respect to input size. Rephrasing their construction to our language of CRNs, they allow a different amount of “fuel” species (call it F) for every input size. Indeed, because their model exclusively uses two-reactant, two-product reactions, and thus preserves the total molecular count, this non-uniformity is necessary: the required amount of fuel molecules depends on the space usage s(m), so that the tape of the Turing machine can be accurately represented throughout the computation. We, however, require a uniform initial state. Luckily, we do not need to supply these fuel molecules as part of the input configuration. Instead, these fuels may be generated from the inputs by letting the first reaction of the input Xi be , where is the input interacting with the rest of the CRC, and c ∈ ℕ is chosen large enough that the CRC of [3] will not run out of F molecules. Since the CRC of [3] is used here only to compute semilinear functions, which require only O(n) space to compute on a Turing machine, c · n copies of F are sufficient to run this CRC if c is sufficiently large.

The following theorem is the main theorem of this section.

Theorem 5.2

Let f : ℕk → ℕl be semilinear. Then there is a CRC C that stably computes f, and the expected time for C to reach a count-stable configuration is O(log5 n), where n is the number of input molecules.

Proof

Our CRC will use the counts of Yj for each output dimension y(j) as the global output, and begins by running in parallel:

A fast, error-prone CRC F to compute y = f(x) with high probability, as in Theorem 5.1. For any constant c > 0, we can design F so that it is correct and finishes in time O(log5 n) with probability at least 1 − n−c, while never reaching total molecular count higher than O(n). We modify the CRC to store the output in three separate sets of species Yj (the global output), Bj, and Cj redundantly (i.e. y = b = c) as follows. Upon halting F copies an “internal” output species Ŷj to Yj, Bj, and Cj through reactions H + Ŷj → H + Yj + Bj +Cj (in asymptotically negligible time).10 In this way we are guaranteed that the amount of Yj produced by C is the same as the amounts of Bj and Cj no matter whether its computation is correct or not. This redundant storage is used for later comparison and possible replacement with the slow, deterministic CRC (described next).

A slow, deterministic CRC S for y′ = f(x). It is constructed as in Lemma 4.4, running in expected O(n log n) time.

A slow, deterministic CRD D for the semilinear predicate “b = f(x)?”. It is constructed as in Theorem 2.1 and runs in expected O(n) time.

Following Angluin, Aspnes, and Eisenstat [3], we construct a “timed trigger” as follows, using a single leader molecule, a single “marker” molecule, and n = ∥x∥ “interfering” molecules. To ensure that there are always n interfering molecules, we can let them be the input molecules, and a special species I that is generated in the reactions , where is the input species interacting with the remainder of the CRC. The leader will then interact with both Xi and I as interfering molecules.

The leader fires the trigger if it encounters the marker molecule, M, d times without any intervening reactions with the interfering molecules, where d is a constant. Note that choosing d larger increases the expected time for this event to happen, since it becomes more likely that the leader encounters an interfering molecule before encountering the M molecule d times in a row. This happens rarely enough that with high probability the trigger fires after F and D finishes (time analysis is presented below). When the trigger fires, it checks if D is outputting a “no” (e.g. has a molecule of L0), and if so, produces a molecule of Pfix. This indicates that the output of the fast CRC F is not to be trusted, and the system should switch from the possible erroneous result of F to the sure-to-be correct result of S.

Once a Pfix is produced, the system converts the output molecules of the slow, deterministic CRC S to the global output Yj, and kills enough of the global output molecules to remove the ones produced by the fast, error-prone CRC:

| (5.1) |

| (5.2) |

| (5.3) |

Finally, Pfix triggers a process consuming all species of F other than Yj, Bj, and Cj in expected O(log n) time so that afterward, F cannot produce any output molecules. More formally, let QF be the set of all species used by F. For all , add the reactions

| (5.4) |

| (5.5) |

where K ∉ QF is a unique species.

First, observe that the output will always eventually converge to the right answer, no matter what happens: If Pfix is eventually produced, then the output will eventually be exactly that given by S which is guaranteed to converge correctly. If Pfix is never produced, then the fast, error-prone CRC must produce the correct amount of Yj — otherwise, D will detect a problem.

For the expected time analysis, let us first analyze the trigger. The probability that the trigger leader will fire on any particular reaction number is at most n−d. In time n2, the expected number of leader reactions is O(n2). Thus, the expected number of firings of the trigger in n2 time is n−d+2. This implies that the probability that the trigger fires before n2 time is at most n−d+2. The expected time for the trigger to fire is O(nd).

We now consider the contribution to the total expected time from 3 cases:

F is correct, and the trigger fires after time n2. There are two subcases: (a) F finishes before the trigger fires. Conditional on this, the whole system converges to the correct answer, never to change it again, in expected time O(log5 n). This subcase contributes at most O(log5 n) to the total expected time. (b) F finishes after the trigger fires. In this case, we may produce a Pfix molecule and have to rely on the slow CRC S. The probability of this case happening is at most n−c. Conditional on this case, the expected time for the trigger to fire is still O(nd). The whole system converges to the correct answer in expected time O(nd), because everything else is asymptotically negligible. Thus the contribution of this subcase to the total expectation is at most O(n−c · nd) = O(n−c+d).

F is correct, but the trigger fires before n2 time. In this case, we may produce a Pfix molecule and have to rely on the slow CRC S for the output. The probability of this case occurring is at most n−d+2. Conditional on this case occurring, the expected time for the whole system to converge to the correct answer can be bounded by O(n2). Thus the contribution of this subcase to the total expectation is at most O(n−d+2 · n2) = O(n−d+4).

F fails. In this case we’ll have to rely on the slow CRC S for the output again. Since this occurs with probability at most n−c, and the conditional expected time for the whole system to converge to the correct answer can be bounded by O(nd) again, the contribution of this subcase to the total expectation is at most O(n−c · nd) = O(n−c+d).

So the total expected time is bounded by O(log5 n) + O(n−c+d) + O(n−d+4) + O(n−c+d) = O(log5 n) for d > 4, c > d.

6 Conclusion

We defined deterministic computation of CRNs corresponding to the intuitive notion that certain systems are guaranteed to converge to the correct answer no matter what order the reactions happen to occur in. We showed that this kind of computation corresponds exactly to the class of functions with semilinear graphs. We further showed that all functions in this class can be computed efficiently.

A work on chemical computation can stumble by attempting to shoehorn an ill-fitting computational paradigm into chemistry. While our systematic construction may seem complex, we are inspired by examples like those shown in Fig. 1 that appear to be good fits to the computational substrate. While delineation of computation that is “natural” for a chemical system is necessarily imprecise and speculative, it is examples such as these that makes us satisfied that we are studying a form of natural chemical computation.

In theoretical computer science, the notion of randomized computation has received significant attention. However, the additional computational power given by error-prone computation compared with deterministic computation is usually rather limited. For example, the class of languages decided by Turing machines, whether they are required to be deterministic or randomized (or even nondeterministic), is the same. In the case of polynomial-time Turing machines, it is widely conjectured [19] that P = BPP, i.e., that randomization adds at most a polynomial speedup to any predicate computation. In contrast, CRNs are unusual in the large gap between the power of randomized and deterministic computation: While randomized CRNs can simulate arbitrary Turing machines with high probability [24], deterministic computation is severely limited to semilinear functions only.

Our systematic constructions (unlike the examples in Fig. 1) rely on a carefully chosen initial context — the “extra” molecules that are necessary for the computation to proceed. Some of these species need to be present in a single copy (“leader”). We left unanswered whether it may be possible to dispense with this level of control of the chemical environment, but this question has since been answered affirmatively by Doty and Hajiaghayi [12]. However, the construction of [12] runs in expected time O(n); it remains open whether there is are leaderless CRNs computing any semilinear function in sublinear expected time.

In contrast to the CRN model discussed in this paper, which is appropriate for small chemical systems in which every single molecule matters, classical “Avogadro-scale” chemistry is modeled using real-valued concentrations that evolve according to mass-action ODEs. Moreover, despite relatively small molecular counts, many biological chemical systems are well-modeled by massaction ODEs. While the scaling of stochastic CRNs to mass-action systems is understood from a dynamical systems perspective [17], little work has been done comparing their computational abilities. There are hints that single/few-molecule CRNs perform a fundamentally different kind of computation. For example, recent theoretical work has investigated whether CRNs can tolerate multiple copies of the network running in parallel finding that they can lose their computational abilities [9, 10].

Does our notion of deterministic computation have an equivalent in mass-action systems? Consider what happens when the CRN shown in Fig. 1(c) is viewed as a mass-action reaction network, with (non-negative) real-valued inputs [X1]0, [X2]0 and output [Y]∞ (where we use the standard mass-action convention: [·]0 for the initial concentration, and [·]∞ for the equilibrium concentration). In the limit t → ∞, the mass-action system will converge to the correct output amount of [Y]∞ = max([X1]0, [X2]0), and moreover, the output amount is independent of what (non-zero) rate constants are assigned to the reactions. Thus one is tempted to connect the notion of deterministic computation studied here and the property of robustness to rate parameters of a mass-action system. Parameter robustness is a recurring motif in biologically relevant reaction networks due to much evidence that biological systems tend to be robust to parameters [5].