
Abstract

Purpose:

The use of time in outpatient cancer clinics is a marker of quality and efficiency. Inefficiencies such as excessive patient wait times can have deleterious effects on clinic flow, functioning, and patient satisfaction. We propose a novel method of objectively measuring patient time in cancer clinic examination rooms and evaluating its impact on overall system efficiency.

Methods:

We video-recorded patient visits (N = 55) taken from a larger study to determine patient occupancy and flow in and out of examination rooms in a busy urban clinic in a National Cancer Institute–designated comprehensive cancer center. Coders observed video recordings and assessed patient occupancy time, patient wait time, and physician-patient interaction time. Patient occupancy time was compared with scheduled occupancy time to determine discrepancy in occupancy time. Descriptive and correlational analyses were conducted.

Results:

Mean patient occupancy time was 94.8 minutes (SD = 36.6), mean wait time was 34.9 minutes (SD = 28.8), and mean patient-physician interaction time was 29.0 minutes (SD = 13.5). Mean discrepancy in occupancy time was 40.3 minutes (range, 0.75 to 146.5 minutes). Scheduled occupancy time was not correlated with patient occupancy time, patient-physician interaction time, or patient wait time, and discrepancy in occupancy time was not correlated with patient-physician interaction time.

Conclusion:

The method is useful for assessing clinic efficiency and patient flow. There was no relationship between scheduled and actual time patients spend in exam rooms. Such data can be used in the design of interventions that reduce patient wait times, increase efficient use of resources, and improve scheduling patterns.

Introduction

Inefficient use of time drains resources and disrupts clinic flow.1 This inefficiency also affects patients, who often experience long waits for appointments with their oncologists, which reduces their satisfaction,2–6 increases their distress,7 and increases patient and caregiver costs.8–9 There is also evidence that long wait times may directly and indirectly reduce patient adherence to recommended treatment.10–12

Quality health care is, by definition, care that uses resources effectively and efficiently.13 However, empirical studies of the use of resources and the costs of poor quality are rare.14 This is especially true for studies of the relationships among the use of clinic time for patient visits, patient flow (ie, the movement of patients through the care setting15), and costs to systems and to patients.1 A few notable exceptions have directly linked time associated with cancer care (ie, travel time, wait time, and time for treatment) to dollar amounts,8–9 but few if any studies have directly reported on the breakdown of patient time in the examination room. Valid, reliable, and objective metrics are still needed to demonstrate empirically how time is used in examination rooms so that the degree of inefficiency can be measured, potential reasons for inefficiencies can be identified, and interventions to improve flow can be developed.

Long wait times for patients are not just an inconvenience that reduces patient satisfaction within and outside of cancer centers.3,6,16–20 Time-focused research has consistently demonstrated that wait time significantly influences the emotional turmoil and health outcomes of patients with cancer.3 An investigation of patient perceptions of oncology outpatient clinics assessed 252 patients' perceptions of factors that influenced their satisfaction. Among these patients, 27% described the wait times as “excessively long” and as overwhelmingly the worst part of an oncology clinic visit, worse even than uncertainty over what they would be told during the visit or the distress and pain of a physical examination.7 More recent research has shown that more than 25% of patients with cancer rated wait times as high or very high in emotional cost and physiological distress,4 and that long wait times can affect treatment adherence.5,10–12

One specific and important component of patient time is the time patients spend in the cancer clinic examination room. However, this aspect of time has not been assessed in prior research, primarily because it is difficult to measure objectively what transpires once a patient enters an examination room. In this article, we propose a novel way to measure patient time and patient flow within the examination room. Specifically, we describe customized, state-of-the-art video technology at a National Cancer Institute (NCI)–designated comprehensive cancer center that permits remote video recording of patients and their oncologist during the time each is in the examination room.21

The primary purpose of this article is to offer an evaluation of the utility of a novel method for objectively measuring patient time in the examination room and how it is used. We assess total patient occupancy time in the examination room, patient wait time before the arrival of the oncologist, physician-patient interaction time, scheduled occupancy time, and discrepancy in occupancy time. Subsequently, we examine the relationships among the various types of time. In addition to providing direct information on patient time in examination rooms, these data can also be used to evaluate efficiency in clinics and patient flow with limited physical resources.

Methods

Patients and Setting

This observational study is based on video-recorded interaction data collected as part of a larger NCI-funded investigation of the process, quality, and outcomes of patient-oncologist communication and treatment decision making in racially discordant interactions. Data for this secondary analysis include 55 video recordings collected in the outpatient breast cancer clinic of an NCI-designated comprehensive cancer center serving an urban area. The data were collected between March 2012 and April 2014. The recordings are not necessarily representative of all breast cancer clinic visits during this time frame. The parent study had strict inclusion requirements (eg, the visit had to be for the first discussion of nonsurgical treatments for breast cancer, including chemotherapy or endocrine therapy). In addition, a large percentage (31.5%) of the patients identified from medical records as eligible for the parent study could not be contacted before the scheduled visit, and the refusal rate among patients who were contacted was 47.4%. (We believe the high refusal rate was due in part to the substantial percentage of patients who had learned they had breast cancer only shortly before they were approached to participate in the parent study. Not surprisingly, this was a difficult time for some patients to consider an additional request.)

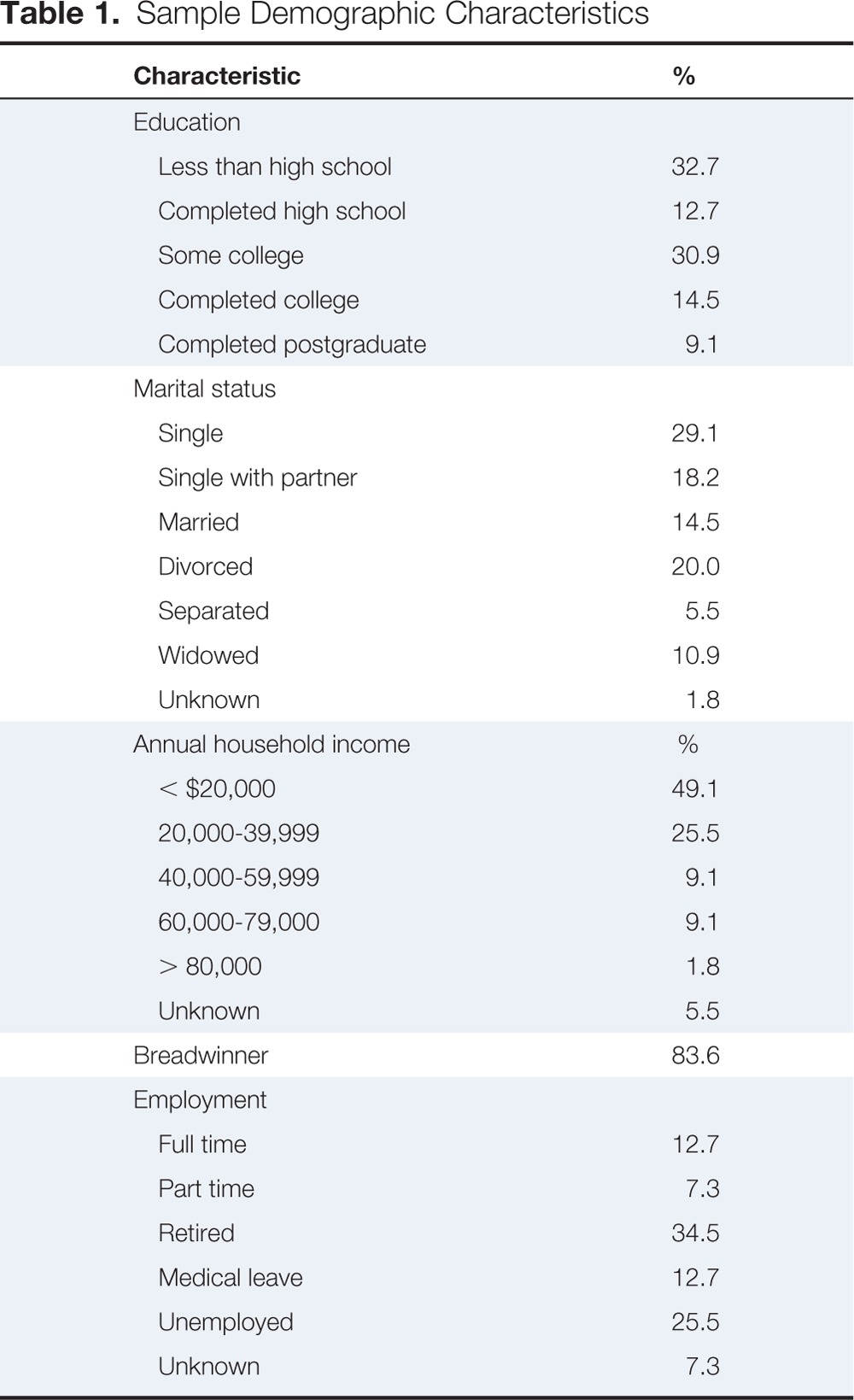

Medical oncologists were included if they saw patients with breast cancer at the participating clinic. Patients of these oncologists were included if they (1) self-identified as Black, African American, or Afro-Caribbean; (2) were between the ages of 25 and 85 years; (3) had a confirmed diagnosis of breast cancer; and (4) were visiting a participating medical oncologist for the first time to discuss and decide whether to begin chemotherapy or endocrine treatment for their breast cancer. In terms of previous treatment, 61.8% of the sample (n = 34) had surgery before this visit but had not met with a medical oncologist to decide whether chemotherapy or endocrine therapy would be appropriate treatments. All patients were native English speakers, and all physicians were either native English speakers or fluent in English. No translation services were needed. Patient demographic characteristics are shown in Table 1.

Table 1.

Sample Demographic Characteristics

| Characteristic | % |
|---|---|
| Education | |
| Less than high school | 32.7 |
| Completed high school | 12.7 |
| Some college | 30.9 |
| Completed college | 14.5 |
| Completed postgraduate | 9.1 |
| Marital status | |
| Single | 29.1 |
| Single with partner | 18.2 |
| Married | 14.5 |
| Divorced | 20.0 |
| Separated | 5.5 |
| Widowed | 10.9 |
| Unknown | 1.8 |
| Annual household income | |
| < $20,000 | 49.1 |
| $20,000-$39,999 | 25.5 |
| $40,000-$59,999 | 9.1 |
| $60,000-$79,000 | 9.1 |
| > $80,000 | 1.8 |
| Unknown | 5.5 |
| Breadwinner | 83.6 |
| Employment | |
| Full time | 12.7 |
| Part time | 7.3 |
| Retired | 34.5 |
| Medical leave | 12.7 |
| Unemployed | 25.5 |
| Unknown | 7.3 |

Participating patients completed questionnaires assessing background sociodemographic and psychological variables before seeing the oncologist. Each examination room was equipped with unobtrusive digital video recording devices that recorded all occupants of the examination room during patient visits. Equipment was controlled from a remote location by research assistants.21

The study was approved by the affiliated university's institutional review board. All physicians and patients provided consent as study participants, which included permission to be video recorded. Our previous work has demonstrated that the recording system does not unduly alter physician and patient interactions and behaviors.21–24

Data Collection and Analysis

To measure each type of time in patient visits, a trained research assistant observed and coded each video-recorded visit using Studiocode software.25 The research assistant coded the following: patient occupancy time (the total time the patient was in the examination room); patient wait time (the time the patient spent in the examination room before seeing the oncologist, excluding time when other health care providers were present); and physician-patient interaction time (the time the patient and oncologist were both in the room). After coding was completed, the first author compared patient occupancy time with scheduled occupancy time (the allocated time provided by clinic scheduling personnel, obtained from clinic records) to obtain measures of discrepancy in occupancy time (the difference between scheduled occupancy time and patient occupancy time). Appointment times are determined a priori using a standardized approach: patients are scheduled for 60-minute appointments if they have never met with their oncologist. The large majority (94.5%) of our sample were this type of patient. The remaining 5.5% were scheduled for 20- or 40-minute appointments because they had met their oncologist previously but had not discussed nonsurgical treatment options (eg, chemotherapy or endocrine therapy).
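The time measures defined above reduce to simple interval arithmetic over coded events. The sketch below is a minimal illustration, not the study's Studiocode workflow: it assumes each visit has been exported as hypothetical (start, end) intervals in minutes for the patient's time in the room, the oncologist's presence, and other providers' presence, and that other-provider intervals do not overlap one another. The class and field names (`CodedVisit`, `patient_in_room`, and so on) are illustrative only. Discrepancy is taken as an absolute difference, matching the "(absolute)" label in Table 2.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical representation: (start, end) in minutes on the video timeline.
Interval = Tuple[float, float]


def overlap(a: Interval, b: Interval) -> float:
    """Length of the intersection of two intervals (0.0 if they do not meet)."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


@dataclass
class CodedVisit:
    """One coded visit; all field names are illustrative assumptions."""
    patient_in_room: Interval
    oncologist_present: List[Interval]
    other_providers_present: List[Interval] = field(default_factory=list)
    scheduled_minutes: float = 60.0  # standard slot for patients new to the oncologist

    def occupancy(self) -> float:
        """Total time the patient was in the examination room."""
        start, end = self.patient_in_room
        return end - start

    def interaction(self) -> float:
        """Time the patient and oncologist were both in the room."""
        return sum(overlap(self.patient_in_room, iv) for iv in self.oncologist_present)

    def wait(self) -> float:
        """Time before the oncologist's first arrival, minus time other providers
        were present (assumes at least one oncologist interval and that
        other-provider intervals do not overlap each other)."""
        entry = self.patient_in_room[0]
        first_arrival = min(iv[0] for iv in self.oncologist_present)
        window = (entry, first_arrival)
        with_others = sum(overlap(window, iv) for iv in self.other_providers_present)
        return (first_arrival - entry) - with_others

    def discrepancy(self) -> float:
        """Absolute difference between actual and scheduled occupancy."""
        return abs(self.occupancy() - self.scheduled_minutes)


# Made-up visit: nurse present 5-15 min, oncologist present 40-70 min.
visit = CodedVisit(
    patient_in_room=(0.0, 95.0),
    oncologist_present=[(40.0, 70.0)],
    other_providers_present=[(5.0, 15.0)],
)
print(visit.occupancy(), visit.wait(), visit.interaction(), visit.discrepancy())
# 95.0 30.0 30.0 35.0
```

In this made-up visit, wait time is the 40 minutes before the oncologist arrives minus the 10 minutes a nurse was present; summing overlaps (rather than taking a single interval) also handles an oncologist who leaves and returns.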

Descriptive statistics were computed for all five types of time (patient occupancy time, patient wait time, physician-patient interaction time, scheduled occupancy time, and discrepancy in occupancy time). Because the outcome data were not normally distributed, subsequent analyses used nonparametric tests. First, because some patients had already undergone surgery and some had not, Mann-Whitney U tests were conducted to test for differences in each type of time between these two groups. Spearman rank-order correlations (rho) were then computed to determine how the types of time were related.
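For readers less familiar with these tests, the sketch below shows what each statistic computes, using only the Python standard library: Spearman's rho is the Pearson correlation of tie-averaged ranks, and the Mann-Whitney U statistic counts, via rank sums, how often values in one group exceed values in the other. It computes the statistics only, not p-values; in practice a statistics package would be used (for example, `scipy.stats.spearmanr` and `scipy.stats.mannwhitneyu`), and the software the authors used is not stated here. All input values are hypothetical, not study data.

```python
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Ordinary Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / (sxx * syy) ** 0.5


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def mann_whitney_u(group_a: Sequence[float], group_b: Sequence[float]) -> Tuple[float, float]:
    """Return (U_a, U_b); U_a counts pairs where an a-value exceeds a b-value (ties count 0.5)."""
    ranks = average_ranks(list(group_a) + list(group_b))
    n_a, n_b = len(group_a), len(group_b)
    u_a = sum(ranks[:n_a]) - n_a * (n_a + 1) / 2
    return u_a, n_a * n_b - u_a


# Hypothetical minutes, for illustration only.
print(spearman_rho([70, 85, 95, 120, 150], [10, 25, 30, 50, 80]))  # 1.0
print(mann_whitney_u([90, 100, 110], [80, 95, 130]))  # (5.0, 4.0)
```

Rank-based statistics depend only on ordering, which is why they tolerate the skewed time distributions described above.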

Results

Participants

The sample included 55 female patients with breast cancer (mean age, 57.2 years; SD = 10.8). The 55 clinic visits involved five medical oncologists, each of whom saw an average of 11 patients (SD = 7.6; range, 1 to 20).

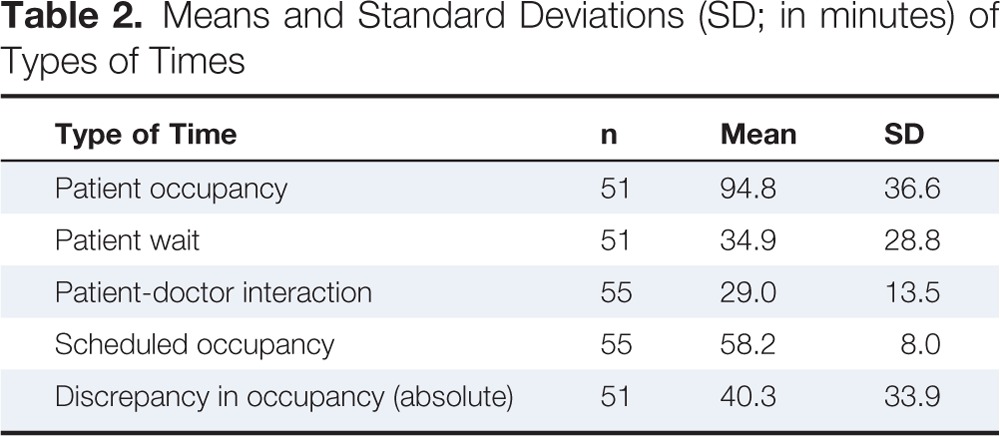

Types of Time

Average patient occupancy time was 94.8 minutes (SD = 36.6), and average patient wait time before seeing the oncologist, not including time when other health care providers were present, was 34.9 minutes (SD = 28.8; Table 2). Average physician-patient interaction time was 29.0 minutes (SD = 13.5). Average discrepancy in occupancy time was 40.3 minutes (range, 0.75 to 146.5 minutes). Only 10.9% (n = 6) of visits occupied the room for less time than originally scheduled. Mann-Whitney U tests comparing patients who had undergone surgery with those who had not found no differences in time.

Table 2.

Means and Standard Deviations (SD; in minutes) of Types of Time

| Type of Time | n | Mean | SD |
|---|---|---|---|
| Patient occupancy | 51 | 94.8 | 36.6 |
| Patient wait | 51 | 34.9 | 28.8 |
| Patient-doctor interaction | 55 | 29.0 | 13.5 |
| Scheduled occupancy | 55 | 58.2 | 8.0 |
| Discrepancy in occupancy (absolute) | 51 | 40.3 | 33.9 |

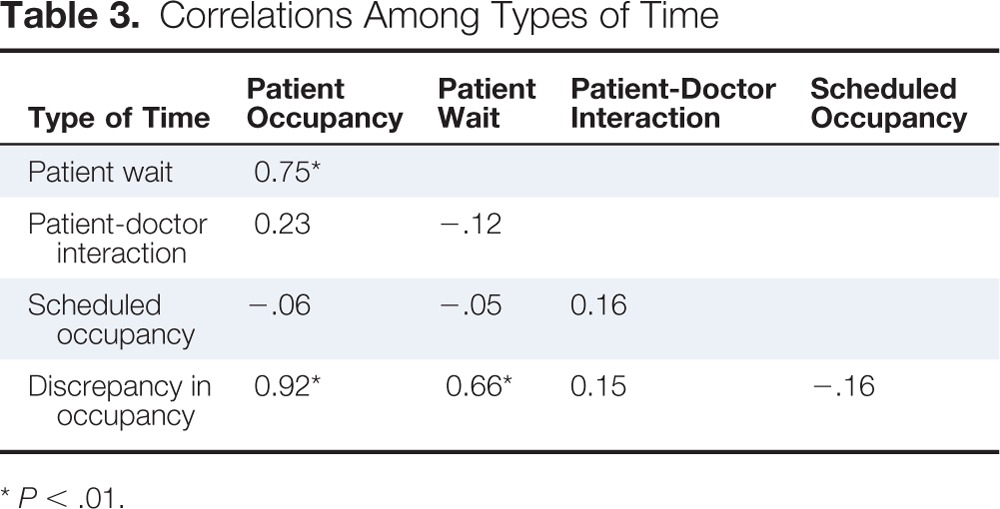

Relationships Among Types of Time

Scheduled occupancy time was unrelated to patient occupancy time (rs[51] = −0.06, ns), patient-physician interaction time (rs[55] = 0.16, ns), and patient wait time (rs[51] = −0.05, ns). Discrepancy in occupancy time was unrelated to physician-patient interaction time (rs[51] = 0.15, ns; Table 3).
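As a rough check on the "ns" labels, a Spearman coefficient can be converted to an approximate two-sided p-value with the large-sample normal approximation z = rs√(n − 1). This approximation is our choice for illustration and not necessarily the test the authors ran; the coefficients and sample sizes below are those reported in the text and Table 3.

```python
import math


def spearman_p_approx(rho: float, n: int) -> float:
    """Approximate two-sided p-value for Spearman's rho under H0: rho = 0,
    using the large-sample normal approximation z = rho * sqrt(n - 1)."""
    z = abs(rho) * math.sqrt(n - 1)
    # Two-sided tail probability of a standard normal: erfc(|z| / sqrt(2)).
    return math.erfc(z / math.sqrt(2))


# (rho, n) pairs as reported in the Results text and Table 3.
reported = {
    "scheduled vs occupancy": (-0.06, 51),
    "scheduled vs interaction": (0.16, 55),
    "scheduled vs wait": (-0.05, 51),
    "discrepancy vs interaction": (0.15, 51),
    "occupancy vs wait": (0.75, 51),
}

for label, (rho, n) in reported.items():
    p = spearman_p_approx(rho, n)
    print(f"{label}: rho = {rho:+.2f}, n = {n}, approx p = {p:.2g}")
```

Under this approximation, the correlations reported as ns all give p-values well above .05, while the occupancy-wait correlation falls far below .01, consistent with the pattern in Table 3. With samples of about 50, only correlations larger than roughly 0.28 in magnitude would reach p < .05.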

Table 3.

Correlations Among Types of Time

| Type of Time | Patient Occupancy | Patient Wait | Patient-Doctor Interaction | Scheduled Occupancy |
|---|---|---|---|---|
| Patient wait | 0.75* | | | |
| Patient-doctor interaction | 0.23 | −0.12 | | |
| Scheduled occupancy | −0.06 | −0.05 | 0.16 | |
| Discrepancy in occupancy | 0.92* | 0.66* | 0.15 | −0.16 |

*P < .01.

Discussion

We assessed the utility of video recordings of patient occupancy in examination rooms at an NCI-designated comprehensive cancer center as a method for objectively measuring patient time and how it is used in oncology examination rooms. This method proved useful for assessing patient occupancy of the examination room, patient wait time, and physician-patient interaction time. We stress that the data reported may not be representative of the patients in this clinic, but they do offer a demonstration and an evaluation of this method as a means to measure an important aspect of health care quality: patient time in the examination room.

Our focus on assessing an objective measure of examination room time was prompted by the inclusion of “timeliness” and “efficiency” as two aims of health care quality improvement in the Institute of Medicine's seminal report “Crossing the Quality Chasm.”26 Increasing concerns over poorly managed health care systems and the associated economic costs caused by lapses in the system (which are often time related) have led health care policymakers, practitioners, and researchers to assess a variety of areas of practice in an effort to improve the quality of health care.27–29 A system-level lens is appropriate for this type of methodological assessment as it is commonly recognized that health care quality is a systems attribute27 that requires attention to the design and organization of systems, alongside the clinical aspects of care.28

Secondarily, we sought to determine how well this method reflects overall clinic efficiency and patient flow. In this clinic, our data showed no systematic relationship between scheduled appointment length and actual patterns of patient flow, which makes day-to-day operations difficult to plan and control. In addition, the discrepancy in occupancy time was unrelated to the time patients spent with the physician, indicating that excess occupancy time is not necessarily devoted to longer conversations between patient and physician.

We found that patients with cancer experienced substantial wait times in examination rooms, waiting an average of 34.9 minutes. Patients occupied examination rooms for an average of 43.7 minutes longer than scheduled (excluding cases in which occupancy time was less than scheduled time). Examination room costs at this cancer center are estimated at $55 per hour, meaning that an average of $40.06 may be lost per office visit. For our sample alone ($40.06 × 55 patients), $2,203.30 was spent on inefficiencies. This amount does not include staffing costs or costs to patients, such as taking time off from work or paying for childcare. These data are even more striking considering that patients' examination room wait times in this study were in addition to the time they spent waiting in the reception area. Clinic records show that in 2013, patients typically waited between 22 and 32 minutes from registration to entering the examination room. Our cameras therefore captured only a portion of patients' waiting experience, indicating further inefficiencies in the system and further negative effects on the patient experience.
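The room-cost arithmetic in the preceding paragraph can be reproduced directly. This sketch assumes, as the text does, that room cost accrues linearly per minute at the estimated $55 per hour and that the per-visit figure is multiplied across all 55 visits; the constant and function names are our own.

```python
ROOM_COST_PER_HOUR = 55.0   # estimated examination room cost at this center ($/h)
MEAN_EXCESS_MINUTES = 43.7  # mean overrun, excluding visits shorter than scheduled
N_VISITS = 55               # the text applies the per-visit figure to all 55 visits


def excess_room_cost(excess_minutes: float, cost_per_hour: float) -> float:
    """Cost of excess occupancy, assuming cost accrues linearly per minute."""
    return excess_minutes / 60.0 * cost_per_hour


per_visit = round(excess_room_cost(MEAN_EXCESS_MINUTES, ROOM_COST_PER_HOUR), 2)
sample_total = round(per_visit * N_VISITS, 2)
print(f"per visit: ${per_visit:.2f}; sample total: ${sample_total:,.2f}")
# per visit: $40.06; sample total: $2,203.30
```

Rounding the per-visit figure to cents before multiplying reproduces the $2,203.30 total given in the text.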

Importantly, our findings demonstrate that, generally, it is not one or two physicians who are thwarting clinic efficiency; rather, it is likely the system that is failing to accommodate the context of care. Many factors can account for lags and inefficiencies. Observations by physician leaders in the clinic and members of our research team point to three likely causes of system inefficiency. First, increased wait time can result from delays in clinic-related processes such as obtaining referrals and laboratory results and moving patients in and out of examination rooms. Second, oncology patients are likely experiencing one of the most stressful periods of their lives, which affects them negatively both socially and psychologically. Many patients require long and difficult conversations with their oncologist that may not fit neatly into the allocated appointment time. Finally, time is needed for the extensive clinical research and trials conducted in the clinic; explaining protocols and obtaining patient consent take additional time that affects daily clinic schedules.

The major strength of this investigation lies in the objective nature and validity of the measurement. Video recording examination room occupancy allows for an accurate assessment of individual patient appointments. We are therefore in a unique position to offer unbiased information about how much time patients spend occupying examination rooms, waiting for physicians, and interacting with physicians. These metrics are crucial for understanding clinic efficiency and patient flow.

Limitations

These data and the conclusions drawn from them must be considered within the limitations of the study. First, the sample size is small, and the study was conducted in one clinic. More work will be needed to make comparisons across clinics and to make tentative generalizations. Second, we had minimal control over various aspects of the design, including the number of patients seen by each physician. Third, we lacked specific data on patients' wait times in the reception area before they were ushered into the examination room. This is an important piece of the puzzle, crucial to understanding the patient's full clinic experience. Fourth, the patients involved in the parent study self-selected to participate, which may bias the findings because patients who agree to participate in a communication study may differ from those who refuse. Finally, we lacked consistent data on scheduled appointment start times. We know how long patients waited, but we were unable to assess the relationship between scheduled and actual appointment start times. The data we do have show a 23.3-minute discrepancy between scheduled and actual start times, with the majority of appointments starting after the scheduled time.

The technology used in this novel method for assessing time and patient flow may or may not be available in other clinic settings. However, it is possible that adaptations could be made to allow for similar equipment to be used to make the same measurements through the use of portable cameras or audio recorders.

Future Directions

Organizations that engage in improvement processes by acquiring new knowledge and creating new capabilities to act on that knowledge are engaged in organizational learning.30 Health care delivery researchers have suggested that learning is a cyclical and multilevel process.31–32 System flaws and inefficiencies are often organizational, involve multiple individuals and/or systems within the hospital, and require a more holistic view of the organizational and clinical aspects of care.33–34 We have engaged with the cancer clinic's Healthcare Efficiency Committee, which is actively integrating our findings into clinic improvement discussions. Given the findings from this investigation and the explanations for system lags offered by our physician leaders, further efforts are warranted to understand the causes of system weaknesses and to develop interventions.

This method can help inform interventions to improve efficiency by identifying where weaknesses or time lags occur and how to design interventions with an enhanced likelihood of success. Ideally, clinic managers can use these data to develop an intervention with two aims: (1) to take control of a somewhat chaotic system and (2) to put the lag time to better use. For example, if delays in patient flow continue to occur in the examination room, this time could perhaps be used for patient education and counseling in response to physician concerns about patients' social and psychological well-being.

In conclusion, we have evaluated a method of measuring a major component of health care quality: patient flow and the use of examination room time. We were able to obtain these measurements successfully and to derive informative results about overall system efficiency. Our work is timely given recent publication trends and calls in public forums for this type of research. Specifically, examinations of clinic efficiency and innovative methods for interventions to improve delivery of oncology health services have recently been published in the Journal of Oncology Practice.35–36 Also, the American Society of Clinical Oncology Quality Care Symposium held in San Diego in November 2013 encouraged health care quality researchers to use data-capturing methodologies, such as video recording, that accurately reflect what occurs in oncology examination rooms so that further aspects of quality can be examined. Our systematic analysis of time in video-recorded clinic visits answers this call.

Acknowledgment

Supported by National Cancer Institute Grant U54 CA153606 (T. Albrecht, PI, R. Chapman, Co-PI). Approved by Wayne State University Institutional Review Board (No. 125211B3E). Versions of this paper were presented at the ASCO Quality Care Symposium, November 1-2, 2013, San Diego, CA, and at the Michigan Cancer Consortium, November 20, 2013, East Lansing, MI.

Authors' Disclosures of Potential Conflicts of Interest

The authors indicated no potential conflicts of interest.

Author Contributions

Conception and design: Lauren M. Hamel, Susan Eggly, Terrance L. Albrecht

Collection and assembly of data: Susan Eggly, Rifky Tkatch, Jennifer Vichich, Terrance L. Albrecht

Data analysis and interpretation: Lauren M. Hamel, Susan Eggly, Robert Chapman, Louis A. Penner, Terrance L. Albrecht

Manuscript writing: All authors

Final approval of manuscript: All authors

References

- 1. Brown ML, Yabroff KR. Economic impact of cancer in the United States. In: Schottenfeld D, Fraumeni JF Jr, editors. Cancer Epidemiology and Prevention. Oxford, England: Oxford University Press; 2006. pp. 202–213.

- 2. Gesell SB, Gregory N. Identifying priority actions for improving patient satisfaction with outpatient cancer care. J Nurs Care Qual. 2004;19:226–233. doi: 10.1097/00001786-200407000-00009.

- 3. Gourdji I, McVey L, Loiselle C. Patients' satisfaction and importance ratings of quality in an outpatient oncology center. J Nurs Care Qual. 2003;18:43–55. doi: 10.1097/00001786-200301000-00007.

- 4. Catania C, De Pas T, Minchella I, et al. Waiting and the waiting room: How do you experience them? Emotional implications and suggestions from patients with cancer. J Cancer Educ. 2011;26:388–394. doi: 10.1007/s13187-010-0057-2.

- 5. Lis CG, Rodeghier M, Gupta D. Distribution and determinants of patient satisfaction in oncology: A review of the literature. Patient Prefer Adherence. 2009;3:287–304. doi: 10.2147/ppa.s6351.

- 6. Sandoval GA, Brown AD, Sullivan T, et al. Factors that influence cancer patients' overall perceptions of the quality of care. Int J Qual Health Care. 2006;4:266–272. doi: 10.1093/intqhc/mzl014.

- 7. Thomas S, Glynne-Jones R, Chait I. Is it worth the wait? A survey of patients' satisfaction with an oncology outpatient clinic. Eur J Cancer Care. 1997;6:50–58. doi: 10.1111/j.1365-2354.1997.tb00269.x.

- 8. Yabroff KR, Davis WW, Lamont EB, et al. Patient time costs associated with cancer care. J Natl Cancer Inst. 2007;99:14–23. doi: 10.1093/jnci/djk001.

- 9. Yabroff KR, Kim Y. Time costs associated with informal caregiving for cancer survivors. Cancer. 2009;115:4362–4373. doi: 10.1002/cncr.24588.

- 10. Lacy NL, Paulman A, Reuter MD, et al. Why we don't come: Patient perceptions on no-shows. Ann Fam Med. 2004;2:541–554. doi: 10.1370/afm.123.

- 11. Paterson BL, Charlton P, Richard S. Non-attendance in chronic disease clinics: A matter of non-compliance? J Nurs Healthc Chronic Illn. 2010;2:63–74.

- 12. Pesata V, Pallija G, Webb AA. A descriptive study of missed appointments: Families' perceptions of barriers to care. J Pediatr Health Care. 1999;13:178–182. doi: 10.1016/S0891-5245(99)90037-8.

- 13. Campbell SM, Roland MO, Buetow SA. Defining quality of care. Soc Sci Med. 2000;51:1611–1625. doi: 10.1016/s0277-9536(00)00057-5.

- 14. Ovretveit J. The economics of quality – a practical approach. Int J Health Care Qual Assur. 2000;13:200–207. doi: 10.1108/09526860010372803.

- 15. Institute for Healthcare Improvement. Cracking the code to hospital-wide patient flow. 2007:1–4.

- 16. Anderson RT, Camacho FT, Balkrishnan R. Willing to wait?: The influence of patient wait time on satisfaction with primary care. BMC Health Serv Res. 2007;7:31–35. doi: 10.1186/1472-6963-7-31.

- 17. Boudreaux ED, d'Autremont S, Wood K, et al. Predictors of emergency department patient satisfaction: Stability over 17 months. Acad Emerg Med. 2004;11:51–58. doi: 10.1197/j.aem.2003.06.012.

- 18. Cassidy-Smith TN, Baumann B, Boudreaux ED. The disconfirmation paradigm: Throughput times and emergency department patient satisfaction. J Emerg Med. 2007;32:7–13. doi: 10.1016/j.jemermed.2006.05.028.

- 19. Eilers G. Improving patient satisfaction with waiting time. J Am Coll Health. 2004;53:41–43. doi: 10.3200/JACH.53.1.41-48.

- 20. Leddy KM, Kaldenberg DO, Becker BW. Timeliness in ambulatory care treatment. J Ambulatory Care Manage. 2003;26:138–149. doi: 10.1097/00004479-200304000-00006.

- 21. Albrecht TL, Ruckdeschel JC, Ray FL, et al. A portable, unobtrusive device for videorecording clinical interactions. Behav Res Methods. 2005;37:165–169. doi: 10.3758/bf03206411.

- 22. Albrecht TL, Eggly SS, Gleason ME, et al. Influence of clinical communication on patients' decision making on participation in clinical trials. J Clin Oncol. 2008;26:2666–2673. doi: 10.1200/JCO.2007.14.8114.

- 23. Riddle DL, Albrecht TL, Coovert MD, et al. Differences in audiotaped versus videotaped physician-patient interactions. J Nonverbal Behav. 2002;26:219–239.

- 24. Penner LA, Orom H, Albrecht T, et al. Camera-related behaviors during videorecorded medical interactions. J Nonverbal Behav. 2007;31:99–117.

- 25. Studiocode. New South Wales, Australia: 2011. Computer Software Version 5.5.0.

- 26. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

- 27. Berwick DM. Improvement, trust and the healthcare workforce. Qual Saf Health Care. 2003;12:2–6. doi: 10.1136/qhc.12.6.448.

- 28. Buetow S, Roland M. Clinical governance: Bridging the gap between managerial and clinical approaches to quality of care. Qual Saf Health Care. 1999;8:184–190. doi: 10.1136/qshc.8.3.184.

- 29. Iedema R. New approaches to researching patient safety. Soc Sci Med. 2009;69:1701–1704. doi: 10.1016/j.socscimed.2009.09.050.

- 30. Fiol CM, Lyles MA. Organizational learning. Acad Manage Rev. 1985;10:803–813.

- 31. Bohmer RM, Edmondson AC. Organizational learning in health care. Health Forum J. 2001;44:32–35.

- 32. Carroll JS, Edmondson AC. Leading organisational learning in health care. Qual Saf Health Care. 2002;11:51–56. doi: 10.1136/qhc.11.1.51.

- 33. Goodman PS, Ramanujam R, Carroll JS, et al. Organizational errors: Directions for future research. Res Organ Behav. 2011;31:151–176.

- 34. Ramanujam R, Rousseau DM. The challenges are organizational not just clinical. J Organ Behav. 2006;27:811–827.

- 35. Balogh EP, Bach PB, Eisenberg PD, et al. Practice-changing strategies to deliver affordable, high-quality cancer care: Summary of an Institute of Medicine workshop. J Oncol Pract. 2013;9:54s–59s. doi: 10.1200/JOP.2013.001123.

- 36. Kallen MA, Terrell JA, Lewis-Patterson P, et al. Improving wait time for chemotherapy in an outpatient clinic at a comprehensive cancer center. J Oncol Pract. 2012;8:e1–e7. doi: 10.1200/JOP.2011.000281.