Abstract

Bioactive food components have shown potential health benefits for more than a decade. Currently, there are no recommended levels of intake [i.e., Dietary Reference Intakes (DRIs)] for them as there are for nutrients and fiber. DRIs for essential nutrients were based on requirements for each specific nutrient to maintain normal physiologic or biochemical function and to prevent signs of deficiency and adverse clinical effects. They were later expanded to include criteria for reducing the risk of chronic degenerative diseases for some nutrients. There are many challenges in establishing recommendations for intakes of nonessential food components. Although some nonessential food components have shown health benefits and are safe, validated biomarkers of disease risk reduction are lacking for many. Biomarkers of intake (exposure) are limited in number, especially because the bioactive compounds responsible for beneficial effects often have not yet been identified. Furthermore, given this lack of characterization of composition in a variety of foods, it is difficult to ascertain intakes of nonessential food components, especially with the use of food-frequency questionnaires designed for estimating intakes of nutrients. Various intermediary markers that may predict disease outcome have been used as functional criteria in the DRI process. However, few validated surrogate endpoints of chronic disease risk exist. Nonvalidated intermediary biomarkers of risk may predict clinical outcomes, but more research is needed to confirm the associations between cause and effect. One criterion for establishing acceptable intermediary outcome indicators may be the maintenance of normal physiologic function throughout adulthood, which presumably would lead to reduced chronic disease risk. Multiple biomarkers of outcomes that demonstrate the same health benefit may also be helpful. It would be beneficial to continue to refine the process of setting DRIs by convening a workshop on establishing a framework for nonessential food components that would take into consideration intermediary biomarkers indicative of optimal health.

Introduction

Over the past decade, there has been a dramatic increase in the number of studies focused on investigating the bioactivity and potential health benefits of a range of non-nutritive bioactive food components. Emerging evidence shows that, although bioactive food components are not essential to life, they may confer a range of effects that support and improve health. However, consumers and health care professionals are inundated by messages about both their health benefits and risks. Given the growing scientific and consumer interest, a framework is needed to evaluate these nonessential, yet bioactive, food components and to develop evidence-based recommendations regarding safe and effective intakes. Because the science is emerging, statements on their dietary importance can only be approximate and not definitive, but nevertheless, the best existing advice needs to be conveyed to the public.

This article briefly examines the current DRI process as it might be applied for assessing nonessential food components, considers the limitations of this model for DRIs, and discusses the application of an alternative (or expanded) framework. To further guide considerations for an alternative approach, this article discusses the example of fiber as 1 area in which the DRI framework has already been expanded beyond essentiality. Finally, recent developments in the European Food Safety Authority’s health claim process are summarized.

Current Status of Knowledge

Overview of the current DRI process

During the 1990s, the National Academy of Sciences Institute of Medicine’s Food and Nutrition Board, with the active involvement of Health Canada, began developing and implementing a new paradigm to establish recommended nutrient intakes that replaced and expanded on the 1994 RDAs. This process resulted in a common set of reference values, known as “Dietary Reference Intakes” (DRIs), which are used in both the United States and Canada. The DRIs include the Estimated Average Requirement (EAR), the RDA, Adequate Intake (AI), Estimated Energy Requirement (EER), Acceptable Macronutrient Distribution Range, and the Tolerable Upper Intake Level (UL) (1). The scientific data used to establish the DRIs came largely from experimental and observational studies. The nutrient-based reference values are based on the relations between nutrient intakes and indicators of adequacy, prevention of chronic disease, and avoidance of an excessive nutrient intake in healthy populations. Inadequate intake of an essential nutrient leads to dietary deficiencies, some of which result in aberrations in absorption and utilization of the nutrient. Adequate dietary intakes of nutrients are important for normal growth, maintenance of normal plasma concentrations of nutrients, and other aspects of general health and well-being. Before 1990, the RDAs focused primarily on reducing the incidence of deficiency diseases. The current DRIs are intended to help people optimize their health, avoid excessive nutrient intake, and prevent both deficiencies and chronic degenerative disease (1).

The EAR, RDA, AI, EER, and UL standards that make up the DRIs can be used to describe the distribution of requirements, estimated distributions of risk, and the distribution of intakes of nutrients from foods. The EAR is the average/median usual daily nutrient intake amount that is estimated to meet the requirements of half of healthy individuals in a particular life stage and sex group. The RDA is the average daily dietary nutrient intake amount that is sufficient to meet the nutrient requirements of nearly all healthy individuals in a particular life stage and sex group. The AI is a recommended average daily intake amount based on observed or experimentally determined approximations or estimates of nutrient intake by a group (or groups) of apparently healthy people assumed to be adequate in nutrition status. The EER is the average dietary energy intake predicted to maintain energy balance in a healthy adult of a defined age, sex, weight, height, and level of physical activity that is consistent with good health. The metabolic unit for the sum of the energy used by an organism is the total energy expenditure. The UL is the highest average daily nutrient intake amount that is likely to pose no risk of adverse health effects in almost all individuals in the population (1). DRIs have been established for most nutrients. An RDA can be mathematically derived for any nutrient that has been assigned an EAR. An AI is typically used if data are too limited to develop an EAR for any life stage. The Acceptable Macronutrient Distribution Range is a range of intakes for a particular energy source that is not experimentally derived but is thought to be associated with a reduced risk of chronic diseases, while providing adequate intakes of essential nutrients.
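The derivation of an RDA from an EAR can be illustrated with a minimal sketch. It assumes the convention generally described in the DRI reports: when requirements are approximately normally distributed, the RDA is set 2 SDs above the EAR, and when the SD of the requirement is unknown, a CV of 10% is assumed, which gives RDA = 1.2 × EAR. The function name and the example EAR of 100 units/d are hypothetical and do not correspond to any specific nutrient.

```python
from typing import Optional


def rda_from_ear(ear: float, sd: Optional[float] = None,
                 assumed_cv: float = 0.10) -> float:
    """Return a value set 2 SDs above the EAR (the RDA convention assumed here)."""
    if ear <= 0:
        raise ValueError("EAR must be positive")
    if sd is None:
        # Assumption: the SD of the requirement is unknown, so use CV x EAR.
        sd = assumed_cv * ear
    return ear + 2.0 * sd


if __name__ == "__main__":
    hypothetical_ear = 100.0  # hypothetical units/d, not a real nutrient value
    print(rda_from_ear(hypothetical_ear))           # SD unknown: 1.2 x EAR
    print(rda_from_ear(hypothetical_ear, sd=15.0))  # SD supplied from data
```

Because the calculation requires an EAR, no RDA can be derived in this way for a food component that has only an AI.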

Functional indicators must be feasible, valid, reproducible, sensitive, and specific in order to be selected for use in the DRI process (2). In addition to causal associations, changes in the indicator must be plausibly related to changes in the risk of an adverse health outcome, result in changes that are usually outside of the homeostatic range, and lead to changes generally associated with adverse sequelae. In addition, the measurement of the indicator must be accomplished accurately and reproducibly between laboratories. Functional indicators for DRIs, as defined in the most recent Institute of Medicine (IOM) report on calcium and vitamin D, are health outcomes that serve as the basis for estimating a nutrient requirement for specific clinical or health outcomes (2).

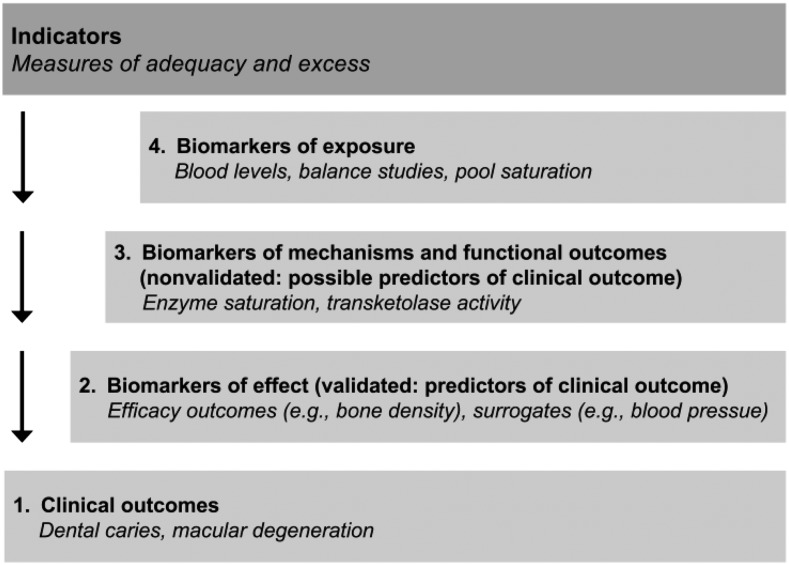

“Indicator” is an umbrella term, encompassing common indicators such as intake/exposures, intermediary biomarkers, risk factors, surrogate endpoints, and endpoints/outcomes (3). Indicators used in the DRIs can take various forms, and different indicators have been used over the years (Fig. 1). A “biomarker of exposure” is a validated measure of nutrient intake, whereas a “biomarker of status” is a validated measure of nutritional status. A “biomarker of effect” or a “surrogate biomarker” is an indicator that can be relied on to be causally related to and predictive of the health outcome. A “biomarker of effect” is considered to be modifiable and affects the health outcome. A “clinical outcome,” or health outcome, refers to the ultimate measurable effect of interest for nutrients. Indicators that precede the occurrence of a health outcome may or may not be predictive of the health outcome itself and therefore must be validated before this link is assumed.

FIGURE 1. Various forms of indicators. Note that a clinical outcome may also be referred to as a health outcome. Adapted with permission from reference 3.

The 2011 DRI report on calcium and vitamin D provides an example. Numerous studies showed that inadequate calcium intake results in resorption of minerals from bone, leading to reduced bone mass and osteoporosis (2). In addition to skeletal health, other possible but as yet undemonstrated relations between calcium and health outcomes may exist. These include altered risk of malignant neoplasms (certain cancers), cardiovascular disease (CVD), altered physical performance and risk of falls, changes in immune responses, neuropsychological functioning, and reproductive outcomes. The IOM committee considered the analytical approach, study population, research design, and overall quality of the evidence for each potential indicator and used these in evaluating each study that was reviewed. Measures related to bone health were the only indicators examined that had strong evidence linking cause and effect. Thus, the outcomes used to establish DRIs for calcium were limited to those related to bone health, including bone accretion, bone maintenance, and bone loss. An EAR could be established because sufficient evidence existed from large-scale randomized trials and calcium balance studies to estimate this reference value (2).

Nonessential food components and the DRI process

Nutrients that are required for normal bodily functioning but that either cannot be synthesized by the body or cannot be produced in amounts adequate for good health must be obtained from the diet and are thus called essential nutrients. Essential nutrients are also defined by collective physiologic evidence of their importance in the diet. As with most substances, excessive intakes of essential nutrients can be harmful. However, most essential nutrients have a wide margin (or range) of safe intakes. The exact range varies depending on the nutrient and population.

Nonessential food components can be made by the body or may be absorbed from foods that are consumed. These include ingested food components that may be metabolized as fuels and those that provide carbon skeletons and amino groups for endogenous synthesis of body constituents (e.g., fats to FAs and proteins to amino acids). Others are food components that may have health benefits and are considered to be part of a healthy diet (e.g., fiber) and may thus have a substantial impact on health. However, despite their potential health benefits, inadequate intakes of these food components do not result in biochemical or clinical signs or symptoms of deficiency.

The framework for the development of the DRIs has evolved over the years and is well described by others (1, 3, 4). The criteria for determining the DRIs for essential nutrients were originally based on requirements for a specific nutrient to maintain normal physiologic/biochemical function to prevent a deficiency, with subsequent clinical effects. With advances in nutritional science, the basis for nutrient reference values was expanded to specifically include measures for reducing the risk of chronic degenerative disease when data were available.

Thus, the nutrient requirement framework has developed into what is essentially a risk assessment model, as is exemplified in the most recent DRI report on calcium and vitamin D (2). The framework is designed to permit users to make decisions when data are limited and then to offer options for making scientific judgments under conditions of uncertainty. The framework, as it now stands, includes identification of potential indicators of both adequacy and excess, with selection of the best functional indicators to serve as the basis for DRI values, assessment of dietary intake from national survey data (if available), and consideration of the associations between intake and response (dose-response relations) on the basis of the available literature. In addition, the current DRI framework recognizes the utility of organizing and rating the available data through the use of systematic evidence-based reviews. A systematic review used to assess a relation between a nutrient and a health outcome is broader and different from one used for a medical intervention (2). The research that needs to be assessed is greater for determining the relation between a nutrient and health outcome because nutrients can have both direct and indirect effects on a number of health outcomes.

In a few instances, the DRI framework for essential nutrients has been applied to nonessential food components, such as carotenoids, fiber, and certain trace elements. Of these nonessential food components, fiber best fit the framework because it had consistent epidemiologic evidence and well-controlled clinical trials supported by human and animal data that showed possible mechanisms of action and benefit, and little risk of adverse effects. A DRI for fiber was thus established. The following examples illustrate how the current DRI framework has been applied to nonessential food components.

Fiber.

Dietary fiber and functional fiber, the combination of which is termed “total fiber,” are nonessential dietary constituents that had sufficient data on their potential health benefits to establish an AI but not an EAR (1, 5). The AIs for total fiber were based on the intake amounts that were observed to protect against coronary heart disease (CHD) in epidemiologic, clinical, and mechanistic studies. The reduction in diabetes risk was used as a secondary endpoint to support the recommended intake amounts. An AI was not established if there were insufficient or no data for dietary fiber intake in particular age groups (i.e., 0–6 mo and 7–12 mo). AIs were established for age groups in which the intake amount was shown to provide the greatest protection against CHD and were extrapolated to other groups that might benefit, such as children. A UL was not set for fiber because serious chronic adverse effects have not been observed from excessive intakes.

Experimental data, acquired since the early 1990s, from both humans and animals demonstrate the blood cholesterol–lowering effect of dietary fibers in adults. This finding is further supported by epidemiologic evidence showing the relation between an increased intake of high-fiber foods and a reduction in CVD risk (6). Intervention studies in humans showed a reduction in LDL cholesterol, which is a validated surrogate endpoint for CHD. These studies were performed by using fiber-rich foods, fiber-enriched fractions from food (i.e., brans), and isolated polysaccharides. There is also some evidence from epidemiologic studies to suggest that diets high in fiber-rich foods decrease the risk of hypertension, which is also a risk factor for CVD. However, 1 issue that arises when examining observational epidemiologic studies on the effect of dietary fiber is that it is not possible to distinguish between the effects of dietary fiber per se and those of fiber-rich foods, which also contain other bioactive phytochemical components, such as other constituents of whole grains, that could be responsible for reducing the risk of chronic disease. Moreover, foods high in fiber are generally low in fat, saturated fat, and cholesterol, a profile that is also associated with a reduced risk of certain chronic degenerative diseases. Thus, isolating fiber as a single factor accounting for the effects is difficult, and fiber must be evaluated in the context of the total dietary pattern.

Although evidence for establishing an EAR for fiber was insufficient, an AI for fiber was set because numerous large, prospective, observational cohort studies showed a significant inverse relation between dietary fiber intake and CHD risk (5). The evidence of a substantial protective effect of dietary fiber for CHD came from large, adequately powered, prospective studies (5). These studies made it possible to relate the number of grams of dietary fiber per day to the decreased incidence of CHD (5). Studies also showed that the effect of fiber on decreases in blood cholesterol concentration was not due simply to the replacement of fat in the diet. The AI was based on the usual daily intake of energy for each age group and was expressed in grams per day of total fiber, because the evidence suggested that the beneficial effects of fiber in humans were most likely to be related to the amount of food consumed (and not to an individual’s age or body weight) and because grams per kilocalorie would not be useful advice because most individuals did not know how many kilocalories they consumed in a day. An EAR was not set because prospective studies showed that the impact of dietary fiber on the development of CHD occurred continuously across a range of intakes (5).
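The step from an energy-based value to an AI expressed in grams per day can be sketched as follows. The snippet assumes a fiber density of 14 g/1000 kcal, the figure generally cited from the IOM report that set the fiber AI, multiplied by the median energy intake of each group. The group labels and energy intakes are illustrative placeholders, not survey data, and the function name is hypothetical.

```python
# Assumption: 14 g of total fiber per 1000 kcal, as generally cited for the fiber AI.
FIBER_G_PER_1000_KCAL = 14.0


def fiber_ai_g_per_day(median_energy_kcal: float,
                       density: float = FIBER_G_PER_1000_KCAL) -> int:
    """Convert an energy-based fiber density into a whole number of grams per day."""
    if median_energy_kcal <= 0:
        raise ValueError("median energy intake must be positive")
    return round(density * median_energy_kcal / 1000.0)


if __name__ == "__main__":
    # Illustrative median energy intakes (kcal/d); placeholders, not survey values.
    illustrative_groups = {"group A": 2700.0, "group B": 1800.0}
    for label, kcal in illustrative_groups.items():
        print(label, fiber_ai_g_per_day(kcal), "g/d")
```

Expressing the result in grams per day rather than grams per kilocalorie reflects the reasoning in the text that most individuals do not know their daily energy intake.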

Other nonessential food components.

β-Carotene and other carotenoids were reviewed and rejected for a DRI standard. The IOM committee concluded that, although epidemiologic evidence suggested that higher blood concentrations of β-carotene and other carotenoids obtained from foods were associated with a lower risk of several chronic degenerative diseases (e.g., certain cancers, CVD, age-related macular degeneration, and cataracts), a DRI could not be established because the observed beneficial effect could be due to other substances found in carotenoid-rich foods or to behaviors associated with increased fruit and vegetable consumption (7). Moreover, although the epidemiologic evidence was consistent for benefits in some cases, 3 large randomized clinical trials showed no overall benefit on total cancer or CVD and indeed suggested possible harm from very large doses in population subgroups such as smokers and asbestos workers. Consequently, a DRI was not established. β-Carotene illustrates that the role of a food component consumed in a food matrix may differ from its role when it is provided in isolation or in another matrix such as a dietary supplement. It also illustrates the importance of active investigation of possible adverse effects.

There is keen interest in the effect on human health of many other bioactive components in addition to those discussed above. These bioactive components present challenges in establishing a DRI. Some have been discussed in recent workshops that examined ways to establish a process for setting a recommended dietary intake (8, 9). These include n–3 long-chain PUFAs (primarily EPA and DHA) (8). Interestingly, China has established a process for setting a special category of DRIs, and the Chinese Nutrition Society had expert review panels evaluate 18 phytochemicals (e.g., lycopene, isoflavones, phytosterols) (9). In recent years, the USDA Agricultural Research Service’s (ARS) Nutrient Databank has provided special interest databases on flavonoids, which include the 5 subclasses of flavonoids (flavonols, flavones, flavanones, flavan-3-ols, and anthocyanidins) of select foods. Moreover, the isoflavone and proanthocyanidin contents of select foods have also been provided in separate databases. Special interest databases of select foods would not exist if there were not high interest in the biologic effects of these nonessential food components on human health.

Components to consider in the development of an alternative framework

Emerging data suggest that several nonessential food components are safe and may be beneficial to human health. For example, fiber was evaluated by using the existing DRI process and an AI was determined, as previously discussed. However, the current framework may not be suitable for developing intake recommendations for other nonessential bioactive components, as was shown in the case of carotenoids. Therefore, the question remains regarding what framework would be suitable to develop intake recommendations for nonessential food components that may have health benefits, are without significant risk of adverse effects, and are considered to be a part of a healthy diet but lack validated biomarkers of disease risk reduction. Because food and its components influence health, a food-based approach to maintaining and improving health and reducing the risk of chronic disease provides the potential for health benefits to the public, although the specific risk factors and determinants of health vary across the life span.

Another key question concerns what health effects should form the basis for evaluating a nonessential food component. If quantitative reference values for nonessential food components are established, can these be translated into food-based recommendations? The scientific data must demonstrate consistent results showing that the effects of the food component of interest account for the health impact.

In 1998, the Panel on Dietary Antioxidants and Related Compounds convened by the IOM’s Food and Nutrition Board proposed a definition and plan for review of dietary antioxidants and related compounds (10). It had been shown that antioxidants (e.g., β-carotene, vitamin C, and vitamin E) might confer health effects through a variety of different mechanisms because the compounds differ in their chemical behavior and biologic properties. Because antioxidants work through different mechanisms, they may not confer a common health benefit. As a result, the IOM panel decided to base the definition of antioxidants on their functional or physiologic parameters rather than on a specific benefit. The proposed definition for dietary antioxidant substances was therefore based on maintaining normal physiologic function in humans. Evidence for antioxidant activity was based on several potential biomarkers that became available due to scientific progress. For some antioxidants, such as β-carotene and other carotenoids, food composition data were available to estimate their consumption. As discussed below, a comprehensive food composition database is required to assess dietary intakes in a population. The evaluation of scientific evidence for an essential nutrient or nonessential food component to obtain a DRI generally encompasses a range of evidence, starting with preliminary suggestive observational data and progressing to definitive data from randomized, double-blind, controlled intervention trials. Experimental human studies must be relied on to set human nutrient requirements.

Study types.

In an “ideal” world, observational epidemiologic studies would show an association between a food component of interest, an endpoint, and intermediate biomarkers, which would be further supported by clinical studies showing an effect on the same biomarkers and would thus confirm causality. Although causal inference from epidemiologic studies is limited, prospective cohort studies are considered the most reliable type of observational study design (11) and emphasis should be placed on these studies. Known confounders that influence disease risk need to be identified before the start of the study so that data on them can be collected and adjusted for in the analysis to minimize bias related to age, sex, race, ethnicity, body weight, and smoking status, among others. If data are sufficient, a dose-response curve for excessive intake of a nutrient, either essential or nonessential, can also be estimated if adverse events are monitored and completely ascertained and if the study is large enough. This curve can be used to identify adverse health effects. However, these data are often lacking. Thus, steps for addressing uncertainty are critical for ensuring that no adverse effects from the food component exist.
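Adjustment for a known confounder can be illustrated with a small stratified analysis. The sketch below uses the Mantel-Haenszel pooled odds ratio, chosen here only as one standard illustration and not as a method specified in the DRI process. All counts are hypothetical: smoking status is the confounder, and within each smoking stratum the food component has no association with disease.

```python
from typing import List, Tuple

# Each stratum: (exposed cases, exposed noncases, unexposed cases, unexposed noncases)
Stratum = Tuple[int, int, int, int]

# Hypothetical counts, invented for illustration only.
STRATA: List[Stratum] = [
    (8, 72, 2, 18),   # nonsmokers: mostly high intake, 10% risk in both groups
    (6, 14, 24, 56),  # smokers: mostly low intake, 30% risk in both groups
]


def crude_or(strata: List[Stratum]) -> float:
    """Odds ratio after collapsing all strata into one 2x2 table."""
    a = sum(s[0] for s in strata)
    b = sum(s[1] for s in strata)
    c = sum(s[2] for s in strata)
    d = sum(s[3] for s in strata)
    return (a * d) / (b * c)


def mantel_haenszel_or(strata: List[Stratum]) -> float:
    """Mantel-Haenszel odds ratio pooled across strata of the confounder."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in strata)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in strata)
    return numerator / denominator


if __name__ == "__main__":
    print("crude OR:", round(crude_or(STRATA), 2))
    print("smoking-adjusted OR:", round(mantel_haenszel_or(STRATA), 2))
```

In these invented data the crude odds ratio suggests a protective effect that disappears once smoking is taken into account, which is the kind of bias that data collection on confounders is meant to make correctable.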

Existing evidence, primarily from large prospective studies, has shown beneficial effects of fruit and vegetable consumption with respect to decreasing chronic disease risk (12). However, it may be difficult to isolate the effects of a single food component from multicollinearity and the confounding effects of other food components (both essential and nonessential nutrients), even in well-designed, large-scale observational studies. Moreover, the nutrients or food components responsible are difficult to ascertain because of multiple nutrient interactions that would need to be isolated for further study and for possible substitutions of nutrients known to increase risk of certain chronic diseases (e.g., saturated fats and CHD). Finally, adverse events may not have been monitored and so safety is difficult to determine. Therefore, it is difficult, and not always possible, to test the effect of individual nutrients or food components on chronic disease risk with the use of such data.

Historically, large randomized controlled intervention studies have been given the greatest weight in evaluating nutrient-disease relations. However, these studies have their limitations as well. These limitations include short duration, confounding, multicollinearity introduced by other nutrients in the diet that interact with the constituent of interest, and poor subject compliance, among others. Because chronic disease develops over a long period of time, longer-term trials are desirable, but they can be cost-prohibitive and may be unethical if diseases are likely to progress in the absence of intervention to irreversible and harmful disease endpoints. Thus, for many chronic disease clinical trials, biomarkers of effect or risk biomarkers rather than unambiguous health outcomes are used for assessing relations between diet and disease.

The ability to replicate study results is another critical criterion. The greater the consistency among studies, the greater the level of confidence can be in the observed relation. In addition, regardless of the study type, the substance (bioactive food component) that is the focus of study must be clearly defined by using reliable, reproducible, and validated analytical methods to analyze the food component in both the food matrix and biologic samples (blood and/or urine). The ability to replicate enables researchers to directly attribute the biologic effect to the food component. Therefore, it is important that accurate and reliable analytical methods for measuring the substance of interest are available.

It is not always possible to set a UL for nonessential food constituents because of the paucity of data indicating the effects of chronic intakes of high amounts of nutrients. Experimental animal and observational data are useful and relevant in these circumstances (D.A. Balentine, unpublished results, 2014).

Estimating intake: biomarkers of intake (exposure) and dietary assessment tools.

Biomarkers of exposure refer to the concentration of a nutrient measured in blood samples or balance studies. They have also been used as an indicator of dietary intake in setting DRI reference values. Biomarkers of both exposure and status can be used to estimate inadequate and excessive intakes of a nutrient (3). They measure either the substance itself or a metabolite of the substance in validated biologic samples. One may question how well the marker reflects actual dietary exposure. Therefore, it is critical to understand the nature of the biologic indicator (13). Perhaps there should be less concern with the link of the biomarker to exposure and more concern with the link of biologic status to a biomarker (13). Regardless, a precise and repeatable marker that reflects the constituent of interest is needed.

There are many limitations to nutritional biomarkers. Chief among them is that some bioactive food constituents are unknown or are yet to be identified. When available, nutritional biomarkers can provide an accurate measure of dietary intake that may have less potential for error than dietary intake measurements. For certain dietary constituents, such as iron, it is possible that >1 measure of exposure would provide a more accurate measure of intake than a single measure. For bioactive food components, it may be more informative in preliminary studies to rely on food group analyses from food intake questionnaires because the constituents in food are unknown. However, if biomarkers of exposure and/or a combination of validated FFQs and serial 24-h recalls are used together, it may be more feasible to distinguish the substance of interest from the background diet. It cannot be assumed that greater repeatability ensures more valid estimates of the association between exposure and disease risk in the case of biomarkers of exposure, but multiple measures do provide better estimates of usual intakes. When biochemical measures are used, the assay needs to be validated, which is especially important for newly emerging dietary components, such as bioactive components. There can be large variation within and between laboratories, which can make it difficult to compare results among studies. For a measurement of exposure to be linked to dietary intake, it needs to be highly replicable and very precise. However, repeatability alone does not establish validity: the validity of exposure biomarkers depends on their ability to reflect actual intake (13). There should be evidence of a correlation between the intake amount of the food component and the concentration of the food component or its metabolite in the biologic sample. However, at present, few reliable and validated biomarkers of exposure exist for most nutrients. This limitation becomes especially critical in estimating nutrient intakes from observational studies.

The dietary assessment method is therefore of great importance in evaluating dietary intake. Observational studies rely on diet records, 24-h recalls, diet histories, or FFQs. Dietary exposure estimates for DRIs have also been based on 24-h recalls for food and quantitative assessments of water, as well as on supplement intakes from national food intake surveys (3). An FFQ is the most commonly used tool to estimate food intake, and FFQs validated for the constituents of interest or serial 24-h recalls are reliable tools for estimating usual food intake (14). All of these methods depend on participants’ ability to recall the food they consumed; thus, there is always some bias in self-reporting, and with this bias comes uncertainty.
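Returning to biomarkers of exposure, the distinction between repeatability and validity can be made concrete with a short sketch. It computes Pearson correlations for 2 hypothetical biomarkers: one whose duplicate assays agree closely but that does not track intake, and one that does track intake. All values and variable names are invented for illustration, and Pearson correlation is used here only as a simple stand-in for a formal validation study.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


# Hypothetical data for 5 participants (arbitrary units).
intake = [10.0, 20.0, 30.0, 40.0, 50.0]     # measured intake of the component
marker_a_run1 = [5.0, 3.0, 6.0, 2.0, 4.0]   # biomarker A, first assay
marker_a_run2 = [5.1, 2.9, 6.2, 2.1, 3.9]   # biomarker A, repeat assay
marker_b = [1.1, 2.0, 2.8, 4.1, 5.2]        # biomarker B, single assay

if __name__ == "__main__":
    print("A repeatability:", round(pearson_r(marker_a_run1, marker_a_run2), 3))
    print("A vs intake:", round(pearson_r(intake, marker_a_run1), 3))
    print("B vs intake:", round(pearson_r(intake, marker_b), 3))
```

In this invented example, biomarker A is highly repeatable yet only weakly related to intake, whereas biomarker B shows the intake-concentration correlation that the text describes as evidence of validity.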

Regardless of whether food intake assessment involves estimating intake of a whole food or a component of food, observed effects on outcomes can be confounded by interactions with other food components or from displacement of certain foods in the diet (15–17). To ensure the accuracy of a biomarker of exposure, the biomarker must be reproducible, reliable, and valid (13). Moreover, it is critical that the biomarker reflects actual dietary exposure of the food component. It is possible that a single biomarker is not enough to reflect intake of a food component, and a combination of biomarkers of exposure may thus be required for assessment. A major impediment to estimating intake for certain bioactive components is that they consist of many different subclasses and compounds, and a good food composition database may not exist for them. Many food databases may lack analytical values for foods. It is important to have accurate and reliable analytical methods for measuring the substance of interest. Bioavailability may vary, which thus affects measurement of intake. Moreover, some bioactive components are rapidly absorbed and eliminated, which presents a challenge in estimating food intake from plasma if that were a marker of exposure.

Because observational studies estimate the intake of a whole food on the basis of an assessment tool to ascertain dietary intake, the food component of interest then becomes an estimate of an estimate when a reliable independent biomarker of exposure does not exist. It is critical that high-quality, updated, valid, and comprehensive food composition databases for non-nutrient bioactive components be made available. There has been significant progress in improving the accuracy of these databases over the past several years and continued progress will be important. Furthermore, sources of variability can include food processing, seasonal variation, cooking procedures, food storage, and nutrient bioavailability. These variations present significant challenges for accurately assessing the intakes of bioactive food components.
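The "estimate of an estimate" problem can be illustrated with a minimal sketch, assuming that intake of a component is computed as reported servings multiplied by a database composition value and that the two sources of error are independent. The function names, food items, and numeric values below are hypothetical and chosen only for illustration; they are not taken from any database or study. For a single food, the coefficient of variation (CV) of a product of two independent quantities follows from the standard identity CV² = CV₁² + CV₂² + CV₁²·CV₂².

```python
import math


def component_intake(servings_per_day, mg_per_serving):
    """Daily intake (mg) of one bioactive component, summed over foods.

    Both arguments are dicts keyed by food name (hypothetical inputs).
    """
    return sum(servings_per_day[food] * mg_per_serving[food]
               for food in servings_per_day)


def combined_cv(cv_food_intake, cv_composition):
    """CV of the product of two independent, uncertain quantities.

    Uses CV^2 = CV1^2 + CV2^2 + CV1^2 * CV2^2, which holds for a single
    food when the reporting error and the composition error are independent.
    """
    return math.sqrt(cv_food_intake ** 2
                     + cv_composition ** 2
                     + (cv_food_intake * cv_composition) ** 2)


# Hypothetical illustrative values, not real composition data.
servings = {"tea": 2.0, "apple": 1.0}    # self-reported servings per day
content = {"tea": 50.0, "apple": 10.0}   # mg of component per serving

total_mg = component_intake(servings, content)  # 110.0 mg/day
cv = combined_cv(0.30, 0.40)                    # about 0.51
```

Under these assumed values, a 30% uncertainty in reported food intake and a 40% uncertainty in the composition value combine to an uncertainty of roughly 51% in the estimated component intake for that food, which is larger than either source alone.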

As funding allows, nutrient databases such as the USDA-ARS’s National Nutrient Databank are continually updated and expanded to represent the U.S. food supply. Among the objectives is to include data on existing food yield and nutrient retention factor tables to reflect current food preparation methods, processing, and new food products. The USDA-ARS has developed authoritative food composition databases for non-nutritive components that have been shown to be beneficial to human health, such as isothiocyanates and other sulfur-containing compounds. Its Nutrient Data Laboratory has expanded existing databases (e.g., flavonoids) to include more foods, variability estimates, and factors such as cultivar, weather, and growing conditions that may affect nutrient values. Moreover, the laboratory has continued to update and expand the nutrient values for several bioactive compounds in raw, processed, and prepared foods in its special interest databases, such as those for flavonoids, isoflavones, and proanthocyanidins. Providing more of this information in national nutrient databases would allow more accurate assessment of intakes of bioactive food components.

Biomarkers of effect.

Biomarkers of effect are defined as characteristics that are reliably and accurately measured and evaluated as indicators of normal biologic processes, pathogenic processes, or pharmacologic responses to an intervention (18). Long-term clinical trials are expensive, and it is not always feasible to continue them until a clinical outcome presents itself (e.g., occurrence of a chronic disease). Various biomarkers have therefore been used since the inception of the DRI process as intermediate endpoints that may predict disease outcome. The most reliable biomarkers of effect are those that are validated surrogate endpoints of disease risk; however, few such endpoints exist. Surrogate endpoints of chronic disease risk predict clinical benefit or harm and can be modified by diet as well as by other factors. They are risk biomarkers that serve as substitutes for clinical endpoints (e.g., CHD). However, not all risk biomarkers are surrogate endpoints. For example, C-reactive protein may predict the risk of CHD, but it is not a substitute for a CHD endpoint, whereas LDL cholesterol could be a direct measure of CHD risk. Risk biomarkers encompass those that may be in the causal pathway for disease risk but have not been accepted as surrogate endpoints, often because scientific evidence to validate them is lacking (e.g., HDL cholesterol is not validated at present as a surrogate endpoint for CHD). There may be several pathways to the resulting disease, which is often the case with cancer, and this further complicates validation of a potential surrogate endpoint because a biomarker may identify only 1 of many pathways. These biomarkers are considered biomarkers of effect, which have been used in DRI development, and it has been suggested that their utility in filling data gaps makes them critical components of the DRI process (3).

Mechanistic and functional biomarkers have also been used in DRI development. These types of biomarkers assess the impact of the consumed nutrient on intermediate physiologic effects or biochemical actions. Maintaining or improving physiologic function may lead to optimal health, but the indicative risk factors may or may not be in a causal pathway for disease risk reduction. For example, a reduction in chronic inflammation (as measured by changes in high-sensitivity C-reactive protein, various interleukins, and serum amyloid A) may lead to a reduction in CHD risk. Risk factors related to immune function, gastrointestinal health, vision, muscular health, or brain/cognitive function may consist of several indicators in a common pathway indicative of optimal physiologic functioning.

Disease risk is multifactorial (e.g., lifestyle, genetics, and environmental factors), and both essential and nonessential nutrients as well as many other factors can affect many biologic processes. Food components may elicit multiple effects (e.g., lowering blood pressure, decreasing inflammation, and inhibiting platelet aggregation), some of which may not be considered surrogate endpoints for chronic disease risk. Questions therefore remain regarding whether these measurements can be taken collectively (or considered as a single group) as evidence of an effect if the effect is consistent, and whether this evidence is enough to represent a beneficial health outcome. Moreover, there may be many pathways to disease outcome, which is often the case with cancer. Questions also exist regarding how nonsurrogate endpoints of chronic disease can be validated and what the accepted intermediary endpoints of health are if chronic disease is not the endpoint of interest. Nonvalidated intermediary risk biomarkers may be predictors of clinical outcomes.

Validated surrogate endpoints of disease risk have been identified over many years of research in well-designed clinical trials and are supported by large prospective cohort studies whose consistent results are widely agreed upon by experts in the field. There are many emerging biologic indicators of disease risk/health that may be potential intermediary biomarkers. One focus should be on maintaining normal physiologic function throughout adulthood, which presumably would lead to a reduced risk of chronic disease. This can only be ascertained through reliable and measurable intermediary biomarkers. Nonessential food components such as β-carotene and other carotenoids as well as certain flavonoids have been shown to modulate a variety of intermediate endpoints. However, studies that validate whether changes in an intermediate endpoint are predictive of health outcomes are still lacking (7). Evidence exists for modifying the risk of CVD by modifying blood pressure, a surrogate endpoint for CHD, or by modifying less accepted risk biomarkers (in the United States, at least) that are indicative of risk of developing CVD, such as flow-mediated dilation, an indicator of vascular endothelial function (19, 20). Multiple endpoint markers may be helpful if all show the same beneficial effect on a particular health outcome (e.g., CHD). This is especially true for nutritional studies in which nutrients may have multiple biologic effects.

Questions remain regarding alternative biomarkers of effect that might be considered and their role in developing an alternative framework. For example, how are the appropriate endpoints chosen if they are not validated surrogate endpoints of disease risk? Should the endpoints or intermediate endpoints chosen be well accepted by experts in the field and reside in the causal pathway for a particular health effect? How many of these “biomarkers” need to be measured and show the same effect? Would an IOM committee accept several risk biomarkers that all demonstrate consistency and reproducibility with the health outcome of decreasing risk of CHD if none of them is a validated surrogate endpoint?

A preponderance of data on the food component under study from the epidemiologic, clinical, and mechanistic (animal and in vitro) standpoints would be required to clearly identify potential indicators of optimal health. It is possible that the selection of indicators could be based on a particular food component of interest as long as the process is transparent and evidence-based and the food component has public health impact.

Should the European model be a guide?

“General function” claims under Article 13.1 of the European Commission regulation on nutrition and health claims refer to the role of a nutrient or other substance in the following: growth, development, functions of the body, psychological and behavioral functions, slimming or weight control, reduction in the sense of hunger, increase in the sense of satiety, or reduction of the available energy from the diet (21). General function claims do not include disease risk reduction. This model selects for appropriate outcomes, including biomarkers. Several cardiovascular claims have been approved on the basis of biomarkers that were reliably measured in short-term intervention studies. These biomarkers included those not considered validated surrogate endpoints for CHD, such as maintaining/improving elasticity of blood vessels (measured by endothelium-dependent vasodilation), maintaining normal platelet aggregation, and contributing to the protection of blood lipids from oxidative stress. All cardiovascular claim evidence was based on experimental studies, with reliable and accurate measurement of outcomes. An equivalent type of claim in the United States would be structure/function claims, which are not reviewed before marketing by the U.S. FDA. Are these outcomes or biomarkers of effect, if they are taken collectively as recognized by scientific experts to contribute to health and are possibly present in the causal pathway for disease risk, enough for consideration to make a public health recommendation for a reliable level of intake? The benefits of nonessential food components must be shown to contribute to overall good health or health-related well-being, and their specific role must be derived from a large body of strong scientific evidence. The biomarker could not be accepted for substantiation if the data were limited. As reviewed above, there are numerous challenges in obtaining valid, reproducible, and reliable data. Biomarkers for health effects need to be related to the disease.

The question remains as to what is an appropriate process for establishing a framework toward acceptance of a “biomarker of health.” A biomarker of health must be substantiated by accepted scientific evidence that takes into account the totality of available scientific data. The scientific evidence must be generally accepted by experts in the field and must demonstrate a beneficial physiologic effect, which can include reducing the risk of disease. The evidence would be weighed to draw conclusions on substantiation. A committee of experts was convened by the IOM at the request of the FDA to recommend a framework for the evaluation of biomarkers in chronic disease (18). The committee recommended a 3-part framework consisting of 1) analytical validation (Is the biomarker able to be accurately measured?), 2) qualification (Is the biomarker associated with the clinical endpoint of concern?), and 3) utilization (What is the specific context of the proposed use?). Perhaps this type of evaluation system could be used or modified as warranted to develop criteria for substantiating a biomarker of effect.

Next steps

As the 1998 IOM Panel on Dietary Antioxidants and Related Compounds stated in its report on the proposed definition for dietary antioxidants, these food components should be examined for setting a DRI as new data emerge on these and other related compounds (e.g., flavonoids, phenols, and polyphenols, among others) (10). The Food and Nutrition Board would be 1 appropriate group to convene a committee of scientific experts to develop a new or alternative framework for assessing nonessential food components. The first step in this process would be to convene a workshop to examine the feasibility of such a framework. This would require the cooperation of funding organizations (especially the federal government) because they are the primary source of funds for the DRI process. NIH and other scientific conferences have been used to assemble scientific experts in a particular area to critically evaluate current research and identify research needs and gaps, as was done for vitamin D (22). These types of conferences inform DRI decisions. However, there are always many competing priorities for government funding. Nevertheless, in the interest of conveying health messages to the public that are consistent with sound scientific information, this is an area that deserves serious consideration. The workshop would identify the elements to be included in the framework, and these elements need to be focused and clearly defined. It would consist of discussions on the scientific evidence required for making recommendations regarding the intake of nonessential food components for maintaining and promoting optimal health. The resources that are essential for developing a comprehensive framework for some nonessential food components need to be identified. It is likely that there is not only 1 DRI model; rather, there are multiple models for setting standards that have evolved over the years as new data have become available.
It is possible that the established categories of reference intakes that are part of the current DRI are not suitable for nonessential food components, which potentially calls for establishing new terminology. Alternatively, the use of the AI may be appropriate. This is just 1 of the many considerations that warrant discussion at a workshop.

Conclusions

The inclusion of disease risk reduction as an indicator in the DRI process may have led to some unintended consequences. Moving forward, there needs to be recognition that the scientific evidence accumulated for indicators or biomarkers exists on a continuum as indicators evolve with the emergence of new scientific evidence. There is a need for a more flexible model that incorporates both chronic and nonchronic disease biomarkers that are more indicative of the normal physiologic functioning that leads to and supports optimal health. Nonchronic disease indicators would need to include nonvalidated surrogate endpoints that are widely recognized and accepted by scientific experts as risk biomarkers in the pathway for disease.

It is important to consider the possible consequences of not developing a framework for nonessential food components for which the biology is not fully understood but for which there is clear evidence of health benefits. Questions remain regarding the public health consequences of inaction. Communicating evidence-based information about healthy food choices to the public is critical. The public is inundated daily with nutritional information regarding dietary choices. Conveying accurate and reliable information about the health benefits of many of the nonessential food components would enhance the public’s understanding of the importance of these foods, or food extracts containing them, in their diet. The recognition of the importance of these nonessential food components in the diet and the need for validation of intermediary biomarkers to assess maintenance of optimal health might help to spur research funding in this area and greatly enhance our understanding of the role of nonessential food components in health. Moreover, developing a framework for these nonessential food components could lead to intake recommendations based on sound scientific data that would convey the importance of the safe use of these compounds. This would benefit policy makers who are tasked with providing evidence-based dietary guidance and would inform the values chosen for labeling foods and dietary supplements.

Acknowledgments

All authors read and approved the final version of the manuscript.

Footnotes

Abbreviations used: AI, Adequate Intake; ARS, Agricultural Research Service; CHD, coronary heart disease; CVD, cardiovascular disease; EAR, Estimated Average Requirement; EER, Estimated Energy Requirement; IOM, Institute of Medicine; UL, Tolerable Upper Intake Level.

References

- 1. Institute of Medicine. Dietary Reference Intakes: the essential guide to nutrient requirements. Washington: The National Academies Press; 2006.

- 2. Institute of Medicine. Dietary Reference Intakes for calcium and vitamin D. Washington: The National Academies Press; 2011.

- 3. Taylor CL. Framework for DRI development: components “known” and components “to be explored”. Washington: Institute of Medicine; 2011.

- 4. Institute of Medicine. The development of DRIs 1994–2004: lessons learned and new challenges: workshop summary. Washington: The National Academies Press; 2008.

- 5. Institute of Medicine. Dietary Reference Intakes for energy, carbohydrate, fiber, fat, fatty acids, cholesterol, protein, and amino acids. Washington: The National Academies Press; 2005.

- 6. Gallaher DD. Dietary fiber. In: Russell RM, Bowman B, editors. Present knowledge in nutrition. Washington: ILSI Press; 2006. p. 102–10.

- 7. Institute of Medicine. Dietary Reference Intakes for vitamin C, vitamin E, selenium and carotenoids. Washington: The National Academies Press; 2000.

- 8. Biesalski HK, Erdman JW, Hathcock J, Ellwood K, Beatty S, Johnson E, Marchioli R, Lauritzen L, Rice HB, Shao A, et al. Nutrient reference values for bioactives: new approaches needed? A conference report. Eur J Nutr 2013;52 Suppl 1:1–9.

- 9. Lupton JR, Atkinson SA, Chang N, Fraga CG, Levy J, Messina M, Richardson DP, van Ommen B, Yang Y, Griffiths JC, et al. Exploring the benefits and challenges of establishing a DRI-like process for bioactives. Eur J Nutr 2014;53 Suppl 1:1–9.

- 10. Institute of Medicine. Dietary Reference Intakes: proposed definition and plan for review of dietary antioxidants and related compounds: a report of the Standing Committee on the Scientific Evaluation of Dietary Reference Intakes and its Panel on Dietary Antioxidants and Related Compounds. Washington: The National Academies Press; 1998.

- 11. Greer N, Mosser G, Logan G, Halaas GW. A practical approach to evidence grading. Jt Comm J Qual Improv 2000;26:700–12.

- 12. USDA; U.S. Department of Health and Human Services. Dietary guidelines for Americans, 2010. 7th ed. Washington: U.S. Government Printing Office; 2010.

- 13. Marshall JR. Methodologic and statistical considerations regarding use of biomarkers of nutritional exposure in epidemiology. J Nutr 2003;133 Suppl 3:881S–7S.

- 14. Subar AF, Ziegler RG, Thompson FE, Johnson CC, Weissfeld JL, Reding D, Kavounis KH, Hayes RB. Is shorter always better? Relative importance of questionnaire length and cognitive ease on response rates and data quality for two dietary questionnaires. Am J Epidemiol 2001;153:404–9.

- 15. Sempos CT, Liu K, Ernst ND. Food and nutrient exposures: what to consider when evaluating epidemiologic evidence. Am J Clin Nutr 1999;69:1330S–8S.

- 16. Willett WC, editor. Overview of nutritional epidemiology. In: Nutritional epidemiology. Oxford: Oxford University; 1990. p. 16–7.

- 17. Willett WC, editor. Issues in analysis and presentation of dietary data. In: Nutritional epidemiology. Oxford: Oxford University; 1998. p. 339–40.

- 18. Institute of Medicine. Evaluation of biomarkers and surrogate endpoints in chronic disease. Washington: The National Academies Press; 2010.

- 19. Hooper L, Kroon PA, Rimm EB, Cohn JS, Harvey I, Le Cornu KA, Ryder JJ, Hall WL, Cassidy A. Flavonoids, flavonoid-rich foods, and cardiovascular risk: a meta-analysis of randomized controlled trials. Am J Clin Nutr 2008;88:38–50.

- 20. Erdman JW Jr, Balentine D, Arab L, Beecher G, Dwyer JT, Folts J, Harnly J, Hollman P, Keen CL, Mazza G, et al. Flavonoids and heart health: proceedings of the ILSI North America Flavonoids Workshop, May 31–June 1, 2005, Washington, DC. J Nutr 2007;137(3 Suppl 1):718S–37S.

- 21. European Food Safety Authority. “General function” health claims under Article 13 [cited 2013 Jul 1]. Available from: http://www.efsa.europa.eu/en/topics/topic/article13.htm.

- 22. Yetley EA, Brulé D, Cheney MC, Davis CD, Esslinger KA, Fischer PWF, Friedl KE, Greene-Finestone LS, Guenther PM, Klurfeld DM, et al. Dietary Reference Intakes for vitamin D: justification for a review of the 1997 values. Am J Clin Nutr 2009;89:719–27.