Abstract

Despite research suggesting that stored sensorimotor information about tool use is a component of the semantic representations of tools, little is known about the action features or organizing principles that underlie this knowledge. We used methods similar to those applied in other semantic domains to examine the “architecture” of action semantic knowledge. In Experiment 1, participants sorted photographs of tools into groups according to the similarity of their associated “use” actions and rated tools on dimensions related to action. The results suggest that the magnitude of arm movement, configuration of the hand, and manner of motion during tool use play a role in determining how tools cluster in action “semantic space”. In Experiment 2, we validated the architecture uncovered in Experiment 1 using an implicit semantic task for which tool use knowledge was not ostensibly relevant (blocked cyclic word-picture matching). Using stimuli from Experiment 1, we found that participants performed more poorly during blocks of trials containing tools used with similar versus unrelated actions, and the amount of semantic interference depended on the magnitude of action similarity among tools. Thus, the degree of featural overlap between tool use actions plays a role in determining the overall semantic similarity of tools.

Keywords: actions, semantic memory, manipulable objects, tool use, tools

With experience, we learn to use tools in complex ways that cannot always be inferred from the objects' physical structures. For instance, typing on a keyboard and whisking an egg both require access to learned knowledge of skilled use. But, there is increasing evidence that knowledge of tool use actions may be recruited when we think of these objects even during tasks that do not ostensibly require action information. A number of behavioral studies have demonstrated that action-related experimental manipulations affect participants' behavior on lexical and semantic tasks with tool pictures or words (Campanella & Shallice, 2011a; Helbig, Graf, & Kiefer, 2006; Helbig, Steinwender, Graf, & Kiefer, 2010; Myung, Blumstein, & Sedivy, 2006). For example, participants are faster to make a lexical decision to a target word when the target is preceded by a word referring to a tool used with the same action (e.g., typewriter – piano) (Myung et al., 2006). Moreover, participants asked to find a picture of a target tool in an array look longer at distractor tools used in the same way as the target, relative to unrelated distractors (Lee, Middleton, Mirman, Kalénine, & Buxbaum, 2012; see also Myung et al., 2006). Accordingly, on the same tasks, stroke patients with tool use impairments and deficient action recognition (apraxia) exhibit abnormally reduced and delayed patterns of looking at distractor tools that are used in the same manner as targets (Lee, Mirman, & Buxbaum, in press; Myung et al., 2010). Together, this evidence suggests that action knowledge may be a component of the semantic representations of tools (see also Campanella & Shallice, 2011a).

On most accounts of semantic memory, artifact concepts are distributed over sets of features that encode different kinds of information, including color, shape, function, manipulation, and typical location (e.g., Allport, 1985; Barsalou, 1999; McRae, de Sa, & Seidenberg, 1997; Vigliocco, Vinson, Lewis, & Garrett, 2004; Warrington & Shallice, 1984). There is growing evidence that many of these semantic features may be grounded in sensory and motor systems as a result of our real-world experiences with objects (for reviews, see Barsalou, 2008; Martin, 2007). For example, visual attributes of objects are represented within areas specialized for processing different aspects of visual input. Areas of the brain involved in color perception are active when participants retrieve color information about objects from semantic memory (Simmons et al., 2007; see also Goldberg, Perfetti, & Schneider, 2006), and semantic priming in reaction time tasks has been observed between words referring to objects related by color (e.g., “emerald” and “cucumber”) when participants first complete a color-based Stroop task (Yee, Ahmed, & Thompson-Schill, 2012). Similarly, semantic priming in reaction time tasks or as measured by event-related potentials (ERP) is found between words referring to objects of the same size (Setti, Caramelli, & Borghi, 2009) or shape (e.g., “ball” and “apple”) (Kellenbach, Wijers, & Mulder, 2000; Schreuder, Flores d'Arcais, & Glazenborg, 1984; Zwaan & Yaxley, 2004; see also Yee, Huffstetler, & Thompson-Schill, 2011; Zwaan, Stanfield, & Yaxley, 2002).

In contrast to this increasingly refined understanding of the attributes likely to serve as “elemental features” in the domain of visual semantics, there have been fewer comparable attempts to understand the putative spatiomotor parameters that may serve as elemental features in the domain of action semantics. Many behavioral (e.g., Filliter, McMullen, & Westwood, 2005; Kalénine & Bonthoux, 2008; Siakaluk, Pexman, Aguilera, Owen, & Sears, 2008; Siakaluk, Pexman, Sears, et al., 2008) and neuroimaging (e.g., Boronat et al., 2005; Gerlach, Law, & Paulson, 2002; Hargreaves et al., 2012; Kellenbach, Brett, & Patterson, 2003; Mecklinger, Gruenewald, Besson, Magnié, & von Cramon, 2002) studies contrast performance on objects with or without associated actions or examine the degree to which objects can be easily acted upon (“manipulability”). While these kinds of comparisons can address the claim that action knowledge contributes to artifact concepts, they do not reveal the specific features of object-directed actions that are relevant in tasks that recruit action knowledge.

Evidence to date suggests that if action features contribute to the conceptual representations of tools, these features are likely to reflect knowledge of skilled use actions rather than grasp-to-move actions. Like other semantic features, tool use actions are learned via repeated experience. By contrast, the actions appropriate for grasping tools and other objects in order to transport them can be computed based on objects' physical structures, without any prior knowledge. Furthermore, although there is evidence that both use and grasp actions are evoked by the sight of tools (e.g., Bub, Masson, & Cree, 2008; Tucker & Ellis, 1998), their activations follow different time courses: actions based on object structure (grasps) are elicited rapidly and quickly decay, while tool use actions are activated more slowly but maintained over longer intervals of time, making them capable of producing short-term interference effects (Jax & Buxbaum, 2010). Finally, tool use actions (“functional” actions) are more strongly evoked by tool words than grasp actions (“volumetric” actions), data that have been taken to indicate that use actions may be more central to linguistically-accessed tool concepts than grasp actions (Bub et al., 2008; see also Bub & Masson, 2012; Masson, Bub, & Newton-Taylor, 2008). Thus, since tool use knowledge exhibits characteristics typical of semantic memory (see also Buxbaum & Kalénine, 2010), we focus our research on understanding the organization of action features associated with skilled tool use actions.

Previous work with healthy participants and patients with disorders of action provides some evidence for candidate action features that may be recruited during semantic processing. One prime candidate is hand posture. A number of studies indicate that patients with apraxia are particularly impaired in their knowledge of the hand postures appropriate for using tools (Buxbaum, Kyle, Grossman, & Coslett, 2007; Buxbaum, Sirigu, Schwartz, & Klatzky, 2003; Jax & Buxbaum, 2013; see Andres, Pelgrims, & Olivier, in press; Pelgrims, Olivier, & Andres, 2011 for similar findings with transcranial magnetic stimulation in neurologically-intact participants). Relatedly, studies of eye movements reveal that patients with apraxia exhibit abnormally delayed and reduced competition between tools used with similar hand postures during auditory word-picture matching tasks (Lee et al., in press; see also Myung et al., 2010). Behavioral studies with neurologically-intact participants also suggest that hand posture during use may be an action semantic feature of tools. Bub, Masson, & Cree (2008) trained participants to associate colors with specific hand postures (e.g., poke, pinch). Later, participants viewed familiar tool objects depicted in these colors and responded to each object by performing the hand posture cued by the object's color. Participants took longer to respond when the cued hand posture was incongruent with the hand posture typically associated with using or grasping a tool. Together, these data suggest that hand posture is likely to be one of the action features of tool concepts.

Of course, our knowledge of tool use actions extends beyond static hand postures. For example, although a hammer and a garlic press are both used with a “clench” hand posture, their actions differ in many ways. We speculate that another candidate action feature that may affect semantic processing concerns the amount of arm movement that occurs when a tool is used. Evidence for this feature comes from a study by Klatzky, Pellegrino, McCloskey, & Lederman (1993) in which participants rated verb phrases describing tool and non-tool object-directed actions (e.g., “saw a board”, “pinch an apricot”) on various action dimensions (e.g., amount of force required to perform the action, whether or not the action requires grasping an object). Participants also sorted the verb phrases into groups according to the similarity of their actions. Klatzky & colleagues (1993) found that ratings related to movement of the arm were tightly correlated, as were ratings related to the configuration of the hand. A distinction between actions performed with large versus small arm movements was also evident in the ways in which participants grouped the verb phrases. Although corroborating evidence is scant, the magnitude of arm movement during tool use may be another candidate action feature of tools.

In addition to our limited knowledge of the elemental features encoded within action semantics, the architecture of action semantics – the proximity of tool use representations to each other, and the relationships between them – is also unknown. In other semantic domains, this question propels an important area of inquiry. The structure of semantic memory, more broadly, is often gleaned from analyses of verbal “feature norms” produced by participants (e.g., “is red”, “has legs”) (McRae, Cree, Seidenberg, & McNorgan, 2005; McRae et al., 1997; Vinson & Vigliocco, 2008). Given that it is difficult to verbalize the spatiomotor parameters of tool use actions, however, some of the methods traditionally used to understand semantics from a verbal perspective (like the feature norms approach) may be ill-suited for investigating the contribution of action features to the structure of semantic memory. Moreover, what we know about the architecture of other types of semantic features may not be true of action semantics. A direct comparison of the similarity structures of several semantic feature types (sensory/perceptual, functional, encyclopedic, and verbal) revealed that concepts are related in different ways in each of these modalities of knowledge rather than being organized similarly across modalities (Dilkina & Lambon Ralph, 2012). Thus, the architecture of action semantics may be different from that of other kinds of semantic knowledge.

In the present study, we aimed to advance our understanding of the role of action knowledge in the representation of tool concepts by examining a nuanced set of candidate action features and the relationships between tool use actions in the same way as has been done in other semantic domains (Experiment 1). Describing these elemental action features and the architecture that underlies them is an important step towards being able to predict and interpret patterns of priming and competition between objects that vary in the similarity of the actions they evoke. Additionally, this endeavor may aid us in understanding which combinations of objects in an array are particularly disruptive to the performance of patients with apraxia.

The methods we used in Experiment 1 are based on those of Klatzky & colleagues (1993) (described above), in which participants rated verb phrases on various action dimensions and sorted the verb phrases into groups according to the similarity of the actions. However, we extend the results of Klatzky & colleagues by querying participants' knowledge of skilled tool use actions, in particular. To do so, we use as stimuli photographs of tools instead of verb phrases. In order to sort or rate these tools according to their use actions, participants have to draw upon stored knowledge of actions evoked by the sight of objects. By contrast, the verb phrases used by Klatzky & colleagues (1993) may not have engaged skilled use knowledge, either because they referred to unfamiliar, infrequent object-directed actions with which participants had no experience (e.g., “choke a chicken”, “crank an ice cream maker”) or because they referred to actions that can be inferred merely from objects' physical structures (e.g., “pinch an apricot”, “touch wet paint”).

In Experiment 1, participants sorted pictured tools into groups according to the overall similarity of their use actions. Participants were not instructed to attend to particular features of actions when sorting, so any specific dimensions informing the sorting procedure were spontaneous. Participants subsequently rated the tools along several explicit dimensions related to use actions. These ratings were designed to help us understand the dimensions participants spontaneously used to determine action similarity in the sorting task. As has been assumed with verbal feature norms, we propose that collecting similarities from a sorting task and action ratings offers “a window into important aspects of meaning” (Vinson, Vigliocco, Cappa, & Siri, 2003, p. 351; see also Medin, 1989). Unlike feature norms, however, sorting and rating tasks spare participants the need to translate action knowledge into verbal form.

Given evidence that hand posture is a distinct component of tool use representations (e.g., Buxbaum et al., 2003), we predicted that participants would be more likely to sort tools used with similar hand postures into the same groups – even though they were not instructed to attend to specific features of actions. We also predicted that tools used with large movements of the arm (e.g., hammer) would be more likely to be sorted into groups together than tools primarily used with smaller, hand-centered actions (e.g., garlic press), and vice versa. Similarly, and based on the findings of Klatzky & colleagues (1993), we predicted that arm-related action ratings would be more strongly correlated with each other than with hand-related ratings, and vice versa. Finally, we predicted that tools assigned similar explicit ratings would be more likely to be grouped together in the sorting task. Thus, these ratings served to help us identify the action features spontaneously used by participants when sorting by action similarity. Together, results from the sorting and rating tasks in Experiment 1 allowed us to uncover a candidate architecture of action semantics; we tested the validity of this architecture in Experiment 2.

Experiment 1

Materials and Methods

Participants

Twenty-four paid volunteers (73% female) participated in Experiment 1. Because we plan to use data from this experiment to design future neuropsychological studies, these participants were selected to fall within the age and education range of the stroke participants we typically recruit (mean age = 63.1 years, SD = 8.1; mean education = 16.7 years, SD = 1.8). All participants were right-handed, spoke English fluently, and had no history of neurological or major psychiatric disorders. One participant was excluded for not following instructions.

Stimuli

We selected color photographs of tools from the Bank of Standardized Stimuli (BOSS) (Brodeur, Dionne-Dostie, Montreuil, & Lepage, 2010) (Figure 1). Brodeur & colleagues (2010) collected normative data on 480 photographs, including name agreement, familiarity, visual complexity, and manipulability. From this database, we chose 60 photographs that depicted manmade objects associated with distinct movements during skilled use (i.e., tools). All tools were typically used with a single hand. On scales from 1 – 5, the 60 tools were rated as significantly more familiar (M = 4.08 out of 5, SD = 0.3, t(59) = 25.1, p < .001) and more manipulable (M = 3.27 out of 5, SD = 0.6, t(59) = 3.5, p < .001) than the midpoint of the rating scale. This result confirms that the stimuli were both strongly manipulable and likely to be familiar to participants.

Figure 1.

Examples of stimuli used in Experiment 1 (photographs from Brodeur et al., 2010).

Design and procedure

Each participant completed the experiment in three phases. The first phase was designed to familiarize participants with the stimuli and allowed the experimenter to determine if a participant was unfamiliar with a particular tool. Each tool was displayed for unlimited time on a computer screen using E-Prime software (Psychology Software Tools, Inc., www.pstnet.com), and participants were asked to name each one. If the participant was familiar with a tool but could not retrieve its name, the experimenter said aloud the tool's modal name (from Brodeur et al., 2010). Tools with which a participant was unfamiliar were removed from the remaining two experimental phases; however, this situation only occurred with a single participant and a single item.

During the second phase of Experiment 1, participants sorted the 60 tools according to the tools' use actions. The sorting procedure we used was based on that used by Klatzky & colleagues (1993); both procedures are truncated variants of the agglomerative hierarchical sorting method (Giordano et al., 2011). Each photograph was sized to 2 inches × 2 inches, printed, and mounted on a square of thick foam board. These squares were arranged randomly on a large table. Participants were instructed to sort the tools into groups based on “how the objects are typically used”; thus, we ensured that participants based their decisions on the skilled use actions associated with the tools rather than the way in which the tools were grasped for transport. Tools selected as examples purposely shared little visual or categorical similarity but were associated with similar movements during skilled use (i.e., a basketball and a Bongo drum, and a kitchen timer and a safe). Before beginning to sort, participants were again reminded to focus on “the movements you make when you use the objects” and not the other ways in which the tools could be related.

The sorting phase of the experiment consisted of three rounds. In the first round, participants were instructed to sort the 60 tools into a minimum of 12 and a maximum of 16 groups. (These numbers were determined by allowing a group of pilot participants to sort the tools into as many groups as they liked; pilot participants preferred 12 – 16 groupings.) Each group had to contain at least two tools. Participants were given unlimited time to sort the tools. When a participant signaled that he/she had finished the first round of sorting, the experimenter recorded the positions of the tools in their groups using a digital camera. In the second round of sorting, participants were instructed to combine each group with one other group using the same criterion as in the first round of sorting, i.e., participants combined groups if the movements associated with using the tools in one group were similar to those in another. Thus, participants reduced the total number of groups by about half. (Participants who created an odd number of groups in the first round had one group that remained uncombined in the second round.) Finally, in the third round of sorting, participants were again instructed to combine groups using the same criterion as in round two.

During the last phase of Experiment 1, participants rated the 60 tools on five dimensions related to their use actions. Trials were blocked by rating type. The order in which participants completed the five rating scales was counterbalanced across participants. For each rating scale, participants viewed the tools one at a time on a computer screen and assigned a rating on a 1 – 5 Likert-type scale. Four of the ratings were based on those collected by Klatzky & colleagues (1993). They were:

“Amount of arm”. Each participant rated how much of his/her arm is in motion when he/she uses the tool. 1 = using the tool primarily involves motion in the fingers vs. 5 = using the tool involves substantial motion throughout the whole arm.

“Distance the hand travels”. Each participant rated how much his/her hand moves through space when he/she uses the tool. 1 = little or no movement of the hand through space vs. 5 = hand moves as far through space as the body allows.

“Amount of force”. Each participant rated how much force is required to use the tool. 1 = very little force vs. 5 = a great deal of force.

“Surface area of the hand”. Each participant rated how much of his/her hand makes contact with the tool when he/she uses it. 1 = only a small part of the hand touches the tool vs. 5 = the full surface of the hand touches the tool.

We also included a fifth rating, motivated by the action-sentence compatibility effect (ACE) (e.g., Borreggine & Kaschak, 2006; Glenberg et al., 2008); for example, participants are faster to judge the sensibility of a sentence describing an object moved towards the body when the response is also made with a towards-the-body movement (Glenberg & Kaschak, 2002). Furthermore, objects typically used near the body (e.g., “cup”) evoke different patterns of neural activity than objects used away from the body (e.g., “key”) (Rüschemeyer, Pfeiffer, & Bekkering, 2010).

“Towards/away”. Each participant rated whether the tool is used on/near the body, or out in space. 1 = the tool is used directly on the body vs. 5 = the tool is used far out in space.

Following Klatzky & colleagues (1993), information about the configuration of the hand during tool use was categorized in terms of hand posture and hand flexion by two trained coders. Possible hand postures were “poke”, “palm”, “pinch”, or “clench” (Klatzky, McCloskey, Doherty, Pellegrino, & Smith, 1987), as well as a third prehensile posture representing a hybrid between a pinch and clench (“hybrid”, e.g., the hand posture associated with using a hair clip or grilling tongs) (Buxbaum et al., 2003). The coders assigned the same hand posture 75% of the time; disagreements were resolved by a third trained coder. The majority of coding disagreements (11/15) arose when deciding between prehensile hand postures (pinch, clench, or hybrid). Per Klatzky & colleagues, from this hand posture information, we created a sixth, binary variable, “prehensile”, that reflected whether the hand posture associated with a tool was prehensile (i.e., “pinch”, “clench”, “hybrid”) or not (i.e., “poke”, “palm”). These coders also rated the 60 tools for the extent to which the hand is flexed during use. Values on this seventh variable (“amount of flexion”) ranged from 1 – 3, with higher numbers indicating increased flexion. Coders assigned the same flexion score 82% of the time. Given the continuous nature of the flexion variable, disagreements were resolved by averaging the two coders' ratings.

Data analysis

Using the data from the sorting phase of the experiment, we computed the use action similarity of each pair of tools. For each participant and each round of sorting, pairs of tools assigned to the same group received a similarity value of 1. Pairs of tools in different groups received a similarity value of 0. The pairwise similarity values for all three rounds of sorting were then summed to create a set of overall similarity values for each participant. Finally, for each pair of items, we summed the similarity values across participants. These similarity values served as input for a non-metric multidimensional scaling analysis (PROXSCAL in SPSS).
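The similarity computation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `pairwise_similarity`, the data layout, and the toy sorts of eight tools by two participants are hypothetical and are not the study's data or analysis code.

```python
from itertools import combinations

def pairwise_similarity(rounds_by_participant, items):
    """Sum same-group co-occurrences over sorting rounds and participants.

    rounds_by_participant: one entry per participant; each entry is a list
    of sorting rounds; each round is a list of groups (sets of tool names).
    """
    sim = {pair: 0 for pair in combinations(sorted(items), 2)}
    for rounds in rounds_by_participant:
        for groups in rounds:
            for group in groups:
                # Every pair inside the same group scores 1 for this round;
                # pairs split across groups score 0 (nothing is added).
                for pair in combinations(sorted(group), 2):
                    sim[pair] += 1
    return sim

# Hypothetical toy data (not from the study): 8 tools, 2 participants, 3 rounds.
items = ["axe", "hammer", "key", "screwdriver",
         "tweezers", "stapler", "soap", "eraser"]
p1 = [
    [{"axe", "hammer"}, {"key", "screwdriver"},
     {"tweezers", "stapler"}, {"soap", "eraser"}],
    [{"axe", "hammer", "soap", "eraser"},
     {"key", "screwdriver", "tweezers", "stapler"}],
    [set(items)],
]
p2 = [
    [{"axe", "hammer"}, {"key", "tweezers"},
     {"screwdriver", "stapler"}, {"soap", "eraser"}],
    [{"axe", "hammer", "screwdriver", "stapler"},
     {"key", "tweezers", "soap", "eraser"}],
    [set(items)],
]
sim = pairwise_similarity([p1, p2], items)
```

With three rounds per participant, the largest possible value for a pair is three times the number of participants, so pairs grouped together early and kept together receive the highest similarity.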

Using the data from the ratings phase of the experiment, we averaged each item's rating on each of the 7 rating scales across all participants. These ratings were submitted to a principal component analysis (see Results), and we saved each tool's scores on the two resulting components. We also computed the Euclidean distance (difference) between every pair of tools on the two component scores. These distance measures allowed us to ask, using multiple regression, whether tools that were assigned similar principal components scores in the ratings phase were more likely to be grouped together in the sorting phase.
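As a minimal sketch of the distance step, the Euclidean distance between two tools is taken over their pair of component scores. The function name `component_distances` and the score values below are hypothetical, chosen only so the arithmetic is easy to follow.

```python
import math
from itertools import combinations

def component_distances(scores):
    """Euclidean distance between every pair of tools.

    scores: dict mapping a tool name to its (component 1, component 2) scores.
    """
    return {
        (a, b): math.dist(scores[a], scores[b])
        for a, b in combinations(sorted(scores), 2)
    }

# Hypothetical component scores (illustrative values, not the study's data).
scores = {
    "axe": (1.0, 0.5),
    "hammer": (1.0, 0.5),
    "key": (-2.0, -3.5),
}
distances = component_distances(scores)
```

Tools with identical component scores are at distance 0, and larger distances indicate tools that were rated as more different on the two components.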

In addition, to allow us to determine if participants merely grouped tools by taxonomic category rather than action similarity, we used Latent Semantic Analysis (Landauer & Dumais, 1997) to approximate the overall semantic similarity of each pair of tools. LSA values have been used by other researchers as a way to assess semantic similarity (M. W. Howard & Kahana, 2002; Rastle, Davis, Marslen-Wilson, & Tyler, 2000). Using LSA@CU (http://lsa.colorado.edu) and the “General Reading up to 1st Year College (300 factors)” topic space, we looked up the resulting values for each pair of tools using the tools' modal names (from Brodeur et al., 2010). These values, along with the principal component score distances, were entered as predictors of use action similarity in a multiple regression analysis. Because the similarity values did not follow a normal distribution, we used log-transformed values in this regression analysis.

Results

Ratings

The ratings that participants assigned to the tools were highly consistent: for each of the 5 rating scales, standard errors of the mean for each tool were less than .27. Table 1 shows the correlations between the 7 dimensions. Variables that described the magnitude of arm movement (e.g., “amount of arm”, “distance the hand travels”, “amount of force”) tended to be more highly correlated with each other than with variables that described hand posture (i.e., “prehensile” and “amount of flexion”).

Table 1.

Correlations between explicit action rating scales (mean item values).

| | Arm | Distance | Force | Surface | Towards/away | Prehensile |
|---|---|---|---|---|---|---|
| Distance | .95* | | | | | |
| Force | .80* | .77* | | | | |
| Surface | .85* | .79* | .83* | | | |
| Towards/away | .58* | .72* | .69* | .59* | | |
| Prehensile | .40* | .36++ | .24+ | .41* | .05 | |
| Flexion | .62* | .58* | .52* | .66* | .34++ | .49* |

Note: + p < .10, ++ p < .05, * p < .0023 (Bonferroni-corrected significance threshold)
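The Bonferroni-corrected threshold in the note to Table 1 can be checked with a short calculation. The sketch below assumes that the correction divides a conventional alpha of .05 by the number of pairwise correlations among the 7 variables; the text does not state the derivation, so this is an assumption.

```python
from math import comb

n_variables = 7                   # the 7 action dimensions in Table 1
n_tests = comb(n_variables, 2)    # number of distinct pairwise correlations
alpha = 0.05                      # assumed uncorrected significance level
threshold = alpha / n_tests       # Bonferroni-corrected per-test threshold
```

Under these assumptions there are 21 tests and the threshold is about .00238, which is close to the reported value of .0023.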

To confirm this observation, we conducted a principal component analysis (PCA) of the 7 rating scales. Two components with eigenvalues greater than 1 were extracted (Table 2). The initial solution was submitted to a varimax rotation to make the data more interpretable. Ratings that described magnitude of arm movement during tool use loaded onto the first component; this component accounted for 55% of the variance in the ratings. The “towards/away” rating also loaded onto the first component, indicating that tools used with larger arm movements are usually used away from the body. Ratings that described the configuration of the hand loaded onto the second component, which accounted for 28% of the variance in the ratings.

Table 2.

Principal component analysis of explicit action ratings (varimax rotation).

| | Component 1 | Component 2 |
|---|---|---|
| Arm | .83 | .45 |
| Distance | .87 | .35 |
| Force | .89 | .22 |
| Surface | .79 | .47 |
| Towards/away | .88 | -.10 |
| Prehensile | .03 | .91 |
| Flexion | .45 | .70 |

Variance explained by Component 1 = 55%; variance explained by Component 2 = 28%
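The variance figures can be related to the loadings in Table 2. For a PCA of standardized variables, the proportion of variance accounted for by a component equals the sum of its squared loadings divided by the number of variables. The sketch below applies that identity to the rounded loadings from Table 2; the function name `variance_explained` is hypothetical.

```python
# Rotated loadings copied from Table 2: (Component 1, Component 2).
loadings = {
    "Arm": (0.83, 0.45),
    "Distance": (0.87, 0.35),
    "Force": (0.89, 0.22),
    "Surface": (0.79, 0.47),
    "Towards/away": (0.88, -0.10),
    "Prehensile": (0.03, 0.91),
    "Flexion": (0.45, 0.70),
}

def variance_explained(loadings, component):
    """Sum of squared loadings on one component / number of variables."""
    squared = [row[component] ** 2 for row in loadings.values()]
    return sum(squared) / len(loadings)

v1 = variance_explained(loadings, 0)  # proportion for Component 1
v2 = variance_explained(loadings, 1)  # proportion for Component 2
```

This gives roughly 55% for Component 1 and roughly 27% for Component 2. The small gap from the reported 28% presumably reflects rounding in the published loadings.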

The distinction between arm- and hand-related ratings offers the possibility that these two sources of information each contribute to the representation of action knowledge. Such a division is not surprising given that, biomechanically, the movement of the arm is not dependent upon the configuration of the hand, and vice versa. Of interest in the current experiment is whether an arm/hand distinction is spontaneously used by participants to sort tools according to the similarity of their use actions: do participants base their groupings on the relatedness of arm movement and hand configuration, even though they are not instructed to attend to any specific features of actions?

Similarities from sorting

Multidimensional scaling

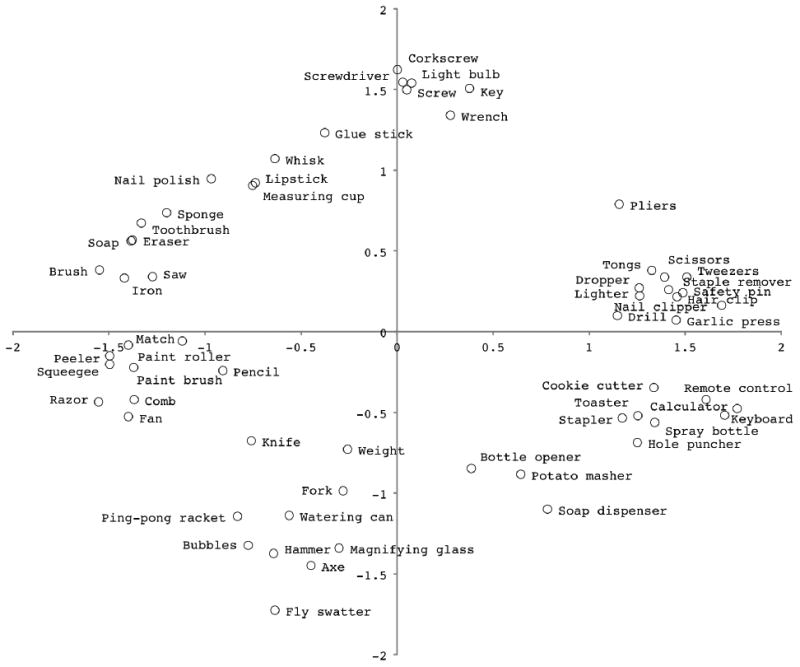

To evaluate the structure of action similarities, we carried out a non-metric multidimensional scaling (MDS) analysis of data from the sorting phase of the experiment (PROXSCAL algorithm in SPSS). Using a scree plot to assess goodness-of-fit, we determined that two dimensions optimally represented the data. The final normalized raw stress value for this two-dimensional solution was low (.06), indicating a good fit to the original data (Borg & Groenen, 2005; Cox & Cox, 2001).

The location of each tool within the resulting two-dimensional space is shown in Figure 2. The clustering of tools within this space appears to reflect similarities of the configuration of the hand, the magnitude of the arm movement, and the manner of motion during use. For example, “screwdriver”, “light bulb”, and “key” – tools used with a rotation of the wrist and prehensile hand postures (i.e., clench/pinch/hybrid) – cluster together tightly. Additionally, tools used with non-prehensile hand postures (i.e., poke/palm) occur exclusively in the bottom right quadrant of action space. On the other hand, some tools have few close neighbors in action space (e.g., “bottle opener”, “pliers”), suggesting that they are used with relatively unique actions.

Figure 2.

Two dimensional multidimensional scaling (MDS) solution to the action similarities from the sorting task.

To objectively interpret the meaning of these two dimensions, we used the “property fitting” method, in which a multidimensional scaling solution is mapped onto known attributes of the stimuli (Chang & Carroll, 1989; Kruskal & Wish, 1978). Here, we used as our known attributes the tools' scores on the two principal components derived from explicit action ratings. For each component, the tools' values on the two MDS dimensions were used to predict the tools' component scores. The resulting regression coefficients are normalized so that their sum of squares equals 1 (Kruskal & Wish, 1978). These normalized coefficients (“direction cosines”) indicate the cosine of the angle between the known attribute and a given MDS dimension. For a known attribute to provide a satisfactory interpretation of a MDS dimension, Kruskal & Wish (1978) suggest that the overall fit of the regression model must be high (significant at the .01 level, minimally), and the regression coefficient for that dimension must also be high. If these conditions are met, the dimension with the highest regression coefficient is the best fit for that known attribute.
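The normalization step in property fitting can be illustrated as follows. This is a sketch with made-up regression coefficients, and the function name `direction_cosines` is hypothetical: each coefficient is divided by the square root of the sum of squared coefficients, so the results have a sum of squares of 1 and can be read as cosines of angles.

```python
import math

def direction_cosines(betas):
    """Scale regression coefficients so their sum of squares equals 1."""
    norm = math.sqrt(sum(b * b for b in betas))
    return [b / norm for b in betas]

# Hypothetical coefficients for MDS dimensions 1 and 2 (illustrative only).
betas = [0.3, -0.4]
cosines = direction_cosines(betas)

# Angle (in degrees) between the fitted attribute and each MDS dimension.
angles = [math.degrees(math.acos(c)) for c in cosines]
```

Here the direction cosines are 0.6 and -0.8, corresponding to angles of about 53 and 143 degrees. The dimension whose cosine is largest in absolute value is the one most closely aligned with the attribute.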

In the first regression (Table 3), we found that the second MDS dimension (Figure 2, y-axis) was the best fit for the “magnitude of arm movement” component [Model 1: R² = .14, F(2, 57) = 4.65, p = .01]. The negative regression coefficient indicates that larger values on this component were associated with smaller values on the second MDS dimension. Accordingly, “axe”, “hammer”, and “ping-pong racket” were assigned negative y-values, while “nail polish”, “key”, and “tweezers” were all assigned positive y-values (Figure 2).

Table 3.

Multiple regressions predicting principal component scores (from explicit action ratings) from the two multidimensional scaling (MDS) dimensions (from sorting task).

| Predictor | Model 1 (Component 1: magnitude of arm movement): β | Model 1: direction cosine | Model 1: t | Model 2 (Component 2: configuration of the hand): β | Model 2: direction cosine | Model 2: t |
|---|---|---|---|---|---|---|
| 1st dimension (x) | -.25 | -0.68 | -2.07* | -.53 | -0.93 | -4.94*** |
| 2nd dimension (y) | -.28 | -0.73 | -2.24* | .22 | 0.37 | 2.00+ |

Note: direction cosines for each model are normalized β values whose sum of squares equals 1.

Significance markers: + p < .10, * p < .05, ** p < .005, *** p < .001

In the second regression (Table 3), we found that the first MDS dimension (Figure 2, x-axis) was the best fit for the “hand configuration” component [Model 2: R² = .33, F(2, 57) = 14.16, p < .001]. Again, the negative regression coefficient indicates that larger values on the “hand configuration” component (i.e., tools used with prehensile hand postures and increased hand flexion) were associated with smaller values on the first MDS dimension. Thus, “axe”, “paint roller”, and “iron” were all assigned negative x-values, while “stapler”, “keyboard”, and “tweezers” were assigned positive x-values (Figure 2).

In sum, the multidimensional scaling analysis indicated that tools were organized in the “action space” derived from the sorting task according to both the magnitude of the arm movement and configuration of the hand during use, despite the fact that participants were not instructed to focus on those (or any) particular aspects of movement. Nevertheless, the overall fit of both property fitting regression models was relatively low (Table 3). Thus, participants likely drew upon additional sources of action similarity when sorting tools not captured by our explicit ratings. In particular, several tight clusters of tools within the derived action space appear to reflect the manner of motion during use. For example, “corkscrew”, “key”, and “light bulb” are all used with a turning motion, “soap”, “eraser”, and “toothbrush” with a back-and-forth motion, and “cookie cutter”, “toaster”, and “stapler” with an up-and-down motion.

Regression analyses

As a final way of understanding the dimensions of action that participants spontaneously used when sorting tools, we used a two-step multiple regression to predict the (log-transformed) action similarity of each pair of tools from the explicit action ratings. We hypothesized that tools used with similar actions would also be assigned similar ratings. Thus, greater similarity in the sorting phase of the experiment, where participants spontaneously used action features, should be associated with smaller distances between tools on the explicit ratings. Given the collinearity among our seven explicit ratings, we computed these pairwise distances on the scores from the two components derived from a principal component analysis of the explicit ratings. As discussed above, the first component reflected the magnitude of arm movement during tool use, and the second component reflected the configuration of the hand.
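The pairwise-distance predictors described above can be sketched in Python as follows. The component scores are made-up values rather than the study's data, the helper name `pairwise_distances` is hypothetical, and the use of an absolute difference as the distance measure is an assumption, since the text does not name the metric.

```python
from itertools import combinations

def pairwise_distances(scores):
    """Return {(tool_a, tool_b): |score_a - score_b|} for every unordered pair."""
    return {
        (a, b): abs(scores[a] - scores[b])
        for a, b in combinations(sorted(scores), 2)
    }

# Hypothetical scores on the "magnitude of arm movement" component.
arm_magnitude = {"axe": 1.8, "hammer": 1.5, "key": -1.2, "tweezers": -1.4}
distances = pairwise_distances(arm_magnitude)
```

Each pair's distance on a component would then serve as one predictor of that pair's (log-transformed) sorting similarity, with smaller distances expected to go with greater similarity.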

To ensure that participants were not merely grouping tools by overall semantic or taxonomic similarity, we entered at step 1 of the regression the pairwise distances between the tools' modal names from the LSA@CU implementation of Latent Semantic Analysis (LSA) (Landauer & Dumais, 1997). The LSA algorithm extracts the semantic representations of words from text corpora, and the resulting representations can be compared to determine the similarity of a pair of words.

At step 1, LSA values significantly predicted action similarities from the sorting task (Table 4). At step 2, action similarity was significantly predicted both by overall semantic similarity (LSA) and by the two principal components derived from the explicit action ratings (magnitude of arm movement and configuration of the hand). The addition of these action predictors significantly improved the model fit over LSA values alone. Thus, action similarities derived from the sorting task were significantly predicted by similarities on arm- and hand-related ratings even after accounting for the influence of overall semantic relatedness. Nevertheless, the overall fit of the second model was low (Table 4). As with our interpretation of the MDS solution, this lack of fit suggests that when participants sort tools into groups, they are influenced not only by the magnitude of arm movement and configuration of the hand but also by other aspects of actions like the manner of motion during use.

Table 4.

Multiple regression predicting log-transformed action similarities (from sorting task) from semantic similarity (LSA) and distance on each of the principal components (from explicit action ratings).

| Predictor | Step 1 (LSA only): β | Step 1: t | Step 2 (all predictors): β | Step 2: t |
|---|---|---|---|---|
| LSA values | .15 | 6.25*** | .11 | 4.76*** |
| distance(Component 1) | - | - | -.15 | -6.24*** |
| distance(Component 2) | - | - | -.19 | -8.09*** |

Step 1 R² = .02, Step 2 R² = .07, R² change = .05, F(2, 1766) = 45.96, p < .001

Note: Component 1 reflects the magnitude of arm movement during tool use; Component 2 reflects the configuration of the hand. *** p < .001

Discussion

In Experiment 1, we examined the elemental features and underlying architecture of action semantics by adopting an approach used in other semantic domains. We examined action ratings and action similarities derived from a sorting task for a set of tool photographs. We found that the ratings consisted of two basic components, one describing the magnitude of arm movement during tool use actions, and one describing the configuration of the hand. Similarly, multidimensional scaling analysis revealed that participants were sensitive to both the magnitude of arm movement and the configuration of the hand when sorting tools by use action similarity, and inspection of the MDS results suggested that participants were also sensitive to the manner of motion during use. Finally, we assessed the degree to which explicit action ratings predicted the dimensions subjects spontaneously used to determine similarity in the sorting task and found that arm- and hand-related ratings components were significant, albeit weak, predictors of action similarity, over and above overall semantic similarity. These data suggest that the magnitude of arm movement and configuration of the hand may serve as elemental features of action semantics; other action features may encode manner of motion during use. Furthermore, the architecture of action semantics may be determined by the similarity of actions on these elemental features.

In order to complete the sorting and rating tasks, participants needed to draw upon stored knowledge of tool use actions. Similar logic has been applied in the broader semantic literature, where researchers have assumed that while verbally-generated semantic features are not literal reflections of concepts, generating features requires conceptual access (e.g., Vinson et al., 2003). In addition, MDS and regression analyses revealed that participants spontaneously made use of hand-, arm-, and possibly motion-related attributes when sorting tools into groups, despite the absence of instructions to do so. An analogous hypothetical experiment in the visual domain would be one in which participants are instructed to sort objects according to overall visual similarity and respond by forming groups based on object color, shape, and/or size. In this way, the sorting procedure allowed us to discover organizational principles of action semantic space without biasing participants' attention to one type of action feature or another.

However, explicit action ratings accounted for only a small proportion of the variance among similarities derived from the sorting task. We selected these ratings based on those used by Klatzky & colleagues (1993). In that study, action ratings accounted for much more variance among sorting similarities than the current study. We speculate that this difference stems from the nature of the stimuli used in each experiment. Klatzky & colleagues asked participants to sort a wide variety of verb phrases describing actions. Some phrases described familiar tool use actions (e.g., “hammer a nail”), but others described prehensile grasping actions (e.g., “pinch an apricot”), intransitive actions (“wave one's hand”), or transitive actions not requiring skilled object use (e.g., “touch wet paint”). By contrast, stimuli in the current study were photographs of tools, and participants were instructed to think of canonical tool use actions. Thus, participants may have attended to different action features depending on the breadth of stimuli present in the experiment. Sorting a wide range of actions into groups (i.e., Klatzky et al., 1993) may require attention to only broad distinctions between hand- and arm-related features, while sorting a narrower range (i.e., the current study) may implicitly encourage attention to finer distinctions between actions. For example, the MDS analysis in Experiment 1 shows tools clustering according to manner-of-motion (e.g., up-and-down, squeezing, back-and-forth), a feature that may be critical for discriminating between tool use actions, in particular.

Alternatively, the lack of fit between explicit action ratings and sorting similarities may reflect variability in the actions brought to mind by different participants. While participants in Klatzky & colleagues' (1993) experiment sorted phrases explicitly describing actions, we relied on participants' ability to retrieve the typical use actions associated with photographs of tools. If participants differ in the way in which they typically use a tool (e.g., squeezing a stapler with a “clench” hand posture versus pushing down on a stapler with a “palm” hand posture), or if a tool's use action depends, in part, on characteristics of the recipient object (e.g., stapling two papers versus a thick stack of papers), noise will be added to the action ratings and sorting similarities. Importantly, however, the modest predictive power of the explicit ratings in Experiment 1 has no bearing on whether action similarities derived from the sorting task reflect the mental structure of tool action knowledge. We test the psychological validity of this structure in Experiment 2.

When sorting and rating tools in Experiment 1, participants explicitly brought to mind the actions associated with using tools. In Experiment 2, we aimed to validate the action semantic space revealed in Experiment 1 by using an implicit semantic task in which access to tool use actions was not ostensibly required to perform the task (e.g., Campanella & Shallice, 2011a; Myung et al., 2006). The task was based on the finding that repeated access to the same semantic category produces semantic interference (Belke, Meyer, & Damian, 2005; Belke, 2008; Campanella & Shallice, 2011b; Damian, Vigliocco, & Levelt, 2001; D. Howard, Nickels, Coltheart, & Cole-Virtue, 2006; Kroll & Stewart, 1994; Schnur, Schwartz, Brecher, & Hodgson, 2006). Usually, this evidence comes from experiments in which participants repeatedly name pictured objects that occur within blocks of other semantically related or unrelated items (“semantic blocking”, e.g., Belke et al., 2005; Schnur et al., 2006; but see D. Howard et al., 2006). Although the exact mechanism that produces this semantic interference is debated (e.g., Belke, 2013; D. Howard et al., 2006; Oppenheim, Dell, & Schwartz, 2010), descriptively, activation of one lexical or semantic representation makes it difficult to subsequently access related lexical or semantic representations. Furthermore, the amount of interference observed between related stimuli depends on the “semantic distance” between them: repeated access of very closely related concepts produces more interference than repeated access of less closely related concepts (Vigliocco, Vinson, Damian, & Levelt, 2002). Thus, manipulations of semantic blocking offer a means to investigate the organization of the semantic system (Belke, 2013; Campanella & Shallice, 2011a).

Accordingly, in Experiment 2, we used a semantic blocking paradigm to investigate the influence of use action similarity on semantic processing of tools. In this task, participants matched written words to one of two photographs, and stimuli occurred within blocks that were repeated 4 times. We selected stimuli for Experiment 2 based on similarity data from the sorting task in Experiment 1, manipulating the level of use action similarity of blocks of tools while keeping taxonomic/categorical and visual similarity constant. Campanella & Shallice (2011a) used a similar design but did not assess action similarity empirically or manipulate the level of action similarity among tools. They found that participants were less accurate matching words to pictures when distractor and target tools were manipulated with similar actions than when they were completely unrelated or only visually-related. Similarly, if action similarities in Experiment 1 reflect the architecture of action knowledge evoked during implicit semantic processing of tools (e.g., Myung et al., 2006), then we expect greater interference on action-related versus unrelated blocks of tools in Experiment 2.

To determine if some use actions are represented more closely than others in action semantic space, we also manipulated the level of action similarity among tools: tools were selected from the semantic space uncovered in Experiment 1 such that they were used with highly similar, moderately similar, or unrelated actions, while categorical/taxonomic and visual similarity were constant across conditions. Given evidence that semantic relatedness occurs on a continuum rather than being an all-or-nothing characteristic (Vigliocco et al., 2002), we predicted graded amounts of interference according to the level of action similarity among tools. Critically, the presence of a graded effect of action similarity in Experiment 2 would serve to validate the action semantic architecture derived in Experiment 1.

Experiment 2

Materials and Methods

Participants

Twenty-six paid volunteers (21 female) participated in Experiment 2 (mean age = 32.6 years, SD = 8.1; mean education = 16.9 years, SD = 2.3). All participants were right-handed, spoke English fluently, and had no history of neurological disorders. One participant was excluded for having an average accuracy score more than 3 standard deviations below the group mean. None of the participants in Experiment 2 had participated in Experiment 1.

Stimuli

As in Experiment 1, we used the same color photographs of tools from the BOSS database (Brodeur et al., 2010). In Experiment 2, however, we created blocks of 4 tools each such that the tools in some blocks were used with very similar actions (high action similarity), moderately similar actions (moderate action similarity), or unrelated actions (unrelated). We used the raw similarities derived from the sorting task in Experiment 1 to create these blocks. However, we also wanted to ensure that blocks of one type were visually and categorically (taxonomically) similar to blocks of another type. Matching the blocks on categorical similarity was particularly critical given that previous semantic blocking studies have manipulated semantic similarity by choosing objects from the same or different semantic categories (e.g., Belke et al., 2005; Schnur et al., 2006). Thus, it was important that action similarity between tools be uncorrelated with categorical similarity.

To match blocks of tools on visual and categorical similarity, we collected normative data using Amazon's Mechanical Turk (MTurk), an online labor market that allows researchers to quickly collect large quantities of data (Mason & Suri, 2012). This resource has been used by cognitive psychology researchers to collect similar normative data about stimuli (Cohen-Shikora, Balota, Kapuria, & Yap, in press; Kuperman, Stadthagen-Gonzalez, & Brysbaert, in press). Each MTurk participant rated 59 of the possible 1770 pairs of stimuli for either visual or categorical similarity on a 1 – 5 scale. Participants rating visual similarity were instructed to determine how similar the two tools looked; participants rating categorical similarity were instructed to determine the extent to which the two tools were members of the same category. A participant's data was excluded if he/she 1) was a non-native speaker of English, 2) failed to answer age and native language demographic questions, 3) answered any 1 of 5 “catch” trials incorrectly (trials in which the pair was a set of identical tools), or 4) had completed that rating survey before. Thirty participants together rated an entire set of tool pairs for visual or categorical similarity. In total, we collected data from 120 participants for each type of rating, meaning that each tool pair was rated 4 times. These values were averaged to determine a pair's final visual and categorical similarity values.
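The arithmetic behind these numbers can be checked with a small Python sketch. It assumes 60 tools (the count implied by 1770 unordered pairs), uses placeholder tool names, and splits the pairs into consecutive lists of 59; the actual assignment of pairs to raters is not described in the text, so that part is purely illustrative.

```python
from itertools import combinations

N_TOOLS = 60            # implied by 1770 pairs: 60 * 59 / 2
PAIRS_PER_RATER = 59
RATERS_PER_TYPE = 120   # raters per rating type (visual or categorical)

tools = [f"tool_{i:02d}" for i in range(N_TOOLS)]  # placeholder names
pairs = list(combinations(tools, 2))

# Hypothetical assignment: consecutive chunks of 59 pairs, one chunk per rater.
surveys = [pairs[i:i + PAIRS_PER_RATER]
           for i in range(0, len(pairs), PAIRS_PER_RATER)]

# How many independent ratings each pair receives with 120 raters.
ratings_per_pair = RATERS_PER_TYPE * PAIRS_PER_RATER // len(pairs)
```

Thirty such surveys cover the full set of pairs once, so 120 raters per rating type yield 4 ratings per pair.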

We used these visual and categorical similarity ratings, together with the action similarity values from the sorting task in Experiment 1, to select 15 blocks of 4 stimuli each for Experiment 2. Five of these blocks contained tools with high action similarity, 5 blocks contained tools with moderate action similarity, and 5 blocks contained tools used with unrelated actions. Because we cannot know whether similarities derived from the sorting task map linearly onto the similarities of mental representations, we merely aimed to ensure that the average pairwise action similarity for each block differed significantly between the three block types (all p < .005) (Table 5). In addition, high, moderate, and unrelated blocks were equivalent on average pairwise visual and categorical similarity (all p > .10). We also used normative data available from the BOSS database (Brodeur et al., 2010) to match the block types on average name agreement, familiarity, visual complexity, and manipulability (all p > .05). Finally, the three block types were matched for the average number of letters, phonemes, and syllables in their tools' modal names (Brodeur et al., 2010) (all p > .40). Controlling for these factors ensured that any observed differences between high, moderate, and unrelated blocks could be attributable to effects of action similarity.

Table 5.

Normative characteristics of stimuli used in Experiment 2.

| Block type | Action similarity | Category similarity | Visual similarity | Name agreement | Familiarity | Visual complexity | Manipulability | Letters | Phonemes | Syllables |
|---|---|---|---|---|---|---|---|---|---|---|
| High action similarity | 38.6 (9.7) | 1.6 (0.3) | 2.0 (0.3) | 80% (1.0) | 4.2 (0.1) | 2.4 (0.2) | 3.2 (0.2) | 8.3 (2.1) | 6.1 (1.4) | 2.3 (0.4) |
| Moderate action similarity | 21.6 (0.5) | 1.5 (0.3) | 1.9 (0.4) | 72% (0.8) | 4.0 (0.2) | 2.2 (0.1) | 3.4 (0.3) | 8.9 (1.9) | 6.6 (1.5) | 2.5 (0.8) |
| Unrelated | 5.6 (0.6) | 1.5 (0.2) | 1.6 (0.4) | 76% (1.5) | 4.1 (0.2) | 2.5 (0.2) | 3.2 (0.3) | 7.9 (1.9) | 5.9 (1.4) | 2.2 (0.8) |

Note: standard deviations in parentheses.

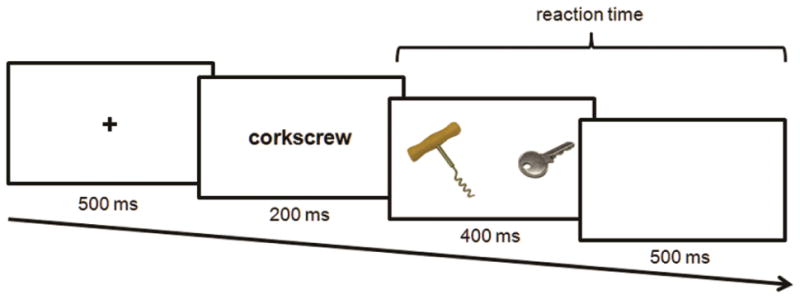

Design and procedure

At the beginning of each trial, participants viewed a fixation cross for 500 ms (Figure 3). Then, the target word (tool name) appeared on the screen for 200 ms. Finally, two tool photographs appeared on the screen, side by side, for 400 ms. Participants were instructed to select the tool that matched the name they had read by pressing a left- or right-oriented key on the keyboard with their left or right index fingers, respectively. Although the tool photographs only remained on the screen for 400 ms, participants were allowed to make their responses up to 500 ms after the photographs had disappeared from the screen.

Figure 3.

Timeline for each trial in Experiment 2, with an example of a stimulus pair with high action similarity (as determined by Experiment 1). Accuracy and reaction times were collected when the tool photographs were on the screen, as well as for 500 ms after the photographs had disappeared.

As described above, trials were blocked by the level of use action similarity among tools in a block. Each block consisted of 4 tools. Since participants viewed 2 tools on each trial, and either tool could be the target, 12 trials were created from each block of tools (6 pairs X each tool in the pair as the target). Trials within each block of 12 appeared in random order, and the location of the target and distractor were randomized on each trial. Additionally, blocks of 12 trials were repeated 3 subsequent times, back-to-back (4 total “cycles”). Thus, each block of 4 tools yielded 48 trials. With 5 blocks at each level of action similarity, each participant completed 720 trials during the experiment.
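The trial structure described above can be sketched in Python as follows. The function name `block_trials`, the dictionary keys, and the placeholder tool names are hypothetical, and the sketch reproduces only the counting and randomization logic, not the presentation software used in the experiment.

```python
import random
from itertools import combinations

def block_trials(tools, n_cycles=4, rng=None):
    """Build the cycles for one block: every pair, each tool as target once."""
    rng = rng or random.Random()
    cycles = []
    for _ in range(n_cycles):
        trials = []
        for a, b in combinations(tools, 2):          # 6 pairs for 4 tools
            for target, distractor in ((a, b), (b, a)):
                trials.append({
                    "target": target,
                    "distractor": distractor,
                    "target_side": rng.choice(["left", "right"]),
                })
        rng.shuffle(trials)                           # random order within a cycle
        cycles.append(trials)
    return cycles

example_block = ["tool_a", "tool_b", "tool_c", "tool_d"]  # placeholder names
cycles = block_trials(example_block, rng=random.Random(0))
trials_per_block = sum(len(c) for c in cycles)
total_trials = trials_per_block * 15                  # 5 blocks x 3 similarity levels
```

Each cycle contains 12 trials, so a block yields 48 trials and the 15 blocks together yield 720.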

The order in which blocks of each type occurred was pseudorandom. We wanted to minimize between-block semantic interference (i.e., when 4 tools in one block are used with similar actions to the 4 tools in another block, just by chance). Therefore, we computed the average pairwise similarity between tools in back-to-back blocks for many randomly generated block orders. Then, we selected five block orders with low average between-block similarity. The assignment of block orders was counterbalanced across participants.
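One way to implement this kind of order selection is sketched below in Python. The function names, the toy blocks, and the toy similarity rule are all hypothetical, and keeping the five lowest-scoring candidates is an assumption; the text states only that five orders with low between-block similarity were selected.

```python
import random
from itertools import product

def between_block_similarity(order, blocks, sim):
    """Mean tool-to-tool similarity across each pair of back-to-back blocks."""
    means = []
    for first, second in zip(order, order[1:]):
        values = [sim(a, b) for a, b in product(blocks[first], blocks[second])]
        means.append(sum(values) / len(values))
    return sum(means) / len(means)

def pick_orders(blocks, sim, n_candidates=1000, n_keep=5, seed=0):
    """Sample random block orders and keep those with the lowest similarity."""
    rng = random.Random(seed)
    ids = list(blocks)
    candidates = set()
    for _ in range(n_candidates):
        order = ids[:]
        rng.shuffle(order)
        candidates.add(tuple(order))
    ranked = sorted(candidates,
                    key=lambda o: between_block_similarity(o, blocks, sim))
    return ranked[:n_keep]

# Toy data: tools in blocks A and B resemble each other; everything else is low.
toy_blocks = {"A": ["a1", "a2"], "B": ["b1", "b2"],
              "C": ["c1", "c2"], "D": ["d1", "d2"]}

def toy_sim(x, y):
    return 0.9 if {x[0], y[0]} == {"a", "b"} else 0.1

best_orders = pick_orders(toy_blocks, toy_sim)
```

In the toy example, the selected orders never place blocks A and B back-to-back, which is the behavior the procedure is meant to produce.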

At the end of the experiment, participants were administered a questionnaire to determine whether or not they noticed that blocks of tools differed by level of action similarity. We asked participants to indicate how difficult the experiment felt (e.g., “very difficult”, “moderately difficult”, etc.) and whether there were any objects that they did not recognize or had never seen before. Finally, we asked participants to describe anything they noticed to be unusual about the experiment.

Data analysis

Trials were scored as incorrect if participants either selected the wrong tool or did not respond within the deadline (900 ms). For reaction time analyses, we included only responses on correct trials and excluded trials with reaction times less than 250 ms. In addition, for each participant, we excluded outlier trials more than 3 standard deviations from that participant's average reaction time for trials of the same level of action similarity and cycle. Together, these criteria for eliminating trial-level outliers excluded 3.1% of all correct trials (3.0% of high similarity trials, 3.0% of moderate similarity trials, and 3.2% of unrelated trials).
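A minimal Python sketch of these trimming rules is given below, applied to the correct-trial reaction times of one participant in one cell of the design (one level of action similarity in one cycle). The function name is hypothetical, and two details are assumptions because the text does not specify them: the 250 ms floor is applied before the mean and standard deviation are computed, and deviations are treated as two-sided.

```python
from statistics import mean, stdev

def trim_reaction_times(rts_ms, floor_ms=250, sd_cutoff=3):
    """Drop anticipations, then drop RTs far from the cell mean."""
    kept = [rt for rt in rts_ms if rt >= floor_ms]
    if len(kept) < 2:
        return kept                      # too few trials to estimate an SD
    m, sd = mean(kept), stdev(kept)
    return [rt for rt in kept if abs(rt - m) <= sd_cutoff * sd]

# Made-up reaction times (ms): one anticipation (200) and one slow outlier (890).
example_rts = [480, 490, 500, 510, 520] * 4 + [890, 200]
trimmed = trim_reaction_times(example_rts)
```

In this made-up example, both the 200 ms and the 890 ms trials are removed and the remaining 20 trials are retained.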

Results

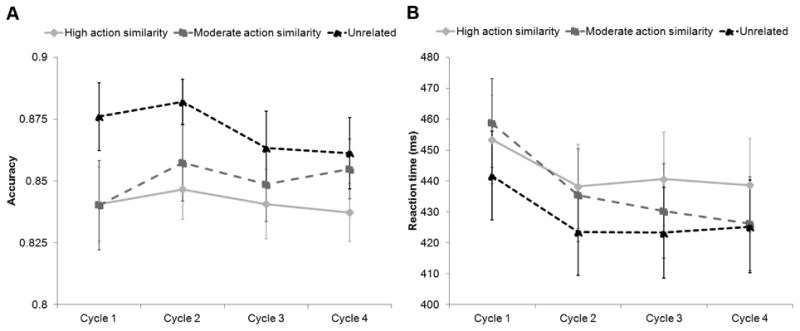

We first looked for effects of action similarity (high, moderate, unrelated) and cycle (1 – 4) on participants' accuracy using a two-way repeated measures ANOVA (Figure 4A). We found a significant main effect of action similarity [F(2, 48) = 6.98, p < .005], but no effect of cycle [F(3, 72) = .95, p = .42], and no action similarity X cycle interaction [F(6, 144) = .63, p = .71]. The main effect of action similarity significantly fit a linear contrast [F(1, 24) = 13.11, p < .005], indicating that accuracy decreased linearly with increasing action similarity among tools in a block. Further, planned comparisons between levels of action similarity showed that participants performed less accurately on trials in high action similarity blocks (Mhigh = .84) than unrelated blocks (Munrelated = .87) (p < .005), and less accurately on trials in moderate action similarity blocks (Mmoderate = .85) than unrelated blocks (p < .05). Accuracy on high blocks was numerically lower than accuracy on moderate action similarity blocks, but this difference did not reach significance (p = .26). Although there was no main effect of cycle or interaction between cycle and action similarity, we ran a second ANOVA with only high and unrelated levels of action similarity to ensure that there was no evidence for “refractoriness” on blocks of high action similarity (i.e., a greater decrease in accuracy over cycles for related than unrelated). Indeed, when only considering these two types of blocks, the interaction between action similarity and cycle was not significant [F(3, 72) = .50, p = .69]; the difference in accuracy between blocks of tools used with highly similar actions versus unrelated actions remained constant over cycles.

Figure 4.

A) Accuracy and B) reaction time results from Experiment 2. Error bars represent 1 standard error of the mean.

Next, we examined participants' reaction times for effects of action similarity and cycle using a two-way repeated measures ANOVA (Figure 4B). Here, we found significant main effects of action similarity [F(2, 48) = 7.95, p < .005] and cycle [F(3, 72) = 20.10, p < .001], as well as a significant interaction between the two [F(6, 144) = 2.86, p < .05]. Tests of simple effects at each level of action similarity (using the Holm-Sidak correction for multiple comparisons) showed that for moderate action similarity and unrelated blocks, reaction times on cycle 1 were slower than reaction times on cycles 2 – 4 (all p < .05); reaction times did not differ among cycles 2 – 4 (all p > .2). A similar pattern emerged for high action similarity blocks, in which reaction times on cycle 1 were marginally slower than reaction times on cycles 2 – 4 (all p < .1), and reaction times among cycles 2 – 4 did not differ (all p > .9). Thus, participants sped up their responses in all conditions after the first cycle.

Tests of simple main effects at each cycle revealed that, for all cycles, trials in high action similarity blocks were responded to more slowly than unrelated blocks (all p < .05, except in cycle 3, where p = .06). Reaction times in moderate similarity blocks, on the other hand, began by patterning like trials in high action similarity blocks: in cycles 1 and 2, reaction times to high and moderate blocks were not different from each other (all p > .2). And, like high similarity blocks, moderate similarity blocks were responded to significantly more slowly than unrelated blocks in cycle 1 (p < .001), with a non-significant effect in the same direction during cycle 2 (p = .11). In cycles 3 and 4, however, trials in moderate action similarity blocks patterned like trials in unrelated blocks. Reaction times to moderate blocks were not different from those to unrelated blocks (all p > .7), and, like unrelated blocks, they were significantly faster than reaction times to high action similarity blocks (all p < .05). This pattern indicates that while action similarity affected reaction time, the way in which it did so depended on both the degree of action similarity among tools in a block and the number of times the stimuli had been repeated. While tools in high action similarity blocks were always responded to more slowly than unrelated blocks, tools in moderate action similarity blocks overcame an initial slow-down to become as fast as unrelated blocks by the last two cycles.

Finally, we carried out a second ANOVA using only high action similarity and unrelated blocks in order to test for an accumulation of interference for highly similar blocks over cycles, relative to unrelated (i.e., refractoriness). In reaction time, this interference would manifest as less speed-up over cycles (e.g., Schnur et al., 2006). As with accuracy, the interaction between action similarity and cycle was not significant [F(3, 72) = .37, p = .78]. Thus, the difference between blocks of tools used with highly similar versus unrelated actions remained constant over cycles.

In the post-experiment questionnaire, participants' ratings of the experiment ranged from “moderately difficult” to “slightly too easy”, and no participants reported trouble recognizing the tools. Two participants reported noticing that some tools were blocked by their use actions. We excluded these two participants and re-analyzed accuracy and reaction time data; all critical patterns of significance remained the same.

Discussion

Both in terms of accuracy and reaction times, participants performed more poorly on a blocked cyclic word-picture matching task when tools in a block were used with highly similar relative to unrelated use actions. Moreover, there was evidence in both the accuracy and reaction time data of graded effects of similarity: accuracy decreased linearly with increasing action similarity among tools in a block, and reaction times of moderately similar items patterned differently than reaction times of unrelated and highly related items. To our knowledge, this is the first time that graded effects of action similarity on semantic processing of tools have been reported. Critically, participants were not instructed to think of tools' use actions, nor was this information ostensibly relevant for the task. Moreover, the results of the post-experiment questionnaire suggest that it is unlikely that our results depend on explicit consideration of tool use actions. Instead, the effect of use action similarity in Experiment 2 is consistent with previous reports of the implicit activation of action knowledge about tools during semantic tasks (e.g., Campanella & Shallice, 2011b; Myung et al., 2006).

Several explanations have been put forward to account for interference observed when participants repeatedly access semantically related stimuli. On one account, incremental learning strengthens the connections between the semantic and lexical representations of target items while also weakening connections of related, non-target items (Oppenheim et al., 2010). As a result, semantically related items presented with a target on earlier trials are more difficult to access when they are targets on later trials. On another account, repeated access of the same semantic category results in persistent co-activation of the lexical representations of category members, making lexical selection difficult (Belke et al., 2005).

While Experiment 2 was not designed to adjudicate between competing accounts, our results do expand the notion of “semantic relatedness” within this field of inquiry: tools used with similar actions have more similar semantic representations than those used with different actions. Further, we found that interference effects on accuracy were modulated by the degree of action similarity between tools, rather than being an all-or-nothing phenomenon. These results suggest that tools used with similar actions have overlapping semantic representations. They share action features which are implicitly activated during semantic tasks, along with other kinds of semantic features (e.g., visual, functional). Thus, all else equal, the semantic representations of tools used with similar actions have more features in common than tools used with different actions, and this representational similarity yields greater interference in a semantic blocking paradigm. That we observed differences in accuracy for different levels of action similarity is particularly informative given that all experimental stimuli came from the same taxonomic category (i.e., tools). Prior research in this domain has used taxonomic category as a proxy for semantic similarity (e.g., Belke et al., 2005; Damian et al., 2001). The present results, however, call for a more nuanced approach in which similarity within and across different types of semantic features determines semantic relatedness rather than category membership alone. Furthermore, unlike previous studies of semantic blocking, participants in Experiment 2 were not aware that objects were blocked by similarity. Thus, conscious awareness of the experimental manipulation is not necessary to affect participants' behavior.

While some previous studies using semantic blocking manipulations have found that semantic interference effects (usually on reaction time) accumulate over presentation cycles (“refractoriness”) (e.g., Biegler, Crowther, & Martin, 2008; Schnur et al., 2006; see D. Howard et al., 2006 for evidence that semantic interference can accumulate even without semantic blocking), other studies have not observed any increase in interference after the second cycle (see Belke & Stielow, in press, for a review). Instead, reaction times are typically equivalent between conditions for the first cycle. Reaction times speed up after the first cycle for all conditions, but this speed-up is less pronounced for semantically-related blocks (Belke et al., 2005). Although a full discussion of the presence or absence of cumulative semantic interference is outside the scope of this paper, we found no evidence that semantic interference for high similarity blocks (in accuracy or reaction time) grew over time relative to unrelated blocks. In fact, we observed differences in accuracy and reaction time between high and unrelated levels of action similarity even on the first cycle, and those differences remained constant for the remaining three cycles. We suggest that this particular discrepancy between our results and prior studies (e.g., Schnur et al., 2006) stems from our usage of a word-picture matching task, in which participants select between two objects on each trial, versus the typically-used picture naming task, in which participants see only one stimulus per trial. While picture naming requires at least two trials to produce interference between related stimuli, interference in the current experiment could begin to emerge even on the first trial of the first cycle of a block.

At first glance, the lack of a cumulative interference effect reported here seems incompatible with the results of Campanella & Shallice (2011a). They similarly used a word-picture matching paradigm but found a linear decrease in raw accuracy over cycles for manipulation-related stimuli. No such change over cycles was observed for unrelated stimuli. However, although semantic interference effects are typically defined relative to unrelated stimuli (e.g., Belke et al., 2005), Campanella & Shallice (2011a) did not test whether the decrease in accuracy observed for manipulation-related stimuli was statistically different from performance over cycles on unrelated stimuli. Furthermore, although Campanella & Shallice did not report accuracy by cycle in the unrelated condition, it seems likely that participants experienced interference on manipulation-related relative to unrelated objects even on the first cycle: accuracy for manipulation-related stimuli in the first cycle (73.32%) was well below the average accuracy for unrelated stimuli (93.4%).

Our results – and potentially those of Campanella & Shallice (2011a) – suggest that action similarity can affect performance even on a single trial. Indeed, the interfering effects of semantic similarity have been reported in paradigms that do not repeatedly cycle through stimuli – most notably in the picture-word interference paradigm (e.g., Glaser & Glaser, 1989; Schriefers, Meyer, & Levelt, 1990; see MacLeod, 1991 for a review). In this task, participants typically name pictures of objects while ignoring a simultaneously presented distractor word. Participants' naming responses are slower in the presence of distractor names that are semantically related to the target than in the presence of unrelated names. Some researchers propose that this interference arises from competition during lexical selection (e.g., Levelt, Roelofs, & Meyer, 1999; but see Mahon, Costa, Peterson, Vargas, & Caramazza, 2007). On a competition account of the current results, the lexical representations of distractor objects may receive activation due to their action semantic similarity to the target. This increased activation of non-target items makes selection of the target more difficult even on a single experimental trial.

Finally, we predicted that semantic interference would be graded according to the level of use action similarity between tools. In accuracy, we indeed observed this pattern (described above). However, participants' reaction times showed an unexpected pattern of results across cycles: responses to moderately similar blocks were as slow as those to high similarity blocks during the first two cycles, but as fast as those to unrelated blocks during the last two cycles. We offer a speculative interpretation of this surprising pattern of effects. Following an account proposed by Belke (2008), we suggest that this result may reflect a differential impact of top-down control during blocks of tools used with moderately similar versus highly similar actions. During the first cycle, repeated access to semantic representations causes interference (e.g., due to incremental learning, Oppenheim et al., 2010, or increased competition among related lexical representations, Belke et al., 2005) on action-related relative to unrelated blocks. This interference spreads not only to tools within an experimental block, but, more generally, to any tools used with a similar action. After the first cycle, however, the set of tools in a block is known to participants, and participants can use this knowledge to bias processing towards items in a block in a top-down way (Belke & Stielow, in press; Belke, 2008). This bias globally reduces competition from action-related tools in the semantic network that are not presented in the block. However, it does not prevent competition between the tools that do appear in the block. Thus, for high action similarity blocks, selection of the target is still difficult. We speculate that on moderate action similarity blocks, tool actions are more dissimilar and so compete with each other less strongly. As a result, the top-down bias applied to tools within moderately similar blocks is sufficient to eliminate reaction time differences between moderately similar and unrelated blocks.
We have offered one possible explanation for this pattern of results, but further research is needed to determine the precise origin of this effect.

General Discussion

In these experiments, we investigated the elemental features and architecture of knowledge about how tools are used. In Experiment 1, we found that participants spontaneously consider the magnitude of arm movement, configuration of the hand, and possibly manner of motion when they sort tools by the overall similarity of their typical use actions. The degree to which tool use actions share these elemental features determines how globally similar participants perceive them to be. This experiment resulted in a novel description of a semantic space in which individual tools are positioned as a function of the similarity of their action features. In Experiment 2, we tested whether the action similarities derived from Experiment 1 predicted behavior on an implicit semantic task, blocked cyclic word-picture matching. We found that even though blocks were matched for taxonomic and visual similarity, average name agreement, familiarity, visual complexity, manipulability, and number of letters, phonemes, and syllables, accuracy decreased with increasing action similarity among tools in a block. We also observed slower reaction times to blocks of tools used with highly similar versus unrelated actions. Blocks of tools used with moderately similar actions showed a more complex pattern of reaction times that nonetheless differed from the pattern for blocks of tools used with unrelated actions. These data demonstrate, for the first time, that overlap in the features of postures and movements associated with tools plays an important role in determining their overall semantic similarity.

Our results speak to an ongoing debate regarding the representation of tools in semantic memory. On embodied accounts of semantics, our experiences perceiving and manipulating objects in the world cause sensory and motor areas of the brain to be recruited during later semantic processing (Allport, 1985; Barsalou, 1999; Gallese & Lakoff, 2005; Pulvermüller, 1999). Although neuroimaging studies of tool concepts show activation of brain areas also implicated in action execution (e.g., Boronat et al., 2005; Chao & Martin, 2000; Grafton, Fadiga, Arbib, & Rizzolatti, 1997) and visual motion perception (see Beauchamp & Martin, 2007 for a review), such evidence cannot rule out the possibility that these regions are activated epiphenomenally, possibly after conceptual access occurs (Mahon & Caramazza, 2008). The results of Experiment 2 and other studies (Campanella & Shallice, 2011a; Helbig et al., 2006, 2010; Lee et al., 2012; Myung et al., 2006), on the other hand, demonstrate that action properties of tools can affect behavior on semantic tasks, even when participants are not instructed to think about object-directed actions. Since it is unclear why epiphenomenally activated action knowledge would affect performance on a task requiring the association of a name with a picture, this evidence bolsters the claim that action knowledge is a component of the semantic representations of tools.

Furthermore, the graded effects of action similarity that we observed in Experiment 2 suggest that similarity within action semantic space is computed in much the same way as other aspects of semantic similarity: similarity is proportional to the overlap between the semantic features of objects (Cree, McRae, & McNorgan, 1999; McRae et al., 1997; Plaut, 1995). While previous studies have shown that action knowledge about tools comes online during semantic tasks, none have speculated about what the elemental features of tool use knowledge might be. For example, Myung and colleagues (2006) found priming on a lexical decision task between manipulation-related tools (e.g., piano – typewriter). When choosing stimuli, they operationalized action similarity in a multidimensional way, stating that “… it was the similarity of the general action patterns among objects rather than that of detailed finger positions that defined common manipulation movements” (2006, p. 228). Similarly, Helbig and colleagues (2006) found priming when participants named pairs of tools used with “similar typical actions” (e.g., pliers – nutcracker) relative to those used with different actions (e.g., pliers – horseshoe). Our results suggest that the architecture of action semantics is likely determined by similarity on multiple action features (i.e., magnitude of arm movement, configuration of the hand, manner of motion). Indeed, in Experiment 1, only a relatively small amount of variance in the sorting data was explained by the explicit action ratings. We believe this result indicates that the dimensions for which we collected ratings represent only a subset of the action features that contribute to the meanings of tools. Yet, further research is necessary to determine which elemental features of actions play the biggest role in determining the similarity structure of action semantics. 
Given that tools sharing two or more semantic features may be more semantically similar than those sharing one or no features, another area of exploration might assess whether the influence of various action features is additive or interactive in determining overall similarity.
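
The feature-overlap notion of similarity discussed above can be made concrete with a minimal sketch. The feature labels and tool-to-feature assignments below are hypothetical and chosen purely for illustration; they are not the ratings or sorting data from Experiment 1, and the Jaccard index is only one of several possible overlap measures.

```python
from itertools import combinations

# Hypothetical binary action features for three tools (illustrative only;
# not derived from the Experiment 1 ratings or sorting data).
TOOLS = {
    "key": {"small_arm_movement", "precision_grip", "wrist_rotation"},
    "screwdriver": {"small_arm_movement", "power_grip", "wrist_rotation"},
    "hammer": {"large_arm_movement", "power_grip", "swinging_motion"},
}


def feature_overlap(a, b):
    """Jaccard overlap: shared features divided by all features of either tool."""
    return len(a & b) / len(a | b)


def pairwise_similarity(tools):
    """Return the overlap score for every unordered pair of tools."""
    return {
        (t1, t2): feature_overlap(tools[t1], tools[t2])
        for t1, t2 in combinations(sorted(tools), 2)
    }


if __name__ == "__main__":
    for pair, score in pairwise_similarity(TOOLS).items():
        print(pair, round(score, 2))
```

Under these assumed features, the key and screwdriver share two of four features, the hammer and screwdriver share one of five, and the hammer and key share none, which yields a graded rather than all-or-nothing similarity structure. An additive account corresponds to counting shared features in this way; an interactive account would require weighting particular feature combinations.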

There are several mechanisms by which tool use knowledge could come to be recruited during the semantic processing of tools. One possibility is that when we think of or see tools, we re-activate, or simulate, knowledge of use actions within the motor system (e.g., Gallese & Lakoff, 2005; Jeannerod, 2001; Pulvermüller, 1999). These simulations, also proposed to underlie action recognition, may recruit the same representations used for action execution (Jeannerod, 2001; Rizzolatti & Craighero, 2004; Rizzolatti & Sinigaglia, 2010). In support of this account, patients with deficits of skilled object use (i.e., apraxia) are impaired when making explicit judgments about the actions associated with using tools (Buxbaum & Saffran, 2002; Buxbaum, Veramonti, & Schwartz, 2000; Myung et al., 2010), as well as similarity judgments about tools even when not instructed to think of their actions (Buxbaum & Saffran, 2002). When asked to find a target object in an array, patients with apraxia also exhibit abnormally slowed and reduced patterns of looking to manipulation-related distractors (Lee et al., in press; Myung et al., 2010). Thus, normal semantic processing of tools appears to depend on the integrity of the action system.

Alternatively, or in addition, tool use knowledge recruited during semantic tasks may be grounded in visual, rather than motoric, experiences with tools and tool actions. In fact, we often have more experience visually perceiving tool use actions – both our own and those of others – than we have performing them. Thus, at least some knowledge about tool use activated by semantic processing of tools may be represented within brain systems specialized for visual perception of tool actions. For instance, parts of the left middle temporal gyrus respond more strongly to the rigid motion of tool actions than other kinds of visual motion (Beauchamp, Lee, Haxby, & Martin, 2002, 2003), and lesions to this same brain region impair visual recognition and production of tool use actions (Buxbaum, Shapiro, & Coslett, in press; Kalénine, Buxbaum, & Coslett, 2010). Of course, the recruitment of visual or motoric knowledge of tool use actions is not mutually exclusive; we may draw upon information from both modalities when we think of the meanings of tools.

In addition to adding more precision to accounts of semantic memory, the present set of results also has implications for our understanding of action-related competition between objects in an array. Previous research has demonstrated that the intention to perform an action makes objects compatible with the action more salient; for instance, preparing a grasp facilitates visual detection of objects congruent with the prepared grasp (Craighero, Bello, Fadiga, & Rizzolatti, 2002; Craighero, Fadiga, Rizzolatti, & Umiltà, 1998, 1999). Moreover, when there are objects in an array sharing a feature (e.g., “graspability”) congruent with a planned action (e.g., a grasp), attention is diverted to objects containing those action features, enhancing between-object competition and making selection of the target more difficult (Botvinick, Buxbaum, Bylsma, & Jax, 2009; Pavese & Buxbaum, 2002). We suggest an extension of these effects, from the low-level reaching and grasping actions previously studied to the skilled, complex tool use actions explored here. Specifically, when one plans to use a tool (e.g., turning a key), the semantic representation of that tool – including its action features – will be activated. As a result, attention will be drawn to tools that share action features with the target (e.g., a screwdriver). A recent study by Bub, Masson, & Lin (2013) speaks to this prediction. Participants held actions in working memory while naming pictures of tools. When the planned action shared a single motor feature with the pictured tool (i.e., grasped with the same hand or same wrist orientation), naming latencies were slowed. Bub and colleagues conclude that the identification of manipulable objects causes automatic retrieval of motor features congruent and incongruent with planned actions.