Abstract

This study compared elderly hearing aid (EHA) users and elderly normal-hearing (ENH) individuals on identification of auditory speech stimuli (consonants, words, and final words in sentences) that differed in their linguistic properties. We measured the accuracy with which the target speech stimuli were identified, as well as the isolation points (IPs: the shortest duration, from onset, required to correctly identify the speech target). The relationships between working memory capacity, the IPs, and speech accuracy were also measured. Twenty-four EHA users (with mild to moderate hearing impairment) and 24 ENH individuals participated in the present study. Despite the use of their regular hearing aids, the EHA users had delayed IPs and were less accurate in identifying consonants and words compared with the ENH individuals. The EHA users also had delayed IPs for final word identification in sentences with lower predictability; however, no significant between-group difference in accuracy was observed. Finally, there were no significant between-group differences in terms of IPs or accuracy for final word identification in highly predictable sentences. Our results also showed that, among EHA users, greater working memory capacity was associated with earlier IPs and improved accuracy in consonant and word identification. Together, our findings demonstrate that the gated speech perception ability of EHA users was not at the level of ENH individuals, in terms of IPs and accuracy. In addition, gated speech perception was more cognitively demanding for EHA users than for ENH individuals in the absence of semantic context.

Keywords: hearing aid users, gating paradigm, speech perception, cognition

Introduction

Several decades of research have shown the adverse effects of hearing impairment on the peripheral and central auditory systems, which in turn cause decreased language understanding, especially under degraded listening conditions (e.g., Tyler, Baker, & Armstrong-Bendall, 1983). Although current digital hearing aids that utilize advanced technologies (e.g., directional microphones, nonlinear amplification of auditory signals, and noise reduction algorithms) are improving the hearing ability of hearing-impaired people, the capacity for hearing aid users to identify speech stimuli is not on par with that of individuals with normal hearing (e.g., Dimitrijevic, John, & Picton, 2004). Furthermore, the degree of hearing aid benefit varies greatly across hearing-impaired individuals (Benson, Clark, & Johnson, 1992).

One reason why hearing aids do not fully restore hearing ability may be that the clinical settings of hearing aids for most hearing-impaired individuals are primarily based on pure-tone thresholds extracted from their audiograms and speech audiometry, despite individual differences in, for example, cognitive function (for a review, see Lunner, Rudner, & Rönnberg, 2009; Rudner & Lunner, 2013). Independent empirical studies have demonstrated that the cognitive capacity of hearing aid users (e.g., working memory capacity) is associated with their speech perception success (Humes, 2002; Humes, Kidd, & Lentz, 2013; Rudner, Foo, Sundwall-Thorén, Lunner, & Rönnberg, 2008; Rudner, Rönnberg, & Lunner, 2011). In addition, hearing impairment has been associated with changes in anatomical structure (Husain et al., 2011; Peelle, Troiani, Grossman, & Wingfield, 2011), with cortical plasticity in the brain (Campbell & Sharma, 2013), and with reduced temporal and spectral resolution (Arlinger & Dryselius, 1990); these changes may also be related to the behavioral speech perception outcomes of hearing aid users.

In the present study, we compared the auditory speech perception abilities of elderly hearing aid (EHA) users and elderly normal-hearing (ENH) individuals, using the gating paradigm (Grosjean, 1980). The gating paradigm enables the measurement of isolation points (IPs). IP refers to the specific point in the entire duration of a speech signal (e.g., a consonant or a word) when a listener is able to correctly guess the identity of that signal with no subsequent change in his or her decision after hearing the remainder of that signal. The present study compared the speech perception abilities of EHA users with age-matched normal-hearing counterparts in terms of IP and accuracy, for different gated speech tasks. This comparison was conducted to clarify the extent to which hearing aid use compensates for the speech perception ability of EHA users when compared with that of age-matched ENH individuals.

The speech stimuli used in the present study (consonants, words, and final words in highly predictable [HP] and less predictable [LP] sentences) vary in terms of their linguistic (i.e., acoustical, lexical, and sentential) properties. Listeners identify consonants based on the acoustic cues (e.g., voicing, manner, and place of articulation) that are distributed across the entire duration of consonants (cf., Smits, 2000). When these acoustical cues are available, listeners are able to correctly identify consonants (cf., Sawusch, 1977). For the identification of words, lexical information helps listeners to correctly identify words. Prior gating studies have shown that listeners need a little more than half of the duration of the whole word to correctly identify the target word (Grosjean, 1980). For the identification of final words in sentences, listeners benefit from contextual backgrounds. By definition, this context is much greater in HP sentences than in LP sentences (Moradi, Lidestam, Saremi, & Rönnberg, 2014).

In addition, we measured the relationships between the IPs and accuracy of gated speech tasks and a reading span test to investigate the contribution of working memory to early and accurate identification of gated stimuli among EHA users and ENH individuals.

The EHA users in the present study wore their own hearing aids using their existing amplification setting throughout the testing. Hearing aid settings were not changed during the testing. The rationale for this approach was that we were interested in obtaining the speech perception abilities of experienced hearing aid users under settings that were ecologically similar to their typical listening experiences, to minimize the potential effect of novelty on their performance in gated speech tasks.

Methods

Participants

There were two groups of participants in the present study: EHA users and ENH individuals.

EHA users

Twenty-four native Swedish speakers (13 men and 11 women) with a symmetrical bilateral mild to moderate sensorineural hearing impairment participated in this study. All had been habitual hearing aid users for at least two years. Their average age was 72.4 years (SD = 3.3 years, range: 67–79 years).

The study inclusion criteria were as follows: (a) bilateral hearing impairment with an average threshold of >35 dB for pure-tone frequencies of 500, 1000, 1500, and 2000 Hz; (b) Swedish as the native language; and (c) age over 65 years.

The participants were selected from an audiology clinic patient list at Linköping University Hospital, Sweden. They were bilateral hearing aid users and wore various behind-the-ear (BTE), in-the-ear (ITE), and receiver-in-the-ear (RITE) digital hearing aids. Table 1 shows the brands and models of hearing aids used by these participants.

Table 1.

Brands and Models of Hearing Aids Used by EHA Users.

| Hearing aid | BTE, ITE, or RITE | Number of participants |
|---|---|---|
| Phonak, Versata Art micro | BTE | 4 |
| Oticon, Vigo Pro T | BTE | 3 |
| Widex, Clear C4-9 | BTE | 2 |
| Beltone, True9 45-DPW | ITE | 1 |
| Oticon, Hit Pro 13 | BTE | 1 |
| Oticon, Hit Pro 312 | BTE | 1 |
| Oticon, Vigo Pro Power | RITE | 1 |
| Oticon, Syncro T | BTE | 1 |
| Oticon, Tego | BTE | 1 |
| Oticon, Tego Pro | BTE | 1 |
| Oticon, Vigo | RITE | 1 |
| Oticon, Vigo Pro 312 | BTE | 1 |
| Phonak, Exelia Art | ITE | 1 |
| Phonak, Exelia Art M | BTE | 1 |
| Phonak, Exelia Art micro | BTE | 1 |
| Phonak, Exelia Art Petite | ITE | 1 |
| Phonak, Versata Art M | BTE | 1 |
| Widex, Senso Vita SV-9 VC | BTE | 1 |

Note. BTE = behind-the-ear; ITE = in-the-ear; RITE = receiver-in-the-ear

For 15 EHA users, the current hearing aid was the first device used following the diagnosis of their hearing impairment. The remaining nine EHA users had used another hearing aid before their current one. Twenty-two of the participants had used their current hearing aids for between 9 months and 4 years, while two of the participants had used their current hearing aids for around 5.5 years.

The hearing aids had been fitted, based on each listener’s individual needs, by licensed audiologists who were independent of the present study. All of these hearing aids used nonlinear processing and had been fitted according to the manufacturers’ instructions.

Table 2 reports the pure-tone average (PTA) thresholds across seven frequencies (125, 250, 500, 1000, 2000, 4000, and 8000 Hz) for the left and right ears of the EHA and ENH groups.

Table 2.

Means and Standard Deviations for Hearing Thresholds and Pure-Tone Averages (in dB HL) for EHA Users and ENH Individuals.

| Group | Ear | 125 Hz (SD) | 250 Hz (SD) | 500 Hz (SD) | 1000 Hz (SD) | 2000 Hz (SD) | 4000 Hz (SD) | 8000 Hz (SD) | PTA (SD) |
|---|---|---|---|---|---|---|---|---|---|
| EHA | Right | 27.50 (13.35) | 26.04 (13.35) | 28.96 (10.93) | 36.46 (10.37) | 49.17 (8.81) | 59.38 (9.24) | 65.00 (13.83) | 41.79 (6.18) |
| EHA | Left | 30.63 (13.21) | 30.21 (15.78) | 31.46 (14.02) | 36.25 (10.56) | 53.13 (11.96) | 62.50 (10.00) | 70.83 (15.01) | 45.00 (6.33) |
| ENH | Right | 8.75 (3.69) | 9.58 (3.27) | 11.25 (3.04) | 15.42 (3.27) | 18.96 (2.54) | 23.33 (3.81) | 37.29 (4.42) | 17.80 (2.26) |
| ENH | Left | 9.17 (3.19) | 10.21 (3.12) | 12.08 (2.92) | 15.21 (3.12) | 19.79 (3.12) | 25.83 (3.51) | 38.33 (4.58) | 18.66 (2.51) |

Note. PTA = pure-tone averages; EHA = elderly hearing aid; ENH = elderly normal hearing.
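As an arithmetic check on Table 2, the PTA column can be reproduced by averaging the seven frequency means in each row. The sketch below is illustrative only: the dictionary layout and the function name `pure_tone_average` are assumptions made for this example, and the numbers are the group means copied from Table 2 (not individual audiograms).

```python
# Group-mean thresholds (dB HL) copied from Table 2, ordered
# 125, 250, 500, 1000, 2000, 4000, 8000 Hz.
TABLE_2_MEANS = {
    ("EHA", "right"): [27.50, 26.04, 28.96, 36.46, 49.17, 59.38, 65.00],
    ("EHA", "left"): [30.63, 30.21, 31.46, 36.25, 53.13, 62.50, 70.83],
    ("ENH", "right"): [8.75, 9.58, 11.25, 15.42, 18.96, 23.33, 37.29],
    ("ENH", "left"): [9.17, 10.21, 12.08, 15.21, 19.79, 25.83, 38.33],
}


def pure_tone_average(thresholds_db):
    """Unweighted mean of the thresholds across all tested frequencies."""
    return sum(thresholds_db) / len(thresholds_db)


# PTA per group and ear, rounded to two decimals as in the table.
ptas = {key: round(pure_tone_average(values), 2)
        for key, values in TABLE_2_MEANS.items()}

for (group, ear), pta in ptas.items():
    print(f"{group} {ear} ear: PTA = {pta:.2f} dB HL")
```

Averaging the column means gives the same result as averaging each participant's own PTA, because every participant contributes to all seven frequencies.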

Elderly people with normal hearing

Twenty-four native Swedish speakers with normal hearing (11 men and 13 women) were recruited to take part in the study. These individuals were selected from the general population living near the hearing clinic. Their average age was 71.5 years (SD = 3.1 years, range: 66–77 years). They were recruited primarily via invitation letters sent to their addresses and flyers. Four of the participants were spouses of hearing aid users.

All ENH participants passed pure-tone air conduction screening from 125 through 8000 Hz. The inclusion criteria for this group were as follows: (a) a mean threshold of <20 dB for frequencies of 500, 1000, 1500, and 2000 Hz; (b) Swedish as the native language; and (c) age over 65 years.

All participants in both groups (EHA and ENH) reported themselves to be in good health. They were free of middle ear pathology, tinnitus, head injuries resulting in loss of consciousness, Parkinson’s disease, seizure, dementia, or psychological disorders that might compromise their ability to perform the speech and cognitive tasks.

They were invited by letter to take part in the study. All participants gave written consent for their participation in this study, and all were provided with written information regarding the procedures employed in the study. The Linköping regional ethical review board approved the study, including the informational materials and consent procedure.

Measures

Gated speech tasks

The gated speech stimuli employed in the present study have been described in detail by Moradi et al. (2014). A brief description of the gated tasks is provided below.

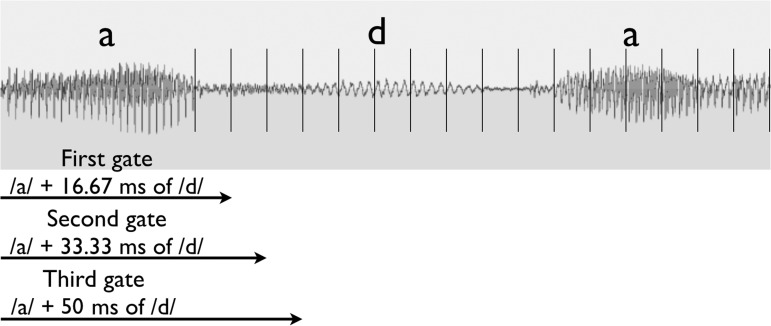

Consonants

The present study used 18 Swedish consonants presented in a vowel–consonant–vowel syllable format (/aba, ada, afa, aga, aja, aha, aka, ala, ama, ana, aŋa, apa, ara, aʈa, asa, aʃa, ata, ava/). The consonant gate size was set at 16.67 ms. Gating started after the first vowel /a/ and at the beginning of the consonant onset. Hence, the first gate included the vowel /a/ plus the initial 16.67 ms of the consonant, the second gate provided an additional 16.67 ms of the consonant (a total of 33.34 ms of the consonant), and so on. The minimum, average, and maximum total duration of consonants was 85, 198, and 410 ms, respectively. The consonant gating task took around 10 to 15 min to complete. Figure 1 illustrates an example of the gating paradigm for consonant identification.

Figure 1.

Illustration of gating for syllable /ada/.
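The gate structure described for consonants can be sketched as a list of cumulative durations. This is a minimal illustration, not the MATLAB script used in the study: the function name `gate_durations_ms` is hypothetical, and truncating the final gate at the full consonant duration is an assumption, since the text does not say how a last partial gate was handled. The preceding vowel /a/, which was always presented, is not included in these durations.

```python
import math


def gate_durations_ms(total_ms, gate_ms):
    """Cumulative duration of the gated segment heard at each gate.

    Gate k exposes k * gate_ms of the target; the last gate is capped
    at the full duration (an assumption of this sketch).
    """
    n_gates = math.ceil(total_ms / gate_ms)
    return [min(k * gate_ms, total_ms) for k in range(1, n_gates + 1)]


# Shortest consonant reported in the text (85 ms) with the 16.67 ms gate size.
gates = gate_durations_ms(total_ms=85, gate_ms=16.67)
for number, duration in enumerate(gates, start=1):
    print(f"gate {number}: {duration:.2f} ms of the consonant")
```

With these inputs the second gate exposes 33.34 ms of the consonant, matching the example given in the text.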

Words

Twenty-three Swedish monosyllabic words (all nouns) were presented to the participants in a consonant–vowel–consonant (CVC) format. These words were selected from the 46 Swedish monosyllabic words (e.g., bad, dus, gap, jul, ram, sot, and väck) used by Moradi, Lidestam, and Rönnberg (2013) and Moradi et al. (2014). The words had average to high frequency according to the Swedish language corpus. In addition, each word had a small to average number of neighbors (i.e., three to six alternative words with the same pronunciation of the first two phonemes: e.g., the word /dop/ had the neighbors /dok, dog, dos, don/). The word gate size in the present study was set to 33.33 ms, as used by Moradi et al. The rationale for this gate size was based on pilot studies in which words were presented (in CVC format) with the gate size of 16.67 ms starting from the onset of the first consonant. The participants in the pilot studies complained that word identification took a long time, causing exhaustion and loss of motivation. To resolve this problem, we doubled the gate size (to 33.33 ms) and started the gating from the second phoneme of each word. The minimum, average, and maximum duration of words was 548, 723, and 902 ms, respectively. The word-gating task took around 15 to 20 min to complete.

Final words in sentences

The sentences for final word identification in LP or HP sentences were extracted from a battery of sentences developed by Moradi et al. (2013, 2014). Each sentence was either an LP or HP sentence, according to how predictable the last word in each sentence was. This battery was created based on pilot studies to develop a Swedish version of final words in LP and HP sentences. An example of a final word in an HP sentence is “Lisa gick till biblioteket för att låna en bok” (“Lisa went to the library to borrow a book”). An example of a final word in an LP sentence is “I förorten finns en fantastisk dal” (“In the suburb there is a fantastic valley”). The final word in each sentence was a monosyllabic noun.

In the present study, each participant received 11 LP and 11 HP sentences (a total of 22 sentences). Similar to Moradi et al. (2013, 2014), the gating started from the onset of the first phoneme of the target word. Because sentence context supports word identification, and based on the pilot data, we set the gate size at 16.67 ms to optimize temporal resolution. The average duration of each sentence was 3,030 ms. The minimum, average, and maximum duration for target words at the end of sentences was 547, 710, and 896 ms, respectively. The sentence gating task took around 10 to 15 min to complete.

Cognitive test

The reading span test was used to measure the working memory capacity of the participants. It required the retention and recall of words presented within simple sentences. Baddeley, Logie, Nimmo-Smith, and Brereton (1985) created a reading span test based on a technique devised by Daneman and Carpenter (1980), in which sentences are presented visually, word by word, on a computer screen. Rönnberg, Arlinger, Lyxell, and Kinnefors (1989) created a Swedish version of the reading span test, which comprises 54 sentences. We used a shorter version of the test (24 sentences rather than 54); this version has been used successfully in other studies (Moradi et al., 2013, 2014; Ng, Rudner, Lunner, Pedersen, & Rönnberg, 2013).

Performance in this test required two parallel actions: comprehension and retention. First, the subject had to interpret whether the sentences, shown on the middle of a computer screen, were sensible (semantically correct) or absurd (semantically incorrect). For example, “Pappan kramade dottern” (“The father hugged his daughter”) or “Räven skrev poesi” (“The fox wrote poetry”). The test began with two-sentence sets, which were followed by three-sentence sets, and so forth, up to five-sentence sets. Participants were asked to press the “L” key for semantically correct sentences or the “S” key for semantically incorrect sentences. Second, after the sentence set had been presented (post cueing), the computer instructed the participants to repeat either the first or the last words of each sentence in the current set by typing them in the correct serial order. The total number of correctly recalled words across all sentences in the test was the participant’s reading span score. The maximum score for the test was 24. The reading span test took approximately 15 min per participant to complete.

Word comprehension test

We employed “Ordförståelse B” (Word Comprehension B), a pen and paper multiple-choice test, to measure participants’ vocabulary comprehension. The test, which consists of 34 sentences, is part of the DLS™ (Swedish diagnostic tests of reading and writing, Järpsten, 2002). Each sentence is missing its last word (e.g., passive is the same as —–); sentences are followed by a list of four words. The participant’s task is to choose the word that corresponds to the meaning and context of the sentence. We used this test to ensure that the EHA and ENH groups did not differ in terms of their word comprehension ability. The scores are computed by counting the correctly completed words (scores range from 0 to 34). This test took around 10 min to complete.

Both the working memory capacity and word comprehension tests were administered visually in the present study, to avoid any effect that hearing problems might have had on the EHA users’ performance had the tests been administered auditorily.

Procedure

A female speaker with standard Swedish dialect read all of the items with normal intonation at a normal speaking rate in a quiet studio. Each speech stimulus (consonant, word, or sentence) was recorded several times, and we selected the speech stimulus with the most natural intonation and clearest enunciation. In addition, speech stimuli were matched for sound level intensity. The sampling rate of the recording was 48 kHz, and the bit depth was 16 bits.

In the next step, the onset and offset times of each recorded stimulus were marked (with Sound Studio 4 software) to segment the different types of stimuli. For each target, the onset time of the target was located as precisely as possible by inspecting the speech waveform (in Sound Studio 4) and using auditory feedback. Each segmented section was then verified and saved as a “.wav” file. The root mean square value was calculated for each speech stimulus, and the stimuli were then rescaled to equate amplitude levels across the speech stimuli.
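The level-equalization step can be illustrated as follows. This is a sketch under stated assumptions rather than the authors' procedure: the text says only that the root mean square (RMS) value was computed and the stimuli rescaled, so the function names, the choice of a common target RMS, and the toy sample values are all hypothetical. Samples are assumed to be floating-point amplitudes, and clipping is not handled.

```python
import math


def rms(samples):
    """Root mean square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def rescale_to_rms(samples, target_rms):
    """Apply one gain factor so that the signal has the target RMS."""
    current = rms(samples)
    if current == 0:
        raise ValueError("cannot rescale a silent signal")
    gain = target_rms / current
    return [s * gain for s in samples]


# Two toy "stimuli" with different levels (hypothetical values).
quiet = [0.10, -0.10, 0.10, -0.10]
loud = [0.50, -0.25, 0.25, -0.50]

TARGET_RMS = 0.2  # hypothetical common level
equalized = [rescale_to_rms(stimulus, TARGET_RMS) for stimulus in (quiet, loud)]
print([round(rms(stimulus), 3) for stimulus in equalized])
```

A single gain per stimulus changes its overall level without altering its waveform shape, which is why RMS matching is a common way to equate levels across recordings.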

Participants were tested individually in a sound booth for audiometry and the gating experiment. The gated speech signals were delivered via a MacBook Pro routed to the input of two loudspeakers (Genelec 8030A). The speakers were located within the sound booth, 70 cm in front of the participants, at 20° azimuth to the left and right of them. The MacBook Pro was outside the sound booth in front of the experimenter, enabling the experimenter to control the stimulus presentation. MATLAB (R2010b) software was used to gate and present the stimuli (for the MATLAB script used to gate the speech stimuli, see Lidestam, 2014). A microphone (in the sound booth, routed into the audiometry equipment) transferred the responses of participants to the experimenter via a headphone connected to the audiometry equipment. The experimenter recorded the participant’s verbal responses. The gated stimuli were presented at 65 dB sound pressure level (SPL), calibrated in the vicinity of the participant’s head with a sound level meter in free field (Larson-Davis, System 824).

The first session started with pure-tone hearing threshold (125–8000 Hz) measurements (Interacoustics AC40). For the gated speech tasks, the participants first read written instructions about how to identify the different types of speech stimuli under the gating paradigm. Before any gated task was administered, the participants underwent a training session to become familiar with the gated presentation of stimuli and to perform trial runs. In the trial runs, participants practiced three consonants (/k ŋ v/) and two words (/bil [car]/ and /tum [inch]/). The experimenter presented the trial items and asked the participants which consonant (or word) came to mind after each presentation (i.e., first gate, second gate, third gate, and so on). If the response was wrong (which is common at the initial presentation of gated tasks), the experimenter said no and presented the next gate (for instance, after gate 3, the experimenter presented gate 4). If the participant correctly identified a trial item, the experimenter said yes and presented further gates to familiarize the participant with the gating paradigm (see Figure 1). This feedback was provided during the practice but not during the experiment.

After the practice, the actual gating tasks started. All participants began with the consonant identification task, followed by the words task, and ended with the final words in sentences task. The order of items within each gated task type (i.e., consonants, words, and final words in sentences) varied among the participants.

The scoring of IPs in the present study was similar to that described by Moradi et al. (2013, 2014). To be sure that the participants were not randomly guessing the target speech signal, we never stopped the presentation of gates after the first correct response. Instead, we continued the presentation until the listener responded correctly on six consecutive gates. If the participant repeated the correct response on six consecutive gates, we scored the item as correct. The IP in this case was the first gate at which the participant gave the correct response. When a target was not correctly identified, its entire duration plus one gate size was taken as the IP for that item (this scoring method accords with previous studies utilizing the gating paradigm; Elliott, Hammer, & Evan, 1987; Hardison, 2005; Metsala, 1997; Moradi et al., 2013, 2014; Walley, Michela, & Wood, 1995).
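The IP scoring rule can be sketched in code. This is one reading of the rule, not the authors' scoring script: the function name, its parameters, and the `onset_ms` offset (for tasks in which gating starts after the beginning of the stimulus) are hypothetical. The sketch assumes that the IP is the first gate of the first run of six consecutive correct responses, and that an item without such a run counts as not identified; the text does not say how items with fewer than six remaining gates were handled.

```python
def isolation_point_ms(responses, target, gate_ms, total_ms,
                       onset_ms=0.0, run_length=6):
    """Score one gated item.

    responses: the response given after each gate, in presentation order.
    Returns the IP in ms, or total_ms + gate_ms if the target was never
    held for run_length consecutive gates (the penalty rule in the text).
    """
    correct = [response == target for response in responses]
    for start in range(len(correct) - run_length + 1):
        if all(correct[start:start + run_length]):
            # Gates are numbered from 1, so gate (start + 1) is the IP.
            return onset_ms + (start + 1) * gate_ms
    return total_ms + gate_ms


# A correct guess at gate 2 is abandoned at gate 3, so it does not count;
# the stable run of correct responses begins at gate 4.
responses = ["t", "d", "t", "d", "d", "d", "d", "d", "d"]
ip = isolation_point_ms(responses, target="d", gate_ms=16.67, total_ms=198)
missed = isolation_point_ms(["t"] * 12, target="d", gate_ms=16.67, total_ms=198)
print(round(ip, 2), round(missed, 2))
```

Requiring a sustained run, rather than accepting the first correct response, is what separates identification from a lucky guess under this scoring scheme.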

Each participant underwent audiometry and performed the gated tasks in the first session. The first session took 60 to 70 min to complete and included short rest periods to prevent fatigue. In the second session, the reading span and word comprehension tests were administered. The second session took around 25 min to complete.

Results

Table 3 shows the mean ages, years of formal education, word comprehension test results, and reading span test results for the EHA and ENH groups. There were no significant between-group differences in terms of word comprehension and reading span test scores. In addition, Tables 4 and 5 show the mean IPs and accuracy for the auditory gated tasks, respectively, for the EHA and ENH groups.

Table 3.

Means, Standard Deviations, and Significance Levels for EHA Users’ and ENH Individuals’ Age, Years of Formal Education, and Word Comprehension Test and Reading Span Test Results.

| Parameter | EHA M (SD) | ENH M (SD) | Inferential statistics EHA vs. ENH (df = 46) |
|---|---|---|---|
| Age (years) | 72.42 (3.27) | 71.46 (3.12) | t = 1.04, p = .304 |
| Years of formal education | 13.04 (3.03) | 13.46 (2.64) | t = −0.51, p = .614 |
| Word comprehension test | 32.45 (1.22) | 32.83 (1.01) | t = −1.16, p = .250 |
| Reading span test | 12.04 (1.88) | 12.21 (1.89) | t = −0.31, p = .760 |

Note. EHA = elderly hearing aid; ENH = elderly normal hearing.

Table 4.

Descriptive and Inferential Statistics for IPs of Consonants, Words, and Final Words in HP and LP Sentences for EHA Users and ENH Individuals.

| Type of gated task | EHA M (SD) | ENH M (SD) | Inferential statistics, ENH vs. EHA (df = 46) |
|---|---|---|---|
| Consonants (ms) | 145.28 (27.02) | 117.46 (18.02) | t = 3.99, p < .001, d = 1.24 |
| Words (ms) | 560.34 (34.20) | 502.01 (31.32) | t = 6.11, p < .001, d = 1.78 |
| Final words in LP (ms) | 140.40 (23.59) | 122.22 (19.73) | t = 2.90, p = .006, d = .84 |
| Final words in HP (ms) | 20.20 (3.46) | 20.25 (2.84) | t = −0.06, p = .953 |

Note. EHA = elderly hearing aid; ENH = elderly normal hearing; IP = isolation point; LP = less predictable; HP = highly predictable.

Table 5.

Descriptive and Inferential Statistics for Accuracy of Consonants, Words, and Final Words in HP and LP Sentences for EHA Users and ENH Individuals.

| Type of gated task | EHA M (SD) | ENH M (SD) | Inferential statistics, ENH vs. EHA (df = 46) |
|---|---|---|---|
| Consonants (%) | 80.32 (11.70) | 94.68 (6.45) | t = 5.27, p < .001, d = 1.58 |
| Words (%) | 84.76 (8.69) | 98.73 (2.39) | t = 7.60, p < .001, d = 2.52 |
| Final words in LP (%) | 96.60 (4.15) | 98.62 (3.18) | t = 1.89, p = .065, d = .55 |
| Final words in HP (%) | 100.00 (0.00) | 100.00 (0.00) | – |

Note. EHA = elderly hearing aid; ENH = elderly normal hearing; LP = less predictable; HP = highly predictable.

Group Comparison of IPs

Consonants

A 2 (hearing impairment [EHA, ENH]) × 18 (consonants) mixed analysis of variance (ANOVA) with repeated measures on the second factor was conducted to examine the effect of hearing impairment on the IPs for consonant identification. The results demonstrated a main effect of hearing impairment, F(1, 46) = 17.61, p < .001, ηp² = .28, and a main effect of consonants, F(6.68, 307.16) = 73.92, p < .001, ηp² = .62, with an interaction between hearing impairment and consonants, F(6.68, 307.16) = 2.62, p = .014, ηp² = .05. Tests of simple effects of consonants on hearing impairment showed that the effect of consonants was significant for both the EHA group, F(7.12, 307.16) = 45.86, p < .001, ηp² = .73, and the ENH group, F(4.15, 307.16) = 44.52, p < .001, ηp² = .62.
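The effect size reported after each p value in the omnibus tests above appears to be partial eta squared, which can be recovered from an F statistic and its degrees of freedom as F × df_effect / (F × df_effect + df_error). The sketch below checks this for the main effects and the interaction; the function name is hypothetical, and identifying the statistic as partial eta squared is an inference from the reported numbers, not something the text states.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its df."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error) taken from the consonant IP analysis.
reported = [
    ("hearing impairment", 17.61, 1, 46),
    ("consonants", 73.92, 6.68, 307.16),
    ("interaction", 2.62, 6.68, 307.16),
]

effect_sizes = {label: round(partial_eta_squared(f, df1, df2), 2)
                for label, f, df1, df2 in reported}
print(effect_sizes)
```

The fractional degrees of freedom indicate a sphericity correction, which rescales both df terms and therefore leaves this ratio interpretable in the same way.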

Words

The mean IP of the EHA group (M = 560.34, SD = 34.20 ms) was greater than that of the ENH group (M = 502.01, SD = 31.32 ms) for word identification, t(46) = 6.16, p < .001, d = 1.76.

Final words in sentences

A 2 (hearing impairment [EHA, ENH]) × 2 (sentence predictability [high vs. low]) mixed ANOVA with repeated measures on the second factor was conducted to examine the effect of hearing impairment on the IP of final words in sentences. The results showed a main effect of hearing impairment, F(1, 46) = 7.28, p = .01, ηp² = .14, and a main effect of sentence predictability, F(1, 46) = 1398.01, p < .001, ηp² = .97, with an interaction between hearing impairment and sentence predictability, F(1, 46) = 9.42, p = .004, ηp² = .17. Tests of simple effects showed that the effect of sentence predictability was significant in both the EHA group, F(1, 23) = 639.98, p < .001, ηp² = .94, and the ENH group, F(1, 23) = 460.54, p < .001, ηp² = .92.

Subsequent one-tailed t-tests conducted as planned comparisons indicated delayed identification of the final words in LP sentences for the EHA group compared with the ENH group, t(46) = 2.90, p = .006, d = .84. However, there were no between-group differences in the IPs for final words in HP sentences, t(46) = 0.06, p = .953.
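The LP comparison can be reproduced from the summary statistics in Table 4. The sketch below assumes a pooled-variance (Student) t-test and Cohen's d based on the pooled standard deviation; the text does not state which variants were used, so this is an illustration that happens to match the reported LP values, and the function name is hypothetical.

```python
import math


def pooled_t_and_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Independent-samples t (pooled variance) and Cohen's d from summaries."""
    pooled_var = (((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2)
                  / (n_1 + n_2 - 2))
    pooled_sd = math.sqrt(pooled_var)
    standard_error = pooled_sd * math.sqrt(1 / n_1 + 1 / n_2)
    t_value = (mean_1 - mean_2) / standard_error
    cohens_d = (mean_1 - mean_2) / pooled_sd
    return t_value, cohens_d


# IPs for final words in LP sentences (ms): EHA vs. ENH, 24 per group.
t_value, cohens_d = pooled_t_and_d(140.40, 23.59, 24, 122.22, 19.73, 24)
print(f"t(46) = {t_value:.2f}, d = {cohens_d:.2f}")
```

With equal group sizes the pooled variance is simply the mean of the two group variances, and the degrees of freedom are 24 + 24 − 2 = 46, as reported.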

Group Comparison of Accuracy

Consonants

A 2 (hearing impairment [EHA, ENH]) × 18 (consonants) mixed ANOVA with repeated measures on the second factor was conducted to examine the effect of hearing impairment on the accuracy of consonant identification. The results showed a main effect of hearing impairment, F(1, 46) = 27.72, p < .001, ηp² = .38, and a main effect of consonants, F(17, 782) = 3.06, p < .001, ηp² = .06. However, the interaction between hearing impairment and consonants was not significant, F(17, 782) = .30, p = .99.

Words

The EHA group had lower accuracy (M = 84.76%, SD = 8.69%) than the ENH group (M = 98.73%, SD = 2.39%) for word identification, t(46) = 7.60, p < .001, d = 2.37.

Final words in sentences

A 2 (hearing impairment [EHA, ENH]) × 2 (sentence predictability [high vs. low]) mixed ANOVA with repeated measures on the second factor was conducted to examine the effect of hearing impairment on the accuracy of final word identification in sentences. No significant main effect for hearing impairment was obtained, F(1, 46) = 3.58, p = .065, ηp² = .07. However, the main effect for sentence predictability was significant, F(1, 46) = 20.04, p < .001, ηp² = .30. There was no significant interaction effect, F(1, 46) = 3.58, p = .065.

Correlation Between Gated Task Performance and Reading Span Test Results

Tables 6 and 7 show the data for the Pearson correlations between the IPs and accuracy for each gated task and the reading span test results, for the EHA group (Tables 8 and 9 show the data for the ENH group). In the EHA group only, better performance in the reading span test was associated with earlier and more accurate identification of consonants and words.

Table 6.

Correlation Matrix for IPs of Gated Speech Tasks and the Reading Span Test Among EHA Users.

| | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1. Consonants | | .61** | .17 | −.07 | −.61** |
| 2. Words | | | −.04 | −.19 | −.67** |
| 3. Final words in LP sentences | | | | .39 | −.07 |
| 4. Final words in HP sentences | | | | | .27 |
| 5. Reading span test | | | | | |

Note. EHA = elderly hearing aid; IP = isolation point; LP = less predictable; HP = highly predictable.

**p < .01.

Table 7.

Correlation Matrix for Accuracy of Gated Speech Tasks and the Reading Span Test Among EHA Users.

| | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1. Consonants | | .57** | .24 | – | .53** |
| 2. Words | | | .14 | – | .82** |
| 3. Final words in LP sentences | | | | – | .14 |
| 4. Final words in HP sentences | | | | | – |
| 5. Reading span test | | | | | |

Note. EHA = elderly hearing aid; LP = less predictable; HP = highly predictable. Accuracy in final word identification in HP sentences was 100%; hence, there were no correlations to report for this variable.

**p < .01.

Table 8.

Correlation Matrix for IPs of Gated Speech Tasks and the Reading Span Test Among ENH Individuals.

| | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1. Consonants | | .63** | .14 | .07 | −.29 |
| 2. Words | | | −.15 | −.12 | −.38 |
| 3. Final words in LP sentences | | | | .49* | −.04 |
| 4. Final words in HP sentences | | | | | .17 |
| 5. Reading span test | | | | | |

Note. ENH = elderly normal hearing; IP = isolation point; LP = less predictable; HP = highly predictable.

*p < .05. **p < .01.

Table 9.

Correlation Matrix for Accuracy of Gated Speech Tasks and the Reading Span Test Among ENH Individuals.

| | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1. Consonants | | .22 | −.37 | – | −.06 |
| 2. Words | | | .01 | – | −.27 |
| 3. Final words in LP sentences | | | | – | −.08 |
| 4. Final words in HP sentences | | | | | – |
| 5. Reading span test | | | | | |

Note. ENH = elderly normal hearing; LP = less predictable; HP = highly predictable. Accuracy in final word identification in HP sentences was 100%; hence, there were no correlations to report for this variable.

Discussion

The results of the present study showed that the EHA group required larger initial segments of the speech signals for the correct identification of consonants, words, and final words in LP but not in HP sentences. In addition, if there was no prior supportive semantic context, performance accuracy was significantly reduced in the EHA group compared with the ENH group. In the absence of a semantic context, the EHA group seemed to rely on working memory to aid the identification of speech stimuli, as suggested by the correlation patterns.

Consonants

EHA users generally needed longer IPs and had lower accuracy for consonant identification compared with ENH individuals. The effect of hearing impairment was evident in the IPs: EHA users needed a larger portion of the auditory signal than ENH individuals. The identification of a given phoneme is based on the relationship between acoustic (perceptual) cues of a signal occurring within a specific duration of time (cf. Rosen, 1992). Any factor that changes the relationship between the acoustic cues (e.g., external noise) will distort the speech signal. In the present study, the results for the EHA group indicate that hearing aids failed to efficiently restore the relationship between the acoustic cues to deliver a clear speech signal into the central auditory system; this is clearly illustrated by the accuracy results (80% for the EHA group vs. 95% for the ENH group).

One possible account of this inferior and cognitively demanding consonant identification in EHA users comes from Eckert, Cute, Vaden, Kuchinsky, and Dubno (2012), who reported that age-related hearing loss is associated with structural changes in the superior temporal gyrus (STG). Mesgarani, Cheung, Johnson, and Chang (2014) revealed that some areas in the STG are responsible for the analysis and encoding of distinctive acoustic features that are important for differentiating phonemes from each other. In sum, it seems that poor sensory coding (caused by age-related hearing loss) both impairs performance directly and, in the longer term, produces changes in the STG in the EHA group, which probably contributed to their inferior consonant identification relative to the ENH group.

Another explanation may be related to a deficit in “phonemic restoration” ability caused by hearing impairment (Baskent, Eiler, & Edwards, 2010); this ability is preserved in elderly individuals with normal hearing (Saija, Akyürek, Andringa, & Baskent, 2014). Phonemic restoration refers to a top-down perceptual process in which the brain creates a coherent speech percept from a degraded auditory signal (Warren, 1970).

Grossberg and Kazerounian (2011) developed a neural model for phonemic restoration and suggested that phonemic restoration is a conscious process that utilizes cognitive functions (i.e., attention) to create a successful perceptual restoration. In their model, there is an interaction between bottom-up signals, which are affected by noise, and prototypes (i.e., consonants). During phonemic restoration, a conscious matching process is utilized, wherein acoustic cues that match the prototypes are attended to while those that do not are ignored. The listener finally makes a decision about the nature of the degraded signal on the basis of this exchange process between the impoverished signal and the prototypes.

De Neys, Schaeken, and d’Ydewalle (2003) as well as Kane and Engle (2000) showed that performance that requires attentional and inference-making processes greatly depends on working memory capacity. Hence, it can be inferred that individuals with greater working memory capacity have more efficient phonemic restoration ability, which in turn results in faster and more accurate identification of consonants. The correlation matrices (Tables 6 and 7) revealed that those EHA users with greater working memory capacity had reduced IPs and better accuracy for consonant identification. Therefore, we suggest that EHA users with better working memory capacities also have better phonemic restoration abilities, which results in better performance for consonant identification. This notion is in line with neuroimaging studies, which show that presumed cognitive support networks in prefrontal brain areas are required in addition to predominantly auditory brain areas to correctly identify ambiguous phonemes (Dehaene-Lambertz et al., 2005; Dufor, Serniclaes, Sprenger-Charolles, & Démonet, 2007).

Words

The EHA group had longer IPs (560 vs. 502 ms) and lower accuracy (85% vs. 99%) for word identification compared with the ENH group. Our findings are consistent with Dimitrijevic et al. (2004), who found that even when provided with hearing aids, elderly hearing-impaired individuals showed inferior word identification performance compared with ENH individuals.

Hearing-impaired individuals, even after compensating for audibility, demonstrate lower accuracy in identifying vowels (e.g., Charles, 2012) and consonants (e.g., Harkrider, Plyler, & Hedrick, 2009), which are the main constituents of words, compared with those with normal hearing. Therefore, more lexical candidates may be activated for EHA users than for ENH individuals. Hence, EHA users may require more of the input signal to drop these extra-activated lexical candidates and map incoming acoustic signals onto the corresponding phonological representations in the mental lexicon. This extra-activation process is illustrated by the fact that the EHA group had delayed IPs for word identification compared with the ENH group.
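The candidate-elimination argument can be illustrated with a toy sketch. It is not the authors' model: letters stand in for phonemes, and the three-word lexicon, the b/p confusion, and the function name `isolation_gate` are hypothetical choices made only for illustration. The sketch counts how many initial segments a listener needs before the target is the only remaining candidate, first with clear input and then when one segment is confusable with another.

```python
def isolation_gate(lexicon, target, confusions=None):
    """Return the number of initial segments after which only `target`
    remains compatible with the input, or None if it never becomes unique.

    `confusions` maps a segment to the set of segments it may be mistaken
    for (a hypothetical stand-in for degraded perception).
    """
    confusions = confusions or {}
    for n in range(1, len(target) + 1):
        # Each heard segment is compatible with itself and its confusables.
        heard = [{seg} | confusions.get(seg, set()) for seg in target[:n]]
        cohort = [
            word for word in lexicon
            if len(word) >= n and all(word[i] in heard[i] for i in range(n))
        ]
        if cohort == [target]:
            return n
    return None

# Hypothetical mini-lexicon; letters are used as proxies for phonemes.
LEXICON = ["bath", "bark", "patio"]

if __name__ == "__main__":
    clear = isolation_gate(LEXICON, "bath")
    degraded = isolation_gate(LEXICON, "bath", confusions={"b": {"p"}})
    print("clear input:", clear, "segments")
    print("b/p confusable:", degraded, "segments")
```

In this toy case, the confusable onset keeps an extra candidate ("patio") active for one more segment, so the target is isolated later, which mirrors the delayed IPs described above.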

In addition, the accuracy rate for word identification was lower in the EHA group than in the ENH group. Because EHA users demonstrate lower accuracy for consonants and vowels, we suggest that the misperception of a given consonant (or vowel) can lead to word misidentification by activating incorrect lexical candidates in the mental lexicon. By retrieving more of the signal, the EHA users may realize their mistake and guess the correct word (as revealed by relatively late IPs for correct responses), or they may not realize their error and proceed with an incorrect response (as illustrated by poor accuracy results).
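The two outcomes described above (late correction vs. a persistent error) can be made concrete with a small scoring sketch. It assumes a common gating convention in which the IP is the earliest gate from which the response is correct and is not changed afterward; the exact scoring rule, the gate duration `gate_ms`, and the example responses are assumptions for illustration, not details taken from this section.

```python
def isolation_point(responses, target, gate_ms):
    """Return the IP in ms, or None if the final response is incorrect.

    `responses` holds one response per gate, in presentation order.
    The IP is the first gate from which every later response is correct.
    """
    ip_gate = None
    for gate, response in enumerate(responses, start=1):
        if response == target:
            if ip_gate is None:
                ip_gate = gate      # start of a run of correct responses
        else:
            ip_gate = None          # an error resets the run
    return None if ip_gate is None else ip_gate * gate_ms

def accuracy(trials, gate_ms):
    """Proportion of (responses, target) trials that end correctly."""
    scored = [isolation_point(r, t, gate_ms) for r, t in trials]
    return sum(ip is not None for ip in scored) / len(scored)

if __name__ == "__main__":
    GATE_MS = 50  # hypothetical gate duration
    corrected_late = (["pat", "pat", "bat", "bat"], "bat")
    never_corrected = (["pat", "pat", "pat", "pat"], "bat")
    print(isolation_point(*corrected_late, GATE_MS))
    print(isolation_point(*never_corrected, GATE_MS))
    print(accuracy([corrected_late, never_corrected], GATE_MS))
```

A listener who corrects an initial misperception contributes a late IP to the mean, whereas one who never corrects it contributes an error to the accuracy score and no IP at all.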

Recent studies have demonstrated the adverse effects of hearing impairment on lexical retrieval (Classon, Löfkvist, Rudner, & Rönnberg, 2013; Rönnberg et al., 2011) and on the brain areas involved in word recognition (Boyen, Langers, de Kleine, & van Dijk, 2013; Eckert et al., 2012; Husain et al., 2011; Peelle et al., 2011). In fact, EHA users have difficulties not only in receiving lexical signals (bottom-up processing) but also in obtaining lexical feedback (or top-down lexical guidance) for mapping incoming signals onto corresponding phonological representations in the mental lexicon. Consequently, the process of matching a signal with the corresponding lexical representation becomes effortful (or cognitively demanding) for EHA users compared with ENH individuals (cf. the Ease of Language Understanding [ELU] model, Rönnberg et al., 2013; Rönnberg, Rudner, Foo, & Lunner, 2008). In turn, those EHA users with greater working memory capacity can identify words with shorter IPs and with greater accuracy than those with lower working memory capacity.

Final Words in Sentences

The EHA group had longer IPs than the ENH group for the identification of final words in LP sentences (140 ms vs. 122 ms, respectively), even though accuracy scores were at or near ceiling for both groups (97% for the EHA group vs. 99% for the ENH group). In addition, there were no significant between-group differences in the IPs and accuracy for final word identification in HP sentences.

The limited prior context provided in LP sentences activates a set of lexical candidates that are matched to the content of the sentences. Listeners need to hear a specific amount of the target signal to drop the other candidates so that only one suitable lexical candidate is left. Because EHA users hear signals with less clarity than ENH individuals, we suggest that the misperception of initial segments of target words in an LP sentence likely activates more lexical candidates (that match the content of the sentence) in EHA users compared with ENH individuals. The EHA group required more of the target signal to drop these extra-activated candidates and to identify the final words in LP sentences (compared with the ENH group), as illustrated by the IP results. According to our results, there was no difference between the two groups in the accuracy of final words identified in LP sentences. This indicates that the EHA group was just as capable of correctly identifying the target words in LP sentences as the ENH group but with delayed IPs.

In HP sentences, the prior context most likely activates only one, or very few, lexical candidates that match the meaning of the sentence. This will reduce listeners’ dependency on hearing the target signal. Support for this notion comes from gating paradigm studies (e.g., Moradi et al., 2014), which revealed that the mean IPs for target words in HP sentences were located between the first and second gates during the presentation of target words. Similarly, the mean IPs for the final words in HP sentences, for both the EHA and ENH groups, in the present study were between the first and second gates. This indicates that an HP semantic context has an immediate effect on final word identification, eliminating the dependency on the target words for both the EHA and the ENH groups.

In summary, our findings corroborate prior studies that have suggested that continuously increasing semantic support eliminates the adverse effects of hearing impairment on final word identification in sentences (Gordon-Salant & Fitzgibbons, 1997; Lash, Rogers, Zoller, & Wingfield, 2013).

In contrast to the identification of consonants and words, the identification of final words in LP and HP sentences was not correlated with the working memory capacities of the EHA group. This indicates that prior semantic context reduces the cognitive demands required for processing final words in sentences. We suggest that EHA users benefit from the availability of semantic context, exploiting it to identify target signals without the necessity of explicit cognitive resources (e.g., working memory) to aid in the disambiguation of target signals.

The facilitating effect of semantic context on reducing cognitive demands for processing speech stimuli in young and normal-hearing listeners was demonstrated by Moradi et al. (2014). In that study, under noisy conditions, the IPs for final words in HP and LP sentences were not correlated with cognitive capacities. Wingfield, Alexander, and Cavigelli (1994) reported that such a facilitating effect was dependent on the cognitive capacities of the participants. In their study, older adults showed an increased benefit from semantic context only when the semantic context preceded the target words, while younger subjects benefited from semantic context provided either before or after the target words. Wingfield et al. hypothesized that this effect was caused by the difficulty that older listeners have in maintaining prospective semantic context in their working memories. The semantic context in our study preceded target words; therefore, the processing of target words was not dependent on the working memory capacities of the EHA users.

One limitation of the present study is that we measured gated speech perception under aided conditions only. For future studies, we suggest that researchers investigate the gated speech perception ability of hearing-impaired individuals under both aided and unaided conditions, to estimate the benefit provided by the hearing aids. Another limitation of the present study is that the settings of the hearing aids (i.e., noise reduction) may have caused artifacts due to front-end processing in the identification of speech stimuli, particularly consonants. The potential impact of front-end processing on the identification of speech stimuli, particularly in silence, needs further investigation by adding, for instance, an aided condition with linear amplification.

Conclusion

Our results suggest that the provision of hearing aids does not restore speech perception abilities to the level of ENH individuals when supportive semantic context is lacking. In such cases, speech perception becomes cognitively demanding for EHA users, and those with better working memory capacity demonstrate superior performance in the identification of speech stimuli.

Acknowledgments

The authors thank Amin Saremi for his technical support; Katarina Marjanovic for speaking the recorded stimuli; Elisabet Classon for providing the word comprehension test; and Stefan Stenfelt, Wycliffe Yumba, Emmelie Nordqvist, Carine Signoret, and Helena Torlofson for their assistance during this study. Furthermore, the authors thank two anonymous reviewers for their valuable comments.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was supported by a grant from the Swedish Research Council (349-2007-8654).

References

- Arlinger S., Dryselius H. (1990) Speech recognition in noise, temporal and spectral resolution in normal and impaired hearing. Acta Otolaryngologica Supplementum 469: 30–37.

- Baddeley A. D., Logie R., Nimmo-Smith I., Brereton R. (1985) Components of fluent reading. Journal of Memory and Language 24: 119–131.

- Baskent D., Eiler C. L., Edwards B. (2010) Phonemic restoration by hearing-impaired listeners with mild to moderate sensorineural hearing loss. Hearing Research 260: 54–62.

- Benson D., Clark T. M., Johnson J. S. (1992) Patient experiences with full dynamic range compression. Ear and Hearing 13: 320–330.

- Boyen K., Langers D. R. M., de Kleine E., van Dijk P. (2013) Gray matter in the brain: Differences associated with tinnitus and hearing loss. Hearing Research 295: 67–78.

- Campbell J., Sharma A. (2013) Compensatory changes in cortical resource allocation in adults with hearing loss. Frontiers in Systems Neuroscience 7: 71. doi:10.3389/fnsys.2013.00071.

- Charles L. (2012) Vowel perception in normal and hearing impaired listeners (University of Tennessee Honors Thesis Projects). Retrieved from http://trace.tennessee.edu/utk_chanhonoproj/1510.

- Classon E., Löfkvist U., Rudner M., Rönnberg J. (2013) Verbal fluency in adults with postlingually acquired hearing impairment. Speech, Language, and Hearing. Advance online publication. doi:10.1179/2050572813Y.0000000019.

- Daneman M., Carpenter P. A. (1980) Individual differences in working memory and reading. Journal of Verbal Learning and Verbal Behavior 19: 450–466.

- Dehaene-Lambertz G., Pallier C., Serniclaes W., Sprenger-Charolles L., Jobert A., Dehaene S. (2005) Neural correlates of switching from auditory to speech perception. NeuroImage 24: 21–33.

- De Neys W., Schaeken W., d’Ydewalle G. (2003) Working memory span and everyday conditional reasoning: A trend analysis. In: Alterman R., Kirsh D. (eds) Proceedings of the Twenty-Fifth Annual Conference of the Cognitive Science Society, Boston, MA: Erlbaum, pp. 312–317.

- Dimitrijevic A., John M. S., Picton T. W. (2004) Auditory steady-state responses and word recognition scores in normal-hearing and hearing-impaired adults. Ear and Hearing 25: 68–84.

- Dufor O., Serniclaes W., Sprenger-Charolles L., Démonet J.-F. (2007) Top-down processes during auditory phoneme categorization in dyslexia: A PET study. NeuroImage 34: 1692–1707.

- Eckert M. A., Cute S. L., Vaden K. I., Jr, Kuchinsky S. E., Dubno J. R. (2012) Auditory cortex signs of age-related hearing loss. Journal of the Association for Research in Otolaryngology 13: 703–713.

- Elliott L. L., Hammer M. A., Evan K. E. (1987) Perception of gated, highly familiar spoken monosyllabic nouns by children, teenagers, and older adults. Perception & Psychophysics 42: 150–157.

- Gordon-Salant S., Fitzgibbons P. J. (1997) Selected cognitive factors and speech recognition performance among young and elderly listeners. Journal of Speech, Language, and Hearing Research 40: 423–431.

- Grosjean F. (1980) Spoken word recognition processes and gating paradigm. Perception & Psychophysics 28: 267–283.

- Grossberg S., Kazerounian S. (2011) Laminar cortical dynamics of conscious speech perception: Neural model of phonemic restoration using subsequent context in noise. The Journal of the Acoustical Society of America 130: 440–460.

- Hardison D. M. (2005) Second-language spoken word identification: Effects of perceptual training, visual cues, and phonetic environment. Applied Psycholinguistics 26: 579–596.

- Harkrider A. W., Plyler P. N., Hedrick M. S. (2009) Effects of hearing loss and spectral shaping on identification and neural response patterns of stop-consonant stimuli in young adults. Ear and Hearing 30: 31–42.

- Humes L. E. (2002) Factors underlying the speech-recognition performance of elderly hearing-aid wearers. The Journal of the Acoustical Society of America 112: 1112–1132.

- Humes L. E., Kidd G. R., Lentz J. J. (2013) Auditory and cognitive factors underlying individual differences in aided speech-understanding among older adults. Frontiers in Systems Neuroscience 7: 55. doi:10.3389/fnsys.2013.00055.

- Husain F. T., Medina R. E., Davis C. W., Szymko-Bennett Y., Simonyan K., Pajor N. M., Horwitz B. (2011) Neuroanatomical changes due to hearing loss and chronic tinnitus: A combined VBM and DTT study. Brain Research 1369: 74–88.

- Järpsten B. (2002) DLS™ handledning, Stockholm, Sweden: Hogrefe Psykologiförlaget AB.

- Kane M. J., Engle R. W. (2000) Working memory capacity, proactive interference, and divided attention: Limits on long-term memory retrieval. Journal of Experimental Psychology: Learning, Memory, and Cognition 26: 336–358.

- Lash A., Rogers C. S., Zoller A., Wingfield A. (2013) Expectation and entropy in spoken word recognition: Effects of age and hearing acuity. Experimental Aging Research 39: 235–253.

- Lidestam B. (2014) Audiovisual presentation of video-recorded stimuli at a high frame rate. Behavior Research Methods 46: 499–516.

- Lunner T., Rudner M., Rönnberg J. (2009) Cognition and hearing aids. Scandinavian Journal of Psychology 50: 395–403.

- Mesgarani N., Cheung C., Johnson K., Chang E. F. (2014) Phonetic feature encoding in human superior temporal gyrus. Science 343: 1006–1010.

- Metsala J. L. (1997) An examination of word frequency and neighborhood density in the development of spoken-word recognition. Memory & Cognition 25: 47–56.

- Moradi S., Lidestam B., Rönnberg J. (2013) Gated audiovisual speech identification in silence vs. noise: Effects on time and accuracy. Frontiers in Psychology 4: 359. doi:10.3389/fpsyg.2013.00359.

- Moradi S., Lidestam B., Saremi A., Rönnberg J. (2014) Gated auditory speech perception: Effects of listening conditions and cognitive capacity. Frontiers in Psychology 5: 531. doi:10.3389/fpsyg.2014.00531.

- Ng E. H. N., Rudner M., Lunner T., Pedersen M. S., Rönnberg J. (2013) Effects of noise and working memory capacity on memory processing of speech for hearing-aid users. International Journal of Audiology 52: 433–441.

- Peelle J. E., Troiani V., Grossman M., Wingfield A. (2011) Hearing loss in older adults affects neural systems supporting speech comprehension. The Journal of Neuroscience 31: 12638–12643.

- Rönnberg J., Arlinger S., Lyxell B., Kinnefors C. (1989) Visual evoked potentials: Relation to adult speechreading and cognitive functions. Journal of Speech, Language, and Hearing Research 32: 725–735.

- Rönnberg J., Danielsson H., Rudner M., Arlinger S., Sternang O., Wahlin Å., Nilsson L.-G. (2011) Hearing loss is negatively related to episodic and semantic long-term memory but not to short-term memory. Journal of Speech, Language, and Hearing Research 54: 705–726.

- Rönnberg J., Lunner T., Zekveld A., Sörqvist P., Danielsson H., Lyxell B., … Rudner M. (2013) The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Frontiers in Systems Neuroscience 7: 31. doi:10.3389/fnsys.2013.00031.

- Rönnberg J., Rudner M., Foo C., Lunner T. (2008) Cognition counts: A working memory system for ease of language understanding (ELU). International Journal of Audiology 47(Suppl. 2): S99–S105.

- Rosen S. (1992) Temporal information in speech: Acoustic, auditory and linguistic aspects. Philosophical Transactions of the Royal Society B Biological Sciences 336: 367–373.

- Rudner M., Foo C., Sundewall-Thorén E., Lunner T., Rönnberg J. (2008) Phonological mismatch and explicit cognitive processing in a sample of 102 hearing-aid users. International Journal of Audiology 47(Suppl. 2): S91–S98.

- Rudner M., Lunner T. (2013) Cognitive spare capacity as a window on hearing aid benefit. Seminars in Hearing 34: 298–307.

- Rudner M., Rönnberg J., Lunner T. (2011) Working memory supports listening in noise for persons with hearing impairments. Journal of the American Academy of Audiology 22: 156–167.

- Saija J. D., Akyürek E. G., Andringa T. C., Baskent D. (2014) Perceptual restoration of degraded speech is preserved with advancing age. Journal of the Association for Research in Otolaryngology 15: 139–148.

- Sawusch J. R. (1977) Processing of place information in stop consonants. Perception & Psychophysics 22: 417–426.

- Smits R. (2000) Temporal distribution of information for human consonant recognition in VCV utterances. Journal of Phonetics 27: 111–135.

- Tyler R. S., Baker L. J., Armstrong-Bendall G. (1983) Difficulties experienced by hearing-aid candidates and hearing-aid users. British Journal of Audiology 17: 191–201.

- Walley A. C., Michela V. L., Wood D. R. (1995) The gating paradigm: Effects of presentation format on spoken word recognition by children and adults. Attention, Perception, & Psychophysics 57: 343–351.

- Warren R. M. (1970) Perceptual restoration of missing speech sounds. Science 167: 392–393.

- Wingfield A., Alexander A. H., Cavigelli S. (1994) Does memory constrain utilization of top-down information in spoken word recognition? Evidence from normal aging. Language and Speech 37: 221–235.