Abstract

This paper presents an econometric mediation analysis. It considers identification of production functions and the sources of output effects (treatment effects) from experimental interventions when some inputs are mismeasured and others are entirely omitted.

JEL Code: D24, C21, C43, C38

Keywords: Production Function, Mediation Analysis, Measurement Error, Missing Inputs

1 Introduction

William Barnett is a pioneer in the development and application of index theory and productivity accounting analyses. He has also pioneered the estimation of production functions. This paper follows in the tradition of Barnett’s work. It develops an econometric mediation analysis to explain the sources of experimental treatment effects. It considers how to use experiments to identify production functions in the presence of unmeasured and mismeasured inputs. The goal of the analysis is to determine the causes of effects: sources of the treatment effects properly attributable to experimental variation in measured inputs.

Social experiments usually proceed by giving a vector of inputs to the treatment group and withholding it from the control group. Analysts of social experiments report a variety of treatment effects. Our goal is to go beyond the estimation of treatment effects and examine the mechanisms through which experiments generate these effects. Thus we seek to use experiments to estimate the production functions producing treatment effects. This exercise is called mediation analysis in the statistics literature (Imai et al., 2011, 2010; Pearl, 2011). Such analyses have been used for decades in economics (Klein and Goldberger, 1955; Theil, 1958) and trace back to the work on path analysis by Sewall Wright (1921; 1934).

We provide an economically motivated interpretation of treatment effects. Treatment may affect outcomes through changing inputs. Treatment may also affect outcomes through shifting the map between inputs and outputs for treatment group members. When there are unmeasured (by the analyst) inputs, empirically distinguishing these two cases becomes problematic. We present a framework for making this distinction in the presence of unmeasured inputs and when the measured inputs are measured with error.

A fundamental problem of mediation analysis is that even though we might observe experimental variation in some inputs and outputs, the relationship between inputs and outputs might be confounded by unobserved variables. There may exist relevant unmeasured inputs changed by the experiment that impact outputs. If unmeasured inputs are not statistically independent of measured ones, then the observed empirical relation between measured inputs and outputs might be due to the confounding effect of experimentally induced changes in unmeasured inputs. In this case, treatment effects on outputs can be wrongly attributed to the enhancement of measured inputs instead of to experimentally induced increases in unmeasured inputs.

Randomized Controlled Trials (RCTs) generate independent variation of treatment, which allows the analyst to identify the causal effect of treatment on measured inputs and outputs. Nevertheless, RCTs unaided by additional assumptions do not allow the analyst to identify the causal effect of increases in measured inputs on outputs. Nor do they allow the analyst to distinguish treatment effects arising from changes in production functions induced by the experiment from those arising from changes in unmeasured inputs when there is a common production function for treatments and controls.

This paper examines these confounding effects in mediation analysis. We demonstrate how econometric methods can be used to address them. We show how experimental variation can be used to increase the degree of confidence in the validity of the exogeneity assumptions needed to make valid causal statements. In particular, we show that we can test some of the strong assumptions implicitly invoked to infer causal effects in statistical mediation analyses. We analyze the invariance of our estimates of the sources of treatment effects to changes in measurement schemes.

The paper is organized in the following fashion. Section 2 discusses the previous literature and defines the mediation problem as currently framed in the statistics literature. Section 3 presents a mediation analysis within a linear framework with both omitted and mismeasured inputs. Section 4 discusses identification. Section 5 presents an estimation method. Section 6.1 discusses an invariance property when input measures are subject to affine transformations. Section 6.2 discusses further invariance results for general monotonic transformations of measures and for nonlinear technologies. Section 7 concludes.

2 Assumptions in Statistical Mediation Analysis

The goal of mediation analysis as framed in the literature in statistics is to disentangle the average treatment effect on outputs that operates through two channels: (1) Indirect output effects arising from the effect of treatment on measured inputs and (2) Direct output effects that operate through channels other than changes in the measured inputs. The mediation literature often ignores the point that Direct Effects are subject to some ambiguity: they can arise from inputs changed by the experiment that are not observed by the analyst, but can also arise from changes in the map between inputs and the outputs.

To clarify ideas it is useful to introduce some general notation. Let D denote treatment assignment. D = 1, if an agent is treated and D = 0 otherwise. Let Y1 and Y0 be counterfactual outputs when D is fixed at “1” and “0” respectively. By fixing, we mean an independent manipulation where treatment status is set at d. The distinction between fixing and conditioning traces back to Haavelmo (1943). For recent discussions see Pearl (2001, 2011) and Heckman and Pinto (2012). We use the subscript d ∈ {0, 1} to represent variables when treatment is fixed at d. In this notation Yd represents output Y when treatment status is fixed at d and the realized output is given by

$$Y = D\,Y_1 + (1 - D)\,Y_0. \tag{1}$$

In our notation, the average treatment effect between treatment and control groups is given by

$$ATE = E(Y_1 - Y_0). \tag{2}$$

We define a vector of inputs when treatment is fixed at d by $\theta_d = (\theta_d^j : j \in \mathcal{J})$, where 𝒥 is an index set for inputs. We define the vector of realized inputs by θ in a fashion analogous to Y : θ = Dθ1 + (1 − D)θ0. While output Y is assumed to be observed, we allow for some inputs to be unobserved. Notationally, let 𝒥p ⊆ 𝒥 be the index set of proxied inputs, that is, inputs for which we have observed measurements. We represent the vector of proxied inputs by $\theta_d^p = (\theta_d^j : j \in \mathcal{J}_p)$. We allow for the possibility that observed measurements may be imperfect proxies of measured inputs so that measured inputs may not be observed directly. We denote the remaining inputs indexed by 𝒥\𝒥p as unmeasured inputs, which are represented by $\theta_d^u = (\theta_d^j : j \in \mathcal{J} \setminus \mathcal{J}_p)$.

We postulate that the output Y is generated through a production function whose arguments are both measured and unmeasured inputs in addition to an auxiliary set of baseline variables X. Variables in X may affect output Y in either treatment state but are assumed not to be caused by treatment D. The production function for each treatment regime is

$$Y_d = f_d(\theta_d^p, \theta_d^u, X), \quad d \in \{0, 1\}. \tag{3}$$

Equation (3) states that output Yd under treatment regime D = d is generated by $(\theta_d^p, \theta_d^u, X)$ according to function fd, d ∈ {0, 1}. If f1 = f0, functions (f1, f0) are said to be invariant across treatment regimes. Invariance means that the relationship between inputs and output is determined by a stable mechanism defined by a deterministic function unaffected by treatment.

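From Equations (2) and (3), the average treatment effect or ATE is given by:

$$ATE = E\big(f_1(\theta_1^p, \theta_1^u, X) - f_0(\theta_0^p, \theta_0^u, X)\big).$$

Expectations are computed with respect to all inputs. Treatment effects operate through the impact of treatment D on inputs $(\theta_d^p, \theta_d^u)$, d ∈ {0, 1}, and also by changing the map between inputs and the outcome, namely, fd(·); d ∈ {0, 1}. Observed output is given by $Y = D\,f_1(\theta_1^p, \theta_1^u, X) + (1 - D)\,f_0(\theta_0^p, \theta_0^u, X)$.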

We are now equipped to define mediation effects. Let $Y_{d,\bar{\theta}^p}$ represent the counterfactual output when treatment status D is fixed at d and proxied inputs are fixed at some value $\bar{\theta}^p$. From production function (3),

$$Y_{d,\bar{\theta}^p} = f_d(\bar{\theta}^p, \theta_d^u, X), \quad d \in \{0, 1\}. \tag{4}$$

Note that the subscript d of $Y_{d,\bar{\theta}^p}$ reflects the selection of the production function fd(·), the choice of d, and changes in unmeasured inputs $\theta_d^u$. Moreover, conditional on X and fixing $\bar{\theta}^p$, the source of variation of $Y_{d,\bar{\theta}^p}$ is attributable to unmeasured inputs $\theta_d^u$. Keeping X implicit, use $Y_d(\theta_{d'}^p)$ to represent the value output would take fixing D to d and simultaneously fixing measured inputs to be $\theta_{d'}^p$.

In the mediation literature, ATE is called the total treatment effect. It is often decomposed into direct and indirect treatment effects. The indirect effect (IE) is the effect of changes in the distribution of proxied inputs (from $\theta_0^p$ to $\theta_1^p$) on mean outcomes while holding the technology fd and the distribution of unmeasured inputs $\theta_d^u$ fixed at treatment status d. Formally, the indirect effect is

$$IE(d) = E\big(f_d(\theta_1^p, \theta_d^u, X) - f_d(\theta_0^p, \theta_d^u, X)\big).$$

Here expectations are taken with respect to the inputs and X. One definition of the direct effect (DE) is the average effect of treatment holding measured inputs fixed at the level appropriate to treatment status d but allowing technologies and associated distributions of unobservables to change with treatment regime:

$$DE(d) = E\big(f_1(\theta_d^p, \theta_1^u, X) - f_0(\theta_d^p, \theta_0^u, X)\big). \tag{5}$$

Robins (2003) terms these the pure direct and indirect effects, while Pearl (2001) calls them the natural direct and indirect effects.

We can further decompose the direct effect of Equation (5) into portions associated with the change in the distribution of $\theta_d^u$; d ∈ {0, 1} and the change in the map between inputs and outputs fd(·); d ∈ {0, 1}. Define

$$DE'(d, d') = E\big(f_1(\theta_d^p, \theta_{d'}^u, X) - f_0(\theta_d^p, \theta_{d'}^u, X)\big). \tag{6}$$

DE′(d, d′) is the treatment effect mediated by changes in the map between inputs and outputs when fixing the distribution of measured inputs at $\theta_d^p$, and unmeasured inputs at $\theta_{d'}^u$ for d, d′ ∈ {0, 1}. Define

$$DE''(d, d') = E\big(f_{d'}(\theta_d^p, \theta_1^u, X) - f_{d'}(\theta_d^p, \theta_0^u, X)\big). \tag{7}$$

DE″(d, d′) is the treatment effect mediated by changes in unmeasured inputs from $\theta_0^u$ to $\theta_1^u$ while setting the production function at fd′(·), where measured inputs are fixed at $\theta_d^p$ for d, d′ ∈ {0, 1}.

The definition of direct effect in Equation (5) is implicit in the mediation literature. Definitions (6)–(7) are logically coherent. Direct effects (5) can be written alternatively as:

$$DE(d) = DE'(d, 1) + DE''(d, 0) = DE''(d, 1) + DE'(d, 0).$$

The source of the direct treatment effect is often ignored in the statistical literature. It can arise from changes in unobserved inputs induced by the experiment (from $\theta_0^u$ to $\theta_1^u$). It could also arise from an empowerment effect, i.e., treatment modifies the technology that maps inputs into outputs (from f0 to f1). The change in technology may arise from new inputs never previously available such as parenting information as studied by Cunha (2012) and Heckman et al. (2012). If both measured and unmeasured inputs were known (including any new inputs never previously available to the agent), then the causal relationship between inputs and outputs could be estimated. Using the production function for each treatment state, one could decompose treatment effects into components associated with changes in either measured or unmeasured inputs. Since unmeasured inputs are not observed, the estimated relationship between measured inputs and outputs may be confounded with changes in unmeasured inputs induced by the experiment.
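The direct and indirect effects defined above can be checked numerically. The following is a minimal sketch, not the authors' procedure: the data-generating process, the functional form of `f`, and all names (`theta_p`, `theta_u`, `mean_f`, `DE1`, `DE2`) are hypothetical choices made for illustration. It simulates both potential input vectors for every unit, evaluates IE, DE, DE′ and DE″ as sample means, and confirms that the direct effect splits into a technology part and an unmeasured-input part, and that the total effect splits into direct plus indirect effects.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000

# Hypothetical potential inputs under d = 0 and d = 1 (illustrative values only).
x = rng.normal(size=n)
theta_p = {0: 1.0 + 0.5 * x + rng.normal(size=n)}
theta_p[1] = theta_p[0] + 0.8            # treatment shifts measured inputs
theta_u = {0: 0.3 * theta_p[0] + rng.normal(size=n)}
theta_u[1] = theta_u[0] + 0.4            # treatment shifts unmeasured inputs


def f(d, tp, tu, x):
    """Hypothetical production function f_d; the technology depends on d."""
    return (1.0 + 0.5 * d) * tp + 2.0 * tu + 0.3 * x + 0.2 * d


def mean_f(d, dp, du):
    """Sample analogue of E f_d(theta^p_{dp}, theta^u_{du}, X)."""
    return f(d, theta_p[dp], theta_u[du], x).mean()


def IE(d):
    return mean_f(d, 1, d) - mean_f(d, 0, d)


def DE(d):
    return mean_f(1, d, 1) - mean_f(0, d, 0)


def DE1(d, dprime):   # DE': change in technology only
    return mean_f(1, d, dprime) - mean_f(0, d, dprime)


def DE2(d, dprime):   # DE'': change in unmeasured inputs only
    return mean_f(dprime, d, 1) - mean_f(dprime, d, 0)


ate = mean_f(1, 1, 1) - mean_f(0, 0, 0)
print("ATE          ", ate)
print("DE(1) + IE(0)", DE(1) + IE(0))
print("DE(0) + IE(1)", DE(0) + IE(1))
print("DE(0) vs DE'(0,1) + DE''(0,0):", DE(0), DE1(0, 1) + DE2(0, 0))
```

The identities hold exactly in the simulation because every term is a mean over the same simulated units. With real data only one treatment state is observed per unit, which is why the assumptions discussed next are needed.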

In this framework, under the definition of a direct effect (Equation 5), we can decompose the total treatment effect into the direct and indirect effect as follows:

$$ATE = IE(1) + DE(0) = IE(0) + DE(1).$$

The literature on mediation analysis deals with the problem of confounding effects of unobserved inputs and the potential technology changes by invoking different assumptions. We now examine those assumptions.

The standard literature on mediation analysis in psychology regresses outputs on mediator inputs (Baron and Kenny, 1986). The assumptions required to give these regressions a causal interpretation are usually not explicitly stated. This approach often adopts the strong assumption of no variation in unmeasured inputs conditional on the treatment. Under this assumption, measured and unmeasured inputs are statistically independent. Moreover, the effect of unmeasured inputs is fully summarized by a dummy variable for treatment status. In addition, this literature assumes full invariance of the production function, that is, f1(·) = f0(·). Under these assumptions, function (3) reduces to

$$Y_d = f(\theta_d^p, d, X), \tag{8}$$

which can readily be identified and estimated. A similar framework is also used in Pearl (2001).

Imai et al. (2011, 2010) present a different analysis and invoke two conditions in their Sequential Ignorability Assumption. Their approach does not explicitly account for unobserved inputs. They invoke statistical relationships that can be interpreted as a double randomization, i.e., they assume that both treatment status and measured inputs are randomized. More specifically, their approach assumes independence of both treatment status D and measured inputs $\theta_d^p$ with respect to $Y_{d,\bar{\theta}^p}$ conditional on covariates X.

Assumption A-1. Sequential Ignorability (Imai et al., 2011, 2010)

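$$\big(Y_{d',\bar{\theta}^p},\ \theta_d^p\big) \perp\!\!\!\perp D \mid X \tag{i}$$

$$Y_{d',\bar{\theta}^p} \perp\!\!\!\perp \theta_d^p \mid D, X \tag{ii}$$

$$\Pr(D = d \mid X) > 0 \ \text{ and } \ \Pr(\theta_d^p = \bar{\theta}^p \mid D = d, X) > 0 \ \text{ for } d \in \{0, 1\} \text{ and all } \bar{\theta}^p \tag{iii}$$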

Condition (i) of Assumption (A-1) states that both counterfactual outputs and counterfactual measured inputs are independent of D conditional on pre-program variables. These statistical relationships are generated by an RCT that randomly assigns treatment status D given X. Indeed, if treatment status D were randomly assigned by a randomization protocol that conditions on pre-program variables X, then Yd ⫫ D|X (see e.g. Heckman et al. (2010) for a discussion). But proxied and unmeasured inputs are also outcomes in an RCT, and therefore $(\theta_d^p, \theta_d^u) \perp\!\!\!\perp D \mid X$. Condition (i) of Assumption (A-1) is invoked to eliminate the dependence arising from the fact that for fixed X the source of variation of $Y_{d,\bar{\theta}^p}$ is $\theta_d^u$.

Condition (ii) declares that counterfactual outcomes given d and $\bar{\theta}^p$ are independent of measured inputs given the observed treatment status and the pre-program variables X. In other words, input $\theta_d^p$ is statistically independent of potential outputs when treatment is fixed at D = d and measured inputs are fixed at $\bar{\theta}^p$, conditional on treatment assignment D and the same pre-program characteristics X. The same randomization rationale used to interpret Condition (i) can be applied to Condition (ii). Thus Condition (ii) can be understood as if a second RCT were implemented for each treatment group such that measured inputs are randomized through a randomization protocol conditional on pre-program variables X and treatment status D. This randomization is equivalent to assuming that $\theta_d^u \perp\!\!\!\perp \theta_{d'}^p \mid (D, X)$ for all d and d′. Condition (iii) is a support condition that allows the estimation of treatment effects conditioned on the values X takes. Even though the Imai et al. (2010) and Imai et al. (2011) approach is weaker than the Pearl (2001) solution, which is based on lack of variation of unobserved inputs, their assumptions are nonetheless still quite strong.

Imai et al. (2010) show that under Assumption A-1, the direct and indirect effects are given by:

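$$DE(d) = \int\!\!\int \big[E(Y \mid \theta^p = t, D = 1, X = x) - E(Y \mid \theta^p = t, D = 0, X = x)\big]\, dF_{\theta^p \mid D = d, X = x}(t)\, dF_X(x) \tag{9}$$

$$IE(d) = \int\!\!\int E(Y \mid \theta^p = t, D = d, X = x)\, \big[dF_{\theta^p \mid D = 1, X = x}(t) - dF_{\theta^p \mid D = 0, X = x}(t)\big]\, dF_X(x) \tag{10}$$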

Pearl (2011) uses the term Mediation Formulas for Equations (9)–(10). Like Imai et al. (2010), Pearl (2011) invokes the assumption of exogeneity on mediators conditioned on variables X to generate these equations.

Identification of the direct and indirect effects under the strong implicit assumption A-1 translates to an assumption of no confounding effects on both treatment and measured inputs. This assumption does not follow from a randomized assignment of treatment. Randomized trials ensure independence between treatment status and counterfactual inputs/outputs, namely Yd ⫫ D|X and $\theta_d^p \perp\!\!\!\perp D \mid X$. Thus RCTs identify treatment effects for proxied inputs and for outputs. However, random treatment assignment does not imply independent variation between proxied inputs $\theta_d^p$ and unmeasured inputs $\theta_d^u$. In particular, it does not guarantee independence between counterfactual outputs $Y_{d,\bar{\theta}^p}$, which are generated in part by $\theta_d^u$, and measured inputs $\theta_d^p$ as assumed in Condition (ii) of Assumption A-1.
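The point that randomizing treatment does not randomize inputs can be illustrated with a small simulation. This is a hedged sketch under an assumed linear data-generating process: the coefficients `alpha_p` and `alpha_u`, the shared shock `common`, and the helper `ols` are illustrative assumptions, not objects from the paper. Treatment is randomized, so its effects on the measured input and on output are recovered by differences in means, but the least squares coefficient on the measured input absorbs part of the effect of the correlated unmeasured input.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200_000
alpha_p, alpha_u = 1.0, 2.0                      # assumed true input effects

d = rng.integers(0, 2, size=n)                   # randomized treatment
common = rng.normal(size=n)                      # shared source of input variation
theta_p = 0.5 * d + common + rng.normal(size=n)  # measured input
theta_u = 0.5 * d + common + rng.normal(size=n)  # unmeasured, correlated with theta_p
y = alpha_p * theta_p + alpha_u * theta_u + rng.normal(size=n)


def ols(y, *cols):
    """Least squares coefficients with an intercept in position 0."""
    X = np.column_stack([np.ones(len(y)), *cols])
    return np.linalg.lstsq(X, y, rcond=None)[0]


# Randomization identifies treatment effects on the measured input and on output.
effect_on_input = theta_p[d == 1].mean() - theta_p[d == 0].mean()
effect_on_output = y[d == 1].mean() - y[d == 0].mean()

# Regressing Y on D and the measured input does not recover alpha_p.
coef = ols(y, d, theta_p)
print("effect of D on theta_p:", effect_on_input)
print("effect of D on Y:      ", effect_on_output)
print("OLS coefficient on theta_p:", coef[2], "versus assumed alpha_p =", alpha_p)
```

In this design the population coefficient on the measured input is alpha_p + alpha_u · Cov(θp, θu | D) / Var(θp | D) = 1 + 2 · (1/2) = 2, so half of the estimated input effect is attributable to the unmeasured input.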

2.1 Mediation Analysis under RCT

It is useful to clarify the strong causal relationships implied by Condition (ii) of Assumption A-1 in light of a mediation model based on an RCT. We first define a standard confounding model arising from uncontrolled pre-program unobserved variables. We then introduce a general RCT model and establish the benefits of RCTs in comparison with models that rely on standard matching assumptions. Next, we define a general mediation model with explicitly formulated measured and unmeasured inputs and examine the causal relationships of this model that are implied by Condition (ii) of Assumption A-1. We show that the assumptions made in Assumption A-1 are stronger than standard assumptions invoked in matching.

A standard confounding model can be represented by three variables: (1) An output of interest Y ; (2) A treatment indicator D that causes the output of interest. As before, we use D = 1 for treated and D = 0 for untreated; (3) An unobserved variable V that causes both D and Y. A major difference between unobserved variable V and unobserved input $\theta_d^u$ is that V is not caused by treatment D while we allow $\theta_d^u$ to be determined by treatment. Thus, $V_1 \overset{d}{=} V_0$, where $\overset{d}{=}$ means equal in distribution. We discuss the relationship between unobserved variable $\theta_d^u$ and V in presenting our mediation model.

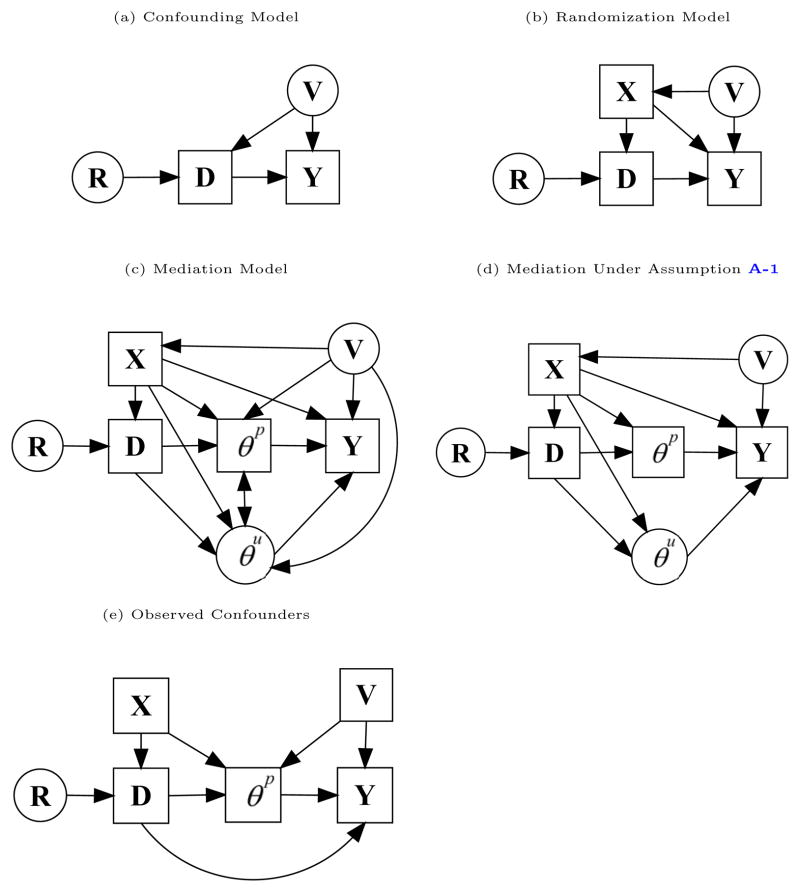

Model (a) of Figure 1 represents the standard confounding model as a Directed Acyclic Graph (DAG).¹ In this model, (Y1, Y0) ⫫ D does not hold due to confounding effects of unobserved variables V. As a consequence, the observed empirical relationship between output Y and treatment D is not causal and ATE cannot be evaluated by the conditional difference in means between treated and untreated subjects, i.e., E(Y |D = 1) − E(Y |D = 0). Nevertheless, if V were observed, ATE could be identified from $\int \big[E(Y \mid D = 1, V = v) - E(Y \mid D = 0, V = v)\big]\, dF_V(v)$ as (Y1, Y0) ⫫ D|V holds.

Figure 1. Mechanisms of Causality for Treatment Effects

Notes: This chart represents five causal models as directed acyclic graphs. Arrows represent causal relationships. Circles represent unobserved variables. Squares represent observed variables. Y is an output of interest. V are unobserved variables. D is the treatment variable. X are pre-program variables. R is the random device used in RCT models to assign treatment status. θp are measured inputs. θu are unmeasured inputs. Both θp and θu play the role of mediation variables. Figure (a) shows a standard confounding model. Figure (b) shows a general randomized trial model. Figure (c) shows a general mediation model where unobserved variables V cause mediation variables (θp, θu). Figure (d) shows the causal relationships of a mediation model that are allowed to exist for Assumption A-1 to hold. Figure (e) shows the mediation model presented in Pearl (2001).

The literature on matching (Rosenbaum and Rubin, 1983) solves the problem of confounders by assumption. It postulates that a set of observed pre-program variables, say X, spans the space generated by unobserved variables V, although it offers no guidance on how to select this set. Thus it assumes that observed pre-program variables X can be found such that (Y1, Y0) ⫫ D|X holds. In this case, ATE can be computed by

$$ATE = \int \big[E(Y \mid D = 1, X = x) - E(Y \mid D = 0, X = x)\big]\, dF_X(x).$$

For a review of matching assumptions and their limitations see Heckman and Navarro (2004) and Heckman and Vytlacil (2007).

Randomized controlled trials solve the problem of confounders by design. A standard RCT model for confounders can be represented by five variables: (1) An output of interest Y ; (2) A treatment indicator D that causes the output of interest and is generated by a random device R and variables X used in the randomization protocol; (3) Pre-program variables X used in the randomization protocol; (4) A random device R that assigns treatment status; (5) An unobserved variable V that causes both X and Y. Model (b) in Figure 1 represents the RCT model as a DAG.

In the RCT model, potential confounding effects of unobserved variables V are eliminated by observed variables X. ATE can be identified by

$$ATE = \int \big[E(Y \mid D = 1, X = x) - E(Y \mid D = 0, X = x)\big]\, dF_X(x).$$

While (Y1, Y0) ⫫ D|X holds in both matching and RCT models, it holds by assumption in matching models and by design in RCT models.
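As an illustration of identifying ATE by conditioning on X, the sketch below simulates a randomization protocol whose assignment probability depends on a discrete pre-program variable. The design (three values of X, the assignment probabilities, and the outcome equations) is entirely hypothetical. The raw difference in means is contaminated by the dependence of D on X, while averaging within-cell differences over the distribution of X recovers the ATE.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 400_000

x = rng.integers(0, 3, size=n)                 # discrete pre-program variable
p_treat = np.array([0.2, 0.5, 0.8])[x]         # protocol: P(D = 1 | X) varies with X
d = (rng.random(n) < p_treat).astype(int)      # random device assigns treatment

y0 = 1.0 * x + rng.normal(size=n)              # assumed control outcome
y1 = y0 + 1.0 + 0.5 * x                        # assumed treated outcome
y = d * y1 + (1 - d) * y0                      # realized output
true_ate = 1.0 + 0.5 * 1.0                     # E[X] = 1 in this design

# Unconditional contrast: not causal, because D depends on X.
naive = y[d == 1].mean() - y[d == 0].mean()

# Within-cell contrasts averaged over the distribution of X.
adjusted = 0.0
for v in np.unique(x):
    cell = x == v
    diff = y[cell & (d == 1)].mean() - y[cell & (d == 0)].mean()
    adjusted += diff * cell.mean()

print("true ATE:", true_ate, " naive:", naive, " adjusted:", adjusted)
```

The same computation applies under matching; the difference is that here (Y1, Y0) ⫫ D|X holds by construction of the simulated protocol rather than by assumption.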

We now examine mediation analysis under the assumption that treatment status is generated by an RCT. To this end, we explicitly add measured and unmeasured inputs (θp, θu) to our RCT framework depicted in Model (b) of Figure 1. Inputs mediate treatment effects, i.e. inputs are caused by D and cause Y. Moreover, we also allow pre-program variables X to cause mediators θp, θu. The most general mediation model is described by the following relationships: (R1) mediators θp, θu are caused by unobserved variable V ; and (R2) measured inputs can cause unmeasured ones and vice-versa. Model (c) of Figure 1 represents this mediation model for an RCT as a DAG.

A production function representation that rationalizes the mediation model is

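$$Y_d = f_d(\theta_d^p, \theta_d^u, V, X), \quad d \in \{0, 1\}. \tag{11}$$

Equation (11) differs from Equation (3) by explicitly introducing pre-program unobserved variables V. $Y_{d,\bar{\theta}^p}$ is now defined as:

$$Y_{d,\bar{\theta}^p} = f_d(\bar{\theta}^p, \theta_d^u, V, X). \tag{12}$$

It is the variation in $\theta_d^u$, V, and X that generates randomness in outcome Yd, fixing θ̄p.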

We gain further insight into Assumption A-1 by examining it in light of the mediation model. The mediation model is constructed under the assumption that treatment status is generated by an RCT. Therefore Condition (i) of Assumption A-1 holds. However, randomization does not generate Condition (ii) of Assumption A-1. If either R1 or R2 occurs, measured and unmeasured inputs will not be independent conditioned on observed variables (D, X). As a consequence, $Y_{d',\bar{\theta}^p} \not\perp\!\!\!\perp \theta_d^p \mid D, X$. Model (d) of Figure 1 represents a mediation model in which Assumption A-1 holds, but neither R1 nor R2 occurs.

Condition (ii) is stronger than the conditions invoked in conventional matching analyses. Indeed, if V is assumed to be observed (a matching assumption), then relationship R1 reduces to a causal relationship among observed variables. Nevertheless, the matching assumption does not rule out R2. Relationship R2 would not apply if we adopt the strong assumption that unmeasured inputs have no variation conditional on the treatment.² The no-variation assumption assures that measured and unmeasured mediators are statistically independent conditional on D. This model is represented as a DAG in Model (e) of Figure 1. Pearl (2001) shows why Condition (ii) will not hold for Model (e) of Figure 1. However, the direct effect (Equation 5) can be computed by Condition (ii):

A general solution to the mediation problem is outside the scope of this paper. Instead we use a linear model to investigate how experimental variation coupled with additional econometric exogeneity assumptions can produce a credible mediation analysis for the case where some inputs are unobserved (but may be changed by the experiment) and proxied variables θp are measured with error. Our analysis is based on the production function defined in Equation (3). We assume that the map between inputs and output Yd is given by a linear function. We then show how multiple measures on inputs and certain assumptions about the exogeneity of inputs allow us to test for invariance, i.e. whether f1(·) is equal to f0(·). Alternatively, invoking invariance, we show how to test the hypothesis that increments in measured inputs are statistically independent of increments in unmeasured inputs.

3 A Linear Model for Mediation Analysis

We focus on examining a linear model for the production function of output in sector d. The benefit of the linear model stems from its parsimony in parameters, which facilitates reliable estimation in small samples. Non-linear or non-parametric procedures require large samples often not available in RCTs. We write:

$$Y_d = \kappa_d + \alpha_d \theta_d + \beta_d X + \tilde{\varepsilon}_d, \quad d \in \{0, 1\}, \tag{13}$$

where κd is an intercept, αd and βd are, respectively, |𝒥|-dimensional and |X|-dimensional vectors of parameters where |Q| denotes the number of elements in Q. Pre-program variables X are assumed not to be affected by the treatment, but their effect on Y can be affected by the treatment. ε̃d is a zero-mean error term assumed to be independent of regressors θd and X.

Technology (13) is compatible with a Cobb-Douglas model using linearity in logs. Thus an alternative to (13) is

| (14) |

| (15) |

We discuss the estimation of θd in Section 3.1. There, we also adopt a linear specification for the measurement system that links unobserved inputs θ with measurements M. The Cobb-Douglas specification can be applied to the linear measurement system by adopting a linear-in-logs specification in the same fashion as used in outcome equations (14)–(15).
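To make the linear-in-logs idea concrete, the following sketch writes a Cobb-Douglas technology and its logarithm. It is an illustration under assumptions: strictly positive output, inputs and covariates, a scale constant $A_d$ introduced here only for exposition, and a multiplicative error. It is not claimed to reproduce the exact form of Equations (14)–(15).

```latex
\begin{align*}
Y_d &= A_d \Big(\prod_{j \in \mathcal{J}} (\theta_d^j)^{\alpha_d^j}\Big)
           \Big(\prod_{k} X_k^{\beta_d^k}\Big) e^{\tilde{\varepsilon}_d}, \\
\log Y_d &= \kappa_d + \sum_{j \in \mathcal{J}} \alpha_d^j \log \theta_d^j
           + \sum_{k} \beta_d^k \log X_k + \tilde{\varepsilon}_d,
           \qquad \kappa_d = \log A_d .
\end{align*}
```

The second line has the same structure as the linear technology (13), with output, inputs and covariates replaced by their logarithms, so the analysis of the linear model carries over to the logged variables.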

Analysts of experiments often collect an array of measures of the inputs. However, it is very likely that there are relevant inputs not measured. We decompose the term αdθd in equation (13) into components due to inputs that are measured and inputs that are not:

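$$Y_d = \kappa_d + \sum_{j \in \mathcal{J}_p} \alpha_d^j \theta_d^j + \sum_{j \in \mathcal{J} \setminus \mathcal{J}_p} \alpha_d^j \theta_d^j + \beta_d X + \tilde{\varepsilon}_d = \tau_d + \sum_{j \in \mathcal{J}_p} \alpha_d^j \theta_d^j + \beta_d X + \varepsilon_d, \tag{16}$$

where d ∈ {0, 1}, $\tau_d = \kappa_d + \sum_{j \in \mathcal{J} \setminus \mathcal{J}_p} \alpha_d^j E(\theta_d^j)$, and εd is a zero-mean error term defined by $\varepsilon_d = \tilde{\varepsilon}_d + \sum_{j \in \mathcal{J} \setminus \mathcal{J}_p} \alpha_d^j \big(\theta_d^j - E(\theta_d^j)\big)$. Any differences in the error terms between treatment and control groups can be attributed to differences in the inputs on which we have no measurements. Without loss of generality we assume that $\tilde{\varepsilon}_1 \overset{d}{=} \tilde{\varepsilon}_0$, where $\overset{d}{=}$ means equality in distribution. Note that the error term εd is correlated with the measured inputs if measured inputs are correlated with unmeasured inputs.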

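We seek to decompose treatment effects into components attributable to changes in the inputs that we can measure. Assuming that changes in unmeasured inputs attributable to the experiment are independent of X, treatment effects can be decomposed into components due to changes in inputs E(Δθj) and components due to changes in parameters $\Delta\alpha^j = \alpha_1^j - \alpha_0^j$:

$$E(Y_1 - Y_0 \mid X) = (\tau_1 - \tau_0) + \sum_{j \in \mathcal{J}_p} \big(\alpha_1^j E(\theta_1^j) - \alpha_0^j E(\theta_0^j)\big) + (\beta_1 - \beta_0) X = (\tau_1 - \tau_0) + \sum_{j \in \mathcal{J}_p} \Big((\Delta\alpha^j + \alpha_0^j)\, E(\Delta\theta^j) + \Delta\alpha^j\, E(\theta_0^j)\Big) + (\beta_1 - \beta_0) X, \tag{17}$$

where $\Delta\theta^j = \theta_1^j - \theta_0^j$.

Equation (17) can be simplified if treatment affects inputs, but not the impact of inputs and background variables on outcomes, i.e. $\alpha_1^j = \alpha_0^j$; j ∈ 𝒥p and $\beta_1 = \beta_0$.⁴ This says that all treatment effects are due to changes in inputs. Under this assumption, the term associated with X drops from the decomposition. Note that under this assumption there still may be a direct effect (Equation 5) but it arises from experimentally induced shifts in unmeasured inputs.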

If measured and unmeasured inputs are independent in the no-treatment outcome equation, α0 can be consistently estimated by standard methods. Under this assumption, we can test if the experimentally induced increments in unmeasured inputs are independent of the experimentally induced increments in measured inputs. This allows us to test a portion of Condition (ii) of Assumption A-1. The intuition for this test is as follows. The inputs for treated participants are the sum of the inputs they would have had if they were assigned to the control group plus the increment due to treatment. If measured and unmeasured input increments are independent, α1 is consistently estimated by standard methods and we can test H0 : plim α̂1 = plim α̂0 where (α̂1, α̂0) are least squares estimators of (α1, α0). Notice that even if α̂0 is not consistently estimated, the test of the independence of the increments from the base is generally valid. Assuming the exogeneity of X, we can also test if plim β̂1 = plim β̂0.

Note further that if we maintain that measured inputs are independent of unmeasured inputs for both treatment and control groups, we can test the hypothesis of autonomy H0 : α1 = α0. Thus there are two different ways to use the data from an experiment: (a) to test the independence of the increments given that unmeasured inputs are independent of measured inputs; or (b) to test H0 : α1 = α0 maintaining full independence.
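The logic of use (a) can be sketched in a simulation. Everything below is an illustrative assumption rather than the paper's estimator: a single measured input, a common technology with unit coefficients, baseline independence of measured and unmeasured inputs, homoskedastic standard errors, and the hypothetical helpers `simulate`, `slope_and_se` and `z_stat`. The z-statistic compares the least squares slopes in the treatment and control groups; it behaves like a standard normal draw when the treatment-induced increments are independent and becomes large when they are dependent.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 100_000


def simulate(dependent_increment):
    """One experiment with a common technology (alpha_1 = alpha_0 = 1)."""
    d = rng.integers(0, 2, size=n)
    base_p = rng.normal(size=n)                  # control-state measured input
    base_u = rng.normal(size=n)                  # control-state unmeasured input
    inc_p = 0.5 + 0.5 * rng.normal(size=n)       # increment in measured input
    noise = 0.5 * rng.normal(size=n)
    # Increment in the unmeasured input: tied to inc_p or independent of it.
    inc_u = 0.5 + ((inc_p - 0.5) if dependent_increment else noise)
    theta_p = base_p + d * inc_p
    theta_u = base_u + d * inc_u
    y = 1.0 * theta_p + 1.0 * theta_u + rng.normal(size=n)
    return d, theta_p, y


def slope_and_se(y, t):
    """OLS slope of y on t (with intercept) and its homoskedastic std. error."""
    X = np.column_stack([np.ones(len(y)), t])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (len(y) - 2)
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return beta[1], np.sqrt(cov[1, 1])


def z_stat(dependent_increment):
    """z-statistic for H0: plim alpha_hat_1 = plim alpha_hat_0."""
    d, t, y = simulate(dependent_increment)
    a1, s1 = slope_and_se(y[d == 1], t[d == 1])
    a0, s0 = slope_and_se(y[d == 0], t[d == 0])
    return (a1 - a0) / np.hypot(s1, s0)


z_indep = z_stat(False)
z_dep = z_stat(True)
print("z, independent increments:", z_indep)
print("z, dependent increments:  ", z_dep)
```

In the dependent design the treated-group slope converges to 1 + 0.25/1.25 = 1.2 rather than 1, so the test rejects even though the technology is identical across groups. This is the sense in which the comparison tests independence of the increments when invariance is maintained.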

Imposing autonomy simplifies the notation. Below we show conditions under which we can test for autonomy. Equation (16) can be expressed as

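$$Y_d = \tau_d + \sum_{j \in \mathcal{J}_p} \alpha^j \theta_d^j + \beta X + \varepsilon_d, \quad d \in \{0, 1\}. \tag{18}$$

In this notation, the observed outcome can be written as:

$$Y = D\,Y_1 + (1 - D)\,Y_0 = \tau_0 + \tau D + \sum_{j \in \mathcal{J}_p} \alpha^j \theta^j + \beta X + \varepsilon, \tag{19}$$

where τ = τ1 − τ0 is the contribution of unmeasured variables to mean treatment effects, ε = Dε1 + (1 − D)ε0 is a zero-mean error term, and $\theta^j = D\theta_1^j + (1 - D)\theta_0^j$, j ∈ 𝒥p denotes the inputs that we can measure.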

If the $\theta^j$, j ∈ 𝒥p are measured without error and are independent of the error term ε, least squares estimators of the parameters of equation (19) are unbiased for αj, j ∈ 𝒥p. If, on the other hand, the unmeasured inputs are correlated with both measured inputs and outputs, least squares estimators of αj, j ∈ 𝒥p, are biased and capture the effect of changes in the unmeasured inputs as they are projected onto the measured components of θ, in addition to the direct effects of changes in measured components of θ on Y.

The average treatment effect is

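$$E(Y_1 - Y_0) = (\tau_1 - \tau_0) + \sum_{j \in \mathcal{J}_p} \alpha^j E(\theta_1^j - \theta_0^j). \tag{20}$$

Input j can explain treatment effects only if it affects outcomes (αj ≠ 0) and, on average, is affected by the experiment ($E(\theta_1^j - \theta_0^j) \neq 0$). Using experimental data it is possible to test both conditions.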

Decomposition (20) would be straightforward to identify if the measured variables are independent of the unmeasured variables, and the measurements are accurate. The input term of Equation (20) is easily constructed by using consistent estimates of the αj and the effects of treatment on inputs. However, measurements of inputs are often riddled with measurement error. We next address this problem.
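In the benign case just described (accurately measured inputs that are independent of unmeasured ones), the decomposition can be computed directly. The sketch below is illustrative only: the two inputs, their coefficients, and the values of `tau0` and `tau` are assumptions, and the second input is deliberately given a zero coefficient to show that an input moved by treatment contributes nothing when it does not affect the outcome.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 200_000

alpha = np.array([1.0, 0.0])           # assumed: second input has no effect on Y
d = rng.integers(0, 2, size=n)         # randomized treatment
# Treatment raises both measured inputs, by 0.5 and 0.7 on average.
theta = rng.normal(size=(n, 2)) + d[:, None] * np.array([0.5, 0.7])
tau0, tau = 0.2, 0.3                   # tau: mean contribution of unmeasured inputs
y = tau0 + tau * d + theta @ alpha + rng.normal(size=n)

# Least squares on the observed-outcome equation: Y on (1, D, theta).
X = np.column_stack([np.ones(n), d, theta])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
tau_hat, alpha_hat = coef[1], coef[2:]

# Treatment effects on inputs and the implied input contributions.
delta_theta = theta[d == 1].mean(axis=0) - theta[d == 0].mean(axis=0)
input_share = alpha_hat * delta_theta
ate_hat = y[d == 1].mean() - y[d == 0].mean()

print("ATE estimate:                ", ate_hat)
print("contribution of each input:  ", input_share)
print("unmeasured-input component:  ", tau_hat)
```

Because the regression includes an intercept and the treatment dummy, the estimated components add up to the difference in mean outcomes exactly, mirroring decomposition (20).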

3.1 Addressing the Problem of Measurement Error

We assume access to multiple measures on each input. This situation arises in many studies related to the technology of human skill formation. For example, there are multiple psychological measures of the same underlying developmental trait (see, e.g., Cunha and Heckman (2008) and Cunha et al. (2010)). More formally, let the index set for measures associated with factor j ∈ 𝒥p be ℳj. Denote the measures for factor j by $M^j_{m^j,d}$, where mj ∈ ℳj, d ∈ {0, 1}. θd denotes the vector of factors associated with the inputs that can be measured in treatment state d, i.e., $\theta_d = (\theta_d^j : j \in \mathcal{J}_p)$, d ∈ {0, 1}.

We assume that each input measure is associated with at most one factor. The following equation describes the relationship between the measures associated with factor j and the factor:

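$$M^j_{m^j,d} = \nu^j_{m^j} + \varphi^j_{m^j} \theta_d^j + \eta^j_{m^j}, \quad j \in \mathcal{J}_p,\ m^j \in \mathcal{M}_j. \tag{21}$$

To simplify the notation, we keep the covariates X implicit. Parameters $\nu^j_{m^j}$ are measure-specific intercepts. Parameters $\varphi^j_{m^j}$ are factor loadings. The εd in (18) and the $\eta^j_{m^j}$ are mean-zero error terms assumed to be independent of θd; d ∈ {0, 1}, and of each other. The factor structure is characterized by the following equations:

$$E(\theta_d^j) = \mu_d^j, \quad j \in \mathcal{J}_p, \tag{22}$$

$$\operatorname{Var}(\theta_d) = \Sigma_{\theta_d}, \quad d \in \{0, 1\}. \tag{23}$$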

The assumption that the parameters $\nu^j_{m^j}$ and $\varphi^j_{m^j}$, mj ∈ ℳj, j ∈ 𝒥p, do not depend on d simplifies the notation, as well as the interpretation of the estimates obtained from our procedure. It implies that the effect of treatment on the measured inputs operates only through the latent inputs and not the measurement system for those inputs. However, these assumptions are not strictly required. They can be tested by estimating these parameters separately for treatment and control groups and checking if measurement equation factor loadings and measurement equation intercepts differ between treatment and control groups.

4 Identification

Identification of factor models requires normalizations that set the location and scale of the factors (e.g., Anderson and Rubin, 1956). We set the location of each factor by fixing the intercept of one measure (designated "the first") to zero, i.e. $\nu_1^j = 0$, j ∈ 𝒥p. This defines the location of factor j for each counterfactual condition. We set the scale of the factor by fixing the factor loading of the first measure of each factor to one, i.e. $\varphi_1^j = 1$, j ∈ 𝒥p. For all measures that are related to a factor (i.e. have a non-zero loading on the factor, $\varphi^j_{m^j} \neq 0$), the decomposition of treatment effects presented in this paper is invariant to the choice of which measure is designated as the "first measure" for each factor, provided that the normalizing measure has a non-zero loading on the input. The decompositions are also invariant to any affine transformations of the measures. Our procedure can be generalized to monotonic nonlinear transformations of the measures.

Identification is established in four steps. First, we identify the means of the factors, $\mu_d^j$. Second, we identify the measurement factor loadings $\varphi^j_{m^j}$, the variances of the measurement system, and the factor covariance structure Σθd. Third, we use the parameters identified from the first and second steps to secure identification of the measurement intercepts $\nu^j_{m^j}$. Finally, we use the parameters identified in the first three steps to identify the factor loadings α and intercept τd of the outcome equations. We discuss each of these steps.

1. Factor Means

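We identify the factor means for the treatment and control groups from the mean of the designated first measure. Because the intercept of the first measure is normalized to zero and its loading to one, the mean of the first measure of factor j equals the mean of factor j, for each j ∈ 𝒥p and d ∈ {0, 1}.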

2. Measurement Loadings

From the covariance structure of the measurement system, we can identify: (a) the factor loadings of the measurement system; (b) the variances of the measurement error terms; and (c) the factor covariance matrix, Σθd. Factors are allowed to be freely correlated. We need at least three measures for each input j ∈ 𝒥p, all with non-zero factor loadings. The factor loadings can depend on d ∈ {0, 1} and remain identified. Thus we can test whether the loadings are equal across treatment and control groups for each j ∈ 𝒥p, and do not have to impose autonomy on the measurement system.

3. Measurement Intercepts

From the means of the measurements, we identify the intercepts of all measures other than the first, mj ∈ ℳj\{1}, j ∈ 𝒥p. Recall that the factor loadings and factor means are identified. Assuming equality of the intercepts between treatment and control groups guarantees that treatment effects on the means of the measures operate solely through treatment effects on factor means. However, identification of our decomposition requires intercept equality only for the designated first measure of each factor. We can test intercept equality for all mj ∈ ℳj\{1}, j ∈ 𝒥p, and hence do not have to impose autonomy on the full measurement system.

4. Outcome Equation

Outcome factor loadings in equation (18) can be identified using the covariances between outcomes and the designated first measure of each input. We form the covariances of each outcome Yd with the designated first measure of each input j ∈ 𝒥p to obtain Cov(Yd, M1,d) = Σθdα, where α = (αj, j ∈ 𝒥p). By the previous argument, Σθd is identified. Thus α is identified whenever det(Σθd) ≠ 0. We do not have to impose autonomy or structural invariance. Outcome factor loadings α can depend on d ∈ {0, 1}, as they can be identified through Cov(Yd, M1,d) = Σθdαd, which can be formed separately for treatments and controls. We can therefore test whether the outcome factor loadings are equal across treatment and control groups for each j ∈ 𝒥p. Using E(Yd), we can identify τd because all of the other parameters of each outcome equation are identified.
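The covariance-based steps above can be checked numerically. The following is a minimal sketch, not the authors' code. It assumes a hypothetical system with two factors and three dedicated measures per factor, builds the population covariances that such a system implies, and then recovers the parameters using only covariance ratios and the first-measure normalization. All parameter values and the names `identify`, `loadings` and `err_var` are illustrative assumptions. Means are set aside, so only the second and fourth steps are exercised.

```python
import numpy as np

# Hypothetical parameter values, chosen only for illustration.
J, K = 2, 3                                   # two factors, three dedicated measures each
loadings = np.array([[1.0, 0.8, 1.3],         # first loading of each factor normalized to 1
                     [1.0, 0.6, 1.1]])
Sigma_theta = np.array([[1.0, 0.4],
                        [0.4, 2.0]])          # factors are freely correlated
err_var = np.array([[0.5, 0.7, 0.3],
                    [0.4, 0.6, 0.9]])         # measurement error variances
alpha = np.array([0.7, -0.2])                 # outcome factor loadings

# Dedicated loading matrix: measure (j, m) loads only on factor j.
Phi = np.zeros((J * K, J))
for j in range(J):
    Phi[j * K:(j + 1) * K, j] = loadings[j]

# Population moments implied by M = Phi theta + eta and Y = alpha' theta + eps.
cov_M = Phi @ Sigma_theta @ Phi.T + np.diag(err_var.ravel())
cov_MY = Phi @ Sigma_theta @ alpha


def identify(cov_M, cov_MY, J, K):
    """Recover model parameters from observable covariances only."""
    first = [j * K for j in range(J)]
    # Off-diagonal factor covariances come from pairs of first measures.
    Sigma_hat = cov_M[np.ix_(first, first)].copy()
    load_hat = np.ones((J, K))
    for j in range(J):
        i1, i2, i3 = j * K, j * K + 1, j * K + 2
        # Var(theta_j) from three measures of the same factor.
        Sigma_hat[j, j] = cov_M[i1, i2] * cov_M[i1, i3] / cov_M[i2, i3]
        for m in range(1, K):
            load_hat[j, m] = cov_M[i1, j * K + m] / Sigma_hat[j, j]
    # Error variances are what is left of each measure's variance.
    err_hat = np.diag(cov_M).reshape(J, K) - load_hat ** 2 * np.diag(Sigma_hat)[:, None]
    # Outcome loadings solve Cov(Y, M_1) = Sigma_theta alpha.
    alpha_hat = np.linalg.solve(Sigma_hat, cov_MY[first])
    return Sigma_hat, load_hat, err_hat, alpha_hat


Sigma_hat, load_hat, err_hat, alpha_hat = identify(cov_M, cov_MY, J, K)
print(alpha_hat)
```

With sample data, the population covariances would be replaced by their sample analogues computed separately for d = 0 and d = 1.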

5 Estimation Procedure

We can estimate the model using a simple three stage procedure. First, we estimate the measurement system. Second, from these equations we can estimate the skills for each participant. Third, we estimate the relationship between participant skills and outcomes. Proceeding in this fashion makes identification and estimation transparent.

Step 1

For a given set of dedicated measurements, and choice of the number of factors, we estimate the factor model using measurement system (21)–(23). There are several widely used procedures to determine the number of factors. Examples of these procedures are the scree test (Cattell, 1966), Onatski’s criterion (2009), and Horn’s (1965) parallel analysis test. In addition, the Guttman-Kaiser rule (Guttman, 1954, and Kaiser, 1960, 1961) is well known to overestimate the number of factors (see Zwick and Velicer, 1986, Gorsuch, 2003, and Thompson, 2004). We refer to Heckman et al. (2013) for a detailed discussion of the selection of number of factors.
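As an illustration of one of these procedures, the sketch below implements a common variant of parallel analysis. It is not taken from the paper. It retains the leading eigenvalues of the sample correlation matrix that exceed a benchmark obtained from pure-noise data of the same dimensions. The use of a 95th-percentile benchmark, the function name `parallel_analysis`, and the simulated data are assumptions of this sketch.

```python
import numpy as np


def parallel_analysis(X, n_sims=100, quantile=0.95, seed=0):
    """Count leading eigenvalues of corr(X) that exceed their random-data benchmark."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    # eigvalsh returns ascending eigenvalues; reverse to descending order.
    observed = np.linalg.eigvalsh(np.corrcoef(X, rowvar=False))[::-1]
    simulated = np.empty((n_sims, p))
    for s in range(n_sims):
        noise = rng.standard_normal((n, p))
        simulated[s] = np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False))[::-1]
    benchmark = np.quantile(simulated, quantile, axis=0)
    n_factors = 0
    for obs, ref in zip(observed, benchmark):
        if obs <= ref:
            break
        n_factors += 1
    return n_factors


# Hypothetical data: two independent factors, three dedicated measures each.
rng = np.random.default_rng(1)
n = 1000
theta = rng.standard_normal((n, 2))
Phi = np.array([[1.0, 0.0], [0.8, 0.0], [1.2, 0.0],
                [0.0, 1.0], [0.0, 0.9], [0.0, 1.1]])
X = theta @ Phi.T + 0.7 * rng.standard_normal((n, 6))
k = parallel_analysis(X)
print(k)
```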

Step 2

We use the measures and factor loadings estimated in the first step to compute a vector of factor scores for each participant i. We form unbiased estimates of the true vector of skills for agent i. The factor measure equations contain X which we suppress to simplify the expressions. Notationally, we represent the measurement system for agent i as

$$\underbrace{M_i}_{|\mathcal{M}|\times 1} = \underbrace{\varphi}_{|\mathcal{M}|\times|\mathcal{J}|}\;\underbrace{\theta_i}_{|\mathcal{J}|\times 1} + \underbrace{\eta_i}_{|\mathcal{M}|\times 1} \tag{24}$$

where φ represents the matrix of factor loadings estimated in the first step and Mi is the vector of stacked measures for participant i, net of the intercepts of equation (21). The dimension of each element in equation (24) is shown beneath it, where ℳ = ∪j∈𝒥 ℳj is the union of all the index sets of the measures. The error term for agent i, ηi, has zero mean and is independent of the vector of skills θi, with Cov(ηi, ηi) = Ω. The most commonly used estimator of factor scores is based on a linear function of measures: θS,i = L′Mi. Unbiasedness requires that L′φ = I|𝒥|, where I|𝒥| is a |𝒥|-dimensional identity matrix.5 To achieve unbiasedness, L must satisfy L′ = (φ′Ω−1φ)−1φ′Ω−1. The unbiased estimator of the factor is:

$$\theta_{S,i} = (\varphi'\Omega^{-1}\varphi)^{-1}\varphi'\Omega^{-1}M_i.$$

Factor score estimates can be interpreted as the output of a GLS estimation procedure where measures are taken as dependent variables and factor loadings are treated as regressors. By the Gauss-Markov theorem, for a known φ the proposed estimator is the best linear unbiased estimator of the vector of inputs θi.6
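A minimal sketch of the factor score computation follows. It assumes a hypothetical loading matrix `Phi` and a diagonal error covariance `Omega`, both treated as known. It forms the weights L′ = (φ′Ω−1φ)−1φ′Ω−1 and confirms that L′φ is the identity, so that the score equals the true factor plus a linear combination of the measurement errors. The function name `bartlett_weights` and all numerical values are illustrative assumptions.

```python
import numpy as np


def bartlett_weights(Phi, Omega):
    """Return L' = (Phi' Omega^{-1} Phi)^{-1} Phi' Omega^{-1}."""
    Oinv_Phi = np.linalg.solve(Omega, Phi)                  # Omega^{-1} Phi
    # Omega is symmetric, so (Omega^{-1} Phi)' = Phi' Omega^{-1}.
    return np.linalg.solve(Phi.T @ Oinv_Phi, Oinv_Phi.T)


# Hypothetical measurement system: six measures, two factors.
Phi = np.array([[1.0, 0.0], [0.8, 0.0], [1.3, 0.0],
                [0.0, 1.0], [0.0, 0.6], [0.0, 1.1]])
Omega = np.diag([0.5, 0.7, 0.3, 0.4, 0.6, 0.9])
Lt = bartlett_weights(Phi, Omega)                           # this is L'

# Simulate a few participants; measures are already net of intercepts.
rng = np.random.default_rng(0)
theta = rng.multivariate_normal([0.0, 0.0], [[1.0, 0.4], [0.4, 2.0]], size=5)
eta = rng.normal(size=(5, 6)) * np.sqrt(np.diag(Omega))
M = theta @ Phi.T + eta

theta_S = M @ Lt.T                                          # factor scores, one row per participant
V = theta_S - theta                                         # score error, equal to L' eta
print(np.round(Lt @ Phi, 10))
```

The covariance of the score error is L′ΩL = (φ′Ω−1φ)−1, which is the quantity needed when correcting for the use of scores in place of the true factors.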

Step 3

The use of factor scores instead of the true factors to estimate equation (18) generates biased estimates of outcome coefficients α. Even though estimates of θi are unbiased, there is still a discrepancy between the true and measured θi due to estimation error. To correct for the bias, we propose a bias-correction procedure. Because we estimate the variance of θ and the variance of the measurement errors in the first step of our procedure, we can eliminate the bias created by the measurement error.

Consider the outcome model for agent i :

$$Y_i = \alpha\,\theta_i + \gamma Z_i + \epsilon_i \tag{25}$$

where (θi, Zi) ⫫ ∊i and E(∊i) = 0. For brevity of notation, we use Zi to denote pre-program variables, treatment status indicators, and the intercept term of equation (18). From equation (24), the factor scores θS,i can be written as the inputs θi plus a measurement error Vi, that is,

$$\theta_{S,i} = \theta_i + V_i \tag{26}$$

Replacing θi with θS,i yields Yi = αθS,i + γZi + ∊i − αVi. The linear regression estimator of α and γ is inconsistent:

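$$\operatorname{plim}\begin{pmatrix}\hat{\alpha}\\ \hat{\gamma}\end{pmatrix} = \underbrace{\begin{pmatrix}\operatorname{Cov}(\theta_S,\theta_S) & \operatorname{Cov}(\theta_S,Z)\\ \operatorname{Cov}(Z,\theta_S) & \operatorname{Cov}(Z,Z)\end{pmatrix}^{-1}\begin{pmatrix}\operatorname{Cov}(\theta,\theta) & \operatorname{Cov}(\theta,Z)\\ \operatorname{Cov}(Z,\theta) & \operatorname{Cov}(Z,Z)\end{pmatrix}}_{A}\begin{pmatrix}\alpha\\ \gamma\end{pmatrix} \tag{27}$$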

This is the multivariate version of the standard one-variable attenuation bias formula. All covariances in A can be computed directly except for the terms that involve θ. Cov(θ, θ) is estimated in step (1). Using equation (26), we can compute Cov(Z, θS) = Cov(Z, θ). Thus, A is identified. Our bias-correction procedure consists of pre-multiplying the least squares estimators (α̂,γ̂) by A−1, thus providing consistent estimates of (α,γ).7 A one step maximum likelihood procedure, while less intuitive, directly estimates the parameters without constructing the factors and accounts for measurement error. It is justified in large samples under standard regularity conditions.
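The bias correction can be illustrated with a small simulation. This is a sketch under stated assumptions rather than the authors' implementation. Factor scores are generated as the true factors plus an independent error with known covariance `Sigma_V` (in practice this covariance would come from the first step), Z is a single hypothetical covariate correlated with the first factor, and all parameter values are invented for illustration. Variables are mean zero, so the intercept is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000
alpha = np.array([1.0, 0.5])                  # hypothetical outcome loadings
gamma = np.array([0.3])                       # hypothetical coefficient on Z
Sigma_theta = np.array([[1.0, 0.3], [0.3, 1.0]])
Sigma_V = np.diag([0.5, 0.5])                 # covariance of the score error, assumed known

theta = rng.multivariate_normal(np.zeros(2), Sigma_theta, size=n)
Z = (0.5 * theta[:, 0] + rng.normal(size=n))[:, None]
V = rng.multivariate_normal(np.zeros(2), Sigma_V, size=n)
theta_S = theta + V                           # factor scores: truth plus error
Y = theta @ alpha + Z @ gamma + rng.normal(size=n)

# Naive least squares of Y on (theta_S, Z), written in covariance form.
X = np.hstack([theta_S, Z])
S = np.cov(X, rowvar=False)                   # Cov of (theta_S, Z)
cov_XY = np.cov(np.column_stack([X, Y]), rowvar=False)[:3, 3]
naive = np.linalg.solve(S, cov_XY)

# Replace Cov(theta_S, theta_S) by Cov(theta, theta) = Cov(theta_S, theta_S) - Sigma_V.
T = S.copy()
T[:2, :2] -= Sigma_V
A = np.linalg.solve(S, T)                     # the matrix A of the attenuation formula
corrected = np.linalg.solve(A, naive)         # pre-multiply by A^{-1}

print("naive    :", np.round(naive, 3))
print("corrected:", np.round(corrected, 3))
```

In this design the naive coefficient on the first factor is attenuated, while the corrected estimates are close to the values used to generate the data.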

6 Invariance to Transformation of Measures

We present some invariance results regarding the decomposition of treatment effects under transformations of the measures used to proxy the inputs. Our analysis is divided into two parts. Section 6.1 examines the invariance of the decomposition for affine transformation of measures under the linear model discussed in the previous section. Section 6.2 relaxes the linearity assumption of Section 6.1 and discusses some generalized results for the case of non-linear monotonic transformations using the analysis of Cunha et al. (2010).

6.1 Invariance to Affine Transformations of Measures

We first establish conditions under which outcome decomposition (20), relating treatment effects to experimentally induced changes in inputs, is invariant to affine transformations of any measure of input for any factor. Decomposition (20) assumes α1 = α0. We also consider forming decompositions for the more general nonautonomous case where α1 ≠ α0. We establish the invariance of the treatment effect due to measured inputs (see Equation (20)) but not of other terms in the decompositions that arise in the more general case. Throughout we assume autonomy of the measurement system so that intercepts and factor loadings are the same for treatments and controls for all measurement equations. Our analysis can be generalized to deal with a nonautonomous measurement system, but at the cost of greater notational complexity.

Before presenting a formal analysis, it is useful to present an intuitive motivation. Let M̃ be an affine transformation of a measure M of factor j, for some j ∈ 𝒥p and mj ∈ ℳj. Specifically, define M̃ by:

$$\widetilde{M}^{j}_{m_j,d} = a\,M^{j}_{m_j,d} + b, \qquad a \neq 0,\; d \in \{0,1\}. \tag{28}$$

The transformed measure has its own factor loading, error term and intercept for d ∈ {0, 1}. The key condition for the invariance of decomposition (20) to linear transformations of the different measures is that the product of each outcome factor loading and the treatment-induced change in the corresponding factor mean be invariant.

We apply the same normalization to the transformed system as we do to the original system. Suppose that the measure transformed is a “first measure” so mj = 1. Recall that in the original system the intercept of the first measure is zero and its factor loading is one. Transformation (28) can be expressed as

$$\widetilde{M}^{j}_{1,d} = b + a\,\theta^{j}_{d} + a\,\eta^{j}_{1}.$$

Applying the normalization rule to this equation defines factor θ̃j = b + aθj, i.e. the scale and the location of the factor are changed, so that in the transformed system the intercept is 0 and the factor loading 1:

$$\widetilde{M}^{j}_{1,d} = \tilde{\theta}^{j}_{d} + \tilde{\eta}^{j}_{1},$$

where η̃ = aη is a rescaled mean zero error term. This transformation propagates through the entire system, where θj is replaced by θ̃j.

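Notice that in decomposition (20), the induced shift in the mean of the factor is irrelevant. It differences out in the decomposition. The scale of θj is affected. The covariance matrix Σθd is transformed to Σθ̃d where

$$\Sigma_{\tilde{\theta}_d} = I_a\,\Sigma_{\theta_d}\,I_a,$$

where Ia is a square diagonal matrix of the same dimension as the number of measured factors whose jth diagonal element is a and whose other diagonal elements are unity. The factor loading for the outcome function for the set of transformed first measures, M̃1,d = M1,dIa, is the solution to the system of equations

$$\operatorname{Cov}(Y_d,\widetilde{M}_{1,d}) = \Sigma_{\tilde{\theta}_d}\,\tilde{\alpha}_d.$$

Thus

$$\tilde{\alpha}_d = \Sigma_{\tilde{\theta}_d}^{-1}\operatorname{Cov}(Y_d,\widetilde{M}_{1,d}) = I_a^{-1}\,\Sigma_{\theta_d}^{-1}\operatorname{Cov}(Y_d,M_{1,d}) = I_a^{-1}\alpha_d.$$

Since the difference in factor means is rescaled by Ia, so that E(θ̃1 − θ̃0) = IaE(θ1 − θ0), it follows trivially that the term of decomposition (20) attributable to measured inputs, α̃dE(θ̃1 − θ̃0) = αdE(θ1 − θ0), is invariant to transformations.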

Suppose next that the transformation is applied to any measure other than a first measure. Invoking the same kind of reasoning, it is evident that θ̃d = θd and α̃d = αd. Thus the decomposition is invariant. Clearly, however, the intercept of the transformed measure becomes b plus a times the original intercept, and the factor loading becomes a times the original factor loading.

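The preceding analysis assumes that the outcome system is autonomous: α0 = α1, and β0 = β1. Suppose that α1 ≠ α0. To simplify the argument, we continue to assume that β0 = β1. In this case

$$E(Y_1 - Y_0) = (\tau_1 - \tau_0) + \alpha_1 E(\theta_1) - \alpha_0 E(\theta_0).$$

In the general case, the decomposition is not unique due to a standard index number problem. Using the notation Δα = α1 − α0,

$$E(Y_1 - Y_0) = (\tau_1 - \tau_0) + \alpha_0 E(\theta_1 - \theta_0) + (\Delta\alpha)E(\theta_1) = (\tau_1 - \tau_0) + \alpha_1 E(\theta_1 - \theta_0) + (\Delta\alpha)E(\theta_0).$$

For any α* that is an affine transformation of (α0,α1):

$$E(Y_1 - Y_0) = (\tau_1 - \tau_0) + \alpha^{*}E(\theta_1 - \theta_0) + (\alpha_1 - \alpha^{*})E(\theta_1) + (\alpha^{*} - \alpha_0)E(\theta_0).$$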

For all three decompositions, the first set of terms associated with the mean change in skills due to treatment is invariant to affine transformations. The proof follows from the preceding reasoning. Any scaling of the factors is offset by the revised scaling of the factor loadings.

Notice, however, that when α1 ≠ α0, in constructing decompositions of treatment effects we acquire terms in the level of the factors. For transformations of the first measure, the location of the factor is shifted. Even though the scales of (Δα) and E(θd) offset, there is no compensating shift in the location of the factor. Thus the terms associated with the levels of the factor are not, in general, invariant to affine transformations of first measures, although the decompositions are invariant to monotonic transformations of any non-normalization measures. Obviously, the point at which E(θ1 − θ0) is evaluated depends on the choice among α0, α1, and α* if they differ.

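We now formally establish these results. It is enough to consider the transformation of one measure within group j for treatment category d. First, suppose that the transformation (28) is not applied to the first measure, that is, mj ≠ 1. In this case, the factor means for j ∈ 𝒥p are invariant, as they are identified through the first measure of each factor, which is not changed. We can also show that the αj, j ∈ 𝒥p, are invariant. We identify α = [αj; j ∈ 𝒥p] through Cov(Yd,M1,d) = Σθdα. Therefore it suffices to show that covariance matrix Σθd is invariant under the linear transformation (28). But the covariance between the factors is identified through the first measure of each factor. The variance of factor j under transformation (28) is identified by:

$$\operatorname{Var}(\theta^{j}_{d}) = \frac{\operatorname{Cov}(M^{j}_{1,d},\widetilde{M}^{j}_{m_j,d})\,\operatorname{Cov}(M^{j}_{1,d},M^{j}_{m'_j,d})}{\operatorname{Cov}(\widetilde{M}^{j}_{m_j,d},M^{j}_{m'_j,d})} = \frac{a\,\varphi^{j}_{m_j}\operatorname{Var}(\theta^{j}_{d})\;\varphi^{j}_{m'_j}\operatorname{Var}(\theta^{j}_{d})}{a\,\varphi^{j}_{m_j}\varphi^{j}_{m'_j}\operatorname{Var}(\theta^{j}_{d})},$$

where m′j denotes a third measure of factor j, so that the variance is unchanged. Hence αd is unchanged.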

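Now suppose that transformation (28) is applied to the first measure, mj = 1. In this case, the new variance of factor j is given by:

$$\operatorname{Var}(\tilde{\theta}^{j}_{d}) = \frac{\operatorname{Cov}(\widetilde{M}^{j}_{1,d},M^{j}_{m_j,d})\,\operatorname{Cov}(\widetilde{M}^{j}_{1,d},M^{j}_{m'_j,d})}{\operatorname{Cov}(M^{j}_{m_j,d},M^{j}_{m'_j,d})} = a^{2}\operatorname{Var}(\theta^{j}_{d}). \tag{29}$$

The new covariance between factors j and j′ is given by:

$$\operatorname{Cov}(\tilde{\theta}^{j}_{d},\theta^{j'}_{d}) = \operatorname{Cov}(\widetilde{M}^{j}_{1,d},M^{j'}_{1,d}) = a\,\operatorname{Cov}(\theta^{j}_{d},\theta^{j'}_{d}). \tag{30}$$

Let Σ̃θd be the new factor covariance matrix obtained under transformation (28). According to equations (29)–(30), Σ̃θd = IaΣθdIa, where, as before, Ia is a square diagonal matrix whose j-th diagonal element is a and has ones for the remaining diagonal elements. By the same type of reasoning, we have that the covariance matrix Cov(Yd,M1,d) computed under the transformation is given by: Cov(Yd, M̃1,d) = Ia Cov(Yd,M1,d). Let α̃ be the outcome factor loadings under transformation (28). Thus,

$$\tilde{\alpha} = \widetilde{\Sigma}_{\theta_d}^{-1}\operatorname{Cov}(Y_d,\widetilde{M}_{1,d}) = (I_a\,\Sigma_{\theta_d}\,I_a)^{-1}\,I_a\operatorname{Cov}(Y_d,M_{1,d}) \tag{31}$$

and therefore α̃ = Ia−1α. In other words, transformation (28) only modifies the j-th factor loading, which is given by α̃j = αj/a.

Let the difference in factor means between treatment groups be Δj′ = E(θj′,1 − θj′,0), j′ ∈ 𝒥p, and let Δ̃j′ be the difference under transformation (28). Transformation (28) only modifies the j-th difference in means, which is given by Δ̃j = aΔj, and thereby α̃jΔ̃j = αjΔj. Thus α̃j′Δ̃j′ = αj′Δj′ for all j′ ∈ 𝒥p, as claimed. It is straightforward to establish that if α1 ≠ α0, the decomposition is, in general, not invariant to affine transformations, although the term associated with E(θ1 − θ0) is.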
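The invariance result can be verified on simulated data. The sketch below is illustrative and not the authors' code. It assumes two factors with three dedicated measures each, an autonomous outcome equation, and hypothetical parameter values. The function `input_contributions` (a name introduced here) applies the normalization and covariance-ratio identification to whatever measures it is given and returns the products of outcome loadings and differences in factor means. An affine transformation is then applied either to a first measure or to another measure, and the products are compared.

```python
import numpy as np


def input_contributions(M, Y, D, K=3):
    """Per-factor products alpha_j * Delta_j, using the first measure of each block of K."""
    J = M.shape[1] // K
    first = [j * K for j in range(J)]
    ctrl = D == 0
    C = np.cov(np.column_stack([M[ctrl], Y[ctrl]]), rowvar=False)
    Sigma = C[np.ix_(first, first)].copy()            # factor covariances from first measures
    for j in range(J):
        i1, i2, i3 = j * K, j * K + 1, j * K + 2
        Sigma[j, j] = C[i1, i2] * C[i1, i3] / C[i2, i3]   # factor variance from three measures
    alpha = np.linalg.solve(Sigma, C[first, -1])      # Cov(Y, M_1) = Sigma alpha
    delta = M[D == 1][:, first].mean(axis=0) - M[ctrl][:, first].mean(axis=0)
    return alpha * delta


# Hypothetical experiment: treatment shifts both factor means.
rng = np.random.default_rng(0)
n, J, K = 4000, 2, 3
D = rng.integers(0, 2, size=n)
theta = rng.multivariate_normal(np.zeros(J), [[1.0, 0.4], [0.4, 2.0]], size=n)
theta += D[:, None] * np.array([0.5, 0.3])
Phi = np.array([[1.0, 0.0], [0.8, 0.0], [1.3, 0.0],
                [0.0, 1.0], [0.0, 0.6], [0.0, 1.1]])
M = theta @ Phi.T + rng.normal(scale=0.7, size=(n, J * K))
Y = 1.0 * D + theta @ np.array([0.7, -0.2]) + rng.normal(size=n)

a, b = 2.5, -4.0
M_first = M.copy()
M_first[:, 0] = a * M[:, 0] + b                       # transform the first measure of factor 1
M_other = M.copy()
M_other[:, 1] = a * M[:, 1] + b                       # transform a non-normalizing measure

base = input_contributions(M, Y, D)
after_first = input_contributions(M_first, Y, D)
after_other = input_contributions(M_other, Y, D)
print(base, after_first, after_other)
```

In this sketch the three vectors agree up to floating point error, because the rescaling of the factor is offset by the rescaling of its outcome loading and the shift b differences out of the mean comparison.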

6.2 A Sketch of More General Invariance Results

We next briefly consider a more general framework. We draw on the analysis of Cunha et al. (2010) to extend the discussion of the preceding subsection to a nonlinear nonparametric setting. We present two basic results: (1) outcome decomposition terms that are locally linear in θ are invariant to monotonic transformations of θ; and (2) terms associated with shifts in the technology due to the experimental manipulation are not. In this section we allow inputs to be measured with error but assume that unmeasured inputs are independent of the proxied ones. We focus only on invariance results and only sketch the main ideas.

Following the previous notation, we use D for the binary treatment status indicator, D = 1 for treated and D = 0 for control. We use Yd; d ∈ {0, 1} to denote the output Y when treatment D is fixed at value d. In the same fashion, θd; d ∈ {0, 1} denotes the input θ when treatment D is fixed at value d. For the sake of simplicity, let the production function be given by f: supp(θ) → supp(Y), where supp means support. Thus Yd = f(θd); d ∈ {0, 1}.

We analyze both the invariant and noninvariant cases. We relax the invariance assumption for the production function by indexing it by treatment status. We use fd: supp(θ) → supp(Y) to denote the production function that governs the data generating process associated with treatment status D = d.

In this notation, the average treatment effect is given by:

$$E(Y_1 - Y_0) = E\big(f_1(\theta_1) - f_0(\theta_0)\big) \tag{32}$$

Equation (32) restates the point made in Section 2 that there are two sources of treatment effects: (1) treatment might shift the map between θ and the outcomes from f0 to f1 (i.e. it might violate invariance); and (2) treatment might also change the inputs from θ0 to θ1.

Assume the existence of multiple measures of θ that are generated through an unknown function M: supp(θ) → supp(M) that is monotonic in θ. Then, under conditions specified in Cunha, Heckman, and Schennach (2010), the marginal distributions of θ1 and θ0 can be non-parametrically identified (although not necessarily the joint distribution of θ1 and θ0). We develop the scalar case.

Theorem T-1. The scalar case

Let the production function be a uniformly differentiable scalar function fd: supp(θ) → supp(Y); d ∈ {0, 1}. If the production function is autonomous, i.e. f1(t) = f0(t) ∀ t ∈ supp(θ), then the effect attributable to changes in θ is invariant to monotonic transformations M of θ.

Proof

Without loss of generality, write the input for treated in terms of the input for untreated plus the difference across inputs. Thus θ1 = θ0 + Δ. Now, under structural invariance:

$$Y_1 - Y_0 = f(\theta_0 + \Delta) - f(\theta_0).$$

From uniform differentiability of M and f we have that:

$$\frac{\partial f}{\partial \theta} = \frac{\partial f}{\partial M}\,\frac{\partial M}{\partial \theta}.$$

Thus the infinitesimal contribution of a change in input to output can be decomposed as:

$$\frac{\partial f}{\partial \theta}\,\Delta = \frac{\partial f}{\partial M}\left(\frac{\partial M}{\partial \theta}\,\Delta\right).$$

If we use θ as the argument of the function, under conditions specified in Cunha et al. (2010) nonparametric regression identifies ∂f/∂θ. If we use M(θ) as the argument, nonparametric regression identifies ∂f/∂M, but the increment to input is now (∂M/∂θ)Δ. The combined terms for the output decomposition remain the same in either case. Thus the decomposition is invariant to monotonic transformations M of inputs θ. Extension to the vector case is straightforward.
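The offsetting argument can be illustrated numerically for the scalar autonomous case. The sketch below is an illustration under assumptions, not part of the original analysis. It picks a hypothetical production function f(θ) = log(1 + θ²) and a hypothetical monotonic map M(θ) = exp(θ), writes the same technology with M(θ) as its argument, and compares the two first-order contributions using central finite differences.

```python
import math


def derivative(func, x, h=1e-6):
    """Central finite-difference approximation to func'(x)."""
    return (func(x + h) - func(x - h)) / (2.0 * h)


def f(theta):
    """Hypothetical production function written in terms of theta."""
    return math.log(1.0 + theta ** 2)


def M(theta):
    """Hypothetical strictly increasing transformation of the input."""
    return math.exp(theta)


def g(m):
    """The same technology written with M(theta) as its argument."""
    return f(math.log(m))


theta0, delta = 1.5, 0.01

# Contribution computed in theta units: (df/dtheta) * Delta.
effect_theta = derivative(f, theta0) * delta

# Contribution computed in M units: (df/dM) * (dM/dtheta * Delta).
effect_M = derivative(g, M(theta0)) * (derivative(M, theta0) * delta)

print(effect_theta, effect_M)
```

The slope with respect to M is smaller than the slope with respect to θ by exactly the factor by which the increment in M is larger, so the two products coincide up to numerical error.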

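Suppose that we relax autonomy. For the sake of simplicity, take the scalar case and let the input for the treated be written as θ1 = θ0 + Δ. In this case, we can write the total change in output induced by treatment as:

$$f_1(\theta_0 + \Delta) - f_0(\theta_0) = \big[f_1(\theta_0 + \Delta) - f_1(\theta_0)\big] + \big[f_1(\theta_0) - f_0(\theta_0)\big].$$

If we rework the rationale of the proof for Theorem T-1 and apply the mean value theorem, we obtain the following expression:

$$f_1(\theta_0 + \Delta) - f_0(\theta_0) = \left.\frac{\partial f_1}{\partial M}\,\frac{\partial M}{\partial \theta}\right|_{\bar{\theta}}\Delta + \big[f_1(\theta_0) - f_0(\theta_0)\big] \tag{33}$$

where θ̄ is an intermediate value in the interval (θ0, θ0 + Δ). The first term is invariant for the same reasons stated in Theorem T-1, which concerns the autonomous case. Namely, the change in ∂f1/∂M offsets the change in (∂M/∂θ)Δ.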

The source of non-invariance of the second term in Equation (33) is attributed to the shift in production function f0 to f1 due to treatment. This shift implies that the output evaluation will differ when evaluated at the same input points θ0. Under structural invariance or autonomy, f1(·) = f0(·) and regardless of the transformation M, we have that f1(M(θ0)) = f0(M(θ0)) and, therefore, the second term of Equation (33) vanishes.

7 Summary and Conclusions

Randomization identifies treatment effects for outputs and measured inputs. If there are unmeasured inputs that are statistically dependent on measured inputs, unaided experiments do not identify the causal effects of measured inputs on outputs.

This paper reviews the recent statistical mediation literature that attempts to identify the causal effect of measured changes in inputs on treatment effects. We relate it to conventional approaches in the econometric literature. We show that the statistical mediation literature achieves its goals under implausibly strong assumptions. For a linear model, we relax these assumptions maintaining exogeneity assumptions that can be partially relaxed if the analyst has access to experimental data. Linearity gives major simplifying benefits even in the case where the unmeasured inputs are independent of the measured inputs, where the point of evaluation of mean effects does not depend on the distribution of the unmeasured inputs. Extension of this analysis to the nonlinear case is a task left for future work.

We also present results for the case where there is measurement error in the proxied inputs, a case not considered in the statistical literature. When the analyst has multiple measurements on the mismeasured variables, it is sometimes possible to circumvent this problem. We establish invariance to the choice of monotonic transformations of the input measures for both linear and nonlinear technologies.

Acknowledgments

This research was supported in part by the American Bar Foundation, the JB & MK Pritzker Family Foundation, Susan Thompson Buffett Foundation, NICHD R37HD065072, R01HD54702, a grant to the Becker Friedman Institute for Research and Economics from the Institute for New Economic Thinking (INET), and an anonymous funder. We acknowledge the support of a European Research Council grant hosted by University College Dublin, DEVHEALTH 269874. The views expressed in this paper are those of the authors and not necessarily those of the funders or persons named here. We thank the editor and an anonymous referee for helpful comments.

Footnotes

1. See Pearl (2009) and Heckman and Pinto (2012) for discussions of causality and Directed Acyclic Graphs.

2. Pearl (2001) invokes this assumption.

3. Alternative decompositions are discussed below in Section 6.1.

4. These are called structural invariance or autonomy assumptions in the econometric literature. See, e.g., Hurwicz (1962). These assumptions do not rule out heterogeneous responses to treatment because θ1 and θ0 may vary in the population.

5. The method is due to Bartlett (1937) and is based on the restricted minimization of mean squared error, subject to L′φ = I|𝒥|.

6. Note that the assumption that φ is known can be replaced with the assumption that φ is consistently estimated and we can use an asymptotic version of the Gauss-Markov theorem replacing “unbiased” with “unbiased in large samples”. Standard GMM methods can be applied.

7. See Croon (2002) for more details on this bias correction approach.

Contributor Information

James Heckman, Email: jjh@uchicago.edu, Department of Economics, University of Chicago, 1126 E. 59th Street, Chicago, IL 60637, Phone: (773) 702-0634, Fax: (773) 702-8490.

Rodrigo Pinto, Email: rodrig@uchicago.edu, Department of Economics, University of Chicago, 1126 E. 59th Street, Chicago, IL 60637, Phone: (312) 955-0485, Fax: (773) 268-6844.

References

- Anderson TW, Rubin H. Statistical inference in factor analysis. In: Neyman J, editor. Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability. Vol. 5. Berkeley: University of California Press; 1956. pp. 111–150.

- Baron RM, Kenny DA. The moderator-mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology. 1986;51(6):1173–1182. doi: 10.1037//0022-3514.51.6.1173.

- Bartlett MS. The statistical conception of mental factors. British Journal of Psychology. 1937 Jul;28(1):97–104.

- Cattell RB. The scree test for the number of factors. Multivariate Behavioral Research. 1966;1(2):245–276. doi: 10.1207/s15327906mbr0102_10.

- Croon MA. Using predicted latent scores in general latent structure models. In: Marcoulides GA, Moustaki I, editors. Latent Variable and Latent Structure Models. NJ: Lawrence Erlbaum Associates, Inc; 2002. pp. 195–223.

- Cunha F. Eliciting maternal beliefs about the technology of skill formation. Presented at Family Inequality Network: Family Economics and Human Capital in the Family; November 16, 2012.

- Cunha F, Heckman JJ. Formulating, identifying and estimating the technology of cognitive and noncognitive skill formation. Journal of Human Resources. 2008 Fall;43(4):738–782.

- Cunha F, Heckman JJ, Schennach SM. Estimating the technology of cognitive and noncognitive skill formation. Econometrica. 2010 May;78(3):883–931. doi: 10.3982/ECTA6551.

- Gorsuch RL. Factor analysis. In: Weiner IB, Freedheim DK, Schinka JA, Velicer WF, editors. Handbook of psychology: Research methods in psychology. Vol. 2. Hoboken, NJ: John Wiley & Sons, Inc; 2003. pp. 143–164. Chapter 6.

- Guttman L. Some necessary conditions for common-factor analysis. Psychometrika. 1954;19(2):149–161.

- Haavelmo T. The statistical implications of a system of simultaneous equations. Econometrica. 1943 Jan;11(1):1–12.

- Heckman J, Holland M, Oey T, Olds D, Pinto R, Rosales M. Unpublished manuscript. University of Chicago; 2012. A reanalysis of the nurse family partnership program: The memphis randomized control trial.

- Heckman JJ, Moon SH, Pinto R, Savelyev PA, Yavitz AQ. Analyzing social experiments as implemented: A reexamination of the evidence from the HighScope Perry Preschool Program. Quantitative Economics. 2010 Aug;1(1):1–46. doi: 10.3982/qe8.

- Heckman JJ, Navarro S. Using matching, instrumental variables, and control functions to estimate economic choice models. Review of Economics and Statistics. 2004 Feb;86(1):30–57.

- Heckman JJ, Pinto R. Unpublished manuscript. University of Chicago; 2012. Causal analysis after Haavelmo: Definitions and a unified analysis of identification.

- Heckman JJ, Pinto R, Savelyev PA. Unpublished manuscript. University of Chicago, Department of Economics; 2013. Understanding the mechanisms through which an influential early childhood program boosted adult outcomes. (first draft, 2008). Forthcoming, American Economic Review.

- Heckman JJ, Vytlacil EJ. Econometric evaluation of social programs, part II: Using the marginal treatment effect to organize alternative economic estimators to evaluate social programs and to forecast their effects in new environments. In: Heckman J, Leamer E, editors. Handbook of Econometrics. 6B. Amsterdam: Elsevier; 2007. pp. 4875–5143. Chapter 71.

- Horn JL. A rationale and test for the number of factors in factor analysis. Psychometrika. 1965;30(2):179–185. doi: 10.1007/BF02289447.

- Hurwicz L. On the structural form of interdependent systems. In: Nagel E, Suppes P, Tarski A, editors. Logic, Methodology and Philosophy of Science. Stanford University Press; 1962. pp. 232–239.

- Imai K, Keele L, Tingley D, Yamamoto T. Unpacking the black box of causality: Learning about causal mechanisms from experimental and observational studies. American Political Science Review. 2011;105(4):765–789.

- Imai K, Keele L, Yamamoto T. Identification, inference and sensitivity analysis for causal mediation effects. Statistical Science. 2010;25(1):51–71.

- Kaiser HF. The application of electronic computers to factor analysis. Educational and Psychological Measurement. 1960;20(1):141–151.

- Kaiser HF. A note on Guttman’s lower bound for the number of common factors. British Journal of Statistical Psychology. 1961;14(1):1–2.

- Klein LR, Goldberger AS. An Econometric Model of the United States, 1929–1952. Amsterdam: North-Holland Publishing Company; 1955.

- Onatski A. Testing hypotheses about the number of factors in large factor models. Econometrica. 2009;77(5):1447–1479.

- Pearl J. Direct and indirect effects. Proceedings of the Seventeenth Conference on Uncertainty in Artificial Intelligence; San Francisco, CA: Morgan Kaufmann Publishers Inc; 2001. pp. 411–420.

- Pearl J. Causality: Models, Reasoning, and Inference. 2. New York: Cambridge University Press; 2009.

- Pearl J. The mediation formula: A guide to the assessment of causal pathways in nonlinear models. Forthcoming in Causality: Statistical Perspectives and Applications. 2011.

- Robin J-M. Comments on “structural equations, treatment effects and econometric policy evaluation” by James J. Heckman and Edward Vytlacil. Presented at the Sorbonne; Paris. 2003 Dec 23.

- Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983 Apr;70(1):41–55.

- Theil H. Economic Forecasts and Policy. Amsterdam: North-Holland Publishing Company; 1958.

- Thompson B. Exploratory and confirmatory factor analysis: understanding concepts and applications. Washington, DC: American Psychological Association; 2004.

- Wright S. Correlation and causation. Journal of Agricultural Research. 1921;20:557–585.

- Wright S. The method of path coefficients. Annals of Mathematical Statistics. 1934;5(3):161–215.

- Zwick WR, Velicer WF. Comparison of five rules for determining the number of components to retain. Psychological Bulletin. 1986;99(3):432–442.