Abstract

There is growing consensus that the US clinical trials system is broken, with trial costs and complexity increasing exponentially, and many trials failing to accrue. Yet concerns about the expense and failure rate of randomized trials are only the tip of the iceberg; perhaps what should worry us most is the number of trials that are not even considered because of projected costs and poor accrual. Several initiatives, including the Clinical Trials Transformation Initiative and the “Sensible Guidelines Group”, seek to push back against current trends in clinical trials, arguing that all aspects of trials - including design, approval, conduct, monitoring, analysis and dissemination - should be based on evidence rather than contemporary norms. Proposed here are four methodologic fixes for current clinical trials. The first two aim to simplify trials, reducing costs and increasing patient acceptability, by dramatically reducing eligibility criteria - often to the single criterion that the consenting physician is uncertain which of the two randomized arms is optimal - and by clinical integration, that is, investment in data infrastructure to bring routinely collected data up to research grade so that they can be used as endpoints in trials. The second two methodologic fixes aim to shed barriers to accrual, either by cluster randomization of clinicians (in the case of modifications to existing treatment) or by early consent, where patients are offered the chance of being randomly selected to be offered a novel intervention if disease progresses at a subsequent point. Such solutions may be partial, or result in a new set of problems of their own. Yet the current crisis in clinical trials mandates innovative approaches: randomized trials have resulted in enormous benefits for patients and we need to ensure that they continue to do so.

Introduction

There is growing consensus that the US clinical trials system is broken. It has become routine for researchers to wonder whether clinical trials might “go the way of the Oldsmobile”1 (a once popular car that is now obsolete) and even for newspaper editorial writers to express concerns about “faltering clinical trials”.2 Consider, for example, that over 70% of Phase III cancer trials did not achieve accrual goals and that nearly half failed to accrue even one-third of the total number of patients required.3 Alternatively, consider that costs to accrue a single patient to a clinical trial can approach $50,0004 and that opening a typical trial can involve 370 different steps and a median time from proposal to activation of over 18 months.5 Moreover, concerns about the expense and failure rate of randomized trials are only the tip of the iceberg; perhaps what should worry us most is the number of trials that are not even considered because of anticipated cost and poor accrual.

Much has been written about the role that regulation plays in increasing clinical trial costs and complexity, including editorials in this journal.6 Authors have pointed to the need for multiple approvals from different ethics oversight boards and other regulatory agencies as particularly problematic,6–8 arguing that repeat approvals are unlikely to improve trials, especially as recommendations from different oversight entities can be contradictory. Excessive trial monitoring is also a major area of concern, with calls to move away from standard reporting of all adverse events in all trials on a patient-by-patient basis and towards a more risk-based approach that focuses on randomized comparisons in the context of current knowledge about the experimental interventions.6, 9

But blame can also be shared with trialists: overly complicated protocols, with multiple primary endpoints, batteries of additional tests and seemingly endless eligibility criteria,10 also serve to inflate costs and depress accrual.8 Empirical research comparing trial protocols has clearly demonstrated that trials have become more complex over time, with trial-specific procedures, eligibility criteria and work burden increasing by about 10% per year.11 DeMets and Califf have ascribed these recent developments to changes in clinical trial leadership: instead of academics leading trials with a public health orientation, industry “now drives … design, conduct and analysis” with the primary motive being sales and compliance with regulatory requirements.12

Several initiatives seek to push back against current trends in clinical trials, aiming to simplify trials and regulatory procedures. The Clinical Trials Transformation Initiative (CTTI; http://www.ctti-clinicaltrials.org/) aims to “identify and promote practices that will increase the quality and efficiency of clinical trials”. CTTI suggests that all aspects of trials - including design, approval, conduct, monitoring, analysis and dissemination - should be based on evidence. This is in contrast to contemporary procedures that have generally evolved as a result of haphazard processes, often without a compelling scientific or ethical basis. CTTI’s goals are aligned with those of the “Sensible Guidelines” group (https://www.ctsu.ox.ac.uk/research/sensible-guidelines) that has held several conferences, with associated papers,7, 13–17 on clinical trial conduct and design. A typical example of a Sensible Guidelines group initiative is endpoint adjudication. Instead of seeing adjudication as an essential methodology, such that trials without adjudication are seen as flawed, the Sensible Guidelines group has attempted to determine the circumstances under which adjudication would improve trial validity.16 The NIH Collaboratory (https://www.nihcollaboratory.org) aims to “rethink clinical trials” and is involved in a number of demonstration projects of large, simple trials with a public health orientation. Perhaps the most ambitious recent effort is PCORnet (www.PCORnet.org), a national clinical trials infrastructure to facilitate pragmatic clinical trials using randomization in the course of routine care, and utilizing data from electronic medical records (EMRs). No trials have yet been conducted using this network, but several are planned.

Here, I will build on this prior work by briefly outlining four simple methodologic approaches to address common problems of contemporary randomized trials. The four approaches are divided into two stages. First, trials can be simplified, and costs dramatically reduced, by reducing eligibility criteria and by integrating data collection into routine clinical practice. Once this is achieved the second stage is to remove barriers to accrual.

Stage 1: Simplify trials to reduce costs and improve tolerability

1. Reducing eligibility criteria. Lengthy and complex eligibility criteria can often be replaced with just one: if the doctor has important uncertainty about what to do, then the patient is eligible for the randomized trial.18 The uncertainty principle was pioneered by the ISIS trialists19 in a series of studies looking at interventions such as beta-blockers after suspected myocardial infarction. Even if the uncertainty principle is not formally invoked, eligibility criteria can often be dramatically simplified.20 As a simple example, a trial comparing two statins will typically involve a set of criteria related to contraindications, such as elevated creatine kinase.21 But checking creatine kinase is often considered to be standard care before statin therapy, at least in older patients. In other words, a doctor would not consider a patient for a statin if creatine kinase was high and so the question of which statin was better would not even arise. Naturally, there will be trials where trial eligibility should not be reduced to just the uncertainty criterion. Nonetheless, investigators should still limit criteria to a small number, far fewer than the 50, 70 or even 100 criteria common in contemporary clinical trials.

2. Clinical integration. Consider that a typical randomized trial requires creation of a data infrastructure entirely separate from that of clinical practice. This is problematic because, in general, whatever a researcher needs to know about a patient, a doctor should want to know. If a patient has a recurrence of cancer, for example, this is important data for a trialist studying adjuvant therapy, but the patient’s doctor will also need to be informed for consideration of appropriate salvage therapy. We have previously proposed22 investing in data infrastructure for clinical practice to bring routinely collected data up to research grade. Doctors support such investment because it helps them to provide better care. Once this infrastructure is in place, data collected routinely in clinical practice can be used as endpoints in clinical trials. As one well-known example, the long-term follow-up for the seminal West of Scotland Coronary Prevention Study comparing pravastatin with placebo was based entirely on routine records held by the National Health Service, including death, hospital discharges and cancer.23 There is empirical evidence that such an approach, which avoids expensive and time-consuming event adjudication, is valid for cardiovascular outcomes.24 Endpoints taken from routine clinical practice can also include patient-reported outcomes: our own group has completed a clinical trial comparing the effects of a surgical modification on urinary continence after radical prostatectomy25 in which there was zero protocol-specified data collection. As patient-reported outcomes were recorded as a routine part of clinical care, all trial endpoints were downloaded from the electronic medical record at the end of the trial.
Experiences such as these have led to calls for “Embedding … research into practice”26 and for “trials … to be conducted as a routine component of clinical care”.8 The use of a clinically-integrated approach does limit endpoints to those routinely collected and, because blinding is sometimes not possible, there is a possibility of bias in outcomes assessment. It is also possible that some trials require endpoints that should not be collected as a routine part of clinical practice. Clinically-integrated trials may also be associated with greater loss to follow-up, as there are no special procedures to ensure compliance with data recording, although the major power advantages of clinical integration should offset problems with drop-out.

Stage 2: Shed barriers to accrual

If a trial has minimal eligibility criteria and no additional data gathering, two additional procedures become possible.

3. Cluster randomization. For studies comparing treatments that are already widely used in practice – what is generally classed as “comparative effectiveness research” – cluster randomization offers the promise of dramatically improved accrual, simply because it is easier to randomize doctors than patients. Instead of a doctor having to remember to consent patients, and fit what can sometimes be a lengthy consent discussion into a busy clinic, cluster randomization might involve a much abbreviated consent process (see below for further comments on informed consent). For trials where the effects of treatment may depend on the clinician – such as surgery – cluster trials can involve crossover. For example, a surgeon could treat patients using one technique for three months and then cross over to the other for patients presenting during the subsequent three months, with the order determined at random. A further advantage of crossover for cluster randomized trials is that it offsets the power disadvantages associated with having the cluster as the unit of analysis, such that the number of patients in a cluster crossover trial is typically similar to that of a traditional trial where individual patients are randomized.27 Cluster rather than individual randomization is also preferable for clinicians, because they can practice consistently over a period of time, instead of switching back and forth with each new patient.
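The random ordering of periods described above can be sketched in a few lines of Python. This is a minimal illustration rather than the procedure used in any trial cited here: the function name `assign_period_orders`, the surgeon identifiers, the technique labels and the choice to balance orders across surgeons are all assumptions made for the example.

```python
import random


def assign_period_orders(surgeons, techniques=("technique_A", "technique_B"), seed=None):
    """Randomly assign each surgeon the order in which to use two techniques.

    Hypothetical helper: orders are balanced so that half the surgeons start
    with each technique; with an odd number of surgeons, the extra order is
    chosen by a coin flip.
    """
    rng = random.Random(seed)
    first, second = techniques
    half = len(surgeons) // 2
    orders = [(first, second)] * half + [(second, first)] * half
    if len(surgeons) % 2 == 1:
        orders.append(rng.choice([(first, second), (second, first)]))
    rng.shuffle(orders)  # random pairing of orders with surgeons
    return dict(zip(surgeons, orders))


# Illustrative use with made-up surgeon identifiers
schedule = assign_period_orders(
    ["surgeon_1", "surgeon_2", "surgeon_3", "surgeon_4"], seed=2014
)
for surgeon, (period_1, period_2) in schedule.items():
    print(f"{surgeon}: months 1-3 {period_1}, months 4-6 {period_2}")
```

Balancing the orders is one design choice among several; an independent coin flip per surgeon would also satisfy "order determined at random" but could leave one sequence over-represented in a trial with few surgeons.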

Cluster randomization raises the specter of selection bias: doctors know a patient’s allocation and could theoretically influence consent or schedule patients for treatment during periods associated with particular interventions. This could be carefully monitored by tracking surgeons’ accrual rates over time, then comparing patient characteristics between crossover periods. Bias associated with unblinding can be limited by use of objective endpoints or by studying therapies where patients are not likely to have strong preferences, e.g. between two similar analgesics.
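As a rough illustration of the monitoring suggested above, the following Python sketch counts accrual per surgeon and period arm and computes a standardized mean difference for one baseline characteristic. The record layout (`surgeon`, `arm`, `age`), the toy data and the function names are hypothetical, and the standardized mean difference is only one of several balance statistics that could be used.

```python
from collections import Counter
from math import sqrt
from statistics import mean, variance


def accrual_by_surgeon_and_arm(patients):
    """Count patients treated per (surgeon, assigned period arm)."""
    return Counter((p["surgeon"], p["arm"]) for p in patients)


def standardized_mean_difference(values_a, values_b):
    """Difference in means divided by the pooled standard deviation.

    Each group needs at least two values for the sample variance.
    """
    pooled_sd = sqrt((variance(values_a) + variance(values_b)) / 2)
    if pooled_sd == 0:
        return 0.0
    return (mean(values_a) - mean(values_b)) / pooled_sd


# Toy records, invented purely for illustration
patients = [
    {"surgeon": "surgeon_1", "arm": "A", "age": 60},
    {"surgeon": "surgeon_1", "arm": "A", "age": 62},
    {"surgeon": "surgeon_1", "arm": "A", "age": 64},
    {"surgeon": "surgeon_1", "arm": "B", "age": 66},
    {"surgeon": "surgeon_1", "arm": "B", "age": 68},
    {"surgeon": "surgeon_1", "arm": "B", "age": 70},
]

counts = accrual_by_surgeon_and_arm(patients)
ages_a = [p["age"] for p in patients if p["arm"] == "A"]
ages_b = [p["age"] for p in patients if p["arm"] == "B"]
print(counts)
print(standardized_mean_difference(ages_a, ages_b))
```

A marked imbalance in accrual counts between a surgeon's two periods, or a large standardized difference in a prognostic characteristic, would be a prompt for closer review rather than proof of selection bias.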

-

4

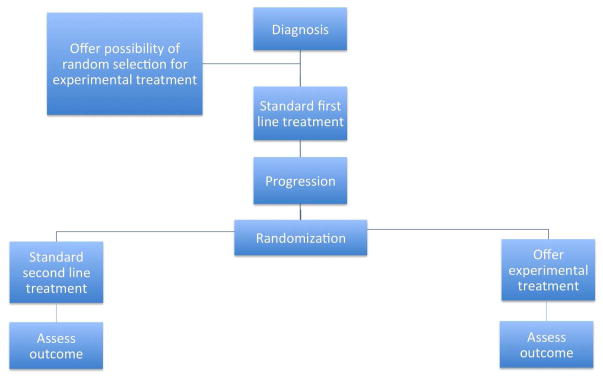

Early consent. Cluster randomization, with abbreviated consent, is not appropriate for novel treatments, where patients need to be fully informed that risks and benefits are uncertain. In many cases, the consent process will be less fraught for the patient and logistically easier for the trialist if it takes place early in the course of treatment, well before the patient needs to consider the intervention that is subject to randomization. At the time of initial treatment, patients can be told that, if additional treatment is indicated subsequently, then they may be randomly selected to be offered an experimental therapy. If they are selected, the risks and benefits would be fully explained and they could choose whether to accept or decline. Figure 1 shows the outline of an example trial. The initial consent would only be to release data to trialists and to have the chance of being selected for the novel treatment. Patients who consent would be registered and randomized to standard care or novel treatment. At the time of treatment, patients in the experimental arm would be given further information and the option whether to proceed or to choose standard care. Analysis would be by intent to treat. This methodology is similar to the cohort multiple randomized controlled design of Relton et al. 28

Early consent trials would be compromised if a high proportion of patients subsequently refused the experimental treatment, something that would bias the results towards the null.

Figure 1.

Early consent design.
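The bias towards the null from refusal of the experimental treatment in an early consent trial can be made concrete with a simple calculation. The sketch below rests on simplifying assumptions that are not part of the design itself: patients who decline the experimental treatment receive standard care and have the same expected outcome as the control arm, no control patients receive the experimental treatment, and outcome variance is unchanged. Under those assumptions the intent-to-treat difference is the true effect multiplied by the proportion accepting, and the sample size needed to detect it grows with the inverse square of that proportion. The function names are illustrative.

```python
def diluted_itt_effect(true_effect, acceptance):
    """Expected intent-to-treat difference when only a proportion `acceptance`
    of the experimental arm actually receives the new treatment."""
    if not 0 < acceptance <= 1:
        raise ValueError("acceptance must be in (0, 1]")
    return true_effect * acceptance


def sample_size_inflation(acceptance):
    """Approximate factor by which sample size grows to keep the same power,
    since required sample size scales with 1 / effect**2."""
    if not 0 < acceptance <= 1:
        raise ValueError("acceptance must be in (0, 1]")
    return 1 / acceptance ** 2


# Illustrative numbers only: a 10 percentage point true benefit
for acceptance in (1.0, 0.8, 0.5):
    effect = diluted_itt_effect(0.10, acceptance)
    inflation = sample_size_inflation(acceptance)
    print(f"acceptance {acceptance:.0%}: ITT effect {effect:.3f}, "
          f"sample size x{inflation:.2f}")
```

On these assumptions, a trial in which half of the patients offered the novel treatment decline it would need roughly four times as many patients, which is why the acceptance rate is worth estimating before such a design is adopted.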

Illustrative examples

To illustrate how these approaches might be used in practice, we will start with the INFORM-P trial, a comparison between using the oldest available red blood cells for in-patient transfusion (the current standard practice) versus the freshest available blood. Eligibility criteria were minimal – a few specific classes of patients were excluded – randomization was built into the order entry system and the primary endpoint was in-hospital mortality, which could be downloaded from the hospital database.29, 30

A second example is the Veterans Affairs (VA) “point-of-care” randomized trial comparing two different management approaches to hyperglycemia, sliding scale versus weight-based insulin. There are only two eligibility criteria: the patient needs insulin but is not in ICU. There are no study specific diagnostic procedures or “follow-up events”. Study outcomes, which include length of stay, infections and kidney injury, and other trial data, such as patient demographics, baseline glucose and glomerular filtration rate, are “collected directly from the computerized patient record system”.31

INFORM-P and the VA hyperglycemia study incorporate the first two methodologic approaches associated with trial simplicity, namely reduced eligibility criteria and clinical integration. A trial that also includes cluster randomization is currently underway at our own institution. The trial is of factorial design, comparing modifications to radical prostatectomy: two different approaches to the lymph node dissection and two approaches to suturing of the urethra. All four approaches are widely used by urologists. The endpoints of this trial are cancer recurrence and complications for the lymph node comparison, both of which are routinely recorded in the clinical record, and patient-reported urinary continence for the suturing comparison, an endpoint that is collected via an electronic questionnaire for all patients irrespective of participation in the trial.32 As outcomes may be surgeon dependent, the cluster randomization involves crossover. All patients undergoing surgery are eligible and surgeons are told always to offer what in their judgment is the best clinical care. For example, consider a surgeon operating during a time period when limited resection has been randomly assigned; the surgeon would be free to dissect more widely if the patient is at particularly high risk or if there is evidence of widespread nodal involvement on imaging or during surgery. Surgeons are asked to record which approach they used and, if they did not follow randomization, to state whether this decision was made before or during surgery.25 There are plans to extend the study to include comparisons related to the timing of catheter removal and use of antibiotics.

As an example of the use of early consent to evaluate a new therapy, rather than cluster randomization for modifications of existing treatments, we will consider focal therapy for prostate cancer, a novel competitor to the established approaches of radiotherapy or surgery. In an early consent design, patients would be approached at the time of prostate biopsy, before diagnosis, and asked whether, were they to be diagnosed with an aggressive cancer in need of treatment, they would be willing to be randomly selected to be offered a new treatment. They would be informed that they could decide at that time whether to try the new treatment or opt for radiotherapy or surgery. They would also be informed that agreeing to take part would not require any additional visits, tests, procedures or questionnaires, because all data for the trial are routinely collected from patients irrespective of trial participation. Data on patient-reported and oncologic outcomes would be downloaded from hospital databases at the end of the trial and analyzed by intent-to-treat.33

Consent

Clinical integration and cluster randomization raise several issues concerning informed consent. For instance, in a clinically-integrated trial, consent does not need to be sought for trial-specific procedures (such as scans or questionnaires) because these are absent; similarly, consent for the intervention is not relevant in a cluster randomized trial because patients receive the same treatment regardless of whether or not they consent. The general theme of recent initiatives such as CTTI and the Sensible Guidelines group is that trial procedures need to be rationally applied on a trial-by-trial basis, rather than being universally imposed, irrespective of the trial design and study context. As such, the possibility has been raised that a waiver of consent might be appropriate in some trials. This applies particularly to trials where the randomized comparisons are routine in everyday clinical practice, such that patients might receive one or the other on a quasi-random basis when presenting for care depending on the provider they happen to see; consider, for example, a trial of two antibiotics for infection.34 One line of argument has been to question the traditional sharp distinction between research and clinical care, with the former requiring more stringent ethical oversight and associated procedures, such as informed consent and safety monitoring, and the latter requiring little if any oversight. If this distinction falls, then there is room for a more practical approach that focuses on risk and burden irrespective of whether a medical activity is labeled research, clinical practice or quality assurance.35

Yet the SUPPORT trial controversy36 shows that such arguments are far from being universally accepted. In SUPPORT, premature infants were randomized to different levels of oxygen, both within clinical practice guidelines of the time. Critics of SUPPORT argue that randomization between different clinical approaches is “not the key issue” as “those giving consent to the trial should have been informed of all uncertainties”.37 Such an argument of course ignores the question of whether parents of infants not on the trial were informed of all uncertainties during routine care. Nonetheless, it is clear the nature and content of consent will be a critical issue in considering solutions to the current crisis in clinical trials.

Pragmatic versus explanatory trials

The methodologic fixes proposed in this paper are clearly more applicable to trials that are towards the pragmatic rather than the explanatory end of the spectrum.38 If a trial aims to test a very specific hypothesis about the causal effect of an intervention on an outcome, then it may not be appropriate at all to reduce eligibility criteria, or to restrict outcomes to those routinely collected in clinical practice. But implementation of the methodologies described above need not substantively modify the study question. As an example, the study question of a trial comparing two similar chemotherapy regimens is no more or less pragmatic in a trial that includes eligibility criteria related to chemotherapy contraindications than in a trial that avoids such eligibility criteria on the grounds that the choice of chemotherapy regimen is irrelevant for a contraindicated patient. The study question is similarly unaffected if the trial obtains patient-reported outcomes using a web-based system implemented in clinic for all patients rather than by a paper questionnaire administered specially to trial patients. Randomization at the oncologist level rather than the patient level also does not require a change in the study question.

Concluding remarks

The approaches outlined in this paper developed from an extensive literature on clinical trial design. Trial simplification was described by Yusuf, Collins and Peto as long ago as 1984;39 clinical-integration has been subject to considerable discussion since the advent of the electronic medical record;40 cluster randomization has, of course, a long history in research; early consent is an adaptation of the “Zelen” design,41 and has been described previously.28 I have tried to show how these ideas are complementary, and together provide a way to tackle important problems in contemporary clinical trials. There is the practical point that even if a trial based on the methodologic approaches described in this paper were regarded as imperfect, an imperfect trial that can be completed is preferable to a perfect trial that will never be done. An “imperfect” randomized trial is still qualitatively less biased than most observational alternatives.

The current crisis in clinical trials surely mandates that we explore some innovative solutions. Those solutions may be partial, or result in a new set of problems of their own. But that has to be a better option than trying the same thing over again, hoping for a different result, or perhaps just giving up altogether. Randomized trials have resulted in enormous benefits for patients: we need to ensure that they continue to do so.

Acknowledgments

Funding: Supported in part by funds from David H. Koch provided through the Prostate Cancer Foundation, the Sidney Kimmel Center for Prostate and Urologic Cancers, P50-CA92629 SPORE grant from the National Cancer Institute to Dr. H Scher, and the P30-CA008748 NIH/NCI Cancer Center Support Grant to MSKCC.

References

1. Dilts D. US cancer trials may go the way of the Oldsmobile. Nat Med. 2010;16:632. doi: 10.1038/nm0610-632.
2. The New York Times. New York, NY: The New York Times Company; 2010. Faltering Cancer Trials.
3. Cheng SK, Dietrich MS, Dilts DM. A sense of urgency: Evaluating the link between clinical trial development time and the accrual performance of cancer therapy evaluation program (NCI-CTEP) sponsored studies. Clin Cancer Res. 2010;16:5557–5563. doi: 10.1158/1078-0432.CCR-10-0133.
4. Silverman E. Pharmalot. Los Angeles, CA: UBM Canon; 2011. Clinical trial costs are rising rapidly.
5. Dilts DM, Sandler AB, Baker M, et al. Processes to activate phase III clinical trials in a Cooperative Oncology Group: the Case of Cancer and Leukemia Group B. J Clin Oncol. 2006;24:4553–4557. doi: 10.1200/JCO.2006.06.7819.
6. Yusuf S. Damage to important clinical trials by over-regulation. Clin Trials. 2010;7:622–625. doi: 10.1177/1740774510387850.
7. Duley L, Antman K, Arena J, et al. Specific barriers to the conduct of randomized trials. Clin Trials. 2008;5:40–48. doi: 10.1177/1740774507087704.
8. Eapen ZJ, Vavalle JP, Granger CB, et al. Rescuing clinical trials in the United States and beyond: a call for action. Am Heart J. 2013;165:837–847. doi: 10.1016/j.ahj.2013.02.003.
9. Reith C, Landray M, Califf RM. Randomized clinical trials--removing obstacles. N Engl J Med. 2013;369:2269. doi: 10.1056/NEJMc1312901.
10. Fuks A, Weijer C, Freedman B, et al. A study in contrasts: eligibility criteria in a twenty-year sample of NSABP and POG clinical trials. National Surgical Adjuvant Breast and Bowel Program. Pediatric Oncology Group. J Clin Epidemiol. 1998;51:69–79. doi: 10.1016/s0895-4356(97)00240-0.
11. Getz KA, Wenger J, Campo RA, et al. Assessing the impact of protocol design changes on clinical trial performance. Am J Ther. 2008;15:450–457. doi: 10.1097/MJT.0b013e31816b9027.
12. DeMets DL, Califf RM. A historical perspective on clinical trials innovation and leadership: where have the academics gone? JAMA. 2011;305:713–714. doi: 10.1001/jama.2011.175.
13. Yusuf S, Bosch J, Devereaux PJ, et al. Sensible guidelines for the conduct of large randomized trials. Clin Trials. 2008;5:38–39. doi: 10.1177/1740774507088099.
14. Eisenstein EL, Collins R, Cracknell BS, et al. Sensible approaches for reducing clinical trial costs. Clin Trials. 2008;5:75–84. doi: 10.1177/1740774507087551.
15. Armitage J, Souhami R, Friedman L, et al. The impact of privacy and confidentiality laws on the conduct of clinical trials. Clin Trials. 2008;5:70–74. doi: 10.1177/1740774507087602.
16. Granger CB, Vogel V, Cummings SR, et al. Do we need to adjudicate major clinical events? Clin Trials. 2008;5:56–60. doi: 10.1177/1740774507087972.
17. Baigent C, Harrell FE, Buyse M, et al. Ensuring trial validity by data quality assurance and diversification of monitoring methods. Clin Trials. 2008;5:49–55. doi: 10.1177/1740774507087554.
18. Sackett DL. Why randomized controlled trials fail but needn’t: 1. Failure to gain “coal-face” commitment and to use the uncertainty principle. CMAJ. 2000;162:1311–1314.
19. First International Study of Infarct Survival Collaborative Group. Randomised trial of intravenous atenolol among 16 027 cases of suspected acute myocardial infarction: ISIS-1. Lancet. 1986;2:57–66.
20. George SL. Reducing patient eligibility criteria in cancer clinical trials. J Clin Oncol. 1996;14:1364–1370. doi: 10.1200/JCO.1996.14.4.1364.
21. Saku K, Zhang B, Noda K. Randomized head-to-head comparison of pitavastatin, atorvastatin, and rosuvastatin for safety and efficacy (quantity and quality of LDL): the PATROL trial. Circ J. 2011;75:1493–1505. doi: 10.1253/circj.cj-10-1281.
22. Vickers AJ, Scardino PT. The clinically-integrated randomized trial: proposed novel method for conducting large trials at low cost. Trials. 2009;10:14. doi: 10.1186/1745-6215-10-14.
23. Ford I, Murray H, Packard CJ, et al. Long-term follow-up of the West of Scotland Coronary Prevention Study. N Engl J Med. 2007;357:1477–1486. doi: 10.1056/NEJMoa065994.
24. Pogue J, Walter SD, Yusuf S. Evaluating the benefit of event adjudication of cardiovascular outcomes in large simple RCTs. Clin Trials. 2009;6:239–251. doi: 10.1177/1740774509105223.
25. Vickers AJ, Bennette C, Touijer K, et al. Feasibility study of a clinically-integrated randomized trial of modifications to radical prostatectomy. Trials. 2012;13:23. doi: 10.1186/1745-6215-13-23.
26. Califf RM, Platt R. Embedding cardiovascular research into practice. JAMA. 2013;310:2037–2038. doi: 10.1001/jama.2013.282771.
27. Hejblum G, Chalumeau-Lemoine L, Ioos V, et al. Comparison of routine and on-demand prescription of chest radiographs in mechanically ventilated adults: a multicentre, cluster-randomised, two-period crossover study. Lancet. 2009;374:1687–1693. doi: 10.1016/S0140-6736(09)61459-8.
28. Relton C, Torgerson D, O’Cathain A, et al. Rethinking pragmatic randomised controlled trials: introducing the “cohort multiple randomised controlled trial” design. BMJ. 2010;340:c1066. doi: 10.1136/bmj.c1066.
29. Blajchman MA, Carson JL, Eikelboom JW, et al. The role of comparative effectiveness research in transfusion medicine clinical trials: proceedings of a National Heart, Lung, and Blood Institute workshop. Transfusion. 2012;52:1363–1378. doi: 10.1111/j.1537-2995.2012.03640.x.
30. Heddle NM, Cook RJ, Arnold DM, et al. The effect of blood storage duration on in-hospital mortality: a randomized controlled pilot feasibility trial. Transfusion. 2012;52:1203–1212. doi: 10.1111/j.1537-2995.2011.03521.x.
31. Fiore LD, Brophy M, Ferguson RE, et al. A point-of-care clinical trial comparing insulin administered using a sliding scale versus a weight-based regimen. Clin Trials. 2011;8:183–195. doi: 10.1177/1740774511398368.
32. Vickers AJ, Savage CJ, Shouery M, et al. Validation study of a web-based assessment of functional recovery after radical prostatectomy. Health Qual Life Outcomes. 2010;8:82. doi: 10.1186/1477-7525-8-82.
33. Anastasiadis E, Ahmed HU, Relton, et al. A novel randomised controlled trial design in prostate cancer. BJU Int. doi: 10.1111/bju.12735. Epub ahead of print 14 March 2014.
34. Truog RD, Robinson W, Randolph A, et al. Is informed consent always necessary for randomized, controlled trials? New Engl J Med. 1999;340:804–807. doi: 10.1056/NEJM199903113401013.
35. Kass NE, Faden RR, Goodman SN, et al. The research-treatment distinction: a problematic approach for determining which activities should have ethical oversight. Hastings Cent Rep. 2013;(Spec No):S4–S15. doi: 10.1002/hast.133.
36. Drazen JM, Solomon CG, Greene MF. Informed consent and SUPPORT. New Engl J Med. 2013;368:1929–1931. doi: 10.1056/NEJMe1304996.
37. Pharoah PD. The US Office for Human Research Protections’ judgment of the SUPPORT trial seems entirely reasonable. BMJ. 2013;347:f4637. doi: 10.1136/bmj.f4637.
38. Thorpe KE, Zwarenstein M, Oxman AD, et al. A pragmatic-explanatory continuum indicator summary (PRECIS): a tool to help trial designers. CMAJ. 2009;180:E47–E57. doi: 10.1503/cmaj.090523.
39. Yusuf S, Collins R, Peto R. Why do we need some large, simple randomized trials? Stat Med. 1984;3:409–422. doi: 10.1002/sim.4780030421.
40. Roehr B. The appeal of large simple trials. BMJ. 2013;346:f1317. doi: 10.1136/bmj.f1317.
41. Zelen M. A new design for randomized clinical trials. New Engl J Med. 1979;300:1242–1245. doi: 10.1056/NEJM197905313002203.