Abstract

We systematically reviewed randomized controlled trials (RCTs) assessing the effectiveness of computerized decision support systems (CDSSs) featuring rule- or algorithm-based software integrated with electronic health records (EHRs) and evidence-based knowledge. We searched MEDLINE, EMBASE, Cochrane Central Register of Controlled Trials, and Cochrane Database of Abstracts of Reviews of Effects. Information on system design, capabilities, acquisition, implementation context, and effects on mortality, morbidity, and economic outcomes was extracted.

Twenty-eight RCTs were included. CDSS use did not affect mortality (16 trials; 37 395 patients; 2282 deaths; risk ratio [RR] = 0.96; 95% confidence interval [CI] = 0.85, 1.08; I² = 41%). A statistically significant effect was evident in the prevention of morbidity from any disease (9 RCTs; 13 868 patients; RR = 0.82; 95% CI = 0.68, 0.99; I² = 64%), but selective outcome reporting or publication bias cannot be excluded. We observed differences for costs and health service utilization, although these were often small in magnitude.

Across clinical settings, new generation CDSSs integrated with EHRs do not affect mortality and might moderately improve morbidity outcomes.

The quality of medical care is variable and often suboptimal across health care systems.1 Despite the growing availability of knowledge from randomized controlled trials (RCTs) and systematic reviews to guide clinical practice, there remains a discrepancy in the application of evidence into health care services.2 Current research demonstrates the potential of computerized decision support systems (CDSSs) to assist with problems raised in clinical practice, increase clinician adherence to guideline- or protocol-based care, and, ultimately, improve the overall efficiency and quality of health care delivery systems.1,3,4 CDSSs have been additionally shown to increase the use of preventive care in hospitalized patients, facilitate communication between providers and patients, enable faster and more accurate access to medical record data, improve the quality and safety of medication prescribing, and decrease the rate of prescription errors.5–9 A recent study estimated that the adoption of Computerized Physician Order Entry and Clinical Decision Support could prevent 100 000 inpatient adverse drug events (ADEs) per year, resulting in increased inpatient bed availability by more than 700 000 bed-days and opportunity savings approaching €300 million in the studied European Union member states (i.e., the Czech Republic, France, the Netherlands, Sweden, Spain, and the United Kingdom).10

Electronic Health Records (EHRs) represent another innovation that is gaining momentum in health care systems. In the United States, the use of EHRs is encouraged by the $27 billion allocated in reimbursement incentives by the 2009 Health Information Technology for Economic and Clinical Health (HITECH) Act. Under the Act, clinicians and hospitals must demonstrate “meaningful use” of EHRs by adhering to a set of criteria, which includes the implementation of clinical decision support rules relevant to a specialty or high priority hospital condition such as diagnostic test ordering.11 The integration of CDSSs with EHRs through the delivery of guidance messages to health care professionals at the point of care may maximize the impact of both innovations.

A primary barrier to successful CDSS evaluation is the broad definition adopted by the research community, which encompasses a diverse range of interventions and functions (see the box below). The inclusion of studies with variable interventions across diverse health care settings has precluded previous systematic reviews from reaching a decisive understanding of the impact of CDSSs.9,12–14 To address this issue, we conducted a systematic review to rigorously evaluate the impact of CDSSs linked to EHRs on critical outcomes (mortality, morbidity, and costs) and adopted a narrow definition of the intervention to facilitate its coherent and accurate evaluation.

Definitions of Computerized Decision Support Systems (CDSSs) Adopted by Authors of Other Systematic Reviews

| Bates et al.15(p524) (and later adopted by Ash et al.4(p980)) defined a CDSS as a computer-based system providing “passive and active referential information as well as reminders, alerts, and guidelines.” |

| Kawamoto et al.16(p1) (and later adopted by Bright et al.9(p29)) identified a CDSS as “any electronic system designed to aid directly in clinical decision making, in which characteristics of individual patients are used to generate patient-specific assessments or recommendations that are then presented to clinicians for consideration.” |

| Payne17(p47S) classified CDSSs as “computer applications designed to aid clinicians in making diagnostic and therapeutic decisions in patient care.” |

METHODS

Our study protocol18 is registered on PROSPERO: the international prospective register of systematic reviews (ID: 2014:CRD42014007177). This work was performed in accordance with the PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions.19

Eligibility Criteria

Population.

Postgraduate health professionals (medical, nursing, and allied health) in primary, secondary, and tertiary care settings. Only interventions that were implemented in real, nonsimulated, clinical settings were considered.

Types of interventions.

We adapted the definition of a CDSS by Haynes et al.20 and Eberhardt et al.21 We defined a CDSS as an information system aimed to support clinical decision-making, linking patient-specific information in EHRs with evidence-based knowledge to generate case-specific guidance messages through a rule- or algorithm-based software. Our inclusion criteria emphasize the implementation of evidence-based medicine, meaning that computer-generated guidance messages had to be based on literature or a priori evidence (e.g., guidelines or point-of-care services) and not on expert opinions. This knowledge had to then be delivered to medical doctors or allied health care professionals through electronic media (e.g., computer, smartphone, or tablet). We did not exclude a CDSS, however, based on the degree of literature it covered in the literature surveillance system. In other words, we included a CDSS if it integrated a single evidence-based guideline or incorporated multiple evidence-based guidelines. We also included CDSSs irrespective of the level of patient information archived in the EHR.

Systems that alter the guidance based on previous experience or average behaviors were excluded.

We included software guidance messages, irrespective of the form (e.g., recommendations, alerts, prompts, or reminders), as well as guidance messages, regardless of the target assistance (e.g., diagnostic test ordering and interpretation, treatment planning, therapy recommendations, primary preventive care, therapeutic drug monitoring and dosing, drug prescribing, or chronic disease management). Patient-specific information had to derive from EHRs. Our operational definitions for considering a study “compliant” with the EHR were inclusive: from clinical data repository and health data repository (CDHR), to electronic medical–patient record (EMR and EPR), and EHR.22

Our inclusion criteria match the “6S” Haynes’ model for evidence-based literature products23 and the evolution of online point-of-care services.24 The box below describes, in detail, the characteristics of the CDSSs we evaluated.

Characteristics of Computerized Decision Support Systems (CDSSs)

| Characteristic | Description |
| --- | --- |
| Implementation strategy | |
| Channel | Electronic-based |
| Sharing | Local application, networked, or Web applications |
| Type of device | Local personal computer or handheld device |
| Computational architecture | CDSS built into local EHR, knowledge available from central repository, entire system housed outside local site, clouding system |
| Information | |
| Nature | Knowledge-based |
| Provider | Contents provided by national/international publisher, professional society, health care organization, or governmental agency |
| EBM methodology | General references, specific guidelines for a given clinical condition, suggestions considering a patient’s unique clinical data, list of possible diagnoses, drug interaction alerts, or preventive care reminders |
| Format: delivery form | Messages reminders, prompts, alerts, algorithms, recommendations, rules, order sets, warnings, data reports, and dashboards |
| Target | |
| Targeted setting | Primary, secondary, or tertiary |
| Target expertise | Preventive care (e.g., immunization, screening, or disease management guidelines for secondary prevention) |
| | Diagnosis (e.g., suggestions for possible diagnoses that match a patient’s signs and symptoms) |
| | Planning or implementing treatment (e.g., guidelines for specific diagnoses, drug dosage recommendations, or warnings for drug interactions) |
| | Follow-up management (e.g., corollary orders, reminders for ADE monitoring) |
| | Hospital, provider efficiency (e.g., care plans to minimize length of stay) |
| | Cost reductions and improved patient convenience (e.g., duplicate testing alerts or drug formulary guidelines) |
| Overall goals | Improved overall efficiency, early disease identification, accurate diagnosis, adherence of treatment to protocols, or prevention of ADEs |
| Time | |
| Timing | Immediately at the point of care, before the patient encounter, after the patient encounter, or at any time |
| Type of presentation | “Automatic” (key issues: timing, autonomy and user control over response) |
| | “On demand” (key issues: speed, ease of access, autonomy and user control over response) |
| Person: health professional | Physicians, nurses, or allied health professionals |

Note. ADE = adverse drug event; EBM = evidence-based medicine; EHR = electronic health record.

Types of comparison groups.

To address our objectives, we considered the following comparisons: access to CDSSs according to our definition compared with (1) standard care with no access to CDSSs, (2) CDSSs that do not generate advice, or (3) CDSSs that are not based on evidence. Trials comparing arms accessing the same CDSS at different intensities (e.g., one arm having guidance messages pushed to the health professional vs another arm having guidance message statically available in a folder) were not pooled together with the other trials in the quantitative analyses.

Types of outcomes and assessment measures.

We identified a priori the following (primary) outcome measures for included studies:

1. Mortality: We selected mortality because it is the most relevant and objective outcome, although the time frame over which mortality is captured may vary across studies.

2. Morbidity: We selected and grouped objective patient outcomes such as occurrence of illness (e.g., pneumonia, myocardial infarction, stroke), progression of diseases and hospitalizations.

3. Economic outcomes: Information about health care utilization (e.g., length of stay, emergency department visits, and primary care consultations), and costs.

We did not consider the following outcomes: patient satisfaction, measures of process, and health care professional activity or performance (e.g., adherence to guidelines, rates of screening and other preventive measures, provision of counseling, rates of appropriate drug administration, and identification of at-risk behaviors).

Types of studies.

To be eligible, studies had to be randomized controlled trials (RCTs). Randomization was allowed to be either at the individual- or at the cluster-level.

Data Sources

We systematically searched the English-language literature indexed in MEDLINE, EMBASE, Cochrane Central Register of Controlled Trials, and Cochrane Database of Abstracts of Reviews of Effects. We also considered studies found in the bibliographies of systematic reviews on CDSSs, as well as those identified by experts. The full search strategies for MEDLINE and EMBASE are included in the Appendix.

Study Selection and Data Extraction

We identified RCTs of the CDSSs fulfilling the aforementioned eligibility criteria. We combined the results into a reference management software program (EndNote X5 for Windows, Thomson Reuters, Philadelphia, PA). The database was filtered for duplications to derive a unique set of records. Investigators (K. H. K., T. L., L. B., L. B., V. P., G. R., A. V., and S. B.) independently examined the search results and screened the titles and abstracts; the full text reports of all potentially relevant trials were subsequently screened. Investigators (K. H. K., T. L., L. B., L. B., V. P., G. R., A. V., and S. B.) independently abstracted information on CDSS characteristics and effect estimates from all included trials using a modified version of The Cochrane Effective Practice and Organisation of Care Review Group (EPOC) data collection checklist: study setting and methods (design), comparators, computerized CDSS characteristics, patient or provider characteristics, and outcomes. We performed all steps in the study selection and data extraction processes in duplicate. When necessary, we attempted to contact the study authors to clarify uncertainties in the study design or results.

Risk of Bias Assessment

Two investigators (K. H. K., L. M.) assessed the potential risk of bias in included studies using the criteria outlined in the Cochrane Handbook for Systematic Reviews of Interventions.25 The assessment involved the following key domains: sequence generation, allocation concealment, blinding of outcome assessors, incomplete outcome data, selective outcome reporting, and other sources of bias (e.g., extreme baseline imbalance or failure to disclose source of funding for the study). We did not assess the blinding of personnel and participants given the nature of the intervention: masking procedures to prevent personnel and participants from knowing the allocation to the intervention or control arms were impractical. Furthermore, lack of blinding is unlikely to affect the ascertainment of mortality, an outcome of this review. Our assessment referred only to studies reporting mortality or morbidity outcomes. Any disagreement was resolved by discussion or by the involvement of a third investigator (S. B.).

Data Synthesis

Risk ratios and 95% confidence intervals (CIs) were calculated for each trial by reconstructing contingency tables based on the number of patients randomly assigned and the number of patients with the outcome of interest (analysis in accordance with the intention-to-treat principle). For the cluster-randomized trials, to calculate adjusted (inflated) CIs that account for the clustering, we performed an approximate analysis as recommended in the Cochrane Handbook.25 Our approach was to multiply the standard error of the effect estimate (from the analysis ignoring the clustering) by the square root of the design effect.25 For this, we used an intracluster correlation coefficient (ICC = 0.027) borrowed from an external source.26 Then, each meta-analysis was performed twice, assuming either a fixed-effects27 or a random-effects model.28 In the absence of heterogeneity, the fixed-effects and the random-effects models provide similar results. When heterogeneity is found, the random-effects model is considered to be more appropriate, although both models may be biased.29
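The contingency-table and clustering steps described above can be sketched as follows. This is a minimal illustration and not the authors' code (the analyses were run in R). The function names and the example counts are hypothetical, and the design effect is taken in its usual form, 1 + (m − 1) × ICC, where m is the average cluster size, a quantity that is not reported in the text and is therefore an assumed input here.

```python
import math

ICC = 0.027  # intracluster correlation coefficient quoted in the text


def log_risk_ratio(events_trt, n_trt, events_ctl, n_ctl):
    """Return (log RR, standard error) from a reconstructed 2x2 table.

    Assumes at least one event in each arm (no continuity correction).
    """
    rr = (events_trt / n_trt) / (events_ctl / n_ctl)
    se = math.sqrt(1 / events_trt - 1 / n_trt + 1 / events_ctl - 1 / n_ctl)
    return math.log(rr), se


def design_effect(mean_cluster_size, icc=ICC):
    """Design effect 1 + (m - 1) * ICC for an average cluster size m."""
    return 1 + (mean_cluster_size - 1) * icc


def inflate_se(se, mean_cluster_size, icc=ICC):
    """Multiply the naive standard error by sqrt(design effect)."""
    return se * math.sqrt(design_effect(mean_cluster_size, icc))


def rr_with_ci(log_rr, se, z=1.96):
    """Back-transform to (RR, lower 95% limit, upper 95% limit)."""
    return (math.exp(log_rr),
            math.exp(log_rr - z * se),
            math.exp(log_rr + z * se))


if __name__ == "__main__":
    # Hypothetical trial: 10/100 events with CDSS vs 20/100 without.
    log_rr, se = log_risk_ratio(10, 100, 20, 100)
    print("ignoring clustering:", rr_with_ci(log_rr, se))
    # Hypothetical average cluster size of 21 patients per cluster.
    print("cluster-adjusted:   ", rr_with_ci(log_rr, inflate_se(se, 21)))
```

The point estimate is unchanged by the adjustment; only the confidence interval widens, which is why a cluster trial analyzed at the patient level appears more precise than it is.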

For all statistical analyses we used the R software environment,30 version 3.0.1, and the “meta” package for R,31 version 2.3-0. Selective outcome reporting or publication bias was assessed using the Begg and Mazumdar adjusted rank correlation test32 and the Egger regression asymmetry test.33 To evaluate whether the results of the studies were homogeneous, we used the Cochran Q test with a 0.10 level of significance.34 We also calculated the I² statistic,35 which describes the percentage of variation across studies that is attributable to heterogeneity rather than chance. We regarded an I² value less than 40% as indicative of “not important heterogeneity” and a value higher than 75% as indicative of “considerable heterogeneity.”25 To evaluate the stability of the results, we also performed a “leave-one-out” sensitivity analysis. The aim of this approach was to evaluate the influence of individual studies by estimating the summary relative risk in the absence of each study.36 All P values are 2-tailed. For all tests (except for heterogeneity), a probability level less than .05 was considered statistically significant.
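As an illustration of the quantities named above, the sketch below pools log risk ratios, computes the Cochran Q and I² statistics, and repeats the pooling with 1 study omitted at a time. It is written under stated assumptions: it uses inverse-variance weights for the fixed-effects model and the DerSimonian-Laird estimator for the random-effects model, which may differ from the specific methods cited in the text and from the defaults of the R “meta” package. The function names and the example data are hypothetical.

```python
import math


def pool(log_rr, se):
    """Pool log risk ratios; return estimates and heterogeneity statistics."""
    k = len(log_rr)
    w = [1 / s ** 2 for s in se]  # inverse-variance weights
    fixed = sum(wi * yi for wi, yi in zip(w, log_rr)) / sum(w)
    # Cochran Q: weighted squared deviations from the fixed-effects estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, log_rr))
    df = k - 1
    # I2: share of total variation attributable to heterogeneity (percent).
    i2 = 100 * (q - df) / q if df > 0 and q > df else 0.0
    # DerSimonian-Laird between-study variance (assumed estimator).
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if df > 0 and c > 0 else 0.0
    w_re = [1 / (s ** 2 + tau2) for s in se]
    pooled_random = sum(wi * yi for wi, yi in zip(w_re, log_rr)) / sum(w_re)
    return {
        "fixed": fixed,
        "se_fixed": math.sqrt(1 / sum(w)),
        "random": pooled_random,
        "se_random": math.sqrt(1 / sum(w_re)),
        "q": q,
        "df": df,
        "i2": i2,
        "tau2": tau2,
    }


def leave_one_out(log_rr, se):
    """Re-pool the data k times, omitting one study each time."""
    return [
        pool(log_rr[:i] + log_rr[i + 1:], se[:i] + se[i + 1:])
        for i in range(len(log_rr))
    ]


if __name__ == "__main__":
    # Hypothetical log risk ratios and standard errors for 4 trials.
    y = [-0.30, 0.05, -0.10, 0.20]
    s = [0.15, 0.10, 0.25, 0.12]
    result = pool(y, s)
    print("random-effects RR:", math.exp(result["random"]))
    print("Q = %.2f on %d df, I2 = %.0f%%" % (result["q"], result["df"], result["i2"]))
    for i, r in enumerate(leave_one_out(y, s)):
        print("omitting study", i + 1, "-> RR", round(math.exp(r["random"]), 3))
```

When the between-study variance is estimated as zero, the two models coincide, which matches the statement that fixed- and random-effects results are similar in the absence of heterogeneity.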

RESULTS

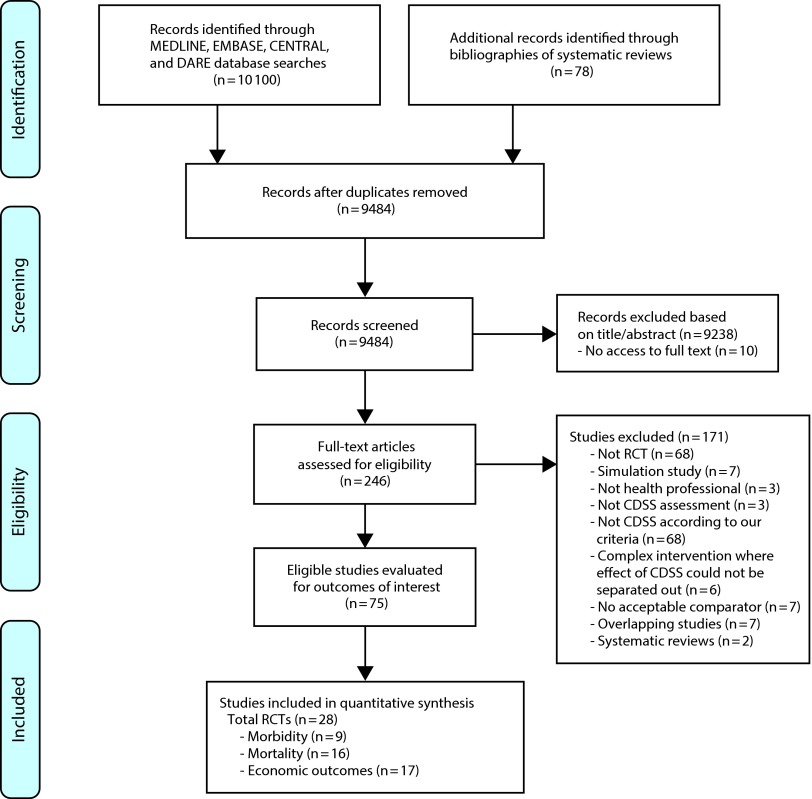

The results of our search and selection process are presented in Figure 1. We identified 28 RCTs that met the predefined inclusion criteria.37–64 Eighteen studies reported mortality or morbidity data37–54 and were included in the meta-analyses, whereas the remaining 10 studies reported only economic outcomes.55–64 A description of the RCTs is provided in the Appendix (available as a supplement to this article at http://www.ajph.org).

FIGURE 1. Summary of evidence search and selection.

Note. CDSS = computerized decision support systems; RCT = randomized controlled trial.

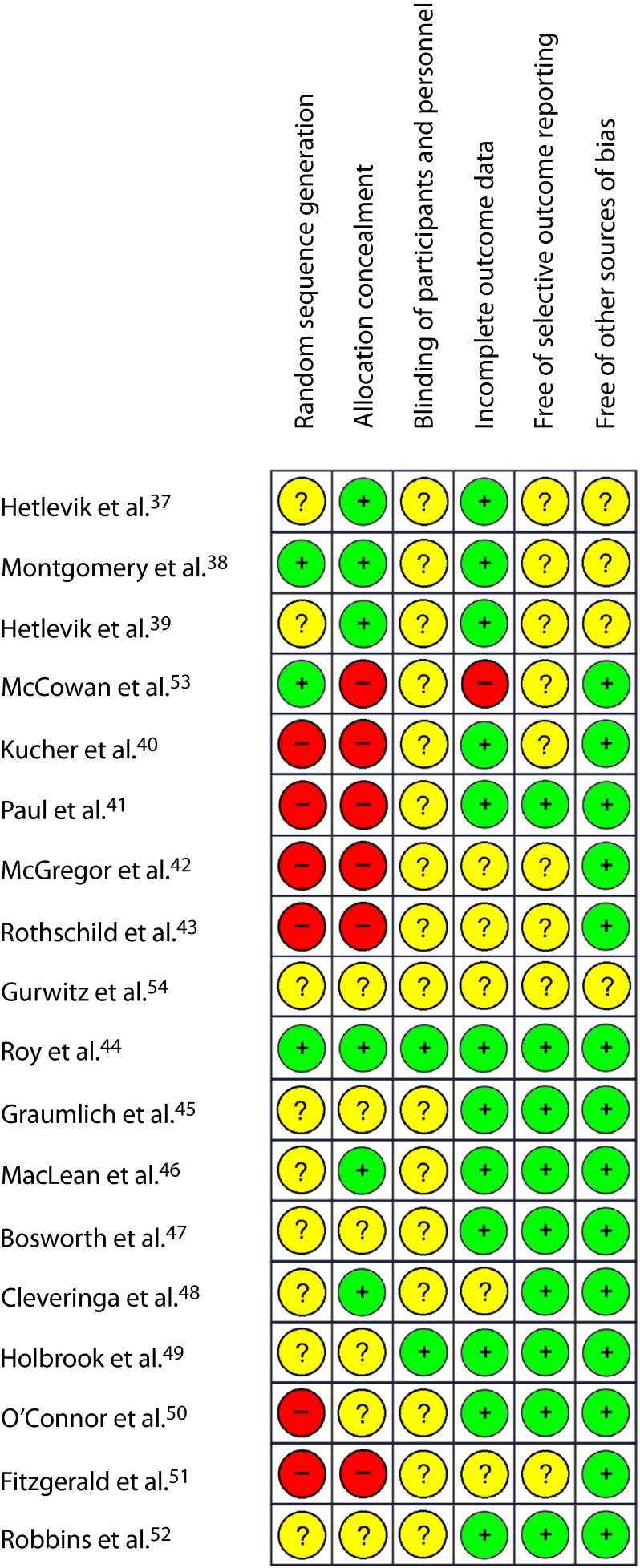

Risk of Bias in Studies Included in the Meta-Analyses

Overall, the assessment of the 18 studies incorporated in the meta-analyses indicated a high risk of bias in 7 studies (39%) and an unclear risk in 10 studies (56%). Only 1 study44 (6%) was judged to be at low risk of bias. We noticed that the majority of trials did not measure mortality as an outcome, but reported it as additional information, often as a reason for loss to follow-up. Readers should be aware that our risk of bias assessment did not evaluate studies based on their intended outcomes, but according to 2 outcomes of our systematic review: mortality and morbidity. Quality assessment items are summarized in Figure 2.

FIGURE 2. Summary of risk-of-bias assessments of the randomized controlled trials included in the meta-analyses.

Note. Green (+) = low risk of bias; Yellow (?) = unclear risk of bias; Red (–) = high risk of bias.

Meta-Analysis of Mortality Outcomes

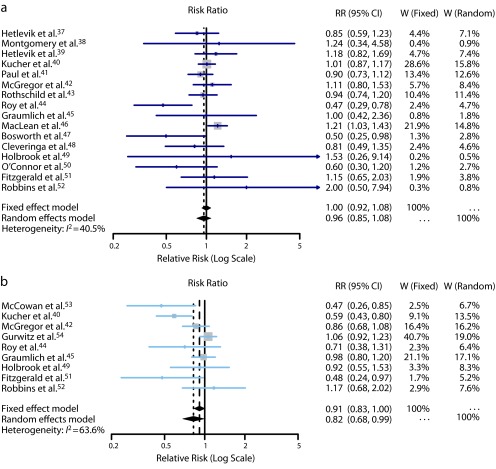

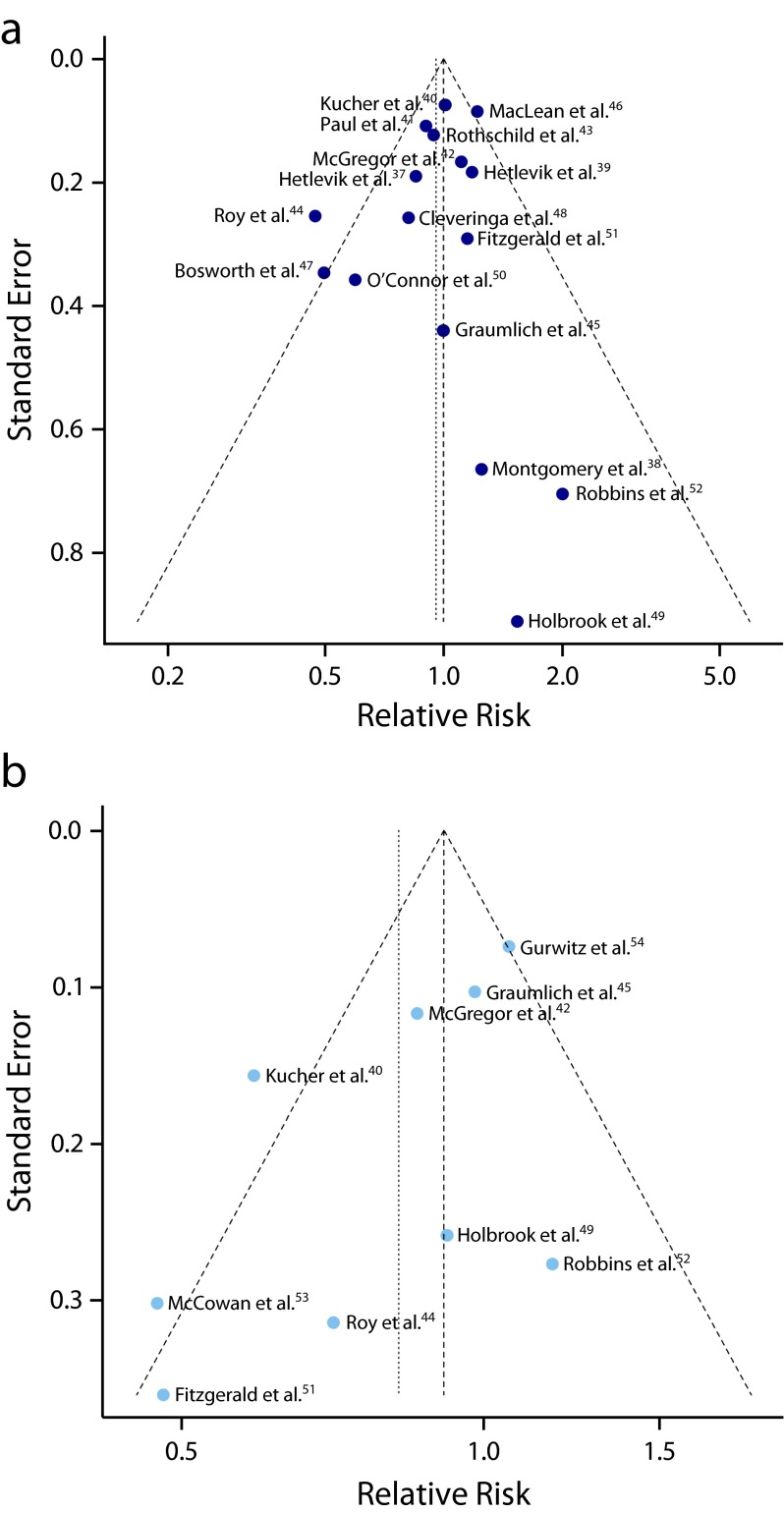

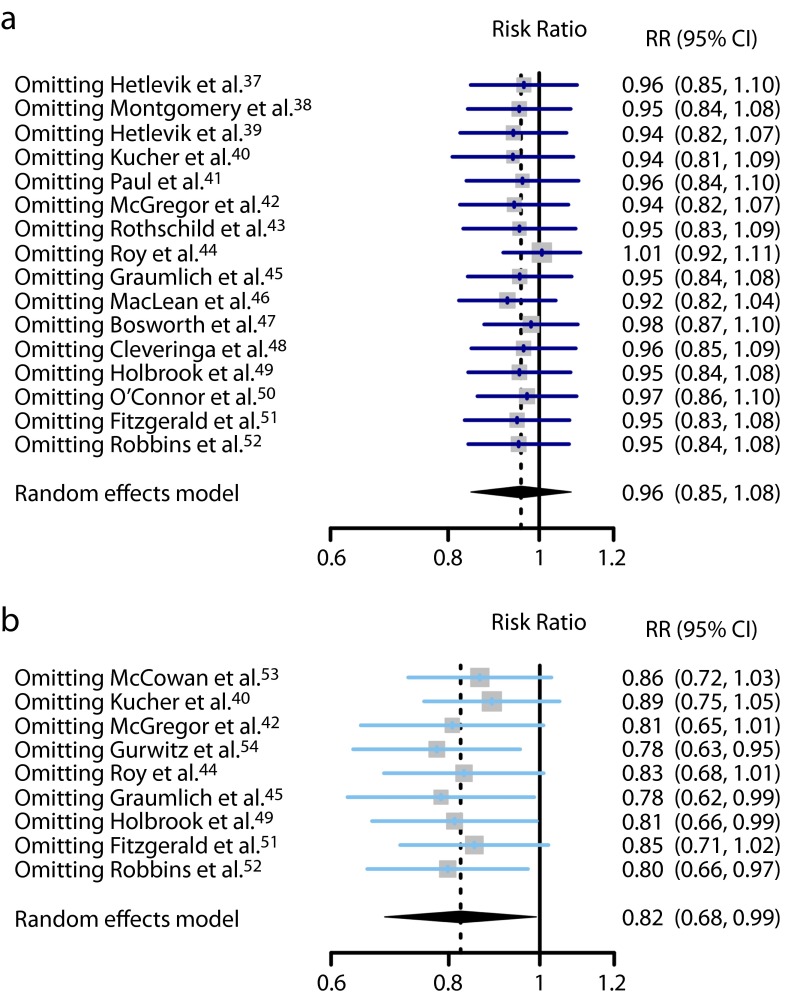

Sixteen RCTs contributed to this analysis.37–52 A total of 37 395 individuals participated in these trials: 18 848 in the intervention groups and 18 547 in the control groups. Seven trials37,41,42,44,47,48,50 reported a lower mortality in the intervention group, while 8 trials38–40,42,46,49,51,52 reported a higher mortality. Only 3 of these differences were statistically significant.44,46,47 The overall mortality rate across all 16 RCTs was 6.2% in the intervention groups (1171 deaths) and 6.0% in the control groups (1111 deaths). The pooled effect estimate was not statistically significant assuming either a fixed-effects model (RR = 1.00; 95% CI = 0.92, 1.08) or a random-effects model (RR = 0.96; 95% CI = 0.85, 1.08). Figure 3a shows the forest plot of the RR estimates and 95% CIs from the individual trials and the pooled results. The Cochran Q test had a P value of .047 and the corresponding I² statistic was 41%, both indicating moderate variability between studies. Visual inspection of the funnel plot (Figure 4a) indicated that the pooled data did not appear to be heavily influenced by publication bias, although it is also possible that a few studies are “missing” from the area of nonsignificance. The P values for the Begg and Egger tests were .96 and .29, respectively, also suggesting a low probability of publication bias. The “leave-one-out” sensitivity analysis, removing 1 study at a time (Figure 5a), confirmed the stability of our results.
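The crude figures in this paragraph can be checked with a few lines of arithmetic. The sketch below uses only the counts reported above; the variable names are hypothetical. The crude ratio of the two overall rates is shown for orientation only and is not expected to equal the pooled RR, because the meta-analysis weights within-trial comparisons rather than collapsing all patients into one table. The value of Q implied by the rounded I² is likewise approximate.

```python
# Counts reported in the text for the 16 trials with mortality data.
deaths_int, n_int = 1171, 18848   # intervention groups
deaths_ctl, n_ctl = 1111, 18547   # control groups

rate_int = 100 * deaths_int / n_int   # rounds to 6.2 (%)
rate_ctl = 100 * deaths_ctl / n_ctl   # rounds to 6.0 (%)

total_patients = n_int + n_ctl        # 37395, as in the abstract
total_deaths = deaths_int + deaths_ctl  # 2282, as in the abstract

# Crude (unweighted) ratio of the two overall rates; not the pooled RR.
crude_ratio = (deaths_int / n_int) / (deaths_ctl / n_ctl)

# I2 = 100 * (Q - df) / Q, so Q is roughly df / (1 - I2 / 100).
df = 16 - 1
implied_q = df / (1 - 41 / 100)

print(f"intervention: {rate_int:.1f}%  control: {rate_ctl:.1f}%")
print(f"patients: {total_patients}  deaths: {total_deaths}")
print(f"crude ratio: {crude_ratio:.3f}  implied Q: {implied_q:.1f}")
```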

FIGURE 3. Forest plots from individual studies and meta-analysis for (a) mortality, all follow-up, and (b) morbidity, any disease.

Note. CI = confidence interval; RR = risk ratio; W = weight. The RR and 95% CI for each study are displayed on a logarithmic scale.

FIGURE 4. Funnel plots of observed relative risk against standard error for (a) mortality and (b) morbidity.

FIGURE 5. “Leave-one-out” sensitivity analyses for studies with (a) mortality outcomes and (b) morbidity outcomes.

Note. CI = confidence interval; RR = risk ratio. Pooled estimates are from random-effects models with 1 study omitted at a time.

Meta-Analysis of Morbidity Outcomes

Nine RCTs contributed to this analysis.40,42,44,45,49,51–54 A total of 13 868 individuals participated in these trials. The analysis revealed a weak inverse association between CDSS use and morbidity from any disease. The difference between the CDSS and control groups in the occurrence of morbidity outcomes was marginally significant assuming a random-effects model (RR = 0.82; 95% CI = 0.68, 0.99), but not significant assuming a fixed-effects model (RR = 0.91; 95% CI = 0.83, 1.00). Figure 3b shows the forest plot of the RR estimates and 95% CIs from the individual trials and the pooled results. The Cochran Q test had a P value of .005 and the corresponding I² statistic was 64%, both indicating substantial variability between studies. Visual inspection of the funnel plot (Figure 4b) indicated slight asymmetry, with relatively few studies located midway in the area of nonsignificance. The P values for the Begg and Egger tests were .18 and .07, respectively, suggesting the possible existence of selective outcome reporting bias or small study effects. The sensitivity analysis showed that the pooled estimates were fairly unstable (Figure 5b).

Qualitative Assessment of Economic Outcomes

Seventeen RCTs reported economic outcomes.41–43,45,46,50,53,55–64 Three of these46,50,59 presented the economic data in separate publications.65–67 Differences were seen for costs and health service utilization (e.g., drug or test orders), but these were often small in magnitude. Across economic outcomes, interventions equipped with CDSSs did not consistently perform better than nonequipped ones. Data regarding the impact of CDSSs on cost and health services utilization are given in Table 1.

TABLE 1. Impact of Computerized Decision Support Systems (CDSSs) on Costs and Health Services Utilization

| Study | Impact Dimension | Outcomes Reported | Reference Time, Months | CDSS Costs (a) | Cost Difference (b) | Variable (c) | Impact (d) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| O’Connor et al.50 (e) | Cost-effectiveness | Outpatient and pharmacy costs | 12 | Year 1: 120; following years: 76 | 21 690 | $/QALY | ↑ |
| | | Pharmacy costs | 12 | | Dominant | $/QALY | ↓ |
| | | Pharmacy costs | 12 | | 3017 | $/QALY | ↑ |
| Holbrook et al.59 (f) | Cost-effectiveness | Disease management costs | 12 | 1821 | 153 169 | $/QALY | ↑ |
| Subramanian et al.56 | Cost allocation | Drug order costs | 12 | . . . | −7.31 | Cost/resident-y | ↔ |
| | | Drug order and laboratory test costs | 12 | . . . | −4.71 | Cost/resident-y | ↔ |
| Paul et al.41 | Cost allocation | Antibiotic costs | 6 | . . . | −68 | Cost per patient | ↔ |
| | Health services utilization | Length of hospital stay | 6 | . . . | −0.62 | Days/patient | ↓ |
| McGregor et al.42 | Cost allocation | Antimicrobial costs | 3 | . . . | −37.64 | Cost/patient | ↔ |
| | Health services utilization | Length of hospital stay | 3 | . . . | −1.0 | Days/patient | ↓ |
| | | Time spent to perform interventions | | . . . | −0.9 | Person-hours/d | ↓ |
| Abdel-Kader et al.57 | Health services utilization | Renal referrals | 12 | . . . | −6.8% | Absolute change in referrals | ↓ |
| | | Renal test orders | 12 | . . . | 9.2% | Absolute change in referrals | ↑ |
| | | Renal drug orders | 12 | . . . | No difference | | ↔ |
| Tamblyn et al.60 | Health services utilization | Psychotropic drug orders | 22 | . . . | −0.05 | Drug unit/patient | ↔ |
| Bell et al.58 | Health services utilization | Hospital visits | 24 | . . . | 0.15 | No./patient | ↑ |
| | | Drug order and laboratory/diagnostic tests | 24 | . . . | Mixed results | . . . | ↑↓ |
| MacLean et al.46 (g) | Cost allocation | Hospital and emergency room costs | 1 | 4 | −10.94 | Cost/patient/mo | ↔ |
| MacLean et al.46 | Cost allocation | Cost of hospital days and primary/secondary care visits | 12 | . . . | −2426 | Cost for all patients/y | ↔ |
| Javitt et al.61 | Cost allocation | Cost of visits and drug orders | 1 | . . . | −24.8 | Cost per patient/mo | ↔ |
| Apkon et al.62 | Cost allocation | Cost of visits, drug orders, and tests | 2 | . . . | 91 | Cost/patient | ↑ |
| Tierney et al.63 | Cost allocation | Outpatient and inpatient charges | 12 | . . . | Mixed results | Cost/patient | ↑↓ |
| Graumlich et al.45 | Health services utilization | Emergency room visits | 6 | . . . | −5.2% | Absolute change in visits | ↓ |
| McCowan et al.53 | Health services utilization | Hospital visits | 6 | . . . | −3% | Absolute change in no. patients | ↓ |
| | | Primary care visits | 6 | . . . | −19% | Absolute change in no. patients | ↓ |
| | | Drug orders | 6 | . . . | −19% | Absolute change in no. patients | ↓ |
| Rothschild et al.43 | Health services utilization | Length of stay | 5–6 | . . . | −1.4 (total), −1.8 (ICU) | Days/patient | ↓ |
| Cobos et al.64 | Cost allocation | Costs for disease management | 12 | . . . | −82 | Cost/patient | ↓ |
| | | Drug order costs | 12 | . . . | −80 | Cost/patient | ↓ |
| Gonzales et al.55 | Health services utilization | Antibiotic drug order | 6 | . . . | −15.1% | % of patients prescribed antibiotics | ↓ |

Note. ICU = intensive care unit; QALY = quality-adjusted life year.

(a) Costs are reported in US$, per patient. Costs not originally reported in US$ were converted to US$ at the rate of January 1 of the study’s publication year.

(b) When the cost to implement the CDSS was not reported, it was not accounted for in the cost difference between the intervention and control.

(c) If the time period corresponding to the variable was not reported, it was assumed to be the duration of the follow-up (provided in time horizon).

(d) ↑, increase; ↓, decrease; ↔, no change; ↑↓, mixed impacts for this outcome.

(e) Economic analysis in Gilmer et al.65

(f) Economic analysis in O’Reilly et al.66

(g) Economic analysis in Khan et al.67

DISCUSSION

This systematic review of 28 RCTs revealed little evidence for a difference in mortality when pooling results from comparisons of adoption of a CDSS integrated with an EHR versus health care settings without a CDSS. Our review indicates that differences in mortality outcomes, if they exist, appear small across studies and health care services, and may exist only in particular settings with specific diseases and circumstances. However, most of the studies were underpowered and too short to prove or exclude an effect on mortality, and effects as large as a 25% increase or reduction could still be possible. We found weak evidence that an active CDSS is associated with a lower risk for morbidity. All morbidity outcomes selected were relevant from a clinical and health services perspective. Again, results on morbidity outcomes were very diverse, limiting quantitative inferences; however, the summary RR morbidity decrease of 10% to 18% places CDSSs linked to EHRs at the top of the spectrum of quality improvement interventions for their potential impact on health outcomes. The beneficial effects of CDSSs might still be greater than that suggested by the current analysis given the limited number of actual studies providing results on hard outcomes. Finally, we observed differences for costs and health service utilization, but these were often small in magnitude.

Several other systematic reviews provided pooled estimates of the RRs for CDSSs. All reviews observed large between-study heterogeneity. This is expected given the variability in intervention, settings, diseases, and study designs. Despite this limitation, they concluded in favor of CDSSs. Our review differs in several respects. We adopted stricter inclusion criteria, selecting only CDSSs featuring rule- or algorithm-based software integrated with EHRs and evidence-based knowledge. The CDSSs we included can be viewed as a second generation in terms of their technology, information management, and linkage to EHRs. Furthermore, we did not include process and laboratory outcomes such as adherence to guideline recommendations or change in blood values. Analyzing estimates from process outcomes is problematic. Their relevance is questionable and the quality of the data may have been less than optimal, particularly when the data sources were administrative rather than clinical. The overlap between our review and others is limited: approximately 50% in terms of the included studies and less in terms of the raw data. The results of our review complement previous analyses showing that CDSSs are best oriented to directly affect process outcomes (recommendation adherence) and, with decreasing impact, morbidity and mortality.

Several included studies were cluster-RCTs that did not report if they accounted for clustering effects. Trials randomizing at the group level should not be analyzed at the individual participant level. If the clustering is ignored, P values will be artificially small. This problem might result in false positive conclusions that the CDSS has an effect when it does not. Thus, we adjusted estimates of the RRs for our data synthesis using a method that inflates variances. However, such adjusted results should be interpreted cautiously; if the clustering effect is limited across studies, the analysis may be too conservative.

Our meta-analysis has additional limitations. We did not evaluate the quality of the evidence-based information supporting the CDSS recommendations. We accepted study authors’ description of a CDSS as evidence-based at face value, even if the authors did not explain the source of evidence or knowledge in detail. Furthermore, the limited number of trials, especially regarding the meta-analysis for the morbidity outcomes, increases the uncertainty of the findings and conclusions. The trials included were conceptually heterogeneous in terms of their design, setting, participants, and interventions, as well as the definition and measurement of outcomes. In addition, although our literature search was as inclusive as possible without the exclusion of studies based on methodological characteristics, the search was restricted to studies published in indexed journals. We did not search for unpublished studies or for source data. Moreover, the trials included in this meta-analysis were not designed to specifically analyze the relationship between mortality and CDSS use. In fact, mortality was additional information provided often as a reason behind loss to follow-up. Additionally, the follow-up was too short to detect a sufficient number of deaths to show potentially relevant differences. Finally, we cannot exclude that pooling the mortality outcome across different settings (e.g., intensive care units versus primary care) could have influenced the overall result toward a null effect with primary care studies bearing larger weight in the meta-analysis.

The results of this review may provide sufficient evidence to fuel the debate on the prospects of CDSSs linked to EHRs. For those perceiving CDSSs as an autocratic command to doctors, our systematic review may be interpreted as evidence that they do not affect patient mortality, on average, and should be abandoned. For those interested in CDSS dissemination, our results, which show a decrease in morbidity across all settings by one fifth, may be used as an argument to increase CDSS adoption within health care services. Both interpretations might be exaggerated as the evidence is still in its infancy along with the technology and implementation. Many of the trials adopted locally developed CDSS interventions, which may have compromised their level of integration into clinicians’ workflow. The next generation of CDSS trials should focus on systems with a more global outlook featuring authoritative point-of-care services68 and full integration with EHRs. The conclusion of a landmark article by Sim et al.,69 published almost 15 years ago, still reflects the current scenario:

Although the promise of clinical decision support system-facilitated evidence-based medicine is strong, substantial work remains to be done to realize the potential benefits.69(p527)

In conclusion, our results comparing health care services equipped with CDSSs versus those not equipped with them suggest, in broad terms, that this technology does not result in substantial benefits or risks for patients in terms of mortality. Any effect on mortality, when it occurs, is largely dependent on the disease and setting characteristics. Focusing on subgroup analyses, however, can lead to misleading claims when the overall data are limited and unavoidably weak because of inherent design problems. Effects on morbidity might exist, and the magnitude of the effect, on the order of 10% to 20%, could be large enough to impact mortality if appropriate follow-up is ensured. The results of this study may provide enough evidence to advance the debate on the prospects of CDSSs.
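
As a rough check on the magnitude quoted above, a risk ratio converts to a relative risk reduction as 1 − RR. The following is a minimal sketch, in Python, that applies this conversion to the pooled morbidity estimate reported in the abstract (RR = 0.82; 95% CI = 0.68, 0.99). The function name is illustrative and is not part of the analysis code used for this review.

```python
def relative_risk_reduction(rr: float) -> float:
    """Return the relative risk reduction, as a percentage, for a risk ratio."""
    return (1.0 - rr) * 100.0


# Pooled morbidity estimate as reported in the abstract.
rr, ci_low, ci_high = 0.82, 0.68, 0.99

rrr = relative_risk_reduction(rr)
# The bounds swap: the upper RR bound gives the smallest reduction.
rrr_lower = relative_risk_reduction(ci_high)
rrr_upper = relative_risk_reduction(ci_low)

print(f"RRR = {rrr:.0f}% (95% CI = {rrr_lower:.0f}%, {rrr_upper:.0f}%)")
```

Under these inputs the point estimate corresponds to a reduction of about 18%, with an interval running from about 1% to 32%, which is why the estimate is compatible with both a negligible and a sizable effect.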

Acknowledgments

This work was supported by the Italian Ministry of Health (GR-2009-1606736) and by Regione Lombardia (D.R.G. IX/4340 26/10/2012). L. Moja is employed by the IRCCS Galeazzi and Università degli Studi di Milano, which have nonexclusive contracts with commercial publishers to develop or adapt CDSSs based on critically appraised studies and systematic reviews. I. Kunnamo is the Editor-in-Chief at Duodecim Medical Publications, a Finnish company owned by the Finnish Medical Society Duodecim, which develops the Evidence-Based Medicine electronic Decision Support (EBMeDS) service and publishes EBM Guidelines. Massimo Mangia is the Chief Executive Officer of Medilogy, an Italian company that develops and supplies MediDSS, a CDSS.

The authors would like to thank Vanna Pistotti for her support with developing the search strategy.

Note. Funding sources had no role in the writing of this article or the decision to submit it for publication.

Human Participant Protection

An institutional review board approval was not needed for this systematic review because data were obtained from secondary sources.

References

- 1. Roshanov PS, Fernandes N, Wilczynski JM et al. Features of effective computerised clinical decision support systems: meta-regression of 162 randomised trials. BMJ. 2013;346:f657. doi: 10.1136/bmj.f657.

- 2. National Research Council. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: The National Academies Press; 2001.

- 3. Chaudhry B, Wang J, Wu S et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–752. doi: 10.7326/0003-4819-144-10-200605160-00125.

- 4. Ash JS, McCormack JL, Sittig DF et al. Standard practices for computerized clinical decision support in community hospitals: a national survey. J Am Med Inform Assoc. 2012;19(6):980–987. doi: 10.1136/amiajnl-2011-000705.

- 5. Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med. 2003;163(12):1409–1416. doi: 10.1001/archinte.163.12.1409.

- 6. Bonnabry P, Despont-Gros C, Crauser D et al. A risk analysis method to evaluate the impact of a computerized provider order entry system on patient safety. J Am Med Inform Assoc. 2008;15(4):453–460. doi: 10.1197/jamia.M2677.

- 7. de Lusignan S, Chan T. The development of primary care information technology in the United Kingdom. J Ambul Care Manage. 2008;31(3):201–210. doi: 10.1097/01.JAC.0000324664.88131.d2.

- 8. Romano MJ, Stafford RS. Electronic health records and clinical decision support systems: impact on national ambulatory care quality. Arch Intern Med. 2011;171(10):897–903. doi: 10.1001/archinternmed.2010.527.

- 9. Bright TJ, Wong A, Dhurjati R et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157(1):29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- 10. Swedish Presidency of the EU, e-Health for a Healthier Europe! Opportunities for a better use of healthcare resources, 2009. Available at: http://www.government.se/content/1/c6/12/98/15/5b63bacb.pdf. Accessed July 1, 2014.

- 11. Miriovsky BJ, Shulman LN, Abernethy AP. Importance of health information technology, electronic health records, and continuously aggregating data to comparative effectiveness research and learning health care. J Clin Oncol. 2012;30(34):4243–4248. doi: 10.1200/JCO.2012.42.8011.

- 12. Jeffery R, Iserman E, Haynes RB. Can computerized clinical decision support systems improve diabetes management? A systematic review and meta-analysis. Diabet Med. 2013;30(6):739–745. doi: 10.1111/dme.12087.

- 13. Roshanov PS, Misra S, Gerstein HC et al. Computerized clinical decision support systems for chronic disease management: a decision-maker-researcher partnership systematic review. Implement Sci. 2011;6:92. doi: 10.1186/1748-5908-6-92.

- 14. Souza NM, Sebaldt RJ, Mackay JA et al. Computerized clinical decision support systems for primary preventive care: a decision-maker-researcher partnership systematic review of effects on process of care and patient outcomes. Implement Sci. 2011;6:87. doi: 10.1186/1748-5908-6-87.

- 15. Bates DW, Kuperman GJ, Wang S et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10(6):523–530. doi: 10.1197/jamia.M1370.

- 16. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330(7494):765. doi: 10.1136/bmj.38398.500764.8F.

- 17. Payne TH. Computer decision support systems. Chest. 2000;118(2 suppl):47S–52S. doi: 10.1378/chest.118.2_suppl.47s.

- 18. Effectiveness of computerized decision support systems linked to patient records: a systematic review and meta-analysis. PROSPERO 2014:CRD42014007177. Available at: http://www.crd.york.ac.uk/PROSPERO/display_record.asp?ID=CRD42014007177. Accessed July 1, 2014.

- 19. Moher D, Liberati A, Tetzlaff J, Altman DG; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151:264–269, W64. doi: 10.7326/0003-4819-151-4-200908180-00135.

- 20. Haynes RB, Wilczynski NL. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: methods of a decision-maker-researcher partnership systematic review. Implement Sci. 2010;5:12. doi: 10.1186/1748-5908-5-12.

- 21. Eberhardt J, Bilchik A, Stojadinovic A. Clinical decision support systems: potential with pitfalls. J Surg Oncol. 2012;105(5):502–510. doi: 10.1002/jso.23053.

- 22. Gunter TD, Terry NP. The emergence of national electronic health record architectures in the United States and Australia: models, costs, and questions. J Med Internet Res. 2005;7(1):e3. doi: 10.2196/jmir.7.1.e3.

- 23. Dicenso A, Bayley L, Haynes RB. Accessing pre-appraised evidence: fine-tuning the 5S model into a 6S model. Evid Based Nurs. 2009;12(4):99–101. doi: 10.1136/ebn.12.4.99-b.

- 24. Moja L, Banzi R. Navigators for medicine: evolution of online point-of-care evidence-based services. Int J Clin Pract. 2011;65(1):6–11. doi: 10.1111/j.1742-1241.2010.02441.x.

- 25. Higgins JPT, Green S. Cochrane Handbook for Systematic Reviews of Interventions, version 5.0.1. The Cochrane Collaboration. 2011. Available at: www.cochrane-handbook.org. Accessed October 6, 2014.

- 26. Health Services Research Unit. Database of ICCs: Spreadsheet (empirical estimates of ICCs from changing professional practice studies). Available at: http://www.abdn.ac.uk/hsru/research/delivery/behaviour/methodological-research. Accessed July 1, 2014.

- 27. Mantel N, Haenszel W. Statistical aspects of the analysis of data from retrospective studies of disease. J Natl Cancer Inst. 1959;22:719–748.

- 28. DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7:177–188. doi: 10.1016/0197-2456(86)90046-2.

- 29. Petitti DB. Statistical methods in meta-analysis. In: Petitti DB, editor. Meta-Analysis, Decision Analysis, and Cost-Effectiveness Analysis. New York: Oxford University Press; 1999.

- 30. R Core Team. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2013. Available at: http://www.R-project.org. Accessed July 1, 2014.

- 31. Schwarzer G. Meta: an R package for meta-analysis. 2013. Available at: http://cran.r-project.org/package=meta. Accessed July 1, 2014.

- 32. Begg CB, Mazumdar M. Operating characteristics of a rank correlation test for publication bias. Biometrics. 1994;50:1088–1101.

- 33. Egger M, Davey Smith G, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997;315:629–634. doi: 10.1136/bmj.315.7109.629.

- 34. Cochran WG. The combination of estimates from different experiments. Biometrics. 1954;10:101–129.

- 35. Higgins JPT, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327:557–560. doi: 10.1136/bmj.327.7414.557.

- 36. Tobias A. Assessing the influence of a single study in the meta-analysis estimate. Stata Tech Bull. 1999;8:15–17.

- 37. Hetlevik I, Holmen J, Krüger O. Implementing clinical guidelines in the treatment of hypertension in general practice. Evaluation of patient outcome related to implementation of a computer-based clinical decision support system. Scand J Prim Health Care. 1999;17(1):35–40. doi: 10.1080/028134399750002872.

- 38. Montgomery AA, Fahey T, Peters TJ, MacIntosh C, Sharp DJ. Evaluation of computer based clinical decision support system and risk chart for management of hypertension in primary care: randomised controlled trial. BMJ. 2000;320(7236):686–690. doi: 10.1136/bmj.320.7236.686.

- 39. Hetlevik I, Holmen J, Krüger O, Kristensen P, Iversen H, Furuseth K. Implementing clinical guidelines in the treatment of diabetes mellitus in general practice. Evaluation of effort, process, and patient outcome related to implementation of a computer-based decision support system. Int J Technol Assess Health Care. 2000;16(1):210–227. doi: 10.1017/s0266462300161185.

- 40. Kucher N, Koo S, Quiroz R et al. Electronic alerts to prevent venous thromboembolism among hospitalized patients. N Engl J Med. 2005;352(10):969–977. doi: 10.1056/NEJMoa041533.

- 41. Paul M, Andreassen S, Tacconelli E et al. Improving empirical antibiotic treatment using TREAT, a computerized decision support system: cluster randomized trial. J Antimicrob Chemother. 2006;58(6):1238–1245. doi: 10.1093/jac/dkl372.

- 42. McGregor JC, Weekes E, Forrest GN et al. Impact of a computerized clinical decision support system on reducing inappropriate antimicrobial use: a randomized controlled trial. J Am Med Inform Assoc. 2006;13(4):378–384. doi: 10.1197/jamia.M2049.

- 43. Rothschild JM, McGurk S, Honour M et al. Assessment of education and computerized decision support interventions for improving transfusion practice. Transfusion. 2007;47(2):228–239. doi: 10.1111/j.1537-2995.2007.01093.x.

- 44. Roy PM, Durieux P, Gillaizeau F et al. A computerized handheld decision-support system to improve pulmonary embolism diagnosis: a randomized trial. Ann Intern Med. 2009;151(10):677–686. doi: 10.7326/0003-4819-151-10-200911170-00003.

- 45. Graumlich JF, Novotny NL, Nace GS, Aldag JC. Patient and physician perceptions after software-assisted hospital discharge: cluster randomized trial. J Hosp Med. 2009;4(6):356–363. doi: 10.1002/jhm.565.

- 46. Maclean CD, Gagnon M, Callas P, Littenberg B. The Vermont diabetes information system: a cluster randomized trial of a population based decision support system. J Gen Intern Med. 2009;24(12):1303–1310. doi: 10.1007/s11606-009-1147-x.

- 47. Bosworth HB, Olsen MK, Dudley T et al. Patient education and provider decision support to control blood pressure in primary care: a cluster randomized trial. Am Heart J. 2009;157(3):450–456. doi: 10.1016/j.ahj.2008.11.003.

- 48. Cleveringa FG, Welsing PM, van den Donk M et al. Cost-effectiveness of the diabetes care protocol, a multifaceted computerized decision support diabetes management intervention that reduces cardiovascular risk. Diabetes Care. 2010;33:258–263. doi: 10.2337/dc09-1232.

- 49. Holbrook A, Pullenayegum E, Thabane L et al. Shared electronic vascular risk decision support in primary care: Computerization of Medical Practices for the Enhancement of Therapeutic Effectiveness (COMPETE III) randomized trial. Arch Intern Med. 2011;171(19):1736–1744. doi: 10.1001/archinternmed.2011.471.

- 50. O’Connor PJ, Sperl-Hillen JM, Rush WA et al. Impact of electronic health record clinical decision support on diabetes care: a randomized trial. Ann Fam Med. 2011;9(1):12–21. doi: 10.1370/afm.1196.

- 51. Fitzgerald M, Cameron P, Mackenzie C et al. Trauma resuscitation errors and computer-assisted decision support. Arch Surg. 2011;146(2):218–225. doi: 10.1001/archsurg.2010.333.

- 52. Robbins GK, Lester W, Johnson KL et al. Efficacy of a clinical decision-support system in an HIV practice: a randomized trial. Ann Intern Med. 2012;157(11):757–766. doi: 10.7326/0003-4819-157-11-201212040-00003.

- 53. McCowan C, Neville RG, Ricketts IW et al. Lessons from a randomized controlled trial designed to evaluate computer decision support software to improve the management of asthma. Med Inform Internet Med. 2001;26(3):191–201. doi: 10.1080/14639230110067890.

- 54. Gurwitz JH, Field TS, Rochon P et al. Effect of computerized provider order entry with clinical decision support on adverse drug events in the long-term care setting. J Am Geriatr Soc. 2008;56(12):2225–2233. doi: 10.1111/j.1532-5415.2008.02004.x.

- 55. Gonzales R, Anderer T, McCulloch CE et al. A cluster randomized trial of decision support strategies for reducing antibiotic use in acute bronchitis. JAMA Intern Med. 2013;173(4):267–273. doi: 10.1001/jamainternmed.2013.1589.

- 56. Subramanian S, Hoover S, Wagner JL et al. Immediate financial impact of computerized clinical decision support for long-term care residents with renal insufficiency: a case study. J Am Med Inform Assoc. 2012;19(3):439–442. doi: 10.1136/amiajnl-2011-000179.

- 57. Abdel-Kader K, Fischer GS, Li J, Moore CG, Hess R, Unruh ML. Automated clinical reminders for primary care providers in the care of CKD: a small cluster-randomized controlled trial. Am J Kidney Dis. 2011;58(6):894–902. doi: 10.1053/j.ajkd.2011.08.028.

- 58. Bell LM, Grundmeier R, Localio R et al. Electronic health record-based decision support to improve asthma care: a cluster-randomized trial. Pediatrics. 2010;125(4):e770–e777. doi: 10.1542/peds.2009-1385.

- 59. Holbrook A, Thabane L, Keshavjee K et al. Individualized electronic decision support and reminders to improve diabetes care in the community: COMPETE II randomized trial. CMAJ. 2009;181(1-2):37–44. doi: 10.1503/cmaj.081272.

- 60. Tamblyn R, Eguale T, Buckeridge DL et al. The effectiveness of a new generation of computerized drug alerts in reducing the risk of injury from drug side effects: a cluster randomized trial. J Am Med Inform Assoc. 2012;19(4):635–643. doi: 10.1136/amiajnl-2011-000609.

- 61. Javitt JC, Rebitzer JB, Reisman L. Information technology and medical missteps: evidence from a randomized trial. J Health Econ. 2008;27(3):585–602. doi: 10.1016/j.jhealeco.2007.10.008.

- 62. Apkon M, Mattera JA, Lin Z et al. A randomized outpatient trial of a decision-support information technology tool. Arch Intern Med. 2005;165(20):2388–2394. doi: 10.1001/archinte.165.20.2388.

- 63. Tierney WM, Overhage JM, Murray MD et al. Effects of computerized guidelines for managing heart disease in primary care. J Gen Intern Med. 2003;18(12):967–976. doi: 10.1111/j.1525-1497.2003.30635.x.

- 64. Cobos A, Vilaseca J, Asenjo C et al. Cost effectiveness of a clinical decision support system based on the recommendations of the European Society of Cardiology and other societies for the management of hypercholesterolemia: report of a cluster-randomized trial. Dis Manag Health Outcomes. 2005;13(6):421–432.

- 65. Gilmer TP, O’Connor PJ, Sperl-Hillen JM et al. Cost-effectiveness of an electronic medical record based clinical decision support system. Health Serv Res. 2012;47(6):2137–2158. doi: 10.1111/j.1475-6773.2012.01427.x.

- 66. O’Reilly D, Holbrook A, Blackhouse G, Troyan S, Goeree R. Cost-effectiveness of a shared computerized decision support system for diabetes linked to electronic medical records. J Am Med Inform Assoc. 2012;19(3):341–345. doi: 10.1136/amiajnl-2011-000371.

- 67. Khan S, Maclean CD, Littenberg B. The effect of the Vermont Diabetes Information System on inpatient and emergency room use: results from a randomized trial. Health Outcomes Res Med. 2010;1(1):e61–e66. doi: 10.1016/j.ehrm.2010.03.002.

- 68. Banzi R, Liberati A, Moschetti I, Tagliabue L, Moja L. A review of online evidence-based practice point-of-care information summary providers. J Med Internet Res. 2010;12(3):e26. doi: 10.2196/jmir.1288.

- 69. Sim I, Gorman P, Greenes RA et al. Clinical decision support systems for the practice of evidence-based medicine. J Am Med Inform Assoc. 2001;8(6):527–534. doi: 10.1136/jamia.2001.0080527.