Abstract

Hidden Markov random fields (HMRFs) are conventionally assumed to be homogeneous in the sense that the potential functions are invariant across different sites. However, in some biological applications, it is desirable to make HMRFs heterogeneous, especially when there exists some background knowledge about how the potential functions vary. We formally define heterogeneous HMRFs and propose an EM algorithm whose M-step combines a contrastive divergence learner with a kernel smoothing step to incorporate the background knowledge. Simulations show that our algorithm is effective for learning heterogeneous HMRFs and outperforms alternative binning methods. Finally, we learn a heterogeneous HMRF in a real-world genome-wide association study.

1 Introduction

Hidden Markov models (HMMs) and hidden Markov random fields (HMRFs) are useful approaches for modelling structured data such as speech, text, vision and biological data. HMMs and HMRFs have been extended in many ways, such as the infinite models [Beal et al., 2002, Gael et al., 2008, Chatzis and Tsechpenakis, 2009], the factorial models [Ghahramani and Jordan, 1997, Kim and Zabih, 2002], the high-order models [Lan et al., 2006] and the nonparametric models [Hsu et al., 2009, Song et al., 2010]. HMMs are homogeneous in the sense that the transition matrix stays the same across different sites. HMRFs, intensively used in image segmentation tasks [Zhang et al., 2001, Celeux et al., 2003, Chatzis and Varvarigou, 2008], are also homogeneous. The homogeneity assumption for HMRFs in image segmentation tasks is legitimate, because people usually assume that the neighborhood system on an image is invariant across different regions. However, it is necessary to bring heterogeneity to HMMs and HMRFs in some biological applications where the correlation structure can change over different sites. For example, a heterogeneous HMM is used for segmenting array CGH data [Marioni et al., 2006], and the transition matrix depends on some background knowledge, namely a distance measurement which changes over the sites. A heterogeneous HMRF is used to filter SNPs in genome-wide association studies [Liu et al., 2012a], and the pairwise potential functions depend on some background knowledge, namely a correlation measure between the SNPs which can differ from pair to pair. In both of these applications, the transition matrix and the pairwise potential functions are heterogeneous and are parameterized as monotone parametric functions of the background knowledge. Although the algorithms tune the parameters of these monotone functions, there is no justification that the chosen parametric form is correct. 
Can we adopt the background knowledge about these heterogeneous parameters adaptively during HMRF learning, and recover the relation between the parameters and the background knowledge nonparametrically?

Our paper is the first to learn HMRFs with heterogeneous parameters by adaptively incorporating the background knowledge. It is an EM algorithm whose M-step combines a contrastive divergence style learner with a kernel smoothing step to incorporate the background knowledge. Details about our EM-kernel-PCD algorithm are given in Section 3 after we formally define heterogeneous HMRFs in Section 2. Simulations in Section 4 show that our EM-kernel-PCD algorithm is effective for learning heterogeneous HMRFs and outperforms alternative methods. In Section 5, we learn a heterogeneous HMRF in a real-world genome-wide association study. We conclude in Section 6.

2 Models

2.1 HMRFs And Homogeneity Assumption

Suppose that 𝕏 = {0, 1, …, m − 1} is a discrete space, and we have a Markov random field (MRF) defined on a random vector X ∈ 𝕏^d. Its conditional independence structure is described by an undirected graph 𝔾(𝕍, 𝔼). The node set 𝕍 consists of d nodes, and the edge set 𝔼 consists of r edges. The probability of x from the MRF with parameters θ is

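$$P(x;\theta) = \frac{Q(x;\theta)}{Z(\theta)} = \frac{1}{Z(\theta)} \prod_{c \in \mathbb{C}(\mathbb{G})} \phi_c(x_c;\theta_c), \tag{1}$$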

where Z(θ) is the normalizing constant. Q(x; θ) is some unnormalized measure with ℂ(𝔾) being some subset of the cliques in 𝔾. The potential function ϕc is defined on the clique c and is parameterized by θc. For simplicity in this paper, we consider pairwise MRFs, whose potential functions are defined on the edges, namely |ℂ(𝔾)| = r. We further assume that each pairwise potential function is parameterized by a single parameter, i.e. θc = {θc}.
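To make Formula (1) concrete, the following Python sketch computes Q(x; θ), Z(θ) and P(x; θ) by brute-force enumeration for a small binary pairwise MRF. It assumes the single-parameter agreement potential ϕ(xu, xv; θ) = θ^{I(xu = xv)}(1 − θ)^{I(xu ≠ xv)} used in Example 1 below; the function names and parameter values are illustrative. Enumeration visits all m^d configurations, which is exactly why Z(θ) becomes intractable for the graphs considered later.

```python
import itertools


def potential(xu, xv, theta):
    """Agreement potential: theta if the two endpoints agree, 1 - theta otherwise."""
    return theta if xu == xv else 1.0 - theta


def unnormalized(x, edges, thetas):
    """Q(x; theta): product of the pairwise potentials over all edges."""
    q = 1.0
    for (u, v), theta in zip(edges, thetas):
        q *= potential(x[u], x[v], theta)
    return q


def partition_function(d, edges, thetas, m=2):
    """Z(theta) by brute force over all m**d configurations (exponential in d)."""
    return sum(unnormalized(x, edges, thetas)
               for x in itertools.product(range(m), repeat=d))


def prob(x, d, edges, thetas, m=2):
    """P(x; theta) = Q(x; theta) / Z(theta), as in Formula (1)."""
    return unnormalized(x, edges, thetas) / partition_function(d, edges, thetas, m)


if __name__ == "__main__":
    edges = [(0, 1), (1, 2), (0, 2)]  # the triangle of Example 1
    thetas = [0.8, 0.7, 0.6]          # illustrative parameter values
    print(partition_function(3, edges, thetas))
    print(prob((0, 0, 0), 3, edges, thetas))
```

For these values Z(θ) = 1.048 up to floating-point rounding, so P(0, 0, 0) = 0.336 / 1.048 ≈ 0.3206.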

A hidden Markov random field [Zhang et al., 2001, Celeux et al., 2003, Chatzis and Varvarigou, 2008] consists of a hidden random field X ∈ 𝕏^d and an observable random field Y ∈ 𝕐^d, where 𝕐 is another space (either continuous or discrete). The random field X is a Markov random field with density P(x; θ), as defined in Formula (1), and its instantiation x cannot be measured directly. Instead, we observe the emitted random field Y, whose individual dimension Yi depends only on Xi for i = 1, …, d, namely $P(y \mid x;\varphi) = \prod_{i=1}^{d} P(y_i \mid x_i;\varphi_{x_i})$, where φ = {φ0, …, φm−1} and φxi parameterizes the emitting distribution of Yi under the state xi. Therefore, the joint probability of x and y is

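$$P(x, y;\theta,\varphi) = P(x;\theta)\,P(y \mid x;\varphi) = \frac{1}{Z(\theta)} \prod_{c \in \mathbb{C}(\mathbb{G})} \phi_c(x_c;\theta_c) \prod_{i=1}^{d} P(y_i \mid x_i;\varphi_{x_i}). \tag{2}$$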

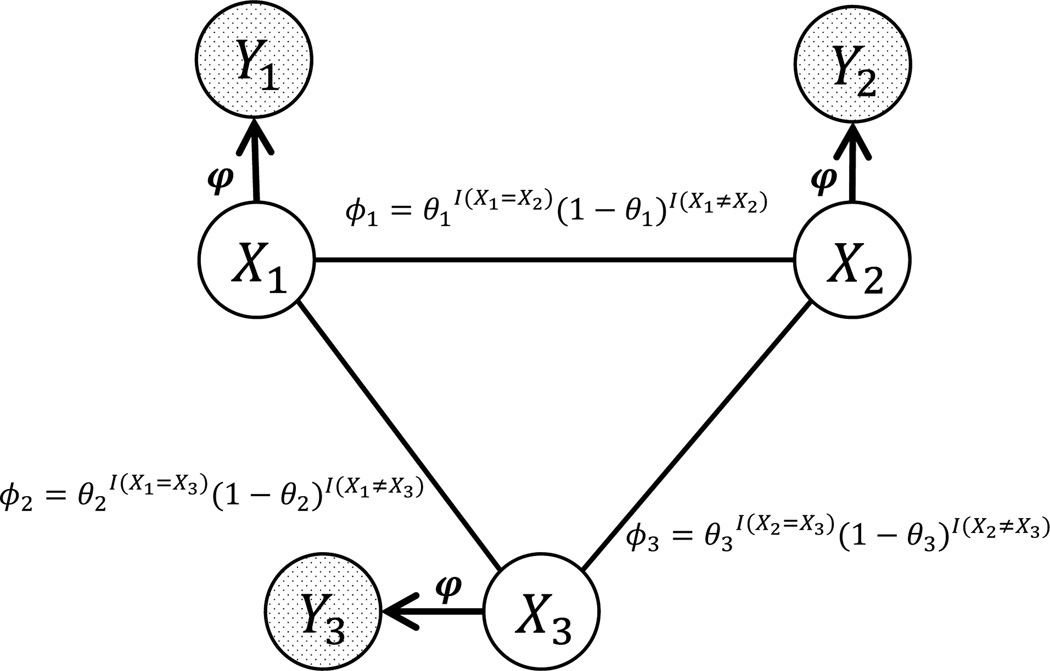

Example 1: One pairwise HMRF model with three latent variables (X1, X2, X3) and three observable variables (Y1, Y2, Y3) is given in Figure 1. Let 𝕏 = {0, 1}. X1, X2 and X3 are connected by three edges. The pairwise potential function ϕi on edge i (connecting Xu and Xv), parameterized by θi (0 < θi < 1), is $\phi_i(X;\theta_i) = \theta_i^{I(X_u = X_v)}(1-\theta_i)^{I(X_u \neq X_v)}$ for i = 1, 2, 3, where I is an indicator function. Let 𝕐 = ℝ. For i = 1, 2, 3, $Y_i \mid X_i = 0 \sim N(\mu_0, \sigma_0^2)$ and $Y_i \mid X_i = 1 \sim N(\mu_1, \sigma_1^2)$, namely φ0 = {μ0, σ0} and φ1 = {μ1, σ1}.

Figure 1.

The pairwise HMRF model with three latent nodes (X1, X2, X3) and observable nodes (Y1, Y2, Y3) with parameters θ = {θ1, θ2, θ3} and φ = {φ0, φ1}.

In common applications of HMRFs, we observe only one instantiation y which is emitted according to the hidden state vector x, and the task is to infer the most probable state configuration of X, or to compute the marginal probabilities of X. In both tasks, we need to estimate the parameters θ = {θ1, …, θr} and φ = {φ0, …, φm−1}. Usually, we seek maximum likelihood estimates of θ and φ which maximize the log likelihood

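$$\mathcal{L}(\theta,\varphi) = \log P(y;\theta,\varphi) = \log \sum_{x \in \mathbb{X}^d} P(x, y;\theta,\varphi). \tag{3}$$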
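For a graph as small as Example 1, the log likelihood in Formula (3) can be evaluated exactly by summing the joint probability (2) over all 2^3 hidden configurations. The Python sketch below does this for Gaussian emissions, assuming the agreement potential of Example 1; the function names are illustrative. A useful sanity check: if both states share the same emission distribution, x carries no information about y, so ℒ(θ, φ) reduces to Σi log N(yi; μ, σ²) regardless of θ.

```python
import itertools
import math


def log_normal_pdf(y, mu, sigma):
    """log N(y; mu, sigma^2)."""
    return -0.5 * ((y - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2.0 * math.pi))


def log_q(x, edges, thetas):
    """log Q(x; theta) for the agreement potentials of Example 1."""
    return sum(math.log(t if x[u] == x[v] else 1.0 - t)
               for (u, v), t in zip(edges, thetas))


def log_likelihood(y, edges, thetas, phi):
    """L(theta, phi) = log sum_x P(x; theta) prod_i P(y_i | x_i; phi_{x_i}), Formula (3).

    phi maps each hidden state j to the pair (mu_j, sigma_j).
    """
    configs = list(itertools.product(range(2), repeat=len(y)))
    log_z = math.log(sum(math.exp(log_q(x, edges, thetas)) for x in configs))
    total = 0.0
    for x in configs:
        log_joint = log_q(x, edges, thetas) - log_z + sum(
            log_normal_pdf(yi, *phi[xi]) for xi, yi in zip(x, y))
        total += math.exp(log_joint)
    return math.log(total)
```

For example, `log_likelihood([0.3, 1.9, 2.4], [(0, 1), (1, 2), (0, 2)], [0.8, 0.7, 0.6], {0: (0.0, 1.0), 1: (2.0, 1.0)})` evaluates Formula (3) at one illustrative parameter setting.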

Since we only have one instantiation (x, y), we usually have to assume that θi’s are the same for i = 1, …, r for effective parameter learning. This homogeneity assumption is widely used in computer vision problems because people usually assume that the neighborhood system on an image is invariant across its different regions. Therefore, conventional HMRFs refer to homogeneous HMRFs, similar to conventional HMMs whose transition matrix is invariant across different sites.

2.2 Heterogeneous HMRFs

In a heterogeneous HMRF, the potential functions on different cliques can be different. Taking the model in Figure 1 as an example, θ1, θ2 and θ3 can be different if the HMRF is heterogeneous. As with conventional HMRFs, we want to be able to address applications that have one instantiation (x, y) where y is observable and x is hidden. Therefore, learning an HMRF from one instantiation y is infeasible if we free all θ’s. To partially free the parameters, we assume that there is some background knowledge k = {k1, …, kr} about the parameters θ = {θ1, …, θr} in the form of some unknown smooth mapping function which maps θi to ki for i = 1, …, r. The background knowledge describes how these potential functions are different across different cliques. Taking pairwise HMRFs for example, the potentials on the edges with similar background knowledge should have similar parameters. We can regard the homogeneity assumption in conventional HMRFs as an extreme type of background knowledge that k1 = k2 = … = kr. The problem we solve in this paper is to estimate θ and φ which maximize the log likelihood ℒ(θ, φ) in Formula (3), subject to the condition that the estimate of θ is smooth with respect to k.

3 Parameter Learning Methods

Learning heterogeneous HMRFs in the above manner involves three difficulties: (i) the intractable Z(θ), (ii) the latent x, and (iii) the heterogeneous θ. We handle the intractable Z(θ) in a way similar to using contrastive divergence [Hinton, 2002] to learn MRFs; we review contrastive divergence and its variations in Section 3.1. To handle the latent x in HMRF learning, we introduce an EM algorithm in Section 3.2, which is applicable to conventional HMRFs. In Section 3.3, we further address the heterogeneity of θ in the M-step of the EM algorithm.

3.1 Contrastive Divergence for MRFs

Assume that we observe s independent samples 𝒳 = {x1, x2, …, xs} from (1), and we want to estimate θ. The log likelihood ℒ(θ|𝒳) is concave w.r.t. θ, and we can use gradient ascent to find the MLE of θ. The partial derivative of ℒ(θ|𝒳) with respect to θi is

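$$\frac{\partial \mathcal{L}(\theta \mid \mathcal{X})}{\partial \theta_i} = E_{\mathcal{X}}\psi_i - E_{\theta}\psi_i, \tag{4}$$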

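where ψi is the sufficient statistic corresponding to θi, $E_{\mathcal{X}}\psi_i = \frac{1}{s}\sum_{j=1}^{s}\psi_i(x^j)$ is its empirical mean over the samples (taking ℒ(θ|𝒳) as the log likelihood averaged over the s samples), and Eθψi is the expectation of ψi with respect to the distribution specified by θ. In the i-th iteration of gradient ascent, the parameter update is

$$\theta^{(i+1)} = \theta^{(i)} + \eta\left(E_{\mathcal{X}}\psi - E_{\theta^{(i)}}\psi\right),$$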

where η is the learning rate. However, the exact computation of Eθψi takes time exponential in the treewidth of 𝔾. A few sampling-based methods have been proposed to solve this problem. The key differences among these methods are how to draw particles and how to compute Eθψ from the particles. MCMC-MLE [Geyer, 1991, Zhu and Liu, 2002] uses importance sampling, but might suffer from degeneracy when θ(i) is far away from θ(1). Contrastive divergence [Hinton, 2002] generates new particles in each iteration according to the current θ(i) and does not require the particles to reach equilibrium, which saves computation. Variations of contrastive divergence include particle-filtered MCMC-MLE [Asuncion et al., 2010], persistent contrastive divergence (PCD) [Tieleman, 2008] and fast PCD [Tieleman and Hinton, 2009]. Because PCD is efficient and easy to implement, we employ it in this paper. Its pseudocode is provided in Algorithm 1. Besides contrastive divergence, MRFs can also be learned via ratio matching [Hyvärinen, 2007], non-local contrastive objectives [Vickrey et al., 2010], noise-contrastive estimation [Gutmann and Hyvärinen, 2010] and minimum KL contraction [Lyu, 2011].
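The gradient in Formula (4) can be checked numerically on a small graph. The sketch below assumes the agreement potential of Example 1 and works in the log-odds parameterization λi = log(θi/(1 − θi)). Since log ϕi = I(xu = xv) log(θi/(1 − θi)) + log(1 − θi), and the second term cancels against Z(θ), the model is then an exponential family with sufficient statistic ψi(x) = I(xu = xv), and (4) holds exactly in λ; the derivative with respect to θi is the same quantity multiplied by the positive factor 1/(θi(1 − θi)). Expectations are computed here by exact enumeration, which is what the sampling-based methods above approximate; the names are illustrative.

```python
import itertools
import math

EDGES = [(0, 1), (1, 2), (0, 2)]                      # triangle of Example 1
CONFIGS = list(itertools.product(range(2), repeat=3))


def psi(x):
    """Sufficient statistics psi_i(x) = I(x_u == x_v), one per edge."""
    return [1.0 if x[u] == x[v] else 0.0 for u, v in EDGES]


def log_partition(lam):
    """log Z(lambda) by exact enumeration."""
    return math.log(sum(math.exp(sum(l * s for l, s in zip(lam, psi(x))))
                        for x in CONFIGS))


def avg_log_likelihood(lam, samples):
    """(1/s) * sum_j log P(x^j; lambda)."""
    lz = log_partition(lam)
    return sum(sum(l * s for l, s in zip(lam, psi(x))) - lz
               for x in samples) / len(samples)


def gradient(lam, samples):
    """Formula (4): empirical mean of psi minus its model expectation."""
    lz = log_partition(lam)
    model = [0.0] * len(EDGES)
    for x in CONFIGS:
        p = math.exp(sum(l * s for l, s in zip(lam, psi(x))) - lz)
        for i, s in enumerate(psi(x)):
            model[i] += p * s
    empirical = [sum(psi(x)[i] for x in samples) / len(samples)
                 for i in range(len(EDGES))]
    return [e - m for e, m in zip(empirical, model)]
```

A central finite difference of `avg_log_likelihood` agrees with `gradient` to within numerical precision.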

Algorithm 1.

PCD-n Algorithm [Tieleman, 2008]

| Line | Step |
|---|---|
| 1: | Input: independent samples 𝒳 = {x1, x2, …, xs} from P(x; θ), maximum iteration number T |
| 2: | Output: θ̂ from the last iteration |
| 3: | Procedure: |
| 4: | Initialize θ(1) and initialize particles |
| 5: | Calculate E𝒳ψ from 𝒳 |
| 6: | for i = 1 to T do |
| 7: | Advance particles n steps under θ(i) |
| 8: | Calculate Eθ(i)ψ from the particles |
| 9: | θ(i+1) = θ(i) + η(E𝒳ψ − Eθ(i)ψ) |
| 10: | Adjust η |
| 11: | end for |
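Algorithm 1 can be sketched in Python for a binary pairwise MRF. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses the log-odds parameterization λi = log(θi/(1 − θi)) with ψi(x) = I(xu = xv) for the agreement potential of Example 1, advances each persistent particle with n systematic-scan Gibbs sweeps, and uses the decaying schedule η = η0/√i as one possible way to "adjust η" (line 10). The function names are hypothetical, and the comments map each step to the numbered lines of Algorithm 1.

```python
import math
import random


def sigmoid(a):
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    z = math.exp(a)
    return z / (1.0 + z)


def neighbor_lists(d, edges):
    """For each node, the list of (neighbor, edge index) pairs."""
    nbrs = [[] for _ in range(d)]
    for e, (u, v) in enumerate(edges):
        nbrs[u].append((v, e))
        nbrs[v].append((u, e))
    return nbrs


def gibbs_sweep(x, nbrs, lam, rng):
    """One Gibbs sweep for P(x) proportional to exp(sum_e lam_e * I(x_u == x_v))."""
    for u in range(len(x)):
        # log-odds of x_u = 1 versus x_u = 0 given the current neighbors
        a = sum(lam[e] * (1.0 if x[v] == 1 else -1.0) for v, e in nbrs[u])
        x[u] = 1 if rng.random() < sigmoid(a) else 0


def mean_psi(configs, edges):
    """Average of psi_e(x) = I(x_u == x_v) over a list of configurations."""
    sums = [0.0] * len(edges)
    for x in configs:
        for e, (u, v) in enumerate(edges):
            sums[e] += 1.0 if x[u] == x[v] else 0.0
    return [s / len(configs) for s in sums]


def pcd(samples, d, edges, n=1, n_particles=100, T=2000, eta0=1.0, seed=0):
    """PCD-n; returns the estimated log-odds parameters lambda."""
    rng = random.Random(seed)
    nbrs = neighbor_lists(d, edges)
    lam = [0.0] * len(edges)                                      # line 4
    particles = [[rng.randint(0, 1) for _ in range(d)]
                 for _ in range(n_particles)]                     # line 4
    emp = mean_psi(samples, edges)                                # line 5
    eta = eta0
    for i in range(1, T + 1):                                     # line 6
        for p in particles:                                       # line 7
            for _ in range(n):
                gibbs_sweep(p, nbrs, lam, rng)
        model = mean_psi(particles, edges)                        # line 8
        lam = [l + eta * (a - b) for l, a, b in zip(lam, emp, model)]  # line 9
        eta = eta0 / math.sqrt(i + 1)                             # line 10
    return lam
```

The estimate θ̂i is recovered as `sigmoid(lam[i])`. Keeping the particles between iterations, instead of restarting them from the data, is what distinguishes PCD from plain contrastive divergence.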

3.2 Expectation-Maximization for Learning Conventional HMRFs

We begin with a lower bound of the log likelihood function, and then introduce the EM algorithm which handles the latent variables in HMRFs. Let qx(x) be any distribution on x ∈ 𝕏^d. It is well known that the log likelihood ℒ(θ, φ) in (3) has a lower bound given by an auxiliary function ℱ(qx(x), {θ, φ}), defined as follows,

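$$\mathcal{F}(q_x(x),\{\theta,\varphi\}) = \sum_{x} q_x(x)\log\frac{P(x, y;\theta,\varphi)}{q_x(x)} = \mathcal{L}(\theta,\varphi) - \mathrm{KL}\left[q_x(x)\,\|\,P(x \mid y,\theta,\varphi)\right] \le \mathcal{L}(\theta,\varphi), \tag{5}$$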

where KL[qx(x)|P(x|y, θ, φ)] is the Kullback-Leibler divergence between qx(x) and P(x|y, θ, φ), the posterior distribution of the hidden variables. This Kullback-Leibler divergence is nonnegative and is exactly the gap between ℒ(θ, φ) and ℱ(qx(x), {θ, φ}), so ℱ is indeed a lower bound.
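Formula (5) can be verified numerically on Example 1. The Python sketch below, with illustrative parameter values and an illustrative observation y (neither from the paper), computes ℒ(θ, φ), the bound ℱ for a given distribution qx, and the KL divergence, all by enumerating the 2^3 hidden configurations. It confirms that ℱ = ℒ − KL, hence ℱ ≤ ℒ, with equality when qx is the exact posterior.

```python
import itertools
import math

EDGES = [(0, 1), (1, 2), (0, 2)]
THETAS = [0.8, 0.7, 0.6]                 # illustrative values
PHI = {0: (0.0, 1.0), 1: (2.0, 1.0)}     # (mu_j, sigma_j), illustrative values
Y = [0.3, 1.9, 2.4]                      # illustrative observation
CONFIGS = list(itertools.product(range(2), repeat=3))


def log_normal_pdf(y, mu, sigma):
    return -0.5 * ((y - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2.0 * math.pi))


def log_q(x):
    """log Q(x; theta) for the agreement potentials of Example 1."""
    return sum(math.log(t if x[u] == x[v] else 1.0 - t) for (u, v), t in zip(EDGES, THETAS))


LOG_Z = math.log(sum(math.exp(log_q(x)) for x in CONFIGS))


def log_joint(x):
    """log P(x, y; theta, phi), Formula (2)."""
    return log_q(x) - LOG_Z + sum(log_normal_pdf(yi, *PHI[xi]) for xi, yi in zip(x, Y))


LOG_LIK = math.log(sum(math.exp(log_joint(x)) for x in CONFIGS))   # Formula (3)
POSTERIOR = [math.exp(log_joint(x) - LOG_LIK) for x in CONFIGS]     # P(x | y)


def lower_bound(q):
    """F(q, {theta, phi}) = sum_x q(x) [log P(x, y) - log q(x)]."""
    return sum(qx * (log_joint(x) - math.log(qx)) for x, qx in zip(CONFIGS, q) if qx > 0)


def kl(q, p):
    """KL[q || p] for two distributions over CONFIGS."""
    return sum(qx * math.log(qx / px) for qx, px in zip(q, p) if qx > 0)
```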

Expectation-Maximization: We maximize ℒ(θ, φ) with an expectation-maximization (EM) algorithm which iteratively maximizes its lower bound ℱ(qx(x), {θ, φ}). We first initialize θ(0) and φ(0). In the t-th iteration, the updates in the expectation (E) step and the maximization (M) step are

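$$q_x^{(t)}(x) = \arg\max_{q_x} \mathcal{F}\left(q_x(x), \{\theta^{(t-1)}, \varphi^{(t-1)}\}\right), \tag{E}$$

$$\{\theta^{(t)}, \varphi^{(t)}\} = \arg\max_{\theta, \varphi} \mathcal{F}\left(q_x^{(t)}(x), \{\theta, \varphi\}\right). \tag{M}$$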

In the E-step, we maximize ℱ(qx(x), {θ(t−1), φ(t−1)}) with respect to qx(x). Because the difference between ℒ(θ, φ) and ℱ(qx(x), {θ, φ}) is KL[qx(x)|P(x|y, θ, φ)], which is zero if and only if the two distributions coincide, the maximizer in the E-step is P(x|y, θ(t−1), φ(t−1)), namely the posterior distribution of x given y under the current estimated parameters θ(t−1) and φ(t−1). For general graphs, this posterior distribution can be approximated by Markov chain Monte Carlo.
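For binary hidden states with the agreement potentials of Example 1 and Gaussian emissions, one such MCMC method is a Gibbs sampler whose full conditional combines the neighbors' agreement terms with the emission log-likelihood ratio. The sketch below is written under these assumptions (log-odds parameters λe = log(θe/(1 − θe)), emissions N(μj, σj²)); the function name, the burn-in and the number of sweeps are illustrative choices. It returns estimated posterior marginals P(Xi = 1 | y).

```python
import math
import random


def log_normal_pdf(y, mu, sigma):
    """log N(y; mu, sigma^2)."""
    return -0.5 * ((y - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2.0 * math.pi))


def sigmoid(a):
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    z = math.exp(a)
    return z / (1.0 + z)


def posterior_gibbs(y, edges, lam, phi, n_sweeps=20000, burn_in=1000, seed=0):
    """Estimate the posterior marginals P(X_i = 1 | y) by systematic-scan Gibbs sampling.

    Prior: P(x) proportional to exp(sum_e lam_e * I(x_u == x_v)).
    Emissions: Y_i | X_i = j ~ N(mu_j, sigma_j^2), with phi[j] = (mu_j, sigma_j).
    """
    rng = random.Random(seed)
    d = len(y)
    nbrs = [[] for _ in range(d)]
    for e, (u, v) in enumerate(edges):
        nbrs[u].append((v, e))
        nbrs[v].append((u, e))
    # The emission log-likelihood ratio of state 1 versus state 0 is fixed across sweeps.
    evidence = [log_normal_pdf(yi, *phi[1]) - log_normal_pdf(yi, *phi[0]) for yi in y]
    x = [rng.randint(0, 1) for _ in range(d)]
    counts = [0] * d
    for sweep in range(n_sweeps):
        for u in range(d):
            # Full conditional log-odds: emission evidence plus neighbor agreement terms.
            a = evidence[u] + sum(lam[e] * (1.0 if x[v] == 1 else -1.0) for v, e in nbrs[u])
            x[u] = 1 if rng.random() < sigmoid(a) else 0
        if sweep >= burn_in:
            for i in range(d):
                counts[i] += x[i]
    return [c / (n_sweeps - burn_in) for c in counts]
```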

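In the M-step, we maximize $\mathcal{F}(q_x^{(t)}(x), \{\theta, \varphi\})$ with respect to {θ, φ}, which can be rewritten as

$$\mathcal{F}\left(q_x^{(t)}(x), \{\theta,\varphi\}\right) = \sum_{x} q_x^{(t)}(x)\log P(x;\theta) + \sum_{x} q_x^{(t)}(x)\log P(y \mid x;\varphi) - \sum_{x} q_x^{(t)}(x)\log q_x^{(t)}(x).$$

The last term does not depend on {θ, φ}, and each of the first two terms involves only one of θ and φ, so this function can be maximized with respect to θ and φ separately as

$$\theta^{(t)} = \arg\max_{\theta} \sum_{x} q_x^{(t)}(x)\log P(x;\theta), \qquad \varphi^{(t)} = \arg\max_{\varphi} \sum_{x} q_x^{(t)}(x)\log P(y \mid x;\varphi). \tag{6}$$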

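Estimating φ: Estimating φ in this maximum likelihood manner is straightforward, because the maximization decomposes over the states and can be rewritten as follows,

$$\varphi_j^{(t)} = \arg\max_{\varphi_j} \sum_{i=1}^{d} q_i^{(t)}(j)\log P(y_i \mid X_i = j;\varphi_j), \quad j = 0, \ldots, m-1,$$

where $q_i^{(t)}(j) = \sum_{x:\,x_i = j} q_x^{(t)}(x)$ is the posterior marginal probability that Xi = j. Each φj is therefore a weighted maximum likelihood estimate.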
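For the Gaussian emissions of Example 1, this weighted maximization has a closed form: each μj and σj² is a weighted mean and weighted variance of y, with weights q_i^(t)(j). The Python sketch below assumes binary hidden states and assumes that the posterior marginals q_i^(t)(1) are already available from the E-step; the function name is illustrative.

```python
import math


def estimate_phi(y, marginals):
    """Weighted MLE of Gaussian emission parameters for states j = 0, 1.

    marginals[i] is q_i(1), the posterior probability that X_i = 1; q_i(0) = 1 - q_i(1).
    Returns {j: (mu_j, sigma_j)}.
    """
    phi = {}
    for j in (0, 1):
        w = [q if j == 1 else 1.0 - q for q in marginals]
        total = sum(w)
        mu = sum(wi * yi for wi, yi in zip(w, y)) / total
        var = sum(wi * (yi - mu) ** 2 for wi, yi in zip(w, y)) / total
        phi[j] = (mu, math.sqrt(var))
    return phi
```

With hard (0/1) marginals this reduces to the ordinary per-state sample mean and standard deviation.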

Estimating θ: Estimating θ in Formula (6) is difficult due to the intractable Z(θ). Some approaches [Zhang et al., 2001, Celeux et al., 2003] use pseudo-likelihood [Besag, 1975] to estimate θ in the M-step. It can be shown that $\sum_x q_x^{(t)}(x)\log P(x;\theta)$ is concave with respect to θ. Therefore, we can use gradient ascent to find its maximizer, similarly to using contrastive divergence [Hinton, 2002] to learn MRFs in Section 3.1.

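Denote by $\mathcal{L}^{(t)}(\theta) = \sum_x q_x^{(t)}(x)\log P(x;\theta)$ the θ-part of the M-step objective. The partial derivative of $\mathcal{L}^{(t)}(\theta)$ with respect to θi is

$$\frac{\partial \mathcal{L}^{(t)}(\theta)}{\partial \theta_i} = E_{q_x^{(t)}}\psi_i - E_{\theta}\psi_i.$$

Therefore, the derivative here is similar to the derivative in contrastive divergence in Formula (4), except that the empirical expectation E𝒳ψi is replaced by the expectation $E_{q_x^{(t)}}\psi_i$ under the posterior distribution obtained in the E-step; that is, the sufficient statistics are reweighted by $q_x^{(t)}(x)$. We run the EM algorithm until both θ and φ converge. Note that when learning homogeneous HMRFs with this algorithm, we tie all θ's throughout, namely θ = {θ}. We name this parameter learning algorithm for conventional HMRFs the EM-homo-PCD algorithm.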
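In practice, the posterior expectation in this gradient is estimated from hidden configurations drawn in the E-step, in the same way that Eθψi is estimated from particles. A short Python sketch, assuming the log-odds parameterization λi = log(θi/(1 − θi)) with ψi(x) = I(xu = xv) for the agreement potential of Example 1; the function names are illustrative. For EM-homo-PCD, the single tied parameter receives the sum of the per-edge gradients, by the chain rule.

```python
def mean_psi(configs, edges):
    """Average of psi_i(x) = I(x_u == x_v) over a list of configurations."""
    sums = [0.0] * len(edges)
    for x in configs:
        for i, (u, v) in enumerate(edges):
            sums[i] += 1.0 if x[u] == x[v] else 0.0
    return [s / len(configs) for s in sums]


def mstep_gradient(posterior_samples, particles, edges):
    """Per-edge gradient E_q psi_i - E_theta psi_i.

    E_q is estimated from E-step posterior samples, E_theta from model particles.
    """
    e_q = mean_psi(posterior_samples, edges)
    e_theta = mean_psi(particles, edges)
    return [a - b for a, b in zip(e_q, e_theta)]


def tied_gradient(posterior_samples, particles, edges):
    """Gradient for one shared parameter when all theta_i are tied (EM-homo-PCD)."""
    return sum(mstep_gradient(posterior_samples, particles, edges))
```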

3.3 Learning Heterogeneous HMRFs

Learning heterogeneous HMRFs differs from learning conventional homogeneous HMRFs in two ways. First, we need to free the θ's in heterogeneous HMRFs. Second, there is some background knowledge k about how the θ's differ, as introduced in Section 2. Therefore, we make two modifications to the EM-homo-PCD algorithm in order to learn heterogeneous HMRFs with background knowledge. First, we estimate the θ's separately, which inevitably increases the variance of the estimates. Second, within each iteration of the contrastive divergence update, we apply a kernel regression to smooth the estimate of the θ's with respect to the background knowledge k. Specifically, in the i-th iteration of the PCD update, we advance the particles under θ̂(i) for n steps and calculate the moments Eθ̂(i)ψ from the particles. We then update the estimate as

$$\tilde{\theta}^{(i+1)} = \hat{\theta}^{(i)} + \eta\left(E_{q_x^{(t)}}\psi - E_{\hat{\theta}^{(i)}}\psi\right).$$

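Then we regress θ̃(i+1) with respect to k via Nadaraya-Watson kernel regression [Nadaraya, 1964, Watson, 1964], and set θ̂(i+1) to be the fitted values. For ease of notation, we drop the iteration index (i + 1). Suppose that θ̃ = {θ̃1, …, θ̃r} is the estimate before kernel smoothing; we set the smoothed estimate θ̂ = {θ̂1, …, θ̂r} as

$$\hat{\theta}_i = \frac{\sum_{j=1}^{r} K_h(k_i - k_j)\,\tilde{\theta}_j}{\sum_{j=1}^{r} K_h(k_i - k_j)}, \quad i = 1, \ldots, r,$$

where $K_h(u) = K(u/h)/h$ is the kernel function K scaled by the bandwidth h.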

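For the kernel function K, we use the Epanechnikov kernel, $K(u) = \frac{3}{4}(1 - u^2)\,I(|u| \le 1)$, which is usually computationally more efficient than a Gaussian kernel because of its compact support. We tune the bandwidth h through cross-validation, namely we select the bandwidth which minimizes the leave-one-out score

$$\mathrm{LOO}(h) = \frac{1}{r}\sum_{i=1}^{r}\left(\tilde{\theta}_i - \hat{\theta}_i^{(-i)}\right)^2,$$

where $\hat{\theta}_i^{(-i)}$ is the kernel estimate at ki computed without the i-th point.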

Tuning the bandwidth is usually computation-intensive, so we tune it every t0 iterations to save computation. We name our parameter learning algorithm for heterogeneous HMRFs the EM-kernel-PCD algorithm. Its pseudo-code is given in Algorithm 2.
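The kernelRegFit step (line 12 of Algorithm 2) can be sketched in Python as a Nadaraya-Watson smoother with an Epanechnikov kernel and a leave-one-out grid search over h. This is an O(r²)-per-bandwidth illustration, not a scalable implementation, and the names are hypothetical; unlike kernelRegFit(θ̃, k, h), this version takes a grid of candidate bandwidths and returns the chosen one. The factor 1/h in K_h cancels in the ratio, so it is omitted.

```python
import math


def epanechnikov(u):
    """Epanechnikov kernel K(u) = 0.75 * (1 - u^2) on |u| <= 1, zero elsewhere."""
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0


def nw_fit(theta_tilde, k, h, leave_out=False):
    """Nadaraya-Watson fit of theta_tilde on k, evaluated at every k_i.

    With leave_out=True, point i is excluded from its own fit; a point with no
    neighbors inside the kernel window then gets NaN.
    """
    fitted = []
    for i, ki in enumerate(k):
        num = den = 0.0
        for j, kj in enumerate(k):
            if leave_out and j == i:
                continue
            w = epanechnikov((ki - kj) / h)
            num += w * theta_tilde[j]
            den += w
        fitted.append(num / den if den > 0.0 else math.nan)
    return fitted


def loo_score(theta_tilde, k, h):
    """Leave-one-out cross-validation score for bandwidth h."""
    fits = nw_fit(theta_tilde, k, h, leave_out=True)
    if any(math.isnan(f) for f in fits):
        return math.inf
    return sum((t - f) ** 2 for t, f in zip(theta_tilde, fits)) / len(k)


def kernel_reg_fit(theta_tilde, k, grid):
    """Choose h from grid by leave-one-out CV; return (smoothed estimate, chosen h)."""
    h = min(grid, key=lambda g: loo_score(theta_tilde, k, g))
    return nw_fit(theta_tilde, k, h), h
```

Because the weights in each fit sum to one, a constant θ̃ is returned unchanged, while noise that alternates between neighboring ki is largely averaged out.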

Another intuitive way of handling background knowledge about these heterogeneous parameters is to create bins according to the background knowledge and tie the θ's that are in the same bin. Suppose that we have b bins after we carefully select the binwidth, namely we have θ = {θ1, …, θb}. The rest of the algorithm is the same as the EM-homo-PCD algorithm in Section 3.2. We name this parameter learning algorithm via binning the EM-binning-PCD algorithm. We can also regard our EM-kernel-PCD algorithm as a soft-binning version of EM-binning-PCD.

Algorithm 2.

EM-kernel-PCD Algorithm

| Line | Step |
|---|---|
| 1: | Input: sample y, background knowledge k, max iteration number T, initial bandwidth h |
| 2: | Output: θ̂ from the last iteration |
| 3: | Procedure: |
| 4: | Initialize θ̂, φ̂ and particles |
| 5: | while not converged do |
| 6: | E-step: infer x̂ from y |
| 7: | Calculate Ex̂ψ from x̂ |
| 8: | for i = 1 to T do |
| 9: | Advance particles for n steps under θ̂(i) |
| 10: | Calculate Eθ̂(i)ψ from the particles |
| 11: | θ̃(i+1) = θ̂(i) + η(Ex̂ψ − Eθ̂(i)ψ) |
| 12: | θ̂(i+1) = kernelRegFit(θ̃(i+1), k, h) |
| 13: | Adjust η and tune bandwidth h |
| 14: | end for |
| 15: | MLE φ̂ from x̂ and y |
| 16: | end while |
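For comparison, a hard-binning counterpart of the kernel smoothing step can be sketched as below: replacing the kernel weights K_h(ki − kj) with the indicator that ki and kj fall in the same bin turns the Nadaraya-Watson fit into a within-bin average. This is an illustrative assumption about how tying can be emulated, not the authors' EM-binning-PCD code: starting from tied values, averaging the untied update within a bin gives the same iterate as updating the shared parameter with learning rate η divided by the bin size. The bin index ⌊ki / binwidth⌋ and the function name are likewise assumptions.

```python
import math


def bin_fit(theta_tilde, k, binwidth):
    """Tie the estimates whose k_i share a bin (index floor(k_i / binwidth)) to their bin average."""
    bins = {}
    for i, ki in enumerate(k):
        bins.setdefault(math.floor(ki / binwidth), []).append(i)
    fitted = [0.0] * len(k)
    for members in bins.values():
        avg = sum(theta_tilde[i] for i in members) / len(members)
        for i in members:
            fitted[i] = avg
    return fitted
```

Unlike the kernel fit, this estimate jumps at bin boundaries, and each bin only borrows strength from the edges inside it.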

4 Simulations

We investigate the performance of our EM-kernel-PCD algorithm on heterogeneous HMRFs with two different structures, namely a tree-structure HMRF and a grid-structure HMRF. In the simulations, we first set the ground truth of the parameters, and then set the background knowledge. We then generate one instantiation x and then one observation y given x. With the observable y, we apply EM-kernel-PCD, EM-binning-PCD and EM-homo-PCD to learn the parameters θ. Finally, we compare the three algorithms by their average absolute estimate error $\frac{1}{r}\sum_{i=1}^{r}|\hat{\theta}_i - \theta_i|$, where θ̂i is the estimate of θi.

For the HMRFs, each dimension of X takes values in {0, 1}. The pairwise potential function ϕi on edge i (connecting Xu and Xv), parameterized by θi (0 < θi < 1), is $\phi_i(X;\theta_i) = \theta_i^{I(X_u = X_v)}(1-\theta_i)^{I(X_u \neq X_v)}$ for i = 1, …, r, where I is an indicator function. For the tree structure, we choose a perfect binary tree of height 12, which yields a total of 8,191 nodes and 8,190 parameters, i.e. d = 8,191 and r = 8,190. For the grid-structure HMRFs, we choose a grid of 100 rows and 100 columns, which yields a total of 10,000 nodes and 19,800 parameters, i.e. d = 10,000 and r = 19,800. For both models, we generate θi ~ U(0.5, 1) independently and then generate the background knowledge ki. We have two types of background knowledge. In the first type of background knowledge, we set ki = sin θi + ε. In the second type of background knowledge, we set , where ε is random Gaussian noise from $N(0, \sigma_\varepsilon^2)$. We try three values for σε, namely 0.0, 0.01 and 0.02. Then we generate one instantiation x. Finally, we generate one observable y from a d-dimensional multivariate normal distribution N(μx, σ²𝕀), where μ = 2 is the strength of signal, σ² = 1.0 is the variance of the manifestation, and 𝕀 is the identity matrix of dimension d. For our EM-kernel-PCD algorithm, we use an Epanechnikov kernel with α = β = 5. For tuning the bandwidth h, we try 100 values in total, namely 0.005, 0.01, 0.015, …, 0.5. For the EM-binning-PCD algorithm, we set the binwidth to be 0.005. The rest of the parameter settings for the three algorithms are the same, including the parameter n in PCD, which is set to 1, and the number of particles, which is set to 100. We replicate each experiment 20 times and report the averaged results.
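The simulated structures and parameters can be sketched in Python as follows. Heap indexing for the tree (the children of node i are 2i + 1 and 2i + 2) and row-major node numbering for the grid are assumptions made for illustration, as are the function names. Only the first type of background knowledge, ki = sin θi + ε, is generated. The node and edge counts reproduce the values of d and r stated above.

```python
import math
import random


def perfect_binary_tree_edges(height):
    """Perfect binary tree with 2**(height + 1) - 1 nodes; node i has children 2i + 1 and 2i + 2."""
    d = 2 ** (height + 1) - 1
    edges = [(i, c) for i in range(d) for c in (2 * i + 1, 2 * i + 2) if c < d]
    return d, edges


def grid_edges(rows, cols):
    """4-neighbor grid; node (a, b) is numbered a * cols + b."""
    edges = []
    for a in range(rows):
        for b in range(cols):
            node = a * cols + b
            if b + 1 < cols:
                edges.append((node, node + 1))      # right neighbor
            if a + 1 < rows:
                edges.append((node, node + cols))   # lower neighbor
    return rows * cols, edges


def simulate_parameters(r, sigma_eps, rng):
    """theta_i ~ U(0.5, 1) and first-type background knowledge k_i = sin(theta_i) + eps."""
    thetas = [rng.uniform(0.5, 1.0) for _ in range(r)]
    k = [math.sin(t) + rng.gauss(0.0, sigma_eps) for t in thetas]
    return thetas, k
```

For example, `perfect_binary_tree_edges(12)` yields 8,191 nodes and 8,190 edges, and `grid_edges(100, 100)` yields 10,000 nodes and 19,800 edges.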

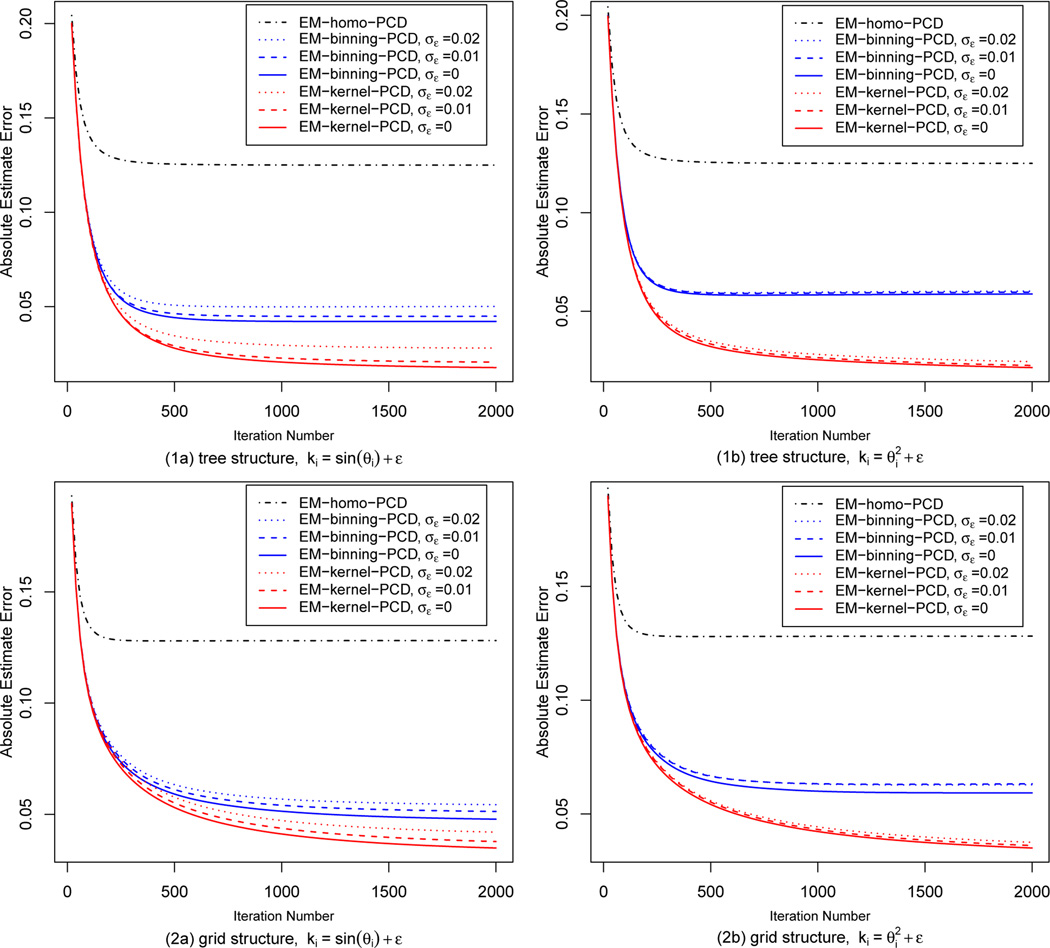

Performance of the algorithms The results from the tree-structure HMRFs and the grid-structure HMRFs are reported in Figure 2. We plot the average absolute error of the estimates of the three algorithms against the number of iterations of the PCD update. We have separate plots for the background knowledge ki = sin θi + ε and for the second type of background knowledge. Since there are three noise levels for the background knowledge, both the EM-kernel-PCD algorithm and the EM-binning-PCD algorithm have three variations. All three algorithms converge as they iterate. The absolute estimate error of the EM-homo-PCD algorithm decreases to 0.125 as it converges. Since the parameters θi are drawn independently from the uniform distribution on the interval [0.5, 1], the EM-homo-PCD algorithm ties all the θi's and estimates them to be 0.75. Therefore, the averaged absolute error is $\int_{0.5}^{1} 2\,|\theta - 0.75|\,d\theta = 0.125$. Our EM-kernel-PCD algorithm significantly outperforms the EM-binning-PCD algorithm and the EM-homo-PCD algorithm. As the noise level of the background knowledge increases, the performance of the EM-kernel-PCD algorithm and the EM-binning-PCD algorithm deteriorates. However, as long as the noise level is moderate, the performance of our EM-kernel-PCD algorithm is satisfactory. The results from the tree-structure HMRFs and the grid-structure HMRFs are comparable, except that it takes more iterations to converge in grid-structure HMRFs than in tree-structure HMRFs.
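The 0.125 figure can be checked numerically: for θ ~ U(0.5, 1) and the tied estimate 0.75, the expected absolute error is (1 − 0.5)/4. A small Python sketch using the midpoint rule, together with the average absolute estimate error used throughout this section; the function names are illustrative.

```python
def average_absolute_error(estimates, truth):
    """(1/r) * sum_i |theta_hat_i - theta_i|."""
    return sum(abs(a - b) for a, b in zip(estimates, truth)) / len(truth)


def expected_error_of_tied_estimate(lo=0.5, hi=1.0, n=100_000):
    """E|theta - (lo + hi)/2| for theta ~ U(lo, hi), by the midpoint rule."""
    c = (lo + hi) / 2.0
    width = (hi - lo) / n
    return sum(abs(lo + (j + 0.5) * width - c) for j in range(n)) / n
```

With the default arguments, `expected_error_of_tied_estimate()` returns 0.125 up to floating-point rounding; the midpoint rule is exact here because the integrand is linear on every cell.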

Figure 2.

Performance of EM-homo-PCD, EM-binning-PCD and EM-kernel-PCD in tree-HMRFs and grid-HMRFs for two types of background knowledge: (a) ki = sin θi + ε, and (b) the second type of background knowledge.

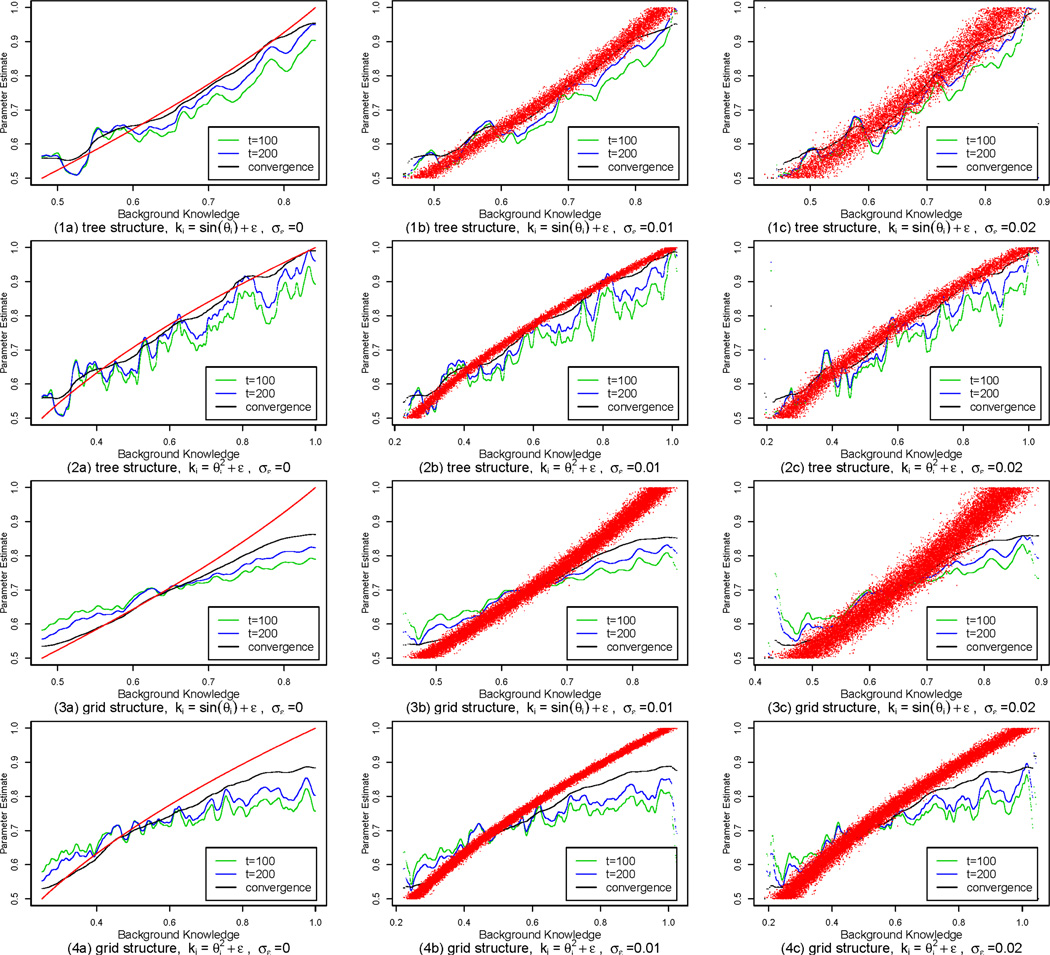

Behavior of the algorithms We then plot the estimated parameters against their background knowledge during the iterations of our EM-kernel-PCD algorithm. We provide plots after 100 iterations, after 200 iterations and after convergence, to show how the EM-kernel-PCD algorithm behaves during the gradient ascent. Figure 3 shows the plots for the background knowledge ki = sin θi + ε and for the second type of background knowledge, each with three levels of noise (namely σε = 0, 0.01 and 0.02), for both the tree-structure HMRFs and the grid-structure HMRFs. As the algorithm iterates, it gradually recovers the relationship between the parameters and the background knowledge. There is still a gap between our estimate and the ground truth, because we only have one hidden instantiation x and we have to infer x from the observed y in the E-step. The estimate bias is especially visible at the boundaries. Boundary bias is very common in kernel regression problems because there are fewer data points at the boundaries [Fan, 1992].

Figure 3.

The behavior of the EM-kernel-PCD algorithm during gradient ascent for different types of background knowledge with different levels of noise in the tree-structure HMRFs and the grid-structure HMRFs. The red dots show the mapping pattern between the ground truth of the parameters and their background knowledge.

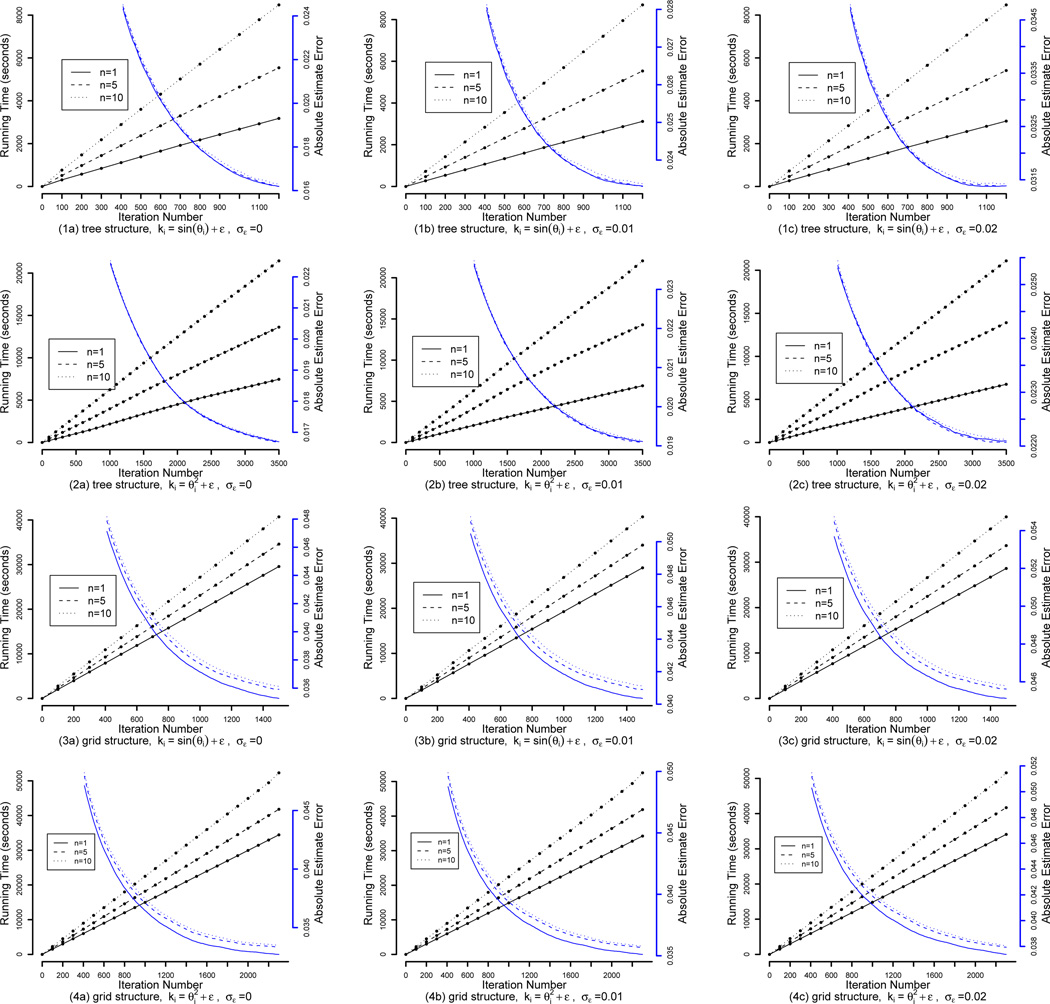

Choosing parameter n One parameter in contrastive divergence algorithms is n, the number of MCMC steps we perform under the current parameters in order to generate the particles. The rationale of contrastive divergence is that a few MCMC steps under the current parameters are enough to find the direction in which to update the parameters, so the particles do not have to reach equilibrium. Therefore, n is usually set to be very small to save computation when learning general Markov random fields. Here we explore how we should choose n in our EM-kernel-PCD algorithm for learning HMRFs. We choose three values for n in the simulations, namely 1, 5 and 10. In Figure 4, the running time and absolute estimate error are plotted for the three choices in the tree-structure HMRFs and grid-structure HMRFs under different levels of noise in the background knowledge ki = sin θi + ε and in the second type of background knowledge. The running time increases as n increases, but the estimation accuracy does not improve. This observation holds for both structures and for all noise levels in both types of background knowledge. This suggests that we can simply choose n = 1 in our EM-kernel-PCD algorithm.

Figure 4.

Absolute estimate error (plotted in blue, in the units on the right axes) and running time (plotted in black, in minutes on the left axes) of the EM-kernel-PCD algorithm in the tree-structure HMRFs and the grid-structure HMRFs when we choose different n values; n is the number of MCMC steps for advancing particles in the PCD algorithm. The absolute estimate error in the first 400 iterations is not shown in the plots.

5 Real-world Application

We use our EM-kernel-PCD algorithm to learn a heterogeneous HMRF model in a real-world genome-wide association study on breast cancer. The dataset is from NCI's Cancer Genetics Markers of Susceptibility (CGEMS) study [Hunter et al., 2007]. In total, 528,173 genetic markers (single-nucleotide polymorphisms or SNPs) for 1,145 breast cancer cases and 1,142 controls are genotyped on the Illumina HumanHap500 array, and the task is to identify the SNPs which are associated with breast cancer. This dataset has been used in the study of Liu et al. [2012b]. We build a heterogeneous HMRF model to identify the associated SNPs. In the HMRF model, the hidden vector X ∈ {0, 1}^d denotes whether the SNPs are associated with breast cancer, i.e. Xi = 1 means that SNP i is associated with breast cancer. For each SNP, we can perform a two-proportion z-test from the minor allele count in cases and the minor allele count in controls. Let Yi denote the test statistic from the two-proportion z-test for SNP i. It can be derived that, approximately, Yi|Xi=0 ~ N(0, 1) and Yi|Xi=1 ~ N(μ1, 1) for some unknown μ1 (μ1 ≠ 0). We assume that X forms a pairwise Markov random field with respect to the graph 𝔾. The graph 𝔾 is built as follows. We query the squared correlation coefficients (r² values) among the SNPs from HapMap [The International HapMap Consortium, 2003]. Each SNP becomes a node in the graph. For each SNP, we connect it with the SNP having the highest r² value with it. We then remove the edges whose r² values are below 0.25. There are in total 340,601 edges in the graph. The pairwise potential function ϕi on edge i (connecting Xu and Xv), parameterized by θi (0 < θi < 1), is $\phi_i(X;\theta_i) = \theta_i^{I(X_u = X_v)}(1-\theta_i)^{I(X_u \neq X_v)}$ for i = 1, …, 340,601, where I is an indicator function. It is believed that two SNPs with a higher level of correlation are more likely to agree in their association with breast cancer. Therefore, we set the background knowledge k about the parameters to be the r² values between the SNPs on the edges. 
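The graph construction just described can be sketched in Python for a toy, dense matrix of r² values. In the study the r² values are queried from HapMap rather than held as a dense in-memory matrix; the function name, the input format and the tie-breaking rule (the first maximum wins) are illustrative assumptions. Each retained edge keeps its r² value as background knowledge ki.

```python
def build_snp_graph(r2, threshold=0.25):
    """Connect each SNP to its highest-r^2 partner and drop edges with r^2 below threshold.

    r2 is a symmetric list of lists of squared correlations; the diagonal is ignored.
    Returns a dict mapping each undirected edge (u, v), u < v, to its r^2 value.
    """
    d = len(r2)
    edges = {}
    for i in range(d):
        best = max((j for j in range(d) if j != i), key=lambda j: r2[i][j])
        if r2[i][best] >= threshold:
            # Two SNPs that pick each other produce the same key, so the edge is stored once.
            edges[(min(i, best), max(i, best))] = r2[i][best]
    return edges
```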
We first perform the two-proportion z-test and set y to be the calculated test statistics. Then we estimate θ|y, k in the heterogeneous HMRF with respect to 𝔾 using our EM-kernel-PCD algorithm. After we estimate θ and μ1, we calculate the marginal probabilities of the hidden X. Eventually, we rank the SNPs by the marginal probabilities P(Xi = 1|y; θ̂, μ̂1), and select the SNPs with the largest marginal probabilities.
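The first step (computing y) and the final ranking step can be sketched in Python. The two-proportion z statistic below compares minor-allele frequencies between cases and controls using a pooled standard error, and assumes two alleles per genotyped individual; the helper names are hypothetical. The ranking helper keeps the SNPs whose estimated marginal probability exceeds a threshold, such as the 0.99 cutoff used when reporting the results.

```python
import math


def two_proportion_z(case_minor, n_cases, control_minor, n_controls):
    """z statistic for the difference in minor-allele frequency (cases minus controls)."""
    n1, n2 = 2 * n_cases, 2 * n_controls          # allele counts, assuming diploid genotypes
    p1, p2 = case_minor / n1, control_minor / n2
    pooled = (case_minor + control_minor) / (n1 + n2)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n1 + 1.0 / n2))
    return (p1 - p2) / se


def top_snps(marginals, threshold=0.99):
    """Indices with P(X_i = 1 | y) above threshold, sorted by decreasing probability."""
    keep = [i for i, p in enumerate(marginals) if p > threshold]
    return sorted(keep, key=lambda i: marginals[i], reverse=True)
```

Swapping cases and controls flips the sign of the statistic, which is why associated SNPs can have μ1 of either sign.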

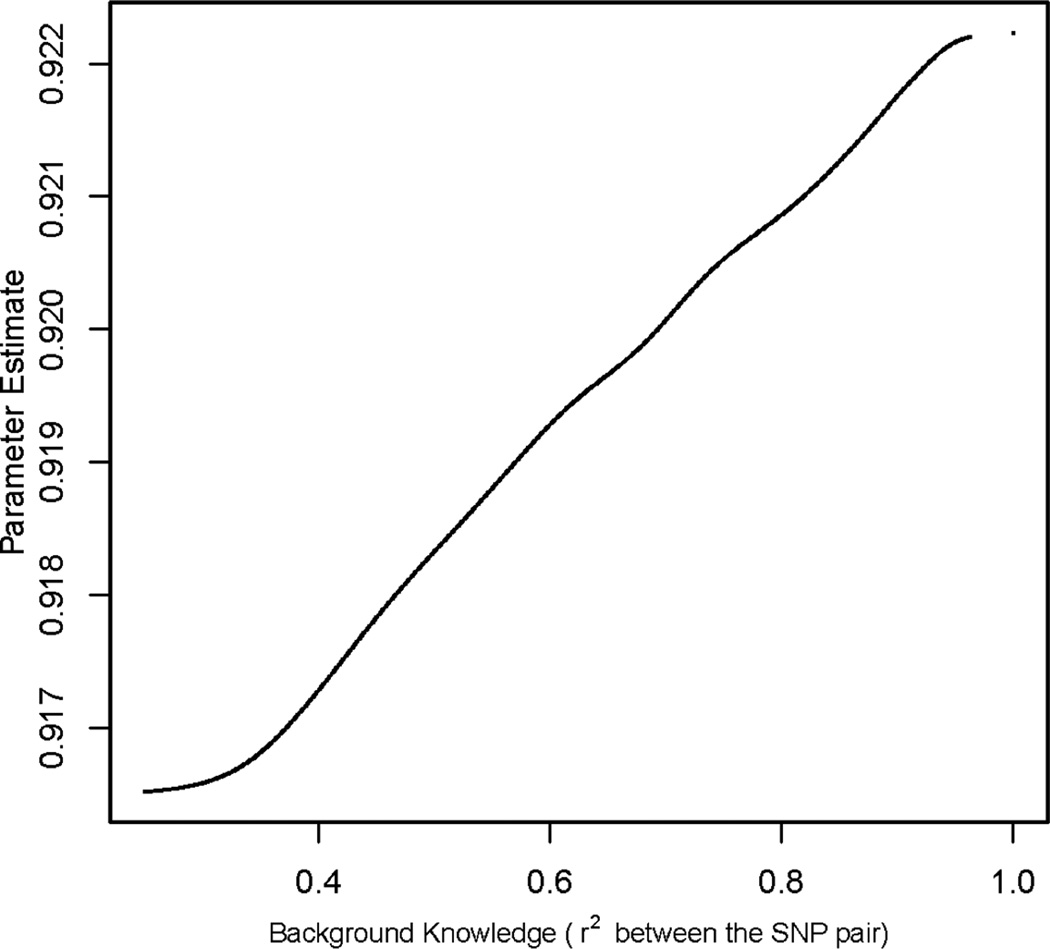

The algorithm ran for 46 days on a single processor (AMD Opteron Processor, 3300 MHz) before it converged. We plotted the estimated parameters against their background knowledge, namely the r² values between the pairs of SNPs on the edges. The plot is provided in Figure 5. The mapping between the estimated parameters and the background knowledge is monotone increasing, as we expected. Finally, we calculated the marginal probabilities of the hidden X and ranked the SNPs by the marginal probabilities P(Xi = 1|y; θ̂, μ̂1). In total, five SNPs have P(Xi = 1|y; θ̂, μ̂1) greater than 0.99, which means that each of them is associated with breast cancer with probability greater than 0.99 given the observed test statistics y under the estimated parameters θ̂ and μ̂1. There is strong evidence in the literature supporting the association with breast cancer for three of them. The two SNPs rs2420946 and rs1219648 on chromosome 10 are reported by Hunter et al. [2007] and have been further validated by 1,776 cases and 2,072 controls from three additional studies. Their associated gene FGFR2 is very well known to be associated with breast cancer in the literature. There is also strong evidence supporting the association of the SNP rs7712949 on chromosome 5: it is highly correlated (r² = 0.948) with the SNP rs4415084, which has been identified as associated with breast cancer by six other large-scale studies.

Figure 5.

The estimated parameters against their background knowledge, namely the r² values between the pairs of SNPs.

6 Conclusion

Capturing parameter heterogeneity is an important issue in machine learning and statistics, and it is particularly challenging in HMRFs due to both the intractable Z(θ) and the latent x. In this paper, we propose the EM-kernel-PCD algorithm for learning the heterogeneous parameters with background knowledge. Our algorithm is built upon the PCD algorithm, which handles the intractable Z(θ). The EM part we add deals with the hidden x. The kernel smoothing part we add adaptively incorporates the background knowledge about the heterogeneity of the parameters into the gradient ascent learning. As a result, the relation between the parameters and the background knowledge is recovered nonparametrically, in a way that adapts to the data. Simulations show that our algorithm is effective for learning heterogeneous HMRFs and outperforms alternative binning methods.

Similar to other EM algorithms, our algorithm only converges to a local maximum of the likelihood ℒ(θ, φ), although the lower bound ℱ(qx(x), {θ, φ}) is nondecreasing over the EM iterations (except for some MCMC error introduced in the E-step). Our algorithm also suffers from a long run time due to the computationally expensive PCD algorithm within each M-step. These two issues are important directions for future work.

Acknowledgements

The authors gratefully acknowledge the support of NIH grants R01GM097618, R01CA165229, R01LM010921, P30CA014520, UL1TR000427, NSF grants DMS-1106586, DMS-1308872 and the UW Carbone Cancer Center.

Footnotes

Appearing in Proceedings of the 17th International Conference on Artificial Intelligence and Statistics (AISTATS) 2014, Reykjavik, Iceland. JMLR: W&CP volume 33.

Contributor Information

Jie Liu, CS, UW-Madison.

Chunming Zhang, Statistics, UW-Madison.

Elizabeth Burnside, Radiology, UW-Madison.

David Page, BMI & CS, UW-Madison.

References

- Asuncion AU, Liu Q, Ihler AT, Smyth P. Particle filtered MCMC-MLE with connections to contrastive divergence. ICML. 2010.

- Beal MJ, Ghahramani Z, Rasmussen CE. The infinite hidden Markov model. NIPS. 2002.

- Besag J. Statistical analysis of non-lattice data. Journal of the Royal Statistical Society. Series D (The Statistician) 1975;24(3):179–195.

- Celeux G, Forbes F, Peyrard N. EM procedures using mean field-like approximations for Markov model-based image segmentation. Pattern Recognition. 2003;36:131–144.

- Chatzis SP, Tsechpenakis G. The infinite hidden Markov random field model. ICCV. 2009. doi: 10.1109/TNN.2010.2046910.

- Chatzis SP, Varvarigou TA. A fuzzy clustering approach toward hidden Markov random field models for enhanced spatially constrained image segmentation. IEEE Transactions on Fuzzy Systems. 2008;16:1351–1361.

- Fan J. Design-adaptive nonparametric regression. Journal of the American Statistical Association. 1992;87(420):998–1004.

- Gael JV, Saatci Y, Teh YW, Ghahramani Z. Beam sampling for the infinite hidden Markov model. ICML. 2008.

- Geyer CJ. Markov chain Monte Carlo maximum likelihood. Computing Science and Statistics. 1991:156–163.

- Ghahramani Z, Jordan MI. Factorial Hidden markov models. Machine Learning. 1997;29:245–273.

- Gutmann M, Hyvärinen A. Noise-contrastive estimation: A new estimation principle for unnormalized statistical models. AISTATS. 2010.

- Hinton G. Training products of experts by minimizing contrastive divergence. Neural Computation. 2002;14:1771–1800. doi: 10.1162/089976602760128018.

- Hsu D, Kakade SM, Zhang T. A spectral algorithm for learning hidden Markov models. COLT. 2009.

- Hunter DJ, Kraft P, Jacobs KB, Cox DG, Yeager M, Hankinson SE, Wacholder S, Wang Z, Welch R, Hutchinson A, Wang J, Yu K, Chatterjee N, Orr N, Willett WC, Colditz GA, Ziegler RG, Berg CD, Buys SS, Mccarty CA, Feigelson HS, Calle EE, Thun MJ, Hayes RB, Tucker M, Gerhard DS, Fraumeni JF, Hoover RN, Thomas G, Chanock SJ. A genome-wide association study identifies alleles in fgfr2 associated with risk of sporadic postmenopausal breast cancer. Nature Genetics. 2007;39(7):870–874. doi: 10.1038/ng2075.

- Hyvärinen A. Some extensions of score matching. Computational Statistics & Data Analysis. 2007;51(5):2499–2512.

- Kim J, Zabih R. Factorial Markov random fields. ECCV. 2002:321–334.

- Lan X, Roth S, Huttenlocher D, Black MJ. Efficient belief propagation with learned higher-order markov random fields. ECCV. 2006:269–282.

- Liu J, Zhang C, McCarty C, Peissig P, Burnside E, Page D. High-dimensional structured feature screening using binary Markov random fields. AISTATS. 2012a.

- Liu J, Zhang C, McCarty C, Peissig P, Burnside E, Page D. Graphical-model based multiple testing under dependence, with applications to genome-wide association studies. UAI. 2012b.

- Lyu S. Unifying non-maximum likelihood learning objectives with minimum KL contraction. NIPS. 2011:64–72.

- Marioni JC, Thorne NP, Tavaré S. BioHMM: a heterogeneous hidden Markov model for segmenting array CGH data. Bioinformatics. 2006;22:1144–1146. doi: 10.1093/bioinformatics/btl089.

- Nadaraya E. On estimating regression. Theory of Probability and Its Applications. 1964;9(1):141–142.

- Song L, Boots B, Siddiqi S, Gordon G, Smola A. Hilbert space embeddings of hidden Markov models. ICML. 2010.

- The International HapMap Consortium. The international hapmap project. Nature. 2003;426:789–796. doi: 10.1038/nature02168.

- Tieleman T. Training restricted Boltzmann machines using approximations to the likelihood gradient. ICML. 2008.

- Tieleman T, Hinton G. Using fast weights to improve persistent contrastive divergence. ICML. 2009.

- Vickrey D, Lin C, Koller D. Non-local contrastive objectives. ICML. 2010.

- Watson GS. Smooth regression analysis. The Indian Journal of Statistics, Series A. 1964;26(4):359–372.

- Zhang Y, Brady M, Smith S. Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE Transactions on Medical Imaging. 2001. doi: 10.1109/42.906424.

- Zhu SC, Liu X. Learning in Gibbsian fields: How accurate and how fast can it be? IEEE Transactions on Pattern Analysis and Machine Intelligence. 2002;24:1001–1006.