Abstract

Background

Primary treatment of localized prostate cancer can result in bothersome urinary, sexual, and bowel symptoms. Yet clinical application of health-related quality-of-life (HRQOL) questionnaires is rare. We employed user-centered design to develop graphic dashboards of questionnaire responses from patients with prostate cancer to facilitate clinical integration of HRQOL measurement.

Methods

We interviewed 50 prostate cancer patients and 50 providers, assessed literacy with validated instruments (Rapid Estimate of Adult Literacy in Medicine short form, Subjective Numeracy Scale, Graphical Literacy Scale), and presented participants with prototype dashboards that display prostate cancer-specific HRQOL using graphic elements derived from patient focus groups. We assessed dashboard comprehension and preferences for table, bar, line, and pictograph formats, in which patient scores were contextualized by the HRQOL scores of similar patients serving as a comparison group.

Results

Health literacy (mean score, 6.8/7) and numeracy (mean score, 4.5/6) of patient participants were high. Patients favored the bar chart (mean rank, 1.8; P = .12 vs line graph; P < .01 vs table and pictograph); providers demonstrated similar preference for table, bar, and line formats (ranked first by 30%, 34%, and 34% of providers, respectively). Providers expressed unsolicited concerns over presentation of comparison group scores (n = 19; 38%) and impact on clinic efficiency (n = 16; 32%).

Conclusion

Based on preferences of prostate cancer patients and providers, we developed the design concept of a dynamic HRQOL dashboard that presents a base patient-centered report in bar chart format, which can be toggled to other formats and can include error bars that frame comparison group scores. Inclusion of lower literacy patients may yield different preferences.

Primary treatment of prostate cancer (PCa) usually involves prostatectomy or radiation therapy to eradicate the cancer and can result in substantial changes in health-related quality of life (HRQOL) for urinary, sexual, and bowel function.1–4 Yet patients are often unaware of the magnitude of their dysfunction relative to expected outcomes,5 even though HRQOL assessment with validated instruments has been considered as a quality performance measure.6,7 Unfortunately, clinical implementation of survey assessment is onerous, which limits the availability of HRQOL data for PCa patient counseling, whether before treatment to aid decision making or after treatment to track HRQOL convalescence. Integration of HRQOL measurement into clinical care has the potential to empower patients with an improved understanding of their HRQOL detriments, leading to more informative discussions with their providers and greater self-efficacy for their PCa care.8

We sought to address deficiencies in PCa care that limit discussion of the HRQOL impacts of treatment through the user-centered design of an HRQOL measurement and presentation system informed by input from patients and providers. Herein, we report on the initial user-centered design, in which we identified patient-centered elements of an HRQOL dashboard and assessed comprehension of and preferences for prototype dashboards for inclusion in the system. Although HRQOL assessment tools that target clinician users have established effectiveness in clinical care for other conditions,9–11 research is limited on the design of meaningful presentations of HRQOL data that engage patients and providers as collaborative system users.

User-centered design is an informatics framework that incorporates target users directly in software development.12 Soliciting input from target users during iterative prototyping cycles results in technology that is more readily adopted by end users.13 Through user-centered design of graphic reports of PCa HRQOL or “dashboards,” we can generate important design concepts that can enhance the clinical care of PCa survivors.

METHODS

We conducted our user-centered design in 2 phases. First, in our preliminary work, we identified HRQOL dashboard elements that are meaningful to patients through focus groups with PCa survivors.14 When asked what questions an HRQOL dashboard could help answer, participants rated the following questions highest: “How am I doing compared with patients like me?”; “How am I doing compared with before treatment?”; and “What can I expect in the future?” These questions map to a longitudinal presentation of the patient's HRQOL in the context of comparison group scores derived from HRQOL outcomes of matched patients. This representation permits comparison with similar patients, enables comparison of current HRQOL with HRQOL before treatment, and allows patients to project expected outcomes based on the HRQOL trends of comparison group patients.
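The comparison group idea can be illustrated with a short Python sketch. The paper states only that comparison group scores come from matched patients (the Discussion mentions the patient's demographic and clinical characteristics and baseline HRQOL); the record fields, the matching rule (same treatment, age within 5 years, baseline within 10 points), and the per-month mean and SD summary below are hypothetical choices for illustration, not the study's method.

```python
from dataclasses import dataclass, field
from statistics import mean, stdev


@dataclass
class PatientRecord:
    """Hypothetical record for one patient; field names are illustrative."""
    treatment: str
    age: int
    baseline: float  # pretreatment domain score, 0-100, higher = better HRQOL
    scores: dict = field(default_factory=dict)  # months after treatment -> score


def is_match(index, other, max_age_gap=5, max_baseline_gap=10.0):
    """Assumed matching rule: same treatment, similar age and baseline score."""
    return (other.treatment == index.treatment
            and abs(other.age - index.age) <= max_age_gap
            and abs(other.baseline - index.baseline) <= max_baseline_gap)


def comparison_group_summary(index, cohort):
    """Return {month: (mean, sd, n)} over cohort members matched to `index`."""
    by_month = {}
    for p in cohort:
        if is_match(index, p):
            for month, score in p.scores.items():
                by_month.setdefault(month, []).append(score)
    summary = {}
    for month in sorted(by_month):
        vals = by_month[month]
        sd = stdev(vals) if len(vals) > 1 else 0.0  # stdev needs >= 2 values
        summary[month] = (mean(vals), sd, len(vals))
    return summary


index = PatientRecord("prostatectomy", 64, 85.0)
cohort = [
    PatientRecord("prostatectomy", 62, 80.0, {3: 50.0, 12: 70.0}),
    PatientRecord("prostatectomy", 67, 90.0, {3: 60.0, 12: 80.0}),
    PatientRecord("radiation", 65, 85.0, {3: 75.0, 12: 78.0}),  # not matched
]
print(comparison_group_summary(index, cohort))
```

A per-month mean with its spread is one simple way to produce the reference trend behind “How am I doing compared with patients like me?”; a deployed system would need a validated matching model and adequately sized matched cohorts.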

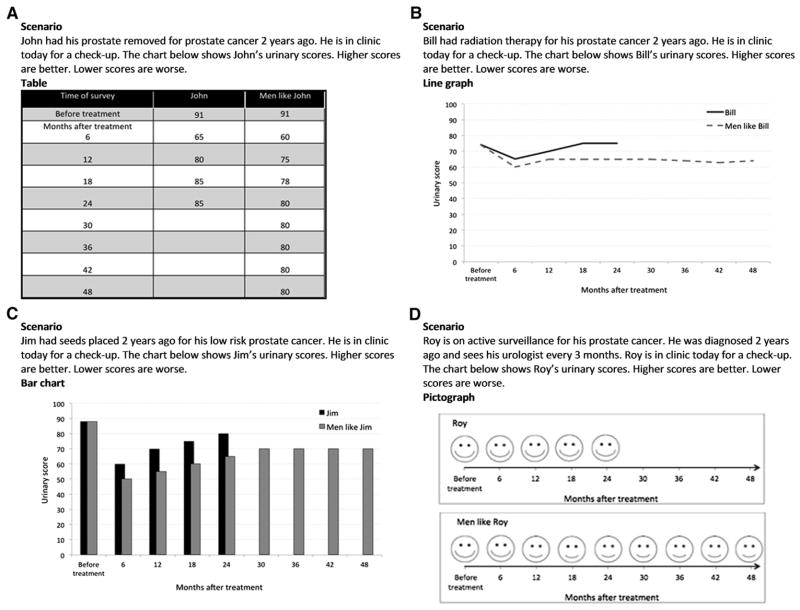

In the second phase of user-centered design, we designed 4 prototype formats for the HRQOL dashboard (Fig 1) that illustrate the patient-centered elements: (1) bar charts and (2) line graphs that display HRQOL data over time; (3) tables that display HRQOL data in raw form; and (4) facial expression pictographs modeled after the Wong-Baker FACES pain scale,15 as pictographs have exhibited strong information transfer for lower literacy patients.16 We then conducted individual interviews with patients and providers to ascertain comprehension and compare preferences among prototype formats of the HRQOL dashboard.

Fig 1.

Prototype HRQOL dashboards and accompanying scenarios for (A) table, (B) line graph, (C) bar chart, and (D) pictograph formats. The displayed dashboards demonstrate the positive frame versions.

Study participants

This study was approved by the University of Washington Institutional Review Board. Written consent was obtained from all participants. We tested prototype dashboards with 50 PCa patients and 50 PCa providers. We recruited patients from local PCa support groups and from UW Medicine urology clinics. Eligible patients were ≥21 years old, had a diagnosis of PCa irrespective of stage or time since treatment, and could read and understand English. Eligible providers were recruited from area urology and radiation oncology clinics and were included if they participated in PCa care. Feedback from focus group patients established that patients commonly engage nonphysician providers (nurses or nursing aides) in HRQOL discussions. Thus, we enrolled 25 physician providers and 25 nonphysician providers. We collected demographic and clinical information from all participants.

Assessment of participant literacy

We assessed participants’ health literacy, numeracy, and graphic literacy with validated literacy instruments. To measure health literacy, we used the short form Rapid Estimate of Adult Literacy in Medicine (REALM-SF),17 a prevalent health literacy instrument that uses medical word recognition and pronunciation to stratify individuals into health literacy levels. The REALM-SF is highly correlated with education level and other measures of health literacy18–20 and is scored from 0 to 7. We did not evaluate providers with the REALM-SF, because providers are familiar with medical terminology.

We assessed numeric literacy using the Subjective Numeracy Scale (SNS).21 The SNS measures self-perceptions of mathematical ability rather than objective performance of mathematical operations. The preference subdomain measures predilections for information in numeric versus prose formats; the ability subdomain measures individuals’ subjective capacity to perform calculations. The SNS is highly correlated with objective numeracy scales22 but may cause less frustration among study participants.

We evaluated graphic literacy with the Graphical Literacy Scale.23 The Graphical Literacy Scale assesses whether individuals understand common graphic representations of numeric health information and is divided into 3 subdomains: Reading, reading between, and reading beyond. Reading measures the ability of an individual to extract information from a graph. Reading between measures one’s ability to compare groups based on graphic displays. Reading beyond measures one’s ability to generate predictions based on graphic displays.

Dashboard evaluation

After literacy assessment, participants viewed each prototype dashboard and responded to questions that measured comprehension and perceived usefulness. Participants then rank-ordered the HRQOL dashboards according to their preference and provided qualitative input on design elements.

Each dashboard illustrated sample HRQOL data for a fictitious patient scenario based on assessment of example patients with the Expanded Prostate Cancer Index Composite (EPIC).24 The EPIC is the most commonly used PCa-specific HRQOL instrument,25 measuring symptom severity on a 0–100 scale for urinary, sexual, and bowel domains, with a greater score indicating better HRQOL. Dashboards were counterbalanced for positive and negative framing (the example patient was doing better or worse than comparison group patients), and the order in which chart formats were presented was randomized.
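The study does not describe how counterbalancing and randomization were implemented. One possible scheme, sketched here in Python as an assumption, shuffles the 4 formats for each participant with a reproducible per-participant seed and alternates positive and negative frames along the presentation sequence, starting with the positive frame for even-numbered participants and the negative frame for odd-numbered ones. The function name, seed value, and alternation rule are all hypothetical.

```python
import random

FORMATS = ("table", "bar", "line", "pictograph")


def presentation_plan(participant_id, seed=2012):
    """Return [(format, frame), ...] in presentation order for one participant.

    Hypothetical scheme: formats are shuffled per participant, and frames
    alternate along the sequence, starting 'positive' for even IDs and
    'negative' for odd IDs.
    """
    rng = random.Random(seed * 1000 + participant_id)  # reproducible per participant
    order = list(FORMATS)
    rng.shuffle(order)
    if participant_id % 2 == 0:
        frames = ("positive", "negative")
    else:
        frames = ("negative", "positive")
    return [(fmt, frames[pos % 2]) for pos, fmt in enumerate(order)]


for pid in range(2):
    print(pid, presentation_plan(pid))
```

Under this scheme every participant sees 2 positively and 2 negatively framed dashboards, and with an even number of participants each frame appears equally often at every presentation position.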

We tested participant comprehension of each prototype dashboard according to the 3 subdomains of graphic literacy. To assess reading, we asked participants to determine the example patient’s HRQOL scores at specified time points before or after treatment. To assess reading between, we asked participants to describe the relationship between the example patient’s HRQOL trend and comparison group scores. To assess reading beyond, we asked participants about expected HRQOL at future time points within the time scale of the prototype dashboard.
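Comprehension was scored as the percentage of questions answered correctly. A minimal Python sketch of that scoring follows; it assumes each answer is recorded as a (subdomain, correct) pair and that the overall score pools all questions for one dashboard. The number of questions per subdomain is not reported, so the input here is illustrative.

```python
from collections import defaultdict

SUBDOMAINS = ("reading", "reading between", "reading beyond")


def score_comprehension(responses):
    """Percent correct per graphic-literacy subdomain, plus a pooled overall score.

    `responses`: list of (subdomain, is_correct) pairs for one participant
    viewing one dashboard. Pooling for 'overall' is an assumption.
    """
    asked = defaultdict(int)
    right = defaultdict(int)
    for subdomain, is_correct in responses:
        if subdomain not in SUBDOMAINS:
            raise ValueError(f"unknown subdomain: {subdomain!r}")
        asked[subdomain] += 1
        right[subdomain] += int(is_correct)
    scores = {s: 100.0 * right[s] / asked[s] for s in SUBDOMAINS if asked[s]}
    total = sum(asked.values())
    scores["overall"] = 100.0 * sum(right.values()) / total if total else float("nan")
    return scores


print(score_comprehension([
    ("reading", True), ("reading", True),
    ("reading between", False), ("reading beyond", True),
]))
```

Pooling weights each subdomain by its number of questions; averaging the 3 subdomain percentages instead would weight them equally, and the paper does not say which approach was used.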

We evaluated the perceived usefulness of dashboards by asking participants to rate the helpfulness of each prototype and their confidence in using it on a 4-point scale. Preferences among formats were established by rank order. We combined the quantitative assessments with semistructured interview questions to elicit qualitative feedback about prototype HRQOL dashboards. For each format, we inquired about challenges with comprehension and reasons for rank order preferences. We transcribed responses and sorted them into common themes using affinity diagramming.26

Statistical analysis

We summarized participant characteristics with descriptive statistics and assessed literacy differences between patients and providers using Mann–Whitney U tests. We scored dashboard comprehension as the percentage of questions answered correctly for each dashboard. Differences between patient and provider participants in HRQOL dashboard comprehension were assessed with Mann–Whitney U tests for reading, reading between, reading beyond, and total comprehension scores. Within each participant group, we compared comprehension, perceived helpfulness, confidence ratings, and preference among formats using Kruskal–Wallis tests. Paired comparisons between formats within each participant group were made using Wilcoxon signed-rank tests. We did not perform subgroup analyses by health literacy level owing to a lack of patient participants with low health literacy. We present qualitative feedback as the number and percentage of participants expressing common themes.
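The paper does not name its statistical software. To show how the rank-based statistics named above are formed, the following standard-library Python sketch computes midranks for ties, the Mann–Whitney U statistic, the Kruskal–Wallis H statistic with the usual tie correction, and the Wilcoxon signed-rank statistic W+ (dropping zero differences). It stops at the test statistics and does not compute P values; the function names are illustrative.

```python
from collections import Counter


def midranks(values):
    """Rank values 1..n; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """U statistic of sample x relative to sample y (two independent groups)."""
    ranks = midranks(list(x) + list(y))
    rank_sum_x = sum(ranks[:len(x)])
    return rank_sum_x - len(x) * (len(x) + 1) / 2


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H for independent groups, with the standard tie correction."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = midranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        total += sum(ranks[start:start + len(g)]) ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n ** 3 - n)
    return h / correction if correction else float("nan")  # nan if all values tie


def wilcoxon_w_plus(a, b):
    """Signed-rank statistic W+ for paired ratings; zero differences are dropped."""
    diffs = [x - y for x, y in zip(a, b) if x != y]
    ranks = midranks([abs(d) for d in diffs])
    return sum(r for r, d in zip(ranks, diffs) if d > 0)


print(mann_whitney_u([1, 2, 3], [4, 5, 6]), kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9]))
```

For P values, a vetted implementation such as `scipy.stats.mannwhitneyu`, `scipy.stats.kruskal`, or `scipy.stats.wilcoxon` would be the natural choice; midranks matter here because 4-point ratings and rank orders produce many ties.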

RESULTS

Characteristics of patient and provider participants are displayed in Tables I and II, respectively. Most patient participants were white, married/partnered, and had localized PCa. Patients had high educational attainment, with 78% having at least a college degree. Providers were experienced, with most (74%) having been in clinical practice for >10 years. Most physician provider participants were urologists, with the remainder practicing radiation oncology. Most nonphysician providers were registered nurses or nursing aides.

Table I.

Characteristics of patient participants

| Patient characteristic | n (%) |
|---|---|
| Age (y), mean (SD) | 71.2 (9.7) |
| <60 | 8 (16) |
| 60–69 | 12 (24) |
| 70–79 | 20 (40) |
| ≥80 | 10 (20) |
| Race/ethnicity | |
| White | 44 (88) |
| Black | 4 (8) |
| Other | 2 (4) |
| Marital status | |
| Married/partnered | 37 (74) |
| Divorced/separated | 8 (16) |
| Single/widower | 5 (10) |
| Education level | |
| Postgraduate | 19 (38) |
| College graduate | 20 (40) |
| <College graduate | 11 (22) |
| Treatment | |
| Radical prostatectomy | 23 (46) |
| External beam radiation therapy | 16 (32) |
| Brachytherapy | 8 (16) |
| Multiple therapies | 17 (34) |
| Time since treatment (y), mean (SD) | 5.5 (4.5) |

SD, Standard deviation.

Table II.

Characteristics of provider participants

| Provider characteristic | n (%) |
|---|---|
| Age (y), mean (SD) | 46.5 (10.4) |
| <40 | 13 (26) |
| 40–49 | 15 (30) |
| 50–59 | 18 (36) |
| ≥60 | 4 (8) |
| Race/ethnicity | |
| White | 36 (72) |
| Black | 2 (4) |
| Other | 11 (22) |
| Medical training | |
| Physician providers | |
| Urology | 19 (38) |
| Radiation oncology | 6 (12) |
| Nonphysician providers | |
| Physician extender* | 8 (16) |
| Registered nurse | 12 (24) |
| Medical assistant | 5 (10) |
| Time in practice (y), mean (SD) | 16.6 (11.1) |

*Includes nurse practitioners and physician assistants.

SD, Standard deviation.

Table III displays the literacy of participants. The health literacy of patient participants was high, with a mean REALM-SF score approaching the maximum score of 7, consistent with at least a high school reading level. The numeracy of patients and providers was similar overall and within the SNS preference and ability subdomains. Despite high health literacy and numeracy among patients, their graphic literacy was lower than that of providers. Within subdomains, patients had comparable reading and reading between scores but significantly lower reading beyond scores.

Table III.

Health literacy, numeracy, and graphic literacy of patient and provider study participants

| Measure | Patient scores, mean (SD) | Provider scores, mean (SD) |
|---|---|---|
| Health literacy | | |
| REALM-SF score | 6.8 (1.0) | N/A |
| Numeracy | | |
| SNS total score | 4.5 (0.9) | 4.7 (0.9) |
| SNS ability score | 4.4 (1.0) | 4.7 (0.9) |
| SNS preference score | 4.5 (0.9) | 4.6 (0.9) |
| Graphic literacy | | |
| GLS total score | 79.2 (17.7) | 86.8 (14.8) |
| GLS reading score | 94.5 (16.2) | 96.5 (8.8) |
| GLS reading between score | 81.0 (25.0) | 88.0 (21.0) |
| GLS reading beyond score | 65.6 (22.5) | 78.0 (22.6) |

GLS, Graphic Literacy Scale, scored as percent correct; REALM-SF, Rapid Estimate of Adult Literacy in Medicine Short Form, scored 0–7; SD, standard deviation; SNS, Subjective Numeracy Scale, scored 0–6.

Comprehension of prototype HRQOL dashboards varied by participant group (Table IV). Providers had high comprehension of the prototype dashboards, including high reading between and reading beyond scores irrespective of chart type, and showed no difference in comprehension among formats. Patient participants had lower reading between and reading beyond scores than providers for all formats. Patient comprehension varied among formats for the reading (P = .01) and reading beyond (P = .02) subscores. Differences in comprehension between patients and providers were greatest for pictographs. Patients had high reading scores for the table format.

Table IV.

Comprehension of patient and provider participants for prototype health-related quality-of-life dashboard formats according to dimensions of graphic literacy

| Format and measure | Patients, % correct, mean (SD) | Providers, % correct, mean (SD) |
|---|---|---|
| Table | | |
| Overall | 89 (21) | 99 (4) |
| Reading | 96 (17) | 99 (7) |
| Reading between | 82 (37) | 99 (7) |
| Reading beyond | 88 (33) | 99 (7) |
| Bar | | |
| Overall | 88 (23) | 98 (6) |
| Reading | 92 (25) | 99 (7) |
| Reading between | 85 (35) | 100 (0) |
| Reading beyond | 88 (31) | 96 (17) |
| Line | | |
| Overall | 88 (18) | 98 (7) |
| Reading | 87 (28) | 99 (7) |
| Reading between | 86 (34) | 98 (14) |
| Reading beyond | 92 (23) | 98 (14) |
| Pictograph | | |
| Overall | 79 (31) | 97 (8) |
| Reading | 77 (39) | 98 (10) |
| Reading between | 87 (32) | 96 (20) |
| Reading beyond | 73 (42) | 98 (14) |

SD, Standard deviation.

Helpfulness and confidence ratings varied among dashboard formats for both patients (P < .001) and providers (P = .001). Among those formats, pictographs had the lowest helpfulness ratings (P < .01 for both patient and provider participants compared with table, bar, and line graph formats) and the lowest ratings of confidence in interpretation (P < .05 for patient participants and P < .01 for provider participants compared with table, bar, and line graph formats).

Preference rankings varied among dashboard formats for both patients (P < .001) and providers (P = .001). Concordant with helpfulness and confidence ratings, preference rankings for pictographs were low: 72% of patients and 68% of providers ranked pictographs fourth, as the least preferred dashboard format. Preference rankings among patients favored the bar chart, with 44% of patients ranking the bar chart dashboard first (mean rank, 1.8 ± 0.9; P = .12 vs line graphs; P < .01 vs tables and pictographs). In contrast, there was no universally preferred dashboard format among provider participants, of whom 30% ranked tables first (mean rank, 2.3 ± 1.1), 34% ranked bar charts first (mean rank, 1.8 ± 0.7), and 34% ranked line graphs first (mean rank, 2.3 ± 1.1).
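Summaries like those above (mean rank ± SD, and the proportion of participants ranking a format first or last) can be computed directly from per-participant rank orders. In this Python sketch the input layout (one dict per participant mapping format to rank, 1 = most preferred) is an assumption, and the two example rankings are made up for illustration rather than taken from the study.

```python
from statistics import mean, stdev

FORMATS = ("table", "bar", "line", "pictograph")


def summarize_rankings(rankings, formats=FORMATS):
    """Summarize rank-order preferences (1 = most preferred).

    `rankings`: one dict per participant mapping each format to its rank.
    """
    k, n = len(formats), len(rankings)
    for r in rankings:
        if sorted(r[f] for f in formats) != list(range(1, k + 1)):
            raise ValueError(f"not a complete ranking: {r}")
    summary = {}
    for fmt in formats:
        ranks = [r[fmt] for r in rankings]
        summary[fmt] = {
            "mean_rank": mean(ranks),
            "sd": stdev(ranks) if n > 1 else 0.0,
            "pct_first": 100.0 * ranks.count(1) / n,
            "pct_last": 100.0 * ranks.count(k) / n,
        }
    return summary


example = [
    {"bar": 1, "line": 2, "table": 3, "pictograph": 4},
    {"bar": 2, "line": 1, "table": 3, "pictograph": 4},
]
print(summarize_rankings(example)["bar"])
```

A lower mean rank means a more preferred format, which is why the bar chart's 1.8 marks it as the patient favorite.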

Several themes emerged from patient qualitative feedback. The pictograph, which demonstrated low comprehension and low preference rankings, was described as too complicated, with “too many steps to interpret,” by 20% of patients, and 18% of patients found its facial expressions “too similar” to tell apart. The table, which demonstrated high reading comprehension scores, was felt to be easy to understand by 16% of patients, but 18% of patients felt this format made HRQOL trends difficult to interpret.

Themes elicited from providers focused on concern about use of a comparison group and about the effect of an HRQOL dashboard on clinic efficiency. Concern over presenting patient HRQOL in the context of comparison group scores was expressed by 38% of provider participants, including 47% of urologists. Provider participants suggested eliminating the comparison group entirely, supplementing comparison group scores with confidence intervals or error bars to better counsel patients about the meaning of their scores, and presenting comparison group scores conditionally depending on a patient’s HRQOL results. Several provider participants (32%) expressed concern that use of HRQOL dashboards could adversely affect the efficiency of their clinics and inhibit patient throughput.
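Qualitative results are reported as the number and percentage of participants expressing each theme. A Python sketch of that tally follows, assuming each participant's comments have already been coded with theme labels during affinity diagramming; the dict layout and theme labels are hypothetical. With 19 of 50 providers coded for a theme, it yields (19, 38), the 38% reported above.

```python
from collections import Counter


def theme_prevalence(coded_responses, n_participants):
    """Return {theme: (n, percent)} counting each participant at most once per theme.

    `coded_responses` maps a participant ID to the theme labels assigned to
    that participant's comments. Percentages use round(), which rounds halves to even.
    """
    counts = Counter(t for themes in coded_responses.values() for t in set(themes))
    return {t: (n, round(100 * n / n_participants)) for t, n in counts.most_common()}


coded = {pid: ["comparison group concern"] for pid in range(19)}
coded[0] = ["comparison group concern", "comparison group concern"]  # repeat counted once
print(theme_prevalence(coded, n_participants=50))
```

Counting participants rather than mentions keeps a single talkative participant from inflating a theme's prevalence.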

DISCUSSION

Our study contributes several important findings. First, implementation of HRQOL measurement in clinical PCa practice using graphic reports will likely require a dynamic interface. Patient preferences favored the bar chart format. This finding corroborates previous publications assessing graphic preferences in other patient populations, where vertical bars with scales were found to be the fastest and most accurately interpreted graphic format for communication of risk.27 Graphic trends in the popular press further support our finding among patient participants. Graphs presented in USA Today Snapshots Online were found to employ bar chart formats in 74% of graphs analyzed, including 71% of graphs depicting percentages.28

Providers, however, did not exhibit a consensus preference for the dashboard format. Equal proportions of provider participants favored tables, bar charts, and line graphs. We can exclude table formats from further consideration owing to the expressed challenge among patients of inferring HRQOL trends from tables. Yet, some system users may prefer visualizing longitudinal data in line graph formats. Among cancer survivors, line graphs have been shown to convey HRQOL information better than other chart types.29 A dynamic dashboard in which the graphic format may be toggled depending on individual preferences may aid information transfer during a clinic visit. A dynamic HRQOL dashboard would also allow inclusion of additional elements that may be provider centered but may also enhance the ability of HRQOL dashboards to be used for patient counseling. For example, providers suggested that the addition of error bars or confidence intervals would help them to explain differences between patients and their comparison groups.

Second, comprehension, perceived usefulness, and preference for pictographs were low. This finding is at odds with prior studies. McCaffery et al30 compared pictographs with bar charts to test optimal graphic formats for conveying risk to adults with lower educational attainment and lower literacy levels. They found that pictographs resulted in fewer errors and faster interpretation when the risk percentages were low. In a study of >2,000 individuals recruited through the internet, pictographs were rated highly by both high and low numeracy participants.31

Our findings may not indicate that pictographs provide poor representations of HRQOL; rather, it may be that our prototype pictographs were poorly designed. To compare comprehension among prototype dashboard formats corresponding to subdomains of graphic literacy, our study design required participants to abstract scores from the pictographs, which necessitated a scale. This extra step in interpretation was noted as a barrier to comprehension of pictographs by several patients. Additionally, temporal trends are difficult to represent with existing pictograph formats. Further study will explore whether relational pictographs without a scale offer greater ease of interpretation than the prototype pictographs we studied.

Last, providers underscored potential barriers to clinical implementation of HRQOL dashboards. Almost half of the urologists expressed concern about inclusion of comparison group scores on the prototype dashboards. The study design underlying development of our HRQOL dashboard focused on creating a patient-centered tool. Patients participating in focus groups that informed prototype design indicated that the most important function of the HRQOL dashboards would be to allow patients to compare their HRQOL trends with those of similar patients.14 Thus, despite provider concerns, inclusion of a comparison group should remain a central design element of PCa HRQOL dashboards. Providers also articulated reservations about the impact of HRQOL dashboards on clinic efficiency. Certainly, efforts to implement HRQOL dashboards broadly in clinical PCa practice would require detailed workflow and patient flow assessments to minimize detriments to the efficiency of the patient–provider encounter and ensure sustainability of HRQOL dashboards. For some patients—especially those with good HRQOL—use of the dashboard might actually increase the time efficiency of the in-person, patient–provider encounter. Furthermore, a dashboard could streamline collection of HRQOL data within providers’ practices, which may facilitate emerging initiatives in quality improvement that leverage HRQOL measurement.6

Our study has several limitations. First, given the small sample size and high literacy of our patient participants, our results may not be generalizable to the larger PCa population. We were limited to a convenience sample of patients derived from our clinics and from local PCa support groups. More than three quarters of our patient participants had at least a college degree. Survey of patients from other geographic areas with greater racial/ethnic and socioeconomic diversity may very well yield different results. Most notably, we cannot conclude that our findings represent the preferences and concerns of low literacy patients. Based on our findings from this study, we are exploring design modifications to pictographs—known to appeal to lower literacy individuals16—to enhance comprehension, including elimination of scale legends. Similarly, the results from our interviews with provider participants may not generalize to all practitioners caring for PCa patients. Concerns about the threatening nature of comparison scores and about detriments to clinic workflow may be specific to providers in this study. We hypothesize, however, that these concerns are indicative of common provider beliefs regarding new clinic interventions.

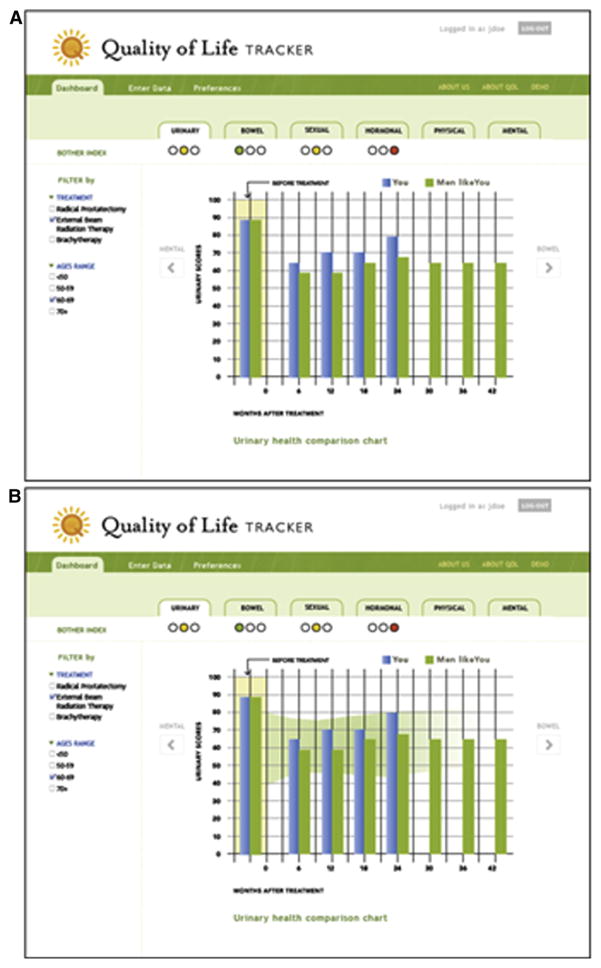

Despite these limitations, we believe that user-centered design with patients and providers has produced the important design concept of a dynamic HRQOL dashboard that presents patient-centered content in formats preferred by patients and providers, augmented by elements that may enhance patient counseling. Our base concept presents a longitudinal bar chart with patient HRQOL scores shown alongside comparison group scores matched to the patient’s demographic and clinical characteristics and baseline HRQOL. This format can be toggled to other graph formats and can include error bars that augment a provider’s ability to counsel patients about their HRQOL through dynamic interaction with the dashboard (Fig 2). Future research efforts will explore the impact of HRQOL dashboards on patient–provider communication and patient self-efficacy and will identify patient characteristics that are associated with greater benefit from dashboard-integrated clinical PCa care.
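The toggle-and-error-bar concept can be made concrete as a small piece of view state. The Python sketch below is hypothetical throughout: the class, its fields, the restriction to bar and line formats, and the choice of ±1 SD for the error bars (providers suggested error bars or confidence intervals without specifying either) are illustrative assumptions. It produces the data series a renderer would draw rather than drawing the chart itself.

```python
from dataclasses import dataclass

# Assumption: the toggle offers bar and line formats only, since tables were set
# aside in the Discussion and pictographs are being redesigned.
FORMATS = ("bar", "line")


@dataclass
class DashboardView:
    """Hypothetical view state for a toggleable HRQOL dashboard."""
    fmt: str = "bar"  # base patient-centered format
    show_error_bars: bool = False

    def toggle_format(self, new_fmt):
        if new_fmt not in FORMATS:
            raise ValueError(f"unsupported format: {new_fmt!r}")
        self.fmt = new_fmt

    def series(self, patient_scores, comparison):
        """Rows of (month, patient score, comparison mean, band or None).

        `comparison` maps month -> (mean, sd). The band is mean +/- 1 SD
        clipped to the 0-100 score range; using 1 SD is an assumption.
        """
        rows = []
        for month in sorted(patient_scores):
            m, sd = comparison[month]
            band = None
            if self.show_error_bars:
                band = (max(0.0, m - sd), min(100.0, m + sd))
            rows.append((month, patient_scores[month], m, band))
        return rows


view = DashboardView()
patient = {3: 60.0, 12: 75.0}
comparison = {3: (55.0, 10.0), 12: (95.0, 10.0)}
view.show_error_bars = True
view.toggle_format("line")
print(view.fmt, view.series(patient, comparison))
```

Keeping the format and error-bar choices as view state, separate from the scores, is what lets a patient or provider switch presentations during a visit without recomputing the comparison group.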

Fig 2.

Proposed dynamic dashboard which incorporates individual patient characteristics and preferences to generate the final visual display. The figure displays normative curves which can be seen both with (A) and without (B) error bars.

Acknowledgments

Supported by the National Cancer Institute at the National Institutes of Health, grant number R03 CA158054.

References

1. Gore JL, Kwan L, Lee SP, Reiter RE, Litwin MS. Survivorship beyond convalescence: 48-month quality-of-life outcomes after treatment for localized prostate cancer. J Natl Cancer Inst. 2009;101:888–92. doi: 10.1093/jnci/djp114.

2. Litwin MS, Hays RD, Fink A, Ganz PA, Leake B, Leach GE, et al. Quality-of-life outcomes in men treated for localized prostate cancer. JAMA. 1995;273:129–35. doi: 10.1001/jama.273.2.129.

3. Sanda MG, Dunn RL, Michalski J, Sandler HM, Northouse L, Hembroff L, et al. Quality of life and satisfaction with outcome among prostate-cancer survivors. N Engl J Med. 2008;358:1250–61. doi: 10.1056/NEJMoa074311.

4. Stanford JL, Feng Z, Hamilton AS, Gilliland FD, Stephenson RA, Eley JW, et al. Urinary and sexual function after radical prostatectomy for clinically localized prostate cancer: the Prostate Cancer Outcomes Study. JAMA. 2000;283:354–60. doi: 10.1001/jama.283.3.354.

5. Hartzler A, Olson KA, Dalkin B, Gore JL. Enhancing communication after treatment: what cancer patients want from a quality of life dashboard. AMIA Annu Symp Proc. 2011;2011:1795.

6. Litwin MS. Prostate cancer patient outcomes and choice of providers: development of an infrastructure for quality assessment. Santa Monica, CA: Rand; 2000.

7. Spencer BA, Steinberg M, Malin J, Adams J, Litwin MS. Quality-of-care indicators for early-stage prostate cancer. J Clin Oncol. 2003;21:1928–36. doi: 10.1200/JCO.2003.05.157.

8. Davison BJ, Degner LF. Empowerment of men newly diagnosed with prostate cancer. Cancer Nurs. 1997;20:187–96. doi: 10.1097/00002820-199706000-00004.

9. Berry DL, Blumenstein BA, Halpenny B, Wolpin S, Fann JR, Austin-Seymour M, et al. Enhancing patient-provider communication with the electronic self-report assessment for cancer: a randomized trial. J Clin Oncol. 2011;29:1029–35. doi: 10.1200/JCO.2010.30.3909.

10. Carlson LE, Groff SL, Maciejewski O, Bultz BD. Screening for distress in lung and breast cancer outpatients: a randomized controlled trial. J Clin Oncol. 2010;28:4884–91. doi: 10.1200/JCO.2009.27.3698.

11. Ruland CM, Holte HH, Roislien J, Heaven C, Hamilton GA, Kristiansen J, et al. Effects of a computer-supported interactive tailored patient assessment tool on patient care, symptom distress, and patients’ need for symptom management support: a randomized clinical trial. J Am Med Inform Assoc. 2010;17:403–10. doi: 10.1136/jamia.2010.005660.

12. Wolpin S, Stewart M. A deliberate and rigorous approach to development of patient-centered technologies. Semin Oncol Nurs. 2011;27:183–91. doi: 10.1016/j.soncn.2011.04.003.

13. Kinzie MB, Cohn WF, Julian MF, Knaus WA. A user-centered model for web site design: needs assessment, user interface design, and rapid prototyping. J Am Med Inform Assoc. 2002;9:320–30. doi: 10.1197/jamia.M0822.

14. Hartzler A, Olson K, Dalkin B, Gore J. Enhancing communication after treatment: what cancer patients want from a quality of life dashboard. AMIA Annu Symp Proc. 2011;2011:1795.

15. Wong DL, Baker CM. Pain in children: comparison of assessment scales. Pediatr Nurs. 1988;14:9–17.

16. Houts PS, Witmer JT, Egeth HE, Loscalzo MJ, Zabora JR. Using pictographs to enhance recall of spoken medical instructions II. Patient Educ Couns. 2001;43:231–42. doi: 10.1016/s0738-3991(00)00171-3.

17. Arozullah AM, Yarnold PR, Bennett CL, Soltysik RC, Wolf MS, Ferreira RM, et al. Development and validation of a short-form, rapid estimate of adult literacy in medicine. Med Care. 2007;45:1026–33. doi: 10.1097/MLR.0b013e3180616c1b.

18. Davis TC, Crouch MA, Long SW, Jackson RH, Bates P, George RB, et al. Rapid assessment of literacy levels of adult primary care patients. Fam Med. 1991;23:433–5.

19. Slosson RL, Nicholson CL, Hibpshman TH. Slosson Intelligence Test (SIT-R) for children and adults. East Aurora, NY: Slosson Educational Publications; 1991.

20. Parker RM, Baker DW, Williams MV, Nurss JR. The test of functional health literacy in adults: a new instrument for measuring patients’ literacy skills. J Gen Intern Med. 1995;10:537–41. doi: 10.1007/BF02640361.

21. Fagerlin A, Zikmund-Fisher BJ, Ubel PA, Jankovic A, Derry HA, Smith DM. Measuring numeracy without a math test: development of the Subjective Numeracy Scale. Med Decis Making. 2007;27:672–80. doi: 10.1177/0272989X07304449.

22. Lipkus IM, Hollands JG. The visual communication of risk. J Natl Cancer Inst Monogr. 1999:149–63. doi: 10.1093/oxfordjournals.jncimonographs.a024191.

23. Galesic M, Garcia-Retamero R. Graph literacy: a cross-cultural comparison. Med Decis Making. 2011;31:444–57. doi: 10.1177/0272989X10373805.

24. Wei JT, Dunn RL, Litwin MS, Sandler HM, Sanda MG. Development and validation of the expanded prostate cancer index composite (EPIC) for comprehensive assessment of health-related quality of life in men with prostate cancer. Urology. 2000;56:899–905. doi: 10.1016/s0090-4295(00)00858-x.

25. Penson DF. The effect of erectile dysfunction on quality of life following treatment for localized prostate cancer. Rev Urol. 2001;3:113–9.

26. Beyer H, Holtzblatt K. Contextual design: defining customer-centered systems. San Francisco: Morgan Kaufmann; 1998. xxiii, 472 p.

27. Feldman-Stewart D, Brundage MD, Zotov V. Further insight into the perception of quantitative information: judgments of gist in treatment decisions. Med Decis Making. 2007;27:34–43. doi: 10.1177/0272989X06297101.

28. Schield M. Percentage graphs in USA Today Snapshots Online. 2006:2364–71. Available from: http://www.statlit.org/pdf/2006SchieldASA.pdf.

29. Brundage M, Feldman-Stewart D, Leis A, Bezjak A, Degner L, Velji K, et al. Communicating quality of life information to cancer patients: a study of six presentation formats. J Clin Oncol. 2005;23:6949–56. doi: 10.1200/JCO.2005.12.514.

30. McCaffery KJ, Dixon A, Hayen A, Jansen J, Smith S, Simpson JM. The influence of graphic display format on the interpretations of quantitative risk information among adults with lower education and literacy: a randomized experimental study. Med Decis Making. 2012;32:532–44. doi: 10.1177/0272989X11424926.

31. Hawley ST, Zikmund-Fisher B, Ubel P, Jancovic A, Lucas T, Fagerlin A. The impact of the format of graphical presentation on health-related knowledge and treatment choices. Patient Educ Couns. 2008;73:448–55. doi: 10.1016/j.pec.2008.07.023.