ABSTRACT

BACKGROUND

Clinical performance measurement has been a key element of efforts to transform the Veterans Health Administration (VHA). However, there are a number of signs that current performance measurement systems used within and outside the VHA may be reaching the point of maximum benefit to care and, in some settings, may be producing negative consequences, including overtreatment and diminished attention to patient needs and preferences. Our research group has a long-standing partnership with the office responsible for clinical performance measurement in the VHA, aimed at understanding the unintended consequences of measurement and developing potential strategies to mitigate them.

OBJECTIVE

Our aim was to understand how the implementation of diabetes performance measures (PMs) influences management actions and day-to-day clinical practice.

DESIGN

This was a mixed methods study that used quantitative administrative data to select study facilities and qualitative data from semi-structured interviews.

PARTICIPANTS

Sixty-two network-level and facility-level executives, managers, front-line providers and staff participated in the study.

APPROACH

Qualitative content analyses were guided by a team-based consensus approach using verbatim interview transcripts. A published interpretive framework based on motivation theory was used to describe potential contributions of local implementation strategies to unintended consequences of PMs.

KEY RESULTS

Implementation strategies used by management affect providers’ responses to PMs, which in turn may undermine the provision of high-quality, patient-centered care. These strategies include: 1) feedback reports to providers that are dissociated from a realistic capability to address performance gaps; 2) evaluative criteria set by managers that are at odds with patient-centered care; and 3) pressure created by managers’ narrow focus on gaps in PMs that is viewed as more punitive than motivating.

CONCLUSIONS

Next steps include working with VHA leaders to develop and test implementation approaches to help ensure that the next generation of PMs motivate truly patient-centered care and are clinically meaningful.

Electronic supplementary material

The online version of this article (doi:10.1007/s11606-014-3020-9) contains supplementary material, which is available to authorized users.

KEY WORDS: performance measurement, primary care, Veterans, implementation research

BACKGROUND

The Institute of Medicine has called for healthcare systems to adopt the quality management practices of the Veterans Health Administration (VHA),1 which have been credited with helping transform the VHA into one of the highest-quality healthcare systems in the United States.2–4 In 1995, when the first system-wide clinical performance measures (PMs) were introduced in the VHA, the objective was to increase use of evidence-based practices that were under-utilized.1,5 Since 1995, the VHA has experienced dramatic improvements in quality across the spectrum of care, including diabetes management.5–7 For example, in 1997, only 40 % of diabetes patients met the blood pressure (BP) target (< 140/90 mmHg), but this percentage increased to 82 % by 2010 (unpublished administrative data). In the VHA, what started as a few key measures used prospectively to motivate facility-led quality improvement efforts8 has evolved into a proliferation of hundreds of measures with a retrospective focus on compliance with national policies and performance goals.3

There are signs that current PM systems inside and outside the VHA may be reaching the point of diminishing returns in driving continued improvements; increasingly high compliance may lead to overtreatment and discourage providing less care when it is appropriate, which can undermine safety and even cause harm.8–10 Many clinical PMs have achieved 80–90 % compliance, but the “logic” of PMs creates pressure to ratchet11 targets ever higher. As quality targets become increasingly aggressive, there is growing evidence of overtreatment, indicating that the system may have reached the point at which further “improvements” in meeting measures may actually result in net harm.8,9,12 For example, one study showed that facility-level rates of potential BP overtreatment ranged from 3–20 %, and facilities with higher rates of meeting BP PMs had higher rates of overtreatment.8

Studies have identified several other undesired consequences of PMs, including overemphasis on measured care at the expense of other aspects of professional competence, and gaming of clinical measures;13 both may undermine personalized responses to patient needs and preferences, as well as provider and patient autonomy.14,15 More effort may be expended on ensuring compliant documentation to get “credit” for a measure than on improving clinical processes.9

Research-Operations Partnership

Our research team has a long-standing partnership with VHA’s Office of Informatics and Analytics (OIA), which is responsible for clinical performance measurement in the VHA. Through this partnership, we have assessed properties of PMs, developed new measures for diabetes (and helped implement them VHA-wide), and collaborated on multiple research projects (such as this one). Our research center has an agreement with OIA to develop new approaches to performance measurement.

Study Objective

While others have described unintended consequences of PMs at the system level and at the front line, little work has examined how their implementation affects managers and providers. We sought to understand how diabetes PMs are implemented and, more specifically, the pathways through which management decisions about implementing measures affect day-to-day practice. This information is needed to inform how best to design PM implementation strategies that minimize negative and maximize positive effects at the clinical level.

METHODS

This was a mixed methods study using quantitative administrative data to guide selection of study facilities and qualitative data from in-person and telephone-based semi-structured interviews. Interviews took place during a seven-month period in 2012. The VA Ann Arbor Healthcare System Institutional Review Board approved study procedures.

Sampling: Facilities

To maximize variation among study facilities, we purposively selected four VHA medical centers based on their ranking in the highest or lowest quartiles of performance on current quality measures for BP (< 140/90 mmHg) and low density lipoprotein (LDL) cholesterol (< 130 mg/dL), and their rates of possible overtreatment of BP8 and LDL9 among diabetic patients (see Table 1). Further stratification was used to ensure the sample included facilities from different U.S. regions. At each facility, we conducted a one-day visit that included in-person interviews with key informants. The analysis team was blinded to the facilities’ performance until after the analysis phase.

Table 1.

Performance Quadrant of Study Facilities

| | Highest Overtreatment | Lowest Overtreatment |
|---|---|---|
| Highest Control | Facility 1 (Control: 81.3 %; Overtreatment: 14.8 %) | Facility 4 (Control: 77.2 %; Overtreatment: 8.9 %) |
| Lowest Control | Facility 3 (Control: 70.1 %; Overtreatment: 15.2 %) | Facility 2 (Control: 66.6 %; Overtreatment: 8.7 %) |

Sampling: Individuals

For each facility, we invited leaders from its network (VHA facilities are grouped geographically into 21 networks), the director, clinical and quality managers, computer application coordinators (CACs), and primary care providers (PCPs) and staff to participate in one interview. Invitations were sent by email, with one follow-up phone call to non-responders. Table 2 lists participants.

Table 2.

Participants by Role and Facility

| Role | Invited | Interviewed: Facility 1 | Interviewed: Facility 2 | Interviewed: Facility 3 | Interviewed: Facility 4 | Interviewed: TOTAL |
|---|---|---|---|---|---|---|
| VISN Executive Leaders | 14 | 3 | 1 | 2 | 3 | 9 |
| Facility Executive Director | 4 | 1 | 0 | 1 | 0 | 2 |
| Facility Chief of Staff/Associate Chief of Staff of Primary/Ambulatory Care | 11 | 2 | 2 | 2 | 2 | 8 |
| Primary Care Provider (MD, NP) | 20 | 4 | 4 | 3 | 4 | 15 |
| Nurse Care/Case Manager | 7 | 2 | 3 | 1 | 1 | 7 |
| Other (CAC, Business Manager, Quality Management, etc.) | 21 | 4 | 4 | 3 | 10 | 21 |
| TOTAL | 77 | 16 | 14 | 12 | 20 | 62 |

Data Collection

Twenty-one telephone interviews and 41 in-person interviews were conducted privately by LJD, SK, EK, and/or AMR. All interviews included a lead interviewer and a note-taker. Overall, 81 % of invited individuals participated; the rate for regional-level and facility-level executive leaders was lower (69 %).

Interview topics included: diabetes care structure, diabetes PM implementation, management, communications, monitoring, and impact (see online Appendix for the interview guide). Interviews were audio recorded and transcribed verbatim. The average interview lasted 31 min (range: 12–52 min).

Methodological Orientation/Analysis

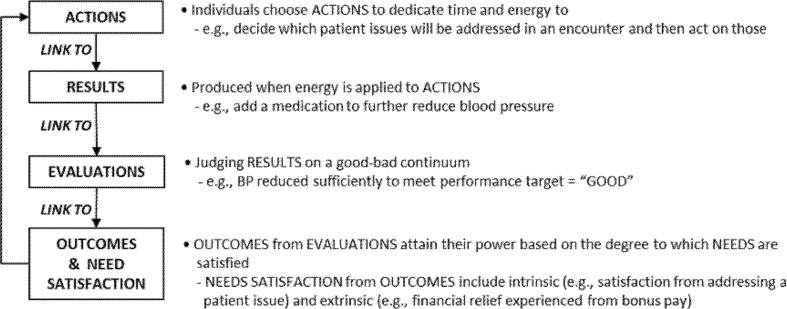

We used an inductive content analysis approach with the qualitative data, in which themes are identified from the textual data.16,17 CHR and DB independently coded each transcript, meeting weekly with LJD to compare coding and resolve discrepancies by consensus. QSR NVivo v10 was used to manage, code, and analyze the data. We used Pritchard and Ashwood’s Motivation Theory as a framework to organize and interpret our findings,18,19 as depicted in Fig. 1. This theory helps to elucidate how PMs motivate individuals’ desirable or undesirable actions in a mutually reinforcing feedback loop whose linkages combine to create a strong (or weak) motivational force that then leads to behavioral intention. Fundamentally, individuals are motivated by their expectation of how their actions will lead to positive or negative outcomes. Motivation to perform desired actions is substantially increased by strengthening all linkages in the chain that builds motivational force;20 however, even one weak link will undermine motivation for desired actions and result in undesirable actions and significant negative unintended consequences.19 Our focus in this paper is on key contributors to strong (or weak) linkages in the motivation pathway.
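The weakest-link claim can be made concrete with a toy calculation. The sketch below treats each linkage as a strength between 0 and 1 and combines them by multiplication, which is one simple way to express that a single weak link drags down the whole chain. This is an illustrative assumption, not a scoring instrument from Pritchard and Ashwood’s work: the link names follow the headings used in this paper (a simplification of Fig. 1), and all numeric strengths are hypothetical, not study data.

```python
from __future__ import annotations

from math import prod

# Link names follow this paper's section headings; a simplification of Fig. 1.
LINKS = ("action_to_results", "results_to_evaluation", "evaluation_to_outcomes")


def motivational_force(strengths: dict[str, float]) -> float:
    """Toy model: multiply link strengths, each on a 0-1 scale.

    Multiplication is an illustrative assumption: any link near zero
    pulls the overall force toward zero, however strong the others are.
    """
    for name in LINKS:
        value = strengths[name]
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return prod(strengths[name] for name in LINKS)


# Hypothetical strengths, not study data.
strong_chain = {
    "action_to_results": 0.9,
    "results_to_evaluation": 0.9,
    "evaluation_to_outcomes": 0.9,
}
# Same chain, but feedback gives providers little they can act on.
one_weak_link = dict(strong_chain, action_to_results=0.1)

print(round(motivational_force(strong_chain), 3))   # 0.729
print(round(motivational_force(one_weak_link), 3))  # 0.081
```

In this toy model, raising the two strong links further barely helps while the third stays low, which is the intuition behind strengthening all linkages rather than only some of them.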

Figure 1.

Interpretive framework based on motivation theory applied to performance measurement.

RESULTS

We present our results along the links of the chain in our interpretive framework (Fig. 1), highlighting gaps in the chain that appear to lead to unintended behavioral responses to PMs. While we highlight results that show how the implementation of PMs can be improved, these results should be interpreted in light of the role of PMs in improving quality of care in the VA, a role acknowledged in the literature and recognized by many executive leaders: “…when all is said and done, over the last 15, 16, 17 years, I guess, that we’ve really been focusing on performance measures, the quality of care that we’re delivering to our Veterans has skyrocketed and I weigh a large percentage of the success on the use of performance measures.” (Facility 1, Clinical Manager)

Link from Action to Results

The facility with the highest control/lowest overtreatment was in the process of developing “actionable” reports that exemplified the required linkage between action and results. The reports were explicitly designed to identify patients who needed specific actions and then were to be provided to individuals who, by virtue of the focus on actionable PMs, would have the resources, knowledge, and authority to execute that action. The physician developer described the reports:

“…our diabetes [reporting] routine… identifies whether their A1cs’ are actionable…It calculates the duration of time above certain levels and how many Primary Care encounters there are at those levels to provide some indication as to whether these are refractory treatment failures within Primary Care…You can only treat individual people and collectively they’ll contribute to an A1c of nine, but then how do you get the workforce to address a performance measure? They can’t…they need help not with the A1c of nine but where…their patients are failing in terms of a missed opportunity for treatment intensification.” (Facility 4)

Focusing on patients with “missed opportunities” (e.g., those who are being seen and have room to intensify their regimen but have not) identifies a situation more directly under providers’ control.
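The difference between a fall-out list and a “missed opportunity” report can be sketched as two filters over the same patient records. The quoted routine’s actual logic is not described beyond the interview excerpt, so the `Patient` fields, the 9 % HbA1c threshold (borrowed from the “A1c of nine” example), and both filtering rules are hypothetical illustrations.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    # Hypothetical fields; not the Facility 4 routine's actual data model.
    patient_id: str
    a1c: float            # most recent HbA1c, %
    pc_visits_above: int  # primary care visits while above the threshold
    intensified: bool     # regimen intensified at any of those visits
    can_intensify: bool   # clinical room to intensify (e.g., not on maximal therapy)


def fallout_list(patients, threshold=9.0):
    """Every patient above threshold, regardless of what the provider could do."""
    return [p.patient_id for p in patients if p.a1c > threshold]


def actionable_list(patients, threshold=9.0):
    """Patients above threshold who were seen, could be intensified, but were not."""
    return [
        p.patient_id
        for p in patients
        if p.a1c > threshold
        and p.pc_visits_above > 0
        and p.can_intensify
        and not p.intensified
    ]


panel = [
    Patient("A", 9.8, 3, intensified=False, can_intensify=True),   # missed opportunity
    Patient("B", 10.2, 0, intensified=False, can_intensify=True),  # never seen in primary care
    Patient("C", 9.5, 4, intensified=True, can_intensify=True),    # already intensified
    Patient("D", 7.1, 2, intensified=False, can_intensify=True),   # below threshold
]

print(fallout_list(panel))     # ['A', 'B', 'C']
print(actionable_list(panel))  # ['A']
```

Only patient A reaches the actionable list. Patients B and C still count against the measure, but they call for different responses (outreach for B; for C, what the quoted developer calls a possible refractory treatment failure) that a single provider may not be able to deliver alone.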

However, managers in the other three facilities often simply listed “fall-out” patients who did not meet performance targets and gave them to providers to address. Action plans to address gaps in performance were required. The majority of PCPs expressed frustration with being held accountable for issues over which they had little or no control. In addition to being held accountable for patients “refusing to cooperate” (Facility 1, PCP), they were also not able to address gaps in clinical processes outside of primary care:

“Well, a lot of times it’s something to do with the organization, like you put in the labs and…the lab people will only do what has been ordered for that day. Anything that was done before, they wouldn’t do it, so in a sense it’s negating your labs…that’s always an ongoing thing…it’s not that we didn’t get it checked…[it is] a system error….” (Facility 2, PCP)

A nurse care manager expressed frustration with her ability to address these gaps and the lack of response to action plans:

“I’ve done a couple of action plans…but again, it crosses services lines, so I’ve never had buy-in from the other higher up people….” (Facility 3)

Clinical managers also felt this powerlessness. At one facility, the primary care clinical lead was responsible for meeting “…350 something” (Facility 3) measures, even though the ability to improve the relevant clinical processes was outside their sphere of control:

“…when I walked in here, it was basically primary [care]… everybody’d leave the room and…I’d say, ‘Wait a minute! Everybody owns these measures… a guy goes to Audiology to get his hearing aids, he don’t [sic] ever come to Primary Care, but I’m going to get dinged because he didn’t get his flu shot?’” (Facility 3, Clinical Leader)

Link from Results to Evaluation

For a strong results-to-evaluation link, evaluated individuals must have clear criteria, agreed to by key individuals at multiple levels of the organization, that indicate on a continuum how good or bad results are. Many of the current PMs are operationalized as point-of-care, computerized clinical decision support (“clinical reminders” in VHA parlance) that is triggered in the electronic health record during encounters with patients. These clinical reminders provide a dichotomous good/bad indicator for a particular patient on a particular measure. However, clinical reminder targets are set by local decision-makers, and they are not always consistent with top-level organizational targets or even best medical practice. For example, these reminders reinforced a single treatment target for HbA1c levels in all diabetic patients, whereas the 2010 VA/DoD diabetes treatment guidelines call for HbA1c targets to be personalized based on risk. At three of the study facilities, reminders were set to pop up when HbA1c was greater than 7 %, at a time when the national performance targets focused on decreasing poor control based on a looser target (e.g., keeping patients < 9 %). At one facility, a quality manager who was dedicated to achieving high performance, but who had no medical training, had the authority to keep the clinical reminder set on a more stringent goal: “…I’m always trying to make sure the reminder goes a little bit more than the minimum….” (Facility 1)

Managers rely heavily on clinical reminders to help ensure goals are attained, and thus providers feel pressure to address every reminder:

“We don’t always have a lot of ability to think outside the computer…It doesn’t allow for any real autonomy because if we don’t meet the measure, we’re going to get a letter in two weeks saying, ‘You didn’t do what you’re supposed to do’, so everyone is kind of beaten into doing what the computer says, so that we don’t get a letter….” (Facility 1, PCP 1)

The role of clinical reminders in providing evaluative feedback can be further undermined by their idiosyncratic implementation. For example, one system goal was for a specified percentage of diabetic patients to achieve a given HbA1c goal, and this metric applied only to patients under 70 years old. However, three facilities did not implement this critically important age filter in their clinical reminders. Although the documented purpose and technical specifications are available for each measure, this information is lost along the way, or even changed at the local level without input from providers. This lack of voice was expressed most acutely at the highest control/highest overtreatment facility: “…[the communication] does seem demagoguery…it really goes one direction.” (Facility 1, PCP 2)
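The effect of a missing age filter can be illustrated with a simple eligibility check. This is a hypothetical reminder rule, not actual VHA clinical reminder logic: the function name and parameters are invented for illustration, the under-70 cut-off comes from the text, and the 9 % HbA1c default is only a placeholder because the measure’s exact target is not stated.

```python
from typing import Optional


def reminder_fires(age: int, a1c: float, a1c_target: float = 9.0,
                   max_age: Optional[int] = 70) -> bool:
    """Hypothetical HbA1c reminder rule.

    The measure covers only patients younger than max_age; passing
    max_age=None mimics a reminder built without the age filter.
    """
    if max_age is not None and age >= max_age:
        return False  # outside the measure's population: no reminder
    return a1c > a1c_target


print(reminder_fires(age=75, a1c=9.5))                # False: age filter excludes this patient
print(reminder_fires(age=75, a1c=9.5, max_age=None))  # True: reminder fires anyway
```

Without the filter, a patient whom the measure was never meant to cover still triggers a prompt, nudging the provider toward a target the measure did not intend for that patient.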

Local implementation approaches can unwittingly change the intent of a PM. For example, national leaders were concerned about equity of diabetes care because women tended to have worse performance on diabetes measures. Although a reporting system was constructed to enable tracking of gender disparities among Veterans with diabetes, implementation details were left up to facilities. Two facilities operationalized this goal in ways that led to unintended consequences. One set a goal to reduce the difference between the percentages of women and men with uncontrolled HbA1c and applied that target at the level of the individual provider, tied to performance pay. Providers were not involved in developing the local measurement approach and only became aware of the initiative when results were released at staff meetings; perversely, the measure could not distinguish a gap that disadvantaged women from one in which women received better care than men, so surpassingly excellent care for women registered as a disparity:

“…you could have a provider with only half a dozen women, [but] if two of them dropped out and…weren’t in compliance, then there was tremendous gender disparity…it would behoove [the provider] to the tune of 10 % of $15,000 which is $1500 to make a couple of calls and contact those women…. And long-story-short, that’s exactly what happened, and the irony is, we are now out of compliance with gender disparity, because get this: we are treating women too well! …their A1Cs and their LDLs …are in excess, better than men and so we have to lighten up.” (Facility 1, Clinical Leader)
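The arithmetic behind this complaint is easy to reproduce. The sketch below assumes the local measure was the percentage-point gap in uncontrolled HbA1c between a provider’s female and male patients, flagged whenever its absolute value exceeds a tolerance. The facility’s actual formula is not reported; the panel sizes, the 5-point tolerance, and the function names are hypothetical.

```python
from __future__ import annotations


def pct(numerator: int, denominator: int) -> float:
    return 100.0 * numerator / denominator


def disparity_flag(women_uncontrolled: int, women_total: int,
                   men_uncontrolled: int, men_total: int,
                   tolerance: float = 5.0) -> tuple[float, bool]:
    """Return (gap in percentage points, flagged?).

    gap > 0 means women fare worse; using abs() flags gaps in either direction.
    """
    gap = pct(women_uncontrolled, women_total) - pct(men_uncontrolled, men_total)
    return gap, abs(gap) > tolerance


# Hypothetical panel: 6 women and 200 men, 30 of the men uncontrolled (15 %).
for women_out in (2, 1, 0):
    gap, flagged = disparity_flag(women_out, 6, 30, 200)
    print(f"{women_out} of 6 women uncontrolled: gap {gap:+.1f} points, flagged={flagged}")
# 2 of 6 women uncontrolled: gap +18.3 points, flagged=True
# 1 of 6 women uncontrolled: gap +1.7 points, flagged=False
# 0 of 6 women uncontrolled: gap -15.0 points, flagged=True
```

With only six women, a single patient moves the provider across the threshold, and a panel with no uncontrolled women is flagged as well, which matches the “treating women too well” outcome the clinical leader describes. In this toy model, a one-sided rule or a minimum denominator would avoid both artifacts.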

Link from Evaluations to Outcomes and Need Satisfaction

Evaluation criteria are inextricably linked with meeting PMs, which in turn are built into the clinical reminders used to alert the provider when a measure hasn’t been met. While reminders, and the management dashboards that track their fulfillment, can be useful to help ensure PMs are addressed, they have proliferated to the point where one interviewee said:

“…we almost need a clinical reminder to say, ‘How are you doing today?’ to the patient.” (Facility 2, Business Manager)

The linkage of clinical reminders to PMs is reinforced by the “fall-out” reports described above. The majority of providers felt a disconcerting dissonance between their belief they were doing the best for their patient, while at the same time feeling “dinged” or “discouraged” when a reminder was not met. In fact, at one facility, a clinical leader was told,

“Your providers are terrible doctors because…they haven’t met these goals.” (Facility 3)

This dissonance was reinforced by increasingly aggressive performance goals…

“…one time at this meeting I joked that it was like a 94 % number that we had and we weren’t meeting the benchmark, and I said, ‘You know, in my book a 94 % is an A’ and everybody… agreed with me…I feel like people deserve a pat on the back and praise rather…[than this] kind of criticism….” (Facility 2, Clinical Leader)

…and further reinforced by top-down unilateral communications about PMs that were felt most acutely at the facility with the highest control/highest overtreatment:

“…there’s a great pressure upon leaders to make sure that all their dashboard indicators are turned green. And so it’s…the dashboard cowboys that they’ve all become…very relentless and…just very rigid on their thinking.” (Facility 1, PCP 2)

In all facilities except for the highest control/lowest overtreatment facility, individuals described a “punitive type of mentality” (Facility 2, Business Manager). Leaders at these facilities described how they concentrate attention on PMs “that are in the red.” This attention sometimes undermined providers’ ability to address issues that were most important for their patients:

“…a PCP…told me the story of a patient she’s followed for a number of years…[a] Veteran whose wife died and…50 years after Korea, he’s finally having flashbacks, now triggered by the death of his wife. So she did a warm hand-off over to Mental Health to handle his PTSD issues and the guy was completely breaking down. She does what she thinks is right, comes back the next day, she has a note that she didn’t do the reminder to ask him to stop smoking. And she’s like, ‘Well, what’s success anymore?’” (Facility 1, Network Director)

Although physicians receive performance-related pay (as do many leaders and managers), the few who mentioned it had an unclear understanding of how the amount was calculated. Local facilities decide on specific physician PMs, in deference to a longstanding VHA culture of preserving local authority and based on organizational theory that supports giving units control over the criteria by which they will be evaluated.1 There is no system for ensuring that physician incentives actually align with national PMs or local clinical objectives. One interviewee articulated the feeling that performance pay can undermine providers’ intrinsic motivation, and hinted at the oppositional wedge between providers and administrators:

“…I think that if you use [clinical reminders as] the hammer, the question is, is that effective? …it comes from the basic assumption that administrators are more motivated to improve patient care than PCPs who devoted their time to it…that’s not a compliment to your Primary Care workforce that [administrators] are more motived and therefore we presume that you need your performance pay in order to do a good job…we should really be taking advantage of their dedication and facilitating their approach to the problem rather than saying that we have an agenda that trumps theirs because we are more motivated than they are collectively….” (Facility 4, Clinical Manager)

DISCUSSION

PMs are a powerful management tool that can drive, and have driven, tremendous improvements in healthcare provision and quality. However, our results show how a lack of integrated ownership across the whole system, and gaps in the conditions necessary to build positive motivational force for improvement at the local clinical level (as explicated through motivation theory18,19), undermine the positive potential of PMs.

Action to Results

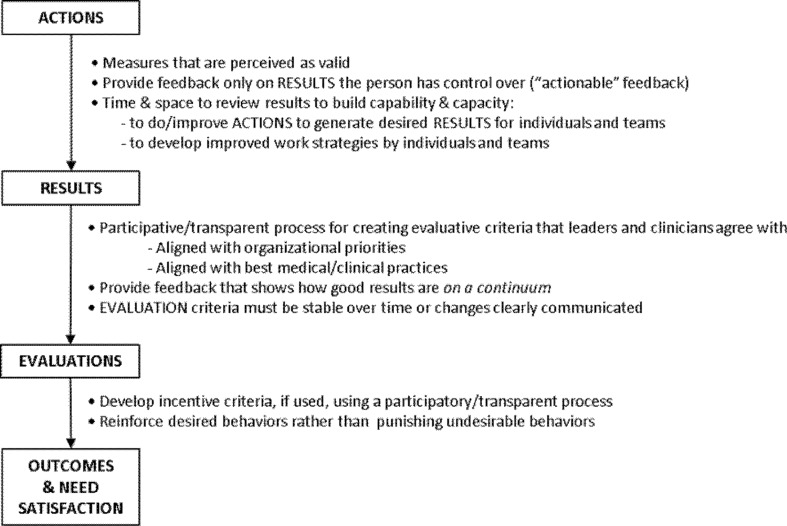

First, the link between actions and results can be undermined by feedback that consists largely of lists of patients failing to meet performance targets. Providers perceive these lists as an accusation of fault on their part. Most providers (as well as some managers) expressed helplessness in their ability to respond, especially when large proportions of a list consisted of challenging patients who, despite best efforts, could not achieve treatment goals. One facility was developing “actionable” reports that would target patients for whom an obvious response, within the provider’s control, had not yet been tried (e.g., candidates for starting insulin). This is a concrete example of an approach to ensure that issues are directed to the people who are best equipped to address them, in line with the need to establish strong links between action and results.21 When performance gaps cannot be addressed by individual providers, PMs need to be designed to incentivize and grant authority to coalitions of individuals across organizational boundaries (e.g., primary care working in collaboration with lab staff) who can design and implement improved clinical processes. Together, these approaches provide a foundation for quality improvement, of which PMs should be a positive, integral component, with fully engaged staff and managers (Fig. 2).19,22

Figure 2.

Recommended strategies to ensure strong motivational force in response to performance measurement.

Results to Evaluation

Second, the link between results and evaluation can be undermined when criteria instituted through clinical reminders by administrative managers do not align with treatment guidelines, the latest evidence, and especially principles of patient-centered care. For example, it is becoming clearer that tight glycemic control is not appropriate in older patients and that, under some circumstances, patients should be encouraged to participate in deciding their treatment goal.23 Providers seemed to suffer cognitive dissonance as they tried to focus on addressing the needs and preferences of their individual patients while simultaneously trying to satisfy the demands of a one-size-fits-all PM system reinforced by clinical reminders and reports of fall-out patients. These findings are consistent with other recent studies.15 As listed in Fig. 2, evaluative criteria should be developed transparently (with open communication as measures are being developed), using a participative process to adapt and implement PMs in local clinics.19,24

Evaluation to Outcomes/Need Satisfaction

Third, the link from evaluation to outcomes and need satisfaction can be undermined by a punitive environment that focuses on failure to meet increasingly aggressive targets;11,25 in all but the highest control/lowest overtreatment facility, providers expressed feeling like failures despite doing what they perceived as “A work.” Managers are rewarded monetarily for high aggregate performance, but individual providers falling a few points off-target felt penalized. A top-down structure with little perceived input on PMs or evaluation criteria, combined with a lack of clear and transparent formulas for operationalizing performance pay, weakens this link.19,26 Furthermore, there is evidence that the extrinsic motivational force triggered by PMs can undermine providers’ intrinsic desire to personalize treatment plans that improve the health and well-being of their patients.27,28

A supportive performance infrastructure is vital to support the recommendations designed to strengthen linkages along the motivational pathway, including: information systems and data analysis support;29,30 full collaborative participation of leaders and staff in designing specific, localized measures;19,31 and reflexive review of feedback and improvement planning.19,32 This requires significant time and resource investments, but doing so has the potential to reverse the negative feedback cycle. Organizational psychologists studying industries other than healthcare have shown that a positive reinforcing motivational force results in greater commitment of individuals to organizational goals, more innovation, more effective feedback, less role ambiguity and conflict for employees, greater trust between administrative managers and staff, and most importantly, the freedom to better serve the organization’s customers.19,21,22

Hospitals, physician practices, and Accountable Care Organizations are struggling with a growing plethora of PMs that are designed to track system quality, yet are promulgated through the system on the assumption that they will automatically become a positive motivational force for front-line individuals, who must reconcile them with the need to respond to the unique needs of individual patients.33 The challenges were voiced in a Wall Street Journal editorial by a non-VHA provider: “…we have had every aspect of our professional lives invaded by the quality police. Each day we are provided with lists of patients whose metrics fall short of targeted goals….I must complete these tasks while reviewing more than 300 other preventative care measures…Primary-care providers are swamped with lists, report cards and warnings about their performance….”34 We have offered diagnoses of potential root causes for these de-motivating pressures, offering a way forward and enabling others to learn from the VHA experience.

This study has limitations. First, findings are based on interviews with staff at only four of the more than 900 clinical facilities in the VHA, and participants may have included individuals with particularly strong feelings about performance measures. However, our intent was to delve deeply into the pathway of PM implementation and associated behavioral responses. It is also important to note that our focus was on diabetes measures, which are among the most developed within one of the largest and highest-quality healthcare systems in the U.S.; thus, our findings may not be applicable to other systems with poorer performance.35 Also, our interviews took place in the midst of a major transformation in primary care to the Primary Care Medical Home (PCMH) model, which was driving significant changes in priorities and performance targets (e.g., three study facilities were in the process of dismantling diabetes clinics to reassign staff to PCMH teams).

Next Steps for the Partnership

We are working with VHA system leaders to use these lessons to develop and test implementation approaches to help ensure that the next generation of PMs are clinically meaningful and provide the latitude and incentive to deliver care that is individualized to patient needs and preferences, by ensuring strong links in the motivational chain for every individual, from managers to providers and their patients. It is clear from these results that effective implementation of measures is crucial for success. We are currently facilitating national-level discussions to incorporate these lessons to improve VHA’s PM system. There is plenty of evidence of PMs’ powerful role in improving healthcare, including unintended positive benefits.36 Our intent with this critique is to help position PMs even better for the next generation.


Acknowledgements

The authors would like to thank the clinical leaders, physicians, and staff who participated in this study. The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs.

Contributors

Thanks also to Rob Holleman for his analysis support and Mandi Klamerus for her assistance in editing this manuscript.

Funders

This work was undertaken as part of a grant funded by the Department of Veterans Affairs, Quality Enhancement Research Initiative (QUERI; Grant RRP 11–420). Ann-Marie Rosland is a VA HSR&D Career Development Awardee.

Prior Presentations

Laura Damschroder, Claire Robinson, Joseph Francis, Tim Hofer, Doug Bentley, Sarah Krein, Ann-Marie Rosland, Eve Kerr. Bringing Performance Measurement into the Age of Patient-centered Care. AcademyHealth 2014 Annual Research Meeting (ARM). Poster Presentation. San Diego, CA. June 8, 2014.

Conflict of Interest

The authors declare that they do not have a conflict of interest.

REFERENCES

- 1.Kizer KW, Kirsh SR. The Double Edged Sword of Performance Measurement. J Gen Intern Med. 2012. Epub 2012/01/25. [DOI] [PMC free article] [PubMed]

- 2.Kerr EA, Gerzoff RB, Krein SL, Selby JV, Piette JD, Curb JD, et al. Diabetes care quality in the Veterans Affairs Health Care System and commercial managed care: the TRIAD study. Ann Intern Med. 2004;141(4):272–281. doi: 10.7326/0003-4819-141-4-200408170-00007. [DOI] [PubMed] [Google Scholar]

- 3.Longman P. Best Care Anywhere, 3rd Edition: Why VA Health Care Would Work Better For Everyone. 3rd ed: Berrett-Koehler Publishers; 2012. 240 p.

- 4. Francis J, Perlin JB. Improving performance through knowledge translation in the Veterans Health Administration. J Contin Educ Health Prof. 2006;26(1):63–71. doi: 10.1002/chp.52.

- 5. Kupersmith J, Francis J, Kerr E, Krein S, Pogach L, Kolodner RM, et al. Advancing evidence-based care for diabetes: lessons from the Veterans Health Administration. Health Aff (Millwood). 2007;26(2):w156–w168. doi: 10.1377/hlthaff.26.2.w156.

- 6. Kerr EA, Fleming B. Making performance indicators work: experiences of US Veterans Health Administration. BMJ. 2007;335(7627):971–973. doi: 10.1136/bmj.39358.498889.94.

- 7. Sawin CT, Walder DJ, Bross DS, Pogach LM. Diabetes process and outcome measures in the Department of Veterans Affairs. Diabetes Care. 2004;27(Suppl 2):B90–B94. doi: 10.2337/diacare.27.suppl_2.B90.

- 8. Kerr EA, Lucatorto MA, Holleman R, Hogan MM, Klamerus ML, Hofer TP, et al. Monitoring Performance for Blood Pressure Management Among Patients With Diabetes Mellitus: Too Much of a Good Thing? Arch Intern Med. 2012:1–8. Epub 2012/05/30.

- 9. Beard AJ, Hofer TP, Downs JR, Lucatorto M, Klamerus ML, Holleman R, et al. Assessing appropriateness of lipid management among patients with diabetes mellitus: moving from target to treatment. Circ Cardiovasc Qual Outcomes. 2013;6(1):66–74. doi: 10.1161/CIRCOUTCOMES.112.966697.

- 10. Cassel CK, Guest JA. Choosing wisely: helping physicians and patients make smart decisions about their care. JAMA. 2012;307(17):1801–1802. doi: 10.1001/jama.2012.476.

- 11. Pollitt C. The logics of performance management. Evaluation. 2013;19:346–363. doi: 10.1177/1356389013505040.

- 12. Tseng CL, Soroka O, Maney M, Aron DC, Pogach LM. Assessing potential glycemic overtreatment in persons at hypoglycemic risk. JAMA Intern Med. 2014;174(2):259–268. doi: 10.1001/jamainternmed.2013.12963.

- 13. Lester HE, Hannon KL, Campbell SM. Identifying unintended consequences of quality indicators: a qualitative study. BMJ Quality & Safety. 2011;20(12):1057–1061. doi: 10.1136/bmjqs.2010.048371.

- 14. Powell AA, White KM, Partin MR, Halek K, Christianson JB, Neil B, et al. Unintended Consequences of Implementing a National Performance Measurement System into Local Practice. J Gen Intern Med. 2011.

- 15. Kansagara D, Tuepker A, Joos S, Nicolaidis C, Skaperdas E, Hickam D. Getting Performance Metrics Right: A Qualitative Study of Staff Experiences Implementing and Measuring Practice Transformation. J Gen Intern Med. 2014.

- 16. Forman J, Damschroder LJ. Qualitative Content Analysis. In: Jacoby L, Siminoff L, editors. Empirical Research for Bioethics: A Primer. Oxford, UK: Elsevier Publishing; 2008. pp. 39–62.

- 17. Mayring P. Qualitative content analysis. Forum: Qualitative Social Research. 2000;1(2).

- 18. Pritchard R, Ashwood E. Managing motivation: A manager's guide to diagnosing and improving motivation. New York: Psychology Press; 2008.

- 19. Pritchard RD, Weaver SJ, Ashwood E. Evidence-Based Productivity Improvement: A Practical Guide to the Productivity Measurement and Enhancement System (ProMES). Routledge; 2011.

- 20. Lunenburg FC. Expectancy theory of motivation: motivating by altering expectations. International Journal of Management, Business, and Administration. 2011;15(1):1–6.

- 21. van der Geer E, van Tuijl HFJM, Rutte CG. Performance management in healthcare: performance indicator development, task uncertainty, and types of performance indicators. Soc Sci Med. 2009;69(10):1523–1530. doi: 10.1016/j.socscimed.2009.08.026.

- 22. Pritchard RD, Harrell MM, DiazGranados D, Guzman MJ. The productivity measurement and enhancement system: a meta-analysis. J Appl Psychol. 2008;93(3):540–567. doi: 10.1037/0021-9010.93.3.540.

- 23. Vijan S, Sussman JB, Yudkin JS, Hayward RA. Effect of Patients' Risks and Preferences on Health Gains With Plasma Glucose Level Lowering in Type 2 Diabetes Mellitus. JAMA Intern Med. 2014.

- 24. Lesser CS, Lucey CR, Egener B, Braddock CH, Linas SL, Levinson W. A behavioral and systems view of professionalism. JAMA. 2010;304(24):2732–2737. doi: 10.1001/jama.2010.1864.

- 25. Mehrotra A, Sorbero ME, Damberg CL. Using the lessons of behavioral economics to design more effective pay-for-performance programs. Am J Manag Care. 2010;16(7):497–503.

- 26. Van Herck P, Annemans L, De Smedt D, Remmen R, Sermeus W. Pay-for-performance step-by-step: introduction to the MIMIQ model. Health Policy. 2011;102(1):8–17. doi: 10.1016/j.healthpol.2010.09.014.

- 27. Fehr E, Falk A. Psychological foundations of incentives. European Economic Review. 2002;46:687–724. doi: 10.1016/S0014-2921(01)00208-2.

- 28. Cassel CK, Jain SH. Assessing individual physician performance: does measurement suppress motivation? JAMA. 2012;307(24):2595–2596. doi: 10.1001/jama.2012.6382.

- 29. Nudurupati SS, Bititci US, Kumar V, Chan FTS. State of the art literature review on performance measurement. Computers & Industrial Engineering. 2011;60(2):279–290. doi: 10.1016/j.cie.2010.11.010.

- 30. Friedberg MW, Damberg CL. A five-point checklist to help performance reports incentivize improvement and effectively guide patients. Health Aff (Millwood). 2012;31(3):612–618. doi: 10.1377/hlthaff.2011.1202.

- 31. Bourne M, Neely A, Mills J, Platts K. Why some performance measurement initiatives fail: lessons from the change management literature. Int J Bus Perform Manag. 2003;5(2):245–269. doi: 10.1504/IJBPM.2003.003250.

- 32. Witter S, Toonen J, Meessen B, Kagubare J, Fritsche G, Vaughan K. Performance-based financing as a health system reform: mapping the key dimensions for monitoring and evaluation. BMC Health Services Research. 2013;13(1):367. doi: 10.1186/1472-6963-13-367.

- 33. Panzer RJ, Gitomer RS, Greene WH, Webster PR, Landry KR, Riccobono CA. Increasing demands for quality measurement. JAMA. 2013;310(18):1971–1980. doi: 10.1001/jama.2013.282047.

- 34. McEvoy V. Why 'Metrics' Overload Is Bad Medicine; Doctors must focus on lists and box-checking rather than patients. Wall Street Journal. February 12, 2014; Opinion section.

- 35. Doran T, Kontopantelis E, Reeves D, Sutton M, Ryan AM. Setting performance targets in pay for performance programmes: what can we learn from QOF? BMJ. 2014;348:g1595. doi: 10.1136/bmj.g1595.

- 36. Powell AA, White KM, Partin MR, Halek K, Hysong SJ, Zarling E, et al. More than a score: a qualitative study of ancillary benefits of performance measurement. BMJ Quality & Safety. 2014. Epub 2014/02/14.
