Abstract

The present study seeks to evaluate a hybrid model of identification that incorporates response-to-intervention (RTI) as one of the key symptoms of reading disability. The one-year stability of alternative operational definitions of reading disability was examined in a large-scale sample of students who were followed longitudinally from first to second grade. The results confirmed previous findings of limited stability for single-criterion based operational definitions of reading disability. However, substantially greater stability was obtained for a hybrid model of reading disability that incorporates RTI with other common symptoms of reading disability.

Keywords: reading disability, identification, hybrid model, RTI

Over 2.5 million students are identified as having some form of learning disability (National Center For Learning Disabilities, 2011), and students with learning disabilities account for more than 41% of individuals who are eligible for special education services (IDEA Part B Child Count, 2010). The majority of identified learning disabilities are in reading (Fuchs, Fuchs, Mathes, Lipsey, & Roberts, 2002; Gersten, Fuchs, Williams, & Baker, 2001; President’s Commission on Excellence in Special Education, 2002). Yet despite the national attention that learning and reading disabilities have received since the 1960s (Bateman, 1964) and despite the fact that specific learning disability became a federally recognized classification in 1968 (U.S. Office of Education, 1968), agreement on a definition of reading disability remains elusive (Fletcher, Stuebing, Morris, & Lyon, 2013).

An agreed-upon definition is essential for both research and practice. If different researchers use different operational definitions of reading disability to select participants in their studies, it is likely to be difficult for knowledge to accumulate. Similarly, it will be disconcerting to everyone involved if eligibility for special education services based upon having a learning disability in reading depends upon where one happens to attend school.

The first reported case of reading disability is attributed to a general practitioner named Pringle Morgan in 1896 (Snowling, 1996). A contemporary of Pringle Morgan, the ophthalmologist James Hinshelwood, also wrote about the disorder during this time period. Reading disability was conceptualized initially as “congenital word blindness,” a term that captures two essential features of reading disability that are true today. First, congenital refers to a condition that is present at birth and that may have genetic ties, which is true for reading disability: Having a first degree relative with reading disability raises one’s own chances of having a reading problem. Second, word blindness refers to what is perhaps the most salient observable feature of reading disability, namely an inability to efficiently read the words on a page. Early speculation centered on a problem in vision or memory, whereas today many cases of reading disability are attributed to language impairments (Fletcher, Lyon, Fuchs, & Barnes, 2006; Lyon, 1995; Stanovich, 1994; Vellutino & Fletcher, 2005). Additionally, in view of the recent release of the DSM-V (American Psychiatric Association, 2013), it has been argued that deficits in other skills beyond those that pertain specifically to language impairments also potentially contribute to reading disability (see Pennington & Peterson, in press). However, moving beyond this general conceptualization of reading disability to a specific operational definition has proven to be extremely difficult.

Alternative operational definitions of reading disability that have been proposed over the years or more recently can be grouped into four kinds of models: IQ-achievement discrepancy models, cognitive discrepancy models, response to instruction or intervention models, and hybrid models (see Fletcher, Stuebing, Morris, & Lyon, 2013).

Alternative Models of Learning and Reading Disabilities

Aptitude/IQ-Achievement Discrepancy Models

The aptitude/IQ-achievement discrepancy model first appeared in the early 1960s (Bateman, 1964) and is perhaps the most widely implemented approach to identifying individuals with learning disabilities. Using this model, achievement is compared to aptitude, commonly measured by an IQ test, and an individual would be identified as having a learning disability if there is a significant discrepancy between IQ and achievement. Although aptitude-achievement discrepancy models have been used widely, they have several shortcomings.

Perhaps the most important shortcoming is that they function as “wait to fail” models (Fletcher et al., 2002; Fletcher, Lyon, Fuchs, & Barnes, 2007; Speece, 2002). They require children to first experience academic difficulties – or fail – before receiving help. Early identification and remediation of learning difficulties has been shown to result in positive academic outcomes (Stanovich, 2000). Yet it is common that identification does not occur until second grade or even later. Part of the problem is attributable to floor effects on measures of reading. If reading instruction does not begin in earnest in the United States until first grade, and the achievement measure is a test of reading, it will take a substantial period of time before a severe discrepancy can emerge. However, if one allows measures of emergent literacy that emphasize letter name and sound knowledge in addition to beginning decoding and word recognition to count as the achievement measure, earlier identification should be possible.

There are other concerns for the aptitude/IQ-achievement discrepancy model (see, e.g., Learning Disabilities Roundtable, 2002; Sternberg & Grigorenko, 2002; Wagner, 2008). Use of the model in isolation offers little insight into the specific needs of the child, nor does it inform instruction (Vaughn & Fuchs, 2003). It does not adequately consider educational history and does not necessarily discriminate between a true disability and the effects of limited or poor quality reading instruction (Vellutino, Scanlon, Small, & Fanuele, 2006), even though quality of instruction and intervention are important predictors of reading outcomes (Carlisle, Kelcey, Berebitsky, & Phelps, 2011; Fuchs & Fuchs, 2006; Kavale, 1995; Shaywitz, Fletcher, Holahan, & Shaywitz, 1992). Moreover, due to the use of IQ testing, this model can lead to steering minority children and those from culturally or linguistically diverse backgrounds away from programs designed to remediate reading disabilities and to programs designed for general poor academic performance such as Title I (Stuebing et al., 2002).

Cognitive Discrepancy Models

Cognitive discrepancy models rely on finding both poor academic achievement and discrepant performance on tasks (i.e., showing evidence of strengths and weaknesses as opposed to flat performance) that are believed to explain or be related to the poor academic performance. Examples include discrepancy-consistency (Naglieri, 2010), concordance-discordance (Hale et al., 2008), and cross-battery assessment (Flanagan, Ortiz, & Alfonso, 2007). Using these methods, a student would be identified as having a learning disability if he or she displays low achievement that can be accounted for by cognitive processing deficits, and also displays cognitive strengths in other areas so that the problem is not one of across-the-board poor cognitive processing. One complication in using this model is that it can be difficult to clearly establish links between common cognitive processing tasks and achievement in reading (Torgesen, 2002). A recent simulation study of three cognitive discrepancy models also found that they were biased towards finding an absence of a learning disability (Stuebing, Fletcher, Branum-Martin, & Francis, 2012).

Response-to-Intervention Models

Measuring response to effective instruction and intervention (RTI) for the purpose of identifying individuals who have a reading disability has been proposed as a potential replacement for discrepancy models (Fuchs, Mock, Morgan, & Young, 2003). The RTI model first appeared in the 1980s (Fuchs & Fuchs, 2006) and aims to identify students with learning disabilities using a multi-tier instructional process framework (National Joint Committee on Learning Disabilities, 2005). The model consists of three main tiers. In Tier I, all students are provided with high quality instruction and behavioral support; movement to Tier II would indicate that a student has been identified as having academic performance that is consistently below his or her peers, and in Tier II, these students are targeted to receive more specialized remediation that is integrated within their general education. Students would then move to Tier III if they have insufficient response to Tier II. In Tier III, comprehensive diagnostic assessments are administered to the student to ascertain if he or she is eligible to receive special education services (see Johnson, Mellard, Fuchs, & McKnight, 2006). A student would be identified as having a learning disability if he or she displays significantly low achievement and insufficient response to intervention (Fuchs, Fuchs, & Speece, 2002).

One potential advantage of this approach is that effective instruction and intervention are provided prior to ultimate determination of eligibility to receive special education services. This is important because otherwise an RTI approach is as much a wait-to-fail model as the traditional IQ-achievement discrepancy model because it takes time to progress through the tiers of instruction. Strengths of this approach to identification over discrepancy models are that it seeks to provide early interventions at the first sign of academic difficulties and utilizes empirically validated evidence-based instruction and intervention. Another advantage of the approach is that information potentially useful for informing instruction is accumulated along the way, although this is tempered by the fact that eligibility is achieved by failing to respond to instruction. As a result, what is learned primarily is what does not work rather than what does.

One limitation of this approach is that different definitions of lack of response to intervention identify largely non-overlapping groups of students (Barth et al., 2008; Fuchs, Fuchs, & Compton, 2004; Waesche, Schatschneider, Maner, Ahmed, & Wagner, 2011). A second is that its reliability is unknown, and there is reason to be concerned given variability in the effectiveness of instruction and intervention. By not including a measure of cognitive ability in the definition, the problem of underidentification of non-majority students is lessened (VanDerHeyden, Witt, & Gilbertson, 2007). However, it has the potential to identify all slow learners as having a learning disability (Kavale, Kauffman, Bachmeier, & LeFever, 2008).

Problems General to Existing Models

No model for identifying individuals with reading disability that relies on a single criterion (e.g., IQ-achievement discrepancy, inadequate response to instruction) will yield reliable identification for any low base rate condition such as reading disability. For example, Waesche et al. (2011) examined rates of agreement and two-year longitudinal stability for four operational definitions of reading disability in a study of 288,114 students who were followed longitudinally from first through third grade. The first operational definition was based on aptitude-achievement discrepancy and the remaining three were variants based on response to instruction: low achievement, low growth, and dual discrepancy. Rates of agreement among alternative definitions were variable but only poor to moderate overall. One-year longitudinal stability was poor.

The reason for poor agreement among alternative operational definitions of reading disability and poor stability is now well understood. When a cut point is placed on any continuous distribution, measurement error will result in unreliable identification of individuals near the cut point (Francis et al., 2005; Wagner et al., 2011; Waesche et al., 2011). The problem is that for low base rate conditions including reading disability, nearly all affected individuals are close to the cut point. This problem affects each of the approaches we have just reviewed.
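The cut-point argument can be illustrated with a small simulation. The sketch below is not part of the study: the reliability of .90, the 10th percentile cut point, the sample size, and the function name `simulate_stability` are all assumptions chosen for illustration. It generates one true score per person, adds independent measurement error at two time points, and compares how often the "affected" and "unaffected" classifications are repeated.

```python
import random

def simulate_stability(n=20000, reliability=0.90, percentile=0.10, seed=0):
    """Classify two noisy measurements of the same true score at a cut point.

    Returns (affected, unaffected): the share of people classified below the
    cut at time 1 who are again below it at time 2, and the share classified
    above the cut at time 1 who are again above it at time 2.
    """
    rng = random.Random(seed)
    # Observed variance is fixed at 1.0, so true-score variance equals reliability.
    true_sd = reliability ** 0.5
    error_sd = (1.0 - reliability) ** 0.5
    true = [rng.gauss(0.0, true_sd) for _ in range(n)]
    t1 = [t + rng.gauss(0.0, error_sd) for t in true]
    t2 = [t + rng.gauss(0.0, error_sd) for t in true]

    def cut(scores):
        # Score located at the requested percentile of the observed distribution.
        return sorted(scores)[int(percentile * len(scores))]

    c1, c2 = cut(t1), cut(t2)
    low1 = [x <= c1 for x in t1]
    low2 = [x <= c2 for x in t2]
    both_low = sum(a and b for a, b in zip(low1, low2))
    both_high = sum((not a) and (not b) for a, b in zip(low1, low2))
    n_low1 = sum(low1)
    return both_low / n_low1, both_high / (n - n_low1)

affected, unaffected = simulate_stability()
print(f"'affected' classification repeated:   {affected:.2f}")
print(f"'unaffected' classification repeated: {unaffected:.2f}")
```

Under these assumptions the "unaffected" classification is repeated far more often than the "affected" one, even though the measure itself is highly reliable, because most people below a low cut point sit close to it.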

There are several potential solutions to this problem. One is to consider multiple sources of information. More information reduces measurement error, and less measurement error reduces the problem of instability of identification for individuals near the cut point. A second is to eliminate the cut point and to move to a continuous indicator in the form of risk for reading disability. This second approach is based on the view that reading disability cannot be observed directly but rather is an unobservable (i.e., latent) condition that can be inferred from patterns of performance.

Hybrid Models

One approach to considering more sources of information is the use of a hybrid model (Fletcher et al., 2013; Wagner, 2008). A hybrid model, presumably, could incorporate multiple indicators of reading disability to create a comprehensive identification framework that should theoretically be better at identifying children with learning or reading disability. An example of a hybrid model is the approach recommended by a consensus group of researchers convened by the U.S. Department of Education Office of Special Education Programs (Bradley et al., 2002, p. 798). The model consists of three criteria. First, the student must demonstrate low achievement. Second, there is evidence of insufficient response to effective, research-based interventions. Third, the poor achievement is not attributable to exclusionary factors such as mental retardation, sensory deficits, serious emotional disturbance, lack of proficiency in the language of instruction, and lack of opportunity to learn. This model appears to represent a step in the right direction by incorporating both low achievement and poor response to effective, research-based interventions. However, there are at least two limitations of the model. First, it has not been evaluated empirically. Second, it does not fully represent much of what we know about reading disability.

For example, many cases of reading disability are attributed to impaired phonological processing (Snowling, 2000), yet this information is not incorporated into the model. A hybrid model implemented by Compton, Fuchs, Fuchs, and Bryant (2006) addressed this limitation in part. They began by giving two screening measures, an experimental word identification fluency task and the Rapid Color Naming subtest of the Comprehensive Test of Phonological Processing (CTOPP) (Wagner, Torgesen, & Rashotte, 1999). These were used to identify the poorest performing students in classrooms for additional assessment. Next, an assessment was carried out consisting of measures of phonological awareness (Sound Matching from the CTOPP), rapid naming (Rapid Digit Naming from the CTOPP), oral vocabulary, and word identification fluency again. Alternative forms of the word identification fluency task were administered weekly for five weeks to provide a measure of RTI. The combined assessments and measure of RTI proved to be an adequate predictor of second grade reading disability status.

Another limitation of existing hybrid models is that they only incorporate behavioral measures and ignore potentially useful information from other levels of analysis. For example, boys are approximately two times more likely to have reading disability, and this greater incidence is not attributable to ascertainment bias but rather represents genuinely greater vulnerability of boys for reading disability (Quinn & Wagner, in press). In addition, having a first-degree relative with reading disability is itself a risk factor for reading disability, yet this information is not incorporated into the model.

The Present Study

The goal of the present study was to evaluate a hybrid model of reading disability that represents an attempt to incorporate more of what is known from the research literature about reading disability. The model has three key features.

First, reading disability is viewed as a latent construct, in that it is not observable directly but is manifested through observed symptoms. As such, it is not possible to observe the presence of a reading disability directly. The best we can do is to infer the presence of a reading disability from its effect on measurable indicators and the presence of other diagnostically relevant information.

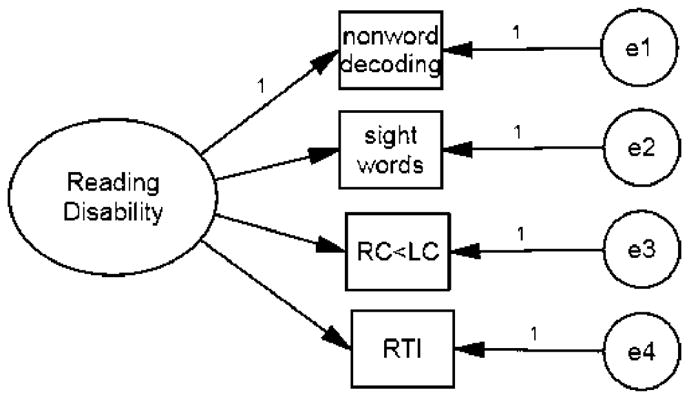

Second, the model distinguishes causes, consequences, and correlates of reading disability. For example, impaired phonological awareness is often viewed as a cause of reading disability (e.g., Snowling, 2000). Genetic risk, as determined by presence of family members with reading disability, and gender are also potential causes that influence the probability of having a reading disability. Consequences of reading disability include a constellation of four symptoms. The first symptom is impaired decoding of pseudowords, which is a measure of phonological decoding. The second symptom is impaired reading of sight words. Through reading experience and countless exposures, common words begin to be recognized on sight with minimal attention and processing resources, thereby freeing up cognitive resources to be used for comprehension. The third symptom is impaired reading comprehension relative to listening comprehension. Difficulty in decoding the words on a page should result in impaired reading comprehension relative to listening comprehension for the same passage when read to the impaired reader. A functionally important aspect of impaired reading comprehension relative to listening comprehension is that the individual is likely to profit from assistive technology in the form of a computer-based reader. The fourth symptom is a poor response to instruction and intervention, which signals that the reading problem is not simply the result of limited instruction. Finally, ADHD and other co-morbid conditions would be viewed as correlates.

Third, as just described, RTI is incorporated into the model directly. It is one of the four key symptoms of reading disability. Rather than view RTI as a sufficient and alternative approach to identifying individuals with reading disability, it is integrated into a comprehensive assessment model.

In the present study, we sought to evaluate only the latent construct of reading disability as represented by the four symptoms just described. This part of the model is portrayed in Figure 1. Because we had longitudinal data on our sample, we focused on the one-year stability of alternative operational definitions of reading disability as our primary means of comparing alternative definitions. In doing so, three measures were of interest. Cohen’s Kappa is the proportion of agreement across the two time points corrected for chance. Although frequently used, Kappa has an important limitation. It overestimates the agreement or stability for individuals with reading disability (Waesche et al., 2011). The reason for this overestimation of agreement or stability is that Kappa is an average of agreement for the determination of having a reading disability and for the determination of not having a reading disability, and determination of not having a reading disability is a more accurate process than is determination of having a reading disability. The reason determination of not having a reading disability is more accurate is that average and above students are well away from the cut point used to determine the presence of reading disability. Measurement error will not place them near the cut point, let alone on a different side of it. On the other hand, for low base rate conditions like reading disability, all affected individuals are fairly close to the cut point because there just is not much room on the lower side of the cut point. Measurement error is more likely to place them on the other side of the cut point on a second evaluation compared to the chances of that happening for the average or good readers far from the cut point. Measurement error can affect the classification of relatively poor readers whose reading performance places them near but above the cut point, and therefore the status of these individuals can switch from not having a reading disability to having one as a function of measurement error. But the overall accuracy of determining that individuals do not have a reading disability is higher because of the average or above-average readers who are well away from the cut point.

Figure 1.

The hybrid model used in the present study.

Because of this problem with Kappa, two additional statistics were included that focus solely on the accuracy of the determination that reading disability is present. The first is the affected-status agreement statistic (ASAS) (Waesche et al., 2011; Wagner et al., 2011). The ASAS is the proportion of students classified as having a reading disability at both times out of the total number of students classified as having a reading disability at either time. With perfect stability, ASAS will equal 1.0. With complete instability, ASAS will equal 0. The second additional statistic is consistency. Consistency refers to the proportion of students who were classified as reading disabled at time 1 who also were classified as reading disabled at time 2.
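The three stability statistics can be computed from the same two-by-two cross-classification. The following is a minimal sketch based on the verbal definitions given here, not the authors' analysis code; the function name `stability_stats` and the ten-student example are hypothetical, and the sketch assumes at least one student is classified as affected at time 1.

```python
def stability_stats(time1, time2):
    """Return (kappa, asas, consistency) for two parallel lists of booleans.

    True means "classified as having a reading disability" at that time point.
    """
    n = len(time1)
    both = sum(a and b for a, b in zip(time1, time2))       # affected at both times
    only1 = sum(a and not b for a, b in zip(time1, time2))  # affected at time 1 only
    only2 = sum(b and not a for a, b in zip(time1, time2))  # affected at time 2 only
    neither = n - both - only1 - only2

    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_obs = (both + neither) / n
    p1, p2 = (both + only1) / n, (both + only2) / n
    p_chance = p1 * p2 + (1 - p1) * (1 - p2)
    kappa = (p_obs - p_chance) / (1 - p_chance)

    # ASAS: affected at both times out of affected at either time.
    asas = both / (both + only1 + only2)

    # Consistency: of those affected at time 1, the share still affected at time 2.
    consistency = both / (both + only1)
    return kappa, asas, consistency

# Hypothetical classifications for ten students at two time points.
grade1 = [True, True, True, True, False, False, False, False, False, False]
grade2 = [True, True, False, False, True, False, False, False, False, False]
kappa, asas, consistency = stability_stats(grade1, grade2)
print(round(kappa, 2), round(asas, 2), round(consistency, 2))
```

In this example two students are affected at both times, two at time 1 only, and one at time 2 only, so ASAS is 2/5 and consistency is 2/4. Only Kappa uses the five students who are unaffected at both times, which is why it can remain moderate when the affected classification itself is unstable.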

Study Aims

The aims of the present study were twofold. First, we sought to synthesize previous findings related to reading disability using a hybrid model that includes four symptoms: impaired decoding, impaired sight word reading, impaired reading comprehension compared to listening comprehension, and poor response to instruction. Second, we investigated the one-year longitudinal stability of the four operational definitions (i.e., symptoms) of reading disability mentioned above and of two additional definitions: having N symptoms and having N or more symptoms.

Method

Participants

The participants were 31,339 students from Reading First schools in Florida who were in first grade during the 2003–2004 school year. The students were from 291 elementary schools from 34 school districts. Females were 48.3% of the sample, with males representing 51.7%. The sample was diverse, with 41.1% white, 32.3% black, 20.8% Hispanic, 4.2% mixed, and 1.2% Hispanic. Approximately 75% of participants received free or reduced lunch, and 17% were identified as having limited English proficiency. The students were followed longitudinally for a year, with 24,687 students remaining in the sample at the second time point.

Measures

The measures obtained assessed word-level reading, vocabulary, and reading comprehension.

Dynamic Indicators of Basic Early Literacy Skills (DIBELS)

Two subtests from the DIBELS (Good, Kaminski, Smith, Laimon, & Dill, 2001) were used. Oral reading fluency (ORF) was individually administered. Participants read a passage for one minute and the number of words read correctly in one minute was scored. They did this for three passages and the median number of words read correctly across the three passages was their ORF score. The median alternate form reliability for ORF is .94 (Good, Kaminski, Smith, & Bratten, 2001). Test-retest reliability for ORF calculated on a sub-sample of students was .96 (Catts, Petscher, Schatschneider, Bridges, & Mendoza, 2009). Nonword fluency (NWF) required participants to read vowel-consonant and consonant-vowel-consonant single-syllable nonwords. Scoring guidelines gave credit for correctly pronouncing individual sounds or for pronouncing the nonword as a blended unit.

Peabody Picture Vocabulary Test (PPVT; 3rd ed.)

This measure of receptive vocabulary (Dunn & Dunn, 1997) required participants to point to one of four pictures that best matched a spoken vocabulary word. Median split-half reliability of the PPVT is .93, and median test-retest reliabilities are .93.

Florida Comprehensive Assessment Test (FCAT)

The reading comprehension subtest from the FCAT was used to measure reading comprehension. This subtest required students to read passages silently and to answer comprehension questions based on the passages.

Procedures

Operational definitions were created using the following procedures.

Low-achievement operational definitions were created for NWF and ORF by obtaining distributions and identifying participants whose performance fell at or below a 25th, 20th, 15th, 10th, 5th, or 3rd percentile cut-off.
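A percentile cut-off of this kind can be sketched as follows. This is an illustration only: the function name `flag_low_achievement`, the nearest-rank percentile convention, and the toy scores are assumptions, and the study may have computed percentiles differently.

```python
import math

def flag_low_achievement(scores, percentile):
    """Flag scores at or below the given percentile (e.g., 25 for the 25th)."""
    ranked = sorted(scores)
    # Nearest-rank convention: the cut-off is the smallest score with at least
    # `percentile` percent of the sample at or below it.
    k = max(1, math.ceil(percentile * len(ranked) / 100))
    cutoff = ranked[k - 1]
    return [s <= cutoff for s in scores]

# Hypothetical fluency scores for 20 students (1 through 20).
toy_scores = list(range(1, 21))
for pct in (25, 20, 15, 10, 5, 3):
    flagged = sum(flag_low_achievement(toy_scores, pct))
    print(f"{pct}th percentile cut-off flags {flagged} of {len(toy_scores)} students")
```

With tied scores, an "at or below" rule can flag more students than the nominal percentile, which is one reason observed incidence only roughly tracks the cut-off.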

To operationalize unexpected low achievement in NWF and ORF, each of these variables was regressed on vocabulary, which served as a proxy for verbal aptitude. Due to the strong correlations between PPVT vocabulary and other assessments of verbal aptitude (Dunn & Dunn, 1997), such as the Wechsler Intelligence Scale for Children (WISC-III Verbal IQ; Wechsler, 1991) (r = .91), vocabulary served as a valid proxy measure of verbal aptitude. The distribution of the residuals from this regression was then used to identify individuals who fell at or below the target percentile cut-offs.
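The residual approach can be sketched with a simple least-squares regression. The code below is a hypothetical illustration with made-up scores, not the authors' procedure in detail; `regression_residuals` is a name introduced here, and a single predictor is assumed.

```python
def regression_residuals(x, y):
    """Residuals from an ordinary least-squares regression of y on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # A negative residual means achievement is lower than vocabulary predicts.
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

# Hypothetical vocabulary (aptitude proxy) and oral reading fluency scores.
vocabulary = [85, 90, 100, 110, 115]
fluency = [20, 30, 25, 60, 65]
residuals = regression_residuals(vocabulary, fluency)
print([round(r, 1) for r in residuals])
```

In this toy data set the third student has the most negative residual even though the first student has the lowest raw fluency score, which shows how unexpected low achievement can select different students than simple low achievement. The residuals would then be ranked against the same percentile cut-offs.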

The RTI-based operational definition was a dual discrepancy version that took into account both low achievement and low growth. Latent growth curve modeling was used to obtain slopes and end-of-year intercepts for ORF. The slopes and end-of-year intercepts were converted to z scores and summed to create an RTI score that equally weighted slope and end-of-year intercept. The distribution of this summed z score was used in an identical manner to the previous distributions.
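The equal weighting of slope and end-of-year intercept can be sketched as below. The sketch takes the slopes and intercepts as given; in the study they came from latent growth curve modeling, which is not reproduced here. The function names and the four-student example are hypothetical, and the use of the population standard deviation for standardizing is an assumption.

```python
from statistics import mean, pstdev

def zscores(values):
    """Standardize values to mean 0 and standard deviation 1."""
    m, sd = mean(values), pstdev(values)
    return [(v - m) / sd for v in values]

def rti_scores(slopes, intercepts):
    """Equal-weight sum of standardized growth slope and end-of-year intercept."""
    return [zs + zi for zs, zi in zip(zscores(slopes), zscores(intercepts))]

# Hypothetical growth slopes (words per week) and end-of-year fluency levels.
slopes = [0.5, 1.0, 1.5, 2.0]
intercepts = [20, 50, 40, 70]
scores = rti_scores(slopes, intercepts)
print([round(s, 2) for s in scores])
```

A student scores low on this composite only when growth and final level are low in combination, which is the sense in which the definition is a dual discrepancy.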

The just described operational definitions were identical to those used by Waesche et al. (2011). We also developed new operational definitions for the present study.

Previous research has demonstrated that defining reading disability as a discrepancy between listening and reading comprehension results in accurate estimates of stability, gender ratio, and prevalence as do individual assessments of reading (see Badian, 1999). Thus, we used this same discrepancy in the present study. The reading comprehension poorer than listening comprehension operational definition was created by regressing reading comprehension on vocabulary, which served here as a proxy for listening comprehension, and then using the distribution of residuals in an identical manner to that described above. Vocabulary is a strong predictor of listening comprehension (Senechal, Oullettee, & Rodney, 2007), and as such, is also a valid proxy measure of listening comprehension.

We also developed something analogous to a DSM-style operational definition by adopting an operational definition that was any one, two, three, or four of the following previously described operational definitions: low achievement in nonword reading; low achievement in ORF; poor response to instruction; and reading comprehension worse than listening comprehension. For this operational definition, the 25th percentile cut-point was used for the four individual criteria. We also developed a second, related operational definition that was any one (or two, etc.) or more criteria.
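The two symptom-count definitions can be sketched as follows. One assumption should be flagged: the sketch reads "any one, two, three, or four" as exactly that many criteria met, in contrast to the "or more" version. That reading is an interpretation, and the function names and the four example students are hypothetical.

```python
def symptom_count(flags):
    """Number of the four criteria a student meets (each flag is True/False)."""
    return sum(bool(f) for f in flags)

def classify(flags, n, or_more=False):
    """True if the student meets exactly n criteria, or n or more if or_more."""
    count = symptom_count(flags)
    return count >= n if or_more else count == n

# Hypothetical students: (low NWF, low ORF, poor RTI, reading comprehension
# worse than listening comprehension), each judged at the 25th percentile.
students = {
    "A": (True, False, False, False),
    "B": (True, True, True, False),
    "C": (True, True, True, True),
    "D": (False, False, False, False),
}

exactly_one = [s for s, f in students.items() if classify(f, 1)]
two_or_more = [s for s, f in students.items() if classify(f, 2, or_more=True)]
print(exactly_one)
print(two_or_more)
```

Under the exact-count reading, a student who gains or loses a single symptom between assessments changes category, whereas under the "or more" reading the same student can remain classified.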

Results

The main results are presented in Table 1. For each operational definition of reading disability, the first column represents the incidence or proportion of the sample that met the criterion or criteria in first grade, and the second column presents the same information for second grade. Kappa is presented in the third column, followed by ASAS in the fourth column, and consistency in the fifth and final column.

Table 1.

Incidence Rates, Kappa, Affected Status Agreement Statistics, and Consistency Values for Alternative Operational Definitions of Reading Disability

| Variable | Incidence G1 | Incidence G2 | Kappa | ASAS | Consistency |
|---|---|---|---|---|---|
| NWF25 | .30 | .21 | .40 | .38 | .47 |
| NWF20 | .25 | .17 | .38 | .34 | .42 |
| NWF15 | .18 | .12 | .36 | .29 | .37 |
| NWF10 | .12 | .07 | .33 | .24 | .31 |
| NWF5 | .05 | .03 | .35 | .23 | .29 |
| NWF3 | .03 | .02 | .38 | .25 | .31 |
| UNEXNWF25 | .30 | .23 | .38 | .37 | .47 |
| UNEXNWF20 | .24 | .18 | .36 | .32 | .42 |
| UNEXNWF15 | .18 | .13 | .34 | .28 | .37 |
| UNEXNWF10 | .12 | .08 | .31 | .23 | .31 |
| UNEXNWF5 | .06 | .04 | .27 | .18 | .24 |
| UNEXNWF3 | .04 | .02 | .23 | .15 | .21 |
| ORF25 | .25 | .22 | .59 | .52 | .65 |
| ORF20 | .19 | .17 | .58 | .48 | .62 |
| ORF15 | .15 | .13 | .57 | .46 | .59 |
| ORF10 | .09 | .08 | .55 | .41 | .55 |
| ORF5 | .04 | .04 | .56 | .41 | .53 |
| ORF3 | .02 | .02 | .56 | .41 | .57 |
| UNEXORF25 | .25 | .23 | .55 | .48 | .63 |
| UNEXORF20 | .20 | .18 | .52 | .44 | .58 |
| UNEXORF15 | .15 | .14 | .47 | .38 | .52 |
| UNEXORF10 | .10 | .09 | .42 | .31 | .45 |
| UNEXORF5 | .05 | .05 | .34 | .23 | .36 |
| UNEXORF3 | .03 | .03 | .29 | .19 | .31 |
| RTI25 | .24 | .24 | .52 | .47 | .64 |
| RTI20 | .19 | .19 | .51 | .43 | .61 |
| RTI15 | .13 | .14 | .49 | .39 | .58 |
| RTI10 | .09 | .09 | .46 | .34 | .53 |
| RTI5 | .04 | .04 | .47 | .33 | .52 |
| RTI3 | .02 | .03 | .45 | .30 | .52 |
| LCRC25 | .24 | .24 | .48 | .43 | .61 |
| LCRC20 | .19 | .19 | .46 | .39 | .57 |
| LCRC15 | .15 | .14 | .44 | .35 | .51 |
| LCRC10 | .10 | .09 | .39 | .29 | .44 |
| LCRC5 | .05 | .05 | .33 | .22 | .36 |
| LCRC3 | .03 | .03 | .29 | .18 | .39 |
| ANY1 | .17 | .17 | .14 | .16 | .28 |
| ANY2 | .06 | .07 | .08 | .08 | .15 |
| ANY3 | .09 | .09 | .25 | .19 | .32 |
| ANY4 | .10 | .07 | .41 | .30 | .39 |
| ANY1PLUS | .43 | .40 | .52 | .56 | .70 |
| ANY2PLUS | .26 | .23 | .57 | .51 | .65 |
| ANY3PLUS | .19 | .17 | .55 | .46 | .59 |
| ANY4PLUS | .10 | .07 | .41 | .30 | .39 |
| 2PLUS1PLUS | .26 | .40 | .48 | .48 | .83 |
| 3PLUS1PLUS | .19 | .41 | .42 | .40 | .88 |
| 4PLUS1PLUS | .10 | .40 | .25 | .23 | .91 |
| 4PLUS2PLUS | .10 | .23 | .38 | .30 | .76 |
| 4PLUS3PLUS | .10 | .17 | .44 | .34 | .66 |
| 3PLUS2PLUS | .19 | .23 | .55 | .47 | .71 |

Notes. G1 = first grade; G2 = second grade. NWF = Nonword Fluency. UNEXNWF = Unexpected Nonword Fluency. ORF = Oral Reading Fluency. UNEXORF = Unexpected Oral Reading Fluency. RTI = Response to Instruction. LCRC = Listening Comprehension Reading Comprehension Discrepancy. ANY = Any Symptom; ANYNPLUS = N or more Symptoms. The number following NWF, UNEXNWF, ORF, UNEXORF, RTI, and LCRC is the percentile cut-off.

Beginning with the first six rows that present stability for a low-achievement operational definition based on NWF performance at the 25th through 3rd percentiles, the incidence rates for first and second grade roughly track the percentile levels of the cut points. Regardless of the percentile at which the cut point was set, the one year stabilities were poor for Kappa, ASAS, and consistency. As the cut point percentile was decreased, the stabilities worsened, but more for the ASAS and consistency statistics than for Kappa. The larger drop off for ASAS and consistency relative to Kappa reflects their sensitivity to detecting stability specifically for the reading disabled classification. Kappa represents the average stability of the reading disabled and not reading disabled classifications. This result is consistent with the idea that the more stringent the cut point, the closer affected individuals are to the cut point and the more likely they will change upon repeated assessment.

The results for unexpected low NWF operational definition were highly similar to those obtained for low-achievement NWF, with the exception that stability appeared to drop off more for unexpected low-achievement in NWF compared to simple low achievement in NWF as the cut-off percentile became smaller. This may represent the fact that residuals, which are what the unexpected low achievement values represent, can be less stable than simple scores on a single measure.

The pattern of results for operational definitions based on low achievement in ORF was highly similar to that obtained for the comparable operational definitions based on NWF, with the exception that the values for ORF were substantially higher.

The pattern of results for unexpected low achievement in ORF mirrored that for unexpected low achievement in NWF. Stability again appeared to drop off more for unexpected low achievement in ORF than for simple low achievement in ORF as the cut-off percentile became smaller. This may reflect the fact that the unexpected low achievement in ORF scores were residuals rather than simple scores.

Moving down in the table to the RTI rows, the results were comparable to those obtained for low achievement in ORF, probably reflecting the fact that the RTI criteria of low achievement and low growth were based on ORF.

Turning to the operational definitions based on a discrepancy between reading comprehension and listening comprehension, we were pleasantly surprised to see that the stability values—Kappa, ASAS, and consistency—were actually higher than those obtained for low achievement in NWF although not as high as those obtained for low achievement in ORF. One reason to be concerned about the stability of a discrepancy between reading and listening comprehension is that it was based on a residual score, as were unexpected low performance in NWF and ORF.

The results displayed in the next rows for any one through four symptoms were surprising. We expected greater stability for an operational definition based on, for example, any one of four symptoms (i.e., low achievement in NWF, low achievement in ORF, low RTI, and low reading comprehension relative to listening comprehension) as opposed to specifying one particular symptom. Our rationale was that the four symptoms are correlated because they all are manifestations of word-level reading disability, but any one may or may not be present at a given time because of the cut point reliability problem. The cut-off used for the four symptoms was the 25th percentile, so it makes sense to compare the stability of the any symptom operational definition to that of the other definitions at the 25th percentile. Comparing, for example, any one symptom and low achievement in NWF at the 25th percentile cut-off, the stability of any one symptom was markedly lower than that for low achievement in NWF. The values for Kappa were .14 versus .40 for any one symptom and low achievement in NWF, respectively. Comparable values for ASAS were .16 and .38, and values for consistency were .28 and .47.

The next rows in Table 1 present the stability of operational definitions of having a given number of symptoms or more in the rows labeled ANYNPLUS, where N is from one to four symptoms. The stability of these operational definitions is dramatically better than that found for any one through four symptoms just reported above. In fact, the consistency of having any one symptom or more was .70, the highest value of any discussed so far. The fact that the stability rose dramatically for the operational definition of having N or more as opposed to exactly N symptoms supports our explanation of the low stability found for the operational definitions based on exactly N symptoms.

The final rows in Table 1 present data that address the question of what proportion of the students who met the criterion of having N or more symptoms in grade one had N or more symptoms in grade two. These consistency results were the highest of any operational definitions and were remarkably good overall. For example, a consistency value of .91 was obtained for the case of having four symptoms in first grade and one or more symptoms in second grade. This result indicates that an individual who meets all four symptoms in first grade has over a 90% chance of showing one or more symptoms of reading disability in second grade. The first grade incidence rate for having all four symptoms was .10 when the 25th percentile was used as the cut-off criterion for each symptom. The incidence rate would of course decline if a lower percentile criterion were adopted, and increase if a higher percentile criterion were adopted.
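The kind of consistency value described in this paragraph can be sketched as follows, assuming each student has a symptom count (0 to 4) in each grade. The function name, its parameters, and the counts are hypothetical illustrations, not the study's data or code.

```python
from typing import Sequence


def carryover_consistency(
    counts_g1: Sequence[int],
    counts_g2: Sequence[int],
    n_first: int,
    n_second: int,
) -> float:
    """Proportion of students with at least n_first symptoms in grade one
    who show at least n_second symptoms in grade two."""
    if len(counts_g1) != len(counts_g2):
        raise ValueError("both grades must cover the same students")
    # Keep the grade-two counts of students who met the grade-one criterion.
    followed = [c2 for c1, c2 in zip(counts_g1, counts_g2) if c1 >= n_first]
    if not followed:
        return float("nan")
    return sum(1 for c2 in followed if c2 >= n_second) / len(followed)


# Hypothetical symptom counts for ten students.
grade1_counts = [4, 4, 4, 3, 2, 1, 0, 0, 4, 4]
grade2_counts = [3, 1, 0, 2, 2, 0, 0, 1, 4, 2]

# Five students have all four symptoms in grade one; four of them
# still show one or more symptoms in grade two, giving 0.8.
print(carryover_consistency(grade1_counts, grade2_counts, n_first=4, n_second=1))
```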

Discussion

Overall, the results for operational definitions of reading disability that are based on any single criterion confirm previous findings of limited stability over time or agreement across operational definitions (Barth et al., 2008; Fuchs et al., 2004; Fletcher et al., 2013; Francis et al., 2005; Hale et al., 2010; Waesche et al., 2011; Wagner et al., 2011). Limited stability is also a concern for learning disabilities more broadly (Kavale, 2005; Scruggs & Mastropieri, 2002). Replication of previous findings of decreased stability for more stringent percentile cut-offs, especially for statistics such as the ASAS and consistency that target stability or agreement specifically for the determination that a reading disability is present, supports the explanation for the lack of stability in terms of measurement error around a cut-off applied to a continuous distribution for a low base-rate condition (Francis et al., 2005).

We initially were surprised that changing the operational definition to the presence of any single symptom as opposed to specifying which symptom reduced stability considerably whereas we had expected stability to improve. We looked further at the data to understand why the results were contrary to our predictions. In retrospect, we did not appreciate an unintended consequence of specifying a particular number of symptoms as an operational definition of reading disability. Because the symptoms are correlated, it indeed is likely to be the case that someone who has, for example, two symptoms in first grade might have either one, two, three, or four symptoms in second grade. For assessing the stability of the operational definition of low NWF, the only thing that determines stability is whether the criterion of low NWF was met in both first and second grade. The fact that criteria for other symptoms might be met in second grade does not penalize the stability of the criterion of low NWF. In contrast, the stability of an operational definition that says there are precisely two symptoms is penalized if there are three symptoms in second grade—even if two of them are the same symptoms that were present in first grade. Thus, this pattern of results makes sense given that the stability of an operational definition that specifies exactly how many symptoms will be present will be penalized if either new symptoms are present or an existing symptom is not present at follow up. The stability of an operational definition that is based on specifying one particular symptom is determined only by the presence of the same symptom at follow up regardless of what happens to other symptoms.
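The penalty described above can be illustrated with a small sketch that compares an "exactly N symptoms" rule with an "N or more symptoms" rule on the same data. The symptom counts are hypothetical, and consistency is assumed here to mean the proportion of first-grade cases that still meet the same rule in second grade.

```python
from typing import Callable, Sequence


def consistency(
    counts_g1: Sequence[int],
    counts_g2: Sequence[int],
    rule: Callable[[int], bool],
) -> float:
    """Share of students meeting `rule` in grade one who meet it again in grade two."""
    followed = [c2 for c1, c2 in zip(counts_g1, counts_g2) if rule(c1)]
    if not followed:
        return float("nan")
    return sum(1 for c2 in followed if rule(c2)) / len(followed)


# Hypothetical symptom counts (0 to 4) for eight students.
grade1_counts = [2, 2, 2, 2, 0, 0, 1, 3]
grade2_counts = [3, 2, 1, 4, 0, 1, 0, 3]

# Exactly two symptoms: gaining a symptom counts against stability.
exactly_two = consistency(grade1_counts, grade2_counts, lambda c: c == 2)

# Two or more symptoms: gaining a symptom does not count against stability.
two_or_more = consistency(grade1_counts, grade2_counts, lambda c: c >= 2)

print(exactly_two)   # 1 of 4 first-grade cases, i.e. 0.25
print(two_or_more)   # 4 of 5 first-grade cases, i.e. 0.8
```

In this toy example, two of the four students with exactly two symptoms in first grade gain symptoms by second grade. They lower the stability of the "exactly two" rule but not that of the "two or more" rule.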

Further, we can only speculate as to why stability for classification based on ORF is higher than that for NWF. It may be that ORF is measured more reliably than is NWF. The ORF score is the median number of words read correctly over three passages. The NWF score comes from a single, one-minute performance. It also may be the case that reading connected text for meaning, which is what ORF requires, recruits more cognitive processes and sources of knowledge than does the more isolated task of decoding nonwords. We are encouraged by the results obtained for operational definitions based on the presence of N or more symptoms that include RTI as one of the symptoms in a hybrid model. In particular, individuals who are identified on the basis of having multiple symptoms of reading disability initially are highly likely to have one or more symptoms of reading disability at follow up.

Implications for Practice

Our findings have several important practical implications. First, school-based identification using the criterion of N or more symptoms, with RTI as one of the symptoms, is promising because of its high stability over one year. Given that schools across the nation are adopting RTI-based identification methods as a means of diagnosing students with learning disabilities (Fuchs & Fuchs, 2006), this criterion has the potential to reduce “remissions” of reading disability (see Fergusson, Horwood, Caspi, Moffitt, & Silva, 1996; Shaywitz, Escobar, Shaywitz, Fletcher, & Makuch, 1992) that may be encountered if other, less stable criteria are used (e.g., identifying reading disability based on the presence of one specific criterion that may contain higher amounts of measurement error).

Since 2011, at least 13 states have partially required RTI for identification of specific learning disabilities, and many additional states have at least some guidelines for RTI implementation in place (Zirkel, 2011; Zirkel & Thomas, 2010). Over two-thirds of districts around the nation have either started the process of district-wide implementation or are fully implementing RTI (Response to Intervention Adoption Survey, 2011). However, because states are allowed to use their own identification criteria to determine a student’s learning disability status (Fletcher, 2008; IDEA, 2004), the stability of identification criteria at the state or district level is potentially questionable. Therefore, a hybrid approach that incorporates other symptoms in addition to RTI, such as decoding and sight word reading ability, has the potential to reduce the likelihood that a student will be eligible for services in one state and not in another merely because of differences in state-mandated identification criteria.

Second, this issue of stability (Fuchs et al., 2004; Fletcher et al., 2013) also has the potential to affect outcomes of targeted instruction and intervention practices. Increased variability in the identification of reading disability can influence which students are selected to take part in intervention programs (i.e., misidentification) as well as distort the apparent effectiveness of interventions. For instance, if students are identified as having a reading disability at the first assessment point, are selected to take part in a targeted intervention, and then, at the second assessment point after the intervention, are found not to meet the identification criteria for reading disability, there is a great deal of ambiguity surrounding the cause of this improvement. Thus, using more stable criteria that identify students as having reading disability at multiple assessment points, such as those described in the present paper, would allow researchers and practitioners to make more compelling claims about the effectiveness of interventions in remediating reading disability.

Future Directions

Important areas for future research include testing how the model performs for subgroups identified on the basis of gender, socioeconomic status, race or ethnic background, and English language proficiency (e.g., Friend, DeFries, & Olson, 2008; Rutter et al., 2004). Additional empirical studies as well as simulation studies could also be used to explore the optimal cut-offs to apply to particular symptoms. Alternatively, it is important to explore dimensional versions of the model in which the probability of having a reading disability is operationalized in terms of either a weighted or unit-weighted sum of scores from the underlying continuous distributions to which cut points were applied for the present models. Finally, it is important to incorporate variables that affect risk for the presence of reading disability (e.g., Catts, Fey, Zhang, & Tomblin, 2001), including sex, genetic risk, and poor phonological processing.
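One way to picture the dimensional version mentioned above is a composite formed from standardized scores on the continuous measures underlying the four symptoms. The sketch below is only an illustration under that assumption: the function name, the use of z-scores, and the example weights are hypothetical and are not part of the present study.

```python
from typing import Optional, Sequence


def composite_score(
    z_scores: Sequence[float],
    weights: Optional[Sequence[float]] = None,
) -> float:
    """Weighted sum of standardized scores; unit weights are used by default.

    Lower values indicate poorer performance across the measures.
    """
    if weights is None:
        weights = [1.0] * len(z_scores)  # unit-weighted sum
    if len(weights) != len(z_scores):
        raise ValueError("one weight is required per score")
    return sum(w * z for w, z in zip(weights, z_scores))


# Hypothetical z-scores for one student on four continuous measures.
student = [-1.0, -0.5, -2.0, 0.5]

print(composite_score(student))                        # unit-weighted: -3.0
print(composite_score(student, [2.0, 1.0, 1.0, 1.0]))  # first measure doubled: -4.0
```

A composite of this kind avoids applying a separate cut point to each symptom, at the cost of requiring a decision about how to weight the measures.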

There are at least three important limitations of our study that should be acknowledged. First, the results are based on brief measures that could easily be applied to large samples, as opposed to the longer and more intensive assessments that typically are individually administered to individuals with reading problems. Second, RTI was operationalized as response to what essentially would be considered Tier 1 general education as opposed to response to intensive, standardized interventions. Third, our sample was primarily drawn from Reading First schools, which serve a larger percentage of low SES students than typical public schools in the US. Therefore, it is important to replicate this study with samples from schools not in Reading First.

In conclusion, given the multifaceted nature of learning disabilities in general (Fuchs, Deshler, & Reschly, 2004; Mellard, Deshler, & Barth, 2004), our results provide initial empirical support for hybrid models of reading disability that include RTI among other symptoms (Fletcher et al., 2007, 2013; Kavale et al., 2008; U.S. Office of Special Education Programs, 2002; Wagner, 2008).

Acknowledgments

This research was supported by Grant P50 HD052120 from the Eunice Kennedy Shriver National Institute of Child Health and Human Development.

References

- American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 5. Arlington, VA: American Psychiatric Publishing; 2013.

- Badian NA. Reading disability defined as a discrepancy between listening and reading comprehension: A longitudinal study of stability, gender differences, and prevalence. Journal of Learning Disabilities. 1999;32(2):138–148. doi: 10.1177/002221949903200204.

- Barth AE, Stuebing KK, Anthony JL, Denton CA, Mathes PG, Fletcher JM, Francis DJ. Agreement among response to intervention criteria for identifying responder status. Learning and Individual Differences. 2008;18(3):296–307. doi: 10.1016/j.lindif.2008.04.004.

- Bateman BD. An educational view of a diagnostic approach to learning disabilities. In: Hellmuth J, editor. Learning disorders. Vol. 1. Seattle, WA: Special Child Publications; 1965. pp. 219–239.

- Bradley R, Danielson L, Hallahan DP, editors. Identification of learning disabilities: Research to practice. Mahwah, NJ: Erlbaum; 2002.

- Carlisle J, Kelcey B, Berebitsky D, Phelps G. Embracing the complexity of instruction: A study of the effects of teachers’ instruction on students’ reading comprehension. Scientific Studies of Reading. 2011;15(5):409–439. doi: 10.1080/10888438.2010.497521.

- Catts HW, Fey ME, Zhang X, Tomblin JB. Estimating the risk of future reading difficulties in kindergarten children: A research-based model and its clinical implementation. Language, Speech, and Hearing Services in Schools. 2001;32(1):38. doi: 10.1044/0161-1461(2001/004).

- Catts HW, Petscher Y, Schatschneider C, Bridges MS, Mendoza K. Floor effects associated with universal screening and their impact on the early identification of reading disabilities. Journal of Learning Disabilities. 2009;42(2):163–176. doi: 10.1177/0022219408326219.

- Compton DL, Fuchs D, Fuchs LS, Bryant JD. Selecting at-risk readers in first grade for early intervention: A two-year longitudinal study of decision rules and procedures. Journal of Educational Psychology. 2006;98(2):394–409. doi: 10.1037/0022-0663.98.2.394.

- Dunn LM, Dunn DM. Peabody Picture Vocabulary Test—Third Edition (PPVT-III) Circle Pines, MN: American Guidance Service; 1997.

- Fergusson DM, Horwood LJ, Caspi A, Moffitt TE, Silva PA. The (artefactual) remission of reading disability: Psychometric lessons in the study of stability and change in behavioral development. Developmental Psychology. 1996;32(1):132–140. doi: 10.1037/0012-1649.32.1.132.

- Flanagan DP, Ortiz SO, Alfonso VC, editors. Essentials for cross-battery assessment. New York: Wiley; 2007.

- Fletcher J. Identifying learning disabilities in the context of Response to Intervention: A hybrid model. 2008 Retrieved from http://www.rtinetwork.org/Learn/LD/ar/HybridModel.

- Fletcher JM, Lyon GR, Barnes M, Stuebing KK, Francis DJ, Olson RK, Shaywitz SE. Classification of learning disabilities: An evidence-based evaluation. In: Bradley R, Danielson L, Hallahan DP, editors. Identification of learning disabilities: Research to practice. Mahwah, NJ: Lawrence Erlbaum; 2002. pp. 185–250.

- Fletcher JM, Lyon GR, Fuchs LS, Barnes MA. Learning disabilities. New York, NY: Guilford; 2006.

- Fletcher JM, Lyon GR, Fuchs L, Barnes M. Learning disabilities: From identification to intervention. New York: Guilford Press; 2007.

- Fletcher JM, Stuebing KK, Morris RD, Lyon GR. Classification and definition of learning disabilities: A hybrid model. In: Swanson HL, Harris K, editors. Handbook of learning disabilities. 2. New York, NY: Guilford; 2013. pp. 33–50.

- Francis DJ, Fletcher JM, Stuebing KK, Lyon GR, Shaywitz BA, Shaywitz SE. Psychometric approaches to the identification of LD: IQ and achievement scores are not sufficient. Journal of Learning Disabilities. 2005;38(2):98–108. doi: 10.1177/00222194050380020101.

- Friend A, DeFries JC, Olson RK. Parental education moderates genetic influences on reading disability. Psychological Science. 2008;19(11):1124–1130. doi: 10.1111/j.1467-9280.2008.02213.x.

- Fuchs D, Deshler DD, Reschly DJ. National Research Center on Learning Disabilities: Multimethod Studies of Identification and Classification Issues. Learning Disability Quarterly. 2004;27(4):189–195. doi: 10.2307/1593672.

- Fuchs D, Fuchs LS. Introduction to response to intervention: What, why, and how valid is it? Reading Research Quarterly. 2006;41(1):93–99. doi: 10.1598/RRQ.41.1.4.

- Fuchs D, Fuchs LS, Compton DL. Identifying reading disabilities by responsiveness-to-instruction: Specifying measures and criteria. Learning Disability Quarterly. 2004;27(4):216–227. doi: 10.2307/1593674.

- Fuchs D, Fuchs LS, Mathes PG, Lipsey MW, Roberts PH. Is “learning disabilities” just a fancy term for low achievement? A meta-analysis of reading differences between low achievers with and without the label. In: Bradley R, Danielson L, Hallahan DP, editors. Identification of learning disabilities: Research to practice. The LEA series on special education and disability. Mahwah, NJ, US: Erlbaum; 2002. pp. 737–762.

- Fuchs D, Mock D, Morgan PL, Young CL. Responsiveness-to-intervention: Definitions, evidence, and implications for the learning disabilities construct. Learning Disabilities Research & Practice. 2003;18(3):157–171. doi: 10.1111/1540-5826.00072.

- Fuchs LS, Fuchs D, Speece DL. Treatment validity as a unifying construct for identifying learning disabilities. Learning Disability Quarterly. 2002;25(1):33–45. doi: 10.2307/1511189.

- Gersten R, Fuchs LS, Williams JP, Baker S. Teaching reading comprehension strategies to students with learning disabilities: A review of research. Review of Educational Research. 2001;71(2):279–320. doi: 10.3102/00346543071002279.

- Good RH, Kaminski RA, Smith S, Bratten J. Technical adequacy of second grade DIBELS Oral Reading Fluency passages (Tech. Rep. No. 8) Eugene: University of Oregon; 2001.

- Good RH, Kaminski RA, Smith S, Laimon D, Dill S. Dynamic Indicators of Basic Early Literacy Skills. 5. Eugene: University of Oregon; 2001.

- Hale J, Alfonso V, Berninger V, Bracken B, Christo C, Clark E, Yalof J. Critical issues in response-to-intervention, comprehensive evaluation, and specific learning disabilities identification and intervention: An expert white paper consensus. Learning Disability Quarterly. 2010;33(3):223–236. Retrieved from http://www.iapsych.com/articles/hale2010.pdf.

- Hale JB, Fiorello C, Miller J, Wenrich K, Teodori A, Henzel J. WISC-IV interpretation for specific learning disabilities and intervention: A cognitive hypothesis testing approach. In: Prifitera A, Saklofske D, Weiss L, editors. WISC-IV clinical assessment and intervention. 2. New York, NY: Elsevier; 2007. pp. 109–171.

- Johnson E, Mellard DF, Fuchs D, McKnight MA. Responsiveness to intervention (RTI): How to do it. Lawrence, KS: National Research Center on Learning Disabilities; 2006.

- Kavale KA. Setting the record straight on learning disabilities and low achievement: The tortuous path of ideology. Learning Disabilities Research & Practice. 1995;10:145–152.

- Kavale KA. Identifying specific learning disability: Is responsiveness to intervention the answer? Journal of Learning Disabilities. 2005;38:553–562. doi: 10.1177/00222194050380061201.

- Kavale KA, Kauffman JM, Bachmeier RJ, LeFever GB. Response-to-intervention: Separating the rhetoric of self-congratulation from the reality of specific learning disability identification. Learning Disability Quarterly. 2008;31(3):135–150.

- Learning Disabilities Roundtable. Specific learning disabilities: Finding common ground. Washington DC: U.S. Department of Education, Office of Special Education Programs, Office of Innovation and Development; 2002.

- Lyon GR. Toward a definition of dyslexia. Annals of Dyslexia. 1995;45:3–27. doi: 10.1007/BF02648210.

- Mellard DF, Deshler DD, Barth A. LD identification: It’s not simply a matter of building a better mousetrap. Learning Disability Quarterly. 2004;27(4):229–242. doi: 10.2307/1593675.

- Naglieri JA. The discrepancy-consistency approach to SLD identification using the PASS theory. In: Flanagan DP, Alfonso VC, editors. Essentials of specific learning disability identification. New York: Wiley; 2010. pp. 145–172.

- National Joint Committee on Learning Disabilities. Responsiveness to intervention and learning disabilities: Concepts, benefits and questions. Alexandria, VA: 2005.

- Pennington BF, Peterson RL. Specific learning disorder. In: Tasman A, Lieberman J, Kay J, First M, Riba M, editors. Psychiatry. 4. New York: John Wiley & Sons; in press.

- President’s Commission on Excellence in Special Education. A new era: Revitalizing special education for children and their families. Washington, DC: United States Department of Education; 2002.

- Quinn JM, Wagner RK. Gender differences in reading impairment and in the identification of impaired readers: Results from a large scale study of at-risk readers. Journal of Learning Disabilities. in press. doi: 10.1177/0022219413508323.

- Rutter M, Caspi A, Fergusson D, Horwood LJ, Goodman R, Maughan B, Carroll J. Sex differences in developmental reading disability. JAMA: the journal of the American Medical Association. 2004;291(16):2007–2012. doi: 10.1001/jama.291.16.2007.

- Shaywitz SE, Escobar MD, Shaywitz BA, Fletcher JM, Makuch R. Evidence that dyslexia may represent the lower tail of a normal distribution of reading ability. The New England Journal of Medicine. 1992;326(3):145–150. doi: 10.1056/NEJM199201163260301.

- Scruggs TE, Mastropieri MA. On babies and bathwater: Addressing the problems of identification of learning disabilities. Learning Disability Quarterly. 2002;25:155–168. doi: 10.2307/1511299.

- Senechal M, Ouellette G, Rodney D. The misunderstood giant: On the predictive role of early vocabulary to future reading. In: Dickinson DK, Neuman SB, editors. Handbook of early literacy. Vol. 2. New York, NY: Guilford Press; 2007. pp. 173–184.

- Shaywitz BA, Fletcher JM, Holahan JM, Shaywitz SE. Discrepancy compared to low achievement definitions of reading disability: Results from the Connecticut Longitudinal Study. Journal of Learning Disabilities. 1992;25:639–648. doi: 10.1177/002221949202501003.

- Snowling MJ. Dyslexia: A hundred years on. British Medical Journal. 1996;313:1096. doi: 10.1136/bmj.313.7065.1096.

- Snowling MJ. Dyslexia. 2. Oxford: Blackwell Publishers; 2000.

- Spectrum K12, American Association of School Administrators, Council of Administrators of Special Education, National Association of State Directors of Special Education, & State Title 1 Directors. Response to intervention (RTI) adoption survey. Spectrum K12. 2011 Retrieved from http://rti.pearsoned.com/docs/RTIsite/2010RTIAdoptionSurveyReport.pdf.

- Speece D. Classification of learning disabilities: Convergence, expansion, and caution. In: Bradley R, Danielson L, Hallahan D, editors. Identification of learning disabilities: Research to practice. Mahwah NJ: Erlbaum; 2002. pp. 467–519.

- Stanovich KE. Does dyslexia exist? Child Psychology & Psychiatry & Allied Disciplines. 1994;35(4):579–595. doi: 10.1111/j.1469-7610.1994.tb01208.x.

- Stanovich KE. Progress in understanding reading: Scientific foundations and new frontiers. New York, NY: Guilford Press; 2000.

- Sternberg RJ, Grigorenko EL. Difference scores in the identification of children with learning disabilities: It’s time to use a different method. Journal of School Psychology. 2002;40(1):65–83. doi: 10.1016/S0022-4405(01)00094-2.

- Stuebing KK, Fletcher JM, Branum-Martin L, Francis DJ. Evaluation of the technical adequacy of three methods for identifying specific learning disabilities based on cognitive discrepancies. School Psychology Review. 2012;41(1):3–22.

- Stuebing KK, Fletcher JM, LeDoux JM, Lyon GR, Shaywitz SE, Shaywitz BA. Validity of IQ-discrepancy classification of reading disabilities: A meta-analysis. American Educational Research Journal. 2002;39:469–518.

- Torgesen JK. Empirical and theoretical support for direct diagnosis of learning disabilities by assessment of intrinsic processing weaknesses. In: Bradley R, Danielson L, Hallahan D, editors. Identification of learning disabilities: Research to practice. Mahwah, NJ: Erlbaum; 2002. pp. 565–650.

- U.S. Department of Education. Individuals with Disabilities Education Improvement Act of 2004, Pub. L. No. 108-446 § 1400. 2004.

- U.S. Office of Education. First annual report of the National Advisory Committee on Handicapped Children. Washington, DC: U.S. Department of Health, Education and Welfare; 1968.

- U.S. Office of Special Education Programs, National Joint Committee on Learning Disabilities. Specific learning disabilities: Finding common ground. 2002.

- VanDerHeyden AM, Witt JC, Gilbertson D. A multi-year evaluation of the effects of a response to intervention (RTI) model on identification of children for special education. Journal of School Psychology. 2007;45(2):225–256. doi: 10.1016/j.jsp.2006.11.004.

- Vaughn S, Fuchs LS. Redefining learning disabilities as inadequate response to instruction: The promise and potential problems. Learning Disabilities Research & Practice. 2003;18(3):137–146. doi: 10.1111/1540-5826.00070.

- Vellutino FR, Fletcher JM. Developmental dyslexia. In: Snowling M, Hulme C, editors. The Science of Reading: A Handbook. Malden: Blackwell Publishing; 2005. pp. 363–378.

- Vellutino FR, Scanlon DM, Small S, Fanuele DP. Response to intervention as a vehicle for distinguishing between children with and without reading disabilities: Evidence for the role of kindergarten and first-grade interventions. Journal of Learning Disabilities. 2006;39(2):157–169. doi: 10.1177/00222194060390020401.

- Waesche JB, Schatschneider C, Maner JK, Ahmed Y, Wagner RK. Examining agreement and longitudinal stability among traditional and RTI-based definitions of reading disability using the affected-status agreement statistic. Journal of Learning Disabilities. 2011;44:296–307. doi: 10.1177/0022219410392048.

- Wagner RK. Rediscovering dyslexia: New approaches for identification and classification. In: Reid G, Fawcett A, Manis F, Siegel L, editors. The handbook of dyslexia. Thousand Oaks, CA: Sage; 2008. pp. 174–191.

- Wagner RK, Torgesen JK, Rashotte CA. The Comprehensive Test of Phonological Processing. Austin, TX: Pro-Ed Publishers; 1999.

- Wagner RK, Waesche JB, Schatschneider C, Maner JK, Ahmed Y. Using response to intervention for identification and classification. In: McCardle P, Miller B, Lee J, Tzeng O, editors. Dyslexia across languages: Orthography and the brain-gene-behavior link. Baltimore, MD: Brookes Publishing; 2011. pp. 202–213.

- Wechsler D. The Wechsler Intelligence Scale for Children—Third Edition (WISC-III) San Antonio, TX: The Psychological Corporation; 1991.

- Zirkel PA. RTI confusion in the case law and the legal commentary. Learning Disability Quarterly. 2011;34(4):242–247.

- Zirkel PA, Thomas LB. State laws and guidelines for implementing RTI. Teaching Exceptional Children. 2010;43(1):60–73.