Abstract

The striatum is critical for reward-guided and habitual behavior. Anatomical and interference studies suggest functional heterogeneity within striatum. Medial regions, such as nucleus accumbens core and dorsal medial striatum, play roles in goal-directed behavior, whereas dorsal lateral striatum is critical for the control of habitual action. Subdivisions of striatum are topographically connected with different cortical and subcortical structures, forming channels that carry information related to limbic, associative, and sensorimotor functions. Here, we describe data showing that as one progresses from ventral-medial to dorsal-lateral striatum, there is a shift from more prominent value encoding to activity more closely related to associative and motor aspects of decision-making. In addition, we describe data suggesting that striatal circuits work in parallel to control behavior and that regions within striatum can compensate for each other when functions are disrupted.

Keywords: striatum, nucleus accumbens, rat, monkey, value, habit, reward, goal, single unit

Introduction

Decision-making is governed by goal-directed and stimulus-response (S-R) driven mechanisms, with the former being more closely associated with medial regions of striatum, including nucleus accumbens core (NAc) and dorsal medial striatum (DMS), and the latter with dorsal lateral striatum (DLS). During learning, the transition from goal-directed behavior to S-R driven habits is thought to depend on “spiraling” connectivity from ventral-medial regions in striatum to dopamine (DA) neurons, which then project to more dorsal lateral portions of striatum (Houk, 1995; Haber et al., 2000; Joel et al., 2002; Ikemoto, 2007; Niv and Schoenbaum, 2008; Takahashi et al., 2008; van der Meer and Redish, 2011). This network (Figure 1) allows for feed-forward propagation of information from limbic networks to associative and sensorimotor networks (Haber et al., 2000; Haber, 2003; Ikemoto, 2007; Haber and Knutson, 2010).

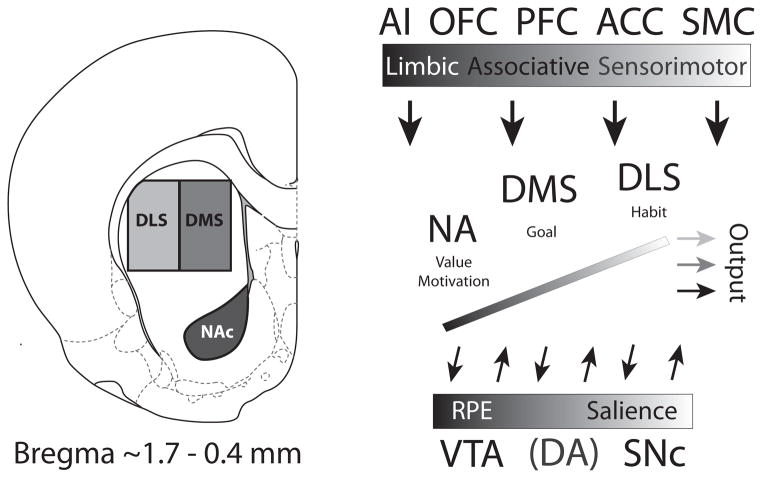

Figure 1.

Recording locations and connectivity of NAc, DMS and DLS. The boxes shown in the coronal section are approximations of recording sites from studies described in Figures 2–4 (reprinted from Paxinos G, Watson C. The Rat Brain, Compact Third Edition. London: Academic Press, 1997; 11–15. With permission from Elsevier). Recording sites for nucleus accumbens core (NAc) were ~1.6 mm anterior to bregma, 1.5 mm lateral to midline, and 4.5 mm ventral to brain surface (Roesch et al., 2009). Recording sites for dorsal medial striatum (DMS) were ~0.4 mm posterior to bregma, 2.6 mm lateral to midline, and 3.5 mm ventral to brain surface (Stalnaker et al., 2010). Both NAc and DMS receive projections from medial prefrontal cortex (mPFC) and anterior cingulate cortex (ACC); however, NAc is more heavily innervated by agranular insular cortex (AI). Recording sites for dorsal lateral striatum (DLS) were ~1.0 mm anterior to bregma, 3.2–3.6 mm lateral to midline, and 3.5 mm ventral to brain surface (Stalnaker et al., 2010; Burton et al., 2013). DLS receives predominantly sensorimotor information from sensorimotor cortex (SMC), whereas more ventromedial parts of striatum receive visceral information from AI. Striatal areas between NAc and DLS receive higher-order associative information from mPFC and ACC. Functional differentiation can be recognized not only through cortical inputs, but also through afferents arising from amygdala, hippocampus and thalamus (Voorn et al., 2004). Amygdalostriatal projections are heaviest ventrally and progressively taper off along a dorsolateral gradient, with sensorimotor parts of striatum only sparsely innervated. The lateral (A8) and medial (A10) dopamine neurons project predominantly dorsolaterally and ventromedially in striatum, respectively, whereas more centrally located dopamine neurons (A9) project broadly to the intermediate striatal zone, with some dorsal dominance (Voorn et al., 2004).
Progression from medial to lateral (VTA to SNc) reflects a shift from reward prediction error (RPE) encoding to salience encoding (Bromberg-Martin et al., 2010). Abbreviations: AI, agranular insula; OFC, orbitofrontal cortex; PFC, prefrontal cortex; ACC, anterior cingulate cortex; SMC, sensorimotor cortex; NA, nucleus accumbens; DMS, dorsal medial striatum; DLS, dorsal lateral striatum; VTA, ventral tegmental area; SNc, substantia nigra pars compacta; DA, dopamine.

Here, we review neural correlates from our labs related to reward-guided decision-making in NAc, DMS, and DLS (Figure 1). We focus specifically on neural correlates from studies in which animals performed the same behavioral task, allowing direct comparison across regions. Along the way, we describe neural and behavioral changes that occur when these subdivisions are selectively disrupted, offering insight into how these networks guide decision-making. These studies suggest that different regions of striatum can compensate for each other when function in one is disrupted, and therefore that these structures can work in parallel.

The review is broken down into three sections based on popular ways to subdivide striatum. The classic division has been along the dorsal-ventral axis. We will begin our discussion of neural correlates by focusing on neural selectivity at the extremes of this division, nucleus accumbens and dorsal lateral striatum (Figure 1). Next, we will examine correlates from dorsal striatum along the medial-lateral axis. This work has focused on the finding that DMS and DLS function can be clearly dissociated using devaluation and contingency degradation paradigms, revealing their respective roles in goal-driven and habitual behaviors (Balleine and O’Doherty, 2010). Finally, we will discuss a synthesis of the dorsal-ventral and medial-lateral distinctions, namely, a ventromedial-to-dorsolateral functional organization based on connectivity (Voorn et al., 2004; Haber and Knutson, 2010; Nakamura et al., 2012). Afferents innervating striatum progress from limbic to associative to sensorimotor, moving from ventral-medial to central to dorsal-lateral striatum, respectively (Haber et al., 2000; Haber, 2003; Voorn et al., 2004; Haber and Knutson, 2010). In this section, we will describe primate data illustrating how reward, motor, and cognitive neural correlates progress across the diagonal of striatum (Figure 5B). Collectively, these studies suggest that as one progresses from ventral-medial to dorsal-lateral striatum, there is a shift from more prominent value encoding to encoding that better reflects associative and sensorimotor functions.

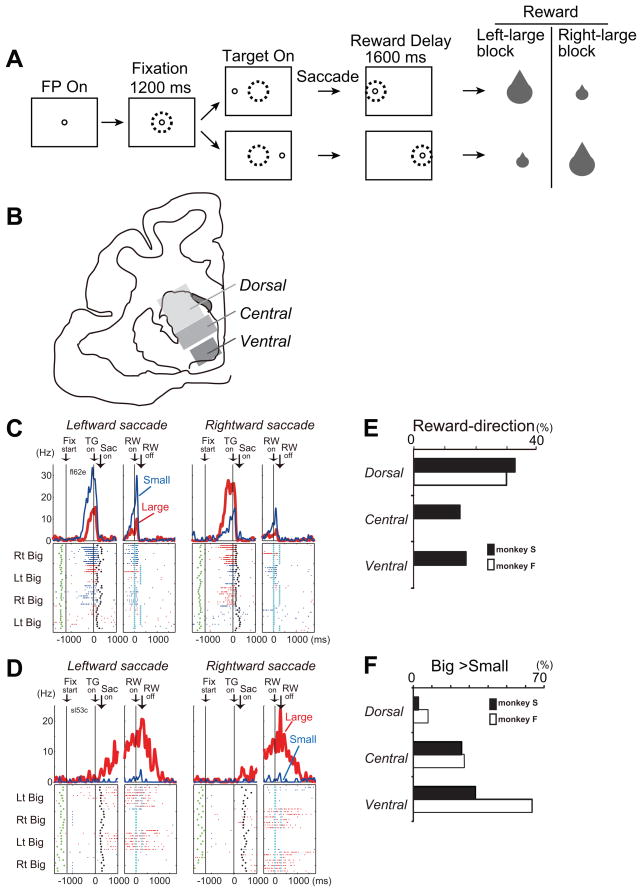

Figure 5.

A. Visually guided saccade task with an asymmetric reward schedule. After the monkey fixated on the fixation point (FP) for 1200 ms, the FP disappeared and a target cue immediately appeared on either the left or right, to which the monkey made a saccade to receive a liquid reward. The dotted circles indicate the direction of gaze. In a block of 20–28 trials (e.g., left-big block), one target position (e.g., left) was associated with a big reward and the other position (e.g., right) with a small reward. The position-reward contingency was then reversed (e.g., right-big block). B. Subdivisions of the primate caudate. C. An example of dorsal caudate neuronal activity selective for the rewarded direction. Note that this neuron showed stronger pre-target activity in the right-big block. Activity in big- and small-reward trials is shown in red and blue, respectively. The histograms and raster plots are shown in two sections; the left section is aligned to target cue onset (TG on), and the right is aligned to reward onset (RW on). Green dots, FP onset (Fix start); black dots, saccade onset (Sac on); light blue dots, reward onset and offset. D. An example of ventral caudate neuronal activity associated with the expectation of a big reward. E and F. Percentage of neurons that showed a reward-direction effect (E) and a big-reward preference (F) in each subdivision of the caudate. Modified from Nakamura et al., 2012.

Nucleus Accumbens Core versus Dorsal Lateral Striatum

Several studies have reported that neural activity in both NAc and DLS is correlated with the value of expected outcomes. We examined these correlates using an odor-guided decision-making task in which we manipulated anticipated value by independently varying reward size and the length of the delay preceding reward delivery (Roesch et al., 2009). As illustrated in Figure 2A, rats were trained to nose-poke in a central odor port where one of three different odors was presented. Odors predicted different stimulus-response associations: one odor signaled the rat to respond to the left (forced-choice), another signaled the rat to respond to the right (forced-choice), and a third signaled that the rat was free to choose left or right (free-choice) to obtain a liquid sucrose reward. Odors were presented in a pseudo-random sequence such that the free-choice odor was presented on 7/20 trials and the left and right odors were presented in equal proportions on the remaining trials.
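The trial sequence described above can be sketched as follows. This is a minimal illustration, assuming each trial is drawn independently (free-choice with probability 7/20, otherwise forced-left or forced-right with equal probability); the actual pseudo-random schedule may have imposed additional constraints, such as limits on repeated trial types, and the helper name `odor_sequence` is hypothetical.

```python
import random


# Hypothetical helper: independent per-trial draws are an assumption,
# not the published randomization procedure.
def odor_sequence(n_trials, p_free=7 / 20, seed=None):
    """Return a list of trial types: 'free', 'forced_left' or 'forced_right'."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        if rng.random() < p_free:
            trials.append("free")
        else:
            # Forced trials are split evenly between the two response directions.
            trials.append(rng.choice(["forced_left", "forced_right"]))
    return trials
```

Over a long session, for example `odor_sequence(20_000, seed=1)`, roughly 35% of trials are free-choice and the remainder divide about evenly between forced-left and forced-right.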

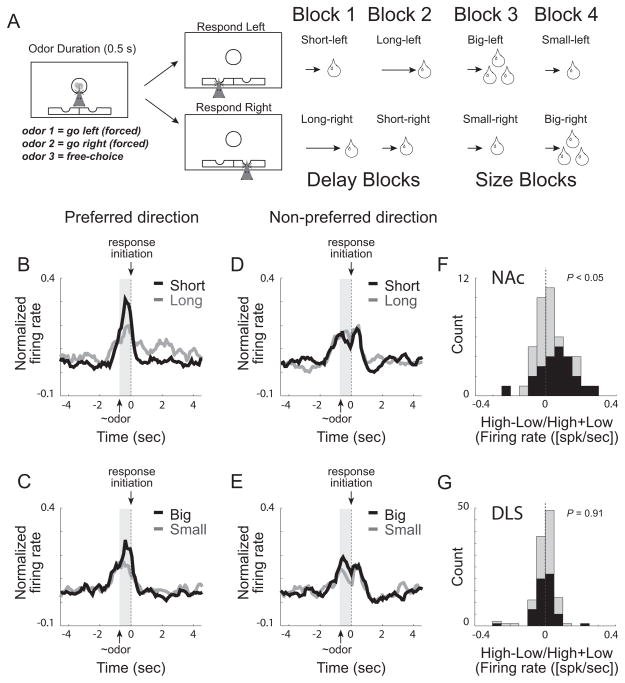

Figure 2.

A. Odor-guided choice task during which the delay to and size of reward were independently varied in ~60-trial blocks. Upon illumination of the house lights, rats started the trial by poking into the central port. After 500 ms, an odor signaled the trial type. For odors 1 and 2, rats had to go to the left or right fluid well to receive reward (forced-choice trials). A third odor signaled that the rat was free to choose either well to receive the reward associated with that response direction during the given block of trials. Across blocks 1–4, the delay to reward (blocks 1 and 2) and the size of reward (blocks 3 and 4) were manipulated: Short-delay = 0.5s wait before delivery of 1 bolus reward; Long-delay = 1–7s wait before 1 bolus reward; Big-reward = 0.5s wait before 2–3 boli reward; Small-reward = 0.5s wait before 1 bolus reward. Throughout each recording session, each of these trial types was associated with both directions and all three odors, allowing us to examine different associative correlates. Rats were more motivated by big rewards and short delays, as indicated by faster reaction times and better accuracy, as well as a higher probability of selecting the more valued reward on free-choice trials. B–E. Average firing over time for all cue-responsive NAc neurons. Cue-responsive neurons were defined as those that exhibited a significant increase in firing during odor sampling (odor onset to port exit) above baseline (1s before the start of the trial), averaged over all trial types. Preferred and non-preferred directions were defined by which direction elicited the strongest response averaged over outcomes and odors. Average firing was significantly higher for cues that predicted higher-valued outcomes, but only in the preferred direction. F and G. Distributions of value indices for NAc and DLS; value index = (high − low)/(high + low) during the cue epoch (high value = large, short; low value = small, long).
Neural activity was taken from odor onset to odor port exit. Black bars represent neurons that showed significant modulation by expected outcome (p < 0.05). Modified from Roesch et al., 2009 and Roesch and Bryden, 2011.

We manipulated the value of response directions by changing the delay to and size of reward in a series of independent trial blocks (Figure 2A). At the beginning of each recording session, one reward well was randomly designated as short (0.5s delay to reward delivery) and the other as long (1–7s delay to reward delivery). After ~60 trials, this response-outcome contingency switched (block two). In blocks three and four, we held the delay to reward delivery constant at 0.5s and manipulated reward size. In the third block, the response direction that had been associated with the long delay now produced a big reward (2 boli), while the reward in the other fluid well remained small (1 bolus). Finally, in block four, the response-outcome contingencies reversed one last time (Figure 2A).
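The four-block design can be written down as a lookup table. The sketch below is a hypothetical encoding, not the lab's task-control code: `first_short` names the well randomly designated short at session start, delays are stored as (min, max) ranges in seconds, and the big reward is set to 2 boli following the text (the Figure 2 caption gives 2–3 boli).

```python
from typing import NamedTuple, Tuple


class Outcome(NamedTuple):
    delay_s: Tuple[float, float]  # (min, max) wait before reward, in seconds
    boli: int                     # number of sucrose boli delivered


SHORT = Outcome((0.5, 0.5), 1)
LONG = Outcome((1.0, 7.0), 1)
BIG = Outcome((0.5, 0.5), 2)
SMALL = Outcome((0.5, 0.5), 1)


def block_outcome(block: int, well: str, first_short: str) -> Outcome:
    """Outcome delivered at `well` ('left' or 'right') in blocks 1-4."""
    if well not in ("left", "right") or first_short not in ("left", "right"):
        raise ValueError("wells must be 'left' or 'right'")
    other = "right" if first_short == "left" else "left"
    schedule = {
        1: {first_short: SHORT, other: LONG},  # delay block
        2: {first_short: LONG, other: SHORT},  # delay contingency reversed
        3: {first_short: BIG, other: SMALL},   # well that was long in block 2 is now big
        4: {first_short: SMALL, other: BIG},   # size contingency reversed
    }
    return schedule[block][well]
```

Within each block, the high-value well is the one returning `SHORT` (blocks 1 and 2) or `BIG` (blocks 3 and 4), which is the comparison used for the value analyses.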

During performance of this task, rats switched their side preference after each block shift to obtain the better reward. Rats chose the higher-value reward more often than the low-value reward on free-choice trials (~60/40 over the entire trial block), and performed faster and more accurately for high-value outcomes on forced-choice trials (reaction time: high value = 153 ms, low value = 172 ms; percent correct: high value = 84%, low value = 76%). After training, drivable electrodes were implanted in either NAc or DLS, and recordings were made over several months. We found that activity in both regions was significantly modulated by response direction and expected value.

In NAc, the majority of neurons fired significantly more strongly for odor cues that predicted high-value outcomes for actions made into the neuron’s response field (Roesch et al., 2009). This is illustrated by the population of cue-responsive NAc neurons in Figure 2B–E for both delay and size blocks. Neural activity was significantly stronger during presentation of cues that predicted large rewards and short delays, but only in the preferred direction (i.e., the direction that elicited the stronger response averaged over outcome manipulations). These data suggest that NAc activity represents the motivational value associated with chosen actions and might be critical for translating cue-evoked value signals into motivated behavior (Catanese and van der Meer, 2013; McGinty et al., 2013). Consistent with this hypothesis, we and others have shown that firing in NAc is significantly correlated with reaction time (Roesch et al., 2009; Bissonette et al., 2013; McGinty et al., 2013).

In contrast to NAc, the majority of neurons in DLS did not fire more strongly for high-value outcomes. This is illustrated in Figure 2F and G, which plot the distributions of value indices for NAc and DLS after learning (i.e., the last 10 trials in each block). The value index was computed as the difference between firing on high-value (i.e., short and big) and low-value (i.e., long and small) trials during cue sampling (odor onset to port exit), divided by their sum. For NAc, this distribution was significantly shifted in the positive direction, indicating a preponderance of cells that fired more strongly for more valued outcomes (Figure 2F; Wilcoxon, p < 0.05). By contrast, the distribution of value indices obtained from DLS was not significantly shifted from zero (Figure 2G).
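The value index and the test for a shifted distribution can be sketched in plain Python. This is a generic implementation, assuming a two-sided Wilcoxon signed-rank test against zero using the large-sample normal approximation (zeros dropped, tied magnitudes given average ranks, no variance correction for ties); the published analysis may have differed in these details, and in practice `scipy.stats.wilcoxon` would normally be used. The function names are illustrative.

```python
import math


def value_index(high_rate, low_rate):
    """(high - low) / (high + low); 0.0 when both firing rates are zero."""
    total = high_rate + low_rate
    return 0.0 if total == 0 else (high_rate - low_rate) / total


def wilcoxon_signed_rank(values):
    """Two-sided signed-rank test of a zero median (normal approximation).

    Returns (z, p). Zeros are dropped; tied magnitudes receive average ranks.
    """
    nonzero = [v for v in values if v != 0]
    n = len(nonzero)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(nonzero[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        # Find the run of equal magnitudes starting at sorted position i.
        j = i
        while j + 1 < n and abs(nonzero[order[j + 1]]) == abs(nonzero[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, v in zip(ranks, nonzero) if v > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p
```

Applied to one index per neuron, a significantly positive `z` corresponds to the rightward shift described for NAc, while a non-significant `z` corresponds to the DLS result.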

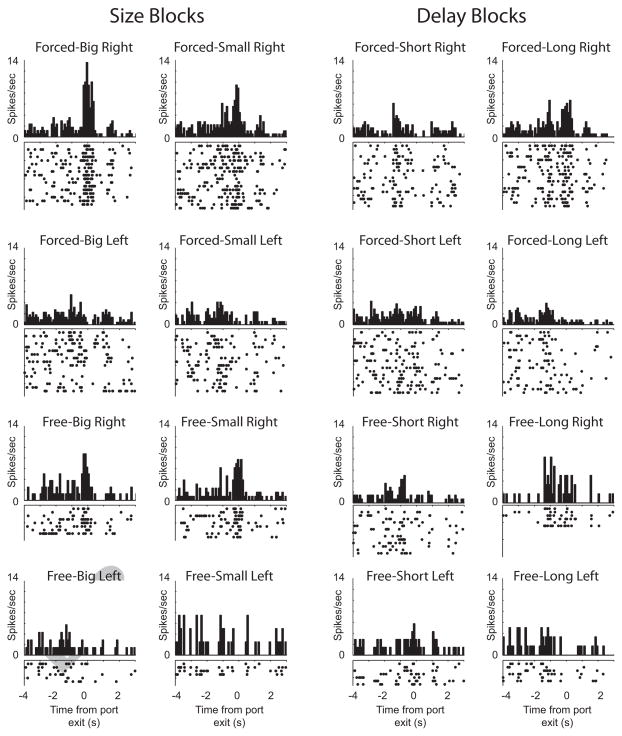

Importantly, this is not to say that activity in DLS was unaffected by the identity of expected outcomes. Over 40% of DLS neurons were significantly modulated by the outcome expected at the end of the trial; however, the counts of neurons that fired maximally for each trial type were equally distributed across outcome types (i.e., short-delay, long-delay, large-reward, and small-reward) (Stalnaker et al., 2010; Burton et al., 2013). Unlike NAc firing, DLS firing was remarkably associative, as illustrated by the single-neuron example in Figure 3. This neuron fired most strongly when the odor cue predicted a large reward and was directionally tuned, firing more strongly for movements made to the right (i.e., the direction contralateral to the recording site).

Figure 3.

Single cell example from DLS showing activity during performance of the odor-guided size and delay choice task described in Figure 2A (modified from Burton et al., 2013).

These results suggest that neural correlates in NAc are more closely tied to the motivational level associated with choosing higher-value goals, whereas correlates in DLS are more associative, representing expected outcomes and response directions across a range of stimuli. Thus, both NAc and DLS process task parameters during performance, yet it is unclear whether these areas encode this information in parallel or whether correlates in one area depend on the other. The spiraling connectivity of the DA system suggests that evaluative prediction signals arising from NAc might be necessary to relay reward prediction errors to more dorsal-lateral regions of striatum, so that associations can be updated when contingencies change. If there is indeed functional connectivity between them, especially from NAc to DLS, then manipulating NAc signals should affect task-related activity in DLS. To address this issue, we recorded from DLS in rats with unilateral NAc lesions (Burton et al., 2013).

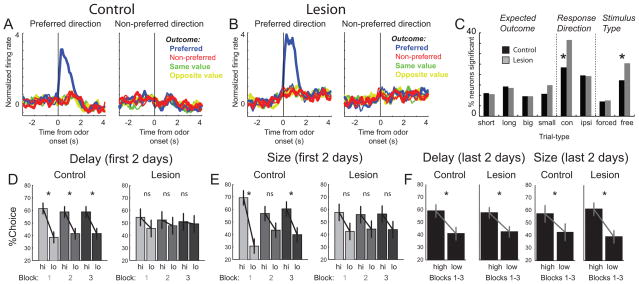

Surprisingly, we found that task-related neural selectivity in DLS was enhanced, not attenuated, by NAc lesions (Figure 4A–C). At the population level, the difference in firing between preferred and non-preferred trial types was larger in rats with NAc lesions (Figure 4A and B). Further, the counts of neurons encoding the direction of the behavioral response and the identity of the stimulus that triggered that response were elevated after NAc lesions (Figure 4C; Response Direction, Stimulus Type). Although NAc lesions affected stimulus and response encoding in DLS, they had no impact on outcome encoding in DLS: the counts of neurons encoding expected outcomes were similar across groups, and each of the four outcome types was still equally represented (Figure 4C; Expected Outcome). We concluded that NAc lesions enhance selectivity for stimuli and responses in DLS while leaving expected-outcome encoding intact (Burton et al., 2013).
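Comparisons of neuron counts between groups, such as the proportion of DLS neurons encoding response direction in control versus NAc-lesioned rats (Figure 4C reports chi-square tests), can be sketched as a Pearson chi-square test on a 2×2 table. This is a minimal standard-library version, assuming no Yates continuity correction; the counts in the usage line are hypothetical and are not the published data.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test (1 df, no continuity correction).

    Table layout (illustrative):
                    selective   not selective
        control         a             b
        lesion          c             d
    Returns (chi2, p).
    """
    n = a + b + c + d
    row1, row2, col1, col2 = a + b, c + d, a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column needs at least one count")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 df, chi2 = z**2, so p = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Hypothetical counts: 30/100 selective neurons in controls vs 50/100 after lesion.
chi2, p = chi_square_2x2(30, 70, 50, 50)
```

The closed-form 2×2 expression avoids computing expected counts cell by cell; for larger tables a general contingency-table routine would be the natural choice.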

Figure 4.

Stimulus and response encoding in DS was enhanced after VS lesions. A–B. Average firing rate for controls (A) and lesioned rats (B) on free- or forced-choice trials, whichever elicited the stronger response. All trials are referenced to the trial type that elicited maximal firing during odor sampling. Data were normalized by subtracting the mean and dividing by the standard deviation (Z score). Blue = preferred outcome; red = non-preferred outcome; green = same value; yellow = opposite value; thin = first 5 trials; thick = last 5 trials. C. Height of each bar indicates the percentage of neurons that showed a main effect or interaction of outcome (short, long, big and small), direction (contralateral and ipsilateral) and odor type (free and forced choice) for increasing- and decreasing-type neurons. * p < 0.05; chi-square. D–F. Percent choice on free-choice trials for controls (n = 5) and rats with bilateral lesions (n = 5) during the first and last 2 days of testing. Each day, rats performed 3 blocks of either size or delay trials, across 4 days for each manipulation. For the first 2 days of testing, scores are broken down by the three blocks to show that VS lesions impaired performance in all three trial blocks. High (hi) = short and large. Low (lo) = long and small. Asterisks indicate planned comparisons revealing statistically significant differences (t test, p < 0.05). Error bars indicate SEM. For more information, see Burton et al., 2013.
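The Z-score normalization used for the population plots can be sketched as below. The caption does not specify whether the mean and standard deviation were taken across time bins, trials, or trial types, or whether the population or sample standard deviation was used; this sketch assumes each neuron is normalized over its own vector of rates using the population standard deviation, and the function names are hypothetical.

```python
import statistics


def z_score(rates):
    """Subtract the mean and divide by the (population) standard deviation."""
    mu = statistics.mean(rates)
    sd = statistics.pstdev(rates)
    if sd == 0:
        return [0.0 for _ in rates]  # a flat response carries no modulation
    return [(r - mu) / sd for r in rates]


def population_average(neurons):
    """Z-score each neuron's rate vector, then average element-wise across neurons."""
    normalized = [z_score(rates) for rates in neurons]
    return [statistics.mean(column) for column in zip(*normalized)]
```

Normalizing each neuron first keeps high-rate cells from dominating the population average: a neuron firing at 10 and 30 spikes/s and one firing at 1 and 3 spikes/s contribute equally.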

These data suggest that neural selectivity in DLS does not depend on NAc and that DLS can compensate for the loss of NAc function. Consistent with this hypothesis, the disruption of choice behavior after bilateral NAc lesions was transient, and rats recovered the ability to choose the more valued action accurately over several days of training (Figure 4D–F) (Burton et al., 2013). Although we cannot prove it, we suspect that rats normally use goal-directed mechanisms to perform free-choice trials but, after loss of NAc, rely more heavily on S-R processes to perform the task. This interpretation is consistent with the enhanced stimulus and response correlates observed in DLS after NAc lesions. The hypothesis that DLS can compensate for NAc is further supported by data showing that the same NAc lesions improved performance on a two-way avoidance paradigm, a known DLS-dependent behavior (Lichtenberg et al., 2013).

Similar results have been described for NAc after loss of DLS function. Elimination of the indirect pathway in DLS has been shown to impair accuracy in rats performing an auditory discrimination task. As in our study, rats recovered function over subsequent behavioral sessions, suggesting that some other brain region, possibly NAc, was compensating for lost DLS function. If so, then disrupting both NAc and DLS should prevent functional recovery. Indeed, Nishizawa and colleagues found that rats with impaired DLS function did not recover normal levels of performance when NAc was also lesioned (Nishizawa et al., 2012).

Together, these results suggest that NAc and DLS both normally guide behavior during reward-related tasks, and that each can compensate when the other is offline. The single-unit data suggest that NAc governs reward-guided decision-making by signaling the motivational value of expected goals, whereas DLS appears to better represent associations among outcomes, external stimuli, and actions. These roles are consistent with devaluation paradigms demonstrating that NAc and DLS are critical for goal-directed and habitual behaviors, respectively (Balleine and O’Doherty, 2010; Singh et al., 2010).

Medial versus Lateral Dorsal Striatum

So far, we have discussed the functional segregation between NAc and DLS, a common way to divide striatal function (i.e., along the dorsal-ventral plane). Other studies have focused on segregation of function across medial and lateral aspects of striatum, specifically within dorsal striatum (DS). Reward-related actions controlled by DS are thought to reflect the integration of two different learning processes, one that controls goal-directed behavior and another that governs the acquisition of habits (Balleine and O’Doherty, 2010). Goal-directed behavior is governed by neural representations of associations between responses and outcomes (R-O), whereas habit formation is controlled by associations between stimuli and responses (S-R). One way to assess the role that specific brain areas play in these two functions is to devalue the reward that the animal is anticipating. If behavior is under the control of the expected outcome (R-O), then that behavior should cease when the outcome is no longer desirable after devaluation. However, if behavior is stimulus-driven (S-R), then outcome devaluation should not affect behavioral output. This procedure has demonstrated that both NAc and DMS lesions impair goal-directed behavior, leaving the animal’s behavior stimulus-driven and habitual. In contrast, DLS lesions impair the formation of habits, leaving the animal’s behavior under the control of anticipated goals (Balleine and O’Doherty, 2010).
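The logic of the devaluation test can be expressed as a toy model. This is purely illustrative and hypothetical: it assumes that responding under R-O control scales linearly with the current value of the outcome (scaled from 0 to 1) and that responding under S-R control ignores it. The lesion mapping simply restates the findings summarized above (Balleine and O’Doherty, 2010).

```python
# Which controller is left in charge after each lesion, as summarized in the text.
CONTROLLER_AFTER_LESION = {"NAc": "habit", "DMS": "habit", "DLS": "goal"}


def responding_after_devaluation(baseline_rate, outcome_value, controller):
    """Toy prediction of response rate after devaluation.

    outcome_value: current desirability of the outcome, from 0 (fully devalued)
    to 1 (undevalued). 'goal' = response-outcome (R-O) control,
    'habit' = stimulus-response (S-R) control.
    """
    if not 0.0 <= outcome_value <= 1.0:
        raise ValueError("outcome_value must lie in [0, 1]")
    if controller == "goal":
        return baseline_rate * outcome_value  # tracks the outcome's current value
    if controller == "habit":
        return baseline_rate  # insensitive to the outcome's current value
    raise ValueError(f"unknown controller: {controller!r}")
```

Under this toy model, an animal with a DMS or NAc lesion keeps responding for a fully devalued outcome, whereas an animal with a DLS lesion stops.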

These experiments clearly show that DMS and DLS are important for goal- and habit-driven behavior, respectively. However, it is unclear exactly which aspects of behavior are computed in each area. To examine this, we compared the activity of single neurons in DMS and DLS in rats performing the same odor-guided size/delay decision-making task described in Figure 2A (Stalnaker et al., 2010; Burton et al., 2013). We found that activity patterns in both DMS and DLS reflected a full spectrum of associations among external stimuli, behavioral responses, and possible outcomes, as illustrated by the single-cell example described earlier (Figure 3). Remarkably, we found no major differences in the type of information carried by DMS and DLS neurons during odor sampling or execution of the behavioral response, suggesting that both DMS and DLS carry task correlates related to stimuli, responses and expected outcomes. Note that firing patterns in DMS and DLS differed from those described earlier in NAc, where the majority of neurons exhibited elevated firing when the more valuable reward was expected (Figure 2B–E).

Although there were no apparent differences between DMS and DLS at the time of the decision, differences did emerge around the time of unexpected reward delivery and omission (i.e., the first 10 trials after block switches; Figure 2A). A subset of fast-firing neurons in DMS showed action-specific reward prediction error correlates, firing more or less strongly when reward was better or worse than expected, respectively (Stalnaker et al., 2012). Remarkably, these prediction error signals were present only in each neuron’s non-preferred direction, possibly providing a mechanism by which prediction errors could update decision-making by selectively influencing a particular action-outcome representation. In contrast to DMS, we did not find prediction error correlates in either DLS or NAc (Roesch et al., 2009; Stalnaker et al., 2012).
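An action-specific prediction error of this kind can be illustrated with a standard delta-rule (Rescorla-Wagner-style) update in which only the value of the action just taken changes. This is a generic textbook sketch, not a model fitted in these studies; the learning rate `alpha` and the reward magnitudes are hypothetical, and the sketch does not capture the finding that errors appeared only in each neuron's non-preferred direction.

```python
def update_action_value(values, action, reward, alpha=0.2):
    """Delta-rule update restricted to the chosen action.

    Returns (delta, new_values): delta > 0 when reward is better than expected,
    delta < 0 when it is worse (e.g., omission). Other actions are untouched.
    """
    delta = reward - values[action]
    new_values = dict(values)
    new_values[action] = values[action] + alpha * delta
    return delta, new_values


# Hypothetical block switch: the left well unexpectedly delivers 2 boli, then 0.
values = {"left": 1.0, "right": 1.0}
delta_up, values = update_action_value(values, "left", reward=2.0, alpha=0.5)
delta_down, values = update_action_value(values, "left", reward=0.0, alpha=0.5)
```

After these two trials the left-action value has moved to 0.75 while the right-action value is still 1.0, showing how an error tied to one action updates only that action-outcome estimate.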

Consistent with these findings, Gremel and Costa (2013) have recently shown that neural activity in both DMS and DLS carries signals related to both goal-directed and habitual action. However, they found that modulation of firing in DMS and DLS was more prominent when behavior was goal-directed and stimulus-driven, respectively. They could demonstrate this in their task (unlike ours) because it allowed a clean dissociation between goal- and stimulus-driven behavior, achieved by training animals on random-ratio or random-interval schedules of reinforcement, respectively. Reinforcer devaluation confirmed that behavior under these schedules was indeed goal- and habit-driven, respectively (Gremel and Costa, 2013). Consistent with previous work, DMS lesions hindered goal-directed decisions and promoted habitual behavior in their task, whereas DLS lesions hindered habitual responding, forcing animals to be goal-driven. In both cases, animals could still perform the task but used different functional mechanisms to control behavior. These results suggest that the neural correlates controlling goal-directed and habitual actions are processed in parallel, and that these regions are modulated differently depending on which mechanism ultimately controls behavior.

Other studies also report no large-scale differences between encoding in DLS and DMS, but do show a striking dissociation between areas during different task events and stages of training (Yin et al., 2009; Thorn et al., 2010). DLS neurons exhibit heightened selectivity during the action portions of various tasks, and this selectivity emerges steadily during task acquisition. In contrast, neural activity in DMS was selective early in acquisition, when animals were making cue-based decisions. Excitotoxic lesions targeting DMS and DLS confirmed the importance of these signals during learning. DMS lesions impaired performance during early phases of training but had no effect after extended training, whereas DLS lesions affected skill learning both early in training and throughout the remainder of training.

All of these studies provide clear evidence of parallel but differential firing patterns in DMS and DLS. During performance of our odor-guided reward task, the activity of neurons in both DMS and DLS reflects the full spectrum of task correlates. Encoding of a number of parameters, including response direction (left versus right), external stimuli (free-choice, forced-left, and forced-right), and expected outcome (delay: short versus long; size: large versus small), was observed in both areas. Other studies have reported clear distinctions between DMS and DLS in simpler tasks that examined activity across learning as animals transitioned from goal-directed to habitual performance. These studies demonstrate that both DMS and DLS carry signals related to goal-directed and habitual responding, but that the strength of these task correlates depends on the mechanism currently governing behavior (goal or habit).

From Ventromedial to Dorsolateral Striatum

The majority of rodent work in DS has focused on dissociating medial versus lateral function. Unfortunately, little attention has been given to the examination of neural correlates across the dorsal-ventral plane of DS. In monkeys, Nakamura and colleagues recently dissected neural correlates in primate caudate, closely examining differences not only in the medial-lateral plane, but also in the dorsal-ventral plane (Nakamura et al., 2012).

In this study, monkeys made saccades to target stimuli located to the left or right of fixation, much as our rats were signaled to move left or right depending on the identity of the odor presented at the central odor port. As in our experiment, reward size was biased between targets; for example, leftward saccades were associated with big rewards and rightward saccades with small rewards (Figure 5A). Over the course of a single recording session, the association between reward size and target location switched several times.

In this experiment, the caudate was divided into three subdivisions (Figure 5B): dorsal, central, and ventral, based on the anatomical connections with different cortical and subcortical structures reported by Haber and Knutson (2010). Specifically, the boundary between “dorsal” and “central” was set at the lower edge of the lateral ventricle, approximately 5 mm from the top edge of the caudate. The boundary between “central” and “ventral” was 8 mm from the top edge of the caudate. As described below, the observed functional segregation in the caudate accorded well with the tripartite subdivision observed in humans (Karachi et al., 2002), as well as with the progression of innervation from limbic to associative to sensorimotor afferents as one moves from ventral-medial to central to dorsal-lateral striatum (Haber and Knutson, 2010).
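The depth-based subdivision can be written as a simple classifier. This sketch assumes fixed cutoffs at 5 mm and 8 mm below the top edge of the caudate; in the study the dorsal/central boundary was the lower edge of the lateral ventricle, which only approximately corresponds to 5 mm, and how sites lying exactly on a boundary were assigned is not stated. The function name is hypothetical.

```python
def caudate_subdivision(depth_mm, dorsal_central_mm=5.0, central_ventral_mm=8.0):
    """Classify a recording depth (mm below the top edge of the caudate)."""
    if depth_mm < 0:
        raise ValueError("depth must be non-negative")
    if depth_mm < dorsal_central_mm:
        return "dorsal"
    if depth_mm < central_ventral_mm:
        return "central"
    return "ventral"
```

Exposing the cutoffs as parameters allows the dorsal/central boundary to be set per animal from the measured edge of the lateral ventricle rather than the nominal 5 mm.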

In the dorsal caudate, they found neural correlates related to the location and size of the expected outcome, as illustrated in Figure 5C. For this neuron, activity was high before target onset in trial blocks in which the larger reward was delivered for saccades to the right. Activity was high even on trials in which the eventual target instructed the monkey to saccade to the left for a small reward, demonstrating that activity reflected the relationship between the action and the larger reward. Unlike the NAc activity described above, the activity of these neurons represents the value of a specific action, not the value of the action that is about to occur. Consistent with this finding, others have demonstrated that neurons in DS can represent action value in different paradigms in primates and rats, as well as correlates related to the choice itself (Samejima et al., 2005; Lau and Glimcher, 2007, 2008; Stalnaker et al., 2010).
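The distinction between an action-value signal and a chosen-value signal can be made concrete. In this hypothetical sketch (the reward magnitudes are arbitrary placeholders), an action-value signal for rightward saccades depends only on the block, so it is identical on left-target and right-target trials, whereas a chosen-value signal depends on the target the monkey is about to saccade to.

```python
# Arbitrary placeholder magnitudes for the two reward sizes.
REWARD = {"big": 1.0, "small": 0.4}


def action_values(block):
    """Value of each saccade direction in a 'left-big' or 'right-big' block."""
    big_side = block.split("-")[0]
    if big_side not in ("left", "right"):
        raise ValueError(f"unknown block: {block!r}")
    return {side: REWARD["big"] if side == big_side else REWARD["small"]
            for side in ("left", "right")}


def right_action_value(block, target):
    """Action-value signal for rightward saccades; `target` is deliberately unused."""
    return action_values(block)["right"]


def chosen_value(block, target):
    """Value of the saccade about to be made to `target`."""
    return action_values(block)[target]
```

The Figure 5C neuron behaves like `right_action_value`: its pre-target activity is high in right-big blocks even when the upcoming target is on the left.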

More importantly, as they moved their recording electrodes ventromedially in the caudate, activity became more driven by reward size (Figure 5F) and less driven by response direction (Figure 5E). Ventral-medial portions of the caudate, including the central and ventral subdivisions, tended to fire more strongly for big than for small rewards (e.g., Figure 5D) and had fewer neurons modulated by response direction, whereas dorsal-lateral portions of the caudate exhibited high directionality and reward selectivity but showed no preference for large over small rewards (Figure 5E and F).

This work is consistent with work by Schultz and colleagues examining task-related activity throughout monkey striatum, including dorsal and ventromedial parts of putamen (DLS), dorsal and ventral caudate (DMS), and NAc, as monkeys performed a go/nogo task for reward (Apicella et al., 1991; Hollerman et al., 1998). They found twice as many neurons that increased firing to reward on both go and nogo trials in ventral compared to dorsal striatal areas, whereas neurons modulated by movement type, including non-rewarded movements (i.e., nogos), were more prominent in the head of the caudate (Apicella et al., 1991; Hollerman et al., 1998).

These data parallel the differences between NAc and lateral/medial DS described earlier in rats. In rats, NAc neurons fired more strongly for high-value outcomes. Outcome-selective neurons in DMS and DLS, by contrast, were equally distributed across the four outcomes (i.e., short delay, long delay, large reward, and small reward). These data are also consistent with a host of other studies contrasting ventral-medial portions of striatum (which include NAc and often ventral DMS) with more dorsal-lateral regions. For example, Takahashi and colleagues studied rats learning and reversing odor discriminations for appetitive and aversive outcomes (Takahashi et al., 2007). NAc activity better reflected the motivational significance of cues, tracking it across contingency reversals, whereas DS activity better reflected associations between cues and subsequent responses. Likewise, van der Meer and colleagues have shown differences between firing in ventral and dorsal striatum in rats running mazes for reward (van der Meer et al., 2010). At decision points, as rats deliberated over which direction to take, activity in ventral striatum represented future rewards. This feature was absent in the more lateral regions of dorsal striatum during performance of the same task. Instead, neural activity in more dorsal-lateral striatum represented action-rich components of the maze.

Other studies have examined striatal function in more complex choice paradigms. These paradigms vary not only the relative value of two actions but also the net value of the trial as a whole. In primates, neural activity in more ventral-medial portions of striatum better reflected the overall value of the two options (Cai et al., 2011). Activity in more lateral aspects of dorsal striatum instead better represented the difference in value between the options and the action necessary to obtain reward. In rats performing a self-paced choice task for different rewards, both DMS and NAc signaled the net value of the two options (Wang et al., 2013). However, only DMS contained a significant number of neurons that coded the relative value of the two actions.
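A short sketch can illustrate why such paradigms can dissociate net value from relative value. In this sketch, net value is taken as the sum of the two options' values and relative value as their difference (left minus right). These definitions, the value levels 1 to 3, and the function names are illustrative assumptions, not the exact regressors used by Cai et al. (2011) or Wang et al. (2013). When both options are varied independently over the same set of levels, the two quantities are uncorrelated. That independence is what lets a design distinguish neurons that track one quantity from neurons that track the other.

```python
from itertools import product
from statistics import mean


def net_value(v_left: float, v_right: float) -> float:
    """Overall value of the trial: the sum of both options' values (assumed definition)."""
    return v_left + v_right


def relative_value(v_left: float, v_right: float) -> float:
    """Value difference between the two actions, left minus right (assumed definition)."""
    return v_left - v_right


def covariance(xs, ys):
    """Population covariance of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


# Hypothetical balanced design: each option's value is drawn independently from LEVELS.
LEVELS = [1, 2, 3]
pairs = list(product(LEVELS, repeat=2))
nets = [net_value(left, right) for left, right in pairs]
rels = [relative_value(left, right) for left, right in pairs]

if __name__ == "__main__":
    # Same net value, opposite relative value.
    print(net_value(3, 1), net_value(1, 3))            # 4 4
    print(relative_value(3, 1), relative_value(1, 3))  # 2 -2
    # Across the balanced design, the two quantities have zero covariance.
    print(covariance(nets, rels))                      # 0.0
```

The zero covariance reflects only this balanced toy design. In a real analysis, firing rates would typically be regressed on both quantities at once, and the design determines how separable they are.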

Thus, there appears to be a continuum of correlates as one moves from ventral-medial striatum to dorsal-lateral striatum, with activity in ventral-medial aspects better reflecting value and motivation, and more dorsal-lateral aspects better reflecting associative and motor aspects of behavior.

Conclusions

In conclusion, we have described data showing that neural correlates overlap considerably across striatum. Nonetheless, as one progresses from ventral-medial to dorsal-lateral striatum, there is a shift from more prominent value encoding to activity more closely related to associative and motor aspects of decision-making. Neural activity in NAc appears to be strongly correlated with the expected value of outcomes, a signal critical for motivating behavior in the pursuit of reward. Neural correlates in both DMS and DLS are more associative than those in NAc, representing different expected outcomes across a range of stimuli and responses. DMS is necessary for goal-directed behavior, and correlates in this region are more prominent early in learning, when cue-based decisions are being made. In contrast, DLS is critical for habit formation, and its neural correlates emerge during learning and persist through acquisition. These correlates are entirely consistent with functional channels in the basal ganglia (Alexander et al., 1986; Alexander and Crutcher, 1990; Alexander et al., 1990; Joel and Weiner, 2000; Nakahara et al., 2001; Haber, 2003; Voorn et al., 2004; Haruno and Kawato, 2006; Balleine and O’Doherty, 2010).

Lastly, we have shown that selectivity for stimuli and responses in DLS is enhanced after NAc lesions. This enhancement occurs during performance of a task in which function recovers after several days of training. Others have shown that functional recovery after loss of DLS function depends critically on NAc. Thus, it appears that animals normally use both goal-directed (DMS) and habit (DLS) processes to guide behavior, and that interference with one mechanism tips the balance of behavioral control toward the other. These networks likely interact and are critical during learning, as behavior transitions from goal-directed to habitual actions. Even so, there is considerable evidence that these circuits can compensate for each other when function is lost, and that encoding in one part of striatum is not entirely dependent on the other.

Acknowledgments

This work was supported by grants from the NIDA (R01DA031695, MR).


References

- Alexander GE, Crutcher MD. Functional architecture of basal ganglia circuits: neural substrates of parallel processing. Trends Neurosci. 1990;13:266–271. doi: 10.1016/0166-2236(90)90107-l.

- Alexander GE, DeLong MR, Strick PL. Parallel organization of functionally segregated circuits linking basal ganglia and cortex. Annu Rev Neurosci. 1986;9:357–381. doi: 10.1146/annurev.ne.09.030186.002041.

- Alexander GE, Crutcher MD, DeLong MR. Basal ganglia-thalamocortical circuits: parallel substrates for motor, oculomotor, “prefrontal” and “limbic” functions. Prog Brain Res. 1990;85:119–146.

- Apicella P, Ljungberg T, Scarnati E, Schultz W. Responses to reward in monkey dorsal and ventral striatum. Exp Brain Res. 1991;85:491–500. doi: 10.1007/BF00231732.

- Balleine BW, O’Doherty JP. Human and rodent homologies in action control: corticostriatal determinants of goal-directed and habitual action. Neuropsychopharmacology. 2010;35:48–69. doi: 10.1038/npp.2009.131.

- Bissonette GB, Burton AC, Gentry RN, Goldstein BL, Hearn TN, Barnett BR, Kashtelyan V, Roesch MR. Separate populations of neurons in ventral striatum encode value and motivation. PLoS One. 2013;8:e64673. doi: 10.1371/journal.pone.0064673.

- Bromberg-Martin ES, Matsumoto M, Hikosaka O. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron. 2010;68:815–834. doi: 10.1016/j.neuron.2010.11.022.

- Burton AC, Bissonette GB, Lichtenberg NT, Kashtelyan V, Roesch MR. Ventral Striatum Lesions Enhance Stimulus and Response Encoding in Dorsal Striatum. Biol Psychiatry. 2013. doi: 10.1016/j.biopsych.2013.05.023.

- Cai X, Kim S, Lee D. Heterogeneous coding of temporally discounted values in the dorsal and ventral striatum during intertemporal choice. Neuron. 2011;69:170–182. doi: 10.1016/j.neuron.2010.11.041.

- Catanese J, van der Meer M. A network state linking motivation and action in the nucleus accumbens. Neuron. 2013;78:753–754. doi: 10.1016/j.neuron.2013.05.021.

- Gremel CM, Costa RM. Orbitofrontal and striatal circuits dynamically encode the shift between goal-directed and habitual actions. Nat Commun. 2013;4:2264. doi: 10.1038/ncomms3264.

- Haber SN. The primate basal ganglia: parallel and integrative networks. J Chem Neuroanat. 2003;26:317–330. doi: 10.1016/j.jchemneu.2003.10.003.

- Haber SN, Knutson B. The reward circuit: linking primate anatomy and human imaging. Neuropsychopharmacology. 2010;35:4–26. doi: 10.1038/npp.2009.129.

- Haber SN, Fudge JL, McFarland NR. Striatonigrostriatal pathways in primates form an ascending spiral from the shell to the dorsolateral striatum. J Neurosci. 2000;20:2369–2382. doi: 10.1523/JNEUROSCI.20-06-02369.2000.

- Haruno M, Kawato M. Heterarchical reinforcement-learning model for integration of multiple cortico-striatal loops: fMRI examination in stimulus-action-reward association learning. Neural Netw. 2006;19:1242–1254. doi: 10.1016/j.neunet.2006.06.007.

- Hollerman JR, Tremblay L, Schultz W. Influence of reward expectation on behavior-related neuronal activity in primate striatum. J Neurophysiol. 1998;80:947–963. doi: 10.1152/jn.1998.80.2.947.

- Houk J, Adams JL, Barto AG. A model of how the basal ganglia generate and use neural signals that predict reinforcement. 1995.

- Ikemoto S. Dopamine reward circuitry: two projection systems from the ventral midbrain to the nucleus accumbens-olfactory tubercle complex. Brain Res Rev. 2007;56:27–78. doi: 10.1016/j.brainresrev.2007.05.004.

- Joel D, Weiner I. The connections of the dopaminergic system with the striatum in rats and primates: an analysis with respect to the functional and compartmental organization of the striatum. Neuroscience. 2000;96:451–474. doi: 10.1016/s0306-4522(99)00575-8.

- Joel D, Niv Y, Ruppin E. Actor-critic models of the basal ganglia: new anatomical and computational perspectives. Neural Netw. 2002;15:535–547. doi: 10.1016/s0893-6080(02)00047-3.

- Karachi C, Francois C, Parain K, Bardinet E, Tande D, Hirsch E, Yelnik J. Three-dimensional cartography of functional territories in the human striatopallidal complex by using calbindin immunoreactivity. J Comp Neurol. 2002;450:122–134. doi: 10.1002/cne.10312.

- Lau B, Glimcher PW. Action and outcome encoding in the primate caudate nucleus. J Neurosci. 2007;27:14502–14514. doi: 10.1523/JNEUROSCI.3060-07.2007.

- Lau B, Glimcher PW. Value representations in the primate striatum during matching behavior. Neuron. 2008;58:451–463. doi: 10.1016/j.neuron.2008.02.021.

- Lichtenberg NT, Kashtelyan V, Burton AC, Bissonette GB, Roesch MR. Nucleus accumbens core lesions enhance two-way active avoidance. Neuroscience. 2013. doi: 10.1016/j.neuroscience.2013.11.028.

- McGinty VB, Lardeux S, Taha SA, Kim JJ, Nicola SM. Invigoration of reward seeking by cue and proximity encoding in the nucleus accumbens. Neuron. 2013;78:910–922. doi: 10.1016/j.neuron.2013.04.010.

- Nakahara H, Doya K, Hikosaka O. Parallel cortico-basal ganglia mechanisms for acquisition and execution of visuomotor sequences - a computational approach. J Cogn Neurosci. 2001;13:626–647. doi: 10.1162/089892901750363208.

- Nakamura K, Santos GS, Matsuzaki R, Nakahara H. Differential reward coding in the subdivisions of the primate caudate during an oculomotor task. J Neurosci. 2012;32:15963–15982. doi: 10.1523/JNEUROSCI.1518-12.2012.

- Nishizawa K, Fukabori R, Okada K, Kai N, Uchigashima M, Watanabe M, Shiota A, Ueda M, Tsutsui Y, Kobayashi K. Striatal indirect pathway contributes to selection accuracy of learned motor actions. J Neurosci. 2012;32:13421–13432. doi: 10.1523/JNEUROSCI.1969-12.2012.

- Niv Y, Schoenbaum G. Dialogues on prediction errors. Trends Cogn Sci. 2008;12:265–272. doi: 10.1016/j.tics.2008.03.006.

- Roesch MR, Bryden DW. Impact of size and delay on neural activity in the rat limbic corticostriatal system. Front Neurosci. 2011;5:130. doi: 10.3389/fnins.2011.00130.

- Roesch MR, Singh T, Brown PL, Mullins SE, Schoenbaum G. Ventral striatal neurons encode the value of the chosen action in rats deciding between differently delayed or sized rewards. J Neurosci. 2009;29:13365–13376. doi: 10.1523/JNEUROSCI.2572-09.2009.

- Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270.

- Singh T, McDannald MA, Haney RZ, Cerri DH, Schoenbaum G. Nucleus Accumbens Core and Shell are Necessary for Reinforcer Devaluation Effects on Pavlovian Conditioned Responding. Front Integr Neurosci. 2010;4:126. doi: 10.3389/fnint.2010.00126.

- Stalnaker TA, Calhoon GG, Ogawa M, Roesch MR, Schoenbaum G. Neural correlates of stimulus-response and response-outcome associations in dorsolateral versus dorsomedial striatum. Front Integr Neurosci. 2010;4:12. doi: 10.3389/fnint.2010.00012.

- Stalnaker TA, Calhoon GG, Ogawa M, Roesch MR, Schoenbaum G. Reward prediction error signaling in posterior dorsomedial striatum is action specific. J Neurosci. 2012;32:10296–10305. doi: 10.1523/JNEUROSCI.0832-12.2012.

- Takahashi Y, Schoenbaum G, Niv Y. Silencing the critics: understanding the effects of cocaine sensitization on dorsolateral and ventral striatum in the context of an actor/critic model. Front Neurosci. 2008;2:86–99. doi: 10.3389/neuro.01.014.2008.

- Takahashi Y, Roesch MR, Stalnaker TA, Schoenbaum G. Cocaine exposure shifts the balance of associative encoding from ventral to dorsolateral striatum. Front Integr Neurosci. 2007;1:11. doi: 10.3389/neuro.07/011.2007.

- Thorn CA, Atallah H, Howe M, Graybiel AM. Differential dynamics of activity changes in dorsolateral and dorsomedial striatal loops during learning. Neuron. 2010;66:781–795. doi: 10.1016/j.neuron.2010.04.036.

- van der Meer MA, Redish AD. Ventral striatum: a critical look at models of learning and evaluation. Curr Opin Neurobiol. 2011;21:387–392. doi: 10.1016/j.conb.2011.02.011.

- van der Meer MA, Johnson A, Schmitzer-Torbert NC, Redish AD. Triple dissociation of information processing in dorsal striatum, ventral striatum, and hippocampus on a learned spatial decision task. Neuron. 2010;67:25–32. doi: 10.1016/j.neuron.2010.06.023.

- Voorn P, Vanderschuren LJ, Groenewegen HJ, Robbins TW, Pennartz CM. Putting a spin on the dorsal-ventral divide of the striatum. Trends Neurosci. 2004;27:468–474. doi: 10.1016/j.tins.2004.06.006.

- Wang AY, Miura K, Uchida N. The dorsomedial striatum encodes net expected return, critical for energizing performance vigor. Nat Neurosci. 2013;16:639–647. doi: 10.1038/nn.3377.

- Yin HH, Mulcare SP, Hilario MR, Clouse E, Holloway T, Davis MI, Hansson AC, Lovinger DM, Costa RM. Dynamic reorganization of striatal circuits during the acquisition and consolidation of a skill. Nat Neurosci. 2009;12:333–341. doi: 10.1038/nn.2261.