Abstract

The ability to perceive a regular beat in music and synchronize to this beat is a widespread human skill. Fundamental to musical behaviour, beat and meter refer to the perception of periodicities while listening to musical rhythms and often involve spontaneous entrainment of movement to these periodicities. Here, we present a novel experimental method, inspired by the frequency-tagging approach, to understand the perception and production of rhythmic inputs. This method is illustrated here by recording the human electroencephalogram responses at beat and meter frequencies elicited in various contexts: mental imagery of meter, spontaneous induction of a beat from rhythmic patterns, multisensory integration and sensorimotor synchronization. Collectively, our observations support the view that entrainment and resonance phenomena underlie the processing of musical rhythms in the human brain. More generally, they highlight the potential of this approach to help us understand the link between the phenomenology of musical beat and meter and the bias towards periodicities arising under certain circumstances in the nervous system. Entrainment to music provides a highly valuable framework to explore general entrainment mechanisms as embodied in the human brain.

Keywords: music beat and meter perception, steady-state evoked potentials, electroencephalogram, neural entrainment, sensorimotor integration, multisensory integration

1. Introduction

One of the richest features of music is its temporal structure. In particular, the beat, which usually refers to the perception of periodicities while listening to music, can be considered as a cornerstone of music and dance behaviours. Even when music is not strictly periodic, humans perceive periodic pulses and spontaneously entrain their body to these beats [1]. The beats can be grouped or subdivided in meters, which correspond to harmonics or subharmonics of the beat frequency (e.g. the meter of a waltz, which is a three-beat meter, has a frequency of f/3, f being the frequency of the beats). Typically, beat and meter perception is known to occur within a specific frequency range corresponding to musical tempo (i.e. approx. 0.5–5 Hz) [2,3]. The particular status of temporal periodicity within this frequency range is hypothesized to be the key element allowing optimal coordination of body movement with the musical flow because it yields optimal predictability [4,5].

Entrainment to music is an extremely common behaviour, shared by humans of all cultures. It is a highly complex activity, which involves auditory, but also visual, proprioceptive and vestibular perception. It also requires attention, motor synchronization, performance and coordination within and across individuals [6,7]. Hence, a large network of brain structures is involved during entrainment to music [8–10]. There is relatively recent and growing interest in understanding the functional and neural mechanisms of entrainment to music, as it may constitute a unique gateway to understanding human brain function. A major goal in this research area is to narrow the gap between entrainment to musical rhythms in human individuals on the one hand and phenomena of entrainment in the activity of neurons on the other. In both cases, entrainment processes (that is, synchronization or frequency coupling [11]) and tendencies towards temporal periodic activity have been described as fundamental functional characteristics (e.g. [12–16] on the one hand and [17–20] on the other). Therefore, a first question of interest that has motivated this research is whether the perceived temporal periodicities constituted by the musical beat and meter entrain neural responses at the exact frequency of the beat and meter.

2. Neural entrainment to periodic sensory inputs: the frequency-tagging approach

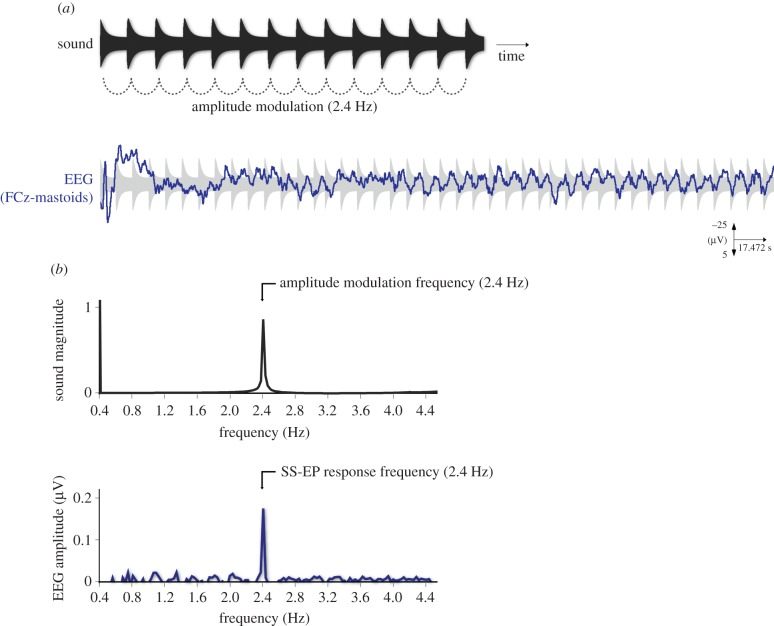

In this review, we describe an electrophysiological approach developed to capture the processing of beat and meter in the human brain with the electroencephalogram (EEG). This approach is built on the long-standing observation that when a stimulus, or a property of a stimulus, is repeated at a fixed rate (i.e. periodically), it generates a periodic change in voltage amplitude in the electrical activity recorded on the human scalp by EEG. In ideal conditions, this electrophysiological response is stable in phase and amplitude over time, and for this reason, it has been defined as a ‘steady-state’ evoked potential (SS-EP) [21]. This response was further investigated using various sensory inputs and periodic changes of various properties of these inputs, such as the periodic amplitude modulation of a continuous tone (e.g. with visual stimuli, [21,22]; with auditory stimuli, [23–27]; and with somatosensory stimuli, [28–30]). This electrophysiological method has also been called ‘frequency-tagging’, usually when more than one frequency input is used. Indeed, as the SS-EP is a periodic response, it is confined to a specific frequency and it is thus natural to analyse it in the frequency domain instead of the time domain. Hence, the stimulus frequency determines the response frequency content: the response spectrum presents narrow-band peaks at frequencies that are directly related to the stimulus frequency [31]. Figure 1 gives an example of such a periodic response to periodic input in the auditory system, as recorded with EEG. In this example, a periodic neural response was elicited in healthy participants by the long-lasting periodic modulation of the amplitude of a tone.

Figure 1.

(a) Sound envelope excerpt of a pure tone amplitude-modulated periodically at 2.4 Hz (upper graph) and EEG response to this sound as obtained from electrode FCz (fronto-central electrode) from the average across 10 participants (band-pass filtered between 0.3 and 30 Hz). Typical transient evoked potentials are elicited at the onset of the sound, but after a few seconds the entrainment of the EEG to the periodic amplitude modulation becomes visible. (b) Envelope spectrum of this sound, with peak of intensity magnitude at 2.4 Hz (here, normalized between 0 and 1; upper panel), and the corresponding EEG spectrum averaged across 10 participants and across the 64 channels, with peak of EEG amplitude (i.e. the SS-EP elicited in response to the periodic sensory stimulation) at 2.4 Hz. Adapted from [32]. (Online version in colour.)
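The frequency-domain logic illustrated in figure 1 can be sketched in a few lines. The example below is only an illustration of the stimulus side, not the analysis pipeline of [32]: the carrier frequency, sampling rate and duration are assumptions, and the envelope is estimated by simple full-wave rectification. It synthesizes a tone amplitude-modulated at 2.4 Hz and locates the largest component of its envelope spectrum between 0.2 and 5 Hz.

```python
import cmath
import math


def amplitude_at(signal, fs, freq):
    """Amplitude of the sinusoidal component of `signal` at `freq` (Hz)."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq * k / fs)
              for k, x in enumerate(signal))
    return 2 * abs(acc) / n


FS = 1000         # sampling rate in Hz (assumed)
DURATION = 5.0    # seconds; holds exactly 12 cycles of the 2.4 Hz modulation
CARRIER_HZ = 100  # hypothetical carrier frequency of the pure tone
MOD_HZ = 2.4      # modulation rate quoted for figure 1

times = [k / FS for k in range(int(FS * DURATION))]

# Pure tone whose amplitude rises and falls 2.4 times per second.
sound = [0.5 * (1 - math.cos(2 * math.pi * MOD_HZ * t))
         * math.sin(2 * math.pi * CARRIER_HZ * t) for t in times]

# Crude envelope estimate: full-wave rectification of the waveform.
envelope = [abs(s) for s in sound]

# Amplitude spectrum from 0.2 to 5 Hz in steps of 1 / DURATION = 0.2 Hz.
freqs = [round(0.2 * i, 1) for i in range(1, 26)]
spectrum = {f: amplitude_at(envelope, FS, f) for f in freqs}
peak_freq = max(spectrum, key=spectrum.get)
print(peak_freq)
```

Because the recording length holds a whole number of modulation cycles, the envelope energy falls into a single frequency bin, which is the property that makes a narrow-band response easy to identify in the spectrum.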

While originally designed to investigate ‘low level’ sensory processes [26] and their attentional modulation (e.g. [33,34]), the frequency-tagging approach has been recently extended to characterize higher levels of perception and cognition, for instance, figure-ground segregation [35] or face perception [31,36,37]. In our own studies, we investigated whether the musical beat and meter—which refer to perceived periodicities induced by, but not necessarily present within, the sound input—would elicit neural responses which could be tagged in the EEG based on their expected frequencies, i.e. at the exact frequency of the beat and meter.

3. Neural entrainment to rhythmic patterns

Musical beat and meter periodicities are perceived from sounds, whether or not these sounds are actually periodic. Indeed, they can be induced not only by isochronous pulses (as with a metronome) but also by complex rhythmic structures [1]. Hence, as described by music theorists, the beat is not itself a stimulus property, although it is usually induced by a rhythmic stimulus [1,12–16,38–40]. In fact, many frequency and phase combinations are available in a musical piece and could be selected by individuals as their own perceived beat, within a given culture [5,41].

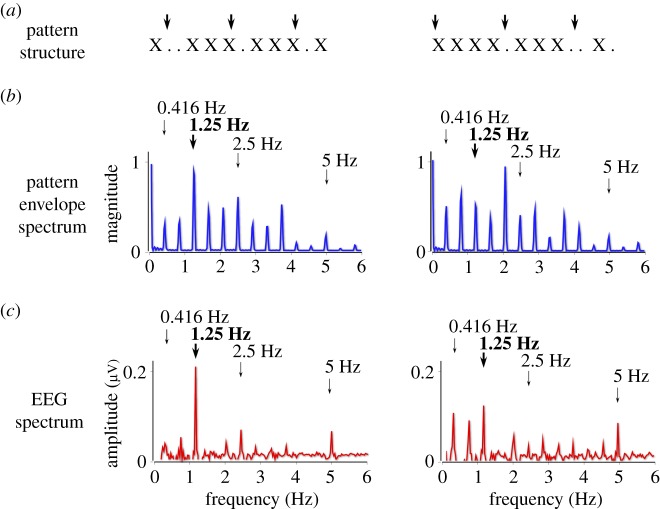

On the basis of this observation, we recorded the EEG while human participants listened to rhythmic patterns. These patterns consist of short sounds alternating with silences (i.e. acoustic sequences that are not strictly isochronous), in contrast to the sound of figure 1 (figure 2) [39]. That is, the envelope spectrum of these patterns does not contain only one frequency, as in the sound of figure 1, but it contains multiple frequencies within the specific frequency range for beat and meter perception (figure 2). Commonly found in Western music, these rhythmic patterns are expected to induce, at least to some extent, a spontaneous perception of beat and meter, even if these rhythms are not strictly periodic in reality [40].

Figure 2.

In Nozaradan et al. [39], participants listened to 33-s rhythmic sound patterns. (a) Two examples of rhythmic patterns, consisting of a sequence of short tones (crosses) and silences (dots). The vertical arrows indicate beat location as perceived by the participants (as evaluated after the EEG recordings by a tapping task). Note that the pattern presented on the right can be considered as syncopated, as some beats occur on silences rather than sounds. (b) The frequency spectrum of the sound envelope. The expected beat- and meter-related frequencies are indicated by thick and thin vertical arrows, respectively. Importantly, in the pattern shown on the right, the beat frequency (at 1.25 Hz) does not have predominant acoustic energy, as compared with the pattern presented on the left. (c) The frequency spectrum of the EEG recorded while listening to these patterns (global field amplitude averaged across eleven participants). A nonlinear transformation of the sound envelope was observed, resulting in a selective enhancement of the neural responses elicited at frequencies corresponding to beat and meter. This selective enhancement occurred even when the beat frequency was not predominant. Adapted from [39].
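To make concrete why the envelope of such a pattern contains several frequencies rather than one, the sketch below builds the envelope of a looped tone/silence sequence and evaluates its spectrum. The pattern string, the 0.2 s event duration and the number of repetitions are hypothetical choices (the event duration was picked so that a beat every four events falls at 1.25 Hz, the value quoted in figure 2); they are not the stimuli of the original study.

```python
import cmath
import math


def amplitude_at(signal, fs, freq):
    """Amplitude of the sinusoidal component of `signal` at `freq` (Hz)."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq * k / fs)
              for k, x in enumerate(signal))
    return 2 * abs(acc) / n


FS = 100                  # envelope sampling rate in Hz (assumed)
EVENT_S = 0.2             # duration of one event slot in seconds (assumed)
PATTERN = "x.x.xxx.x..x"  # hypothetical pattern: x = tone, . = silence
REPEATS = 5               # the pattern is looped to form a longer sequence

slot = round(FS * EVENT_S)  # samples per event slot
tone = slot // 2            # each tone fills the first half of its slot

envelope = []
for symbol in PATTERN * REPEATS:
    on = tone if symbol == "x" else 0
    envelope.extend([1.0] * on + [0.0] * (slot - on))

# A sequence repeating every len(PATTERN) * EVENT_S = 2.4 s can only carry
# energy at integer multiples of 1 / 2.4 Hz.
pattern_s = len(PATTERN) * EVENT_S
harmonics = [k / pattern_s for k in range(1, len(PATTERN) + 1)]
spectrum = {round(f, 3): amplitude_at(envelope, FS, f) for f in harmonics}

# With a beat every four events, the beat rate is 1 / (4 * 0.2 s) = 1.25 Hz.
beat_hz = round(1 / (4 * EVENT_S), 3)
present = [f for f, a in spectrum.items() if a > 0.01]
print(present, spectrum[beat_hz])
```

In this hypothetical pattern, the 1.25 Hz component is present but smaller than the components at 2.5 and 5 Hz, which mirrors the situation described for the right-hand pattern of figure 2, where the beat frequency is not acoustically predominant.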

In the EEG spectrum, these rhythmic stimuli elicit multiple peaks at frequencies corresponding exactly to the rhythmic patterns' envelope (figure 2). Most importantly, there is a selective enhancement of the responses elicited at beat and meter frequencies (referred to as beat- and meter-related SS-EPs) in the EEG spectrum, compared with the frequencies contained in the rhythmic patterns that have no relevance for beat and meter. In addition, this selective enhancement of the neural response at frequencies corresponding to the perceived beat and meter is dampened when playing the rhythmic patterns too fast or too slow, such as to move the tempo away from the ecological musical tempo range.

Taken together, these observations can be interpreted as evidence for a process of selective enhancement of the neural response at beat and meter frequencies, or selective beat- and meter-related neural entrainment, related to the perceived beat and meter induced by complex rhythms. Moreover, they provide evidence that resonance frequencies shape beat- and meter-related neural entrainment, in correspondence with the resonance frequencies of beat and meter perception (i.e. musical tempo). In addition, the fact that the frequency-tagging approach allows us to compare the input and the output spectra with one another makes it a well-suited approach to provide insight into the quality of the sound transduction to the cortex. More specifically, this comparison should allow us to evaluate the input–output transformation possibly related to perceptual aspects of the sound inputs [43], such as the perception of beat and meter. Importantly, this can be done in the absence of an overt behavioural measure, so that it is not contaminated by decisional or movement-related bias. Because it does not require an explicit overt behaviour, the approach can be used similarly in typical human adults and in populations who are unable to provide overt behavioural responses, such as infants or certain patient populations [31]. However, purely neural responses in the absence of any behavioural evidence of beat perception or movement synchronization have to be taken with caution, as these measures could represent distinct aspects of processing of the musical beat and meter [5].

4. Tagging the neural correlate of internally driven meter

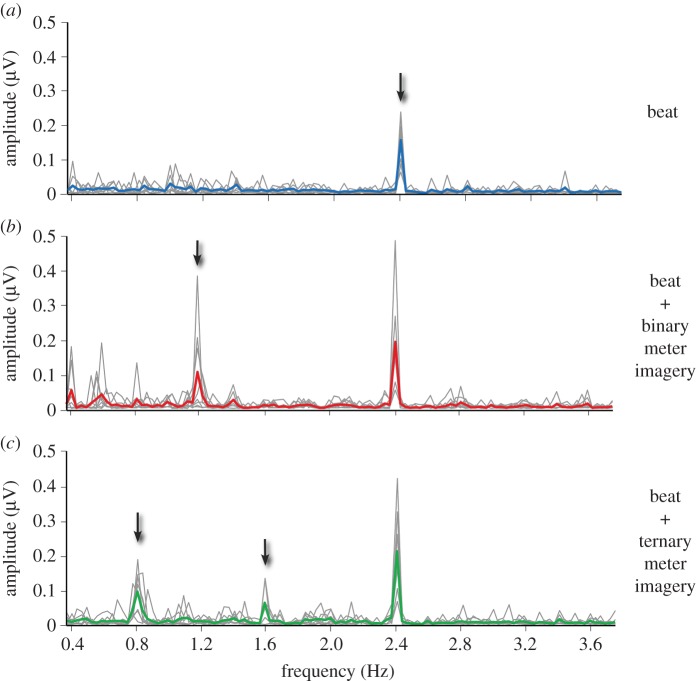

Figures 1 and 2 give examples of neural responses elicited at frequencies corresponding to the perceived beat and meter. In both cases, these frequencies are present in the spectrum of the sound envelope itself. However, an outstanding issue is whether the neural entrainment to the beat and meter emerges in the human brain when the beat and meter frequencies are not present in the spectrum of the acoustic input. This situation corresponds to musical contexts in which meter perception relies only on mental imagery, or internally driven interpretation. We tested the frequency-tagging approach in this context, asking participants to listen to a periodic sound and to voluntarily imagine the meter of this beat as either binary or ternary (i.e. as in a march or a waltz, respectively; figure 3) [44]. In this case, the sensory input was thus periodic and this temporal periodicity was located within the frequency range for beat and meter perception. Moreover, instead of being induced spontaneously (as in [39]), the beat and meter were induced in response to an external instruction imposing a specific frequency and phase for the metric interpretation.

Figure 3.

Beat- and meter-related SS-EPs elicited by the 2.4 Hz auditory beat in (a) the control condition, (b) the binary meter imagery condition and (c) the ternary meter imagery condition. The frequency spectra represent the amplitude of the EEG signal (µV) as a function of frequency, averaged across all scalp electrodes, after applying spectral baseline correction procedure (see [44]). The group-level average frequency spectra are shown using a thick coloured line, while single-subject spectra are shown with grey lines. Note that in all three conditions, the auditory stimulus elicited a clear beat-related SS-EP at f = 2.4 Hz (arrow in a). Also note the emergence of a meter-related SS-EP at 1.2 Hz in the binary meter imagery condition (arrow in b), and at 0.8 Hz and 1.6 Hz in the ternary meter imagery condition (arrows in c). Adapted from [44].
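The caption above mentions a spectral baseline correction. One common form of such a correction, sketched here under stated assumptions, subtracts from each frequency bin the mean amplitude of its neighbouring bins, so that a flat noise floor is pushed towards zero while narrow peaks survive. The function name and the neighbour offsets (`inner`, `outer`) are hypothetical; the exact parameters of the procedure are given in [44], not here.

```python
def baseline_correct(amplitudes, inner=2, outer=5):
    """Subtract from each bin the mean amplitude of its neighbouring bins.

    Neighbours are the bins located `inner` to `outer` bins away on either
    side (both offsets are assumptions); bins outside the spectrum are skipped.
    """
    n = len(amplitudes)
    corrected = []
    for i, amp in enumerate(amplitudes):
        neighbours = [amplitudes[j]
                      for d in range(inner, outer + 1)
                      for j in (i - d, i + d)
                      if 0 <= j < n]
        # Fall back to no correction if the spectrum is too short.
        noise = sum(neighbours) / len(neighbours) if neighbours else 0.0
        corrected.append(amp - noise)
    return corrected


# Toy spectrum: flat noise floor of 1.0 µV with a 3.0 µV peak in bin 10.
toy = [1.0] * 21
toy[10] = 3.0
clean = baseline_correct(toy)
print(clean[10], clean[0], clean[8])
```

In the toy spectrum, the peak keeps its 2.0 µV excess over the noise floor and distant bins fall to zero, whereas bins whose neighbourhood includes the peak become slightly negative, a side effect worth keeping in mind when peaks lie close together.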

In this study, we showed that mentally imposing a meter on this sound elicited neural activities at frequencies corresponding exactly to the perceived and imagined beat and meter (figure 3). Hence, as the frequencies corresponding to the imagined meters were not present in the sound input, the results of this experiment can be interpreted as evidence for internally driven meter-related SS-EPs.
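The frequencies at which meter-related responses are expected follow directly from the beat frequency and the size of the imagined grouping. As a small worked example (the function names are ours and purely illustrative), grouping a 2.4 Hz beat by two or by three gives the values reported in figure 3:

```python
def meter_frequencies(beat_hz, grouping):
    """Frequencies (Hz) expected when beats are grouped by `grouping`.

    Returns the subharmonic beat_hz / grouping and those of its harmonics
    that lie below the beat frequency itself, rounded to 3 decimals.
    """
    return [round(k * beat_hz / grouping, 3) for k in range(1, grouping)]


def in_tempo_range(freq_hz, low=0.5, high=5.0):
    """True if `freq_hz` lies in the approx. 0.5-5 Hz range quoted for tempo."""
    return low <= freq_hz <= high


binary = meter_frequencies(2.4, 2)   # march-like grouping
ternary = meter_frequencies(2.4, 3)  # waltz-like grouping
print(binary, ternary)
print(all(in_tempo_range(f) for f in binary + ternary))
```

None of these frequencies is present in the envelope of the 2.4 Hz stimulus, so a spectral peak at any of them cannot be attributed to the acoustic input alone.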

5. Neural entrainment underlying sensorimotor synchronization to the beat

Synchronizing movements to external inputs is best observed with music [2–5,12]. The periodic temporal structure of beats is thought to facilitate movement synchronization to musical rhythms. Indeed, a fascinating aspect of beat perception is its strong relationship with movement [45–50]. On the one hand, music spontaneously entrains humans to move [45–47]. On the other hand, it has been shown that movement influences the perception of musical rhythms [46,51].

How distant brain areas involved in sensorimotor synchronization are able to coordinate their activity remains, at present, largely unknown. Externally paced tapping has rarely been investigated with EEG, probably because the limited spatial resolution of this technique prevents us from easily disentangling movement-related potentials from potentials elicited by the processing of the external pacing stimulus. In some of these studies, the electrophysiological activities elicited by the tapping movements were analysed as single transient event-related potentials (ERPs) [52–56]. By aligning trials to the onset of the movement or to the tap, a scalp response is identified around 100 ms before movement onset. Source reconstructions of this evoked potential point to a generator within the primary motor cortex contralateral to the moving hand, suggesting that it reflects movement planning and execution. In addition, an ERP is elicited around 100 ms after movement onset, whose source is located in the primary somatosensory cortex, suggesting that this potential reflects tactile and somatosensory feedback. In the frequency domain, the repetitive movements elicit SS-EPs at frequencies corresponding to the frequency of the periodic movement [52–54,57,58]. This frequency-domain analysis appears to be a powerful way to increase the signal-to-noise ratio with reduced testing duration. However, these studies focused solely on the movement-related SS-EPs without concomitantly investigating the SS-EPs elicited in response to the pacing stimulus.

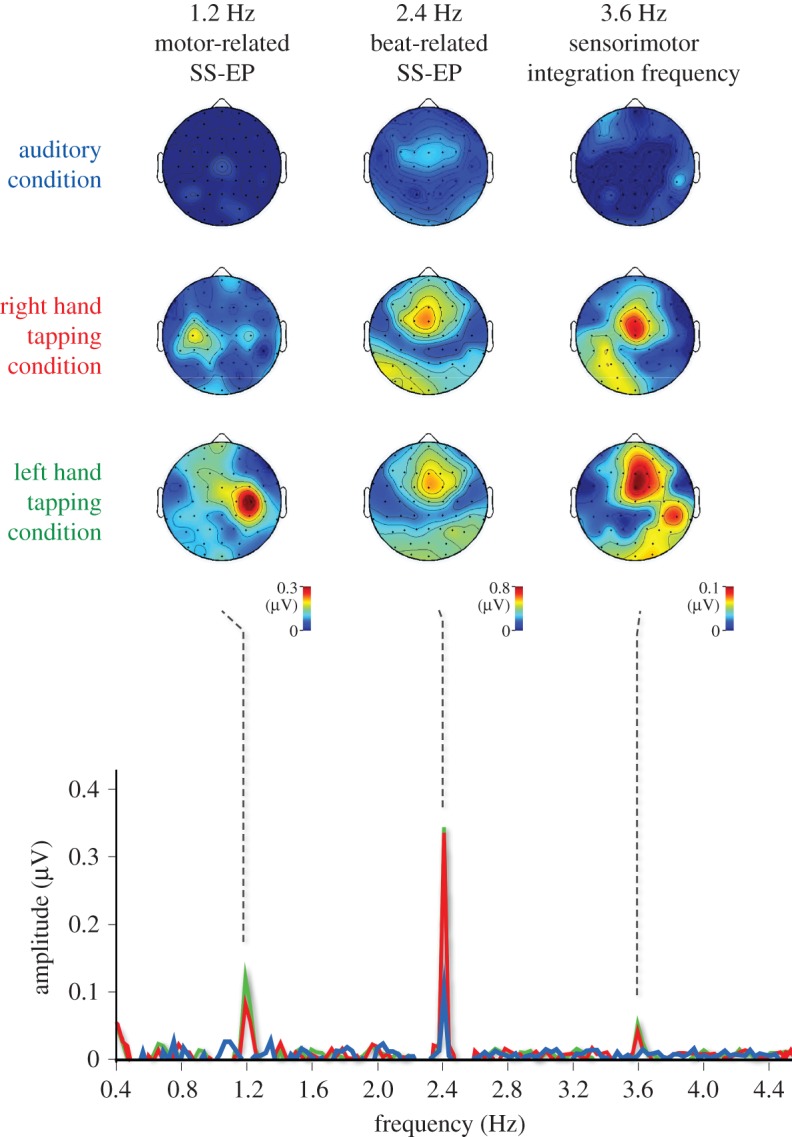

To explore sensorimotor synchronization to the beat using the frequency-tagging approach, EEG can be recorded while participants listen to an auditory beat and tap their hand on every second beat (figure 4) [32]. In this context, sensorimotor synchronization to the beat is supported in the human brain by two distinct neural activities: an activity elicited at beat frequency probably involved in beat processing and a distinct neural activity elicited at a frequency corresponding to the movement and probably involved in the production of synchronized movements [52–54,59–61] (figure 4). Most importantly, there is evidence for an interaction between sensory- and movement-related activities when participants tap to the beat, in the form of (i) an additional peak appearing at 3.6 Hz, compatible with a nonlinear product of sensorimotor integration (i.e. 2.4 Hz + 1.2 Hz); (ii) phase coupling of beat- and movement-related activities; and (iii) selective enhancement of beat-related activities over the hemisphere contralateral to the tapping, suggesting a top-down effect of movement-related activities on auditory beat processing (figure 4) [32].

Figure 4.

Group-level average frequency spectra (Hz) of the noise-subtracted EEG amplitude signals obtained in the auditory condition (i.e. listening without moving; in blue), the right hand-tapping condition (red) and the left hand-tapping condition (green), averaged across all scalp channels. In all conditions, the 2.4 Hz auditory beat elicited an SS-EP at 2.4 Hz. As shown in the corresponding topographical maps, this beat-related SS-EP was maximal over fronto-central electrodes. In the left and right hand-tapping conditions, the 1.2 Hz hand-tapping movement was related to the appearance of an additional SS-EP at 1.2 Hz. As shown in the topographical maps, this movement-related SS-EP was maximal over the central electrodes contralateral to the moving hand. In these two conditions, an additional SS-EP emerged at 3.6 Hz, referred to as cross-modulation SS-EP, whose scalp topography showed patterns similar to both beat-related and movement-related SS-EPs topographies. Adapted from [32].
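The reasoning behind the cross-modulation peak can be illustrated with two sinusoids. If a 2.4 Hz and a 1.2 Hz component are merely added, the spectrum contains only those two frequencies; once they interact multiplicatively, a component appears at their sum, 3.6 Hz. The sketch below uses an arbitrary multiplicative term as a stand-in for a nonlinearity. It is not a model of the cortical interaction reported in [32], and the sampling rate, duration and 0.5 weighting are assumptions.

```python
import cmath
import math


def amplitude_at(signal, fs, freq):
    """Amplitude of the sinusoidal component of `signal` at `freq` (Hz)."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq * k / fs)
              for k, x in enumerate(signal))
    return 2 * abs(acc) / n


FS = 100        # sampling rate in Hz (assumed)
DURATION = 5.0  # seconds; whole number of cycles at 1.2, 2.4 and 3.6 Hz
BEAT_HZ = 2.4   # auditory beat
TAP_HZ = 1.2    # tapping on every second beat

times = [k / FS for k in range(int(FS * DURATION))]
beat = [math.cos(2 * math.pi * BEAT_HZ * t) for t in times]
tap = [math.cos(2 * math.pi * TAP_HZ * t) for t in times]

# Linear superposition: the two activities do not interact.
linear = [b + m for b, m in zip(beat, tap)]
# Hypothetical nonlinear interaction: an added product term.
nonlinear = [b + m + 0.5 * b * m for b, m in zip(beat, tap)]

cross_linear = amplitude_at(linear, FS, BEAT_HZ + TAP_HZ)
cross_nonlinear = amplitude_at(nonlinear, FS, BEAT_HZ + TAP_HZ)
print(cross_linear, cross_nonlinear)
```

The product term also contributes at the difference frequency (2.4 Hz − 1.2 Hz = 1.2 Hz), which coincides with the tapping frequency here, so only the sum frequency offers an unambiguous signature in this design.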

This experiment differs from previous electrophysiological studies of sensorimotor synchronization to the beat in several respects. First, instead of searching for coupling across a wide range of frequency bands, we were able to predict the frequencies at which the activity should take place, based on the periodicity of the performed movement and of the pacing sound. Second, the concentration of these movement- and beat-related activities within very narrow frequency bands improved the signal-to-noise ratio, which is fundamental to further assessing phase coherence and scalp topographies [31,35,36,62]. Third, instead of calculating coherence across electrodes within the same frequency band, the electrodes of interest were selected based on the scalp topography of these activities, which were identified in the frequency domain.

Importantly, our interpretation of the results relies, to a great extent, on the validity of the neural source assumptions for the elicited frequency-tagged responses. Indeed, because the spatial resolution of the approach is still limited by the inherent constraints of scalp EEG, alternative interpretations of the complex signature of movement-related activities in the frequency domain cannot be excluded (i.e. the responses generated at harmonic frequencies may not necessarily have the same scalp topography as the response obtained at 1.2 Hz). Future studies based on intracerebral recordings of the auditory and motor cortex [63–65] could help to clarify this issue.

6. Multisensory temporal binding induced by beat structure

As a final illustration of the potential of our approach, we explored how humans build an integrated representation of beat when it is induced through distinct sensory channels (e.g. auditory and visual simultaneously). Indeed, although beats are preferentially conveyed by auditory input [66–68], beat perception often co-occurs with visual movements such as when dancing or watching a conductor directing an orchestra [69]. In our study [42], the auditory and visual beats were either temporally congruent (i.e. synchronous in frequency and phase, thought to lead to a unified perception of beat) or temporally incongruent (i.e. at slightly distinct frequencies, thus not leading to a unified audiovisual beat percept).

Previous EEG recordings in humans have revealed that the congruency of combined auditory and visual stimulation enhances the magnitude of stimulus-induced EEG responses across both auditory and visual cortices [70–72]. However, because of the unavoidable temporal overlap between the neural responses to concurrent streams of sensory input, disentangling the neural activities related to each sensory stream, although critical to studying multisensory integration, is difficult [73].

Using the frequency-tagging approach in a more standard way, we tested whether this method could overcome these limitations. The stimuli were periodically modulated for experimental purposes, to ‘tag’ the corresponding neural responses based on their frequencies. To this end, features of the auditory and visual inputs (amplitude and luminance, respectively), distinct from those inducing the beat, were additionally modulated at distinct frequencies (at 11 and 10 Hz, respectively, thus faster than the beat and meter frequency range). These additional periodic modulations allowed us to isolate in the EEG spectrum the SS-EPs elicited by the processing of simultaneously presented auditory and visual stimuli, based on their distinct frequencies.
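The principle of separating two concurrent streams by their tagging frequencies can be sketched as follows. A single simulated channel carries two overlapping responses, one at 11 Hz and one at 10 Hz, and each is recovered independently from the spectrum. The response amplitudes, the phase offset, the sampling rate and the duration are hypothetical values chosen for illustration only.

```python
import cmath
import math


def amplitude_at(signal, fs, freq):
    """Amplitude of the sinusoidal component of `signal` at `freq` (Hz)."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq * k / fs)
              for k, x in enumerate(signal))
    return 2 * abs(acc) / n


FS = 200        # sampling rate in Hz (assumed)
DURATION = 2.0  # seconds; whole number of cycles at both tagging frequencies
AUD_HZ = 11     # auditory tagging frequency (amplitude modulation)
VIS_HZ = 10     # visual tagging frequency (luminance modulation)
AUD_AMP = 0.8   # hypothetical auditory response amplitude
VIS_AMP = 0.3   # hypothetical visual response amplitude

times = [k / FS for k in range(int(FS * DURATION))]

# Both responses overlap completely in time on the same simulated channel.
mixed = [AUD_AMP * math.cos(2 * math.pi * AUD_HZ * t)
         + VIS_AMP * math.cos(2 * math.pi * VIS_HZ * t + 1.0)
         for t in times]

aud_est = amplitude_at(mixed, FS, AUD_HZ)
vis_est = amplitude_at(mixed, FS, VIS_HZ)
print(aud_est, vis_est)
```

Although the two responses cannot be told apart in the time domain, each estimate depends only on the component at its own frequency, provided the analysis window is long enough to resolve the 1 Hz separation.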

In this experiment, synchronous audiovisual beats elicited enhanced auditory and visual SS-EPs as compared with asynchronous audiovisual beats. Moreover, this increase resulted from increased phase consistency of the SS-EPs across trials [42]. Taken together, these results suggest that temporal congruency enhances the processing of multisensory inputs, possibly through a dynamic binding by synchrony of the elicited activities and/or improved dynamic attending. This interpretation is in line with previous research showing that temporal congruency facilitates multisensory integration [74–81] and that multisensory perception may result from a process of binding by synchrony of the cortical responses to sensory inputs sharing similar temporal dynamics [71,82,83].
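Why greater phase consistency across trials translates into a larger averaged response can be shown with unit phasors. In the sketch below, each trial contributes a response of identical amplitude at the tagged frequency and only its phase differs; the index used is the length of the mean phasor, a standard measure of inter-trial phase consistency. The phase values and function names are hypothetical, and equal single-trial amplitude is an assumption made to isolate the effect of phase.

```python
import cmath


def phase_consistency(phases):
    """Length of the mean unit phasor: 1 = identical phases, 0 = full cancellation."""
    return abs(sum(cmath.exp(1j * p) for p in phases)) / len(phases)


def averaged_amplitude(single_trial_amp, phases):
    """Amplitude left at the tagged frequency after averaging trials over time.

    Assumes every trial has the same amplitude and differs only in phase.
    """
    return single_trial_amp * phase_consistency(phases)


# Hypothetical single-trial phases (radians) at the tagged frequency.
consistent = [0.0, 0.1, -0.1, 0.05, -0.05]
jittered = [0.0, 1.2, -1.4, 2.5, -2.0]

print(averaged_amplitude(1.0, consistent))
print(averaged_amplitude(1.0, jittered))
```

With identical single-trial amplitudes, the averaged amplitude is larger for the tightly clustered phases than for the scattered ones, which is the sense in which an amplitude increase can result from phase consistency alone.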

7. Discussion and perspectives

This review has emphasized the potential of the frequency-tagging approach to explore the neural entrainment to musical beat and meter as induced in various contexts such as sensorimotor synchronization or multisensory integration. Taken together, the results of these studies illustrate the advantages that characterize the frequency-tagging method [30,31]: (i) an objective identification of the neural responses elicited at the exact frequency of the expected perceived beat and meter; (ii) a straightforward quantification of these potentials using the frequency domain analysis; (iii) a high signal-to-noise ratio given the concentration of the response of interest within narrow frequency bands; and (iv) neural responses related to perceptual or cognitive aspects, probed without the need to perform explicit behavioural responses possibly biasing these measures.

(a). Making a bridge between beat- and meter-related steady-state evoked potentials, transient ERPs and ongoing oscillatory activities

In the work reviewed here, the term ‘beat- and meter-related SS-EPs’ was used in reference to the EEG frequency-tagging method by which this approach was inspired, to characterize the peaks observed in the EEG spectrum in response to the auditory rhythms. Moreover, these observed neural responses to rhythmic inputs were also related to an entrainment phenomenon: neural responses whose frequency and phase are locked to the stimulus (independently of the phase lag between the driving, acoustic, input and the driven, neural, output, and independently of the ability of the driven periodic output to arise spontaneously without any input or to continue after the train of periodic inputs) [11].

Importantly, by contrasting the sound envelope spectrum to the corresponding EEG spectrum, the frequency-tagging approach may be particularly well suited to assess not only how the responding neurons entrain to the rhythmic input over time, but also how temporal periodicities that are not physically prominent or even not present in the input emerge in the neural response. Interestingly, this latter observation has previously been predicted by modelling the responding neural network as a network of nonlinear oscillators [16,84,85].

Whether the neural responses described in this review result from ongoing neural activities resonating at the frequency of the stimulation [23,86,87], or whether they result from the linear superposition of independent transient ERP responses elicited by the repetition of the isochronous or rhythmic, non-isochronous, stimulus [30,88] remains a matter of debate. For instance, it could be proposed that the beat-induced periodic EEG response identified using the frequency-tagging approach constitutes a direct correlate of the actual mechanism through which attentional and perceptual processes are dynamically modulated as a function of time [84,85,89–91]. Phase entrainment to auditory streams has been demonstrated in many previous studies using rhythmic background sounds at δ (1–4 Hz) and θ (4–8 Hz) frequencies, and auditory performance was found to covary with the entrained oscillatory phase [19,85]. Transposed into the context of beat and meter induction, it could be hypothesized that the responsiveness of the neuronal population entrained to the beat varies according to the phase of the beat-induced cycle. If the beat-induced cycle, as observed in the form of beat- and meter-related SS-EPs, reflects cyclic modulation of excitability in neural populations, this would account for the previous observations that event-related potentials elicited at different time points relative to the beat or meter cycle exhibit differences in amplitude [92–99]. Finding correlations between beat-related SS-EPs, transient evoked responses and ongoing oscillatory neural activity by eliciting these brain responses concomitantly within a given experimental design could provide insight into this view [100,101].

(b). Retrieving time resolution and phase from steady-state evoked potentials

The frequency-tagging approach may appear to be an electrophysiological method that lacks time resolution, as the elicited activities are identified in the frequency domain rather than in the time domain. However, it may offer the possibility to study the frequency tuning function corresponding to a given stimulation. The frequency tuning function is thought to give an indication of the ‘sampling rate’ of a given neural network, i.e. not only the latency to process a single input but also the timing necessary between successive inputs to be processed. This concept was used in Nozaradan et al. [39] to reveal the resonance frequencies thought to shape musical beat and meter perception. By showing that the selective enhancement of the neural response at frequencies corresponding to the perceived beat and meter occurs within a specific frequency range, the results of this study suggest that beat and meter perception is supported by entrainment and resonance phenomena within the responding neural network [16].

In addition to the frequency tuning function, another temporal aspect crucial to beat perception is the phase selected for the beat within a given rhythmic pattern. To this end, the high signal-to-noise ratio obtained by the frequency-tagging method may help to recover phase information [31], although this possibility has not been exploited in the studies reviewed here.

(c). Sound envelope and beat induction

In our experiments, the stimuli were designed to induce the beat and meter exclusively based on the dynamics of amplitude modulation, specifically below 5 Hz, of a pure tone. Nevertheless, the perception of musical rhythm and meter does not rely only on the information conveyed by amplitude modulation, but also exploits harmonic structure, timbre modulations or even endogenous imagery of a temporal structure that can be imposed onto the sound [102]. In theory, one could hypothesize that these numerous features, processed by distinct neural populations [103], would be integrated within a unified representation corresponding to the percept of beat and meter. Such cross-feature interactions may be hypothesized to emerge when the sound envelope and the other features set up widespread synchrony at low frequencies across cortical neurons, thus adjusting to each other by synchrony of the periodic modulation of their responsiveness [104].

This hypothesis is built on models proposing that when there is task-relevant temporal structure that sensory systems can entrain to, lower frequency brain activities entrain to this temporal structure and become instrumental in sensory processing, by modulating the excitability of the neural population accordingly ([105]; see also [89,90]).

(d). Mirroring between pitch and meter processing

Periodicity could be considered as the critical determinant of pitch (i.e. the perceptual phenomenon of sounds organized within a scale from low to high tones; e.g. [105]), similar to musical meter. Indeed, the auditory system is apparently highly sensitive to the similarity between the successive periods of an acoustic waveform [103,105,106]. Just as only a small number of repetitions of the period is necessary to perceive pitch, only a small number of repetitions of a meter is sufficient to induce a meter percept, thus revealing the stability of this percept. Also, the nervous system is tolerant to perturbation or deterioration of this periodicity, as periodicities can be perceived from stimuli that are not strictly periodic in reality, suggesting that percepts of periodicity are supported by invariants abstracted from non-periodic inputs. This property of the auditory system has been hypothesized to emerge from the fact that most natural sounds are not strictly periodic, either within the frequency range of meter or within the frequency range of pitch [1,103,107].

Stability, tolerance and invariance in periodicity perception might result from nonlinear transformations of the sound's spectral content at various levels of the auditory pathway [104,107]. This is illustrated for example by the missing fundamental phenomenon, in which a pitch can be induced at a given frequency although this frequency is not conveyed in the sound input in reality. Similarly, a beat percept can be induced by a rhythmic pattern at a frequency that is not present in the sound envelope, as illustrated in highly syncopated rhythms [108,109].

In fact, one may speculate that meter and pitch emerge from similar physiological properties of the auditory neurons, but occurring at different frequency ranges (between 30 and 20 000 Hz for pitch, and between 0.5 and 5 Hz for meter). For instance, it could be hypothesized that the processing of periodicities (detection and reconstruction) within the frequency range specific to beat and meter could be supported by brain areas specifically devoted to this processing and functionally organized as an array of band-pass filters (i.e. a model similar to models proposed for pitch) [103]. Interestingly, the neural responses corresponding to perceived meter and pitch can be explained using similar models of nonlinear oscillators, corroborating the view of common nonlinear neural behaviours responsible for these percepts. Hence, investigating the parallel between pitch and meter periodicity using similar neurophysiological approaches (e.g. the frequency-tagging approach) may help us to understand their respective phenomenology and underlying neural mechanisms.

(e). Musicians versus non-musicians

Is neural entrainment to beat and meter modulated by musical training? Intuitively, one would expect increased neural entrainment in musicians compared with non-musicians. However, the contrast between musicians and non-musicians could be reflected not only in the robustness of the neural synchronization at these specific frequencies, but also in distinct resonance frequencies for beat and meter across these two groups, peaking at slower or faster beat frequencies in non-musicians versus musicians. The frequency-tagging approach could help to clarify this issue, as it may provide information regarding the ‘sampling rate’ of the responding neural population. For instance, the resonance frequencies for beat and meter could be retrieved in musicians versus non-musicians by exploring the selective neural entrainment at beat and meter frequencies elicited across different musical tempi.
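The logic of retrieving a resonance frequency from frequency-tagged responses can be sketched on synthetic data. In the Python example below, a toy signal is generated for each of several hypothetical beat frequencies, the spectral amplitude at the beat frequency is estimated after subtracting the mean amplitude of neighbouring bins (a simple noise correction, assumed here), and the tempo yielding the largest response is taken as the resonance frequency. The signals, the gain profile `toy_gain`, the function `tagged_amplitude` and all parameter values are invented for illustration and do not correspond to any recorded data.

```python
import numpy as np

def tagged_amplitude(signal, fs, freq, n_neighbours=4):
    """FFT amplitude at `freq` minus the mean amplitude of nearby bins."""
    amp = np.abs(np.fft.rfft(signal)) / len(signal) * 2
    freqs = np.fft.rfftfreq(len(signal), d=1 / fs)
    k = int(np.argmin(np.abs(freqs - freq)))
    # Neighbouring bins on each side, skipping the bins adjacent to the target.
    neighbours = np.r_[amp[k - 1 - n_neighbours:k - 1],
                       amp[k + 2:k + 2 + n_neighbours]]
    return amp[k] - neighbours.mean()

fs = 100                       # sampling rate in Hz (assumed)
t = np.arange(60 * fs) / fs    # 60 s epoch, giving a 1/60 Hz resolution
rng = np.random.default_rng(0)

beat_freqs = [1.0, 1.5, 2.0, 2.5, 3.0]   # hypothetical beat frequencies (Hz)
toy_gain = [0.4, 0.7, 1.0, 0.6, 0.3]     # made-up response profile

profile = {}
for f, g in zip(beat_freqs, toy_gain):
    # Synthetic "EEG": a sinusoid at the beat frequency plus white noise.
    eeg = g * np.sin(2 * np.pi * f * t) + rng.normal(0, 1, t.size)
    profile[f] = tagged_amplitude(eeg, fs, f)

# The beat frequency with the largest noise-corrected amplitude.
resonance = max(profile, key=profile.get)
print("estimated resonance frequency (Hz):", resonance)
```

In an actual experiment, the same comparison would be run on recorded responses for each group, and the resulting profiles compared between musicians and non-musicians.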

(f). Cultural diversity in rhythm perception

To explore the biological foundations of beat and meter properly, it is important to be aware of the diversity encountered across cultures regarding rhythmic material and metrical forms. As one could expect, rhythm has not developed in the same way across musical cultures [110]. Importantly, as most of the empirical research on musical rhythm has been performed on Western individuals, the literature concerning beat and meter is probably biased. The EEG frequency-tagging approach could also help to address some of the questions pertaining to cross-cultural differences in beat induction.

Taken together, the studies reviewed here illustrate how music constitutes a rich framework to explore the phenomenon of entrainment at the level of neural networks, its involvement in dynamic cognitive processing, as well as its role in the general representation of temporal structures. They also provide further evidence that neural entrainment, as indexed by the EEG frequency-tagging approach, may play a crucial role in the formation of coherent representations from streams of dynamic sensory inputs. More generally, these results suggest that studying musical rhythm perception constitutes a unique opportunity to gain insight into the general mechanisms of entrainment at different scales, from neural systems to entire bodies.

Acknowledgements

The author thanks Dr Bruno Rossion for his helpful comments on a previous version of this review.

Funding statement

S.N. is supported by the Belgian National Fund for Scientific Research (F.R.S.-FNRS) and FRSM 3.4558.12 Convention grant (to Pr. A. Mouraux).

References

- 1. London J. 2004. Hearing in time: psychological aspects of musical meter. London, UK: Oxford University Press.

- 2. van Noorden L, Moelants D. 1999. Resonance in the perception of musical pulse. J. New Music Res. 28, 43–66. (doi:10.1076/jnmr.28.1.43.3122)

- 3. Repp BH. 2005. Sensorimotor synchronization: a review of the tapping literature. Psychon. Bull. Rev. 12, 969–992. (doi:10.3758/BF03206433)

- 4. Phillips-Silver J, Keller PE. 2012. Searching for roots of entrainment and joint action in early musical interactions. Front. Hum. Neurosci. 6, 26. (doi:10.3389/fnhum.2012.00026)

- 5. Patel AD, Iversen JR. 2014. The evolutionary neuroscience of musical beat perception: the action simulation for auditory prediction (ASAP) hypothesis. Front. Syst. Neurosci. 8, 57. (doi:10.3389/fnsys.2014.00057)

- 6. Phillips-Silver J, Aktipis CA, Bryant GA. 2010. The ecology of entrainment: foundations of coordinated rhythmic movement. Music Percept. 28, 3–14. (doi:10.1525/mp.2010.28.1.3)

- 7. Todd NP. 1999. Motion in music: a neurobiological perspective. Music Percept. 17, 115–126. (doi:10.2307/40285814)

- 8. Zatorre RJ, Chen JL, Penhune VB. 2007. When the brain plays music: auditory-motor interactions in music perception and production. Nat. Rev. Neurosci. 8, 547–558. (doi:10.1038/nrn2152)

- 9. Bengtsson SL, Ullén F, Ehrsson HH, Hashimoto T, Kito T, Naito E, Forssberg H, Sadato N. 2009. Listening to rhythms activates motor and premotor cortices. Cortex 45, 62–71. (doi:10.1016/j.cortex.2008.07.002)

- 10. Grahn JA. 2012. Neural mechanisms of rhythm perception: current findings and future perspectives. Top. Cogn. Sci. 4, 585–606. (doi:10.1111/j.1756-8765.2012.01213.x)

- 11. Pikovsky A, Rosenblum M, Kurths J. 2001. Synchronization: a universal concept in nonlinear sciences. Cambridge, UK: Cambridge University Press.

- 12. McAuley JD. 2010. Tempo and rhythm. In Music perception, Springer Handbook of Auditory Research, vol. 36 (eds Jones MR, Fay RR, Popper AN), pp. 165–200. New York, NY: Springer.

- 13. Jones MR. 1976. Time, our lost dimension: toward a new theory of perception, attention, and memory. Psychol. Rev. 83, 323–355. (doi:10.1037/0033-295X.83.5.323)

- 14. Stevens CJ. 2012. Music perception and cognition: a review of recent cross-cultural research. Top. Cogn. Sci. 4, 653–667. (doi:10.1111/j.1756-8765.2012.01215.x)

- 15. Todd NP, Lee CS, O'Boyle DJ. 2002. A sensorimotor theory of temporal tracking and beat induction. Psychol. Res. 66, 26–39. (doi:10.1007/s004260100071)

- 16. Large EW. 2008. Resonating to musical rhythm: theory and experiment. In The psychology of time (ed. Grondin S). Bingley, UK: Emerald.

- 17. Buzsáki G, Draguhn A. 2004. Neuronal oscillations in cortical networks. Science 304, 1926–1929. (doi:10.1126/science.1099745)

- 18. Hutcheon B, Yarom Y. 2000. Resonance, oscillation and the intrinsic frequency preferences of neurons. Trends Neurosci. 23, 216–222. (doi:10.1016/S0166-2236(00)01547-2)

- 19. VanRullen R, Zoefel B, Ilhan B. 2014. On the cyclic nature of perception in vision versus audition. Phil. Trans. R. Soc. B 369, 20130214. (doi:10.1098/rstb.2013.0214)

- 20. Giraud AL, Poeppel D. 2012. Cortical oscillations and speech processing: emerging computational principles and operations. Nat. Neurosci. 15, 511–517. (doi:10.1038/nn.3063)

- 21. Regan D. 1966. Some characteristics of average steady-state and transient responses evoked by modulated light. Electroencephalogr. Clin. Neurophysiol. 20, 238–248. (doi:10.1016/0013-4694(66)90088-5)

- 22. Van der Tweel LH, Lunel HF. 1965. Human visual responses to sinusoidally modulated light. Electroencephalogr. Clin. Neurophysiol. 18, 587–598. (doi:10.1016/0013-4694(65)90076-3)

- 23. Galambos R, Makeig S, Talmachoff PJ. 1981. A 40-Hz auditory potential recorded from the human scalp. Proc. Natl Acad. Sci. USA 78, 2643–2647. (doi:10.1073/pnas.78.4.2643)

- 24. Pantev C, Roberts LE, Elbert T, Ross B, Wienbruch C. 1996. Tonotopic organization of the sources of human auditory steady-state responses. Hear. Res. 101, 62–74. (doi:10.1016/S0378-5955(96)00133-5)

- 25. Galambos R. 1982. Tactile and auditory stimuli repeated at high rates (30–50 per sec) produce similar event related potentials. Ann. NY Acad. Sci. 388, 722–728. (doi:10.1111/j.1749-6632.1982.tb50841.x)

- 26. Picton TW, John MS, Dimitrijevic A, Purcell D. 2003. Human auditory steady-state responses. Int. J. Audiol. 42, 177–219. (doi:10.3109/14992020309101316)

- 27. Ross B, Draganova R, Picton TW, Pantev C. 2003. Frequency specificity of 40-Hz auditory steady-state responses. Hear. Res. 186, 57–68. (doi:10.1016/S0378-5955(03)00299-5)

- 28. Tobimatsu S, Zhang YM, Kato M. 1999. Steady-state vibration somatosensory evoked potentials: physiological characteristics and tuning function. Clin. Neurophysiol. 110, 1953–1958. (doi:10.1016/S1388-2457(99)00146-7)

- 29. Colon E, Nozaradan S, Legrain V, Mouraux A. 2012. Steady-state evoked potentials to tag specific components of nociceptive cortical processing. Neuroimage 60, 571–581. (doi:10.1016/j.neuroimage.2011.12.015)

- 30. Regan D. 1989. Human brain electrophysiology: evoked potentials and evoked magnetic fields in science and medicine. New York, NY: Elsevier.

- 31. Rossion B. 2014. Understanding individual face discrimination by means of fast periodic visual stimulation. Exp. Brain Res. 15, 87. (doi:10.1186/1471-2202-15-87)

- 32. Nozaradan S, Zerouali Y, Peretz I, Mouraux A. In press. Capturing with EEG the neural entrainment and coupling underlying sensorimotor synchronization to the beat. Cereb. Cortex. (doi:10.1093/cercor/bht261)

- 33. Morgan ST, Hansen JC, Hillyard SA. 1996. Selective attention to stimulus location modulates the steady-state visual evoked potential. Proc. Natl Acad. Sci. USA 93, 4770–4774. (doi:10.1073/pnas.93.10.4770)

- 34. Toffanin P, de Jong R, Johnson A, Martens S. 2009. Using frequency tagging to quantify attentional deployment in a visual divided attention task. Int. J. Psychophysiol. 72, 289–298. (doi:10.1016/j.ijpsycho.2009.01.006)

- 35. Appelbaum LG, Wade AR, Pettet MW, Vildavski VY, Norcia AM. 2008. Figure-ground interaction in the human visual cortex. J. Vis. 8, 1–19. (doi:10.1167/8.9.8)

- 36. Rossion B, Boremanse A. 2011. Robust sensitivity to facial identity in the right human occipito-temporal cortex as revealed by steady-state visual-evoked potentials. J. Vis. 11, 16. (doi:10.1167/11.2.16)

- 37. Boremanse A, Norcia AM, Rossion B. 2013. An objective signature for visual binding of face parts in the human brain. J. Vis. 13, 6. (doi:10.1167/13.11.6)

- 38. Lerdahl F, Jackendoff R. 1983. A generative theory of tonal music. Cambridge, MA: MIT Press.

- 39. Nozaradan S, Peretz I, Mouraux A. 2012. Selective neuronal entrainment to the beat and meter embedded in a musical rhythm. J. Neurosci. 32, 17 572–17 581. (doi:10.1523/JNEUROSCI.3203-12.2012)

- 40. Povel DJ, Essens PJ. 1985. Perception of temporal patterns. Music Percept. 2, 411–441. (doi:10.2307/40285311)

- 41. Desain P, Honing H. 2003. The formation of rhythmic categories and metric priming. Perception 32, 341–365. (doi:10.1068/p3370)

- 42. Nozaradan S, Peretz I, Mouraux A. 2012. Steady-state evoked potentials as an index of multisensory temporal binding. Neuroimage 60, 21–28. (doi:10.1016/j.neuroimage.2011.11.065)

- 43. Herrmann B, Henry MJ, Grigutsch M, Obleser J. 2013. Oscillatory phase dynamics in neural entrainment underpin illusory percepts of time. J. Neurosci. 33, 15 799–15 809. (doi:10.1523/JNEUROSCI.1434-13.2013)

- 44. Nozaradan S, Peretz I, Missal M, Mouraux A. 2011. Tagging the neuronal entrainment to beat and meter. J. Neurosci. 31, 10 234–10 240. (doi:10.1523/JNEUROSCI.0411-11.2011)

- 45. Janata P, Tomic ST, Haberman JM. 2012. Sensorimotor coupling in music and the psychology of the groove. J. Exp. Psychol. Gen. 141, 54–75. (doi:10.1037/a0024208)

- 46. Phillips-Silver J, Trainor LJ. 2005. Feeling the beat: movement influences infant rhythm perception. Science 308, 1430. (doi:10.1126/science.1110922)

- 47. Madison G. 2006. Experiencing groove induced by music: consistency and phenomenology. Music Percept. 24, 201–208. (doi:10.1525/mp.2006.24.2.201)

- 48. Grahn JA, Brett M. 2007. Rhythm and beat perception in motor areas of the brain. J. Cogn. Neurosci. 19, 893–906. (doi:10.1162/jocn.2007.19.5.893)

- 49. Chen JL, Penhune VB, Zatorre RJ. 2008. Listening to musical rhythms recruits motor regions of the brain. Cereb. Cortex 18, 2844–2854. (doi:10.1093/cercor/bhn042)

- 50. Teki S, Grube M, Griffiths TD. 2011. A unified model of time perception accounts for duration-based and beat-based timing mechanisms. Front. Integr. Neurosci. 5, 90. (doi:10.3389/fnint.2011.00090)

- 51. Phillips-Silver J, Trainor LJ. 2007. Hearing what the body feels: auditory encoding of rhythmic movement. Cognition 105, 533–546. (doi:10.1016/j.cognition.2006.11.006)

- 52. Gerloff C, Toro C, Uenishi N, Cohen LG, Leocani L, Hallett M. 1997. Steady-state movement-related cortical potentials: a new approach to assessing cortical activity associated with fast repetitive finger movements. Electroencephalogr. Clin. Neurophysiol. 102, 106–113. (doi:10.1016/S0921-884X(96)96039-7)

- 53. Gerloff C, Uenishi N, Nagamine T, Kunieda T, Hallett M, Shibasaki H. 1998. Cortical activation during fast repetitive finger movements in humans: steady-state movement-related magnetic fields and their cortical generators. Electroencephalogr. Clin. Neurophysiol. 109, 444–453. (doi:10.1016/S0924-980X(98)00045-9)

- 54. Kopp B, Kunkel A, Müller G, Mühlnickel W, Flor H. 2000. Steady-state movement-related potentials evoked by fast repetitive movements. Brain Topogr. 13, 21–28. (doi:10.1023/A:1007830118227)

- 55. Pollok B, Müller K, Aschersleben G, Schmitz F, Schnitzler A, Prinz W. 2003. Cortical activations associated with auditorily paced finger tapping. Neuroreport 14, 247–250. (doi:10.1097/00001756-200302100-00018)

- 56. Müller K, Schmitz F, Schnitzler A, Freund HJ, Aschersleben G, Prinz W. 2000. Neuromagnetic correlates of sensorimotor synchronization. J. Cogn. Neurosci. 12, 546–555. (doi:10.1162/089892900562282)

- 57. Osman A, Albert R, Ridderinkhof KR, Band G, van der Molen M. 2006. The beat goes on: rhythmic modulation of cortical potentials by imagined tapping. J. Exp. Psychol. Hum. Percept. Perform. 32, 986–1005. (doi:10.1037/0096-1523.32.4.986)

- 58. Bourguignon M, Jousmäki V, Op de Beeck M, Van Bogaert P, Goldman S, De Tiège X. 2012. Neuronal network coherent with hand kinematics during fast repetitive hand movements. Neuroimage 59, 1684–1691. (doi:10.1016/j.neuroimage.2011.09.022)

- 59. Daffertshofer A, Peper CL, Beek PJ. 2005. Stabilization of bimanual coordination due to active interhemispheric inhibition: a dynamical account. Biol. Cybern. 92, 101–109. (doi:10.1007/s00422-004-0539-6)

- 60. Kourtis D, Seiss E, Praamstra P. 2008. Movement-related changes in cortical excitability: a steady-state SEP approach. Brain Res. 1244, 113–120. (doi:10.1016/j.brainres.2008.09.048)

- 61. Bourguignon M, De Tiège X, Op de Beeck M, Pirotte B, Van Bogaert P, Goldman S, Hari R, Jousmäki V. 2011. Functional motor-cortex mapping using corticokinematic coherence. Neuroimage 55, 1475–1479. (doi:10.1016/j.neuroimage.2011.01.031)

- 62. Rossion B, Prieto EA, Boremanse A, Kuefner D, Van Belle G. 2012. A steady-state visual evoked potential approach to individual face perception: effect of inversion, contrast-reversal and temporal dynamics. Neuroimage 63, 1585–1600. (doi:10.1016/j.neuroimage.2012.08.033)

- 63. Nourski KV, Brugge JF. 2011. Representation of temporal sound features in the human auditory cortex. Rev. Neurosci. 22, 187–203. (doi:10.1515/rns.2011.016)

- 64. Gourévitch B, Le Bouquin Jeannès R, Faucon G, Liégeois-Chauvel C. 2008. Temporal envelope processing in the human auditory cortex: response and interconnections of auditory cortical areas. Hear. Res. 237, 1–18. (doi:10.1016/j.heares.2007.12.003)

- 65. Zion Golumbic EM, et al. 2013. Mechanisms underlying selective neuronal tracking of attended speech at a ‘cocktail party’. Neuron 77, 980–991. (doi:10.1016/j.neuron.2012.12.037)

- 66. Patel AD, Iversen JR, Chen Y, Repp BH. 2005. The influence of metricality and modality on synchronization with a beat. Exp. Brain Res. 163, 226–238. (doi:10.1007/s00221-004-2159-8)

- 67. Glenberg AM, Mann S, Altman L, Forman T. 1989. Modality effects in the coding and reproduction of rhythms. Mem. Cogn. 17, 373–383. (doi:10.3758/BF03202611)

- 68. Grahn JA, Henry MJ, McAuley JD. 2011. FMRI investigation of cross-modal interactions in beat perception: audition primes vision, but not vice versa. Neuroimage 54, 1231–1243. (doi:10.1016/j.neuroimage.2010.09.033)

- 69. Repp BH. 2006. Musical synchronization. In Music, motor control and the brain (eds Altenmüller E, Wiesendanger M, Kesselring J), pp. 55–76, ch. 4. London, UK: Oxford University Press.

- 70. Luo H, Liu Z, Poeppel D. 2010. Auditory cortex tracks both auditory and visual stimulus dynamics using low-frequency neuronal phase modulation. PLoS Biol. 8, e1000445. (doi:10.1371/journal.pbio.1000445)

- 71. Schroeder CE, Lakatos P, Kajikawa Y, Partan S, Puce A. 2008. Neuronal oscillations and visual amplification of speech. Trends Cogn. Sci. 12, 106–113. (doi:10.1016/j.tics.2008.01.002)

- 72. Schall S, Quigley C, Onat S, König P. 2009. Visual stimulus locking of EEG is modulated by temporal congruency of auditory stimuli. Exp. Brain Res. 198, 137–151. (doi:10.1007/s00221-009-1867-5)

- 73. Besle J, Bertrand O, Giard MH. 2009. Electrophysiological (EEG, sEEG, MEG) evidence for multiple audiovisual interactions in the human auditory cortex. Hear. Res. 258, 143–151. (doi:10.1016/j.heares.2009.06.016)

- 74. Vroomen J, Keetels M. 2010. Perception of intersensory synchrony: a tutorial review. Atten. Percept. Psychophys. 72, 871–884. (doi:10.3758/APP.72.4.871)

- 75. Welch RB, Warren DH. 1980. Immediate perceptual response to intersensory discrepancy. Psychol. Bull. 88, 638–667. (doi:10.1037/0033-2909.88.3.638)

- 76. Sekuler R, Sekuler AB, Lau R. 1997. Sound alters visual motion perception. Nature 385, 308. (doi:10.1038/385308a0)

- 77. Bertelson P. 1999. Ventriloquism: a case of cross-modal perceptual grouping. In Cognitive contributions to the perception of spatial and temporal events (eds Aschersleben G, Bachmann T, Müsseler J), pp. 347–362. Amsterdam, The Netherlands: Elsevier.

- 78. Zampini M, Shore DI, Spence C. 2003. Audiovisual temporal order judgments. Exp. Brain Res. 152, 198–210. (doi:10.1007/s00221-003-1536-z)

- 79. Fujisaki W, Nishida S. 2005. Temporal frequency characteristics of synchrony-asynchrony discrimination of audio-visual signals. Exp. Brain Res. 166, 455–464. (doi:10.1007/s00221-005-2385-8)

- 80. Vatakis A, Spence C. 2006. Audiovisual synchrony perception for music, speech, and object actions. Brain Res. 1111, 134–142. (doi:10.1016/j.brainres.2006.05.078)

- 81. Petrini K, Russell M, Pollick F. 2009. When knowing can replace seeing in audiovisual integration of actions. Cognition 110, 432–439. (doi:10.1016/j.cognition.2008.11.015)

- 82. Kayser C. 2009. Phase resetting as a mechanism for supramodal attentional control. Neuron 64, 300–302. (doi:10.1016/j.neuron.2009.10.022)

- 83. Senkowski D, Talsma D, Grigutsch M, Herrmann CS, Woldorff MG. 2007. Good times for multisensory integration: effects of the precision of temporal synchrony as revealed by gamma-band oscillations. Neuropsychologia 45, 561–571. (doi:10.1016/j.neuropsychologia.2006.01.013)

- 84. Jones MR, Boltz M. 1989. Dynamic attending and responses to time. Psychol. Rev. 96, 459–491. (doi:10.1037/0033-295X.96.3.459)

- 85. Large EW, Jones MR. 1999. The dynamics of attending: how we track time varying events. Psychol. Rev. 106, 119–159. (doi:10.1037/0033-295X.106.1.119)

- 86. Vialatte FB, Maurice M, Dauwels J, Cichocki A. 2010. Steady-state visually evoked potentials: focus on essential paradigms and future perspectives. Prog. Neurobiol. 90, 418–438. (doi:10.1016/j.pneurobio.2009.11.005)

- 87. Zhang L, Peng W, Zhang Z, Hu L. 2013. Distinct features of auditory steady-state responses as compared to transient event-related potentials. PLoS ONE 8, e69164. (doi:10.1371/journal.pone.0069164)

- 88. Capilla A, Pazo-Alvarez P, Darriba A, Campo P, Gross J. 2011. Steady-state visual evoked potentials can be explained by temporal superposition of transient event-related responses. PLoS ONE 6, e14543. (doi:10.1371/journal.pone.0014543)

- 89. Lakatos P, Karmos G, Mehta AD, Ulbert I, Schroeder CE. 2008. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science 320, 110–113. (doi:10.1126/science.1154735)

- 90. Schroeder CE, Lakatos P. 2009. Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 32, 9–18. (doi:10.1016/j.tins.2008.09.012)

- 91. Henry MJ, Herrmann B. 2012. A precluding role of low-frequency oscillations for auditory perception in a continuous processing mode. J. Neurosci. 32, 17 525–17 527. (doi:10.1523/JNEUROSCI.4456-12.2012)

- 92. Brochard R, Abecasis D, Potter D, Ragot R, Drake C. 2003. The ‘tick-tock’ of our internal clock: direct brain evidence of subjective accents in isochronous sequences. Psychol. Sci. 14, 362–366. (doi:10.1111/1467-9280.24441)

- 93. Snyder JS, Large EW. 2005. Gamma-band activity reflects the metric structure of rhythmic tone sequences. Cogn. Brain Res. 24, 117–126. (doi:10.1016/j.cogbrainres.2004.12.014)

- 94. Pablos Martin X, Deltenre P, Hoonhorst I, Markessis E, Rossion B, Colin C. 2007. Perceptual biases for rhythm: the mismatch negativity latency indexes the privileged status of binary versus non-binary interval ratios. Clin. Neurophysiol. 118, 2709–2715. (doi:10.1016/j.clinph.2007.08.019)

- 95. Grube M, Griffiths TD. 2009. Metricality-enhanced temporal encoding and the subjective perception of rhythmic sequences. Cortex 45, 72–79. (doi:10.1016/j.cortex.2008.01.006)

- 96. Iversen JR, Repp BH, Patel AD. 2009. Top-down control of rhythm perception modulates early auditory responses. Ann. NY Acad. Sci. 1169, 58–73. (doi:10.1111/j.1749-6632.2009.04579.x)

- 97. Fujioka T, Zendel BR, Ross B. 2010. Endogenous neuromagnetic activity for mental hierarchy of timing. J. Neurosci. 30, 458–466. (doi:10.1523/JNEUROSCI.3086-09.2010)

- 98. Schaefer RS, Vlek RJ, Desain P. 2010. Decomposing rhythm processing: electroencephalography of perceived and self-imposed rhythmic patterns. Psychol. Res. (doi:10.1007/s00426-010-0293-4)

- 99. Bouwer FL, Honing H. 2013. How much beat do you need? An EEG study on the effects of attention on beat perception using only temporal accents. In Conf. Abstr.: 14th Rhythm Production and Perception Workshop, Birmingham, 11th–13th September 2013. Frontiers in Human Neuroscience. (doi:10.3389/conf.fnhum.2013.212.00030)

- 100. Henry MJ, Obleser J. 2012. Frequency modulation entrains slow neural oscillations and optimizes human listening behaviour. Proc. Natl Acad. Sci. USA 109, 20 095–20 100. (doi:10.1073/pnas.1213390109)

- 101. Henry MJ, Herrmann B. 2014. Low-frequency neural oscillations support dynamic attending in temporal context. Timing Time Percept. 2, 62–86. (doi:10.1163/22134468-00002011)

- 102. Repp BH. 2010. Do metrical accents create illusory phenomenal accents? Atten. Percept. Psychophys. 72, 1390–1403. (doi:10.3758/APP.72.5.1390)

- 103. Eggermont JJ. 2001. Between sound and perception: reviewing the search for a neural code. Hear. Res. 157, 1–42. (doi:10.1016/S0378-5955(01)00259-3)

- 104. Musacchia G, Large EW, Schroeder CE. 2013. Thalamocortical mechanisms for integrating musical tone and rhythm. Hear. Res. 308, 50–59. (doi:10.1016/j.heares.2013.09.017)

- 105. Schnupp J, Nelken I, King A. 2010. Auditory neuroscience: making sense of sound. Cambridge, MA: MIT Press.

- 106. Bendor D, Wang X. 2010. Neural coding of periodicity in marmoset auditory cortex. J. Neurophysiol. 103, 1809–1822. (doi:10.1152/jn.00281.2009)

- 107. Large EW, Almonte FV. 2012. Neurodynamics, tonality, and the auditory brainstem response. Ann. NY Acad. Sci. 1252, E1–E7. (doi:10.1111/j.1749-6632.2012.06594.x)

- 108. Lerud KD, Almonte FV, Kim JC, Large EW. 2013. Mode-locking neurodynamics predict human auditory brainstem responses to musical intervals. Hear. Res. 308, 41–49.

- 109. Velasco MJ, Large EW. 2011. Pulse detection in syncopating rhythms using neural oscillators. In Proc. 12th Annu. Conf. Int. Soc. for Music Information Retrieval, pp. 185–190. Miami, FL: ISMIR University of Miami.

- 110. Pressing J. 2002. Black Atlantic rhythm: its computational and transcultural foundations. Music Percept. 19, 285–310. (doi:10.1525/mp.2002.19.3.285)