Abstract

Introduction

Optimal identification of subtle cognitive impairment in the primary care setting requires a very brief tool combining (a) patients’ subjective impairments, (b) cognitive testing, and (c) information from informants. The present study developed a new, very quick and easily administered case-finding tool combining these assessments (‘BrainCheck’) and tested the feasibility and validity of this instrument in two independent studies.

Methods

We developed a case-finding tool composed of (a) patient-directed questions about memory and depression, (b) patient-directed clock drawing, and (c) the informant-directed seven-item version of the Informant Questionnaire on Cognitive Decline in the Elderly (IQCODE). Feasibility study: 52 general practitioners rated the feasibility and acceptance of the patient-directed tool. Validation study: an independent group of 288 Memory Clinic patients (mean ± SD age = 76.6 ± 7.9 years, education = 12.0 ± 2.6 years; 53.8% female) with diagnoses of mild cognitive impairment (n = 80), probable Alzheimer's disease (n = 185), or major depression (n = 23) and 126 demographically matched, cognitively healthy volunteers (age = 75.2 ± 8.8 years, education = 12.5 ± 2.7 years; 40% female) participated. All patients and healthy controls were administered the patient-directed tool, and informants of 113 patients and 70 healthy controls completed the very short IQCODE.

Results

Feasibility study: general practitioners rated the patient-directed tool as highly feasible and acceptable. Validation study: a classification and regression tree analysis of the patient-directed data generated a decision algorithm with a correct classification rate (CCR) of 81.2% (sensitivity = 83.0%, specificity = 79.4%). Critically, the CCR of the combined patient- and informant-directed instruments (BrainCheck) reached nearly 90% (89.4%; sensitivity = 97.4%, specificity = 81.6%).

Conclusion

A new and very brief instrument for general practitioners, ‘BrainCheck’, combined three sources of information deemed critical for effective case-finding (that is, patients’ subjective impairments, cognitive testing, and informant information) and achieved a CCR of nearly 90%. It therefore provides a very efficient and valid tool to help general practitioners decide whether patients with suspected cognitive impairments should be further evaluated or not (‘watchful waiting’).

Introduction

Cognitive disorders are frequently underdiagnosed and, consequently, diseases such as dementia are undertreated [1]. The early identification of cognitive impairments is critical to initiate diagnostic procedures, since early diagnoses lead to optimal treatment and potentially improve prognoses and decrease morbidity. National task forces and academies have concluded that routine dementia screening – that is, population-based screening of individuals irrespective of the existence of cognitive complaints – cannot be recommended based on existing data, most notably screening tests’ mediocre diagnostic specificity [2-5]. Instead, speciality groups recommend a case-finding strategy whereby general practitioners (GPs) test patients with suspected or observable early signs or symptoms of cognitive impairment [6-10]. This strategy requires optimally sensitive and specific case-finding tools that are well suited to the primary care setting.

Many patient-directed cognitive or informant-based tools are available for case-finding in the primary care setting [4,6,11-16]. Lin and colleagues list 51 such tools in their comprehensive review [4]. Most were initially designed as screening tools, and thus the majority were evaluated in only one study relevant to the primary care setting (that is, 36/51 instruments; see ‘supplements’, ‘supplemental content’, ‘supplement. additional information on interventions’ in [4]). Of these tools, the best-studied cognitive (patient-directed) case-finding tool is the Mini-Mental State Examination (MMSE) [17]. However, the MMSE suffers from low inter-rater reliability (IRR), low sensitivity for mild impairment (for example [18]), and inappropriateness for primarily nonverbal forms of cognitive impairment (for example, mild cognitive impairment (MCI) and dementia in Parkinson disease [19]). The Montreal Cognitive Assessment is similar in design to the MMSE in that it assesses multiple cognitive domains and has a maximum of 30 points [20]. Compared with the MMSE, the Montreal Cognitive Assessment correctly identified more MCI and Alzheimer’s dementia (AD) patients (higher sensitivity) but fewer healthy controls (lower specificity) [20]. Additional instruments include the Rowland Universal Dementia Assessment Scale [21] and the CANTABmobile (Cambridge Cognition, Cambridge, UK), a 10-minute to 15-minute screening instrument composed of a visual paired associates learning test, the Geriatric Depression Scale, and an assessment of activities of daily living. While these tools are valuable for quickly assessing global cognitive functioning, particularly in a memory clinic setting [22], their relatively long administration time and potential burden on the patient–GP relationship reduce their feasibility and acceptability in the primary care setting.

Shorter patient-directed cognitive tools that have likewise been well studied include the clock drawing test (CDT) [23], the Mini-Cog [24], and the Memory Impairment Screen [6,11-16,25]. The CDT primarily assesses executive dysfunction and is less influenced by sociodemographic factors (for example, educational level, language) than other case-finding tools [14]. Administered on its own, the CDT yields adequate sensitivity and specificity for dementia [14], and both improve when it is combined with other cognitive instruments that assess episodic memory functioning [26]. For example, the Mini-Cog combines the CDT with a three-item delayed word recall task [24]. The Mini-Cog can also be administered very quickly (about 3 minutes), has a high sensitivity and correct classification rate (CCR), and is less affected by low education and literacy than, for example, the MMSE [24,27]. The Memory Impairment Screen is a short cognitive case-finding tool that focuses purely on episodic memory functioning [25]. This four-item delayed and cued recall test can be administered quickly and performance is not significantly affected by demographic factors, but its sensitivity and specificity are inferior to those of, for example, the Mini-Cog [13]. Thus, short instruments that combine executive and episodic memory assessments appear most promising for accurate case-finding in the GP setting.

The subjective impression of the patient’s informant (for example, a family member) is a critical component of an accurate assessment of the patient’s cognitive and behavioral profile [6,28,29], although this information may not always be available. Informants may notice changes in cognitive functioning not noticed by patients, especially when patients have neurodegenerative syndromes associated with a lack of awareness [30]. One widely used informant-directed tool that is available in many languages is the Informant Questionnaire on Cognitive Decline in the Elderly (IQCODE) [31,32]. This tool performs adequately in the primary care setting [4]. Moreover, a very short, seven-item version of the IQCODE is available, which distinguishes healthy older individuals from patients with AD and MCI with high correct classification rates (that is, 90.5% and 80.1%, respectively) [33]. The General Practitioner Assessment of Cognition (GPCOG) [28] was developed specifically as a GP case-finding tool and combines two critical sources of information: patient-directed cognitive testing (that is, CDT, time orientation, a report of a recent event and a word recall test) and informant-directed questioning (that is, six informant questions asking whether the patient’s functioning has changed compared with ‘a few years ago’). Although combining two separate sources of information is assumed to bolster diagnostic accuracy compared with tests relying on one source, the diagnostic accuracy of the GPCOG was, surprisingly, comparable with that of the MMSE (that is, area under the receiver operating characteristic curve = 0.91 vs. 0.89, respectively) [28].

Cordell and colleagues recently suggested that case-finding tools can be further optimized by including questions on patients’ subjective functioning [6]. Indeed, questions on subjective cognitive impairments [34] and depression [35] are good predictors of the future development of, for example, MCI and dementia [36]. Moreover, these questions are easy to administer in the primary care setting because they typically correspond to the questions GPs ask during routine history-taking.

The emerging consensus regarding case-finding for patients with potential MCI and early dementia in the primary care setting is that it optimally requires the combination of patient-directed cognitive testing, informant information on patient functioning and patient information on subjective cognitive impairments [6]. However, to our knowledge, no case-finding tool combines these three components into a single tool that is feasible to administer in the primary care setting; that is, a tool which is very brief and nonthreatening. The first goal of this study was therefore to develop a very brief, user-friendly case-finding tool for primary care physicians that combines a patient-directed tool (that is, cognitive testing and subjective patient information) and an informant-directed tool (that is, subjective informant information). We then performed a study to obtain GPs’ judgments on the feasibility of the patient-directed instrument; that is, the instrument administered by GPs. Finally, we conducted a validation study to determine the optimal scoring criteria and corresponding CCR of the patient-directed instrument alone (applicable in situations in which no informant is available) and of the combined patient-directed and informant-directed tool; that is, BrainCheck. The informant-directed instrument (that is, very short IQCODE) was previously validated by Ehrensperger and colleagues [33].

BrainCheck development

We describe the development of the BrainCheck instrument, followed by the feasibility and validation studies.

Methods

BrainCheck is composed of patient-directed and informant-directed components.

Patient-directed instrument

A Swiss dementia task force, strengthened by an international advisory board (see Acknowledgements), was charged with developing a very brief (that is, applied in a few minutes) patient-directed instrument sensitive to subtle cognitive decline. The task force created the following yes/no questions about subjective memory performance (Q1 to Q3; cf. [37]) and depressive symptoms (Q4 and Q5 [38]):

Q1. Have you experienced a recent decline in your ability to memorize new things?

Q2. Have any of your friends or relatives made remarks about your worsened memory?

Q3. Do your memory or concentration problems affect your everyday life?

Q4. During the past month, have you often been bothered by feeling down, depressed or hopeless?

Q5. During the past month, have you often been bothered by little interest or pleasure in doing things?

The CDT [23] was included in the patient-directed instrument for the efficient assessment of cognitive, in particular executive, function. The CDT presents patients with a predrawn circle (10 cm diameter) and instructs them to ‘Please draw a clock with all the numbers and hands’ (no specific time or time limit specified). Once finished, patients are asked to ‘Write down in numbers the time shown on the clock you have just drawn, as it would appear on a timetable or TV guide.’ The following scoring criteria were used [26], each requiring a yes/no decision:

CDT-1. Are exactly 12 numbers present?

CDT-2. Is the number 12 correctly placed?

CDT-3. Are there two distinguishable hands (length or thickness)?

CDT-4. Does the time drawn correspond to the time written in numbers (±5 minutes)?

In addition, the following GP judgment of overall CDT accuracy was included:

CDT-5. Was the clock, including ‘time in numbers’, perfect?
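CDT-4 asks whether the time shown by the drawn hands matches the time the patient wrote in numbers, within ±5 minutes. The comparison can be sketched in Python as below; this is an illustration, not part of the BrainCheck scoring instructions. It assumes the rater has already read the drawn hands as an hour and a minute, treats a written 24-hour time such as 15:12 as equivalent to 3:12, and measures the gap around a 12-hour dial so that times on either side of 12 o'clock compare correctly. The function names are hypothetical.

```python
def dial_minutes(hour: int, minute: int) -> int:
    """Position of a time on a 12-hour clock face, in minutes past 12 o'clock."""
    return (hour % 12) * 60 + minute


def cdt4_times_match(drawn: tuple[int, int], written: tuple[int, int],
                     tolerance: int = 5) -> bool:
    """Hypothetical CDT-4 check: True if the drawn and written times differ by at most
    `tolerance` minutes on a 12-hour dial (written 15:12 is treated like 3:12)."""
    gap = abs(dial_minutes(*drawn) - dial_minutes(*written))
    gap = min(gap, 12 * 60 - gap)  # go the short way round the dial (11:58 vs 12:02)
    return gap <= tolerance


print(cdt4_times_match((3, 10), (15, 12)))  # True: 2 minutes apart
print(cdt4_times_match((11, 58), (0, 2)))   # True: 4 minutes apart across 12 o'clock
print(cdt4_times_match((3, 10), (3, 20)))   # False: 10 minutes apart
```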

Informant-directed instrument

The very short and validated seven-item version of the IQCODE was selected as the informant-directed instrument [33]. Briefly, this tool requires informants to rate patients’ current cognitive abilities compared with 2 years earlier. Judgments are rated on a five-point scale from ‘much improved’ (1) to ‘much worse’ (5), with (3) representing ‘no change’. Informants are typically provided with the instructions and questions, and fill out the questionnaire on their own. The seven-item IQCODE includes the following items:

Remembering things about family and friends; for example, occupations, birthdays, addresses.

Remembering things that have happened recently.

Recalling conversations a few days later.

Remembering what day and month it is.

Remembering where to find things that have been put in a different place from usual.

Learning new things in general.

Handling financial matters; for example, pension, dealing with the bank.

The total score of the seven-item IQCODE is the mean of all items. A maximum of two missing answers was allowed, in which case the mean of the remaining items was used as the total score. This procedure was adopted to increase the generalizability of the present findings to the general practice setting, where informants do not always provide complete questionnaire data.
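The scoring rule above (mean of the answered items, with at most two missing) can be written as a small Python function. This is a sketch under our own conventions: missing answers are represented as `None`, ratings are assumed to be integers from 1 to 5, and the name `iqcode7_score` is hypothetical.

```python
from collections.abc import Sequence
from statistics import mean
from typing import Optional


def iqcode7_score(items: Sequence[Optional[int]], max_missing: int = 2) -> Optional[float]:
    """Mean of the seven IQCODE ratings (1 = much improved, 3 = no change, 5 = much worse).
    None marks a missing answer; returns None if more than `max_missing` answers are missing."""
    if len(items) != 7:
        raise ValueError("expected exactly seven item ratings")
    answered = [x for x in items if x is not None]
    if any(not 1 <= x <= 5 for x in answered):
        raise ValueError("ratings must lie between 1 and 5")
    if len(items) - len(answered) > max_missing:
        return None  # too incomplete to score
    return float(mean(answered))


print(iqcode7_score([4, 4, None, 4, 3, None, 4]))     # 3.8 (two missing, mean of five)
print(iqcode7_score([4, None, None, None, 3, 3, 3]))  # None (three missing)
```

Returning `None` for incomplete questionnaires mirrors the exclusion, described in the validation study, of informant data with three missing items.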

Feasibility study

Following the construction of the patient-directed tool, a feasibility study was performed to acquire GPs' judgments on the feasibility and acceptability of the patient-directed tool; that is, the instrument which the GP administers. The patient-directed data collected here were not the focus of this feasibility study and were not analyzed to ascertain the validity of BrainCheck (see Validation study). However, inter-rater reliability of CDT scoring between GPs and expert memory clinic raters was calculated to determine the quality of the CDT scoring instructions.

The ethics committees at each recruiting site approved the study (Ethikkommission beider Basel; Comité d’Ethique Réhabilitation et Gériatrie – Psychiatrie, Hôpitaux Universitaires de Genève; Kantonale Ethikkommission Bern; Commission d’Ethique de la Recherche Clinique, Lausanne; Ethikkommission des Kantons St. Gallen; Ethikkommission der beiden Stadtspitäler Triemli und Waid, Zurich), and all participants provided informed consent.

Methods

Participants

Fifty-two GPs from the Basel and Lausanne regions of Switzerland agreed to assess between one and five consecutive patients fulfilling the following inclusion criteria: suspected cognitive problems (that is, reported by patient or informant or suspected by the GP), age ≥50 years, education ≥7 years, and fluent native speakers of German or French. Patients with severe auditory or visual impairments who could not complete the patient-directed instrument were excluded. In total, 184 patients were tested (60% women) with a mean age (± standard deviation) of 77.3 ± 9.1 years and a mean education of 11.5 ± 2.9 years.

Procedure

GPs administered the patient-directed tool according to a clinical record form. Following every administration of the patient-directed tool, GPs completed a questionnaire on the feasibility and acceptability of the administration, including judgments of wording and format and of patients’ acceptance and understanding of the test. Questions were rated on a five-point scale: ‘not at all’ (1), ‘a little’ (2), ‘moderately’ (3), ‘quite a bit’ (4), ‘extremely’ (5). GPs were remunerated 50 CHF for each completed patient-directed tool questionnaire. Finally, GPs were invited to provide global feedback on the feasibility and acceptability of the patient-directed tool overall on a five-point scale: ‘strongly disagree’ (1), ‘disagree’ (2), ‘neither agree nor disagree’ (3), ‘agree’ (4), ‘strongly agree’ (5).

Statistical analyses

GPs’ judgments on the feasibility and acceptability of the patient-directed instrument were summarized descriptively. The IRRs of CDT scoring between the GPs and expert memory clinic raters were assessed in exploratory analyses.

Results

Fifty-two GPs administered the patient-directed tool to 184 patients in response to memory complaints by the patient (65.8%) and/or by the informant (41.3%) and/or based on the GP’s suspicion of cognitive problems (42.9%). Each of these GPs rated the feasibility and acceptability of the instrument following every test administration.

These GPs judged the patient-directed questions to be well accepted (4.63 ± 0.73) and understood (4.41 ± 0.83) by the patients, and the CDT to be acceptable to patients (4.57 ± 0.74); values are mean ± standard deviation on the scale ‘not at all’ (1), ‘a little’ (2), ‘moderately’ (3), ‘quite a bit’ (4), ‘extremely’ (5).

Forty-nine of the 52 GPs additionally provided global feedback on the tool. They were satisfied with the patient-directed instrument (3.94 ± 0.83) (rated ‘strongly disagree’ (1), ‘disagree’ (2), ‘neither agree nor disagree’ (3), ‘agree’ (4), ‘strongly agree’ (5)). They considered the tool to be helpful (3.80 ± 0.91), with clearly worded questions (4.59 ± 0.67), in a format suited to the answers patients provide (4.25 ± 0.76) and economically suited to the healthcare system (4.41 ± 0.91). Of these physicians, 81.6% stated that they would use the tool if it was shown to be reliable and valid (4.02 ± 1.16).

The CDT scoring IRR between the GPs and expert raters in the feasibility study was κ = 0.50 to 0.69 for the five CDT criteria (all P <0.0001), indicating moderate agreement (CDT-1, κ = 0.69; CDT-2, κ = 0.50; CDT-3, κ = 0.59; CDT-4, κ = 0.53; CDT-5, κ = 0.50). Based on these results and verbal feedback from GPs in the feasibility study, refined CDT scoring instructions were created for the final version of BrainCheck. These revised instructions included a list of perfect-clock criteria and additional guidance for GPs on test administration and scoring.
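The κ values above are presumably Cohen's kappa (the text does not name the variant or the software), which corrects the observed proportion of agreement p_o for the agreement p_e expected by chance: κ = (p_o − p_e)/(1 − p_e). A minimal Python sketch for one yes/no CDT criterion follows; the ratings are invented for illustration, and treating the statistic as unweighted Cohen's kappa is our assumption.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters who scored the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # observed agreement: share of items given the same category by both raters
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # chance agreement: product of the raters' marginal proportions, summed over categories
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (p_o - p_e) / (1 - p_e)


# Invented yes(1)/no(0) scores for one CDT criterion on ten clocks
gp     = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
expert = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]
print(round(cohens_kappa(gp, expert), 2))  # 0.6
```

Here the raters agree on 8 of 10 clocks (p_o = 0.8), but with balanced yes/no marginals half of that agreement is expected by chance (p_e = 0.5), so κ falls to 0.6.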

Validation study

Methods

The validation study aimed to determine the optimal score criteria for the patient-directed instrument alone (for patients with no informant) and the combined BrainCheck instrument, and their corresponding CCRs for cognitively healthy participants versus patients with cognitive symptoms. Ethics committees at all study sites (see Feasibility study) approved this study, and all participants provided informed consent.

Participants

All patients who participated in the validation study had been referred by their GPs to the memory clinics in Basel, Geneva, Berne, Lausanne, St. Gallen and Zurich. This patient sample was independent of the patients who participated in the feasibility study. All patient-directed data for the validation study were collected at the respective memory clinic. All patients received a comprehensive dementia work-up at the participating site that included comprehensive neuropsychological testing, an interview with the informant, an internal medical and neurological examination, psychopathological status and magnetic resonance brain imaging, followed by an interdisciplinary diagnosis conference. All diagnosing clinicians were blind to patients’ BrainCheck results, in accordance with the STARD initiative [39]. MCI was diagnosed according to the criteria of Winblad and colleagues [40]: deficits (demographically adjusted z score < −1.28) in one or more cognitive domains (that is, attention, executive functioning, memory, language, visuospatial functioning, praxis and gnosis) that represent a decline from an earlier level of cognitive functioning but are not severe enough to fulfill Diagnostic and Statistical Manual of Mental Disorders, 4th edition (DSM-IV) criteria for dementia (that is, intact activities of daily living as reported by next of kin). Dementia and major depression were diagnosed according to the DSM-IV criteria. The inclusion criteria for patients were: complete results from all aforementioned examinations; a diagnosis of MCI [40], dementia (DSM-IV [41]) or major depression (DSM-IV [41]); age ≥50 years; MMSE [17] score ≥20/30; at least 7 years of education; and fluent German or French. The exclusion criterion was the presence of sensory deficits that prohibited administration of BrainCheck.
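The psychometric part of the MCI criterion, a demographically adjusted z score below −1.28 in at least one domain, can be sketched in Python as follows. The normative mean and SD are hypothetical placeholders, and the sketch deliberately omits the clinical parts of the diagnosis (decline from an earlier level, intact activities of daily living). A threshold of −1.28 corresponds to roughly the 10th percentile of a normal distribution, which the last line checks with `statistics.NormalDist`.

```python
from statistics import NormalDist

MCI_Z_CUTOFF = -1.28  # demographically adjusted z score threshold named in the text


def adjusted_z(raw: float, norm_mean: float, norm_sd: float) -> float:
    """z score of a raw test score against (hypothetical) demographically matched norms."""
    return (raw - norm_mean) / norm_sd


def impaired_domains(z_by_domain: dict[str, float], cutoff: float = MCI_Z_CUTOFF) -> list[str]:
    """Cognitive domains whose adjusted z score lies strictly below the cutoff."""
    return [domain for domain, z in z_by_domain.items() if z < cutoff]


# Invented example: memory score 18 against a normative mean of 24 (SD 4)
z_scores = {"memory": adjusted_z(18, 24, 4), "attention": -0.4, "language": -1.28}
print(impaired_domains(z_scores))                 # ['memory']
print(round(NormalDist().cdf(MCI_Z_CUTOFF), 3))   # 0.1
```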

Patient-directed data were gathered from 126 healthy older participants of an ongoing longitudinal study at the Memory Clinic in Basel; that is, the Basel Study on the Elderly [36,42]. These individuals had been examined with comprehensive neuropsychological testing and a thorough medical questionnaire. All individuals who fulfilled the following inclusion criteria were included in the present analyses: cognitively, neurologically and psychiatrically healthy; no high fever lasting longer than 1 week within the last week; no chronic pain; no full anesthesia within the last 3 months; fluent German language proficiency; and age and education levels comparable with those of the patient sample (see Table 1, entire sample).

Table 1.

Demographic characteristics of healthy individuals and patients in the validation study

| | NC | Mild cognitive impairment | Dementia | Major depression | P value, NC vs. patients |
|---|---|---|---|---|---|
| Entire sample | | | | | |
| n | 126 | 80 | 185 | 23 | |
| Female | 50 (40) | 44 (55) | 99 (54) | 12 (52) | 0.008ᵃ |
| Age (years) | 75.2 ± 8.8 | 74.9 ± 8.1 | 78.5 ± 6.5 | 67.4 ± 9.6 | n.s.ᵇ |
| Education (years) | 12.5 ± 2.7 | 12.2 ± 2.5 | 11.9 ± 2.7 | 12.4 ± 2.7 | n.s.ᵇ |
| MMSE | 28.9 ± 1.2 | 27.1 ± 2.2 | 24.2 ± 2.5 | 27.9 ± 1.8 | 0.001ᵇ |
| BrainCheck subsample | | | | | |
| n | 70 | 21 | 86 | 6 | |
| Female | 20 (29) | 12 (57) | 44 (51) | 4 (67) | 0.001ᵃ |
| Age (years) | 77.2 ± 8.9 | 75.3 ± 7.5 | 78.4 ± 6.3 | 68.2 ± 11.4 | n.s.ᵇ |
| Education (years) | 12.5 ± 2.9 | 11.6 ± 2.1 | 11.7 ± 2.6 | 11.2 ± 1.9 | n.s.ᵇ |
| MMSE | 28.6 ± 1.2 | 27.5 ± 1.8 | 24.4 ± 2.5 | 27.0 ± 1.9 | 0.001ᵇ |

Data presented as n (%) or mean ± standard deviation. MMSE, Mini-Mental State Examination; NC, healthy individuals; n.s., not significant. ᵃChi-square test. ᵇt test.

Seven-item IQCODE data were available from native German-speaking subgroups of 70 normal control participants and 115 patients. IQCODE data for two AD patients were excluded because of three missing items, resulting in 113 patients and 70 controls with comparable age and education levels (see Table 1, BrainCheck subsample).

Procedure

At each memory clinic, the patient-directed instrument was administered prior to the clinical neuropsychological battery by trained clinicians who neither conducted the clinical neuropsychological examination nor took part in any interdisciplinary diagnosis conference. Administration was standardized between memory clinics and conducted according to the Swiss consensus guidelines [43]. The 16-item German IQCODE was administered to informants in the BrainCheck subsample according to standardized procedures [44], and data from the seven-item form (that is, mean score [33]) were used in the present analyses.

Statistical analyses

The IRRs of CDT scoring between clinicians at the six memory clinics and expert raters at the Basel memory clinic were assessed in exploratory analyses.

For the following analyses, please note that the Memory Clinic diagnosis of healthy control or patient represented the criterion or gold standard against which the BrainCheck data were evaluated.

A classification and regression tree (CART) analysis was conducted to separate healthy controls from patients based on the patient-directed data. In its first step, CART identifies the variable that best discriminates between two groups, here between patients and control participants. If this variable is categorical, each value produces a branch of the tree. For each branch, the variable that best discriminates the subset of participants on that branch is determined, generating additional branches (for categorical variables), and so on. This process continues until improvements in fit fall below an a priori complexity parameter of 1%. The resulting decision rule proved to be stable in 10,000 bootstrap samples. Both the CART and bootstrapping analyses were performed in R software [45] using the R package rpart. Based on the patient-directed instrument data alone, the resulting algorithm yields one of two case-finding results: further evaluation (based on the probability that score X belongs to the present Memory Clinic patient distribution) or watchful waiting (based on the probability that score X belongs to the present healthy control distribution).
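To make the split-selection step concrete, here is a minimal Python sketch that, for yes/no items, picks the item whose split most reduces Gini impurity (the default splitting criterion for classification trees in rpart). It is not rpart itself: it performs a single split only, and it omits rpart's cost-complexity stopping rule (the `cp` parameter, here 0.01) and any pruning. The item names and data are invented for illustration; in the full procedure the function would be applied recursively to each branch.

```python
from collections.abc import Sequence


def gini(labels: Sequence[int]) -> float:
    """Gini impurity of 0/1 labels (1 = patient, 0 = healthy control)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_binary_split(rows: Sequence[dict[str, bool]], labels: Sequence[int]) -> tuple[str, float]:
    """Return the yes/no item whose split gives the largest drop in size-weighted Gini impurity."""
    n = len(labels)
    parent = gini(labels)
    best_item, best_gain = "", float("-inf")
    for item in rows[0]:
        yes = [y for row, y in zip(rows, labels) if row[item]]
        no = [y for row, y in zip(rows, labels) if not row[item]]
        children = (len(yes) * gini(yes) + len(no) * gini(no)) / n
        if parent - children > best_gain:
            best_item, best_gain = item, parent - children
    return best_item, best_gain


# Invented data: 4 patients followed by 4 controls
labels = [1, 1, 1, 1, 0, 0, 0, 0]
perfect_clock = [False, False, False, True, True, True, True, False]
q1 = [True, False, True, False, True, False, False, True]
rows = [{"CDT5_perfect": c, "Q1": q} for c, q in zip(perfect_clock, q1)]
print(best_binary_split(rows, labels))  # ('CDT5_perfect', 0.125)
```

In this toy sample the perfect-clock item separates the groups better than Q1, whose split leaves both branches as mixed as the full sample (gain 0).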

We attempted to model the combined patient-directed and informant-directed data using two independent approaches: traditional logistic regression and the CART approach described above. Both approaches failed to model the combined data adequately, most probably because of higher-order interactions in the data that would require highly parameterized models. A decision algorithm was therefore developed by generating a contingency table of the patient-directed predictor variables identified by the CART analysis and the group variable (healthy participant vs. patient). Homogeneous subgroups consisting of either a high or a low proportion of patients were identified, corresponding to a preliminary classification. This preliminary classification was extended to include informant data from the seven-item IQCODE by determining a separate optimal seven-item IQCODE mean cutoff score for each homogeneous subgroup via receiver operating characteristic (ROC) curve analyses modeling optimal CCRs. The resulting decision rules were evaluated in 10,000 bootstrap replicates [46] to estimate the variability of their sensitivities and specificities.
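The per-subgroup cutoff search can be sketched as follows. The text says only that ROC analyses were used to model optimal CCRs; this Python sketch assumes the criterion is the mean of sensitivity and specificity (which has the same maximizer as Youden's index), tries each observed IQCODE mean as a candidate cutoff, and applies the rule from the Results that scores exceeding the cutoff indicate further evaluation. The data and function name are invented.

```python
from collections.abc import Sequence


def optimal_cutoff(scores: Sequence[float], labels: Sequence[int]) -> tuple[float, float]:
    """Try each observed score as cutoff (score > cutoff -> 'further evaluation') and return
    the cutoff with the highest (sensitivity + specificity) / 2, together with that value."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("the subgroup must contain both patients and controls")
    best_cut, best_value = float("nan"), -1.0
    for cut in sorted(set(scores)):
        sens = sum(s > cut for s, y in zip(scores, labels) if y == 1) / n_pos
        spec = sum(s <= cut for s, y in zip(scores, labels) if y == 0) / n_neg
        if (sens + spec) / 2 > best_value:
            best_cut, best_value = cut, (sens + spec) / 2
    return best_cut, best_value


# Invented seven-item IQCODE means for one homogeneous subgroup (1 = patient)
scores = [3.0, 3.14, 3.29, 3.43, 3.57, 3.86, 3.0, 3.14]
labels = [0, 0, 0, 1, 1, 1, 0, 1]
print(optimal_cutoff(scores, labels))  # (3.29, 0.875)
```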

Results

CDT scores of memory clinic clinicians who regularly perform CDTs were comparable with those of the expert raters (IRR κ = 0.69 to 0.90; P <0.0001) (CDT-1, κ = 0.90; CDT-2, κ = 0.85; CDT-3, κ = 0.69; CDT-4, κ = 0.78; CDT-5, κ = 0.75).

A CART analysis was applied to the five questions (Q1 to Q5) and five CDT scoring criteria (CDT-1 to CDT-5) of the patient-directed tool to derive an algorithm that classified the maximum proportion of healthy participants into the watchful waiting group and the maximum proportion of patients into the further evaluation group. Individual item scores, rather than mean scores on Q1 to Q5 and the CDT, were analyzed to determine which items contributed most to diagnostic discriminability. This procedure allowed us to drop weaker items, thereby maximizing BrainCheck’s efficiency and diagnostic accuracy.

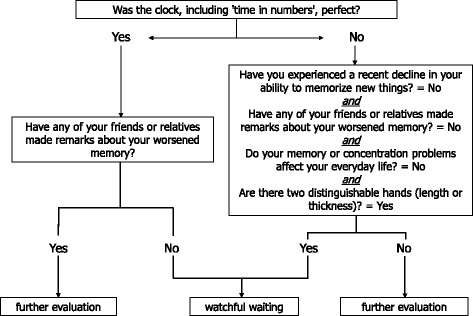

The CART selected four questions (Q1 to Q3, Q5) and two CDT scoring criteria (CDT-3, CDT-5) for the final model. CDT-5 (perfect CDT) occupies the top of the classification tree, reflecting the largest weight in discriminating healthy individuals from patients. A middle level of the tree is occupied by the subjective questions on memory functioning (Q1 to Q3) and CDT-3 (‘two distinguishable clock hands?’). The final level of the tree is occupied by Q5 on loss of interest (‘little interest or pleasure in doing things?’). Because Q5 added a further level of complexity to the classification algorithm, CCRs were calculated for classification algorithms with and without Q5 to determine whether complexity could be reduced without sacrificing CCR. The CCRs with and without Q5 were comparable (difference: 1.3%), so Q5 was eliminated. The final algorithm included the three memory-related questions and two CDT items (see Figure 1). Applied to the entire sample, this algorithm yielded a high sensitivity (83.0%) and specificity (79.4%), with an overall CCR of 81.2%.

Figure 1.

Decision algorithm for the patient-directed tool. Sensitivity = 83.0%, specificity = 79.4%, correct classification rate = 81.2%.
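The reported CCRs appear to be the unweighted mean of sensitivity and specificity (sometimes called balanced accuracy) rather than the proportion of all participants classified correctly. This is our reading of the numbers, not a definition given in the text: the Python check below reproduces the 81.2% from sensitivity 83.0% and specificity 79.4%, whereas pooling over the 288 patients and 126 controls of the entire sample would give about 81.9%. The same reading reproduces the 89.35% reported for BrainCheck from 97.3% and 81.4%.

```python
def balanced_ccr(sensitivity: float, specificity: float) -> float:
    """Unweighted mean of sensitivity and specificity (balanced accuracy), in percent."""
    return (sensitivity + specificity) / 2


def pooled_ccr(sensitivity: float, specificity: float, n_patients: int, n_controls: int) -> float:
    """Share of all participants classified correctly, in percent."""
    return (sensitivity * n_patients + specificity * n_controls) / (n_patients + n_controls)


# Patient-directed algorithm, entire validation sample: 288 patients, 126 controls
print(round(balanced_ccr(83.0, 79.4), 1))          # 81.2 (the reported CCR)
print(round(pooled_ccr(83.0, 79.4, 288, 126), 1))  # 81.9
print(round(balanced_ccr(97.3, 81.4), 2))          # 89.35 (BrainCheck, as reported)
```

The distinction matters when group sizes differ: the balanced version gives patients and controls equal weight regardless of how many of each were sampled.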

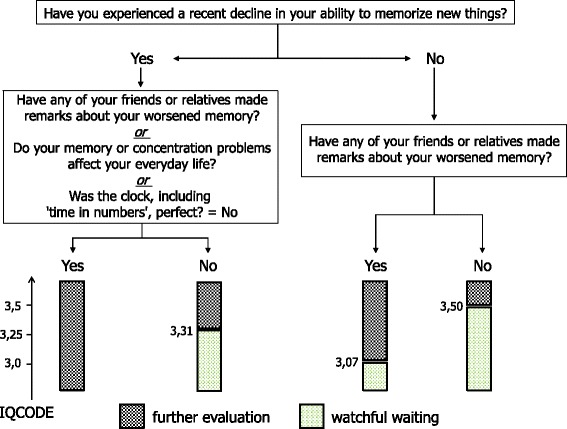

The combined patient-directed and informant-directed data were analyzed next (see Methods). The mean seven-item IQCODE score was used, allowing one missing item (17 patients, eight control participants) or at most two missing items (three patients, zero control participants) (percentage of complete datasets: 83% for patients, 89% for control participants). A six-way contingency table of the five patient-directed variables identified by the CART analysis and the group variable (healthy participant vs. patient) showed that CDT-3 had no effect on the categorization of the BrainCheck sample into healthy individuals versus patients. CDT-3 was therefore removed, and a second, five-way contingency table was generated with the four remaining patient-directed variables and the group variable. This table revealed four homogeneous subgroups, corresponding to a preliminary classification. Receiver operating characteristic curve analyses for each homogeneous subgroup determined the IQCODE cutoff score (IQCODE_cut) that optimally categorized individuals. Thus, depending on the answers to the patient-directed items, different seven-item IQCODE cutoff scores were used to decide whether watchful waiting or further evaluation was indicated, with values exceeding the cutoff indicating further evaluation (see Figure 2). Logically, further evaluation is indicated whenever scores on both the patient-directed and the informant-directed instruments are deficient. Because this combination never occurred in the present sample, it could not emerge from the data-driven analysis; we therefore added this rule on logical grounds. The final BrainCheck decision rules are thus as follows:

If patient-directed instrument = not normal and IQCODE = not normal (that is, IQCODE mean ≥3.29), then BrainCheck = ‘further evaluation indicated’.

If Q1 = no & Q2 = yes, then use IQCODE_cut = 3.07.

If Q1 = no & Q2 = no, then use IQCODE_cut = 3.50.

If Q1 = yes & Q2 = no & Q3 = no & CDT-5 = yes, then use IQCODE_cut = 3.31.

If Q1 = yes & not (Q2 = no & Q3 = no & CDT-5 = yes), then ‘further evaluation indicated’.

Figure 2.

Patient-directed and informant-directed case-finding (BrainCheck) decision algorithm. Sensitivity = 97.4%, specificity = 81.6%, correct classification rate = 89.4%. IQCODE, Informant Questionnaire on Cognitive Decline in the Elderly.
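The decision rules above can be expressed as one Python function. This sketch is our transcription, not software distributed with BrainCheck. Two points are assumptions: the result of the patient-directed algorithm (Figure 1), whose full tree is not reproduced in the text, is passed in as the hypothetical flag `patient_tool_abnormal`; and, following the text, the subgroup cutoffs use a strict "exceeds" comparison while the added first rule uses ≥ 3.29. Handling of a missing IQCODE score is not covered.

```python
FURTHER = "further evaluation indicated"
WAIT = "watchful waiting"


def braincheck(q1: bool, q2: bool, q3: bool, cdt5_perfect: bool,
               iqcode_mean: float, patient_tool_abnormal: bool) -> str:
    """Transcription of the BrainCheck rules; q1-q3 are yes(True)/no(False) answers,
    cdt5_perfect is CDT-5, and patient_tool_abnormal is the (hypothetical) Figure 1 result."""
    # Rule added on logical grounds: both instruments deficient
    if patient_tool_abnormal and iqcode_mean >= 3.29:
        return FURTHER
    if q1:
        if not q2 and not q3 and cdt5_perfect:
            cutoff = 3.31
        else:
            return FURTHER  # Q1 = yes and not (Q2 = no & Q3 = no & CDT-5 = yes)
    else:
        cutoff = 3.07 if q2 else 3.50  # Q1 = no: the cutoff depends on Q2
    # Subgroup rule: IQCODE means exceeding the cutoff indicate further evaluation
    return FURTHER if iqcode_mean > cutoff else WAIT


print(braincheck(False, True, False, True, 3.14, False))   # further evaluation indicated
print(braincheck(False, False, False, True, 3.14, False))  # watchful waiting
```

The same informant score of 3.14 leads to different recommendations because a relative's remark about worsened memory (Q2 = yes) lowers the IQCODE cutoff from 3.50 to 3.07.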

The application of these rules to the BrainCheck sample resulted in a sensitivity of 97.3%, a specificity of 81.4%, and a CCR of 89.35%. Across 10,000 bootstrap replicates, classification performance had a mean sensitivity of 97.4% (interquartile range: 96.4 to 98.3%), a mean specificity of 81.6% (interquartile range: 78.4 to 84.6%) and a mean CCR of 89.4% (see Table 2).
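The bootstrap evaluation can be sketched as resampling participants with replacement, re-applying a fixed decision rule to each replicate, and summarizing the replicate sensitivities and specificities by their mean and 25th and 75th percentiles. The text does not say whether cutoffs were re-estimated within each replicate or whether resampling was stratified by group; this Python sketch keeps the rule fixed and resamples unstratified, so treat both as assumptions. `classify` stands for any rule returning 1 for "further evaluation" and 0 for "watchful waiting".

```python
import random
from collections.abc import Sequence
from statistics import mean, quantiles
from typing import Callable


def bootstrap_sens_spec(records: Sequence[tuple[dict, int]], classify: Callable[[dict], int],
                        n_boot: int = 10_000, seed: int = 1) -> dict[str, tuple[float, float, float]]:
    """Resample participants with replacement and re-apply a fixed rule; return
    (mean, 25th percentile, 75th percentile) of sensitivity and specificity in percent."""
    rng = random.Random(seed)
    sens, spec = [], []
    for _ in range(n_boot):
        sample = rng.choices(records, k=len(records))
        on_patients = [classify(x) for x, y in sample if y == 1]
        on_controls = [classify(x) for x, y in sample if y == 0]
        if not on_patients or not on_controls:
            continue  # skip rare replicates that lack one of the two groups
        sens.append(100 * sum(on_patients) / len(on_patients))
        spec.append(100 * (len(on_controls) - sum(on_controls)) / len(on_controls))
    summary = {}
    for name, values in (("sensitivity", sens), ("specificity", spec)):
        q1, _, q3 = quantiles(values, n=4)
        summary[name] = (mean(values), q1, q3)
    return summary


# Invented example: a rule that flags 9 of 10 patients and 2 of 10 controls
records = [({"flag": 1}, 1)] * 9 + [({"flag": 0}, 1)] + [({"flag": 0}, 0)] * 8 + [({"flag": 1}, 0)] * 2
result = bootstrap_sens_spec(records, classify=lambda x: x["flag"], n_boot=2_000)
print(result)
```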

Table 2.

Diagnostic discriminability of the individual and combined (BrainCheck) patient-directed and informant-directed screening instruments in the BrainCheck subsample

| Instrument | Sensitivity (%) | Specificity (%) | CCR (%) |
|---|---|---|---|
| Patient-directed instrument | 85.8 | 74.3 | 79.9 |
| Informant-directed instrument | 81.4 | 75.7 | 78.6 |
| BrainCheck | 97.4 | 81.6 | 89.4 |

CCR, correct classification rate.

Discussion

The present study developed and validated BrainCheck, a new, very short (that is, about 3 minutes) case-finding tool for cognitive disturbances that combines direct cognitive testing, patients’ subjective impressions of their cognitive functioning, and informant information. The feasibility study showed that GPs judged the administration of the patient-directed instrument to be feasible (that is, time efficient) and acceptable. BrainCheck’s CCR reached nearly 90% in the validation study. The items with the greatest discriminatory power were questions on subjective (patient) and observed (informant) memory and executive functioning and the results of cognitive testing (that is, the CDT). We therefore recommend BrainCheck as a case-finding tool, because it meets the challenge of combining patient-directed cognitive testing, informant information on patient functioning and patient information on subjective cognitive impairments [6], and provides a high CCR. Because informants may not always be available to provide information on patients, we additionally determined the optimal scoring criteria and diagnostic accuracy of the patient-directed instrument alone. These analyses were performed on the largest dataset at our disposal in order to provide GPs with the most robust and reliable findings, and they revealed that the patient-directed instrument correctly classified 81% of the entire validation sample, suggesting that it can be administered on its own when no informant is available. Critically, the patients in both validation samples were very mildly impaired (that is, MMSE = 25.3 ± 2.8, entire sample; MMSE = 25.1 ± 2.6, BrainCheck subsample), suggesting that this case-finding tool can detect subtle cognitive impairments in a primary care setting.
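The patient MMSE summaries quoted above can be recomputed from the group values in Table 1 by weighting each group mean by its size and combining within-group and between-group variation for the SD. The Python sketch below reproduces 25.3 ± 2.8 for the entire patient sample and a mean of 25.1 for the BrainCheck subsample; because the tabulated group values are rounded, a recomputed SD can differ slightly from the reported one. The function name is ours.

```python
from math import sqrt


def pooled_mean_sd(groups: list[tuple[int, float, float]]) -> tuple[float, float]:
    """Combine (n, mean, SD) group summaries into the overall mean and sample SD."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    # total sum of squares = within-group part + between-group part
    ss = sum((n - 1) * sd ** 2 + n * (m - grand_mean) ** 2 for n, m, sd in groups)
    return grand_mean, sqrt(ss / (n_total - 1))


# MMSE (n, mean, SD) of the MCI, dementia and depression groups from Table 1
entire = [(80, 27.1, 2.2), (185, 24.2, 2.5), (23, 27.9, 1.8)]
subsample = [(21, 27.5, 1.8), (86, 24.4, 2.5), (6, 27.0, 1.9)]
m, sd = pooled_mean_sd(entire)
print(f"entire sample: {m:.1f} ± {sd:.1f}")                   # entire sample: 25.3 ± 2.8
print(f"subsample mean: {pooled_mean_sd(subsample)[0]:.1f}")  # subsample mean: 25.1
```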

Cordell and colleagues recommended combining patient-directed and informant-directed case-finding questions at older individuals’ annual wellness visits when signs or symptoms of cognitive dysfunction are present [6]. Moreover, the new research diagnostic criteria for MCI recommend querying informants about patients’ cognitive functioning and the extent to which it has declined [47]. Likewise, the research criteria for probable AD recommend obtaining informant information on patients’ cognitive functioning [48]. Informant information is critical because family members may be better placed than patients to judge changes in cognitive functioning in syndromes associated with an early lack of awareness, such as AD [30]. Moreover, informant-based case-finding tools are less confrontational for patients than direct cognitive testing.

The CDT was adopted because it is very brief and easy to administer, and because it has acceptable sensitivity and specificity in differentiating healthy participants from those with cognitive impairments [14]. However, IRR analyses showed poor correspondence between GP and expert ratings, in line with previous findings [49]. In response, we developed detailed scoring criteria for use in the GP setting. Two CDT variables were among the best discriminators between healthy participants and patients based on patient-directed data alone: whether two distinguishable hands are present, and whether the clock is perfect. Only the ‘perfect clock’ variable survived in the BrainCheck algorithm. Similarly, Scanlan and colleagues recommended that untrained examiners simply rate the CDT as normal or abnormal when classifying individuals as healthy or demented [50] (see also [13]). We note that 32% of healthy participants in the patient-directed sample drew imperfect clocks. It is therefore advisable to combine the CDT with other case-finding instruments for cognitive impairment.
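The section reports poor IRR between GP and expert CDT ratings but does not name the statistic used. For a binary judgement such as ‘is the clock perfect?’, one common chance-corrected agreement measure is Cohen's kappa, κ = (p_o − p_e) / (1 − p_e). Here p_o is the observed agreement and p_e is the agreement expected by chance from each rater's marginal rates. The Python sketch below computes it. Three elements are assumptions for illustration, not the study's method or data: the choice of kappa, the function name `cohens_kappa`, and the example ratings.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[bool], rater_b: Sequence[bool]) -> float:
    """Cohen's kappa for two raters who scored the same drawings on a yes/no item."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of drawings on which both raters gave the same rating.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each category, product of the two raters' marginal rates.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum((counts_a[c] / n) * (counts_b[c] / n) for c in (True, False))
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (p_observed - p_chance) / (1.0 - p_chance)


if __name__ == "__main__":
    # Hypothetical 'is the clock perfect?' ratings for eight drawings (not study data).
    gp = [True, True, False, False, True, False, True, False]
    expert = [True, False, False, False, True, True, True, False]
    print(f"kappa = {cohens_kappa(gp, expert):.2f}")
```

In the hypothetical example, the raters agree on 6 of 8 drawings (p_o = 0.75). Each rater marks half of the drawings as perfect (p_e = 0.5), so κ = 0.5. Raw agreement alone would overstate consistency.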

Case-finding tools similar to the patient-directed and informant-directed components of BrainCheck exist (see Introduction and [4]). However, to our knowledge no published tool combines the three sources of information deemed critical for optimal case-finding into a single, very short tool [6]: cognitive testing of the patient, informant information and patients’ subjective impressions of their cognitive functioning. Perhaps the most similar tool in content and length is the GPCOG, which combines patient-directed cognitive testing with six informant questions [28]. In a validation study, Brodaty and colleagues examined 283 community-dwelling individuals who complained of memory impairment [28]. The GPCOG was administered by local GPs, and memory clinic diagnoses served as the diagnostic gold standard. In this sample, the patient-directed GPCOG had a sensitivity of 82% and a specificity of 70%, compared with 89% sensitivity and 66% specificity for the informant-directed GPCOG. The patterns of sensitivity and specificity of the GPCOG and of the BrainCheck patient-directed and informant-directed tools were thus similar. In both, sensitivity exceeded specificity, and overall diagnostic accuracy was comparable across the two sources of data. The higher diagnostic accuracy of BrainCheck compared with the GPCOG most probably reflects the nature of the present sample. Compared with community-dwelling residents, memory clinic patients are more likely to receive a positive diagnosis, and healthy individuals participating in research studies are more likely to receive a negative diagnosis. As the MMSE was used to select patients for the present validation study, a direct comparison of MMSE and BrainCheck sensitivity and specificity in the present sample is not warranted. Both the GPCOG and BrainCheck are thus brief, encompass multiple domains and demonstrate good diagnostic accuracy. That BrainCheck, but not the GPCOG, demonstrated superior diagnostic accuracy compared with the MMSE may be explained by two factors. First, MMSE administration methods varied more across GP practices in Brodaty and colleagues’ study [28]. Second, BrainCheck includes information on the patient’s subjective cognitive functioning.

There are three caveats to the present study. First, as noted above, the BrainCheck CCR may be inflated to an unknown degree. Patients referred to a memory clinic probably represent a subset of primary care patients with more pronounced cognitive impairment, and the optimally healthy participants in the validation study are those least likely to be screened by GPs. Second, as for any validation study, external validation, particularly in the GP setting, is required to confirm the performance of the patient-directed instrument alone and of BrainCheck. Correct classification rates from studies in the GP setting are generally lower than those obtained from memory clinic samples. Finally, our validation sample included too few patients with depression to reliably test the validity of the case-finding questions about this disorder.

Conclusions

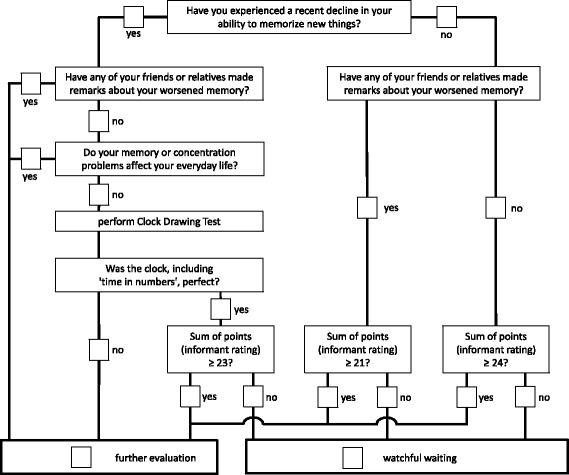

There are several advantages to the new BrainCheck case-finding tool. First, it contains no formal episodic memory task, which would be time-consuming and frequently frustrates patients and possibly GPs. Second, the patient-directed instrument can be administered in a very short time (that is, about 3 minutes). Third, the seven-item IQCODE may be administered by a nurse, the physician’s assistant or other trained professionals, saving the GP additional time. We provide decision algorithms that enable GPs to determine whether further evaluation or watchful waiting (that is, repeated screening in 6 to 12 months) is indicated. When a patient with suspected cognitive symptoms scores within the range of the present Memory Clinic patient sample, the algorithm recommends further evaluation, since an underlying syndrome is more probable. When the patient scores within the range of the present control sample, the algorithm recommends watchful waiting; that is, longitudinal observation of the suspected cognitive symptoms. Lastly, because the BrainCheck decision algorithm is complex, we developed an easy-to-use app for the iPhone and iPad (an Android app is under development) that automatically calculates the BrainCheck results. The app is available to GPs and other healthcare providers for a one-time nominal charge. We also offer a paper-and-pencil version of BrainCheck free of charge. This version has been modified to be optimally efficient, as it includes only those questions necessary to derive a decision (see Figure 3). In summary, BrainCheck meets the criteria for an efficient and effective routine case-finding tool for primary care patients with suspected cognitive symptoms: it is brief, simple to use, sensitive and specific.

Figure 3.

Simplified paper-and-pencil flowchart version of BrainCheck, modified for optimally efficient administration. In contrast to the decision algorithm (cf. Figures 1 and 2), this simplified version requires the IQCODE sum and can therefore only be used when complete IQCODE data are available (that is, no missing data). IQCODE, Informant Questionnaire on Cognitive Decline in the Elderly.
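The caption's constraint can be made explicit in code: the simplified flowchart needs the IQCODE sum, so every item must be answered. Below is a minimal Python sketch, assuming the standard IQCODE five-point rating (1 = much improved, 3 = not much change, 5 = much worse). The helper `iqcode_sum` and the constants are hypothetical and not part of the BrainCheck app. The cutoff (IQCODE_cut) is deliberately omitted because its value is not given in this section.

```python
from typing import Optional, Sequence

N_ITEMS = 7                  # seven-item short IQCODE used in BrainCheck
VALID_RATINGS = range(1, 6)  # assumed scale: 1 = much improved ... 5 = much worse


def iqcode_sum(ratings: Sequence[Optional[int]]) -> Optional[int]:
    """Return the IQCODE sum, or None if any item is missing.

    None means the simplified flowchart cannot be used; the full decision
    algorithm (Figures 1 and 2) would be needed instead.
    """
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item ratings, got {len(ratings)}")
    if any(r is None for r in ratings):
        return None
    for r in ratings:
        if r not in VALID_RATINGS:
            raise ValueError(f"rating {r!r} is outside the assumed 1-5 scale")
    return sum(ratings)


if __name__ == "__main__":
    print(iqcode_sum([3, 3, 4, 3, 3, 4, 3]))     # complete: the sum can be used
    print(iqcode_sum([3, 3, None, 3, 3, 4, 3]))  # missing item: returns None
```

The sketch returns None rather than prorating missing items. This keeps it faithful to the caption, which restricts the simplified version to complete IQCODE data.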

Acknowledgements

This work was supported by an unrestricted educational grant from Vifor Pharma, Switzerland. The funding source had no role in the design, conduct or analysis of this study or in the decision to submit the article for publication.

Peter Fuhr, MD, Prof., Katharina Henke, PhD, Prof., Stanley Hesse, MD, Alexander Kurz, MD, Prof., Valerio Rosinus, MD, Armin Schnider, MD, Prof. and Peter Tschudi, MD, Prof. provided valuable advice in the early stages of planning the present study during their tenure on national and international advisory boards.

Abbreviations

- AD: Alzheimer’s disease

- CART: classification and regression tree

- CCR: correct classification rate

- CDT: clock drawing test

- DSM-IV: Diagnostic and Statistical Manual of Mental Disorders, 4th edition

- GP: general practitioner

- GPCOG: General Practitioner Assessment of Cognition

- IQCODE: Informant Questionnaire on Cognitive Decline in the Elderly

- IQCODE_cut: cutoff score of the Informant Questionnaire on Cognitive Decline in the Elderly

- IRR: inter-rater reliability

- MCI: mild cognitive impairment

- MMSE: Mini-Mental State Examination

Footnotes

Competing interests

The authors declare that they have no competing interests. The authors note that they developed an iTunes app to facilitate implementation of BrainCheck in the primary care setting. Revenue from sales of this app is distributed among Apple, the app’s programmers and the Basel Memory Clinic. Should the initial investment be recouped, any proceeds to the Basel Memory Clinic will be used exclusively for nonprofit purposes.

Authors’ contributions

AUM, NSF, IB, GG, AvG, DI, RM, BR and RWK conceptualized the study and its design. Data were acquired by MD, IB, GG, AvG, DI, RM, BR and AUM. MME, AUM, KIT, MB, NSF and MD analyzed and interpreted the data. MME, AUM, KIT, MB and MD drafted the manuscript and all authors critically revised the manuscript for important intellectual content, and read and approved the final manuscript.

Contributor Information

Michael M Ehrensperger, Email: michael.ehrensperger@fps-basel.ch.

Kirsten I Taylor, Email: kirsten.taylor@fps-basel.ch.

Manfred Berres, Email: berres@rheinahrcampus.de.

Nancy S Foldi, Email: nancy.foldi@qc.cuny.edu.

Myriam Dellenbach, Email: myriam.dellenbach@medi.ch.

Irene Bopp, Email: irene.bopp@waid.zuerich.ch.

Gabriel Gold, Email: gabriel.gold@hcuge.ch.

Armin von Gunten, Email: armin.von-gunten@chuv.ch.

Daniel Inglin, Email: daniel.inglin@geriatrie-sg.ch.

René Müri, Email: rene.mueri@insel.ch.

Brigitte Rüegger, Email: brigitte.ruegger@waid.zuerich.ch.

Reto W Kressig, Email: reto.kressig@fps-basel.ch.

Andreas U Monsch, Email: andreas.monsch@fps-basel.ch.

References

- 1. Iliffe S, Robinson L, Brayne C, Goodman C, Rait G, Manthorpe J, Ashley P; DeNDRoN Primary Care Clinical Studies Group. Primary care and dementia: 1. diagnosis, screening and disclosure. Int J Geriatr Psychiatry. 2009;24:895–901. doi: 10.1002/gps.2204.

- 2. Boustani M, Peterson B, Hanson L, Harris R, Lohr KN; U.S. Preventive Services Task Force. Screening for dementia in primary care: a summary of the evidence for the U.S. Preventive Services Task Force. Ann Intern Med. 2003;138:927–937. doi: 10.7326/0003-4819-138-11-200306030-00015.

- 3. Canadian Task Force on the Periodic Health Examination. Canadian Guide to Clinical Preventive Health Care. Ottawa: Canada Communication Group; 1994.

- 4. Lin JS, O’Connor E, Rossom RC, Perdue LA, Eckstrom E. Screening for cognitive impairment in older adults: a systematic review for the U.S. Preventive Services Task Force. Ann Intern Med. 2013;159:601–612. doi: 10.7326/0003-4819-159-9-201311050-00730.

- 5. Petersen RC, Stevens JC, Ganguli M, Tangalos EG, Cummings JL, DeKosky ST. Practice parameter: early detection of dementia: mild cognitive impairment (an evidence-based review). Report of the Quality Standards Subcommittee of the American Academy of Neurology. Neurology. 2001;56:1133–1142. doi: 10.1212/WNL.56.9.1133.

- 6. Cordell CB, Borson S, Boustani M, Chodosh J, Reuben D, Verghese J, Thies W, Fried LB; Medicare Detection of Cognitive Impairment Workgroup. Alzheimer’s Association recommendations for operationalizing the detection of cognitive impairment during the Medicare annual wellness visit in a primary care setting. Alzheimers Dement J Alzheimers Assoc. 2013;9:141–150. doi: 10.1016/j.jalz.2012.09.011.

- 7. Geldmacher DS, Kerwin DR. Practical diagnosis and management of dementia due to Alzheimer’s disease in the primary care setting: an evidence-based approach. Prim Care Companion CNS Disord. 2013;15:PCC.12r01474. doi: 10.4088/PCC.12r01474.

- 8. Santacruz KS, Swagerty D. Early diagnosis of dementia. Am Fam Physician. 2001;63:703–713.

- 9. Stähelin HB, Monsch AU, Spiegel R. Early diagnosis of dementia via a two-step screening and diagnostic procedure. Int Psychogeriatr. 1997;9:123–130. doi: 10.1017/S1041610297004791.

- 10. Villars H, Oustric S, Andrieu S, Baeyens JP, Bernabei R, Brodaty H, Brummel-Smith K, Celafu C, Chappell N, Fitten J, Frisoni G, Froelich L, Guerin O, Gold G, Holmerova I, Iliffe S, Lukas A, Melis R, Morley JE, Nies H, Nourhashemi F, Petermans J, Ribera Casado J, Rubenstein L, Salva A, Sieber C, Sinclair A, Schindler R, Stephan E, Wong RY, Vellas B. The primary care physician and Alzheimer’s disease: an international position paper. J Nutr Health Aging. 2010;14:110–120. doi: 10.1007/s12603-010-0022-0.

- 11. Brodaty H, Low L-F, Gibson L, Burns K. What is the best dementia screening instrument for general practitioners to use? Am J Geriatr Psychiatry. 2006;14:391–400. doi: 10.1097/01.JGP.0000216181.20416.b2.

- 12. Cullen B, O’Neill B, Evans JJ, Coen RF, Lawlor BA. A review of screening tests for cognitive impairment. J Neurol Neurosurg Psychiatry. 2007;78:790–799. doi: 10.1136/jnnp.2006.095414.

- 13. Ismail Z, Rajji TK, Shulman KI. Brief cognitive screening instruments: an update. Int J Geriatr Psychiatry. 2010;25:111–120. doi: 10.1002/gps.2306.

- 14. Lorentz WJ, Scanlan JM, Borson S. Brief screening tests for dementia. Can J Psychiatry Rev Can Psychiatr. 2002;47:723–733. doi: 10.1177/070674370204700803.

- 15. Milne A, Culverwell A, Guss R, Tuppen J, Whelton R. Screening for dementia in primary care: a review of the use, efficacy and quality of measures. Int Psychogeriatr IPA. 2008;20:911–926. doi: 10.1017/S1041610208007394.

- 16. Woodford HJ, George J. Cognitive assessment in the elderly: a review of clinical methods. QJM Mon J Assoc Physicians. 2007;100:469–484. doi: 10.1093/qjmed/hcm051.

- 17. Folstein MF, Folstein SE, McHugh PR. ‘Mini-mental state’. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- 18. Monsch AU, Foldi NS, Ermini-Fünfschilling DE, Berres M, Seifritz E, Taylor KI, Stähelin HB, Spiegel R. Improving the diagnostic accuracy of the mini-mental state examination. Acta Neurol Scand. 1995;92:145–150. doi: 10.1111/j.1600-0404.1995.tb01029.x.

- 19. Hoops S, Nazem S, Siderowf AD, Duda JE, Xie SX, Stern MB, Weintraub D. Validity of the MoCA and MMSE in the detection of MCI and dementia in Parkinson disease. Neurology. 2009;73:1738–1745. doi: 10.1212/WNL.0b013e3181c34b47.

- 20. Nasreddine ZS, Phillips NA, Bédirian V, Charbonneau S, Whitehead V, Collin I, Cummings JL, Chertkow H. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53:695–699. doi: 10.1111/j.1532-5415.2005.53221.x.

- 21. Storey JE, Rowland JTJ, Basic D, Conforti DA, Dickson HG. The Rowland Universal Dementia Assessment Scale (RUDAS): a multicultural cognitive assessment scale. Int Psychogeriatr IPA. 2004;16:13–31. doi: 10.1017/S1041610204000043.

- 22. Mitchell AJ, Malladi S. Screening and case finding tools for the detection of dementia. Part I: evidence-based meta-analysis of multidomain tests. Am J Geriatr Psychiatry. 2010;18:759–782. doi: 10.1097/JGP.0b013e3181cdecb8.

- 23. Friedman M, Leach L, Kaplan E, Winocur G, Shulman KI, Delis DC. Clock Drawing: A Neuropsychological Analysis. New York: Oxford University Press; 1994.

- 24. Borson S, Scanlan J, Brush M, Vitaliano P, Dokmak A. The mini-cog: a cognitive ‘vital signs’ measure for dementia screening in multi-lingual elderly. Int J Geriatr Psychiatry. 2000;15:1021–1027. doi: 10.1002/1099-1166(200011)15:11<1021::AID-GPS234>3.0.CO;2-6.

- 25. Buschke H, Kuslansky G, Katz M, Stewart WF, Sliwinski MJ, Eckholdt HM, Lipton RB. Screening for dementia with the memory impairment screen. Neurology. 1999;52:231–238. doi: 10.1212/WNL.52.2.231.

- 26. Thalmann B, Spiegel R, Stähelin HB, Brubacher D, Ermini-Fünfschilling D, Bläsi S, Monsch AU. Dementia screening in general practice: optimized scoring for the clock drawing test. Brain Aging. 2002;2:36–43.

- 27. Borson S, Scanlan JM, Watanabe J, Tu S-P, Lessig M. Simplifying detection of cognitive impairment: comparison of the Mini-Cog and Mini-Mental State Examination in a multiethnic sample. J Am Geriatr Soc. 2005;53:871–874. doi: 10.1111/j.1532-5415.2005.53269.x.

- 28. Brodaty H, Pond D, Kemp NM, Luscombe G, Harding L, Berman K, Huppert FA. The GPCOG: a new screening test for dementia designed for general practice. J Am Geriatr Soc. 2002;50:530–534. doi: 10.1046/j.1532-5415.2002.50122.x.

- 29. Wilkins CH, Wilkins KL, Meisel M, Depke M, Williams J, Edwards DF. Dementia undiagnosed in poor older adults with functional impairment. J Am Geriatr Soc. 2007;55:1771–1776. doi: 10.1111/j.1532-5415.2007.01417.x.

- 30. Tabert MH, Albert SM, Borukhova-Milov L, Camacho Y, Pelton G, Liu X, Stern Y, Devanand DP. Functional deficits in patients with mild cognitive impairment: prediction of AD. Neurology. 2002;58:758–764. doi: 10.1212/WNL.58.5.758.

- 31. Jorm AF, Scott R, Jacomb PA. Assessment of cognitive decline in dementia by informant questionnaire. Int J Geriatr Psychiatry. 1989;4:35–39. doi: 10.1002/gps.930040109.

- 32. Jorm AF. The Informant Questionnaire on cognitive decline in the elderly (IQCODE): a review. Int Psychogeriatr IPA. 2004;16:275–293. doi: 10.1017/S1041610204000390.

- 33. Ehrensperger MM, Berres M, Taylor KI, Monsch AU. Screening properties of the German IQCODE with a two-year time frame in MCI and early Alzheimer’s disease. Int Psychogeriatr IPA. 2010;22:91–100. doi: 10.1017/S1041610209990962.

- 34. Reisberg B, Shulman MB, Torossian C, Leng L, Zhu W. Outcome over seven years of healthy adults with and without subjective cognitive impairment. Alzheimers Dement J Alzheimers Assoc. 2010;6:11–24. doi: 10.1016/j.jalz.2009.10.002.

- 35. Wilson RS, Barnes LL, Mendes de Leon CF, Aggarwal NT, Schneider JS, Bach J, Pilat J, Beckett LA, Arnold SE, Evans DA, Bennett DA. Depressive symptoms, cognitive decline, and risk of AD in older persons. Neurology. 2002;59:364–370. doi: 10.1212/WNL.59.3.364.

- 36. Schmid NS, Taylor KI, Foldi NS, Berres M, Monsch AU. Neuropsychological signs of Alzheimer’s disease 8 years prior to diagnosis. J Alzheimers Dis JAD. 2013;34:537–546. doi: 10.3233/JAD-121234.

- 37. Holsinger T, Deveau J, Boustani M, Williams JW Jr. Does this patient have dementia? JAMA J Am Med Assoc. 2007;297:2391–2404. doi: 10.1001/jama.297.21.2391.

- 38. Spitzer RL, Williams JB, Kroenke K, Linzer M, deGruy FV 3rd, Hahn SR, Brody D, Johnson JG. Utility of a new procedure for diagnosing mental disorders in primary care. The PRIME-MD 1000 study. JAMA J Am Med Assoc. 1994;272:1749–1756. doi: 10.1001/jama.1994.03520220043029.

- 39. Bossuyt PM. Towards complete and accurate reporting of studies of diagnostic accuracy: the STARD initiative. Ann Intern Med. 2003;138:40. doi: 10.7326/0003-4819-138-1-200301070-00010.

- 40. Winblad B, Palmer K, Kivipelto M, Jelic V, Fratiglioni L, Wahlund L-O, Nordberg A, Bäckman L, Albert M, Almkvist O, Arai H, Basun H, Blennow K, De Leon M, Decarli C, Erkinjuntti T, Giacobini E, Graff C, Hardy J, Jack C, Jorm A, Ritchie K, Van Duijn C, Visser P, Petersen RC. Mild cognitive impairment - beyond controversies, toward a consensus: report of the International Working Group on Mild Cognitive Impairment. J Intern Med. 2004;256:240–246. doi: 10.1111/j.1365-2796.2004.01380.x.

- 41. American Psychiatric Association. DSM-IV: Diagnostic and Statistical Manual of Mental Disorders. Washington, DC: American Psychiatric Press; 1994.

- 42. Monsch AU, Thalmann B, Schneitter M, Bernasconi F, Aebi C, Camachova-Davet Z, Stähelin HB. The Basel study on the Elderly’s search for preclinical cognitive markers of Alzheimer’s disease. Neurobiol Aging. 2000;21:31. doi: 10.1016/S0197-4580(00)82817-1.

- 43. Monsch AU, Hermelink M, Kressig RW, Fisch HP, Grob D, Hiltbrunner B, Martensson B, Rüegger-Frey B, von Gunten A; Swiss Expert Group. Konsensus zur Diagnostik und Betreuung von Demenzkranken in der Schweiz [Consensus on the diagnosis and care of patients with dementia in Switzerland]. Schweiz Med Forum. 2008;8:144–149.

- 44. Jorm AF. A short form of the Informant Questionnaire on Cognitive Decline in the Elderly (IQCODE): development and cross-validation. Psychol Med. 1994;24:145–153. doi: 10.1017/S003329170002691X.

- 45. R Development Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2011.

- 46. Efron B. Bootstrap methods: another look at the Jackknife. Ann Stat. 1979;7:1–26. doi: 10.1214/aos/1176344552.

- 47. Albert MS, DeKosky ST, Dickson D, Dubois B, Feldman HH, Fox NC, Gamst A, Holtzman DM, Jagust WJ, Petersen RC, Snyder PJ, Carrillo MC, Thies B, Phelps CH. The diagnosis of mild cognitive impairment due to Alzheimer’s disease: recommendations from the National Institute on Aging–Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement. 2011;7:270–279. doi: 10.1016/j.jalz.2011.03.008.

- 48. McKhann GM, Knopman DS, Chertkow H, Hyman BT, Jack CR Jr, Kawas CH, Klunk WE, Koroshetz WJ, Manly JJ, Mayeux R, Mohs RC, Morris JC, Rossor MN, Scheltens P, Carrillo MC, Thies B, Weintraub S, Phelps CH. The diagnosis of dementia due to Alzheimer’s disease: recommendations from the National Institute on Aging–Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement J Alzheimers Assoc. 2011;7:263–269. doi: 10.1016/j.jalz.2011.03.005.

- 49. Mainland BJ, Amodeo S, Shulman KI. Multiple clock drawing scoring systems: simpler is better. Int J Geriatr Psychiatry. 2014;29:127–136. doi: 10.1002/gps.3992.

- 50. Scanlan JM, Brush M, Quijano C, Borson S. Comparing clock tests for dementia screening: naïve judgments vs formal systems–what is optimal? Int J Geriatr Psychiatry. 2002;17:14–21. doi: 10.1002/gps.516.