Abstract

Purpose:

The technological advances in real-time ultrasound image guidance for high-dose-rate (HDR) prostate brachytherapy have placed this treatment modality at the forefront of innovation in cancer radiotherapy. Prostate HDR treatment often involves placing the HDR catheters (needles) into the prostate gland under transrectal ultrasound (TRUS) guidance, then generating a radiation treatment plan based on CT prostate images, and subsequently delivering a high dose of radiation through these catheters. The main challenge for this HDR procedure is to accurately segment the prostate volume in the CT images for the radiation treatment planning. In this study, the authors propose a novel approach that integrates the prostate volume from 3D TRUS images into the treatment planning CT images to provide an accurate prostate delineation for prostate HDR treatment.

Methods:

The authors’ approach requires acquisition of 3D TRUS prostate images in the operating room right after the HDR catheters are inserted, which takes 1–3 min. These TRUS images are used to create prostate contours. The HDR catheters are reconstructed from the intraoperative TRUS and postoperative CT images, and subsequently used as landmarks for the TRUS–CT image fusion. After TRUS–CT fusion, the TRUS-based prostate volume is deformed to the CT images for treatment planning. This method was first validated with a prostate-phantom study. In addition, a pilot study of ten patients undergoing HDR prostate brachytherapy was conducted to test its clinical feasibility. The accuracy of their approach was assessed through the locations of three implanted fiducial (gold) markers, as well as T2-weighted MR prostate images of patients.

Results:

For the phantom study, the target registration error (TRE) of gold-markers was 0.41 ± 0.11 mm. For the ten patients, the TRE of gold markers was 1.18 ± 0.26 mm; the prostate volume difference between the authors’ approach and the MRI-based volume was 7.28% ± 0.86%, and the prostate volume Dice overlap coefficient was 91.89% ± 1.19%.

Conclusions:

The authors have developed a novel approach to improve prostate contour utilizing intraoperative TRUS-based prostate volume in the CT-based prostate HDR treatment planning, demonstrated its clinical feasibility, and validated its accuracy with MRIs. The proposed segmentation method would improve prostate delineations, enable accurate dose planning and treatment delivery, and potentially enhance the treatment outcome of prostate HDR brachytherapy.

Keywords: prostate, CT, segmentation, transrectal ultrasound (TRUS), HDR brachytherapy

1. INTRODUCTION

Radiotherapy is an important treatment modality for localized prostate cancer. The past few decades have witnessed a significant evolution in radiation techniques, such as the intensity-modulated radiation therapy (IMRT) and high-dose-rate (HDR) brachytherapy. In particular, the technological advances in real-time ultrasound (US) image guidance for HDR prostate brachytherapy have placed this treatment modality at the forefront of innovation in the field of cancer radiotherapy.1

HDR prostate brachytherapy involves using a radiation source (Iridium-192) to deliver high radiation dose to the prostate gland through a series of catheters that are temporarily placed within the prostate transperineally under transrectal ultrasound (TRUS) guidance.2 This HDR procedure allows the dose delivered to surrounding normal tissues to be minimized, thereby permitting safe dose escalation to the prostate gland.3–6 Recent data clearly show an improved efficacy of this treatment approach in patients with locally advanced cancer when compared with conventional 3D external beam and IMRT techniques.7 As a result, an increasing number of men, many of younger ages, are undergoing prostate HDR brachytherapy instead of radical prostatectomy for localized prostate cancer.8,9

The key to the success of HDR prostate brachytherapy is the accurate segmentation of the prostate in treatment-planning CT images. If the prostate is not accurately localized, high therapeutic radiation dose could be delivered to the surrounding normal tissues (e.g., rectum and bladder) during the treatment, which may cause severe complications such as rectal bleeding. More importantly, this may also lead to an undertreatment of cancerous regions within the prostate gland, and therefore, result in a poor treatment outcome.

In the clinic, physicians’ manual segmentation of the prostate on CT images is the common practice and gold standard in prostate radiotherapy.10,11 However, prostate CT segmentation is challenging mainly due to the low image contrast between the prostate and its surrounding tissues, and the uncertainty in defining the prostate base and apex on CT images. It is well-known that the accuracy and reproducibility of prostate volume manually contoured on CT images are poor.10–15 Dubois et al. showed, for example, that a large variation and inconsistency existed in CT-based prostate contours among physicians.11 Hoffelt et al. reported that CT consistently overestimated the prostate volume by approximately 50% compared with TRUS.15 Roach et al. also found that CT-defined prostate volume was on average 32% larger (range 5%–63%) than MRI-defined prostate volume.13 Rasch et al. demonstrated that CT-derived prostate volumes were larger than MR-derived volumes, and the average ratio between the CT and MR prostate volumes was 1.4, which was significantly different from 1 (p < 0.005).14

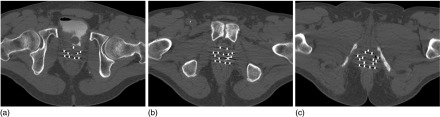

Many CT prostate segmentation technologies have been investigated in recent years, such as the model-based,16–21 classification-based,22–27 and registration-based28,29 methods (detailed in Sec. 4). Most of these segmentation approaches are based on the appearance and texture of the prostate gland on CT images. In prostate HDR brachytherapy, prostate CT images are acquired after the insertions of the HDR catheters. The frequently used metal catheters introduce considerable artifacts to the CT images, as shown in Fig. 1. These artifacts often smear the appearance and texture of the CT prostate images; therefore, these previous methods may not work well for the prostate HDR application.

FIG. 1.

Significant artifacts induced by the HDR metal catheters (white dots) in the axial CT prostate images: (a) prostate base, (b) prostate midgland, and (c) prostate apex.

Studies have shown that TRUS and MRI are superior imaging modalities in terms of prostate contour as compared with CT;30,31 and both TRUS-defined and MRI-defined prostate volumes have been shown to correlate closely with the prostate volume on pathologic evaluation.30,32 In this paper, we propose a new approach that integrates an intraoperative TRUS-based prostate volume into the treatment planning through TRUS–CT fusion based on the catheter locations.

2. METHODS AND MATERIALS

2.A. Prostate segmentation method

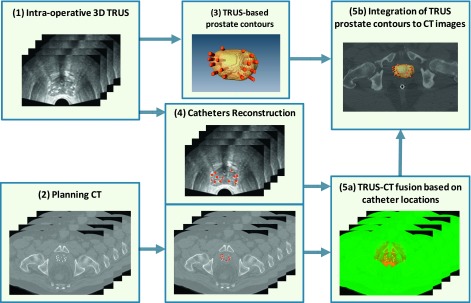

Our prostate segmentation approach for the HDR prostate brachytherapy involves five major steps (Fig. 2): (1) The 3D TRUS prostate images are captured after the catheter insertions during the HDR procedure; (2) A postoperative CT scan is obtained with all catheters for the brachytherapy treatment planning; (3) The prostate volume is contoured (segmented) in the TRUS images; (4) The HDR catheters in the 3D TRUS and CT images are reconstructed; (5) The TRUS–CT image registration is performed using HDR catheters as landmarks, and the TRUS-based prostate volume is integrated into the 3D CT images for HDR treatment planning.

FIG. 2.

Flow chart of integrating TRUS-based prostate volume into CT-based HDR treatment planning.

2.A.1. 3D intraoperative TRUS image acquisition

The TRUS scan was performed in the operating room after the catheter insertions. The 3D TRUS images were captured with a clinical ultrasound scanner (HI VISION Avius, Hitachi Medical Group, Japan) and a transrectal 7.5 MHz prostate biplane probe (UST-672-5/7.5). During the data acquisition, the transrectal probe was held with a mechanical SurePoint stepper (Bard Medical, Inc., GA) to allow for a manual stepwise movement along the longitudinal axis. The patient was scanned in the lithotomy position, and a series of parallel axial (transverse) scans were captured, from the apex to the base with a 1 or 2 mm step size, to cover the entire prostate gland plus the 5–10 mm anterior and posterior margins. For a typical prostate, 30–40 TRUS images would cover 60–80 mm in the longitudinal direction (with a 2 mm step size).

2.A.2. CT image acquisition for HDR treatment planning

After the catheter insertion and TRUS scan, the patient was transferred to a CT simulation room to obtain 3D CT images for HDR treatment planning (Elekta Oncentra v4.3). Even though MRI has better soft tissue contrast than CT,33 using MR images for treatment planning is problematic.1 For example, most HDR catheters are not MRI compatible, and catheter reconstruction can be difficult with MRI. MRI is also more expensive and less available than CT. Therefore, CT is still the most commonly used imaging modality for radiotherapy dose calculation.1 The treatment planning CT was acquired following the standard CT protocol. A helical CT scan was taken after 40 mL of contrast was injected into the bladder and a rectal marker was placed into the rectum. All patients were scanned in the head first supine, feet-down position without the probe or immobilization device. The slice thickness was 1.0 mm through the whole pelvic region, and the matrix size was 512 × 512 pixels with a 0.68 × 0.68 mm² pixel size.

2.A.3. Prostate volume contour in TRUS images

A radiation oncologist manually contoured the prostate volumes on the TRUS prostate images. For a typical prostate of 50 mm, with a 2 mm slice thickness, approximately 25 TRUS slices needed to be contoured. In general, it takes 5–15 min to contour a prostate volume. Although this is time consuming, the TRUS images are greatly degraded by the HDR catheter insertion, so we feel manual contours provide the most accurate prostate volumes.

2.A.4. Catheter reconstruction in TRUS and CT images

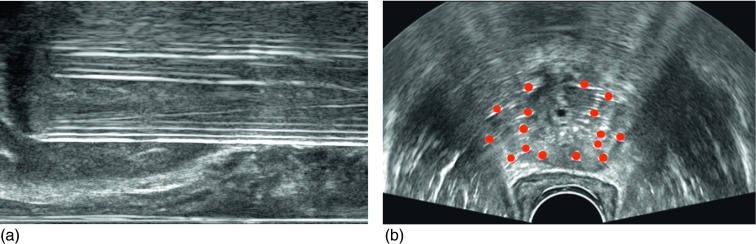

For TRUS images, catheter reconstruction is challenging due to the artifacts induced by multiple scattering from the HDR catheters. As shown in Fig. 3, bright band/tail and dark shadow artifacts34 are present on the longitudinal [Fig. 3(a)] and axial [Fig. 3(b)] TRUS images. To deal with these artifacts, we used a manual catheter reconstruction method. Specifically, we first located the tips of all catheters, which lie close to the base of the prostate. We then identified the brightest point of each catheter and placed 2 mm circles on an axial TRUS image. This operation was repeated on every three to four slices, and the catheter locations on the skipped slices were interpolated. The final step was the 3D catheter reconstruction for the TRUS prostate images. For CT images, we were able to use a threshold method to automatically detect the HDR catheters because of the high contrast between the HDR catheters and soft tissue. We tested a range of Hounsfield unit (HU) thresholds in this study, and the best threshold of 950 HU was determined by matching the catheter diameter on the CT image to the real catheter diameter of 2 mm.
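The two catheter-reconstruction steps described above can be illustrated with a minimal sketch. It assumes linear interpolation between the manually marked slices (the text does not state the interpolation scheme) and a simple "at or above" comparison for the 950 HU threshold; the function names and the sample coordinates are hypothetical.

```python
import numpy as np

def interpolate_catheter(marked_z, marked_xy, all_z):
    """Linearly interpolate one catheter's (x, y) center onto every slice.

    marked_z must be increasing, as required by np.interp.
    """
    marked_xy = np.asarray(marked_xy, dtype=float)
    x = np.interp(all_z, marked_z, marked_xy[:, 0])
    y = np.interp(all_z, marked_z, marked_xy[:, 1])
    return np.column_stack([x, y])

def threshold_catheters(ct_hu, threshold_hu=950.0):
    """Boolean mask of CT voxels at or above the HU threshold (assumed >=)."""
    return np.asarray(ct_hu) >= threshold_hu

# Hypothetical catheter marked on every third slice of a 2 mm stack.
marked_z = [0.0, 6.0, 12.0]                      # slice positions (mm)
marked_xy = [[10.0, 20.0], [10.6, 20.3], [11.2, 20.9]]
all_z = np.arange(0.0, 14.0, 2.0)                # 0, 2, ..., 12 mm
centers = interpolate_catheter(marked_z, marked_xy, all_z)

# Hypothetical 2 x 2 patch of HU values.
mask = threshold_catheters([[-50.0, 40.0], [1200.0, 950.0]])
```

In practice the thresholded mask would still need to be grouped into individual catheters (for example by connected components), which is outside the scope of this sketch.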

FIG. 3.

Catheter artifacts on TRUS images. (a) Bright band artifacts seen on a longitudinal TRUS image, and (b) HDR catheters reconstruction on the axial TRUS image.

2.A.5. TRUS–CT registration

2.A.5.a. Landmark similarities.

In this study, we used HDR catheters as landmarks for image registration. In general, the catheters were uniformly and symmetrically distributed inside the prostate gland, except for regions near the urethra where catheters were placed at least 5 mm away from the urethra. In other words, the catheters followed uniform spacing along the periphery with several interior catheters. After catheter insertion, the catheters were locked onto a needle template, which was fixed onto the patient throughout the HDR brachytherapy. This is critical to ensure no relative displacement among the catheters and no catheter movement inside the prostate gland during the intraoperative TRUS scan, planning CT scan and final dose delivering. Such evenly distributed catheters will provide exceptional landmarks for the TRUS–CT registration to capture the nonrigid prostate deformation between TRUS and CT images. The corresponding catheter pairs in the CT and TRUS images with the same number were used as landmarks to improve our registration accuracy.

Our TRUS–CT registration method was performed by matching the catheter locations, where $X$ and $Y$ were the landmark point sets on the catheter surface from the planning CT and TRUS images, respectively. We assumed the detected catheters' surface was $X = \{x_i \mid i = 1, \ldots, I\}$ in the CT image, and $Y = \{y_j \mid j = 1, \ldots, J\}$ in the TRUS image. The correspondences between $X$ and $Y$ are described by a fuzzy correspondence matrix $P$. We defined the correspondence matrix $P$ with dimension $(I + 1) \times (J + 1)$,

$$
P = \begin{pmatrix}
p_{11} & \cdots & p_{1J} & p_{1,J+1} \\
\vdots & \ddots & \vdots & \vdots \\
p_{I1} & \cdots & p_{IJ} & p_{I,J+1} \\
p_{I+1,1} & \cdots & p_{I+1,J} & 0
\end{pmatrix}
\tag{1}
$$

The matrix $P = \{p_{ij}\}$ consists of two parts. The $I \times J$ inner submatrix defines the correspondences of $X$ and $Y$. It is worth noting that the $p_{ij}$ take real values between 0 and 1, which denote the fuzzy correspondences between the landmarks.35 In the binary limit, if $x_i$ is mapped to $y_j$, then $p_{ij} = 1$; otherwise $p_{ij} = 0$. The $(J + 1)$th column and the $(I + 1)$th row define the outliers in $X$ and $Y$, respectively. If a landmark cannot find its correspondence, it is regarded as an outlier, and the extra entry of this landmark is set to 1. That is, if $x_i$ is an outlier, then $p_{i,J+1} = 1$. Similarly, if $y_j$ is an outlier, then $p_{I+1,j} = 1$. $P$ satisfies the row and column normalization conditions, and $P$ is subject to $\sum_{i=1}^{I+1} p_{ij} = 1$ for $j = 1, \ldots, J$ and $\sum_{j=1}^{J+1} p_{ij} = 1$ for $i = 1, \ldots, I$.
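The row and column normalization conditions on the augmented correspondence matrix can be enforced numerically by alternating row and column scaling, as is common in softassign-style point matching. The sketch below is an illustration under that assumption, not the authors' implementation; `normalize_correspondence` and the random starting matrix are hypothetical.

```python
import numpy as np

def normalize_correspondence(P, n_iter=200):
    """Alternate row and column scaling on an (I+1) x (J+1) matrix.

    Rows 0..I-1 (real CT landmarks) are scaled to sum to 1 over all J+1
    columns; columns 0..J-1 (real TRUS landmarks) are scaled to sum to 1
    over all I+1 rows. The outlier row and outlier column are slack
    entries and are not themselves normalized.
    """
    P = np.array(P, dtype=float)
    P[-1, -1] = 0.0                                   # unused corner entry
    for _ in range(n_iter):
        P[:-1, :] /= P[:-1, :].sum(axis=1, keepdims=True)   # rows
        P[:, :-1] /= P[:, :-1].sum(axis=0, keepdims=True)   # columns
    return P

# Hypothetical positive starting values for I = 3 CT and J = 4 TRUS landmarks.
rng = np.random.default_rng(0)
P0 = rng.uniform(0.1, 1.0, size=(4, 5))
P = normalize_correspondence(P0)
```

The slack row and column absorb landmarks without a partner, which is why a set with unequal numbers of points in CT and TRUS can still satisfy both normalization conditions.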

2.A.5.b. Similarity function.

In this study, the similarity between the two sets of catheter landmarks $x_i$ and $y_j$ in the CT and TRUS images is defined by the Euclidean distance between their point sets. We used a softassign technique that allows $P$ to take values in the interval [0, 1] in the energy function.36 The continuous property of $P$ acknowledges the ambiguous matches between $X$ and $Y$. For the catheter landmarks, our registration task is to find an optimal correspondence matrix $P$ and an optimal spatial transform $f$ that match the two point sets, $X$ and $Y$, as closely as possible. Therefore, the following energy function for registration between TRUS and CT images is minimized,35,37

$$
E(f) = \alpha \sum_{i=1}^{I} \sum_{j=1}^{J} p_{ij} \lVert x_i - f(y_j) \rVert^2
+ \delta \sum_{i=1}^{I} \sum_{j=1}^{J} p_{ij} \log p_{ij}
- \xi \sum_{i=1}^{I} \sum_{j=1}^{J} p_{ij}
+ \lambda \lVert L f \rVert^2
\tag{2}
$$

where α, δ, ξ, and λ are the weights for the energy terms, $p_{ij}$ are the entries of the fuzzy correspondence matrix $P$, and $f$ denotes the transformation between the TRUS and CT images. The first term is the geometric feature-based energy term defined by the Euclidean distance: the separation between $x_i$ and $f(y_j)$ is measured by the Euclidean distance, and a smaller distance indicates a higher similarity between them. The second term is an entropy term that comes from the deterministic annealing technique,38 which is used to directly control the fuzziness of $P$; δ is called the temperature parameter. The third term is used to direct the correspondence matrix $P$ to converge to binary and to balance the outlier rejection. As δ is gradually reduced to zero, the fuzzy correspondences become binary. The first three terms constitute the similarity metric on the catheter landmarks in the TRUS and CT images. The last term is the regularization of the transformation, which is described by the bending energy of $f$, written here as $\lVert L f \rVert^2$. In a nonrigid registration, smoothness is necessary to keep the mappings from being too arbitrary. The local deformation ought to be a smooth function, which discourages arbitrary, unrealistic shape deformation, so this smoothness penalty term regularizes the local deformation through its second-order spatial derivatives. For the registration, parameter α in Eq. (2) was set to 0.5, and δ, ξ, and λ were three dynamic parameters that were initially set to 1 and decreased to 0.05 with the progress of iterations.35,37,39
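To make the roles of the four terms concrete, the sketch below evaluates an energy of the standard robust-point-matching form, E = α Σ p_ij ‖x_i − f(y_j)‖² + δ Σ p_ij log p_ij − ξ Σ p_ij + λ · (bending energy). This form is an assumption based on the description of the terms above; the bending energy is passed in as a precomputed number, and `registration_energy` is a hypothetical helper name.

```python
import numpy as np

def registration_energy(X, fY, P, alpha, delta, xi, lam, bending):
    """Evaluate the four-term energy for the inner I x J block P.

    X: (I, 3) CT landmarks; fY: (J, 3) transformed TRUS landmarks.
    bending: precomputed bending energy of the transformation (assumed given).
    """
    X, fY, P = (np.asarray(a, dtype=float) for a in (X, fY, P))
    d2 = ((X[:, None, :] - fY[None, :, :]) ** 2).sum(axis=2)  # squared distances
    match = alpha * np.sum(P * d2)                 # geometric term
    safe = np.where(P > 0, P, 1.0)                 # treats 0 * log(0) as 0
    entropy = delta * np.sum(P * np.log(safe))     # annealing (fuzziness) term
    outlier = -xi * np.sum(P)                      # rewards accepted matches
    smooth = lam * bending                         # regularization term
    return match + entropy + outlier + smooth

# Two hypothetical landmarks 2 mm apart, perfectly aligned.
X = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
E_binary = registration_energy(X, X, np.eye(2), 0.5, 1.0, 1.0, 1.0, 0.0)
E_fuzzy = registration_energy(X, X, np.full((2, 2), 0.5), 0.5, 1.0, 1.0, 1.0, 0.0)
```

With these weights the binary (one-to-one) assignment yields the lower energy of the two, which illustrates how the distance term pulls the fuzzy matrix toward the correct correspondences.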

The overall similarity function can be minimized by an alternating optimization algorithm that successively updates the correspondence matrix $P$ and the transformation function $f$. First, with the transformation $f$ fixed, the correspondence matrix between the landmarks is updated by minimizing $E(f)$. The updated correspondence matrix is then treated as the temporary correspondence between the landmarks. Second, with the temporary correspondence matrix $P$ fixed, the transformation function $f$ is updated. The two steps are repeated alternately until there are no further updates of the correspondence matrix $P$. By optimizing an overall similarity function that integrates the similarities between the landmarks and the smoothness constraints on the estimated transformation between the TRUS and CT images, the correspondences between the landmarks and, more importantly, the dense transformation between the TRUS and CT images can be obtained simultaneously.
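The alternating scheme can be sketched in a few lines. This is a deliberately simplified illustration, not the authors' method: an affine transform is swapped in for the B-spline model (so the bending term vanishes), the annealing schedule is assumed geometric from 1 to 0.05, a fixed number of steps replaces the "no further updates" stopping rule, and all function names and the synthetic landmarks are hypothetical.

```python
import numpy as np

def softassign(X, fY, alpha, delta, xi, outlier=1e-2, n_norm=30):
    """Correspondence update for a fixed transform (fY = f(Y))."""
    I, J = len(X), len(fY)
    d2 = ((X[:, None, :] - fY[None, :, :]) ** 2).sum(axis=2)
    P = np.full((I + 1, J + 1), outlier)           # slack row/column start small
    P[:I, :J] = np.exp(-(alpha * d2 - xi) / delta)
    P[I, J] = 0.0
    for _ in range(n_norm):                        # row/column normalization
        P[:I, :] /= P[:I, :].sum(axis=1, keepdims=True)
        P[:, :J] /= P[:, :J].sum(axis=0, keepdims=True)
    return P

def fit_affine(X, Y, P_inner):
    """Transform update for fixed correspondences (weighted least squares)."""
    w = P_inner.sum(axis=0)                                    # weight per y_j
    X_virtual = (P_inner.T @ X) / np.maximum(w, 1e-12)[:, None]
    Yh = np.column_stack([Y, np.ones(len(Y))])                 # homogeneous coords
    sw = np.sqrt(w)[:, None]
    M, *_ = np.linalg.lstsq(sw * Yh, sw * X_virtual, rcond=None)
    return M                                                   # (4, 3): f(Y) = Yh @ M

def register(X, Y, alpha=0.5, n_steps=20):
    """Alternate the two updates while lowering the temperature."""
    M = np.vstack([np.eye(3), np.zeros((1, 3))])               # identity start
    Yh = np.column_stack([Y, np.ones(len(Y))])
    for temp in np.geomspace(1.0, 0.05, n_steps):
        P = softassign(X, Yh @ M, alpha, delta=temp, xi=temp)
        M = fit_affine(X, Y, P[:-1, :-1])
    return M, P

# Synthetic landmarks: 9 hypothetical catheters sampled at 3 depths (mm).
Y = np.array([[x, y, z] for x in (0, 10, 20) for y in (0, 10, 20)
              for z in (0, 20, 40)], dtype=float)
X = Y @ np.diag([1.02, 1.0, 1.0]).T + np.array([1.0, -0.8, 0.5])
M, P = register(X, Y)
residual = np.linalg.norm(X - np.column_stack([Y, np.ones(len(Y))]) @ M, axis=1).mean()
```

A deformable version would replace `fit_affine` with a regularized B-spline fit in which λ weights the bending energy.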

2.A.5.c. Transformation model.

The transformation between the TRUS and CT images is represented by a general function, which can be modeled by various function bases (e.g., multiquadratic,40 thin-plate spline (TPS),41,42 radial basis,43 or B-spline44). In this study, we chose B-splines as the transformation basis. Unlike the TPS or the elastic-body splines, the B-spline translation of a point is determined only by the area immediately surrounding the control point, resulting in a locally controlled transformation. In the case of the HDR procedure, the major deformations are caused by the transrectal probe and are spatially localized; therefore, a locally controlled transformation is advantageous for registering the TRUS images and results in smooth transformation fields.45
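The local-control property can be checked with a one-dimensional cubic B-spline free-form deformation, in which the displacement at any position depends on only four neighboring control points. The sketch below is a generic illustration with a hypothetical 10 mm control spacing, not the configuration used in the study.

```python
import numpy as np

def cubic_bspline_basis(t):
    """The four uniform cubic B-spline basis values at local coordinate t in [0, 1)."""
    return np.array([
        (1 - t) ** 3 / 6.0,
        (3 * t ** 3 - 6 * t ** 2 + 4) / 6.0,
        (-3 * t ** 3 + 3 * t ** 2 + 3 * t + 1) / 6.0,
        t ** 3 / 6.0,
    ])

def ffd_displacement_1d(x, control, spacing):
    """Displacement at x; control[k] is the displacement of the node at (k - 1) * spacing."""
    i = int(np.floor(x / spacing))
    t = x / spacing - i
    return float(cubic_bspline_basis(t) @ control[i:i + 4])

spacing = 10.0                       # hypothetical control-point spacing (mm)
control = np.zeros(12)               # nodes at -10, 0, ..., 100 mm
control[5] = 2.0                     # move only the node at 40 mm by 2 mm

near = ffd_displacement_1d(40.0, control, spacing)   # at the moved node
far_left = ffd_displacement_1d(10.0, control, spacing)
far_right = ffd_displacement_1d(70.0, control, spacing)
```

Here only positions between 20 and 60 mm are affected by the moved node; a thin-plate spline, by contrast, would spread the influence of a single landmark over the whole domain.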

2.B. Prostate-phantom experiments

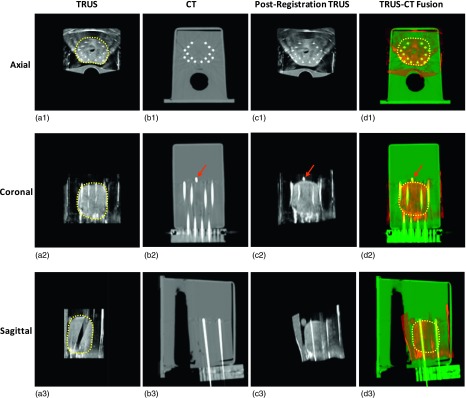

In order to validate the prostate segmentation method, we first conducted experiments with a multimodality prostate phantom (CIRS Model 053). In this phantom, a tissue-mimicking prostate, along with structures simulating the rectal wall, seminal vesicles, and urethra, is contained within an 11.5 × 7.0 × 9.5 cm³ clear plastic container. For the US scan, a HI VISION Avius US machine (Hitachi Medical Group, Japan) with a 7.5 MHz prostate biplane probe (UST-672-5/7.5) was used. To mimic a prostate HDR procedure, 14 HDR catheters were implanted into the prostate under US guidance and the prostate was deformed by the pressure of the US probe during the ultrasound scan. The voxel size of the 3D US dataset was 0.08 × 0.08 × 0.50 mm³. Figure 4(a) shows the axial, coronal, and sagittal US images of the prostate phantom. For the CT scan, a Philips CT scanner (Philips, The Netherlands) was used, and the prostate was not deformed during the CT scan. The voxel size of the 3D CT dataset was 0.29 × 0.29 × 0.80 mm³. Figure 4(b) shows the axial, coronal, and sagittal CT images of the prostate phantom.

FIG. 4.

3D TRUS–CT registered results of the prostate phantom. (a1)–(a3) are TRUS images in the axial, coronal, and sagittal directions; (b1)–(b3) are CT images in three directions; (c1)–(c3) are the postregistration TRUS images; (d1)–(d3) are the TRUS–CT fusion images, where the prostate volume is transformed from original preregistration TRUS images. The close match between the gold marker (arrows) and catheters in TRUS and CT demonstrates the accuracy of our method.

The registration accuracy was evaluated using the fiducial localization error (FLE) and target registration error (TRE). The registration’s accuracy depends on the FLE, which is the error in locating the fiducials (points) employed in the registration process.46 In this study, we used reconstructed catheters from TRUS and CT images as fiducials (landmarks) to perform the registration, so the mean surface distances of the corresponding catheters between the CT and postregistration TRUS images were used to quantify the FLE. The TRE is an important measure of the accuracy of the performed registration, which is the distance, after registration, between a pair of corresponding fiducials that are not used in the registration process.46 In this study, the displacements of gold markers between the CT and postregistration TRUS images were used to quantify the TRE.
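Both error measures reduce to simple distance computations once the point coordinates are available. The sketch below assumes that the TRE is the Euclidean distance between corresponding marker centers and that the catheter "mean surface distance" is a symmetric average of nearest-point distances; the exact surface-distance definition used in the study is not stated, and the coordinates are hypothetical.

```python
import numpy as np

def target_registration_error(markers_ct, markers_trus):
    """Per-marker Euclidean distance between corresponding centers (mm)."""
    diff = np.asarray(markers_ct, float) - np.asarray(markers_trus, float)
    return np.linalg.norm(diff, axis=1)

def mean_surface_distance(A, B):
    """Symmetric mean of nearest-point distances between two point sets (mm)."""
    A, B = np.asarray(A, float), np.asarray(B, float)
    d = np.linalg.norm(A[:, None, :] - B[None, :, :], axis=2)
    return 0.5 * (d.min(axis=1).mean() + d.min(axis=0).mean())

# Hypothetical gold-marker centers in CT and postregistration TRUS (mm).
ct = [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]]
trus = [[0.3, 0.4, 0.0], [10.0, 0.0, 1.2]]
tre = target_registration_error(ct, trus)

# Hypothetical catheter sampled along z, offset by 0.2 mm in x after registration.
catheter_ct = np.array([[0.0, 0.0, z] for z in range(5)])
catheter_trus = catheter_ct + np.array([0.2, 0.0, 0.0])
fle = mean_surface_distance(catheter_ct, catheter_trus)
```

The brute-force distance matrix is adequate for a few thousand catheter points; larger surfaces would normally use a spatial index such as a k-d tree.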

To evaluate the segmentation accuracy, we compared the surface distances and the volume differences of anatomical structures (prostate and urethra) between the CT and postregistration TRUS images, which are the two essential types of measurement in morphometric assessments. The segmentation accuracy was quantified with three surface measures (the average surface distance, the root-mean-square (RMS) surface distance, and the maximum surface distance) and two volume measures (the absolute volume difference and the Dice volume overlap).
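The volume and surface measures named above can be written compactly for binary masks and a list of surface distances. This sketch assumes the absolute volume difference is normalized by the reference (gold-standard) volume, which the text does not state explicitly; the function names and the toy masks are hypothetical.

```python
import numpy as np

def dice_overlap(a, b):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    a, b = np.asarray(a, bool), np.asarray(b, bool)
    return 2.0 * np.logical_and(a, b).sum() / (a.sum() + b.sum())

def abs_volume_difference_pct(test, reference):
    """Absolute volume difference as a percentage of the reference volume."""
    v_test = np.count_nonzero(test)
    v_ref = np.count_nonzero(reference)
    return 100.0 * abs(v_test - v_ref) / v_ref

def surface_stats(distances):
    """Mean, RMS, and maximum of a set of surface distances."""
    d = np.asarray(distances, float)
    return d.mean(), np.sqrt((d ** 2).mean()), d.max()

# Toy masks: a 10 x 10 x 10 reference cube and a test mask one slice longer.
reference = np.zeros((20, 20, 20), dtype=bool)
reference[5:15, 5:15, 5:15] = True
test_mask = np.zeros_like(reference)
test_mask[5:15, 5:15, 5:16] = True

dice = dice_overlap(test_mask, reference)
vol_diff = abs_volume_difference_pct(test_mask, reference)
mean_d, rms_d, max_d = surface_stats([0.3, 0.4, 0.5])
```

Because all voxels share the same size, voxel counts can stand in for volumes in both ratios.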

2.C. Preliminary clinical study

We conducted a retrospective clinical study with ten patients who had received HDR brachytherapy for localized prostate cancer between January and June 2013. In this group of ten patients, 12–16 catheters (mean ± STD: 14.6 ± 1.4) were implanted. The same Hitachi US machine and Philips CT scanner detailed in the phantom study were used to image the patients. For TRUS images, the voxel size was 0.12 × 0.12 × 1.00 mm³ for three patients and 0.12 × 0.12 × 2.00 mm³ for the remaining seven patients. For the planning CT images, the voxel size was 0.68 × 0.68 × 1.00 mm³ for all patients. The accuracy of our approach was assessed through the locations of three implanted gold markers, as well as previous T2-weighted MR images of the patients. To evaluate the accuracy of the prostate registration, we calculated the TRE and FLE.

In this pilot study, all patients had previous diagnostic MR scans of the prostate. As compared with CT, MRI has high soft tissue contrast and clear prostate boundaries.33 Studies have shown that accurate prostate volumes can be obtained with both MRI and US.30,31,47 Hence, in this study, we used prostate contours from the MR images as the gold standard to evaluate our prostate segmentation method. All patients were scanned in the feet-down supine position with a body coil using a Philips MRI scanner with a voxel size of 1.0 × 1.0 × 2.00 mm³. All prostates were manually segmented from the T2-weighted MR images. Because patient positioning differed between the CT and MR scans, the prostate shape and size may vary between the MR and CT images. To compute the volume difference and Dice overlap between the MRI-defined prostate and our TRUS-based segmented prostate, we registered the MR images to the CT images,48–50 and then applied the corresponding deformation to the prostate contours of the MR images to obtain the MRI-based prostate volume.

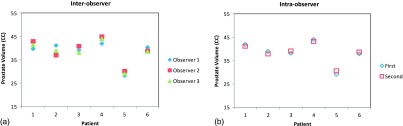

To evaluate the interobserver reliability of the prostate manual contours, three observers (two radiologists and one US physicist) were asked to independently contour the prostate US and MR images of six subjects. Each observer was blinded to other observers’ contours. The variations of the prostate volume were calculated for the assessment of consistency among measurements by the three observers. In addition, the effect of interobserver segmentation was further evaluated by comparing the variations of our automated segmentation results based on each observer’s contours.

To evaluate the intraobserver reliability of the prostate manual contours, one observer was asked to contour the prostate of the six sets of US and MR images twice. The time between the first and second contours was roughly 5 months, which was long enough to reduce recall bias. From these contours, the variations of the prostate volume were computed for the assessment of consistency among measurements by the same observer. In addition, the effect of intraobserver segmentation was further evaluated by comparing the variations of our automated segmentation results based on the same observer’s contours performed at two different times.

3. RESULTS

3.A. Prostate-phantom study

3.A.1. Registration accuracy

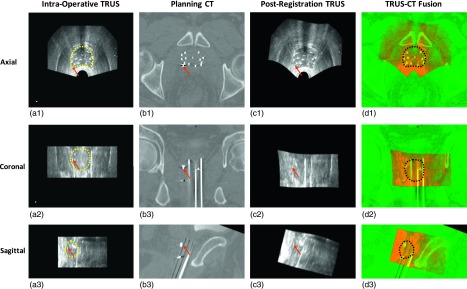

The prostate [Fig. 4(a), yellow dotted line] and the urethra were manually contoured on the TRUS images. The catheters were reconstructed on both TRUS and CT images. Figure 4(c) shows the postregistration TRUS images, and Fig. 4(d) shows the fusion images between the postregistration TRUS and CT images. Figure 4 provides a visual assessment of the catheter and gold-marker match between the postregistration TRUS and CT images. To further quantify the accuracy of the registration, we calculated the TRE of the three gold markers between the postregistration TRUS and CT images. The length of each gold marker is 3 mm; therefore, each gold marker was often seen on two to three consecutive postregistration TRUS and CT images (0.8 mm slice thickness), and we used the center position (x, y, and z coordinates) to calculate the TRE of each gold marker between the CT and postregistration TRUS images. Table I lists the TRE for the three gold markers; the mean TRE is 0.41 ± 0.11 mm. For the FLE, the mean surface distance of the catheter pairs between the CT and postregistration TRUS images is 0.18 ± 0.15 mm.

TABLE I.

TRE between the centers of three gold markers for prostate phantom.

| Gold markers | ΔX | ΔY | ΔZ | Distance (mm) |
|---|---|---|---|---|
| 1 | 1 | 2 | 0 | 0.51 |
| 2 | 0 | 1 | 0 | 0.29 |
| 3 | 1 | 1 | 0 | 0.42 |
| Mean ± STD | | | | 0.41 ± 0.11 |
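As a quick arithmetic check, the summary row of Table I can be reproduced from the three listed distances. The snippet assumes the reported spread is the sample standard deviation (`ddof=1`); that choice is an inference from the numbers, since the population form gives about 0.09 instead.

```python
import numpy as np

# Per-marker TRE values from Table I (mm).
tre_mm = np.array([0.51, 0.29, 0.42])

mean_tre = tre_mm.mean()            # arithmetic mean
std_tre = tre_mm.std(ddof=1)        # sample standard deviation (assumed)

summary = f"{mean_tre:.2f} ± {std_tre:.2f} mm"
```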

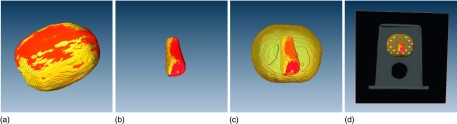

3.A.2. Segmentation accuracy

Figure 5 provides a 3D visualization comparison of the prostate and urethra between our segmentation results and the gold standards (manual segmentations from CT images). The quantitative evaluations of the surface distance and volume difference between the prostate and urethra of the TRUS and CT images of the prostate phantom are shown in Table II. The volume of the phantom prostate is 53.53 cm³. For both prostate and urethra, the mean surface distance, RMS, and maximum surface distance between our segmentations and the gold standards are in the submillimeter or millimeter range. The less than 2% absolute volume difference and the more than 97% Dice volume overlap for both structures demonstrate the accurate volume segmentation of our proposed TRUS-based segmentation method. Not only can the proposed method accurately segment the prostate, it can also accurately segment the urethra, a smaller structure located in the center of the prostate, which further indicates the robustness of our proposed method.

FIG. 5.

3D comparison of segmented prostate and urethra with the gold standards. (a) 3D prostate overlap comparison between the postregistration TRUS and gold-standard CT; (b) 3D urethra overlap between the postregistration TRUS and CT; (c) 3D overlap of the urethra inside the prostate; (d) 3D result of the prostate and urethra in 3D CT image.

TABLE II.

Surface distance and volume difference between our segmentations and CT-defined structures.

| Structure | Surface distance, mean ± STD (mm) | Surface distance, RMS (mm) | Surface distance, max (mm) | Absolute volume difference (%) | Dice volume overlap (%) |
|---|---|---|---|---|---|
| Prostate | 0.39 ± 0.25 | 0.41 | 1.32 | 1.65 | 97.84 |
| Urethra | 0.20 ± 0.16 | 0.21 | 0.68 | 1.83 | 97.75 |

3.B. Preliminary clinical study

3.B.1. Registration accuracy—TRE and FLE

Here, we used the case of a 58-yr-old patient who received HDR treatment for intermediate prostate cancer to demonstrate our proposed segmentation method. Figure 6(a) shows the intraoperative TRUS images and Fig. 6(b) displays the treatment planning CT images after catheter insertion. The fusion images between the planning CT and postregistration TRUS [Fig. 6(c)] are shown in Fig. 6(d). The prostate contour in the intraoperative TRUS image [Fig. 6(a)] was deformed to the postregistration TRUS, based on a deformable TRUS–CT registration. Finally, the prostate volume in TRUS images was integrated into the treatment planning CT.

FIG. 6.

Integration of TRUS-based prostate volume into postoperative CT images. (a1)–(a3) are TRUS images in axial, coronal, and sagittal directions; (b1)–(b3) are the postoperative CT; (c1)–(c3) are the postregistration TRUS images; (d1)–(d3) are the TRUS–CT fusion images, where the prostate volume is integrated. The close match between the gold markers (arrows) in the TRUS and CT demonstrates the accuracy of our method.

Seven patients received combined radiotherapy (external beam radiotherapy plus HDR brachytherapy) for prostate cancer treatment, while three patients received HDR monotherapy. For patients receiving combined radiotherapy, three gold markers were implanted before their external beam radiotherapy for the prostate localization during treatment. For patients receiving monotherapy, three gold markers were implanted during the HDR procedure for the prostate localization. Three gold markers were placed at the base, middle, or apex of the prostate under the TRUS guidance.

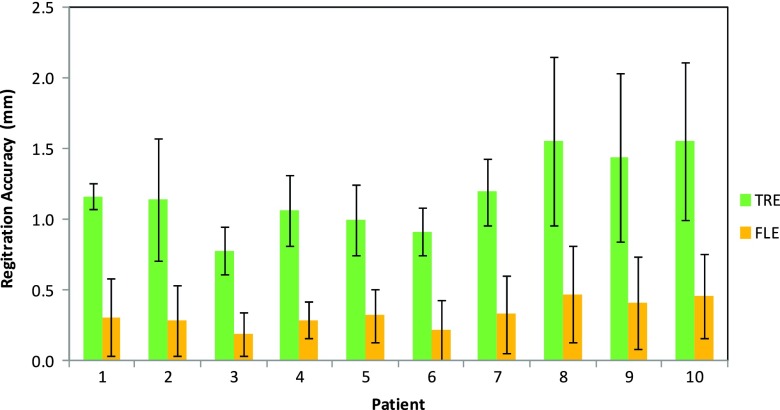

We calculated TRE of the gold markers and the FLE of the HDR catheters in the CT and postregistration TRUS images to evaluate the accuracy of our registration, as shown in Fig. 7. This figure shows the TRE and FLE for ten patients. Overall, the TRE of the gold markers for all patients was 1.18 ± 0.26 mm, and the FLE (mean surface distance) of the HDR catheters for all patients was 0.33 ± 0.09 mm. The close match between the gold markers and the HDR catheters in the TRUS and CT demonstrated the accuracy of our method.

FIG. 7.

The TRE of the gold markers and the FLE of the HDR catheters for ten patients.

3.B.2. Segmentation accuracy—comparison with MR prostate volume

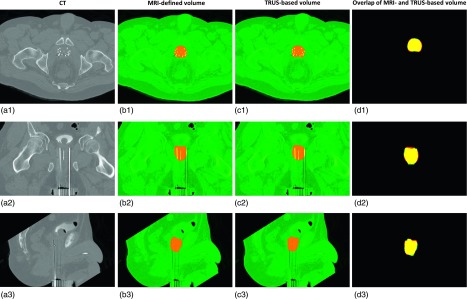

Here, we used the same 58-yr-old patient, shown in Fig. 6, to illustrate the comparison between our TRUS-based prostate segmentation and the MR-defined prostate volume. Figure 8(a) shows the treatment planning CT images. Figure 8(b) shows the CT images with the MRI-defined prostate volume, which is 40.9 cm³ for this patient. Figure 8(c) shows the CT images with our TRUS-based segmented prostate volume, which is 38.2 cm³ for this patient. Figure 8(d) displays the 3D overlap between our TRUS-based prostate volume and the MR-based prostate volume. The absolute prostate volume difference of this patient is 6.51%, and the Dice volume overlap is 92.77%. The large yellow overlap areas in the three directions show a close match between the prostate contours obtained from our prostate segmentation and the MRI-defined boundary (ground truth). In particular, our segmentation matched the MR-defined prostate very well at the base and apex, which are usually difficult areas to segment on CT images.

FIG. 8.

Comparison of the TRUS-based prostate segmentation and MR-defined prostate volume. (a1)–(a3) are the axial, coronal, and sagittal CT images; (b1)–(b3) are the CT images with MRI-defined prostate volume; (c1)–(c3) are the CT images with our TRUS-based segmented prostate volume; (d1)–(d3) are the volume overlap between our TRUS-based segmented prostate volume and MRI-based prostate volume.

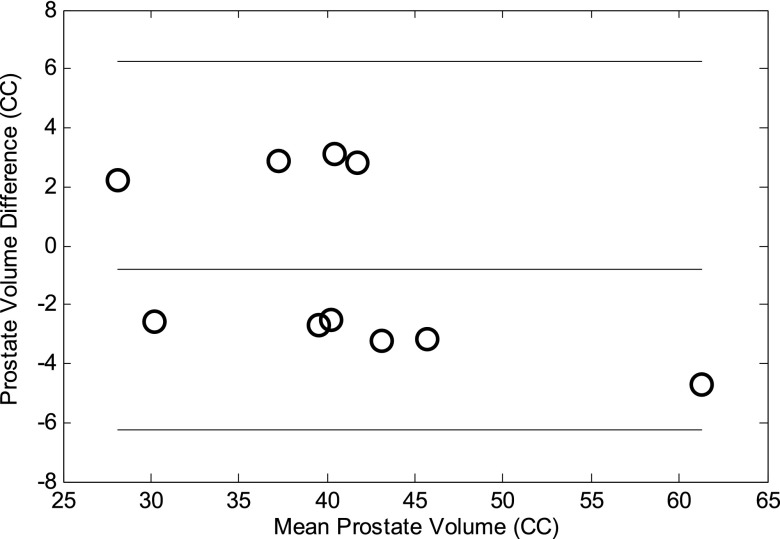

Table III lists the mean surface distances, absolute prostate volume differences, and Dice volume overlaps between our segmented prostate and the MR-defined prostate for all ten patients. The average prostate-surface difference between our approach and the corresponding MRI was around 0.60 mm; the average absolute prostate-volume difference was less than 10%; and the average Dice volume overlap was over 90%. The small surface and volume differences and the high volume overlap demonstrated the prostate volume contour accuracy of our TRUS–CT-based registration method. The Bland–Altman analysis51 is a method for statistical evaluation of agreement between two measurements. Figure 9 shows the systematic differences, the estimated bias, and the limits of agreement between the TRUS-based and MRI-based prostate volumes. The relative bias in the TRUS-based volume over the MRI-based volume was 1.7%, which may be due to the prostate swelling from the implant needles.

TABLE III.

Prostate surface and volume comparison between our segmentation and the MR-defined prostate.

| Patient | P01 | P02 | P03 | P04 | P05 | P06 | P07 | P08 | P09 | P10 | Mean ± STD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean surface distance (mm) | 0.58 | 0.61 | 0.59 | 0.57 | 0.49 | 0.63 | 0.73 | 0.57 | 0.61 | 0.56 | 0.61 ± 0.06 |
| Absolute volume difference (%) | 7.13 | 7.75 | 6.97 | 6.71 | 6.51 | 6.23 | 7.35 | 8.19 | 8.04 | 8.35 | 7.28 ± 0.86 |
| Dice volume overlap (%) | 92.72 | 91.27 | 93.89 | 92.97 | 92.77 | 92.54 | 91.41 | 90.69 | 91.14 | 90.36 | 91.89 ± 1.19 |
| TRUS prostate volume (cm³) | 41.51 | 42.03 | 43.14 | 39.02 | 38.19 | 44.11 | 58.87 | 29.21 | 38.68 | 28.94 | 40.37 ± 8.40 |

FIG. 9.

Bland–Altman analysis between our segmentation and the MR-defined prostate. The lines indicate the mean difference (the middle line), the mean difference + 2SD (the top line), and the mean difference − 2SD (the bottom line).

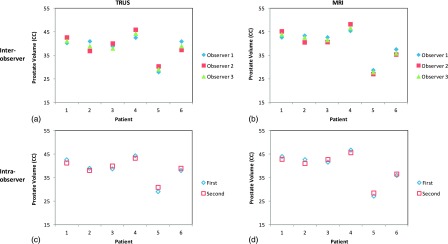
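The quantities plotted in a Bland–Altman analysis can be sketched as follows: the bias is the mean of the paired differences, and the limits of agreement are the bias ± 2SD, following the Fig. 9 caption. The function name and the volume values in the example are hypothetical and are not the patient data of this study.

```python
from statistics import mean, stdev


def bland_altman(method_a, method_b, k=2.0):
    """Return (bias, lower limit, upper limit) for paired measurements.

    bias   = mean of the paired differences (method_a - method_b)
    limits = bias -/+ k * SD of the differences (k = 2 as in the caption)
    """
    if len(method_a) != len(method_b):
        raise ValueError("paired measurements must have equal length")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - k * sd, bias + k * sd


# Hypothetical paired volumes (cm^3) from two modalities.
trus_volumes = [40.0, 35.5, 52.0, 29.0, 44.5]
mri_volumes = [39.0, 36.0, 50.5, 28.0, 44.0]

bias, lower, upper = bland_altman(trus_volumes, mri_volumes)
print(f"bias = {bias:.2f} cm^3, limits of agreement = [{lower:.2f}, {upper:.2f}] cm^3")
```

Each patient then contributes one point, with the mean of the two measurements on the horizontal axis and their difference on the vertical axis. Points falling between the two limits indicate agreement within the expected spread.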

3.B.3. Inter- and intraobserver reliability

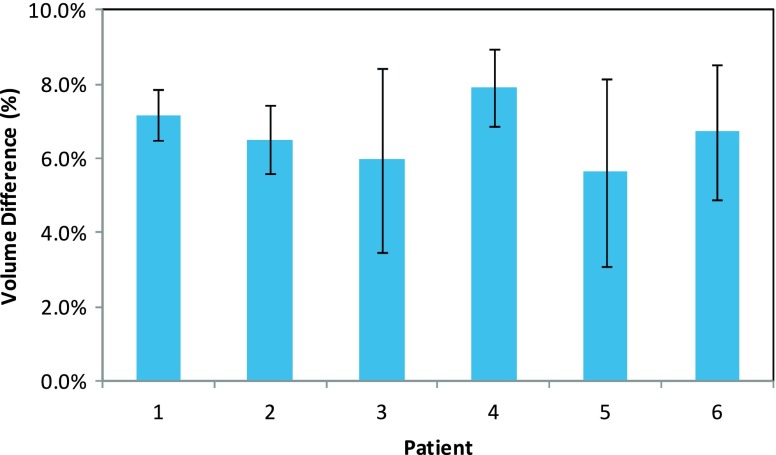

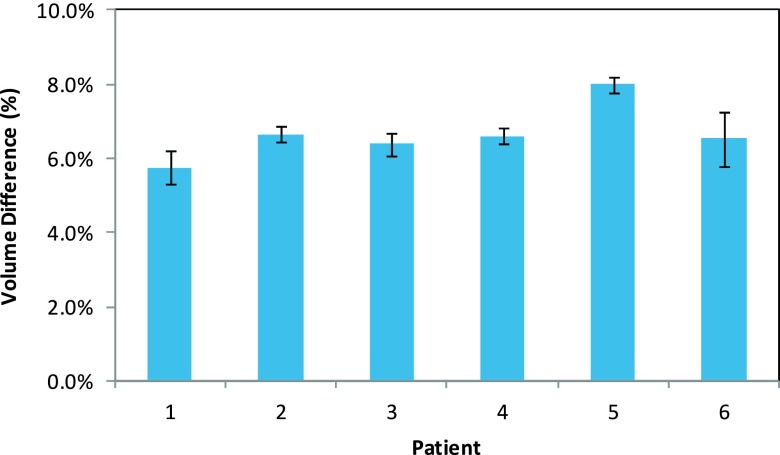

Inter- and intraobserver reliability of the prostate contours is demonstrated in Fig. 10. Among the manual segmentations of the three observers, the mean prostate volume difference was −1.13% ± 8.40%, 1.11% ± 4.70%, and 0.31% ± 4.94% for the TRUS, and 1.79% ± 6.16%, −0.85% ± 3.32%, and 1.12% ± 3.16% for the MRI. Between the two measurements of the same observer, the mean prostate volume difference was −0.93% ± 3.19% for the TRUS and −0.07% ± 3.56% for the MRI. Figure 11 compares the automatically segmented prostate CT volumes based on the three observers’ segmentations and on one observer’s segmentations at two different times. The mean prostate volume difference for our automated CT segmentation was −1.95% ± 7.50%, 1.64% ± 4.51%, and 0.05% ± 4.85% based on the manual segmentations of the three observers, and −0.04% ± 3.30% based on the manual segmentations of the same observer at two different times. The inter- and intraobserver reliability study showed consistency in the manual segmentations, as well as in our automated segmentations based on the various sets of manual segmentations.

FIG. 10.

Inter- and intraobserver reliability of the prostate contours. Among the three observers, prostate volume comparison for TRUS (a) and MRI (b); between the two measurements of the same observer, prostate volume comparison for TRUS (c) and MRI (d). Interobserver reliability was demonstrated by the agreement between the three observers’ prostate volumes, and intraobserver reliability was demonstrated by the agreement between the two sets of prostate volumes contoured by one observer.

FIG. 11.

Prostate volume comparison of our automated segmentations based on the three observers’ segmentations (a) and the same observer’s segmentations at two different times (b).

Figure 12 shows the volume difference between our automated segmentation volumes and the MR-defined prostate volumes for the three observers. There are no significant prostate volume differences among the three observers (p-values = 0.43, 0.32, and 0.28 for the three pairwise comparisons). Figure 13 shows the volume difference between our automated segmentation volumes and the MR-defined prostate volumes for the same observer at two different times. There is no significant volume difference between the two measurements (p-value = 0.37).
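The text does not state which statistical test produced these p-values. As an illustration only, the sketch below uses an exact paired sign-flip permutation test as a stand-in for comparing two observers’ volume differences measured on the same patients. The function name and the example values are hypothetical.

```python
from itertools import product


def paired_sign_flip_test(x, y):
    """Two-sided exact permutation p-value for paired samples.

    Under the null hypothesis the sign of each paired difference is
    arbitrary, so every one of the 2**n sign assignments is enumerated
    (1024 assignments for n = 10 patients).
    """
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(x, y)]
    observed = abs(sum(diffs))
    extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        stat = abs(sum(s * d for s, d in zip(signs, diffs)))
        if stat >= observed - 1e-12:  # tolerance for floating-point ties
            extreme += 1
    return extreme / total


# Hypothetical volume differences (%) for two observers on the same patients.
observer_1 = [6.9, 7.4, 7.1, 6.5, 8.0, 7.7]
observer_2 = [7.2, 7.0, 7.3, 6.8, 7.6, 7.9]
print(f"p = {paired_sign_flip_test(observer_1, observer_2):.3f}")
```

A large p-value from such a test is compatible with no systematic difference between the two observers, although with ten patients it cannot rule out a small one.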

FIG. 12.

The volume difference between our automated segmentation volumes and the MR-defined prostate volumes for the three observers (interobserver comparison).

FIG. 13.

The volume difference between our automated segmentation volumes and the MR-defined prostate volumes for the same observer at two different times (intraobserver comparison).

4. DISCUSSION

We proposed a novel CT prostate segmentation approach through TRUS–CT deformable registration based on the HDR catheter locations, which may significantly improve the prostate contour accuracy in US-guided CT-based prostate HDR treatment. This method was tested through a prostate-phantom study and a pilot clinical study. In the prostate-phantom study, the mean displacement of the three implanted gold markers was less than 0.5 mm. In addition, the small surface and volume difference of both the prostate and the urethra further demonstrated that our approach not only captured the external deformation (prostate contour), but also the internal deformation (urethra). In the clinical study, we further demonstrated its clinical feasibility and validated the segmentation accuracy with the patients’ MRIs.

The novelty of our approach is the integration of the TRUS-based prostate volume into CT-based prostate HDR treatment planning. To the best of our knowledge, this is the first study on CT prostate segmentation with catheters based on the TRUS volume in HDR brachytherapy. This approach has three distinctive strengths. (1) We utilize 3D TRUS images to provide accurate prostate delineation and improve prostate contours for CT-based HDR treatment planning. (2) The TRUS and planning CT images are both acquired after catheter insertion, so the HDR catheters, uniformly and symmetrically distributed inside the prostate gland, provide exceptional landmarks for the subsequent TRUS–CT registration to capture the nonrigid prostate deformation between the TRUS and CT modalities. (3) Our approach is clinically feasible and can be easily adapted into the HDR procedure. The 3D TRUS data are acquired in the operating room during an HDR procedure, and the patient scan takes 1–3 min. Therefore, no prior TRUS or additional patient visits for imaging are required. In addition, the TRUS images acquired during the HDR procedure provide the most authentic prostate volume for HDR treatment planning as compared with patients’ previous TRUS or MRI.

The robustness of the proposed prostate segmentation results from the accurate registration between the TRUS and CT images. Registration between CT and US images of the prostate is often very challenging, mainly because the anatomical structures in the US images are embedded in a noisy, low-contrast environment, with little distinctive information regarding the material density measured in the CT images. To overcome these difficulties, many approaches have been proposed for prostate registration between CT and US. For example, Fallavollita et al. reported an intensity-based registration method using TRUS and CT. Their registration error was 0.54 ± 0.11 mm in the phantom study and 2.86 ± 1.26 mm in the clinical study.52,53 Using a similar registration method, Dehghan et al. reported a 0.70 ± 0.20 mm error in the implanted seed locations for the phantom study and a 1.80 ± 0.90 mm registration error for the clinical study.52,53 Even et al. used 1–2 fiducial markers and 3–4 needle tips to perform a rigid registration between TRUS and cone-beam CT, and their registration errors were within 3 mm for 85% of their patients.54 Yang et al. presented a hybrid approach that simultaneously optimized the similarities from the point-based registration and the volume matching method. In a phantom study, a target registration error of 3 voxels (1.5 mm) was reported.39 Firle et al. used the segmented structures (e.g., prostate or urethra) for US and CT prostate registration. Their prostate-phantom study demonstrated a 0.55–1.67 mm accuracy, but no clinical study was reported.55 In this study, we proposed a catheter-based registration method. In the HDR procedure, the catheters were fixed to the needle template after the completion of the catheter insertion in the operating room to ensure no catheter movement or displacement relative to the prostate gland throughout the brachytherapy procedure.
In order to deliver a uniform dose to the prostate and spare the surrounding normal tissues, such as the bladder and the rectum, the catheters were evenly placed to cover the entire prostate. Such uniformly and symmetrically distributed catheters provide exceptional landmarks to capture the nonrigid prostate deformation between the TRUS and CT images. Even though some catheters may be curved due to deflection, manual catheter segmentation can easily capture the curvatures. In addition, we chose a B-spline transformation model, in which the displacement of a point is determined only by the control points in its immediate neighborhood, so the transformation is locally controlled.56 Because the deformations caused by a transrectal probe are spatially localized, this locally controlled transformation could be advantageous for registering TRUS images and results in smooth transformation fields. As a result, our registration between the CT and US prostate images achieved submillimeter accuracy in the phantom study and an accuracy of 1.18 ± 0.26 mm in the clinical study.
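The local-control property of a B-spline transformation can be illustrated with a minimal one-dimensional sketch. It assumes a uniform cubic B-spline on a regular control-point grid, which is a simplification for illustration and not the 3D catheter-driven registration used in this study. The function names, grid spacing, and control-point values are hypothetical.

```python
def cubic_bspline_weights(u):
    """Four uniform cubic B-spline basis weights for a fraction u in [0, 1)."""
    return (
        (1 - u) ** 3 / 6.0,
        (3 * u ** 3 - 6 * u ** 2 + 4) / 6.0,
        (-3 * u ** 3 + 3 * u ** 2 + 3 * u + 1) / 6.0,
        u ** 3 / 6.0,
    )


def displacement(x, control, spacing):
    """Displacement at position x from a 1D grid of control-point displacements.

    control[i] is located at coordinate (i - 1) * spacing, so only the four
    control points control[i] .. control[i + 3] around x contribute.
    """
    i = int(x // spacing)
    if x < 0 or i + 3 >= len(control):
        raise ValueError("x lies outside the support of the control grid")
    u = x / spacing - i
    weights = cubic_bspline_weights(u)
    return sum(w * control[i + k] for k, w in enumerate(weights))


# Ten control points, 10 mm apart, all at rest except one that is moved by 1 mm.
control = [0.0] * 10
control[7] = 1.0

near = displacement(55.0, control, 10.0)  # uses control[5..8]: affected
far = displacement(5.0, control, 10.0)    # uses control[0..3]: unaffected
print(f"displacement near the moved control point: {near:.3f} mm")
print(f"displacement far from it: {far:.3f} mm")
```

Moving one control point changes the displacement only within its four neighboring grid intervals. Because of this, a spatially localized deformation, such as the probe-induced deformation mentioned above, can be modeled without disturbing distant tissue.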

In recent years, many prostate segmentation methods based on CT images have been proposed, and these approaches can be broadly classified into three main categories: model-based, classification-based, and registration-based methods.26 Model-based methods16–21 construct statistical models based on the prostate shape or appearance to guide segmentation in a new set of images. Feng et al. presented a deformable-model-based segmentation method that uses both the shape and appearance information learned from previous images to guide an automatic segmentation of new CT images.17 Chowdhury et al. proposed a linked statistical shape model that links the shape variations of a structure of interest across MR and CT imaging modalities to concurrently segment the prostate on the MRI and CT images.21 In the classification-based methods,21–27 the segmentation process is formulated as a classification problem: classifiers are trained on the training images and then used to classify each voxel in the new image as belonging to the prostate or the nonprostate region. Li et al. presented an online-learning, patient-specific classification method based on the location-adaptive image context to segment the prostate in CT images.22 Liao et al. used a patch-based representation in the discriminative feature space with logistic sparse LASSO as the anatomical signature to deal with the low-contrast problem in prostate CT images, and designed a multiatlas label fusion method formulated under a sparse representation framework to segment the prostate.26 Finally, registration-based methods28,29 explicitly estimate the deformation field from the planning image to the treatment image so that the segmented prostate in the planning image can be warped to the treatment image space to localize the prostate in the treatment images. Davis et al. exemplify the registration-based approach, combining a large-deformation image registration with a bowel gas segmentation and deflation algorithm for adaptive radiation therapy of the prostate.28

We compared our method with six previous prostate CT segmentation methods proposed by Chen et al.,16 Feng et al.,17 Li et al.,22 Liao et al.,23,26 and Davis et al.28 The mean Dice volume overlap of these six methods ranged between 82.0% and 90.9%. In contrast, our approach integrated the accurate TRUS prostate volume into the CT images, and the average Dice volume overlap between our segmentation and the MRI-based prostate volume was 91.89%. The volume discrepancies mostly occurred at the base and apex of the prostate, where the prostate boundary on the MRI is sometimes unclear. Therefore, the volume discrepancies may be related to MRI-based manual segmentation error. Many other factors, such as patient position, the rectal probe, and catheter-induced prostate swelling, could also contribute to the discrepancies.

For future studies, we will either decrease the number of catheters used in the deformable registration or incorporate automatic catheter recognition in TRUS images to speed up the registration and segmentation. We will also introduce automatic segmentation methods for TRUS prostate images to eliminate physicians’ manual contours.57–64 Meanwhile, we are conducting a clinical study with a larger cohort to further investigate treatment outcomes (e.g., cancer control and side effects) in the clinic.

5. CONCLUSION

Accurate segmentation of the prostate volume in the treatment planning CT is a key step to the success of CT-based HDR prostate brachytherapy. We have developed a novel segmentation approach that improves the prostate contour by utilizing the intraoperative TRUS-based prostate volume in the treatment planning. In a preliminary study of ten patients, we demonstrated its clinical feasibility and validated the accuracy of the segmentation method with MRI-defined prostate volumes. Our multimodality technology, which incorporates the accurate TRUS prostate volume and fits efficiently into the HDR brachytherapy workflow, could improve the prostate contour in planning CT, enable accurate dose planning and delivery, and potentially enhance prostate HDR treatment outcomes.

ACKNOWLEDGMENTS

This research is supported in part by the DOD Prostate Cancer Research Program (PCRP) Award W81XWH-13-1-0269 and the National Cancer Institute (NCI) Grant No. CA114313. The authors report no conflicts of interest in conducting the research.

REFERENCES

- 1.Challapalli A., Jones E., Harvey C., Hellawell G. O., and Mangar S. A., “High dose rate prostate brachytherapy: An overview of the rationale, experience and emerging applications in the treatment of prostate cancer,” Br. J. Radiol. 85, S18–S27 (2012). 10.1259/bjr/15403217

- 2.Williams S. G., Taylor J. M. G., Liu N., Tra Y., Duchesne G. M., Kestin L. L., Martinez A., Pratt G. R., and Sandler H., “Use of individual fraction size data from 3756 patients to directly determine the alpha/beta ratio of prostate cancer,” Int. J. Radiat. Oncol. 68, 24–33 (2007). 10.1016/j.ijrobp.2006.12.036

- 3.Sato M., Mori T., Shirai S., Kishi K., Inagaki T., and Hara I., “High-dose-rate brachytherapy of a single implant with two fractions combined with external beam radiotherapy for hormone-naive prostate cancer,” Int. J. Radiat. Oncol. 72, 1002–1009 (2008). 10.1016/j.ijrobp.2008.02.055

- 4.Pisansky T. M., Gold D. G., Furutani K. M., Macdonald O. K., McLaren R. H., Mynderse L. A., Wilson T. M., Hebl J. R., and Choo R., “High-dose-rate brachytherapy in the curative treatment of patients with localized prostate cancer,” Mayo Clin. Proc. 83, 1364–1372 (2008). 10.4065/83.12.1364

- 5.Jereczek-Fossa B. A. and Orecchia R., “Evidence-based radiation oncology: Definitive, adjuvant and salvage radiotherapy for non-metastatic prostate cancer,” Radiother. Oncol. 84, 197–215 (2007). 10.1016/j.radonc.2007.04.013

- 6.Stewart A. J. and Jones B., Radiobiologic Concepts for Brachytherapy (Williams & Wilkins, Philadelphia, PA: Lippincott, 2002).

- 7.“Prostate cancer,” NCCN clinical practice guidelines in oncology, V.2.2009 (2009) (available URL: http://www.nccn.org/professionals/physician_gls/PDF/prostate.pdf).

- 8.Mettlin C. J., Murphy G. P., McDonald C. J., and Menck H. R., “The National Cancer Data base Report on increased use of brachytherapy for the treatment of patients with prostate carcinoma in the U.S.,” Cancer 86, 1877–1882 (1999).

- 9.Cooperberg M. R., Lubeck D. P., Meng M. V., Mehta S. S., and Carroll P. R., “The changing face of low-risk prostate cancer: Trends in clinical presentation and primary management,” J. Clin. Oncol. 22, 2141–2149 (2004). 10.1200/JCO.2004.10.062

- 10.Acosta O., Dowling J., Drean G., Simon A., Crevoisier R., and Haigron P., “Multi-atlas-based segmentation of pelvic structures from CT scans for planning in prostate cancer radiotherapy,” in Abdomen and Thoracic Imaging, edited by El-Baz A. S., Saba L., and Suri J. (Springer, NY, 2014), pp. 623–656.

- 11.Dubois D. F., Prestidge B. R., Hotchkiss L. A., Prete J. J., and Bice W. S., “Intraobserver and interobserver variability of MR imaging- and CT-derived prostate volumes after transperineal interstitial permanent prostate brachytherapy,” Radiology 207, 785–789 (1998). 10.1148/radiology.207.3.9609905

- 12.Yang X., Liu T., Marcus D. M., Jani A. B., Ogunleye T., Curran W. J., and Rossi P. J., “A novel ultrasound-CT deformable registration process improves physician contouring during CT-based HDR brachytherapy for prostate cancer,” Brachytherapy 13, S67–S68 (2014). 10.1016/j.brachy.2014.02.316

- 13.Roach M., FaillaceAkazawa P., Malfatti C., Holland J., and Hricak H., “Prostate volumes defined by magnetic resonance imaging and computerized tomographic scans for three-dimensional conformal radiotherapy,” Int. J. Radiat. Oncol. 35, 1011–1018 (1996). 10.1016/0360-3016(96)00232-5

- 14.Rasch C., Barillot I., Remeijer P., Touw A., van Herk M., and Lebesque J. V., “Definition of the prostate in CT and MRI: A multi-observer study,” Int. J. Radiat. Oncol. 43, 57–66 (1999). 10.1016/S0360-3016(98)00351-4

- 15.Hoffelt S. C., Marshall L. M., Garzotto M., Hung A., Holland J., and Beer T. M., “A comparison of CT scan to transrectal ultrasound-measured prostate volume in untreated prostate cancer,” Int. J. Radiat. Oncol. 57, 29–32 (2003). 10.1016/S0360-3016(03)00509-1

- 16.Chen S. Q., Lovelock D. M., and Radke R. J., “Segmenting the prostate and rectum in CT imagery using anatomical constraints,” Med. Image Anal. 15, 1–11 (2011). 10.1016/j.media.2010.06.004

- 17.Feng Q. J., Foskey M., Chen W. F., and Shen D. G., “Segmenting CT prostate images using population and patient-specific statistics for radiotherapy,” Med. Phys. 37, 4121–4132 (2010). 10.1118/1.3464799

- 18.Stough J. V., Broadhurst R. E., Pizer S. M., and Chaney E. L., “Regional appearance in deformable model segmentation,” Lect. Notes Comput. Sci. 4584, 532–543 (2007).

- 19.Freedman D., Radke R. J., Zhang T., Jeong Y., Lovelock D. M., and Chen G. T. Y., “Model-based segmentation of medical imagery by matching distributions,” IEEE Trans. Med. Imaging 24, 281–292 (2005). 10.1109/TMI.2004.841228

- 20.Smitsmans M. H., Wolthaus J. W., Artignan X., de Bois J., Jaffray D. A., Lebesque J. V., and van Herk M., “Automatic localization of the prostate for on-line or off-line image-guided radiotherapy,” Int. J. Radiat. Oncol., Biol., Phys. 60, 623–635 (2004). 10.1016/j.ijrobp.2004.05.027

- 21.Chowdhury N., Toth R., Chappelow J., Kim S., Motwani S., Punekar S., Lin H. B., Both S., Vapiwala N., Hahn S., and Madabhushi A., “Concurrent segmentation of the prostate on MRI and CT via linked statistical shape models for radiotherapy planning,” Med. Phys. 39, 2214–2228 (2012). 10.1118/1.3696376

- 22.Li W., Liao S., Feng Q. J., Chen W. F., and Shen D. G., “Learning image context for segmentation of the prostate in CT-guided radiotherapy,” Phys. Med. Biol. 57, 1283–1308 (2012). 10.1088/0031-9155/57/5/1283

- 23.Liao S. and Shen D. G., “A feature-based learning framework for accurate prostate localization in CT images,” IEEE Trans. Image Process. 21, 3546–3559 (2012). 10.1109/TIP.2012.2194296

- 24.Haas B., Coradi T., Scholz M., Kunz P., Huber M., Oppitz U., Andre L., Lengkeek V., Huyskens D., Van Esch A., and Reddick R., “Automatic segmentation of thoracic and pelvic CT images for radiotherapy planning using implicit anatomic knowledge and organ-specific segmentation strategies,” Phys. Med. Biol. 53, 1751–1771 (2008). 10.1088/0031-9155/53/6/017

- 25.Ghosh P. and Mitchell M., “Segmentation of medical images using a genetic algorithm,” in GECCO 2006: Genetic and Evolutionary Computation Conference (ACM, New York, NY, 2006), Vols. 1 and 2, pp. 1171–1178. 10.1145/1143997.1144183

- 26.Liao S., Gao Y. Z., Lian J., and Shen D. G., “Sparse patch-based label propagation for accurate prostate localization in CT images,” IEEE Trans. Med. Imaging 32, 419–434 (2013). 10.1109/TMI.2012.2230018

- 27.Ghosh P. and Mitchell M., “Prostate segmentation on pelvic CT images using a genetic algorithm,” Proc. Soc. Photo.-Opt. Instrum. 6914, 91442–91449 (2008). 10.1117/12.770834

- 28.Davis B. C., Foskey M., Rosenman J., Goyal L., Chang S., and Joshi S., “Automatic segmentation of intra-treatment CT images for adaptive radiation therapy of the prostate,” in Medical Image Computing and Computer-Assisted Intervention - Miccai 2005 (Springer Berlin Heidelberg, Palm Springs, CA, 2005), Vol. 3749, pp. 442–450. 10.1007/11566465_55

- 29.Foskey M., Davis B., Goyal L., Chang S., Chaney E., Strehl N., Tomei S., Rosenman J., and Joshi S., “Large deformation three-dimensional image registration in image-guided radiation therapy,” Phys. Med. Biol. 50, 5869–5892 (2005). 10.1088/0031-9155/50/24/008

- 30.Lee J. S. and Chung B. H., “Transrectal ultrasound versus magnetic resonance imaging in the estimation of prostate volume as compared with radical prostatectomy specimens,” Urol. Int. 78, 323–327 (2007). 10.1159/000100836

- 31.Weiss B. E., Wein A. J., Malkowicz S. B., and Guzzo T. J., “Comparison of prostate volume measured by transrectal ultrasound and magnetic resonance imaging: Is transrectal ultrasound suitable to determine which patients should undergo active surveillance?,” Urol. Oncol.:Semin. Orig. Invest. 31, 1436–1440 (2013). 10.1016/j.urolonc.2012.03.002

- 32.Tewari A., Indudhara R., Shinohara K., Schalow E., Woods M., Lee R., Anderson C., and Narayan P., “Comparison of transrectal ultrasound prostatic volume estimation with magnetic resonance imaging volume estimation and surgical specimen weight in patients with benign prostatic hyperplasia,” J. Clin. Ultrasound 24, 169–174 (1996).

- 33.Mazaheri Y., Shukla-Dave A., Muellner A., and Hricak H., “MR imaging of the prostate in clinical practice,” Magn. Reson. Mater. Phys. 21, 379–392 (2008). 10.1007/s10334-008-0138-y

- 34.Peikari M., Chen T. K., Lasso A., Heffter T., Fichtinger G., and Burdette E. C., “Characterization of ultrasound elevation beamwidth artifacts for prostate brachytherapy needle insertion,” Med. Phys. 39, 246–256 (2012). 10.1118/1.3669488

- 35.Chui H. and Rangarajan A., “A new point matching algorithm for non-rigid registration,” Comput. Vis. Image Und. 89, 114–141 (2002). 10.1016/S1077-3142(03)00009-2

- 36.Belongie S., Malik J., and Puzicha J., “Shape matching and object recognition using shape contexts,” IEEE Trans. Pattern Anal. 24, 509–522 (2002). 10.1109/34.993558

- 37.Zhan Y., Ou Y., Feldman M., Tomaszeweski J., Davatzikos C., and Shen D., “Registering histologic and MR images of prostate for image-based cancer detection,” Acad. Radiol. 14(11), 1367–1381 (2007). 10.1016/j.acra.2007.07.018

- 38.Yuil A. L. and Kosowsky J. J., “Statistical physics algorithms that converge,” Neural Comput. 6, 341–356 (1994). 10.1162/neco.1994.6.3.341

- 39.Yang X. F., Akbari H., Halig L., and Fei B. W., “3D non-rigid registration using surface and local salient features for transrectal ultrasound image-guided prostate biopsy,” Proc. SPIE 7964, 79642V1–7964V8 (2011). 10.1117/12.878153

- 40.Hardy R. L., “Hardy’s multiquadric–biharmonic method for gravity field predictions II,” Comput. Math. Appl. 41, 1043–1048 (2001). 10.1016/S0898-1221(00)00338-2

- 41.Bookstein F. L., “Principal warps - thin-plate splines and the decomposition of deformations,” IEEE Trans. Pattern Anal. 11, 567–585 (1989). 10.1109/34.24792

- 42.Stammberger T., Hohe J., Englmeier K. H., Reiser M., and Eckstein F., “Elastic registration of 3D cartilage surfaces from MR image data for detecting local changes in cartilage thickness,” Magn. Reson. Med. 44, 592–601 (2000).

- 43.Arad N. and Reisfeld D., “Image warping using few anchor points and radial functions,” Comput. Graph. Forum 14, 35–46 (1995). 10.1111/1467-8659.1410035

- 44.Xie Z. Y. and Farin G. E., “Image registration using hierarchical B-splines,” IEEE Trans. Vis. Comput. Graph. 10, 85–94 (2004). 10.1109/TVCG.2004.1260760

- 45.Mazaheri Y., Bokacheva L., Kroon D. J., Akin O., Hricak H., Chamudot D., Fine S., and Koutcher J. A., “Semi-automatic deformable registration of prostate MR images to pathological slices,” J. Magn. Reson. Imaging 32, 1149–1157 (2010). 10.1002/jmri.22347

- 46.Moghari M. H. and Abolmaesumi P., “Distribution of target registration error for anisotropic and inhomogeneous fiducial localization error,” IEEE Trans. Med. Imaging 28, 799–813 (2009). 10.1109/TMI.2009.2020751

- 47.Smith W. L., Lewis C., Bauman G., Rodrigues G., D’Souza D., Ash R., Ho D., Venkatesan V., Downey D., and Fenster A., “Prostate volume contouring: A 3D analysis of segmentation using 3DTRUS, CT, and MR,” Int. J. Radiat. Oncol., Biol., Phys. 67, 1238–1247 (2007). 10.1016/j.ijrobp.2006.11.027

- 48.Dean C. J., Sykes J. R., Cooper R. A., Hatfield P., Carey B., Swift S., Bacon S. E., Thwaites D., Sebag-Montefiore D., and Morgan A. M., “An evaluation of four CT–MRI co-registration techniques for radiotherapy treatment planning of prone rectal cancer patients,” Br. J. Radiol. 85, 61–68 (2012). 10.1259/bjr/11855927

- 49.Betgen A., Pos F., Schneider C., van Herk M., and Remeijer P., “Automatic registration of the prostate on MRI scans to CT scans for radiotherapy target delineation,” Radiother. Oncol. 84, S119 (2007).

- 50.Levy D., Schreibmann E., Thorndyke B., Li T., Yang Y., Boyer A., and Xing L., “Registration of prostate MRI/MRSI and CT studies using the narrow band approach,” Med. Phys. 32, 1895 (2005). 10.1118/1.1997433

- 51.Bland J. M. and Altman D. G., “Statistical methods for assessing agreement between two methods of clinical measurement,” Lancet 1, 307–310 (1986). 10.1016/S0140-6736(86)90837-8

- 52.Fallavollita P., Aghaloo Z. K., Burdette E. C., Song D. Y., Abolmaesumi P., and Fichtinger G., “Registration between ultrasound and fluoroscopy or CT in prostate brachytherapy,” Med. Phys. 37, 2749–2760 (2010). 10.1118/1.3416937

- 53.Dehghan E., Lee J., Fallavollita P., Kuo N., Deguet A., Burdette E. C., Song D., Prince J. L., and Fichtinger G., “Point-to-volume registration of prostate implants to ultrasound,” in Medical Image Computing and Computer-Assisted Intervention (Miccai 2011) (Springer Berlin Heidelberg, Toronto, Canada, 2011), Vol. 6892, pp. 615–622.

- 54.Even A. J., Nuver T. T., Westendorp H., Hoekstra C. J., Slump C. H., and Minken A. W., “High-dose-rate prostate brachytherapy based on registered transrectal ultrasound and in-room cone-beam CT images,” Brachytherapy 13, 128–136 (2013). 10.1016/j.brachy.2013.08.001

- 55.Firle E. A., Chen W., and Wesarg S., “Registration of 3D U/S and CT images of the prostate,” in Cars 2002: Computer Assisted Radiology and Surgery, Proceedings (Springer Berlin Heidelberg, Congress and Exhibition Paris, 2002), pp. 527–532.

- 56.Yang X., Rossi P., Ogunleye T., Jani A. B., Curran W. J., and Liu T., “A new CT prostate segmentation for CT-based HDR brachytherapy,” Proc. SPIE 9036, 90362K-9 (2014).

- 57.Yang X. and Fei B., “3D prostate segmentation of ultrasound images combining longitudinal image registration and machine learning,” Proc. SPIE 8316, 83162O (2012). 10.1117/12.912188

- 58.Yang X., Schuster D., Master V., Nieh P., Fenster A., and Fei B., “Automatic 3D segmentation of ultrasound images using atlas registration and statistical texture prior,” Proc. SPIE 7964, 796432 (2011). 10.1117/12.877888

- 59.Yan P., Xu S., Turkbey B., and Kruecker J., “Adaptively learning local shape statistics for prostate segmentation in ultrasound,” IEEE Trans. Biomed. Eng. 58, 633–641 (2011). 10.1109/TBME.2010.2094195

- 60.Zhan Y. and Shen D., “Deformable segmentation of 3-D ultrasound prostate images using statistical texture matching method,” IEEE Trans. Med. Imaging 25, 256–272 (2006). 10.1109/TMI.2005.862744

- 61.Noble J. A. and Boukerroui D., “Ultrasound image segmentation: A survey,” IEEE Trans. Med. Imaging 25, 987–1010 (2006). 10.1109/TMI.2006.877092

- 62.Shen D., Zhan Y., and Davatzikos C., “Segmentation of prostate boundaries from ultrasound images using statistical shape model,” IEEE Trans. Med. Imaging 22, 539–551 (2003). 10.1109/TMI.2003.809057

- 63.Zhan Y. Q. and Shen D. G., “Automated segmentation of 3D US prostate images using statistical texture-based matching method,” in Medical Image Computing and Computer-Assisted Intervention - Miccai 2003 (Springer Berlin Heidelberg, Montréal, Canada, 2003), Vol. 2878, pp. 688–696.

- 64.Qiu W., Yuan J., Ukwatta E., Sun Y., Rajchl M., and Fenster A., “Prostate segmentation: An efficient convex optimization approach with axial symmetry using 3-D TRUS and MR images,” IEEE Trans. Med. Imaging 33, 947–960 (2014). 10.1109/TMI.2014.2300694