Abstract

Users in virtual environments (VEs) often find navigation more difficult than in the real world. Our new locomotion interface, Improved Redirection with Distractors (IRD), enables users to walk in larger-than-tracked-space VEs without predefined waypoints. We compared IRD to the current best interface, really walking, by conducting a user study measuring navigational ability. Our results show that IRD users can really walk through VEs that are larger than the tracked space and can point to targets and complete maps of VEs no worse than when really walking.

Index Terms: I.3.6 [Computer Graphics]: Methodology and Techniques—Interaction techniques, I.3.7 [Computer Graphics]: Three-Dimensional Graphics and Realism—Virtual Reality, H.5.1 [Information Interfaces and Presentation]: Multimedia Information Systems-Artificial—augmented and virtual realities

1 Introduction

Navigation is important for virtual environment (VE) training applications in which spatial understanding of the VE must transfer to the real world. People navigate every day in the real world without problems; however, users often find it challenging to transfer spatial knowledge acquired in a VE to the real world [7, 14, 8]. Development of locomotion interfaces that support navigation, both its wayfinding and locomotion components [5], is required for large VE exploration, and will improve VE training-transfer applications and reduce user disorientation.

Previous research suggests that locomotion interfaces that provide users with vestibular and proprioceptive feedback improve user navigation performance and are less likely to cause simulator sickness than locomotion interfaces that do not stimulate both systems [3, 16]. Recent research also suggests that users navigate best in VEs with real-walking locomotion interfaces [16]. VE locomotion interfaces such as walking-in-place, omni-directional treadmills, or bicycles [9, 4] do not stimulate the proprioceptive and vestibular systems as effectively as really walking.

Real-walking locomotion interfaces enable better user navigation; however, the user must be tracked, which restricts the VE size to the size of the tracked space. Current interfaces that enable real walking in larger-than-tracked-space VEs include Redirected Walking [15], scaled-translational-gain [20, 21], seven-league-boots [10], and Motion Compression [12]. Each of these interfaces transforms the VE or user motion by rotating or scaling. While such transformations enable large-scale real walking in VEs, the effect of transformations on navigational ability is unknown.

Additionally, with such techniques, users may attempt to walk out of the tracked space and a reorientation technique must be used [13]. Reorientation techniques stop the user and rotate the VE around the user to place the desired user path inside the tracked space. Previous research suggests that distractors, virtual objects or sounds in the VE, are a promising reorientation technique because they are natural and preferred by users, and do not reduce presence [13].

We hypothesize that a locomotion interface that incorporates real walking, transformations of the VE around the user, and distractors is a promising interface to support user navigation in large VEs. To test this hypothesis, we developed Improved Redirection with Distractors (IRD), a real-walking locomotion interface that enables users to freely walk in larger-than-tracked-space VEs. We evaluated our system using common navigation and wayfinding metrics. In this paper, we present IRD and compare it to the current best approach, real walking, through a user study. Our results show that users can navigate and wayfind no worse when using IRD compared to real walking.

2 Background

A review of large-scale real-walking interfaces and reorientation techniques can be found in [13]. Additionally, [2] proposed rotating and scaling step size for a building walkthrough in which participants locomote through “portals” that teleport to different rooms in the VE. The system is effective for locomoting through buildings; however, teleportation hinders navigational ability [6].

Search tasks are commonly used for locomotion-interface evaluation exploring navigational ability and VE training-transfer of spatial knowledge [1, 18, 22]. Our search task evaluation includes naïve search, in which targets have not yet been seen, and primed search, in which targets have previously been seen. Point-to-target techniques, in which users are asked to point to previously seen targets within the VE that are currently out of view, are a measure of a user’s ability to wayfind within VEs [3]. Map completion requires users to place targets on a map that correspond with VE locations. Maps are often used because they are the navigation metaphor with which people are most familiar [5]. We evaluate our interface through a user study measuring these common navigation and wayfinding metrics.

3 Improved Redirection with Distractors (IRD)

We designed and built Improved Redirection with Distractors (IRD), a locomotion interface that enables users to really walk in larger-than-tracked-space VEs. Our locomotion interface imperceptibly rotates the VE around the user, as in Redirected Walking [15], while eliminating the need for predefined waypoints by using distractors to stop the user when rotating the predicted user path back toward the center of the tracked space [13].

With IRD, the VE continuously and imperceptibly rotates around the user, attempting to keep the user’s immediate future path within the tracked space. The amount of imperceptible rotation added to the system is based on the angular speed of the user’s head turn, h. We rotate the scene based on head turn rate because user perception of rotation is most inaccurate during head turns [15]. We increase the total rotation of the VE by a percentage of the user’s angular head turn speed, where our increased-rotation value, r, is based on previous work [17, 11]: a 3% increase when rotating with the head turn and 1% when rotating against the head turn. This imperceptible increase in rotation is applied every frame. The direction of VE rotation is based on a steer-to-center algorithm in which the predicted user path (based on the average user heading direction over the past second) is continuously steered toward the center of the tracked space [15].

We use distractors to keep users from walking out of the tracked space and to redirect the user’s current path toward the center of the tracked space. When the user approaches the edge of the tracked space, a distractor appears, signaling the user to stop. The distractor moves back and forth in front of the user. Users have previously been instructed to turn their heads back and forth while watching the distractor. As the user is turning her head, the predicted path is rotated toward the center of the lab. Previous results suggest that users are less aware of increased rotation when focusing on visual distractors; therefore, the percentage of increased rotation rate r is doubled when distractors are in use [13].
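The rotation rule described in this section can be sketched as follows. This is a minimal illustration, not the implementation used in the study: the function names, the 2D coordinate convention (counterclockwise positive, tracked-space center at the origin), and the way "with" versus "against" the head turn is decided from signs are all assumptions made for the example. Only the 3% and 1% gains, the doubling under distractors, the per-frame application, and the one-second heading average come from the text.

```python
import math

# Gains reported in the text; everything else below is an illustrative assumption.
GAIN_WITH_HEAD_TURN = 0.03      # r when the added rotation goes with the head turn
GAIN_AGAINST_HEAD_TURN = 0.01   # r when the added rotation goes against the head turn
DISTRACTOR_MULTIPLIER = 2.0     # r is doubled while a distractor is in use


def mean_heading(headings):
    """Average (x, y) unit heading vectors, e.g. samples from the past second."""
    sx = sum(h[0] for h in headings)
    sy = sum(h[1] for h in headings)
    norm = math.hypot(sx, sy)
    if norm == 0.0:
        return (0.0, 0.0)
    return (sx / norm, sy / norm)


def steer_sign(position, heading, center=(0.0, 0.0)):
    """Direction the predicted path must turn to point at the center.

    Returns +1 for counterclockwise, -1 for clockwise, 0 if already aligned.
    """
    to_center = (center[0] - position[0], center[1] - position[1])
    cross = heading[0] * to_center[1] - heading[1] * to_center[0]
    return (cross > 0) - (cross < 0)


def injected_rotation_deg(head_rate_deg_s, sign, dt_s, distractor_active=False):
    """Extra rotation (degrees) to add this frame, signed by the steering direction.

    head_rate_deg_s is the head turn rate h (counterclockwise positive),
    sign is the output of steer_sign, and dt_s is the frame time in seconds.
    """
    if sign == 0 or head_rate_deg_s == 0.0:
        return 0.0
    with_head_turn = (head_rate_deg_s > 0) == (sign > 0)
    gain = GAIN_WITH_HEAD_TURN if with_head_turn else GAIN_AGAINST_HEAD_TURN
    if distractor_active:
        gain *= DISTRACTOR_MULTIPLIER
    return sign * gain * abs(head_rate_deg_s) * dt_s
```

Under these assumptions, a head turn of 100°/s at 60 frames per second adds about 0.05° per frame when steering with the head turn, and about 0.1° per frame while a distractor is active.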

4 Experiment

We investigated the effect of IRD on navigation abilities by comparing IRD to real walking (RW) in a between-subjects study. One of our study mazes is shown in Figure 3. The mazes were 8m × 8m and fit completely within our 9m × 9m tracked space. Participants in the RW condition really walked through the mazes without experiencing VE rotation or distractors. Participants in the IRD condition were restricted to walking in a physical space that was 6.5m × 6.5m, and experienced continuous VE rotation and distractors.

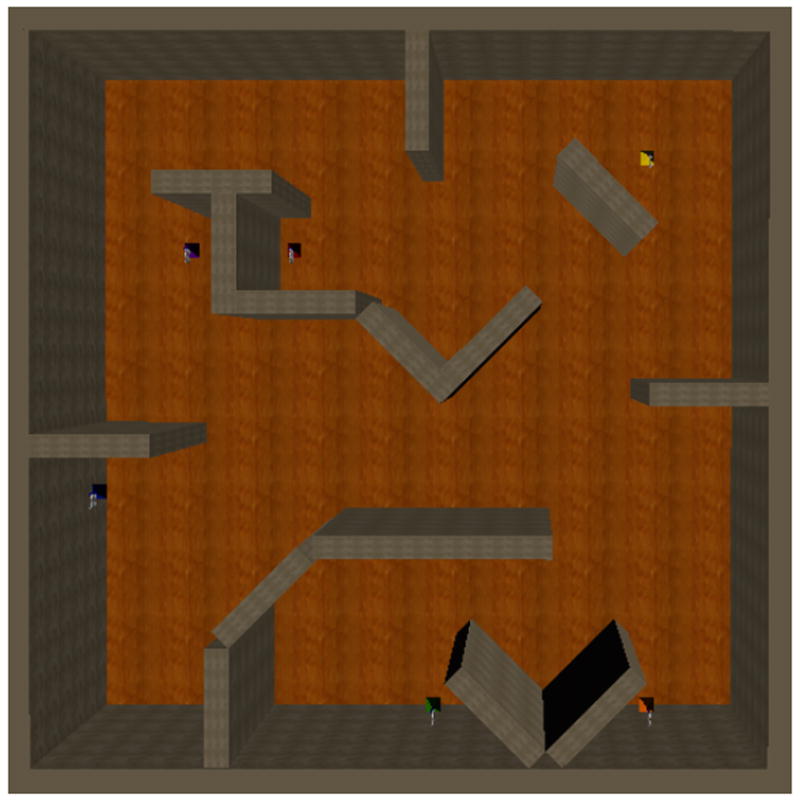

Figure 3.

Part 2: Primed search. An overhead view of the maze and target locations of the maze used in Part 2. Participants started in the bottom left corner of the maze.

We measured the distance participants walked, the angle between where participants pointed and the correct direction to the current target, and correctness of map target placement. Evaluation of IRD is based on being “no worse” than RW using 95% confidence interval equivalence testing. For each measure, if the 95% confidence interval of the mean difference between IRD and RW falls within ± our predefined acceptable difference, then IRD is no worse than RW [19].
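The decision rule can be sketched in a few lines. This is an illustrative reading rather than the analysis code used in the study: the function names are hypothetical, the difference is assumed to be IRD minus RW, and the one-sided "no worse" variant reflects how the bounds are compared against the margin in the results sections.

```python
def is_equivalent(ci, margin):
    """Two-sided check: the whole CI lies strictly inside (-margin, +margin)."""
    low, high = ci
    return -margin < low and high < margin


def is_no_worse(ci, margin, higher_is_better=True):
    """One-sided check on the bound that would indicate IRD doing worse.

    ci is the 95% CI of the mean difference (assumed IRD minus RW).
    For scores (higher is better) the lower bound must stay above -margin;
    for costs such as distance or time the upper bound must stay below +margin.
    """
    low, high = ci
    if higher_is_better:
        return low > -margin
    return high < margin
```

Applied to the intervals reported in Section 4.4, `is_no_worse((-4.6, 27.7), 16.7)` holds for naïve-search target placement, while `is_no_worse((-0.451, 3.66), 2.0, higher_is_better=False)` does not hold for distance traveled, matching the claims made there.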

4.1 Participants

Twenty-two participants, 18 men and 4 women, with average age 26, participated in the IRB-approved experiment. Nine men and two women were assigned the RW condition, and eight men and two women the IRD condition. One participant’s data were not used because the participant appeared not to be trying. Not all participants were naïve to IRD; therefore, all participants in the IRD condition were informed about the locomotion interface.

4.2 Equipment

Participants wore an nVisor SX HMD (1280 × 1024 resolution, 60° diagonal FOV). The tracker was a 3rdTech HiBall 3000. The environment was rendered on a Pentium D dual-core 2.8GHz processor machine with an NVIDIA GeForce GTX 280 GPU with 4GB of RAM. The application was implemented in our locally-developed EVEIL library that communicates with the Gamebryo® software game engine from Emergent Technologies. The Virtual Reality Peripheral Network (VRPN) was used for tracker communication.

4.3 Study Design

The experiment used three virtual environments: a training environment and two testing environments (Figure 3). The environments are 8m × 8m mazes with uniquely colored and numbered targets placed at specified locations, and have the same wall and floor textures. The location and number of targets change between environments. To increase complexity and remove orientation cues, some walls in the Part 2 maze are at angles other than 90°. To account for training effects, participants completed trials in the same order. Participants were randomly assigned to RW or IRD conditions, and completed all experiment parts, including training, within the assigned condition. Participants read written instructions before beginning each section of the experiment and were advised to ask questions if they were unclear about the tasks. Participants in the IRD condition were reminded that a ghost distractor might appear within the environment. If the ghost appeared, they were to take one step backward and turn their head to watch the ghost. Participants were allowed to continue walking once the ghost disappeared.

4.3.1 Training

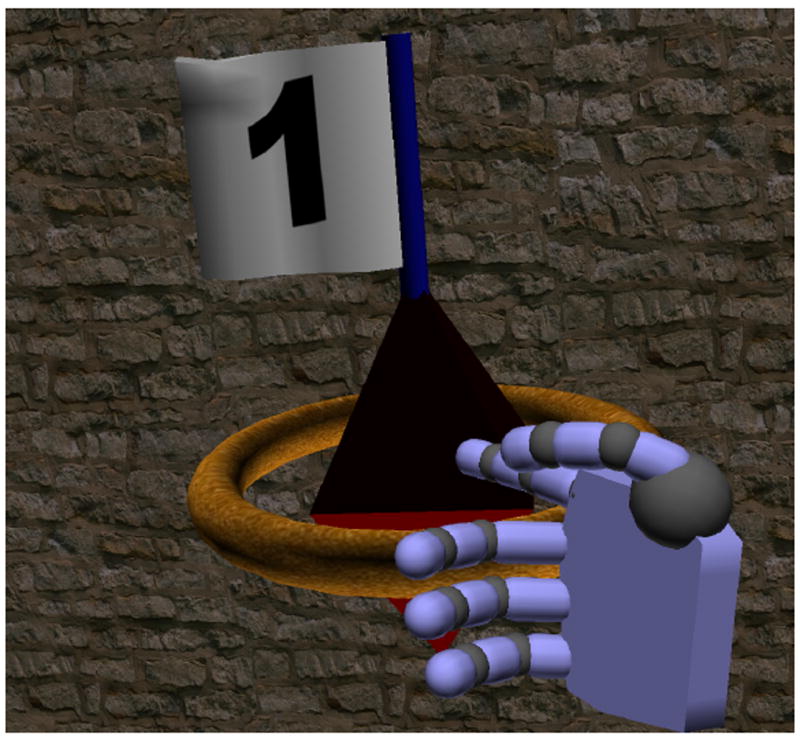

The training task was a directed search in which participants used a hand-held tracked device to select four targets in the maze. When a target was selected, a ring appeared around it and audio feedback was played to signify that the target had been found (Figure 2). Once participants had found the four targets, they were asked to stand inside a circle on the floor and practice pointing at a target 1.5m in front of them. The training session ended when the participant successfully pointed within 6cm of the center of the target.

Figure 2.

The virtual avatar hand selecting target 1, the red target.

After completing the training maze, participants removed the HMD and filled in the missing targets on a 16cm × 16cm overhead view of the maze. The starting location was indicated and maps were presented such that the initial starting direction was away from the user. Participants placed a dot at the location corresponding to each target, and labeled each dot with the target’s number or color. Participants were not given performance feedback during any part of the experiment.

4.3.2 Part 1: Naïve Search

For Part 1, naïve search, participants were instructed to find and remember the location of the six targets within the maze. As soon as participants found all targets, the virtual environment faded to white and participants were instructed to remove the HMD. Participants then completed a map in the same manner as in the training part of the experiment.

4.3.3 Part 2: Primed Search

The maze and target locations for Part 2, primed search, can be seen in Figure 3. Participants first followed a directed priming path that led to each of the six targets. After participants reached the end of the priming path, they removed the HMD and moved, in the real world, to the starting point.

Participants put the HMD back on and were asked to walk, as directly as possible, to one of the targets in the maze. Once the specified target was reached, they were instructed to point to each other target in turn. After participants pointed to each target, they were instructed to walk to another target and to repeat the pointing task. If a participant could not find a target within three minutes, arrows appeared directing them to the target. Once the participant reached the target, the experiment continued with the participant pointing to all other targets. After completing the search and pointing tasks, participants removed the HMD and completed a map.

4.4 Results and Discussion

Our hypothesis is that for large VEs, navigational and wayfinding abilities are no worse when using IRD than RW. We measured navigation by naïve and primed search, and by distance walked between targets. Wayfinding was measured by a point-to-target task and map completion.

4.4.1 Part 1: Naïve Search

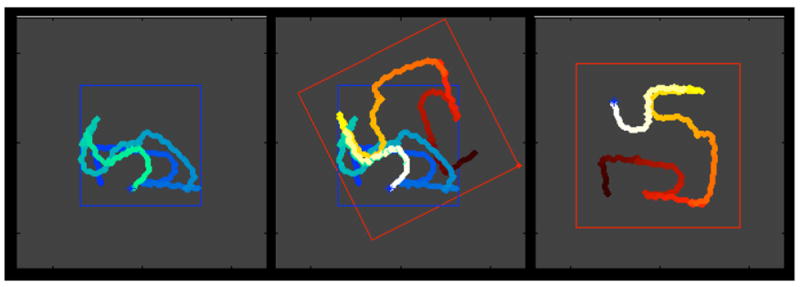

We measured total distance traveled, and a trend suggests that participants walked greater distances using IRD than RW, t(19) = 4.08, p = 0.0577. Walking longer distances may suggest that participants in IRD were more lost; however, participants in IRD had to re-walk parts of the maze when distractors appeared because they had to take one step backward, which may also explain this effect. One IRD participant’s path through Part 1 can be seen in Figure 1.

Figure 1.

An IRD participant path in Part 1, the naïve search. The blue box is the tracked space and the red box is the VE. Left: real path over time (from dark to light). Right: virtual path over time (from dark to light). Center: Composite of left and right images with the final transformation applied to the VE and user’s virtual path.

Placement of a dot on a map was scored as correct if placed within 2cm of the correct location, and on the correct side of walls. A correctly placed-and-labeled target had to be correctly placed, based on the rules above, and had to be labeled with the correct number or color. Scores were calculated as the percentage correct out of the number of targets in the maze.
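The scoring rules above can be illustrated with a short sketch. It is a simplified reading, not the scoring procedure actually used: the `Dot` type and `score_map` function are hypothetical, the wall-side check is reduced to a precomputed flag because the maze geometry is not given here, and a dot exactly 2cm away is assumed to count as correct.

```python
import math
from dataclasses import dataclass


@dataclass
class Dot:
    x_cm: float
    y_cm: float
    label: str                    # number or color written by the participant
    correct_side_of_walls: bool   # assumed to be judged separately by the scorer


def score_map(dots, targets, tolerance_cm=2.0):
    """Return (placed %, placed-and-labeled %) out of the number of targets.

    targets is a list of (x_cm, y_cm, label) tuples on the 16cm x 16cm map.
    """
    placed = 0
    labeled = 0
    for tx, ty, tlabel in targets:
        near = [
            d for d in dots
            if d.correct_side_of_walls
            and math.hypot(d.x_cm - tx, d.y_cm - ty) <= tolerance_cm
        ]
        if near:
            placed += 1
            if any(d.label == tlabel for d in near):
                labeled += 1
    n = len(targets)
    return 100.0 * placed / n, 100.0 * labeled / n
```

With six targets, each target contributes one sixth of the score, which is the 16.7% unit used as the acceptable variation in the analysis that follows.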

We defined an acceptable variation between IRD and RW to be answering within one question, our smallest measurable unit, or 16.7%. The 95% confidence interval (CI) of the mean difference between percentage of correctly placed targets was (-4.6%, 27.7%), x̄ = 11.5%, SE = 7.7%. Based on equivalence testing [19], as our predefined acceptable variation, 16.7%, is greater than the absolute value of the lower bound of the mean difference CI, we claim that, when performing a naïve search, participants in IRD are no worse than RW participants at placing targets on a map. That is, with 95% confidence, participants in the IRD condition score no more than 4.6% lower (-4.6%) on the placement of targets than RW participants.

The 95% CI of the mean difference between conditions of the percentage of correctly placed-and-labeled targets was (-21.4%, 38.9%), x̄ = 8.63%, SE = 14.3%. With 95% confidence, participants in IRD score no more than 21.4% lower and no more than 38.9% higher than RW participants on placing-and-labeling targets. Since 21.4% is greater than 16.7%, we make no claim about user ability of placing-and-labeling targets between conditions when performing a naïve search.

When performing a naïve search, participants in IRD traveled longer distances to find targets but were no worse than participants in the RW condition at correctly placing targets on a map.

4.4.2 Part 2: Primed Search

The priming path was the same for all participants, so we compared priming path distance between IRD and RW. We found participants traveled significantly greater distances, approximately 20% longer, in IRD when traveling the same virtual path, t(19) = 6.07, p = 0.023. That is, our IRD algorithm increases the total distance participants travel when following identical routes. This result also suggests that even though participants travel greater distances in IRD, they are not necessarily making wrong turns or revisiting corridors, and are not necessarily more lost. We believe an improved steering algorithm will reduce the number of distractors and thus reduce the difference in distance traveled between IRD and RW. Further evaluation of participant routes may reveal insight into user navigation.

We evaluated the difference in distance traveled to each of the six targets. Based on the average sum of the shortest paths between targets, and the difference in the priming path distance, we decided that if participants using IRD traveled no more than 2m farther than participants using RW, then IRD performance was no worse than RW. Using a Mixed Model ANOVA for distance measures between targets, we found the 95% CI of the mean difference between IRD and RW was (−0.451m, 3.66m), x̄ = 2.055m, SE = 0.809m. With 95% confidence, participants using IRD will travel no more than 3.66m farther than participants using RW, which is greater than 2m. We therefore make no claim about distances traveled between IRD and RW.

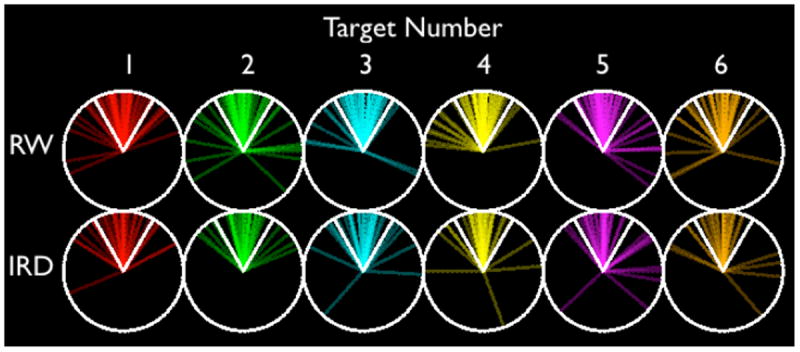

The composite results of all pointing data are shown in Figure 4. Results from [8] suggest that in the real world people point within 33° of a target, and 66° when in a VE. Based on the difference of these results, we predetermined that IRD is no worse than RW if participants were able to point within 15° of those in RW. We evaluated the absolute angular difference between pointing and target location using a Mixed Model ANOVA. Results show that the 95% CI of the mean difference between participant pointing is (−8.074°, 8.165°), x̄ = 0.045°, SE = 4.133°. With 95% confidence, the mean pointing error of IRD participants differs from that of RW participants by between −8.074° and 8.165°. Since −8.074° > −15° and 8.165° < 15°, we conclude that pointing ability is equivalent between IRD and RW.

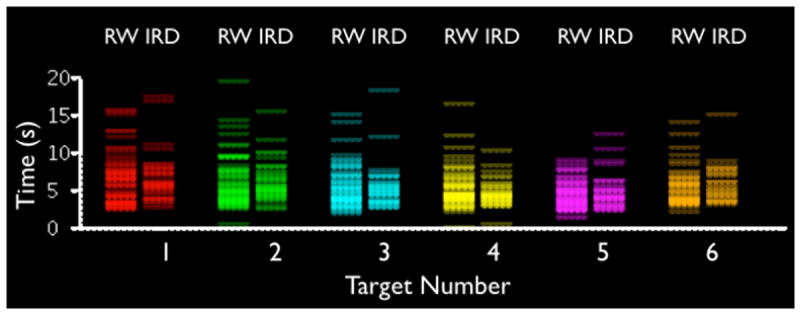

Figure 4.

The composite data of angular difference between pointing direction and target. The white angle lines are ±30°.
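The pointing measure, the absolute angular difference between the pointing direction and the direction to the target, can be computed as in the sketch below. The function names and the 2D bearing convention (degrees counterclockwise from the +x axis) are assumptions made for illustration. The main point is that the difference must be wrapped so that, for example, 350° and 10° are 20° apart rather than 340°.

```python
import math


def bearing_deg(from_xy, to_xy):
    """Direction from one 2D point to another, in degrees counterclockwise from +x."""
    return math.degrees(math.atan2(to_xy[1] - from_xy[1], to_xy[0] - from_xy[0]))


def abs_angular_error_deg(pointing_deg, target_deg):
    """Absolute angular difference in [0, 180], wrapping across 0/360."""
    diff = (pointing_deg - target_deg + 180.0) % 360.0 - 180.0
    return abs(diff)
```

A per-trial error would then be `abs_angular_error_deg(pointed, bearing_deg(user_xy, target_xy))`, where `pointed`, `user_xy`, and `target_xy` are hypothetical names for the logged pointing direction and positions.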

The total time participants took to point to targets can be seen in Figure 5. Since audio instruction time was greater than 1s, we predetermined that if participants using IRD could point within 1s of participants using RW, IRD was no worse than RW. We used a Mixed Model ANOVA and found the 95% CI of the between-condition difference in pointing time was (−1.153s, 0.811s), x̄ = −0.156s, SE = 0.492s. With 95% confidence, participants using IRD will take no more than 0.811s longer than participants using RW to point to a target. Since 0.811s is less than 1s, we claim that IRD is no worse than RW for time taken to point to targets.

Figure 5.

Composite total pointing time between conditions.

Map data were calculated in the same way as in Part 1. The 95% CI of the mean difference between percentage of correctly placed targets was (-35.2%, 36.4%), x̄ = 0.6%, SE = 17.1%. Since 35.2% is greater than 16.7%, we make no claim about target placement. The 95% CI of the mean difference between conditions of the percentage of correctly placed-and-labeled targets was (-7.0%, 21.0%), x̄ = 7.0%, SE = 6.9%. With 95% confidence, participants in the IRD condition score no more than 7.0% lower on placing and labeling targets than RW participants. Since 7.0% is less than 16.7%, we claim that participants performed no worse in IRD than RW when placing and labeling targets on maps.

5 Conclusion

We have introduced a new large-scale real-walking locomotion interface, IRD, and shown that users can navigate no worse when using IRD compared to the current best technique, real walking. We evaluated IRD by comparing it to real walking, measuring user navigational ability.

For map completion, a wayfinding metric, our results show that users are no worse using IRD than RW when placing targets on a map after a naïve search and when placing and labeling targets on a map after a primed search. We also found that participants in IRD can accurately point to previously seen targets within ± 8° of participants using RW. Also, participants using IRD do not take longer to point to previously seen targets. This implies that, even with continuous imperceptible rotation of VEs, users can navigate no worse in IRD than RW.

A problem with IRD is that participants travel farther when walking the same path compared to RW. This is due in part to the current distractor implementation. Improving the distractor algorithm to eliminate the extra step and improving the steering algorithm should reduce participant walking distance in IRD.

We believe our results suggest that IRD is a promising interface for large-scale VE applications requiring user navigation.

Acknowledgments

The authors wish to thank the Link Foundation and the NIH National Institute for Biomedical Imaging and Bioengineering for supporting this work.

Contributor Information

Tabitha C. Peck, The University of North Carolina at Chapel Hill.

Henry Fuchs, The University of North Carolina at Chapel Hill.

Mary C. Whitton, The University of North Carolina at Chapel Hill.

References

- 1. Bowman DA. Principles for the Design of Performance-oriented Interaction Techniques. chapter 13. Lawrence Erlbaum Associates; 2002. pp. 277–300.

- 2. Bruder G, Steinicke F, Hinrichs KH. Arch-explore: A natural user interface for immersive architectural walkthroughs. 3DUI ’09: Proceedings of the 2009 IEEE Symposium on 3D User Interfaces; 2009. pp. 75–82.

- 3. Chance S, Gaunet F, Beall A, Loomis J. Locomotion mode affects the updating of objects encountered during travel: The contribution of vestibular and proprioceptive inputs to path integration. Presence. 1998 Apr;7(2):168–178.

- 4. Darken RP, Cockayne WR, Carmein D. The omni-directional treadmill: a locomotion device for virtual worlds. New York, NY, USA: ACM; 1997. pp. 213–221.

- 5. Darken RP, Peterson B. Spatial Orientation, Wayfinding, and Representation. chapter 24. Lawrence Erlbaum Associates; 2002. pp. 493–518.

- 6. Darken RP, Sibert JL. A toolset for navigation in virtual environments. UIST ’93: Proceedings of the 6th annual ACM symposium on User interface software and technology; 1993. pp. 157–165.

- 7. Durlach NI, Mayor AS. Virtual reality: scientific and technological challenges. Washington, DC: National Academy Press; 1995.

- 8. Grant SC, Magee LE. Contributions of proprioception to navigation in virtual environments. Human Factors. 1998 Sep;40(3):489. doi: 10.1518/001872098779591296.

- 9. Hollerbach JM. Locomotion Interfaces. chapter 11. Lawrence Erlbaum Associates; 2002. pp. 493–518.

- 10. Interrante V, Ries B, Anderson L. Seven league boots: A new metaphor for augmented locomotion through moderately large scale immersive virtual environments. 3D User Interfaces. 2007.

- 11. Jerald J, Peck T, Steinicke F, Whitton M. Sensitivity to scene motion for phases of head yaws. APGV ’08: Proceedings of the 5th symposium on Applied perception in graphics and visualization; 2008. pp. 155–162.

- 12. Nitzsche N, Hanebeck UD, Schmidt G. Motion compression for telepresent walking in large target environments. Presence: Teleoper Virtual Environ. 2004;13(1):44–60.

- 13. Peck TC, Fuchs H, Whitton MC. Evaluation of reorientation techniques and distractors for walking in large virtual environments. IEEE Transactions on Visualization and Computer Graphics. 2009;15(3):383–394. doi: 10.1109/TVCG.2008.191.

- 14. Psotka J. Immersive training systems: Virtual reality and education and training. Instructional Science. 1995 Nov;23(5-6):405–431.

- 15. Razzaque S. Redirected walking. 2005.

- 16. Ruddle RA, Lessels S. The benefits of using a walking interface to navigate virtual environments. ACM Trans Comput Hum Interact. 2009;16(1):1–18.

- 17. Steinicke F, Bruder G, Jerald J, Frenz H, Lappe M. Analyses of human sensitivity to redirected walking. 2008:149–156.

- 18. Waller D, Hunt E, Knapp D. The transfer of spatial knowledge in virtual environment training. Presence. 1998 Apr;7(2):129–143.

- 19. Wellek S. Testing Statistical Hypotheses of Equivalence. Chapman and Hall; 2002.

- 20. Williams B, Narasimham G, McNamara TP, Carr TH, Rieser JJ, Bodenheimer B. Updating orientation in large virtual environments using scaled translational gain. New York, NY, USA: ACM; 2006. pp. 21–28.

- 21. Williams B, Narasimham G, Rump B, McNamara TP, Carr TH, Rieser J, Bodenheimer B. Exploring large virtual environments with an hmd when physical space is limited. New York, NY, USA: ACM; 2007. pp. 41–48.

- 22. Witmer BG, Bailey JH, Knerr BW, Parsons KC. Virtual spaces and real world places: transfer of route knowledge. Int J Hum Comput Stud. 1996;45(4):413–428.