Abstract

First impressions, especially of emotional faces, may critically impact later evaluation of social interactions. Activity in limbic regions, including the amygdala and ventral striatum, has previously been shown to correlate with identification of emotional content in faces; however, little work has been done describing how these signals may influence emotional face memory. We report an event‐related functional magnetic resonance imaging study in 21 healthy adults where subjects attempted to recognize a neutral face that was previously viewed with a threatening (angry or fearful) or nonthreatening (happy or sad) affect. In a hypothesis‐driven region of interest analysis, we found that neutral faces previously presented with a threatening affect recruited the left amygdala. In contrast, faces previously presented with a nonthreatening affect activated the left ventral striatum. A whole‐brain analysis revealed increased response in the right orbitofrontal cortex to faces previously seen with threatening affect. These effects of prior emotion were independent of task performance, with differences being seen in the amygdala and ventral striatum even if only incorrect trials were considered. The results indicate that a network of frontolimbic regions may provide emotional bias signals during facial recognition. Hum Brain Mapp, 2009. © 2009 Wiley‐Liss, Inc.

Keywords: amygdala, ventral striatum, fMRI, face, memory, emotion, orbitofrontal cortex

INTRODUCTION

The neural architecture for the recognition of faces has been well described, with multiple studies implicating a network of brain regions including the fusiform gyrus and the superior temporal sulcus [Vuilleumier and Pourtois,2007]. Prior research also suggests that the emotional information conveyed by facial affect may have similarities to other emotional stimuli [Britton et al.,2006b] and be processed by a network of limbic and cortical regions including the amygdala, ventral striatum, and orbitofrontal cortex (OFC) [Hariri et al.,2003; Killgore and Yurgelun‐Todd,2004; Loughead et al.,2008]. Building on a large literature examining fear conditioning in rodents [LeDoux,2000], multiple functional magnetic resonance imaging (fMRI) experiments have demonstrated that the amygdala responds robustly to threatening faces [Breiter et al.,1996; Morris et al.,1996; Phan et al.,2002]. Similarly, the OFC has been found to respond to displays of angry faces, suggesting that it may be important in modulating the emotional context of facial expression [Blair et al.,1999; Dougherty et al.,1999], or may be involved in the inhibition of a behavioral response to a perceived threat [Coccaro et al.,2007]. In contrast, the ventral striatum is most often associated with reward‐related behavior in both electrophysiological studies in animals [Schultz,2002] and human neuroimaging experiments [Knutson et al.,2001; Monk et al.,2008; O'Doherty et al.,2001]. Several imaging studies have demonstrated that the ventral striatum is recruited by the rewarding properties of beautiful or happy faces [Aharon et al.,2001; Monk et al.,2008]. However, other studies suggest that amygdala [Davis and Whalen,2001; Fitzgerald et al.,2006] and ventral striatal responses [Zink et al.,2003;2006] are better explained by salience than by specific threat or reward‐related properties.

This body of literature has almost uniformly investigated the neural correlates of passive viewing of emotional faces or facial affect identification. Beyond one small study, which found insular activation to the memory of neutral faces initially paired with a description of a disgusting behavior [Todorov et al.,2007], remarkably little research has investigated the neural correlates of emotional memory of faces [Gobbini and Haxby,2007]. The aphorism “You never have a second chance to make a first impression” attests to the importance of the affective content of a face when it is first encountered, as such information inevitably informs the interpretation of subsequent social interactions [Pessoa,2005; Sommer et al.,2008]. Identifying the neural basis of this phenomenon is important for further understanding of both social cognition and its dysfunction in psychiatric disorders such as schizophrenia [Gur et al.,2007; Holt et al.,2005;2006; Kohler et al.,2000; Mathalon and Ford,2008], post‐traumatic stress disorder [Liberzon et al.,2007], and depression [Fu et al.,2007;2008].

We investigated the neural correlates of emotional face memory using an incidental memory paradigm and event‐related fMRI. Subjects made an “old” vs. “new” recognition judgment regarding previously viewed and novel faces. Importantly, the “old” faces had been originally presented with either threatening (angry or fearful) or nonthreatening (happy or sad) facial expressions, whereas in the forced‐choice recognition task all faces were shown with neutral expressions. We hypothesized that faces displaying a threatening affect on “first impression” would recruit the amygdala during the recognition task despite their current neutral affect. In contrast, we expected that neutral faces initially displayed with a nonthreatening affect would activate the ventral striatum. As described below, these hypotheses were supported. Furthermore, in an exploratory whole‐brain analysis, we found that faces previously viewed with threatening expressions recruited the OFC. These results delineate a frontolimbic network that may provide emotional bias signals to modulate facial memory, integrating critical information learned from an affect‐laden first impression.

EXPERIMENTAL PROCEDURES

Subjects

We studied 24 right‐handed participants, who were free from psychiatric or neurologic comorbidity. After a complete description of the study, subjects provided written informed consent. Three subjects were excluded for excessive in‐scanner motion (mean volume‐to‐volume displacement or mean absolute displacement greater than two standard deviations above the group average). This left a final sample of 21 subjects (47.6% male, mean age 32.0 years, SD = 8.6).
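The motion exclusion rule can be illustrated with a short sketch. It is not the authors' code: the function name, the use of the sample standard deviation, and the example displacement values are all assumptions made for illustration.

```python
from statistics import mean, stdev

def flag_high_motion(displacements, n_sd=2.0):
    """Return indices of subjects whose mean displacement is more than
    n_sd standard deviations above the group average."""
    group_mean = mean(displacements)
    # Assumption: sample SD; the text does not say which SD estimator was used.
    group_sd = stdev(displacements)
    cutoff = group_mean + n_sd * group_sd
    return [i for i, d in enumerate(displacements) if d > cutoff]

# Hypothetical per-subject mean displacements in mm (not data from the study).
example = [0.05, 0.06, 0.04, 0.07, 0.05, 0.06, 0.05, 0.04, 0.06, 0.30]
print(flag_high_motion(example))
```

In the study the rule was applied to two metrics (mean volume‐to‐volume displacement and mean absolute displacement); a subject exceeding the cutoff on either would be excluded.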

Task

The face recognition experiment was preceded by an emotion identification task similar to those previously reported by our laboratory [Gur et al.,2002b,c; Loughead et al.,2008]. In the emotion identification experiment, subjects viewed 30 faces displaying neutral, happy, sad, angry, or fearful affect, and were asked to label the emotion displayed (Fig. 1A). Stimuli construction and validation are described in detail elsewhere [Gur et al.,2002a]. Briefly, the stimuli were color photographs of actors (50% female) who had volunteered to participate in a study on emotion. They were coached by professional directors to express facial displays of different emotions. For the purpose of constructing the affect identification task, a subset of 30 intense expressions was selected based on high degree of accuracy of identification by the raters. During the affect identification task, subjects were not instructed to remember the faces or informed of the memory component of the experiment. The emotion identification and the face recognition experiments were separated by a diffusion tensor imaging acquisition lasting 10 min. Results from the emotion identification task will be reported elsewhere.

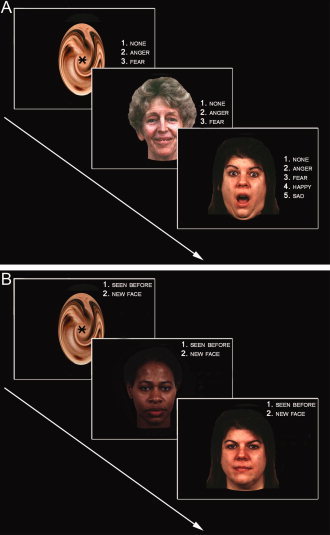

Figure 1.

Experimental Paradigm. A. Encoding task. Subjects initially performed an emotion identification task in which they identified the facial affect displayed. Five emotional labels were available, including two nonthreatening affects (happy and sad), two threatening affects (angry and fearful), and neutral. Subjects were not instructed to remember the faces displayed. B. Face recognition task. Following the affect identification task, subjects were asked to make a forced‐choice facial recognition judgment. Thirty faces (targets) from the affect identification task and thirty novel faces (foils) were displayed for 2 seconds each. Subjects made a simple “old” vs. “new” judgment as to whether the face had been previously displayed in the affect identification task. Faces were separated by a variable (0–12s) interval of crosshair fixation.

The face recognition experiment (Fig. 1B) presented 30 faces from the emotion identification experiment (targets) along with 30 novel faces (foils). Faces were displayed for two seconds and subjects were asked to make a simple “old” vs. “new” judgment using a two‐button response pad. Target faces initially presented with happy, sad, angry, or fearful affect were displayed with neutral expressions. Foil stimuli not previously seen were also displayed with a neutral expression. Faces shown with neutral expressions in the emotion identification experiment were treated as a covariate of no interest in the face recognition analysis. Interpretation of responses to neutral faces was confounded by their congruent affect in the emotion identification experiment: whereas target threat or nonthreat faces were displayed initially with a strong affect in the emotion identification experiment and subsequently re‐presented with a neutral affect in the face recognition experiment, neutral faces were displayed with the same expression in both experiments, making neutral trials difficult to compare to other targets. Previous experiments in our laboratory [Gur et al.,2002b; Loughead et al.,2008] have conceptualized emotions along the dimension of threat‐relatedness. In the current experiment, faces originally displayed with an angry or fearful affect were modeled together as threat; faces originally displayed with a happy or sad affect were modeled as nonthreat. This grouping of emotions is suggested by the theories of Gray [Gray,1990], by previous data from our laboratory [Loughead et al.,2008], as well as other studies [Hariri et al.,2000; Suslow et al.,2006]. Overall, there were six trials per emotion, yielding 12 threat and 12 nonthreat trials per subject. Interspersed with the 60 task trials (30 targets, 30 foils) were 60 baseline trials of variable duration (0–12 seconds) during which a crosshair fixation point was displayed on a complex background (degraded face). No stimulus was presented twice and total task duration was 4 min and 16 seconds.
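The trial counts and timing stated above can be checked with a few lines of arithmetic. This is a sketch only: it assumes that all time not spent on face presentation was crosshair fixation, and it uses the 2 s repetition time reported under Image Acquisition to estimate the number of volumes.

```python
# Trial bookkeeping for the recognition task, using numbers stated in the text.
TRIALS_PER_EMOTION = 6
CATEGORY = {
    "angry": "threat",
    "fearful": "threat",
    "happy": "nonthreat",
    "sad": "nonthreat",
    "neutral": "neutral",  # treated as a covariate of no interest
}
N_FOILS = 30
FACE_DURATION_S = 2.0
TOTAL_DURATION_S = 4 * 60 + 16  # 4 min 16 s
TR_S = 2.0  # repetition time reported under Image Acquisition

def count_by_category():
    """Count target trials per modeled category."""
    counts = {}
    for category in CATEGORY.values():
        counts[category] = counts.get(category, 0) + TRIALS_PER_EMOTION
    return counts

counts = count_by_category()
n_task_trials = sum(counts.values()) + N_FOILS
face_time_s = n_task_trials * FACE_DURATION_S
# Assumption: the remainder of the run is fixation (no lead-in or lead-out).
fixation_time_s = TOTAL_DURATION_S - face_time_s
n_volumes = int(TOTAL_DURATION_S / TR_S)

print(counts, n_task_trials, fixation_time_s, n_volumes)
```

Under these assumptions the 60 fixation periods would average a little over 2 s each, which is compatible with the stated 0–12 s range.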

fMRI Procedures

Participants were required to demonstrate understanding of the task instructions and the response device, and to complete one practice trial prior to acquisition of face memory data. Earplugs were used to muffle scanner noise and head fixation was aided by foam‐rubber restraints mounted on the head coil. Stimuli were rear‐projected to the center of the visual field using a PowerLite 7300 video projector (Epson America, Long Beach, CA) and viewed through a head coil mounted mirror. Stimulus presentation was synchronized with image acquisition using the Presentation software package (Neurobehavioral Systems, Albany, CA). Subjects were randomly assigned to use their dominant or nondominant hand; responses were recorded via a nonferromagnetic keypad (fORP; Current Designs, Philadelphia, PA).

Image Acquisition

BOLD fMRI was acquired with a Siemens Trio 3 Tesla (Erlangen, Germany) system using a whole‐brain, single‐shot gradient‐echo (GE) echoplanar (EPI) sequence with the following parameters: TR/TE = 2000/32 ms, FOV = 220 mm, matrix = 64 × 64, slice thickness/gap = 3/0 mm, 40 slices, effective voxel resolution of 3 × 3 × 3 mm. To reduce partial volume effects in orbitofrontal regions, EPI was acquired obliquely (axial/coronal). The slices provided adequate brain coverage, from the superior cerebellum and inferior temporal lobe ventrally to the hand‐motor area in the primary motor cortex dorsally. Prior to time‐series acquisition, a 5‐min magnetization‐prepared, rapid acquisition GE T1‐weighted image (MPRAGE, TR 1620 ms, TE 3.87 ms, FOV 250 mm, matrix 192 × 256, effective voxel resolution of 1 × 1 × 1 mm) was collected for anatomic overlays of functional data and to aid spatial normalization to a standard atlas space [Talairach and Tournoux,1988].

Performance Analysis

Median percent correct and reaction time (RT, in milliseconds) were calculated for threat and nonthreat trials separately. Differences in percent correct were evaluated using the Wilcoxon Signed‐Rank test; RT between trial types was compared with paired two‐tailed t‐tests. Performance across all trials was evaluated by calculating the discrimination index Pr and the response bias Br [Snodgrass and Corwin,1988]. These were calculated using the following formulas:

| (1) |

| (2) |

The discrimination index Pr provides an unbiased measure of the accuracy of response, with higher values corresponding to a greater degree of accuracy. In contrast, the response bias Br provides an independent measure of the overall tendency of subjects to make “old” or “new” responses regardless of accuracy. Positive values correspond to a familiarity bias (i.e., more likely to say “old” to a new item) whereas negative values correspond to conservative responses with a novelty bias (i.e., more likely to say “new” to an “old” item).
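For orientation, the two‐high‐threshold indices of Snodgrass and Corwin [1988] are conventionally written as Pr = H − FA and Br = FA / (1 − Pr), where H is the hit rate and FA the false‐alarm rate. The sketch below computes them from raw counts. Two points are assumptions rather than statements from this article: the rates are used without any small‐sample correction, and Br is centered by subtracting 0.5 so that zero means no bias, which is inferred from the sign convention described above. The counts in the example are hypothetical.

```python
def discrimination_and_bias(hits, n_targets, false_alarms, n_foils, center_bias=True):
    """Two-high-threshold indices in the style of Snodgrass and Corwin (1988).

    Pr = H - FA        (discrimination)
    Br = FA / (1 - Pr) (response bias; 0.5 is neutral before centering)
    """
    hit_rate = hits / n_targets
    fa_rate = false_alarms / n_foils
    pr = hit_rate - fa_rate
    if pr >= 1.0:
        raise ValueError("Br is undefined when Pr equals 1")
    br = fa_rate / (1.0 - pr)
    if center_bias:
        # Assumption: centering so that negative values mean a conservative bias.
        br -= 0.5
    return pr, br

# Hypothetical counts (not data from the study): 10 hits out of 30 targets,
# 6 false alarms out of 30 foils.
pr, br = discrimination_and_bias(hits=10, n_targets=30, false_alarms=6, n_foils=30)
print(round(pr, 3), round(br, 3))
```

With these made‐up counts the centered Br is negative, i.e., the hypothetical subject tends to answer “new”.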

Image Analysis

fMRI data were preprocessed and analyzed using FEAT (fMRI Expert Analysis Tool) Version 5.1, part of FSL (FMRIB's Software Library, http://www.fmrib.ox.ac.uk/fsl). Images were slice‐time corrected, motion corrected to the median image using a trilinear interpolation with six degrees of freedom [Jenkinson et al.,2002], high pass filtered (100s), spatially smoothed (6 mm FWHM, isotropic), and grand‐mean scaled using mean‐based intensity normalization. BET was used to remove nonbrain areas [Smith,2002]. The median functional and anatomical volumes were coregistered, and then transformed into the standard anatomical space (T1 MNI template, voxel dimensions of 2 × 2 × 2 mm) using trilinear interpolation.

Subject level time‐series statistical analysis was carried out using FILM (FMRIB's Improved General Linear Model) with local autocorrelation correction [Woolrich et al.,2001]. The seven condition events (four target emotions, neutrals, foils, and nonresponses) were modeled in the GLM after convolution with a canonical hemodynamic response function; temporal derivatives of each condition were also included in the model. Six rigid body movement parameters were included as nuisance covariates.
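As a rough illustration of how an event regressor of this kind is built, the sketch below convolves a 2 s boxcar with a double‐gamma hemodynamic response function and samples the result once per volume. It is not FSL code. The double‐gamma shape parameters (6 and 16, undershoot ratio 1/6) are commonly used defaults assumed here, since the text does not state which HRF form was configured; the onset time is hypothetical, and temporal derivatives are omitted for brevity.

```python
import math

def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma HRF value at time t (seconds); parameters are assumed defaults."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    under = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - ratio * under

def build_regressor(onsets, duration, n_vols, tr=2.0, dt=0.1):
    """Convolve a boxcar (one box per onset) with the HRF, then sample every TR."""
    n_fine = int(round(n_vols * tr / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets:
        start = int(round(onset / dt))
        stop = int(round((onset + duration) / dt))
        for i in range(start, min(stop, n_fine)):
            boxcar[i] = 1.0
    kernel = [double_gamma_hrf(k * dt) for k in range(int(round(32.0 / dt)))]
    convolved = [0.0] * n_fine
    for i, value in enumerate(boxcar):
        if value == 0.0:
            continue
        for k, h in enumerate(kernel):
            if i + k < n_fine:
                convolved[i + k] += value * h * dt
    step = int(round(tr / dt))
    return [convolved[v * step] for v in range(n_vols)]

# Hypothetical single face presented 10 s into a 128-volume run.
regressor = build_regressor(onsets=[10.0], duration=2.0, n_vols=128)
peak_volume = regressor.index(max(regressor))
print(peak_volume)
```

In the full model one such regressor would be built per condition, alongside the temporal derivatives and the six motion parameters described above.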

The contrast map of interest, threat vs. nonthreat [i.e., (anger + fear) > (happy + sad)], was subjected to an a priori region of interest (ROI) analysis in two structures implicated in threat and reward processing: the amygdala and ventral striatum. The amygdala ROI was defined using the AAL atlas [Tzourio‐Mazoyer et al.,2002]; the ventral striatum was defined from a publication‐based probabilistic MNI Atlas used as a binary mask at the threshold of 0.75 probability [Fox and Lancaster,1994; for more information see http://hendrix.imm.dtu.dk/services/jerne/ninf/voi/indexalphabetic]. Small volume correction [Friston,1997] was used to identify clusters of at least 10 contiguous 2 × 2 × 2 voxels within these ROIs.
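The threat vs. nonthreat contrast can be written as a weight vector over the seven modeled conditions. The sketch below is illustrative only: the condition ordering and the beta values are hypothetical, and real analyses would apply the weights voxelwise within FEAT rather than to a single list of numbers.

```python
# Assumed ordering of the seven condition regressors (hypothetical).
CONDITIONS = ["angry", "fearful", "happy", "sad", "neutral", "foil", "nonresponse"]

# (anger + fear) > (happy + sad); all other conditions receive zero weight.
THREAT_VS_NONTHREAT = {"angry": 1.0, "fearful": 1.0, "happy": -1.0, "sad": -1.0}

def contrast_vector(weights, conditions=CONDITIONS):
    """Expand a sparse weight mapping into a full contrast vector."""
    return [weights.get(name, 0.0) for name in conditions]

def contrast_estimate(betas, vector):
    """Weighted sum of condition betas for one voxel."""
    return sum(w * b for w, b in zip(vector, betas))

vector = contrast_vector(THREAT_VS_NONTHREAT)
# Hypothetical betas for one voxel, in the order given by CONDITIONS.
example_betas = [0.5, 0.25, 0.0, 0.25, 0.1, 0.2, 0.0]
print(vector, contrast_estimate(example_betas, vector))
```

Because the weights sum to zero, the estimate is unaffected by any response that is common to all four target emotions.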

In addition to the ROI analysis, two whole‐brain mixed effects analyses were performed (Flame2, FMRIB's local analysis of mixed effects): (1) each subject's whole‐brain contrast of threat vs. nonthreat was entered into a one‐sample t‐test and (2) each subject's mean activation in the task (all trials) relative to baseline was calculated and entered into a one sample t‐test. We corrected for multiple comparisons using a Monte Carlo method with AFNI AlphaSim (R. W. Cox, National Institutes of Health) at a Z threshold of 2.58 and a probability of spatial extent P < 0.01. Identified clusters were then labeled according to anatomical regions using the Talairach Daemon database. Coordinates of cluster locations in all analyses are reported in MNI space. To characterize BOLD response amplitudes, mean scaled beta coefficients (% signal change) for threat and nonthreat conditions were extracted from significant clusters in the a priori ROI analysis and certain clusters from the whole brain analysis.
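To give a sense of scale for the voxelwise threshold, the sketch below converts Z = 2.58 into a tail probability under a standard normal distribution. Whether the threshold was intended as one‐ or two‐tailed is not stated, so both are shown; the helper name is hypothetical.

```python
from statistics import NormalDist

def z_to_p(z, two_tailed=False):
    """Tail probability of a standard normal at threshold z."""
    upper_tail = 1.0 - NormalDist().cdf(z)
    return 2.0 * upper_tail if two_tailed else upper_tail

p_one = z_to_p(2.58)
p_two = z_to_p(2.58, two_tailed=True)
print(round(p_one, 4), round(p_two, 4))
```

A Z of 2.58 therefore corresponds to roughly P = 0.01 two‐tailed (about 0.005 one‐tailed) at each voxel, before the cluster extent criterion is applied.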

Finally, we conducted three exploratory analyses to further examine these effects. First, we investigated the effect of individual emotions within significantly activated clusters from the a priori ROIs and certain regions from the whole‐brain analysis. To do this, we extracted beta coefficients from each target emotion (happy, sad, angry, fearful) versus a crosshair baseline. Significant differences between individual emotions were assessed with one‐tailed paired t‐tests. Second, we investigated whether the observed effects were affected by task performance. We modeled seven performance‐based regressors at the subject level: threat correct, threat incorrect, nonthreat correct, nonthreat incorrect, foils, neutrals, and nonresponses. Beta coefficients were extracted from above‐threshold voxels as described above, and entered into a 2 × 2 repeated‐measures ANOVA (threat vs. nonthreat × correct vs. incorrect) to test the main effect of correct response and interaction effects. In this analysis the main effect of threat vs. nonthreat was of limited utility as the contrast had already been used to select the voxels under investigation. Third, to ascertain if the threat vs. nonthreat differences noted in our a priori ROI analysis were present even when subjects did not make a correct response, we used the performance‐based regressors and restricted the threat vs. nonthreat contrast to incorrect trials only.
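In a fully within‐subject 2 × 2 design, each effect has one degree of freedom, so its F statistic equals the squared one‐sample t statistic computed on a per‐subject contrast score, with (1, n − 1) degrees of freedom. The sketch below uses that equivalence to obtain the main effect of performance and the interaction. It is an illustration, not the authors' analysis code, and the beta values are made up.

```python
from statistics import mean, variance

def f_from_scores(scores):
    """F(1, n-1) for a one-df within-subject effect: squared one-sample t."""
    n = len(scores)
    return mean(scores) ** 2 / (variance(scores) / n)

def rm_anova_2x2(rows):
    """rows: per-subject tuples of betas
    (threat_correct, threat_incorrect, nonthreat_correct, nonthreat_incorrect)."""
    performance = [((tc - ti) + (nc - ni)) / 2.0 for tc, ti, nc, ni in rows]
    interaction = [(tc - ti) - (nc - ni) for tc, ti, nc, ni in rows]
    return {
        "performance": f_from_scores(performance),
        "interaction": f_from_scores(interaction),
        "df": (1, len(rows) - 1),
    }

# Hypothetical betas for four subjects (not data from the study).
example_rows = [
    (2.5, 1.0, 1.5, 1.0),
    (3.0, 1.0, 3.0, 1.0),
    (3.5, 1.0, 4.5, 1.0),
    (3.0, 1.0, 3.0, 1.0),
]
result = rm_anova_2x2(example_rows)
print(result)
```

With 21 subjects the same computation gives the (1, 20) degrees of freedom that apply to this design.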

RESULTS

Task Performance

The response rate (81.2%) indicated that the task was difficult. Response accuracy was low (median 25% overall among the four target emotions), resulting in a very limited discrimination index (Pr = 0.27). The low accuracy was associated with a conservative response bias, whereby subjects were more likely to judge previously presented faces as “new” (Br = −0.29). There were no significant differences between threat and nonthreat trials in either response accuracy or response time (Table I). Although neutral faces and foils were not included in our analysis, accuracy for these trials was significantly higher: 50% for neutral trials and 76% for foils.

Table I.

Performance measures during face recognition task

| Measure | Median | S.D. |
|---|---|---|
| Percent correct | | |
| Non‐threat | 33% | 19% |
| Threat | 25% | 14% |
| Response time (msec) | | |
| Non‐threat | 1020 | 211 |
| Threat | 1069 | 149 |
| Non‐threat correct | 1139 | 173 |
| Non‐threat incorrect | 1005 | 241 |
| Threat correct | 1121 | 137 |
| Threat incorrect | 1058 | 152 |

Task Activation

The analysis of all trials vs. fixation revealed significant activation for the task in a distributed network including bilateral frontal, parietal, thalamic, anterior cingulate, insular, and medial temporal regions (Supp. Table and Fig. S1). This is consistent with general accounts of recognition memory [Shannon and Buckner,2004; Wheeler and Buckner,2003], as well as specific studies of face recognition [Calder and Young,2005; Ishai and Yago,2006].

Region of Interest Analysis

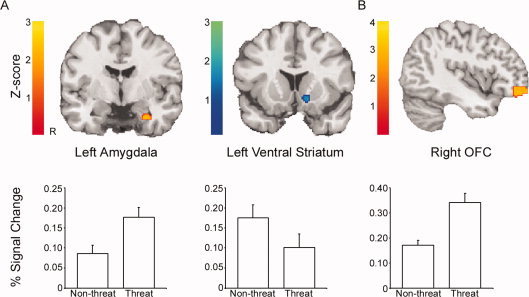

The a priori ROI analysis revealed significant effects in the left amygdala and left ventral striatum (Fig. 2A). The left amygdala responded more to threat than nonthreat stimuli in a cluster of 28 contiguous voxels surviving small volume correction (peak voxel: 30, −6, −28). The left ventral striatum showed the opposite effect, activating more to faces initially encountered with a nonthreatening affect; 28 voxels (peak voxel: −14, 16, −8) survived small volume correction. Although significant effects were only seen on the left, the right amygdala and ventral striatum exhibited similar subthreshold responses. Overall, these results are consistent with our hypotheses, suggesting that during the recall of emotionally‐valenced faces, the amygdala is differentially recruited by threat and the ventral striatum by nonthreat even when the recognition face cue lacks emotional content.

Figure 2.

Imaging results. A. Region of interest analysis. The amygdala and the ventral striatum were identified as a priori regions of interest for analysis of target responses. The left amygdala responded more to target faces previously displayed with a threatening (angry or fearful) affect. In contrast, the left ventral striatum (right panels) responded more to target faces previously displayed with a nonthreatening (happy or sad) affect. B. Whole‐brain analysis. In a voxelwise analysis, the right orbitofrontal cortex (OFC) responded to target faces previously displayed with a threatening affect.

Whole‐Brain Analysis

To examine brain regions beyond those selected in our a priori analysis, we performed a whole‐brain analysis of the threat > nonthreat contrast. The right OFC showed significantly greater BOLD response during threat trials compared with nonthreat, corrected at a Z threshold of 2.58 and a probability of spatial extent P < 0.01 (90 voxels, peak at 44, 56, −12; Fig. 2B). The left OFC exhibited a similar response that was not above this threshold. Two left lateral temporal clusters (posterior: 125 voxels, peak at −64, −38, −4; anterior: 96 voxels, peak at −48, −14, −8) were also found to be above threshold.

Exploratory Analysis of Individual Emotions

To probe the source of these threat vs. nonthreat differences, we examined the effect of individual emotions within the voxels found to be significant in the above analyses. The results indicate that the threat vs. nonthreat difference was not driven by one individual emotion (Supp. Fig. S2). In the left amygdala, there were significant differences between each of the threat and nonthreatening affects: anger > happy [t(40) = 2.2, P = 0.01], anger > sad [t(40) = 2.3, P = 0.01], fear > happy [t(40) = 1.8, P < 0.05], fear > sad [t(40) = 1.8, P < 0.05]. There was no difference within threat and nonthreat affects. The same pattern was seen in the right OFC, with anger > happy [t(40) = 2.6, P < 0.01] and anger > sad [t(40) = 3.5, P < 0.001], as well as fear > happy [t(40) = 2.5, P < 0.01] and fear > sad [t(40) = 3.5, P = 0.001]. Again, there were no differences within threat or nonthreat emotions. Finally, in the ventral striatum, there was a significant difference between sad and fear [t(40) = 2.0, P < 0.05], as well as a trend towards a difference between happy and fear [t(40) = 1.4, P = 0.07]. There were no other significant differences between emotions in the ventral striatum; anger demonstrated a more intermediate response.

Exploratory Performance‐Based Analyses

Next, we examined the effect of recognition performance on the observed threat vs. nonthreat differences. Overall, we found that the effects described above were not significantly influenced by performance (Supp. Fig. S3). There was no main effect of performance in the left amygdala [F(1,20) = 0.03, P = 0.85], left ventral striatum [F(1,20) = 0.004, P = 0.95], or right OFC [F(1,20) = 2.69, P = 0.11]. Furthermore, there were no interactions between performance and trial type in any of the above regions. However, this analysis was likely underpowered due to the limited number of correct trials.

Finally, when we restricted our analysis to incorrect trials only, we found that differences between threat and nonthreat trials persisted in our a priori ROIs. In the left amygdala, 4 voxels survived small volume correction when only incorrect trials were included; 50 voxels surpassed an uncorrected P = 0.05 threshold. In the left ventral striatum, 20 voxels survived small volume correction.

DISCUSSION

This study investigated the impact of an intense facial affect at first presentation on neural activation during a subsequent recognition task. We found that faces previously seen with a threatening expression (fearful or angry) provoked a greater BOLD response in the left amygdala and right OFC, whereas the left ventral striatum responded more to faces initially viewed with a nonthreatening expression. Furthermore, these differences were independent of performance. The implications and limitations of these findings are discussed later.

Amygdala and OFC Respond to Previously Threatening Faces

Past experiments have demonstrated the important role of the amygdala in fear conditioning in animals [for a review, see LeDoux,2000], in humans with amygdalar lesions [Bechara et al.,1995; LaBar et al.,1995], and in healthy controls in neuroimaging paradigms [Dolcos et al.,2004; LaBar and Cabeza,2006]. In addition to conditioning paradigms, the amygdala has been critically implicated in emotional learning across a wide variety of tasks [LaBar and Cabeza,2006]. Specifically, amygdalar activity during encoding of emotional stimuli predicts successful recollection [Canli et al.,2000; Dolcos et al.,2004]. Furthermore, neutral stimuli encoded in emotional contexts have been shown to recruit the amygdala upon retrieval [Dolan et al.,2000; Maratos et al.,2001; Smith et al.,2004; Taylor et al.,1998]. Work by our group [Gur et al.,2002b;2007] and others [Anderson et al.,2003; Williams et al.,2004] has demonstrated that the amygdala responds to displays of threatening faces; however, no previous experiment has investigated limbic contributions to facial affective memory.

Consistent with previous studies using nonfacial stimulus categories [Dolan et al.,2000; Maratos et al.,2001; Smith et al.,2004], we found that faces initially encountered with a threatening affect elicit an amygdalar response upon re‐presentation with neutral affect. Gobbini and Haxby [2007] have proposed a core system of facial recognition that is modulated by emotional information through limbic inputs. Our results suggest that modulatory signals from limbic regions reflect not only the current emotional context, but also represent the prior emotional context from a previous exposure. For example, the negative “first impression” created by a threatening facial expression may produce amygdalar activation initially [Gur et al.,2002b; LeDoux,2000]. Later, when the face is re‐encountered with a neutral expression, the amygdala is again recruited in response to the emotional context present during encoding. Thus, these results outline a neural system that integrates social information about individuals over time.

The OFC is known to occupy a privileged role at the intersection of emotion and cognition. It has extensive reciprocal connections to medial temporal lobe [Cavada et al.,2000] and there is ample evidence for its role in the encoding of memories [Frey and Petrides,2002], specifically the aspects of memory associated with rewarding or aversive information [Morris and Dolan,2001; O'Doherty et al.,2001; Rolls,2000]. Two previous studies [Blair et al.,1999; Lee et al.,2008] have found that the OFC responds to angry faces; here we found that, like the amygdala, the right OFC responds to neutral faces previously presented with a threatening affect. Like the amygdala and OFC, two left lateral temporal clusters were also found to respond to previously threatening faces. These clusters are situated near the superior temporal sulcus (STS), which has been shown to be involved in numerous functions [Hein and Knight,2008], including face perception [Haxby et al.,1999], gaze direction [Grosbras et al.,2005; Hoffman and Haxby,2000], and biological motion [Grossman and Blake,2002]. Extending findings that the STS may be involved in the processing of displays of facial affect [Williams et al.,2008], our results suggest that lateral temporal regions may also be recruited by previously encountered affect.

Ventral Striatum Responds to Previously Nonthreatening Faces

Although much research on emotional memory has focused on the amygdala [Phelps,2004], there is increasing evidence that the striatum also plays an important role in the encoding of emotional information [Adcock et al.,2006; Brewer et al.,2008; Britton et al.,2006a; Phan et al.,2004; Satterthwaite et al.,2007]. Studies by Monk et al. [2008] and Aharon et al. [2001] have demonstrated that the ventral striatum is recruited by displays of happy and beautiful faces, suggesting that pleasant faces are processed as social reinforcers. Like happy faces, sad faces have been theorized to be rewarding because of their prosocial, affiliative properties, as well as demonstrating submission within a social hierarchy [Bonanno et al.,2008; Eisenberg et al.,1989; Eisenberg and Miller,1987; Killgore and Yurgelun‐Todd,2004; Lewis and Haviland‐Jones,2008]. Evidence that the human brain is particularly sensitive to affective displays that provide information on social context and hierarchy is accumulating [Britton et al.,2006a; Carter and Pelphrey,2008; Fliessbach et al.,2007; Guroglu et al.,2008; Kim et al.,2007; Rilling et al.,2008; Zink et al.,2008]. Also, two prior fMRI studies have found sad faces to activate the striatum [Beauregard et al.,1998; Fu et al.,2004]. Our results suggest that reward pathways are recruited not just when a nonthreatening face is first encountered, but also when it is re‐encountered in a neutral context. Thus, the ventral striatum may provide a reward signal that may act in opposition to the amygdala when making threat vs. nonthreat distinctions regarding previously encountered individuals.

Threat vs. Nonthreat Differences Persist Even When Faces Are Not Recognized

In an exploratory analysis, we did not find an effect of task performance on brain activation that would account for the differential response of these brain regions. Indeed, when we excluded correct trials and examined amygdalar and striatal responses to incorrect trials only, the results persisted (although at somewhat lower levels of significance). This potentially important finding suggests that successful recognition is not required for facial affective information to be preserved on a neural level. This is relevant to the ongoing controversy as to whether amygdalar responses to emotional information depend on active attention or if they occur automatically [Pessoa et al.,2005; Vuilleumier and Driver,2007]. Several backward‐masking fMRI studies have shown that the amygdala responds to fearful faces presented without awareness [Dannlowski et al.,2007; Whalen et al.,1998; Williams et al.,2006], providing evidence for an automatic “bottom‐up” response that does not require attention [LeDoux,2000; Phelps and LeDoux,2005]. Our task was not designed to isolate such stimulus‐driven properties of amygdala response. However, the results do suggest that a “first impression” that is not explicitly remembered may be encoded on a neural level and implicitly influence subsequent social interactions.

Limitations

Two limitations of this study should be acknowledged. First, the grouping of the emotions into threat and nonthreat categories may obscure differences between the individual component emotions. For example, while an angry face represents a direct threat indicated by gaze, a fearful face may indicate a more ambiguous environmental threat [Ewbank et al.,2009]. Furthermore, some studies [Whalen et al.,2001] have found that the amygdala may respond more to fearful than angry faces. However, other studies have demonstrated that the amygdala does reliably respond to anger [Beaver et al.,2008; Evans et al.,2008; Ewbank et al.,2009; Hariri et al.,2000; Nomura et al.,2004; Sprengelmeyer et al.,1998; Stein et al.,2002; Suslow et al.,2006]. In the current study, anger and fear trials produced a similar response in amygdala and OFC clusters, indicating that the results were not driven by one particular emotion. The findings in the ventral striatum were more ambiguous, and distinctions other than the threat vs. nonthreat effect may have impacted the results. Future studies are necessary to investigate potentially important differences within categories of threatening and nonthreatening faces.

Second, the low accuracy in target recognition led our study to be underpowered for certain performance‐based comparisons. In particular, while we did not find an interaction of performance with the threat vs. nonthreat differences reported, this analysis was limited by the number of correct trials in each group. Performance was likely limited by the general difficulty of face recognition tasks [Calkins et al.,2005; Ishai and Yago,2006] and by the fact that subjects were not notified that they would be asked to later recognize the faces from the affect identification task. We observed a strong conservative response bias, suggesting low target accuracy was not due to simple guessing. The high proportion of incorrect trials allowed us to examine these trials separately in a subanalysis, finding that the threat vs. nonthreat differences are present in the amygdala and ventral striatum even when only incorrect trials were considered. Nonetheless, in the future it will be important to further assess the effects of correct recognition with a design that produces more correct trials and incorporates behavioral metrics of recognition confidence.

CONCLUSIONS

In summary, the current study provides the first evidence for frontolimbic responses that could provide emotional bias signals during facial recognition. The amygdala and OFC appear to signal previously threatening faces, whereas the ventral striatum may oppose this system by responding to previously nonthreatening faces. These differences do not appear to depend on accurate recognition of faces, suggesting a process that does not require explicit awareness. Emotional face memory plays an important role in social cognition, and these results provide an intriguing first look into the neural correlates of emotional memory for faces. Furthermore, such processes could contribute to the deficits in face recognition and social interaction seen in neuropsychiatric conditions such as schizophrenia, mood disorders, and brain injury [Calkins et al.,2005; Fu et al.,2008; Gainotti,2007; Holt et al.,2005;2006].

Supporting information

Additional Supporting Information may be found in the online version of this article.

Supplementary Figure 1

Supplementary Figure 2

Supplementary Figure 3

Acknowledgements

We thank Masaru Tomita and Kathleen Lesko for data collection. Caryn Lerman provided valuable discussion regarding first impressions.

REFERENCES

- Adcock RA, Thangavel A, Whitfield‐Gabrieli S, Knutson B, Gabrieli JD (2006): Reward‐motivated learning: Mesolimbic activation precedes memory formation. Neuron 50: 507–517.

- Aharon I, Etcoff N, Ariely D, Chabris CF, O'Connor E, Breiter HC (2001): Beautiful faces have variable reward value: fMRI and behavioral evidence. Neuron 32: 537–551.

- Anderson AK, Christoff K, Panitz D, De Rosa E, Gabrieli JD (2003): Neural correlates of the automatic processing of threat facial signals. J Neurosci 23: 5627–5633.

- Beauregard M, Leroux JM, Bergman S, Arzoumanian Y, Beaudoin G, Bourgouin P, Stip E (1998): The functional neuroanatomy of major depression: An fMRI study using an emotional activation paradigm. Neuroreport 9: 3253–3258.

- Beaver JD, Lawrence AD, Passamonti L, Calder AJ (2008): Appetitive motivation predicts the neural response to facial signals of aggression. J Neurosci 28: 2719–2725.

- Bechara A, Tranel D, Damasio H, Adolphs R, Rockland C, Damasio AR (1995): Double dissociation of conditioning and declarative knowledge relative to the amygdala and hippocampus in humans. Science 269: 1115–1118.

- Blair RJ, Morris JS, Frith CD, Perrett DI, Dolan RJ (1999): Dissociable neural responses to facial expressions of sadness and anger. Brain 122 (Pt 5): 883–893.

- Bonanno G, Goorin L, Coifman K (2008): Sadness and grief. In: Lewis M, Haviland‐Jones J, Barrett L, editors. Handbook of Emotions. New York, NY: Guilford Publications.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR (1996): Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17: 875–887.

- Brewer JA, Worhunsky PD, Carroll KM, Rounsaville BJ, Potenza MN (2008): Pretreatment brain activation during stroop task is associated with outcomes in cocaine‐dependent patients. Biol Psychiatry 64: 998–1004.

- Britton JC, Phan KL, Taylor SF, Welsh RC, Berridge KC, Liberzon I (2006a): Neural correlates of social and nonsocial emotions: An fMRI study. Neuroimage 31: 397–409.

- Britton JC, Taylor SF, Sudheimer KD, Liberzon I (2006b): Facial expressions and complex IAPS pictures: Common and differential networks. Neuroimage 31: 906–919.

- Calder AJ, Young AW (2005): Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci 6: 641–651.

- Calkins ME, Gur RC, Ragland JD, Gur RE (2005): Face recognition memory deficits and visual object memory performance in patients with schizophrenia and their relatives. Am J Psychiatry 162: 1963–1966.

- Canli T, Zhao Z, Brewer J, Gabrieli JD, Cahill L (2000): Event‐related activation in the human amygdala associates with later memory for individual emotional experience. J Neurosci 20: RC99.

- Carter EJ, Pelphrey KA (2008): Friend or foe? Brain systems involved in the perception of dynamic signals of menacing and friendly social approaches. Soc Neurosci 3: 151–163.

- Cavada C, Company T, Tejedor J, Cruz‐Rizzolo RJ, Reinoso‐Suarez F (2000): The anatomical connections of the macaque monkey orbitofrontal cortex. A review. Cereb Cortex 10: 220–242.

- Coccaro EF, McCloskey MS, Fitzgerald DA, Phan KL (2007): Amygdala and orbitofrontal reactivity to social threat in individuals with impulsive aggression. Biol Psychiatry 62: 168–178.

- Dannlowski U, Ohrmann P, Bauer J, Kugel H, Arolt V, Heindel W, Suslow T (2007): Amygdala reactivity predicts automatic negative evaluations for facial emotions. Psychiatry Res 154: 13–20.

- Davis M, Whalen PJ (2001): The amygdala: Vigilance and emotion. Mol Psychiatry 6: 13–34.

- Dolan RJ, Lane R, Chua P, Fletcher P (2000): Dissociable temporal lobe activations during emotional episodic memory retrieval. Neuroimage 11: 203–209.

- Dolcos F, LaBar KS, Cabeza R (2004): Interaction between the amygdala and the medial temporal lobe memory system predicts better memory for emotional events. Neuron 42: 855–863.

- Dougherty DD, Shin LM, Alpert NM, Pitman RK, Orr SP, Lasko M, Macklin ML, Fischman AJ, Rauch SL (1999): Anger in healthy men: A PET study using script‐driven imagery. Biol Psychiatry 46: 466–472.

- Eisenberg N, Miller PA (1987): The relation of empathy to prosocial and related behaviors. Psychol Bull 101: 91–119.

- Eisenberg N, Fabes RA, Miller PA, Fultz J, Shell R, Mathy RM, Reno RR (1989): Relation of sympathy and personal distress to prosocial behavior: A multimethod study. J Pers Soc Psychol 57: 55–66.

- Evans KC, Wright CI, Wedig MM, Gold AL, Pollack MH, Rauch SL (2008): A functional MRI study of amygdala responses to angry schematic faces in social anxiety disorder. Depress Anxiety 25: 496–505.

- Ewbank MP, Lawrence AD, Passamonti L, Keane J, Peers PV, Calder AJ (2009): Anxiety predicts a differential neural response to attended and unattended facial signals of anger and fear. Neuroimage 44: 1144–1151.

- Fitzgerald DA, Angstadt M, Jelsone LM, Nathan PJ, Phan KL (2006): Beyond threat: Amygdala reactivity across multiple expressions of facial affect. Neuroimage 30: 1441–1448.

- Fliessbach K, Weber B, Trautner P, Dohmen T, Sunde U, Elger CE, Falk A (2007): Social comparison affects reward‐related brain activity in the human ventral striatum. Science 318: 1305–1308.

- Fox PT, Lancaster JL (1994): Neuroscience on the net. Science 266: 994–996.

- Frey S, Petrides M (2002): Orbitofrontal cortex and memory formation. Neuron 36: 171–176.

- Friston KJ (1997): Testing for anatomically specified regional effects. Hum Brain Mapp 5: 133–136.

- Fu CH, Williams SC, Cleare AJ, Brammer MJ, Walsh ND, Kim J, Andrew CM, Pich EM, Williams PM, Reed LJ, Mitterschiffthaler MT, Suckling J, Bullmore ET (2004): Attenuation of the neural response to sad faces in major depression by antidepressant treatment: A prospective, event‐related functional magnetic resonance imaging study. Arch Gen Psychiatry 61: 877–889.

- Fu CH, Williams SC, Brammer MJ, Suckling J, Kim J, Cleare AJ, Walsh ND, Mitterschiffthaler MT, Andrew CM, Pich EM, Bullmore ET (2007): Neural responses to happy facial expressions in major depression following antidepressant treatment. Am J Psychiatry 164: 599–607.

- Fu CH, Williams SC, Cleare AJ, Scott J, Mitterschiffthaler MT, Walsh ND, Donaldson C, Suckling J, Andrew C, Steiner H, Murray RM (2008): Neural responses to sad facial expressions in major depression following cognitive behavioral therapy. Biol Psychiatry 64: 505–512.

- Gainotti G (2007): Face familiarity feelings, the right temporal lobe and the possible underlying neural mechanisms. Brain Res Rev 56: 214–235.

- Gobbini MI, Haxby JV (2007): Neural systems for recognition of familiar faces. Neuropsychologia 45: 32–41.

- Gray J (1990): Brain systems that mediate both emotion and cognition. Cogn Emot 4: 269–288.

- Grosbras MH, Laird AR, Paus T (2005): Cortical regions involved in eye movements, shifts of attention, and gaze perception. Hum Brain Mapp 25: 140–154.

- Grossman ED, Blake R (2002): Brain areas active during visual perception of biological motion. Neuron 35: 1167–1175.

- Gur RC, Sara R, Hagendoorn M, Marom O, Hughett P, Macy L, Turner T, Bajcsy R, Posner A, Gur RE (2002a): A method for obtaining 3‐dimensional facial expressions and its standardization for use in neurocognitive studies. J Neurosci Methods 115: 137–143.

- Gur RC, Schroeder L, Turner T, McGrath C, Chan RM, Turetsky BI, Alsop D, Maldjian J, Gur RE (2002b): Brain activation during facial emotion processing. Neuroimage 16 (3 Pt 1): 651–662.

- Gur RE, McGrath C, Chan RM, Schroeder L, Turner T, Turetsky BI, Kohler C, Alsop D, Maldjian J, Ragland JD, Gur RC (2002c): An fMRI study of facial emotion processing in patients with schizophrenia. Am J Psychiatry 159: 1992–1999.

- Gur RE, Loughead J, Kohler CG, Elliott MA, Lesko K, Ruparel K, Wolf DH, Bilker WB, Gur RC (2007): Limbic activation associated with misidentification of fearful faces and flat affect in schizophrenia. Arch Gen Psychiatry 64: 1356–1366.

- Guroglu B, Haselager GJ, van Lieshout CF, Takashima A, Rijpkema M, Fernandez G (2008): Why are friends special? Implementing a social interaction simulation task to probe the neural correlates of friendship. Neuroimage 39: 903–910.

- Hariri AR, Bookheimer SY, Mazziotta JC (2000): Modulating emotional responses: Effects of a neocortical network on the limbic system. Neuroreport 11: 43–48.

- Hariri AR, Mattay VS, Tessitore A, Fera F, Weinberger DR (2003): Neocortical modulation of the amygdala response to fearful stimuli. Biol Psychiatry 53: 494–501.

- Haxby JV, Ungerleider LG, Clark VP, Schouten JL, Hoffman EA, Martin A (1999): The effect of face inversion on activity in human neural systems for face and object perception. Neuron 22: 189–199.

- Hein G, Knight RT (2008): Superior temporal sulcus—It's my area: Or is it? J Cogn Neurosci 20: 2125–2136.

- Hoffman EA, Haxby JV (2000): Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat Neurosci 3: 80–84.

- Holt DJ, Weiss AP, Rauch SL, Wright CI, Zalesak M, Goff DC, Ditman T, Welsh RC, Heckers S (2005): Sustained activation of the hippocampus in response to fearful faces in schizophrenia. Biol Psychiatry 57: 1011–1019.

- Holt DJ, Kunkel L, Weiss AP, Goff DC, Wright CI, Shin LM, Rauch SL, Hootnick J, Heckers S (2006): Increased medial temporal lobe activation during the passive viewing of emotional and neutral facial expressions in schizophrenia. Schizophr Res 82 (2‐3): 153–162.

- Ishai A, Yago E (2006): Recognition memory of newly learned faces. Brain Res Bull 71 (1‐3): 167–173.

- Jenkinson M, Bannister P, Brady M, Smith S (2002): Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage 17: 825–841.

- Killgore WD, Yurgelun‐Todd DA (2004): Activation of the amygdala and anterior cingulate during nonconscious processing of sad versus happy faces. Neuroimage 21: 1215–1223.

- Kim H, Adolphs R, O'Doherty JP, Shimojo S (2007): Temporal isolation of neural processes underlying face preference decisions. Proc Natl Acad Sci USA 104: 18253–18258.

- Knutson B, Adams CM, Fong GW, Hommer D (2001): Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J Neurosci 21: RC159.

- Kohler CG, Bilker W, Hagendoorn M, Gur RE, Gur RC (2000): Emotion recognition deficit in schizophrenia: Association with symptomatology and cognition. Biol Psychiatry 48: 127–136.

- LaBar KS, LeDoux JE, Spencer DD, Phelps EA (1995): Impaired fear conditioning following unilateral temporal lobectomy in humans. J Neurosci 15: 6846–6855.

- LaBar KS, Cabeza R (2006): Cognitive neuroscience of emotional memory. Nat Rev Neurosci 7: 54–64.

- LeDoux JE (2000): Emotion circuits in the brain. Annu Rev Neurosci 23: 155–184.

- Lee BT, Seok JH, Lee BC, Cho SW, Yoon BJ, Lee KU, Chae JH, Choi IG, Ham BJ (2008): Neural correlates of affective processing in response to sad and angry facial stimuli in patients with major depressive disorder. Prog Neuropsychopharmacol Biol Psychiatry 32: 778–785.

- Lewis M, Haviland‐Jones J (2008): Handbook of Emotions. New York, NY: Guilford Publications.

- Liberzon I, King AP, Britton JC, Phan KL, Abelson JL, Taylor SF (2007): Paralimbic and medial prefrontal cortical involvement in neuroendocrine responses to traumatic stimuli. Am J Psychiatry 164: 1250–1258.

- Loughead J, Gur RC, Elliott M, Gur RE (2008): Neural circuitry for accurate identification of facial emotions. Brain Res 1194: 37–44.

- Maratos EJ, Dolan RJ, Morris JS, Henson RN, Rugg MD (2001): Neural activity associated with episodic memory for emotional context. Neuropsychologia 39: 910–920.

- Mathalon DH, Ford JM (2008): Divergent approaches converge on frontal lobe dysfunction in schizophrenia. Am J Psychiatry 165: 944–948.

- Monk CS, Telzer EH, Mogg K, Bradley BP, Mai X, Louro HM, Chen G, McClure‐Tone EB, Ernst M, Pine DS (2008): Amygdala and ventrolateral prefrontal cortex activation to masked angry faces in children and adolescents with generalized anxiety disorder. Arch Gen Psychiatry 65: 568–576.

- Morris JS, Dolan RJ (2001): Involvement of human amygdala and orbitofrontal cortex in hunger‐enhanced memory for food stimuli. J Neurosci 21: 5304–5310.

- Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, Dolan RJ (1996): A differential neural response in the human amygdala to fearful and happy facial expressions. Nature 383: 812–815.

- Nomura M, Ohira H, Haneda K, Iidaka T, Sadato N, Okada T, Yonekura Y (2004): Functional association of the amygdala and ventral prefrontal cortex during cognitive evaluation of facial expressions primed by masked angry faces: An event‐related fMRI study. Neuroimage 21: 352–363.

- O'Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C (2001): Abstract reward and punishment representations in the human orbitofrontal cortex. Nat Neurosci 4: 95–102.

- Pessoa L (2005): To what extent are emotional visual stimuli processed without attention and awareness? Curr Opin Neurobiol 15: 188–196.

- Pessoa L, Padmala S, Morland T (2005): Fate of unattended fearful faces in the amygdala is determined by both attentional resources and cognitive modulation. Neuroimage 28: 249–255.

- Phan KL, Wager T, Taylor SF, Liberzon I (2002): Functional neuroanatomy of emotion: A meta‐analysis of emotion activation studies in PET and fMRI. Neuroimage 16: 331–348.

- Phan KL, Taylor SF, Welsh RC, Ho SH, Britton JC, Liberzon I (2004): Neural correlates of individual ratings of emotional salience: A trial‐related fMRI study. Neuroimage 21: 768–780.

- Phelps EA (2004): Human emotion and memory: Interactions of the amygdala and hippocampal complex. Curr Opin Neurobiol 14: 198–202.

- Phelps EA, LeDoux JE (2005): Contributions of the amygdala to emotion processing: From animal models to human behavior. Neuron 48: 175–187.

- Rilling JK, Dagenais JE, Goldsmith DR, Glenn AL, Pagnoni G (2008): Social cognitive neural networks during in‐group and out‐group interactions. Neuroimage 41: 1447–1461.

- Rolls ET (2000): The orbitofrontal cortex and reward. Cereb Cortex 10: 284–294.

- Satterthwaite TD, Green L, Myerson J, Parker J, Ramaratnam M, Buckner RL (2007): Dissociable but inter‐related systems of cognitive control and reward during decision making: Evidence from pupillometry and event‐related fMRI. Neuroimage 37: 1017–1031.

- Schultz W (2002): Getting formal with dopamine and reward. Neuron 36: 241–263.

- Shannon BJ, Buckner RL (2004): Functional‐anatomic correlates of memory retrieval that suggest nontraditional processing roles for multiple distinct regions within posterior parietal cortex. J Neurosci 24: 10084–10092.

- Smith SM (2002): Fast robust automated brain extraction. Hum Brain Mapp 17: 143–155.

- Smith AP, Henson RN, Dolan RJ, Rugg MD (2004): fMRI correlates of the episodic retrieval of emotional contexts. Neuroimage 22: 868–878.

- Snodgrass JG, Corwin J (1988): Pragmatics of measuring recognition memory: Applications to dementia and amnesia. J Exp Psychol Gen 117: 34–50.

- Sommer M, Dohnel K, Meinhardt J, Hajak G (2008): Decoding of affective facial expressions in the context of emotional situations. Neuropsychologia 46: 2615–2621.

- Sprengelmeyer R, Rausch M, Eysel UT, Przuntek H (1998): Neural structures associated with recognition of facial expressions of basic emotions. Proc Biol Sci 265: 1927–1931.

- Stein MB, Goldin PR, Sareen J, Zorrilla LT, Brown GG (2002): Increased amygdala activation to angry and contemptuous faces in generalized social phobia. Arch Gen Psychiatry 59: 1027–1034.

- Suslow T, Ohrmann P, Bauer J, Rauch AV, Schwindt W, Arolt V, Heindel W, Kugel H (2006): Amygdala activation during masked presentation of emotional faces predicts conscious detection of threat‐related faces. Brain Cogn 61: 243–248.

- Talairach J, Tournoux P (1988): Co‐planar stereotaxic atlas of the human brain. New York: Thieme.

- Taylor SF, Liberzon I, Fig LM, Decker LR, Minoshima S, Koeppe RA (1998): The effect of emotional content on visual recognition memory: A PET activation study. Neuroimage 8: 188–197.

- Todorov A, Gobbini MI, Evans KK, Haxby JV (2007): Spontaneous retrieval of affective person knowledge in face perception. Neuropsychologia 45: 163–173.

- Tzourio‐Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M (2002): Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single‐subject brain. Neuroimage 15: 273–289.

- Vuilleumier P, Driver J (2007): Modulation of visual processing by attention and emotion: Windows on causal interactions between human brain regions. Philos Trans R Soc Lond B Biol Sci 362: 837–855.

- Vuilleumier P, Pourtois G (2007): Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia 45: 174–194.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA (1998): Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J Neurosci 18: 411–418.

- Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, Rauch SL (2001): A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion 1: 70–83.

- Wheeler ME, Buckner RL (2003): Functional dissociation among components of remembering: Control, perceived oldness, and content. J Neurosci 23: 3869–3880.

- Williams MA, Morris AP, McGlone F, Abbott DF, Mattingley JB (2004): Amygdala responses to fearful and happy facial expressions under conditions of binocular suppression. J Neurosci 24: 2898–2904.

- Williams LM, Liddell BJ, Kemp AH, Bryant RA, Meares RA, Peduto AS, Gordon E (2006): Amygdala‐prefrontal dissociation of subliminal and supraliminal fear. Hum Brain Mapp 27: 652–661.

- Williams MA, McGlone F, Abbott DF, Mattingley JB (2008): Stimulus‐driven and strategic neural responses to fearful and happy facial expressions in humans. Eur J Neurosci 27: 3074–3082.

- Woolrich MW, Ripley BD, Brady M, Smith SM (2001): Temporal autocorrelation in univariate linear modeling of FMRI data. Neuroimage 14: 1370–1386.

- Zink CF, Pagnoni G, Martin ME, Dhamala M, Berns GS (2003): Human striatal response to salient nonrewarding stimuli. J Neurosci 23: 8092–8097.

- Zink CF, Pagnoni G, Chappelow J, Martin‐Skurski M, Berns GS (2006): Human striatal activation reflects degree of stimulus saliency. Neuroimage 29: 977–983.

- Zink CF, Tong Y, Chen Q, Bassett DS, Stein JL, Meyer‐Lindenberg A (2008): Know your place: Neural processing of social hierarchy in humans. Neuron 58: 273–283.
