Abstract

In psychotherapy, the patient-provider interaction contains the treatment’s active ingredients. However, the technology for analyzing the content of this interaction has not fundamentally changed in decades, limiting both the scale and specificity of psychotherapy research. New methods are required in order to “scale up” to larger evaluation tasks and “drill down” into the raw linguistic data of patient-therapist interactions. In the current paper we demonstrate the utility of statistical text analysis models called topic models for discovering the underlying linguistic structure in psychotherapy. Topic models identify semantic themes (or topics) in a collection of documents (here, transcripts). We used topic models to summarize and visualize 1,553 psychotherapy and drug therapy (i.e., medication management) transcripts. Results showed that topic models identified clinically relevant content, including affective, content, and intervention related topics. In addition, topic models learned to identify specific types of therapist statements associated with treatment related codes (e.g., different treatment approaches, patient-therapist discussions about the therapeutic relationship). Visualizations of semantic similarity across sessions indicate that topic models identify content that discriminates between broad classes of therapy (e.g., cognitive behavioral therapy vs. psychodynamic therapy). Finally, predictive modeling demonstrated that topic model derived features can classify therapy type with a high degree of accuracy. Computational psychotherapy research has the potential to scale up the study of psychotherapy to thousands of sessions at a time, and we conclude by discussing the implications of computational methods such as topic models for the future of psychotherapy research and practice.

Keywords: Psychotherapy, Topic Models, Linguistics

“I believe that some aspects of psychoanalytic theory are not presently researchable because the intermediate technology required … does not exist. I mean auxiliaries and methods such as a souped-up, highly developed science of psycholinguistics, and the kind of mathematics that is needed to conduct a rigorous but clinically sensitive and psychoanalytically realistic job of theme tracing in the analytic protocol” (Meehl, 1978, p. 830).

Advances in technology have revolutionized research in much of psychology and healthcare, including major developments in pharmacology, neuroscience, and genetics. Yet, the science of patient-therapist interactions – the core of psychotherapy process research – has remained fundamentally unchanged for 70 years. Patients fill out surveys, or human coders rate some aspect of the interaction. Thus, while psychiatric and psychological guidelines recommend psychotherapy as a first line treatment for a number of mental disorders (APA, 2006), we still know relatively little about how psychotherapy works. As Meehl noted, existing research methods remain limited in their ability to explore the structure of verbal exchanges that are the essence of most psychotherapy. In the current paper, we move towards an answer to Meehl’s request for a “souped up mathematics” to mine the raw linguistic data of psychotherapy interactions. In traditional research on psychotherapy, human judgment and related behavioral coding are the rate-limiting factor. In this paper, we introduce a computational approach to psychotherapy research that is informed by traditional methods (e.g., behavioral coding) but does not rely on them as the primary data source. The key innovation in this computational approach is drawing on methods from computer science and machine learning that allow the direct, statistical analysis of session content, scaling up research to thousands of sessions.

Many Distinctions, but is There a Difference?

Some estimates indicate that there are over 400 different name brand psychotherapies (Lambert, 2013), each offering a different approach to helping patients with psychological distress. While the clinical rationales and approaches differ, it is not clear that actual practices of these psychotherapies are meaningfully distinct. Potential differences in the process and outcome of psychotherapies have been a focus of psychotherapy science for over a century. As a comparison, there are many different drug therapies. However, the unique ingredients of treatments are chemical (and patentable). Thus, the actual distinctiveness of treatments is known, even if the specific mechanism of action or relative efficacy is not. In psychotherapy, the treatment consists primarily of words, and although cognitive behavioral (CBT) oriented treatments might focus strongly on patient behavior, the treatment is still verbally mediated (Wampold, 2007). Accordingly, scientific classification of treatments is more nebulous. What is considered a ‘taxon’ of cognitive behavioral therapy may vary widely across experts and practitioners, with some definitions so broad as to include any scientifically justifiable intervention and others restricted to very specific psychological mechanisms (see Baardseth et al., 2013). This ambiguity is quite old, reaching back to debates between Freud and his early followers, and can be found in current research comparing various cognitive behavioral psychotherapies and modern variants of psychoanalysis (e.g., psychodynamic psychotherapy; Leichsenring et al., 2013).

Some have argued that differences between psychotherapies are cosmetic (like the difference between generic ibuprofen and Advil) and that the underlying mechanisms of action are common across different approaches (Wampold, 2001). Meta-analyses generally suggest that most treatment approaches are of comparable efficacy (e.g., Benish, Imel, & Wampold, 2008; Imel, Wampold, Miller, & Fleming, 2008), and process studies cast doubt on the relationship between treatment-specific therapist behaviors and patient outcomes (Webb, DeRubeis, & Barber, 2010). One leading addiction researcher commented that, “… there is little evidence that treatments work as purported, suggesting that as of yet, we don’t know much about how brand name therapies work” (Morgenstern & McKay, 2007, p. 87S). Are the 400 psychotherapies we have today unique, medical treatments? Or, are the different psychotherapies largely similar, distinguished by packaging that obscures what are mostly common components?

Given that psychotherapy is a conversation between patient and provider, the distinctiveness of a therapy approach should be found in the words patients and therapists use during their sessions. Yet, this is precisely where we find a fundamental methodological gap in psychotherapy research. The source data and information are linguistic and semantic, but the available tools used to study psychotherapy are not. Research on the active ingredients of psychotherapy has primarily relied on patient or therapist self-report measures (e.g., see reviews of empathy and alliance literature; Elliott, Bohart, Watson, & Greenberg, 2011; Horvath, Del Re, Flückiger, & Symonds, 2011) or on behavioral coding systems, wherein human “coders” make ratings from audio or video recordings of the intervention session according to a priori theory-specific criteria (Crits-Christoph, Gibbons, & Mukherjee, 2013).

Attempts at behavioral coding have varied in their depth from general, topographical assessments of the session such as those used in many Cognitive Behavioral Treatments (e.g., did the therapist ask about homework or set an agenda?) to highly detailed utterance level coding systems (e.g., verbal response modes; Stiles, Shapiro, & Firth-Cozens, 1988; Motivational Interviewing Skills Code; Moyers, Miller, & Hendrickson, 2005). However, behavioral coding as a technology has not fundamentally changed since Carl Rogers first recorded a psychotherapy session in the 1940s (Kirschenbaum, 2004), and coding carries a number of disadvantages. It is extremely time consuming, and reliability can be problematic to establish and maintain. In addition, there is no potential for human coding to scale up to larger applications (i.e., coding 1000 sessions takes 1000 times longer than coding 1 session, thus monitoring the quality of psychotherapy in a large scale naturalistic setting is not feasible over time). There is little flexibility – coding systems only code what they code. They must be developed a priori and cannot discover new meaning not specified in advance by the researcher. More substantively, coding systems are by nature extremely reductionistic – reducing the highly complex structure of natural human dialogue to a small number of behavioral codes.

Given these limitations, it is not surprising that the vast majority of raw data from psychotherapy is never analyzed and questions central to psychotherapy science remain either unanswered or impractical to address. Most content analyses of what patients and therapists actually discuss in psychotherapy are restricted to qualitative efforts that can be rich in content but by their nature are small in scope (e.g., Greenberg & Newman, 1996). While qualitative work remains important, the labor intensiveness of closely reading session content means that the vast majority of psychotherapy data is never analyzed. Consequently, the majority of psychotherapy studies are published without any detail as to what the specific conversations between patients and therapists actually entailed. Beyond the general theoretical description of the treatment outlined in manuals, what did the patients and therapists actually say? Are the different psychotherapies we have today linguistically unique? Or, do therapists who provide different name brand therapies say largely similar things? What specific therapist interventions, and in what combination are most predictive of good vs. bad outcomes? These basic questions form the backdrop of every therapist’s work, but have been impractical to consider given the current technology of behavioral coding and qualitative analysis.

A critical task for the next generation of psychotherapy research is to move beyond the use of behavioral coding to mine the raw verbal exchanges that are the core of psychotherapy, including acoustic and semantic content of what is said by patients and therapists. The use of discovery-oriented machine learning procedures offers new ways of exploring and categorizing psychotherapies based on the actual text of the patient and therapist speech.

Text Mining and Psychotherapy

The amount of data generated every day (e.g., digitized books, email, video, newspapers, blog posts, Twitter, electronic medical records, cell phone calls) has expanded exponentially in the last decade with implications for business, government, science, and the humanities (Hilbert & Lopez, 2011). Developments in data-mining procedures have revolutionized our ability to analyze and understand this vast amount of information, particularly in the area of text – sometimes called “computational linguistics” or “statistical text classification” (Manning & Schütze, 1999). The Google Books “n-gram” server (https://books.google.com/ngrams) allows for the evaluation of trends in single words (i.e., unigrams) or word combinations (bigrams, trigrams) in books. A recent paper analyzed words in 4% of all books (5,195,769 volumes), showing that patterns of emotion word use tracked in expected directions with major historical events (e.g., a sad peak during World War II; Acerbi, Lampos, Garnett, & Bentley, 2013).
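As a minimal illustration of the unigram, bigram, and trigram counts described above, the following Python sketch tallies n-grams in a toy sentence. The tokenizer (lowercasing and whitespace splitting), the function names, and the example sentence are assumptions made for illustration; they do not describe how the Google Books server processes text.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) in a token sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def ngram_counts(text, n):
    """Count n-grams after a naive lowercase/whitespace tokenization."""
    tokens = text.lower().split()
    return Counter(ngrams(tokens, n))


# Hypothetical example sentence, not taken from any corpus.
sentence = "i feel sad and i feel tired"
unigrams = ngram_counts(sentence, 1)
bigrams = ngram_counts(sentence, 2)
trigrams = ngram_counts(sentence, 3)
```

Tracking such counts over publication years is what allows trends in word use to be plotted; the sketch only covers the counting step.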

There is a small literature that demonstrates the utility of computational linguistic approaches for the analysis of psychotherapy data. The majority of these studies rely on human defined computerized dictionaries in which a software program classifies words or sets of words into predefined categories. In an early study Reynes, Martindale, and Dahl (1984) found that “linguistic diversity” was higher in more productive sessions. In addition, Mergenthaler and his colleagues have published several studies demonstrating that emotion and abstraction word usage discriminates between improved and un-improved cases (e.g., Mergenthaler, 2008; see also Anderson, Bein, Pinnell, & Strupp, 1999). Studies that have used dictionary-based strategies hold promise, but also have important limitations. First, perhaps because large corpora of psychotherapy transcripts are hard to find, these studies have generally been limited in scope (n < 100), reducing the value added of a computerized technology that can evaluate a large set of sessions (i.e., 1,000 or 10,000) in a short amount of time. Second, computerized dictionaries are limited by the categories created by humans – the computer cannot ‘learn’ new categories. Finally, dictionaries cannot generally accommodate the effect of context on semantic meaning (e.g., “dark” may reference a mood or the sky at night).
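The dictionary-based strategy, and its difficulty with context, can be sketched in a few lines of Python. The categories and word lists below are hypothetical stand-ins chosen for illustration; they are not drawn from any of the dictionaries used in the studies cited above.

```python
from collections import Counter

# Hypothetical mini-dictionary; real dictionaries are far larger.
CATEGORY_DICTIONARY = {
    "negative_emotion": {"sad", "hopeless", "dark", "afraid"},
    "positive_emotion": {"happy", "calm", "enjoy"},
}


def categorize(text, dictionary=CATEGORY_DICTIONARY):
    """Count how many tokens fall into each predefined category."""
    counts = Counter()
    for token in text.lower().split():
        word = token.strip(".,!?;:\"'")
        for category, words in dictionary.items():
            if word in words:
                counts[category] += 1
    return counts


# Both sentences score one "negative_emotion" hit for "dark",
# although only the first uses the word to describe mood.
mood = categorize("Everything has felt dark since the breakup.")
sky = categorize("It was already dark when I drove home.")
```

Because the lookup is fixed in advance, the program treats both uses of “dark” identically and can never propose a category that is absent from the dictionary.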

Topic Models

One specific text-mining approach that holds promise for psychotherapy transcript data is the topic model (also called Latent Dirichlet Allocation; Blei, Ng, & Jordan, 2003). Topic models are data-driven, machine learning procedures that seek to identify semantic similarity among groups of words. Similar to factor analysis, in which observed item values are functions of underlying dimensions, topic models view the observed words in a passage of text as a mixture of underlying semantic topics. An advantage of topic models is that they construct a linguistic structure from a set of documents inductively, requiring no external input, but can also be utilized in a supervised fashion to learn semantic content associated with particular codes or metadata (where metadata is any data outside of the text itself; Steyvers & Griffiths, 2007). There is recent work using these models to explore the structure of National Institutes of Health grant applications (Talley et al., 2011), publications from the Proceedings of the National Academy of Sciences (Griffiths & Steyvers, 2004), articles from the New York Times (Rubin, Chambers, Smyth, & Steyvers, 2011), and the identity of scientific authors (Rosen-Zvi, Chemudugunta, & Griffiths, 2010). Perhaps more strikingly, topic models have been used in the humanities to facilitate “distant reading” in comparative literature such that hypotheses in literary criticism can be tested vis-à-vis the entire corpus of relevant work (e.g., exploring stylistic similarities in poems; see Kao & Jurafsky, 2012; Kaplan & Blei, 2007).
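The mixture idea can be written compactly. In notation commonly used for topic models (the symbols are introduced here for illustration and do not appear in the text above), the probability of word $w$ in document $d$ is a weighted sum over $K$ topics:

$$
P(w \mid d) = \sum_{k=1}^{K} P(w \mid z = k)\, P(z = k \mid d)
$$

Here $P(w \mid z = k)$ is the distribution over words for topic $k$, and $P(z = k \mid d)$ is the weight of topic $k$ in document $d$; both are estimated from the text.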

With a few exceptions, topic models have yet to be applied to psychotherapy data (see Atkins et al., 2012 and also Salvatore et al., 2012, who used a derivative of latent semantic analysis, a forerunner to topic models; Landauer & Dumais, 1997). However, similar to the news articles, novels, and poems noted above, the words used during psychotherapy sessions by patients and therapists can be viewed as a large collection of text with a complex topical structure. The number of words generated during psychotherapy is quite large. A brief course of psychotherapy for a given patient may consist of 5-10 hours of unstructured dialogue including 12,000-15,000 words per hour (approximately 60,000 to 150,000 words; longer courses of treatment can exceed 1 million words). In 2011, a PubMed search revealed 932 citations for psychotherapy clinical trials (out of 10,698 across all years). As a conservative estimate, if we consider 500 studies per year, 50 participants per study, 5 sessions per participant, and 10,000 words per session, this leads to an estimate of 1.25 billion words (500 × 50 × 5 × 10,000) of psychotherapy text per year from clinical trials alone. Regardless of the specific estimate, it is clear that a huge amount of psychotherapy data is generated every year and that this number is likely to increase. The use of discovery oriented text mining procedures such as topic models could facilitate new ways of exploring and categorizing psychotherapies based on the actual content of the patient and therapist speech (rather than labels established by schools of psychotherapy).

Current Study

To evaluate the potential of topic models to “learn” the language of psychotherapy, we applied two different types of topic models to transcripts from 1,553 psychotherapy and psychiatric medication management sessions. Our first goal was to verify that topic models would estimate clinically relevant semantic content in our corpus of therapy transcripts. Second, we determined if semi-supervised models could identify semantically distinctive content from different treatment approaches and interventions (e.g., therapist “here and now” process comments about the therapeutic relationship within a session). A third aim was to explore the overall linguistic similarity and distinctiveness of sessions from different treatment types (e.g., psychodynamic vs. humanistic/experiential). Our final goal was to classify treatment types of new psychotherapy sessions automatically, using only the words used during the session.

Method

Data Sources

The data for the current study come from two different sources: 1) a large, general psychotherapy corpus that includes sessions from a diverse array of therapies, and 2) a set of transcripts focused on Motivational Interviewing, a specific form of cognitive behavioral psychotherapy for alcohol and substance abuse.

General Psychotherapy Corpus

The general corpus holds 1,398 psychotherapy and drug therapy (i.e., medication management) transcripts (approximately 2.0 million talk turns, 8.3 million word tokens including punctuation) pulled from multiple theoretical approaches (e.g., Cognitive Behavioral; Psychoanalysis; Motivational Interviewing; Brief Relational Therapy). The corpus is maintained and updated by the ‘Alexander Street Press’ (http://alexanderstreet.com/) and made available via library subscription. In addition to transcripts, there is associated metadata such as patient ID, therapist ID, limited demographics, session numbers when there was more than a single session, therapeutic approach, patient’s primary symptoms, and a list of subjects discussed in the session.

The list of symptoms and subjects was assigned by publication staff to each transcript, and no inter-rater reliability statistics were available. All labels were derived from the DSM-IV and other primary psychology/psychiatry texts. Many sessions were conducted by prominent psychotherapists who developed particular treatment approaches (e.g., James Bugental, Existential; Albert Ellis, Rational Emotive; Carl Rogers, Person-Centered; William Miller, Motivational Interviewing), and hence may serve as exemplars of these treatment approaches. To facilitate analysis, we categorized each psychotherapy session into 1 of 5 treatment categories: 1) Psychodynamic (e.g., psychoanalysis, brief relational therapy, psychoanalytic psychotherapy), 2) Cognitive Behavioral Therapy (e.g., Rational Emotive Behavior Therapy, Motivational Interviewing, Relaxation Training, etc.), 3) Experiential/Humanistic (e.g., Person Centered, Existential), 4) Other (e.g., Adlerian, Reality Therapy, Solution Focused, as well as group, family, and marital therapies), and 5) Drug therapy or medication management. In some cases, when a label was missing or more than one treatment label was assigned to a session, collateral information in the metadata was used to assign a single specific treatment label (i.e., a well known therapist associated with a specific intervention, reported use of specific interventions, and/or inspection of the raw transcript). If there was no collateral information or an appropriate label could not be determined, the first listed intervention was chosen as the treatment name, or the treatment label and category were left missing. In addition to treatment category, analyses used one subject label, “client-counselor relations”. This session-level label (i.e., applied to an entire session) was assigned to a transcript when there was a discussion about the patient-therapist relationship or interaction during the therapy.

Motivational Interviewing Corpus (MI; Miller & Rollnick, 2002)

We supplemented the general corpus above with a set of MI sessions (n = 148, 30,000 talk turns, 1.0 million word tokens). Transcripts are a subset of sessions from five randomized trials of MI for drug or alcohol problems, including: problematic drinking in college freshmen (Tollison, Lee, Neighbors, & Neil, 2008), 21st birthdays and spring break (Neighbors et al., 2012), problematic marijuana use (Lee et al., 2014), and drug use in a public safety-net hospital (Krupski, Joesch, & Dunn, 2012). Each study involved one or more in-person treatment arms that received a single session of MI. Sessions were transcribed as part of ongoing research focused on applying text-mining and speech signal processing methods to MI sessions (see, e.g., Atkins et al., 2014).

Data Analysis

The linguistic representation in our analysis consisted of the set of words in each talk turn. A part-of-speech tagger (Toutanova, Klein, & Manning, 2003) was used to analyze the types of words in each talk turn. We kept all nouns, adjectives, and verbs and filtered out a number of word classes such as determiners and conjunctions (e.g., “the”, “a”) as well as pronouns. This filtering dramatically reduces the size of the corpus to 1.2M individual words across 223K talk turns. We applied a topic model with 200 topics to this data set, treating each talk turn (either patient or therapist) as a “document.” In the topic modeling literature, the document defines the level at which words with similar themes are grouped together in the raw data. We could define documents in a number of ways (e.g., all words in the session or all words from a specific person), but we have found in previous research within clinical psychology (Atkins et al., 2012) that defining documents by talk turns enhances the interpretability of the resulting topics. In a topic model, each topic is modeled as a probability distribution over words and each document (talk turn) is treated as a mixture over topics. Each topic tends to cluster together words with similar meaning and usage patterns across talk turns. The probability distribution over topics in each talk turn gives an indication of which semantic themes are most prevalent in the talk turn. For further details on topic models, see Atkins et al. (2012).
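To make the two distributions concrete, the following Python sketch fits a very small topic model by collapsed Gibbs sampling, one common estimation method for Latent Dirichlet Allocation. It is not the implementation used in this study: the function names, the hyperparameters `alpha` and `beta`, the number of iterations, the two-topic setting, and the toy talk turns are all assumptions chosen for illustration.

```python
import random


def fit_lda(docs, n_topics, n_iter=200, alpha=0.1, beta=0.01, seed=0):
    """Fit LDA by collapsed Gibbs sampling.

    docs: list of token lists (each list plays the role of one talk turn).
    Returns (vocab, topic_word, doc_topic): topic_word[k] is a probability
    distribution over vocab, doc_topic[d] a distribution over topics.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)

    n_dk = [[0] * n_topics for _ in docs]      # topic counts per document
    n_kw = [[0] * V for _ in range(n_topics)]  # word counts per topic
    n_k = [0] * n_topics                       # total tokens per topic
    z = []                                     # topic assignment per token

    # Random initialization of topic assignments.
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            k = rng.randrange(n_topics)
            z_d.append(k)
            n_dk[d][k] += 1
            n_kw[k][word_id[w]] += 1
            n_k[k] += 1
        z.append(z_d)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], z[d][i]
                # Remove the token's current assignment from the counts.
                n_dk[d][k] -= 1
                n_kw[k][v] -= 1
                n_k[k] -= 1
                # p(z = t | rest) is proportional to
                # (n_dk + alpha) * (n_kw + beta) / (n_k + V * beta).
                weights = [
                    (n_dk[d][t] + alpha) * (n_kw[t][v] + beta) / (n_k[t] + V * beta)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights)[0]
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][v] += 1
                n_k[k] += 1

    topic_word = [
        [(n_kw[k][v] + beta) / (n_k[k] + V * beta) for v in range(V)]
        for k in range(n_topics)
    ]
    doc_topic = [
        [(n_dk[d][k] + alpha) / (len(doc) + n_topics * alpha) for k in range(n_topics)]
        for d, doc in enumerate(docs)
    ]
    return vocab, topic_word, doc_topic


def top_words(vocab, topic_word, k, n=3):
    """Return the n most probable words for topic k."""
    ranked = sorted(range(len(vocab)), key=lambda v: topic_word[k][v], reverse=True)
    return [vocab[v] for v in ranked[:n]]


# Hypothetical, already-filtered talk turns (content words only).
toy_turns = [
    ["sleep", "energy", "mood", "sleep"],
    ["mood", "energy", "appetite", "sleep"],
    ["drinking", "alcohol", "drinks", "party"],
    ["alcohol", "drinking", "party", "drinks"],
]
vocab, topic_word, doc_topic = fit_lda(toy_turns, n_topics=2)
```

Each row of `topic_word` corresponds to one topic's distribution over words, and each row of `doc_topic` to one talk turn's mixture over topics. Calling `top_words(vocab, topic_word, k)` lists the most probable words for topic `k`, which is the kind of summary a researcher would then inspect and label.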

Results

Exploration of Specific Topics

First, we used topic models to explore what therapists and patients talk about. As noted earlier, topic models estimate underlying dimensions in text, which ideally capture semantically similar content (i.e., the underlying “topics”). Thus, in applying topic models to psychotherapy transcripts, an initial question is whether the models extract relevant semantic content. Table 1 presents 20 selected topics (of 200 total) from an unsupervised topic model applied to all session transcripts (i.e., these topics were generated inductively without any input from the researchers). It is clear that the words in each topic provide semantically related content and capture aspects of the clinical encounter that we might expect therapists and patients to discuss. We have organized topics into four areas: 1) Emotions/Symptoms, 2) Relationships, 3) Treatment, and 4) Miscellaneous. Similar to factor analysis, all labels were supplied by the current authors; the model itself simply numbers the topics. The top 10 most probable words for each topic are provided along with author generated topic labels to aid interpretation. For example, the emotion category includes several symptom relevant topics. Topic 15 (Depression) includes many of the specific symptom criteria for depression (e.g., sadness, energy, hopelessness; the word “depression” is the 16th most probable word), and topic 149 (Anxiety) includes words relevant to the discussion of a panic attack.

Table 1.

Selected Topics

| Emotions | | | | |
|---|---|---|---|---|
| Anxiety: 149 | Crying: 124 | Hurt Feelings: 156 | Enjoyment: 100 | Depression: 15 |
| anxiety | crying | feelings | enjoy | self |
| nervous | cry | hurt | fun | fine |
| anxious | hurt | strong | excited | low |
| panic | cried | emotions | enjoying | sad |
| attack | upset | express | find | appetite |
| attacks | emotional | emotion | enjoyed | hopeless |
| tense | tears | intense | pleasure | helpless |
| calm | face | touch | exciting | esteem |
| depressed | start | emotional | interest | irritable |
| hyper | sudden | hurts | company | energy |

| Relationships | | | | |
|---|---|---|---|---|
| Sex: 146 | Pregnancy: 168 | Conflict: 73 | Family Roles: 76 | Intimacy: 60 |
| sex | baby | hate | sister | relationship |
| sexual | boy | fight | brother | relationships |
| normal | pregnant | stand | older | close |
| relationship | child | awful | younger | sexual |
| healthy | born | horrible | mother | involved |
| desire | boys | fighting | family | develop |
| satisfied | girl | terrible | daughter | intimate |
| involved | son | argument | father | connection |
| marriage | mother | argue | mom | open |
| enjoy | age | hated | sisters | physical |

| Treatment | | | | |
|---|---|---|---|---|
| Medication: 196 | Behav Pattern: 198 | MI Survey: 135 | Goal Setting: 131 | Subst Use Tx: 69 |
| wellbutrin | behavior | information | set | treatment |
| prozac | pattern | questions | goal | program |
| zoloft | aggressive | feedback | goals | need |
| medicine | least | helpful | expectations | options |
| medicines | example | survey | successful | stay |
| effexor | irrational | based | success | meetings |
| lexapro | personality | interested | own | available |
| add | conscious | great | setting | sound |
| generic | follow | useful | working | use |
| lamictal | identify | use | accomplish | option |

| Miscellaneous | | | | |
|---|---|---|---|---|
| Change: 65 | Medical: 80 | Drinking: 104 | Appearance: 120 | Acceptance: 50 |
| difference | doctor | alcohol | wear | accept |
| noticed | hospital | drinking | hair | find |
| notice | cancer | social | clothes | change |
| big | doctors | effects | looking | willing |
| huge | disease | tired | feet | least |
| change | nurse | outgoing | dress | accepting |
| improvement | surgery | drunk | stand | accepted |
| happens | sick | situations | wearing | situation |
| significant | patients | sounds | shoes | hope |
| differences | medical | relaxed | ugly | possibility |

The relationship category illustrates how a topic model can handle differences in meaning depending on context. Topic 146 (Sex) and Topic 60 (Intimacy) both include derivatives of the words relationship and sex. In Topic 60, these words occur in the context of words such as close, intimate, connection, and open, suggesting that they had different implications than when they occur in Topic 146, which includes words such as desire, enjoy, and satisfied. The basic topic model can infer differing meanings of identical words (e.g., play used in reference to theater vs. children) as long as the documents that the words occur in have additional semantic information that would inform the distinction (Griffiths & Steyvers, 2007). In the treatment category, topic 196 includes a number of medication names and is clearly related to discussions of psychopharmacological treatment. Topic 198 (Behavior Patterns) includes words that might be typical in the examination of behavior/thought patterns (e.g., irrational, pattern, behavior, identify). We considered labeling this topic “CBT” given words that might be found in an examination of thoughts in cognitive therapy. However, we found that this topic was actually more prevalent in psychodynamic sessions as compared to CBT sessions. This finding highlights the complexities of topic models. While the model returns a cluster of words, the researcher must infer what the cluster means.

Identification of Therapist Interventions

To demonstrate the utility of a topic model in the discovery of language specific to different approaches to psychotherapy, we utilized a ‘labelled’ topic model (Rubin et al., 2011) wherein the model learns language that is associated with a particular label – in the present case a session-level label that identifies the type of psychotherapy (e.g., CBT vs. Psychodynamic). We used the output from this model to identify specific therapist talk turns that were statistically representative of a given label. In the general psychotherapy corpus, there were no labels or codes for talk turns, only for the session as a whole. Given the labels for each session and the heterogeneity of word usage across sessions, the model ‘learns’ which talk turns were most likely to give rise to a particular label for the entire session.
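The step of picking out representative talk turns can be illustrated with a deliberately simplified Python sketch. It assumes that word distributions for one label and for a generic background have already been estimated, and it ranks talk turns by the average posterior probability that their words came from the label distribution. This two-component scoring is a stand-in for illustration, not the labeled topic model of Rubin et al. (2011); the distributions, the `prior` and `floor` parameters, and the toy talk turns are all hypothetical.

```python
def label_score(tokens, label_dist, background_dist, prior=0.5, floor=1e-6):
    """Mean posterior probability that a token was generated by the label topic."""
    if not tokens:
        return 0.0
    total = 0.0
    for w in tokens:
        p_label = prior * label_dist.get(w, floor)
        p_back = (1.0 - prior) * background_dist.get(w, floor)
        total += p_label / (p_label + p_back)
    return total / len(tokens)


def most_representative(turns, label_dist, background_dist, n=2):
    """Return the n talk turns with the highest label score, best first."""
    return sorted(
        turns,
        key=lambda t: label_score(t, label_dist, background_dist),
        reverse=True,
    )[:n]


# Hypothetical word distributions, standing in for a fitted model's output.
drug_therapy = {"mood": 0.3, "sleep": 0.3, "energy": 0.2, "appetite": 0.2}
background = {"okay": 0.4, "week": 0.3, "time": 0.2, "mood": 0.1}

turns = [
    ["okay", "week", "time"],
    ["sleep", "energy", "mood"],
    ["mood", "week", "okay"],
]
ranked = most_representative(turns, drug_therapy, background)
```

In this toy example the turn about sleep, energy, and mood ranks first because nearly all of its words are far more probable under the label distribution than under the background.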

In Table 2, we provide four highly probable talk turns for six different treatments. The depicted statements are what might be considered prototypic therapist utterances for each treatment. Client-centered talk turns appear to be reflective in nature, while utterances in rational emotive behavior therapy have a quality of identifying irrational thought patterns. Brief relational interventions focus on here and now experiences, and the selected talk turns for MI were those typical for the brief structured feedback session that therapists were trained to provide in several of the MI clinical trials included in the corpus.

Table 2.

Most probable talk turns for specific treatment labels.

| Treatment Label | Example Therapist Talk Turns Assigned by Model |

|---|---|

| Drug Therapy | No trouble getting to sleep or staying asleep? and how’s your energy level holding up, you doing okay? So, so in this, lorazepam. so in this sweep or time, over these 3 months, how’s your mood been? separate from this setback, how’s your mood been in general? |

| I saw you, ah, … your mood was okay. you were recently stable. You were not sad or anxious or irritable. your appetite and sleep were fine. Your energy was good. You were exercising, some revving up in fall. Again, I had not seen you since august before. So and august was all the drama over the breakup. | |

| I’ll give you the 25’s. So let me write for the 200’s. okay, lamictal 200 and I’ll write for Wellbutrin, 3 of the 150’s? | |

|

Client-centered

Therapy |

And Its kind of like, I guess it’s like it felt great to be to finally sleep with [Name]. Its kind of like, like you really see yourself kind of extending yourself. |

| Yeah, I kind of sense like you really feel like a blank today. | |

| Yeah. It’s like you really kind of feel like emotionally you may … you’re deadened inside or keep yourself guarded. | |

| Psychoanalysis | I see. It’s as though you were being a kind of medium for us. |

| So you’re, you’re afraid on the one hand to let your thoughts go to something else because you feel you’re leaving the subject, eh. | |

| I gather from what you say that you must wonder whether or not to tell your parents that you’ve started analysis. Well, I don’t know that there is. It just strikes me that you tell me the dream, you don’t say anything about it, and then say what you’ve been thinking about now, among other things, whether you should learn to use a diaphragm, and whether you need it and why do you need it. Which are the questions in the dream. | |

|

Brief Relational

Therapy |

But how did it feel? Did it feel like I was letting you down? or did it feel like I was wimping out? did it? And I was sort of trying to explore what was going on between you and me. |

| I’m asking you now how does it feel to say it? | |

| Let us talk about what is going on here? How are you feeling right now? | |

|

Rational Emotive

Therapy |

Ok. And what about the bigger one, that “[Name] should do things the way that I want them done and if he doesn’t, he’s an asshole”? You are like a star student they were like whose. Where was I? So it is more of the catastrophic thinking and there is some self-doubting as well? |

| Well maybe there is three, because is there the ‘I can’t stand that he hasn’t called. I can’t stand this.’?…. | |

| To succeed. That is kind of your main or irrational belief. “I should not have to work as hard as other people to succeed.” | |

| Motivational Interviewing | Okay final two continue to minimize my negative impacts on the environment. How if at all does Marijuana use affect attainment of that goal? Mm- hmm and how might that fit into your plans for spring break. |

| okay so eight drinks over two hours would put you at a point one seven two. | |

| So this next part is about BAC or Blood Alcohol Content. |

Note. The four most representative therapist talk turns for 5 specific treatments. Direct quotations from session transcripts reproduced with permission by Alexander Street Press (http://alexanderstreet.com/).

Table 2 presents results from a labeled topic model using psychotherapy type as the label categorizing a session. We explored whether the model could learn more nuanced, psychological labels, focusing on “client-counselor relations” – a code that was used to label sessions that included discussions between client and therapist about their relationship/interaction. As with the identification of therapist talk turns, the client-counselor relations code was assigned to an entire transcript. Consequently, the model must learn to discriminate between language in these sessions that is irrelevant to the label (e.g., general questions, scheduling, pleasantries, other interventions, etc.) and language that involves the client and therapist talking about their relationship. Table 3 provides the five most probable therapist talk turns associated with the client-counselor relations label. Each talk turn is clearly related to a therapist making a comment about the patient-therapist interaction.

Table 3.

Example Therapist Talk Turns Assigned by Model for Label Client-Counselor Relations

| Five Most Probable Talk Turns |
|---|
| I am asking you questions. I am asking you questions and asking you to look at stuff and you are joking and giggling again. |
| I guess I could try to explain it again. I’m just wondering if any explanation I give because we - we have - we have discussed what we’re doing in therapy or how this works. |
| Well you might garner sort of what it feels like just to be able to when I do different things. How it makes you feel that we bring attention to it sometimes. And - and your reactions to it are really important, ‘cause in the outside world, your reactions are going to be telling you what your experience is. |
| Okay, so let me come back for a second. Because what you are talking about is important and it is a big part of what this impasse that we have been having is all about. I was curious and I am not sure if you answered about the laughing today. |
| Well no… wait. There is something. We were on the cusp of discussing something really important when this came up? Let me ask you the question more directly. Did you want to discuss this whole thing with [Name] in the session? |

Note. Direct quotations from session transcripts reproduced with permission by Alexander Street Press, Counseling and Psychotherapy Transcripts, Client Narratives, and Reference Works (http://alexanderstreet.com/).

Discrimination of Treatment Approaches

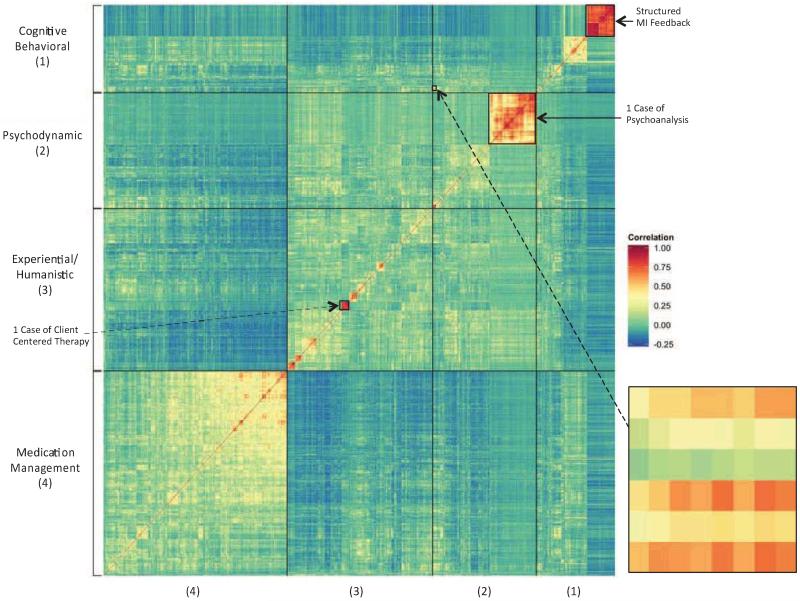

In addition to low-level identification of therapist statements, we used topic models to make high-level comparisons related to the linguistic similarity of sessions. How similar are sessions, given the semantic content identified by the topic model? We used the output from the unsupervised topic model to explore the semantic similarity of 1,318 sessions across 4 treatment categories (i.e., Medication Management, Psychodynamic, CBT, Humanistic/Existential). Specifically, it is possible to assign individual words within sessions to one of the 200 topics. The sum of the words in each topic for each session provides a session-level summary of the session’s semantic content: a model-based score on each of 200 topics for each of the 1,318 sessions (see Footnote 1). Given these semantic summaries of each session, we then computed a correlation matrix of each session with every other session. A high correlation between two sessions indicates similar semantic content, defined by the 200 topics of the topic model. Because a 1,318 × 1,318 matrix of correlations would be utterly unreadable as a table of numbers, we present the correlation matrix visually using color-encoded values for the correlations.

This style of visualization is referred to as a heatmap, as the initial versions often used red to yellow coloring to note the intensity of the numeric values. In Figure 2 the color scale on the right shows how correlation values are mapped to specific colors: Orange and red pixels represent highly correlated sessions, and blue and green pixels indicate little correlation in topic frequencies. The correlation matrix was purposefully organized by treatment category. We have highlighted several highly correlated blocks of sessions that represent (a) a set of highly structured motivational interviewing feedback sessions from a clinical trial, (b) a large number of sessions from a single case of psychoanalysis, and (c) several sessions from a single case of client-centered therapy. Sessions within a treatment category are generally more correlated with each other than with sessions from other categories (e.g., medication management sessions generally have similar topic loadings that are heavily driven by drug names, dosing schedules, etc.). However, correlations across psychodynamic and humanistic/experiential sessions were often moderate, such that it is difficult to separate them from visual inspection of the plot. In addition, there are pockets of sessions that are correlated across categories. For example, the zoomed-in portion of the heatmap depicted in the lower right portion of Figure 2 highlights several psychodynamic and cognitive-behavioral sessions that had very similar topic loadings. Interestingly, several of these sessions had both CBT and brief relational therapy labels, suggesting that the model was sensitive to potential overlap in content that was identified by the human raters who created the database.

Figure 2.

A 1318 × 1318 heatmap, depicting the correlation of topics across each session. The color scale on the right shows how correlation values are mapped to specific colors. The correlation matrix is organized by treatment category and several select groupings of sessions are highlighted.
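The session-level scoring and correlation step behind Figure 2 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: the toy word counts and the function names are hypothetical, the Pearson coefficient is assumed (the text says only that a correlation matrix was computed), and three sessions with four topics stand in for the 1,318 sessions and 200 topics.

```python
from math import sqrt

def topic_proportions(counts):
    """Divide a session's per-topic word counts by its total word count,
    so that long and short sessions are on the same scale."""
    total = sum(counts)
    return [c / total for c in counts]

def pearson(x, y):
    """Pearson correlation of two equal-length score vectors.
    Assumes neither vector is constant (non-zero variance)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def correlation_matrix(session_counts):
    """Session-by-session correlation of length-normalized topic scores."""
    props = [topic_proportions(c) for c in session_counts]
    return [[pearson(a, b) for b in props] for a in props]

# Hypothetical toy data: rows are sessions, columns are topics.
toy_counts = [
    [40, 10, 5, 5],    # session A
    [80, 20, 10, 10],  # session B: same topic mix as A, twice as long
    [5, 5, 10, 40],    # session C: a different topic mix
]
corr = correlation_matrix(toy_counts)
```

Because each session's counts are divided by its word total, sessions A and B, which differ only in length, are perfectly correlated, while session C correlates negatively with both.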

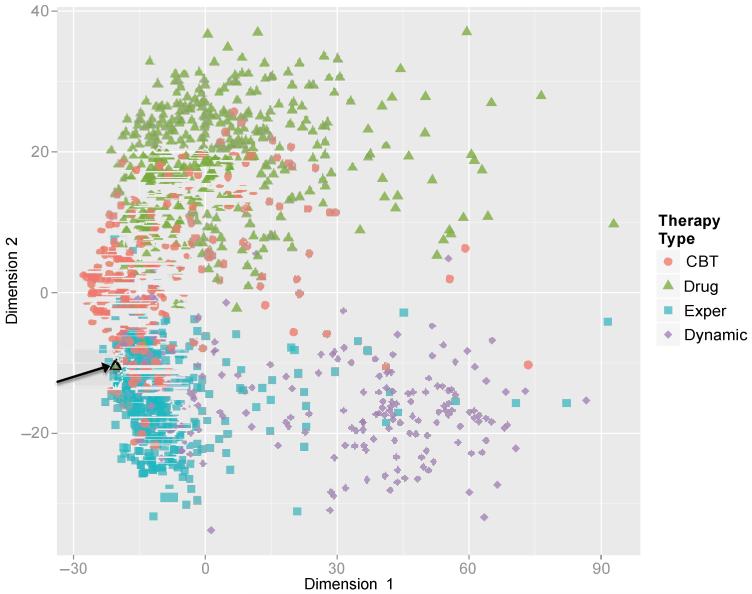

Figure 3 is an alternative visual representation that highlights the semantic similarities and differences across sessions, called a multidimensional scaling (MDS; Cox & Cox, 2000) plot. Using the same session-level topic scores from the correlation matrix above, MDS treats each session’s 200 values as a set of coordinates (in a 200-dimensional mathematical space). Thus, the topic model-based semantic scoring can be used to define the distance of each session from every other session within a 200-dimensional semantic space. Somewhat similar to factor analysis, MDS finds an optimal, lower dimensional space that best represents the overall distance matrix; Figure 3 plots the results of the MDS. Each color-coded dot represents a single session. There was separation between treatment types such that treatment classes were broadly grouped together. However, there was variability within treatment approaches. For example, one set of CBT sessions (denoted in red) is notably different from other sessions. These are the structured MI sessions that all focus on drug or alcohol problems. Other CBT sessions are much more similar to other treatment approaches and, interestingly, appear to lie in between the highly structured medication management sessions and the much less structured experiential sessions. In addition, we highlighted one medication management session that was distinct from the other medication management sessions, located much closer to experiential psychotherapy sessions. An inspection of this transcript revealed that there was no direct discussion of medications or dosage, potentially indicating a medication provider who focused on providing psychotherapy rather than checking medication dosage and side effects.

Figure 3.

Multidimensional scaling of 1318 sessions in a 200 topic space. Colors correspond to different treatment approaches. One outlier medication management session is circled in black.
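To make the idea of projecting sessions from topic space into a plottable plane concrete, here is a minimal sketch of classical (Torgerson) MDS in plain Python. It rests on assumptions: the text does not state which MDS variant or distance measure was used, so Euclidean distance and the classical algorithm are stand-ins, and the four toy sessions with three topic proportions each are hypothetical.

```python
from math import sqrt

def euclidean(a, b):
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def double_center(sq_dist):
    """Turn a symmetric squared-distance matrix into an inner-product matrix."""
    n = len(sq_dist)
    row_mean = [sum(row) / n for row in sq_dist]
    grand_mean = sum(row_mean) / n
    return [[-0.5 * (sq_dist[i][j] - row_mean[i] - row_mean[j] + grand_mean)
             for j in range(n)] for i in range(n)]

def mat_vec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def top_eigenpair(m, iters=500):
    """Largest eigenvalue and unit eigenvector by power iteration."""
    n = len(m)
    v = [1.0 + 0.01 * i for i in range(n)]  # fixed, non-constant start
    norm = sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iters):
        w = mat_vec(m, v)
        norm = sqrt(sum(x * x for x in w))
        if norm < 1e-12:  # nothing left to extract
            return 0.0, v
        v = [x / norm for x in w]
    eigenvalue = sum(a * b for a, b in zip(v, mat_vec(m, v)))
    return eigenvalue, v

def classical_mds(points, dims=2):
    """Embed points in `dims` dimensions, preserving pairwise distances
    as well as a linear projection can."""
    n = len(points)
    sq_dist = [[euclidean(p, q) ** 2 for q in points] for p in points]
    b = double_center(sq_dist)
    coords = [[] for _ in range(n)]
    for _ in range(dims):
        lam, vec = top_eigenpair(b)
        scale = sqrt(max(lam, 0.0))
        for i in range(n):
            coords[i].append(scale * vec[i])
        # Deflate: remove the component just extracted.
        b = [[b[i][j] - lam * vec[i] * vec[j] for j in range(n)]
             for i in range(n)]
    return coords

# Hypothetical topic proportions for four sessions over three topics.
sessions = [
    [0.8, 0.1, 0.1],
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.1, 0.1, 0.8],
]
coords = classical_mds(sessions, dims=2)
```

In this toy case the proportions sum to one, so the points already lie in a plane and the two-dimensional embedding reproduces the original distances. With 200 topics, a two-dimensional plot can only approximate them.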

The previous results are exploratory visualizations demonstrating how semantic content from a topic model could distinguish categories of psychotherapy. Our final analysis examined how accurately the 200 topics could discriminate these four classes of psychotherapy sessions, a task analogous to multinomial logistic regression. We used a machine learning model called a random forest, with the 200 topics as predictors (Breiman, 2001). Random forest models are a type of ensemble learner, in which many classification and regression trees are fit to resampled versions of the data and then aggregated into a single, overall prediction model (see Footnote 2). The prediction accuracy of the model is tested using sessions that were not used during the training phase. This is a type of cross-validation in which the prediction accuracy of a model is tested on data points that were not included in the model creation. The overall, cross-validated classification error rate was 13.3%, showing strong predictive ability of the topic model-based predictors. As we saw in the earlier visualizations, the semantic information identified by the topic model is highly discriminative of the classes of psychotherapy. Table 4 shows the specific types of errors that the model makes (called a confusion matrix). The rows contain the true psychotherapy categories, and the columns have the model predictions. The counts along the main diagonal indicate correct classifications by the model and off-diagonal elements are errors. Not surprisingly, the model is most accurate at identifying medication management sessions but is also quite accurate with experiential psychotherapy. It is less accurate with CBT and Psychodynamic sessions, which are more likely to be misclassified as experiential psychotherapy. This makes clinical sense, as the hallmarks of good experiential psychotherapy are reflective listening skills, which are common to (though not as strongly emphasized in) CBT and Psychodynamic treatments.
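The resampling scheme that underlies this model (2,000 bootstrap datasets of 1,318 sessions, with 30 of the 200 topics drawn at random for each, as described in the footnotes) can be sketched as follows. This is an illustration only: the function names and the random seed are hypothetical, the tree-fitting step is omitted, and treating the sessions left out of a bootstrap sample as that tree's held-out test set is an assumption about how "sessions that were not used during the training phase" were obtained.

```python
import random
from collections import Counter

N_SESSIONS = 1318    # sessions in the analysis
N_TOPICS = 200       # topic scores available as predictors
N_TREES = 2000       # bootstrap datasets, one tree per dataset
N_PREDICTORS = 30    # predictors sampled per bootstrap dataset

def draw_resample(rng):
    """Return (in-bag session indices, predictor indices, held-out indices)."""
    in_bag = [rng.randrange(N_SESSIONS) for _ in range(N_SESSIONS)]
    predictors = rng.sample(range(N_TOPICS), N_PREDICTORS)
    held_out = sorted(set(range(N_SESSIONS)) - set(in_bag))
    return in_bag, predictors, held_out

def majority_vote(votes):
    """Aggregate the class labels predicted by individual trees."""
    return Counter(votes).most_common(1)[0][0]

rng = random.Random(0)  # hypothetical seed, for reproducibility only
in_bag, predictors, held_out = draw_resample(rng)

# A full run would repeat draw_resample N_TREES times, fit one tree per
# draw, and score each session only with trees that never saw it.
example_vote = majority_vote(["CBT", "Exper", "CBT"])
```

Sampling with replacement leaves roughly a third of the sessions out of each bootstrap dataset, which is what makes a held-out error estimate possible without setting aside a separate test set.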

Table 4.

Confusion matrix of true vs. predicted treatment labels.

| True Category | Predicted CBT | Predicted Drug | Predicted Exper | Predicted Dynamic | Class Error |
|---|---|---|---|---|---|
| CBT | **153** | 17 | 42 | 8 | .30 |
| Drug | 4 | **454** | 7 | 1 | .03 |
| Exper | 0 | 6 | **351** | 12 | .05 |
| Dynamic | 4 | 8 | 66 | **185** | .30 |

Note. CBT = cognitive behavioral therapy; Drug = medication management; Exper = experiential / humanistic therapy; Dynamic = psychodynamic or psychoanalytic therapy. The bolded, diagonal elements represent correct classifications by the model, and off-diagonal elements represent errors. The final column has the classification errors of the model for each category of therapy (i.e., row). The overall error-rate is .13.
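As a worked check, the class error and overall error figures can be recomputed directly from the counts in Table 4. The snippet below is a small sketch; the variable and function names are illustrative, and only the counts come from the table.

```python
labels = ["CBT", "Drug", "Exper", "Dynamic"]

# Rows are true categories, columns are predicted categories (Table 4).
confusion = [
    [153, 17, 42, 8],
    [4, 454, 7, 1],
    [0, 6, 351, 12],
    [4, 8, 66, 185],
]

def class_errors(matrix):
    """Share of each true category (row) that was misclassified."""
    return [1 - row[i] / sum(row) for i, row in enumerate(matrix)]

def overall_error(matrix):
    """Share of all sessions that fall off the main diagonal."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return 1 - correct / total

per_class = dict(zip(labels, class_errors(confusion)))
overall = overall_error(confusion)
n_sessions = sum(sum(row) for row in confusion)
```

The rows sum to 1,318 sessions, 175 of which are off the diagonal, giving the reported overall error rate of about 13.3%.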

Discussion

We used a specific computational method, topic models, to explore the linguistic structure of psychotherapy. Without any user input, these models discovered sensible topics representing the issues that therapists and patients discuss, and facilitated a high-level representation of the linguistic similarity of sessions wherein we could identify specific cases, potentially overlapping content across treatment approaches, as well as outlier sessions. By including human-generated session labels, topic models learned therapist statements associated with different treatment approaches and interventions, including therapist comments about the therapeutic relationship, which are often considered among the more complex interventions in the therapist repertoire. Using only the words spoken by patients and therapists, the topic model classified treatment sessions with a high degree of accuracy.

Limitations

While the present study represents what we believe is the largest comparative study of linguistic content from psychotherapy ever conducted, there are important limitations that we discuss before highlighting potential implications. First, in terms of the data, the combined general psychotherapy and MI corpus is very heterogeneous along several dimensions (e.g., treatment approach, topics of discussion, etc.), but it is certainly not a random sample of general psychotherapy, and the sessions were not necessarily collected for research purposes. While the diversity of the corpus facilitates the examination of differences between approaches, the database is also highly unbalanced. There is an over-representation of select cases (over 200 sessions from 1 case), and relatively few sessions from many approaches. For example, CBT is under-represented relative to its empirical standing in modern psychotherapy research, and many of the CBT sessions are Motivational Interviewing sessions that may not be representative of other more modal CBT interventions (e.g., Prolonged Exposure, Cognitive Therapy for depression). As a result, linguistic differences between treatments may be confounded with other differences in the selected sessions not related to approach (e.g., therapists, symptoms, idiosyncratic patient factors, etc.). The labeling of sessions was not done with standard adherence manuals, such that no estimates of reliability are possible. There is no symptom severity or diagnostic data beyond session-level labels (e.g., a label indicating that depression was discussed in a session). There is no audio, which is clearly important to the evaluation of psychotherapy.

The model itself has a number of important limitations. First, the topic model we used did not include information regarding the temporal ordering of words and talk turns. This is common to most topic models, which make a “bag of words” assumption that word order is not critical. For most prior applications (e.g., news articles and scientific abstracts), this may be a reasonable assumption, but for spoken language it is clearly quite tenuous. In addition, while the removal of specific words like pronouns reduces the complexity of the data, it is likely that these words are quite important in psychotherapy and general human interactions (Williams-Baucom, Atkins, Sevier, Eldridge, & Christensen, 2010). The model was also restricted to text and did not have access to the acoustic aspects of these treatment interactions, which are also important (Imel et al., 2014). Future studies should incorporate the above features.

Transcription is a practical barrier to expanding this work. To use these methods, researchers would be required to transcribe thousands of sessions from clinical trials. While this is an important practical limitation, we believe the primary reason that transcription remains uncommon is that the methods available to analyze transcript data in psychotherapy are labor intensive. In comparison to the cost of a clinical trial, the cost of basic transcription is minimal, and transcription could proceed in parallel with the clinical trial. Thus, while transcription would add costs to clinical trials, the costs would be trivial compared to the potential long-term scientific impact of retaining the raw ingredients that were involved in the change process. It is also important to note that automated speech recognition (ASR) techniques continue to improve, and may someday eliminate the need for human transcription entirely.

Implications

The primary implications of the topic model and other associated machine learning approaches will be in (1) targeted evaluation of questions in clinical trials that compare specific therapies, and (2) exploration of very large scale naturalistic datasets that capture variability in psychotherapy as actually practiced.

First, consider a recent large (n = 495) clinical trial comparing psychodynamic psychotherapy to CBT for social anxiety disorder (Leichsenring et al., 2013). Both treatments were better than wait-list. Between-treatment comparisons were generally equivocal (e.g., CBT had somewhat larger remission rates, but response rates were not significantly different, and no differences met clinically significant benchmarks set a priori). Differences between therapists (5-7% of variance in outcomes) were larger than treatment effects (1-3% of variance in outcomes). As is typical with large-scale psychotherapy clinical trials, there have already been published comments (Clark, 2013) and rejoinders (Leichsenring & Salzer, 2013) on possible explanations for the findings, wherein Clark raised questions about the implementation of the CBT and Leichsenring reported that the competence of psychodynamic therapists may not have been ideal. In addition, Leichsenring and Salzer (2013) noted that CBT therapists used more dynamic interventions than dynamic therapists used CBT-related interventions, raising questions about the internal validity of the trial. It is also possible that types of statements not specific to either intervention were responsible for between-therapist differences in outcomes.

As with other large psychotherapy clinical trials (e.g., Elkin, 1989), the debate will likely continue. However, a fundamental problem remains. While all treatment sessions were recorded, comparisons of adherence and competence were based on a total of 50 sessions (Leichsenring & Salzer, 2013). As the mean number of sessions for a patient was 25, and 416 patients received either CBT or psychodynamic treatment, the trial consisted of over 10,000 sessions (roughly 7 times more sessions than included in this paper). Analyses of what actually happened in this trial are driven by about ½ of 1% of all available sessions. This sample size is typical and understandable given the labor intensiveness of behavioral coding. However, given the centrality of treatment mechanism questions to the field of psychotherapy, we look forward to more thorough analyses of process questions with computational methods. For example, researchers could conduct original human coding of subsets of sessions and use these data to train topic models that might examine a larger collection of sessions. This research may ultimately lead to more definitive answers regarding what actually happens during patient-therapist interactions and what specific therapist behaviors predict treatment outcomes within and across specific treatments.
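The arithmetic behind these figures is easy to reproduce. The short sketch below uses only numbers quoted in this paper (416 patients, a mean of 25 sessions, 50 coded sessions, and the 1,553 transcripts analyzed here); the variable names are illustrative.

```python
patients = 416            # received CBT or psychodynamic treatment
mean_sessions = 25        # mean number of sessions per patient
coded_sessions = 50       # sessions rated for adherence and competence
corpus_sessions = 1553    # transcripts analyzed in the present paper

trial_sessions = patients * mean_sessions               # approximate total
coded_percent = 100 * coded_sessions / trial_sessions   # share that was coded
size_ratio = trial_sessions / corpus_sessions           # trial vs. this corpus

print(f"{trial_sessions} sessions, {coded_percent:.2f}% coded, "
      f"{size_ratio:.1f}x the present corpus")
```

The total is an approximation, because it multiplies a mean by a patient count, but it is enough to show that fewer than 1 in 200 sessions informed the adherence and competence comparisons.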

Funding agencies may consider requiring archives of audio and transcripts for sessions in clinical trials such that they can be used in later research. While there are privacy concerns that would need to be addressed in such a procedure, there is simply no other way for researchers to adequately evaluate what happened in the treatment. While manuals exist, these prescriptive books are not sufficient to capture the complexity of what happens during the clinical encounter. To truly understand the mechanisms of psychotherapy we must begin to contend with the sheer complexity and volume of linguistic data that is created during our work.

More practically, topic models could be used as adjuncts to training and fidelity monitoring in clinical trials or naturalistic settings, automatically highlighting outlier sessions or noting particular therapist interventions that were inconsistent with the specified treatment approach. In naturalistic settings, topic models could be used as a quantitatively derived aid to the traditional qualitative, report based models of supervision. In combination with speech recognition, and selective human coding, one could imagine extremely large psychotherapy process studies (e.g., 100,000 sessions), that avoid confidentiality concerns by evaluating session content without requiring humans to listen directly to all sessions. Studies of this size could be positioned to discover specific processes that are involved in successful vs. non-successful cases.

Conclusions

We design treatments, package them in books and hope that trained providers implement them in a way that is faithful to the theory and makes sense for a given patient. This implementation often involves many hours of emotional, unstructured dialogue. Specifically, the patient-provider interaction contains much of the treatment’s active ingredients. The conversation is not simply a means of developing rapport and conducting an assessment to yield a diagnosis – it is the treatment. As a result, the questions of interest to psychotherapy researchers are complex and imbedded in extremely large speech corpora. Research questions may include understanding the unfolding of intricate psychoanalytic concepts over a large number of sessions, the cultivation of accurate empathy, or the competent use of cognitive restructuring to examine an accurately identified irrational thought. Moreover there is continued hope that a grand rapprochement may be possible wherein more general theories of psychotherapy process can replace and improve upon the traditional encampments that have characterized the scope of psychotherapy research for two generations.

Despite the fundamentally linguistic nature of these questions, most of the raw data in psychotherapy is never subjected to empirical scrutiny. The bulk of psychotherapy process research utilizes patient self-report or observer ratings of provider behavior. These methods have been available for decades and have yielded important insights about the nature of psychotherapy. However, existing methods are simply not sufficient to analyze data of this size and complexity, limiting both the nuance and scale of questions that psychotherapy researchers can address. There remains an almost lawful tension between the scope and the richness of our research. One can do a very large psychotherapy study, but the data will be restricted to utilization counts and self-report measures of treatment process and clinical outcomes. Alternatively, one can do detailed behavioral coding of sessions to evaluate therapist adherence, or qualitative work to extract themes, but the size of these studies is necessarily limited due to the labor intensiveness of the work. Machine learning procedures such as the topic models used in the current study offer an opportunity to strike a balance between these poles, extracting complex information (e.g., discussions of the therapeutic relationship) on a large scale.

Most thinking about how technology will revolutionize psychotherapy focuses on the digitization of treatment itself (i.e., computer-based treatments, mobile apps; see Silverman, 2013). Many worry about how the ‘low tech’ field of psychotherapy will adjust to this world, while more optimistic commentaries expect that the technological mediation of human interaction will simply provide more grist for the mill, albeit in a different form (Tao, 2014). However, we are poised for a parallel technological revolution in psychotherapy, where advanced computational methods like the machine learning approach described in this article may ultimately support, query, and expand the complex, messy beauty of a therapist and patient talking.

Acknowledgments

Funding for the preparation of this manuscript was provided by the National Institute on Drug Abuse (NIDA) of the National Institutes of Health under award number R34/DA034860, the National Institute on Alcohol Abuse and Alcoholism (NIAAA) under award number R01/AA018673, and a special initiative grant from the College of Education, University of Utah. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or University of Utah. The authors would also like to thank Alexander Street Press for consultation related to the analysis of psychotherapy transcripts.

Footnotes

1. The scores were also divided by the total number of words per session so that sessions with different lengths did not skew the results.

2. For the present analyses, we created 2,000 new datasets, each with 1,318 sessions sampled with replacement from the original sessions. Next, a classification and regression tree model was fit on each of the 2,000 samples, but only using a subset of the total predictors. Thirty predictors were selected randomly within each bootstrap-generated dataset. This process results in 2,000 sets of regression results, which are then combined into an overall prediction equation.

References

- Acerbi A, Lampos V, Garnett P, Bentley RA. The Expression of Emotions in 20th Century Books. PLoS ONE. 2013;8(3):e59030. doi: 10.1371/journal.pone.0059030.

- Anderson T, Bein E, Pinnell B, Strupp H. Linguistic Analysis of Affective Speech in Psychotherapy: A case grammar approach. Psychotherapy Research. 1999;9(1):88–99.

- American Psychiatric Association. American Psychiatric Association Practice Guidelines for the Treatment of Psychiatric Disorders. American Psychiatric Pub.; 2006.

- Atkins DC, Rubin TN, Steyvers M, Doeden MA, Baucom BR, Christensen A. Topic models: A novel method for modeling couple and family text data. Journal of Family Psychology. 2012;26(5):816–827. doi: 10.1037/a0029607.

- Atkins DC, Steyvers M, Imel ZE, Smyth P. Automatic evaluation of psychotherapy language with quantitative linguistic models: An initial application to Motivational Interviewing. Implementation Science. 2014. doi: 10.1186/1748-5908-9-49. Revise and Resubmit.

- Baardseth TP, Goldberg SB, Pace BT, Wislocki AP, Frost ND, Siddiqui JR, et al. Cognitive-behavioral therapy versus other therapies: Redux. Clinical Psychology Review. 2013;33(3):395–405. doi: 10.1016/j.cpr.2013.01.004.

- Benish SG, Imel ZE, Wampold BE. The relative efficacy of bona fide psychotherapies for treating post-traumatic stress disorder: A meta-analysis of direct comparisons. Clinical Psychology Review. 2008;28(5):746–758. doi: 10.1016/j.cpr.2007.10.005.

- Blei DM, Ng AY, Jordan MI. Latent dirichlet allocation. The Journal of Machine Learning Research. 2003;3:993–1022.

- Breiman L. Random forests. Machine Learning. 2001;45(1):5–32.

- Clark DM. Psychodynamic Therapy or Cognitive Therapy for Social Anxiety Disorder. American Journal of Psychiatry. 2013;170(11):1365. doi: 10.1176/appi.ajp.2013.13060744.

- Cox TF, Cox MAA. Multidimensional Scaling. Second Edition. CRC Press; 2000.

- Crits-Christoph P, Gibbons MBC, Mukherjee D. Psychotherapy process-outcome research. In: Lambert MJ, editor. Bergin and Garfield’s Handbook of Psychotherapy and Behavior Change. 5th ed. Wiley; New Jersey: 2013. pp. 298–340.

- Elkin I. National Institute of Mental Health Treatment of Depression Collaborative Research Program. Archives of General Psychiatry. 1989;46(11):971. doi: 10.1001/archpsyc.1989.01810110013002.

- Elliott R, Bohart AC, Watson JC, Greenberg LS. Empathy. Psychotherapy. 2011;48(1):43–49. doi: 10.1037/a0022187.

- Greenberg LS, Newman FL. An approach to psychotherapy change process research: Introduction to the special section. Journal of Consulting and Clinical Psychology. 1996;64(3):435. doi: 10.1037//0022-006x.64.3.435.

- Griffiths TL, Steyvers M. Finding scientific topics. Proceedings of the National Academy of Sciences of the United States of America. 2004;101(Suppl 1):5228. doi: 10.1073/pnas.0307752101.

- Griffiths TL, Steyvers M, Tenenbaum JB. Topics in semantic representation. Psychological Review. 2007;114(2):211–244. doi: 10.1037/0033-295X.114.2.211.

- Hilbert M, Lopez P. The World’s Technological Capacity to Store, Communicate, and Compute Information. Science. 2011;332(6025):60–65. doi: 10.1126/science.1200970.

- Horvath AO, Del Re AC, Flückiger C, Symonds D. Alliance in individual psychotherapy. Psychotherapy. 2011;48(1):9–16. doi: 10.1037/a0022186.

- Imel ZE, Barco JR, Brown HJ, Baucom BR, Baer JS, Kircher JC, Atkins DC. The Association of Therapist Empathy and Synchrony in Vocally Encoded Arousal. Journal of Counseling Psychology. 2014;61(1):146–153. doi: 10.1037/a0034943.

- Imel ZE, Wampold BE, Miller SD, Fleming RR. Distinctions without a difference: Direct comparisons of psychotherapies for alcohol use disorders. Psychology of Addictive Behaviors. 2008;22(4):533–543. doi: 10.1037/a0013171.

- Kao J, Jurafsky D. A Computational Analysis of Style, Affect, and Imagery in Contemporary Poetry. Naacl-Hlt. 2012;2012:1–10.

- Kaplan DM, Blei DM. A Computational Approach to Style in American Poetry. IEEE; 2007. pp. 553–558. doi: 10.1109/ICDM.2007.76.

- Kirschenbaum H. Carl Rogers’s life and work: An assessment on the 100th anniversary of his birth. Journal of Counseling & Development. 2004;82(1):116–124.

- Krupski A, Joesch JM, Dunn C, Donovan D, Bumgardner K, Lord SP, Roy-Byrne P. Testing the effects of brief intervention in primary care for problem drug use in a randomized controlled trial: rationale, design, and methods. Addiction Science & Clinical Practice. 2012;7(1):27. doi: 10.1186/1940-0640-7-27.

- Lambert MJ. Bergin and Garfield’s Handbook of Psychotherapy and Behavior Change. Wiley; New Jersey: 2013.

- Landauer TK, Dumais ST. A solution to Plato’s problem: The latent semantic analysis theory of acquisition, induction, and representation of knowledge. Psychological Review. 1997;104(2):211–240.

- Lee CM, Kilmer JR, Neighbors C, Atkins DC, Zheng C, Walker DD, Larimer ME. Indicated prevention for college student marijuana use: A randomized controlled trial. 2014. doi: 10.1037/a0033285. Manuscript submitted for publication.

- Leichsenring F, Salzer S. Response to Clark. American Journal of Psychiatry. 2013;170(11):1365. doi: 10.1176/appi.ajp.2013.13060744r.

- Leichsenring F, Salzer S, Beutel ME, Herpertz S, Hiller W, Hoyer J, et al. Psychodynamic therapy and cognitive-behavioral therapy in social anxiety disorder: a multicenter randomized controlled trial. American Journal of Psychiatry. 2013;170(7):759–767. doi: 10.1176/appi.ajp.2013.12081125.

- Neighbors C, Lee CM, Atkins DC, Lewis MA, Kaysen D, Mittmann A, Larimer ME. A randomized controlled trial of event-specific prevention strategies for reducing problematic drinking associated with 21st birthday celebrations. Journal of Consulting and Clinical Psychology. 2012;80(5):850–862. doi: 10.1037/a0029480.

- Meehl PE. Theoretical risks and tabular asterisks: Sir Karl, Sir Ronald, and the slow progress of soft psychology. Journal of Consulting and Clinical Psychology. 1978;46(4):806–834.

- Mergenthaler E. Resonating minds: A school-independent theoretical conception and its application to psychotherapeutic processes. Psychotherapy Research. 2008;18(2):109–126. doi: 10.1080/10503300701883741.

- Miller WR, Rollnick S. Motivational interviewing. Guilford Press; New York: 2002.

- Morgenstern J, McKay JR. Rethinking the paradigms that inform behavioral treatment research for substance use disorders. Addiction. 2007;102(9):1377–1389. doi: 10.1111/j.1360-0443.2007.01882.x.

- Moyers TB, Miller WR, Hendrickson SML. How Does Motivational Interviewing Work? Therapist Interpersonal Skill Predicts Client Involvement Within Motivational Interviewing Sessions. Journal of Consulting and Clinical Psychology. 2005;73(4):590–598. doi: 10.1037/0022-006X.73.4.590.

- Reynes R, Martindale C, Dahl H. Lexical differences between working and resistance sessions in psychoanalysis. Journal of Clinical Psychology. 1984;40(3):733–737. doi: 10.1002/1097-4679(198405)40:3<733::aid-jclp2270400315>3.0.co;2-g.

- Rogers CR. The necessary and sufficient conditions of therapeutic personality change. Journal of Consulting Psychology. 1957;21(2):95–103. doi: 10.1037/h0045357.

- Rosen-Zvi M, Chemudugunta C, Griffiths T, Smyth P, Steyvers M. Learning author-topic models from text corpora. ACM Transactions on Information Systems. 2010;28:1–38.

- Rubin TN, Chambers A, Smyth P, Steyvers M. Statistical topic models for multi-label document classification. Machine Learning. 2011;88(1-2):157–208.

- Salvatore S, Gennaro A, Auletta AF, Tonti M, Nitti M. Automated method of content analysis: A device for psychotherapy process research. Psychotherapy Research. 2012;22(3):256–273. doi: 10.1080/10503307.2011.647930.

- Manning C, Schütze H. Foundations of Statistical Natural Language Processing. MIT Press; 1999.

- Silverman WH. The future of psychotherapy: One editor’s perspective. Psychotherapy. 2013;50(4):484–489. doi: 10.1037/a0030573.

- Steyvers M, Griffiths T. Probabilistic topic models. In: Landauer T, McNamara D, Dennis S, Kintsch W, editors. Latent Semantic Analysis. LEA; New Jersey: 2007.

- Stiles WB, Shapiro DA, Firth-Cozens JA. Verbal response mode use in contrasting psychotherapies: A within-subjects comparison. Journal of Consulting and Clinical Psychology. 1988;56(5):727–733. doi: 10.1037/0022-006x.56.5.727.

- Talley EM, Newman D, Mimno D, Herr BW, Wallach HM, Burns GAPC, et al. Database of NIH grants using machine-learned categories and graphical clustering. Nature Methods. 2011;8(6):443–444. doi: 10.1038/nmeth.1619.

- Tao KW. Too Close and Too Far: Counseling Emerging Adults in a Technological Age. Psychotherapy. 2014 doi: 10.1037/a0033393.

- Tollison S, Lee C, Neighbors C, Neil T. Questions and reflections: the use of motivational interviewing microskills in a peer-led brief alcohol intervention for college students. Behavior Therapy. 2008;39:183–194. doi: 10.1016/j.beth.2007.07.001.

- Toutanova K, Klein D, Manning CD, Singer Y. Feature-rich part-of-speech tagging with a cyclic dependency network. In: Proceedings of the 2003 Conference of the North American Chapter of the Association for Computational Linguistics on Human Language Technology. Association for Computational Linguistics; 2003. pp. 173–180.

- Wampold BE. The great psychotherapy debate: Models, methods, and findings. Erlbaum; Mahwah, NJ: 2001.

- Wampold BE. Psychotherapy: The humanistic (and effective) treatment. American Psychologist. 2007;62(8):857–873. doi: 10.1037/0003-066X.62.8.857.

- Webb CA, DeRubeis RJ, Barber JP. Therapist adherence/competence and treatment outcome: A meta-analytic review. Journal of Consulting and Clinical Psychology. 2010;78(2):200–211. doi: 10.1037/a0018912.

- Williams-Baucom KJ, Atkins DC, Sevier M, Eldridge KA, Christensen A. “You” and “I” need to talk about “us”: Linguistic patterns in marital interactions. Personal Relationships. 2010;17(1):41–56.