Abstract

Our ability to recognize the emotions of others is a crucial feature of human social cognition. Functional neuroimaging studies indicate that activity in sensorimotor cortices is evoked during the perception of emotion. In the visual domain, right somatosensory cortex activity has been shown to be critical for facial emotion recognition. However, the importance of sensorimotor representations in modalities outside of vision remains unknown. Here we use continuous theta-burst transcranial magnetic stimulation (cTBS) to investigate whether neural activity in the right postcentral gyrus (rPoG) and right lateral premotor cortex (rPM) is involved in nonverbal auditory emotion recognition. Three groups of participants completed same–different tasks on auditory stimuli, discriminating between the emotion expressed and the speakers' identities, before and following cTBS targeted at rPoG, rPM, or the vertex (control site). A task-selective deficit in auditory emotion discrimination was observed. Stimulation to rPoG and rPM resulted in a disruption of participants' abilities to discriminate emotion, but not identity, from vocal signals. These findings suggest that sensorimotor activity may be a modality-independent mechanism which aids emotion discrimination.

Introduction

Simulation models of emotion recognition suggest that understanding another's emotions requires individuals to map the observed state onto their own representations that are active during the experience of the perceived emotion (Adolphs, 2002; Goldman and Sripada, 2005; Keysers and Gazzola, 2006). Supporting this, functional brain imaging studies indicate that perceiving another person's facial expressions correlates with increased activity in sensorimotor cortices (e.g., premotor and somatosensory cortices) similar to those active when the perceiver generates the same expression (Winston et al., 2003; Hennenlotter et al., 2005; van der Gaag et al., 2007) and that sensorimotor cortices are recruited during the perception of nonverbal emotion expressions (e.g., hearing somebody laughing) (Warren et al., 2006). Further, in the visual domain, there is growing evidence that sensorimotor activity plays a causal role in facial emotion recognition: neuropsychological findings indicate that deficits in the recognition of facial affect are related to damage within right hemisphere somatosensory-related cortices (Adolphs et al., 2000) and transcranial magnetic stimulation (TMS) findings in healthy adults are consistent with this (Pitcher et al., 2008).

It remains unclear, however, whether neural activity in sensorimotor cortices is central to global processing of emotion across modalities (e.g., in the auditory domain). To address this, we used continuous theta burst TMS (cTBS), an offline TMS paradigm (i.e., conducted while the participant is at rest) following which neural activity may be suppressed for several minutes (Di Lazzaro et al., 2005; Huang et al., 2005), to examine whether neural activity in sensorimotor cortices [right lateral premotor (rPM) and right postcentral gyrus (rPoG)] is involved in discriminating affect from vocal signals. We conducted three experiments. In experiment 1, we sought to establish the effects of cTBS targeted at rPM, rPoG, or the vertex (cTBS control site) on participants' abilities to complete a same–different auditory emotion discrimination task. We used nonverbal emotional vocalizations such as laughter or screams, which were adapted from a previous fMRI study investigating the role of sensorimotor resources in nonverbal auditory emotion perception (Warren et al., 2006). In experiment 2, we used identical stimuli and cTBS parameters, but instructed a new group of participants to complete a same–different auditory identity discrimination task. In experiment 3, we further examined the role of sensorimotor cortex activity in participants' auditory identity processing abilities, by using a task which has previously been shown to be sensitive to selective neuropsychological impairment in vocal identity recognition (i.e., developmental phonagnosia) (Garrido et al., 2009). This allowed us to examine any nonspecific effects of cTBS and whether the effects observed in experiment 1 were selective to affective processing. Based on simulation accounts of emotion recognition, we predicted that cTBS targeted at rPM and rPoG would result in a disruption of participants' abilities to discriminate the auditory emotions, but not identity, of others.

Materials and Methods

Participants.

Twenty-six healthy naive adult participants, 13 female and 13 male (aged 20–35 years), took part in the study. All were right handed, had normal or corrected-to-normal vision, and gave informed consent in accordance with the ethics committee of University College London. Twenty participants took part in experiments 1 and 2 (experiment 1: 6 female and 4 male aged 20–30 years; experiment 2: 5 female and 5 male aged 20–35 years). Six participants (2 female and 4 male aged 21–36 years) took part in experiment 3.

Materials.

Identical stimuli were used in experiments 1 and 2. Each stimulus belonged to one of four categories of nonverbal auditory emotions (amusement, sadness, fear, or disgust). These vocalizations are reliably recognized by human listeners (Meyer et al., 2005; Sauter and Scott, 2007; Sauter et al., 2010) and can be considered to be closer to emotional facial expressions than emotional speech because they do not contain the segmental structure of emotionally inflected speech or nonsense speech (Dietrich et al., 2006; Scott et al., 2009; Sauter et al., 2010). The stimuli were adapted from a previously validated set of nonverbal vocalizations (Sauter, 2006; Warren et al., 2006; Sauter and Scott, 2007; Sauter and Eimer, 2010; Sauter et al., 2010) and two of these emotions (amusement and fear) were adapted from stimuli used in a previous fMRI study investigating the role of sensorimotor resources in nonverbal auditory emotion perception (Warren et al., 2006). Ten stimuli per emotion type, produced by four different actors (two male/two female), were used. All emotional vocalizations were edited to 500 ms in duration and were presented aurally via headphones (for example stimuli, see www.visualcognition.net).

In experiment 3, participants discriminated two samples of noise-vocoded speech (Shannon et al., 1995). Stimuli were 21 sentences read by native British English speakers, which were normalized for peak amplitude and noise-vocoded using PRAAT (cf. Garrido et al., 2009). The stimuli were noise-vocoded at three different numbers of channels (6, 16, 48). The higher the number of channels used during vocoding, the more spectral detail associated with speaker recognition was preserved (Garrido et al., 2009).

Procedure.

All experiments consisted of three testing sessions conducted over three nonconsecutive days. At each testing session one of the three brain regions was stimulated (rPoG, rPM, or vertex). The order of site of stimulation was pseudo-randomized between participants in an ABC-BCA-CAB fashion. Participants completed the experimental task twice within each session, one run before cTBS (baseline performance) and the other following cTBS.
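The ABC-BCA-CAB ordering described above amounts to cycling through the three rotations of the site list. The following is a minimal sketch of one way such a schedule could be generated; the mapping of rPoG, rPM, and vertex to A, B, and C, the participant numbering, and the function names are assumptions made for illustration and are not taken from the study.

```python
from itertools import cycle

# Assumed mapping of sites to A, B, C; the text does not state which site is which.
SITES = ["rPoG", "rPM", "vertex"]


def rotated_orders(sites):
    """Return the cyclic rotations of the site list (ABC, BCA, CAB)."""
    return [sites[i:] + sites[:i] for i in range(len(sites))]


def assign_orders(participant_ids, sites=SITES):
    """Give each participant the next rotation in turn (hypothetical scheme)."""
    orders = cycle(rotated_orders(sites))
    return {pid: next(orders) for pid in participant_ids}


# Hypothetical participant numbers 1..10, matching the group size of experiment 1.
schedule = assign_orders(range(1, 11))
for pid, order in schedule.items():
    print(pid, order)
```

With rotations of this kind, each site occupies each session position equally often across every complete set of three participants.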

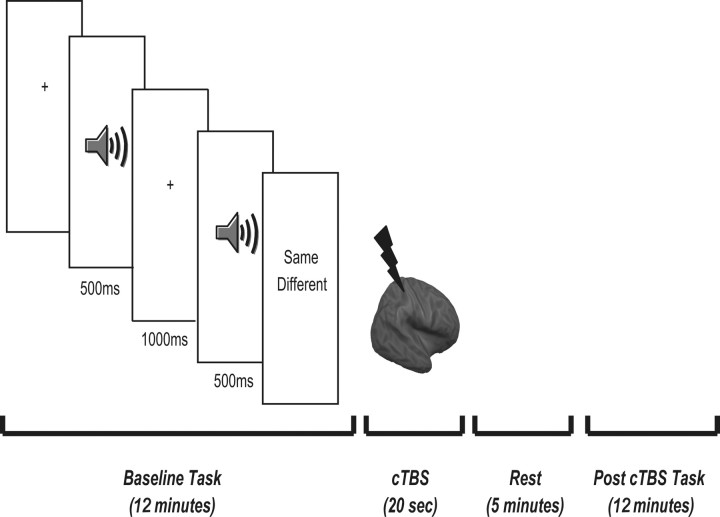

In experiment 1, the task comprised 120 trials (preceded by 20 practice trials) divided between two blocks of 60 trials. Each trial began with the presentation of a fixation cross (1500 ms) followed by the presentation of the prime stimulus. Five hundred milliseconds after the offset of the prime stimulus, a second emotion was presented aurally. Concurrent with the presentation of the second emotion, participants were asked to indicate whether the second nonverbal emotion was the same as or different from the first using a key press (Fig. 1). The need for speed and accuracy was emphasized. Each block lasted ∼12 min.

Figure 1.

Summary of cTBS and task protocol in experiments 1 and 2. Participants completed a same–different auditory emotion (experiment 1) or identity (experiment 2) matching task. Both experiments consisted of three testing sessions conducted over three nonconsecutive days. At each testing session one of the three brain regions was stimulated (rPoG, rPM or the vertex) and each task was completed before and 5 min following cTBS to each site. The 5 min rest period was based on the observed time course of effects seen in the motor cortex (Di Lazzaro et al., 2005; Huang et al., 2005).

In experiment 2, the same stimuli and paradigm were used, but participants were instructed to indicate whether the prime and target emotions were expressed by the same or a different person.

Experiment 3 used the same methodology as Garrido et al. (2009), in which a developmental phonagnosic was shown to be impaired at discriminating pairs of male or female voices using noise-vocoded speech. The task required participants to discriminate whether two sequentially presented noise-vocoded sentences were said by the same or different speaker, with half being different pairs. For each pair, the speaker was always the same sex. There were a total of 84 trials, with 28 pairs per frequency level. The task lasted ∼15 min and accuracy levels were compared.

TMS parameters and coregistration.

TMS was delivered via a figure-of-eight coil with a 70 mm diameter using a Magstim Super Rapid Stimulator. An offline cTBS paradigm was used, which consisted of a burst of 3 pulses at 50 Hz repeated at intervals of 200 ms for 20 s, resulting in a total of 300 pulses. This paradigm was used to prevent any influence of online auditory and proprioceptive effects of TMS on task performance (Terao et al., 1997). Based upon previous findings (Di Lazzaro et al., 2005; Huang et al., 2005; Valessi et al., 2007; Kalla et al., 2009), the time window of reduced excitability following theta burst stimulation was expected to last between 20 and 30 min, and a 5 min rest period after stimulation offset was implemented for each site stimulated.
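The pulse count follows directly from the stated parameters: bursts every 200 ms for 20 s give 100 bursts, and 3 pulses per burst give 300 pulses. The sketch below simply lays out the nominal pulse onset times to make that arithmetic explicit; the function and parameter names are illustrative and do not correspond to any stimulator software.

```python
def ctbs_pulse_times(pulses_per_burst=3, intra_burst_hz=50.0,
                     burst_interval_s=0.2, train_duration_s=20.0):
    """Return nominal pulse onset times (in seconds) for one cTBS train."""
    n_bursts = round(train_duration_s / burst_interval_s)  # 20 s / 0.2 s = 100 bursts
    pulse_gap_s = 1.0 / intra_burst_hz                      # 20 ms between pulses at 50 Hz
    return [burst * burst_interval_s + pulse * pulse_gap_s
            for burst in range(n_bursts)
            for pulse in range(pulses_per_burst)]


times = ctbs_pulse_times()
print(len(times))  # 300 pulses in total
```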

TMS machine output was set to 80% of each participant's motor threshold with an upper limit of 50% of machine output. Motor threshold was defined using visible motor twitch of the contralateral first dorsal interosseus following single pulse TMS delivered to the best scalp position over motor cortex. Motor threshold was calculated using a modified binary search paradigm (MOBS) (Tyrrell and Owens, 1988; see also Thilo et al., 2004 for example use). For each subject, motor threshold was calculated following pre-cTBS baseline and before coregistration.
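As a worked illustration of the intensity rule, the sketch below computes 80% of a participant's motor threshold and caps the result at 50% of maximum machine output. Reading the "upper limit" as a cap on the delivered output is an interpretation of the sentence above, and the function name and example thresholds are hypothetical.

```python
def ctbs_intensity(motor_threshold_pct, fraction=0.80, cap_pct=50.0):
    """Return stimulation intensity as a percentage of maximum machine output.

    motor_threshold_pct: motor threshold in % of machine output.
    The cap is applied to the delivered output (an assumed reading of the text).
    """
    if not 0 < motor_threshold_pct <= 100:
        raise ValueError("motor threshold must be in (0, 100] % of machine output")
    return min(fraction * motor_threshold_pct, cap_pct)


# Hypothetical thresholds: the first falls under the cap, the second is capped.
print(ctbs_intensity(55))
print(ctbs_intensity(70))
```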

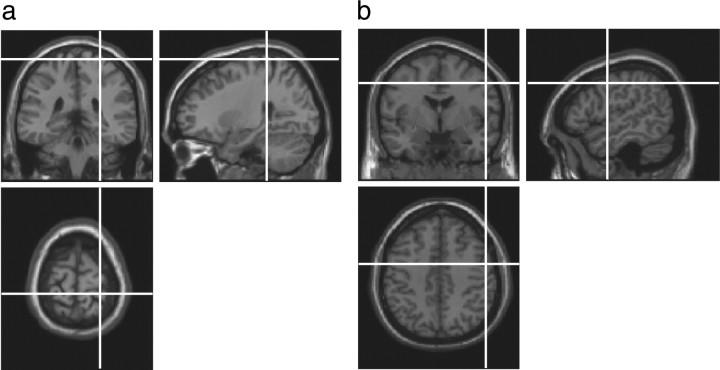

Locations for cTBS were identified using the Brainsight TMS-magnetic resonance coregistration system (Rogue Research). FSL (FMRIB Software Library) software [FMRIB (Functional Magnetic Resonance Imaging of the Brain), Oxford, UK] was used to transform coordinates for each site to each subject's individual MRI scan (Fig. 2). The rPoG site was selected based on coordinates from 12 neurologically normal participants in an fMRI study following touch to their own face relative to the neck (Blakemore et al., 2005; 27, −27, −69) and was confirmed anatomically as the postcentral gyrus on each participant's structural scan. The coordinates for rPM (54, −2, 44) were the averages of neurologically normal participants in an fMRI study of nonverbal auditory emotion processing (Warren et al., 2006). The vertex was identified as the point midway between the inion and the nasion, equidistant from the left and right intertragal notches.

Figure 2.

Summary of cTBS sites stimulated, rPoG (a) and rPM (b). Locations of cTBS were determined using the Brainsight coregistration system. To ensure that any differences observed were not due to nonspecific effects of cTBS, the vertex was stimulated as a cTBS control site.

Results

The role of sensorimotor cortices in discriminating emotions and identity from auditory cues

Comparison of performance across sites and tasks in experiments 1 (emotion matching) and 2 (identity matching)

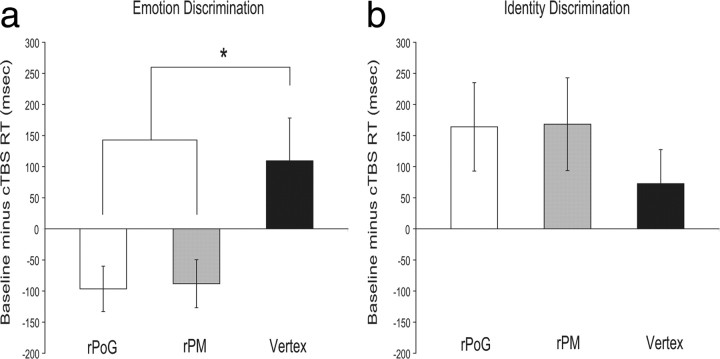

Baseline performance did not differ significantly across sites in either task (Emotion task group: F(2,18) = 1.64, p = 0.221; Identity task group: F(2,18) = 0.815, p = 0.458). To assess the effects across tasks and across sites, we calculated the difference between the post-cTBS and pre-cTBS baseline reaction times (i.e., baseline RT corrected for accuracy minus post-cTBS RT corrected for accuracy) for each site stimulated. A 2 (task group) × 3 (TMS site) mixed ANOVA was then conducted to determine the effects of cTBS on participants' abilities to recognize identity and emotion from auditory signals. The overall main effect of TMS site was not significant (F(2,36) = 0.361, p = 0.699); however, a significant task group × TMS site interaction was found (F(2,36) = 3.43, p < 0.05). This was because the effects of cTBS differed significantly across sites on the emotion task (F(2,18) = 4.78, p < 0.05) (Fig. 3a), but not on the identity task (F(2,18) = 0.574, p = 0.573) (Fig. 3b). The main effect of TMS site on the emotion task was due to significant impairments following cTBS targeted at rPM compared with the vertex (t = 2.81, p < 0.05) and following cTBS targeted at rPoG relative to the vertex (t = 2.28, p < 0.05). Therefore, cTBS stimulation of right sensorimotor cortices disrupted participants' abilities to discriminate between the auditory emotions (Fig. 3a), but not the vocal identities (Fig. 3b), of others. This impairment in emotion processing following cTBS to rPoG and rPM was not modulated by emotion type (supplemental Table 1, available at www.jneurosci.org as supplemental material).

Figure 3.

Magnitude of disruption or facilitation (mean ± SEM) in milliseconds following cTBS targeted at rPoG, rPM and the vertex (experiments 1 and 2). To determine whether the magnitude of impairment following cTBS stimulation differed across the sites and tasks we calculated the difference between the post-cTBS and pre-cTBS baseline reaction times (±3 SDs and all errors removed; and corrected for accuracy) for each condition (i.e., baseline RT/accuracy minus post-cTBS RT/accuracy for each site stimulated across tasks). A disruption in reaction times following stimulation is shown by a negative value and facilitation by a positive value. a, For the emotion discrimination task (experiment 1), participants (n = 10) were impaired in their abilities to discriminate between the auditory emotions of others following stimulation to rPoG and rPM compared with stimulation at the vertex (cTBS control site). b, This was not found to be the case when participants (n = 10) had to discriminate auditory identity (experiment 2)—the effects of cTBS targeted at rPoG, rPM and the vertex did not significantly differ between the sites stimulated, and there was a trend for facilitation at all sites. Between-group comparisons also revealed that the disruption in performance on the emotion-discrimination task following cTBS to rPoG and rPM was significantly different to the facilitation shown in the identity task. No significant difference between emotion discrimination and identity discrimination task performance was found following cTBS at the vertex. *p < 0.05.
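The difference score described in the caption can be sketched as follows. This is an illustrative reconstruction under stated assumptions rather than the authors' analysis code: it assumes that error trials are removed first, that the ±3 SD criterion is applied to the remaining correct-trial reaction times within a block, and that "corrected for accuracy" means dividing the mean reaction time by the proportion of correct responses. All data values are hypothetical.

```python
from statistics import mean, stdev


def corrected_rt(rts_ms, correct, sd_cutoff=3.0):
    """Mean RT of retained correct trials divided by proportion correct.

    Assumed order of operations: drop error trials, then drop trials beyond
    +/- sd_cutoff SDs of the remaining mean, then divide by accuracy.
    """
    accuracy = sum(correct) / len(correct)
    kept = [rt for rt, ok in zip(rts_ms, correct) if ok]
    centre, spread = mean(kept), stdev(kept)
    trimmed = [rt for rt in kept if abs(rt - centre) <= sd_cutoff * spread]
    return mean(trimmed) / accuracy


def ctbs_effect(baseline, post):
    """Baseline minus post-cTBS score; negative values indicate disruption."""
    return corrected_rt(*baseline) - corrected_rt(*post)


# Hypothetical single-participant data: (reaction times in ms, correctness flags).
baseline = ([1000, 1100, 900, 1000], [True, True, True, True])
post = ([1200, 1300, 1100, 1200], [True, True, True, False])
print(ctbs_effect(baseline, post))
```

In this toy case the post-cTBS score is larger than the baseline score, so the difference is negative, which corresponds to a disruption under the sign convention of the figure.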

Between-group comparisons also revealed a main effect of task group (F(1,18) = 12.81, p < 0.005). This task-specific dissociation was modulated by site of stimulation, with cTBS targeted at rPoG (t = 3.28, p < 0.005) and rPM (t = 3.06, p < 0.01) resulting in a disruption of performance on the emotion task but a facilitation in performance on the identity task (Fig. 3). This pattern of effects was not found following stimulation at the vertex (cTBS control site), where there was a trend for facilitation in both tasks (t = 0.204, p = 0.841). Thus, the cTBS impairments observed at rPoG and rPM in the emotion task are not due to general impairments in processing following cTBS, but reflect a task-specific impairment on emotion discrimination performance.

Accuracy performance (i.e., post-cTBS percentage correct minus baseline percentage correct) did not significantly differ across sites in either task (supplemental Table 2, available at www.jneurosci.org as supplemental material).

Within-site comparisons in experiments 1 and 2

In addition to our between-site and -task comparisons, we also conducted a separate analysis for each task group which compared post-cTBS reaction time performance (corrected for accuracy) and accuracy performance relative to baseline for each brain region stimulated (i.e., rPoG baseline performance compared with rPoG post-cTBS performance; rPM baseline performance compared with rPM post-cTBS performance; vertex baseline performance compared with vertex post-cTBS performance).

For the emotion task group, cTBS to rPoG resulted in a significant disruption of reaction time performance compared with baseline (t(9) = 2.83, p ≤ 0.05; supplemental Fig. 1, available at www.jneurosci.org as supplemental material). This was also the case following stimulation at rPM (t(9) = 2.29, p ≤ 0.05; supplemental Fig. 1, available at www.jneurosci.org as supplemental material). No significant difference in performance was found following stimulation to our active control site (t(9) = 1.29, p = 0.229; supplemental Fig. 1, available at www.jneurosci.org as supplemental material). Therefore, consistent with our between-site analysis, suppressing sensorimotor cortex activity impaired participants' abilities to discriminate the auditory emotions of others.
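The within-site comparisons report t statistics with 9 degrees of freedom for groups of 10 participants, which is what a paired-samples t test on baseline and post-cTBS scores would give. The sketch below shows that calculation using only the standard library; treating the reported tests as paired t tests is an inference from the degrees of freedom, and the example scores are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(baseline, post):
    """Return (t, degrees of freedom) for a paired-samples t test."""
    if len(baseline) != len(post):
        raise ValueError("baseline and post must have one score per participant")
    diffs = [p - b for b, p in zip(baseline, post)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)  # SD of differences over sqrt(n)
    return mean(diffs) / standard_error, n - 1


# Hypothetical accuracy-corrected RTs (ms) for four participants.
t_value, df = paired_t([1900, 2000, 2100, 1800], [2000, 2150, 2180, 1950])
print(round(t_value, 2), df)
```

The resulting t value would then be compared against the t distribution with n − 1 degrees of freedom to obtain a p value.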

Comparisons of within-site reaction time performance on the identity matching task revealed that participants were significantly faster in the post-cTBS blocks compared with baseline at rPoG (baseline mean ± SEM = 1977.34 ± 162.31; post-cTBS mean ± SEM = 1813.27 ± 116.84; t(9) = 2.31, p ≤ 0.05) and rPM (baseline mean ± SEM = 1866.44 ± 130.93; post-cTBS mean ± SEM = 1698.12 ± 122.72; t(9) = 2.26, p ≤ 0.05). Despite a similar trend for improved performance in the post-cTBS condition, this was not the case at the vertex (baseline mean ± SEM = 1997.14 ± 174; post-cTBS mean ± SEM = 1924.4 ± 177.07; t(9) = 1.33, p = 0.216). Notably, the increased speed in reaction time performance did not significantly differ across sites (Fig. 3b), indicating that it is a nonspecific effect.

Our analysis of within-site accuracy performance revealed no significant differences at any site of stimulation in the emotion discrimination task (supplemental Table 3, available at www.jneurosci.org as supplemental material), nor were they found following stimulation at rPoG or rPM in the identity matching task (supplemental Table 3, available at www.jneurosci.org as supplemental material).

What is the relationship between the temporal effects of cTBS and performance (experiment 1)?

In the motor domain, cTBS has been shown to cause a brief increasing (for ∼5 min post-cTBS) and then lasting decreasing suppression of neural activity (Di Lazzaro et al., 2005; Huang et al., 2005). To assess whether our main effect of cTBS on emotion discrimination was modulated by similar dynamics, we divided trials from experiment 1 into four blocks (30 trial segments lasting ∼3 min each) and compared the difference between the post-cTBS and pre-cTBS baseline reaction times across sites using a 3 (site) × 4 (block) ANOVA (supplemental Fig. 2, available at www.jneurosci.org as supplemental material). There was a trend for reaction time dynamics to follow a similar pattern to that observed in the motor domain; however, the interaction between site and block did not reach significance (F(6,54) = 1.12, p = 0.362).

The role of sensorimotor cortices in discriminating voices using noise-vocoded speech (experiment 3)

Between-site comparisons

Although experiments 1 and 2 demonstrated that cTBS to rPoG and rPM resulted in task- and site-specific impairment on auditory emotion but not auditory identity discrimination when using identical parameters and stimuli, the identity matching task used in experiment 2 had not previously been shown to be sensitive to impairment and therefore it remained possible that the task-specific effects were due to a lack of sensitivity in our identity control task. We therefore conducted a further control experiment to examine the effects of cTBS to rPoG, rPM and the vertex on an auditory identity processing task which has been shown to be sensitive to selective neuropsychological impairment in voice perception (Garrido et al., 2009).

To assess participants' abilities at discriminating identity from noise-vocoded speech, we separated trials into each spectral frequency band (6, 16, 48) and compared accuracy across sites using a 3 (site) × 3 (spectral frequency) repeated-measures ANOVA. We took this approach to analysis based upon a previous study which used this task to demonstrate a selective neuropsychological impairment at discriminating vocal identity, which was most pronounced at the 16 band frequency level (Garrido et al., 2009). This revealed that there was no main effect of TMS site (F(2,10) = 0.468, p = 0.639) (supplemental Fig. 3, available at www.jneurosci.org as supplemental material) or spectral frequency band (F(1.09,5.44) = 1.085, p = 0.350), nor was there a significant interaction (F(4,20) = 2.41, p = 0.084). This was also the case with reaction time performance. A 3 (site) × 3 (frequency band) ANOVA revealed no main effect of site (F(2,10) = 0.062, p = 0.940) or frequency band (F(2,10) = 0.853, p = 0.455), and the interaction did not reach significance (F(4,20) = 0.465, p = 0.761). These findings are consistent with our task-specific effects from experiments 1 and 2, and further demonstrate that cTBS stimulation of right sensorimotor cortices did not disrupt participants' abilities to discriminate between the auditory identities of others.

Within-site comparisons

As with our analyses of experiments 1 and 2, we also compared performance post-cTBS relative to baseline separately for each brain region stimulated in experiment 3. Comparisons of within-site accuracy performance when participants discriminated vocal identity from noise-vocoded speech were conducted separately for each frequency band. For the 6 band frequency level, participants were significantly more accurate in the post-cTBS blocks compared with baseline at the vertex (baseline mean ± SEM = 61.2 ± 2.21%; post-cTBS mean ± SEM = 67.9 ± 2.92%; t(5) = 3.35, p ≤ 0.05). They were also significantly more accurate for 16 band frequency items following cTBS stimulation of the premotor cortex compared with baseline (baseline mean ± SEM = 70.24 ± 3.28%; post-cTBS mean ± SEM = 80.75 ± 3.91%; t(5) = 2.91, p ≤ 0.05). No other significant differences were found and the facilitation in accuracy did not significantly differ across sites (supplemental Fig. 3, available at www.jneurosci.org as supplemental material), indicating that this is a nonspecific effect.

Comparisons of within-site reaction time performance when participants discriminated vocal identity from noise-vocoded speech were also conducted separately for each frequency band. No significant differences were found between baseline reaction times and post-cTBS reaction times at any site stimulated.

Discussion

The current study investigated whether the sensorimotor cortices are recruited in discriminating affect based on nonverbal vocal signals. Using neuronavigation procedures to coregister targeted sites onto each participant's structural MRI scan, we observed that cTBS suppression of sensorimotor cortices led to a significant disruption in the ability to discriminate between the auditory emotions (Fig. 3a), but not identities (Fig. 3b; supplemental Fig. 3, available at www.jneurosci.org as supplemental material), of others. This pattern was not found following cTBS at the vertex, indicating that the differences observed were not due to nonspecific effects of cTBS. Therefore, consistent with predictions, suppression of sensorimotor cortices reduced the ability to discriminate the auditory emotions, but not identities, of others.

In recent years a number of functional brain imaging studies have documented the role of premotor cortex activity in the mirroring of actions and emotions of others (Hennenlotter et al., 2005; Gazzola et al., 2006; Warren et al., 2006; van der Gaag et al., 2007; Jabbi and Keysers, 2008). Using stimuli adapted from one such study (Warren et al., 2006), our findings show that neural activity in rPM plays a central role in nonverbal auditory emotion discrimination in healthy adults. The findings also extend research demonstrating the involvement of right somatosensory-related cortices in facial affect recognition (Adolphs et al., 2000; Pitcher et al., 2008) and suggest that activity in rPoG may be central to the perception of emotion across different modalities. However, while we were able to confirm that our site corresponded to the rPoG, we are unable to confirm the precise somatotopy of the site stimulated (e.g., face or trunk representation) (cf. Blatow et al., 2007; Eickhoff et al., 2008; Fabri et al., 2005) and therefore we limit our claims on the spatial specificity of the effect in rPoG.

The task-specific nature of our findings also supports the role of sensorimotor activity as a substrate for a mechanism that facilitates overt decisions about emotion vocalizations. Under equivalent conditions to experiment 1, cTBS targeted at sensorimotor cortices did not impair participants' ability to discriminate another's identity, indicating that the changes in reaction time are not simply due to a general slowing of responses following cTBS stimulation of these regions. This was also confirmed on a second control task (experiment 3), in which we showed that suppressing sensorimotor cortex activity did not impair participants' auditory identity discrimination abilities on a task which has previously been shown to be sensitive to impairment in developmental phonagnosia (Garrido et al., 2009). In contrast to a disruption in emotion discrimination abilities, there was a trend for facilitation when participants were asked to discriminate the identity of others. This facilitation is nonspecific because it is seen over all sites stimulated and does not differ significantly between sites. The nature of the effect may reflect practice in the post-cTBS blocks or intersensory facilitation following cTBS (Marzi et al., 1998; Walsh and Pascual-Leone, 2003).

There is growing evidence that the ability to detect affect from voice relies upon neural mechanisms similar to those recruited for visual social signals. For example, in the visual domain, event related potential (ERP) studies have demonstrated enhanced frontal positivity for emotional compared with neutral faces 150 ms after stimulus onset (Eimer and Holmes, 2002, 2007; Ashley et al., 2004). This mechanism also extends to the auditory domain, in which nonverbal auditory emotions, compared with spectrally rotated neutral sounds, result in an early frontocentral positivity which is similar in timing, polarity and scalp distribution to ERP markers of emotional face processing (Sauter and Eimer, 2010). Our findings add to this by demonstrating that sensorimotor activity is implicated not only in facial (Adolphs et al., 2000; Pitcher et al., 2008) but also in auditory emotion perception, and imply that sensorimotor resources may subserve an emotion-general processing mechanism in healthy adults.

The findings are also compatible with recent TMS findings documenting the necessity of the right frontoparietal operculum in emotional prosody (van Rijn et al., 2005; Hoekert et al., 2008). They extend these by demonstrating the importance of premotor resources in auditory emotion discrimination; by examining the role of sensorimotor cortices in nonverbal auditory emotion processing as opposed to emotional speech (for a discussion of the benefits of using nonverbal emotions instead of emotional speech see Dietrich et al., 2006; Sauter et al., 2010; Scott et al., 2009); and by showing a functional dissociation for the role of sensorimotor resources in discriminating speaker emotion, but not speaker identity, from vocal signals.

In sum, this study extends previous findings that sensorimotor activity is important in facial emotion recognition (Adolphs et al., 2000; Pitcher et al., 2008), by demonstrating that neural activity in sensorimotor cortices is involved in emotion processing across modalities. These resources are not specifically required for discriminating the identity of others and appear to play a specific role in facilitating emotion discrimination in healthy adults in a modality-independent manner.

Footnotes

This work was partly supported by a Medical Research Council grant to V.W. M.J.B. is supported by the Economic and Social Research Council, and S.K.S. is supported by Wellcome Trust Grant WT074414MA.

References

- Adolphs R. Neural systems for recognizing emotion. Curr Opin Neurobiol. 2002;12:169–177. doi: 10.1016/s0959-4388(02)00301-x.

- Adolphs R, Damasio H, Tranel D, Cooper G, Damasio AR. A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J Neurosci. 2000;20:2683–2690. doi: 10.1523/JNEUROSCI.20-07-02683.2000.

- Ashley V, Vuilleumier P, Swick D. Time course and specificity of event-related potentials to emotional expressions. Neuroreport. 2004;15:211–216. doi: 10.1097/00001756-200401190-00041.

- Blakemore SJ, Bristow D, Bird G, Frith C, Ward J. Somatosensory activations during the observation of touch and a case of vision-touch synaesthesia. Brain. 2005;128:1571–1583. doi: 10.1093/brain/awh500.

- Blatow M, Nennig E, Durst A, Sartor K, Stippich C. fMRI reflects functional connectivity of human somatosensory cortex. Neuroimage. 2007;37:927–936. doi: 10.1016/j.neuroimage.2007.05.038.

- Di Lazzaro V, Pilato F, Saturno E, Oliviero A, Dileone M, Mazzone P, Insola A, Tonali PA, Ranieri F, Huang YZ, Rothwell JC. Theta-burst repetitive transcranial magnetic stimulation suppresses specific excitatory circuits in the human motor cortex. J Physiol. 2005;565:945–950. doi: 10.1113/jphysiol.2005.087288.

- Dietrich S, Szameitat DP, Ackermann H, Alter K. How to disentangle lexical and prosodic information? Psychoacoustic studies on the processing of vocal interjections. Prog Brain Res. 2006;156:295–302. doi: 10.1016/S0079-6123(06)56016-9. [DOI] [PubMed] [Google Scholar]

- Eickhoff SB, Grefkes C, Fink GR, Zilles K. Functional lateralization of face, hand, and trunk representations in anatomically defined human somatosensory areas. Cereb Cortex. 2008;18:2820–2830. doi: 10.1093/cercor/bhn039. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. Neuroreport. 2002;13:427–431. doi: 10.1097/00001756-200203250-00013. [DOI] [PubMed] [Google Scholar]

- Eimer M, Holmes A. Event-related brain potential correlates of emotional face processing. Neuropsychologia. 2007;45:15–31. doi: 10.1016/j.neuropsychologia.2006.04.022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fabri M, Polonara G, Salvolini U, Manzoni T. Bilateral cortical representations of the trunk midline in human first somatic sensory area. Hum Brain Mapp. 2005;25:287–296. doi: 10.1002/hbm.20099. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Garrido L, Eisner F, McGettigan C, Stewart L, Sauter D, Hanley JR, Schweinberger SR, Warren JD, Duchaine B. Developmental phonagnosia: a selective deficit of vocal identity recognition. Neuropsychologia. 2009;47:123–131. doi: 10.1016/j.neuropsychologia.2008.08.003. [DOI] [PubMed] [Google Scholar]

- Gazzola V, Aziz-Zadeh L, Keysers C. Empathy and the somatotopic auditory mirror system in humans. Curr Biol. 2006;16:1824–1829. doi: 10.1016/j.cub.2006.07.072. [DOI] [PubMed] [Google Scholar]

- Goldman AI, Sripada CS. Simulationist models of face-based emotion recognition. Cognition. 2005;94:193–213. doi: 10.1016/j.cognition.2004.01.005.

- Hennenlotter A, Schroeder U, Erhard P, Castrop F, Haslinger B, Stoecker D, Lange KW, Ceballos-Baumann AO. A common neural basis for receptive and expressive communication of pleasant facial affect. Neuroimage. 2005;26:581–591. doi: 10.1016/j.neuroimage.2005.01.057.

- Hoekert M, Bais L, Kahn RS, Aleman A. Time course of the involvement of the right anterior superior temporal gyrus and the right fronto-parietal operculum in emotional prosody perception. PLoS One. 2008;3:e2244. doi: 10.1371/journal.pone.0002244.

- Huang YZ, Edwards MJ, Rounis E, Bhatia KP, Rothwell JC. Theta burst stimulation of the human motor cortex. Neuron. 2005;45:201–206. doi: 10.1016/j.neuron.2004.12.033.

- Jabbi M, Keysers C. Inferior frontal gyrus activity triggers anterior insula response to emotional facial expressions. Emotion. 2008;8:775–780. doi: 10.1037/a0014194.

- Kalla R, Muggleton NG, Cowey A, Walsh V. Human dorsolateral prefrontal cortex is involved in visual search for conjunctions but not features: a theta TMS study. Cortex. 2009;45:1085–1090. doi: 10.1016/j.cortex.2009.01.005.

- Keysers C, Gazzola V. Towards a unifying theory of social cognition. Prog Brain Res. 2006;156:379–401. doi: 10.1016/S0079-6123(06)56021-2.

- Marzi CA, Miniussi C, Maravita A, Bertolasi L, Zanette G, Rothwell JC, Sanes JN. Transcranial magnetic stimulation selectively impairs interhemispheric transfer of visuo-motor information in humans. Exp Brain Res. 1998;118:435–438. doi: 10.1007/s002210050299.

- Meyer M, Zysset S, von Cramon DY, Alter K. Distinct fMRI responses to laughter, speech, and sounds along the human perisylvian cortex. Brain Res Cogn Brain Res. 2005;24:291–306. doi: 10.1016/j.cogbrainres.2005.02.008.

- Pitcher D, Garrido L, Walsh V, Duchaine BC. Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. J Neurosci. 2008;28:8929–8933. doi: 10.1523/JNEUROSCI.1450-08.2008.

- Sauter DA. University of London; 2006. An investigation into vocal expressions of emotions: the roles of valence, culture, and acoustic factors. PhD thesis.

- Sauter DA, Eimer M. Rapid detection of emotion from human vocalizations. J Cogn Neurosci. 2010;22:474–481. doi: 10.1162/jocn.2009.21215.

- Sauter DA, Scott SK. More than one kind of happiness: can we recognise vocal expressions of different positive states? Motiv Emot. 2007;31:192–199.

- Sauter DA, Eisner F, Calder AJ, Scott SK. Perceptual cues in nonverbal vocal expressions of emotion. Q J Exp Psychol (Colchester). 2010;28:1–22. doi: 10.1080/17470211003721642.

- Scott SK, Sauter DA, McGettigan C. Brain mechanisms for processing perceived emotional vocalizations in humans. In: Brudzynski S, editor. Handbook of mammalian vocalizations, vol 19: An integrative neuroscience approach. London: Academic; 2009. pp. 187–198.

- Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- Terao Y, Ugawa Y, Suzuki M, Sakai K, Hanajima R, Gemba-Shimizu K, Kanazawa I. Shortening of simple reaction time by peripheral electrical and submotor-threshold magnetic cortical stimulation. Exp Brain Res. 1997;115:541–545. doi: 10.1007/pl00005724.

- Thilo KV, Santoro L, Walsh V, Blakemore C. The site of saccadic suppression. Nat Neurosci. 2004;7:13–14. doi: 10.1038/nn1171.

- Tyrrell RA, Owens DA. A rapid technique to assess the resting states of eyes and other threshold phenomena: The modified binary search (MOBS). Behav Res Methods Instrum Comput. 1988;20:137–141.

- Vallesi A, Shallice T, Walsh V. Role of the prefrontal cortex in the foreperiod effect: TMS evidence for dual mechanisms in temporal preparation. Cereb Cortex. 2007;17:466–474. doi: 10.1093/cercor/bhj163.

- van der Gaag C, Minderaa RB, Keysers C. Facial expressions: what the mirror neuron system can and cannot tell us. Soc Neurosci. 2007;2:179–222. doi: 10.1080/17470910701376878.

- van Rijn S, Aleman A, van Diessen E, Berckmoes C, Vingerhoets G, Kahn RS. What is said or how it is said makes a difference: role of the right fronto-parietal operculum in emotional prosody as revealed by repetitive TMS. Eur J Neurosci. 2005;21:3195–3200. doi: 10.1111/j.1460-9568.2005.04130.x.

- Walsh V, Pascual-Leone A. Transcranial magnetic stimulation: a neurochronometrics of mind. Cambridge, MA: MIT; 2003. pp. 84–88.

- Warren JE, Sauter DA, Eisner F, Wiland J, Dresner MA, Wise RJ, Rosen S, Scott SK. Positive emotions preferentially engage an auditory-motor “mirror” system. J Neurosci. 2006;26:13067–13075. doi: 10.1523/JNEUROSCI.3907-06.2006.

- Winston JS, O'Doherty J, Dolan RJ. Common and distinct neural responses during direct and incidental processing of multiple facial emotions. Neuroimage. 2003;20:84–97. doi: 10.1016/s1053-8119(03)00303-3.