Significance

Though the statistical properties of musical compositions have been widely studied, little is known about the statistical nature of musical interaction—a foundation of musical communication. The goal of this study was to uncover the general statistical properties underlying musical interaction by observing two individuals synchronizing rhythms. We found that the interbeat intervals between individuals exhibit scale-free cross-correlations, i.e., the next beat played by an individual is dependent on the entire history (up to several minutes) of their partner’s interbeat intervals. To explain this surprising observation, we introduce a general stochastic model that can also be used to study synchronization phenomena in econophysics and physiology. The scaling laws found in musical interaction are directly applicable to audio production.

Keywords: time series analysis, long-range cross-correlations, anticorrelations, musical coupling, interbrain synchronization

Abstract

Though the music produced by an ensemble is influenced by multiple factors, including musical genre, musician skill, and individual interpretation, rhythmic synchronization is at the foundation of musical interaction. Here, we study the statistical nature of the mutual interaction between two humans synchronizing rhythms. We find that the interbeat intervals of both laypeople and professional musicians exhibit scale-free (power law) cross-correlations. Surprisingly, the next beat to be played by one person is dependent on the entire history of the other person’s interbeat intervals on timescales up to several minutes. To understand this finding, we propose a general stochastic model for mutually interacting complex systems, which suggests a physiologically motivated explanation for the occurrence of scale-free cross-correlations. We show that the observed long-term memory phenomenon in rhythmic synchronization can be imitated by fractal coupling of separately recorded or synthesized audio tracks and thus applied in electronic music. Though this study provides an understanding of fundamental characteristics of timing and synchronization at the interbrain level, the mutually interacting complex systems model may also be applied to study the dynamics of other complex systems where scale-free cross-correlations have been observed, including econophysics, physiological time series, and collective behavior of animal flocks.

In his book Musicophilia, neurologist Oliver Sacks writes: “In all societies, a primary function of music is collective and communal, to bring and bind people together. People sing together and dance together in every culture, and one can imagine them having done so around the first fires, a hundred thousand years ago” (1). Sacks adds, “In such a situation, there seems to be an actual binding of nervous systems accomplished by rhythm” (2). These thoughts lead to the question: Is there any underlying and quantifiable structure to the subjective experience of “musical binding”? Here, we examine the statistical nature of musical binding (also referred to as musical coupling) when two humans play rhythms in synchrony.

Every beat a single (noninteracting) layperson or musician plays is accompanied by small temporal deviations from the exact beat pattern, i.e., even a trained musician will hit a drum beat slightly ahead of or behind the metronome (typically with an SD of 5–15 ms). Interestingly, these deviations are statistically dependent and exhibit long-range correlations (LRC) (3, 4). Listeners significantly prefer music mirroring long-range correlated temporal deviations over uncorrelated (white noise) fluctuations (5, 6). LRC are also inherent in the reproduction of both spatial and temporal intervals by single subjects (4, 7–9) and in musical compositions, such as pitch fluctuations (a simple example of pitch fluctuations is a melody) (10, 11) and note lengths (12). The observation of power law correlations in fluctuations of pitch and note length in compositions reflects a hierarchical, self-similar structure in these compositions.

In this article, we examine rhythmic synchronization, which is at the foundation of musical interaction, from orchestral play to audience hand-clapping (13). More specifically, we show that the interbeat intervals (IBIs) of two subjects synchronizing musical rhythms exhibit long-range cross-correlations (LRCCs). This appears to be a general phenomenon, because LRCCs were found in both professional musicians and laypeople.

The observation of LRCCs may point to characteristics of criticality in the dynamics of the considered complex system. LRCCs are characterized by a power law decay of the cross-correlation function and indicate that the two time series of IBIs form a self-similar (fractal) structure. Here, self-similarity implies that trends in the IBIs are likely to repeat on different timescales, i.e., patterns of IBI fluctuations of one musician tend to reproduce in a statistically similar way at a later time, even in the other musician’s play. A variety of complex systems exhibit LRCCs; examples include price fluctuations of the New York Stock Exchange (where the LRCCs become more pronounced during economic crises) (14–16), heartbeat and EEG fluctuations (15, 17), particles in a Lorentz channel (18), the binding affinity of proteins to DNA (15), schools of fish (19), and the collective response of starling flocks (20, 21). The origin of collective dynamics and LRCCs based on local interactions often remains elusive (20) and is the focus of current research (19, 21). Of particular interest are the rules of interactions of the individuals in a crowd (22, 23) and transitions to synchronized behavior (16, 24). We introduce a stochastic model for mutually interacting complex systems (MICS) that generates LRCCs and provides a physiologically motivated explanation for the surprising presence of long-term memory in the cross-correlations of musical performances.

Interbrain synchronization has received growing attention recently, including studies of interpersonal synchronization (see ref. 4 for an overview), coordination of speech rhythm (25), social interactions (26), cortical phase synchronization while playing guitar in duets (27, 28), and improvisation in classical music performances (29).

Notably, the differences between the beats of two musicians are on the order of only a few milliseconds, not much larger than the typical duration of a single action potential (∼1 ms). The neurophysical mechanisms of timing in the millisecond range remain largely unresolved (30, 31). EEG oscillatory patterns are associated with error prediction during music performance (32). Fine motor skills, such as finger-tapping rhythm and rate, are used to establish an early diagnosis of Huntington disease (33). The neurological capacity to synchronize with a beat may offer therapeutic applications for Parkinson disease, although the underlying mechanisms remain unknown (34). This study offers a statistical framework that may help to understand these mechanisms.

Experimental Setup and Methods

The performances were recorded at the Harvard University Studio for Electroacoustic Composition (see SI Text for details) on a Studiologic SL 880 keyboard, yielding 57 time series of Musical Instrument Digital Interface (MIDI) recordings (Fig. 1, Top). Each recording typically lasted 6–8 min and contained ∼1,000 beats per subject. The temporal occurrences t1, …, tn of the beats were extracted from the MIDI recordings, and the interbeat intervals were computed as In = tn − tn−1 with t0 = 0. The analysis is not restricted to MIDI; the results presented here apply equally to acoustic recordings. Subjects pressed a key with their index finger in one of the following tasks. Task type Ia: Two subjects played beats in synchrony, with one finger each. Task type Ib: Sequential recordings were made, where subject B synchronized with previously recorded beats of subject A. Sequential recordings are widely used in professional studio recordings, where typically the drummer is recorded first, followed by layers of other instruments. Task type II: One subject played beats in synchrony with one finger from each hand. Task type III: One subject played beats with one finger (finger-tapping). Finger-tapping of single subjects is well studied in the literature (4) and serves as a baseline, but our focus is on synchronization between subjects. In addition to periodic tapping, a 4/4 rhythm {1, 2.5, 3, 4}, in which the second beat is replaced by an offbeat, was used in tasks I–III.
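The interval definition In = tn − tn−1 with t0 = 0 can be sketched as follows. This assumes the onset times (in seconds) have already been extracted from the MIDI note-on events; the MIDI parsing itself is not shown, and the function names `onsets_to_ibis` and `ibis_to_bpm` are hypothetical helpers for illustration.

```python
import numpy as np

def onsets_to_ibis(onsets_s):
    """Interbeat intervals I_n = t_n - t_(n-1) with t_0 = 0 (seconds).

    Because t_0 = 0, the first value equals t_1 itself."""
    t = np.asarray(onsets_s, dtype=float)
    return np.diff(np.concatenate(([0.0], t)))

def ibis_to_bpm(ibis_s):
    """Instantaneous tempo (beats per minute) for each interval."""
    return 60.0 / np.asarray(ibis_s, dtype=float)

# Example: three onsets; an interval of 0.45 s corresponds to about 133 bpm,
# the starting tempo reported in Fig. 1A.
ibis = onsets_to_ibis([0.5, 1.0, 1.45])
tempo = ibis_to_bpm(ibis)
```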

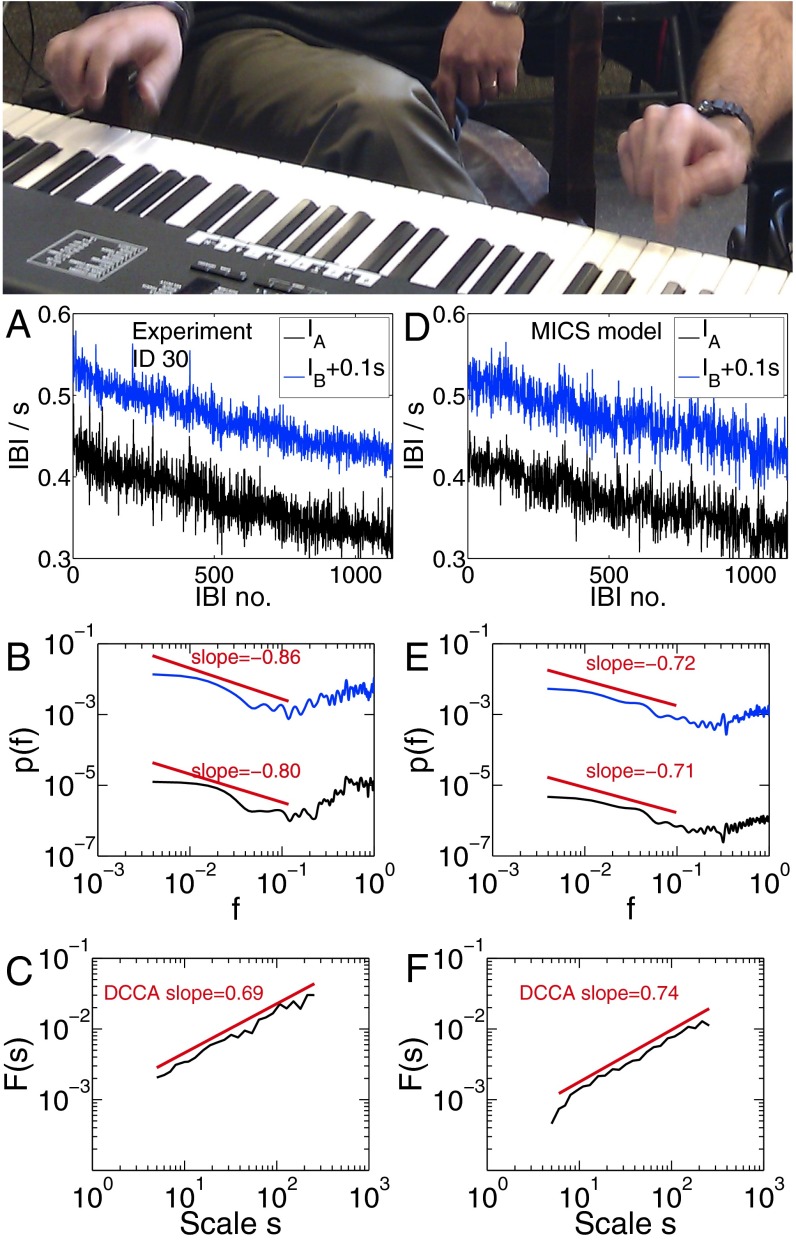

Fig. 1.

(Top) Two professional musicians A and B synchronizing their beats: comparison of experiments (A–C) with the MICS model (D–F). (A) The IBIs of 1,134 beats of musician A (black curve) and B (blue curve, offset by 0.1 s for clarity) exhibit slowly varying trends and a tempo increase from 133 to 182 beats per minute. (B and E) The gPSD of time series IA and IB shows LRC asymptotically for small f and anticorrelations for large f, separated by a vertex of the curve at f ∼ 0.1fNyquist (7). (C) Evidence of LRCC between IA and IB; the DCCA exponent is δ = 0.69. (D–F) The MICS model for βA = βB = 0.85 and n = 1,133 predicts δ = 0.74, in excellent agreement with the experimental data. A global trend extracted from A was added to the curve for illustration. Other parameters as in Fig. 2 A and B.

For analysis of the cross-correlations between two nonstationary time series (here, between two sequences of interbeat intervals; Fig. 1A), a modified version of detrended cross-correlation analysis (DCCA) was used (17) (Materials and Methods). We added global detrending as an initial step before DCCA, which has been shown to be crucial in analyzing slowly varying nonstationary signals (35). DCCA calculates the detrended covariance F(s) in windows of size s. LRCC are present if F(s) asymptotically follows a power law F(s) ∼ s^δ with 0.5 < δ < 1.5. In contrast, δ = 0.5 indicates the absence of LRCC.

A time series is considered long-range correlated if its power spectral density (PSD) asymptotically decays as a power law, p(f) ∼ 1/f^β, for small frequencies f with 0 < β < 2. The limits β = 0 and β = 2 correspond to white noise and Brownian motion, respectively, whereas −2 < β < 0 indicates anticorrelations. Throughout this article, we measure the power spectral frequency f in units of the Nyquist frequency (fNyquist = 1/2 Hz), which is half the sampling rate of the time series. A method tailored for studying long-range correlations in slowly varying nonstationary time series is detrended fluctuation analysis (DFA) (36, 37) (Materials and Methods). The DFA method consists of (i) integrating the signal, (ii) local detrending of the integrated signal in windows of size s with a polynomial of degree k (we used k = 2), and (iii) calculation of the root-mean-square fluctuation FDFA(s) of the detrended segments of length s. For fractal scaling, we obtain FDFA(s) ∼ s^α with DFA exponent α (which in our case is the Hurst exponent). The DFA exponent quantifies the persistence of memory in a given time series and is related to the PSD exponent β via β = 2α − 1.
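The DFA steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the study's implementation: the window sizes are a fixed log-spaced set chosen here for illustration (the automatic choice of smin described in Materials and Methods is not reproduced), and the function names `dfa` and `dfa_exponent` are hypothetical.

```python
import numpy as np

def dfa(x, scales, k=2):
    """Fluctuation function F(s) of DFA-k for each window size s in `scales`."""
    x = np.asarray(x, dtype=float)
    profile = np.cumsum(x - x.mean())             # (i) integrate the mean-subtracted series
    F = []
    for s in scales:
        n_win = len(profile) // s                 # non-overlapping windows of size s
        segments = profile[: n_win * s].reshape(n_win, s)
        u = np.arange(s)
        sq = []
        for seg in segments:
            trend = np.polyval(np.polyfit(u, seg, k), u)   # (ii) local polynomial of degree k
            sq.append(np.mean((seg - trend) ** 2))
        F.append(np.sqrt(np.mean(sq)))            # (iii) root-mean-square fluctuation
    return np.array(F)

def dfa_exponent(x, scales, k=2):
    """Slope alpha of log F(s) versus log s; the PSD exponent is beta = 2*alpha - 1."""
    F = dfa(x, scales, k)
    alpha, _ = np.polyfit(np.log(scales), np.log(F), 1)
    return alpha

# Usage: white noise should give alpha near 0.5, i.e., beta near 0.
rng = np.random.default_rng(0)
scales = np.unique(np.logspace(np.log10(16), np.log10(1024), 12).astype(int))
alpha = dfa_exponent(rng.standard_normal(4096), scales)
beta = 2 * alpha - 1
```

Integrating first means that uncorrelated noise yields F(s) ∼ s^0.5, so α = 0.5 marks the boundary between anticorrelated and long-range correlated behavior.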

Many time series of physical, biological, physiological, and social systems are nonstationary and exhibit long-range correlations. The PSD is commonly analyzed to gain insight into the dynamics of natural systems. However, for nonstationary time series the PSD method (also known as periodogram) fails: global trends manifest as spurious LRC in the PSD. We therefore propose the globally detrended PSD (gPSD), an extension of the PSD method that includes prior global detrending with a polynomial of degree k = 1 … 5 (Materials and Methods). In our data set, DFA and gPSD agree within the margin of error.

Note that in addition to the numerical error of the least-squares fitting procedure, the algorithms for fractal analysis have internal error bars, analyzed in detail by Pilgram and Kaplan (38). For example, DFA results for the scaling exponent α are expected to have an SD of ∼±0.05 for data sets of the same size as ours. Because DCCA is closely related to DFA, the internal errors of DCCA and DFA are expected to be of comparable size.

Results

A representative example of the findings from a recording of two professional musicians, A and B, playing periodic beats in synchrony (task type Ia) is shown in Fig. 1. Evidence for LRCCs between IA and IB on timescales up to the total recording time is reported in Fig. 1C, with DCCA exponent δ = 0.69 ± 0.05. The two subjects are rhythmically bound together on timescales up to several minutes, and the generation of the next beat of one subject depends on all previous beat intervals of both subjects in a scale-free manner. LRCCs were found in all performances of both laypeople and professionals when two subjects were synchronizing simple rhythms (Fig. 2C). Thus, rhythmic interaction can be seen as a scale-free process. In contrast, when a single subject synchronizes their left and right hands (task type II), no significant LRCCs were observed (Fig. 2C), suggesting that the interaction of two complex systems is a necessary prerequisite for rhythmic binding. However, further research may clarify whether different types of two-handed play in single individuals can similarly lead to LRCCs between the two hands.

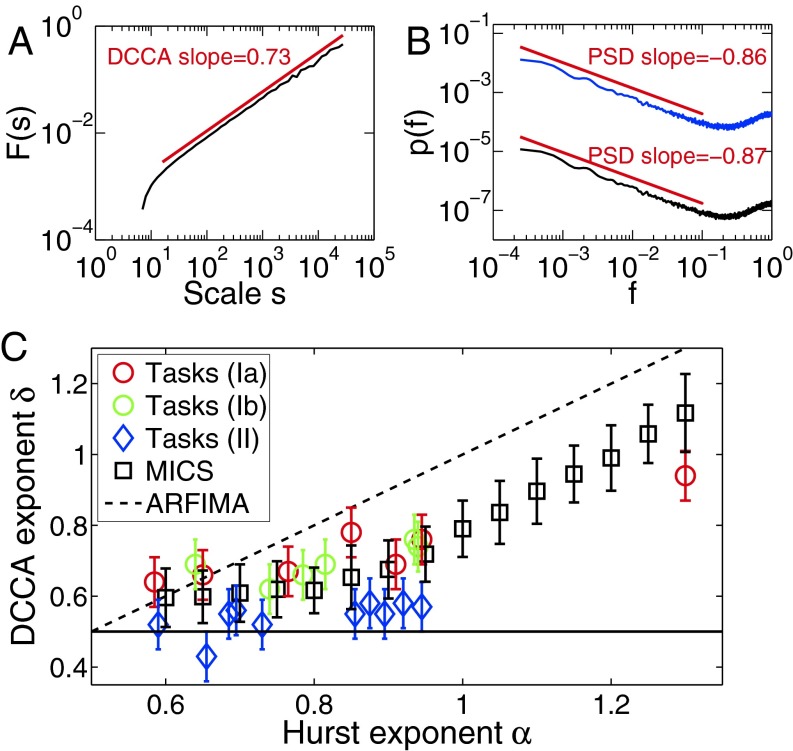

Fig. 2.

(A) Evidence of scale-free cross-correlations in the MICS model. (B) The PSD of IA (and IB) shows two regions: LRC asymptotically for small f with exponent β(IA) = 0.86 ∼ max(βA, βB) and anticorrelations for large f. Other parameters (A and B): n = 2^17, βA = βB = 0.85, coupling WA = WB = 0.5, and σA = σB = 6. (C) Excellent agreement is found between the δ predicted by the MICS model and tasks Ia (simultaneous recordings, red circles) and Ib (sequential recordings, green circles). For each DFA (Hurst) exponent αA = αB ≡ α, 100 time series of length n = 2,048 were generated with the MICS model, and δ was calculated for all power law estimates with Pearson correlation coefficient R > 0.95 (for α ≥ 0.9 all realizations fulfilled this criterion). In contrast, the ARFIMA model (dashed line) deviates strongly from the experiments (tasks Ia and Ib) for α > 0.75. Recordings of single subjects (task II) are consistent with the absence of LRCC, i.e., δ = 0.5 (black line).

What do the correlations between interbeat intervals look like in sequential recordings (task type Ib), where the musicians are recorded one after another? Surprisingly, we also found scale-free cross-correlations in sequential recordings (green circles in Fig. 2C), a finding reproduced by the MICS model. Therefore, even though many pop and rock songs are currently recorded sequentially in studios, they may still contain LRCCs (and thus exhibit scale-free coupling) between individual tracks.

We identify two distinct regions in the PSD of the interbeat intervals, separated by a vertex of the curve at a characteristic frequency fc ∼ 0.1fNyquist (Fig. 1B and SI Text): (i) The low-frequency region asymptotically exhibits long-range correlations. This region covers long periods of time up to the total recording time. (ii) The high-frequency region exhibits short-range anticorrelations. This region corresponds to short timescales. These two regions were first described in single-subject finger-tapping without a metronome (7). Because these two regions are observed in the entire data set (i.e., in all 57 recorded time series across all tasks), this finding suggests that these regions persist when musicians interact. Fig. 1E shows that the MICS model reproduces both regions and fc for interacting complex systems.

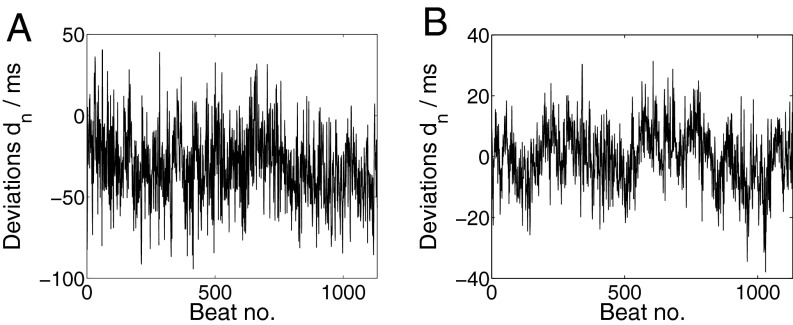

The two subjects may perceive the deviations dn = tA,n − tB,n between their beats. The DFA exponent α = 0.72 for the time series dn indicates long-range correlations in the deviations (averaging over the entire data set, we find ). In Fig. 3A the average deviation is , which may indicate that musician A led and B followed. An analysis of how the scaling exponents differ in cases where no obvious leader–follower relation is observed can be found in SI Text.

Fig. 3.

The time series of deviations dn (Eq. 2) between the beats of two synchronizing individuals contain LRC both (A) in the experiment and (B) in the MICS model. (A) As a representative example, we show the dn obtained from the interbeat intervals IA,n and IB,n of the two professional musicians in Fig. 1A. Average deviation is . The DFA exponent reads α = 0.72, indicating long-range correlations. (B) Deviations dn simulated with the MICS model (Eq. 1) with DFA exponent α = 0.89. Other parameters are n = 1,133 data points, βA = βB = 0.85, σA = σB = 6, WA = WB = 0.5.

MICS Model

What is the origin of the scale-free cross-correlations? In algorithms generating LRCCs, such as a two-component autoregressive fractionally integrated moving average (ARFIMA) process (35), the nth element of the time series is essentially generated by a stochastic term plus a weighted sum over all previous elements (see Eq. 4 in Materials and Methods). This weighted sum is also inherent in other well-known long-memory processes, such as fractional Brownian motion. Although these statistical processes are widely applied in finance, climate analysis, and other fields, a weighted sum over all previous elements does not appear to be (physiologically) meaningful in many cases, including ours, because it requires explicit memory of these elements. In fact, it is highly unlikely that each subject has declarative memory of hundreds of interbeat intervals played in the preceding minutes. Hence, the occurrence of LRCCs when musicians synchronize rhythms is surprising. Moreover, our experimental results deviate strongly from the analytical prediction based on two-component ARFIMA (dashed line in Fig. 2C), where δARFIMA = (αA + αB)/2 with DFA (Hurst) exponents αA, αB (35). In the following, a model for MICS is introduced (see ref. 39 for a related model based on an activation-threshold mechanism), in which LRCCs emerge dynamically from a local interaction.

Gilden et al. (7) presented a model in which the generation of temporal intervals by a single person is composed of two parts: an internal clock and a motor program associated with moving a finger or limb. The delay of the motor program is given by Gaussian white noise ξn. The internal clock generates beat intervals Cn whose PSD is 1/f noise (7) [which, as a first step, we generalize to 1/f^β noise with 0 < β < 2 to account for recent studies in which a range of power law exponents was found (3, 4)].
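Gaussian 1/f^β clock noise can be produced in several ways; one common choice, assumed here and not necessarily the generator used in the study, is Fourier filtering: the spectrum of white noise is multiplied by f^(−β/2), so that the power falls off as f^(−β). The function name `power_law_noise` is hypothetical.

```python
import numpy as np

def power_law_noise(n, beta, rng=None):
    """Zero-mean, unit-variance Gaussian noise with PSD ~ 1/f**beta (Fourier filtering)."""
    rng = np.random.default_rng() if rng is None else rng
    spectrum = np.fft.rfft(rng.standard_normal(n))   # white-noise spectrum
    f = np.fft.rfftfreq(n)                           # frequencies in cycles per sample
    amp = np.zeros_like(f)                           # amp[0] = 0 removes the DC component
    amp[1:] = f[1:] ** (-beta / 2.0)                 # amplitude ~ f^(-beta/2) -> power ~ f^(-beta)
    x = np.fft.irfft(spectrum * amp, n)
    return (x - x.mean()) / x.std()

# Usage: 1/f noise (beta = 1), as in the original Gilden model.
clock = power_law_noise(2**14, 1.0, np.random.default_rng(1))
```

Setting β = 0 returns white noise, and β close to 2 approaches Brownian motion, matching the limits given for the PSD exponent.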

The following observation is built into the MICS model: When two subjects A and B are synchronizing a rhythm, each person attempts to (partly) compensate for the deviation dn = tA,n − tB,n perceived between the two nth beats when generating the (n + 1)th beat. We propose the following model for MICS:

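$$\begin{aligned} I_{A,n} &= \sigma_A C_{A,n} + T + \xi_{A,n} - \xi_{A,n-1} - W_A\, d_{n-1},\\ I_{B,n} &= \sigma_B C_{B,n} + T + \xi_{B,n} - \xi_{B,n-1} + W_B\, d_{n-1}, \end{aligned} \tag{1}$$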

where CA,n and CB,n are Gaussian-distributed 1/f^β noise time series with exponents 0 < βA,B < 2, ξA,n and ξB,n are the Gaussian white-noise motor delays, and T is the mean beat interval. We set d0 = 0. The coupling strengths 0 < WA,B < 2 describe the rate at which a deviation is compensated in the generation of the next beat. In the limit WA = WB = 0 and βA = βB = 1, the MICS model reduces to the Gilden model (7). The MICS model diverges for WA + WB ≥ 2, i.e., when subjects overcompensate.
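A minimal simulation sketch of the MICS model, assuming Eq. 1 has the form IA,n = σA CA,n + T + ξA,n − ξA,n−1 − WA dn−1 and IB,n = σB CB,n + T + ξB,n − ξB,n−1 + WB dn−1 with unit-variance motor noise ξ. The clock noise is generated by Fourier filtering, an assumed generator; the function names `power_law_noise` and `simulate_mics` are hypothetical. The parameter values in the usage line are those quoted for Fig. 1 (βA = βB = 0.85, σA = σB = 6, WA = WB = 0.5, n = 1,133).

```python
import numpy as np

def power_law_noise(n, beta, rng):
    """Unit-variance Gaussian noise with PSD ~ 1/f**beta (Fourier filtering)."""
    f = np.fft.rfftfreq(n)
    amp = np.zeros_like(f)
    amp[1:] = f[1:] ** (-beta / 2.0)
    x = np.fft.irfft(np.fft.rfft(rng.standard_normal(n)) * amp, n)
    return (x - x.mean()) / x.std()

def simulate_mics(n, beta_a, beta_b, w_a, w_b, sigma_a, sigma_b, T=0.0, seed=None):
    """Return interbeat intervals I_A, I_B and deviations d_1..d_n (with d_0 = 0)."""
    rng = np.random.default_rng(seed)
    c_a = power_law_noise(n, beta_a, rng)     # internal clock of A
    c_b = power_law_noise(n, beta_b, rng)     # internal clock of B
    xi_a = rng.standard_normal(n + 1)         # motor delays xi_0 .. xi_n of A
    xi_b = rng.standard_normal(n + 1)         # motor delays xi_0 .. xi_n of B
    i_a, i_b = np.empty(n), np.empty(n)
    d = np.zeros(n + 1)                       # d[0] = d_0 = 0
    for k in range(n):                        # k = n - 1 in the 1-based notation of Eq. 1
        i_a[k] = sigma_a * c_a[k] + T + xi_a[k + 1] - xi_a[k] - w_a * d[k]   # A corrects by -W_A d
        i_b[k] = sigma_b * c_b[k] + T + xi_b[k + 1] - xi_b[k] + w_b * d[k]   # B corrects by +W_B d
        d[k + 1] = d[k] + i_a[k] - i_b[k]     # new deviation t_A,n - t_B,n
    return i_a, i_b, d[1:]

i_a, i_b, d = simulate_mics(1133, 0.85, 0.85, 0.5, 0.5, 6.0, 6.0, seed=1)
```

Running the same function with WA + WB > 2 (for example, 1.2 each) makes |dn| grow without bound, which is the overcompensation regime described above.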

Anticorrelations on short timescales arise from the term −ξn−1: a long interbeat interval is likely followed by a short one and vice versa to maintain a given tempo (7), which offers an explanation for the two regions in the PSD of the interbeat intervals in Fig. 1B. Because the delay of the motor program has no long-term memory, its effect decays exponentially over time; hence, the anticorrelations are only seen on short timescales, whereas the long-time behavior is given by the long-range correlated clock noise. The relative strength of clock noise over motor noise is given by σA and σB and can be extracted from the slope of the anticorrelations in IA and IB for high f: the larger σA, the weaker the anticorrelations in IA (7). One would expect the anticorrelations to decrease when every other beat is neglected. Indeed, by selecting all odd-numbered terms {I2n−1} (or the even-numbered terms), the short-range anticorrelations in the PSD are significantly reduced (both in the MICS model and in the data) and can even vanish if clock noise dominates (i.e., for large σA, σB). LRCCs are significantly reduced when selecting only every other element of the time series.
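The effect of the −ξn−1 term can be checked directly. In the sketch below the clock noise is deliberately simplified to white noise (an assumption that isolates the motor term), so that In = σ Cn + ξn − ξn−1 has a lag-1 autocorrelation of −1/(σ² + 2): negative, and weaker for larger σ. Consecutive odd-numbered terms I1, I3, … share no motor delay, so their lag-1 autocorrelation should be near zero. The helper name `lag1_autocorr` is hypothetical.

```python
import numpy as np

def lag1_autocorr(x):
    """Lag-1 autocorrelation coefficient of a series."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    return float(np.dot(x[:-1], x[1:]) / np.dot(x, x))

rng = np.random.default_rng(0)
n, sigma = 20000, 0.5
clock = sigma * rng.standard_normal(n)   # simplification: white clock noise
xi = rng.standard_normal(n + 1)          # motor delays xi_0 .. xi_n
ibi = clock + xi[1:] - xi[:-1]           # I_n = sigma*C_n + xi_n - xi_(n-1), with T = 0

full = lag1_autocorr(ibi)                # theory: -1 / (sigma**2 + 2)
odd = lag1_autocorr(ibi[::2])            # I_1, I_3, ...: theory 0
```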

A comparison of the MICS model (Fig. 1 D–F) with our experiments (Fig. 1 A–C) shows excellent agreement. The vertex at the characteristic frequency fc in the PSD is reproduced by the MICS model (Fig. 1 B and E).

How do scale-free cross-correlations emerge in the MICS model? The deviations dn, which the musicians perceive and adapt to, can be written as a sum over all previous interbeat intervals

$$d_n = t_{A,n} - t_{B,n} = \sum_{k=1}^{n} \left( I_{A,k} - I_{B,k} \right), \tag{2}$$

thus involving all previous elements of the time series of IBIs of both musicians. Therefore, the MICS model suggests that scale-free coupling of the two subjects emerges mainly through the adaptation to deviations between their beats.
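A short derivation makes the role of the coupling explicit. Assuming Eq. 1 contains the compensation terms −WA dn−1 (for A) and +WB dn−1 (for B), subtracting the two interval equations and using dn = dn−1 + IA,n − IB,n gives a first-order recursion in which T cancels:

```latex
\begin{aligned}
d_n &= d_{n-1} + I_{A,n} - I_{B,n} = (1 - W_A - W_B)\, d_{n-1} + \eta_n,\\
\eta_n &= \sigma_A C_{A,n} - \sigma_B C_{B,n} + (\xi_{A,n} - \xi_{A,n-1}) - (\xi_{B,n} - \xi_{B,n-1}),\\
d_n &= \sum_{j=0}^{n-1} (1 - W_A - W_B)^{j}\, \eta_{n-j} \qquad (d_0 = 0).
\end{aligned}
```

The recursion stays bounded only if |1 − WA − WB| < 1, i.e., 0 < WA + WB < 2, consistent with the divergence for WA + WB ≥ 2 stated above. For WA = WB = 0.5, the factor vanishes and dn = ηn, so the deviations directly inherit the long-range correlated clock noise.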

What does the MICS model predict? (P1) Emergence of LRCCs (Fig. 2A): The predicted DCCA exponents δ, which are not parameters of the model, are in excellent agreement with the experiments (Fig. 2C). (P2) Asymptotically, the DFA scaling exponents αA,B of the interbeat intervals are determined by the “clock” with the strongest persistence: αA = αB = [max(βA, βB) + 1]/2. This result is valid for long time series of length N ≳ 10^5 (Fig. 2B). Surprisingly, even when clock A is turned off (i.e., βA = 0), the long-time behavior of both IA and IB is asymptotically given by the exponent of the long-range correlated clock B (and vice versa) for large N. Thus, the musician with the higher scaling exponent determines the partner’s long-term memory in the IBIs. However, in experiments the exponents can differ significantly in shorter time series of length N ∼ 1,000, as can be seen by comparing the PSD exponents in Figs. 1E and 2B. (P3) Sequential recordings (WA = 0 and 0 < WB < 2): The MICS model predicts LRCC for sequential recordings, in agreement with the experimental findings (green circles in Fig. 2C). (P4) The DFA exponent of the time series of deviations dn is given by α = [max(βA, βB) + 1]/2 in the limit N → ∞. However, the DFA exponents of the time series of deviations can differ significantly for finite lengths N ∼ 1,000 beats. A sample sequence is shown in Fig. 3B.
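As a worked example of prediction P2, take the clock exponents used for Figs. 1–3, βA = βB = 0.85 (setting βA = 0 instead would give the same result, because only the maximum enters):

```latex
\alpha_A = \alpha_B = \frac{\max(\beta_A, \beta_B) + 1}{2} = \frac{0.85 + 1}{2} = 0.925,
\qquad \beta = 2\alpha - 1 = 0.85 .
```

The PSD exponent β(IA) = 0.86 reported for the long simulated series in Fig. 2B is close to this asymptotic value.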

A possible extension of the MICS model is to consider variable coupling strengths W = W(dn). Because larger deviations are likely to be perceived more distinctly, one possible scenario is to introduce couplings W that increase with dn. For example, W may increase when large deviations such as glitches are perceived. In cases where the coupling strengths WA or WB depend on dn, Eq. 1 becomes nonlinear in dn.
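One way to sketch a deviation-dependent coupling is shown below. The saturating linear form W(d) = min(w0 + gain·|d|, w_max) and its parameter values are hypothetical choices for illustration only, not part of the study; capping W at w_max = 0.9 keeps WA + WB below 2. Because W depends on dn−1, the compensation term W(dn−1)·dn−1 is nonlinear in the deviation. The function names `coupling` and `next_intervals` are likewise hypothetical.

```python
import numpy as np

def coupling(d, w0=0.3, gain=0.02, w_max=0.9):
    """Hypothetical coupling that grows with the perceived deviation |d|, capped at w_max."""
    return np.minimum(w0 + gain * np.abs(d), w_max)

def next_intervals(d_prev, clock_a, clock_b, motor_a, motor_b, T=0.0):
    """One MICS-style step with couplings W_A(d), W_B(d).

    clock_a, clock_b: scaled clock terms sigma*C_n; motor_a, motor_b: (xi_n, xi_(n-1)) pairs."""
    w_a = coupling(d_prev)
    w_b = coupling(d_prev)
    i_a = clock_a + T + motor_a[0] - motor_a[1] - w_a * d_prev   # A shortens if it lags
    i_b = clock_b + T + motor_b[0] - motor_b[1] + w_b * d_prev   # B lengthens accordingly
    return i_a, i_b

# Without noise, a deviation of 10 gives W = 0.5 for both subjects, which
# cancels the deviation in a single step: d_new = 10 + (-5) - 5 = 0.
i_a, i_b = next_intervals(10.0, 0.0, 0.0, (0.0, 0.0), (0.0, 0.0))
```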

Application: Musical Coupling

The observation of scale-free (fractal) musical coupling can be used to couple the interbeat intervals of two or more audio tracks, i.e., to imitate the generic interaction between musicians. Additional temporal deviations from the exact beat pattern, such as those a musician introduces intentionally to interpret a musical piece, are not modeled by the procedure below. Although the interbeat intervals are modified, all other characteristics, such as pitch, timbre, and loudness, remain unchanged.

Introducing musical coupling in two or more sequences is referred to as “group-humanizing.” More than two audio tracks can be group-humanized by having each additional track respond to, e.g., the average of all other tracks' deviations. It is also possible to group-humanize sequences by having a computer respond to or follow a musician in a “humanized” manner. For example, a musician can play a MIDI instrument while the computer-controlled instruments adapt to the musician's unique input.
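A sketch of the multitrack idea, under explicit assumptions: each track follows the MICS-style interval rule with its own 1/f^β clock and motor noise, and corrects toward the average beat time of all other tracks. This N-track generalization, the common coupling w, and the function names `power_law_noise` and `group_humanize` are hypothetical illustrations. For two tracks it reduces to the Eq. 1 form with WA = WB = w, because the deviation of B from A is −dn. A short calculation gives the stability condition |1 − w·N/(N − 1)| < 1 for N tracks, which reduces to 0 < 2w < 2 for N = 2.

```python
import numpy as np

def power_law_noise(n, beta, rng):
    """Unit-variance Gaussian noise with PSD ~ 1/f**beta (Fourier filtering)."""
    f = np.fft.rfftfreq(n)
    amp = np.zeros_like(f)
    amp[1:] = f[1:] ** (-beta / 2.0)
    x = np.fft.irfft(np.fft.rfft(rng.standard_normal(n)) * amp, n)
    return (x - x.mean()) / x.std()

def group_humanize(n_tracks, n, beta=0.9, w=0.5, sigma=6.0, T=0.0, seed=None):
    """Interbeat-interval fluctuations (n_tracks x n), each track following the others' mean."""
    rng = np.random.default_rng(seed)
    clocks = np.array([power_law_noise(n, beta, rng) for _ in range(n_tracks)])
    xi = rng.standard_normal((n_tracks, n + 1))        # motor delays per track
    t = np.zeros(n_tracks)                              # current beat time of each track
    ibis = np.empty((n_tracks, n))
    for k in range(n):
        others_mean = (t.sum() - t) / (n_tracks - 1)    # mean beat time of the other tracks
        dev = t - others_mean                           # deviation perceived by each track
        ibis[:, k] = sigma * clocks[:, k] + T + xi[:, k + 1] - xi[:, k] - w * dev
        t = t + ibis[:, k]
    return ibis

ibis = group_humanize(4, 500, seed=3)
```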

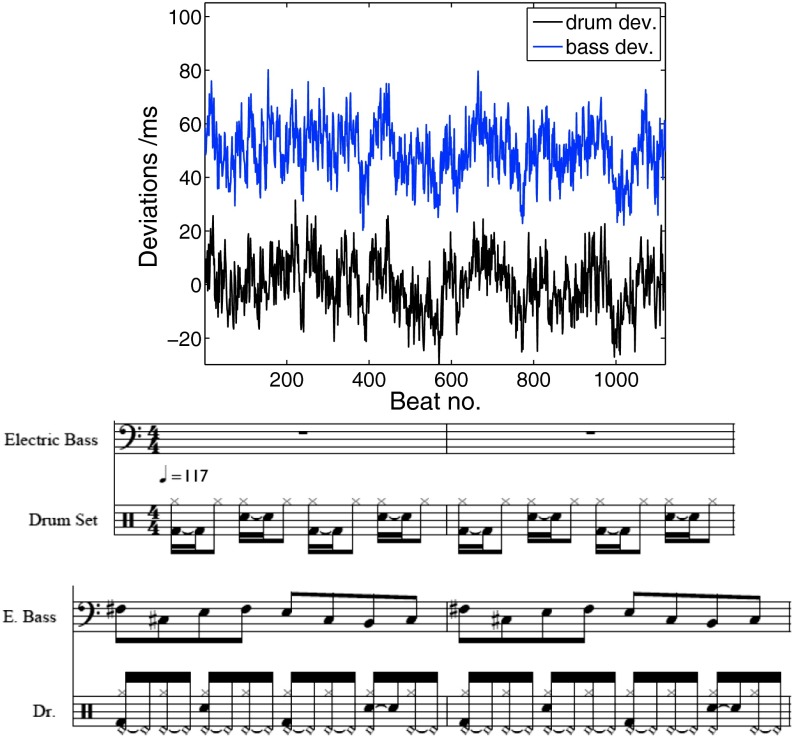

The procedure to introduce musical coupling in two audio tracks A and B is demonstrated using an instrumental version of the song “Billie Jean” by Michael Jackson. This song was chosen because its drum and bass tracks consist of a simple rhythmic and melodic pattern that is repeated throughout the entire song; this leads to a steady beat in drum and bass, which is well suited to demonstrating their generic mutual interaction. For simplicity, we merge all instruments into two tracks: track A includes all drum and keyboard sounds, and track B includes the bass. The interbeat intervals of tracks A and B read IA,t = Xt + T and IB,t = Yt + T, where T is the average interbeat interval given by the tempo (here, T = 256 ms, which corresponds to 234 eighth-note beats per minute). If the time series Xt (blue curve in Fig. 4, Upper) and Yt (black curve) are long-range cross-correlated, we obtain musical coupling between the drum and bass tracks. We modified a MIDI version of the song Billie Jean following three different procedures (audio samples can be found in SI Text). (i) Musical coupling (also called group-humanizing): Xt and Yt contain LRCCs, generated using a two-component ARFIMA process; (ii) “humanizing” the tracks separately: Xt and Yt each contain 1/f^β noise but are statistically independent; and (iii) randomizing: Xt and Yt consist of Gaussian white noise.
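A sketch of how a deviation series might be applied to one track. It takes the reading of the Fig. 4 caption, in which each deviation shifts a note away from its metronome position and deviations on beats where the instrument is silent are skipped; this interpretation, the rescaling to an SD of 10 ms, and the function names `bpm_from_interval`, `scale_to_sd`, and `humanized_onsets` are assumptions for illustration, not the published group-humanizer.

```python
import numpy as np

def bpm_from_interval(T_ms):
    """Beats per minute for a mean interbeat interval T in ms (256 ms -> 234.375)."""
    return 60000.0 / T_ms

def scale_to_sd(x, sd_ms=10.0):
    """Rescale a deviation series to zero mean and the given SD in ms."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std() * sd_ms

def humanized_onsets(deviations_ms, plays, T_ms=256.0):
    """Shift metronome positions t*T by the deviations; drop beats the instrument skips."""
    deviations_ms = np.asarray(deviations_ms, dtype=float)
    plays = np.asarray(plays, dtype=bool)
    grid = np.arange(len(deviations_ms)) * T_ms     # metronome positions in ms
    return (grid + deviations_ms)[plays]

# Three eighth-note beats; the instrument is silent on the second one.
onsets = humanized_onsets([1.0, -2.0, 3.0], [True, False, True])
```

In practice the deviations for the drum and bass tracks would come from a long-range cross-correlated pair (for example, a two-component ARFIMA process) rather than from the toy values used here.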

Fig. 4.

(Upper) Scale-free coupling of two audio tracks: The deviations from their respective positions (e.g., given by a metronome) are shown in the drum track (upper blue curve, offset by 50 ms for clarity) and bass track (lower black curve) to introduce musical coupling. When an instrument is silent on a beat, the corresponding deviation is skipped. The time series each of length n = 1,120 were generated with a two-component ARFIMA process with Hurst exponents αA = αB = 0.9 and coupling constant W = 0.5. (Lower) Excerpt of the first four bars of the song “Billie Jean” by Michael Jackson. Because there is a drum sound on every beat, all 1,120 deviations are added to the drum track, whereas in the first two bars the bass pauses.

For all three procedures, an SD of 10 ms was chosen for Xt and Yt. Parameters are δ = 0.9 for musical coupling and β = 0.9 for humanizing. The time series of deviations Xt and Yt for musical coupling are shown in Fig. 4. The measured DCCA exponent is δ = 0.93 (in agreement with the analytical value 0.9 within the margin of error), showing LRCC. For separately humanized sequences, however, we expect LRCCs to be absent. Indeed, the detrended covariance of Xt and Yt oscillates around zero (SI Text), i.e., no LRCCs are found (35). Other processes that generate LRCC, including the MICS model (Eq. 1), could also be used to induce musical coupling. Randomizing is implemented in professional audio editing software, whereas a group-humanizer program for coupling MIDI tracks is available from www.nld.ds.mpg.de/∼holgerh/download_software.html.

Our procedure of introducing musical coupling of a pair of sequences (IA,t, IB,t) reduces to humanizing the sequences IA and IB independently in the limit of vanishing coupling (WA = WB = 0). Therefore, humanizing the tracks independently can be seen as a special case of musical coupling. Because listeners prefer humanizing over randomizing (3, 5), we expect that the coupled and humanized samples (nos. 1 and 2) will be preferred over the randomized sample (no. 3). Audio sample 1 imitates the interplay of two musicians, whereas sample 2 only reflects the statistics of the play of single musicians who do not interact with each other.

Conclusion

We showed that rhythmic synchronization between individuals (both musicians and laypeople) exhibits long-term memory of the partner’s interbeat intervals on timescales up to several minutes, and we suggested an explanation for this memory in a physiologically motivated stochastic model for MICS. The MICS model suggests that scale-free coupling of the two subjects emerges mainly through the adaptation to deviations between their beats.

The MICS model may be applicable to other mutually interacting complex systems, such as synchronization phenomena and interdependencies in finance, heartbeats, EEG signals (15, 17), or bird flocks (20), where the origin of scale-free cross-correlations and resulting collective behavior is often elusive. On the methods side, to avoid artifacts in the PSD of slowly varying nonstationary time series, our analysis suggests prior global detrending. We demonstrated that the observed memory phenomenon in rhythmic synchronization can be imitated by fractal coupling of audio tracks and thus applied in electronic music. Though this study complements our understanding of timing and synchronization at the neural networks level, we hope that this work further stimulates the interdisciplinary study of neuronal correlates of timing and synchronization at the interbrain level, e.g., based on combined audio, EEG, or fMRI measurements.

Ethics Statement.

This study was reviewed by the Harvard University Committee on the Use of Human Subjects in Research, which determined that it meets the criteria for exemption to Institutional Review Board approval. Informed consent was obtained from the healthy adult subjects.

Materials and Methods

Globally Detrended Power Spectral Density.

To analyze the PSD of slowly varying nonstationary time series, we propose the gPSD method, a modification of the PSD method that involves prior global detrending; it extends previous approaches that used linear global detrending (39) by including higher-order polynomials of degree k. Given a time series Xn of length N, the gPSD method consists of the following steps: (i) Global detrending of Xn with a polynomial of degree k = 1…5. (ii) Dividing Xn into m segments of length N/m. (iii) Calculating the power spectral density pr(f) in each (sliding or shifted) window of size N/m by means of the fast Fourier transform (FFT), including zero padding, where r labels the different windows. (iv) Averaging the power spectra, p(f) = 〈pr(f)〉, and calculating the power spectral exponent of p(f) by means of a least-squares fit. Unless noted otherwise, we used k = 2 and m = 8 and shifted the windows by N/(2m), thus obtaining 2m − 1 power spectra (r = 1 … 2m − 1) for each time series. The fitting range for gPSD is [fmin, fc], where fmin = 1/(2N) is the smallest possible power spectral frequency, and the position of the characteristic frequency fc in the PSD was determined automatically.
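The four gPSD steps can be sketched as follows. Several details are assumptions not fixed by the text: the zero-padding factor (2), the per-window mean removal, the normalization of each spectrum, and the fitting range, which here runs from the lowest resolved window frequency to a fixed fc = 0.05 cycles per sample (0.1 fNyquist) instead of the automatic choice of fc used in the study. The names `gpsd` and `gpsd_exponent` are hypothetical.

```python
import numpy as np

def gpsd(x, k=2, m=8, pad_factor=2):
    """Globally detrended PSD. Returns (f, p) with f in cycles per sample."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    t = np.arange(n)
    x = x - np.polyval(np.polyfit(t, x, k), t)     # (i) global detrending, degree k
    seg = n // m                                    # (ii) window length N/m
    step = max(seg // 2, 1)                         # windows shifted by N/(2m)
    nfft = pad_factor * seg                         # zero padding (assumed factor)
    spectra = []
    for start in range(0, n - seg + 1, step):       # (iii) PSD of each window r
        w = x[start:start + seg]
        w = w - w.mean()
        spectra.append(np.abs(np.fft.rfft(w, nfft)) ** 2 / seg)
    f = np.fft.rfftfreq(nfft)
    return f, np.mean(spectra, axis=0)              # (iv) p(f) = <p_r(f)>

def gpsd_exponent(x, f_c=0.05, **kwargs):
    """Least-squares fit of p(f) ~ 1/f**beta between 1/(window length) and f_c."""
    f, p = gpsd(x, **kwargs)
    seg = len(x) // kwargs.get("m", 8)
    mask = (f >= 1.0 / seg) & (f <= f_c)
    slope, _ = np.polyfit(np.log(f[mask]), np.log(p[mask]), 1)
    return -slope
```

With m = 8 and windows shifted by half their length, the loop produces 2m − 1 = 15 spectra, as stated above. A quadratic trend added to white noise inflates the exponent when only the mean is removed (k = 0) but leaves β ≈ 0 with k = 2, which illustrates the spurious LRC that global detrending is meant to suppress.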

Detrended Fluctuation Analysis.

A well-known method tailored for studying slowly varying nonstationary time series is DFA (for details, see refs. 36 and 37). The DFA fitting range used here is [smin, N/4], where smin was determined automatically by minimizing the error of a least-squares fit (quantified by Pearson’s correlation coefficient). However, in this work we are interested not only in the DFA exponent itself but also in the shape of the PSD, which exhibits two distinct regions separated by a vertex at the characteristic frequency fc. DFA shows a much smoother transition between the two regions; therefore, gPSD is not only a valuable independent measure of long-range correlations but also essential for characterizing the scaling behavior in both regions.

Detrended Cross-Correlation Analysis.

DCCA was developed to analyze the cross-correlations between two nonstationary time series (17). As suggested in ref. 35, we added global detrending with a polynomial of degree k as an initial step before DCCA. Global detrending proved crucial for calculating the DCCA exponent of the nonstationary time series in our data. Without global detrending, much larger DCCA exponents are obtained, i.e., spurious LRCCs are detected that reflect global trends. Given two time series Xn, , where n = 1 … N. The DCCA method including prior global detrending consists of the following steps: (i) Global detrending: fitting a polynomial of degree k to Xn and a polynomial to , where typically k = 1 … 5. We used k = 3 and carefully checked that the obtained DCCA scaling exponents did not change significantly with k. (ii) Integrating the time series and . (iii) Dividing the series into windows of size s. Least-squares fit and for both time series in each window. (iv) Calculating the detrended covariance

| [3] |

where Ns is the number of windows of size s. For fractal scaling, FDCCA(s) ∝ sδ with 0.5 < δ < 1.5. Absence of LRCC is indicated by fluctuations of FDCCA(s) around zero. If, however, the detrended covariance FDCCA(s) changes signs and fluctuates around zero as a function of the timescale s, LRCCs are absent. When setting and without global detrending (step 1), DFA is obtained. In this respect, DFA can be seen as a detrended autocorrelation analysis.
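Steps (i)–(iv) can be sketched as follows for two series x and y. Assumptions of this illustration: non-overlapping windows, linear local fits in step (iii), and hypothetical function names. `dcca_covariance` returns the signed detrended covariance for each window size, and δ is estimated from FDCCA(s) ∝ s^δ by taking FDCCA as the square root of that covariance, the usual DCCA convention; this is only meaningful while the covariance stays positive.

```python
import numpy as np


def global_detrend(x, k):
    """Step (i): remove a least-squares polynomial of degree k from the whole series."""
    n = np.arange(len(x))
    return x - np.polyval(np.polyfit(n, x, k), n)


def dcca_covariance(x, y, scales, k=3):
    """Signed detrended covariance for each window size s, after global detrending.

    Sketch assumptions: non-overlapping windows and linear local fits.
    Without LRCC the values may change sign and fluctuate around zero.
    """
    R = np.cumsum(global_detrend(np.asarray(x, dtype=float), k))    # step (ii)
    Rp = np.cumsum(global_detrend(np.asarray(y, dtype=float), k))
    F2 = []
    for s in scales:
        t = np.arange(s)
        covs = []
        for v in range(len(R) // s):                                # step (iii)
            a, b = R[v * s:(v + 1) * s], Rp[v * s:(v + 1) * s]
            ra = a - np.polyval(np.polyfit(t, a, 1), t)
            rb = b - np.polyval(np.polyfit(t, b, 1), t)
            covs.append(np.mean(ra * rb))                           # one window
        F2.append(np.mean(covs))                                    # step (iv)
    return np.array(F2)


def dcca_exponent(scales, F2):
    """delta from sqrt(F2) ~ s**delta, i.e. half the log-log slope of F2."""
    F2 = np.asarray(F2)
    if np.any(F2 <= 0):
        raise ValueError("detrended covariance changes sign: no LRCC detected")
    slope, _ = np.polyfit(np.log10(scales), np.log10(F2), 1)
    return slope / 2
```

For two white-noise series that share a common component, the covariance should stay positive at all scales and δ should come out near 0.5; for independent series it is expected to fluctuate around zero.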

Two-Component ARFIMA.

A two-component ARFIMA process that generates two time series xt and yt exhibiting LRCC has been proposed (15, 17). The process is defined by

$$
\begin{aligned}
x_t &= W\sum_{n=1}^{\infty} w_n(d_A)\,x_{t-n} + (1-W)\sum_{n=1}^{\infty} w_n(d_B)\,y_{t-n} + \xi_{t,A},\\
y_t &= (1-W)\sum_{n=1}^{\infty} w_n(d_A)\,x_{t-n} + W\sum_{n=1}^{\infty} w_n(d_B)\,y_{t-n} + \xi_{t,B},
\end{aligned}
\qquad [4]
$$

with dA,B = αA,B − 0.5, Hurst exponents 0.5 < αA,B < 1, weights wn(d) = dΓ(n − d)/(Γ(1 − d)Γ(n + 1)), where Γ is the gamma function, and Gaussian white noise ξt,A and ξt,B. The coupling constant W ranges from 0.5 (maximum coupling between xt and yt) to 1 (no coupling). It has been shown analytically that the cross-correlation exponent is given by δARFIMA = (αA + αB)/2 (dashed line in Fig. 2C). An example of two coupled time series generated with a two-component ARFIMA process is shown in Fig. 4.
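A two-component ARFIMA process can be simulated by truncating the infinite sums. The sketch below assumes the standard coupled form xt = W·Σ wn(dA) xt−n + (1 − W)·Σ wn(dB) yt−n + ξt,A (and symmetrically for yt), dA,B = αA,B − 0.5, a cutoff after `n_lags` terms, a discarded burn-in period, and unit-variance Gaussian noise; all parameter names are hypothetical. The weights use the recursion w1 = d, wn+1 = wn(n − d)/(n + 1), which follows from the gamma-function definition and avoids overflowing Γ for large n.

```python
import numpy as np


def arfima_weights(d, n_lags):
    """w_n(d) = d*Gamma(n - d) / (Gamma(1 - d) * Gamma(n + 1)) for n = 1..n_lags."""
    w = np.empty(n_lags)
    w[0] = d                                  # w_1 = d
    for n in range(1, n_lags):
        w[n] = w[n - 1] * (n - d) / (n + 1)   # w_{n+1} = w_n (n - d) / (n + 1)
    return w


def two_component_arfima(alpha_a, alpha_b, W, length, n_lags=1000, burn_in=1000, seed=0):
    """Coupled series x_t, y_t with truncated ARFIMA sums (sketch).

    W = 1 removes the coupling terms; W = 0.5 gives maximum coupling.
    """
    rng = np.random.default_rng(seed)
    wa = arfima_weights(alpha_a - 0.5, n_lags)   # assumed d_A = alpha_A - 0.5
    wb = arfima_weights(alpha_b - 0.5, n_lags)   # assumed d_B = alpha_B - 0.5
    total = length + burn_in
    x, y = np.zeros(total), np.zeros(total)
    xi_a, xi_b = rng.standard_normal(total), rng.standard_normal(total)
    for t in range(total):
        m = min(t, n_lags)
        ax = wa[:m] @ x[t - m:t][::-1]   # sum_n w_n(d_A) x_{t-n}
        by = wb[:m] @ y[t - m:t][::-1]   # sum_n w_n(d_B) y_{t-n}
        x[t] = W * ax + (1 - W) * by + xi_a[t]
        y[t] = (1 - W) * ax + W * by + xi_b[t]
    return x[burn_in:], y[burn_in:]
```

Setting W = 1 yields two independent ARFIMA series, whereas W = 0.5 couples them most strongly, so the two outputs become visibly correlated.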

Acknowledgments

I thank Eric J. Heller, Thomas Blasi, Caroline Scott, Kathryn V. Papp, and James Holden for helpful discussions, and the Harvard University Studio for Electroacoustic Composition (especially Hans Tutschku and Seth Torres) for providing resources. This work was supported by German Research Foundation Grant HE 6312/1-2.

Footnotes

Conflict of interest statement: A patent has been filed on the reported approach to introduce musical coupling.

This article is a PNAS Direct Submission.

See Commentary on page 12960.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1324142111/-/DCSupplemental.

References

- 1. Sacks O. Musicophilia: Tales of Music and the Brain. New York: Vintage Books; 2008.

- 2. Sacks O. The power of music. Brain. 2006;129(Pt 10):2528–2532. doi: 10.1093/brain/awl234.

- 3. Hennig H, et al. The nature and perception of fluctuations in human musical rhythms. PLoS ONE. 2011;6(10):e26457. doi: 10.1371/journal.pone.0026457.

- 4. Repp BH, Su YH. Sensorimotor synchronization: A review of recent research (2006–2012). Psychon Bull Rev. 2013;20(3):403–452. doi: 10.3758/s13423-012-0371-2.

- 5. Hennig H, Fleischmann R, Geisel T. Musical rhythms: The science of being slightly off. Phys Today. 2012;65:64–65.

- 6. Hennig H, Fleischmann R, Geisel T. Immer haarscharf daneben [Just a hair off]. Spektrum Wiss. 2012;9:16–20. German.

- 7. Gilden DL, Thornton T, Mallon MW. 1/f noise in human cognition. Science. 1995;267(5205):1837–1839. doi: 10.1126/science.7892611.

- 8. Roberts S, Eykholt R, Thaut MH. Analysis of correlations and search for evidence of deterministic chaos in rhythmic motor control by the human brain. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics. 2000;62(2 Pt B):2597–2607. doi: 10.1103/physreve.62.2597.

- 9. Rankin SK, Large EW, Fink PW. Fractal tempo fluctuation and pulse prediction. Music Percept. 2009;26:401–413. doi: 10.1525/mp.2009.26.5.401.

- 10. Voss R, Clarke J. 1/f noise in music and speech. Nature. 1975;258:317–318.

- 11. Voss R, Clarke J. 1/f noise in music: Music from 1/f noise. J Acoust Soc Am. 1978;63:258–263.

- 12. Levitin DJ, Chordia P, Menon V. Musical rhythm spectra from Bach to Joplin obey a 1/f power law. Proc Natl Acad Sci USA. 2012;109(10):3716–3720. doi: 10.1073/pnas.1113828109.

- 13. Néda Z, Ravasz E, Brechet Y, Vicsek T, Barabási AL. The sound of many hands clapping. Nature. 2000;403(6772):849–850. doi: 10.1038/35002660.

- 14. Podobnik B, Horvatic D, Petersen AM, Stanley HE. Cross-correlations between volume change and price change. Proc Natl Acad Sci USA. 2009;106(52):22079–22084. doi: 10.1073/pnas.0911983106.

- 15. Podobnik B, Wang D, Horvatić D, Grosse I, Stanley HE. Time-lag cross-correlations in collective phenomena. Europhys Lett. 2010;90:68001.

- 16. Preis T, Schneider JJ, Stanley HE. Switching processes in financial markets. Proc Natl Acad Sci USA. 2011;108(19):7674–7678. doi: 10.1073/pnas.1019484108.

- 17. Podobnik B, Stanley HE. Detrended cross-correlation analysis: A new method for analyzing two nonstationary time series. Phys Rev Lett. 2008;100(8):084102. doi: 10.1103/PhysRevLett.100.084102.

- 18. Karlis AK, Diakonos FK, Petri C, Schmelcher P. Criticality and strong intermittency in the Lorentz channel. Phys Rev Lett. 2012;109(11):110601. doi: 10.1103/PhysRevLett.109.110601.

- 19. Herbert-Read JE, et al. Inferring the rules of interaction of shoaling fish. Proc Natl Acad Sci USA. 2011;108(46):18726–18731. doi: 10.1073/pnas.1109355108.

- 20. Cavagna A, et al. Scale-free correlations in starling flocks. Proc Natl Acad Sci USA. 2010;107(26):11865–11870. doi: 10.1073/pnas.1005766107.

- 21. Bialek W, et al. Statistical mechanics for natural flocks of birds. Proc Natl Acad Sci USA. 2012;109(13):4786–4791. doi: 10.1073/pnas.1118633109.

- 22. Gallup AC, et al. Visual attention and the acquisition of information in human crowds. Proc Natl Acad Sci USA. 2012;109(19):7245–7250. doi: 10.1073/pnas.1116141109.

- 23. Moussaïd M, Helbing D, Theraulaz G. How simple rules determine pedestrian behavior and crowd disasters. Proc Natl Acad Sci USA. 2011;108(17):6884–6888. doi: 10.1073/pnas.1016507108.

- 24. Zhao L, et al. Herd behavior in a complex adaptive system. Proc Natl Acad Sci USA. 2011;108(37):15058–15063. doi: 10.1073/pnas.1105239108.

- 25. Kawasaki M, Yamada Y, Ushiku Y, Miyauchi E, Yamaguchi Y. Inter-brain synchronization during coordination of speech rhythm in human-to-human social interaction. Sci Rep. 2013;3:1692. doi: 10.1038/srep01692.

- 26. Dumas G, Nadel J, Soussignan R, Martinerie J, Garnero L. Inter-brain synchronization during social interaction. PLoS ONE. 2010;5(8):e12166. doi: 10.1371/journal.pone.0012166.

- 27. Lindenberger U, Li SC, Gruber W, Müller V. Brains swinging in concert: Cortical phase synchronization while playing guitar. BMC Neurosci. 2009;10:22. doi: 10.1186/1471-2202-10-22.

- 28. Sänger J, Müller V, Lindenberger U. Intra- and interbrain synchronization and network properties when playing guitar in duets. Front Hum Neurosci. 2012;6:312. doi: 10.3389/fnhum.2012.00312.

- 29. Dolan D, Sloboda J, Jensen HJ, Crüts B, Feygelson E. The improvisatory approach to classical music performance: An empirical investigation into its characteristics and impact. Music Performance Research. 2013;6:1–38.

- 30. Buhusi CV, Meck WH. What makes us tick? Functional and neural mechanisms of interval timing. Nat Rev Neurosci. 2005;6(10):755–765. doi: 10.1038/nrn1764.

- 31. Buonomano DV, Karmarkar UR. How do we tell time? Neuroscientist. 2002;8(1):42–51. doi: 10.1177/107385840200800109.

- 32. Ruiz MH, Strübing F, Jabusch HC, Altenmüller E. EEG oscillatory patterns are associated with error prediction during music performance and are altered in musician’s dystonia. Neuroimage. 2011;55(4):1791–1803. doi: 10.1016/j.neuroimage.2010.12.050.

- 33. Walker FOF. Huntington’s disease. Lancet. 2007;369(9557):218–228. doi: 10.1016/S0140-6736(07)60111-1.

- 34. Hui E, Chui BT, Woo J. Effects of dance on physical and psychological well-being in older persons. Arch Gerontol Geriatr. 2009;49(1):e45–e50. doi: 10.1016/j.archger.2008.08.006.

- 35. Podobnik B, et al. Quantifying cross-correlations using local and global detrending approaches. Eur Phys J B. 2009;71:243–250.

- 36. Peng CK, Havlin S, Stanley HE, Goldberger AL. Quantification of scaling exponents and crossover phenomena in nonstationary heartbeat time series. Chaos. 1995;5(1):82–87. doi: 10.1063/1.166141.

- 37. Kantelhardt J, Koscielny-Bunde E, Rego H, Havlin S, Bunde A. Detecting long-range correlations with detrended fluctuation analysis. Physica A. 2001;295:441–454.

- 38. Pilgram B, Kaplan D. A comparison of estimators for 1/f noise. Physica D. 1998;114:108.

- 39. Torre K, Wagenmakers EJ. Theories and models for 1/fβ noise in human movement science. Hum Mov Sci. 2009;28(3):297–318. doi: 10.1016/j.humov.2009.01.001.
