Abstract

Importance

Public reporting of quality is considered a key strategy for stimulating improvement efforts at US hospitals; however, little is known about the attitudes of hospital leaders towards existing quality measures.

Objective

To describe US hospital leaders’ attitudes towards hospital quality measures found on the Centers for Medicare and Medicaid Services’ Hospital Compare website, assess use of these measures for quality improvement activities, and examine the association between leaders’ attitudes and hospital quality performance.

Design, Setting, and Participants

We mailed a 21-item questionnaire to senior hospital leaders from a stratified random sample of 630 US hospitals, including equal numbers with better-than-, as-, and worse-than-expected performance on mortality and readmission measures.

Main Outcomes and Measures

We assessed levels of agreement with statements concerning existing quality measures, examined use of measures for improvement activities, and analyzed the association between leaders’ attitudes and hospital performance.

Results

Of the 630 hospitals surveyed, 380 (60%) responded. There were high levels of agreement with statements about whether public reporting stimulates quality improvement (range: volume measures=53%, process measures=89%), the ability of hospitals to influence measure performance (range: volume measures=56%, process measures=96%), and effects of measures on hospital reputation (range: volume measures=54%, patient experience measures=90%). Approximately half of hospitals were concerned that focus on publicly reported quality measures would lead to neglect of other clinically important matters (range: mortality measures=46%, process measures=59%) and that risk-adjustment was inadequate to account for differences between hospitals (range: volume measures=45%, process measures=57%). More than 85% of hospitals reported incorporating performance on publicly reported quality measures into annual goals, and roughly 90% regularly reviewed performance with senior clinical and administrative leaders and boards. When compared to hospitals identified as poor performers, those identified as having superior performance had somewhat more favorable attitudes towards mortality and readmission measures and were more likely to link performance to the variable compensation plans of hospital leaders and physicians.

Conclusions and Relevance

While hospital leaders expressed important concerns about the methods and unintended consequences of public reporting, they indicated that the measures reported on the Hospital Compare website exert a strong influence over local planning and improvement efforts.

Over the past decade, one of the Centers for Medicare and Medicaid Services’ (CMS) principal strategies for improving the outcomes of hospitalized patients has been to make information about health care quality more transparent through public reporting programs.1 Performance measures currently published on CMS’s Hospital Compare website include those focused on processes of care (e.g., percentage of patients hospitalized for acute myocardial infarction treated with beta blockers); care outcomes, such as condition-specific mortality and readmission rates; patients’ experience and satisfaction with care; and measures of hospitalization costs and case volumes.2 Since 2003, CMS has steadily expanded both the number of conditions and measures included in public reporting efforts, and many of these now serve as the basis for the value-based purchasing program legislated in the Affordable Care Act.3,4

In addition to helping consumers make more informed choices about where to obtain care, one of the primary goals of public reporting is to stimulate improvement efforts by providers.5-7 Accordingly, the extent to which hospitals view these data to be valid and meaningful may influence the effectiveness of this strategy. We therefore sought to describe the attitudes of senior hospital leaders towards the measures of hospital quality reported on CMS’s Hospital Compare website, and to assess how these measures are being used for performance improvement. Because we hypothesized that more favorable attitudes towards publicly reported measures might reflect greater institutional commitment towards improvement, we also examined the association between the views of hospital senior leaders and hospital quality performance rankings.

Methods

Sample identification

Using information from the Hospital Compare website, we categorized hospitals into one of 3 groups based on their 30-day risk-standardized mortality and readmission rates for pneumonia, heart failure, and acute myocardial infarction. CMS uses hierarchical modeling to calculate rates for each hospital, based on the ratio of predicted to expected outcomes, multiplied by the national observed outcome rate. Conceptually, this allows for a comparison of a particular hospital’s performance given its case mix to an average hospital’s performance with the same case mix. Hospital performance is then compared relative to other institutions across the nation. For the purposes of the study, hospitals were considered to be better-than-expected if they were identified by CMS as “better than the US national rate” on at least one outcome measure and had no measures in which they were “worse than the US national rate”. Correspondingly, hospitals were categorized as worse-than-expected if they were identified as having worse performance than the US national rate on at least one measure and had no measures in which they were better than the US national rate. Hospitals that were neither better than nor worse than US national performance on any outcome measure were considered to be performing as-expected. We excluded a small group of hospitals with mixed performance (i.e., those better than the national rate for some measures and worse for others). We matched sampled hospitals to the 2009 American Hospital Association Survey data to obtain hospital characteristics, including size, teaching status, population served, and region.
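The categorization rule described above can be expressed compactly. The following is a minimal sketch, not the code used in the study: the function names and the short labels `"better"`, `"same"`, and `"worse"` are hypothetical stand-ins for the CMS comparison categories, and the rate function simply restates the ratio described in the text rather than the hierarchical model that produces the predicted and expected values.

```python
from typing import Iterable


def risk_standardized_rate(predicted: float, expected: float, national_rate: float) -> float:
    """Ratio of predicted to expected outcomes, scaled by the national observed rate."""
    return predicted / expected * national_rate


def classify_hospital(flags: Iterable[str]) -> str:
    """Assign a performance group from per-measure CMS comparisons.

    `flags` holds one hypothetical label per outcome measure:
    "better", "same", or "worse" relative to the US national rate.
    Hospitals with missing measures are assumed to be excluded beforehand.
    """
    flags = list(flags)
    has_better = "better" in flags
    has_worse = "worse" in flags
    if has_better and has_worse:
        return "mixed"  # excluded from the study sample
    if has_better:
        return "better-than-expected"
    if has_worse:
        return "worse-than-expected"
    return "as-expected"


# Illustrative input: six outcome measures (mortality and readmission for 3 conditions)
print(classify_hospital(["better", "same", "same", "same", "same", "same"]))
```

A hospital flagged as better on one measure and worse on another falls into the `"mixed"` group, which corresponds to the small set of hospitals excluded from sampling.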

Of 4459 hospitals in the Hospital Compare database, we excluded 624 (14%) because of missing data for one or both performance measures (resulting from low case volumes that did not meet the CMS threshold for reporting), and 136 (3%) that had mixed performance. Of the remaining 3699 hospitals, 471 (13%) were better-than-expected, 2644 (71%) were as-expected, and 584 (16%) were worse-than-expected. We randomly selected 210 hospitals from each of the performance strata to reach 80% power to detect a 20% difference in the proportion responding “Strongly agree/agree” among top and bottom performers with 95% confidence, allowing for multiple comparisons and a projected 60% response rate.
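The sampling frame arithmetic can be checked directly from the counts reported above. The sketch below also derives a base sampling weight per stratum (stratum size divided by the 210 hospitals sampled); this weight definition is an assumption for illustration, since the text does not state exactly how the weights were built or whether they were adjusted for non-response.

```python
HOSPITALS_IN_DATABASE = 4459
EXCLUDED_MISSING = 624   # missing data for one or both performance measures
EXCLUDED_MIXED = 136     # mixed performance
STRATA = {"better": 471, "as": 2644, "worse": 584}
SAMPLED_PER_STRATUM = 210

# Hospitals remaining after both exclusions
eligible = HOSPITALS_IN_DATABASE - EXCLUDED_MISSING - EXCLUDED_MIXED
assert eligible == sum(STRATA.values())

# Share of eligible hospitals in each stratum, rounded to whole percentages
shares = {name: round(100 * n / eligible) for name, n in STRATA.items()}

# Assumed base weight: number of eligible hospitals represented by each sampled hospital
base_weights = {name: n / SAMPLED_PER_STRATUM for name, n in STRATA.items()}

print(eligible, shares)
for name, weight in base_weights.items():
    print(f"{name}: each sampled hospital represents about {weight:.1f} hospitals")
```

Because equal numbers were drawn from strata of very different sizes, a sampled as-expected hospital stands in for far more hospitals than a sampled better- or worse-than-expected hospital, which is why the overall estimates need to be weighted.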

Survey administration

We identified the names, addresses, and telephone numbers of the chief executive officer and the senior executive responsible for quality at the hospital (e.g., chief quality officer, director of quality, vice president of medical affairs) through telephone inquiries and web searches. Using best practices in survey administration,8 two weeks prior to mailing the survey, we sent a postcard alerting potential participants to the goals and timing of the study. Following an initial mailing of the survey, we sent up to 3 reminders to hospitals that did not respond, and made up to 3 attempts to contact remaining non-respondents by telephone. A 2-dollar bill was included in the initial mailing as an incentive to participate. Survey administration was conducted between January and September 2012.

Survey content

The survey consisted of 10 Likert-style questions assessing level of agreement on a 4 item scale (strongly disagree to strongly agree), with statements about the role, strengths, and limitations of 6 types of performance measures reported on the Hospital Compare website: processes of care, mortality, readmission, patient experience, cost, and volume. Questions addressed the following concepts: whether public reporting of the measures stimulates quality improvement, whether the hospital is able to influence performance on the measures, whether the hospital’s reputation is influenced by performance on the measures, whether the measures accurately reflect quality of care for the conditions being measured, and whether performance on the measures can be used to draw inferences about quality of care more generally at the hospital. Additionally, we assessed levels of agreement with a number of common concerns raised about quality measures, including whether measured differences are clinically meaningful, whether the risk-adjustment methods are adequate to account for differences in patient case-mix, whether efforts to maximize performance on the measures can result in neglect of other more important matters (i.e. “teaching to the test”), whether hospitals may attempt to maximize their performance primarily by making changes to documentation and coding rather than improving clinical care (i.e. “gaming”), and whether random variation has a substantial likelihood of affecting the hospital’s ranking (Appendix 1).9-13

Finally, we included 6 questions focused on how quality measures were used at the respondent’s institution, including whether performance levels were incorporated into annual hospital goals and whether performance was regularly reviewed with a hospital’s Board of Trustees, senior administrative and clinical leaders, and front-line clinical staff. We also asked whether quality performance was used in the variable compensation or bonus program for senior hospital leaders and for hospital-based physicians.

Analysis

We first compared the characteristics of respondent and non-respondent hospitals to ascertain potential non-response bias via chi-square. In those instances in which a hospital returned more than 1 questionnaire, we selected the first response received. Overall summary statistics applicable to the study population (3699 hospitals) were constructed taking strata sampling weights into account using PROC SURVEYFREQ in SAS. We constructed summary statistics for each question using weighted frequencies and proportions.
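To illustrate why stratum weights matter for the overall estimates, the sketch below combines stratum-level agreement into a population-level proportion. It is a simplified stand-in for what a survey procedure such as PROC SURVEYFREQ computes, not a reproduction of the authors' analysis. The stratum sizes come from the sample identification section, and the agreement values are the rounded mortality percentages for question 1 reported in Table 3; the function name is hypothetical.

```python
STRATUM_SIZES = {"better": 471, "as": 2644, "worse": 584}

# Rounded stratum-level agreement (Table 3, question 1, mortality measures)
AGREEMENT = {"better": 0.77, "as": 0.73, "worse": 0.70}


def population_proportion(stratum_sizes: dict, stratum_proportions: dict) -> float:
    """Weight each stratum's proportion by its share of the study population."""
    total = sum(stratum_sizes.values())
    return sum(
        stratum_sizes[name] * stratum_proportions[name] for name in stratum_sizes
    ) / total


weighted = population_proportion(STRATUM_SIZES, AGREEMENT)
unweighted = sum(AGREEMENT.values()) / len(AGREEMENT)

print(f"weighted estimate:   {weighted:.3f}")
print(f"unweighted average:  {unweighted:.3f}")
```

The weighted estimate sits close to the as-expected stratum's value because that stratum accounts for roughly 71% of the study population, whereas a simple average would treat the three equally sized samples as equally representative.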

To investigate the potential association between hospital performance (as measured by risk-standardized mortality and readmission rates) and the views of hospital leaders about those measures, we modeled responses grouped as “strongly agree/agree” versus “disagree/strongly disagree” across the 3 performance groups via logistic regression (PROC SURVEYLOGISTIC). We carried out a similar analysis for questions focused on the use of the performance measures at the respondent’s institution. These analyses were adjusted for hospital characteristics, including number of beds, teaching status, urban or rural location, and geographic region, as well as respondent job title. Bonferroni adjustment was made for all pairwise tests among the performance strata. P-values <0.05 were considered significant.

All analyses were performed using SAS version 9.3 (SAS Institute, Inc., Cary, NC). The study protocol was approved by the Institutional Review Board at Baystate Medical Center.
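With 3 performance strata there are 3 pairwise comparisons, so a Bonferroni adjustment multiplies each raw pairwise p-value by 3 (capped at 1) before comparing it with 0.05. The following sketch shows that arithmetic only. The raw p-values are hypothetical and are not results from this study, and the function name is an assumption; statistical software may implement the correction with additional details.

```python
from itertools import combinations

STRATA = ("better", "as", "worse")


def bonferroni_adjust(p_values: dict) -> dict:
    """Multiply each p-value by the number of comparisons, capping at 1.0."""
    m = len(p_values)
    return {pair: min(1.0, p * m) for pair, p in p_values.items()}


# All pairwise comparisons among the three performance strata
pairs = list(combinations(STRATA, 2))

# Hypothetical raw p-values, for illustration only
raw = dict(zip(pairs, (0.004, 0.030, 0.600)))

adjusted = bonferroni_adjust(raw)
significant = [pair for pair, p in adjusted.items() if p < 0.05]

for pair, p in adjusted.items():
    print(pair, round(p, 3))
```

In this illustration a raw p-value of 0.030 would no longer meet the 0.05 threshold after adjustment, which is the conservative behavior the correction is intended to provide.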

Results

Of the 630 hospitals surveyed, a total of 380 (60%) responded. More than half of the responding hospitals had fewer than 200 beds, 36% were teaching institutions, and approximately two-thirds were located in urban areas (Table 1). There was no difference in response rates between hospitals with better-than-, as-, or worse-than-expected performance. Additionally, respondent hospitals were similar to non-respondent hospitals with regard to size, teaching status, geographic region, urban or rural setting, and quality performance. The individual completing the questionnaire was most often the chief medical officer or equivalent (e.g., vice president of medical affairs, chief of staff) (40%), chief executive officer (30%), or the chief quality officer or equivalent (e.g., vice president of quality, director of quality) (20%).

Table 1.

Characteristics of respondent and non-respondent hospitals

| Characteristic | Total, n | Total, % | Respondent, n | Respondent, % | Non-respondent, n | Non-respondent, % | p |
|---|---|---|---|---|---|---|---|
| Teaching Status* | | | | | | | 0.43 |
| No | 408 | 65.5 | 237 | 64.2 | 171 | 67.3 | |
| Yes | 215 | 34.5 | 132 | 35.8 | 83 | 32.7 | |
| Number of Beds* | | | | | | | 0.98 |
| <200 | 332 | 53.5 | 197 | 53.7 | 135 | 53.2 | |
| 200-400 | 179 | 28.8 | 106 | 28.9 | 73 | 28.7 | |
| >400 | 110 | 17.7 | 64 | 17.4 | 46 | 18.1 | |
| Population Served* | | | | | | | 0.65 |
| Rural | 202 | 32.4 | 117 | 31.7 | 85 | 33.5 | |
| Urban | 421 | 67.6 | 252 | 68.3 | 169 | 66.5 | |
| Geographic Region* | | | | | | | 0.05 |
| South | 264 | 42.4 | 152 | 41.2 | 112 | 44.3 | |
| Midwest | 143 | 23.0 | 78 | 21.1 | 65 | 25.7 | |
| Northeast | 116 | 18.6 | 83 | 22.5 | 33 | 13.0 | |
| West | 97 | 15.6 | 54 | 14.6 | 42 | 16.6 | |
| Other | 3 | 0.4 | 2 | 0.6 | 1 | 0.4 | |
| Sampling Strata** | | | | | | | 0.33 |
| Better | 210 | 33.3 | 127 | 33.4 | 83 | 33.2 | |
| At | 210 | 33.3 | 119 | 31.3 | 91 | 36.4 | |
| Worse | 210 | 33.3 | 134 | 35.3 | 76 | 30.4 | |

*3 hospitals with IDs removed are included with non-respondents; we were unable to match all hospitals to AHA data, resulting in some missing values

**Sampling strata labels correspond to better-than-, as-, and worse-than-expected quality performance

Attitudes towards existing quality measures

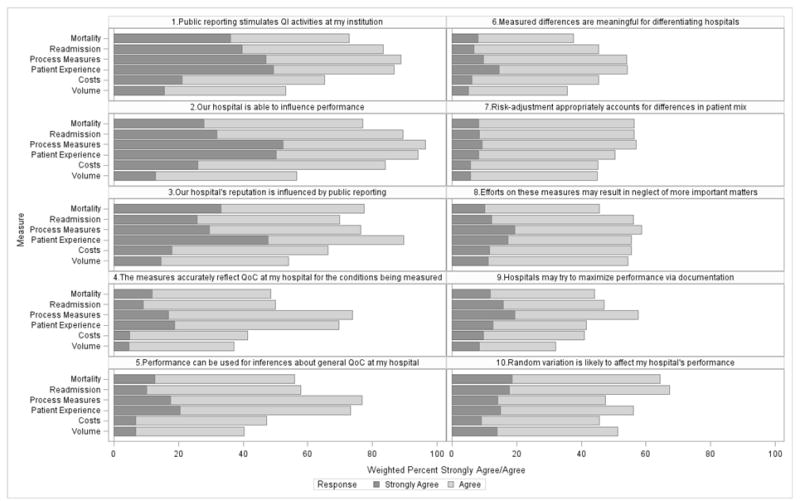

Responses to the attitude questions suggest that public reporting has captured the attention of hospital leaders. More than 70% of hospitals agreed with the statement, “public reporting stimulates quality improvement activity at my institution” for mortality, readmission, process, and patient experience measures; agreement for measures of cost and volume both exceeded 50% (Figure 1, eTable 1). A similar pattern was observed for the statement, “our hospital is able to influence performance on this measure”: agreement for processes of care and patient experience measures exceeded 90%. Ninety percent of hospitals agreed that the hospital’s reputation was influenced by patient experience measures; agreement was approximately 70% for mortality, readmission, and process measures, and greater than 50% for cost and volume measures.

Figure 1.

Agreement of hospital senior leaders with statements concerning publicly reported quality measures

Respondents expressed concerns about the methodology and implementation of quality measures (Figure 1). While approximately 70% of respondents agreed with the statement that process and patient experience measures provided an accurate reflection of quality of care for the conditions measured, this fell to approximately 50% for measures of mortality and readmission, and lower still for measures of cost and volume. A similar pattern was observed when we asked whether measured performance could be used to draw inferences about quality of care in general, with higher agreement for measures of process and patient experience. Additionally, fewer than 50% of respondents agreed with the statement that measured differences between hospitals were clinically meaningful for mortality, readmission, cost, and volume measures. Approximately half of hospital leaders surveyed agreed that risk-adjustment methods were adequate to account for differences in case mix. Between 46% and 59% of hospital leaders expressed concern that focus on the publicly reported quality measures might lead to neglect of other more important topics, and there were similar levels of concern (ranging from 32% to 58%) that hospitals might try to game the system by focusing their efforts primarily on changing documentation and coding rather than by making actual improvements in clinical care. Lastly, concern about the potential role of random variation affecting measured performance ranged from 45% for measures of cost to 67% for readmission measures.

Association between respondent role and attitudes

When compared to chief executive officers and chief medical officers, respondents who identified themselves as chief quality officers or vice presidents of quality were less likely to agree that public reporting stimulates quality improvement, and that measured differences are large enough to differentiate between hospitals. Chief quality officers were also the group most concerned about the possibility that public reporting might lead to gaming (Table 2).

Table 2.

Association of response and respondent job

| Question | Respondent Job Title | Mortality: % Strongly Agree/Agreeˆ | Mortality: (SE) | Readmission: % Strongly Agree/Agreeˆ | Readmission: (SE) |
|---|---|---|---|---|---|
| 1. Public reporting of these performance measures stimulates QI activities at my institution | CMO | 70 | (3.1) | 79 | (2.8) |
| | CEO/President | 73 | (3.3) | 85 | (2.6) |
| | CQO/VPQ | 66 | (4.3) | 80 | (3.6) |
| | Other | 90 | (3.2) | 97 | (1.0) |
| | p-value¹ | 0.002 | | <.001 | |
| 2. Our hospital is able to influence performance on these measures | CMO | 74 | (3.0) | 91 | (1.6) |
| | CEO/President | 76 | (3.2) | 90 | (1.6) |
| | CQO/VPQ | 75 | (4.0) | 81 | (1.7) |
| | Other | 92 | (3.1) | 92 | (3.1) |
| | p-value¹ | 0.02 | | 0.03 | |
| 6. Measured differences are large enough to differentiate between hospitals (they are meaningful) | CMO | 38 | (3.1) | 43 | (3.2) |
| | CEO/President | 36 | (3.4) | 52 | (3.7) |
| | CQO/VPQ | 23 | (3.9) | 28 | (4.0) |
| | Other | 63 | (6.2) | 59 | (6.3) |
| | p-value¹ | <.001 | | <.001 | |
| 9. Hospitals may attempt to maximize performance primarily by altering coding documentation practices | CMO | 48 | (2.7) | 55 | (2.7) |
| | CEO/President | 29 | (2.8) | 32 | (2.8) |
| | CQO/VPQ | 70 | (2.8) | 63 | (2.8) |
| | Other | 35 | (3.1) | 41 | (3.1) |
| | p-value¹ | <.001 | | <.001 | |

ˆ weighted % strongly agree/agree (unadjusted)

Association between hospital attitudes and performance

We observed few differences in attitudes towards mortality and readmission measures associated with hospital performance (Table 3). Hospitals categorized as having better-than-expected performance were more likely to agree that differences in mortality rates were large enough to differentiate between hospitals (i.e., that they were meaningful) but had similar views about whether the mortality measure stimulates improvement activity, the hospital’s ability to influence performance, and concerns about gaming. A similar pattern was seen with regard to views about the readmission measures, although hospitals with better-than-expected performance were also somewhat less likely to express concern about gaming.

Table 3.

Association between attitudes towards quality measures and hospital performance ˆ

| Question | Performance Relative to National Average*** | Mortality: % Strongly Agree/Agreeˆ | Mortality: (SE) | Readmission: % Strongly Agree/Agreeˆ | Readmission: (SE) |
|---|---|---|---|---|---|
| 1. Public reporting of these performance measures stimulates QI activities at my institution | Better | 77 | (2.2) | 89 | (1.6) |
| | At | 73 | (2.5) | 83 | (2.1) |
| | Worse | 70 | (2.4) | 81 | (2.1) |
| | p-value¹ | 0.62 | | 0.19 | |
| 2. Our hospital is able to influence performance on these measures | Better | 86 | (1.8) | 89 | (1.6) |
| | At | 76 | (2.4) | 90 | (1.6) |
| | Worse | 75 | (2.3) | 89 | (1.7) |
| | p-value¹ | 0.18 | | 0.85 | |
| 6. Measured differences are large enough to differentiate between hospitals (i.e., they are meaningful) | Better | 53* | (2.6) | 55** | (2.6) |
| | At | 35* | (2.6) | 44 | (2.8) |
| | Worse | 38 | (2.6) | 43 | (2.7) |
| | p-value¹ | 0.02 | | 0.007 | |
| 9. Hospitals may attempt to maximize their performance primarily by altering documentation and coding practices | Better | 45 | (2.7) | 43* | (2.7) |
| | At | 44 | (2.8) | 48* | (2.8) |
| | Worse | 44 | (2.8) | 48 | (2.8) |
| | p-value¹ | 0.05 | | 0.01 | |

ˆ weighted % strongly agree/agree (unadjusted)

¹ p-value from model adjusting for teaching status, urban/rural location, small/medium/large bedsize, and respondent job title

*adjusted levels differ, Bonferroni adjusted pairwise test p<0.05

**adjusted level differs from other two levels, Bonferroni adjusted pairwise test p<0.05

***labels of better, at, and worse respectively correspond to better-than-, as-, and worse-than-expected quality performance

Use of quality measures by hospitals

Eighty-seven percent of hospitals reported incorporating performance on publicly reported measures into their hospital’s annual goals, while 90% reported regularly reviewing the results with the hospital’s Board of Trustees and 94% with senior clinical and administrative leaders (Table 4). Approximately 3 out of 4 hospitals stated that they regularly review results with front-line clinical staff. Half (51%) of hospitals reported that performance on measures was used in the variable compensation programs of senior hospital leaders, while roughly one-third (30%) used these measures in the variable compensation plan for hospital-based physicians.

Table 4.

Use of quality measures by hospitals and association with hospital performance ˆ

| Use of quality measure performance at institution | Overall % (SE) | Hospitals with better-than-expected performance % (SE) | Hospitals with at-expected performance % (SE) | Hospitals with worse-than-expected performance % (SE) | P-value* |
|---|---|---|---|---|---|
| Incorporated in hospital annual goals | 87 (2.3) | 94 (1.2) | 85 (2.0)** | 92 (1.4) | 0.004 |
| Reviewed regularly with board of trustees | 90 (2.0) | 93 (1.3) | 89 (1.7) | 92 (1.4) | 0.83 |
| Reviewed regularly with senior clinical and administrative leaders | 94 (1.7) | 96 (1.0) | 93 (1.4) | 94 (1.3) | 0.12 |
| Reviewed regularly with front-line clinical staff | 78 (2.8) | 81 (2.0) | 77 (2.3) | 78 (2.2) | 0.92 |
| Used in variable compensation program of senior hospital leaders | 51 (3.3) | 66 (2.4) | 48 (2.7) | 52 (2.6) | 0.29 |
| Used in variable compensation program of hospital-based physicians | 30 (1.8) | 45 (2.6)** | 28 (2.4) | 26 (2.3) | 0.002 |

ˆ weighted % yes (unadjusted)

*P-value from model adjusting for teaching status, urban/rural location, region, small/medium/large bedsize, and respondent job title

**Stratum differs from other two strata, p<0.05, using Bonferroni correction for multiple comparisons, after adjusting for hospital characteristics and job title

Association between use of measures and hospital performance

With two exceptions, we observed no differences in the use of quality measures by hospitals across the 3 levels of performance (Table 4). Hospitals with better-than-expected performance and those with worse-than-expected performance were both somewhat more likely to report incorporating performance on publicly reported quality measures into the hospital’s annual goals as compared to hospitals whose performance was at expected (94%, 92%, and 85%, respectively; p<0.01). In addition, hospitals with better-than-expected performance were more likely to incorporate performance on quality measures into the variable compensation plan of hospital-based physicians than hospitals with at- or worse-than-expected performance (45% vs. 28% and 26%, respectively; p<0.01).

Discussion

In this study of senior leaders from a diverse sample of 380 US hospitals, we found high levels of engagement with the quality measures currently made available to the public on CMS’s Hospital Compare website. There was strong belief that measures of care processes, patient experience, mortality, and readmission stimulate quality improvement efforts, a sense of empowerment that hospitals are capable of bettering their performance, and a belief that the public is paying attention. In turn, we found that these measures are now universally reviewed with hospitals’ boards and senior administrative and clinical leaders, and are commonly shared with front-line staff. Nevertheless, there were important concerns about the adequacy of risk adjustment as well as unintended consequences of public reporting, including teaching to the test and gaming. Equally troubling, roughly half of the leaders did not believe that measures accurately portrayed the quality of care for the conditions they addressed, or could be used to draw inferences about quality at the hospital more generally. Hospitals categorized as having better-than-expected performance on mortality and readmission measures were somewhat more likely to believe that the differences observed in mortality and readmission rates across institutions were clinically meaningful.

It has been more than 25 years since hospital risk-adjusted mortality rates calculated by the Health Care Financing Administration were disclosed to the public following a Freedom of Information Act request by journalists.14-16 Shortly thereafter, Berwick and Wald surveyed hospital leaders from a sample of 195 institutions, including those with high, low, and average mortality rates, to assess their attitudes towards the mortality measure, their use of the data, and problems incurred by release to the public.17 They found limited support for transparency about overall hospital mortality rates. Very few believed the data to be valuable to the public, and only 31% felt that they were useful in guiding efforts to study or improve quality. In contrast, roughly 70% of hospitals in the present study agreed that mortality measures are effective at stimulating improvement efforts and that hospitals have the ability to influence performance. Potential explanations for this shift include the possibility that the condition-specific rates used today are felt to be more actionable for hospitals when compared to the global rate calculated in the past, that attitudes about the usefulness of outcome measures like mortality rates have simply evolved over time, or that newly introduced pay-for-performance programs linked to mortality have created powerful new incentives. Although attitudes towards the usefulness of mortality data may have evolved, the concerns we noted about the adequacy of risk adjustment largely echo those reported by Berwick; 55% of respondents in that study judged case-mix adjustment to be poor,17 a figure that was similar to the 44% in 2012 when our study was conducted who felt that the mortality measures do not adequately account for differences in case mix.

Our results should be considered in the context of several other studies that have examined attitudes towards quality measures among hospital staff, administrative leaders, and boards, and how this relates to performance. For example, Hafner conducted structured focus groups with senior hospital leaders and front-line clinical staff at a random sample of 29 US hospitals to assess attitudes about the impact of publicly reporting performance data.18 Among the themes that emerged was the idea that public reporting led to increased leadership involvement in quality improvement and created a greater sense of accountability to both internal and external customers. These findings parallel our own observation about the impact of public reporting on quality improvement activities and planning efforts. They also noted that clinical staff expressed skepticism regarding the methodology with which data are collected, a finding that carried over to the senior leaders that we queried. Jha and Epstein surveyed chairs of boards of non-profit institutions and reported that slightly more than half identified clinical quality as one of the top two priorities for board oversight.19 They also found that two-thirds of boards had quality as an agenda item at every meeting, a figure somewhat lower than reported by respondents in our survey. Hospitals identified as being better performers on the basis of process measures found on the Hospital Compare website were more likely to prioritize quality for board oversight. Finally, Vaughn surveyed senior hospital leaders, mainly chief executive officers and chief quality officers, at a sample of hospitals in 8 states to examine the association between leadership involvement and quality performance.20 They reported that hospitals where the board spent at least 25% of its time on quality issues and received regular quality performance data, and where performance was incorporated into senior leader variable compensation, had higher quality scores.

In the wake of multiple Institute of Medicine reports on the quality and safety of health care, the rise of organizations like the Institute for Healthcare Improvement and the National Patient Safety Foundation, and the growth of national initiatives like the 100,000 Lives and Surviving Sepsis Campaigns, the environment in which quality measurement is being carried out today would be hardly recognizable to the senior hospital leaders surveyed in the late 1980s.21-24 The fields of quality improvement and patient safety now routinely warrant their own vice presidents, positions that were probably unimaginable to hospital leaders then. And there have been a number of advances in the science of quality measurement since the late 1980s, including the emergence of process and patient experience measures as well as improvements in methods for risk adjustment.25 Furthermore, along with many other organizations, CMS now relies on the National Quality Forum to vet proposed quality measures. This process evaluates concerns raised by stakeholders about issues such as clinical meaningfulness and risk adjustment and includes input from professional societies, payers, and hospital organizations. While our study confirms that hospitals are clearly paying attention to public reporting programs, it also documents persistent concerns about the methods used to measure performance and about the unintended consequences of these programs. These concerns notwithstanding, public reporting programs show no sign of going away, the number of measures continues to expand, and most of the recent growth has been in the development of outcome measures. This is notable because there were differences in attitudes towards process and patient experience measures on the one hand, and mortality (and to a lesser extent readmission) measures on the other. Specifically, hospitals were less likely to report that mortality measures stimulated improvement efforts and that the hospital was able to influence mortality performance. While our study was not designed to answer this directly, we suspect that this variation reflects the reality that the path towards ensuring that patients with pneumonia are vaccinated prior to discharge is more straightforward than is increasing short-term survival.

Our findings should be interpreted in light of several limitations. Most importantly, because this was a cross-sectional study, we cannot be sure whether the more sanguine attitudes expressed towards quality measures by the senior leaders at better performing institutions were the cause or instead the result of their performance designation. We suspect that both explanations may be partially true; hospitals that are more invested in quality measurement and improvement work (as reflected in their support for quality measures) are also more apt to be successful at it. At the same time, recognition for superior performance may have positive effects on one’s attitude towards quality measures more generally. In this regard, it was better performing institutions that were least likely to be concerned about the possibility of gaming. Another limitation is that the opinions expressed by respondents to the survey may not represent the views of other clinical or administrative leaders, nor certainly the views of front-line clinical staff. Additionally, we categorized hospitals on the basis of their performance on mortality and readmission measures. Because higher volume institutions are more likely to be identified as outliers, our results may be less generalizable to small hospitals. Furthermore, it is possible that the associations we observed between attitudes towards quality measures and hospital performance might have been different had we used other measures for this purpose. Finally, we achieved a less than ideal response rate of 60%. However, our analysis of non-responders suggests that our respondent sample was not biased, as observable hospital characteristics, including quality performance, were similar among both groups.

In conclusion, while the quality measures reported on CMS’s Hospital Compare website play a major role in hospital planning and improvement efforts, important concerns about the adequacy of risk adjustment, the construct validity of contemporary measures, and the unintended consequences of measurement programs are common.

Acknowledgments

The authors thank Maureen Bisognano, President and CEO of the Institute for Healthcare Improvement (IHI), who co-signed the survey invitation.

Funding/Support: This project was not supported by any external grants or funds. Dr. Ross is supported by the National Institute on Aging (K08 AG032886) and by the American Federation for Aging Research through the Paul B. Beeson Career Development Award Program.

Footnotes

Conflict of Interest Disclosures: Drs. Lindenauer and Ross receive support from the Centers for Medicare and Medicaid Services (CMS) to develop and maintain performance measures that are used for public reporting. Dr. Ross reports that he is a member of a scientific advisory board for FAIR Health, Inc.

