1. Introduction

The earliest experiments on human speech perception were conducted to identify the acoustic correlates of phonemic categories. That focus arose from the aims of the work, which in the mid-twentieth century centered on translating, in one direction or the other, between a string of alphabetic symbols and what was believed to be a string of acoustic elements isomorphically aligned with those symbols. A chief example of that work was a project designed to build a reading machine for the blind, described by A. Liberman in his book, Speech: A Special Code (1996). When that project was initiated in 1944, the goal of identifying invariant acoustic correlates to phonemic categories was considered reasonable and easily within reach. In that work, components of the acoustic signal relevant to phonemic decisions came to be termed cues (Repp, 1982), and the terminology continues to be used today.

Acoustic cues can arise from spectral, temporal, or amplitude structure in the speech signal. Spectral cues can further be described as stable across time (i.e., static) or time varying (i.e., dynamic). An example of a static spectral cue is the broad spectral pattern associated with fricatives (Strevens, 1960). When a fricative is produced, the vocal-tract constriction is held steady for a relatively long time, resulting in spectral structure that is steady for a long time, at least compared to other components of speech signals. However, some spectral patterns arising from briefer articulatory gestures can also create cues that have been termed static, such as the bursts associated with the release of vocal-tract closures (Stevens & Blumstein, 1978). Dynamic spectral cues are the transitions in formant frequencies that arise from movement between consonant constrictions and vowel postures (i.e., formant transitions) (Delattre, Liberman, & Cooper, 1955). The defining characteristic of these cues is that it is precisely the pattern of spectral change that informs the listener.

Temporal cues can involve the duration of single articulatory events or the timing between two articulatory events, such as between the release of a vocal-tract closure and the onset of laryngeal vibration; this is traditionally termed voice onset time (Lisker & Abramson, 1964). Amplitude structure is less likely than spectral or temporal structure to provide cues to phonemic identity. Instead, it more reliably specifies syllable structure, because amplitude in speech signals depends on the degree of vocal-tract opening, which alternates most closely with syllable structure.

In the early work on acoustic cues, stimuli were constructed such that all components were held constant across the set, except for the specific cue being studied. That cue was manipulated across stimuli, forming a continuum of equal-sized steps. Individual tokens from these continua were presented multiple times to listeners for labeling. The point along the continuum where responses changed from primarily one category to primarily the other was known as the phoneme boundary; stimuli on either side of that boundary were consistently and reliably given one label. This pattern of responding was dubbed categorical perception, and was considered a hallmark of human speech perception (Liberman, Harris, Hoffman, & Griffith, 1957). Listeners in those early studies were almost invariably adults with normal hearing (NH) listening to their native language, which suited the goal of identifying acoustic cues defining each phonemic category.
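The notion of a phoneme boundary can be illustrated with a minimal sketch. The response proportions below are invented for illustration, and locating the boundary by linear interpolation to the 50 percent crossover is only one simple choice, not necessarily the procedure used in the early studies; the function name is hypothetical.

```python
def phoneme_boundary(steps, prop_a):
    """Return the continuum value where the proportion of category-A
    responses crosses 0.5, using linear interpolation between the two
    adjacent continuum steps that straddle 0.5."""
    points = list(zip(steps, prop_a))
    for (x0, p0), (x1, p1) in zip(points, points[1:]):
        # A crossover exists when the two proportions lie on opposite
        # sides of 0.5 (or one of them sits exactly on it).
        if (p0 - 0.5) * (p1 - 0.5) <= 0 and p0 != p1:
            return x0 + (0.5 - p0) * (x1 - x0) / (p1 - p0)
    return None  # responses never cross 0.5


# Hypothetical labeling data for a seven-step continuum:
# proportion of category-A responses at each step.
steps = [1, 2, 3, 4, 5, 6, 7]
prop_a = [0.98, 0.96, 0.90, 0.70, 0.20, 0.04, 0.02]

boundary = phoneme_boundary(steps, prop_a)
print(f"Estimated phoneme boundary: step {boundary:.2f}")
```

With these invented data the labels are nearly unanimous at both ends of the continuum and switch abruptly between steps 4 and 5, which is the pattern described above as categorical perception.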

1.1. Trading relations and differences across listeners

It soon became apparent that the paradigm described above was based on a model of speech perception that was overly simplistic. One basis of that conclusion was that more than one cue was found to affect each phonemic decision (e.g., Dorman, Studdert-Kennedy, & Raphael, 1977). Furthermore, there were found to be tradeoffs among cues, such that the setting of one cue affected the labeling of stimuli at each level of the other cue (Best, Morrongiello, & Robson, 1981; Fitch, Halwes, Erickson, & Liberman, 1980; Polka & Strange, 1985). For example, Mann and Repp (1980) showed that the placement of the phoneme boundary along a continuum of fricative noises from /∫/ to /s/ was influenced by whether formant transitions in the voiced signal portion were appropriate for an initial /∫/ or /s/.

Perhaps more surprising than the discovery of cue trading relations was the finding that weights assigned to cues for specific phonemic decisions varied across listener groups. In particular, adult learners of a second language often have difficulty attending to the cues used in phoneme labeling by native speakers, if those cues conflict with ones used in their first language (e.g., Beddor & Strange, 1982; Cho & Ladefoged, 1999; Gottfried & Beddor, 1988) or if the phonemic contrast does not exist in the first language (e.g., Best, McRoberts, & Sithole, 1988; Crowther & Mann, 1994; Flege & Wang, 1989). This difference in weighting of acoustic cues across languages means that mature perceptual weighting strategies must be learned through experience with a first language, and evidence to that effect has been found: children's weighting strategies differ from those of adults, with modifications occurring across childhood (e.g., Greenlee, 1980; Nittrouer & Studdert-Kennedy, 1987; Wardrip-Fruin & Peach, 1984). Moreover, it has been demonstrated that differences across listener groups in weighting strategies cannot be attributed to differences in sensitivity to the acoustic cues in question, either in the cross-language work (e.g., Miyawaki et al., 1975) or in the developmental work (e.g., Nittrouer, 1996). However, one study found that children growing up in poverty or with histories of frequent otitis media with effusion demonstrated less mature perceptual weighting strategies than children with neither condition (Nittrouer & Burton, 2005). As a result, the authors suggested that the amount of experience a child gets with a first language influences the acquisition of mature weighting strategies.
Critical to that interpretation were the assumptions that children growing up in poverty have reduced language input in the home (Hoff & Tian, 2005), and that the temporary conductive hearing loss imposed by otitis media with effusion diminishes opportunities to hear the ambient language. Because the children in neither experimental group had permanent hearing loss, it was concluded that their deficits could not be explained by poor sensitivity to the relevant acoustic cues. Instead, Nittrouer and Burton concluded that the diminished language experience of children in the experimental groups must explain the impediments to their discovering the most efficient weighting strategies for their native language – the strategies used by mature speakers.

1.2. How Deaf Listeners Perceive Speech Cues

There is, however, a group of listeners for whom that disassociation between sensitivity to acoustic cues and weighting of those cues cannot be presumed. Listeners with hearing loss generally have diminished spectral resolution, making it difficult for them to use spectral cues in phonemic decisions (e.g., Boothroyd, 1984). For the most part, access to duration cues remains intact for listeners with hearing loss, and many of them continue to have access to low-frequency components of speech signals. Consequently, empirical outcomes regarding phonemic decisions by listeners with hearing loss, prior to the advent of cochlear implants, showed that these listeners used duration and low-frequency cues similarly to how listeners with NH use those cues, but decisions requiring broader static or dynamic spectral cues were greatly hindered (e.g., Revoile, Holden-Pitt, & Pickett, 1985; Revoile, Pickett, & Kozma-Spytek, 1991).

One hypothesis that emerged from those early results was that hearing-impaired listeners had learned to make use of "redundant" acoustic cues, which are those not typically used by listeners with NH that nonetheless covary with the more typical cues (Lacroix & Harris, 1979). That suggestion is strikingly similar to recent ones for second-language learners, who have been described as "overweighting" redundant cues, which occurs when the typical cues are not ones listeners learned to weight in their first language (Llanos, Dmitrieva, Shultz, & Francis, 2013). Another relevant trend is that the weighting of acoustic cues for listeners with hearing loss can vary across listeners with similar audiometric profiles (e.g., Danaher, Wilson, & Pickett, 1978). Thus, there is ample evidence from a variety of populations that listeners can, and do, vary in terms of the perceptual weighting strategies employed to make phonemic decisions, and this variability cannot be explained strictly by auditory sensitivity.

Some listeners with hearing loss, however, had only one kind of acoustic structure available to them prior to the invention of the cochlear implant: Listeners with profound losses could use only the gross amplitude envelope to aid speech recognition (Erber, 1979). Because amplitude structure does not correspond well to phonemic structure (but instead corresponds to syllable structure), this meant that speech recognition scores for this group were quite poor. Speech recognition is often indexed using isolated words – many of which are one syllable long – so syllable structure is of little use to the recognition of these words. Fortunately, these profoundly deaf listeners are the very individuals who benefited when the multi-channel cochlear implant became clinically available. These devices provide access to the full spectrum of the speech signal. Accordingly, speech recognition scores have improved for this group to levels more commonly found for listeners with much less severe losses (Boothroyd, 1997). But as remarkable as these improvements have been, implant signal processing fails to provide spectral resolution commensurate to that enjoyed by listeners with NH, in spite of the broad spectral input. That limitation arises because the number of effective spectral channels is low (Friesen, Shannon, Başkent, & Wang, 2001), and there are interactions among those channels (Chatterjee & Shannon, 1998). Thus, individuals with cochlear implants (CIs) have only limited access to detailed spectral structure in the signal, and that limitation constrains their abilities to use spectral cues.

In keeping with the poor spectral resolution available through CIs, labeling studies with adult CI users have revealed weaker weighting of spectral cues for vowels (Lane et al., 2007; Winn, Chatterjee, & Idsardi, 2012), sibilants (Lane et al., 2007), syllable-initial voicing (Iverson, 2003), and manner distinctions (Moberly et al., 2014), compared to listeners with NH. When it comes to children with CIs, two studies have investigated how they weight acoustic cues in phonemic decisions. Giezen, Escudero, and Baker (2010) generated four sets of stimuli: two vowel contrasts, one syllable-initial voicing contrast, and one fricative contrast. Cue weights could be generated for responses to three sets of the stimuli, excluding the syllable-initial voicing contrast. Children with CIs were observed to weight the static spectral cue to the fricative contrast less than age-matched children with NH, but weight steady-state formant frequencies (also static cues) to the vowel contrasts similarly.

Hedrick, Bahng, Hapsburg, and Younger (2011) examined the weighting of acoustic cues by children with CIs for the fricative place contrast of /s/ versus /∫/. In these stimuli, synthetic fricative noises formed the continuum. These noises were single poles, varying in center frequency from 2.2 kHz to 3.7 kHz, in 250-Hz steps. The vocalic portions were also synthetic, consisting largely of steady-state formants. Only the second formant (F2) had a transition, which was 60 ms long and set to be appropriate for either an initial /s/ or /∫/. In this study, it was observed that children with CIs had difficulty recognizing the single-pole noises as either fricative: mean scores for both continuum endpoints were less than 80 percent correct. In addition, the absolute weight assigned to the F2 transition was less for children with CIs than for children with NH, an outcome that was most apparent from the diminished separation in labeling functions for the two F2-transition conditions. This outcome prompted the authors to speculate that perhaps the formant transition was not especially salient for children with CIs, so their attention to that cue was limited.

1.3. Perceptual Weighting Strategies and Other Language Skills

For all the attention that has been paid over the past six decades to how acoustic cues contribute to listeners' decisions about phoneme identity, there has been relatively little attention paid to how that process affects other language functions, such as those associated with the lexicon and verbal short-term memory. The tacit assumption has been that understanding how listeners recognize phonemes in speech signals will be sufficient to explain other language functions. This assumption likely arises from earlier perspectives, which suggested that the acoustic input decays rapidly, making it critical to recover phonemic structure (e.g., Pisoni, 1973). Accordingly, models arose describing the lexicon as organized strictly based on phonemic structure (e.g., Luce & Pisoni, 1998) and suggesting that phonemic structure is all that is encoded in short-term memory (e.g., Crowder & Morton, 1969). But there are detractors from this position. There are those who suggest that words are stored in both short- and long-term memory using acoustic structure itself, including that which does not meet definitions of acoustic cues (e.g., Port, 2007). An example of another kind of structure that could be used to encode words would be talker-specific qualities (e.g., Palmeri, Goldinger, & Pisoni, 1993). Thus it is essential to explore potential relationships between perceptual weighting strategies and other functions that would reasonably be expected to derive from those strategies. In short, maybe perceptual weighting strategies for the sorts of phonemic decisions we ask listeners to perform in the laboratory are not relevant to real-world language functions.

Only one study has addressed that question for listeners with CIs. Moberly et al. (2014) examined perceptual weighting strategies of adult CI users for a syllable-initial stop-glide contrast. Two acoustic cues to that contrast were examined: the rate of formant transition and the rate of amplitude rise. The former is the cue weighted most strongly by listeners with NH (Nittrouer & Studdert-Kennedy, 1986; Walsh & Diehl, 1991), but the latter is preserved more reliably by the signal processing of implants. As predicted in that study, listeners with CIs weighted the dynamic spectral cue (rate of formant transitions) less than listeners with NH, to varying extents. It was further observed that most listeners with CIs weighted the amplitude cue more than listeners with NH, again to varying extents. Word recognition was also measured, and strong positive correlations were found between the weight assigned to the dynamic spectral cue and word recognition. The weight assigned to the amplitude cue accounted for no significant amount of variability in word-recognition scores. Therefore it may be concluded that advantages seem to accrue to spoken language processes from the perceptual weighting strategies observed for mature language users listening to their native language. Developmentally, it has been observed that increasing sensitivity to word-internal phonemic structure (measured with phonemic awareness tasks) correlates with developmental shifts in perceptual weighting strategies (Boada & Pennington, 2006; Johnson, Pennington, Lowenstein, & Nittrouer, 2011; Nittrouer & Burton, 2005). Overall, then, acquisition of the perceptual weighting strategies typical among mature native speakers of a language has demonstrable, positive effects. 
Nonetheless, it continues to be critical that experiments explore the relationship between these weighting strategies and other language processes to ensure that the very focus of our work (i.e., weighting of acoustic cues to phonemic decisions) has ecological validity.

1.4. The Current Study

The study reported here had three goals. The first goal was to measure the perceptual weighting strategies of children with CIs who were deaf since birth, and compare them to children with NH. The second goal of this study was to assess whether the weighting strategies of children with CIs are explained solely by their poor sensitivity to acoustic cues, or if diminished linguistic experience accounts for some of the effect, as well. The final goal was to examine relationships between weighting strategies and other language skills, specifically word recognition and phonemic awareness.

Weighting strategies

Children with CIs have limited access to spectral cues in the acoustic speech signal. Unlike adults who lost their hearing after acquiring language, these children must develop perceptual weighting strategies using these degraded signals, which presumably is a difficult goal to achieve. Hearing loss exacerbates the problems imposed on listening by poor acoustic environments, such as noise and reverberation. Consequently, children with hearing loss have diminished opportunity to hear the language produced by people around them. That experiential deficit could delay the development of mature weighting strategies, independently of auditory sensitivity.

In this study, two phonemic contrasts were used to examine weighting strategies for three kinds of acoustic cues. A contrast involving word-final voicing was included to investigate children's weighting of a duration cue (vowel length) and a dynamic spectral cue (offset formant transitions). The contrast of cop versus cob was selected so that all formants at the ends of the stimuli would be falling. That meant that the broad pattern of excitation across the electrode array (and so across the basilar membrane) would be changing over time, moving from the basal to the apical end. This pattern should be easier for listeners with CIs to recognize than when formant transitions each change in different directions, as happens with place contrasts. Nonetheless, the vowel-length cue would be predicted to be the easier of the two cues for listeners with CIs to recognize because it would be robustly preserved in the signal processing of cochlear implants. That meant that if children with CIs did not weight this cue as strongly as children with NH, the likely explanation would be that they had insufficient experience with the ambient language to develop those weighting strategies.

The other contrast used in this study was one that was predicted to be much harder for children with CIs: place of constriction for syllable-initial fricatives, /s/ versus /∫/. This contrast involved two spectral cues: one static, the shape of the fricative noise, and one dynamic, formant transitions at vowel onset. Both cues are preserved poorly by the signal processing of cochlear implants. Thus both cues were predicted to be weighted less by children with CIs than by children with NH, as Hedrick et al. (2011) found. That difference between the two groups would indicate a significant deviation in typical speech development for children with CIs that could negatively impact the acquisition of other language skills. In the current study, stimulus construction was designed to keep these syllables as natural as possible. That was a difference in stimulus design from the single-pole noises used by Hedrick et al. In reality, fricative noises are spectrally broader, with the relative amplitude of various frequency regions differing across place of articulation. Another difference between stimuli in the current study and those of Hedrick et al. was that F3, along with F2, had transitions that varied according to fricative place. Having both formants varying replicates natural speech, and provides more salient cues.

Auditory sensitivity

Children’s sensitivity to the acoustic structure underlying one of these phonemic contrasts was assessed in this study, in order to examine whether developmental changes in perceptual weighting strategies for children with CIs are limited by their access to that acoustic structure. The specific structure chosen for this examination arose from the word-final voicing contrast, and involved measuring sensitivity to stimulus length and glides at stimulus offset. These elements of acoustic structure were selected instead of ones analogous to the fricative-place contrast, both because they have been examined before for children with NH (Nittrouer & Lowenstein, 2007), and because the probability was greater that children with CIs could recognize these kinds of structure than any structure related to the fricative-place contrast. In the Nittrouer and Lowenstein study, it was found that sensitivity per se did not explain how strongly children weighted the analogous acoustic cues. Thus, a disassociation between sensitivity and perceptual weighting was observed for children.

Other language skills

Finally, two language skills were examined in this study, both presumed to be related to perceptual weighting strategies. First, word recognition was measured, as Moberly et al. (2014) had done, to see if weighting strategies affect this ecologically valid measure. Second, sensitivity to phonemic structure in the speech signal was measured, using phonemic awareness tasks. Phonemic awareness has been found to correlate with a wide assortment of language phenomena, including reading (e.g., Liberman & Shankweiler, 1985; Wagner & Torgesen, 1987) and working memory (Brady, Shankweiler, & Mann, 1983; Mann & Liberman, 1984). Finding a correlation between weighting strategies and phonemic awareness would suggest that the development of mature weighting strategies should be an aim in clinical intervention for children with CIs because it underlies several language skills.

2. Methods

2.1. Participants

A total of 100 children were tested on the auditory discrimination and speech labeling tasks reported here. All children had just completed second grade at the time of testing, and all were participants in an ongoing longitudinal study (Nittrouer, 2010). Fifty-one of these children had severe-to-profound sensorineural hearing loss and wore CIs. The other 49 children had normal hearing, defined as pure-tone thresholds better than 15 dB hearing level for the octave frequencies of .25 to 8 kHz. These sample sizes provided greater than 90 percent power for detecting group differences when those differences were 1 SD (Cohen's d = 1), which is roughly the magnitude of differences that have been found for children with NH and those with CIs, both in this longitudinal study (e.g., Nittrouer, Caldwell, Lowenstein, Tarr & Holloman, 2012) and by other investigators (e.g., Geers, Nicholas, & Sedey, 2003).
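As a rough check on the power statement above, the following sketch applies a normal approximation to a two-sided, two-sample comparison. The significance level of .05 and the normal approximation are assumptions, because the text does not state how power was computed; the function name is hypothetical.

```python
from statistics import NormalDist


def two_sample_power(n1, n2, d, alpha=0.05):
    """Approximate power of a two-sided, two-sample test for a
    standardized mean difference d (normal approximation)."""
    z = NormalDist()
    # Noncentrality: effect size scaled by the effective sample size.
    ncp = d * (n1 * n2 / (n1 + n2)) ** 0.5
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Probability of exceeding the critical value in either tail.
    return z.cdf(ncp - z_crit) + z.cdf(-ncp - z_crit)


# Group sizes from the text: 49 children with NH, 51 with CIs; d = 1.
power = two_sample_power(49, 51, 1.0)
print(f"Approximate power: {power:.3f}")
```

Under these assumptions the result is well above .90, consistent with the statement in the text.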

The top four rows of Table 1 show group means (and SDs) for demographic measures obtained for children in these groups. Socio-economic status was indexed using a two-factor scale on which both the highest educational level and the occupational status of the primary income earner in the home are considered (Nittrouer & Burton, 2005). Scores for each of these factors range from 1 to 8, with 8 being high. Values for the two factors are multiplied together resulting in a range of possible scores from 1 to 64. Differences between the groups were not statistically significant. A score of 30 on this metric generally indicates that the primary income earner obtained a four-year university degree and holds a professional position, and this was generally the range of socio-economic status for these children.
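The arithmetic of the two-factor scale can be sketched as follows. The function name and the example ratings are hypothetical; only the 1-to-8 range of each factor and the multiplication come from the description above.

```python
def ses_score(education, occupation):
    """Combine two ratings, each from 1 to 8, into a score from 1 to 64."""
    for rating in (education, occupation):
        if not 1 <= rating <= 8:
            raise ValueError("each factor must be rated from 1 to 8")
    return education * occupation


# Hypothetical ratings; the product of 5 and 6 gives a score of 30.
example = ses_score(5, 6)
lowest, highest = ses_score(1, 1), ses_score(8, 8)
print(example, lowest, highest)
```

Because the factors are multiplied rather than added, only certain totals are attainable, and a given score such as 30 can arise from more than one pair of ratings.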

Table 1.

Means and standard deviations (SDs) for demographic variables.

| | NH: M | NH: (SD) | CIs: M | CIs: (SD) |
|---|---|---|---|---|
| N | 49 | | 51 | |
| Age at time of testing (months) | 101 | (4) | 103 | (6) |
| Proportion of males | .45 | --- | .47 | --- |
| Socio-economic status | 35 | (13) | 34 | (11) |
| Brief IQ (Leiter-R) standard scores | 103 | (21) | 102 | (17) |
| Age at identification (months) | | | 7 | (7) |
| Pre-implant better-ear PTA (dB HL) | | | 100 | (16) |
| Age at 1st implant (months) | | | 20 | (13) |
| Age at 2nd implant (months); N = 34 | | | 44 | (21) |
| Mean time, 1st implant (months) | | | 83 | (14) |
| Mean time, 2nd implant (months); N = 34 | | | 59 | (22) |
| Aided PTA (dB HL) | | | 25 | (8) |
| Aided threshold at 6 kHz (dB HL) | | | 31 | (10) |

Socio-economic status is a 64-point scale. PTAs are for the three frequencies of .5, 1, and 2 kHz. Better-ear PTAs are for unaided thresholds in that better ear. Aided PTAs are for the ear with the better PTAs if both ears have CIs, or for the only ear with a CI, if there is only one.

The measure of nonverbal cognitive functioning was the Leiter International Performance Scale – Revised (Roid & Miller, 2002). This test is completely nonverbal, with instructions given through pantomime. Four subtests were administered: Figure Ground, Form Completion, Sequential Order, and Repeated Patterns. From these four subtests a measure was computed of nonverbal intelligence known as the Brief IQ, which can be represented as standard scores. Differences in means between groups were not statistically significant.

The bottom eight rows of Table 1 show information for the children with CIs. All children were identified before two years of age, and most before one year. Children received their implants early, which for 44 of the 51 children meant before three years of age. Consequently, these children had considerable experience with their CIs. Audiological records were collected from the children’s parents, and used to derive variables such as pre-implant, better-ear pure-tone average thresholds for the three speech frequencies of 0.5, 1.0, and 2.0 kHz. (Hereafter, these values will simply be termed pre-implant PTAs.) Audiological testing was also done in the laboratory at the time of testing, and used to derive aided thresholds. Aided pure-tone average thresholds for the three speech frequencies are reported for either the ear with a CI or for the better of the two ears, if a child had bilateral CIs. Aided thresholds at 6 kHz are also reported for either the only ear with a CI or the better ear, in the case of bilateral CIs.

Of these 51 children with CIs, 34 had bilateral implants. Of the 17 children with just one CI, five used a hearing aid (HA) on the contralateral ear. Thirty children had CIs from Cochlear Corporation, 18 from Advanced Bionics, two from MedEl, and one child had a Cochlear CI on one ear and an Advanced Bionics CI on the other ear. Twenty-four children with CIs had at least one year of bimodal experience (i.e., wore a hearing aid on the contralateral ear) immediately after they received their first CI. Seventeen of those children eventually received a second CI, and two simply stopped using the HA. Of the 27 children with no bimodal experience, 17 had bilateral CIs, and ten had only one CI. Six children had received bilateral CIs simultaneously.

2.2. Equipment

All testing took place in a sound-treated room. Stimuli were presented via a computer equipped with a Creative Labs Soundblaster digital-to-analog card. A Roland MA-12C powered speaker was used for audio presentation of stimuli, and it was positioned one meter in front of where children sat during testing, at 0-degrees azimuth. Stimuli in the labeling and discrimination tasks were presented at a 22.05-kHz sampling rate with 16-bit digitization. Stimuli in the word recognition task were presented at a 44.1-kHz sampling rate with 16-bit digitization.

Presentation of stimuli in the three phonological awareness tasks used an audio-visual format. Materials were presented with 1500-kbps data rate for the video signal, and 44.1-kHz sampling rate with 16-bit digitization for the audio signal.

Data collection for the word recognition task was video and audio recorded using a SONY HDR-XR550V video recorder. Sessions were recorded so scoring could be done at a later time by laboratory staff members as blind as possible to children’s hearing status. Children wore SONY FM transmitters in specially designed vests that transmitted speech signals to the receivers, which provided direct line input to the hard drives of the cameras. This procedure ensured good sound quality for all recordings. For the auditory discrimination, speech labeling, and phonological awareness tasks, responses were entered directly into the computer by the experimenter at the time of testing.

All children with hearing loss were tested wearing their customary auditory prostheses. Each device was checked at the start of testing, using the Ling six-sound test.

2.3. Materials

For the speech labeling task, 8 in. × 8 in. hand-drawn pictures were used for labeling. In the case of the cop-cob stimuli, a picture of a police officer served as the response target for cop and a picture of a corn cob served as the response target for cob. For sa-sha stimuli, a picture of a space creature served as the target for sa and a king from another country served as the target for sha. These pictures have been used before, so it was known that children can reliably match the stimuli to the pictures.

For the auditory discrimination task, a cardboard response card 4 in. × 14 in. with a line dividing it into two 7-in. halves was used. On one half of the card were two black squares, and on the other half were one black square and one red circle. Participants pointed to the two black squares to indicate that two stimuli presented were heard as the same, and pointed to the square and the circle to indicate that they were different. Ten other cardboard cards (4 in. × 14 in., not divided in half) were used for training with children. On six cards were two simple drawings each of common objects (e.g., hat, flower, ball). On three of these cards the same object was drawn twice (identical in size and color) and on the other three cards two different objects were drawn (different in size and color). On four cards were two drawings each of simple geometric shapes: two with the same shape in the same color and two with different shapes, in different colors.

A game board with ten steps was also used with children during the labeling and discrimination tasks. Cartoon pictures were used as reinforcement and were presented on a color monitor after completion of each block of stimuli.

The CID W-22 word lists were used to obtain a measure of word recognition (Hirsh et al., 1952). Although strictly a measure of word repetition, this task of asking listeners to repeat words presented auditorily is typically termed recognition in audiological practice (e.g., Guthrie & Mackersie, 2009). There are four of these lists, each consisting of 50 words. All words on these lists are monosyllabic and were originally selected by the test authors to be commonly occurring in American English. The phonetic composition of words within each list was designed to be balanced, meaning that the frequency of occurrence of phonemes in each list matches the frequency at which they occur in the language generally.

2.4. Stimuli

Two sets of stimuli were selected for use in the speech labeling tasks, along with two sets of stimuli for the auditory discrimination tasks.

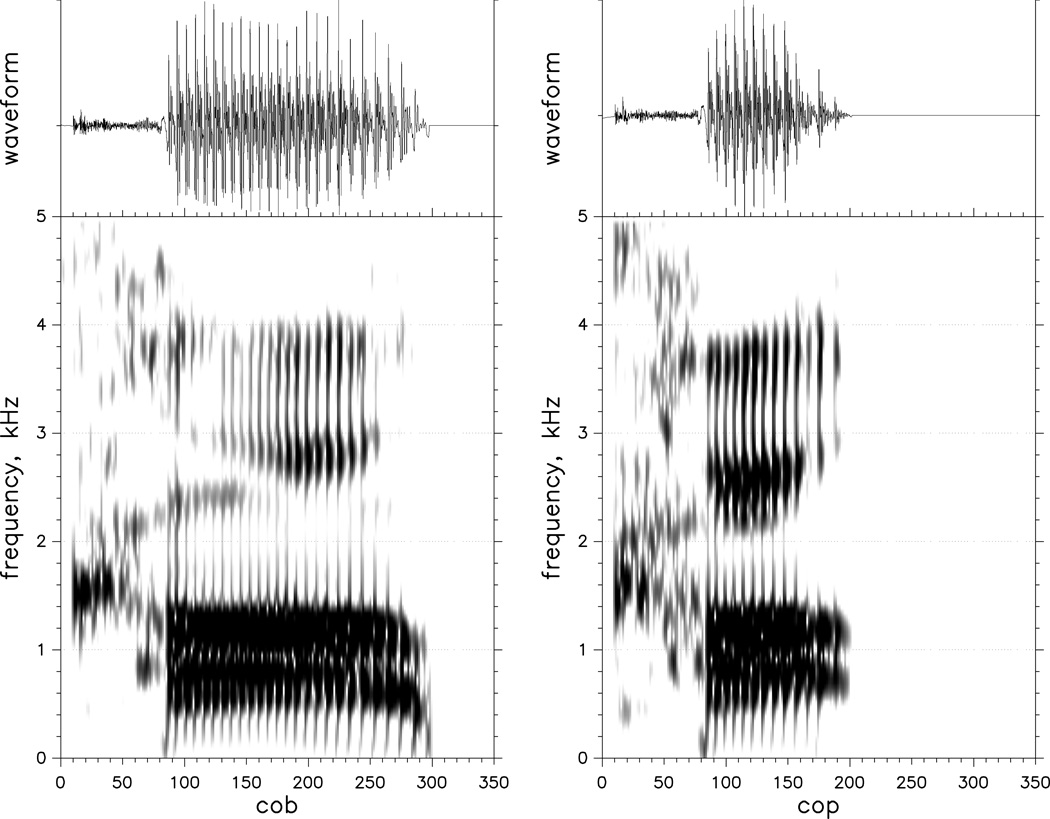

Cop-cob stimuli for labeling

Natural tokens of cop and cob spoken by a male talker were used to create these stimuli. The talker was recorded producing ten tokens of each word. The three tokens of each word that matched each other most closely in vocalic duration and fundamental frequency (f0) contour were selected for use. In each token, the release burst and any voicing during closure were deleted. Figure 1 shows a sample of a natural cob and cop used in this experiment, with those signal stretches deleted. Vocalic length was manipulated either by reiterating one pitch period at a time from the most stable spectral region of the syllable (to lengthen syllables) or by deleting pitch periods from the most stable spectral region of the syllable (to shorten syllables). For both kinds of manipulation, care was taken to align signal portions at zero crossings so no audible clicks resulted. Initial and final formant transitions were not disrupted. Across the three tokens used, the first formant (F1) at offset had a mean frequency of 625 Hz for cob and 801 Hz for cop. Seven stimuli varying in vocalic duration from roughly 82 ms to 265 ms were created from each token this way. These endpoint values were selected because they match the mean lengths of the natural tokens.

FIGURE 1.

Natural tokens of the words cop and cob used to create stimuli for labeling.

Sa-sha stimuli for labeling

Fourteen stimuli consisting of seven natural fricative noises and two synthetic vocalic portions were used. The noises were created by combining a natural /s/ noise and a /∫/ noise at seven relative amplitude levels. The two synthetic vocalic portions were generated using Sensyn, a version of the Klatt synthesizer.

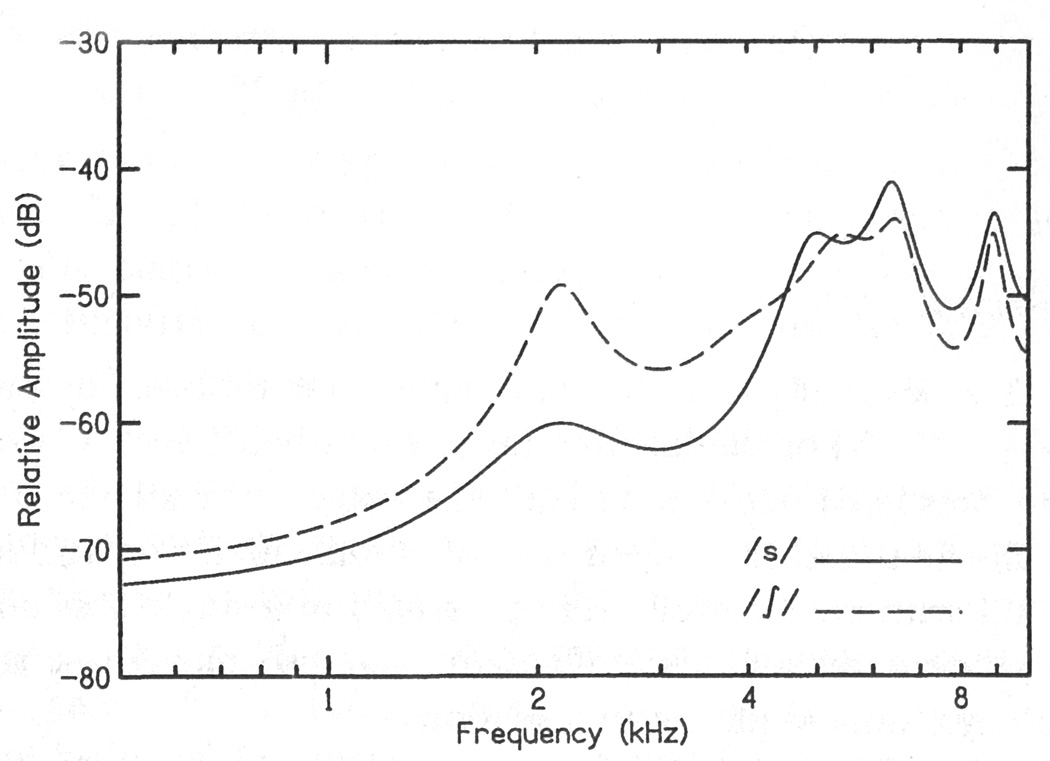

The composite noises were created by taking 100-ms sections from natural /s/ and /∫/ produced by a male talker. These sections of fricative noise were combined at seven /∫/-to-/s/ amplitude ratios, creating a continuum of noises from one that is most strongly /∫/-like to one that is most strongly /s/-like. The main difference among the resulting noises was the amplitude of the low-frequency pole relative to the higher frequency noise components. Figure 2 shows the most /∫/-like noise and the most /s/-like noise from this continuum. This method of stimulus creation preserved details of fricative spectra, while ensuring that a primary cue to sibilant place is preserved: the amplitude of noise in the F3 region of the following vocalic portion. Higher amplitude in this region is associated with more /∫/ responses (Hedrick & Ohde, 1993; Perkell, Boyce, & Stevens, 1979; Stevens, 1985).

FIGURE 2.

The most /∫/-like and most /s/-like fricative noises used to create stimuli for labeling. (from Nittrouer & Miller, 1997).

The vocalic portions were generated to be appropriate for /ɑ/ vowels following the production of either /s/ or /∫/. Both portions were 260 ms long, and f0 fell throughout from 120 Hz to 90 Hz. F1 rose over the first 50 ms from 450 Hz to a steady-state frequency of 650 Hz. F4 and F5 were stable throughout at 3250 Hz and 3700 Hz, respectively. For the /s/-like onset, F2 started at 1250 Hz and for the /∫/-like onset, F2 started at 1570 Hz. In both cases F2 fell to a steady-state frequency of 1130 Hz over the first 100 ms. For the /s/-like onset, F3 started at 2464 Hz and for the /∫/-like onset, F3 started at 2000 Hz. In both cases F3 transitioned to a steady-state frequency of 2300 Hz over the first 100 ms. Each of these vocalic portions was concatenated with each of the seven noises to create 14 fricative-vowel stimuli.

Duration stimuli for discrimination

Eleven nonspeech stimuli were used that varied in length from 110 ms to 310 ms in 20-ms steps. These stimuli have been used before (Nittrouer & Lowenstein, 2007), and consisted of three steady-state sinusoids of the frequencies 650 Hz, 1130 Hz, and 2600 Hz. These stimuli will be termed the dur stimuli in this report.

Glide stimuli for discrimination

Thirteen stimuli that have been used before (Nittrouer & Lowenstein, 2007) were used. These stimuli were all 150 ms long, and consisted of the same steady-state sinusoids as the dur stimuli, but the last 50 ms of 12 of these stimuli had falling glides. In this way they replicated the falling formants of cob. Offset frequencies of the three sine waves changed by 20 Hz in each step on the continuum. For example, the offset frequency of the lowest tone ranged from 650 Hz in the first stimulus to 410 Hz in the thirteenth. These stimuli will be termed the glide stimuli in this report.
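The parameters of the two nonspeech continua can be laid out in a few lines. The following is only an illustrative sketch of the values stated above; the variable names are assumptions made for this example, and no signal synthesis is attempted.

```python
# Illustrative parameter tables for the dur and glide continua.
# All names here are hypothetical; only the numeric values come from the text.

SINE_HZ = (650, 1130, 2600)   # steady-state frequencies of the three sinusoids

# dur continuum: 110 ms to 310 ms in 20-ms steps
dur_ms = list(range(110, 311, 20))

# glide continuum: 13 stimuli, each 150 ms long; the offset frequency of
# every sinusoid falls by 20 Hz per step (step 0 has no glide)
GLIDE_STEP_HZ = 20
glide_offsets_hz = [
    tuple(f - GLIDE_STEP_HZ * step for f in SINE_HZ)
    for step in range(13)
]

print(len(dur_ms), dur_ms[0], dur_ms[-1])
print(glide_offsets_hz[0], glide_offsets_hz[-1])
```

The lowest sinusoid ends at 650 Hz in the first stimulus and at 410 Hz in the thirteenth, matching the range given for the glide stimuli.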

2.5. General Procedures

All testing took place in Columbus, Ohio at the Ohio State University and was approved by the Institutional Review Board. Data were collected during a series of two-day camps that occurred over the summer after these children had completed second grade. All children were tested in six individual sessions that lasted no longer than an hour each, with a minimum of one hour between test sessions. Measures collected during three of those sessions are described in this report: (1) auditory discrimination and speech labeling; (2) phonological awareness; and (3) word recognition with the CID word lists. The Leiter International Performance Scale subtests were administered in a separate session. All acoustic stimuli were presented at 68 dB SPL.

2.6. Task-Specific Procedures

Speech labeling

Children were introduced to response labels and corresponding pictures. Then ten live-voice practice trials (five of each response label) were presented. Children were asked both to say what they heard and to point to the appropriate picture. Ten digitized natural samples were then presented over the speaker to give children practice performing the task. The final practice task was that children heard "best exemplars" of the stimulus set: that is, the first and last stimuli from the continuum combined with the appropriate binary cues. For example, the best exemplar of sha was the stimulus with the most /∫/-like fricative noise and the vocalic portion that was appropriate for a preceding /∫/. Ten trials of these best exemplars (five of each) were presented once to children with feedback. Next, ten of these stimuli were presented, without feedback. Children needed to respond to nine out of the ten correctly to proceed to testing. They were given three chances to meet this criterion.

During testing, stimuli were presented in ten blocks of the 14 stimuli for either cop-cob or sa-sha. After each block, the child was permitted to move a game piece on the game board in order to keep track of progress. Cartoon characters also appeared on the computer monitor after each block of stimuli. Children needed to respond correctly to 70% of the best exemplars during testing in order to have their responses included in the statistical analyses. Logistic regression coefficients were computed to index the perceptual weight assigned to the property that varied in a continuous manner (duration or fricative-noise spectral shape, depending on the stimulus set) and to the property that varied in a binary manner (formant transitions for both stimulus sets), as described in Appendix A. Hereafter these regression coefficients are termed weighting factors.
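As a rough illustration of how weighting factors of this kind can be obtained, the sketch below fits a two-predictor logistic regression by Newton-Raphson. It is not the procedure of Appendix A: the scaling of the continuous cue to the range 0 to 1, the coding of the binary cue as 0 or 1, the use of response proportions rather than single trials, and the data for the hypothetical listener are all assumptions made for this example.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def solve(a, b):
    """Solve the small linear system a x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def fit_logistic(rows, props, iters=25):
    """Newton-Raphson fit of P(response) = sigmoid(b0 + b1*x1 + b2*x2)."""
    beta = [0.0, 0.0, 0.0]
    for _ in range(iters):
        grad = [0.0] * 3
        hess = [[0.0] * 3 for _ in range(3)]
        for (x1, x2), y in zip(rows, props):
            x = (1.0, x1, x2)
            p = sigmoid(sum(b * xi for b, xi in zip(beta, x)))
            w = p * (1.0 - p)
            for i in range(3):
                grad[i] += x[i] * (y - p)
                for j in range(3):
                    hess[i][j] += w * x[i] * x[j]
        step = solve(hess, grad)
        beta = [b + s for b, s in zip(beta, step)]
    return beta

# Hypothetical listener: 7 steps of the continuous cue (scaled 0..1) crossed
# with the binary cue (0 or 1), giving the 14 stimuli of one labeling task.
TRUE_COEFS = (-3.5, 5.0, 2.0)   # assumed values, for illustration only
rows = [(k / 6, t) for t in (0, 1) for k in range(7)]
props = [sigmoid(TRUE_COEFS[0] + TRUE_COEFS[1] * x1 + TRUE_COEFS[2] * x2)
         for x1, x2 in rows]

b0, w_continuous, w_binary = fit_logistic(rows, props)
print(round(w_continuous, 3), round(w_binary, 3))
```

In this sketch the two slope coefficients play the role of weighting factors: the larger the coefficient, the more the labeling response changes as that cue changes.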

Auditory discrimination

An AX procedure was used. In this procedure, participants judge one stimulus, which varies across trials (X), in comparison to a constant standard (A). Therefore, although it is a discrimination task, as is needed for nonspeech stimuli which have no natural labels, it is as close to a labeling task as possible: The constant ‘A’ stimulus serves as the category exemplar.

For the dur stimuli, the ‘A’ stimulus was the shortest member of the continuum. For the glide stimuli, the ‘A’ stimulus was the one without glide transitions at offset. That means that the sine waves were at their highest frequencies at offset in the standard, or A stimulus. The onset-to-onset interval was 600 ms for all trials in all conditions. The participant responded by pointing to the picture of the two black squares and saying “same” if the stimuli were judged as being the same, and by pointing to the picture of the black square and the red circle and saying “different” if the stimuli were judged as being different. The experimenter controlled the presentation of stimuli and entered responses using the keyboard. Stimuli were presented in 10 blocks of 11 (dur) or 13 (glide) stimuli, and children moved a game piece to the next space on the game board after each block.

Several kinds of training were provided. Before any testing was done, children were shown the drawings of the six same and different objects and asked to report if the two objects on each card were the same or different. Then they were shown the cards with drawings of same and different geometric shapes, and asked to report if the two shapes were the same or different. Finally, children were shown the card with the two squares on one side and a circle and a square on the other side and asked to point to ‘same’ and to ‘different.’

Before testing with stimuli in each condition, children were presented with five pairs of acoustic stimuli that were identical and five pairs of stimuli that were maximally different, in random order. They were asked to report whether the stimuli were the ‘same’ or ‘different,’ and were given feedback. Next these same training stimuli were presented, and participants were asked to report if they were the ‘same’ or ‘different,’ only without feedback. Participants needed to respond correctly to nine of the ten training trials without feedback in order to proceed to testing. During testing, children needed to respond correctly to at least 14 of these physically same and maximally different stimuli (70%) to have their data included in the final analysis.

The discrimination functions of each participant formed cumulative normal distributions, and probit functions were fit to these distributions (Finney, 1971). From these fitted functions distribution means were calculated and served as threshold estimates. Slopes were also computed, which serve as another metric of listeners' sensitivity to the acoustic property varied along the continuum.
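The logic of deriving a threshold and a slope from a discrimination function can be sketched as follows. This is a deliberately simplified, unweighted version: proportions of "different" responses are converted to z scores and a straight line is fit by least squares, whereas the probit analysis of Finney (1971) uses iteratively weighted maximum likelihood. The function name, the clipping value, and the hypothetical listener are assumptions for this example, and the slope units here need not match those of the slopes reported in this study.

```python
from statistics import NormalDist

def fit_probit(levels, prop_different, eps=0.01):
    """Fit z = intercept + slope * level; return (mean, slope).

    The mean is the level at which the fitted cumulative normal reaches
    0.5, and it serves as the threshold estimate.
    """
    std = NormalDist()
    # Clip proportions so that 0 and 1 do not map to infinite z scores
    z = [std.inv_cdf(min(max(p, eps), 1 - eps)) for p in prop_different]
    n = len(levels)
    mean_x = sum(levels) / n
    mean_z = sum(z) / n
    sxx = sum((x - mean_x) ** 2 for x in levels)
    sxz = sum((x - mean_x) * (zi - mean_z) for x, zi in zip(levels, z))
    slope = sxz / sxx
    intercept = mean_z - slope * mean_x
    return -intercept / slope, slope

# Hypothetical listener on the dur continuum whose proportions of
# "different" responses follow a cumulative normal (mean 200 ms, SD 50 ms)
levels_ms = list(range(110, 311, 20))
listener = NormalDist(mu=200, sigma=50)
proportions = [listener.cdf(x) for x in levels_ms]

threshold, slope = fit_probit(levels_ms, proportions)
print(round(threshold, 1), round(slope, 4))
```

A steeper slope indicates that responses shift from "same" to "different" over a narrower range of the continuum, which is why slope can serve as a second index of sensitivity.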

Phonological awareness

Phonological awareness was assessed with three tasks that varied in difficulty. Work by Stanovich, Cunningham, and Cramer (1984) served as the basis for predictions of difficulty level for the tasks used here, along with a history of performance by children in other studies using these specific tasks (e.g., Nittrouer & Burton, 2005; Nittrouer & Miller, 1999; Nittrouer, Shune & Lowenstein, 2011). Using this set of tasks varying in difficulty diminished the probability that significant differences across groups in abilities would fail to be identified either because a selected task was so easy that even children with phonological delays were able to perform it or because it was so difficult that even typically developing children could not. In this particular case, all three tasks selected for use examined sensitivity specifically to phonemic structure, so are termed tests of phonemic awareness in this report.

Stimuli in all three phonemic awareness tasks were presented in audiovisual format on a computer monitor so that the children could see and hear the talker. This was done to maximize the abilities of the children with hearing loss to understand the stimuli. The goal in these tasks was not to measure recognition, but rather to evaluate children's sensitivity to phonemic structure in the speech signal. That meant that the availability of sensory evidence regarding the stimuli needed to be maximized. The talker for all stimuli was a man, who was easy to speechread. He spoke a midwestern American dialect. All answers were entered directly into the computer by the examiner. Practice was provided before each task. Percent correct scores were used as dependent variables for all three tasks.

The first task, the initial consonant choice (ICC) task, was viewed as the easiest. It consisted of 48 items and began with the child getting a target word to repeat. The child was given three opportunities to repeat this target word correctly. Had the target not been repeated correctly within three attempts, testing would have advanced to the next trial and the missed trial would have been excluded from the overall calculation of percent correct. However, all these children were able to recognize the target words correctly in the audiovisual format. Following correct repetition of the target word, the child was presented with three more words and had to choose the one that had the same beginning sound as the target word. These items can be found in Appendix B.

The second task, the final consonant choice (FCC) task, was considered to be intermediate in terms of difficulty for children of this age. This task consisted of 48 items, and was the same as the ICC task except that children had to choose the word that had the same ending sound as the target word. Items on this task can be found in Appendix C.

The third task, the phoneme deletion (PD) task, involved the greatest amount of processing. The reason is that children needed to recognize phonemic structure in a non-word, manipulate that non-word structure so that one segment was removed, and then blend the remaining segments. The segment to be removed could occur anywhere within the word (e.g., Say ‘plig’ without the ‘l’ sound). The task consisted of 32 items, which are found in Appendix D.

Word recognition

The CID-22 word lists were presented via a loudspeaker at 0° azimuth. Each child heard one of the 50-word lists, and lists were randomized across children within each group. Children were videorecorded repeating these words. At a later time, the recordings were viewed and scored on a phoneme-by-phoneme basis. Consistent and obvious errors of articulation were not marked as wrong. All phonemes in a single word needed to be correct in order for that word to be scored as correct. Percent correct word recognition scores were used here as the dependent measures.

3. Results

3.1. Attrition

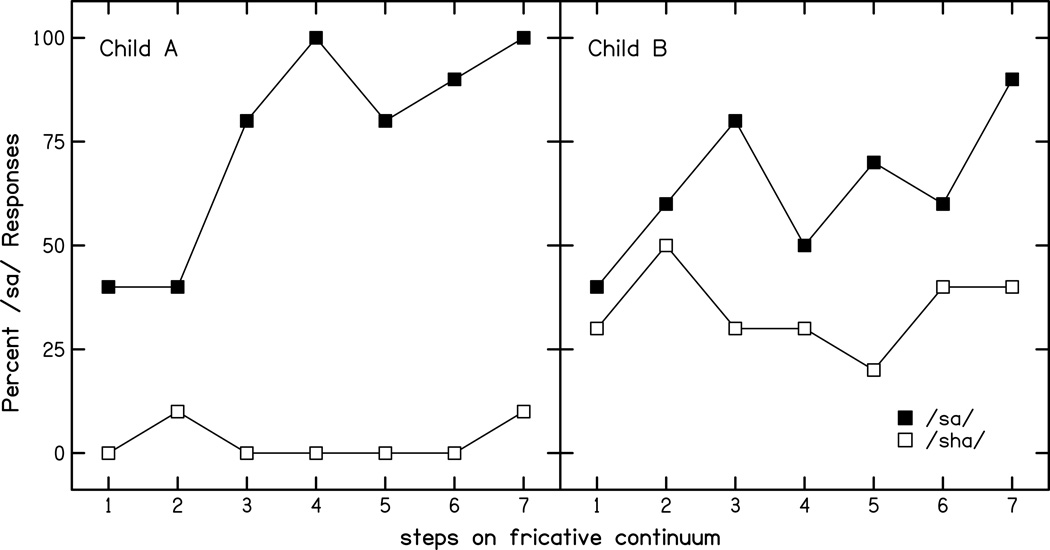

Table 2 shows the number of children in each group who were unable to meet the criteria to have their data included in the statistical analysis at either the level of training, where criterion to participate was 90 percent correct responses to the best exemplars, or at the level of testing, where the criterion was 70 percent correct responses to the best exemplars. Because labeling of speech stimuli is often viewed as dependent on auditory sensitivity to the acoustic cues underlying those labeling decisions, it is interesting that the greatest attrition was observed for the discrimination tasks. In particular, over half of the children with CIs (53%) were unable to meet criteria for the glide discrimination task. Most of those children were nonetheless able to meet criteria to have their data included in the labeling tasks, both of which involved a cue consisting of spectral glides (i.e., formant transitions). Where the cop-cob stimuli are concerned, it might be argued that the duration cue (i.e., vowel duration) provided an alternative to the spectral cue, thus facilitating the ability to reach criteria for these children in that task. However, where the sa-sha stimuli are concerned, the additional cue was the spectral shape of a stationary noise section, arguably even less salient for CI users than formant transitions.

Table 2.

Numbers of children in each group who were unable to meet criteria to have their data included in the statistical analyses at either the training or testing stage.

| | NH | CIs |
|---|---|---|
| Discrimination | | |
| Duration | 4 | 14 |
| Glide | 1 | 27 |
| Labeling | | |
| Cop-cob | 4 | 2 |
| Sa-sha | 2 | 21 |
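The attrition rates implied by these counts can be checked with simple arithmetic. The sketch below is illustrative only: it combines the numbers of excluded children with CIs from Table 2 with the numbers retained in each task (the Ns reported in Tables 3 and 4), and the dictionary names are assumptions made for this example.

```python
# Children with CIs: excluded counts from Table 2, retained Ns from
# Tables 3 and 4. Dictionary names are illustrative.
excluded = {"Duration": 14, "Glide": 27, "Cop-cob": 2, "Sa-sha": 21}
retained = {"Duration": 37, "Glide": 24, "Cop-cob": 49, "Sa-sha": 30}

rates = {
    task: excluded[task] / (excluded[task] + retained[task])
    for task in excluded
}

for task, rate in rates.items():
    print(f"{task}: {excluded[task]} of {excluded[task] + retained[task]}"
          f" ({rate:.0%})")
```

For the glide discrimination task this gives 27 of 51 children, or 53%, in agreement with the figure cited in the text.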

For the children with CIs, t tests were performed to see if there were differences in demographic or audiologic factors that could explain the rate of attrition for the glide discrimination task or for the sa-sha labeling task. Specifically, the factors examined were socioeconomic status, age of identification, age of first implant, pre-implant PTAs, aided PTAs, and aided thresholds at 6 kHz. None of these factors differed for children who could or could not meet criteria for the glide discrimination task or for the sa-sha labeling task.

3.2. Perceptual Weighting Strategies

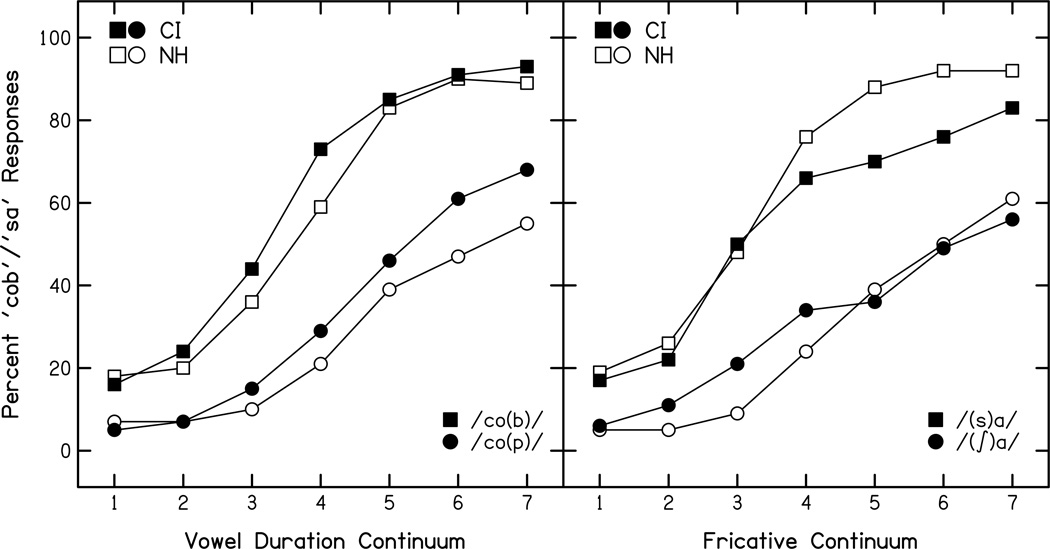

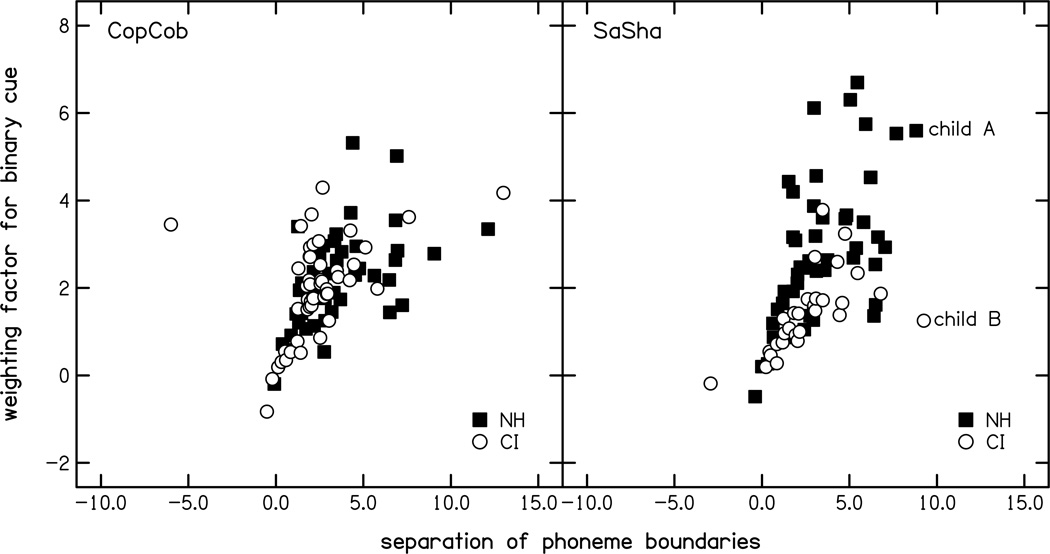

Next, outcomes of the labeling task were examined to see if children with CIs assigned similar perceptual weights to the acoustic cues as children with NH. Figure 3 shows labeling data for both the cop-cob stimuli (left panel) and the sa-sha stimuli (right panel).

FIGURE 3.

Labeling functions for cop-cob and sa-sha stimuli.

Cop-cob labeling

Table 3 shows weighting factors for each cue, in both labeling tasks, with cop-cob results on top. For the cop-cob task, children with CIs appear to have shown a slightly different pattern of weighting across the two cues than children with NH: they weighted the duration of the vocalic portion more and the offset transitions less. However, neither of those weighting factors showed a significant difference across groups, and a repeated-measures ANOVA did not reveal a significant Factor × Group interaction. Consequently, it must be concluded that children with CIs weighted the two acoustic cues relevant to the labeling of these stimuli as did children with NH.

Table 3.

Mean weighting factors (and SDs) for the labeling tasks.

| | NH M | NH (SD) | CIs M | CIs (SD) |
|---|---|---|---|---|
| Cop-cob | | | | |
| N | 45 | | 49 | |
| Duration of vocalic portion | 5.05 | (2.68) | 5.72 | (2.78) |
| Offset transitions | 2.16 | (1.12) | 1.99 | (1.11) |
| Sa-sha | | | | |
| N | 47 | | 30 | |
| Fricative-noise spectrum | 6.09 | (2.96) | 3.50 | (1.57) |
| Onset transitions | 2.84 | (1.67) | 1.41 | (0.88) |

Sa-sha labeling

For sa-sha, it appears children with CIs weighted both cues less than children with NH, and both of those differences were significant: fricative-noise spectrum, t(75) = 4.42, p < .001, and onset transitions, t(75) = 4.30, p < .001. But again, a repeated-measures ANOVA failed to reveal a significant Factor × Group interaction. In this case it can be concluded that children with CIs weighted both of these spectral cues less than children with NH.
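These group comparisons can be approximated from the summary statistics in Table 3. The sketch below assumes an independent-samples t test with pooled variance, which is consistent with the reported 75 degrees of freedom but is not stated explicitly in the text; the function name is hypothetical. Because the tabled means and SDs are rounded, the results are expected to be close to, but not identical with, the reported values.

```python
import math

def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t statistic with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df

# Sa-sha weighting factors from Table 3: NH (N = 47) versus CIs (N = 30)
t_noise, df_noise = pooled_t(6.09, 2.96, 47, 3.50, 1.57, 30)
t_trans, df_trans = pooled_t(2.84, 1.67, 47, 1.41, 0.88, 30)

print(f"fricative-noise spectrum: t({df_noise}) = {t_noise:.2f}")
print(f"onset transitions:        t({df_trans}) = {t_trans:.2f}")
```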

3.3. Auditory Sensitivity

The next scores examined were those for auditory sensitivity to the acoustic properties underlying the cop-cob labeling decisions. Both thresholds and slopes for the AX discrimination tasks were considered, and these values are shown in Table 4. Regarding thresholds, more sensitive thresholds (i.e., those located at smaller differences from the standard stimulus) are indicated by smaller values for the duration stimuli because the standard was the shortest stimulus. For the glide stimuli, thresholds are given in frequency of the lowest sine wave for convenience, but the higher sine waves co-varied with that value. In this case, the standard stimulus had no transitions at offset. Consequently, the first sine wave was highest at offset in the standard, so more sensitive thresholds are indicated by higher values.

Table 4.

Mean thresholds (and SDs) and slopes (and SDs) for the discrimination tasks.

| | NH M | NH (SD) | CIs M | CIs (SD) |
|---|---|---|---|---|
| Duration Stimuli | | | | |
| N | 45 | | 37 | |
| Thresholds (ms) | 206 | (34) | 189 | (37) |
| Slopes | 17.6 | (9.6) | 20.5 | (12.2) |
| Glide Stimuli | | | | |
| N | 48 | | 24 | |
| Thresholds (Hz) | 590 | (26) | 589 | (35) |
| Slopes | 21.5 | (10.1) | 17.5 | (9.2) |

Duration

Looking first at children's sensitivity to the duration of the stimuli, it was found that children with CIs had thresholds at shorter durations than children with NH, t(80) = 2.10, p = .039. This suggests that children with CIs were slightly more sensitive to this structure in these signals than were children with NH. No difference in the slopes of the discrimination functions was found for these two groups. Of course, there were 14 children with CIs who could not reach criteria to be included in this discrimination task. It might be concluded that they were less sensitive to stimulus length than the other children. However, all 14 of these children were able to reach criteria to be included in the cop-cob labeling task.

Glides

This task had the highest rate of attrition among children with CIs of any of the four experimental tasks. For the children with data remaining in the analysis, however, no significant difference between NH and CI groups was found for either thresholds or slopes.

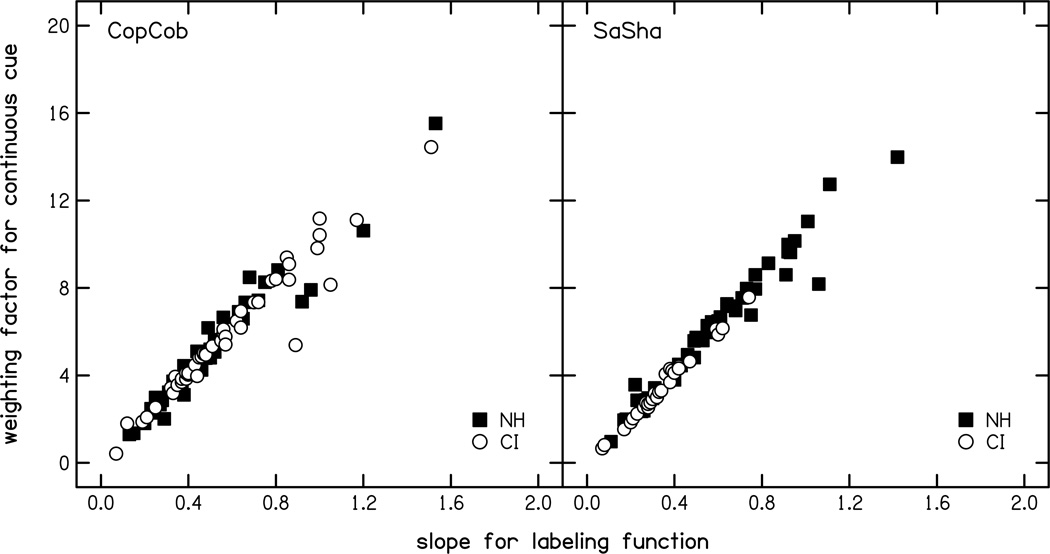

3.4. Relationship of Perceptual Weighting Factors to Auditory Sensitivity

The question posed by the second goal of this study concerned whether sensitivity to the acoustic cues presumed to underlie specific phonemic decisions could explain the perceptual weights assigned to those cues by children with CIs. That question was addressed in several ways.

Children who could/could not meet criteria in the discrimination tasks

The question was asked of whether the perceptual weights assigned to specific acoustic cues in the labeling tasks differed as a function of whether children with CIs could or could not reach criteria to participate in the discrimination tasks. If children failed to reach criteria in those discrimination tasks because of a real lack of sensitivity to the acoustic cue being manipulated, then it would be predicted that they would weight that cue weakly in the labeling task. To investigate that possibility, weighting factors for the duration cue in the cop-cob labeling task were investigated first. A t test was performed comparing weighting factors for that duration cue of children who could and could not reach criteria in the duration discrimination task. No significant difference in weighting factors was observed. That means that the 14 children who could not reach criteria in the duration discrimination task (and all of those children were able to reach criteria in the labeling task) showed similar weights for vowel duration in the labeling task as the children who could do the discrimination task.

Next, weighting factors for offset transitions in the cop-cob labeling task were examined as a function of whether or not children could reach criteria in the glide discrimination task. And again, all children (27 of them) who could not reach criteria in the glide discrimination task were able to reach criteria in the cop-cob labeling task. In addition, 22 of the 24 children who reached criteria in the glide discrimination task were retained in the cop-cob labeling task. However, here a significant difference between those two groups was found in weighting factors for the offset transitions in the labeling task, t(47) = 2.73, p = .009. Children who were not able to reach criteria for glide discrimination had lower weighting factors than those who could: 1.62 (SD = 1.05) for those who could not, compared to 2.44 (SD = 1.04) for those who could.

Finally, weighting factors for onset transitions in the sa-sha labeling task were examined as a function of whether or not children could reach criteria in the glide discrimination task. Although not as directly related as in the case of offset transitions for cop-cob labeling, both tasks nonetheless involve perception of spectral glides. Of the 30 children with CIs who were able to reach criteria to be included in the sa-sha labeling task, exactly half were also able to reach criteria in the glide discrimination task. However, no significant difference in weighting of those onset transitions was found between children who could and could not participate in that discrimination task.

Unlike the cop-cob labeling task, there was a relatively large number of children with CIs who did not reach criteria in the sa-sha labeling task. Therefore, potential differences in children’s performance were also examined in the other direction, to examine whether thresholds or slopes of the discrimination functions were different for children who could and could not do the sa-sha labeling task. Again, no difference in either of those measures derived from the glide discrimination task was observed.

Correlation analyses

Next, correlation analyses were undertaken to examine potential relationships between sensitivity to acoustic cues, as measured in the discrimination tasks, and weighting of those cues in labeling decisions. Pearson product-moment correlation coefficients were computed among the measures of auditory sensitivity derived from the discrimination tasks and weighting factors derived from the labeling tasks. These correlations were most straightforward for the cop-cob stimuli because stimuli in the discrimination task were based on the cop-cob stimuli. Nonetheless, it again made sense to examine the possibility of a relationship between measures from glide discrimination and the weighting factor obtained for onset transitions in the sa-sha labeling task. Thus, threshold and slope for the duration discrimination task were correlated with weighting factors for the duration cue in cop-cob labeling, and threshold and slope for the glide discrimination task were correlated with weighting factors for the transition cues in cop-cob and sa-sha labeling. Each of these six correlation coefficients was derived separately for children with NH and those with CIs in order to determine if different relationships existed for the groups of children. However, only two correlation coefficients were found to be significant: For children with NH, only the correlation coefficient between the slope of the glide discrimination functions and the weighting of onset transitions in the sa-sha labeling task was significant, with r(46) = .31, p = .036. For children with CIs, only the correlation coefficient between the slope of the duration discrimination functions and the weighting of vocalic duration in the cop-cob labeling task was significant, with r(35) = .36, p < .032. These correlation coefficients indicate that the weighting of acoustic cues in labeling decisions was not strongly dependent on how sensitive these children were to the underlying acoustic properties. In particular, a significant correlation coefficient was not found to exist between threshold or slope of the function in the glide discrimination task and the weighting of offset transitions in cop-cob labeling. Given the outcomes of these analyses, it seems fair to conclude that for these particular children, factors other than simple auditory sensitivity contributed to their weighting of acoustic cues in their speech labeling.
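The significance of a Pearson coefficient depends on both its size and the degrees of freedom. As a brief illustration, the sketch below converts each of the two significant coefficients reported above into the equivalent t statistic using the standard relation t = r√df / √(1 − r²); the function name is an assumption for this example, and no p values are computed.

```python
import math

def r_to_t(r, df):
    """t statistic equivalent to a Pearson r with df = N - 2."""
    return r * math.sqrt(df) / math.sqrt(1.0 - r ** 2)

# The two significant coefficients reported in the text
t_nh = r_to_t(0.31, 46)   # NH: glide slope and sa-sha onset transitions
t_ci = r_to_t(0.36, 35)   # CIs: duration slope and cop-cob vocalic duration

print(f"NH:  t(46) = {t_nh:.2f}")
print(f"CIs: t(35) = {t_ci:.2f}")
```

Both values lie only modestly above the two-tailed .05 critical value of approximately 2.0 for these degrees of freedom, which fits the description of these relationships as not strong.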

3.5. Relationship of Weighting Strategies to Phonemic Awareness and Word Recognition

Interest in examining perceptual weighting strategies for acoustic cues in children with CIs stems from two related hypotheses. First, how well one can apply the appropriate (language-specific) weighting strategies for reaching phonemic decisions should be related to how well one can recover phonemic structure in the speech signal. Second, the ability to recover phonemic structure should underlie other language processes, the most basic of which is word recognition. Therefore, the third goal of this study was to explore the data to see whether children’s perceptual weighting strategies were related to their phonemic awareness and word recognition.

To address this goal, each of the weighting factors shown in Table 3 was correlated with scores for each of the three phonemic awareness measures and with word recognition scores. These scores are shown in Table 5 for each group, and t tests revealed significant differences for all scores, with p < .001 in all cases. The correlations were computed with all children in the analysis, and for children with NH and CIs separately. Analyses revealed that the weighting factors derived using the cop-cob stimuli were not correlated with scores on either phonemic awareness or word recognition, for either children with NH or those with CIs. Thus those correlation coefficients are not reported here, but the implications are returned to in the Discussion section.

Table 5.

Means (and SDs) for the phonological awareness and word recognition measures.

| | NH M | NH (SD) | CIs M | CIs (SD) |
|---|---|---|---|---|
| Initial consonant choice | 87 | (13) | 66 | (24) |
| Final consonant choice | 70 | (18) | 38 | (25) |
| Phoneme deletion | 72 | (22) | 50 | (32) |
| Word recognition | 95 | (3) | 70 | (16) |

For the sa-sha labeling task, some significant correlation coefficients were obtained. These are shown in Table 6, for all children included in the analyses together (first two columns), and for children in each group separately (last four columns). The values shown in the top half of the table are correlation coefficients (and p values) of weighting factors for the fricative-noise spectrum and each additional measure; the values in the bottom half of the table are correlation coefficients (and p values) of weighting factors for onset transitions and each additional measure. These correlation coefficients reveal that weighting factors for both the fricative-noise spectrum and formant transitions explained variability in the phonemic awareness and word recognition scores when children in both groups were included in the analyses. However, results were different when correlation analyses were performed on each group separately. In this case, only weighting of the fricative-noise spectrum explained significant amounts of variance for any of the other measures, and only for some of the phonemic awareness tasks. Specifically, weighting of the fricative-noise spectrum explained significant amounts of variance for two of the three phonemic awareness tasks for children with NH. Although these correlation coefficients did not reach statistical significance for children with CIs, they were similar in magnitude, suggesting that the reduced sample size may have constrained the ability to reach statistical significance. Thus, for these children it was only the amount of attention, or weight, that they paid to the spectrum of the fricative noise that was associated with how sensitive they were to phonemic structure in the language that they hear.

Table 6.

Pearson product-moment correlation coefficients and p values obtained for weighting of fricative-noise spectra and formant transitions in sa-sha labeling task and each of three phonemic awareness tasks and word recognition. Precise p values are shown when p < .10.

| | All r | All p | NH r | NH p | CIs r | CIs p |
|---|---|---|---|---|---|---|
| N | 77 | | 47 | | 30 | |
| Fricative-noise spectrum | | | | | | |
| Initial consonant choice | .35 | .002 | .24 | -- | .17 | -- |
| Final consonant choice | .48 | <.001 | .36 | .014 | .32 | .089 |
| Phoneme deletion | .36 | .001 | .31 | .034 | .33 | .081 |
| Word recognition | .43 | <.001 | .14 | -- | .09 | -- |
| Formant transitions | | | | | | |
| Initial consonant choice | .16 | -- | −.09 | -- | .06 | -- |
| Final consonant choice | .27 | .019 | −.04 | -- | .17 | -- |
| Phoneme deletion | .28 | .013 | .27 | .065 | .08 | -- |
| Word recognition | .39 | <.001 | .15 | -- | −.11 | -- |

Group differences

Although within-group correlation coefficients were not especially strong, it seemed reasonable to investigate whether children differed on phonemic awareness and word recognition as a function of whether or not they could perform the sa-sha labeling task. If they did, it might be suggested that those group differences were associated with how well children were able to attend to the acoustic cues to phonemic decisions. Consequently, scores for three groups were examined: children with NH who could meet criteria to be included in the sa-sha labeling task, children with CIs who could meet criteria to be included in the sa-sha labeling task, and children with CIs who could not meet criteria to be included in the sa-sha labeling task. For simplicity's sake, the CI groups are referred to as CIs-yes and CIs-no, respectively. There was not a sufficiently large sample of children with NH who could not meet criteria to form a group. Scores for each group on the measures of phonemic awareness and word recognition are shown in Table 7, and reveal that children with CIs generally performed more poorly than children with NH. In addition, it appears that the children in the CIs-no group had lower scores than the children in the CIs-yes group. Table 8 shows outcomes of one-way ANOVAs performed on each of these measures, along with outcomes of post hoc t tests. Significance levels were corrected for multiple contrasts using Bonferroni adjustments. These results reveal there were significant differences among groups on all four measures. Children with NH performed better than children in the CIs-no group on all measures, and better than children in the CIs-yes group on all but the phoneme deletion task. Children in the CIs-yes group performed better than children in the CIs-no group on all but the final consonant choice task. Thus there is some evidence that at least at the group level there is a relationship between the attention, or weight, that is given to the acoustic structure of the speech signal and sensitivity to phonemic structure.

Table 7.

Means (and SDs) for measures of phonemic awareness and CID word recognition. CIs-yes refers to children with CIs who were able to meet criteria to have their data included in the sa-sha labeling task; CIs-no refers to children with CIs who did not meet those criteria.

| Measure | NH M | NH (SD) | CIs-yes M | CIs-yes (SD) | CIs-no M | CIs-no (SD) |
|---|---|---|---|---|---|---|
| N | 47 | | 30 | | 21 | |
| Initial consonant choice | 88 | (13) | 73 | (19) | 55 | (26) |
| Final consonant choice | 70 | (18) | 41 | (26) | 34 | (24) |
| Phoneme deletion | 72 | (22) | 59 | (27) | 37 | (34) |
| Word recognition | 95 | (3) | 75 | (9) | 62 | (21) |

Table 8.

Outcomes of one-way ANOVAs performed on each measure, along with outcomes of post hoc comparisons using Bonferroni adjustments. Degrees of freedom for all ANOVAs were 2, 95. Precise p values are shown when p < .10.

| Measure | F | p | NH v. CIs-yes | NH v. CIs-no | CIs-yes v. CIs-no |
|---|---|---|---|---|---|
| Initial consonant choice | 23.5 | < .001 | .003 | < .001 | .004 |
| Final consonant choice | 26.5 | < .001 | < .001 | < .001 | -- |
| Phoneme deletion | 12.4 | < .001 | -- | < .001 | .013 |
| Word recognition | 76.4 | < .001 | < .001 | < .001 | < .001 |
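As a rough cross-check on how Tables 7 and 8 relate, the F ratio of a one-way ANOVA can be approximated from group means, SDs, and Ns alone. The sketch below is illustrative only and is not the analysis procedure used in the study. It assumes the SDs in Table 7 are sample SDs (n − 1 denominator), and the names `TABLE_7`, `anova_from_summary`, and `f_table` are hypothetical. Because the tabled means and SDs are rounded, the resulting F values should land near, but not exactly on, those reported in Table 8.

```python
# Summary statistics transcribed from Table 7: (mean, SD, n) for the
# NH, CIs-yes, and CIs-no groups on each measure.
TABLE_7 = {
    "Initial consonant choice": [(88, 13, 47), (73, 19, 30), (55, 26, 21)],
    "Final consonant choice": [(70, 18, 47), (41, 26, 30), (34, 24, 21)],
    "Phoneme deletion": [(72, 22, 47), (59, 27, 30), (37, 34, 21)],
    "Word recognition": [(95, 3, 47), (75, 9, 30), (62, 21, 21)],
}


def anova_from_summary(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA computed
    from per-group (mean, SD, n) triples.

    Assumption: each SD is a sample SD, so (n - 1) * SD**2 recovers the
    within-group sum of squares for that group.
    """
    total_n = sum(n for _, _, n in groups)
    grand_mean = sum(mean * n for mean, _, n in groups) / total_n

    # Between-groups variability: spread of group means around the grand mean.
    ss_between = sum(n * (mean - grand_mean) ** 2 for mean, _, n in groups)
    # Within-groups variability: pooled from the group SDs.
    ss_within = sum((n - 1) * sd ** 2 for _, sd, n in groups)

    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def f_table(table=TABLE_7):
    """Apply anova_from_summary to every measure in the table."""
    return {measure: anova_from_summary(groups) for measure, groups in table.items()}


if __name__ == "__main__":
    for measure, (f_stat, df_b, df_w) in f_table().items():
        print(f"{measure}: F({df_b}, {df_w}) = {f_stat:.1f}")
```

With three groups and 98 children in total, the degrees of freedom come out as 2 and 95, matching the caption of Table 8. The post hoc contrasts and their Bonferroni-adjusted p values are not reproduced here, since they require the t distribution and, ideally, the raw scores.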

3.6. Potential Role of Audiological Factors

Finally, a potential role of audiological factors for any of the dependent measures included in this study was examined for the children with CIs. The 12 dependent measures included in the analyses were thresholds and slopes for discrimination functions (4), weighting factors for speech labeling (4), phonemic awareness (3), and word recognition (1). The five audiological factors examined were age of identification, age of first implant, pre-implant PTAs, aided PTAs, and aided thresholds at 6 kHz. Pearson product-moment correlation coefficients were computed between each dependent measure and each audiological factor. Of the 50 correlation coefficients computed, none was significant.

4. Discussion

The current study had three goals. The primary goal was to quantify perceptual weighting strategies for children with CIs, and see if they differed from those of children with NH. The second goal was to examine factors that would be expected to account for those weighting strategies, namely auditory sensitivity and linguistic experience. The final goal was to investigate the role that weighting strategies play in word recognition and phonemic awareness.

Examining perceptual weighting strategies for children with CIs was considered an important undertaking because these children have access to only degraded spectral signals, which could disrupt the development of mature weighting strategies. Another consideration is that the way perceptual weight is distributed across the various properties of the acoustic speech signal is language specific. That means that all children – those with normal hearing and hearing loss alike – must discover the perceptual weighting strategies used by speaker/listeners of the language they are learning, if they are to become proficient language users themselves. They must learn what acoustic structure, or cues, to direct their perceptual attention to when listening to speech, and learn how to organize that structure in order to recover relevant phonemic form. That learning requires adequate experience hearing a first language.

When it comes to developing appropriate and effective perceptual weighting strategies for speech, children with CIs are surely at a disadvantage, both in terms of the signal quality they receive, and in terms of the opportunities they have to hear their first language. Their sensory deficit and consequent need for a CI mean that only a limited and degraded signal reaches them. It also means that listening environments that are difficult for any listener are even more difficult for them. Consequently, the current study asked whether children with CIs have adequate listening experience, even when the acoustic cues relevant to phonemic decisions are ones that are readily available through their CIs.

To meet the goals established by this study, perceptual weighting strategies used in the labeling of two sets of stimuli were examined. One of these stimulus sets (cop-cob) was selected because there was a high likelihood the relevant acoustic structure was well represented in the signals available to children with CIs. That meant that if a developmental delay in weighting strategies was observed for this stimulus set, an argument could be made for attributing that delay largely to deficits in experience hearing the ambient language. The other set of stimuli used in a labeling task (sa-sha) was one for which the relevant acoustic structure is degraded in the signal available to children with CIs. Thus, by comparing weighting strategies of children with CIs for these stimulus sets to those of children with NH, it might be possible to assess the primary source of delay in learning to process speech signals for these children with CIs: lack of availability of high-quality sensory input or inadequate listening experience. The resolution of that question could help determine where treatment efforts need to be placed.

For the stimulus set predicted to provide relevant signal structure to children with CIs (i.e., cop-cob), weighting factors derived from the labeling task were found to be similar for children with CIs and those with NH. This finding indicates that the children with CIs were able to acquire appropriate weighting strategies for their native language, when the cues were available. Thus they must have had sufficient listening opportunity. Adults (with NH) whose first languages either do not have syllable-final voicing distinctions for obstruents, or do not distinguish those differences based on the length of the preceding vowel, fail to weight vowel duration as strongly as adult speaker/listeners of languages with this distinction (Crowther & Mann, 1994; Flege & Wang, 1989). Clearly these listeners never learned to pay attention to this acoustic cue, even though it is salient in the signals they hear. Furthermore, developmental studies have shown that children with NH younger than the children in this study do not weight vowel duration as strongly as adults in making these voicing decisions (Greenlee, 1980; Nittrouer, 2004; Wardrip-Fruin & Peach, 1984). The children with CIs in the current study obviously acquired the appropriate weighting strategies by eight years of age, so must have had adequate signal quality and opportunity to learn those strategies.

In contrast to outcomes for the cop-cob stimuli, these children with CIs showed reduced weighting for both acoustic cues in the sa-sha stimulus set, which was selected based on the premise that those cues would likely not be accessible. Both of these cues involve variation in broad spectral shape. But even though differences in outcomes across the two labeling tasks suggested that perceptual weighting strategies in speech labeling were explained by how accessible the sensory information was to these children with CIs, evidence for that conclusion was difficult to compile from the discrimination tasks. These latter tasks involved nonspeech stimuli, constructed to vary along the acoustic dimensions relevant to the speech labeling decision. In particular, they were nonspeech analogs of the cop-cob speech stimuli that varied in duration and extent of spectral glides at offset. One of the most intriguing results of this study was that the highest rates of attrition (due to children's failure to recognize even the best exemplars correctly) were found for these discrimination tasks. Even discriminating stimuli that varied in length by 200 ms was unattainable for some children with CIs, in spite of the fact that they were able to recognize and base labeling decisions on stimulus duration. Every child who failed to reach criteria in one or the other discrimination task was able to reach criteria for the cop-cob labeling task. This finding highlights the fact that all perception – regardless of whether it is for speech or nonspeech signals – involves organizing the sensory input to recover some kind of form. Where speech signals are concerned, the form to be recovered in the signal is familiar to children because they have considerable experience with those signals. It may be more difficult for these children to recognize a consistent form (i.e., create a standard category) with nonspeech signals because of their lack of experience with those particular signals.

Nonetheless, some evidence of a relationship between the ability to perform the discrimination task and weighting strategies for speech stimuli was found. In particular, children who were unable to meet criteria in the glide discrimination task – the task with the greatest attrition – had lower weighting factors for offset transitions in the cop-cob labeling task than the children who could meet criteria in the glide discrimination task. Thus there is evidence of general difficulty attending to spectral glides on the part of these children.

On the other hand, only two of six correlation coefficients computed on discrimination and labeling measures were found to be significant: one each for children with NH and children with CIs. From this perspective, auditory sensitivity to the acoustic cues underlying labeling decisions was not found to strongly predict the weight assigned to cues in those decisions. Similar outcomes have been found in the past for children (e.g., Nittrouer, 1996), as well as adults (e.g., Miyawaki et al., 1975). Thus, although salient acoustic structure can help focus attention on a specific cue – as in the case of stimulus length – other factors apparently account for how perceptual attention is directed, as well. These other factors come into play more strongly when the acoustic structure involved is not especially salient. The first factor that seems to influence perceptual attention is simply individual differences: some children could attend to less-salient cues better than other children. Second, decisions in which the categories involved are familiar to listeners facilitate the use of less-salient cues better than decisions with less familiar categories: children were better able to attend to less-salient cues in speech than in nonspeech signals.

The third goal of the current study was to examine the extent to which perceptual weighting strategies can explain children's sensitivity to, or awareness of, phonemic structure in the speech signal. In order to address that goal, measures of phonemic awareness were obtained. Word recognition was also measured because it is presumably related to how well children can recover relevant form from the acoustic speech signal. When children in both groups (NH and CIs) were included, the correlation analyses revealed that weighting of both acoustic cues to the sa-sha contrast was correlated with scores on the phonemic awareness and word recognition tasks. However, when each group was considered separately, significant correlations were observed between only the weight assigned to fricative-noise spectra and two of the three phonemic awareness tasks. That pattern of results might be dismissed as indicating only that children with CIs are poorer at all speech and language tasks than children with NH (so performed more poorly on both the labeling and phonemic awareness tasks), but differences between children with CIs who could and could not do the sa-sha labeling task provided useful insights, as well. In particular, children with CIs who paid so little attention to the acoustic cues present in these sa-sha stimuli that they were unable to do the labeling task at all had poorer group means on two of the three phonemic awareness tasks and on word recognition than children with CIs who could meet criteria on the sa-sha labeling task. That outcome supports suggestions of a relationship between weighting strategies and other language skills.

4.1. Conclusions