Abstract

Objective

To analyze the impact of three primary care practice transformation program models on performance: Meaningful Use (MU), Patient-Centered Medical Home (PCMH), and a pay-for-performance program (eHearts).

Data Sources/Study Setting

Data for seven quality measures (QM) were retrospectively collected from 192 small primary care practices between October 2009 and October 2012; practice demographics and program participation status were extracted from in-house data.

Study Design

Bivariate analyses were conducted to measure the impact of individual programs, and a Generalized Estimating Equation model was built to test the impact of each program alongside the others.

Data Collection/Extraction Methods

Monthly data were extracted via a structured query data network and were compared to program participation status, adjusting for variables including practice size and patient volume. Seven QMs were analyzed related to smoking prevention, blood pressure control, BMI, diabetes, and antithrombotic therapy.

Principal Findings

In bivariate analysis, MU practices tended to perform better on process measures, PCMH practices on more complex process measures, and eHearts practices on measures for which they were incentivized; in multivariate analysis, PCMH recognition was associated with better performance on more QMs than any other program.

Conclusions

Results suggest each of the programs can positively impact performance. In our data, PCMH appears to have the most positive impact.

Keywords: Quality measurement, primary care, meaningful use, PCMH, pay-for-performance

The Institute of Medicine's 2001 report “Crossing the Quality Chasm” (National Research Council 2001) highlighted several key recommendations for changing the U.S. health system to address patient safety issues and improve the quality of health care. Recommendations included reengineering care processes, effectively using information technologies, developing effective care teams, and coordinating care across patient conditions, services, and sites of care over time. Since the seminal report, several programs, both nationally and locally, have been implemented to help strengthen and transform primary care. Though many recommendations came from successes observed in large integrated health systems, such as Kaiser, few have been demonstrated in independently owned practices.

In 2010, the Centers for Medicare & Medicaid Services implemented a multiyear program to incentivize providers to meaningfully use (MU) electronic health records (EHR) and, in later stages, health information systems, to pave the way for increased information exchange and care coordination across the health care system. Participants received incentives starting in March of 2011 with a maximum payment of $44,000 over 5 years for the Medicare incentive program and up to $63,750 for the Medicaid incentive program. In the first stage of the MU program, providers were expected to meet 20 measures related to use of the EHR and report on seven clinical quality measures. These incentives have spurred the adoption and use of EHR nationally, doubling the use of health information technology since 2012. By April 2013, nearly 300,000 eligible professionals and over 3,800 eligible hospitals had received incentive payments, representing more than half of physicians and other eligible professionals in the United States (NYC REACH website 2013). Recognizing that few independent practices would have the resources or knowledge base to adopt and utilize health IT, the Office of the National Coordinator established regional extension centers to deliver technical assistance and support for adoption and use of EHRs.

Separately, several states have implemented programs to incentivize practice transformation into a Patient-Centered Medical Home (PCMH) (Takach 2012). Formalized as a framework in 2007, with continued ongoing refinements, PCMH is a program where practices emphasize enhanced care through proactive communication between patients, providers, and staff; and through systematic use of disease registries, information technology, health information exchange, and other means to ensure patients obtain the proper care in a culturally appropriate manner (Berenson, Devers, and Burton 2011). To spur primary care transformation, New York State established a Medicaid incentive program in 2010 to reward Medicaid providers achieving PCMH recognition through the National Committee for Quality Assurance (NCQA). Incentive payments from Medicaid managed care plans ranged from $2 to $6 per member per month; incentive payments from Medicaid fee-for-service (FFS) ranged from $5.50 to $16.75 per office visit for Article 28 settings, and $7.00 to $21.25 per office visit for office-based settings (New York State Department of Health 2013). To date, New York State has paid nearly $148 million in incentives, with an estimated 38 percent statewide penetration of Medicaid members accessing a medical home (New York State Department of Health 2013). Research on the PCMH model suggests it may be associated with meaningful savings in terms of utilization as well as cost (Reid et al. 2010); however, a majority of practices have yet to achieve PCMH recognition, as practice transformation and completion of the NCQA application require considerable investments of time and financial resources, especially for small practices (Rittenhouse et al. 2011).

Alongside federal and state programs, local municipalities have begun to pioneer initiatives aimed at positively transforming the quality of primary care. In 2005, the New York City Department of Health and Mental Hygiene, recognizing the need for public health to become further integrated with the health system, established the Bureau of Primary Care Information Project (PCIP). PCIP's mission is to improve population health by helping primary care providers use information systems to improve delivery of prevention-oriented care (Mostashari, Tripathi, and Kendall 2009). PCIP also serves as New York City's regional extension center, assisting providers in the adoption and implementation of EHR systems (HealthIT.gov 2013). As of April 2014, PCIP provides support to approximately 14,000 providers in over 1,300 practices.

In addition to assisting interested providers with MU and PCMH programs and providing EHR system quality improvement (QI) support to practices, PCIP also piloted a pay-for-performance (P4P) program—Health eHearts (eHearts)—with small practices between April 2009 and September 2011. The Affordable Care Act has encouraged novel design of P4P programs as a means of exploring effective alternatives to the prevailing fee-for-service payment structure (Health Affairs 2012). eHearts focused specifically on improving cardiovascular clinical preventive services. Providers received payments of $20–$100 for each performance target that was met, with providers receiving higher payments for targets that were more difficult to achieve and patients who were more complex to treat (Bardach et al. 2013).

As multiple stakeholders seek to identify programs that foster improving health care quality, New York City provides a unique opportunity to study the impact of three practice transformation programs, with a special focus on small practices. In this study, we assessed the performance by small practices on selected clinical quality measures (QMs) and the potential effects of three programs: MU, PCMH, and eHearts.

Methods

Practice Selection

We restricted this study to small practices located in New York City with 10 or fewer providers or FTEs that had adopted eClinicalWorks EHR software at least 3 months prior to October 2009. Practices were excluded if quality measurement data were not available in October 2009 (T1) and October 2012 (T2). Data related to practice characteristics and program participation were obtained from PCIP's customer relationship management database, SalesForce. Program participation was treated as a dichotomous variable. Practices were categorized as PCMH “yes” if they had successfully completed the PCMH certification process by the time of data extraction and PCMH “no” if they had not; as MU “yes” if they had successfully attested to Stage 1 of Meaningful Use by the time of data extraction and MU “no” if they had not; and as eHearts “yes” if they had been randomized to the Health eHearts intervention arm (i.e., received financial incentives) and “no” if they had either been randomized to the control group, or if they had not participated in the program at all.
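The categorization rules above can be sketched in a few lines of Python. This is only an illustration: the `Practice` record, its field names, and both helper functions are hypothetical and do not reflect PCIP's actual database schema. The group labels mirror the column headings used in Table 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Practice:
    """Hypothetical practice record; field names are illustrative only."""
    pcmh_recognized: bool  # completed PCMH recognition by data extraction
    mu_attested: bool      # attested to Stage 1 Meaningful Use by data extraction
    ehearts_arm: str       # "intervention", "control", or "none"


def program_flags(p: Practice) -> dict:
    """Return the three dichotomous participation variables."""
    return {
        "PCMH": p.pcmh_recognized,
        "MU": p.mu_attested,
        # Control-arm practices and non-enrolled practices both count as "no".
        "eHearts": p.ehearts_arm == "intervention",
    }


def participation_group(p: Practice) -> str:
    """Collapse the three flags into one mutually exclusive group label."""
    active = [name for name, on in program_flags(p).items() if on]
    if not active:
        return "No Program"
    if len(active) >= 2:
        return "Two or More Programs"
    return f"{active[0]} Only"


if __name__ == "__main__":
    example = Practice(pcmh_recognized=False, mu_attested=True, ehearts_arm="control")
    print(program_flags(example), participation_group(example))
```

Note that a practice randomized to the eHearts control arm is treated exactly like a practice that never enrolled, which is the design choice described in the paragraph above.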

Measure Selection

Data used in this analysis were derived from practice EHRs that automatically transmitted QM data on a monthly basis to PCIP. Seven key QMs were included in the analytical dataset: smoking status recorded, smoking cessation intervention, blood pressure (BP) control for patients with hypertension, body mass index (BMI) recorded, hemoglobin A1C (HbA1c) testing, HbA1c control, and antithrombotic therapy for those with ischemic vascular disease (IVD) or diabetes. Clinical data from practices' EHR systems were used to generate the QMs. These measures are similar to measures endorsed by the National Quality Forum (n.d.). Table S1 shows detailed descriptions of the numerators and denominators for each QM included in the study. Additional practice and provider variables included were number of unique patients seen per month; number of providers; number of practice sites; months using EHR as of October 2012 (T2); months receiving provider-level feedback reports (dashboards); and number of QI visits as of October 2012 (T2).

Analysis

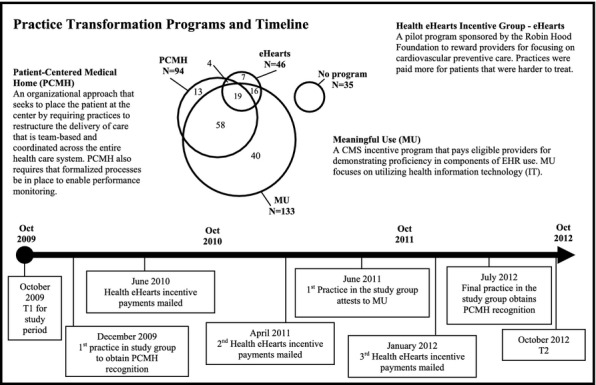

All statistical analyses were conducted using SAS version 9.2 (SAS Institute Inc., Cary, NC, USA). Comparisons between groups of practices, time points, or trends were considered significant if the observed p-value for a two-tailed statistical test was less than 0.05. Practice characteristics were based on available data as of October 2012 (T2). We used t-tests to compare characteristics between participants and nonparticipants in each of the different programs, as well as ANOVA tests to compare characteristics among practices participating in one, two or more, or no programs (Table 1). In this analysis, a practice could have participated in more than one program, and some participated in all three (Figure 1).

Table 1.

Practice Characteristics as of October 2012 (T2)

| Mean practice characteristics, by participation across all programs | MU Only (n = 40) | eHearts Only (n = 7) | PCMH Only (n = 13) | Two or More Programs (n = 97) | No Program (n = 35) | All (n = 192) |
|---|---|---|---|---|---|---|
| Number of sites* | 1.38 | 1.00 | 1.85 | 1.13 | 1.31 | 1.26 |
| Number of monthly patients | 662.88 | 355.86 | 1,079.08 | 740.27 | 559.23 | 700.07 |
| Number of providers | 2.25 | 1.29 | 4.62 | 2.89 | 2.48 | 2.75 |
| QI visits per provider | 9.33 | 12.43 | 9.00 | 10.15 | 11.54 | 10.20 |
| Months using EHR | 45.59 | 53.53 | 45.55 | 47.44 | 45.93 | 47.05 |
| Months receiving dashboard** | 20.31 | 21.17 | 19.13 | 19.92 | 15.03 | 19.10 |

| Programs | n | Mean | SD |
|---|---|---|---|
| Months since PCMH recognition | 94 | 15.38 | 8.48 |
| Months since MU signup | 133 | 22.58 | 5.60 |
| Months with Health eHearts incentives | 46 | 20.01 | 5.69 |

| Geographic distribution | n | % |
|---|---|---|
| Bronx | 23 | 12 |
| Brooklyn | 63 | 33 |
| Manhattan | 53 | 28 |
| Queens | 45 | 23 |
| Staten Island | 8 | 4 |

| Quality measure performance | n | Mean | Improvement (October 2009 to October 2012) |
|---|---|---|---|
| Body mass index | 191 | 0.87 | 0.16** |
| Smoking status recorded | 191 | 0.74 | 0.25** |
| Smoking cessation intervention | 130 | 0.34 | 0.10** |
| Antithrombotic therapy | 132 | 0.68 | 0.18** |
| HbA1c testing | 138 | 0.51 | 0.20** |
| HbA1c control | 156 | 0.40 | 0.27** |
| BP control with HTN | 136 | 0.65 | 0.11** |

| Mean practice characteristics, by participation in individual programs | MU: No (n = 59) | MU: Yes (n = 133) | eHearts: No (n = 146) | eHearts: Yes (n = 46) | PCMH: No (n = 98) | PCMH: Yes (n = 94) |
|---|---|---|---|---|---|---|
| Number of sites | 1.37 | 1.21 | 1.30 | 1.13 | 1.30 | 1.22 |
| Number of patients | 672.60 | 712.30 | 752.90 | 532.50 | 587.90 | 817.00* |
| Number of providers | 2.92 | 2.68 | 3.00 | 1.98 | 2.21 | 3.28 |
| QI visits per provider | 10.74 | 10.00 | 9.11 | 13.45* | 11.74 | 8.73 |
| Months using EHR | 46.97 | 46.83 | 46.22 | 48.95* | 46.83 | 46.91 |
| Months receiving dashboard | 17.01 | 20.03** | 18.43 | 21.22* | 18.67 | 19.55 |

Note. *p < .05; **p < .001.

Figure 1.

Program Descriptions

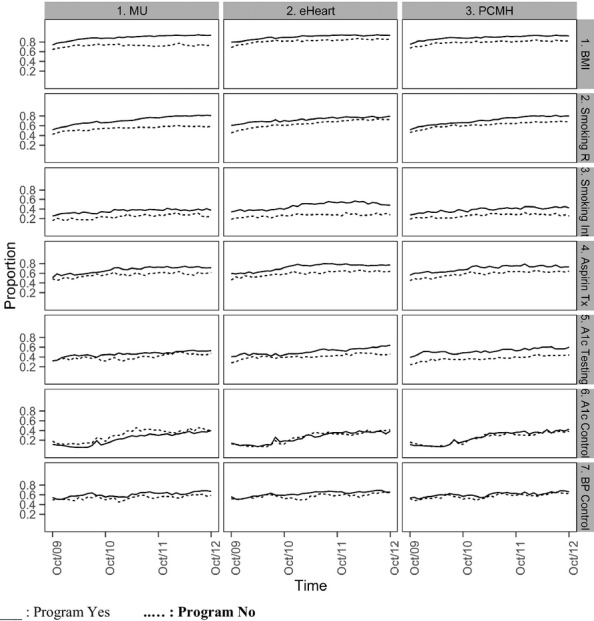

Trend graphs of average monthly practice rates were generated for each quality measure and each program, stratified by participation (Figure 2). We used t-tests to compare practice-level performance rates on each of the seven quality measures between T1 and T2 (Table 1); and by incentive program during each time period (Table S2). To assess the impact of participating in each incentive program, we used Generalized Estimating Equation (GEE) models to estimate the probability of eligible patients meeting numerator criteria for each QM in T2, adjusting for practice cluster effects and the patient volume at each practice. The independent variables we included in the GEE models were participation in each of the three programs, their interactions, and the practice characteristics; practices with “no program” served as the referent. Odds ratios generated from the GEE model are shown in Table 2.
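One way to write down the model described above is sketched here. The notation is introduced for illustration and is not taken from the source: $Y_{ij}$ indicates whether eligible patient $j$ at practice $i$ meets the numerator criteria for a given QM at T2, $P_{ik}$ is the dichotomous participation indicator for program $k$, and $\mathbf{x}_i$ collects the practice characteristics. A logit link is assumed because the results are reported as odds ratios; the working correlation structure is not stated in the source and is left unspecified.

$$
\operatorname{logit}\,\Pr(Y_{ij}=1) \;=\; \beta_0 \;+\; \sum_{k} \beta_k P_{ik} \;+\; \sum_{k<l} \delta_{kl}\, P_{ik} P_{il} \;+\; \boldsymbol{\gamma}^{\top}\mathbf{x}_i,
\qquad k,\,l \in \{\mathrm{MU},\ \mathrm{eHearts},\ \mathrm{PCMH}\}
$$

Under this sketch, in a model without interaction terms the odds ratio for program $k$ relative to nonparticipation is $\mathrm{OR}_k = e^{\beta_k}$, and the GEE accounts for the clustering of patients within practice $i$.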

Figure 2.

Quality Measure Trend by Program

Notes. BMI = body mass index; Smoking R = smoking status recorded; Smoking Int = Smoking Cessation Intervention; Aspirin Tx = Antithrombotic Therapy; A1c = Hemoglobin A1c; BP = blood pressure.

Table 2.

GEE Model Results: Odds Ratio (95% Confidence Interval)

| Quality measure | MU | eHearts | PCMH |
|---|---|---|---|
| Body mass index recorded | 7.91 (2.37, 26.39) | 3.03 (0.89, 10.30) | 0.85 (0.25, 2.90) |
| Smoking status recorded | 1.21 (0.53, 2.74) | 1.42 (0.72, 2.79) | 3.19 (1.55, 6.54) |
| Smoking cessation intervention | 1.55 (0.67, 3.61) | 1.81 (1.03, 3.18) | 1.70 (1.01, 2.87) |
| Antithrombotic therapy | 1.47 (0.87, 2.48) | 1.57 (1.04, 2.38) | 1.49 (0.89, 2.47) |
| Hemoglobin A1c testing | 0.79 (0.44, 1.42) | 1.81 (1.05, 3.10) | 1.79 (1.002, 3.18) |
| Hemoglobin A1c control | 0.99 (0.97, 1.01) | 1.12 (0.71, 1.78) | 1.88 (1.09, 3.26) |
| Blood pressure control | 1.11 (0.88, 1.41) | 0.98 (0.79, 1.22) | 0.95 (0.79, 1.15) |

Results

Of 660 practices sending regular data transmissions to PCIP through the eClinicalWorks system, a total of 192 practices met inclusion criteria for this study. Table 1 shows practice characteristics at T2. Of the 192 practices included in the analysis, 53.8 percent were solo practitioners; 49.0 percent had achieved PCMH recognition; 69.3 percent had achieved MU Stage 1; and 24.0 percent had received financial incentives through eHearts. On average, practices had been using an EHR for 47.1 months, had received dashboards for 19.1 months, had held PCMH recognition for 15.4 months, and had been participating in MU for 22.5 months. On average, practices saw 700.1 patients per month; had 2.7 providers; and received 10.2 total PCIP QI staff visits.

Table 1 also shows practice characteristics broken out by program participation. PCMH-recognized practices had a larger number of unique patients per month (817) versus nonrecognized practices (588), and also more providers per practice (3.3 vs. 2.2). Compared to nonparticipants, practices that participated in the eHearts incentive program received a greater number of QI visits (13.5 vs. 9.1) and had used their EHRs and received feedback dashboards for an average of 3 months longer. Finally, practices that participated in the MU program had an average of 3 more months of exposure to dashboards. There were no differences between participants and nonparticipants for the remaining characteristics.

Table 1 also shows mean QM performance by time period. From T1 to T2, performance on all QMs improved significantly. Performance on smoking status recorded changed from 48.8 percent to 74.2 percent; smoking cessation intervention from 23.1 to 33.6 percent; BP control among patients with hypertension from 53.1 to 64.6 percent; BMI recorded from 71.0 to 87.2 percent; HbA1c testing from 31.7 to 51.4 percent; HbA1c control from 13.5 to 40.5 percent; and antithrombotic therapy from 50.1 to 68.2 percent.
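As a quick arithmetic check, the T1 and T2 rates quoted above can be turned into percentage-point improvements and compared with the improvement column of Table 1. The snippet below is a minimal sketch: the dictionary simply restates the figures from the text, and the names `RATES` and `improvement_points` are made up for this example.

```python
# Mean practice-level rates (percent) at T1 (October 2009) and T2 (October 2012),
# restated from the paragraph above.
RATES = {
    "Smoking status recorded": (48.8, 74.2),
    "Smoking cessation intervention": (23.1, 33.6),
    "BP control with HTN": (53.1, 64.6),
    "Body mass index": (71.0, 87.2),
    "HbA1c testing": (31.7, 51.4),
    "HbA1c control": (13.5, 40.5),
    "Antithrombotic therapy": (50.1, 68.2),
}


def improvement_points(t1: float, t2: float) -> float:
    """Absolute change in percentage points, rounded to one decimal place."""
    return round(t2 - t1, 1)


improvements = {name: improvement_points(t1, t2) for name, (t1, t2) in RATES.items()}

if __name__ == "__main__":
    # List measures from largest to smallest absolute gain.
    for name, delta in sorted(improvements.items(), key=lambda kv: -kv[1]):
        print(f"{name}: +{delta:.1f} percentage points")
```

The differences agree with the Table 1 improvement column to within one percentage point; small gaps are expected because the table was presumably computed from unrounded rates.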

Figure 1 shows detailed descriptions of the three programs and the distribution of practice participation in the programs. Approximately half of practices participated in more than one of the programs of interest. The largest participation overlap was in PCMH and MU, with 58 practices participating in both programs (but not in eHearts); 19 practices participated in all three programs, and 35 participated in none of them.

Figure 2 shows the trend for monthly practice-level average performance rates for all QMs from October 2009 through October 2012, by program. Table S2 provides further detail on rates at T1 and T2. During T1, there were few significant differences in QM performance between practices that did and did not participate in at least one program, but by T2, participating practices performed significantly better than nonparticipators on all measures except BP control and HbA1c control. Breaking out by specific program, PCMH-recognized practices performed significantly better on smoking cessation intervention, HbA1c testing, and antithrombotic therapy in both time periods, and also outperformed nonrecognized practices on smoking status and BMI recorded in T2. In T1, eHearts participants outperformed nonparticipants on all measures except BP control and HbA1c control, and this pattern continued in T2. MU participants performed significantly better than nonparticipants on smoking cessation intervention in T1, and similar to eHearts participants, by T2 significantly outperformed nonparticipants on all QMs except the two control measures.

As a supplement to the trends in overall QM performance rates shown in Table 1, Table S3 shows trends in the size of QM numerators and denominators. Across all practices, the mean size of numerators and denominators increased significantly between T1 and T2. As shown in Table 1, QM rates also increased significantly over time, which indicates that numerators increased more than denominators and suggests both better documentation and improved performance over time.
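The inference in the paragraph above can be made explicit with a short derivation. The symbols $N_t$, $D_t$, and $r_t$ (numerator, denominator, and rate at time $t$) are notation introduced here for illustration, and the sketch treats a rate as a simple ratio, which only approximates a mean of practice-level rates.

$$
r_t = \frac{N_t}{D_t}, \qquad
r_2 > r_1 \;\iff\; \frac{N_2}{D_2} > \frac{N_1}{D_1} \;\iff\; \frac{N_2}{N_1} > \frac{D_2}{D_1}
\qquad (N_1,\ D_1,\ D_2 > 0)
$$

So when denominators grow ($D_2 / D_1 > 1$) and the rate still rises, the numerator must have grown by a larger factor than the denominator.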

Table 2 shows the GEE model results with the odds ratios (OR) and 95 percent confidence intervals (CI) for the three programs. None of the program interaction terms was statistically significant, likely because of their small sample sizes, so we kept only the main program effects in the model. We used different combinations of the practice characteristic variables in the model and found that none of them were significant. In the adjusted model, patients receiving care at PCMH-recognized practices were significantly more likely than patients receiving care at nonrecognized practices to have a recorded smoking status (OR = 3.19; CI = 1.55–6.54), receive a smoking cessation intervention (OR = 1.70; CI = 1.01–2.87), and have their HbA1c tested (OR = 1.79; CI = 1.002–3.18) and under control (OR = 1.88; CI = 1.09–3.26). Compared to non-eHearts practices, patients seeking care at eHearts incentivized practices were more likely to receive a smoking cessation intervention (OR = 1.81; CI = 1.03–3.18), have their HbA1c tested (OR = 1.81; CI = 1.05–3.10), and receive antithrombotic therapy (OR = 1.57; CI = 1.04–2.38). Finally, patients seeking care at MU practices were more likely to have a BMI recorded (OR = 7.91; CI = 2.37–26.39) compared to patients seeking care at non-MU practices. No single program was significantly associated with improving BP control for patients with hypertension.
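Reported odds ratios and intervals can be sanity-checked against each other. The sketch below assumes the intervals are Wald-type intervals that are symmetric on the log-odds scale (the source does not say how they were computed); under that assumption the OR should sit near the geometric mean of its bounds. The function names and the tolerance are choices made for this example, and the values are restated from Table 2.

```python
import math


def log_scale_midpoint(lower: float, upper: float) -> float:
    """Geometric mean of the CI bounds (midpoint on the log-odds scale)."""
    return math.sqrt(lower * upper)


def is_consistent(or_value: float, lower: float, upper: float, tol: float = 0.05) -> bool:
    """True if the OR lies within `tol` of the log-scale midpoint of its CI."""
    return abs(log_scale_midpoint(lower, upper) - or_value) <= tol


def is_significant(lower: float, upper: float) -> bool:
    """A 95% CI that excludes 1 corresponds to p < 0.05 for the OR."""
    return lower > 1.0 or upper < 1.0


# (program, measure) -> (OR, lower bound, upper bound), restated from Table 2.
TABLE2 = {
    ("MU", "Body mass index recorded"): (7.91, 2.37, 26.39),
    ("PCMH", "Smoking status recorded"): (3.19, 1.55, 6.54),
    ("PCMH", "Smoking cessation intervention"): (1.70, 1.01, 2.87),
    ("PCMH", "Hemoglobin A1c testing"): (1.79, 1.002, 3.18),
    ("PCMH", "Hemoglobin A1c control"): (1.88, 1.09, 3.26),
    ("eHearts", "Smoking cessation intervention"): (1.81, 1.03, 3.18),
    ("eHearts", "Hemoglobin A1c testing"): (1.81, 1.05, 3.10),
    ("eHearts", "Antithrombotic therapy"): (1.57, 1.04, 2.38),
    ("MU", "Blood pressure control"): (1.11, 0.88, 1.41),
}

if __name__ == "__main__":
    for (program, measure), (or_value, lo, hi) in TABLE2.items():
        print(program, measure,
              "consistent" if is_consistent(or_value, lo, hi) else "check",
              "significant" if is_significant(lo, hi) else "not significant")
```

A check like this cannot confirm that an estimate is correct, but it flags transcription slips where a quoted OR falls far from the center of its own interval.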

Discussion

In this paper, we compared the effect of participating in three practice transformation programs, MU, eHearts, and PCMH, on performance on clinical quality measures. Overall, performance on all QMs improved over time, which is consistent with our previous studies (Shih et al. 2011; Wang et al. 2013). All three programs were associated with improvements in performance. Practices that did not participate in any of the programs had lower initial performance and smaller overall gains in performance on the quality measures.

In the bivariate analysis of individual program impact, we observed that at T1, PCMH-recognized practices already performed significantly better on process measures (i.e., measures that reflect the delivery of provider services) related to delivering smoking cessation interventions, testing diabetic patients' HbA1c, and appropriately prescribing antithrombotic therapy. By the end of the study, they were also performing significantly better than nonrecognized practices on simpler process measures that only involve a single click or point of data entry in the EHR, namely, BMI and smoking status recorded. The only measures for which PCMH-recognized and nonrecognized practices did not differ in performance were BP control in hypertensive patients and HbA1c control in diabetics. Of the measures we evaluated, these were the only “control” measures, that is, measures dependent on patient health status (Agency for Healthcare Research and Quality 2014). However, participating practices may have been at a disadvantage on these control measures as they are specified. For example, participating practices performed better than nonparticipating practices on the HbA1c testing measure, which means that they identified more patients in need of control, and thereby increased the pool of patients eligible for the HbA1c control measure. This would also be true for the BP control measure if participating practices had more patients with a baseline BP recorded.

Similar to what we observed with PCMH-recognized practices, compared with nonparticipants, eHearts practices performed significantly better at T1 on all process measures, but not on control measures. By T2, eHearts practices still outperformed non-eHearts practices on all process measures and made significantly better improvement than nonparticipants on the process measures that were incentivized in the eHearts program (i.e., smoking cessation intervention and antithrombotic therapy). MU participants and nonparticipants were more similar to each other at T1. By T2, MU-attesting practices performed better on all but one of the process measures, and better on BP control.

In the bivariate analysis, there was a relatively consistent mapping between program purpose and the type of measure on which practices improved; for instance, eHearts practices tended to improve more on measures directly incentivized by eHearts. In the multivariate analysis, we observed less clear patterns. However, in terms of the sheer number of measures, PCMH recognition was associated with better performance by the end of the study on more measures than any other program. Notably, in the final model, none of the programs was significantly associated with improving BP control for patients with hypertension. However, as previously discussed, the model does not measure what percentage of patients at each practice has a diagnosis of hypertension or diabetes.

We observed significant increases in screening measures and the documentation of risk factors. Clinical processes have been shown to serve as an important first step in the prevention of adverse outcomes through increased awareness of patient panel risk factors. For instance, Ketola and colleagues observed that a quality improvement program focused on increasing documentation of cardiovascular risk factors resulted in short-term and sustained improvements in blood glucose and cholesterol levels among patient panels (Ketola et al. 2000). Other studies have also observed slower improvement on quality metrics that depend on patient outcomes, particularly for persistent and pervasive conditions like hypertension and diabetes. A study published recently by Kaiser Permanente to demonstrate improvements in blood pressure control spanned 8 years (Jaffe et al. 2013). The 3-year trend data in this analysis suggest movement in the right direction on the outcomes-based measures (HbA1c control and BP control). Even with these results, we acknowledge that the relationship between documentation, clinical performance, and outcomes is a complex one. For example, Glickman and colleagues noted that despite improvements in performance by hospitals on individual quality measures, such as aspirin therapy and smoking cessation counseling, there was no change in in-hospital mortality from acute myocardial infarction (Glickman et al. 2007). Campbell et al. (2007) acknowledge that quality improvement programs dependent on electronic reporting are often criticized for improving reporting without the true assurance of improving care quality, but also note that developers of quality metrics judge both recording of processes and the delivery of processes themselves to be essential components of care improvement.

Providers in this study serve approximately 1.5 million New York City residents; as such, our results could translate into substantial impact in our community. For instance, as shown in Table 1, between T1 and T2, the overall mean practice-level performance rate for smoking cessation intervention improved by 10 percentage points. This translates to approximately 1,000 additional smokers receiving medications or counseling to quit smoking (Table S3).

If these study findings were to be used to inform decisions by policy makers as to which programs to further support, PCMH appears to be a strong contender. The New York State legislative report indicates an average of $17,000 was paid to participating providers in the PCMH incentive program with dramatic results for increasing key quality metrics (New York State Department of Health 2013). In addition, evidence from medical home pilots from across the country indicates that PCMH processes are associated with lower hospitalizations for ambulatory care-sensitive conditions (Hebert et al. 2014) and lower total costs to the health system (Nielsen et al. 2012). However, system-wide cost-savings do not translate to savings in the provider community. Rather, recent studies indicate that running a successful PCMH requires additional staff with specific training and expertise (Patel et al. 2013) and that higher medical home ratings are associated with higher practice operating costs (Nocon et al. 2012). Additional resources are also needed to transform a practice to become PCMH-recognized, though we were not able to locate any publications that quantify this. These costs are perhaps one of the reasons that fewer practices in this study group have successfully transformed and qualified for PCMH recognition, mirroring small practice trends nationwide (Rittenhouse et al. 2011). Further research is needed to understand the time and cost burdens for practice transformation to qualify for and maintain PCMH recognition, and whether shared savings and other alternative payment models can drive more small practice providers to adopt PCMH processes.

Practices participating in the incentive pilot eHearts also demonstrated statistically significant increases in QMs, but the cost of the program was substantially more than the average incentive payments by New York State Medicaid. Practices in eHearts were eligible to receive up to $100,000 in incentive payments per year, and also received significant onsite practice support and personalized quality feedback dashboards. As such, the eHearts program may not be as economical if the only practice change is improvement in QM performance. A review of P4P systematic reviews supports the idea that the P4P model can be effective as an instrument of quality improvement (Eijkenaar et al. 2013); however, depending on program design, benefits may be short term; may have unintended consequences, including discouraging the provision of nonincentivized services; and may not be uniformly impactful (Glickman et al. 2009; Roland and Campbell 2014).

Though the MU program was the most broadly adopted and implemented across all the practices, it was associated with better performance on only one measure, BMI recorded. However, policy makers should not discount the value of the MU program because it focuses on improving documentation of key clinical processes, which is often the first and a necessary step in practice transformation (Parsons et al. 2012). In addition, wide participation may not be motivated by the incentives alone; providers may fear the threat of penalties beginning in 2015 for those not achieving Stage 1 of MU.

There are a few limitations to our study. All practices were using the eClinicalWorks software, as this vendor collaborated with PCIP to develop the automated data transmission process for QMs; results and observations may not be generalizable to other practices using different EHR systems. In addition, the data included in this study to characterize practices were limited to easily measurable elements such as patient volume and EHR adoption timelines. We were unable to capture other characteristics, such as provider motivation and practice organizational structure and culture, which have been linked to a practice's decision to become PCMH-recognized (Meyer 2010; Howard et al. 2011). As such, and consistent with previous results, we do not know whether the programs helped to drive real improvements in performance or if better performing practices simply chose to participate in these programs more often than poorer performers (Wang et al. 2014).

Furthermore, we only explored a limited set of quality measures over a 3-year period that we know our eClinicalWorks practices tend to document reliably based on previous analyses (Parsons et al. 2012). We do not know if the patterns we found will be consistent across different measures or over the long term. Moreover, we received our data in the form of counts of patients aggregated to the physician level, which did not allow us to capture incremental changes in individual patients' health or to analyze the stability of patient panels at individual practices. In addition, our data do not allow us to truly distinguish between better documentation and true increases in performance on quality measures, although results from Table S3 (i.e., higher QM performance rates in T2 coupled with increases in denominator size) suggest that both quality of documentation and preventive service delivery are increasing. Further on-the-ground analysis is required to validate that services documented in the EHR actually reflect those delivered at the point of care.

While our models allowed us to explore the effect of an individual program while controlling for participation in other programs, we were unable to systematically explore interactions of programs because we lacked sufficient power to detect whether participation in multiple programs simultaneously would lead to further improvement in QM performance. In the future, we hope to test multivariate models with additional quality measures and covariates to understand which program or combination of programs is most likely to yield greater improvement.

This study provides a unique side-by-side comparison of three different practice transformation programs for small primary care practices. Two of these programs, meaningful use and PCMH, have achieved prominence nationally; the third, eHearts, is modeled on a widely recognized transformation framework related to payment reform. As federal and state programs continue to redesign and incentivize practitioners for providing comprehensive primary care, studies are needed to understand which approaches are most effective for driving improvements in practices serving medically underserved communities.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: The authors wish to acknowledge Katherine Zhu for her assistance with the literature review, and fellow PCIP staff who have spent countless hours providing technical support to providers participating in practice transformation programs. This work was partially funded through New York City Tax Levy and the Robin Hood Foundation.

Disclosures: None.

Disclaimers: None.

Supporting Information

Additional supporting information may be found in the online version of this article:

Author Matrix.

Table S1. Description of Quality Measures.

Table S2. Quality Measure Trends by Programs.

Table S3. Trends in Size of QM Numerators and Denominators.

References

- Agency for Healthcare Research and Quality. 2014. “Selecting Health Outcome Measures for Clinical Quality Measurement” [accessed on April 1, 2014]. Available at http://www.qualitymeasures.ahrq.gov/tutorial/HealthOutcomeMeasure.aspx.

- Bardach NS, Wang JJ, De Leon SF, Shih SC, Boscardin J, Goldman E, Dudley A. Effect of Pay-for-Performance Incentives on Quality of Care in Small Practices with Electronic Health Records: A Randomized Trial. Journal of the American Medical Association. 2013;310(10):1051–9. doi: 10.1001/jama.2013.277353.

- Berenson RA, Devers KJ, Burton RA. Urban Institute. 2011. “Will the Patient-Centered Medical Home Transform the Delivery of Health Care?” [accessed on May 15, 2012]. Available at http://www.urban.org/UploadedPDF/412373-will-patient-centered-medical-home-transform-delivery-healthcare.pdf.

- Campbell S, Reeves D, Kontopantelis E, Middleton E, Sibbald B, Roland M. Quality of Primary Care in England with the Introduction of Pay for Performance. New England Journal of Medicine. 2007;357(2):181–90. doi: 10.1056/NEJMsr065990.

- Eijkenaar F, Emmert M, Scheppach M, Schöffski O. Effects of Pay for Performance in Health Care: A Systematic Review of Systematic Reviews. Health Policy. 2013;110(2–3):115–30. doi: 10.1016/j.healthpol.2013.01.008.

- Glickman SW, Ou FS, Delong ER, Roe MT, Lytle BL, Mulgund J, Rumsfeld JS, Gibler WB, Ohman M, Schulman KA, Peterson ED. Pay for Performance, Quality of Care and Outcomes in Acute Myocardial Infarction. Journal of the American Medical Association. 2007;297(21):2373–80. doi: 10.1001/jama.297.21.2373.

- Glickman SW, Boulding W, Roos JM, Staelin R, Peterson ED, Schulman KA. Alternative Pay-for-Performance Scoring Methods: Implications for Quality Improvement and Patient Outcomes. Medical Care. 2009;47(10):1062–8. doi: 10.1097/MLR.0b013e3181a7e54c.

- Health Affairs. 2012. “Health Policy Briefs: Pay for Performance” [accessed on March 30, 2014]. Available at https://www.healthaffairs.org/healthpolicybriefs/brief.php?brief_id=78.

- HealthIT.gov. 2013. “Regional Extension Centers” [accessed on March 3, 2014]. Available at http://www.healthit.gov/providers-professionals/regional-extension-centers-recs.

- Hebert PL, Chuan-Fen L, Wong ES, Hernandez SE, Batten A, Lo S, Lemon JM, Conrad DA, Grembowski D, Nelson K, Fihn SD. Patient-Centered Medical Home Initiative Produced Modest Economic Results for Veterans Health Administration, 2010-2012. Health Affairs (Millwood). 2014;33(6):980–7. doi: 10.1377/hlthaff.2013.0893.

- Howard M, Brazil K, Akhtar-Danesh N, Agarwal G. Self-Reported Teamwork in Family Health Team Practices in Ontario. Canadian Family Physician. 2011;57(5):e185–91.

- Jaffe MG, Lee GA, Young JD, Sidney S, Go AS. Improved Blood Pressure Control Associated with a Large-Scale Hypertension Program. Journal of the American Medical Association. 2013;310(7):699–705. doi: 10.1001/jama.2013.108769.

- Ketola E, Sipila R, Makela M, Klockars M. Quality Improvement Programme for Cardiovascular Disease Risk Factor Recording in Primary Care. Qual Health Care. 2000;9(3):175–80. doi: 10.1136/qhc.9.3.175.

- Meyer H. Group Health's Move to The Medical Home: For Doctors, It's Often a Hard Journey. Health Affairs (Millwood). 2010;29(5):844–51. doi: 10.1377/hlthaff.2010.0345.

- Mostashari F, Tripathi M, Kendall M. A Tale of Two Large Community Electronic Health Record Extension Projects. Health Affairs (Millwood). 2009;28(2):345–56. doi: 10.1377/hlthaff.28.2.345.

- National Quality Forum. n.d. “Measures, Reports and Tools” [accessed on April 10, 2014]. Available at http://www.qualityforum.org/Measures_Reports_Tools.aspx.

- National Research Council. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: The National Academies Press; 2001.

- New York State Department of Health. 2013. “The Patient-Centered Medical Home Initiative in New York State Medicaid. Report to the Legislature.” [accessed on March 29, 2014]. Available at http://www.health.ny.gov/health_care/medicaid/redesign/docs/pcmh_initiative.pdf.

- Nielsen M, Langner B, Zema C, Hacker T, Grundy P. 2012. “Benefits of Implementing the Primary Care Patient-Centered Medical Home: A Review of Cost & Quality Results, 2012” [accessed on June 5, 2014]. Available at http://www.pcpcc.org/sites/default/files/media/benefits_of_implementing_the_primary_care_pcmh.pdf.

- Nocon RS, Sharma R, Birnberg JM, Ngo-Metzger Q, Mee Lee S, Chin MH. Association between Patient-Centered Medical Home Rating and Operating Cost at Federally Funded Health Centers. Journal of the American Medical Association. 2012;308(1):60–6. doi: 10.1001/jama.2012.7048.

- NYC REACH website. 2013. “Incentive Payments” [accessed on April 20, 2014]. Available at http://www.nycreach.org/use/incentive.

- Parsons A, McCullough C, Wang J, Shih S. Validity of EHR Derived Quality Measurement for Performance Monitoring. Journal of the American Medical Informatics Association. 2012;19(4):604–9. doi: 10.1136/amiajnl-2011-000557.

- Patel MS, Arron MJ, Sinsky TA, Green EH, Baker DW, Bowen JL, Day S. Estimating the Staffing Infrastructure for a Patient-Centered Medical Home. American Journal of Managed Care. 2013;19(6):509–16.

- Reid RJ, Coleman K, Johnson EA, Fishman PA, Hsu C, Soman MP, Trescott CE, Erikson M, Larson EB. The Group Health Medical Home at Year Two: Cost Savings, Higher Patient Satisfaction, and Less Burnout for Providers. Health Affairs (Millwood). 2010;29(5):835–43. doi: 10.1377/hlthaff.2010.0158.

- Rittenhouse DR, Casalino LP, Shortell SM, McClellan SR, Gillies RR, Alexander JA, Drum ML. Small and Medium-Size Physician Practices Use Few Patient-Centered Medical Home Processes. Health Affairs (Millwood). 2011;30(8):1575–84. doi: 10.1377/hlthaff.2010.1210.

- Roland M, Campbell S. Successes and Failures of Pay for Performance in the United Kingdom. New England Journal of Medicine. 2014;370(20):1944–9. doi: 10.1056/NEJMhpr1316051.

- Shih SC, McCullough CM, Wang JJ, Singer J, Parsons AS. Health Information Systems in Small Practices. Improving the Delivery of Clinical Preventive Services. American Journal of Preventive Medicine. 2011;41(6):603–9. doi: 10.1016/j.amepre.2011.07.024.

- Takach M. About Half of the States Are Implementing Patient-Centered Medical Homes for Their Medicaid Populations. Health Affairs (Millwood). 2012;31(11):2432–40. doi: 10.1377/hlthaff.2012.0447.

- Wang JJ, Sebek KM, McCullough CM, Amirfar SJ, Parsons AS, Singer J, Shih SC. Sustained Improvement in Clinical Preventive Service Delivery among Independent Primary Care Practices after Implementing Electronic Health Record Systems. Preventing Chronic Disease. 2013;10:E130. doi: 10.5888/pcd10.120341.

- Wang JJ, Winther C, Cha J, McCullough C, Parsons A, Singer J, Shih S. Patient-Centered Medical Home and Quality Measurement in Small Practices. American Journal of Managed Care. 2014;20(6):481–9.
