Abstract

Background

Event Related Potentials (ERPs) elicited by visual stimuli have increased our understanding of developmental disorders and adult cognitive abilities for decades; however, these studies are very difficult with populations who cannot sustain visual attention such as infants and young children. Current methods for studying such populations include requiring a button response, which may be impossible for some participants, and experimenter monitoring, which is subject to error, highly variable, and spatially imprecise.

New Method

We developed a child-centered methodology to integrate EEG data acquisition and eye-tracking technologies that uses “attention-getters” in which stimulus display is contingent upon the child’s gaze. The goal was to increase the number of trials retained. Additionally, we used the eye-tracker to categorize and analyze the EEG data based on gaze to specific areas of the visual display, compared to analyzing based on stimulus presentation.

Results Compared with Existing Methods

The number of trials retained was substantially improved using the child-centered methodology compared to a button-press response in 7–8 year olds. In contrast, analyzing the EEG based on eye gaze to specific points within the visual display as opposed to stimulus presentation provided too few trials for reliable interpretation.

Conclusions

By using the linked EEG-eye-tracker we significantly increased data retention. With this method, studies can be completed with fewer participants and a wider range of populations. However, caution should be used when epoching based on participants’ eye gaze because, in this case, this technique provided substantially fewer trials.

1. Introduction

For decades, ERPs have provided a powerful, temporally sensitive window into the neuronal underpinnings of a variety of cognitive functions. One advantage of ERPs is the ability to compare participants from birth into late adulthood (Fonaryova-Key, Dove, & Maguire, 2005). To optimize this potential, paradigms used with adults must be possible with young children. Developmental ERP studies successfully employ visual and auditory tasks; however, visual tasks are significantly more difficult. A primary problem is children’s reduced ability to attend to visual stimuli throughout the large number of trials necessary to obtain an adequate signal-to-noise ratio. This study attempts to increase data retention using a linked EEG-eye-tracker design, which offers two benefits. First, the eye-tracker can objectively identify when visual attention has waned, stop the experiment to display an “attention-getter” until attention reengages, and then continue the experiment. Thus, stimuli are only presented when the child is paying attention, presumably increasing the number of trials retained. Second, the eye-tracker can pinpoint where the participant is looking on the screen, allowing analysis of EEG data based on visual attention to a specific visual location.

For developmental researchers, although it is possible to obtain useful visual ERP data, data retention poses a significant problem. It is common to retain fewer than 50% of trials visually displayed to children (e.g., Grossmann et al., 2007; Peltola et al., 2009; Leppänen et al., 2007, 2009). Current best practices include requiring children to overtly respond to the stimuli (e.g., Ellis & Nelson, 1999; Todd et al., 2008) or using passive tasks during which an experimenter, blind to the display, tracks the participant’s attention toward and away from the stimuli (e.g., Bane & Birch, 1992; Grossmann et al., 2007; Leppänen et al., 2007). Each method has challenges that may be minimized using eye tracking.

Behavioral responses can be difficult for children under 3 and can result in unnecessary study confounds. Further, data are often lost unnecessarily for children under 8 due to developmental issues, including an inability to understand the task or general inhibition or processing speed problems. For example, Hajcak and Dennis (2009) investigated how emotion influences visual processing in 5–8 year olds by studying ERP responses to emotional and neutral images. To maintain attention, children rated the valence and arousal of each picture. While the images elicited the intended emotions, fewer than 50% of the 25 participants could perform the rating. Because the behavioral response was secondary to the ERP analysis, data from all children, regardless of ability to perform the task, were combined for the ERP analysis, creating a confound in the ERP data. Posing additional problems to data retention, inhibition and motor control are not fully developed in school-aged children, which may lead to incorrect responses in button response tasks. This influences data retention because incorrect responses are often removed from the EEG analysis, whether the error was due to cognitive abilities related to the task or a failure to inhibit a response appropriately. As a result, younger participants likely lose more trials, skewing the data disproportionately with age.

Displaying the visual stimuli while an experimenter monitors the child’s attention is also commonly used in infant EEG studies. In this method, the experimenter responds to gross movements toward or away from the screen, which poses subjectivity and accuracy problems. Other behavioral visual categorization tasks with infants (i.e., habituation and looking time) have established an objective measure of visual attention by using an eye-tracker to employ a short attention-getter video to reengage the child when attention wanes, resulting in substantially higher retention rates than those currently found in infant ERP studies (e.g., Rhodes, et al., 2002). Applying these same techniques to ERP studies with children may improve data retention and identification of visual attention. Importantly, data loss in ERP studies is also due to ocular and motor artifacts in addition to visual attention. Extending eye-tracking methods to ERP studies will allow researchers to weigh the costs and benefits of employing this technology.

Eye-trackers also provide data regarding visual focus on a particular location within the visual stimuli, which would benefit visual categorization studies. For example, Quinn et al. (2007) found that infants and adults attend to an animal’s head as the primary classification feature when differentiating animals. Linking eye-tracking with EEG would allow the categorization of ERP data collected when participants look to the animal’s head or body, thus specifying the neurological underpinnings of categorization related to specific visual features.

We asked children to categorize animals as dogs or not dogs during either simultaneous EEG, behavioral response and eye-tracking, or just EEG and behavioral response recording, to address two questions: (1) can a child-centered, linked EEG-eye-tracker system retain more EEG data than the traditional button-response method and (2) is there an advantage to categorizing the EEG data based on eye gaze? Specifically, does comparing ERPs while attending to the head of the target items (dogs) versus non-target items (animals) uncover important differences in categorization?

2. Methods

2.1. Participants

Twenty children (6–8 years) participated in the study. Of these, 4 were excluded (2 electrode bridging, 1 failure to calibrate eye-tracker, 1 poor task performance). Usable data from 16 children were analyzed (11 male, 5 female; M=7.33 years, SD=0.59 years), 8 in the traditional button-press condition and 8 in the eye-tracker condition. These children are old enough to easily categorize animals, so classification errors are due to inhibition or motor control problems, not an inability to correctly categorize. Participants were right-handed English speakers with no known neurological issues (e.g., brain injury, seizures, learning disabilities).

2.2. Stimuli

Pictures were black drawings on a white background including 40 dogs (20 items and their mirror-images) and 160 other animals (80 items and their mirror-images), totaling 200 images. The unequal conditions should elicit the classic ERP oddball effect, specifically a larger P3 response to rare items (dogs) compared to common ones (other animals), an index of categorization. Mirror images encouraged participants to shift their gaze to view the animals’ heads. All images were cropped to pixel dimensions of 720×720 and centered in the middle of the screen display (dimensions: 1280×1024). The picture was presented until the participant responded, after which a ‘+’ fixation appeared for 1.5 seconds.
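The stimulus geometry and oddball proportions described above can be checked with a few lines of arithmetic. This is a minimal sketch using only the numbers reported in this section; the function names are hypothetical and are not part of the E-Prime experiment.

```python
# Display and stimulus sizes reported in the text (pixels).
SCREEN_W, SCREEN_H = 1280, 1024
IMAGE_W, IMAGE_H = 720, 720


def centered_top_left(screen_w, screen_h, image_w, image_h):
    """Return the (x, y) pixel position of the image's top-left corner
    when the image is centered on the screen."""
    return (screen_w - image_w) // 2, (screen_h - image_h) // 2


def rare_proportion(n_rare, n_common):
    """Proportion of trials belonging to the rare (oddball) category."""
    return n_rare / (n_rare + n_common)


# 40 dog images (rare) and 160 other-animal images (common), as reported.
top_left = centered_top_left(SCREEN_W, SCREEN_H, IMAGE_W, IMAGE_H)
p_dog = rare_proportion(40, 160)
```

Under these numbers the image occupies the central 720 pixels in each dimension, and dogs make up one fifth of the trials.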

2.3. Procedure

Participants sat approximately 60 centimeters from the Tobii 1750 17-inch display monitor, eye-level with the center of the screen, wearing a 64-electrode cap. Calibration, which generally took less than 30 seconds, was performed using Tobii’s automated procedure with 5 calibration dots at various points on the screen. Participants were in one of two conditions: the button-press condition, which did not track eye gaze, or the eye-tracker condition, which was identical to the first condition but tracked eye gaze with the eye-tracker, in sync with E-Prime 2.0 presentation software (Psychology Software Tools, Inc).

Participants pressed one button in response to dogs and another for other animals. Some visual categorization ERP studies require a response only for the most commonly occurring condition and none for rare items as a way of increasing the size of the P3 ERP response (e.g., Maguire, White, & Brier, 2011). In such cases, the P3 is enhanced partially because of the inhibition required to withhold a response. Because inhibitory processes are still developing beyond age 7, the two-button response should limit data loss due to inhibitory errors. The handedness of the button responses was counterbalanced across participants.

2.4. EEG Data Acquisition

A Neuroscan 64-electrode Quickcap recorded EEG activity with Neuroscan 4.3.2 software and a Synamps2 amplifier. Electrode impedances were kept below 5 kΩ. Data were referenced to an electrode near the vertex during data collection, then re-referenced offline to the average over the head. Data were sampled at 1000 Hz, high-pass filtered at 0.1 Hz during data collection, then low-pass filtered at 30 Hz offline.
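Offline re-referencing to the average over the head amounts to subtracting, at every time point, the mean voltage across electrodes. The sketch below illustrates that operation only; it is not the Neuroscan implementation, the data layout (a list of time points, each holding one value per channel) is an assumption, and it ignores the exclusion of bad or non-scalp channels that a real pipeline would need.

```python
def rereference_to_average(samples):
    """Re-reference EEG to the average of all channels.

    samples: list of time points; each time point is a list with one
             voltage per channel (hypothetical layout, in microvolts).
    Returns a new list of the same shape in which the mean across
    channels has been subtracted at every time point.
    """
    rereferenced = []
    for time_point in samples:
        channel_mean = sum(time_point) / len(time_point)
        rereferenced.append([v - channel_mean for v in time_point])
    return rereferenced


# Tiny made-up example: 2 time points, 3 channels.
example = rereference_to_average([[1.0, 2.0, 3.0], [4.0, 4.0, 4.0]])
```

After this step the channel values at each time point sum to zero, which is the defining property of an average reference.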

2.5. Eye-tracker Data Acquisition

The Tobii 1750 infrared eye-tracker, run through E-Prime stimulus display software, has a sampling rate of 50Hz and an effective accuracy between .05 and 1cm at a 60cm distance. The eye-tracker data served two goals. The first was to identify whether the participant’s gaze was directed at the stimulus; if not, the program removed the picture, displayed an attention-getter until attention was recaptured, and then continued with the next trial. The second was to identify where on the screen the participant was looking, in order to group EEG data based on visual gaze.

To address the first goal, eye gaze was captured online and used to determine stimulus presentation, using the following parameters. Prior to the trial, visual gaze was required on the “+” fixation for 1.5 seconds, an interval that assured sustained attention as opposed to a saccade and assured that the ERP response to one trial did not influence the following ERP response. During each trial, if subjects diverted their gaze from the screen for longer than 1 second (prior to completing a button press), Tobii signaled E-Prime to display an “attention-getter”, a brightly colored star rotating off-axis in the center of the screen. The 1-second duration to classify diverted gaze is based on pilot data in which shorter time intervals triggered many more attention-getter displays. This also limited the use of the attention-getter to instances when the child was unable to reengage their attention without an outside influence. The attention-getter was presented in a loop until the subject reestablished visual attention for at least 1 second, which assures real visual fixation to the screen.
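The gaze-contingent rule described above can be sketched as a small state machine over eye-tracker samples. This is an illustration under stated assumptions, not the E-Prime/Tobii code used in the study: gaze is reduced to one on-screen/off-screen flag per 20 ms sample (50 Hz), and the function and event names are hypothetical.

```python
SAMPLE_MS = 20        # one eye-tracker sample at 50 Hz
AWAY_LIMIT_MS = 1000  # gaze off screen for longer than this triggers the attention-getter
REENGAGE_MS = 1000    # gaze must stay on screen this long before the experiment resumes


def gaze_contingent_events(on_screen_samples):
    """Return (time_ms, event) tuples for a stream of gaze samples.

    on_screen_samples: iterable of booleans, True when gaze is on screen.
    """
    events = []
    showing_getter = False
    run_ms = 0  # current off-screen run (stimulus) or on-screen run (attention-getter)
    for i, on_screen in enumerate(on_screen_samples):
        t_ms = (i + 1) * SAMPLE_MS  # time at the end of this sample
        if not showing_getter:
            # While the stimulus is up, track how long gaze has been away.
            run_ms = 0 if on_screen else run_ms + SAMPLE_MS
            if run_ms > AWAY_LIMIT_MS:
                showing_getter = True
                run_ms = 0
                events.append((t_ms, "attention_getter_on"))
        else:
            # While the attention-getter loops, track sustained on-screen gaze.
            run_ms = run_ms + SAMPLE_MS if on_screen else 0
            if run_ms >= REENGAGE_MS:
                showing_getter = False
                run_ms = 0
                events.append((t_ms, "resume_next_trial"))
    return events
```

For example, a stream with 10 on-screen samples, 51 off-screen samples, and 50 on-screen samples produces one attention-getter onset followed by one resume event, whereas exactly 1 second of off-screen gaze (50 samples) produces no event because the limit must be exceeded.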

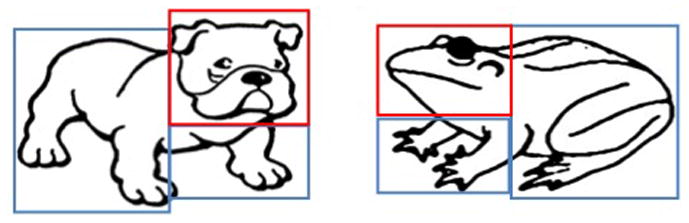

To address the second goal related to where the participant was looking, regions of interest (ROIs) were identified using the Tobii software. Each image had three categories of ROIs: the head, the body, and the background. As can be seen in Figure 1, because straight lines were required for the ROI, two ROIs were sometimes needed to cover the animal’s body. In these cases, each body ROI was given the same classification and treated as a single ROI. When eye gaze was detected in an ROI, it was recorded in E-Prime and signals (trigger codes) specifying the ROI were sent to the EEG collection software. Trigger codes were also sent to mark each stimulus presentation and the subject’s button response, allowing the identification of trials during which subjects looked at the head of the animal before responding.

Figure 1. Stimuli examples with ROIs.

Examples of study stimuli coded for eye gaze Regions of Interest (ROIs). The red box represents the critical feature.
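The ROI coding described in this section can be sketched as a point-in-rectangle lookup in which several rectangles may share one label, so that two body rectangles behave as a single ROI. The coordinates and trigger codes below are made up for illustration, and treating every point outside the head and body rectangles as background is an assumption; the study defined its ROIs in the Tobii software.

```python
from typing import NamedTuple


class Roi(NamedTuple):
    label: str   # "head" or "body"
    left: int    # pixel bounds; right and bottom are exclusive
    top: int
    right: int
    bottom: int


# Hypothetical trigger codes sent to the EEG recording for each ROI category.
TRIGGER_CODES = {"head": 1, "body": 2, "background": 3}


def classify_gaze(x, y, rois):
    """Return the label of the first ROI containing the gaze point (x, y).

    Points outside every rectangle are labeled "background" (assumption).
    """
    for roi in rois:
        if roi.left <= x < roi.right and roi.top <= y < roi.bottom:
            return roi.label
    return "background"


# Made-up ROIs for one image: one head box and two body boxes with the same label.
example_rois = [
    Roi("head", 300, 200, 480, 380),
    Roi("body", 480, 260, 900, 520),
    Roi("body", 520, 520, 860, 700),
]

code = TRIGGER_CODES[classify_gaze(600, 600, example_rois)]
```

Because both body rectangles carry the label "body", a gaze point in either one maps to the same trigger code.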

2.6. Timing Considerations

Three factors influence the temporal precision of the data in a linked EEG-eye-tracker design: (1) the lag between when the software sends a stimulus to be displayed on the monitor and when the stimulus is actually displayed, (2) the lag between when the participant looks at an ROI and when that trigger appears in the continuous EEG file and (3) the sampling rate of the eye-tracker. We accounted for the first two factors (see below), addressing the goals of this study. Issues relating to the third factor are addressed in the Discussion.

Using E-Prime’s internal “OnsetDelay” variable, we accounted for the time lag between when an image is sent to the monitor and when the image is actually displayed. Offline, this lag can be corrected on a trial-by-trial basis; however, the time lag was 33ms (two refresh cycles) in over 99% of trials, so 33ms was added to each image trigger. To establish the delay between visual fixation and trigger submission, we ran a test experiment prior to actual data collection, in which the entire screen was an ROI and eye gaze detection simulated a keystroke response. This experiment identified a constant reaction time of 3ms in E-Prime, which also appeared in the EEG file. Thus, for all subjects, each ROI trigger in the EEG file was adjusted by 3ms. For more detailed information on all aspects of the coding see the first author’s website (http://www.utdallas.edu/bbs/brainlab/).
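The two corrections described above can be sketched as a single pass over the trigger list. This is an illustration, not the authors' script: the (time, kind) tuple format and the kind names are hypothetical, and the direction of the 3 ms ROI adjustment (moving the trigger earlier, on the reasoning that it arrives after the gaze event) is an assumption, since the text states only that the triggers were adjusted by 3 ms.

```python
STIMULUS_ONSET_LAG_MS = 33  # image appears two refresh cycles after its trigger
ROI_TRIGGER_LAG_MS = 3      # constant delay between gaze detection and ROI trigger


def correct_triggers(triggers):
    """Return triggers with timing corrections applied.

    triggers: list of (time_ms, kind) tuples, where kind is "image",
              "roi", or anything else (left unchanged).
    """
    corrected = []
    for time_ms, kind in triggers:
        if kind == "image":
            # The picture was displayed later than the trigger: shift forward.
            time_ms += STIMULUS_ONSET_LAG_MS
        elif kind == "roi":
            # Assumed direction: the trigger lags the gaze event, so shift back.
            time_ms -= ROI_TRIGGER_LAG_MS
        corrected.append((time_ms, kind))
    return corrected


corrected = correct_triggers([(1000, "image"), (1450, "roi"), (1900, "response")])
```

A constant offset is reasonable here only because the measured lags were essentially constant; with more variable lags, a trial-by-trial correction would be needed.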

3. Results

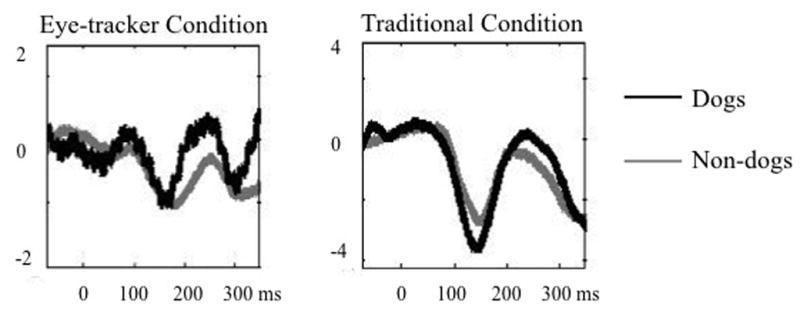

To assess the primary objective of retaining more data with the linked EEG-eye-tracker method versus traditional methods, we compared the proportion of retained epochs in the eye-tracker condition to the button-press condition. Proportion of retained epochs was calculated by dividing the number of clean epochs by the total number of correct trials for each individual in each condition. Standard data cleaning methods were used to remove eye blinks, bad electrodes, and excessive noise and/or movement; EEG data were epoched at the point of stimulus presentation. A 2-way ANOVA revealed significantly higher data retention in the eye-tracker condition (66.34% or an average of 132.68 of the 200 trials) compared to the button-press condition (44.03% or 88.06 trials), F(1, 14)=5.50, p=0.034. Importantly, accuracy did not statistically differ between the eye-tracker and button-press conditions (M=97.19, SD=1.98 and M=98.06, SD=2.01, respectively; t(14)=0.88, p=0.40), indicating that the differences in retention rates were due to differences in the noise level in the EEG, not behavioral differences. As seen in Figure 2, both conditions exhibit a P3 ERP consistent with expectations even with a relatively small sample, suggesting both methods appropriately assess categorization. Importantly, overall study duration, calculated as the amount of time between the presentation of the first stimulus and the final participant response, did not differ between the two conditions, p=0.99, nor did reaction times, p=.31.

Figure 2.

Midline central-parietal (average Cz and CPz) ERPs.
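The retention measure reported in the Results (clean epochs divided by correct trials) and a between-group F statistic can be sketched as follows. This is a generic illustration, not the authors' analysis: the F computation is a plain one-way comparison of group means, and the counts in the example are made up. With two groups of 8 participants it yields degrees of freedom of (1, 14), the same as those reported.

```python
def retention_proportion(n_clean_epochs, n_correct_trials):
    """Proportion of retained epochs for one participant."""
    if n_correct_trials <= 0:
        raise ValueError("n_correct_trials must be positive")
    return n_clean_epochs / n_correct_trials


def mean(values):
    return sum(values) / len(values)


def one_way_f(groups):
    """Return (F, df_between, df_within) for a one-way comparison of groups.

    groups: list of lists, one list of per-participant values per group.
    """
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Made-up counts for illustration only; these are not study data.
example_proportion = retention_proportion(130, 195)
```

Computing the proportion per participant before comparing groups keeps differences in accuracy from being counted as differences in EEG data quality.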

The second objective was to investigate whether using more precise EEG data collected during attention to a specific visual feature uncovers differences in categorization. When ROI triggers for eye gaze to the head of the animal (our primary feature of interest) appeared in the EEG file between stimulus presentation and button response for a given trial, that trial was classified as “attention to the head”. Unfortunately, participants looked to the head far less often than expected, only 39.31% of the time. Only 40 trials were dogs and, considering that errors and EEG noise removed 43.6% of the data, fewer than 10 dog trials per participant on average with looks to the head were retained, making it unrealistic to address this question with this study design.
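The trial classification rule and the resulting trial count can be made concrete with a short sketch. The function names and the trigger-time format are hypothetical, and the yield estimate assumes that looks to the head and data removal are independent and that the 39.31% figure applies equally to dog trials; neither assumption is stated in the text.

```python
def looked_at_head(stimulus_ms, response_ms, head_trigger_times_ms):
    """True if any head-ROI trigger falls between stimulus onset and response."""
    return any(stimulus_ms <= t <= response_ms for t in head_trigger_times_ms)


def expected_usable_trials(n_trials, p_head, p_removed):
    """Expected trials with a look to the head that survive data removal,
    assuming the two factors are independent."""
    return n_trials * p_head * (1 - p_removed)


# Figures reported in the text: 40 dog trials, 39.31% head looks, 43.6% removed.
expected_dog_trials = expected_usable_trials(40, 0.3931, 0.436)
```

Under these assumptions the estimate is roughly 9 dog trials per participant, in line with the statement that fewer than 10 were retained.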

4. Discussion

We predicted that the linked EEG-eye-tracker technology would result in higher data retention than the current best practices and allow for a more precise way to analyze EEG data based on participants’ eye gaze. Using the eye tracker to provide gaze-related attention-getters resulted in significantly greater data retention, potentially requiring fewer trials in the study design. Further, given that habituation studies using this child-centered method of maintaining and tracking attention are possible with infants, this type of study design could provide an objective and passive way to study visual ERPs across the lifespan. On the other hand, categorizing data based on eye gaze was not successful. Removing noisy data and only retaining trials with a clear visual fixation to the area of interest resulted in too few trials per participant for successful data analysis. This finding should not deter others from using this method, however. Instead, researchers considering using eye-tracking to more precisely categorize visual data should note that this method may require more trials to provide a robust signal-to-noise ratio and/or stimuli that more consistently elicit gaze shifts. Overall, these findings provide new insights as to the potential benefits and considerations for using eye-tracking with EEG.

In addition to the goals of this study, there are other uses of the linked EEG-eye-tracker method that could be highly informative. For instance, it permits the creation of an EEG epoch starting at the point of visual fixation within a specific ROI. Such a measure would provide new insights into areas such as visual categorization and reading in typical and atypical populations. Importantly, this would require a greater degree of temporal precision than in the current study. The Methods section discussed ways to account for temporal differences between the eye-tracker and the EEG equipment related to screen refresh rates and processing delays; however, the sampling rate of the eye-tracker also impacts temporal precision. While the EEG collects data at 1000Hz, eye-tracker rates vary. In this case, Tobii 1750 data collection occurred at 50Hz, well below the precision of the EEG. Studies that use eye-tracker data to analyze EEG data time-locked to visual attention need to take this into consideration. This is less of a concern when focusing on later ERP peaks, such as the N400 or P600; however, early peaks, such as the N1 or P2, could be greatly influenced by this delay.
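The size of the sampling-rate mismatch discussed above follows directly from the two rates. The sketch below is simple arithmetic on the reported values; the interpretation as a worst-case onset uncertainty assumes a gaze shift can occur at any moment between two eye-tracker samples.

```python
def sample_interval_ms(rate_hz):
    """Time between consecutive samples, in milliseconds."""
    return 1000.0 / rate_hz


eye_tracker_interval = sample_interval_ms(50)    # Tobii 1750 rate reported in the text
eeg_interval = sample_interval_ms(1000)          # EEG sampling rate reported in the text

# A gaze shift that happens just after one eye-tracker sample is not
# registered until the next one, so fixation onset can be late by up to
# one eye-tracker interval, expressed here in EEG samples.
worst_case_eeg_samples = eye_tracker_interval / eeg_interval
```

An uncertainty of up to one 20 ms eye-tracker interval is small relative to late components such as the N400 or P600 but is a sizable fraction of the latency of early peaks such as the N1.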

There are two other important considerations related to our data. The first is that we cannot definitively equate visual gaze with visual attention. Importantly, the same problem exists with the current experimenter-monitored technique. Second, it is possible that the attention-getter could be more interesting than the stimuli, thus encouraging the child to look away from the screen. Because the overall study duration did not differ between groups, we do not consider this an issue for the current study but note that it is worth considering in other research.

Linking eye-tracking and EEG methodologies could greatly impact the types of questions researchers can ask and the populations they can study. However, there are important practical issues to consider when identifying whether this methodology would strengthen a particular design. Here we have provided information about the relative benefits and considerations to inform those decisions.

Acknowledgments

We would like to thank the following people for their help in this project: McKenna Jackson, Bambi Delarosa, Julie Schneider and Alyson Abel. This work was funded by NIH/NICHD grant 1R03HD064629-01 and University of Texas at Dallas Faculty Initiative Grants awarded to the first author.


References

- Ellis AE, Nelson CA. Category prototypicality judgments in adults and children: Behavioral and electrophysiological correlates. Developmental Neuropsychology. 1999;15:193–212.

- Fonaryova-Key A, Dove G, Maguire MJ. Linking brainwaves to the brain: An ERP component primer. Developmental Neuropsychology. 2005;27:183–216. doi: 10.1207/s15326942dn2702_1.

- Grossmann T, Striano T, Friederici AD. Developmental changes in infants’ processing of happy and angry facial expressions: A neurobehavioral study. Brain and Cognition. 2007;64:30–41. doi: 10.1016/j.bandc.2006.10.002.

- Hajcak G, Dennis TA. Brain potentials during affective picture processing in children. Biological Psychology. 2009;80:333–338. doi: 10.1016/j.biopsycho.2008.11.006.

- Leppänen JM, Moulson MC, Vogel-Farley VK, Nelson CA. An ERP study of emotional face processing in the adult and infant brain. Child Development. 2007;78:232–235. doi: 10.1111/j.1467-8624.2007.00994.x.

- Leppänen JM, Richmond J, Vogel-Farley VK, Moulson MC, Nelson CA. Categorical representation of facial expressions in the infant brain. Infancy. 2009;14(3):346–362. doi: 10.1080/15250000902839393.

- Maguire MJ, White J, Brier MR. How semantic categorization influences inhibitory processing in middle-childhood: An event related potentials study. Brain and Cognition. 2011;76:77–86. doi: 10.1016/j.bandc.2011.02.015.

- Nelson CA, Thomas KM, de Haan M, Wewerka SS. Delayed recognition memory in infants and adults as revealed by event-related potentials. International Journal of Psychophysiology. 1998;29:145–165. doi: 10.1016/s0167-8760(98)00014-2.

- Peltola MJ, Leppänen JM, Maki S, Hietanen JK. Emergence of enhanced attention to fearful faces between 5 and 7 months of age. Social Cognitive and Affective Neuroscience. 2009;4(2):134–142. doi: 10.1093/scan/nsn046.

- Quinn PC, Westerlund A, Nelson CA. Neural markers of categorization in 6-month-old infants. Psychological Science. 2006;17(1):59–66. doi: 10.1111/j.1467-9280.2005.01665.x.

- Rhodes G, Geddes K, Jeffery L, Dziurawiec S, Clark A. Are average and symmetric faces attractive to infants? Discrimination and looking preferences. Perception. 2002;31:315–321. doi: 10.1068/p3129.

- Southgate V, Csibra G, Kaufman J, Johnson MH. Distinct processing of objects and faces in the infant brain. Journal of Cognitive Neuroscience. 2008;20:741–749. doi: 10.1162/jocn.2008.20052.

- Todd RM, Lewis MD, Meusel LA, Zelazo PD. The time course of social-emotional processing in childhood: ERP responses to facial affect and personal familiarity in a go-nogo task. Neuropsychologia. 2008;46:595–613. doi: 10.1016/j.neuropsychologia.2007.10.011.