Abstract

The rapid adoption of smartphone applications (apps) by behavioral scientists poses a host of new opportunities, as well as knowledge and interdisciplinary challenges. This brief report discusses the lessons we have learned during the development and testing of smartphone apps for behavior change, and provides the reader with guidance and recommendations about the design and development process. We hope that the guidance and perspectives presented in this brief report will empower behavioral scientists to test the efficacy of smartphone apps for behavior change, advance our understanding of the contextual behavioral etiology of behavioral disorders, and help move the field toward personalized behavior change technologies.

Keywords: smartphone apps, design, interdisciplinary collaborations, mHealth, behavior change, personalized care

Emerging collaborations between behavioral scientists and software technologists to develop mobile research tools have no precedent in the history of psychology (e.g., Miller, 2012). Not surprisingly, mobile technologies are quickly becoming the tool of choice for gathering behavioral data and delivering behavioral interventions (e.g., Aguilera & Muench, 2012; Kumar et al., 2013; Steinhubl, 2013).

Among the available mobile technologies, one stands out for its versatility and relevance to contextual behavioral science (CBS) researchers: smartphone apps. Smartphone apps are one of the most popular and far-reaching types of mobile technology available today. Apps allow CBS researchers to gather contextual data on situational factors, private events (e.g., mood), and individuals’ responses to them, approximating a descriptive functional analysis of a target behavior. This data gathering can be seamless (e.g., occurring during an individual’s daily activities) and has the great advantage of avoiding the recall bias inherent in retrospective global self-reports (e.g., Sato & Kawahara, 2011).

These new technologies, however, pose several challenges for behavioral scientists. First, they require knowledge and experience outside the scientists’ traditional field, such as mobile user experience (UX) design. Learning how to design an app that fits within the context of an individual’s daily life is a significant challenge, especially when scientific measures are a key consideration. Although the emphasis is generally on mobile “technology,” the importance of mobile design cannot be overlooked or overstated. At times, design is a larger obstacle than the technical development of the app. Second, the multidisciplinary nature of smartphone app design and development requires a strong collaborative team and raises intellectual property (IP) issues. Despite the space and time constraints inherent in designing and developing mobile contextual behavioral health apps, the promise and value of smartphones make these challenges worth solving. Furthermore, as industry embraces behavior modification apps, non-traditional funding opportunities will become available to researchers.

The aim of this brief report is to orient the reader to the process considerations involved in developing smartphone apps for behavior change. Two authors (W.R., N.W.) are software developers and designers with experience working with behavioral scientists to develop mindfulness and acceptance-based apps. Two other authors (J.B. and R.V.) are developing and testing Acceptance and Commitment Therapy smoking cessation apps, and the fifth author (M.M.) is involved in developing a mobile intervention to prevent suicidality among individuals with severe psychopathology. Based on the combined experience of all the authors, this brief report offers guidance on tackling the predictable challenges scientists may face, along with some tips on how to reduce the time, cost, and expertise required.

Vision and Team

Perhaps the most important step in designing smartphone apps for behavior change, and potentially the best place to save costs and reduce complexity, is the vision-forming phase. This phase can occur before mobile technologists are involved and can help guide decisions during the team-building phase that follows. A clear vision of the end goals, the interventions to be deployed, the contexts in which they will be used, and the problems to be solved with mobile technology can dramatically decrease costs, shorten timelines, and increase the chance of a successful project. Any feature or user requirement that can be removed saves time, money, and complexity, while also reducing the risk that users will be unable or unwilling to use the resulting app in their daily lives. Furthermore, avoiding late-stage change requests to features, content, or design in this way can substantially reduce engineering and user experience “design iteration” costs and goes a long way toward cultivating efficient teamwork and trust.

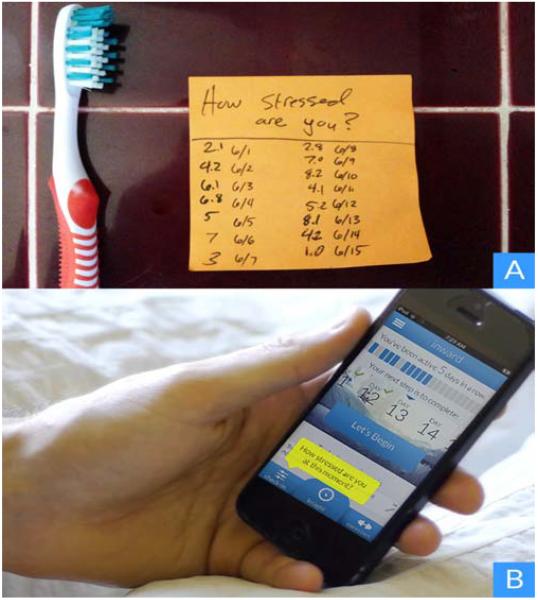

One way to reduce the complexity of requirements in the early stages is to use “low-tech and low-cost experiments” that help refine the mobile behavioral health app’s vision. Borrowing from the popular book “The Lean Startup” (Ries, 2011), we recommend engaging in early experiments that enable the team to (a) avoid building things people do not want or will not use by (b) testing hypotheses early, as quickly and as cheaply as possible. Such tests can be done with low-tech tools. Post-it notes (see Figure 1) are one example; other low-cost tools include manually formatted emails, text messages, or electronic surveys built with free products such as Google apps. When interpreting these surveys, special attention should be paid to the range of variation in users’ responses. We also recommend holding several small (4–6 member) focus groups in which potential users respond to the initial design concepts. We have found that moving quickly and often from conceptualizing on a whiteboard to testing with post-it notes, while involving several team members as well as individuals from the app’s future user demographic, makes it easier to build a team and to design with a clear vision for the final app.

Figure 1.

Low-cost, low-tech methods of testing behaviors, such as strategically placed post-it notes for daily reporting (panel A), can provide insight and refinement that is then translated into a full app experience (panel B).

A study conducted by one of the authors of this brief report (RV) illustrates the importance of using “low-tech and low-cost” strategies when needed. In this study (Vilardaga, Hayes, Atkins, Bresee, & Kambiz, 2013), the use of Personal Digital Assistants (PDAs; a technology of the early 1990s) enabled him to assess the feasibility of measuring certain psychological processes and procedures in a particularly challenging population (individuals with serious mental illness). Insisting on one of the more sophisticated technologies available at the time (early smartphones) would have delayed this learning process. Because mobile technologies grow exponentially, researchers at any given point in time will face a similar choice. In our view, early testing should take precedence over the use of more advanced technologies (e.g., machine learning).

Once a vision is clarified, a basic inventory of skill sets and roles can help determine who plays which role on the team. Main roles may include a scientist or content expert, an intervention provider, a content producer of media assets, an app designer, an app developer, a project manager, and app testers. Some individuals can play several of these roles. Although all team members should participate in brainstorming and discussions, how roles are assigned early on can have a major impact on communication and on the ownership of IP. For this reason, some projects of this type opt for an Open Source license that allows the Open Source code to be subsequently included in proprietary codebases. The Open mHealth initiative (Chen et al., 2012) and the MIT License (Open Source Initiative, 2014) are examples of this approach.

Teams can differ greatly depending on the range of expertise and the clarity of the vision. Some teams include experts in both research and intervention content areas, while in others most members are familiar only with the content. In addition to the core team, commercial mobile technologists may be consulted early on to reduce future costs, since determining how and what to build can be more expensive and time-consuming than building it. Although it is unusual, costs and timelines can be further reduced when the mobile technologists have firsthand experience “mobilizing” certain content areas, as do two of the authors (W.R., N.W.), who are technologists with expertise in mindfulness and acceptance-based therapies.

IP ownership is another team consideration. If it is critical that the core research team own the underlying technology or design of the app, this might require in-house development or a “work for hire” development team that may not specialize in the principles and therapies explored in the research project. In this case, care must be taken to ensure that the technology can continue to move forward after the “work for hire” pilot is completed. A firm IP strategy can be quite helpful in navigating unexpected contingencies (e.g., transitions between development teams or among in-house developers) that require code to be co-developed, repurposed, or shared. In such environments, Open Source and/or “hybrid” licensing may ease the required transitions without placing ironclad constraints on the movement and sharing of code, while also granting greater visibility, easier testing, and more widespread adoption of the software through code-sharing resources (such as https://github.com/).

Finally, it is also important to keep in mind the individual and collective values of the team. The values of a company or research group function as verbal contingencies that guide its work, and they should be assessed early in the process of forming a team to develop a mobile behavioral health app. Team members should also ask themselves whether they find personal meaning in the project they are about to embark on. The purpose of this evaluation of values is to facilitate group cohesion and minimize the impact of potential misunderstandings during the collaborative process. Successful companies are well aware of the importance of defining their “mission.” A complete alignment of values among team members might not be necessary; however, in our experience it provides an important verbal context for the success of a project.

Table 1 provides app developers and researchers with a number of guiding questions that will hopefully help them navigate the vision and team phases outlined above.

Table 1. Guiding questions for the vision and team phase of contextual behavioral mobile development.

| Guiding question |
|---|
| “What problems are we solving with mobile technology, and what are the ‘active ingredients’ of the intervention that must be included?” |
| “How can we test our hypotheses with low-tech, low-cost prototypes before spending time and money assembling the team and building out the app?” |
| “Might a cheaper and more accessible technology (e.g., SMS, email surveys, in-clinic devices) suit us better?” |
| “Is there any reason to work with commercial team members?” |
| “Does the internal team have the ability to customize open source software to get the benefits of a platform without paying licensing fees?” |
| “What are our IP strategy and requirements, and how do they affect team building?” |

Discovery and Technical Scope

During the process of app development, there are multiple rounds of “discovery.” The first may be part of the team-building process so that a rough estimate of cost and timeline can be agreed upon (in the case of a contract, even before it is signed). In these discussions, certain details of the project can be left open, pending further discovery and design phases. Discussing these issues early and then following up on them is important for fostering team trust and for making sure expectations are managed appropriately as details are planned out.

Once the project officially starts, it helps to confirm that the team shares a vision for how it will work, as well as for the project’s scope and timeline. Each team member will bring a unique combination of insights and requirements to the discovery phase. In some cases scientists may lead the requirements discussions, and in others the mobile technologists will. In either case, because researchers often have common needs, incentives can be aligned so that the resulting solution is reusable across future projects; this is a trend we see in the industry and a key to getting good value and teamwork.

The end goal and the deadlines for achieving it will define the “technical scope,” that is, the range of features and functionalities that need to be implemented in a specific app. This term is common among mobile technologists. During these initial interactions, it is important to clearly define the app’s features and to understand how adding or removing features might affect the end goal and the timeline and budget planned to achieve it.

Although software development timelines are difficult to predict before development is under way, a mutually agreed-upon scope and timeline should be established before development begins. Concrete deadlines allow all parties to roughly judge the schedule for the core development of the technology and to identify early the need for timeline adjustments. Discovery can include areas where further investigation will determine whether something fits within the agreed-upon scope. It is not uncommon for the scope to change once the project begins, since design features become clearer as iterations are implemented. However, it is important to have a clear process through which all parties can agree to any new timeline and associated costs.

Table 2 provides guiding questions for the discovery and technical scope phase of app development.

Table 2. Guiding questions for the discovery and technical scope phase of contextual behavioral mobile development.

| Guiding question |
|---|
| “Who is the user, in what environment, with what mindset, with how much time, and how often will this app be used?” |
| “What did we learn from low-tech and low-cost prototypes (see Figure 1)? Are there unknown design features that we should plan on experimenting with across multiple iterations?” |
| “What did we learn from initial formal or informal focus groups with the target population?” |
| “What data must be collected, in what format, and how often, and how will it be delivered to the research team?” |
| “What types of devices will be supported (e.g., iOS or Android, operating system version, bandwidth)?” |
| “Is the scope as clear as possible in terms of key goals, milestones, dates, and number of iterations to guide the project?” |
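The data question in Table 2 can be made concrete before any development begins. The following is a minimal sketch, not a description of any of the authors’ apps, of how a team might write down a contextual data record and its export format. It assumes Python, a CSV file as the delivery format, and a 0–10 intensity rating; the class name `ContextualEntry`, the function `to_csv`, and all field names are hypothetical, chosen only to mirror the situational factors, private events, and responses described in the introduction.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import datetime


@dataclass
class ContextualEntry:
    """One hypothetical momentary report: situation, private event, and response."""
    participant_id: str
    timestamp: str      # ISO 8601 time at which the report was made
    situation: str      # situational factor, e.g., "at work"
    private_event: str  # private event, e.g., a mood or an urge
    intensity: int      # assumed 0-10 rating of the private event
    response: str       # what the participant did in response

    def __post_init__(self):
        # Reject out-of-range ratings when the entry is created, not at export time.
        if not 0 <= self.intensity <= 10:
            raise ValueError(f"intensity must be 0-10, got {self.intensity}")


def to_csv(entries):
    """Serialize entries to CSV text, one row per report, for the research team."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ContextualEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()


if __name__ == "__main__":
    report = ContextualEntry(
        participant_id="P01",
        timestamp=datetime(2014, 5, 2, 9, 30).isoformat(),
        situation="at work",
        private_event="urge to smoke",
        intensity=7,
        response="took a walk",
    )
    print(to_csv([report]))
```

Writing the record down this explicitly, even if the final app stores data differently, gives scientists and developers a shared artifact for checking that every field the psychological model requires is actually collected, and in a format the research team can analyze.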

Design Iterations, Development and Deployment

The final steps in smartphone app development include the actual design, development, and deployment of the app. In this regard, it is important to realize that app design is substantially different from web design. Development for mobile platforms has to respect the fact that there is less screen space and less time to engage the user’s attention, so keeping the user’s experience simple is critical. The team therefore needs to consider which features to include and whether those features fit the user’s natural environment. Asking these questions will help the team come together to discuss the research, understand its pieces, and define the goals of the end result.

The notion of “engagement” cannot be stressed enough by all parties during the design phase. Engagement is the means by which the app is designed to both (a) collect enough data to be statistically meaningful and (b) deliver the intervention with a focus on efficacy. As the Spanish philosopher Baltasar Gracián wrote, “Good things, when short, are twice as good” (Gracián, 1647). The same is true for app design. It is tempting to look at a manualized intervention and imagine a one-to-one translation into mobile. However, this should be avoided, given both the new capabilities mobile devices offer and their extreme space and time constraints. Mapping the “active ingredients” of an intervention onto mobile capabilities and constraints is a craft that requires several iterations and experts in both the intervention and user interface design.

For example, in one app that two of the authors (W.R., N.W.) created, a manualized 16-session mindfulness-based smoking cessation intervention was reduced to a 21-day program of 5–10 minutes of audio, video, or input. The focus was on making the intervention “digestible” within the context of the user’s daily life. Each additional feature (e.g., reminders, tips, tracking) was designed around the distractions users are likely to encounter in their daily environment. In other words, app designers have to take into account how much attention the user needs to understand and properly engage with the app without interfering with other activities, while maintaining the intervention’s active ingredients.

Another important design consideration is that although not every face-to-face intervention can be translated to mobile technology, it is important to question assumptions about what is possible. For example, one of the authors (J.B.) developed an Acceptance and Commitment Therapy (ACT) app for smoking cessation. Before this app was developed, some ACT theoreticians and clinicians thought ACT could not be adapted into a smartphone format, because it was originally delivered by a clinician and involved lengthy sessions with face-to-face experiential exercises. However, the app was developed and successfully tested in a randomized trial (Bricker et al., n.d.).

From a scientific perspective, the team needs to make sure that the elements of an app intervention are theoretically consistent with the psychological model being tested. In addition, careful attention must be paid to the degree to which specific questions and exercises will be interpreted by the end-user in a way that is consistent with that theoretical model. This is important because the user’s full attention may not be available for some or all of the app’s assessments or interventions. Moreover, a well-designed app that is highly acceptable to end-users is not necessarily scientifically useful (e.g., the Angry Birds game). The assessment questions might be tied to divergent theoretical models, the exercises might contain elements that contradict empirically supported processes of change, and the back-end structure of the app might not allow proper data collection. In other words, an app can be very well designed and well received by the end-user, yet not help us advance our understanding of the contextual behavioral etiology of the behavioral patterns targeted for change.

One important remark on design, particularly regarding the “mobilization” of contextual behavioral interventions and data collection, is to expect iterations. As the application and its content are developed, there will always be opportunities to make changes. Some changes will be small and trivial, while others may unexpectedly require large-scale changes to the underlying design of the interface or to the technology beneath it. This need not be problematic as long as the app vision, timeline, and budget allow for such iterations, in terms of reworking design, content, and the technical implications described in the Discovery and Technical Scope section above.

Finally, deployment differs depending on the platforms chosen to launch the behavior change app. Typically, developers focus their resources on a single platform and expand to other platforms only when they have the time, clarity, and resources to do so. For example, deployment on Apple iOS devices (i.e., iPhone, iPad, and iPod touch) requires more implementation steps (e.g., an approval process for making changes to the app) than the Android “ecosystem”; however, iOS is less “fragmented” across devices and device makers, so there are fewer debugging and compatibility issues to navigate. See Table 3 for a list of questions relevant to the design, development, and deployment of behavior change apps.

Table 3. Guiding questions for the design iterations, development and deployment phase of contextual behavioral mobile apps.

| Guiding question |
|---|
| “Can the app be used in most daily scenarios and situations?” |
| “Is the app content theoretically consistent with the psychological model we are testing?” |
| “Will notifications be helpful, or might they instead be distracting or infeasible in some situations?” |
| “Do the exercises and questions have the intended effect on the end-user?” |
| “If changes are needed, are they (a) within scope or (b) outside of scope? (Are they really needed?) Can we agree on the implications for timeline and cost?” |
| “How are we deploying the app, to how many users, for how long, and how will this process be managed?” |
| “What kinds of changes do we expect after deployment? Who is responsible for testing and finding ‘bugs,’ and how will maintenance and support be handled?” |

Summary and conclusion

There is an emerging “symbiotic” relationship in our field between behavioral scientists and mobile technologists. In line with this, this brief report provided guidance and tips on the development of smartphone apps for behavior change, a fast-growing trend. Our goal was not to provide a detailed and systematic account of how to develop smartphone apps, but to orient the reader toward practical considerations that are important to keep in mind throughout this process. These considerations included establishing a clear vision and team, the iterative nature of the discovery process, IP considerations, and finally some important elements of the design of the app itself and its deployment.

If Moore’s law continues to hold in the years to come, the cost of mobile technologies will continue to drop (Moore, 1975), making it more feasible for a wider range of contextual behavioral scientists to develop these tools. We hope the guidance and perspectives in this report will empower behavioral scientists to test the efficacy of smartphone apps for behavior change, advance our understanding of the contextual behavioral etiology of behavioral disorders, and move the field toward personalized behavior change technologies.

Acknowledgments

Support for the writing of this manuscript was provided by grants from the National Institutes of Health (T32MH082709 to RV, R01CA166646 to JB and R01AA020248-01 to MM).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Walter R. Roth, inward inc., Silicon Valley

Roger Vilardaga, University of Washington & Fred Hutchinson Cancer Research Center

Nathanael Wolfe, inward inc., Silicon Valley

Jonathan B. Bricker, Fred Hutchinson Cancer Research Center & University of Washington

Michael G. McDonell, University of Washington

References

- Aguilera A, Muench F. There’s an app for that: Information technology applications for cognitive behavioral practitioners. The Behavior Therapist. 2012;35(4):65–73.

- Bricker JB, Mull K, Vilardaga R, Kientz J, Mercer LD, Akioka K, Heffner JL. Randomized, controlled pilot trial of a smartphone app for smoking cessation using acceptance and commitment therapy. (n.d.) Manuscript submitted for publication. doi: 10.1016/j.drugalcdep.2014.07.006.

- Chen C, Haddad D, Selsky J, Hoffman JE, Kravitz RL, Estrin DE, Sim I. Making sense of mobile health data: An open architecture to improve individual- and population-level health. Journal of Medical Internet Research. 2012;14(4). doi: 10.2196/jmir.2152. (PMID: 22875563; PMCID: PMC3510692)

- Gracián B. The art of worldly wisdom. Huesca, Spain: 1647.

- Kumar S, Nilsen WJ, Abernethy A, Atienza A, Patrick K, Pavel M, Swendeman D. Mobile health technology evaluation: The mHealth evidence workshop. American Journal of Preventive Medicine. 2013;45(2):228–236. doi: 10.1016/j.amepre.2013.03.017.

- Miller G. The smartphone psychology manifesto. Perspectives on Psychological Science. 2012;7(3):221–237. doi: 10.1177/1745691612441215.

- Moore G. Progress in digital integrated electronics. Electron Devices Meeting, 1975 International. 1975;21:11–13.

- Open Source Initiative. The MIT License. 2014 May. Retrieved 2014-05-02, from http://opensource.org/about.

- Ries E. The lean startup: How today’s entrepreneurs use continuous innovation to create radically successful businesses. Random House Digital, Inc; 2011.

- Sato H, Kawahara J.-i. Selective bias in retrospective self-reports of negative mood states. Anxiety, Stress, and Coping. 2011;24(4):359–367. doi: 10.1080/10615806.2010.543132. (PMID: 21253957)

- Steinhubl S. Can mobile health technologies transform health care? JAMA. 2013;310(22):2395. doi: 10.1001/jama.2013.281078.

- Vilardaga R, Hayes SC, Atkins DC, Bresee C, Kambiz A. Comparing experiential acceptance and cognitive reappraisal as predictors of functional outcome in individuals with serious mental illness. Behaviour Research and Therapy. 2013;51(8):425–433. doi: 10.1016/j.brat.2013.04.003.