Abstract

Neurons in the primary visual cortex are more or less selective for the orientation of a light bar used for stimulation. A broad distribution of individual grades of orientation selectivity has in fact been reported in all species. A possible reason for the emergence of broad distributions is the recurrent network within which the stimulus is being processed. Here we compute the distribution of orientation selectivity in randomly connected model networks that are equipped with different spatial patterns of connectivity. We show that, for a wide variety of connectivity patterns, a linear theory based on firing rates accurately approximates the outcome of direct numerical simulations of networks of spiking neurons. Distance-dependent connectivity in networks with a more biologically realistic structure does not compromise our linear analysis, as long as the linearized dynamics, and hence the uniform asynchronous irregular activity state, remain stable. We conclude that linear mechanisms of stimulus processing are indeed responsible for the emergence of orientation selectivity and its distribution in recurrent networks with functionally heterogeneous synaptic connectivity.

Introduction

When arriving at the cortex from the sensory periphery, sensory signals are further processed by local recurrent networks. Indeed, the vast majority of the connections a cortical neuron receives come from the cortical network within which it is embedded, and only a small fraction come from feedforward afferents: the fraction of recurrent connections has been estimated to be as large as 80% [1]. The precise role of this recurrent network in sensory processing is not yet fully clear.

In the primary visual cortex of mammals like carnivores and primates, for instance, it has been proposed that the recurrent network might be mainly responsible for the amplification of orientation selectivity [2], [3]. In this scenario, a small orientation bias provided by the feedforward afferents would suffice, and selectivity would then be amplified by a nonlinear mechanism implemented by the recurrent network. This mechanism is a result of the feature-specific connectivity assumed in the model, where neurons with similar input selectivities are connected to each other with a higher probability. This, in turn, could follow from the arrangement of neurons in orientation maps [4]–[6], which implies that nearby neurons have similar preferred orientations. As nearby neurons are also connected with a higher likelihood than distant neurons, feature-specific connectivity is a straightforward result in this scenario.

Feature-specific connectivity is not evident in all species, however. In rodent visual cortex, for instance, a salt-and-pepper organization of orientation selectivity has been reported, with no apparent spatial clustering of neurons according to their preferred orientations [6]. As a result, each neuron receives a heterogeneous input from pre-synaptic sources with different preferred orientations [7].

Although an over-representation of connections between neurons of similar preferred orientations has been reported in rodents [8]–[12], presumably as a result of a Hebbian growth process during a later stage of development [13], such feature-specific connectivity is not yet statistically significant immediately after eye opening [10]. A comparable level of orientation selectivity, however, has already been reported at this stage [10]. If cortical recurrent networks contribute to sensory processing at this stage, random recurrent networks should be chosen as a model [14]–[16]. Activity-dependent reorganization of the network may nevertheless refine the connectivity and improve the performance of the processing later during development.

Here we study the distribution of orientation selectivity in random recurrent networks with heterogeneous synaptic projections, i.e. networks where the recurrent connectivity does not depend on the preferred feature of the input to the neurons. We show that in structurally homogeneous networks, the heterogeneity in functional connectivity, i.e. the heterogeneity in preferred orientations of recurrently connected neurons, is indeed responsible for a broad distribution of selectivities. A linear analysis of the network operation can account quite precisely for this distribution, for a wide range of network topologies including Erdős-Rényi random networks and networks with distance-dependent connectivity.

Methods

Network Model

In this study, we consider networks of leaky integrate-and-fire (LIF) neurons. For this spiking neuron model, the sub-threshold dynamics of the membrane potential $V_i(t)$ of neuron $i$ is described by the leaky-integrator equation

$$\tau \frac{dV_i(t)}{dt} = -V_i(t) + R\,I_i(t). \tag{1}$$

The current $I_i(t)$ represents the total input to the neuron, the integration of which is governed by the leak resistance, $R$, and the membrane time constant, $\tau$. When the voltage reaches the threshold $V_{\text{th}}$, a spike is generated and transmitted to all postsynaptic neurons, and the membrane potential is reset to the resting potential $V_{\text{r}}$. It remains at this level for a short absolute refractory period, $\tau_{\text{ref}}$, during which all synaptic currents are shunted.
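For readers who want to experiment with Eq. (1), a minimal forward-Euler sketch of a single LIF neuron is given below. It assumes δ-shaped synaptic currents, so that each input spike makes the membrane potential jump by a fixed PSP amplitude, and it uses illustrative values (membrane time constant 20 ms, threshold 20 mV, reset 10 mV, refractory period 2 ms, time step 0.1 ms) that are our assumptions rather than the parameters of this study; the function names are hypothetical.

```python
import math
import random


def poisson_count(rng, lam):
    """Draw a Poisson-distributed count with mean lam (Knuth's method, small lam)."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p < limit:
            return k
        k += 1


def simulate_lif(rate_in, psp, t_sim=1.0, dt=1e-4, tau=0.020,
                 v_th=20.0, v_r=10.0, t_ref=0.002, seed=0):
    """Forward-Euler integration of tau dV/dt = -V + R I(t), Eq. (1).

    Assumption: R I(t) is a train of delta pulses, so every input spike
    increments V by `psp` (mV). Units: s, mV, Hz. Returns the spike times.
    """
    rng = random.Random(seed)
    ref_steps = int(round(t_ref / dt))
    v, blocked, spikes = v_r, 0, []
    for step in range(int(round(t_sim / dt))):
        if blocked > 0:            # absolute refractory period:
            blocked -= 1           # V stays at v_r, synaptic input is shunted
            continue
        n_in = poisson_count(rng, rate_in * dt)   # input spikes in this step
        v += -v * dt / tau + psp * n_in
        if v >= v_th:              # threshold crossing: spike, then reset
            spikes.append(step * dt)
            v, blocked = v_r, ref_steps
    return spikes


spikes = simulate_lif(rate_in=16_000.0, psp=0.1)
print(f"{len(spikes)} spikes in 1 s")
```

Here the mean drive, τ · `psp` · `rate_in` = 32 mV, lies above threshold, so the neuron fires fairly regularly; lowering `rate_in` or `psp` until the mean drive is below threshold makes firing depend on the input fluctuations.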

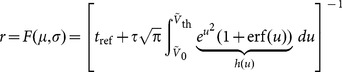

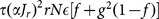

The response statistics of a LIF neuron driven by randomly arriving input spikes can be solved analytically in the stationary case. Assuming a fixed voltage threshold, $V_{\text{th}}$, the solution of the first-passage time problem in response to randomly and rapidly fluctuating input yields explicit expressions for the moments of the inter-spike interval distribution [17], [18]. In particular, the mean response rate of the neuron, $r$, is obtained in terms of the mean, $\mu$, and the variance, $\sigma^2$, of the fluctuating input as

$$r = f(\mu, \sigma) = \left[\tau_{\text{ref}} + \tau\sqrt{\pi}\int_{y_{\text{r}}}^{y_{\text{th}}} e^{u^2}\bigl(1 + \operatorname{erf}(u)\bigr)\,du\right]^{-1} \tag{2}$$

with $y_{\text{r}} = (V_{\text{r}} - \mu)/\sigma$ and $y_{\text{th}} = (V_{\text{th}} - \mu)/\sigma$.

Employing a mean-field ansatz, the above theory can be applied to networks of identical pulse-coupled LIF neurons, randomly connected with homogeneous in-degrees and driven by external excitatory input of the same strength. Under these conditions, all neurons exhibit the same mean firing rate, which can be determined by a straightforward self-consistency argument [19], [20]: the firing rate $r$ is a function of the first two cumulants of the input fluctuations, $\mu$ and $\sigma^2$, which are, in turn, functions of the input. If $s$ is the input (stimulus) firing rate and $r$ is the mean response rate of all neurons in the network, we have the relations

$$\mu = \tau\bigl[J_s\,s + J\,(C_E - g\,C_I)\,r\bigr], \qquad \sigma^2 = \tau\bigl[J_s^2\,s + J^2\,(C_E + g^2 C_I)\,r\bigr]. \tag{3}$$

Here $C_E = \epsilon f N$ and $C_I = \epsilon(1-f)N$ are the numbers of excitatory and inhibitory connections each neuron receives (see below), $J_s$ denotes the amplitude of an excitatory post-synaptic potential (EPSP) of external inputs, and $J$ denotes the amplitude of recurrent EPSPs. The factor $g$ is the inhibition-excitation ratio, which fixes the strength of inhibitory post-synaptic potentials (IPSPs) to $-gJ$. Synapses are modeled as δ-currents, where the pre-synaptic current is delivered to the post-synaptic neuron instantaneously, after a fixed transmission delay of … .

The remaining structural parameters are the total number of neurons in the network, $N$, the connection probability, $\epsilon$, and the fraction $f$ of neurons in the network that are excitatory ($N_E = fN$), implying that a fraction $1-f$ is inhibitory ($N_I = (1-f)N$). For all networks considered here we have used … and …, and … was always fixed at … . For all network connectivities, we fix the in-degree separately for the excitatory and the inhibitory population. That is, each neuron, be it excitatory or inhibitory, receives exactly $C_E = \epsilon N_E$ connections randomly sampled from the excitatory population and $C_I = \epsilon N_I$ connections randomly sampled from the inhibitory population. Multiple synaptic contacts and self-contacts are excluded.
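The fixed in-degree rule can be sketched in Python as follows. The sketch assumes that $C_E = \epsilon N_E$ and $C_I = \epsilon N_I$ are rounded to integers, that excitatory neurons carry the lower indices, and that the network is stored as one list of (pre-synaptic index, weight) pairs per postsynaptic neuron; these choices, the example parameter values and the function name are illustrative, not taken from the simulations reported here.

```python
import random


def fixed_indegree_network(n, f, eps, j, g, seed=0):
    """Random network in which every neuron has exactly c_e excitatory and
    c_i inhibitory pre-synaptic partners (fixed in-degree).

    Neurons 0..n_e-1 are excitatory, n_e..n-1 inhibitory. Returns, for each
    postsynaptic neuron i, a list of (pre-synaptic index, weight) pairs with
    weight j for excitatory and -g*j for inhibitory synapses.
    """
    rng = random.Random(seed)
    n_e = int(round(f * n))
    c_e = int(round(eps * n_e))
    c_i = int(round(eps * (n - n_e)))
    rows = []
    for i in range(n):
        # rng.sample draws without replacement -> no multiple contacts;
        # the candidate pools exclude i itself -> no self-contacts.
        pre_e = rng.sample([k for k in range(n_e) if k != i], c_e)
        pre_i = rng.sample([k for k in range(n_e, n) if k != i], c_i)
        rows.append([(k, j) for k in pre_e] + [(k, -g * j) for k in pre_i])
    return rows, n_e, c_e, c_i


rows, n_e, c_e, c_i = fixed_indegree_network(n=1000, f=0.8, eps=0.1, j=0.1, g=8.0)
```

Sampling without replacement enforces the exclusion of multiple contacts, and removing the postsynaptic neuron from its own candidate pool excludes self-contacts.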

In our simulations, inputs are stationary and independent Poisson processes, described by a vector $\mathbf{s}$ of average firing rates. Its $i$-th entry, $s_i$, the average firing rate of the input to the $i$-th neuron, depends on the stimulus orientation $\theta$ and the input preferred orientation (PO) of the neuron, $\theta_i^*$, according to

$$s_i(\theta) = s_b\left[1 + m\cos\bigl(2(\theta - \theta_i^*)\bigr)\right]. \tag{4}$$

The baseline $s_b$ is the level of input common to all orientations, and the peak input is $s_b(1+m)$. The input PO is assigned randomly and independently to each neuron in the population. To measure the output tuning curves in numerical simulations, we stimulated the networks with … different stimulus orientations, covering the full range between … and … in steps of … . The stimulation at each orientation was run for …, using a simulation time step of … . Onset transients (the first …) were discarded.
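Eq. (4), together with one way of reading out the baseline (F0) and modulation (F2) components of a sampled tuning curve, can be sketched as below. We assume angles in radians, K ≥ 3 stimulus orientations evenly spaced over [0, π), and an F2 normalization chosen such that F2 equals the modulation amplitude $m s_b$ for the input of Eq. (4); the helper names and numerical values are hypothetical.

```python
import cmath
import math
import random


def input_rate(theta, po, s_b, m):
    """Eq. (4): s(theta) = s_b * (1 + m * cos(2 * (theta - po))), angles in rad."""
    return s_b * (1.0 + m * math.cos(2.0 * (theta - po)))


def f0_f2(rates, thetas):
    """Mean (F0) and second-harmonic amplitude (F2) of a sampled tuning curve.

    Assumes the K >= 3 orientations in `thetas` are evenly spaced over [0, pi).
    """
    k = len(rates)
    f0 = sum(rates) / k
    f2 = abs(sum(r * cmath.exp(-2j * t) for r, t in zip(rates, thetas))) * 2.0 / k
    return f0, f2


rng = random.Random(1)
s_b, m, n_theta = 16_000.0, 0.1, 12          # assumed illustrative values
thetas = [math.pi * k / n_theta for k in range(n_theta)]
po = rng.uniform(0.0, math.pi)               # random input preferred orientation
curve = [input_rate(t, po, s_b, m) for t in thetas]
f0, f2 = f0_f2(curve, thetas)
```

For an untransformed input tuning curve, F0 recovers the baseline $s_b$ and F2 recovers $m s_b$, independently of the random PO.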

Linearized Rate Equations

To quantify the response of a network to tuned input, we first compute its baseline (untuned) output firing rate, $r_b$. This procedure is described in detail elsewhere [16], and we only recapitulate the main steps and equations here. If the attenuation of the baseline and the amplification of the modulation are performed by two essentially independent processing channels in the network [16], the baseline firing rate can be computed from the fixed-point equation

$$r_b = f\bigl(\mu(s_b, r_b),\, \sigma(s_b, r_b)\bigr), \tag{5}$$

the root of which can be found numerically [16], [20].
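A compact sketch of this computation is given below: it evaluates Eq. (2) by Simpson's rule, computes the input moments of Eq. (3), and finds the root of Eq. (5) by bisection. All parameter values (including N = 10,000, ε = 0.1, f = 0.8, J = J_s = 0.1 mV, g = 8 and s_b = 16,000 Hz) are assumptions chosen only to give an inhibition-dominated example, and bisection is our choice of root finder, not necessarily the one used in [16], [20].

```python
import math

# Illustrative parameter values (assumptions, NOT the values used in this study).
TAU, TAU_REF = 0.020, 0.002        # membrane time constant, refractory period (s)
V_TH, V_R = 20.0, 10.0             # threshold and reset potential (mV)
N, EPS, F = 10_000, 0.1, 0.8       # network size, connection prob., exc. fraction
J, J_S, G = 0.1, 0.1, 8.0          # recurrent and external EPSP (mV), inh./exc. ratio


def phi(u):
    """e^{u^2} (1 + erf u), evaluated as e^{u^2} erfc(-u)."""
    if u < -25.0:  # asymptotic form, avoids evaluating inf * 0
        return (1.0 - 0.5 / (u * u)) / (-u * math.sqrt(math.pi))
    return math.exp(u * u) * math.erfc(-u)


def rate(mu, sigma, n=400):
    """Stationary LIF rate f(mu, sigma) of Eq. (2), Simpson's rule with n (even) steps."""
    y_r, y_th = (V_R - mu) / sigma, (V_TH - mu) / sigma
    if y_th > 25.0:
        return 0.0  # the integral is astronomically large: rate is effectively 0
    h = (y_th - y_r) / n
    acc = phi(y_r) + phi(y_th)
    acc += sum((4 if k % 2 else 2) * phi(y_r + k * h) for k in range(1, n))
    return 1.0 / (TAU_REF + TAU * math.sqrt(math.pi) * acc * h / 3.0)


def moments(s, r):
    """Mean and standard deviation of the input, Eq. (3)."""
    c_e, c_i = EPS * F * N, EPS * (1.0 - F) * N
    mu = TAU * (J_S * s + J * (c_e - G * c_i) * r)
    var = TAU * (J_S ** 2 * s + J ** 2 * (c_e + G ** 2 * c_i) * r)
    return mu, math.sqrt(var)


def baseline_rate(s_b, r_max=100.0, iters=100):
    """Root of r_b = f(mu(s_b, r_b), sigma(s_b, r_b)), Eq. (5), by bisection."""
    def residual(r):
        return rate(*moments(s_b, r)) - r
    lo, hi = 0.0, r_max
    if not residual(lo) > 0.0 > residual(hi):
        raise ValueError("fixed point not bracketed by [0, r_max]")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if residual(mid) > 0.0 else (lo, mid)
    return 0.5 * (lo + hi)


r_b = baseline_rate(s_b=16_000.0)
print(f"r_b = {r_b:.2f} Hz")
```

Bisection only requires the fixed point to be bracketed, which holds for these values because the residual is positive at r = 0 and negative at `r_max`.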

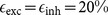

Now we linearize the network dynamics about an operating point defined by the baseline. First, we write the full nonlinear rate equation of the network as $\mathbf{r} = f(\boldsymbol{\mu}, \boldsymbol{\sigma})$, applied element-wise. Here, the mean and the variance of the input are expressed, in matrix-vector notation, as

$$\boldsymbol{\mu} = \tau\,\bigl(J_s\,\mathbf{s} + \mathbf{W}\mathbf{r}\bigr), \qquad \boldsymbol{\sigma}^2 = \tau\,\bigl(J_s^2\,\mathbf{s} + \mathbf{W}^{\circ 2}\mathbf{r}\bigr), \tag{6}$$

where $\mathbf{s}$ and $\mathbf{r}$ are $N$-dimensional column vectors of input and output firing rates, respectively, and $\mathbf{W}$ is the weight matrix of the network. Its entry $W_{ij}$, the weight of a synaptic connection from neuron $j$ to neuron $i$, is either $0$ if there is no synapse, $J$ if there is an excitatory synapse, or $-gJ$ if there is an inhibitory synapse. Matrix $\mathbf{W}^{\circ 2}$ is the element-wise square of $\mathbf{W}$, that is $(\mathbf{W}^{\circ 2})_{ij} = W_{ij}^2$.

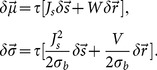

The extra firing rate of all neurons, $\delta\mathbf{r}$ (output modulation), in response to a small perturbation of their inputs, $\delta\mathbf{s}$ (input modulation), is obtained by linearizing the dynamics about the baseline, i.e. about $\mu_b$ and $\sigma_b$ (obtained from Eq. (3) evaluated at $s = s_b$ and $r = r_b$):

$$\delta\mathbf{r} = \frac{\partial f}{\partial \mu}\bigg|_b\,\delta\boldsymbol{\mu} + \frac{\partial f}{\partial \sigma}\bigg|_b\,\delta\boldsymbol{\sigma}. \tag{7}$$

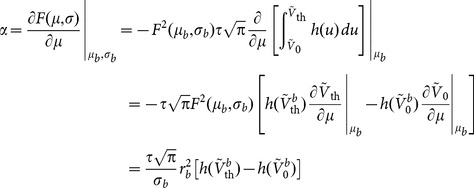

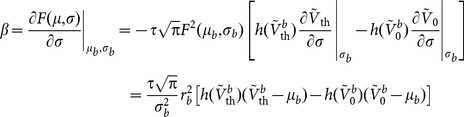

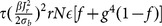

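The partial derivatives of $f$ at this operating point can be computed from Eq. (2) as

$$\frac{\partial f}{\partial \mu}\bigg|_b = \frac{r_b^2\,\tau\sqrt{\pi}}{\sigma_b}\Bigl[\phi\bigl(y_{\text{th}}^b\bigr) - \phi\bigl(y_{\text{r}}^b\bigr)\Bigr] \tag{8}$$

and, in a similar fashion,

$$\frac{\partial f}{\partial \sigma}\bigg|_b = \frac{r_b^2\,\tau\sqrt{\pi}}{\sigma_b}\Bigl[y_{\text{th}}^b\,\phi\bigl(y_{\text{th}}^b\bigr) - y_{\text{r}}^b\,\phi\bigl(y_{\text{r}}^b\bigr)\Bigr], \tag{9}$$

where $\phi(u) = e^{u^2}\bigl(1 + \operatorname{erf}(u)\bigr)$, and $r_b$, $\sigma_b$, $y_{\text{r}}^b$ and $y_{\text{th}}^b$ are the corresponding parameters evaluated at the baseline (for further details on this derivation, see [21]).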
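Differentiating the integration limits of Eq. (2) with respect to μ and σ gives closed forms for the two partial derivatives: ∂f/∂μ = r²τ√π [φ(y_th) − φ(y_r)]/σ and ∂f/∂σ = r²τ√π [y_th φ(y_th) − y_r φ(y_r)]/σ, with φ(u) = e^{u²}(1 + erf u). The sketch below checks these expressions against central finite differences at an assumed operating point (μ = 15 mV, σ = 5 mV) with assumed neuron parameters; it is a consistency check of the formulas, not a reproduction of this study's numbers.

```python
import math

TAU, TAU_REF, V_TH, V_R = 0.020, 0.002, 20.0, 10.0   # assumed values (s, mV)


def phi(u):
    """e^{u^2} (1 + erf u), the integrand of Eq. (2); fine for moderate |u|."""
    return math.exp(u * u) * math.erfc(-u)


def rate(mu, sigma, n=2000):
    """f(mu, sigma) of Eq. (2) via Simpson's rule with n (even) steps."""
    y_r, y_th = (V_R - mu) / sigma, (V_TH - mu) / sigma
    h = (y_th - y_r) / n
    acc = phi(y_r) + phi(y_th)
    acc += sum((4 if k % 2 else 2) * phi(y_r + k * h) for k in range(1, n))
    return 1.0 / (TAU_REF + TAU * math.sqrt(math.pi) * acc * h / 3.0)


def rate_derivatives(mu, sigma):
    """Closed-form partial derivatives of f with respect to mu and sigma."""
    r = rate(mu, sigma)
    y_r, y_th = (V_R - mu) / sigma, (V_TH - mu) / sigma
    pref = r * r * TAU * math.sqrt(math.pi) / sigma
    d_mu = pref * (phi(y_th) - phi(y_r))
    d_sigma = pref * (y_th * phi(y_th) - y_r * phi(y_r))
    return d_mu, d_sigma


mu_b, sigma_b, eps = 15.0, 5.0, 1e-4     # assumed operating point and step
d_mu, d_sigma = rate_derivatives(mu_b, sigma_b)
fd_mu = (rate(mu_b + eps, sigma_b) - rate(mu_b - eps, sigma_b)) / (2 * eps)
fd_sigma = (rate(mu_b, sigma_b + eps) - rate(mu_b, sigma_b - eps)) / (2 * eps)
print(d_mu, fd_mu, d_sigma, fd_sigma)
```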

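We also need to express $\delta\boldsymbol{\mu}$ and $\delta\boldsymbol{\sigma}$ in terms of the input perturbations. In fact, they can be written in terms of $\delta\mathbf{s}$ and $\delta\mathbf{r}$ from Eq. (6) as:

$$\delta\boldsymbol{\mu} = \tau\,\bigl(J_s\,\delta\mathbf{s} + \mathbf{W}\,\delta\mathbf{r}\bigr), \qquad \delta\boldsymbol{\sigma} = \frac{\tau}{2\sigma_b}\,\bigl(J_s^2\,\delta\mathbf{s} + \mathbf{W}^{\circ 2}\,\delta\mathbf{r}\bigr). \tag{10}$$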

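For the total output perturbation, $\delta\mathbf{r}$, we therefore obtain

$$\delta\mathbf{r} = \tau\,\frac{\partial f}{\partial \mu}\bigg|_b\bigl(J_s\,\delta\mathbf{s} + \mathbf{W}\,\delta\mathbf{r}\bigr) + \frac{\tau}{2\sigma_b}\,\frac{\partial f}{\partial \sigma}\bigg|_b\bigl(J_s^2\,\delta\mathbf{s} + \mathbf{W}^{\circ 2}\,\delta\mathbf{r}\bigr). \tag{11}$$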

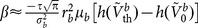

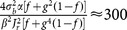

With the simulation parameters used here, our network typically operates in a fluctuation-driven regime of activity with a comparable level of input mean and fluctuations, … . As a result, the contribution of the mean to the output modulation in Eq. (11) is … larger than the contribution of the variance. In the noise-dominated regime, … and … are small compared to … in Eq. (9), and hence we can write …, yielding … . Thus, with a comparable level of mean and fluctuations, the contribution of the mean to the output modulation is … larger than the contribution of the variance. In fact, the more the network operates in the noise-dominated regime, the more the mean term becomes dominant over the variance term, making the second term on the right-hand side of Eq. (11) negligible.

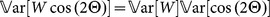

For the network shown in Fig. 1 and Fig. 2, for instance, … . Given the general parameters of our simulation, we obtain … and … . This yields … and …, and finally … and … . In response to feedforward input perturbations, therefore, the contribution of the mean term is … times the contribution of the variance term. In response to recurrent perturbation vectors with zero mean, both the mean term and the variance term would respond with zero output, on average. The variance of their responses, in contrast, is not zero; a computation similar to Eq. (3) yields … and … for the terms resulting from the mean and variance contributions, respectively. That is, the mean contribution is again dominant, by a factor of … .

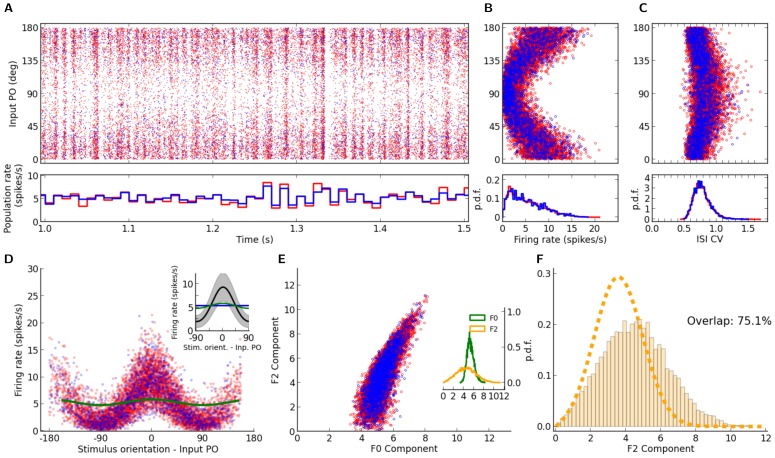

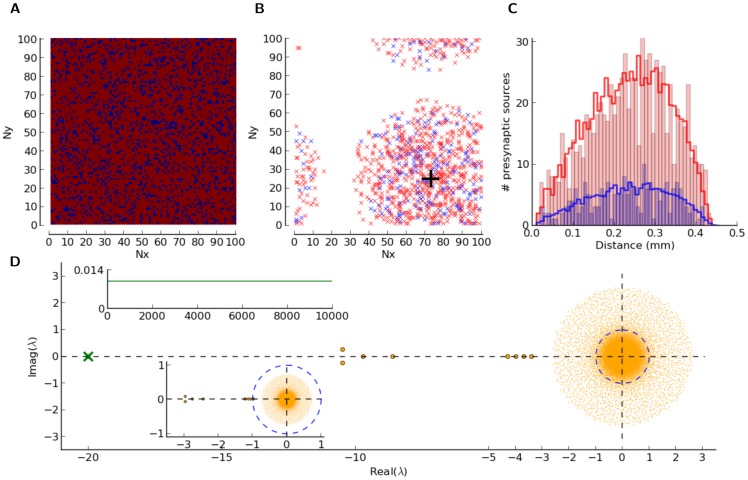

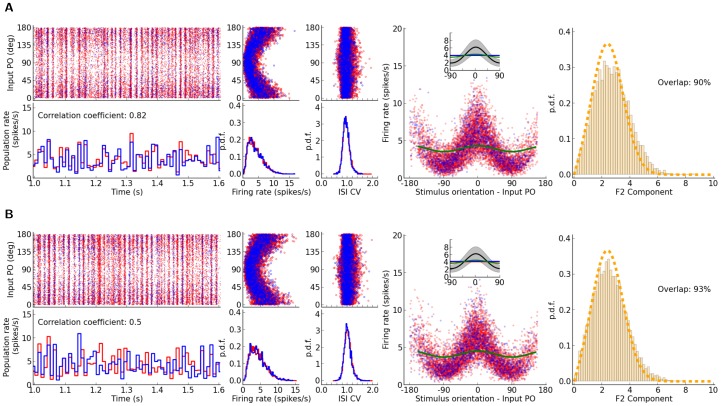

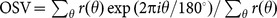

Figure 1. Distribution of orientation selectivity in networks with Erdős-Rényi random connectivity.

(A) Raster plot of network activity in response to a stimulus with orientation … . Neurons are sorted according to their input preferred orientations, $\theta^*$, indicated on the vertical axis. The histogram at the bottom shows the population firing rates, averaged in time bins of … width. Here, and in all other figures, red and blue colors denote excitatory and inhibitory neurons, or neuronal populations, respectively. (B) Average firing rates for all neurons in the network, estimated from the spike count over the whole stimulation period (…). The distribution of firing rates over the population is depicted in the histogram at the bottom. (C) Coefficient of variation (CV) of the inter-spike intervals (ISI), $\mathrm{CV}_{\mathrm{ISI}}$, computed for all neurons in the network with more than … spikes during the stimulation. The distribution of $\mathrm{CV}_{\mathrm{ISI}}$ is plotted at the bottom. (D) Sample output tuning curves of … excitatory and … inhibitory neurons randomly chosen from the network, all aligned at their input preferred orientations. The input tuning (green, same as Eq. (4)) is normalized to the population average of the baseline (mean over all orientations) of output tuning curves. Inset: The mean (across the population) of aligned output tuning curves is shown in black. The gray shading indicates … extracted from the population. Linearly interpolated versions of individual tuning curves (generated at a resolution of …) have been used to compute … and … of aligned tuning curves. The population average of the baseline (mean over all orientations) of output tuning curves is shown separately for excitatory and inhibitory populations with a red and a blue line, respectively (the lines largely overlap, since the average activities of both populations almost coincide). The normalized input tuning curve (green) is obtained by the same method as used for the main plot. (E) Scatter plot of F0 and F2 components, extracted from individual output tuning curves in the network. The individual distributions of F0 and F2 components over the population are plotted in the inset. (F) Distribution of single-neuron F2 components from a network simulation (histogram) compared with the prediction of our theory (dashed line, computed from Eq. (25)). To evaluate the goodness of match, the overlap of the empirical and predicted probability density functions is computed as … . This returns an overlap index between 0 and 1, corresponding to no overlap and perfect match of distributions, respectively. Parameters of the network simulation are: … .

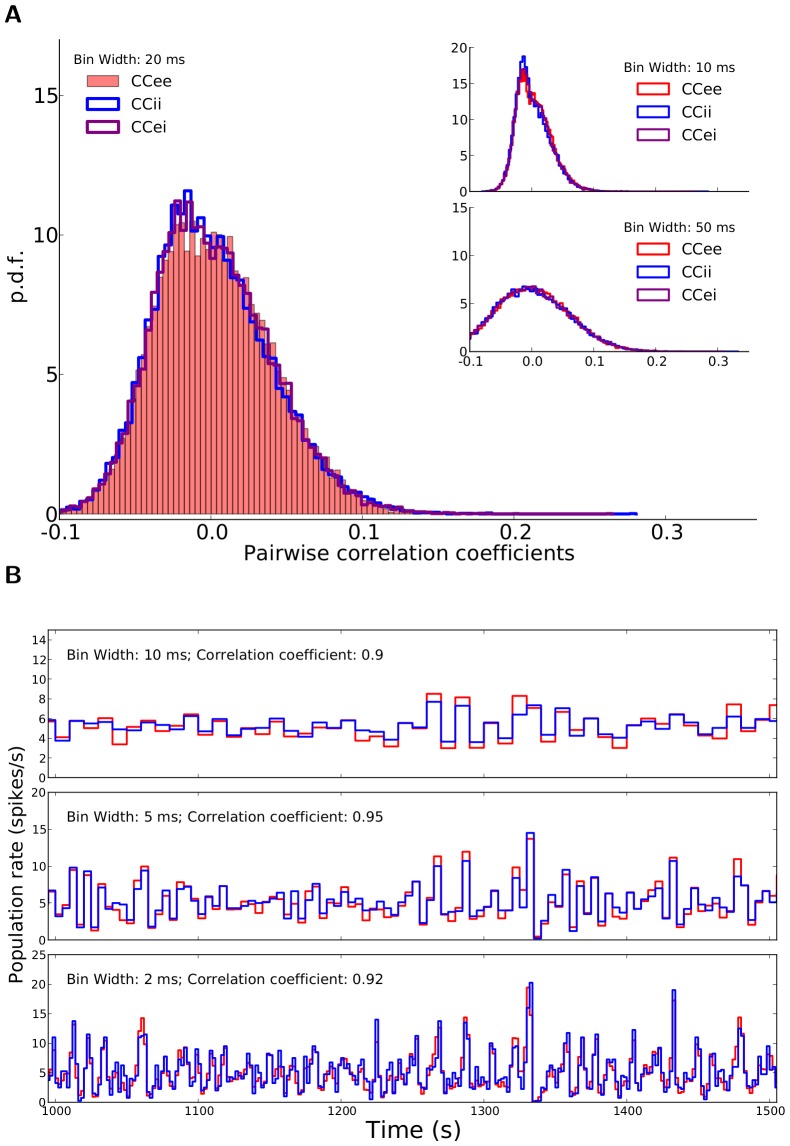

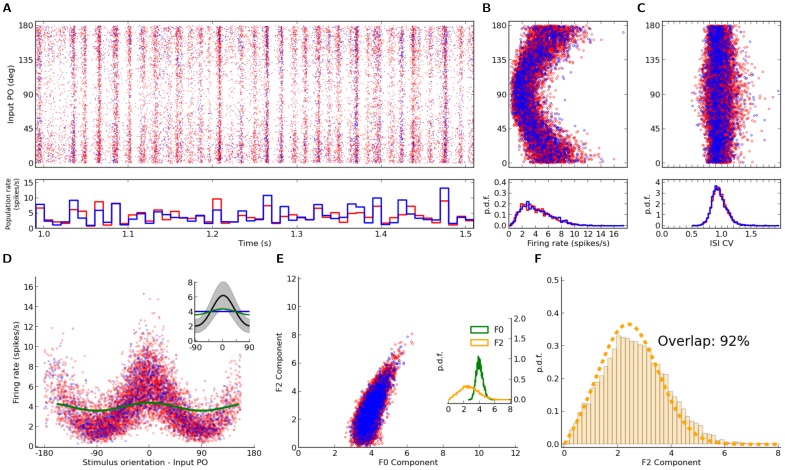

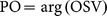

Figure 2. Correlations in the network.

(A) Distribution of correlation coefficients for pairs of neurons in the network. For the example network of Fig. 1, the distribution of Pearson correlation coefficients (CC) between spike trains of pairs of neurons is plotted. … excitatory and … inhibitory neurons are randomly sampled from the network, and all pairwise correlations (between pairs of excitatory, pairs of inhibitory, and pairs of excitatory and inhibitory samples), based on spike counts in bins of width …, are computed. The corresponding distributions for smaller (…) and larger (…) bins are shown in the inset (top and bottom, respectively). (B) The time series of the excitatory and inhibitory population spike counts indicate a fine balance at the population level. The correlation of activity between excitatory (red) and inhibitory (blue) populations is quite high on different time scales. The similarity of the temporal pattern of population activities is again quantified by the Pearson correlation coefficient.

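In the rest of our computation we therefore ignore the second term on the right-hand side of Eq. (11) and approximate the output modulation as:

$$\delta\mathbf{r} \approx \tau\,\frac{\partial f}{\partial \mu}\bigg|_b\,\bigl(J_s\,\delta\mathbf{s} + \mathbf{W}\,\delta\mathbf{r}\bigr). \tag{12}$$

We call

$$\beta = \tau\,\frac{\partial f}{\partial \mu}\bigg|_b \tag{13}$$

the "linearized gain" and write the linearized rate equation of the network in response to small input perturbations as:

$$\delta\mathbf{r} = \beta\,\bigl(J_s\,\delta\mathbf{s} + \mathbf{W}\,\delta\mathbf{r}\bigr). \tag{14}$$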

Linear and Supralinear Gains

The gain $\beta$ is the linearized gain in the firing rate of a single LIF neuron in response to small changes in its mean input, while it is embedded in a recurrent network operating in its baseline asynchronous irregular (AI) state. That is, it is the extra firing rate, $\delta r$, of a neuron in response to a perturbation in its input, $\delta s$, when all other neurons receive the same untuned input as before, divided by the input modulation weighted by its effect on the postsynaptic membrane, $J_s\,\delta s$.

As an alternative to the analytic derivation pursued above, this gain can also be evaluated numerically by perturbing the baseline input rate with an extra input, $\delta s$:

$$\beta(\delta s) = \frac{f\bigl(\mu(s_b + \delta s, r_b),\, \sigma(s_b + \delta s, r_b)\bigr) - r_b}{J_s\,\delta s}. \tag{15}$$

(Note that, as this is the response gain of an individual neuron to an individual perturbation in its input when all other neurons receive the same baseline input, it is not necessary to consider the perturbation in the recurrent firing rate, $\delta r$, in the baseline state.)

If this procedure is repeated for each $\delta s$, a numerical $\beta$–$\delta s$ curve is obtained. This is the curve plotted in Fig. 3A as "Numerical perturbation". If the neuronal response were completely linear, this curve would not differ much from the result of our analytical perturbation (Eq. (13), denoted by "Linearized gain" in Fig. 3A). The numerical perturbation, however, reveals some supralinear behavior, i.e. larger perturbations lead to a higher input-output gain. As a result, if we compute the gain at a perturbation size equal to the input modulation ($\delta s = m s_b$, the modulation amplitude of Eq. (4)), a different gain is obtained. We use the term "stimulus gain" to refer to this supralinear gain at the modulation size of the input (i.e. when $\delta s = m s_b$):

$$\beta_s = \beta(\delta s = m\,s_b). \tag{16}$$

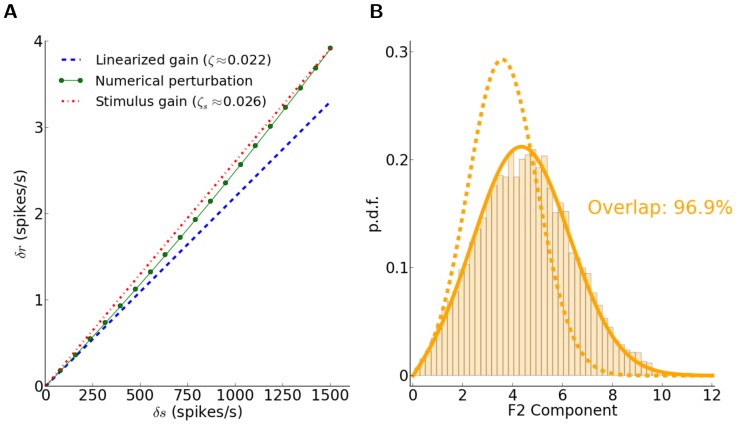

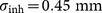

Figure 3. Supralinear neuronal gain affects the linear prediction.

(A) Discrepancy of the linearized gain with the gain computed at stronger input modulations. The linearized gain of the neuron obtained analytically from Eq. (13) (dashed blue line) is compared with the numerical solution of Eq. (5) with an input perturbation equal to the modulation in the feedforward input, $\delta s = m s_b$ (see Eq. (15) in Methods). The red line shows the corresponding linearized gain that would have been computed with this perturbation, $\beta_s$ (Eq. (16)). (B) Comparison of our theoretical prediction of the distribution with $\beta$ and $\beta_s$ (dashed and solid lines, respectively). The overlap index of the improved prediction, i.e. when $\beta$ is replaced by $\beta_s$ in Eq. (30), is considerably higher.

This is shown by the red line in Fig. 3A.

Linear Tuning in Recurrent Networks

Once we have obtained the linearized gain at the baseline state of network operation, the linearized rate equation of the network for modulations about the baseline activity follows. Each neuron responds to the aggregate perturbation in its input with a gain obtained by the linearization formalism employed. The total perturbation consists of a feedforward component, which is the modulation in the input (stimulus) firing rate of the neuron, and a recurrent component, which is a linear sum of the respective output perturbations of the pre-synaptic neurons in the recurrent network. This can, therefore, be written, in vector-matrix notation, as:

$$\delta\mathbf{r} = \beta\,\bigl(J_s\,\delta\mathbf{s} + \mathbf{W}\,\delta\mathbf{r}\bigr). \tag{17}$$

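If $\mathbf{I} - \beta\mathbf{W}$ is invertible, the output firing rates can be computed directly as

$$\delta\mathbf{r} = \beta J_s\,(\mathbf{I} - \beta\mathbf{W})^{-1}\,\delta\mathbf{s}, \tag{18}$$

which, if the spectral radius of $\beta\mathbf{W}$ is smaller than one, can be further expanded into

$$\delta\mathbf{r} = \beta J_s\,\bigl(\mathbf{I} + \beta\mathbf{W} + \beta^2\mathbf{W}^2 + \beta^3\mathbf{W}^3 + \cdots\bigr)\,\delta\mathbf{s}. \tag{19}$$

Ignoring higher-order contributions (terms of second and higher order in $\beta\mathbf{W}$), Eq. (19) can be approximated as

$$\delta\mathbf{r} \approx \beta J_s\,(\mathbf{I} + \beta\mathbf{W})\,\delta\mathbf{s}. \tag{20}$$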

Eq. (20) for each stimulus orientation returns the modulation of the output firing rate of all neurons in the network in response to a given input modulation.
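The step from the recurrent rate equation δr = β(J_s δs + W δr) to its first-order approximation δr ≈ βJ_s(I + βW)δs can be checked numerically. The Python sketch below, which uses only the standard library, builds a small random weight matrix (an assumed toy network with Bernoulli connectivity, not the networks simulated here), solves the linear equation by fixed-point iteration (each iteration adds one more term of the series expansion, so this converges to the exact inverse when the spectral radius of βW is below one), and compares the result with the first-order approximation. The values of β, J_s and the network parameters are illustrative assumptions; the function names are hypothetical.

```python
import random


def matvec(w, x):
    """Dense matrix-vector product for a list-of-lists matrix."""
    return [sum(a * b for a, b in zip(row, x)) for row in w]


def linear_response(w, beta, j_s, ds, tol=1e-12, max_iter=10_000):
    """Solve dr = beta * (j_s * ds + W dr) by fixed-point iteration.

    Iteration k adds the term (beta W)^k of the series expansion, so the
    result converges to the exact solution if the spectral radius of
    beta*W is below 1.
    """
    ff = [beta * j_s * x for x in ds]          # feedforward part, beta*J_s*ds
    dr = ff
    for _ in range(max_iter):
        new = [f + beta * y for f, y in zip(ff, matvec(w, dr))]
        if max(abs(a - b) for a, b in zip(new, dr)) < tol:
            return new
        dr = new
    raise RuntimeError("no convergence; spectral radius of beta*W may be >= 1")


def first_order_response(w, beta, j_s, ds):
    """First-order approximation: dr ~ beta * J_s * (I + beta W) ds."""
    return [beta * j_s * (x + beta * y) for x, y in zip(ds, matvec(w, ds))]


rng = random.Random(0)
n, p, j, g = 200, 0.1, 0.1, 8.0                # assumed toy network (j in mV)
n_exc = int(0.8 * n)
w = [[0.0] * n for _ in range(n)]
for i in range(n):
    for k in range(n):
        if k != i and rng.random() < p:
            w[i][k] = j if k < n_exc else -g * j
beta, j_s = 0.2, 0.1                            # assumed gain (1/mV) and EPSP (mV)
ds = [rng.gauss(0.0, 100.0) for _ in range(n)]  # zero-mean input modulation (Hz)
exact = linear_response(w, beta, j_s, ds)
approx = first_order_response(w, beta, j_s, ds)
feedforward = [beta * j_s * x for x in ds]
```

Comparing `approx` and `feedforward` with `exact` shows how much of the recurrent contribution is already captured by the single term βW; the remaining error shrinks as the spectral radius of βW decreases.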

We then assume that all inputs $s_i$ are linearly tuned to the stimulus $\mathbf{z}$ according to

$$s_i(\mathbf{z}) = s_b + \boldsymbol{\zeta}_i^{\top}\mathbf{z}, \tag{21}$$

where $s_b$ is the baseline rate in the absence of stimulation and the vector $\boldsymbol{\zeta}_i$ is the preferred feature vector of the $i$-th neuron. The length of this vector, $\|\boldsymbol{\zeta}_i\|$, is the tuning strength. To ensure the linearity of operation, the firing rate $s_i(\mathbf{z})$ should always remain positive:

$$s_b + \boldsymbol{\zeta}_i^{\top}\mathbf{z} > 0 \quad \text{for all } \mathbf{z} \text{ and all } i. \tag{22}$$

If this condition is satisfied, the linearity of the tuning and positivity of firing rates remain compatible. If the condition is violated, partial rectification of the neuronal tuning curve follows and the linear analysis does not fully hold.

To obtain the operation of the network on input preferred feature vectors, we can write Eq. (20) for the input tuning curves, $\delta\mathbf{s}(\mathbf{z}) = \mathbf{s}(\mathbf{z}) - s_b\mathbf{1} = \mathbf{Z}\mathbf{z}$:

$$\delta\mathbf{r}(\mathbf{z}) = \beta J_s\,(\mathbf{I} + \beta\mathbf{W})\,\mathbf{Z}\,\mathbf{z}. \tag{23}$$

Here $\mathbf{Z}$ is a matrix the rows of which are given by the transposed preferred features $\boldsymbol{\zeta}_i^{\top}$. Therefore, all neurons in the recurrent network are again linearly tuned, with preferred features encoded by the rows of the matrix $\beta J_s(\mathbf{I} + \beta\mathbf{W})\mathbf{Z}$. From here we can compute the matrix of output feature vectors, $\hat{\mathbf{Z}}$, as

$$\hat{\mathbf{Z}} = \beta J_s\,\mathbf{Z} + \beta^2 J_s\,\mathbf{W}\mathbf{Z}. \tag{24}$$

The first term on the right-hand side is the weighted tuning vector of the feedforward input each neuron receives, and the second term is the mixture of tuning vectors of corresponding pre-synaptic neurons in the recurrent network.
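The feature-vector computation can be illustrated with two-dimensional orientation feature vectors. The sketch below assumes a toy random network and the input tuning of Eq. (4), whose modulation corresponds to a feature vector of length m s_b pointing at twice the input PO; this encoding, the stimulus vector z = (cos 2θ, sin 2θ), the function name and all numbers are assumptions made for illustration. Each output feature vector is the weighted feedforward vector plus β² J_s times the W-weighted sum of the pre-synaptic input vectors, and its dot product with z gives that neuron's linear response to orientation θ.

```python
import math
import random


def output_features(w, beta, j_s, zeta):
    """Row i of Z_hat: beta*J_s*zeta_i + beta**2*J_s*sum_k W_ik zeta_k.

    `w` is a dense list-of-lists weight matrix and `zeta` a list of 2-D input
    tuning vectors (the rows of Z).
    """
    z_hat = []
    for i, row in enumerate(w):
        rec_x = sum(w_ik * z[0] for w_ik, z in zip(row, zeta))
        rec_y = sum(w_ik * z[1] for w_ik, z in zip(row, zeta))
        z_hat.append((beta * j_s * zeta[i][0] + beta ** 2 * j_s * rec_x,
                      beta * j_s * zeta[i][1] + beta ** 2 * j_s * rec_y))
    return z_hat


rng = random.Random(2)
n, p, j, g = 200, 0.1, 0.1, 8.0              # assumed toy network
beta, j_s, s_b, m = 0.2, 0.1, 16_000.0, 0.1  # assumed gain, EPSP, input tuning
n_exc = int(0.8 * n)
w = [[0.0] * n for _ in range(n)]
for i in range(n):
    for k in range(n):
        if k != i and rng.random() < p:
            w[i][k] = j if k < n_exc else -g * j
# Input tuning vectors: length m*s_b, direction twice a random input PO.
pos = [rng.uniform(0.0, math.pi) for _ in range(n)]
zeta = [(m * s_b * math.cos(2 * po), m * s_b * math.sin(2 * po)) for po in pos]
z_hat = output_features(w, beta, j_s, zeta)
selectivity = [math.hypot(x, y) for x, y in z_hat]   # output tuning strength
```

Since every feedforward vector has the same length here, any spread in `selectivity` across neurons in this toy example comes from the recurrent term, i.e. from mixing pre-synaptic tuning vectors with random directions.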

Distribution of Orientation Selectivity

The length of the output feature vector represents the amplitude of the modulation component of output tuning curves. This is a measure of orientation selectivity, and we compute its distribution here.

Orientation is a two-dimensional feature, so the input feature vector in Eq. (24) is now a vector whose entries are themselves two-dimensional feature vectors (a vector of vectors). Each entry, corresponding to the input orientation selectivity vector of one neuron, can therefore be described by a length and a direction. All vectors have the same length, as all inputs have the same modulation, and the direction is twice the input PO of the neuron (see Eq. (4)). The input POs are drawn independently from a uniform distribution over all orientations. They are also assumed to be independent of the weight matrix, implying the absence of feature-specific connectivity.

The feedforward tuning vector of each neuron is accompanied by a contribution from the recurrent network (Eq. (24)). For each neuron, the recurrent contribution is a vectorial sum of the input tuning vectors of its pre-synaptic neurons. According to the multivariate Central Limit Theorem, the sum of a large number of independent random variables is approximately multivariate normal, and hence so is the distribution of the output features. Tuning strength is given by the length of the output tuning vectors. For a bivariate normal distribution whose mean vector has length $\mu$ and whose two components have equal variance $\sigma^2$, the distribution of this length $x$ is

$$p(x) = \frac{x}{\sigma^2}\,\exp\!\left(-\frac{x^2+\mu^2}{2\sigma^2}\right) I_0\!\left(\frac{x\,\mu}{\sigma^2}\right), \qquad x \ge 0, \qquad (25)$$

where $I_0$ is the modified Bessel function of the first kind and zeroth order. Therefore, we only need to compute the mean and the variance of the resulting distribution.
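Eq. (25) is the Rice distribution. A minimal NumPy sketch, assuming `mu` denotes the length of the mean vector and `sigma2` the common variance of the two independent components (names chosen here for illustration), evaluates the density, checks its normalization, and compares its mean with the lengths of sampled two-dimensional normal vectors. `np.i0` is NumPy's modified Bessel function of the first kind and zeroth order. It overflows for very large arguments, which does not happen for the moderate values used here.

```python
import numpy as np

def rice_pdf(x, mu, sigma2):
    """Density of the length of a 2D normal vector with mean-vector length mu
    and per-component variance sigma2 (Rice distribution)."""
    x = np.asarray(x, dtype=float)
    return (x / sigma2) * np.exp(-(x**2 + mu**2) / (2.0 * sigma2)) * np.i0(x * mu / sigma2)

mu, sigma2 = 1.0, 0.25
x = np.linspace(0.0, 6.0, 6001)
dx = x[1] - x[0]
pdf = rice_pdf(x, mu, sigma2)
print(np.sum(pdf) * dx)                 # numerical normalization, close to 1

# Compare the mean of the density with sampled lengths of 2D normal vectors.
rng = np.random.default_rng(0)
samples = rng.normal(loc=[mu, 0.0], scale=np.sqrt(sigma2), size=(200_000, 2))
lengths = np.linalg.norm(samples, axis=1)
mean_from_pdf = float(np.sum(x * pdf) * dx)
print(mean_from_pdf, lengths.mean())
```

For `mu = 0` the expression reduces to the Rayleigh density, since `np.i0(0) == 1`.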

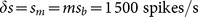

The length of the mean vector of this distribution is equal to the length of the feedforward feature vector. This is because the expected value of the contribution of the recurrent network vanishes in each direction:

| (26) |

where the expectation is taken over the random variables from which the weights and the input POs are drawn. A similar computation shows that the expected recurrent contribution also vanishes in the second direction. Here we have used the fact that the weights and the input POs are independent random variables, and that all orientations are uniformly represented in the input, so that the expected input tuning vector is zero. As a result, we obtain

| (27) |

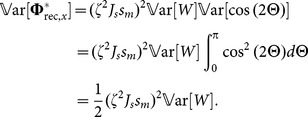

The recurrent contribution does not, on average, change the output feature vectors. However, it creates a distribution of selectivity, which can be quantified by its variance:

| (28) |

Again, we have exploited the independence of the weights and the input POs, and the uniform representation of input POs, to factorize the variance into the product of the second moment of the weights and the second moment of the corresponding component of the input tuning vectors. A similar computation yields the same variance for the second dimension.
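The vanishing mean and the factorization of the variance can be checked by Monte Carlo sampling. The sketch below fixes one hypothetical row of weights, draws input POs uniformly over orientations, and compares the empirical variance of one component of the recurrent term with half the squared modulation times the sum of squared weights. This is what the factorization gives, since the mean of cos²(2θ) over uniform θ is 1/2. Conditioning on a fixed weight row is a simplification chosen for illustration.

```python
import numpy as np

def recurrent_term_samples(w_row, modulation, n_trials, rng):
    """x-components of sum_j w_j * modulation * cos(2 * theta_j), theta_j ~ U[0, pi)."""
    theta = rng.uniform(0.0, np.pi, size=(n_trials, w_row.size))
    return (modulation * np.cos(2.0 * theta)) @ w_row

def predicted_variance(w_row, modulation):
    # Independence + uniform POs: Var = modulation^2 * sum_j w_j^2 * E[cos^2(2 theta)],
    # with E[cos^2(2 theta)] = 1/2 and E[cos(2 theta)] = 0.
    return 0.5 * modulation**2 * np.sum(w_row**2)

rng = np.random.default_rng(2)
# Hypothetical row: 80 excitatory weights of 0.1 and 20 inhibitory weights of -0.4.
w_row = np.concatenate((np.full(80, 0.1), np.full(20, -0.4)))
x = recurrent_term_samples(w_row, modulation=1.0, n_trials=20_000, rng=rng)
print(x.mean(), x.var(), predicted_variance(w_row, 1.0))
```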

For our random networks, the weights in each row of the weight matrix are drawn from a binomial distribution. The number of non-zero elements is determined by the connection probabilities for excitation and inhibition, respectively, and each non-zero entry is weighted by the corresponding excitatory or inhibitory synaptic strength. The variance can therefore be computed explicitly:

| (29) |

For more complex connectivities, the variance can be numerically computed from the weight matrix. For our networks here, the mean and the variance of the distribution of output tuning vectors can, therefore, be expressed as

| (30) |
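For connectivities without a closed-form expression, the variance can be estimated from the weight matrix itself, as noted above. The sketch assumes that the per-dimension variance for a neuron is half the squared input modulation times the sum of squared weights in its row, averages this over rows, and compares the result with the expected value for rows whose excitatory and inhibitory entries are independently non-zero with given probabilities. Population sizes, probabilities, and weights are hypothetical.

```python
import numpy as np

def variance_from_matrix(w, modulation):
    """Per-dimension variance of the recurrent term, averaged over neurons (rows)."""
    return 0.5 * modulation**2 * np.mean(np.sum(w**2, axis=1))

def variance_binomial(n_exc, n_inh, p_exc, p_inh, j_exc, j_inh, modulation):
    """Expected value of the same quantity when each entry is non-zero independently."""
    return 0.5 * modulation**2 * (n_exc * p_exc * j_exc**2 + n_inh * p_inh * j_inh**2)

rng = np.random.default_rng(3)
n_exc, n_inh = 800, 200
p_exc = p_inh = 0.1
j_exc, j_inh = 0.1, -0.4          # hypothetical gain-normalized weights
n = n_exc + n_inh
w = np.zeros((n, n))
w[:, :n_exc] = np.where(rng.random((n, n_exc)) < p_exc, j_exc, 0.0)
w[:, n_exc:] = np.where(rng.random((n, n_inh)) < p_inh, j_inh, 0.0)
print(variance_from_matrix(w, 1.0),
      variance_binomial(n_exc, n_inh, p_exc, p_inh, j_exc, j_inh, 1.0))
```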

For an output tuning curve with a cosine shape, i.e. a constant mean plus a cosine modulation with a period of 180°, the tuning strength introduced above corresponds to the amplitude of the modulation, namely the modulation (F2) component of the tuning curve. The mean of the tuning curve is, in turn, obtained as the baseline firing rate of the network from Eq. (5). To compare the prediction with the results of our simulations, we compute the mean and modulation of individual output tuning curves from the simulated data. Mean and modulation are taken from the zeroth and the second Fourier components of each tuning curve (F0 and F2 components), respectively. The distribution given by Eq. (25) should therefore match the distribution of the modulation (F2) components of output tuning curves obtained from simulations, if our linear analysis captures the essential mechanisms of orientation selectivity in model recurrent networks.
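Extracting F0 and F2 from simulated tuning curves amounts to a discrete Fourier analysis over stimulus orientations. A minimal sketch, assuming the tuning curve is sampled at equally spaced orientations covering 180° (at least three of them), returns the mean, the modulation amplitude, and the preferred orientation implied by the phase of the second Fourier component. The function name is hypothetical.

```python
import numpy as np

def fourier_f0_f2(rates):
    """F0 (mean), F2 (modulation amplitude) and the PO implied by the F2 phase,
    for rates sampled at equally spaced orientations covering [0, pi)."""
    rates = np.asarray(rates, dtype=float)
    k = rates.size
    theta = np.arange(k) * np.pi / k
    f0 = rates.mean()
    c2 = 2.0 / k * np.sum(rates * np.exp(2j * theta))   # second Fourier coefficient
    return f0, np.abs(c2), (np.angle(c2) / 2.0) % np.pi

k = 16
theta = np.arange(k) * np.pi / k
rates = 5.0 + 3.0 * np.cos(2.0 * (theta - 0.4))          # synthetic cosine tuning curve
f0, f2, po = fourier_f0_f2(rates)
print(f0, f2, po)   # recovers mean 5, modulation 3, PO 0.4 rad (up to rounding)
```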

Results

Erdős-Rényi Random Networks

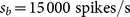

We first study excitatory-inhibitory Erdős-Rényi random networks of LIF neurons (Eq. (1)) with a doubly fixed in-degree, i.e. both the excitatory and the inhibitory in-degrees are fixed, for excitatory as well as inhibitory neurons. Figs. 1A–C show the response of an example network to a stimulus of a single orientation. This network operates in the fluctuation-driven regime, characterized by asynchronous-irregular (AI) dynamics (Fig. 1A), low firing rates (Fig. 1B), and a high variance of inter-spike intervals (ISI) (Fig. 1C). In this regime, the network amplifies the weak tuning of the input, as reflected both in the network tuning curve in response to one orientation (Fig. 1B) and in the individual tuning curves in response to different stimulus orientations (Fig. 1D).

The joint distribution of the modulation (F2) component of (individual) output tuning curves and the respective baseline (F0) component (Fig. 1E) shows that the average values of these two components have become comparable after network operation. However, the F2 component has a much broader distribution (Fig. 1E, inset). The distribution predicted by our theory (Eq. (25)) only partially matches the distribution measured in the simulations (Fig. 1F). The degree of match is quantified by an index that assesses the overlap area of the two probability distributions.
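The overlap index is described here only as the overlap area of the two probability distributions. One common way to compute such an index, assumed here rather than taken from the Methods, is to integrate the pointwise minimum of two normalized densities on a shared grid: it equals 1 for identical and 0 for non-overlapping distributions. The "measured" values in the example are synthetic placeholders, not simulation results.

```python
import numpy as np

def overlap_index(p, q, dx):
    """Area under min(p, q) for two densities sampled on the same grid with spacing dx."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p = p / (p.sum() * dx)          # renormalize so each integrates to 1 on the grid
    q = q / (q.sum() * dx)
    return float(np.sum(np.minimum(p, q)) * dx)

# Example: histogram of synthetic F2 values against a predicted density on the same bins.
rng = np.random.default_rng(4)
f2_measured = np.abs(rng.normal(2.0, 0.5, size=5000))   # placeholder data
edges = np.linspace(0.0, 5.0, 51)
dx = edges[1] - edges[0]
hist, _ = np.histogram(f2_measured, bins=edges, density=True)
centers = 0.5 * (edges[:-1] + edges[1:])
predicted = np.exp(-(centers - 2.0) ** 2 / (2 * 0.5**2))
print(overlap_index(hist, predicted, dx))
```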

As our analysis is based on the assumption of linear network interactions, our theoretical prediction holds only if the network operates in the linear regime. Any violation of our linear scheme would therefore lead to a deviation of the linear prediction from the measured distribution, and the remaining discrepancy should be attributed to factors that invalidate our approximation scheme. Possible contributing factors of this sort in our networks are partial rectification of tuning curves, correlations and synchrony in the network providing the input, and supralinearity of neuronal gains.

Partial rectification of firing rates is visible in Fig. 1B. However, this does not seem to be a very prominent effect: only a small fraction of the population is strictly silent, as is evident in the distribution of firing rates (Fig. 1B, bottom). Correlations, in contrast, seem to be a more important contributor, as is reflected in the raster plot of network activity (Fig. 1A).

To investigate the possible contribution of correlations to the distribution of orientation selectivity, we plotted the distribution of pairwise correlations in the network (Fig. 2). Although the distribution of pairwise correlations has a very long tail, correlations are on average very small in the network (Fig. 2A). This is the case for excitatory-excitatory, excitatory-inhibitory, and inhibitory-inhibitory correlations, and the same trend holds when spike counts are computed for different bin widths (Fig. 2A, insets). Low pairwise correlations in the network are a result of recurrent inhibitory feedback, which actively decorrelates the network activity [22]–[24]. As illustrated in Fig. 2B, upsurges in the population activity of excitatory neurons are tightly coupled to a corresponding increase in the activity of the inhibitory population. However, the cancellation is not always exact and some residual correlations remain.
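Pairwise correlations of the kind shown in Fig. 2A can be computed by binning spike trains into counts and taking Pearson correlation coefficients over all neuron pairs. The sketch below uses synthetic, independent Poisson spike trains (plus one exact duplicate as a sanity check) rather than network output, and repeats the computation for several bin widths. Neurons without spikes would give undefined coefficients, hence the use of `np.nanmean`.

```python
import numpy as np

def spike_counts(spike_times, t_max, bin_width):
    """Bin each neuron's spike times (list of arrays, in seconds) into counts."""
    n_bins = int(round(t_max / bin_width))
    edges = np.linspace(0.0, t_max, n_bins + 1)
    return np.array([np.histogram(st, bins=edges)[0] for st in spike_times])

def pairwise_correlations(counts):
    """Pearson correlation coefficients of all distinct pairs (upper triangle)."""
    cc = np.corrcoef(counts)
    iu = np.triu_indices_from(cc, k=1)
    return cc[iu]

rng = np.random.default_rng(5)
t_max, rate, n = 100.0, 5.0, 20
trains = [np.sort(rng.uniform(0.0, t_max, rng.poisson(rate * t_max))) for _ in range(n)]
trains.append(trains[0].copy())      # exact duplicate of neuron 0 -> correlation 1
for bw in (0.01, 0.1, 1.0):
    cc = pairwise_correlations(spike_counts(trains, t_max, bw))
    print(bw, np.nanmean(cc))
```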

Since each neuron receives random inputs from 10% of the population, approximately the same correlation of excitation and inhibition is, on average, also expected in the recurrent input to each neuron. Note that, as our networks are inhibition-dominated, the net recurrent inhibition would be stronger than the net recurrent excitation (indeed twice as strong, given the parameters we have used). Altogether, this implies that inhibition is capable of fast tracking of excitatory upsurges (Fig. 2B) such that fast fluctuations in the population activity would not be seen in the recurrent input from the network.

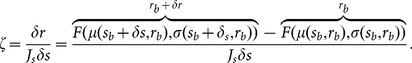

Finally, the single-neuron gain that we computed by linearization (Eq. (13)) could be a source of mismatch: for a highly nonlinear system, it might only be valid for small perturbations of the input, and not for stronger modulations. This is examined in Fig. 3A, where the linearized gain from Eq. (13) is compared with the numerically obtained neuronal gain (see Eq. (15) in Methods) for a perturbation of the size of the input modulation. This gain could be approximated analytically by expanding Eq. (5) to higher-order terms. Here, however, we have computed it numerically (Eq. (16)).
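The distinction between a linearized gain and a gain measured with a finite perturbation can be illustrated with a hypothetical transfer function. The sketch uses an exponential rate function purely as a stand-in (it is not the LIF transfer function of Eq. (5), and the procedure is not claimed to be Eq. (16)). It drives the function with a baseline plus a cosine modulation over orientations and defines the effective gain as the ratio of output to input F2 amplitudes. For a convex function, this effective gain exceeds the derivative at the operating point and approaches it as the modulation shrinks. For the exponential, the ratio equals 2*I1(delta)/delta, where I1 is the modified Bessel function of the first kind and order one.

```python
import numpy as np

def transfer(mu):
    """Hypothetical convex (supralinear) rate function, standing in for Eq. (5)."""
    return np.exp(mu)

def linearized_gain(mu0, h=1e-6):
    # Derivative of the transfer function at the operating point (central difference).
    return (transfer(mu0 + h) - transfer(mu0 - h)) / (2.0 * h)

def effective_gain(mu0, delta, k=360):
    # Output F2 amplitude divided by input F2 amplitude for input mu0 + delta*cos(2 theta).
    theta = np.arange(k) * np.pi / k
    out = transfer(mu0 + delta * np.cos(2.0 * theta))
    f2_out = np.abs(2.0 / k * np.sum(out * np.exp(2j * theta)))
    return f2_out / delta

for delta in (1e-3, 0.5, 1.0):
    print(delta, effective_gain(0.0, delta) / linearized_gain(0.0))
```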

When the prediction of Eq. (25) is repeated with the new gain, the match between the measured and predicted distributions improves considerably (Fig. 3B). We therefore conclude that the main source of mismatch in our prediction was our misestimate of the actual neuronal gains. Other sources of nonlinearity, like rectification and correlations, could then be responsible for the remaining discrepancy between the distributions (less than 5% in the regime considered here). However, given the many possible sources of nonlinearity in our networks, both at the level of spiking neurons and at the level of network interactions, it is quite surprising that a linear prediction works so well.

A remark about rectification in our networks should be made at this point. In the type of networks considered here, rectification is in fact not a single-neuron property, i.e. it is not simply the result of the spike threshold of the LIF neuron. Rather, the linearized gain of neurons within the network (Eq. (13)) implies a non-zero response even to small perturbations of the input. This is a result of the (internally generated) noise within the recurrent network, a consequence of the balance of excitation and inhibition, which smooths the f-I curve of the embedded neurons [25], [26]. Rectification could therefore only arise at the level of the network, e.g. by increasing the amount of inhibition.

As our networks are inhibition-dominated, increasing the recurrent coupling would be one way to increase the inhibitory feedback within the network. This can be done in two different ways: either by increasing the connection density or by increasing the weights of synaptic connections. The first strategy is tried in Fig. 4A, where the connection probability has been doubled. The second strategy is added to the first in Fig. 4B, where the increase in connection density is accompanied by a doubling of all synaptic weights. In both cases, however, no significant rectification of tuning curves resulted, and the prediction of our linear theory still holds.

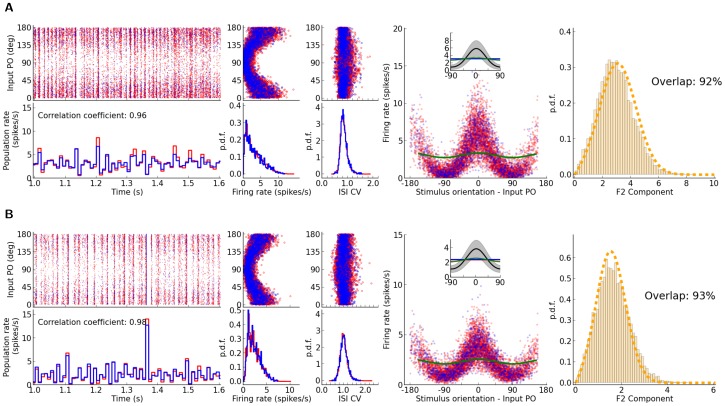

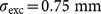

Figure 4. The impact of the strength of recurrent coupling on the distribution of selectivities.

The figure layout is similar to Fig. 1 (panel (E) not included); shown are networks with stronger recurrent couplings. In (A), the recurrent coupling is increased by doubling the connection density; in (B), this is further enhanced by doubling all recurrent weights. The predicted distributions are computed using the numerically obtained gain (see Fig. 3).

This unexpected effect can be explained intuitively as follows. An increase in recurrent coupling not only decreases the baseline firing rate of the network, but also changes the neuronal gains. A crucial factor in determining these gains is the average membrane potential of neurons in the network, which in turn sets the mean distance to threshold. The larger the mean distance to threshold, the smaller the neuronal gain. This in turn decreases the mean F2 component of output tuning curves. As a result, despite the reduced baseline firing rate, no significant rectification of tuning curves follows, as the output modulation components have been scaled down by a comparable factor. This is indeed the case in the networks of Fig. 4, where the mean (over neurons) of the temporally averaged membrane potential and the neuronal gains have both decreased compared to the network of Fig. 1 (results not shown; for a detailed analysis, see [16]).

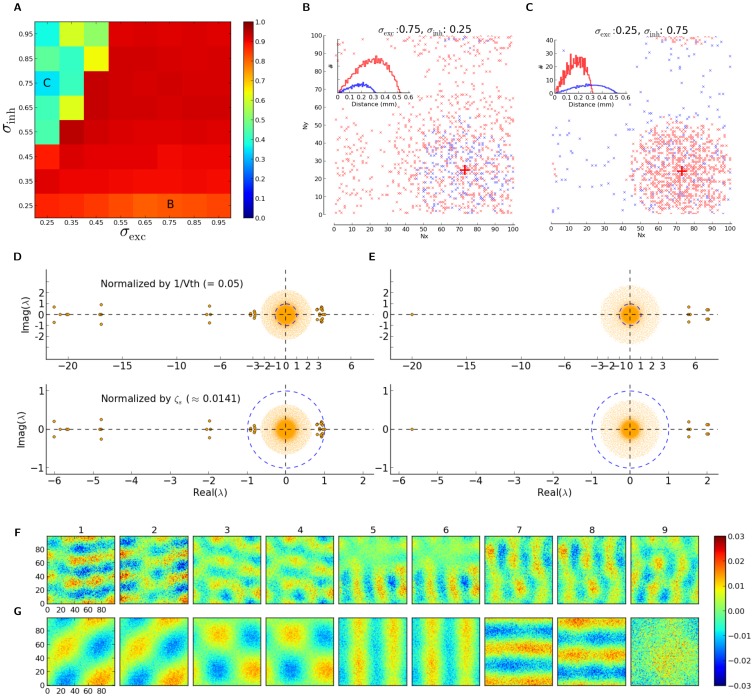

Networks With Distance-Dependent Connectivity

To extend the scope of the linear analysis, we asked whether our theory can also account for networks with different statistically defined topologies. In particular, we considered networks with a more realistic pattern of distance-dependent connectivity: each neuron is assigned a random position in a two-dimensional rectangle representing a flat sheet of cortex (Fig. 5A). The probability of a connection from a pre-synaptic excitatory (inhibitory) neuron to a given post-synaptic neuron falls off with distance according to a Gaussian profile. Similar to the Erdős-Rényi random networks considered before, we fix the in-degree, i.e. each neuron receives exactly the same number of excitatory and the same number of inhibitory connections. Multiple synaptic contacts and self-contacts are not allowed.
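The connectivity rule can be sketched as follows, assuming neurons on a square of side `side` wrapped to a torus, and pre-synaptic partners drawn without replacement with probability proportional to a Gaussian of the toroidal distance. Drawing without replacement enforces the fixed in-degree and excludes multiple synapses; self-coupling is excluded by setting its probability to zero. Population sizes, in-degrees, and the Gaussian width `sigma` are hypothetical values, not the parameters of Fig. 5.

```python
import numpy as np

def torus_distances(pos_post, pos_pre, side):
    """Pairwise Euclidean distances on a square of the given side wrapped to a torus."""
    d = np.abs(pos_post[:, None, :] - pos_pre[None, :, :])
    d = np.minimum(d, side - d)                       # shortest way around the torus
    return np.sqrt(np.sum(d**2, axis=-1))

def gaussian_fixed_indegree(pos, pre_idx, k_in, sigma, side, rng):
    """For each neuron, draw exactly k_in distinct pre-synaptic partners from pre_idx,
    with probability proportional to a Gaussian of distance; no self-connections."""
    n = pos.shape[0]
    prob = np.exp(-torus_distances(pos, pos[pre_idx], side) ** 2 / (2.0 * sigma**2))
    conn = np.zeros((n, n), dtype=bool)
    for i in range(n):
        p = prob[i].copy()
        p[pre_idx == i] = 0.0                         # exclude self-coupling
        chosen = rng.choice(pre_idx, size=k_in, replace=False, p=p / p.sum())
        conn[i, chosen] = True                        # replace=False: no multiple synapses
    return conn

rng = np.random.default_rng(10)
side, n_exc, n_inh = 1.0, 400, 100
n = n_exc + n_inh
pos = rng.uniform(0.0, side, size=(n, 2))
conn_exc = gaussian_fixed_indegree(pos, np.arange(n_exc), 40, 0.15, side, rng)
conn_inh = gaussian_fixed_indegree(pos, np.arange(n_exc, n), 10, 0.15, side, rng)
```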

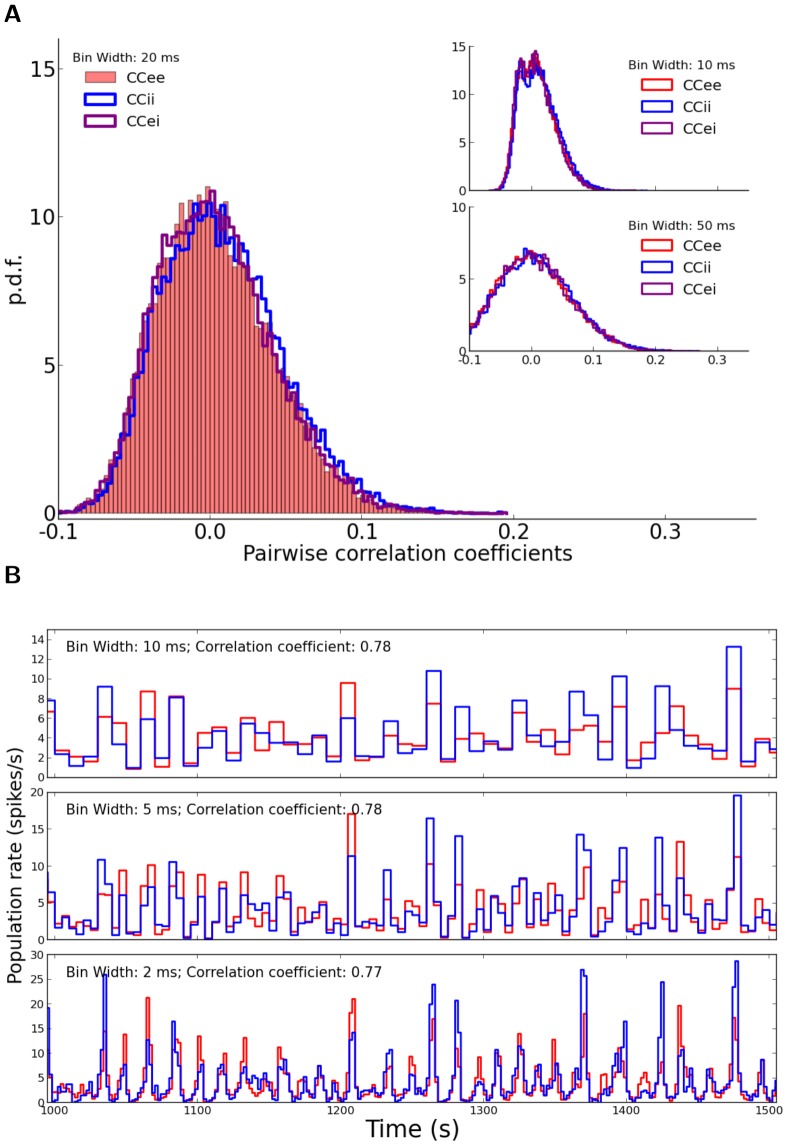

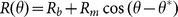

Figure 5. Networks with distance-dependent connectivity.

(A) Random positioning of excitatory (red) and inhibitory (blue) neurons in a square representing a flat sheet of cortex, wrapped to a torus. (B) For a sample (excitatory) neuron (large black cross), the positions of excitatory (red) and inhibitory (blue) pre-synaptic neurons are explicitly shown as small crosses. A Gaussian connectivity profile was assumed. For each post-synaptic neuron, we fixed the number of randomly drawn pre-synaptic connections of either type. Multiple synapses and self-coupling were not allowed. (C) Histogram of distances to pre-synaptic neurons for the sample neuron (bars) and for the entire population (lines). (D) Eigenvalue spectrum of the weight matrix. Weights are normalized by the reset voltage, with different values for excitatory and inhibitory synapses. For better visibility, the eigenvalues outside the bulk of the spectrum are shown as larger dots. The green cross marks the eigenvalue corresponding to the uniform eigenmode, which is plotted in the top inset. The bottom inset shows the spectrum re-normalized according to the neuronal gain, applied to both excitatory and inhibitory connections.

The connectivity profile is illustrated in Figs. 5B, C. The pre-synaptic sources of a sample neuron are plotted in Fig. 5B, and the resulting distribution of the distances of connected neurons, for the example neuron and for the entire population, is shown in Fig. 5C.

Note that the connectivity depends only on the physical distance. As input preferred orientations are assigned randomly and independently of the actual position of neurons in space, distance-dependent connectivity does not imply any feature-specific connectivity. That is, neither a spatial nor a functional map of orientation selectivity is present here.

Before discussing the simulations of the spiking networks, it is informative to look at the eigenvalue spectrum of the associated weight matrix, which is plotted in Fig. 5D. For the spectrum shown in the main panel, each entry of the matrix is normalized by the reset voltage, and the effective firing rate equation of the network can then be written in terms of this normalized matrix. The exceptional eigenvalue (green cross) corresponding to the uniform eigenvector (top inset) and the bulk of eigenvalues (orange dots) are structural properties that this network shares with the previous Erdős-Rényi networks (not shown). There is, however, a small number of additional eigenvalues (in this case, eight) in between, which are a consequence of the specific realization of our distance-dependent connectivity. The corresponding eigenmodes will, in principle, affect the response of the network, both in its spontaneous state and in response to stimulation.

All these eigenvalues, however, have negative real parts. They therefore ensure the stability of the linearized network dynamics, as far as these eigenmodes are concerned. The bulk of the spectrum, in contrast, also comprises eigenvalues with real parts large enough to imply an instability. An alternative normalization of the weight matrix according to the neuronal gain (Fig. 5D, bottom inset; see also [16]), however, does not render these modes unstable.
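Why a uniform eigenvector appears for any fixed in-degree connectivity, spatial or not, can be seen in a short sketch: if every row contains the same numbers of excitatory and inhibitory weights with the same values, all row sums are equal, so the uniform vector is an exact eigenvector whose eigenvalue is that common row sum. The sketch uses a non-spatial fixed in-degree matrix with hypothetical sizes and weights, chosen so that this eigenvalue lies outside the bulk.

```python
import numpy as np

def fixed_indegree_matrix(n_exc, n_inh, k_exc, k_inh, j_exc, j_inh, rng):
    """Random weight matrix in which every row has exactly k_exc excitatory and k_inh
    inhibitory non-zero entries (no self-coupling, no multiple synapses)."""
    n = n_exc + n_inh
    w = np.zeros((n, n))
    for i in range(n):
        exc = np.setdiff1d(np.arange(n_exc), [i])
        inh = np.setdiff1d(np.arange(n_exc, n), [i])
        w[i, rng.choice(exc, k_exc, replace=False)] = j_exc
        w[i, rng.choice(inh, k_inh, replace=False)] = j_inh
    return w

rng = np.random.default_rng(6)
w = fixed_indegree_matrix(400, 100, 100, 25, 0.02, -0.12, rng)
eig = np.linalg.eigvals(w)
common_mode = 100 * 0.02 + 25 * (-0.12)      # identical row sums -> uniform eigenvector
print(common_mode, np.min(np.abs(eig - common_mode)))
```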

Here, we are resorting to a linearized rate equation describing the response of the network to (small) perturbations (see Eq. (17) in Methods). The eigendynamics corresponding to the common mode (green cross) is faster, and hence relaxes to the fixed point more rapidly than the other eigenmodes. The common mode effectively leads to the uniform, baseline state of the network (reflected in the baseline firing rate), about which the network dynamics has indeed been linearized in our linear prediction. The effect of the other eigenmodes in the stationary state should therefore be computed using the linearized gain about that uniform baseline state.
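A minimal sketch of linearized rate dynamics, assuming the standard form tau dr/dt = -r + W r + s (Eq. (17) in Methods may differ in notation and normalization). Under this assumption the fixed point is r = (I - W)^-1 s, and it is stable when every eigenvalue of W has a real part below one. The sketch checks stability from the spectrum and confirms by forward-Euler integration that the dynamics relaxes to the fixed point. The matrix and input are random placeholders.

```python
import numpy as np

def is_stable(w):
    """tau dr/dt = -r + W r + s is stable iff all eigenvalues of W have real part < 1."""
    return np.max(np.linalg.eigvals(w).real) < 1.0

def stationary_rate(w, s):
    """Fixed point r = (I - W)^{-1} s, obtained by solving (I - W) r = s."""
    return np.linalg.solve(np.eye(w.shape[0]) - w, s)

def simulate(w, s, tau=0.01, dt=0.0005, t_max=1.0):
    """Forward-Euler integration of the linear rate dynamics, starting from r = 0."""
    r = np.zeros_like(s)
    for _ in range(int(round(t_max / dt))):
        r = r + dt / tau * (-r + w @ r + s)
    return r

rng = np.random.default_rng(7)
n = 200
w = rng.normal(0.0, 0.5 / np.sqrt(n), size=(n, n)) - 1.0 / n   # hypothetical matrix
s = 1.0 + 0.1 * rng.standard_normal(n)                          # hypothetical input
print(is_stable(w), np.max(np.abs(simulate(w, s) - stationary_rate(w, s))))
```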

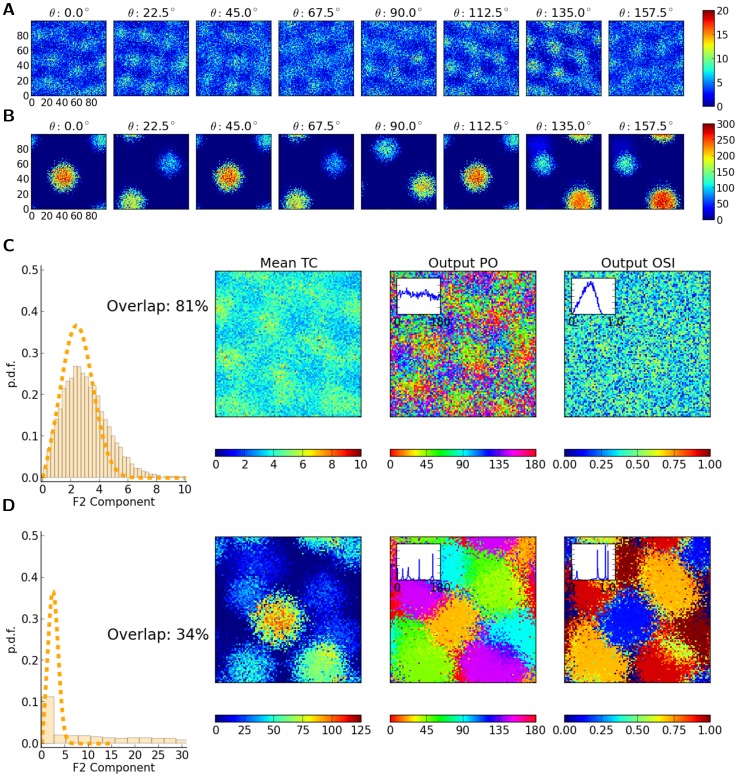

Simulation results for a network with this connectivity are illustrated in Fig. 6. Inspection of the spiking activity of the network (Fig. 6A) does not suggest a behavior very different from that of the random networks shown in Fig. 1. The irregularity of firing is, however, more pronounced, as the variance of inter-spike intervals is larger (Fig. 6C); the ISI CV is in fact distributed around 1, which is more similar to the strongly coupled networks described in Fig. 4.

Figure 6. Distribution of orientation selectivity in a network with distance-dependent connectivity.

Same figure layout as Fig. 1, for a network with distance-dependent connectivity similar to that of Fig. 5. Note that the distribution of F2 components is computed using the stimulus gain, as in Fig. 3.

Similar to Erdős-Rényi networks, networks with distance-dependent local connectivity amplify the weak tuning of the input signal, and comparable levels of baseline (F0) and modulation (F2) components emerge (Fig. 6E). When the predicted distribution of F2 components is obtained using the stimulus gain (as in Fig. 3), a very good match to the measured distribution results (Fig. 6F), comparable to the predictions in Fig. 4 and only slightly worse than the prediction in Fig. 1.

Although partial rectification of tuning curves seems to be negligible in the example shown (Fig. 6B), correlations in the network could still be responsible for the remaining discrepancy. Moreover, the size and structure of correlations in the network might differ here from those in random networks, due to the non-homogeneous connectivity. Distance-dependent connectivity implies that connectivity is locally dense, which can lead to more shared input and thereby impose strong correlations at the output.

In fact, however, pairwise correlations do not seem to be systematically larger than in random networks (Fig. 2A), judged by the distribution of Pearson correlation coefficients (Fig. 7A). Instead, the fluctuations in the activity of the excitatory and inhibitory populations seem to be even less correlated (compare Fig. 7B with Fig. 2B). Occasional partial imbalance of excitatory and inhibitory input may therefore cause systematic distortions of our linear prediction.

Figure 7. Correlations in a network with distance-dependent connectivity.

Distribution of correlation coefficients for pairs of neurons (A) and temporal correlation of population activities (B) in the example network of Fig. 5 with distance-dependent connectivity. Other conventions are similar to Fig. 2.

The different structural properties of these networks, reflected among other things in their eigenvalue spectrum, may also contribute to the discrepancy between prediction and measurement. It is therefore informative to look more carefully into the eigenvalues that mark the difference from Erdős-Rényi networks, i.e. those located between the bulk spectrum and the exceptional eigenvalue corresponding to the common mode. To evaluate this, the first ten eigenvectors of the network (corresponding to the ten largest eigenvalues, sorted by magnitude) are plotted (Fig. 8A). The first eigenvector is the uniform vector (common mode), and the tenth one is hardly distinguishable from noise (note that the corresponding eigenvalue is already part of the bulk). In between, there are eight eigenvectors with non-random spatial structure.

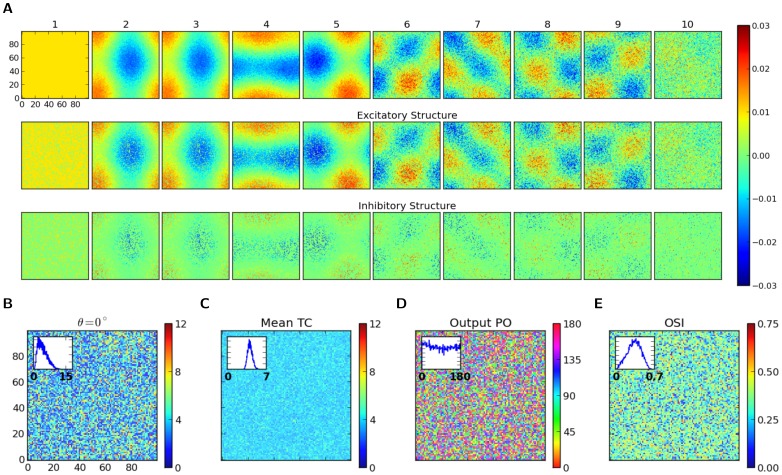

Figure 8. Structure and dynamics of a network with distance-dependent connectivity.

(A) The first ten eigenvectors, corresponding to the ten eigenvalues of largest magnitude, for the sample network described and discussed in Figs. 5 and 6. For each eigenvector, the component corresponding to each neuron is plotted at the spatial position of that neuron (as in Fig. 5A). The top row shows all neurons; the bottom rows show the eigenvectors separately for excitatory and inhibitory neurons, respectively (with the positions of the other population set to zero). Only the real parts of the eigenvector components are plotted. Note that the tenth eigenvector already corresponds to an eigenvalue from the bulk of the spectrum in Fig. 5D. (B) Mean firing rate of neurons in the network, extracted from a simulation, in response to a stimulus of a fixed orientation. (C) For each neuron, the mean tuning curve (Mean TC), i.e. the mean firing rate averaged over different orientations. (D, E) From each tuning curve, the output preferred orientation (Output PO) and the output orientation selectivity index (Output OSI) are extracted and plotted, respectively. They are obtained as the angle and the length of the orientation selectivity vector, respectively. Insets show the distributions in each case.

These eigenvectors reflect the specific sample from the network ensemble considered here, and they can, in principle, favor specific patterns of input stimulation. While other input patterns would be processed by the network with a small gain, any input pattern matching one of these special eigenmodes would experience the highest gain (in absolute terms) from the network. The corresponding eigenvalues, however, have a negative real part, so these modes would in this case be attenuated: for an eigenvalue $\lambda$ of the weight matrix, the corresponding eigenvalue of the linear operator that maps the input onto the stationary firing rate vector is $1/(1-\lambda)$, which is then very small.
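The attenuation argument can be checked numerically. The sketch below assumes the linear rate model $\mathbf{r} = \mathbf{s} + \mathbf{W}\mathbf{r}$ and uses a hypothetical, spatially unstructured, inhibition-dominated random weight matrix; all sizes and weights are illustrative choices, not the parameters of the networks studied here. It verifies that an eigenvector of $\mathbf{W}$ with eigenvalue $\lambda$ is mapped by $(\mathbf{1}-\mathbf{W})^{-1}$ onto itself scaled by $1/(1-\lambda)$, so that a mode with a large negative real part is attenuated.

```python
import numpy as np

# Hypothetical inhibition-dominated random network (illustrative parameters only).
rng = np.random.default_rng(0)
N, n_exc = 400, 320          # 80% excitatory neurons
p, J, g = 0.1, 0.03, 8.0     # connection probability, EPSP weight, relative inhibition

# W[i, j] is the weight from presynaptic neuron j to postsynaptic neuron i.
W = (rng.random((N, N)) < p).astype(float)
W[:, :n_exc] *= J            # excitatory columns
W[:, n_exc:] *= -g * J       # inhibitory columns

lam, V = np.linalg.eig(W)

# Assumed linear rate model r = s + W r, i.e. r = (1 - W)^{-1} s.
A = np.linalg.inv(np.eye(N) - W)

# Gain experienced by each eigenmode: the eigenvalues of (1 - W)^{-1}.
gain = 1.0 / (1.0 - lam)

# The linearized dynamics are stable if all eigenvalues have real part < 1.
stable = bool(np.all(lam.real < 1.0))

k = np.argmax(np.abs(lam))   # eigenvalue of largest magnitude (the common mode here)
print(f"lambda = {lam[k].real:.2f}, |gain| = {abs(gain[k]):.2f}, stable = {stable}")
```

Conversely, an eigenvalue approaching one would make $1/(1-\lambda)$ diverge, which corresponds to the loss of stability of the linearized dynamics.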

We do not, however, explicitly represent any of these patterns in our stimuli. The stimuli considered in this work can be decomposed into a linear sum of the common mode (i.e. the first eigenvector) and a modulation component (i.e. a random pattern, as preferred orientations are assigned randomly and independently to all neurons, irrespective of their position in space). The modulation component therefore has only a very small projection onto any of the special eigenmodes. It is, however, possible that for non-stationary inputs to the network, transient patterns biased toward selected eigenmodes resonate more strongly than others.
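The claim that a spatially random modulation has only a small projection onto spatially structured modes can be illustrated with a short sketch. It assumes a hypothetical 20 × 20 grid of neurons and a cosine-tuned input with illustrative baseline and modulation depth, and it uses smooth low-frequency cosine patterns as stand-ins for structured eigenmodes (the actual eigenmodes would come from the network's weight matrix). For $N$ neurons, the normalized overlap of a random pattern with any fixed unit vector is of the order $1/\sqrt{N}$.

```python
import numpy as np

rng = np.random.default_rng(1)
side = 20                                   # hypothetical 20 x 20 grid of neurons
N = side * side
x, y = np.meshgrid(np.arange(side), np.arange(side), indexing="ij")
x, y = x.ravel() / side, y.ravel() / side   # positions in the unit square

# Feedforward input: baseline plus orientation modulation, with input POs drawn
# independently of position. Parameter values are illustrative assumptions.
s_b, m, theta = 1.0, 0.1, 0.0
po = rng.uniform(0, np.pi, N)
s = s_b * (1 + m * np.cos(2 * (theta - po)))

common = np.full(N, s.mean())               # common-mode component (uniform)
modulation = s - common                     # zero-mean, spatially random component

# Stand-ins for spatially structured eigenmodes: smooth low-frequency patterns.
modes = []
for kx in range(1, 4):
    for ky in range(1, 4):
        v = np.cos(2 * np.pi * kx * x) * np.cos(2 * np.pi * ky * y)
        modes.append(v / np.linalg.norm(v))
modes = np.array(modes)

u = modulation / np.linalg.norm(modulation)
overlaps = np.abs(modes @ u)                # cosine similarity with each pattern
print(overlaps.max(), 1 / np.sqrt(N))
```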

The question arises whether spatially structured eigenmodes (cf. Fig. 8A) have an impact on the observed pattern of spontaneous and evoked neuronal activity. Neither the response of the network to a stimulus of one particular orientation nor the mean activity of neurons over different orientations reveals any visible structure (Fig. 8B, C). The baseline activity of the network appears quite uniform, and the response to a given orientation does not reveal any structure beyond the random spatial pattern expected from the random assignment of input preferred orientations. This is further supported by visual inspection of the maps of output preferred orientation (Fig. 8D) and orientation selectivity index (Fig. 8E) in the network.
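Maps like those in Fig. 8D, E can be obtained by computing a PO and an OSI for every row of a neurons × orientations matrix of mean firing rates. The sketch below assumes the common convention in which the orientation selectivity vector is $\sum_\theta r(\theta)\, e^{2i\theta} / \sum_\theta r(\theta)$, with the PO given by half its angle and the OSI by its length; the function name and the example tuning curve are hypothetical.

```python
import numpy as np

def orientation_selectivity(rates, thetas):
    """Preferred orientation (radians, in [0, pi)) and OSI of tuning curves.

    rates: array (..., n_orientations) of mean firing rates, one tuning curve
    per row; thetas: stimulus orientations in radians. Assumes the convention
    OSV = sum r e^{2i theta} / sum r, PO = arg(OSV) / 2, OSI = |OSV|.
    """
    rates = np.asarray(rates, dtype=float)
    osv = np.sum(rates * np.exp(2j * thetas), axis=-1) / np.sum(rates, axis=-1)
    po = (np.angle(osv) / 2) % np.pi
    osi = np.abs(osv)
    return po, osi

# Example: 8 orientations, baseline 10 Hz, modulation 5 Hz, PO at 60 degrees.
thetas = np.arange(8) * np.pi / 8
rates = 10 + 5 * np.cos(2 * (thetas - np.pi / 3))
po, osi = orientation_selectivity(rates, thetas)
print(np.degrees(po), osi)   # approximately 60.0 and 0.25
```

Applied to a matrix with one row per neuron, the same function returns one PO and one OSI per neuron, which can then be plotted at each neuron's spatial position.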

In principle, spatially structured eigenmodes could affect the response of the network by setting its operating point differently at different positions in space, as a result of the selective attenuation of certain eigenmodes. However, we did not observe such phenomena in our simulations. One reason might be that these structured modes are attenuated rather than amplified; another might be that the eigenmodes are typically heterogeneous and non-local, which makes the selection of a corresponding overall preferred pattern unlikely. The spatial structure of the network, and of its built-in linear eigenmodes, is therefore not dominant in determining the distribution of orientation selectivity. It could, however, contribute to the small deviation of the predicted distribution from the measured one.

Spatial Imbalance of Excitation and Inhibition

To test the robustness of our predictions, we went beyond the case of spatially balanced excitation and inhibition and also simulated networks with different spatial extents of excitatory and inhibitory connectivity. Roughly the same overall network behavior, and the same accuracy of our predictions, were observed both for more localized inhibition and less localized excitation (Fig. 9A) and for more localized excitation and less localized inhibition (Fig. 9B).
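To make the notion of spatial extent concrete, the following sketch draws distance-dependent connections with a Gaussian connection-probability profile on a unit square with periodic boundaries. The Gaussian profile, the widths, the peak probability and the population sizes are assumptions chosen for illustration, not the settings of the simulations reported here. Comparing the mean distance to pre-synaptic partners for a narrow and a wide profile mirrors the distance histograms shown in Fig. 10B, C.

```python
import numpy as np

def distance_dependent_connections(pos_post, pos_pre, sigma, p_max, rng):
    """Boolean connectivity (post x pre) with Gaussian distance dependence.

    Positions live on a unit square with periodic boundaries (an assumption).
    Returns the connection mask and the pairwise distances.
    """
    diff = pos_pre[None, :, :] - pos_post[:, None, :]
    diff = (diff + 0.5) % 1.0 - 0.5                  # wrap around the torus
    dist = np.linalg.norm(diff, axis=-1)
    prob = p_max * np.exp(-dist**2 / (2 * sigma**2))
    return rng.random(prob.shape) < prob, dist

rng = np.random.default_rng(2)
n_exc, n_inh = 400, 100
pos_exc = rng.random((n_exc, 2))
pos_inh = rng.random((n_inh, 2))
pos_all = np.vstack([pos_exc, pos_inh])              # all post-synaptic neurons

# Hypothetical widths: excitation more local than inhibition.
sigma_exc, sigma_inh = 0.1, 0.3
C_exc, d_exc = distance_dependent_connections(pos_all, pos_exc, sigma_exc, 0.5, rng)
C_inh, d_inh = distance_dependent_connections(pos_all, pos_inh, sigma_inh, 0.5, rng)

# Exclude self-connections (neuron i of a population onto itself).
np.fill_diagonal(C_exc[:n_exc], False)
np.fill_diagonal(C_inh[n_exc:], False)

# Mean distance from post-synaptic neurons to their pre-synaptic partners.
mean_d_exc = d_exc[C_exc].mean()
mean_d_inh = d_inh[C_inh].mean()
print(f"excitatory: {mean_d_exc:.3f}, inhibitory: {mean_d_inh:.3f}")
```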

Figure 9. The impact of spatial extent of excitation and inhibition on the distribution of F2 components.

Same illustration as in Fig. 4, for simulations with different extents of excitatory and inhibitory connectivity. (A) Results for a network with inhibition more localized than excitation. (B) Results for a network with excitation more localized than inhibition. Other parameters are the same as in Fig. 6. The distribution of F2 components is computed after re-normalization of the connectivity matrix, as explained before.

This trend was further corroborated when we systematically assessed the accuracy of our predictions for a large set of different networks by scanning the parameter space (Fig. 10A). For most of the parameters studied, the predicted distribution of orientation selectivity matched the actual distribution very well (more than 90% overlap). For the more “extreme” combinations of parameters, however, where the spatial extents of excitation and inhibition were highly out of balance, the quality of the match degraded. The deviation was more pronounced when excitation was more local and inhibition more global (Fig. 10A, upper left portion). Note that, even for the most extreme cases of local excitation, the accuracy of our prediction is still fairly good, as long as inhibition has a similar spatial extent.
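The overlap reported above compares two distributions of orientation selectivity. A minimal sketch, assuming the overlap index is the shared area of the two normalized histograms (the function name, the bin count and the value range are hypothetical choices), is:

```python
import numpy as np

def overlap_index(a, b, bins=20, value_range=(0.0, 1.0)):
    """Shared area of the normalized histograms of samples a and b (0 to 1)."""
    pa, _ = np.histogram(a, bins=bins, range=value_range)
    pb, _ = np.histogram(b, bins=bins, range=value_range)
    pa = pa / pa.sum()
    pb = pb / pb.sum()
    return float(np.minimum(pa, pb).sum())

rng = np.random.default_rng(3)
predicted = rng.beta(2, 8, size=2000)   # hypothetical predicted OSIs
measured = rng.beta(2, 7, size=2000)    # hypothetical measured OSIs
print(overlap_index(predicted, measured))
```

With this definition, identical distributions give an overlap of one and non-overlapping distributions give zero; the result depends somewhat on the chosen binning.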

Figure 10. Accuracy of the linear prediction for different spatial extents of excitation and inhibition.

(A) The overlap index is plotted for networks with different extents of excitation and inhibition. (B, C) Pre-synaptic connections of a sample post-synaptic neuron, along with the histogram of distances to pre-synaptic neurons for the entire population (inset), shown for the two extreme cases marked in panel (A). (D, E) Eigenvalue distributions of the example networks in (B) and (C), respectively. Two ways of normalizing the weight matrix are compared in the top and bottom panels. (F, G) The first nine eigenmodes, corresponding to the nine eigenvalues with the largest positive real part, for the example networks marked in (A). Panels (F) and (G) correspond to the networks in (B) and (C), respectively. Note that the ninth eigenvector in (G) corresponds to an eigenvalue from the bulk of the spectrum in (E). Only the real parts of the eigenmode components are plotted.

To investigate what happens in each extreme case, we chose two examples (marked in Fig. 10A) for further analysis, one with highly local inhibition and one with highly local excitation. Their connectivity patterns are illustrated in Fig. 10B and C, respectively, and the eigenvalue spectra of the corresponding weight matrices are shown in Fig. 10D and E. When the weights are normalized with respect to the reset voltage (upper panels), both spectra suggest unstable linearized dynamics, as both have eigenvalues with a real part larger than one.

The picture changes, however, when the weights are instead normalized according to the effective gain. While the network with local excitation still has several clearly unstable eigenmodes (Fig. 10E, bottom), the spectrum of the network with local inhibition comprises only one eigenvalue with a real part larger than one, and only slightly so (Fig. 10D, bottom). Some of the eigenvectors corresponding to the largest positive eigenvalues are plotted for both networks in Fig. 10F and G, respectively. It therefore seems possible that the source of deviation from the linear prediction for these extreme parameter settings is indeed the instability of the linearized dynamics, namely the instability of the uniform asynchronous-irregular state about which we perform the linearization. When this instability is more pronounced, as in the network with local excitation, the deviation is largest. When the network is at the edge of instability, as in the network with local inhibition, our predictions show only a modest deviation.
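The stability criterion used here can be made explicit. Assuming linearized rate dynamics of the form $\tau\, d\mathbf{r}/dt = -\mathbf{r} + \mathbf{W}\mathbf{r} + \mathbf{s}$, the uniform state is stable only if every eigenvalue of the (normalized) weight matrix has a real part below one. The sketch below counts the offending eigenvalues of a hypothetical random matrix under two scale factors; these factors merely stand in for the two normalizations compared in Fig. 10D, E and are not computed from the neuron model.

```python
import numpy as np

def unstable_eigenvalues(W, scale=1.0):
    """Eigenvalues of scale * W whose real part exceeds one.

    Under the assumed dynamics tau dr/dt = -r + (scale * W) r + s, each such
    eigenvalue corresponds to an eigenmode that grows instead of decaying.
    """
    lam = np.linalg.eigvals(scale * W)
    return lam[lam.real > 1.0]

rng = np.random.default_rng(4)
N = 300
# Hypothetical random matrix whose eigenvalue bulk has a radius of about 1.5.
W = 1.5 * rng.standard_normal((N, N)) / np.sqrt(N)

# Two hypothetical normalizations of the same connectivity.
for scale in (1.0, 0.5):
    n_unstable = unstable_eigenvalues(W, scale).size
    print(f"scale {scale}: {n_unstable} unstable eigenmodes")
```

The same connectivity can thus look unstable under one normalization and stable under another, which is why the choice of normalization matters for interpreting the spectra.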

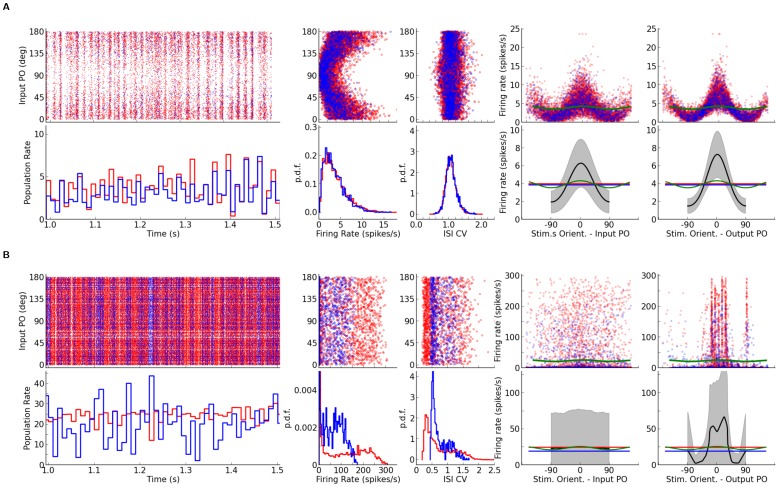

To test this hypothesis further, namely that instability of the linearized dynamics is the source of the mismatch between the linear prediction and the actual distribution of orientation selectivity, we examined the response behavior of the two sample networks in more detail (Fig. 11). While the network with local inhibition does not look very different from the examples considered before (Fig. 11A), the network with local excitation clearly behaves differently (Fig. 11B). First, firing rates are much higher than in the less extreme cases, for both excitatory and inhibitory populations (Fig. 11B, first column). Moreover, unlike in the other networks, the activities of the excitatory and inhibitory populations are not well correlated in time (Fig. 11B, first column, bottom). The firing rate distribution has a very long tail, which is longer for the excitatory than for the inhibitory population (Fig. 11B, second column). The long tail is accompanied by a peculiar peak at zero firing rate (cut for illustration purposes in Fig. 11B, second column, bottom), reflecting the fact that most neurons in the network are silent while a small fraction of the population is highly active. The average irregularity of spike trains (the CV of the inter-spike intervals) is reduced compared to our previous examples (Fig. 11B, third column). All these properties are consistent with the presumed instability of the linearized dynamics, as inferred from the eigenvalue spectrum.
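The summary statistics used above (mean firing rate, fraction of silent neurons and CV of inter-spike intervals) can be computed from spike trains as in the following sketch. The function name, the minimum spike count required for a CV estimate and the toy spike trains are hypothetical.

```python
import numpy as np

def activity_summary(spike_trains, duration, min_spikes=3):
    """Mean rate, fraction of silent neurons and mean ISI CV.

    spike_trains: list of 1D arrays of spike times (s); duration in s.
    The CV is averaged only over neurons with at least min_spikes spikes.
    """
    counts = np.array([len(st) for st in spike_trains])
    rates = counts / duration
    cvs = []
    for st in spike_trains:
        if len(st) >= min_spikes:
            isi = np.diff(np.sort(st))
            cvs.append(isi.std() / isi.mean())
    mean_cv = float(np.mean(cvs)) if cvs else float("nan")
    return rates.mean(), float(np.mean(counts == 0)), mean_cv

rng = np.random.default_rng(5)
T = 100.0
regular = np.arange(0.05, T, 0.1)                  # clock-like train, CV close to 0
poisson = np.cumsum(rng.exponential(0.1, 2000))
poisson = poisson[poisson < T]                      # Poisson-like train, CV close to 1
trains = [np.array([]), np.array([]), regular, poisson]
mean_rate, silent_fraction, mean_cv = activity_summary(trains, T)
print(mean_rate, silent_fraction, mean_cv)
```

A large silent fraction together with a long-tailed rate distribution and a reduced mean CV is the signature described for the network with local excitation.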

Figure 11. Orientation selectivity in networks with extreme spatial imbalance of excitation and inhibition.

(A, B) As extreme examples, networks with highly local inhibition (A; Fig. 10B) or highly local excitation (B; Fig. 10C) were considered. Shown are the spiking activity of the network (first column), the distribution of firing rates (second column), the spike train irregularity index (third column), and the output tuning curves (fourth and fifth columns). In the fourth column, the tuning curves are aligned according to their Input PO, whereas in the fifth column they are aligned according to their Output PO. Other conventions are the same as in Fig. 9.

In terms of functional properties of this network, the output tuning curves are much more scattered when aligned by the respective preferred orientations of their inputs (Fig. 11B, fourth column, upper panel). In fact, the mean output tuning curve over all neurons of the network shows no amplification when aligned at the Input PO (Fig. 11B, fourth column, lower panel). The picture changes, however, if tuning curves are aligned according to their Output PO (Fig. 11B, fifth column). Here a clear amplification of the modulation is evident in the output tuning curves, although the relation to the feedforward input is lost. Also, the average output tuning curve is not smooth, i.e. not all orientations are uniformly represented in the distribution of output preferred orientations.
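Aligning tuning curves at a reference orientation, as in the fourth and fifth columns of Fig. 11, amounts to circularly shifting each curve so that its reference orientation falls on a common center bin. A minimal sketch with hypothetical tuning curves, in which the output PO is deliberately drawn independently of the input PO to mimic the loss of relation to the feedforward input, shows why the mean curve is flat under one alignment and modulated under the other:

```python
import numpy as np

def align_tuning_curves(tcs, ref_idx):
    """Circularly shift each tuning curve so that ref_idx lands on the center bin.

    tcs: array (n_neurons, n_orientations); ref_idx: index of the reference
    orientation (e.g. input PO or output PO) for each neuron.
    """
    n_theta = tcs.shape[1]
    center = n_theta // 2
    return np.array([np.roll(tc, center - i) for tc, i in zip(tcs, ref_idx)])

rng = np.random.default_rng(6)
n_neurons, n_theta = 200, 8
thetas = np.arange(n_theta) * np.pi / n_theta
input_po_idx = rng.integers(0, n_theta, n_neurons)
output_po_idx = rng.integers(0, n_theta, n_neurons)   # unrelated to the input PO
tcs = 5 + 4 * np.cos(2 * (thetas[None, :] - thetas[output_po_idx][:, None]))

mean_by_input = align_tuning_curves(tcs, input_po_idx).mean(axis=0)
mean_by_output = align_tuning_curves(tcs, output_po_idx).mean(axis=0)
print(mean_by_input.round(2), mean_by_output.round(2))
```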