Abstract

Diverse ion channels and their dynamics endow single neurons with complex biophysical properties. These properties determine the heterogeneity of cell types that make up the brain, as constituents of neural circuits tuned to perform highly specific computations. How do biophysical properties of single neurons impact network function? We study a set of biophysical properties that emerge in cortical neurons during the first week of development, eventually allowing these neurons to adaptively scale the gain of their response to the amplitude of the fluctuations they encounter. During the same time period, these same neurons participate in large-scale waves of spontaneously generated electrical activity. We investigate the potential role of experimentally observed changes in intrinsic neuronal properties in determining the ability of cortical networks to propagate waves of activity. We show that such changes can strongly affect the ability of multi-layered feedforward networks to represent and transmit information on multiple timescales. With properties modeled on those observed at early stages of development, neurons are relatively insensitive to rapid fluctuations and tend to fire synchronously in response to wave-like events of large amplitude. Following developmental changes in voltage-dependent conductances, these same neurons become efficient encoders of fast input fluctuations over a few layers, but lose the ability to transmit slower, population-wide input variations across many layers. Depending on the neurons' intrinsic properties, noise plays different roles in modulating neuronal input-output curves, which can dramatically impact network transmission. The developmental change in intrinsic properties supports a transformation of a network's function from the propagation of network-wide information to one in which computations are scaled to local activity. 
This work underscores the significance of simple changes in conductance parameters in governing how neurons represent and propagate information, and suggests a role for background synaptic noise in switching the mode of information transmission.

Author Summary

Differences in ion channel composition endow different neuronal types with distinct computational properties. Understanding how these biophysical differences affect network-level computation is an important frontier. We focus on a set of biophysical properties, experimentally observed in developing cortical neurons, that allow these neurons to efficiently encode their inputs despite time-varying changes in the statistical context. Large-scale propagating waves are autonomously generated by the developing brain even before the onset of sensory experience. Using multi-layered feedforward networks, we examine how changes in intrinsic properties can lead to changes in the network's ability to represent and transmit information on multiple timescales. We demonstrate that measured changes in the computational properties of immature single neurons enable the propagation of slow-varying wave-like inputs. In contrast, neurons with more mature properties are more sensitive to fast fluctuations, which modulate the slow-varying information. While slow events are transmitted with high fidelity in initial network layers, noise degrades transmission in downstream network layers. Our results show how short-term adaptation and modulation of the neurons' input-output firing curves by background synaptic noise determine the ability of neural networks to transmit information on multiple timescales.

Introduction

Gain scaling refers to the ability of neurons to scale the gain of their responses when stimulated with currents of different amplitudes. A common property of neural systems, gain scaling adjusts the system's response to the size of the input relative to the input's standard deviation [1]. This form of adaptation maximizes information transmission for different input distributions [1]–[3]. Though this property is typically observed with respect to the coding of external stimuli by neural circuits [1], [3]–[7], Mease et al. [8] have recently shown that single neurons during early development of mouse cortex automatically adjust the dynamic range of coding to the scale of input stimuli through a modulation of the slope of their effective input-output relationship. In contrast to previous work, perfect gain scaling in the input-output relation occurs for certain values of ionic conductances and does not require any explicit adaptive processes that adjust the gain through spike-driven negative feedback, such as slow sodium inactivation [4], [9], [10] and slow afterhyperpolarization (AHP) currents [10], [11]. However, these experiments found that gain scaling is not a static property during development. At birth, or P0 (postnatal day 0), cortical neurons show limited gain scaling; in contrast, at P8, neurons show pronounced gain-scaling abilities [8]. Here, we examined how the emergence of the gain-scaling property in single cortical neurons during the first week of development might affect signal transmission over multiple timescales across the cortical network.

Along with the emergence of gain scaling during the first week of neural development, single neurons in the developing cortex participate in large-scale spontaneously generated activity which travels across different regions in the form of waves [12]–[14]. Pacemaker neurons located in the ventrolateral (piriform) cortex initiate spontaneous waves that continue to propagate dorsally across the neocortex [13]. Experimentally, much attention has been focused on synaptic interactions in initiating and propagating activity, with a particular emphasis on the role of GABAergic circuits, which are depolarizing in early development [15], [16]. While multiple network properties play an important role in the generation of spontaneous waves, here we ask how the intrinsic computational properties of cortical neurons, in particular gain scaling, can affect the generation and propagation of spontaneous activity. Changes in intrinsic properties may play a role in wave propagation during development, and the eventual disappearance of this activity as sensory circuits become mature.

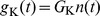

A simple model for propagating activity, like that observed during spontaneous waves, is a feedforward network in which activity is carried from one population, or layer, of neurons to the next without affecting previous layers [17]. We compare the behavior of networks composed of conductance-based neurons with either immature (nongain-scaling) or mature (gain-scaling) computational properties [8]. These networks exhibit different information processing properties with respect to both fast and slow timescales of the input. We determine how rapid input fluctuations are encoded in the precise spike timing of the output by the use of linear-nonlinear models [18], [19], and use noise-modulated frequency-current relationships to predict the transmission of slow variations in the input [20], [21].

We find that networks built from neuron types with different gain-scaling ability propagate information in strikingly different ways. Networks of gain-scaling (GS) neurons convey a large amount of fast-varying information from neuron to neuron, and transmit slow-varying information at the population level, but only across a few layers in the network; over multiple layers the slow-varying information disappears. In contrast, nongain-scaling (NGS) neurons are worse at processing fast-varying information at the single neuron level; however, subsequent network layers transmit slow-varying signals faithfully, reproducing wave-like behavior. We qualitatively explain these results in terms of the differences in the noise-modulated frequency-current curves of the neuron types through a mean field approach: this approach allows us to characterize how the mean firing rate of a neuronal population in a given layer depends on the firing rate of the neuronal population in the previous layer through the mean synaptic currents exchanged between the two layers. Our results suggest that the experimentally observed changes in intrinsic properties may contribute to the transition from spontaneous wave propagation in developing cortex to sensitivity to local input fluctuations in more mature networks, priming cortical networks to become capable of processing functionally relevant stimuli.
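The mean-field idea can be illustrated with a toy iterated map. This sketch is not the conductance-based model used in this work: it assumes a hypothetical logistic $f$–$I$ curve `f_i_curve(mu, sigma)` whose transition softens as the noise amplitude `sigma` grows, and a hypothetical linear synapse in which the mean current into layer $l+1$ is `w` times the mean firing rate of layer $l$. All parameter values are illustrative.

```python
import math


def f_i_curve(mu, sigma, theta=1.0, r_max=30.0):
    """Hypothetical noise-modulated f-I curve (Hz): a logistic function of the
    mean input mu whose transition softens as the noise amplitude sigma grows."""
    z = (mu - theta) / max(sigma, 1e-6)
    z = max(min(z, 50.0), -50.0)  # clip so math.exp stays in range
    return r_max / (1.0 + math.exp(-z))


def propagate(r0, w, sigma, n_layers=10):
    """Iterate the mean-field map r[l+1] = f_i_curve(w * r[l], sigma).

    The mean synaptic current into layer l+1 is assumed to be w times the
    mean firing rate of layer l (a hypothetical linear synapse)."""
    rates = [r0]
    for _ in range(n_layers - 1):
        rates.append(f_i_curve(w * rates[-1], sigma))
    return rates


if __name__ == "__main__":
    for sigma in (0.1, 1.0):  # weak vs. strong background noise
        for r0 in (5.0, 30.0):  # small vs. large event in layer 1
            final = propagate(r0, w=0.07, sigma=sigma)[-1]
            print(f"sigma={sigma}, layer-1 rate={r0:4.1f} Hz -> layer-10 rate={final:5.2f} Hz")
```

In this toy, weak noise makes the map bistable, so a small layer-1 event decays toward 0 Hz while a large one stays near 30 Hz; strong noise instead pulls both toward a single fixed point, erasing the difference between them. Whether the actual GS and NGS curves behave this way is a question for the network simulations, not for this sketch.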

Results

Single cortical neurons acquire the ability to scale the gain of their responses in the first week of development, as shown in cortical slice experiments [8]. Here, we describe gain scaling by characterizing a single neuron's response to white noise using linear/nonlinear (LN) models (see below). Before becoming efficient encoders of fast stimulus fluctuations, the neurons participate in network-wide activity events that propagate along stereotypical directions, known as spontaneous cortical waves [13], [22]. Although many parameters regulate these waves in the developing cortex, we sought to understand the effect of gain scaling in single neurons on the ability of cortical networks to propagate information about inputs over long timescales, as occur during waves, and over short timescales, as occur when waves disappear and single neurons become efficient gain scalers. More generally, we use waves in developing cortex as an instance of a broader question: how do changes in the intrinsic properties of biophysically realistic model neurons affect how a network of such neurons processes and transmits information?

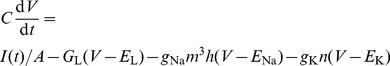

We have shown that in cortical neurons in brain slices, developmental increases in the ratio of the maximal sodium ($\bar{g}_{Na}$) to potassium ($\bar{g}_K$) conductance can explain the parallel transition from nongain-scaling to gain-scaling behavior [8]. Furthermore, the gain-scaling ability can be controlled by pharmacological manipulation of the maximal $\bar{g}_{Na}$ to $\bar{g}_K$ ratio [8]. The gain-scaling property can also be captured by changing this ratio in single conductance-based model neurons [8]. Therefore, we first examined networks consisting of two types of neurons, in which the ratio of $\bar{g}_{Na}$ to $\bar{g}_K$ was set to either 0.6 (representing immature, nongain-scaling neurons) or 1.5 (representing mature, gain-scaling neurons).

Two computational regimes at different temporal resolution

We first characterized the responses of conductance-based model neurons using methods previously applied to experimentally recorded neurons driven with white noise. A neuron's gain-scaling ability is defined by a rescaling, by the stimulus standard deviation, of the input/output function of a linear/nonlinear (LN) model [8]. Using a white noise input current, we extracted LN models describing the response properties of the two neuron types to rapid fluctuations, while fixing the mean (DC) of the input current. The LN model [18], [19], [23] predicts the instantaneous time-varying firing rate of a single neuron in two stages: the input stimulus is first linearly filtered with a relevant feature of the input, and a nonlinear input-output curve then relates the magnitude of that feature in the input (the filtered stimulus) to the probability of firing. We computed the spike-triggered average (STA) as the relevant feature of the input [18], [24], and then constructed the nonlinear response function as the probability of firing given the stimulus linearly filtered by the STA.
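A minimal sketch of the STA and of the Bayes'-rule nonlinearity, assuming a discretized stimulus with one sample per time bin and at most one spike per bin. The synthetic neuron in the hypothetical helper `make_toy_data` (its exponential filter and sigmoid) is a stand-in used only to exercise the estimator; it is not the conductance-based model.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sta_and_nonlinearity(stim, spikes, n_lags=30, n_bins=25):
    """Spike-triggered average and LN nonlinearity P(spike | x) via Bayes' rule.

    stim   : 1-D array, one white-noise sample per time bin
    spikes : 1-D 0/1 array of the same length (1 = spike in that bin)
    x is the stimulus history projected onto the unit-norm STA.
    """
    X = sliding_window_view(stim, n_lags)   # each row: n_lags samples, last = most recent
    y = spikes[n_lags - 1:].astype(bool)     # spike at the end of each window
    sta = X[y].mean(axis=0)                  # average stimulus history preceding a spike
    x = X @ (sta / np.linalg.norm(sta))      # linearly filtered stimulus
    edges = np.linspace(x.min(), x.max(), n_bins + 1)
    counts, _ = np.histogram(x, bins=edges)           # estimates P(x) up to a constant
    spike_counts, _ = np.histogram(x[y], bins=edges)  # estimates P(x | spike) up to a constant
    # Bayes: P(spike|x) = P(spike) P(x|spike) / P(x); with these histogram
    # estimates the normalizing constants cancel, leaving spike_counts / counts.
    p_spike = np.divide(spike_counts, counts,
                        out=np.full(n_bins, np.nan), where=counts > 0)
    centers = 0.5 * (edges[:-1] + edges[1:])
    return sta, centers, p_spike, counts


def make_toy_data(seed=0, n=200_000, n_lags=30):
    """Hypothetical LN neuron used only to exercise the estimator."""
    rng = np.random.default_rng(seed)
    stim = rng.standard_normal(n)
    true_filter = np.exp(-(n_lags - 1 - np.arange(n_lags)) / 5.0)  # weights recent samples most
    true_filter /= np.linalg.norm(true_filter)
    drive = sliding_window_view(stim, n_lags) @ true_filter
    p = 1.0 / (1.0 + np.exp(-3.0 * (drive - 1.5)))  # hypothetical sigmoid nonlinearity
    spikes = np.zeros(n, dtype=int)
    spikes[n_lags - 1:] = rng.random(drive.size) < p
    return stim, spikes, true_filter


if __name__ == "__main__":
    stim, spikes, true_filter = make_toy_data()
    sta, centers, nl, counts = sta_and_nonlinearity(stim, spikes)
    print("cosine(STA, true filter):", round(float(sta @ true_filter / np.linalg.norm(sta)), 3))
    for c, pr, k in zip(centers, nl, counts):
        if k > 1000:  # only well-sampled bins
            print(f"x={c:+.2f}  P(spike|x)={pr:.3f}")
```

For a Gaussian white-noise stimulus the STA recovers the direction of the filter, which is why the projection onto the STA is a reasonable choice of filtered stimulus here.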

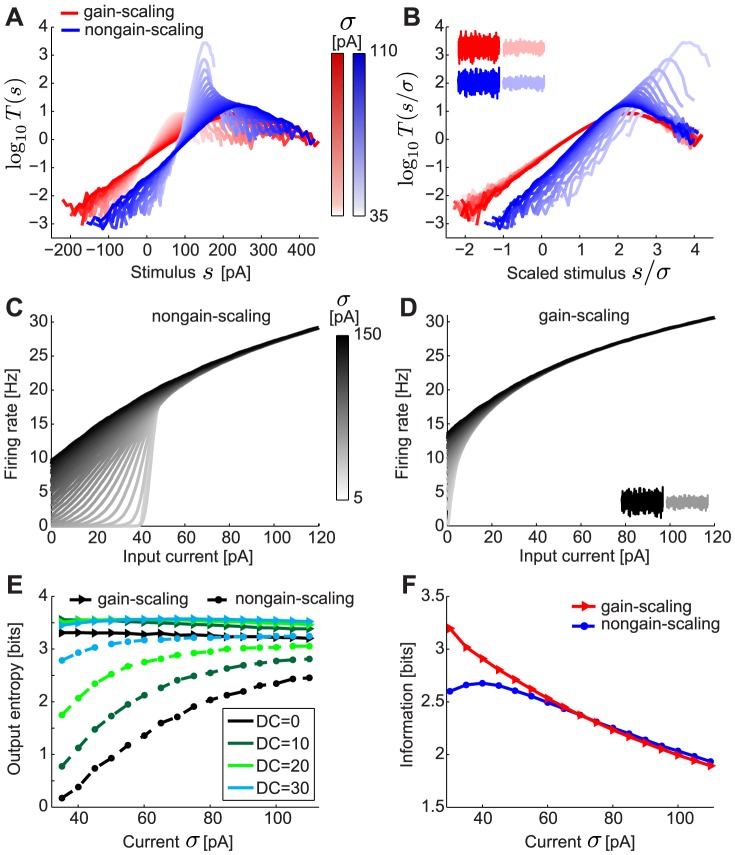

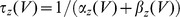

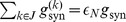

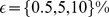

Repeating this procedure for noise stimuli with a range of standard deviations ($\sigma$) produces a family of curves for both neuron types (Figure 1A). While the linear feature is relatively constant as a function of the magnitude of the rapid fluctuations, $\sigma$, the nonlinear input-output curves change, similar to experimental observations in single neurons in cortical slices [8]. When the input is normalized by $\sigma$, the mature neurons have a common input-output curve with respect to the normalized stimulus (Figure 1B, red) [8] over a wide range of input DC. In contrast, the input-output curves of immature neurons have a different slope when compared in units of the normalized stimulus (Figure 1B, blue). Gain scaling has previously been shown to support a high rate of information transmission about stimulus fluctuations in the face of changing stimulus amplitude [1]. Indeed, these GS neurons have higher output entropy, and can therefore transmit more information, than NGS neurons (Figure 1E). The output entropy is approximately constant regardless of $\sigma$ for a range of mean (DC) inputs; this is a hallmark of their gain-scaling ability. The changing shape of the input-output curve for the NGS neurons results in an increasing output entropy as a function of $\sigma$ (Figure 1E). With the addition of DC, the output entropy of the NGS neurons' firing eventually approaches that of the GS neurons; this is accompanied by a simultaneous decrease in the distance between the resting and threshold membrane potentials of the NGS neurons, as shown previously [8]. Thus, GS neurons are better at encoding fast fluctuations, a property that might enable efficient local computation independent of the background signal amplitude in more mature circuits after waves disappear.
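Why a $\sigma$-scaled nonlinearity yields $\sigma$-independent output entropy can be checked with a toy closed-form calculation. This sketch assumes Gaussian-CDF nonlinearities and takes the output entropy to be that of the binary spike/no-spike variable in one time bin; both are simplifying assumptions and may differ from the definition in Methods. The gain-scaling toy has a threshold fixed in units of $\sigma$, the nongain-scaling toy a threshold fixed in absolute units, and the hypothetical parameter `dc` shifts the stimulus mean toward that threshold.

```python
import math


def std_normal_cdf(x):
    """Phi(x), the standard normal cumulative distribution function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def binary_entropy_bits(p):
    """Entropy (bits) of a spike/no-spike variable with spike probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def mean_p_spike_gs(sigma, theta=1.0, width=0.3):
    """Gain-scaling toy: P(spike|s) = Phi((s/sigma - theta)/width).
    Because s/sigma ~ N(0, 1), the average over s does not depend on sigma."""
    return std_normal_cdf(-theta / math.sqrt(width ** 2 + 1.0))


def mean_p_spike_ngs(sigma, dc=0.0, theta=2.0, width=0.3):
    """Nongain-scaling toy: P(spike|s) = Phi((s - theta)/width) with a fixed
    threshold; averaging over s ~ N(dc, sigma^2) gives this closed form."""
    return std_normal_cdf((dc - theta) / math.sqrt(width ** 2 + sigma ** 2))


if __name__ == "__main__":
    for sigma in (0.5, 1.0, 1.5, 2.0):
        h_gs = binary_entropy_bits(mean_p_spike_gs(sigma))
        h_ngs = binary_entropy_bits(mean_p_spike_ngs(sigma))
        print(f"sigma={sigma}: H_GS={h_gs:.3f} bits, H_NGS={h_ngs:.3f} bits")
```

In this toy the GS entropy is flat in $\sigma$, while the NGS entropy rises with $\sigma$ and also rises when `dc` moves the mean closer to threshold, qualitatively echoing the trends described above.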

Figure 1. LN models and $f$–$I$ curves for gain-scaling (GS) and nongain-scaling (NGS) neurons.

A. The nonlinearities in the LN model framework for a GS (red) ($\bar{g}_{Na} = \ldots$ pS/µm² and $\bar{g}_K = \ldots$ pS/µm²) and a NGS (blue) ($\bar{g}_{Na} = \ldots$ pS/µm² and $\bar{g}_K = \ldots$ pS/µm²) neuron simulated as conductance-based model neurons (Eq. 2). The nonlinearities were computed using Bayes' rule: $P(\mathrm{spike}\mid s) = \bar{r}\,P(s\mid\mathrm{spike})/P(s)$, where $\bar{r}$ is the neuron's mean firing rate and $s$ is the linearly filtered stimulus (see also Eq. 7 in Methods). B. The same nonlinearities as A, in stimulus units scaled by $\sigma$ (magnitude of stimulus fluctuations). The nonlinearities overlap for GS neurons over a wide range of $\sigma$. C–D. The $f$–$I$ curves for a NGS (C) and a GS neuron (D) for different values of $\sigma$. E. The output entropy as a function of the mean (DC) and $\sigma$ (amplitude of fast fluctuations). F. Information about the output firing rate of the neurons as a function of $\sigma$.

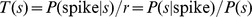

The response of a neuron to slow input variations may be described in terms of its firing rate as a function of the mean input $I$ through a frequency-current ($f$–$I$) curve. This description averages over the details of the rapid fluctuations. The shape of this $f$–$I$ curve can be modulated by the standard deviation ($\sigma$) of the background noise [20], [21]. Here, the "background noise" is a rapidly varying input that is not considered to convey specific stimulus information; rather, it provides a statistical context that modulates the signaled information, which is assumed to be contained in the slow-varying mean input. Thus, a neuron's slow-varying responses can be characterized in terms of a family of $f$–$I$ curves parameterized by $\sigma$.
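A family of noise-modulated $f$–$I$ curves can be sketched with a generic leaky integrate-and-fire neuron instead of the conductance-based model used here (an assumption for illustration). In this sketch `mu` is the mean input and `sigma` the stationary standard deviation of the noise-driven membrane potential, both in units of the reset-to-threshold distance; `tau`, `dt`, `t_max`, and `seed` are hypothetical values.

```python
import numpy as np


def lif_rate(mu, sigma, tau=0.02, dt=1e-4, t_max=2.0, v_th=1.0, v_reset=0.0, seed=0):
    """Firing rate (Hz) of a leaky integrate-and-fire neuron with mean input mu
    and white-noise input scaled so that, without a threshold, the membrane
    potential would have stationary standard deviation sigma."""
    rng = np.random.default_rng(seed)
    n_steps = int(round(t_max / dt))
    # Euler-Maruyama increments for dV = (mu - V) dt / tau + sigma * sqrt(2 / tau) dW
    kicks = (rng.standard_normal(n_steps) * sigma * np.sqrt(2.0 * dt / tau)).tolist()
    v, n_spikes = v_reset, 0
    for kick in kicks:
        v += (mu - v) * dt / tau + kick
        if v >= v_th:  # threshold crossing: count a spike and reset
            n_spikes += 1
            v = v_reset
    return n_spikes / t_max


if __name__ == "__main__":
    mus = np.linspace(0.0, 2.0, 9)
    for sigma in (0.0, 0.2, 0.5):  # one f-I curve per noise level
        rates = [lif_rate(mu, sigma) for mu in mus]
        print(f"sigma={sigma:.1f}: " + " ".join(f"{r:5.1f}" for r in rates))
```

Below threshold (`mu` < 1) the noiseless curve is flat at zero, and noise lifts it into a graded response; how strongly an $f$–$I$ curve responds to such changes in $\sigma$ is the property compared between neuron types in the text.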

Comparing the $f$–$I$ curves for the two neuron types using the same conductance-based models reveals substantial differences in their firing thresholds and also in their modulability by $\sigma$ (Figure 1C,D). NGS neurons have a relatively high threshold at low $\sigma$, and their $f$–$I$ curves are significantly modulated by the addition of noise, i.e. with increasing $\sigma$ (Figure 1C). In contrast, the $f$–$I$ curves of GS neurons have lower thresholds and show minimal modulation with the level of noise (Figure 1D). This behavior is reflected in the information that each neuron type transmits about firing rate for a range of $\sigma$ (Figure 1F). This information quantification determines how well a distribution of input DC can be distinguished at the level of the neuron's output firing rate while averaging out the fast fluctuations. The information would be low for neurons whose output firing rates are indistinguishable for a range of DC inputs, and high for neurons whose output firing rates unambiguously differ for different DC inputs. The two neuron types convey similar information for large $\sigma$, where the $f$–$I$ curves are almost invariant to noise magnitude. For GS neurons, most information about the input is conveyed at low $\sigma$, where the $f$–$I$ curve encodes the largest range of firing rates (0 to 30 Hz). The information encoded by NGS neurons is non-monotonic: at low $\sigma$ these neurons transmit less information because their high thresholds compress the range of inputs being encoded. Information transmission is maximized at $\sigma = \ldots$, for which the $f$–$I$ curve approaches linearity, simultaneously maximizing the range of inputs and outputs encoded by the neuron. For both neuron types, the general trend of decreasing information as $\sigma$ increases results from the compression of the range of outputs (10 to 30 Hz).
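The information about DC inputs can be sketched as the mutual information between a DC level and the resulting spike count. This sketch assumes DC levels drawn uniformly from a finite set, Poisson spike counts in a hypothetical 0.5 s window, and rates read off an $f$–$I$ curve; the estimator in Methods may differ. The example contrasts a curve spanning 0 to 30 Hz with the same levels compressed into 10 to 30 Hz.

```python
import math


def poisson_pmf(n, lam):
    """P(N = n) for a Poisson count with mean lam (computed in log space)."""
    if lam <= 0.0:
        return 1.0 if n == 0 else 0.0
    return math.exp(n * math.log(lam) - lam - math.lgamma(n + 1))


def info_about_dc(rates_hz, window_s=0.5):
    """Mutual information (bits) between a DC level, drawn uniformly from the
    levels that produce rates_hz, and the Poisson spike count in window_s."""
    lams = [r * window_s for r in rates_hz]
    n_max = int(max(lams) + 10.0 * math.sqrt(max(lams) + 1.0) + 10)  # truncate the count tail
    p_level = 1.0 / len(lams)
    cond = [[poisson_pmf(n, lam) for n in range(n_max + 1)] for lam in lams]  # P(n | level)
    marg = [p_level * sum(row[n] for row in cond) for n in range(n_max + 1)]  # P(n)
    info = 0.0
    for row in cond:
        for n, p in enumerate(row):
            if p > 0.0:
                info += p_level * p * math.log2(p / marg[n])
    return info


if __name__ == "__main__":
    full = [0.0, 5.0, 10.0, 15.0, 20.0, 25.0, 30.0]        # f-I curve spanning 0 to 30 Hz
    compressed = [10.0 + 20.0 * k / 6 for k in range(7)]   # same levels mapped to 10 to 30 Hz
    print(f"full range: {info_about_dc(full):.2f} bits")
    print(f"compressed: {info_about_dc(compressed):.2f} bits")
```

Compressing the output range packs the count distributions closer together, so they overlap more and the DC levels become harder to tell apart.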

These two descriptions characterize the different processing abilities of the two neuron types. GS neurons, with their $\sigma$-invariant LN-model input-output relations, are better suited to efficiently encode fast current fluctuations because information transmission is independent of $\sigma$. In contrast, NGS neurons, with their $\sigma$-modulatable $f$–$I$ curves, are better at representing a range of mean inputs, as illustrated by their ability to preserve the range of input currents in the range of output firing rates.

The ratio of $\bar{g}_{Na}$ to $\bar{g}_K$ is sufficient for modulating a neuron's intrinsic computation

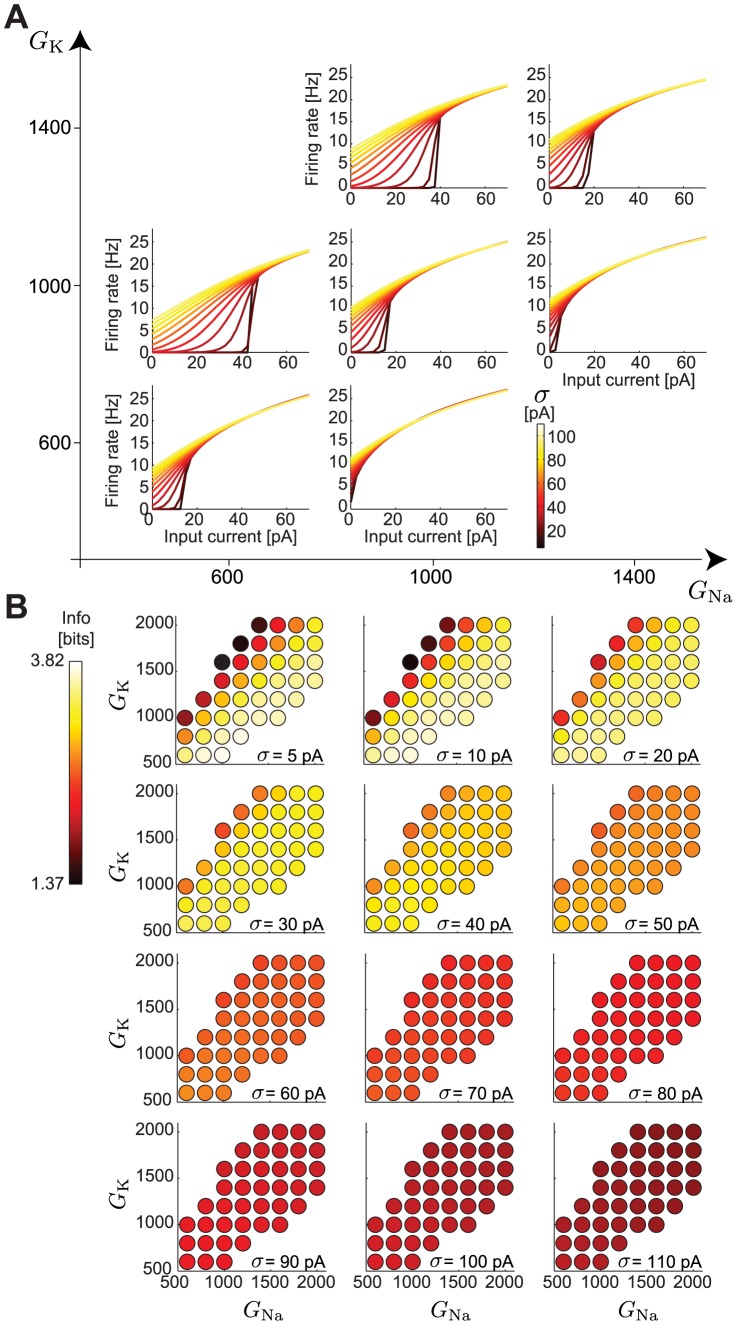

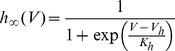

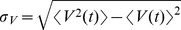

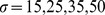

To characterize the spectrum of intrinsic properties that might arise as a result of different maximal conductances, $\bar{g}_{Na}$ and $\bar{g}_K$, we determined the $f$–$I$ curves for a range of maximal conductances in the conductance-based model neurons (Figure 2). Mease et al. [8] previously classified neurons as spontaneously active, excitable or silent and, based on the neurons' LN models, determined gain-scaling ability as a function of the individual $\bar{g}_{Na}$ and $\bar{g}_K$ for excitable neurons. Models with low $\bar{g}_{Na}/\bar{g}_K$ had nonlinear input-output relations that did not scale completely with $\sigma$, while models with high $\bar{g}_{Na}/\bar{g}_K$ had almost identical nonlinear input-output relations for all $\sigma$ [8]. Therefore, gain-scaling ability increased with increasing ratio, independent of each individual conductance.

Figure 2. $f$–$I$ curves and information as a function of individual maximal Na and K conductances.

A. The $f$–$I$ curves for different maximal Na and K conductances, $\bar{g}_{Na}$ and $\bar{g}_K$, in pS/µm² (compare to Figure 1C,D). B. The information for the different models as a function of $\sigma$ (compare to Figure 1F).

We examined the modulability of $f$–$I$ curves by $\sigma$ in excitable model neurons while independently varying $\bar{g}_{Na}$ and $\bar{g}_K$ (Figure 2). Like gain scaling, the modulability by $\sigma$ depended only on the ratio $\bar{g}_{Na}/\bar{g}_K$, rather than on either conductance alone, with larger modulability observed for smaller ratios. To further explore the implications of such modulability by $\sigma$, we computed the mutual information that each model neuron transmits about mean inputs for a range of $\sigma$ (Figure 2). Neurons with high $\bar{g}_{Na}/\bar{g}_K$ behaved like the GS neurons in Figure 1F, while neurons with low $\bar{g}_{Na}/\bar{g}_K$ behaved like the NGS neurons.

These results suggest that the ability of single neurons to represent a distribution of mean input currents by their distribution of output firing rates can be captured by changing only the ratio of $\bar{g}_{Na}$ to $\bar{g}_K$. Therefore, we focused on studying two neuron types with $\bar{g}_{Na}/\bar{g}_K$ at the two extremes of the conductance range of excitable neurons: GS neurons with $\bar{g}_{Na}/\bar{g}_K = 1.5$ and NGS neurons with $\bar{g}_{Na}/\bar{g}_K = 0.6$.

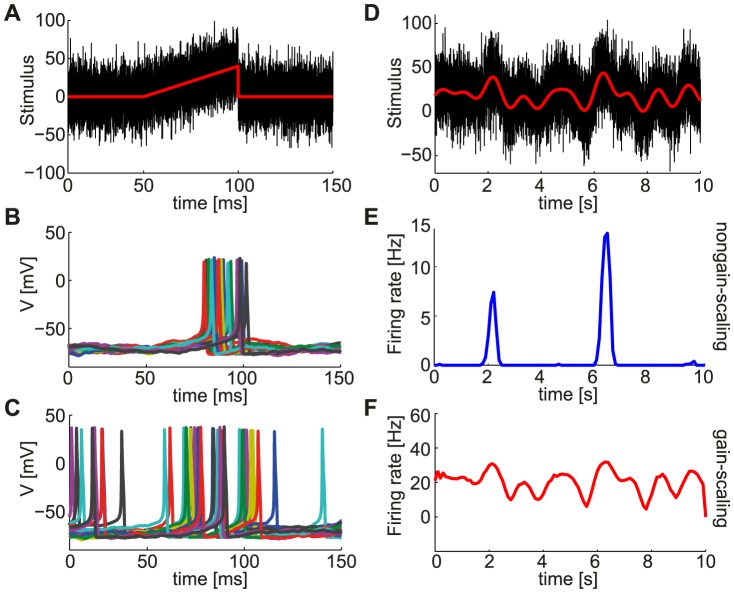

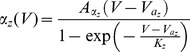

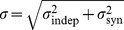

Population responses of the two neuron types

Having characterized the single neuron responses of the two neuron types to fast-varying information via the LN models and to slow-varying information via the $f$–$I$ curves, we compared their population responses to stimuli with fast and slow timescales. A population of uncoupled neurons of each type was stimulated with a common slow ramp of input current and superimposed fast-varying noise inputs, generated independently for each neuron (Figure 3A). The population of NGS neurons fired synchronously with respect to the ramp input and only during the peak of the ramp (Figure 3B), while the GS neurons were more sensitive to the background noise and fired asynchronously during the ramp (Figure 3C), with a firing rate that was continuously modulated by the ramp input. This suggests that the sensitivity of GS neurons to noise fluctuations at the single neuron level allows them to better encode slower variations in the common signal at the population level [25]–[27], in contrast to the NGS population, which only responds to events of large amplitude independent of the background noise.
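The population-level role of independent noise can be illustrated with threshold units, a deliberately simplified stand-in for the conductance-based neurons (an assumption): each unit is active whenever the common signal plus its own private Gaussian noise exceeds a fixed threshold. The ramp amplitude, threshold, and noise levels below are hypothetical.

```python
import numpy as np


def population_rate(signal, noise_sd, threshold=1.0, n_neurons=100, seed=0):
    """Fraction of threshold units that are active at each time step when every
    unit receives the common signal plus its own independent Gaussian noise."""
    rng = np.random.default_rng(seed)
    noise = noise_sd * rng.standard_normal((n_neurons, signal.size))  # private to each unit
    return ((signal[None, :] + noise) > threshold).mean(axis=0)


if __name__ == "__main__":
    t = np.linspace(0.0, 1.0, 200)
    ramp = 1.2 * t  # slow common ramp; crosses the threshold only near its peak
    for sd in (0.0, 0.3):
        rate = population_rate(ramp, sd)
        print(f"noise sd={sd}: corr(rate, ramp)={np.corrcoef(ramp, rate)[0, 1]:.2f}")
```

Without noise the units switch on together, all or none, near the peak of the ramp; with independent noise the fraction of active units rises gradually and tracks the ramp more closely.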

Figure 3. Stimulus encoding varies with the intrinsic properties of neurons.

A. Noise fluctuations (black) superimposed on a short ramping input stimulus (red) with a rise time of 50 ms were presented to two separate populations of 100 independent conductance-based model neurons with different gain-scaling properties. B,C. Voltage responses of (B) 100 NGS ($\bar{g}_{Na} = \ldots$ pS/µm² and $\bar{g}_K = \ldots$ pS/µm²) and (C) 100 GS neurons ($\bar{g}_{Na} = \ldots$ pS/µm² and $\bar{g}_K = \ldots$ pS/µm²) to the ramp input in A. Different colors indicate voltage responses of different neurons. D. Noise fluctuations with a correlation time constant of 1 ms (black) superimposed on a Gaussian input stimulus low-pass filtered at 500 ms (red) for a duration of 10 seconds were also presented to the two neuron populations. E,F. Population responses (PSTHs) of NGS (E) and GS (F) neurons to the input in D.

During cortical development, wave-like activity on longer timescales occurs in the midst of fast-varying random synaptic fluctuations [13], [14], [28], [29]. Therefore, we compared the population responses of GS and NGS neurons to a slow-varying input (500 ms correlation time constant) common to all neurons, combined with fast-varying background noise input (1 ms correlation time constant) independent for each neuron (Figure 3D). The distinction between the two neuron types is evident in the mean population responses (peristimulus time histogram, i.e. PSTH). The NGS population only captured the stimulus peaks (Figure 3E), while the GS population faithfully captured the temporal fluctuations of the common signal, aided by each neuron's temporal jitter caused by the independent noise fluctuations (Figure 3F). Although this is not an exact model of cortical wave development, the comparison supports the hypothesis that the intrinsic properties of single neurons can lead to different information transmission capabilities of cortical networks at different developmental time points, and to the transition from wave propagation to wave cessation.
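Inputs of this kind can be generated as Ornstein-Uhlenbeck processes, i.e. white noise passed through a first-order low-pass filter; that this matches the filtering used in the simulations is an assumption. The update below is exact for any step size `dt`; the amplitudes, the 0.5 ms step, and the population size in the demonstration are hypothetical choices.

```python
import numpy as np


def ou_process(n_steps, dt, tau, sd, rng, n_series=1):
    """Exact discretization of an Ornstein-Uhlenbeck process (first-order
    low-pass filtered white noise) with correlation time tau and stationary
    standard deviation sd. Returns an array of shape (n_series, n_steps)."""
    a = np.exp(-dt / tau)              # one-step autocorrelation
    b = sd * np.sqrt(1.0 - a * a)      # keeps the variance stationary at sd**2
    xi = rng.standard_normal((n_steps, n_series))
    x = np.empty((n_steps, n_series))
    x[0] = sd * xi[0]                  # start in the stationary distribution
    for k in range(1, n_steps):
        x[k] = a * x[k - 1] + b * xi[k]
    return x.T


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dt, duration = 5e-4, 10.0                      # hypothetical 0.5 ms step, 10 s trial
    n_steps = int(round(duration / dt))
    common = ou_process(n_steps, dt, tau=0.5, sd=1.0, rng=rng)[0]            # slow, shared
    private = ou_process(n_steps, dt, tau=1e-3, sd=0.5, rng=rng, n_series=100)  # fast, per neuron
    inputs = common[None, :] + private             # input current to each of 100 neurons
    print(inputs.shape)
```

Using the exact exponential update rather than an Euler step avoids biasing the 1 ms process when `dt` is not much smaller than its correlation time.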

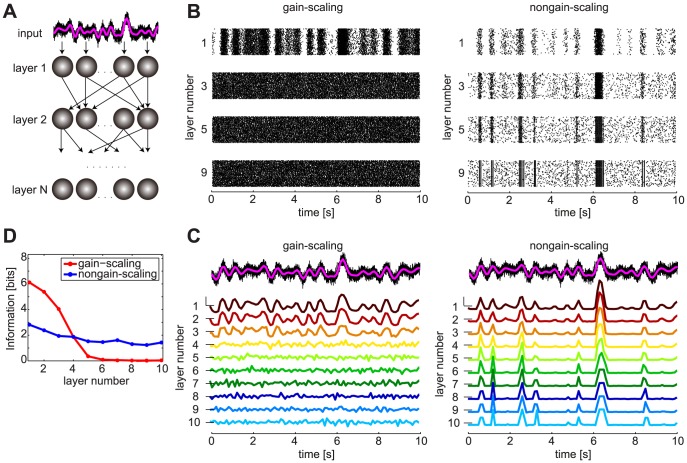

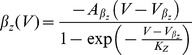

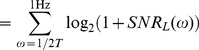

Transmission of slow-varying information through the network

The observed difference between the population responses of the GS and NGS neurons to the slow-varying stimulus in the presence of fast background fluctuations (Figure 3D–F) suggested that the two neuron types differ in their ability to transmit information at slow timescales. We therefore examined how the identified single neuron properties affect information transmission across multiple layers in feedforward networks. Each network consisted of 10 layers of 2000 identical neurons of one of the two types (Figure 4A). The neurons in the first layer receive a common temporally fluctuating stimulus with a long correlation time constant (1 s, see Methods); neurons in deeper layers receive synaptic input from neurons in the previous layer via conductance-based synapses. Each neuron in the network also receives a rapidly varying independent noise input (correlation time constant 1 ms) that simulates fast synaptic fluctuations. This noise sets the statistical context for the slow-varying information but does not itself carry stimulus information. The GS and NGS networks have strikingly different spiking dynamics (Figure 4B). The GS network responds with higher mean firing rates in each layer, as expected from the f–I curves characterizing intrinsic neuronal properties (Figure 1C,D). While the GS neurons have a baseline firing rate even at zero input current, the NGS neurons fire only for large input currents, with a threshold that depends on the level of intrinsic noise; thus, the two neuron types have different firing rates. To evaluate how the networks transmit fluctuations of the slow-varying common input signal independently of the overall firing rates, we computed the averaged population (PSTH) response of each layer, normalized to mean 0 and variance 1 (Figure 4C).
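As a minimal sketch of this normalization step, assuming spike times in seconds and a fixed bin width (both illustrative choices, not the settings used for Figure 4C), a layer's PSTH can be computed and then z-scored to mean 0 and variance 1, so that layers with different overall firing rates can be compared on the same scale:

```python
import math
from typing import List, Sequence


def psth(spike_times: Sequence[Sequence[float]], t_max: float,
         bin_width: float) -> List[float]:
    """Population firing rate (spikes/s per neuron) in consecutive time bins."""
    n_neurons = len(spike_times)
    n_bins = int(round(t_max / bin_width))
    counts = [0] * n_bins
    for neuron_spikes in spike_times:
        for t in neuron_spikes:
            k = int(t // bin_width)
            if 0 <= k < n_bins:
                counts[k] += 1
    return [c / (n_neurons * bin_width) for c in counts]


def zscore(x: Sequence[float]) -> List[float]:
    """Shift and scale a trace to mean 0 and (population) variance 1."""
    mean = sum(x) / len(x)
    sd = math.sqrt(sum((v - mean) ** 2 for v in x) / len(x))
    if sd == 0.0:
        raise ValueError("a constant trace cannot be normalized")
    return [(v - mean) / sd for v in x]


# Two hypothetical neurons, 1 s of activity, 0.5 s bins.
rates = psth([[0.1, 0.6], [0.2]], t_max=1.0, bin_width=0.5)
print(rates)          # [2.0, 1.0]
print(zscore(rates))  # [1.0, -1.0]
```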

Figure 4. Information transmission through GS and NGS networks.

A. Feedforward network with a slowly modulated time-varying input (magenta) presented to all neurons in the first layer, each neuron also receiving an independent noisy signal (black). B. Spike rasters for GS neurons (… pS/µm² and … pS/µm²) show rapid signal degradation in deeper layers, while NGS neurons (… pS/µm² and … pS/µm²) exhibit reliable transmission of large-amplitude events. The spiking responses synchronize in deeper layers. C. PSTHs from each layer in the two networks, showing the propagation of a slow-varying input in the presence of fast background fluctuations. PSTHs were normalized to mean 0 and variance 1 to illustrate fluctuations despite different firing rates; the dashed line next to each PSTH denotes 0, and the scale bar denotes 2 normalized units. D. Information about the slow stimulus fluctuations conveyed by the population mean responses shown in C.

The first few layers of the GS network robustly propagate the slow-varying signal, owing to the temporally jittered responses produced by single-neuron sensitivity to fast fluctuations, consistent with the population response in Figure 3F. However, because of these same noise fluctuations, the population response degrades in deeper layers (Figure 4C, left; see also Figure S1 for …). In contrast, the NGS network is insensitive to the fast fluctuations and thresholds the slow-varying input at the first layer, as in Figure 3E. Despite the fast-varying background noise, the NGS network robustly transmits the large peaks of this stimulus to deeper layers without distortion (Figure 4C, right).

This difference in transmission between the two network types is captured by the information that the population response carries about the slow-varying stimulus (Figure 4D). The GS network initially carries more information about the slow-varying stimulus than the NGS network; however, this information degrades in deeper layers, where virtually all the input structure is lost, and drops below that of the NGS network beyond layer four (Figure 4D, bottom). Although the information carried by the NGS network is initially lower than in the GS network (owing to signal thresholding), it is preserved across layers and eventually exceeds the GS information.
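The estimator used for Figure 4D is not described in this section. As a hedged stand-in only, if the normalized stimulus and a layer's normalized PSTH were treated as jointly Gaussian samples, the information per sample would follow from their correlation coefficient $\rho$ as $-\tfrac{1}{2}\log_2(1-\rho^2)$ bits. The traces below are hypothetical numbers chosen to mimic a shallow layer that tracks the stimulus and a deep layer that has lost most of its structure; temporal correlations between samples are ignored.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def gaussian_info_bits(rho: float) -> float:
    """Mutual information (bits) between jointly Gaussian variables with correlation rho."""
    if not -1.0 < rho < 1.0:
        raise ValueError("|rho| must be below 1 for a finite value")
    return -0.5 * math.log2(1.0 - rho * rho)


# Hypothetical normalized stimulus and two layer responses.
stimulus = [0.0, 1.0, 0.5, -0.8, -1.2, 0.3]
shallow = [0.1, 0.9, 0.6, -0.7, -1.1, 0.2]   # tracks the stimulus closely
deep = [0.4, 0.1, -0.3, 0.2, -0.1, 0.0]      # most structure lost
for name, resp in [("shallow", shallow), ("deep", deep)]:
    print(name, round(gaussian_info_bits(pearson(stimulus, resp)), 3))
```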

The observed differences in the propagation of slow-varying inputs between the two network types resemble changes in wave propagation during development. While spontaneous waves cross cortex in stereotyped activity events that simultaneously activate large populations of neurons at birth, these waves disappear after the first postnatal week [13], [16]. We have demonstrated that immature neurons lacking the gain-scaling ability can indeed propagate slow-varying wave-like input of large amplitude as population activity across many layers. As these same neurons acquire the ability to locally scale the gain of their inputs and efficiently encode fast fluctuations, they lose the ability to propagate large amplitude events at the population level, consistent with the disappearance of waves in the second postnatal week [13]. While many parameters regulate the propagation of waves [14], [29], our network models demonstrate that varying the intrinsic properties of single neurons can capture substantial differences in the ability of networks to propagate slow-varying information. Thus, changes in single neuron properties can contribute to both spontaneous wave generation and propagation early in development and the waves' disappearance later in development.

Dynamics of signal propagation

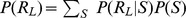

The layer-by-layer propagation of a slow-varying signal through the population responses of the two networks can be qualitatively predicted using a mean field approach that bridges descriptions of single neuron and network properties. Since network dynamics vary on faster timescales than the correlation time of the slow-varying signal, the propagation of a slow-varying signal can be studied by considering how a range of mean inputs propagates through each network. The intrinsic response of a neuron to a mean (DC) current input is quantified by the f–I curve, which averages over the details of the fast background fluctuations; yet the magnitude of the background noise, $\sigma$, can change the shape and gain of this curve [20], [21]. Thus, for a given neuron type, there is a different f–I curve for each level of noise, $f_\sigma$ (Figure 1C,D). The mean current input to a neuron in a given layer $L$, $I_L$, can be approximated from the firing rate in the previous layer, $r_{L-1}$, through a linear input-output relationship, $r_{L-1} = \alpha I_L$, whose slope $\alpha$ depends on network properties (connection probability and synaptic strength, see Eq. 15). Given the estimated mean input current $I_L$, the resulting firing rate of layer $L$, $r_L$, can then be computed by evaluating the appropriate f–I curve, $f_\sigma$, which characterizes the neuron's intrinsic computation:

$$I_L = \frac{r_{L-1}}{\alpha}, \qquad r_L = f_\sigma(I_L). \qquad (1)$$

Thus, these two curves serve as an iterated map whereby an estimate of the firing rate in the Lth layer,  , is converted into a mean input current to the next layer,

, is converted into a mean input current to the next layer,  , which can be further converted into

, which can be further converted into  , propagating mean activity across multiple layers in the network (Figures 5, 6). While for neurons in the first layer, the selected

, propagating mean activity across multiple layers in the network (Figures 5, 6). While for neurons in the first layer, the selected  –

– curve is the one corresponding to the level of intrinsic noise injected into the first layer,

curve is the one corresponding to the level of intrinsic noise injected into the first layer,  , for neurons in deeper layers, the choice of

, for neurons in deeper layers, the choice of  –

– curve depends not only on the magnitude of the independent noise fluctuations injected into each neuron, but also on the fluctuations arising from the input from the previous layer (see Eq. 16 in Methods). The behavior of this iterated map is shaped by its fixed points, the points of intersection of the

curve depends not only on the magnitude of the independent noise fluctuations injected into each neuron, but also on the fluctuations arising from the input from the previous layer (see Eq. 16 in Methods). The behavior of this iterated map is shaped by its fixed points, the points of intersection of the  –

– curve

curve  with the input-output line

with the input-output line  , which organize the way in which signals are propagated from layer to layer. The number, location and stability of these fixed points depend on the curvature of

, which organize the way in which signals are propagated from layer to layer. The number, location and stability of these fixed points depend on the curvature of  and on

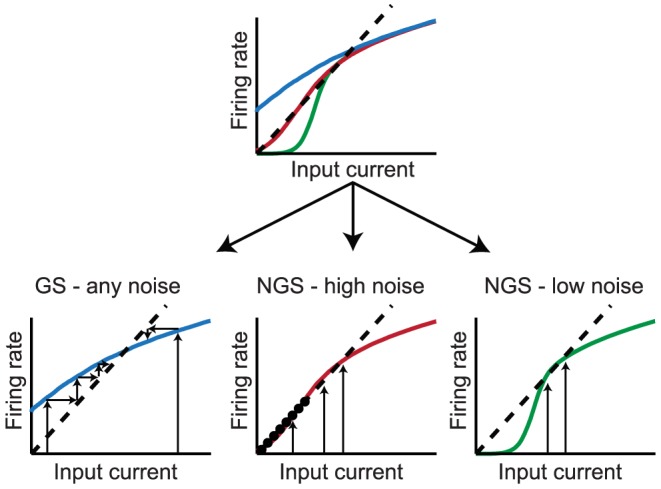

and on  (Figure 5). When the slope of

(Figure 5). When the slope of  at the fixed point is less than

at the fixed point is less than  , the fixed point is stable. This implies that the entire range of initial DC inputs (into layer 1) will tend to iterate toward the value at the fixed point as the mean current is propagated through downstream layers in the network (Figure 5, left). Therefore, all downstream layers will converge to the same population firing rate that corresponds to the fixed point. In the interesting case that

, the fixed point is stable. This implies that the entire range of initial DC inputs (into layer 1) will tend to iterate toward the value at the fixed point as the mean current is propagated through downstream layers in the network (Figure 5, left). Therefore, all downstream layers will converge to the same population firing rate that corresponds to the fixed point. In the interesting case that  becomes tangent to the linear input-output relation, i.e. the

becomes tangent to the linear input-output relation, i.e. the  –

– curve has a slope equal to

curve has a slope equal to  , the map exhibits a line attractor: there appears an entire line of stable fixed points (Figure 5, middle). This ensures the robust propagation of many input currents and population rates across the network. Interestingly, the

, the map exhibits a line attractor: there appears an entire line of stable fixed points (Figure 5, middle). This ensures the robust propagation of many input currents and population rates across the network. Interestingly, the  –

– curves of the GS and NGS neurons for different values of

curves of the GS and NGS neurons for different values of  fall into one of the regimes illustrated in Figure 5: GS neurons with their

fall into one of the regimes illustrated in Figure 5: GS neurons with their  -invariant

-invariant  –

– curves have a single stable fixed point (Figure 5, left), while the NGS neurons have line attractors with exact details depending on

curves have a single stable fixed point (Figure 5, left), while the NGS neurons have line attractors with exact details depending on  (Figure 5, middle and right). The mechanics of generating a line attractor have been most extensively explored in the context of oculomotor control (where persistent activity has been interpreted as a short-term memory of eye position that keeps the eyes still between saccades) and decision making in primates (where persistent neural activity has been interpreted as the basis of working memory) [30].

(Figure 5, middle and right). The mechanics of generating a line attractor have been most extensively explored in the context of oculomotor control (where persistent activity has been interpreted as a short-term memory of eye position that keeps the eyes still between saccades) and decision making in primates (where persistent neural activity has been interpreted as the basis of working memory) [30].
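The iterated map of Eq. (1) can be sketched in a few lines, assuming the notation $I_L = r_{L-1}/\alpha$ and $r_L = f_\sigma(I_L)$ and holding the noise level, and hence the f–I curve, fixed across layers (the layer-dependent noise of Eq. 16 is ignored). The threshold-linear curve `threshold_linear` and its parameters are hypothetical, not fits to the GS or NGS neurons. The second call uses a linear f–I curve whose slope equals $\alpha$, the idealized line-attractor case in which every input is passed on unchanged.

```python
from typing import Callable, List


def propagate_mean_rates(f_sigma: Callable[[float], float], alpha: float,
                         i_layer1: float, n_layers: int) -> List[float]:
    """Iterate r_L = f_sigma(I_L) and I_{L+1} = r_L / alpha across layers."""
    rates = []
    current = i_layer1
    for _ in range(n_layers):
        rate = f_sigma(current)   # intrinsic f-I computation in layer L
        rates.append(rate)
        current = rate / alpha    # linear network map to the mean input of layer L+1
    return rates


def threshold_linear(i: float, theta: float = 10.0, gain: float = 2.0) -> float:
    """Hypothetical f-I curve (Hz vs pA): silent below theta, linear above."""
    return gain * max(0.0, i - theta)


# Activity dies out: the line r = 2 I lies above this curve for every I > 0.
print(propagate_mean_rates(threshold_linear, alpha=2.0, i_layer1=15.0, n_layers=4))
# -> [10.0, 0.0, 0.0, 0.0]
# Line attractor: f(I) = alpha * I, so any initial input is passed on unchanged.
print(propagate_mean_rates(lambda i: 2.0 * i, alpha=2.0, i_layer1=15.0, n_layers=4))
# -> [30.0, 30.0, 30.0, 30.0]
```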

Figure 5. Fixed points of the iterated map dynamics.

Top: An illustration of three f–I curves (colors) and the corresponding linear input-output relation (black dashed) with slope $\alpha$ derived from the mean field. Bottom left: The dynamics has a single stable fixed point and all input currents are attracted to it (indicated by small arrows converging to the fixed point). This corresponds to f–I curves of GS neurons at all values of $\sigma$. Middle: The dynamics has a line of stable fixed points that allows robust transmission of a large range of input currents in the network. NGS neurons with high values of $\sigma$ have such dynamics. Right: The stable line of fixed points is shorter for f–I curves that are more "thresholding," corresponding to NGS neurons with low $\sigma$.
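The fixed-point analysis of Figure 5 can be sketched numerically: locate the currents where $f_\sigma(I) = \alpha I$, then compare the local slope of the f–I curve with $\alpha$ (slope below $\alpha$: stable; above: unstable; equal: marginal, the line-attractor case). The Hill-type curve `hill_f_i`, its parameters and $\alpha = 2$ are hypothetical, chosen only to produce the three-fixed-point configuration described for NGS networks; the grid-plus-bisection root finder is a generic numerical choice, not the method used in the paper.

```python
from typing import Callable, List


def hill_f_i(i: float, r_max: float = 100.0, theta: float = 20.0, n: int = 4) -> float:
    """Hypothetical thresholding f-I curve (Hz vs pA); zero for i <= 0."""
    if i <= 0.0:
        return 0.0
    return r_max * i ** n / (i ** n + theta ** n)


def fixed_points(f: Callable[[float], float], alpha: float, i_min: float,
                 i_max: float, n_grid: int = 2001, tol: float = 1e-10) -> List[float]:
    """Currents I* with f(I*) = alpha * I*, found by a grid scan plus bisection."""
    g = lambda i: f(i) - alpha * i
    grid = [i_min + (i_max - i_min) * k / (n_grid - 1) for k in range(n_grid)]
    roots = []
    for a, b in zip(grid[:-1], grid[1:]):
        ga, gb = g(a), g(b)
        if ga == 0.0:
            roots.append(a)
        elif ga * gb < 0.0:
            lo, hi = a, b
            while hi - lo > tol:  # keep the sign change inside [lo, hi]
                mid = 0.5 * (lo + hi)
                if g(lo) * g(mid) <= 0.0:
                    hi = mid
                else:
                    lo = mid
            roots.append(0.5 * (lo + hi))
    if g(grid[-1]) == 0.0:
        roots.append(grid[-1])
    return roots


def stability(f: Callable[[float], float], alpha: float, i_star: float,
              h: float = 1e-4, rel_tol: float = 1e-3) -> str:
    """Compare the local f-I slope (central difference) with alpha."""
    slope = (f(i_star + h) - f(i_star - h)) / (2.0 * h)
    if abs(slope - alpha) <= rel_tol * alpha:
        return "marginal"
    return "stable" if slope < alpha else "unstable"


alpha = 2.0  # hypothetical slope of the input-output line (Hz per pA)
for i_star in fixed_points(hill_f_i, alpha, 0.0, 100.0):
    print(round(i_star, 2), stability(hill_f_i, alpha, i_star))
```

Under these assumptions the sketch reports a stable fixed point at zero, an unstable one near 17 pA and a stable one near 49 pA.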

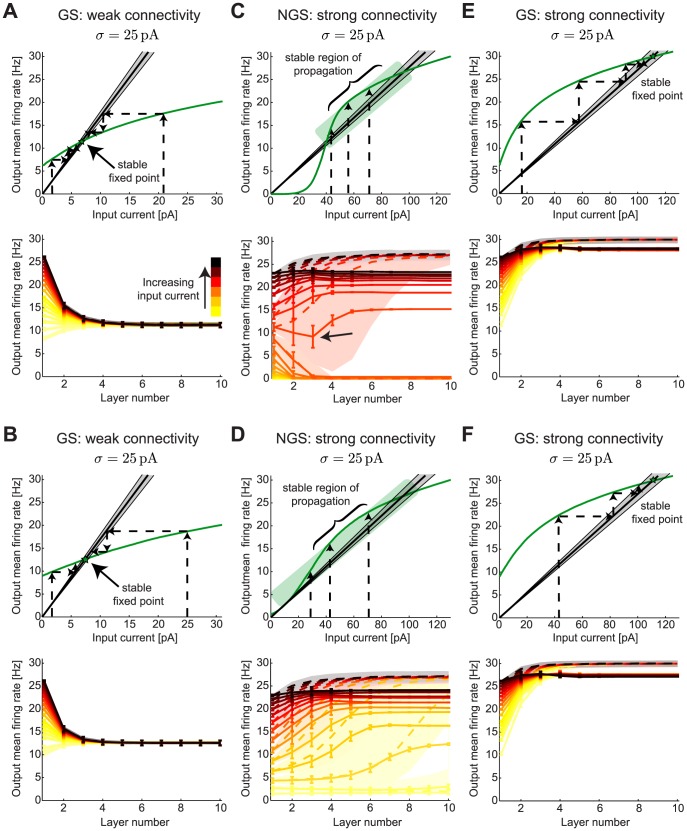

Figure 6. Firing rate propagation through networks of gain-scaling and nongain-scaling neurons.

A,B. Top: The f–I curves (green) for GS neurons (… pS/µm² and … pS/µm²) at two levels of noise, $\sigma = \ldots$ pA (low noise) and $\sigma = \ldots$ pA (high noise). The linear input-output relationships from the mean field (black) predict how the mean output firing rate of a given network layer can be derived from the mean input current into the first layer, with the standard deviation of the prediction shown in gray. Dashed arrows show the iterated map dynamics transforming different mean input currents into a single output firing rate determined by the stable fixed point (green star). Bottom: The network mean firing rates for a range of mean input currents (to layer 1) as a function of layer number, with a clear convergence to the fixed point by layer 5. The results from numerical simulations over 10 second-long trials are shown as full lines (mean … from 2000 neurons in each layer), and mean field predictions are shown as dashed lines with a shaded background in the same color (for each input) illustrating the standard deviation of the prediction. Other network parameters: connection probability 5%, synaptic strength …, and range of mean input currents 0–22 pA. C,D. Same as A,B but for NGS neurons (… pS/µm² and … pS/µm²) with stronger synaptic strength … and range of mean input currents 0–70 pA. The network dynamics show a region of stable firing rate propagation (green box) where the f–I curve behaves as if it were tangent to the input-output line over a large range of mean input currents (to layer 1). The size of the region increases with noise (up to $\sigma = \ldots$ pA). Bottom panels show the transmission of a range of input firing rates across different layers in the network. The arrow denotes a case where the firing rate first decreases towards 0 and then stabilizes. E,F. Same synaptic strength as C,D but for GS neurons (… pS/µm² and … pS/µm²). Bottom panels show the convergence of firing rates to a single fixed point, similar to the weakly connected GS network in A,B. As for the NGS networks in C,D, the mean field analysis predicts convergence to a slightly higher firing rate than the numerical simulations.

Indeed, Figure 6A,B shows that the f–I curves for GS neurons at two values of $\sigma$, one low and one high, are very similar. The mean field analysis predicts that all initial DC inputs applied to layer 1 will converge to the same stable fixed point during propagation to downstream layers, and numerical simulations corroborate this prediction (Figure 6A,B, bottom). The steady state firing rate is thus set jointly by single neuron properties (through $f_\sigma$) and network properties (through $\alpha$, Eq. 15). Activity in the GS networks can propagate from one layer to the next with relatively weak synaptic strength even when the networks are sparsely connected (5% connection probability), as a result of the low thresholds of these neurons (Figure 1D). The specific synaptic strength in Figure 6A,B was chosen arbitrarily so that the f–I curve intersects the input-output line with slope $\alpha$, but other synaptic strengths produce qualitatively similar network behavior (Figure S2). The parameter $\alpha$ can be modulated by changing either the connection probability or the synaptic strength in the network; as long as their product is preserved, $\alpha$ remains constant and the resulting network dynamics do not change (Figure S2). Furthermore, because the GS f–I curves are barely modulated by $\sigma$ (Figure 1D), the network dynamics remain largely invariant to the amplitude of background noise.
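The single-fixed-point regime can be illustrated with a hypothetical concave f–I curve that, like the GS curves, fires at a baseline rate for zero input. Because such a curve crosses the line $r = \alpha I$ only once, from above, the crossing is stable and every initial input is drawn to it. The curve shape, its parameters and $\alpha = 1$ are illustrative assumptions, and the noise level is held fixed across layers.

```python
import math
from typing import Callable, List


def saturating_f_i(i: float, r0: float = 5.0, r_max: float = 20.0,
                   scale: float = 10.0) -> float:
    """Hypothetical GS-like f-I curve (Hz vs pA): baseline r0 at zero input, concave, saturating."""
    return r0 + r_max * (1.0 - math.exp(-max(i, 0.0) / scale))


def propagate(f: Callable[[float], float], alpha: float, i1: float,
              n_layers: int) -> List[float]:
    """Mean-field iteration r_L = f(I_L), I_{L+1} = r_L / alpha."""
    rates, current = [], i1
    for _ in range(n_layers):
        rate = f(current)
        rates.append(rate)
        current = rate / alpha
    return rates


alpha = 1.0  # hypothetical slope of the input-output line (Hz per pA)
finals = [propagate(saturating_f_i, alpha, i1, n_layers=10)[-1]
          for i1 in (0.0, 5.0, 11.0, 22.0)]
print([round(r, 2) for r in finals])
```

Under these assumptions all four initial inputs end up at essentially the same rate (about 23 Hz) after ten layers, mirroring the loss of input identity in Figure 6A,B.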

In contrast, the amplitude of background noise fluctuations, $\sigma$, has a much larger impact on the shape of NGS f–I curves (Figure 1C) and on the resulting network dynamics (Figure 5). When the combination of sparse connection probability and weak synaptic strength makes the slope $\alpha$ too steep (as with the weak connectivity used for the GS networks in Figure 6A,B), the input-output line may not intersect the NGS f–I curves at all: all DC inputs are mapped below threshold and activity does not propagate to downstream layers. Keeping the same sparse connection probability and increasing synaptic strength enables the propagation of neuronal activity initiated in the first layer to subsequent layers in NGS networks. For a particular value of $\sigma$, there is an entire line of stable fixed points in the network dynamics (Figure 5, middle), so that a large range of input currents is robustly transmitted through the network. More commonly, however, the map has three fixed points: stable fixed points at a high value and at zero, and an intermediate unstable fixed point (Figure 6C,D). In this case, mean field theory predicts that DC inputs above the unstable fixed point should flow toward the high value, while inputs below it should iterate toward zero, causing the network to stop firing. However, the map still behaves as though the f–I curve and the input-output line were effectively tangent to one another over a wide range of input rates (green box in Figure 6C,D), creating an effective line of fixed points along which a large range of DC inputs is stably propagated through the network. This holds generically for a wide range of noise values, although the exact region of stable propagation depends on $\sigma$ (Figure 5, middle and right, Figure S3). The best input signal transmission is observed when the network noise selects the most linear f–I curve, the one that simultaneously maximizes the range of DC inputs and population firing rates of the neurons (Figure 5, middle); the noise value in Figure 6C,D approximately corresponds to this case. We call this a stable region of propagation for the network, since a large range of mean DC inputs can be propagated across the network layers such that the population firing rates at each layer remain distinct. Our results resemble those of van Rossum et al. [31], who observed regimes of stable signal propagation in networks of integrate-and-fire neurons by varying the DC input and an additional background noise. The best regime for stable signal propagation occurred for additive noise large enough to ensure that the neurons in the population independently estimated the stimulus, as in our NGS networks (Figure 5, middle and right, Figure S3).
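The three-fixed-point case can be simulated directly with the same kind of iterated map. Here `hill_f_i` is a hypothetical thresholding curve (not a fit to NGS neurons) and $\alpha = 2$ is assumed; with these choices the unstable fixed point lies near 17 pA, so an initial input of 10 pA decays toward zero while 30 pA settles near the upper stable rate. This pure mean-field sketch does not capture the effective line of fixed points seen in the simulations, which the text attributes to noise-dependent f–I curves and synchrony.

```python
from typing import Callable, List


def hill_f_i(i: float, r_max: float = 100.0, theta: float = 20.0, n: int = 4) -> float:
    """Hypothetical thresholding f-I curve (Hz vs pA); zero for i <= 0."""
    if i <= 0.0:
        return 0.0
    return r_max * i ** n / (i ** n + theta ** n)


def propagate(f: Callable[[float], float], alpha: float, i1: float,
              n_layers: int) -> List[float]:
    """Mean-field iteration r_L = f(I_L), I_{L+1} = r_L / alpha."""
    rates, current = [], i1
    for _ in range(n_layers):
        rate = f(current)
        rates.append(rate)
        current = rate / alpha
    return rates


alpha = 2.0  # hypothetical slope of the input-output line (Hz per pA)
low = propagate(hill_f_i, alpha, 10.0, n_layers=10)   # starts below the unstable point
high = propagate(hill_f_i, alpha, 30.0, n_layers=10)  # starts above it
print([round(r, 2) for r in low[:4]], round(low[-1], 6))
print([round(r, 2) for r in high[:4]], round(high[-1], 2))
```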

The emergence of extended regions of stable rate propagation means that the NGS mean field predictions (Figure 6C,D, bottom) are less accurate than for the GS networks, where the convergence to the stable fixed points is exact (Figure 6A,B). However, the NGS mean field predictions agree qualitatively with the simulation results, particularly in the initial network layers, where the approach to the nonzero stable fixed point is much slower than in the GS networks, i.e. occurs over a larger number of layers. Along with the slow convergence of firing rates toward a single population firing rate, the ability of network noise to modulate the NGS f–I curves suggests that multiple f–I curves, reflecting the combination of added and intrinsically generated noise (see Eq. 16), can be used to predict network dynamics. As a result, for some input currents (e.g. arrow in Figure 6C) the firing rate decreases over the first three layers, where the mean field dynamics predict convergence to the zero stable fixed point. This initial decrease in firing rate is due to the disappearance of weak synaptic inputs, which cannot trigger the cells to spike. Network noise then selects a different f–I curve that shifts the dynamics into the rate stabilization region (Figure 6C, green box), where firing rates are stably propagated. The onset of synchronous firing of the neuronal population in each layer also contributes to rate stabilization. Population firing rates in deeper layers increase to a saturating value lower than the value predicted by the mean field analysis. Similar results have been observed experimentally [32] and in networks of Hodgkin-Huxley neurons [33]. We find similar network dynamics for a more weakly connected NGS network using the smallest synaptic strength that allows activity to propagate through the network (Figure S2). As for the GS networks, as long as the product of connection probability and synaptic strength is constant, the slope $\alpha$ of the linear input-output relationship and the network dynamics remain unchanged, even if these network parameters change individually (Figure S2).
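How noise can select a different f–I curve is easiest to see in an idealized case, assumed here rather than taken from the paper: a threshold-linear unit whose input is corrupted by static Gaussian noise of standard deviation $\sigma$. Its mean rate has the closed form $g\,[(I-\theta)\,\Phi(z) + \sigma\,\phi(z)]$ with $z = (I-\theta)/\sigma$, where $\Phi$ and $\phi$ are the standard normal CDF and density. Larger $\sigma$ raises the response to subthreshold inputs and smooths the threshold, in the spirit of the noise-dependent NGS curves of Figure 1C; the threshold $\theta$ and gain $g$ values are hypothetical.

```python
import math


def noisy_threshold_linear(i: float, sigma: float, theta: float = 20.0,
                           gain: float = 2.0) -> float:
    """Mean of gain * max(0, i + noise - theta) for Gaussian noise with SD sigma.

    Uses the rectified-Gaussian closed form; reduces to the hard threshold when sigma == 0.
    """
    if sigma == 0.0:
        return gain * max(0.0, i - theta)
    z = (i - theta) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal density
    cdf = 0.5 * math.erfc(-z / math.sqrt(2.0))               # standard normal CDF
    return gain * ((i - theta) * cdf + sigma * pdf)


# One hypothetical f-I curve per noise level, sampled at a few input currents (pA).
for sigma in (0.0, 2.0, 10.0):
    curve = [noisy_threshold_linear(i, sigma) for i in (0.0, 10.0, 20.0, 30.0, 40.0)]
    print(sigma, [round(r, 2) for r in curve])
```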

An exception to this result is observed at very sparse connectivity (connection probability of 2%), where network behavior is more similar to that of the GS networks (Figure S2, bottom right). At this sparse connectivity, independent noise reduces the common input across different neurons and synchrony is less pronounced. This suggests that the emergence of synchrony plays a fundamental role in achieving reliable propagation of a range of DC inputs (and correspondingly population firing rates) in the NGS networks. Although experimental measurements of the connection probability in developing cortical networks are lacking, calcium imaging of single neurons demonstrates that activity across many neurons during wave propagation is synchronous [34]. Intracellular recordings of adult cultured cortical networks also demonstrate that synchronous neuronal firing activity is transmitted across multiple layers [32].

To examine network behavior for comparable connectivity strength, we repeated the network simulations and mean field predictions of mean DC input propagation in GS networks with the same increased synaptic strength needed for propagation of activity in the NGS networks. We found that the behavior was similar to that of the weakly connected GS network: regardless of the initial input current, the network output converged to a single output firing rate by layer 5 (Figure 6E,F), making these networks incapable of robustly propagating slow-varying signals without distortion. As in the strongly connected NGS networks, neurons across the different layers in these strongly connected GS networks developed synchronous firing. This synchrony led to a small difference (several Hz) between the final firing rate approached by each network and the firing rate predicted from the mean field analysis. Although both the strongly connected GS and NGS networks developed synchronous firing, the behavior of the two types of networks remained different (Figure 6).

The results in this section indicate that firing rate transmission depends on the details of single-neuron properties, including their sensitivity to fast fluctuations as characterized by the LN models (Figure 1A,B). Firing rate transmission also depends on how strongly the f–I curves are modulated by the noise amplitude (Figure 1C,D). Because of these differences in intrinsic computation, the GS and NGS networks show distinct patterns of information transmission (Figure 5): convergence of firing rates to a unique fixed point (GS), or a line of fixed points ensuring stable propagation of firing rates that can be reliably distinguished at the output (NGS). In the latter case, even when a line of fixed points is not precisely realized, as in Figure 5 (middle), competition between the slow convergence of firing rates to the mean-field fixed point and the emergence of synchrony enables the propagation of firing rates through the different network layers, aided by the range of f–I curves sampled by network noise of different amplitudes.
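A toy version of the mean-field argument can make the two regimes concrete. In the sketch below, which rests on illustrative assumptions rather than on the model in the Methods, each layer's population rate is obtained by applying a fixed transfer function to the previous layer's rate. `sigmoid_transfer` is a hypothetical saturating curve whose slope is below 1 everywhere, so it has a single attracting fixed point, loosely analogous to the GS case; `line_attractor_transfer` is a hypothetical identity map on 0 to 50 Hz, an idealized line of fixed points loosely analogous to the NGS case. Neither function is fitted to the f–I curves of Figure 1.

```python
import math
from typing import Callable, List


def sigmoid_transfer(rate_in: float) -> float:
    """Hypothetical saturating transfer function (Hz -> Hz).

    Its maximal slope is 30 / (4 * 10) = 0.75 < 1, so repeated application
    contracts every input toward one globally attracting fixed point.
    """
    return 30.0 / (1.0 + math.exp(-(rate_in - 20.0) / 10.0))


def line_attractor_transfer(rate_in: float) -> float:
    """Hypothetical identity map clipped to 0-50 Hz: every rate is a fixed point."""
    return min(max(rate_in, 0.0), 50.0)


def propagate(transfer: Callable[[float], float], rate0: float, n_layers: int) -> List[float]:
    """Iterate the mean-field map r_{l+1} = transfer(r_l).

    Returns [r_0, r_1, ..., r_n], i.e. the input rate followed by n layer outputs.
    """
    rates = [rate0]
    for _ in range(n_layers):
        rates.append(transfer(rates[-1]))
    return rates


if __name__ == "__main__":
    for r0 in (5.0, 15.0, 30.0, 45.0):
        gs_like = propagate(sigmoid_transfer, r0, 30)[-1]
        ngs_like = propagate(line_attractor_transfer, r0, 30)[-1]
        print(f"input {r0:5.1f} Hz -> saturating map {gs_like:6.3f} Hz, line map {ngs_like:6.3f} Hz")
```

Iterating the saturating map from inputs of 5, 15, 30 and 45 Hz brings all four onto the same rate (within 0.01 Hz after 30 layers), so the output carries no information about the input; the identity map passes each rate through unchanged. As the text notes, real NGS networks only approximate such a line of fixed points.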

Implications of single unit computational properties for information transmission

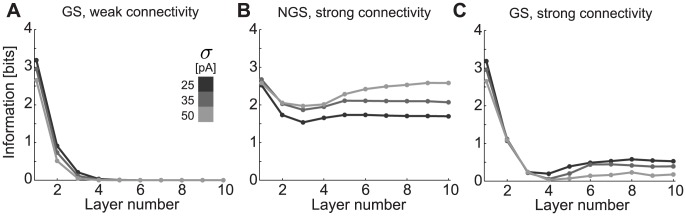

Given the predicted signal propagation dynamics, we now directly compute the mutual information between the mean DC input injected into layer 1 and the population firing rate at a given layer, for each magnitude of the independent noise (Figure 7). This measures how distinguishable the network firing rate outputs at each layer are for different initial mean inputs. In the GS networks, the convergence of population firing rates across layers to a single value causes the information to drop towards zero with increasing layer number, for both the weakly (Figure 6A,B) and the strongly connected GS networks (Figure 6E,F) and for a wide range of network noise amplitudes (Figure 7A,C). NGS networks can transmit a range of mean DC inputs without distortion (Figure 6C,D); thus, the information between input DC and population firing rate remains relatively constant across subsequent layers (Figure 7B). The information increases slightly in deeper layers due to the emergence of synchronization, which locks the network output into a specific distribution of population firing rates. As the noise amplitude increases, the selected f–I curve becomes tangent to the linear input-output relationship over a larger range of input firing rates (Figure 6C,D); hence, a larger range of inputs is stably transmitted across network layers. Counterintuitively, this suggests that increasing noise in the NGS networks can increase the information such networks carry about a distribution of mean inputs.
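Mutual information between a discrete set of mean inputs and the resulting population rates can be estimated from samples with a plug-in (histogram) estimator. The sketch below is a minimal version under stated assumptions: inputs are treated as discrete labels, output rates are binned with a user-chosen `bin_width`, and no bias correction is applied. The function name is hypothetical, and the estimator actually used for Figure 7 is the one described in the Methods, which may differ.

```python
import math
from collections import Counter
from typing import Hashable, Iterable, Tuple


def mutual_information_bits(pairs: Iterable[Tuple[Hashable, float]], bin_width: float) -> float:
    """Plug-in estimate of I(X;Y) in bits from (input_label, output_rate) samples.

    Output rates are discretized into bins of width `bin_width`; the joint and
    marginal distributions are empirical frequencies (no bias correction).
    """
    joint = Counter((x, math.floor(y / bin_width)) for x, y in pairs)
    n = sum(joint.values())
    px: Counter = Counter()
    py: Counter = Counter()
    for (x, y_bin), count in joint.items():
        px[x] += count
        py[y_bin] += count
    mi = 0.0
    for (x, y_bin), count in joint.items():
        p_xy = count / n
        # Each term is p(x,y) * log2[ p(x,y) / (p(x) p(y)) ].
        mi += p_xy * math.log2(p_xy / ((px[x] / n) * (py[y_bin] / n)))
    return mi


if __name__ == "__main__":
    inputs = [0.0, 10.0, 20.0, 30.0]  # hypothetical mean DC labels, equiprobable
    separated = [(dc, 10.0 * i + 0.5) for i, dc in enumerate(inputs) for _ in range(50)]
    collapsed = [(dc, 6.0) for dc in inputs for _ in range(50)]
    print(mutual_information_bits(separated, bin_width=5.0))
    print(mutual_information_bits(collapsed, bin_width=5.0))
```

With four equiprobable inputs, outputs that fall in distinct bins give log2 4 = 2 bits, the maximum; outputs that all collapse into one bin, as in the deep layers of the GS networks, give 0 bits. Plug-in estimates are biased upward for small sample sizes, so in practice many trials or a bias correction would be needed.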

Figure 7. Mutual information about the mean stimulus transmitted by GS and NGS networks.

The mutual information as a function of layer number for A. weakly connected GS (… pS/µm² and … pS/µm²), B. strongly connected NGS (… pS/µm² and … pS/µm²) and C. strongly connected GS networks (… pS/µm² and … pS/µm²), as shown in Figure 6, for different noise levels indicated by the shade of gray.

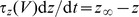

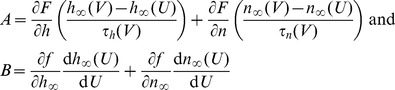

Origins of firing rate modulability by noise magnitude

The differential ability of GS and NGS networks to reliably propagate mean input signals is predicted by how strongly the f–I curves are modulated by the network noise amplitude. To understand the dynamical origins of this difference, we analytically reduced the neuron model (Eq. 2) to a system of two first-order differential equations describing the dynamics of the membrane potential and of an auxiliary, more slowly varying potential variable (Methods) [35]. We analyzed the dynamics in the phase plane spanned by these two variables. The nullclines, curves along which the rate of change of one of the two variables is zero, organize the flow in the phase plane (Figure 8); their intersections are the fixed points of the neuron's dynamics. We studied the fixed points at different ratios of the sodium and potassium conductances, with particular focus on the NGS and GS values discussed above. These exhibit substantial differences in the type and stability of the fixed points, as well as in the bifurcations at which the fixed points change stability as the mean DC input current into the neuron is varied (Figure 8).
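The node/focus and stable/unstable labels in Figure 8 follow from the eigenvalues of the Jacobian at each fixed point, which are determined by its trace and determinant. The sketch below illustrates that classification on the FitzHugh–Nagumo model, a generic two-variable excitable system substituted here purely for illustration: it is not the reduced Mainen model of the Methods, and the parameters `A`, `B`, `EPS` are standard textbook values in dimensionless units, not values from this study.

```python
from typing import Tuple

# Illustrative FitzHugh-Nagumo parameters (assumption, not from the study):
#   dv/dt = v - v**3/3 - w + I        (fast, voltage-like variable)
#   dw/dt = EPS * (v + A - B * w)     (slow recovery variable)
A, B, EPS = 0.7, 0.8, 0.08


def fixed_point(current: float) -> Tuple[float, float]:
    """Intersection of the two nullclines for a constant input current.

    Substituting the w-nullcline w = (v + A) / B into the v-nullcline gives
    g(v) = v - v**3/3 - (v + A)/B + current = 0. For B < 1, g is strictly
    decreasing, so it has a single root, found here by bisection.
    """
    def g(v: float) -> float:
        return v - v**3 / 3 - (v + A) / B + current

    lo, hi = -3.0, 3.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:  # g decreasing: the root lies to the right of mid
            lo = mid
        else:
            hi = mid
    v = 0.5 * (lo + hi)
    return v, (v + A) / B


def classify(current: float) -> str:
    """Classify the fixed point from the trace and determinant of the Jacobian."""
    v, _ = fixed_point(current)
    j11, j12 = 1.0 - v**2, -1.0   # partial derivatives of dv/dt w.r.t. v, w
    j21, j22 = EPS, -EPS * B      # partial derivatives of dw/dt w.r.t. v, w
    trace = j11 + j22
    det = j11 * j22 - j12 * j21
    if det < 0:
        return "saddle"
    stability = "stable" if trace < 0 else "unstable"  # trace == 0 treated as unstable
    kind = "focus" if trace**2 - 4 * det < 0 else "node"  # complex vs real eigenvalues
    return f"{stability} {kind}"


if __name__ == "__main__":
    for current in (0.0, 0.5):
        print(current, fixed_point(current), classify(current))
```

With these parameters the fixed point is a stable focus at zero input and an unstable focus at an input of 0.5, mirroring the loss of stability with increasing DC described for the NGS neuron; sweeping `current` finely would locate the bifurcation point.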

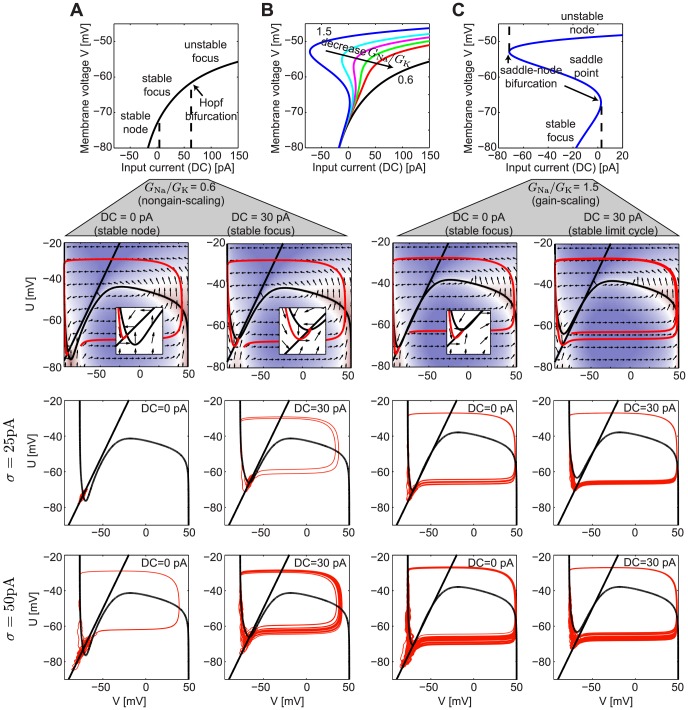

Figure 8. Analysis of the reduced Mainen model.

A. Top: Fixed points and their stability for the dynamics of an NGS neuron (… pS/µm² and … pS/µm²; conductance ratio …) as a function of the input current DC. Bottom: Phase planes showing the nullclines (black) and their intersection points (fixed points), together with the flow lines indicated by the arrows. A single trajectory is shown in red. The inset shows a magnified portion of the phase plane near the fixed point. Below, we show trajectories for two values of the noise amplitude and two DC values. B. The fixed points as a function of the DC for different conductance ratios, keeping one conductance fixed at … pS/µm² and varying the other. C. Same as A but for a GS neuron (… pS/µm² and … pS/µm²; conductance ratio …). Note that the abscissa is scaled differently from A and B.

For a large range of DC inputs, the NGS neuron has a single stable fixed point (either a node or a focus) (Figure 8A). In this case, the only perturbation that can trigger the system to fire an action potential is a large-amplitude fluctuation of the noise current. The noise amplitude then determines the number of action potentials fired in a given trial and thus strongly modulates the firing rate of the neuron. We show trajectories for noise amplitudes of … pA and 50 pA at two DC values, 0 and 30 pA (Figure 8A), at which the f–I curves are strongly noise-modulated (Figure 1C). As the DC increases beyond 62 pA, the fixed point becomes unstable and a stable limit cycle emerges (not shown). In this regime, noise of any amplitude moves the trajectory onto the stable limit cycle, and the neuron continuously generates action potentials at a firing rate independent of the noise amplitude. Indeed, Figure 1C shows that the f–I curves are less effectively modulated by the noise amplitude for DC values greater than 62 pA.
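The contrast between noise-driven firing around a stable fixed point and autonomous firing on a limit cycle can be checked in simulation. The sketch below again uses the FitzHugh–Nagumo model as a stand-in for the reduced model (an assumption, with illustrative parameters and dimensionless units), integrates it with the Euler–Maruyama method, and counts spikes with a simple hysteresis detector. `count_spikes`, its thresholds and its defaults are hypothetical choices, not part of the study.

```python
import math
import random

# Illustrative FitzHugh-Nagumo parameters (assumption, not from the study).
A, B, EPS = 0.7, 0.8, 0.08


def count_spikes(current: float, noise_sd: float, t_max: float = 500.0,
                 dt: float = 0.01, seed: int = 0) -> int:
    """Euler-Maruyama simulation of a noisy FitzHugh-Nagumo neuron.

    Additive white noise with intensity `noise_sd` drives the fast variable v.
    A spike is counted when v rises above 1.0; the detector re-arms only after
    v falls below 0.0, so jitter around the threshold is not double-counted.
    """
    rng = random.Random(seed)
    v, w = -1.2, -0.62  # start near the resting state for current = 0
    armed, spikes = True, 0
    sqrt_dt = math.sqrt(dt)
    for _ in range(int(t_max / dt)):
        dv = v - v**3 / 3 - w + current   # deterministic drift of v
        dw = EPS * (v + A - B * w)        # slow recovery variable
        v += dv * dt + noise_sd * sqrt_dt * rng.gauss(0.0, 1.0)
        w += dw * dt
        if armed and v > 1.0:
            spikes += 1
            armed = False
        elif not armed and v < 0.0:
            armed = True
    return spikes


if __name__ == "__main__":
    for current in (0.0, 0.5):
        for noise_sd in (0.0, 0.1, 0.5):
            print(current, noise_sd, count_spikes(current, noise_sd))
```

At zero input and zero noise the model stays at its stable fixed point and fires no spikes, while sufficiently strong noise makes it fire; at an input of 0.5 the fixed point is unstable and the model fires periodically even without noise. Comparing counts across noise amplitudes at the two inputs gives a rough, qualitative analogue of the f–I modulation in Figure 1C.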

As the conductance ratio increases, the range of DC values for which the system has a single fixed point decreases (Figure 8B). Indeed, the GS neuron has a stable limit cycle for most DC values (Figure 8C). This implies that GS neurons are reliably driven to fire action potentials at any noise amplitude and that their firing rate is not very sensitive to the noise amplitude. For low DC values, the stable limit cycle coexists with a stable fixed point, so in this regime the noise amplitude can modulate the firing rate more effectively, as seen in Figure 1D.

This analysis highlights the origins of the differential modulability of firing rate in NGS and GS neurons. Although the model reduction sacrifices some of the accuracy of the original model, it retains the essential features of action potential generation: the rapid rise of the action potential, driven by a regenerative inward sodium current, and its termination by slower processes that shut off the sodium current and activate an outward potassium current that repolarizes and hyperpolarizes the cell. Although simpler neuron models (e.g., binary and integrate-and-fire models [36]–[38]) allow simple changes in firing thresholds, the dynamical features inherent in the conductance-based neurons studied here are needed to capture noise-dependent modulation.

Discussion