Abstract

We present the design and development of an Audio-Based Environments Simulator (AbES), together with an initial study of the changes and adaptations related to navigation that take place in the brain, carried out by incorporating AbES within a neuroimaging environment. This virtual environment enables a blind user to navigate through a virtual representation of a real space in order to train his/her orientation and mobility skills. Our initial results suggest that this kind of virtual environment could be highly efficient as a testing, training and rehabilitation platform for learning and navigation.

Keywords: Orientation and Mobility, Virtual Environment, Visual Impairment

ACM Classification Keywords: K.4.2 [Computing Milieux], Computers and Society – Social Issues, Assistive technologies for persons with disabilities

General Terms: Design, Experimentation

Introduction

Blind individuals prefer to move along the perimeter of a room rather than cross through its center. It is easier for them to follow a path by touching the wall, which lets them locate access ways more easily and assures them of a route that will lead to their destination [4]. This way of exploring the environment can cause problems, in that it leads them to find inefficient solutions to their mobility problems [3]. Knowing the size of a room is not easy, and such information would help them get their bearings in the environment. In general, blind individuals can use the level of echo produced in a room (by talking, clapping or tapping their cane) to determine its size. When a blind individual has more time to walk around, get to know and move about in a closed environment, he/she is willing to listen to descriptions and is able to identify details that allow for more accurate navigation [4].

Having a mental map of the space in which we travel is essential for the efficient development of orientation and mobility techniques. As is well known, the majority of the information needed for the mental representation of space is obtained through the visual channel [11]. Blind users cannot access this information as quickly as sighted users can, and they are obliged to compensate by using other sensory channels for exploration (audio and haptic, as well as other modes) [5]. In line with this view, mounting scientific evidence now suggests that these adaptive skills develop in parallel with changes that occur within the brain itself [9]. It is now established that such changes involve not only areas of the brain dedicated to processing information from the remaining senses, such as touch and hearing, but also regions normally associated with the analysis of visual information [12]. In other words, understanding how the brain changes in response to blindness ultimately tells us something about how individuals compensate for the loss of sight. This “neuroplasticity” or “rewiring” of the brain may thus explain the compensatory and, in some cases, enhanced behavioral abilities reported in individuals who are blind, such as finer tactile discrimination acuity [1], sound localization [11] and long-term memory recall [2]. Evidence of the functional and compensatory recruitment of visual areas to process other sensory modalities in the absence of sight has come largely from neuroimaging studies [12]. Modern brain imaging, such as functional magnetic resonance imaging (fMRI), can identify areas of the brain that are associated with a particular behavioral task. Navigational skills have been extensively studied in sighted individuals [8], and key brain structures that underlie such skills have been identified. However, very little is known about how these same areas of the brain relate to navigational performance in individuals who are blind, or about how they relate to the neuroplastic changes that result from vision loss.

The purpose of this research was to analyze concrete possibilities for using an audio-based virtual environment simulator (AbES) in order to study changes in brain activity during navigation through gaming combined with advanced techniques of neuroimaging and neuroscience.

Audio-Based Environments Simulator

The simulator was developed to represent a real, familiar or unfamiliar environment to be navigated by a blind person. In the virtual environment, there are different elements and objects (walls, stairwells, doors, toilets or elevators) through which the user can discover and come to know his/her location.

It is possible to interact with doors, which can be opened and closed. The user can identify the remaining objects and learn their location in the environment. The idea is for the user to be able to move about independently and to mentally map the entire environment.

The simulator is capable of representing any real environment by using a system of cells through which the user moves [11]. The user receives audio feedback in the left, center and right channels, and all of his/her actions are carried out on a traditional keyboard, where each key in a defined set has an associated action (Table 1). Every action in the virtual environment has a particular sound associated with it. In addition to this audio feedback, there are also spoken audio cues that provide information regarding the various objects and the user’s orientation in the environment. Orientation is provided by identifying the room in which the user is located and the direction in which he/she is facing, according to the cardinal compass points (east, west, north and south).

Table 1.

Commands and keystrokes for navigating the environment

| To | Press | Description |
|---|---|---|
| Turn Left | H | To hear the verbalized audio of the cardinal direction you are facing after having turned left. |
| Turn Right | K | To hear the verbalized audio of the cardinal direction you are facing after having turned right. |
| Walk | Space | If it is possible to advance, the sound of a footstep will be heard. If advancing is not possible, a different sound will be heard that signifies having bumped into something. |
| Contextual Action | J | This command is used to ask what is in front of you, as well as to open doors. |
| Ask Room | F | This command allows the blind user to know in what room/floor he/she is located, and provides information about his/her current orientation and about the task that must be completed (if any). |
| Zoom In | Q | The zoom function is provided within the interface for users with residual vision. These users can increase or decrease the size of the graphics presented on the map, and can thus better appreciate the different elements of the videogame. In addition, the icon controlled by the player always remains at the center of the map, and when he/she goes in a certain direction, the map moves within the visualized space (Figure 1). |
| Zoom Out | A | Counterpart of Zoom In: decreases the size of the graphics presented on the map (see the Zoom In description). |
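
As an illustration of how the cell-based movement and the Turn Left, Turn Right and Walk commands in Table 1 fit together, the following is a minimal sketch in Python. It is not the AbES implementation: the `Walker` class, the `KEYMAP` dictionary, the `handle_key` function and the character-grid map format are hypothetical names and assumptions introduced here for illustration only. The returned strings stand in for the sounds or spoken cues the simulator would play.

```python
from dataclasses import dataclass

# Headings in clockwise order, so a right turn is +1 and a left turn is -1.
HEADINGS = ["north", "east", "south", "west"]
# Row/column step for each heading (assumption: row 0 is the northern edge).
STEPS = {"north": (-1, 0), "east": (0, 1), "south": (1, 0), "west": (0, -1)}


@dataclass
class Walker:
    """Hypothetical user state: a cell position plus a cardinal heading."""
    grid: list          # list of strings; '#' is a wall cell, '.' a free cell
    row: int
    col: int
    heading: str = "north"

    def turn_left(self) -> str:
        self.heading = HEADINGS[(HEADINGS.index(self.heading) - 1) % 4]
        return self.heading  # stands in for the spoken cardinal direction

    def turn_right(self) -> str:
        self.heading = HEADINGS[(HEADINGS.index(self.heading) + 1) % 4]
        return self.heading

    def walk(self) -> str:
        d_row, d_col = STEPS[self.heading]
        row, col = self.row + d_row, self.col + d_col
        inside = 0 <= row < len(self.grid) and 0 <= col < len(self.grid[row])
        if inside and self.grid[row][col] != "#":
            self.row, self.col = row, col
            return "footstep"  # advancing was possible
        return "bump"          # blocked by a wall or the map edge


# Keys taken from Table 1; only the three movement commands are sketched.
KEYMAP = {"h": "turn_left", "k": "turn_right", " ": "walk"}


def handle_key(walker: Walker, key: str):
    """Dispatch a keystroke to the matching action; unknown keys return None."""
    action = KEYMAP.get(key.lower())
    if action is None:
        return None
    return getattr(walker, action)()
```

For example, a `Walker` placed in a one-cell-wide corridor and facing north would return `"footstep"` for each Space press until it reaches the wall, after which further presses return `"bump"`.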

The stereo sound is used to achieve the user’s immersion by providing information on the location of objects, walls and doors in the virtual environment. Thus the user is able to create a mental model of the spatial dimensions of the environment. While navigating, the user can interact with each of the previously mentioned elements, and each of these elements provides different feedback that helps the user become oriented in the environment. AbES includes three modes of interaction: Free Navigation, Path Navigation and Game Mode.
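
One way to picture the left, center and right audio channels is as a mapping from the side of the user's cell on which an object lies to a stereo channel, relative to the direction the user is facing. The sketch below is an assumption made for illustration and is not taken from the AbES source: the function name `channel_for` is hypothetical, and the text does not say what happens for objects behind the user, so that case simply returns `None` here.

```python
# Headings in clockwise order (north, east, south, west).
HEADINGS = ["north", "east", "south", "west"]


def channel_for(heading: str, object_side: str):
    """Return the stereo channel for an object on `object_side` of the user.

    `heading` is the direction the user faces; `object_side` is the cardinal
    side of the user's cell on which the object lies. Both are assumptions
    about how positions might be encoded.
    """
    quarter_turns = (HEADINGS.index(object_side) - HEADINGS.index(heading)) % 4
    # 0 = straight ahead, 1 = to the right, 3 = to the left.
    # 2 (behind the user) is not described in the text, so it maps to None.
    return {0: "center", 1: "right", 3: "left"}.get(quarter_turns)
```

With this mapping, a door on the east side of the cell is heard in the right channel when the user faces north, and in the left channel when the user faces south.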

The free navigation mode provides the blind user with the possibility of exploring the building freely in order to become familiar with it. The facilitator can choose whether the user begins in the starting room, or let the AbES software randomly choose the starting point. In this mode, the facilitator can also choose to have no objects included in the game’s virtual environment (empty map). For a beginning user, we found it useful to include the option that all the doors in the building are open, making the navigation simpler. In the same way, for beginners it is necessary to hear all of the instructions that the simulator provides. For this reason the “Allow Text-To-Speech to end before any action” option is necessary.

Path navigation provides the blind user with the task of finding a particular room. The facilitator must choose the departure and arrival rooms and select as many routes as he/she deems necessary. When all the routes have been selected, the user begins his/her interaction with the simulator and has to navigate all of the chosen paths, thus training in, surveying and mapping the building.

The game mode provides blind users with the task of searching for “jewels” placed in the building. The purpose of the game is to explore the rooms and find all the jewels, bringing them outside one at a time and then going back into the building to continue exploring. Enemies are randomly placed in the building, and try to steal the user’s jewels and hide them elsewhere. There is a spoken audio warning when the user is facing a jewel or an enemy that is two cells away. The enemies always remain inside the building. In this game mode, the facilitator can choose the number of jewels to find (2, 4 or 6) and the number of enemies (2, 4 or 6).
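
The warning rule and the facilitator's settings described above can be sketched as follows. This is a hedged illustration, not the game's actual logic: the names `proximity_warning`, `check_game_settings` and `ALLOWED_COUNTS` are hypothetical, positions are assumed to be `(row, column)` cell coordinates, and "two cells away" is interpreted as exactly two cells straight ahead in the facing direction.

```python
# Row/column step for each heading (assumption: row 0 is the northern edge).
STEPS = {"north": (-1, 0), "east": (0, 1), "south": (1, 0), "west": (0, -1)}

# Counts the facilitator may choose for jewels and for enemies.
ALLOWED_COUNTS = (2, 4, 6)


def check_game_settings(jewels: int, enemies: int) -> None:
    """Raise ValueError if a count is not one of the selectable values."""
    for name, value in (("jewels", jewels), ("enemies", enemies)):
        if value not in ALLOWED_COUNTS:
            raise ValueError(f"{name} must be one of {ALLOWED_COUNTS}, got {value}")


def proximity_warning(position, heading, jewels, enemies, distance=2):
    """Return 'jewel', 'enemy' or None for the cell `distance` steps ahead.

    `jewels` and `enemies` are sets of (row, column) cells. Walls between the
    user and the target cell are ignored in this simplified sketch.
    """
    d_row, d_col = STEPS[heading]
    target = (position[0] + d_row * distance, position[1] + d_col * distance)
    if target in jewels:
        return "jewel"
    if target in enemies:
        return "enemy"
    return None
```

A fuller version would probably also check that no wall lies between the user and the target cell; the text does not specify this, so it is left out.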

In the first version of AbES that was used in this study, we represented the environment corresponding to the first and the second floors of the St. Paul’s building at the Carroll Center for the Blind in Newton, MA, USA. This entire environment could be navigated freely (see Figure 2). The design and development of AbES was carried out by considering the ways in which blind users interact, and how audio can help them to improve certain spatial navigation skills and facilitate their cognitive development. Usability was therefore considered an essential component in facilitating this development [11].

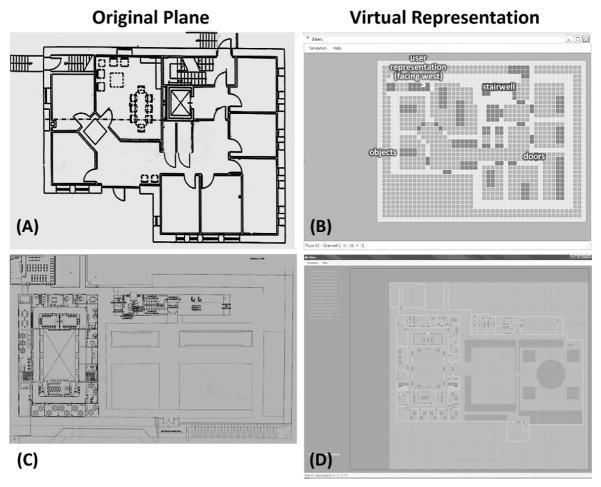

Figure 2.

Real and virtual environments in AbES. (A) The first floor plan of the St. Paul building. (B) Virtual representation of the same floor in AbES showing various objects the user interacts with. (C) Floor plan of the Santa Lucia building. (D) Virtual representation of the first floor of the Santa Lucia building.

AbES and Brain Plasticity

Parallel to the development and validation of AbES, we are also investigating the brain mechanisms associated with navigational skills by carrying out virtual navigation tasks within a neuroimaging scanner environment. Modern day imaging techniques, such as functional magnetic resonance imaging (fMRI), allow us to follow brain activity related to behavioral performance. Functional MRI takes advantage of the fact that when a region of the brain is highly active, there is an oversupply of oxygenated blood to that region. By measuring the relative amounts of oxygenated and deoxygenated blood, it is possible to infer which regions of the brain are more active. This signal is then analyzed to generate images of the brain that reflect regions of the brain implicated with the behavioral task being carried out [6]. This technology has been instrumental in uncovering how the adaptive skills of the blind develop in parallel with changes occurring within the brain itself. In the case of blindness, we know that these adaptive skills implicate not only areas of the brain dedicated to processing information from the remaining senses such as touch and hearing, in addition to memory, but also regions of the brain normally associated with analyzing visual information [12].
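
To make the idea of inferring "more active" regions slightly more concrete, one commonly reported quantity in fMRI analysis is the percent change of the measured signal during a task relative to a resting baseline. The sketch below illustrates that arithmetic only. It is not the analysis pipeline used in this study: the function name `percent_signal_change` and the sample values are hypothetical, and real analyses involve many further steps (such as motion correction and statistical modeling) that are omitted here.

```python
from statistics import mean


def percent_signal_change(rest, task):
    """Percent change of the mean task signal relative to the mean rest signal.

    `rest` and `task` are sequences of signal values sampled from one brain
    region during rest and during the behavioral task, respectively.
    """
    baseline = mean(rest)
    if baseline == 0:
        raise ValueError("baseline signal must be non-zero")
    return 100.0 * (mean(task) - baseline) / baseline


# Made-up values for illustration: a region whose signal rises during the task.
rest_samples = [100.0, 100.0, 100.0]
task_samples = [101.0, 102.0, 103.0]
change = percent_signal_change(rest_samples, task_samples)
```

A positive value indicates a higher signal during the task than at rest for that region; whether such a difference is meaningful is a statistical question that this sketch does not address.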

Regarding navigational skills, brain networks have been extensively studied in sighted individuals [8]. In particular, brain structures that are key for navigation have been identified (such as the hippocampus and parietal cortical areas), as well as essential markers that identify good navigators [7]. What is not known, however, is how the corresponding areas of the brain relate to navigational performance in the blind and, in particular, how they relate to the neuroplastic changes that result from vision loss. To help shed light on this issue, we have adapted the AbES game so that it can be played within an fMRI scanner (Figure 3A). As a proof of concept, we have shown that interacting with AbES within the scanner environment (testing with a sighted individual) leads to selective task activation of specific brain areas related to navigational skills. Specifically, when the subject listens to the audio instructions describing his or her target destination, we observe brain activity within the auditory regions of the brain. When that same person is asked to walk randomly through the virtual environment (i.e., without any goal destination), we find associated brain activity within sensory-motor areas related to the hand’s action of pressing the keys. However, when this same person is asked to navigate from a predetermined location to a particular target, we see a dramatic increase in brain activity that involves not only the auditory and sensory-motor regions of the brain, but also regions of the visual cortex (to visualize the route), frontal cortex (implicated in decision making), parietal cortex (important for spatial tasks) and hippocampus (implicated in spatial navigation and memory) (Figure 3B).
As a next step, work is currently under way to compare the brain activation patterns associated with virtual navigation in sighted people (through sight and through hearing alone) with that which occurs in individuals with profound blindness (early and late onset). Of particular interest will be the role of the visual areas as they relate to plasticity and overall navigational performance (Figure 4). For example, is greater visual cortex activation correlated with strong navigating performance regardless of visual status and/or prior visual experience? Furthermore, how do activation patterns and brain networks change over time as subjects continue to learn and improve their overall navigational skills? Are there specific areas or patterns of brain activity that can help identify “good navigators” from those patterns that typify poor navigation? These as well as many other intriguing questions await further investigation.

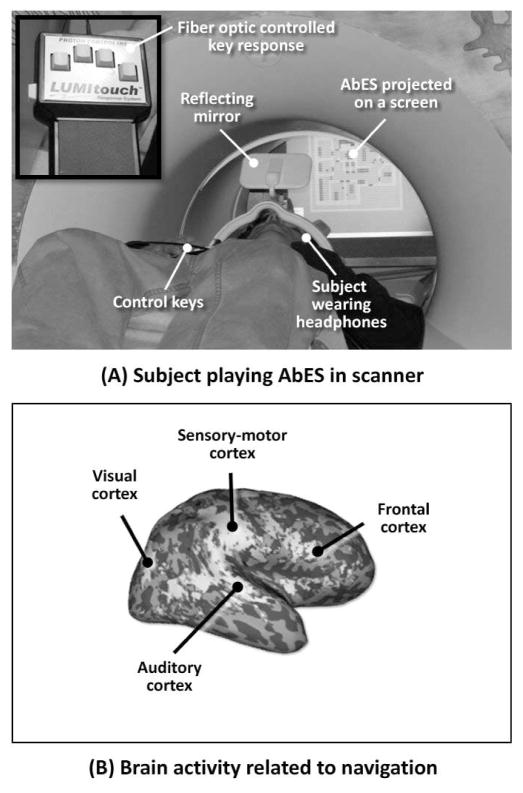

Figure 3.

Brain activity associated with navigation. (A) Sighted subject lying in the scanner and interacting with the AbES navigation software. (B) Activation of cortical areas while actively navigating with AbES. Areas implicated with active navigation include sensory-motor areas and auditory cortex as well as frontal, visual and hippocampal (not shown) areas.

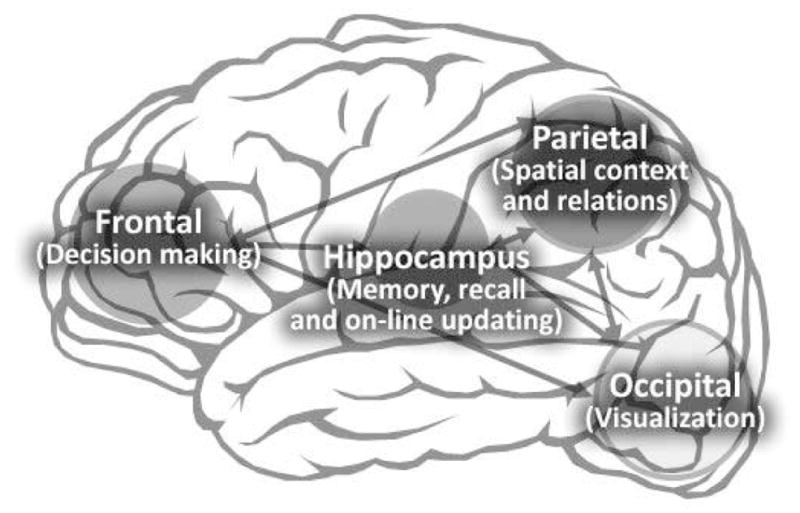

Figure 4.

Known neural network for navigation (sighted). Navigation is known to implicate a network of brain areas: frontal, occipital, hippocampal and parietal.

Conclusions & Future Work

The purpose of this research was to analyze concrete possibilities for using an audio-based virtual environment simulator (AbES) in order to study changes in brain activity during navigation through gaming combined with advanced techniques of neuroimaging and neuroscience.

One of the modes of interaction in AbES is audio-based gaming. We intended for users to be able to play and enjoy the game, and in doing so learn to navigate their surrounding environment, get to know and understand its spaces, its dimensions and the corresponding objects. At the beginning of their training, users had to navigate through preset routes, defined by the facilitator. As they become more skilled, the idea is that they will be able to play AbES by navigating freely at their own pace in order to learn the surroundings in a playful manner, and thus lay the foundations for transferring virtual learning to real world navigation.

We continue to investigate the feasibility, effectiveness, and potential benefits of learning to navigate unfamiliar environments using virtual auditory-based gaming systems. At the same time, we are developing methods for quantifying behavioral gains as well as uncovering brain mechanisms associated with navigational skills. A key direction for future research will be to understand what aspects of acquired spatial information are actually transferred from virtual to real environments, and the conditions that promote this transfer [10]. Furthermore, understanding how the brain creates spatial cognitive maps as a function of learning modality and over time, as well as a function of an individual’s own experience and motivation, will have potentially important repercussions for how rehabilitation is carried out and, ultimately, for an individual’s overall rehabilitative success. Looking ahead, future work in this area needs to continue to use a multidisciplinary approach, drawing on expertise from teachers of blind students, clinicians, and technology developers, as well as neuroscientists, behavioral psychologists, and sociologists. The next steps in this research are to test and analyze the impact of this kind of technology, using a more complete experimental design with a larger sample. This new study will include the use of specific checklists for orientation and mobility. To these ends, we are considering different ways of interacting, such as haptic, 3D audio or a combination of both, in order to represent the virtual environments. In this way we will be able to better understand the user’s brain plasticity when the learner interacts with gaming interfaces designed for the purpose of navigation. This also includes software redesigns, such as the integration of the 3D audio system with fMRI.
We believe that further promoting an effective exchange of ideas will ultimately enhance the quality of life of individuals living with visual impairment, as well as our understanding of the remarkable adaptive potential of the brain.

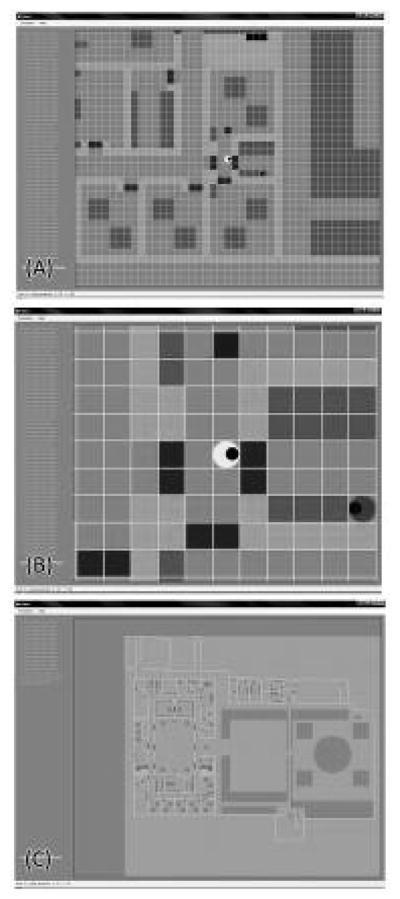

Figure 1.

Zoom function. (A) Different parts of the Santa Lucia School for the Blind building, on an equal scale. (B) View of the virtual environment when a user zooms in several times. (C) View of the virtual environment when a user zooms out several times.

Acknowledgments

This report was funded by the Chilean National Fund of Science and Technology, Fondecyt #1090352 and Project CIE-05 Program Center Education PBCT-Conicyt.

Contributor Information

Jaime Sánchez, Email: jsanchez@dcc.uchile.cl, Department of Computer Science, Center for Advanced Research in Education (CARE), University of Chile, Blanco Encalada 2120, Santiago, Chile.

Mauricio Sáenz, Email: msaenz@dcc.uchile.cl, Department of Computer Science, Center for Advanced Research in Education (CARE), University of Chile, Blanco Encalada 2120, Santiago, Chile.

Alvaro Pascual-Leone, Berenson-Allen Center for Noninvasive Brain Stimulation, Department of Neurology, Beth Israel Deaconess Medical Center, Harvard Medical School.

Lotfi Merabet, Berenson-Allen Center for Noninvasive Brain Stimulation, Department of Neurology, Beth Israel Deaconess Medical Center, Harvard Medical School.

References

- 1. Alary F, Goldstein R, Duquette M, Chapman C, Voss P, Lepore F. Tactile acuity in the blind: a psychophysical study using a two-dimensional angle discrimination task. Experimental Brain Research. 2008;187(4):587–594. doi: 10.1007/s00221-008-1327-7.

- 2. Amedi A, Raz N, Pianka P, Malach R, Zohary E. Early ‘visual’ cortex activation correlates with superior verbal memory performance in the blind. Nature Neuroscience. 2003;6(7):758–766. doi: 10.1038/nn1072.

- 3. Kulyukin V, Gharpure C, Nicholson J, Pavithran S. RFID in robot-assisted indoor navigation for the visually impaired. IEEE/RSJ Intelligent Robots and Systems (IROS 2004) Conference; Sendai: Kyodo Printing; 2004. pp. 1979–1984.

- 4. Lahav O, Mioduser D. Blind Persons’ Acquisition of Spatial Cognitive Mapping and Orientation Skills Supported by Virtual Environment. Proc. of the 5th ICDVRAT; 2004. pp. 131–138.

- 5. Lahav O, Mioduser D. Haptic-feedback support for cognitive mapping of unknown spaces by people who are blind. International Journal of Human-Computer Studies. 2008;66(1):23–35.

- 6. Logothetis NK. What we can do and what we cannot do with fMRI. Nature. 2008;453(7197):869–878. doi: 10.1038/nature06976.

- 7. Maguire EA, Burgess N, O’Keefe J. Human spatial navigation: cognitive maps, sexual dimorphism, and neural substrates. Current Opinion in Neurobiology. 1999;9(2):171–177. doi: 10.1016/s0959-4388(99)80023-3.

- 8. Maguire EA, Burgess N, Donnett JG, Frackowiak RS, Frith CD, O’Keefe J. Knowing where and getting there: a human navigation network. Science. 1998;280(5365):921–924. doi: 10.1126/science.280.5365.921.

- 9. Pascual-Leone A, Amedi A, Fregni F, Merabet LB. The plastic human brain cortex. Annu Rev Neurosci. 2005;28:377–401. doi: 10.1146/annurev.neuro.27.070203.144216.

- 10. Peruch P, Belingard L, Thinus-Blanc C. Transfer of spatial knowledge from virtual to real environments. Spatial Cognition. 2000;2:253–264.

- 11. Sánchez J, Noriega G, Farías C. Mental Representations of Open and Restricted Virtual Environments by People Who Are Blind. Proc. of the Laval Virtual Conference; 2009. pp. 217–226.

- 12. Théoret H, Merabet L, Pascual-Leone A. Behavioral and neuroplastic changes in the blind: evidence for functionally relevant cross-modal interactions. J Physiol Paris. 2004;98(1–3):221–233. doi: 10.1016/j.jphysparis.2004.03.009.