Abstract

Although it is intuitive that familiarity with complex visual objects should aid their preservation in visual working memory (WM), empirical evidence for this is lacking. This study used a conventional change-detection procedure to assess visual WM for unfamiliar and famous faces in healthy adults. Across experiments, faces were upright or inverted and a low- or high-load concurrent verbal WM task was administered to suppress contribution from verbal WM. Even with a high verbal memory load, visual WM performance was significantly better and capacity estimated as significantly greater for famous versus unfamiliar faces. Face inversion abolished this effect. Thus, neither strategic, explicit support from verbal WM nor low-level feature processing easily accounts for the observed benefit of high familiarity for visual WM. These results demonstrate that storage of items in visual WM can be enhanced if robust visual representations of them already exist in long-term memory.

Keywords: working memory, faces, familiarity, long-term memory, face identification

James (1890) and, later, Hebb (1949) noted that human memory appears to be composed of two distinct processes: a primary, or immediate, system and a secondary, or long-term system. The concept of different storage mechanisms for recently acquired and currently relevant information versus more historically acquired information became entrenched in experimental psychology with Atkinson and Shiffrin’s (1968) memory model. They proposed that new information first spends a few seconds in a limited capacity short-term memory store before being transferred to a larger store capable of supporting long-term memory (LTM). The concept of short-term memory was later superseded by the more complex concept of working memory (WM; Baddeley & Hitch, 1974).

Current views of WM generally posit a multicomponent system that stores and manipulates verbal, spatial, visual, and, perhaps, other information in separate caches in a time-limited fashion. WM is viewed as being able to combine previously learned information (that is, information in LTM) with current or recently available sensory information to enable learning, comprehension, and reasoning (Baddeley, 2000). It is widely assumed that WM is limited in its capacity to deal with (that is, encode, store, manipulate, rehearse) information (e.g., Cowan, 2001), that WM stores information in organized chunks to maximize the amount of information it can hold (Miller, 1956), and that WM uses LTM (or prior learning) to facilitate chunking (e.g., Gobet & Simon, 1996). A central premise is that WM capacity is limited to a small and constant number of chunks (approximately four; see Cowan for a review), but that the amount of information stored within each chunk can vary depending on the opportunity and ability to engage relevant associated information already established in LTM, such as categorical or semantic cues (Olsson & Poom, 2005). Although this view posits a general role for LTM in organizing or linking information to be stored in WM, it does not illuminate how familiarity with specific individual items might affect their storage in WM.

Our goal in the present series of experiments was to examine whether high levels of familiarity with specific to-be-remembered items can enhance the apparent capacity of visual WM. This question has important implications for understanding how visual learning and familiarity might lead to cognitive and perceptual fluency in tasks involved in a wide range of psychological skills, such as social cognition, object and face recognition, and reading. Although a handful of studies of verbal WM and combined visuospatial WM have found an influence of LTM, studies of familiarity effects on visual WM have produced null findings. Here, using unfamiliar and famous faces as stimuli, we present clear evidence that the storage of items in visual WM can be enhanced if representations of these items are already well established in LTM.

Whereas traditional WM capacity estimates of around 5–9 items were largely based on verbal tasks (Miller, 1956), more recent studies of visual WM report an upper capacity limit of around 3–4 items (Luck & Vogel, 1997; Todd & Marois, 2004; Vogel & Machizawa, 2004; Wheeler & Treisman, 2002). Olsson and Poom (2005) recently suggested that pure visual WM capacity might be even more severely limited to a single item when to-be-remembered items are novel with no preexisting verbal labels or semantic categories. Our question concerns whether visual WM for natural, meaningful stimuli can be enhanced when to-be-remembered items have well-established LTM traces. Some support for this possibility can be found in studies of verbal and visuospatial WM that have reported enhanced capacity when expertise (that is, extensive experience in organizing and recognizing specific items) is present. Research with bilingual participants has shown that a larger number of first versus second language words can be stored in WM, indicating that long-term experience can boost verbal WM capacity (e.g., Chincotta & Underwood, 1996, 1997; Thorn & Gathercole, 1999). A different line of investigation has shown that expert chess players are able to store a larger number of meaningful (but not fake) chess patterns in WM than novice chess players, indicating that experience in organizing or linking visuospatial information can boost WM capacity (Chase & Simon, 1973; Gobet, 1998; Gobet & Simon, 1996). Such findings have been explained in two ways: either LTM aids WM maintenance by slowing or preventing decay of WM traces (Thorn, Gathercole, & Frankish, 2002) or LTM aids WM by promoting chunking, that is, making use of associations among to-be-remembered items and thus reducing total information load (Gobet & Simon, 1996).

In contrast to verbal and visuospatial WM studies, expertise or familiarity effects on WM capacity for information originally encoded in just the visual modality have proved harder to demonstrate. Using a visual change-detection task, Pashler (1988) tested WM for upright (familiar) versus mirror reflected (less familiar) letters but found no difference in performance and suggested that familiarity with to-be-remembered items does not influence visual WM. In contrast to this, Buttle and Raymond (2003) found that the ability to detect a change between two briefly presented faces, separated by a 100 ms pattern mask, was better when at least one of the faces was famous, indicating a benefit of familiarity. However, both studies used very brief retention intervals (less than 250 ms) between arrays, limiting interpretation to issues concerning perception and iconic memory rather than WM (Phillips, 1974; Sperling, 1960). Using longer retention intervals (500 ms), Olson and Jiang (2004) examined the effect of prior experience with meaningless block patterns by comparing visual WM for novel patterns with that for patterns studied 24 times previously (500 ms each; 12 s exposure in total per pattern). Although they demonstrated some explicit recognition memory for the repeated, nonnovel patterns, the level of familiarity they induced had no demonstrable effect on WM performance. In a similar vein, Chen, Eng, and Jiang (2006) compared visual WM for novel and trained meaningless polygons and reported no effect of training. In both of these studies, modest prior exposure and the poor ecological validity of the stimuli is likely to have resulted in weak, nondistinct, and possibly poorly organized LTM representations, limiting the ability to show any LTM modulation of visual WM. In apparent contrast to these null effects, Olson, Jiang, and Moore (2005) reported that prior visual experience mediated the contents of visual WM. 
They conducted a visual WM task that required detecting the location of a missing square in repeated square patterns. They showed that when the location of the missing square was predictive, performance significantly improved. Olson and colleagues interpret their results to suggest that the role of learning in visual WM is to enable prioritization of what visual information is to be stored rather than to increase capacity as such. This study does not speak to whether familiarity with to-be-remembered stimuli modulates WM, and so the question of whether LTM can influence purely visual WM remains open.

Using a conventional change-detection procedure to assess WM capacity (Luck & Vogel, 1997), we presented participants with arrays of familiar (famous) or unfamiliar faces and measured memory for them about one second later. We used faces for two reasons. First, faces are meaningful, ecologically valid stimuli, and the use of highly learned (famous) faces could be expected to activate well-established and distinct LTM representations, thus increasing the likelihood that any modulation of WM by LTM would be identifiable via improved behavioral performance on the WM task. Second, given the fundamental role of faces in social communication, an understanding of factors affecting visual WM for faces has obvious importance for many aspects of social cognition. The ability to maintain a visual representation of a face in visual WM is fundamental to successful social communication: It enables a continuous and coherent percept of the human players involved in a social episode when the flow of visual information to the brain is temporarily interrupted (such as with eye and head movements).

Several empirical studies have shown that the extent of prior experience with a face has significant consequences for the perceptual and attentional processing of that face. Tong and Nakayama (1999) showed that visual search was significantly speeded and displayed flatter set-size slopes when searching for one’s own face compared to a recently learned face, even when participants had received thousands of exposures to each recently learned face. They suggested that extreme familiarity (as with one’s own face) resulted in qualitatively different perceptual encoding processes than those involved in recognizing more recently learned (less personally relevant) faces. Two studies using variations of the attentional blink paradigm (Raymond, Shapiro, & Arnell, 1992) support this notion and demonstrate that visual superfamiliarity (as opposed to personal relevance) alters attentional processes. In one study, face identification was found to be resistant to the attentional blink (that is, identification could proceed when attentional resources were severely limited) when a famous face, but not an unfamiliar face, was presented as the second of two targets in a rapid serial visual presentation of face stimuli (Jackson & Raymond, 2006). The second study required detection of a change in the repeated presentation in rapid serial visual presentation (but not explicit identification) of face pairs and reported significantly better performance when the change involved at least one famous face (Buttle & Raymond, 2003). Although these studies provide strong evidence that LTM can facilitate perceptual encoding—and perhaps also WM consolidation—when attentional resources are scarce, the results cannot directly speak to the possibility that LTM might enhance WM capacity.

In our first experiment, we measured visual WM capacity for simple colored squares and for famous and unfamiliar upright faces (in different blocks), using a concurrent verbal WM task to suppress the strategic, explicit use of verbalization and verbal memory codes (Murray, 1968). In Experiment 2, we repeated the upright faces memory task using a randomized design and with high or low load in the accompanying verbal WM task. Experiments 3 and 4 were similar to Experiments 1 and 2 except that faces were inverted so that we could determine whether simple image features, unrelated to face identification processes, underpinned performance. We found that familiarity significantly boosted visual WM performance for upright faces and we provide evidence that this effect cannot easily be explained by strategic recruitment of verbal memory or verbalization of names, analysis of low-level face features, or encoding opportunity.

Experiment 1

Method

Participants

Twenty-four healthy young adults (16 women, mean age 22 years) with normal or corrected to normal vision participated. Participants in this and all subsequent experiments participated in exchange for course credits or money, were naïve to the purpose of their experiment, and provided informed consent prior to participation.

Apparatus

Stimuli were displayed on a 22-in. Mitsubishi DiamondPro 2060u monitor (32-bit true color; resolution 1,280 × 1,024 pixels) and generated by E-Prime software (Version 1.0; Schneider, Eschman, & Zuccolotto, 2002).

Stimuli

In this and all subsequent experiments, 22 grayscale images (2.8° × 3.3°) of Caucasian adult faces in frontal view with hair present, neutral expression, and neither teeth nor glasses visible were used for the faces conditions. Eleven faces were unfamiliar, selected from the Psychological Image Collection at Stirling (University of Stirling Psychology Department, 2002). The remaining 11 faces were of famous celebrities carefully selected from the Internet to avoid obvious preexisting semantic connections (such as common appearance in the same film or television program) that could lead to conceptual grouping effects (see Table 1 for famous face names). All face images had similar luminance, contrast, and resolution. In the squares condition, we used 11 filled squares (0.6° × 0.6°) each colored with a single hue (white, black, red, green, blue, yellow, orange, brown, purple, turquoise, or pink).

Table 1. Names of Famous Celebrity Faces Used in the Visual WM Task.

| Famous celebrities |
|---|
| Tom Cruise |
| Brad Pitt |
| Leonardo Di Caprio |
| Johnny Depp |
| Keanu Reeves |
| David Beckham |
| Robbie Williams |
| Matt Le Blanc |
| Ben Affleck |

Design and Procedure

Visual WM task

A standard visual change-detection task (Luck & Vogel, 1997) with concurrent verbal suppression was used to measure visual WM capacity. The three types of stimuli—unfamiliar faces, famous faces, and squares—were presented in separate blocks. All participants completed each stimulus block on separate days (order evenly counterbalanced across participants; no block effects resulted). To measure WM capacity, the number of faces to be remembered (face load) was varied; Loads 1, 2, 3, 4, 5, 6, 8, and 10 were used. There were 40 trials per load condition (50% change), yielding 320 trials in total in each stimulus block. Load and change–no change conditions were pseudorandomized within each experiment. In each face array on each trial, faces were displayed at randomly chosen locations within a white region (approximately 17.0° × 14.4°) with the constraint that each face was separated by at least 2.9° on the horizontal axis and 3.4° on the vertical axis, center to center. In each squares array on each trial, squares were displayed at random locations within a light grey region (approximately 9.8° × 7.3°) with the constraint that each square was separated by at least 2° center to center. Spatial location of memory items could not therefore be anticipated from trial to trial.
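The spatial constraint on face arrays can be illustrated with a minimal sketch. It assumes that the separation rule means two face centers must differ by at least 2.9° horizontally or by at least 3.4° vertically (that is, images may not overlap), and that centers are inset by half a face so each image lies inside the region. Neither detail is stated in the text, and the helper names are hypothetical; the original displays were generated in E-Prime, not with this code.

```python
import random

# Values in degrees of visual angle, taken from the text.
REGION_W, REGION_H = 17.0, 14.4   # white display region
FACE_W, FACE_H = 2.8, 3.3         # face image size
MIN_DX, MIN_DY = 2.9, 3.4         # minimum center-to-center separations


def far_enough(p, q):
    """Assumed reading: a pair is acceptable when the centers differ by at
    least MIN_DX horizontally OR at least MIN_DY vertically (no overlap)."""
    return abs(p[0] - q[0]) >= MIN_DX or abs(p[1] - q[1]) >= MIN_DY


def sample_face_centers(n_items, rng, max_tries=10_000):
    """Rejection-sample n_items face centers (hypothetical helper).

    Centers are kept half a face away from the region edge so that every
    image lies fully inside the region; this inset is an assumption.
    """
    centers, tries = [], 0
    while len(centers) < n_items:
        tries += 1
        if tries > max_tries:
            centers, tries = [], 0  # unlucky partial layout: start over
            continue
        candidate = (
            rng.uniform(FACE_W / 2, REGION_W - FACE_W / 2),
            rng.uniform(FACE_H / 2, REGION_H - FACE_H / 2),
        )
        if all(far_enough(candidate, c) for c in centers):
            centers.append(candidate)
    return centers


# Example: one layout for the largest memory load (10 faces).
layout = sample_face_centers(10, random.Random(0))
```

Rejection sampling is used here only because it is the simplest way to honor a pairwise spacing rule; any procedure that yields unpredictable, non-overlapping locations would serve the same purpose.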

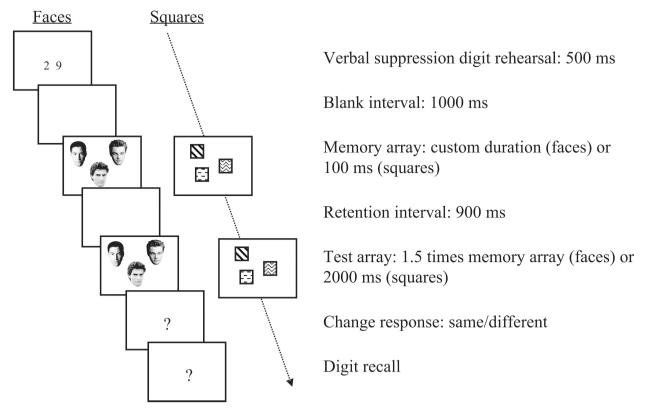

Each WM trial (illustrated in Figure 1) was initiated by pressing the space bar and began with a 500-ms central presentation of two randomly selected digits (font size = 50 points). To suppress the use of verbal WM, participants were instructed to silently repeat these digits throughout the whole trial. After a blank interval of 900 ms, a memory array comprising 1, 2, 3, 4, 5, 6, 8, or 10 items was presented. Colored squares were presented for 100 ms, a duration shown by Luck and Vogel (1997) to be effective for encoding arrays of up to 10 such stimuli. Duration times for faces were determined according to results from a visual search pretask designed to measure encoding speed, described in detail below. The memory array was followed by a 900-ms blank retention interval. A test array, either identical to the memory array (no-change trial, 50%) or with a single item changed to a different item (change trial), was then presented. No item changed location between the memory and test array or appeared twice within either array. Test array duration was 2,000 ms in the squares condition and 1.5 times the memory array duration in the faces conditions. After the termination of the test array, participants reported whether the memory and test arrays were the “same” or “different” using a key press. They then typed the digits presented at the trial’s start. All responses were unspeeded and no feedback was given. A short practice session was provided before the main experiment began. At the end of the third block on the final day, participants made a familiarity judgment on each famous face, based on a scale of 1–5 (1 indicating no recognition of the face and 5 indicating high familiarity). They were also asked to name each face as a further check of recognition. Ratings confirmed that the famous faces we used were highly familiar (M = 4.23, SE = 0.09) and relatively nameable (M = 73%, SE = 3).

Figure 1. Example illustration of a visual WM change-detection trial for (famous) faces and squares, with verbal suppression.

Visual search pretask

Eng, Chen, and Jiang (2005) demonstrated that exposure durations that are too short can limit perceptual encoding and result in underestimates of visual WM capacity. In order to ensure that results from the faces WM task reflected memory capacity rather than encoding limitations, memory array durations for each face condition in the WM task were determined separately for each participant using a visual search pretask, administered shortly before the WM task. Face familiarity (unfamiliar or famous) in the visual search task was blocked and corresponded to that in the succeeding WM task.

Participants searched for a single target face among a varying number of distractor faces. Face image and array dimensions were the same as in the WM task. Targets and distractors were selected at random from different sets of 12 target and 18 distractor faces. Arrays (set sizes) of 4, 7, and 10 faces were used. In each face array on each trial, faces were displayed at randomly chosen locations within a white region (approximately 17.0° × 14.4°) with the constraint that each face was separated by at least 2.9° on the horizontal axis and 3.4° on the vertical axis, center to center. No face appeared more than once in any given display. There were 72 trials per set size (50% target present), yielding 216 trials in total per session. Set size and target present/absent conditions were pseudorandomized. A search trial began with a 1,000-ms central fixation cross, followed by a target face presented for 1,000 ms in the center of the screen. After a 500-ms blank interval, the faces search display was presented until the participant reported, as quickly and accurately as possible, whether the target face was present or not using a simple key press. A short practice session was provided before the main experiment began. Each participant’s mean reaction time (RT) to make a correct search response at the largest set size (10) was used to set his/her memory-array duration for all loads in the corresponding faces WM task (see Table 2 for group means).

Table 2. Group Mean and Standard Error and Mean Standard Deviation from Individual Subjects, in ms, for Visual Search Pretest Reaction Times (RTs) for Upright Faces in Experiment 1.

| Condition (upright faces) | Group mean RT (SE), ms | Mean participant SD, ms |
|---|---|---|
| Unfamiliar: Set size 4 | 1209 (47) | 341.17 |
| Unfamiliar: Set size 7 | 1736 (67) | 622.77 |
| Unfamiliar: Set size 10 | 2231 (91) | 887.92 |
| Famous: Set size 4 | 1105 (52) | 309.93 |
| Famous: Set size 7 | 1588 (69) | 544.45 |
| Famous: Set size 10 | 2031 (95) | 801.71 |

Note. Each participant’s mean RT at the largest set size (10) was used to set the duration of all face memory arrays in their corresponding visual WM task for upright faces in Experiment 1.

Data Analysis

Only trials on which participants correctly reported both digits in the verbal suppression task were included in further analyses. This excluded 5.7% of the data. False alarm (FA) rates for the WM change-detection task varied significantly as a function of load for all stimulus conditions, indicating the need to convert the data into d′ scores. These were calculated using individual FA rates for each load and stimulus condition.
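The exclusion rule and the d′ conversion can be sketched as follows, assuming the standard equal-variance signal detection definition, d′ = z(hit rate) − z(FA rate). The trial field names are hypothetical, and the sketch does not apply any correction for hit or FA rates of exactly 0 or 1, because the text does not say how such cases were handled.

```python
from statistics import NormalDist


def rates_from_trials(trials):
    """Hit and false-alarm rates after dropping trials with wrong digits."""
    kept = [t for t in trials if t["digits_correct"]]
    change = [t for t in kept if t["changed"]]
    no_change = [t for t in kept if not t["changed"]]
    hit_rate = sum(t["said_different"] for t in change) / len(change)
    fa_rate = sum(t["said_different"] for t in no_change) / len(no_change)
    return hit_rate, fa_rate


def d_prime(hit_rate, fa_rate):
    """Equal-variance d': z(hit rate) minus z(false-alarm rate)."""
    if not (0.0 < hit_rate < 1.0 and 0.0 < fa_rate < 1.0):
        raise ValueError("rates must lie strictly between 0 and 1")
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


# The hit rate (0.97) and FA rate (0.12) that the capacity-estimate
# subsection pairs with its criterion give a d' of roughly 3.06.
criterion_d_prime = d_prime(0.97, 0.12)
```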

Visual WM capacity estimate

A numerical estimate of visual WM capacity (k) was calculated for each subject in each condition using a new formula we devised—we call it Bangor k—to yield a capacity estimate that improves on some aspects of previous estimates (Pashler, 1988; Cowan, 2001). Bangor k is the interpolated memory load value at which performance meets a criterion level, set here arbitrarily to d′ = 3.06. This d′ value corresponds to an overall accuracy level of 85% correct (obtained by using an FA probability rate of 0.12, the average obtained here across all conditions for loads 2 to 6, and a hit rate of 0.97). Bangor k is interpolated using a least squares line fit to d′ scores plotted as a function of log-transformed load values.1

$$k = 10^{(y - b)/m} \tag{1}$$

Here, b and m are the intercept and slope, respectively, of the d′ values, and y is the d′ criterion threshold. Only data from loads 2–6 were used to calculate Bangor k in all experiments in this study. This avoided skewing the line fit for ceiling performance at Load 1 and asymptote performance beyond Load 6 in Experiment 1 and allowed better comparison between k estimates from Experiments 2–4 where only loads 2–6 were used.
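A minimal sketch of the Bangor k computation follows. It fits an ordinary least squares line to d′ as a function of log10(load) and solves for the load at which the line reaches the criterion, that is, k = 10^[(y − b)/m] as given in the note to Table 3. The function name and the example d′ values are illustrative assumptions, not the study's data.

```python
import math


def bangor_k(loads, d_primes, criterion=3.06):
    """Load at which the fitted d' line crosses the criterion.

    loads     -- memory loads (e.g., 2 through 6)
    d_primes  -- d' score observed at each load
    criterion -- d' threshold y (3.06 in the text)
    """
    xs = [math.log10(load) for load in loads]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(d_primes) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, d_primes))
    m = sxy / sxx             # slope of the least squares line
    b = mean_y - m * mean_x   # intercept of the least squares line
    return 10 ** ((criterion - b) / m)


# Illustrative data that fall exactly on the line d' = 4 - 2 * log10(load).
example_loads = [2, 3, 4, 5, 6]
example_d = [4 - 2 * math.log10(load) for load in example_loads]
example_k = bangor_k(example_loads, example_d)
```

Because the fit uses every load in the chosen range, a single noisy load condition has less influence on the estimate than it would on a single-load measure.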

The two main advantages of Bangor k over the more conventional Pashler’s (1988) and Cowan’s (2001) k estimates are that Bangor k is based on data collected from a wide range of loads (rather than a single load) and that it allows decision criteria (as reflected in false alarm rates) to vary with load.2 For interest and comparison, both Bangor k and Cowan’s k capacity estimates are reported in this paper (see Table 3).

Table 3. Bangor k and Cowan’s k Estimates of Visual WM Capacity in Experiments 1–4.

| Experiment and condition | Bangor k: Unfamiliar | Bangor k: Famous | Cowan’s k_avge: Unfamiliar | Cowan’s k_avge: Famous |
|---|---|---|---|---|
| Experiment 1: Upright faces, 2-digit subvocal | 2.40 (±0.11) | 2.66 (±0.10) | 2.46 (±0.12) | 2.77 (±0.14) |
| Experiment 2: Upright faces, 2-digit vocal | 2.07 (±0.17) | 2.96 (±0.19) | 2.25 (±0.18) | 2.63 (±0.20) |
| Experiment 2: Upright faces, 5-digit vocal | 1.98 (±0.30) | 2.46 (±0.27) | 2.57 (±0.21) | 2.61 (±0.17) |
| Experiment 2: Upright faces, verbal loads combined | 2.03 (±0.24) | 2.71 (±0.23) | 2.41 (±0.20) | 2.62 (±0.19) |
| Experiment 3: Inverted faces, 2-digit subvocal | 2.05 (±0.13) | 2.09 (±0.13) | 1.76 (±0.09) | 2.02 (±0.08) |
| Experiment 4: Inverted faces, 5-digit vocal | 1.84 (±0.25) | 1.95 (±0.24) | 2.16 (±0.18) | 2.27 (±0.22) |

Note. Experiment 1: upright faces, 2-digit subvocal concurrent verbal WM load; Experiment 2: upright faces, 2-digit, 5-digit, and combined-digit vocalised concurrent verbal WM load; Experiment 3: inverted faces, 2-digit subvocal concurrent verbal WM load; Experiment 4: inverted faces, 5-digit vocalised concurrent verbal WM load. Bangor k is a new estimate we devised to measure visual WM capacity that improves on aspects of previous measures. The formula for Bangor k is k = 10^[(y–b)/m], where b and m are the intercept and slope, respectively, of the d′ values from loads 2–6 (load was log transformed), and y is a d′ criterion threshold. Cowan’s k was calculated at all loads for each participant, using the formula k = load × (hits − false alarms); we then used the average k value (k_avge) to calculate the group capacity estimate. Parenthetical values give the standard error.
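For comparison with Bangor k, the Cowan's k calculation described in the note can be sketched as below: k is computed at each load as load × (hit rate − false-alarm rate) and then averaged across loads for a participant. The function names and the example rates are hypothetical and serve only to show the arithmetic.

```python
def cowan_k(load, hit_rate, fa_rate):
    """Cowan's k at a single load: load * (hits - false alarms)."""
    return load * (hit_rate - fa_rate)


def cowan_k_average(rates_by_load):
    """Average Cowan's k across loads for one participant.

    rates_by_load maps each load to a (hit_rate, fa_rate) pair.
    """
    ks = [cowan_k(load, h, f) for load, (h, f) in rates_by_load.items()]
    return sum(ks) / len(ks)


# Invented rates for one hypothetical participant at two loads.
example_rates = {2: (0.9, 0.1), 4: (0.8, 0.2)}
example_k_avge = cowan_k_average(example_rates)
```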

Results and Discussion

Visual Search Results

Table 2 shows the group means (M) and standard errors (SE) of RTs for all set size conditions. The table also reports the average standard deviation (SD) to provide an indication of within-subject variability in the search task. We found that search for famous faces was faster overall and did not slow as rapidly with increasing set size as did search for unfamiliar faces. A repeated-measures ANOVA on RTs with familiarity (unfamiliar, famous) and set size (4, 7, and 10) as within factors revealed a significant main effect of familiarity, F(1, 23) = 21.00, p < .001, and a significant interaction between familiarity and set size, F(2, 46) = 5.10, p < .05. A comparison of the slopes for functions relating RT to set size revealed a significantly shallower search slope for famous than for unfamiliar faces, t(23) = 2.45, p < .05. These results support previously published data showing that face familiarity boosts search speed (Tong & Nakayama, 1999).
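The slope comparison can be illustrated with the group mean RTs from Table 2. The sketch below fits a least squares line to RT as a function of set size for each familiarity condition. It uses group means only, whereas the reported t test compared slopes fitted to each participant's data, so it shows the direction and approximate size of the difference rather than reproducing the analysis.

```python
def least_squares_slope(xs, ys):
    """Slope of the ordinary least squares line through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


SET_SIZES = [4, 7, 10]
UNFAMILIAR_RT_MS = [1209, 1736, 2231]  # group means from Table 2
FAMOUS_RT_MS = [1105, 1588, 2031]      # group means from Table 2

unfamiliar_slope = least_squares_slope(SET_SIZES, UNFAMILIAR_RT_MS)
famous_slope = least_squares_slope(SET_SIZES, FAMOUS_RT_MS)
```

On these group means, the fitted slopes are roughly 170 ms per face for unfamiliar faces and roughly 154 ms per face for famous faces.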

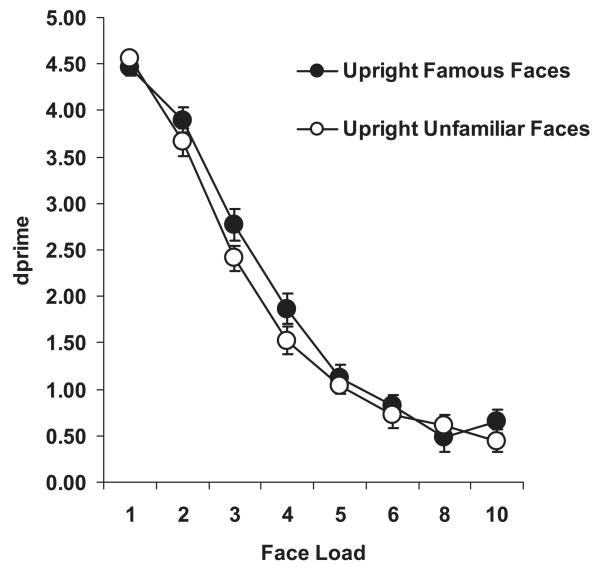

Visual WM Performance

The group mean performance for famous and unfamiliar faces in the WM task (expressed in d′) is plotted as a function of load in Figure 2. Performance was significantly better (by about 0.5 d′ units) in the change-detection task for famous than for unfamiliar faces, F(1, 23) = 7.55, p = .01. Familiarity and load conditions did not significantly interact, F(7, 161) = 1.35, p > .22. The mean Bangor k capacity estimate for famous faces was 2.66 (±0.10), a value that was significantly higher than that obtained from the same participants for unfamiliar faces (2.40 ± 0.11), t(23) = 2.32, p < .05. When we lowered the Bangor k performance criterion threshold from 85% to 75% (setting y to 2.36, based on a d′ score using the mean FA rate of 0.12 and a hit rate of 0.87), capacity estimates remained numerically higher for famous (3.41 ± 0.11) than for unfamiliar (3.13 ± 0.13) faces, although this difference was only marginally significant, t(23) = 1.96, p = .06. Cowan’s k estimates can be seen in Table 3 for comparison. These results demonstrate that familiarity effects found in verbal (Thorn et al., 2002) and visuospatial (Gobet & Simon, 1996) WM appear to have a counterpart in visual WM. They also indicate that storage of items in visual WM is enhanced if representations of these items are already well established in LTM.

Figure 2. Change-detection performance (d′) as a function of load for unfamiliar and famous upright faces in Experiment 1.

A concurrent two-digit verbal load was subvocally rehearsed. Bars represent ± 1 standard error.

Visual WM capacity for faces was markedly lower than that for colored squares. Bangor k was 3.38 (±0.19) for squares, a value consistent with previous (Cowan’s or Pashler’s k) estimates of visual WM capacity using similar stimuli (k = 3.0, Todd & Marois, 2004; k = 3.23–3.40, Vogel, Woodman, & Luck, 2001). When we applied a 75% threshold to the Bangor k formula, we estimated capacity to be 4.87 (±0.28) squares, similar to Alvarez and Cavanagh’s (2004) Cowan’s k estimate of 4.4 squares. Our Cowan’s k estimate for squares was 2.52 (±0.29). Higher visual WM capacity for squares than for faces is consistent with previous studies that compared visual WM for faces and less complex stimuli (Eng et al., 2005). We can therefore be confident that our change-detection paradigm and new Bangor k estimate produce valid results that are in line with previous research.

Experiment 2

Perhaps the enhanced performance for famous over unfamiliar upright faces seen in Experiment 1 was supported by the strategic use of verbal WM labels even though a concurrent verbal WM task was used. Although verbal and visual caches are widely assumed to be independent (Baddeley & Hitch, 1974; Cocchini, Logie, Della Sala, MacPherson, & Baddeley, 2002), Morey and Cowan (2004) reported that unrelated information in verbal WM can hinder performance in a concurrent visual WM task, pointing to a possible interaction between the two caches. This raises the possibility that leftover capacity in verbal WM could be recruited to aid visual working memory.

Experiment 2 tested this by increasing the load in the concurrent verbal task. The procedure used was largely similar to that used in Experiment 1 except that participants were required to rehearse the verbal WM items aloud throughout the trial rather than subvocally so that rehearsal could be properly monitored and explicit verbalization of face names could be prevented. If verbal WM was driving the familiarity effect, then the effect should be eliminated with the higher load verbal WM task.

Method

Participants

Fifteen healthy young adults (14 women, mean age 23 years) who had not participated in the previous experiment took part.

Apparatus and Stimuli

Stimuli were displayed on a 14-in. Dell Latitude D610 laptop (32-bit true color; resolution 1,280 × 1,024 pixels) and generated by E-Prime software (Version 1.1; Schneider et al., 2002). The same 11 unfamiliar and 11 famous faces were presented upright as in Experiment 1.

Design and Procedure

The visual WM task in this experiment differed from that in Experiment 1 in three main ways. First, instead of adopting a blocked design, famous and unfamiliar face trials were pseudorandomized within one session (50% famous trials). Second, rather than testing eight different visual WM loads, only loads 2, 4, and 6 were used. Third, memory arrays for all participants were presented for 2,500 ms and the test arrays for 3,000 ms (rather than being individually adjusted). Based on visual-search data obtained in Experiment 1, the memory array duration provided more than adequate encoding time at all loads.

The critical manipulation in this experiment was verbal WM load. Within the experimental session, half the trials required two digits to be remembered whereas the remaining trials required that five digits be remembered (presented in a pseudorandomized order). In Experiment 1, participants had been instructed to rehearse the digits silently. This procedure left open the possibility that participants were inserting verbal face codes (that is, names or feature labels) into their digit rehearsal (e.g., “two,” “Tom Cruise;” “five,” “Brad Pitt”).3 To eliminate this possibility here, participants were now instructed to repeat the digits aloud and an experimenter monitored vocal rehearsal throughout the session. Each session began with 12 practice trials followed by 20 experimental trials for each visual WM load (2, 4, and 6), in each verbal-WM-load condition (2 and 5), presented in a pseudorandom order, yielding 120 famous face trials and 120 unfamiliar face trials in total.
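The trial counts follow directly from the design: 2 familiarity conditions × 3 face loads × 2 verbal loads × 20 trials = 240 experimental trials, 120 per familiarity condition. A minimal sketch of such a trial list is shown below. It uses a plain random shuffle as a stand-in for the pseudorandomization, whose exact constraints are not described in the text, and the field names are hypothetical.

```python
import itertools
import random

FAMILIARITY = ("famous", "unfamiliar")
FACE_LOADS = (2, 4, 6)
VERBAL_LOADS = (2, 5)


def build_trials(rng, trials_per_cell=20):
    """Fully crossed trial list with trials_per_cell trials in each cell."""
    trials = []
    cells = itertools.product(FAMILIARITY, FACE_LOADS, VERBAL_LOADS)
    for familiarity, face_load, verbal_load in cells:
        for _ in range(trials_per_cell):
            trials.append(
                {
                    "familiarity": familiarity,
                    "face_load": face_load,
                    "verbal_load": verbal_load,
                }
            )
    rng.shuffle(trials)  # stand-in for the unspecified pseudorandom order
    return trials


session = build_trials(random.Random(0))
n_famous = sum(t["familiarity"] == "famous" for t in session)
```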

Results and Discussion

Verbal WM Digit Task

Consistent with capacity limitations, verbal WM performance was significantly poorer for five digits (M = 90%; SE = 2) than for two digits (M = 97%; SE = 2), F(1, 14) = 8.75, p = .01. Interestingly, verbal WM performance was numerically, though not significantly, better overall when the concurrent visual WM task involved famous faces (M = 94%; SE = 3) than when it involved unfamiliar faces (M = 92%; SE = 1), F(1, 14) = 4.39, p = .06. This suggests that the famous-faces WM task produced less interference with the concurrent verbal WM task than did the unfamiliar-faces WM task, which is counter to what we would expect if verbal WM were used to facilitate visual WM to a greater degree for famous than unfamiliar faces. Verbal WM performance was not significantly affected by concurrent face load, F(2, 28) = 1.20, p > .30. All interactions were nonsignificant.

Visual WM Faces Task

Only trials on which participants correctly reported all digits in the verbal suppression task were included in the analysis of face-WM-task data; this excluded 8% and 6% of the data in the unfamiliar- and famous-face conditions, respectively.

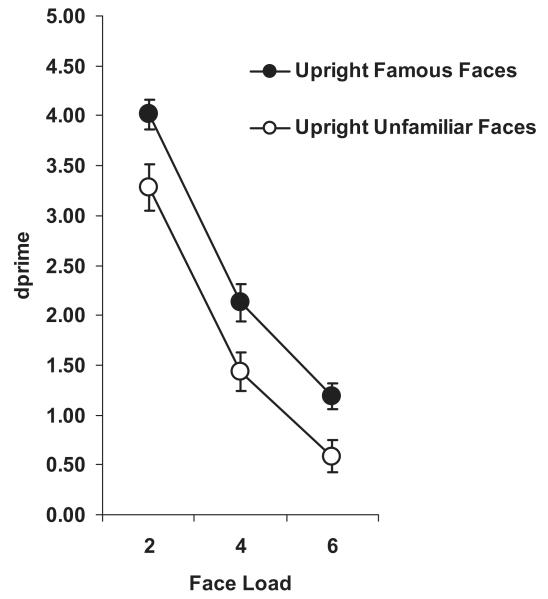

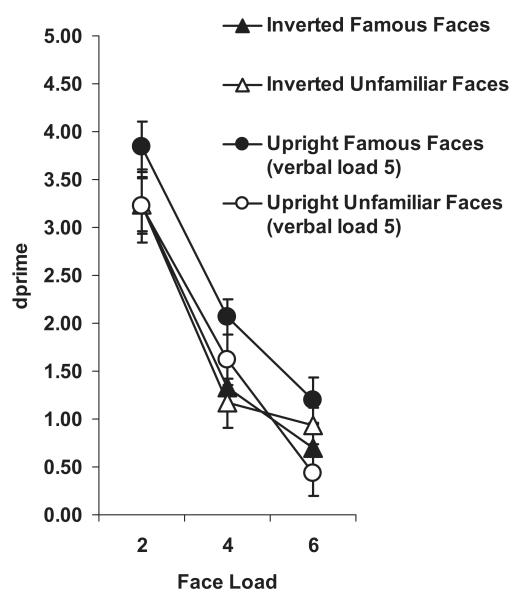

Overall, visual WM for faces was not significantly affected by verbal load, nor did face load, familiarity, or their interaction significantly interact with verbal load (F < 1.0 in all cases). Combined visual-WM-faces data from both verbal-WM-load conditions showed a significant main effect of familiarity on faces WM task performance, F(1, 14) = 28.94, p < .001, and a nonsignificant interaction between familiarity and face load, replicating the results from Experiment 1. The data are plotted in Figure 3. The overall Bangor k estimate for famous faces was 2.71 (±0.23), a value that was significantly higher than that obtained from the same participants for unfamiliar faces (2.03 ± 0.24), t(14) = 4.19, p < .01.

Figure 3. Change-detection performance (d′) as a function of load for unfamiliar and famous upright faces in Experiment 2.

Data shown are from the combined two-digit and five-digit concurrent vocalized verbal WM load conditions. Bars represent ± 1 standard error.
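For readers less familiar with the two measures reported in this section, the sketch below shows the standard signal-detection definition of d′, z(hit rate) minus z(false-alarm rate), and Cowan's (2001) capacity formula, k = load × (hit rate − false-alarm rate). The function names are hypothetical, and the log-linear correction for extreme rates is an assumption made here for illustration; it is not claimed to be the correction used in this study.

```python
from statistics import NormalDist

def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity: z(hit rate) - z(false-alarm rate)."""
    # Assumption: log-linear correction keeps rates away from 0 and 1,
    # where the inverse normal would be infinite.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)

def cowan_k(load, hit_rate, fa_rate):
    """Cowan's k for change detection at a given memory load."""
    return load * (hit_rate - fa_rate)

# Hypothetical counts for one participant at one load.
print(d_prime(hits=16, misses=4, false_alarms=5, correct_rejections=15))
print(cowan_k(load=4, hit_rate=0.8, fa_rate=0.25))
```

Equal hit and false-alarm counts give d′ = 0 (no sensitivity), and a larger separation between the two rates gives a larger d′.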

Despite the lack of influence of concurrent high versus low verbal WM load upon visual WM for faces, we examined whether a familiarity effect was present in both verbal-load conditions by separately analyzing the faces data for Verbal-Load-2 and Verbal-Load-5 conditions. WM performance was indeed significantly better for famous than unfamiliar faces in both verbal-WM-load conditions: two digits, F(1, 14) = 17.12, p < .01; five digits, F(1, 14) = 7.47, p < .02. Neither analysis revealed a significant interaction between familiarity and face load. The magnitude of the familiarity effect (famous k minus unfamiliar k) did not significantly differ between Verbal-Load-5 and Verbal-Load-2 conditions, t(28) = 1.10, p = .28. (See Table 3 for capacity estimates.)

To further test whether verbal WM may have been strategically used to support the familiarity effect on visual WM, we examined d′ differences for familiar versus unfamiliar faces for participants who performed above versus below the group median (93%) on the verbal WM task at Load 5. We reasoned that if familiarity effects resulted from using leftover verbal WM capacity, then the group performing below the median on the verbal WM task (with no leftover verbal WM capacity) should show no or smaller familiarity effects on the visual task than those performing above the median (near ceiling, with available verbal WM capacity). We found the opposite result. The group performing near ceiling showed significantly smaller familiarity effects than the group performing well below ceiling (mean 86% correct). Moreover, for the latter group (with no available verbal capacity), we found a large, significant effect of familiarity, F(1, 5) = 10.71, p < .02, providing additional evidence that verbally naming faces was not a likely strategy underpinning the familiarity effect.

We then compared the combined verbal-load data from this experiment with that from Experiment 1. A repeated-measures ANOVA with familiarity (famous, unfamiliar) and face load (2, 4, 6) as within factors, and experiment (1, 2) as a between factor, revealed a significant interaction between familiarity and experiment, F(1, 37) = 7.65, p < .01. Although both experiments showed a significant main effect of familiarity, further examination revealed that the magnitude of the familiarity effect observed here in Experiment 2 (0.68 Bangor k units) was substantially and significantly higher than the magnitude of the effect in Experiment 1 (0.26 Bangor k units), t(37) = 2.28, p < .05, suggesting that randomizing famous and unfamiliar trials and lengthening encoding time served to enhance the familiarity effect. Even with a high concurrent verbal WM load with monitored vocalizations, the familiarity effect for faces in visual WM remained robust.

Experiment 3

One potential problem for our interpretation of the familiarity effect in visual WM is that enhanced performance for famous over unfamiliar faces could have been driven by particularly salient stimulus features present in the famous-face set and absent from the unfamiliar-face set. Experiment 3 tested this by inverting the faces. This manipulation leaves all the features intact but disrupts configural processes necessary for efficient face identification (e.g., Farah, Tanaka, & Drain, 1995; Farah, Wilson, Drain, & Tanaka, 1995; Moscovitch, Winocur, & Behrmann, 1997; Yin, 1969). If feature detection could account for our familiarity effect, then differences in performance between famous and unfamiliar faces should remain unperturbed when faces are inverted. If, however, face identification processes (that is, face familiarity) account for the effect, then inversion should significantly reduce any performance differential. Note that face inversion does not eliminate face recognition; it merely disrupts it (Collishaw & Hole, 2000). It is therefore possible that some benefit of familiarity might still be evident even when faces are inverted.

Method

Participants

A different group of 24 healthy young adults (21 women, mean age 21 years) participated.

Apparatus, Stimuli, and Design

The same apparatus was used as in Experiment 1. In Experiment 3, a blocked visual WM procedure with a concurrent two-digit subvocal verbal suppression task was administered to mirror the design of Experiment 1; the same unfamiliar and famous faces were used, rotated by 180°; Loads 2, 4, and 6 were used. The visual search pretask used in Experiment 1 was also administered for inverted faces using Set Sizes 2, 4, and 6. Each participant’s mean RT to make a correct search response at the largest set size was used to set his/her memory array duration for all set sizes in the corresponding faces WM task. Only WM trials on which participants correctly reported both digits in the verbal task were included in further analyses; this excluded 9.8% of the data.

Results and Discussion

Visual Search Results

Table 4 shows the group means (M) and standard errors (SE) of RTs for all set sizes and the average standard deviation (SD) to provide an indication of within-subject variability in the search task. Search for famous inverted faces was faster overall and did not slow as rapidly with increasing set size as search for unfamiliar inverted faces. A repeated-measures ANOVA with familiarity (unfamiliar, famous) and set size (2, 4, and 6) as within factors revealed a significant main effect of familiarity, F(1, 23) = 7.70, p < .05, and a significant interaction between familiarity and set size, F(2, 46) = 8.46, p < .01. A comparison of the slopes for functions relating RT to set size revealed a significantly shallower search slope for famous than for unfamiliar inverted faces, t(23) = 3.25, p < .01. These results support Tong and Nakayama’s (1999) finding that visual search performance for inverted faces was significantly more efficient for one’s own familiar face than unfamiliar faces, suggesting that face inversion does not fully abolish face recognition when faces are superfamiliar.4

Table 4. Group Mean and Standard Error and Mean Standard Deviation From Individual Subjects, in ms, for Visual Search Pretest Reaction Times (RTs) for Inverted Faces in Experiment 3.

| Inverted faces | Group mean RT in ms (SE) | Mean participant SD in ms |
|---|---|---|
| Unfamiliar: Set size 2 | 916 (32) | 202.33 |
| Unfamiliar: Set size 4 | 1299 (45) | 377.65 |
| Unfamiliar: Set size 6 | 1638 (53) | 554.74 |
| Famous: Set size 2 | 838 (31) | 190.19 |
| Famous: Set size 4 | 1143 (43) | 325.89 |
| Famous: Set size 6 | 1417 (54) | 456.44 |

Note. Each participant’s mean RT at the largest load (6) was used to set the duration of all face memory arrays in their corresponding visual WM task for inverted faces in Experiment 3.
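As a rough illustration of the slope comparison, the sketch below fits a least-squares line to the group mean RTs in Table 4. This is computed from the group means only and is not the per-participant slope analysis behind the reported t test; the function name is hypothetical.

```python
def ls_slope(xs, ys):
    """Least-squares slope of ys regressed on xs."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

set_sizes = [2, 4, 6]
unfamiliar_rt = [916, 1299, 1638]  # group mean RTs (ms), Table 4
famous_rt = [838, 1143, 1417]      # group mean RTs (ms), Table 4

print(round(ls_slope(set_sizes, unfamiliar_rt), 2))  # 180.5 ms per face
print(round(ls_slope(set_sizes, famous_rt), 2))      # 144.75 ms per face
```

On these group means, search slows by roughly 180 ms per added unfamiliar face and roughly 145 ms per added famous face, consistent with the shallower slope reported for famous inverted faces.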

Visual WM Performance

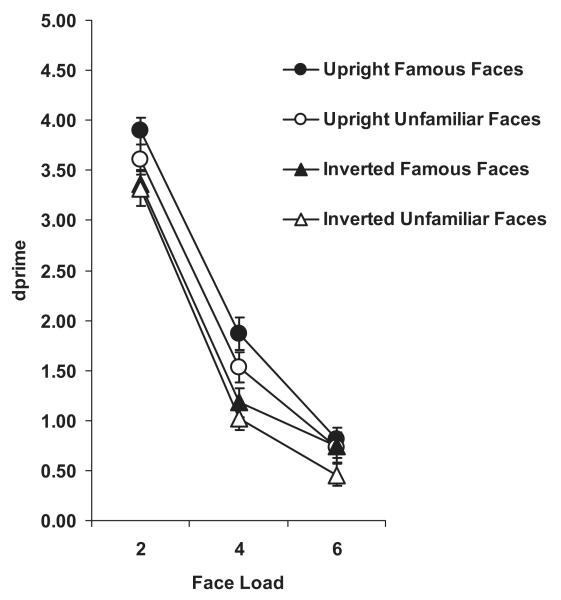

First, we compared inverted-face data with upright-face data from Experiment 1 (using Loads 2, 4, and 6 from both experiments) in order to assess inversion effects. A mixed-design ANOVA with familiarity and load as within factors and orientation (experiment) as a between factor revealed a significant main effect of orientation, F(1, 46) = 14.26, p < .001. Orientation did not significantly interact with familiarity, F(1, 46) < 1.0, nor was there a significant three-way interaction between orientation, familiarity, and load, F(2, 92) < 1.0. Separate mixed ANOVAs for unfamiliar and famous faces with load as a within factor and orientation as a between factor revealed that performance on the WM task was significantly impaired for inverted versus upright faces in both the unfamiliar-face condition, F(1, 46) = 6.75, p = .01, and the famous-face condition, F(1, 46) = 11.23, p < .01 (Figure 4). Comparing Bangor k estimates, inversion significantly reduced unfamiliar face capacity by 15% (from 2.40 ± 0.11 upright unfamiliar faces to 2.05 ± 0.13 inverted unfamiliar faces), t(46) = 2.18, p < .05; famous face capacity was significantly reduced by 21% (from 2.66 ± 0.10 upright famous faces to 2.09 ± 0.13 inverted famous faces), t(46) = 3.56, p < .01. See Table 3 for related Cowan’s k estimates. These results are in line with predictions based on previous studies of face inversion (Curby & Gauthier, 2007; Farah, Tanaka, et al., 1995; Farah, Wilson, et al., 1995; Moscovitch et al., 1997).
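The percentage reductions follow directly from the reported Bangor k means, as the short check below shows. The function name is hypothetical; the input values are the group means given above.

```python
def percent_reduction(upright_k, inverted_k):
    """Percentage drop in capacity from upright to inverted faces."""
    return 100.0 * (upright_k - inverted_k) / upright_k

print(round(percent_reduction(2.40, 2.05)))  # 15 (unfamiliar faces)
print(round(percent_reduction(2.66, 2.09)))  # 21 (famous faces)

# Famous-minus-unfamiliar difference in k for each orientation.
print(round(2.66 - 2.40, 2))  # 0.26 (upright, Experiment 1)
print(round(2.09 - 2.05, 2))  # 0.04 (inverted, Experiment 3)
```

The upright difference of 0.26 k units matches the Experiment 1 familiarity effect cited in the Experiment 2 results, whereas the inverted difference is close to zero.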

Figure 4. Change-detection performance (d′) as a function of load for unfamiliar and famous upright faces (Experiment 1) versus inverted faces (Experiment 3).

A concurrent two-digit verbal load was subvocally rehearsed in both experiments. Bars represent ± 1 standard error.

To assess whether a familiarity effect was present for inverted faces, we conducted a repeated-measures ANOVA on the inverted-face d′ data. Contrary to our prediction that a familiarity effect would not be evident when faces were inverted, we found a significant main effect of familiarity, F(1, 23) = 4.61, p < .05 (Figure 4). This effect was primarily due to nonsignificant but higher d′ values for famous versus unfamiliar inverted faces at Load 6, t(23) = 1.99, p = .06, and was not evident at Loads 2 and 4. The interaction between familiarity and face load was nonsignificant (F < 1.0). The familiarity benefit seen at Load 6 might be due to insufficient encoding opportunity during the memory array on some trials. Recall that the memory array duration was set for each participant as the mean RT for the largest set size in the visual search pretest, which in this experiment was 6 (as opposed to 10 in Experiment 1). This would mean that, on a significant proportion of trials for Load 6, encoding time would be less than adequate. This was not a problem for Experiment 1 (upright faces) because the very long RT for Set Size 10 was more than adequate for the smaller WM loads at which d′ differed with familiarity (Loads 2–6). Importantly, the Bangor k capacity estimates were not different for unfamiliar versus famous inverted faces (p = .7).

This experiment is important for two reasons. First, our finding that detection of a change in an array of faces was impaired when the faces were upside down versus upright indicates that face processing mechanisms were involved in the upright-faces WM task. Second, lack of any difference in the WM k capacity estimates for famous versus unfamiliar inverted faces supports the likelihood that low-level feature processing did not underpin performance in Experiment 1. Although a conclusion based on Bangor k is clear, the d′ data underpinning this estimate are somewhat untidy (at Load 6). In Experiment 4, we explored the effect of inversion further by assessing whether input from verbal WM could account for the modest familiarity effect for inverted faces.

Experiment 4

In Experiment 4, we repeated Experiment 3, using the more strenuous five-digit verbal suppression task with vocal rehearsal. We also randomized familiar and unfamiliar inverted face trials instead of blocking them, and we used a long duration (2,500 ms) memory array for all loads (deemed more than sufficient for encoding based on visual search results from Experiment 3) to determine if the familiarity effect for inverted faces seen at Load 6 in Experiment 3 was the result of encoding limitations.

Method

Participants

A different group of 13 healthy young adults (10 women, mean age 22 years) with normal or corrected-to-normal vision participated.

Results and Discussion

Verbal WM Digit Task

Performance on the verbal WM task was not significantly affected by whether faces in the visual WM task were famous or unfamiliar or by concurrent face load. Only trials on which participants correctly reported all digits in the verbal suppression task were included in the analysis of visual face WM task performance; this excluded 8% of the data.

Visual WM Faces Task

In contrast to Experiment 3, there was no main effect of familiarity on visual WM for inverted faces, F(1, 12) < 1.0 (Figure 5). Bangor k capacity estimates for unfamiliar and famous inverted faces were 1.84 (±0.25) and 1.95 (±0.24), respectively, a nonsignificant difference (p > .3). (See Table 3 for Cowan’s k estimates.) We also compared these inverted-face data with upright-face data obtained during the high verbal-load task in Experiment 2. A mixed-design ANOVA with familiarity and load as within factors and orientation (experiment) as a between factor revealed a nonsignificant main effect of orientation, F(1, 26) = 2.98, p = .09, but a significant interaction between orientation and familiarity, F(1, 26) = 5.57, p < .05, supporting the presence of a significant familiarity effect for upright faces in Experiment 2 and lack thereof for inverted faces in the current experiment (Figure 5). The three-way interaction between orientation, familiarity, and load was nonsignificant, F(2, 52) < 1.0.

Figure 5. Change-detection performance (d′) as a function of load for unfamiliar and famous inverted faces in Experiment 4.

A concurrent five-digit verbal WM load was vocally rehearsed. Also presented for comparison are data from upright faces in the Verbal-Load-5 condition in Experiment 2. Bars represent ± 1 standard error.

The clear absence of a familiarity effect in visual WM when faces were inverted, with a large concurrent verbal WM load and sufficient encoding time at all loads, confirms that low-level featural differences between unfamiliar- and famous-face images used in this study are unlikely to account for the familiarity effect found for upright faces in Experiments 1 and 2.

It is also important to rule out the possibility that the lack of familiarity effect found here is due to increased difficulty of the five-digit verbal WM task. Perhaps a high verbal WM load (hard verbal task) caused greater distraction from the faces WM task when faces were inverted (hard visual task) compared to when faces were upright (easier visual task). To examine this, we compared five-digit verbal WM performance between Experiments 2 (upright faces) and 4 (inverted faces) for both unfamiliar and famous faces. We found a nonsignificant difference in verbal WM performance between upright (M = 89%, SE = 0.10) and inverted (M = 88%, SE = 4.0) unfamiliar-face conditions and between upright (M = 90%, SE = 3.0) and inverted (M = 89%, SE = 4.0) famous-face conditions, F(1, 26) < 1.0 in both cases. Thus, the lack of familiarity effect in visual WM for inverted faces in the current experiment cannot be attributed to any differences in difficulty of the concurrent verbal memory task.

General Discussion

In four experiments, we used a visual change-detection task to demonstrate better visual WM for famous versus unfamiliar faces. Using a conventional interpretation of change-detection data (e.g., Luck & Vogel, 1997), our results can be taken to reflect enhanced WM capacity when items in memory have well-established traces in LTM. Before engaging in a discussion of how contemporary views of visual WM might accommodate our finding, we rule out three simple alternative explanations for the familiarity effects observed here.

First, enhanced visual WM performance for upright famous faces might have been achieved via strategic, explicit recruitment of verbal WM resources for famous but not unfamiliar stimuli. We think this is unlikely for two main reasons. First, when given a high verbal memory load that pulled performance below ceiling (Experiment 2), a robust visual familiarity effect was still observed, indicating that strategic employment of verbal codes in WM was not being used to support performance on the visual WM task. Second, if verbalizing or using spare capacity in a hypothetical separate verbal WM cache were an effective strategy for enhancing performance on the visual WM task, it is not clear why this strategy was differentially employed for famous versus unfamiliar faces. In our experiment, only a small set of 11 unfamiliar faces was seen by the participants, with each unfamiliar face being repeated well over one hundred times exclusive of practice trials. This experience would likely have given participants ample opportunity to assign and use names or other verbal labels for these faces if this were a useful strategy and would have led to no difference in visual WM performance for famous versus unfamiliar faces. It therefore seems unlikely that maintenance of explicit verbal codes supported visual WM for famous but not unfamiliar faces.

A second simple explanation for the familiarity effect is that low-level stimulus features (unrelated to the familiarity of the individual images) in the famous face set were for some reason more memorable than those in the unfamiliar face set. The results of Experiments 3 and 4 allow us to firmly reject this explanation. We found that the familiarity effect was fully eradicated by inverting faces even when a lengthy encoding time was given. Considering that it is well established that face inversion disrupts face identification (e.g., Farah, Tanaka, et al., 1995; Farah, Wilson, et al., 1995; Moscovitch et al., 1997), we can conclude that WM capacity for famous upright faces was enhanced via face-recognition, not nonface-feature-discrimination, processes. It is worth noting that face inversion significantly reduced WM performance even for unfamiliar faces, consistent with previous research (Curby & Gauthier, 2007), which indicates that configural processing of faces contributed in some way to the efficiency of face information storage in visual WM.

A third explanation for our findings is that early perceptual encoding processes operate more efficiently for famous than unfamiliar faces, leading to enhanced WM capacity. There is evidence within our own study (visual search for famous faces was faster than for unfamiliar faces, Experiment 1) and in work published previously (Buttle & Raymond, 2003; Jackson & Raymond, 2006; Tong & Nakayama, 1999) that extreme familiarity boosts the speed and/or efficiency of perceptual face encoding. However, this cannot account for differences in WM performance for upright faces because in Experiment 1 the duration of the memory array (that is, available encoding time) was individually adjusted for each participant for each face set (famous, unfamiliar) to the mean of their own RT to search a display of 10 faces. This means that for visual WM loads of less than 10 (and where differences in d′ for famous versus unfamiliar were most evident), encoding times were ample for both face types. (Note that, from Table 2, it can be determined that the search time for 10 faces is more than adequate for encoding 6 to-be-remembered faces on the WM trials, even considering typical within subject variability.) Moreover, in Experiment 2 (Loads 2, 4, and 6), in which encoding duration for 6 faces was lengthened and fixed at 2,500 ms, the familiarity benefit for upright faces was even larger. Considering these findings, differential encoding opportunity does not offer a satisfactory explanation for the observed effects of familiarity on visual WM.

Having successfully ruled out some simple explanations for the effect of familiarity, we now explore how contemporary views of visual WM might accommodate our finding. Central to the current theoretical understanding of visual working memory is the concept of information chunks, a term first coined by Miller (1956) to describe mnemonic units of information. For visual memory, a chunk can be considered as a collection of low-level features that are strongly associated to one another (perhaps via spatiotemporal contiguity and other object- or part-defining properties) with much weaker associations to other visual chunks concurrently in use. (This definition is derived from Cowan, 2001). Note that, here, this concept of a visual chunk refers not only to integration of features (e.g., eyes and mouth) and their configuration within a face but also suggests an active process of segregation (creating distinctiveness) between concurrently cached items.

This dual aspect of the visual memory chunk becomes important when considering how change-detection data, including that reported here, should be interpreted. Consider that the change-detection task requires the participant to compare items seen at test with the contents of his or her visual WM cache. Thus, an enhancement in performance can mean that either the quantity of information stored in WM has been increased (e.g., Luck & Vogel, 1997) or the quality of the stored information (relevant to the task) has been improved, that is, the nature of the information selected for storage is of greater use for discriminating items seen at study from those seen at test (Awh, Barton, & Vogel, 2007). The notion of quantity versus quality of information in visual WM can be mapped onto the dual components of the hypothetical visual chunking process: Quantity may be controlled by within-chunk integration whereas quality may be determined (at least in part) by between-item segregation.

Enhanced visual WM performance for famous versus unfamiliar faces may thus be implemented by increasing quantity, quality, or both properties of mnemonic information. If we assume that the number of chunks that can be created is fixed (at about four, Cowan, 2001), then quantity (or capacity) is enhanced by packing more information into each chunk, that is, exerting greater integration. With faces, this might mean preferential use of configural processing of face information over featural processing. As already mentioned, inversion is known to disrupt configural face processes. Our findings from Experiment 4 that inverting faces eliminated the familiarity benefit for visual WM performance and that performance for both famous and unfamiliar faces dropped with face inversion support the notion that better within-item integration may form the basis of familiarity benefits in visual WM, at least for faces.

High familiarity with items could also facilitate between-chunk segregation processes, leading to greater distinctiveness among mnemonically stored items. Awh et al. (2007) showed that greater distinctiveness leads to improved performance on the change-detection task by reducing comparison errors. LTM links might actively serve to assist visual categorization of information (e.g., Chase & Simon, 1973; Gobet, 1998; Gobet & Simon, 1996) leading to greater distinctiveness at encoding.

Another possibility, raised by a computational model of WM developed by Raffone and Wolters (2001), is that better between-chunk segregation is a simple consequence of better within-chunk integration. Their model incorporates a mechanism for both within-chunk integration and between-chunk segregation that is consistent with the dual component concept of chunking and which may be enlightening with respect to our behavioral data. In their model, between-chunk segregation (they use the term ‘between-item’) is achieved by strong, rapid, and transient inhibition exerted on neural assemblies that code for objects (or coherent sets of features or parts) that are different from those objects or features selected for within-chunk integration. They suggested that this inhibition suppresses processing of irrelevant object features and acts to reduce potential perceptual interference from other objects that could disrupt feature integration within a selected object. If such a mechanism is at work in the human brain, then enhanced visual WM should result whenever individual items (in our experiments, faces or face parts) are tightly integrated at encoding, perhaps via configural processing. This should naturally promote distinctiveness between faces and thus better performance on a change-detection task. This leads us to modify the concept of a visual WM chunk to say that it is a collection of features and feature configurations that are strongly integrated with one another, probably by virtue of learned associations, and are thus made distinct from other less associated features and configurations concurrently in use.

It is important to note that we do not suggest that, in our experiments, one face representation is one complete chunk. Our capacity estimates of fewer than four faces suggest that not all features and configurations contained within a face are integrated to create a single chunk just because they belong to the same object. Rather, some features of a particular face might be tightly integrated—for example, both eyes and their eyebrows because together they are diagnostic to a particular face—whereas other features (e.g., a nondescript nose) are less well integrated, thus weakening the resolution, or quality, of the mnemonic face representation. As suggested earlier, familiarity might serve to strengthen the scope of features and configurations integrated within each face and thus reduce comparison errors between faces, enhancing WM by improving both the quantity and quality of information encoded, retained, and retrieved.

It is also possible that enhanced WM for famous versus unfamiliar faces is due to differences in visual complexity. Although we did not explicitly test this here, previous studies have shown that visual WM performance is enhanced as stimulus complexity is decreased (Alvarez & Cavanagh, 2004; Awh et al., 2007; Eng et al., 2005). One might therefore consider that, perhaps, famous faces are less complex than unfamiliar faces. Indeed, we find general support for a complexity account in Experiments 1 and 3, where we found faster visual search rates for famous versus unfamiliar upright and inverted faces, respectively. Of course all faces used here, upright or inverted, famous or not, are equally complex at a purely visual-pattern level of analysis, so if we are to consider complexity here it must be at a postsensory level, that is, at either a perceptual or conceptual level of analysis. There are at least two possible ways in which famous faces might be perceived as less complex than unfamiliar faces. First, perhaps only a small amount of essential visual information, pruned over many thousands of repeated exposures, is needed to identify them. Familiarity might enable fewer facial features and configurations to be used to create the most minimal representation able to support identification, akin to the theory of sparse neural coding (Barlow, 1961). Alternatively, the total amount of information maintained in visual WM might be equivalent for familiar and unfamiliar faces, but WM performance may be enhanced because familiar face information is simply better organized (or better chunked) and thus appears less complex. In this way complexity and chunking are highly related issues.

The important point leading from our findings is that visual WM processes are supported by LTM. It is fitting then, as a final point, to consider more closely the relationship between LTM and visual WM by raising the question of whether WM and LTM storage caches are really distinct from one another. Early neuropsychological research demonstrated a double dissociation between WM and LTM: Whereas amnesic patients were impaired on LTM tasks but performed relatively normally on WM tasks, other patients appeared to exhibit impaired WM and intact LTM (Baddeley & Warrington, 1970; Shallice & Warrington, 1970). Such findings prompted the widespread view that WM and LTM information is contained in separate stores, an idea consolidated in Baddeley and Hitch’s (1974) influential memory model. Recently, however, it has been suggested that WM and LTM might be related either as different states of the same representation (e.g., Cowan, 1998, 2001; Crowder, 1993; Ruchkin, Grafman, Cameron, & Berndt, 2003) or by shared neural pathways (Nichols, Kao, Verfaellie, & Gabrieli, 2006; Ranganath, Johnson, & D’Esposito, 2003; see Ranganath & Blumenfeld, 2005 for a review). Using functional magnetic resonance imaging (fMRI) to examine neural activity related to WM and LTM for faces, Ranganath et al. reported that similar prefrontal regions were activated during both WM and LTM tasks, whereas Nichols et al. reported an overlap in hippocampal activations across WM maintenance and LTM encoding tasks. If WM is a mechanism that accesses the LTM cache directly, rather than storing just previously activated representations separately, then it follows that prior experience should facilitate integration of information pertaining to a single item and enable use of learned distinctions among items. In this way LTM might enhance visual chunking, a process that enhances performance on tasks such as the change-detection task used here.

Acknowledgments

Aspects of this project were supported by Biotechnology & Biological Sciences Research Council, U.K., Grant BBS/B/16178 to JR. Margaret C. Jackson is supported by Wellcome Trust Grant 077185/Z/05/Z.

Footnotes

Least squares lines for d′ plotted as a function of load yielded higher r² values for log versus linear load plots. Average r² values were 0.96 for the log trend and 0.92 for the linear trend.

To calculate accurate capacity estimates using Bangor k, it is important to measure WM capacity using loads that exceed upper capacity limits. If loads are too small, WM performance will be at ceiling, and the point at which performance falls below the predefined threshold cannot be measured, precluding estimation of true capacity.

We thank an anonymous reviewer for their comments on this.

Note that, due to disparity between set sizes used in the visual search tasks in Experiment 1 (upright faces; Set Sizes 4, 7, and 10) and Experiment 3 (inverted faces; Set Sizes 2, 4, and 6), we were unable to employ valid statistical measures to compare search speeds between upright and inverted faces.
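The first two footnotes describe a log trend of d′ against load and a threshold below which performance is taken to have exceeded capacity. The sketch below illustrates that general idea only: it fits d′ = a + b·ln(load) by least squares and solves for the load at which the fitted line reaches a threshold. It is not the Bangor k procedure itself, and the function names, the example d′ values, and the threshold of 2.0 are all hypothetical.

```python
import math

def fit_log_trend(loads, d_primes):
    """Least-squares fit of d' = a + b * ln(load); returns (a, b)."""
    xs = [math.log(n) for n in loads]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(d_primes) / len(d_primes)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, d_primes))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

def load_at_threshold(loads, d_primes, threshold):
    """Load at which the fitted log trend equals the threshold d'."""
    a, b = fit_log_trend(loads, d_primes)
    if b >= 0:
        # Flat or rising d' (e.g., ceiling performance): no crossing point.
        raise ValueError("d' does not decline with load")
    return math.exp((threshold - a) / b)

# Hypothetical d' values at Loads 2, 4, and 6, with a hypothetical threshold.
print(load_at_threshold([2, 4, 6], [3.0, 2.1, 1.5], threshold=2.0))
```

The error raised for a flat trend mirrors the point of the second footnote: if performance stays at ceiling across the tested loads, the crossing point cannot be located.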

References

- Alvarez GA, Cavanagh P. The capacity of visual short-term memory is set both by visual information load and by number of objects. Psychological Science. 2004;15(2):106–111. doi: 10.1111/j.0963-7214.2004.01502006.x. [DOI] [PubMed] [Google Scholar]

- Atkinson RC, Shiffrin RM. Human memory: A proposed system and its control processes. The Psychology of Learning and Motivation. 1968;2:89–195. [Google Scholar]

- Awh E, Barton B, Vogel EK. Visual working memory represents a fixed number of items regardless of complexity. Psychological Science. 2007;18(7):622–628. doi: 10.1111/j.1467-9280.2007.01949.x. [DOI] [PubMed] [Google Scholar]

- Baddeley AD. The episodic buffer: A new component of working memory? Trends in Cognitive Sciences. 2000;4(11):417–423. doi: 10.1016/s1364-6613(00)01538-2. [DOI] [PubMed] [Google Scholar]

- Baddeley AD, Hitch G. Working memory. The Psychology of Learning and Motivation. 1974;8:47–89. [Google Scholar]

- Baddeley AD, Warrington EK. Amnesia and the distinction between long- and short-term memory. Journal of Verbal Learning and Verbal Behavior. 1970;9:176–189. [Google Scholar]

- Barlow HB. The coding of sensory messages. In: Thorpe WH, Zangwill OL, editors. Current problems in animal behaviour. Cambridge University Press; Cambridge, England: 1961. pp. 331–360. [Google Scholar]

- Buttle HM, Raymond JE. High familiarity enhances visual change detection for face stimuli. Perception and Psychophysics. 2003;65(8):1296–1306. doi: 10.3758/bf03194853. [DOI] [PubMed] [Google Scholar]

- Chase WG, Simon HA. The mind’s eye in chess. In: Chase WG, editor. Visual information processing. Academic Press; New York: 1973. pp. 215–281. [Google Scholar]

- Chen D, Eng HY, Jiang Y. Visual working memory for trained and novel polygons. Visual Cognition. 2006;14(1):37–54. [Google Scholar]

- Chincotta D, Underwood G. Mother tongue, language of schooling and bilingual digit span. British Journal of Psychology. 1996;87:193–208. [Google Scholar]

- Chincotta D, Underwood G. Bilingual memory span advantage for Arabic numerals over digit words. British Journal of Psychology. 1997;88:295–310. [Google Scholar]

- Cocchini G, Logie RH, Della Sala S, McPherson SE, Baddeley AD. Concurrent performance of two memory tasks: Evidence for domain-specific working memory systems. Memory and Cognition. 2002;30(7):1086–1095. doi: 10.3758/bf03194326.

- Collishaw SM, Hole GJ. Featural and configurational processes in the recognition of faces of different familiarity. Perception. 2000;29(8):893–909. doi: 10.1068/p2949.

- Cowan N. Visual and auditory working memory capacity. Commentary. Trends in Cognitive Sciences. 1998;2(3):77–78. doi: 10.1016/s1364-6613(98)01144-9.

- Cowan N. The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behavioral and Brain Sciences. 2001;24:87–185. doi: 10.1017/s0140525x01003922.

- Crowder RG. Short-term memory: Where do we stand? Memory and Cognition. 1993;21:142–145. doi: 10.3758/bf03202725.

- Curby KM, Gauthier I. A visual short-term memory advantage for faces. Psychonomic Bulletin and Review. 2007;14(4):620–628. doi: 10.3758/bf03196811.

- Eng HY, Chen D, Jiang Y. Visual working memory for simple and complex visual stimuli. Psychonomic Bulletin and Review. 2005;12(6):1127–1133. doi: 10.3758/bf03206454.

- Farah MJ, Tanaka JN, Drain M. What causes the face inversion effect? Journal of Experimental Psychology: Human Perception and Performance. 1995;21:628–634. doi: 10.1037//0096-1523.21.3.628.

- Farah MJ, Wilson KD, Drain HM, Tanaka JN. The inverted face inversion effect in prosopagnosia: Evidence for mandatory, face-specific perceptual mechanisms. Vision Research. 1995;35(14):2089–2093. doi: 10.1016/0042-6989(94)00273-o.

- Gobet F. Expert memory: A comparison of four theories. Cognition. 1998;66(2):115–152. doi: 10.1016/s0010-0277(98)00020-1.

- Gobet F, Simon H. Templates in chess memory: A mechanism for recalling several boards. Cognitive Psychology. 1996;31(1):1–40. doi: 10.1006/cogp.1996.0011.

- Hebb DO. The organization of behavior. Wiley; New York: 1949.

- Jackson MC, Raymond JE. The role of attention and familiarity in face identification. Perception and Psychophysics. 2006;68(4):543–557. doi: 10.3758/bf03208757.

- James W. The principles of psychology. Holt, Rinehart, & Winston; New York: 1890.

- Luck SJ, Vogel EK. The capacity of visual working memory for features and conjunctions. Nature. 1997;390(6657):279–281. doi: 10.1038/36846.

- Miller GA. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review. 1956;63:81–97.

- Morey CC, Cowan N. When visual and verbal memories compete: Evidence of cross-domain limits in working memory. Psychonomic Bulletin and Review. 2004;11(2):296–301. doi: 10.3758/bf03196573.

- Moscovitch M, Winocur G, Behrmann M. What is special about face recognition? Nineteen experiments on a person with visual object agnosia and dyslexia but normal face recognition. Journal of Cognitive Neuroscience. 1997;9(5):555–604. doi: 10.1162/jocn.1997.9.5.555.

- Murray DJ. Articulation and acoustical confusability in short-term memory. Journal of Experimental Psychology. 1968;78:679–684.

- Nichols EA, Kao Y-C, Verfaellie M, Gabrieli JDE. Working memory and long-term memory for faces: Evidence from fMRI and global amnesia for involvement of the medial temporal lobes. Hippocampus. 2006;16:604–616. doi: 10.1002/hipo.20190.

- Olson IR, Jiang Y. Visual short-term memory is not improved by training. Memory and Cognition. 2004;32:1326–1332. doi: 10.3758/bf03206323.

- Olson IR, Jiang Y, Moore KS. Associative learning improves visual working memory performance. Journal of Experimental Psychology: Human Perception and Performance. 2005;31:889–900. doi: 10.1037/0096-1523.31.5.889.

- Olsson H, Poom L. Visual memory needs categories. Proceedings of the National Academy of Sciences, USA. 2005;102(24):8776–8780. doi: 10.1073/pnas.0500810102.

- Pashler HE. Familiarity and visual change detection. Perception and Psychophysics. 1988;44(4):369–378. doi: 10.3758/bf03210419.

- Phillips WA. On the distinction between sensory storage and short-term visual memory. Perception and Psychophysics. 1974;16:283–290.

- University of Stirling Psychology Department. Psychological Image Collection at Stirling. 2002. Retrieved October 2002. Available from http://pics.psych.stir.ac.uk.

- Raffone A, Wolters G. A cortical mechanism for binding in visual working memory. Journal of Cognitive Neuroscience. 2001;13(6):766–785. doi: 10.1162/08989290152541430.

- Ranganath C, Blumenfeld RS. Doubts about double dissociations between short- and long-term memory. Trends in Cognitive Sciences. 2005;9(8):374–380. doi: 10.1016/j.tics.2005.06.009.

- Ranganath C, Johnson MK, D’Esposito M. Prefrontal activity associated with working memory and episodic long-term memory. Neuropsychologia. 2003;41(3):378–389. doi: 10.1016/s0028-3932(02)00169-0.

- Raymond JE, Shapiro KL, Arnell KM. Temporary suppression of visual processing in an RSVP task: An attentional blink? Journal of Experimental Psychology: Human Perception and Performance. 1992;18:849–860. doi: 10.1037//0096-1523.18.3.849.

- Ruchkin DS, Grafman J, Cameron K, Berndt RS. Working memory retention systems: A state of activated long-term memory. Behavioral and Brain Sciences. 2003;26(6):709–777. doi: 10.1017/s0140525x03000165.

- Schneider WX, Eschman A, Zuccolotto A. E-Prime user’s guide. Psychology Software Tools, Inc.; Pittsburgh, PA: 2002.

- Shallice T, Warrington EK. Independent functioning of verbal memory stores: A neuropsychological study. Quarterly Journal of Experimental Psychology. 1970;22:261–273. doi: 10.1080/00335557043000203.

- Sperling G. The information available in brief visual representations. Psychological Monographs: General and Applied. 1960;74:1–29.

- Thorn ASC, Gathercole SE. Language-specific knowledge and short-term memory in bilingual and non-bilingual children. Quarterly Journal of Experimental Psychology. 1999;52A:303–324. doi: 10.1080/713755823.

- Thorn ASC, Gathercole SE, Frankish CR. Language familiarity effects on short-term memory: The role of output delay and long-term knowledge. Quarterly Journal of Experimental Psychology. 2002;55A(4):1363–1383. doi: 10.1080/02724980244000198.

- Todd JJ, Marois R. Capacity limit of visual short-term memory in human posterior parietal cortex. Nature. 2004;428:751–754. doi: 10.1038/nature02466.

- Tong F, Nakayama K. Robust representations for faces: Evidence from visual search. Journal of Experimental Psychology: Human Perception and Performance. 1999;25:1016–1035. doi: 10.1037//0096-1523.25.4.1016.

- Vogel EK, Machizawa MG. Neural activity predicts individual differences in visual working memory capacity. Nature. 2004;428:748–751. doi: 10.1038/nature02447.

- Vogel EK, Woodman GF, Luck SJ. Storage of features, conjunctions, and objects in visual working memory. Journal of Experimental Psychology: Human Perception and Performance. 2001;27:92–114. doi: 10.1037//0096-1523.27.1.92.

- Wheeler ME, Treisman AM. Binding in short-term visual memory. Journal of Experimental Psychology: General. 2002;131(1):48–64. doi: 10.1037//0096-3445.131.1.48.

- Yin RK. Looking at upside-down faces. Journal of Experimental Psychology. 1969;81:141–145.