Abstract

Context:

Computerized neuropsychological testing batteries have provided a time-efficient and cost-efficient way to assess and manage the neurocognitive aspects of patients with sport-related concussion. These tests are straightforward and mostly self-guided, reducing the degree of clinician involvement required by traditional clinical neuropsychological paper-and-pencil tests.

Objective:

To determine if self-reported supervision status affected computerized neurocognitive baseline test performance in high school athletes.

Design:

Retrospective cohort study.

Settings:

Supervised testing took place in high school computer libraries or sports medicine clinics. Unsupervised testing took place at the participant's home or another location with computer access.

Patients or Other Participants:

From 2007 to 2012, high school athletes across middle Tennessee (n = 3771) completed computerized neurocognitive baseline testing (Immediate Post-Concussion Assessment and Cognitive Testing [ImPACT]). They reported taking the test either supervised by a sports medicine professional or unsupervised. After inclusion and exclusion criteria were applied, athletes were matched on age, sex, and number of prior concussions, yielding a final sample of 2140 athletes (1070 per group).

Main Outcome Measure(s):

We extracted demographic and performance-based data from each de-identified baseline testing record. Paired t tests were performed between the self-reported supervised and unsupervised groups, comparing the following ImPACT baseline composite scores: verbal memory, visual memory, visual motor (processing) speed, reaction time, impulse control, and total symptom score. For differences that reached P < .05, the Cohen d was calculated to measure the effect size. Lastly, a χ2 analysis was conducted to compare the rate of invalid baseline testing between the groups. Statistical significance was set at P < .05, and 95% confidence intervals were calculated for group differences.

Results:

Self-reported supervised athletes demonstrated better visual motor (processing) speed (P = .004; 95% confidence interval [0.28, 1.52]; d = 0.12) and faster reaction time (P < .001; 95% confidence interval [−0.026, −0.014]; d = 0.21) composite scores than self-reported unsupervised athletes.

Conclusions:

Speed-based tasks were most affected by self-reported supervision status, although the effect sizes were relatively small. These data lend credence to the hypothesis that supervision status may be a factor in the evaluation of ImPACT baseline test scores.

Key Words: concussions, sports, neurocognitive testing, ImPACT

Key Points

Computerized baseline neurocognitive testing has become commonplace in athletes.

It is unclear whether neurocognitive baseline test performance in high school athletes is affected by self-reported supervision status (taking the test in supervised or unsupervised conditions).

High school athletes taking the Immediate Post-Concussion Assessment and Cognitive Testing (ImPACT) under self-reported supervised conditions scored better on visual motor (processing) speed and reaction time scores than athletes who self-reported taking the test under unsupervised conditions.

Supervision status of the baseline test may be a relevant factor when assessing and interpreting neurocognitive test scores.

Neuropsychological testing was first used to assess and manage sport-related concussions (SRCs) in the late 1980s.1 Since then, the transition from traditional paper-and-pencil to computerized administration of these tests has resulted in the widespread implementation of neurocognitive testing in schools, universities, and professional sporting leagues across the world.2–4 These tests are generally used in 2 ways: (1) a preseason (preinjury) baseline test, which represents an athlete's normal neurocognitive functioning and (2) follow-up postinjury test(s), administered after a concussion is sustained. Significant differences between an athlete's baseline and postconcussion tests can be measured using reliable change indices or regression-based procedures, aiding sports medicine clinicians in making informed return-to-play decisions.3,5–7
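To make the reliable change approach concrete, the sketch below computes a reliable change index (RCI) in the general Jacobson-Truax form: the baseline-to-retest difference divided by the standard error of the difference. It is an illustration under stated assumptions, not the specific procedure used in the cited ImPACT studies. The reference SD, the test-retest reliability, and the 1.96 cutoff (a two-sided 95% criterion) are placeholders that would have to come from appropriate normative data.

```python
import math


def reliable_change_index(baseline: float, retest: float,
                          sd_ref: float, r_xx: float) -> float:
    """RCI = (retest - baseline) / SE_diff, with SE_diff = sqrt(2) * SEM.

    sd_ref: SD of the score in a reference sample (assumed input).
    r_xx:   test-retest reliability of the score, 0 <= r_xx < 1 (assumed input).
    """
    if not 0.0 <= r_xx < 1.0:
        raise ValueError("r_xx must be in [0, 1)")
    sem = sd_ref * math.sqrt(1.0 - r_xx)   # standard error of measurement
    se_diff = math.sqrt(2.0 * sem ** 2)    # SE of the difference of two scores
    return (retest - baseline) / se_diff


def is_reliable_change(rci: float, z_crit: float = 1.96) -> bool:
    """True if |RCI| exceeds the critical z (1.96 is an assumed two-sided 95% cutoff)."""
    return abs(rci) > z_crit


# Hypothetical numbers: reference SD 10, reliability 0.75, baseline 50, retest 30.
rci = reliable_change_index(50, 30, sd_ref=10, r_xx=0.75)
print(round(rci, 2), is_reliable_change(rci))  # -2.83 True
```

For scores on which lower is better, such as the reaction time composite, a positive RCI indicates decline, so the sign has to be interpreted per score.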

Return-to-play decisions are based, in part, on the assumption that a negligible amount of individual variability exists between an athlete's baseline and postconcussion tests, with differences in scores being attributed mainly to the effects of an SRC. Host factors, such as learning disabilities,2,8 attention-deficit disorder,2 the number of prior concussions,8 age and education levels,9 and language,10,11 have been shown to affect neurocognitive baseline testing performance. However, these factors rarely fluctuate in the short term and are therefore controlled between baseline and postconcussion tests.12 In contrast, situational factors, such as psychological distress,13 group (versus individual) test administration,4 and motivation (including “sandbagging”),14–16 are more likely to vary between tests because these factors are harder to control. Unfortunately, many of these situational factors influence baseline test performance as well. The comparison of postconcussion tests with a suboptimal or invalid baseline test could potentially lead to erroneous return-to-play decisions11,17 and misguided management of the athlete outside of sports with regard to academic modifications or pharmacologic therapy.

One situational factor related to baseline test performance that has yet to be investigated, to our knowledge, is whether performance varies when the test is taken under self-reported supervised or unsupervised circumstances. The advent of computerized neuropsychological testing batteries has allowed a more time-efficient and cost-efficient way to assess and manage the neurocognitive aspects of SRCs.17,18 These tests are straightforward and mostly self-guided, reducing the degree of clinician involvement required by traditional clinical neuropsychological paper-and-pencil tests.18,19 Additionally, the presence of a third-party observer has been shown to negatively affect performance on traditional neuropsychological paper-and-pencil testing.20–22 Although the presence of a supervisor during computerized testing may seem unnecessary and possibly detrimental, the role supervisors play in the testing environment could be relevant. One widely used computerized neurocognitive testing battery (Immediate Post-Concussion Assessment and Cognitive Testing [ImPACT]; ImPACT Applications, Inc, Pittsburgh, PA) even indicates in its clinical interpretation manual that “improper supervision” is a contributing factor to invalid baseline tests.3

Undoubtedly, the administration and supervision of baseline testing by a trained sports medicine professional are important to standardize the baseline testing process. In an unsupervised environment, conditions are more likely to be uncontrolled. For instance, if the test is taken in an unsupervised group setting, horseplay, noise, and cell-phone interruptions may occur and could negatively affect test performance. Moreover, in unsupervised conditions, sports medicine professionals cannot be certain that a particular individual actually completed the test. Although paraprofessionals (eg, coaches, school officials) may be able to administer tests successfully, they may be unfamiliar with the test itself,23 denying athletes the opportunity to have questions answered or directions clarified unambiguously. We did not evaluate differences between trained and untrained supervision with regard to standardizing the administration and supervision of these tests; instead, we aimed to evaluate the potential effect(s) of self-reported supervision status on baseline test scores. In this preliminary, retrospective study, we used self-reported supervision status as a dichotomous independent variable of interest. We hypothesized that supervised athletes would attain better ImPACT baseline composite scores and a lower rate of invalid tests than athletes who took the test unsupervised.

METHODS

This study was clinical, retrospective, and observational in nature. Institutional review board approval was obtained, and written, informed consent was provided by guardians of all participants for administration of the baseline test and use of the data in future research.

Participants

The initial population consisted of 3771 student–athletes across middle Tennessee who completed ImPACT baseline testing between 2007 and 2012. Given the retrospective nature of this study, we could not determine with certainty the number of athletes taking the desktop versus the online version of ImPACT. However, we suspect that most of the athletes took the online version because the transition to the online version of ImPACT occurred in 2008. Data regarding supervision status were provided by the athletes in the demographic section of ImPACT. Thus, this study relied on self-reported supervised or unsupervised test-taking status. Supervised baseline testing was administered either in a high school computer library or a sports medicine clinic setting and overseen by a certified athletic trainer. The unsupervised group took the test either at home or in other settings using coded access to ImPACT. This was a retrospective, archival study, so other instructional and environmental data regarding test administration are unknown.

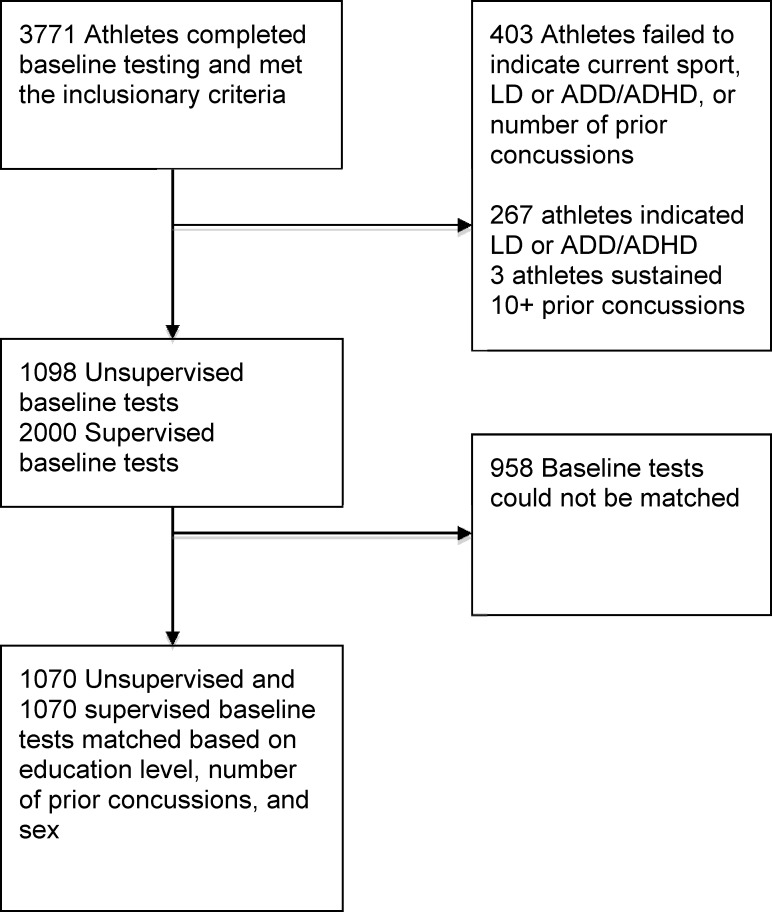

Inclusionary criteria were (1) high school athletes (education level indicated as 9–12 years) and (2) English reported as the primary language. Exclusionary criteria were (1) failure to indicate current sport, (2) self-reported history of learning disability or attention-deficit disorder, (3) failure to respond to the question assessing the presence of learning disability or attention-deficit disorder, (4) failure to indicate the number of prior concussions, and (5) a self-reported history of 10 or more prior concussions. We purposefully included invalid baseline tests (automatically identified by ImPACT) in an attempt to discern whether any differences in invalidity rates were related to supervision status. Descriptions of the various invalidity “flags” are available in the ImPACT clinical interpretation manual.3 Application of the inclusion and exclusion criteria resulted in 673 athletes being removed from the study, yielding 2000 supervised and 1098 unsupervised athletes who met all criteria. These athletes were then matched on age, sex, and number of prior concussions, resulting in a final sample of 2140 total baseline tests evaluated in this study. The Figure illustrates the selection of participants for this study.
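The selection steps above can be sketched in code. The text lists the inclusion and exclusion criteria but does not describe the matching algorithm, so the greedy 1:1 exact matching on (age, sex, number of prior concussions) below is an assumed stand-in, and the `Record` fields are hypothetical rather than an actual ImPACT export format. Age is assumed to be matched in whole years.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Record:
    """Hypothetical de-identified baseline record (field names are assumptions)."""
    athlete_id: str
    supervised: bool                   # self-reported supervision status
    age: int                           # whole years (assumed matching resolution)
    sex: str
    prior_concussions: Optional[int]   # None = question left blank
    education_years: int
    primary_language: str
    sport: Optional[str]               # None = current sport not indicated
    ld_or_add: Optional[bool]          # None = LD/ADD question left blank


def meets_criteria(r: Record) -> bool:
    """Apply the inclusion and exclusion criteria listed in the text."""
    included = 9 <= r.education_years <= 12 and r.primary_language == "English"
    excluded = (
        r.sport is None
        or r.ld_or_add is None
        or r.ld_or_add
        or r.prior_concussions is None
        or r.prior_concussions >= 10
    )
    return included and not excluded


def exact_match_1to1(records: list[Record]) -> list[tuple[Record, Record]]:
    """Greedy 1:1 exact matching on (age, sex, prior concussions)."""
    eligible = [r for r in records if meets_criteria(r)]
    pool: dict = defaultdict(list)
    for r in eligible:
        if not r.supervised:
            pool[(r.age, r.sex, r.prior_concussions)].append(r)
    pairs = []
    for r in eligible:
        if r.supervised:
            candidates = pool[(r.age, r.sex, r.prior_concussions)]
            if candidates:
                # Take the earliest unused unsupervised record with this profile.
                pairs.append((r, candidates.pop(0)))
    return pairs
```

Greedy exact matching keeps only supervised athletes for whom an identical unsupervised profile remains, which is one way larger eligible pools can shrink to equal-sized matched groups; other matching schemes would produce different pairs.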

Figure.

Flow chart illustrating inclusion for this study. Abbreviations: LD, learning disability; ADD, attention-deficit disorder; ADHD, attention-deficit hyperactivity disorder.

Materials and Procedures

To obtain baseline neurocognitive data, we chose to use ImPACT,3 a computerized neuropsychological testing battery designed specifically for the assessment and management of SRC. The ImPACT assesses cognitive functioning across 6 testing modules, targeting attention, memory, reaction time, and processing speed. From these modules, numerical composite scores are calculated for verbal memory, visual memory, visual motor (processing) speed, reaction time, and impulse control. In addition to measuring neurocognitive functioning, ImPACT also contains a postconcussion symptom scale to assess commonly reported symptoms after an SRC.24 The different neurocognitive testing modules and how they are incorporated into the 5 composite and symptom scores are detailed in Table 1. The utility, reliability, and validity of ImPACT have been discussed in the literature.5,17,25–27

Table 1.

ImPACT Modules and Composite Scores

| Module | Cognitive Domain Evaluated |
|---|---|
| Word memory | Attentional processes, verbal recognition memory |
| Design memory | Attentional processes, visual recognition memory |
| X's and O's | Visual working memory, visual processing speed, and visual memory |
| Symbol match | Visual processing speed, learning, and memory |
| Color match | Impulse control, response inhibition |
| 3 letters | Working memory and visual-motor response speed |

| Composite Score | Module(s) Averaged |
|---|---|
| Verbal memory | Word memory, symbol match, and 3 letters (percentage correct) |
| Visual memory | Design memory (total percentage correct score) and X's and O's (total correct memory score) |
| Visual motor (processing) speed | X's and O's (total number correct ÷ 4 during interference) and 3 letters (average counted correctly × 3 from countdown phase) |
| Reaction time | X's and O's (average correct reaction time of interference stage), symbol match (average correct reaction time ÷ 3), and color match (average correct reaction time) |
| Impulse control | X's and O's (total errors on the interference phase) and color match (total commissions) |
| Total symptom | Total for all 22 symptom descriptors (eg, headache, vomiting, dizziness). A complete list of symptoms can be found in the ImPACT clinical interpretation manual.3 |

Abbreviation: ImPACT, Immediate Post-Concussion Assessment and Cognitive Testing.
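Table 1 lists which modules feed each composite. As an illustration, the sketch below implements the visual motor speed and reaction time composites as simple means of the listed terms, reading the X's and O's term of visual motor speed as the interference-phase total correct divided by 4. This reading of the table is an assumption, not ImPACT's published scoring code, but it is consistent with the data: because both composites are linear, plugging in the subtest group means from Table 3 reproduces the reported composite means.

```python
def visual_motor_speed(xo_correct_interference: float,
                       three_letters_avg_counted: float) -> float:
    """Mean of (X's and O's total correct during interference / 4)
    and (3 letters average counted correctly x 3)."""
    return (xo_correct_interference / 4 + three_letters_avg_counted * 3) / 2


def reaction_time(xo_rt: float, symbol_match_rt: float,
                  color_match_rt: float) -> float:
    """Mean of X's and O's RT, symbol match RT / 3, and color match RT (seconds)."""
    return (xo_rt + symbol_match_rt / 3 + color_match_rt) / 3


# Subtest means from Table 3 (supervised, then unsupervised for visual motor speed).
print(round(visual_motor_speed(114.2, 16.03), 2))  # 38.32
print(round(visual_motor_speed(112.9, 15.54), 2))  # 37.42
print(round(reaction_time(0.50, 1.43, 0.76), 2))   # 0.58
```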

For the final sample in this study, the following demographic data were extracted from each de-identified baseline testing record: date of birth, testing date, sex, current sport, and number of prior concussions. Neurocognitive data extracted included the verbal memory, visual memory, visual motor (processing) speed, reaction time, and impulse control composite scores; the total symptom score; and the validity of the baseline test.

Statistical Analyses

We performed paired t tests to assess differences between the supervised and unsupervised athletes for the following ImPACT composite scores: verbal memory, visual memory, visual motor (processing) speed, reaction time, and impulse control. A paired t test was also conducted to determine any difference in the number of endorsed symptoms reported at baseline (total symptom score) between the groups. For differences that reached P < .05, we calculated the Cohen d to measure the effect size. Lastly, a Pearson χ2 test, without Yates correction for continuity, was conducted to compare the rate of invalid baseline testing between the supervised and unsupervised groups. Statistical significance was set at P < .05, and 95% confidence intervals (CIs) were calculated for group differences.
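A minimal sketch of the matched-pairs comparison described above, using only the Python standard library. Assumptions: the i-th supervised score and the i-th unsupervised score belong to the same matched pair; the P value and 95% CI use a normal approximation to the t distribution, which is very close for about 1000 pairs but not exact for small samples (`scipy.stats.ttest_rel` gives exact t-based values); and Cohen d is standardized by the root mean square of the two group SDs, one of several conventions for paired data.

```python
import math
from statistics import NormalDist, mean, stdev


def paired_comparison(supervised: list[float],
                      unsupervised: list[float]) -> dict:
    """Paired comparison of matched scores: t, approximate P, 95% CI, Cohen d.

    Index i in both lists is assumed to be the same matched pair.
    P and the CI use a normal approximation to the t distribution
    (close for ~1000 pairs, not exact for small samples).
    """
    if len(supervised) != len(unsupervised) or len(supervised) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [s - u for s, u in zip(supervised, unsupervised)]
    mean_diff = mean(diffs)
    se = stdev(diffs) / math.sqrt(len(diffs))  # SE of the mean difference
    t = mean_diff / se
    z = NormalDist()
    p = 2 * (1 - z.cdf(abs(t)))                # two-sided, normal approximation
    crit = z.inv_cdf(0.975)                    # about 1.96
    ci95 = (mean_diff - crit * se, mean_diff + crit * se)
    # Cohen d standardized by the root mean square of the two group SDs.
    sd_rms = math.sqrt((stdev(supervised) ** 2 + stdev(unsupervised) ** 2) / 2)
    return {"t": t, "p": p, "ci95": ci95, "d": mean_diff / sd_rms}


# Toy data (hypothetical, 4 matched pairs) just to show the call.
res = paired_comparison([10, 12, 11, 13], [9, 11, 11, 12])
print(round(res["t"], 2), round(res["d"], 2))  # 3.0 0.59
```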

RESULTS

In general, the athletes in this study were about 16 years of age and reported a mean of 0.22 prior concussions. Nearly one-third of the athletes in each group were female. The most common sports represented were football, soccer, and basketball. More detail about the demographic characteristics of the athletes in this study can be found in Table 2. When compared with unsupervised athletes, supervised athletes had greater visual motor (processing) speed (P = .004; 95% CI = 0.28, 1.52; d = 0.12) and faster reaction time (P < .001; 95% CI = −0.026, −0.014; d = 0.21) composite scores. No other composite score demonstrated significant differences.

Table 2.

Participants' Demographic Characteristics (n = 2140)

| Characteristic | Supervised (n = 1070) | Unsupervised (n = 1070) |
|---|---|---|
| Age, y, mean ± SD | 16.09 ± 1.33 | 16.09 ± 1.33 |
| Females, n (%) | 351 (32.8) | 351 (32.8) |
| Males, n (%) | 719 (67.2) | 719 (67.2) |
| Number of prior concussions, mean ± SD | 0.22 ± 0.59 | 0.22 ± 0.59 |
| Sport, n (%) | | |
| Baseball | 23 (2.15) | 28 (2.62) |
| Basketball | 185 (17.3) | 111 (10.4) |
| Boxing | 0 (0.00) | 2 (0.19) |
| Cheerleading | 35 (3.27) | 50 (4.67) |
| Cross-country | 1 (0.09) | 50 (4.67) |
| Field hockey | 5 (0.47) | 0 (0.00) |
| Football | 447 (41.8) | 388 (36.3) |
| Golf | 0 (0.00) | 10 (0.93) |
| Ice hockey | 4 (0.37) | 0 (0.00) |
| Lacrosse | 23 (2.15) | 11 (1.03) |
| Road biking | 0 (0.00) | 1 (0.09) |
| Rugby | 0 (0.00) | 3 (0.28) |
| Soccer | 213 (19.9) | 182 (17.0) |
| Softball | 44 (4.11) | 29 (2.71) |
| Swimming | 0 (0.00) | 4 (0.37) |
| Tennis | 1 (0.09) | 30 (2.80) |
| Track and field | 9 (0.84) | 39 (3.64) |
| Volleyball | 36 (3.36) | 59 (5.51) |
| Wrestling | 44 (4.11) | 73 (6.82) |

Abbreviation: ImPACT, Immediate Post-Concussion Assessment and Cognitive Testing.

The Cohen d28 is often used to compare the magnitude of the difference between groups by expressing it as an effect size.25 To provide a reference scale for d, Cohen29 proposed conventional benchmarks of 0.20 for a small effect, 0.50 for a medium effect, and 0.80 for a large effect. By these benchmarks, the significant difference in reaction time (d = 0.21) between the unsupervised and supervised groups was small, and the difference in visual motor speed (d = 0.12) fell below the threshold for a small effect.
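The d values in Table 3 can be checked against the reported group means and SDs. The text does not state which standardizer was used; the sketch below assumes the root mean square of the two group SDs (appropriate for equal group sizes), which reproduces the reported values to two decimals. Because the published means are rounded, recomputed values are approximate.

```python
import math


def cohen_d_from_summary(mean_a: float, sd_a: float,
                         mean_b: float, sd_b: float) -> float:
    """Cohen d = (mean_a - mean_b) / sqrt((sd_a**2 + sd_b**2) / 2)."""
    return (mean_a - mean_b) / math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)


# Supervised vs unsupervised means and SDs as reported in Table 3.
d_vms = cohen_d_from_summary(38.32, 7.60, 37.42, 6.98)  # visual motor speed
d_rt = cohen_d_from_summary(0.58, 0.10, 0.60, 0.09)     # reaction time, s
print(round(d_vms, 2), round(abs(d_rt), 2))  # 0.12 0.21
```

The raw d for reaction time is negative because lower times are better; Table 3 reports its magnitude.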

Means and standard deviations of the ImPACT composite scores are summarized in Table 3. A total of 51 (4.77%) of the unsupervised and 51 (4.77%) of the supervised baseline tests were automatically identified by ImPACT as invalid. The difference in test validity rates between groups was not significant (χ2(1) = 0.00; P = 1.00). The incidence rates for the 5 different invalidity flags (provided automatically by ImPACT) are shown in Table 4. Table 3 also provides post hoc analyses between groups for the subtest modules of the visual motor (processing) speed and reaction time composite scores, given that group differences were significant.
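For reference, the Pearson χ2 test without Yates correction used for the validity comparison can be written directly for a 2 × 2 table. This is a generic sketch, not the authors' analysis code; with 1 degree of freedom, the upper-tail probability equals erfc(√(χ2/2)), so no statistics package is needed. The usage line plugs in the counts reported above (51 invalid of 1070 tests in each group).

```python
import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no Yates correction) for [[a, b], [c, d]], df = 1.

    Rows: groups (eg, supervised, unsupervised); columns: (invalid, valid).
    """
    n = a + b + c + d
    observed = [[a, b], [c, d]]
    row = [a + b, c + d]
    col = [a + c, b + d]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row[i] * col[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


print(pearson_chi2_2x2(51, 1019, 51, 1019))  # (0.0, 1.0)
```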

Table 3.

ImPACT Baseline Mean Composite Scores Under Supervised and Unsupervised Conditions

| Composite Score | Supervised (n = 1070), Mean (SD) | Unsupervised (n = 1070), Mean (SD) | 95% Confidence Interval | P Value | Cohen d |
|---|---|---|---|---|---|
| Verbal memory | 84.33 (10.21) | 84.11 (10.89) | −0.67, 1.11 | .59 | |
| Visual memory | 72.88 (13.53) | 73.03 (13.56) | −1.30, 1.00 | .79 | |
| Visual motor speed | 38.32 (7.60) | 37.42 (6.98) | 0.28, 1.52 | .004 | 0.12 |
| X's and O's (total number correct during interference) | 114.2 (10.81) | 112.9 (9.13) | 0.45, 2.15 | .003 | 0.13 |
| 3 letters (average counted correctly from countdown phase) | 16.03 (4.60) | 15.54 (4.32) | 0.11, 0.87 | .010 | 0.11 |
| Reaction time | 0.58 (0.10) | 0.60 (0.09) | −0.026, −0.014 | <.001 | 0.21 |
| X's and O's (average correct reaction time of interference stage) | 0.50 (0.10) | 0.51 (0.08) | −0.02, −0.002 | .008 | 0.11 |
| Symbol match (average correct reaction time) | 1.43 (0.39) | 1.51 (0.39) | −0.11, −0.05 | <.001 | 0.21 |
| Color match (average correct reaction time) | 0.76 (0.16) | 0.78 (0.16) | −0.03, −0.006 | <.001 | 0.13 |
| Impulse control | 6.96 (5.45) | 6.65 (5.62) | −0.16, 0.78 | .15 | |
| Total symptom score | 4.64 (8.65) | 4.50 (8.89) | −0.60, 0.88 | .69 | |

Abbreviation: ImPACT, Immediate Post-Concussion Assessment and Cognitive Testing.

Table 4.

Prevalence of Invalidity “Flags” Triggered During ImPACT Baseline Testing Among Supervised and Unsupervised High School Athletes

| Invalidity “Flag” | Supervised (n = 51) | Unsupervised (n = 51) |
|---|---|---|
| X's and O's: total incorrect > 30, n (%) | 6 (11.8) | 4 (7.84) |
| Impulse control composite score > 30, n (%) | 7 (13.7) | 5 (9.80) |
| Word memory: percentage correct < 69%, n (%) | 4 (7.84) | 8 (15.7) |
| Design memory: percentage correct < 50%, n (%) | 18 (35.3) | 20 (39.2) |
| 3 letters: total correct < 8, n (%) | 24 (47.1) | 24 (47.1) |
| Total invalidity “flags” | 59 | 61 |

Abbreviation: ImPACT, Immediate Post-Concussion Assessment and Cognitive Testing.

DISCUSSION

Although there is active debate regarding the utility of baseline neurocognitive testing in return-to-play protocols,30–32 these tests remain a heavily used measure in concussion management. For instance, in 2009, Covassin et al17 found that 94.7% of all athletic trainers in their sample administered baseline testing to their athletes. An invalid or suboptimal baseline test, if compared with a postconcussion test, could jeopardize the management and even long-term health of an athlete with a concussion. Multiple situational factors have been shown to influence baseline test performance.4,13–16 However, to our knowledge, the role of supervision in the testing environment has not previously been evaluated empirically, and this is the first study to address the question. The goal of our pilot study was to determine whether general self-reported supervision, or lack thereof, systematically affected neurocognitive baseline test performance in high school athletes.

Athletes who reported unsupervised status scored worse on the visual motor (processing) speed and reaction time composite scores. Inspection of the subtest modules contributing to these composite scores (Table 3) suggests that self-reported supervision may matter most during timed matching tasks. The group differences in both symbol match and color match reaction time reached P < .001, with effect sizes of d = 0.21 and 0.13, respectively. Conceivably, lack of direction or instruction in an unsupervised environment might have contributed to these results if unsupervised athletes mistakenly assumed that these subtests measure accuracy instead of speed or reaction time.

In 2010, Schatz et al33 examined the relationships among subjective test feedback, cognitive functioning, and total symptom score on ImPACT in a sample of high school athletes. They found that 9.7% of the sample reported environmental distracters, 12% reported problems with the computer, and 17.9% reported problems with instructions. Instructional problems correlated only slightly with total symptom score. However, their sample was supervised by a physician, so it is possible that the proportion of athletes in our study, particularly unsupervised athletes, who encountered problems with instructions during baseline testing was greater than the 17.9% that Schatz et al33 noted. Further study is needed to test this possibility.

Although the visual motor (processing) speed and reaction time composite scores were affected by self-reported supervision status, the verbal memory, visual memory, impulse control, and total symptom scores were not. Additionally, the rates of invalid baseline tests in both groups were the same (4.77%), and the most prominent invalidity flags were similar between the supervised and unsupervised groups (3 letters total correct < 8 [47.1% versus 47.1%, respectively] and design memory percentage correct < 50% [35.3% versus 39.2%, respectively]). The rates of invalid tests we found were similar to rates observed by Schatz et al34 in 2012 for the online version of ImPACT (6.3% for high school students and 4.1% for college students). In their study, most of the invalidity flags for the high school cohort were also for the 3 letters and design memory tests. Our data therefore only partially supported our hypotheses: (1) general supervision during ImPACT baseline testing affected 2 of the 6 scores analyzed, but (2) rates of invalid tests did not differ between groups. It is possible that aspects of these nonsignificant findings result from methodologic limitations.

Given the retrospective nature of our study, we could not control multiple factors. Experimental groups were delineated based on self-reported supervision status. Although the options for selecting supervision status in the demographic section of ImPACT are straightforward, supervised athletes could have inadvertently assigned themselves to the unsupervised group or vice versa. Also, supervised testing occurred in a variety of locations, and the specific testing circumstances, instructions, proctor-to-athlete ratio, proctor credentials, and quality of testing environments are unknown. In addition, we have no information regarding the conditions of the unsupervised test administration.

This study has other limitations. First, our results apply to high school students only, as we did not include youth, collegiate, or professional athletes in this sample. Second, all participants were from a specific geographic region of the country, and the results may not be applicable to athletes from other areas of the United States or from other countries. Third, only a single computerized neurocognitive test was used, and the effects of supervision status noted in this study may not be generalizable to performance on other neurocognitive test batteries. Fourth, we were unable to identify precisely which athletes took the desktop or online version of ImPACT. Finally, all demographic data are self-reported, and none were validated externally.

This study does have several strengths. First, we empirically investigated a new area in the realm of computerized baseline testing for athletes. Second, our sample was quite large, with more than 2100 high school athletes in a relatively homogeneous age group. Third, the cohorts were matched closely on the demographic variables of age, sex, and concussion history, factors shown to be related to baseline neurocognitive test performance. Fourth, potentially confounding demographic factors (eg, learning disability/attention-deficit disorder, primary language) were controlled. Fifth, sport representation was comparable across groups.

We did find several statistically significant group differences based on supervision status, but the effect sizes were small. We hope that the results of our study are not interpreted to mean that neurocognitive baseline testing in sports can or should be administered unsupervised, as the role clinicians play in the administration and interpretation of these tests can be critically important. During baseline testing, supervisors may be required to separate athletes appropriately (in a group setting),4 eliminate distractions, answer questions or give further instruction (especially to athletes who are taking ImPACT for the first time), and encourage optimal effort. Future well-controlled studies are needed to draw definitive conclusions about the role clinicians play in the testing environment. Additionally, although further study is needed, supervision during postinjury testing may also be important. Athletes with concussions may be more sensitive to uncontrolled environmental stimuli or may be unable to effectively read directions. Clinicians should not only be aware of the conditions under which baseline testing is administered but also review the testing circumstances and conditions with examinees who produce invalid tests.

REFERENCES

1. Alves WM, Rimel RW, Nelson WE. University of Virginia prospective study of football-induced minor head injury: status report. Clin Sports Med. 1987;6(1):211–218.

2. Solomon GS, Haase RF. Biopsychosocial characteristics and neurocognitive test performance in National Football League players: an initial assessment. Arch Clin Neuropsychol. 2008;23(5):563–577. doi: 10.1016/j.acn.2008.05.008.

3. ImPACT 2007 Clinical Interpretation Manual. ImPACT Applications Web site. http://www.impacttest.com/pdf/ImPACT_Clinical_Interpretation_Manual.pdf. Accessed February 7, 2014.

4. Moser RS, Schatz P, Neidzwski K, Ott SD. Group versus individual administration affects baseline neurocognitive test performance. Am J Sports Med. 2011;39(11):2325–2330. doi: 10.1177/0363546511417114.

5. Iverson GL, Lovell MR, Collins MW. Interpreting change on ImPACT following sport concussion. Clin Neuropsychol. 2003;17(4):460–467. doi: 10.1076/clin.17.4.460.27934.

6. Echemendia RJ, Bruce JM, Bailey CM, Sanders JF, Arnett P, Vargas G. The utility of post-concussion neuropsychological data in identifying cognitive change following sports-related MTBI in the absence of baseline data. Clin Neuropsychol. 2012;26(7):1077–1091. doi: 10.1080/13854046.2012.721006.

7. Collie A, Maruff P, Makdissi M, McStephen M, Darby DG, McCrory P. Statistical procedures for determining the extent of cognitive change following concussion. Br J Sports Med. 2004;38(3):273–278. doi: 10.1136/bjsm.2003.000293.

8. Collins MW, Grindel SH, Lovell MR, et al. Relationship between concussion and neuropsychological performance in college football players. JAMA. 1999;282(10):964–970. doi: 10.1001/jama.282.10.964.

9. Register-Mihalik JK, Kontos DL, Guskiewicz KM, Mihalik JP, Conder R, Shields EW. Age-related differences and reliability on computerized and paper-and-pencil neurocognitive assessment batteries. J Athl Train. 2012;47(3):297–305. doi: 10.4085/1062-6050-47.3.13.

10. Jones NS, Young CC, Walter KD. Effect of language on baseline concussion screening tests in professional baseball players. Clin J Sport Med. 2011;21(2):153–184. doi: 10.1097/JSM.0000000000000031.

11. Harmon KG, Drezner JA, Gammons M, et al. American Medical Society for Sports Medicine position statement: concussion in sport. Br J Sports Med. 2013;47(1):15–26. doi: 10.1136/bjsports-2012-091941.

12. Guskiewicz KM, Bruce SL, Cantu RC, et al. National Athletic Trainers' Association Position Statement: management of sport-related concussion. J Athl Train. 2004;39(3):280–297.

13. Bailey CM, Samples HL, Broshek DK, Freeman JR, Barth JT. The relationship between psychological distress and baseline sports-related concussion testing. Clin J Sport Med. 2010;20(4):272–277. doi: 10.1097/JSM.0b013e3181e8f8d8.

14. Bailey CM, Echemendia RJ, Arnett PA. The impact of motivation on neuropsychological performance in sports-related mild traumatic brain injury. J Int Neuropsychol Soc. 2006;12(4):475–484. doi: 10.1017/s1355617706060619.

15. Erdal K. Neuropsychological testing for sports-related concussion: how athletes can sandbag their baseline testing without detection. Arch Clin Neuropsychol. 2012;27(5):473–479. doi: 10.1093/arclin/acs050.

16. Schatz P, Glatts C. “Sandbagging” baseline test performance on ImPACT, without detection, is more difficult than it appears. Arch Clin Neuropsychol. 2013;28(3):236–244. doi: 10.1093/arclin/act009.

17. Covassin T, Elbin RJ, Stiller-Ostrowski JL, Kontos AP. Immediate post-concussion assessment and cognitive testing (ImPACT) practices of sports medicine professionals. J Athl Train. 2009;44(6):639–644. doi: 10.4085/1062-6050-44.6.639.

18. Collie A, Maruff P. Computerised neuropsychological testing. Br J Sports Med. 2003;37(1):2–3. doi: 10.1136/bjsm.37.1.2.

19. Echemendia RJ, Herring S, Bailes J. Who should conduct and interpret the neuropsychological assessment in sports-related concussion? Br J Sports Med. 2009;43(suppl 1):i32–i35. doi: 10.1136/bjsm.2009.058164.

20. Kehrer CA, Sanchez PN, Habif UJ, Rosenbaum GJ, Townes BD. Effects of a significant-other observer on neuropsychological test performance. Clin Neuropsychol. 2000;14(1):67–71. doi: 10.1076/1385-4046(200002)14:1;1-8;FT067.

21. Eastvold AD, Belanger HG, Vanderploeg RD. Does a third party observer affect neuropsychological test performance? It depends. Clin Neuropsychol. 2012;26(3):520–541. doi: 10.1080/13854046.2012.663000.

22. Axelrod B, Barth J, Faust D, et al. Presence of third party observers during neuropsychological testing: official statement of the National Academy of Neuropsychology. Approved 5/15/99. Arch Clin Neuropsychol. 2000;15(5):379–380. doi: 10.1093/arclin/15.5.379.

23. Bauer RM, Iverson GL, Cernich AN, Binder LM, Ruff RM, Naugle RI. Computerized neuropsychological assessment devices: joint position paper of the American Academy of Clinical Neuropsychology and the National Academy of Neuropsychology. Clin Neuropsychol. 2012;26(2):177–196. doi: 10.1080/13854046.2012.663001.

24. Lovell MR, Iverson GL, Collins MW, et al. Measurement of symptoms following sports-related concussion: reliability and normative data for the post-concussion scale. Appl Neuropsychol. 2006;13(3):166–174. doi: 10.1207/s15324826an1303_4.

25. Schatz P, Sandel N. Sensitivity and specificity of the online version of ImPACT in high school and collegiate athletes. Am J Sports Med. 2013;41(2):321–326. doi: 10.1177/0363546512466038.

26. Elbin RJ, Schatz P, Covassin T. One-year test-retest reliability of the online version of ImPACT in high school athletes. Am J Sports Med. 2011;39(11):2319–2324. doi: 10.1177/0363546511417173.

27. Iverson GL, Brooks BL, Collins MW, Lovell MR. Tracking neuropsychological recovery following concussion in sport. Brain Inj. 2006;20(3):245–252. doi: 10.1080/02699050500487910.

28. Cohen J. Statistical Power Analysis for the Behavioral Sciences. New York, NY: Academic Press; 1969.

29. Cohen J. Statistical power analysis. Curr Dir Psychol Sci. 1992;1(3):98–101.

30. McCrory P, Meeuwisse WH, Aubry M, et al. Consensus statement on concussion in sport: the 4th International Conference on Concussion in Sport held in Zurich, November 2012. Br J Sports Med. 2013;47(5):250–258. doi: 10.1136/bjsports-2013-092313.

31. Randolph C. Baseline neuropsychological testing in managing sport-related concussion: does it modify risk? Curr Sports Med Rep. 2011;10(1):21–26. doi: 10.1249/JSR.0b013e318207831d.

32. Resch JE, McCrea MA, Cullum CM. Computerized neurocognitive testing in the management of sport-related concussion: an update. Neuropsychol Rev. 2013;23(4):335–349. doi: 10.1007/s11065-013-9242-5.

33. Schatz P, Neidzwski K, Moser RS, Karpf R. Relationship between subjective test feedback provided by high-school athletes during computer-based assessment of baseline cognitive functioning and self-reported symptoms. Arch Clin Neuropsychol. 2010;25(4):285–292. doi: 10.1093/arclin/acq022.

34. Schatz P, Moser RS, Solomon GS, Ott SD, Karpf R. Prevalence of invalid computerized baseline neurocognitive test results in high school and collegiate athletes. J Athl Train. 2012;47(3):289–296. doi: 10.4085/1062-6050-47.3.14.