Abstract

A comprehensive guide that identifies critical evaluation and reporting elements necessary to move research into practice is needed. We propose a framework that highlights the domains required to enhance the value of dissemination and implementation research for end users. We emphasize the importance of transparent reporting on the planning phase of research in addition to delivery, evaluation, and long-term outcomes. We highlight key topics for which well-established reporting and assessment tools are underused (e.g., cost of intervention, implementation strategy, adoption) and where such tools are inadequate or lacking (e.g., context, sustainability, evolution) within the context of existing reporting guidelines. Consistent evaluation of and reporting on these issues with standardized approaches would enhance the value of research for practitioners and decision-makers.

A major challenge for practitioners and policymakers is that most evidence-based interventions are not ready for widespread dissemination.1 Most research on evidence-based interventions never translates into practice or policy, and the research that does usually takes an extraordinarily long time to do so.2,3 This matters for public health practice and policy because the knowledge generated from taxpayer-funded research is not reaching the public, especially those most in need.4 The result is poor return on investment, suboptimal health outcomes, and significant opportunity costs. Consequently, attention to dissemination and implementation (D&I) research has increased greatly in the past few years, both in the United States and internationally.5,6 For present purposes, we adopted the definitions in the National Institutes of Health (NIH) program announcement on D&I research.7 Dissemination is defined as the targeted distribution of information and intervention materials to a specific public health or clinical practice audience, with the intent of spreading knowledge and the associated evidence-based interventions. Implementation is the use of strategies to adopt and integrate evidence-based health interventions and change practice patterns within specific settings.7

There are many reasons for this slow and incomplete translation,1 including research methods and reporting standards that do not seem relevant to the situations and decisions faced by practitioners and policymakers.8–10 To address these issues, the NIH and the Veterans Administration held a series of invited state-of-the-science meetings in late 2013 and early 2014 on key gaps and opportunities in D&I research. Three working groups were assembled, each focusing on 1 of the following issues: (1) training, (2) study design, and (3) reporting and measurement.

The goal of the working group on reporting and measurement was to identify key areas in need of better measurement and reporting at all stages of research for dissemination and implementation. Here we describe a framework developed by this working group, which included 23 D&I researchers, practitioners, and decision-makers from the United States and Canada. At the meeting, there was considerable discussion of guidelines for research reporting and their impact, and of whether the D&I field was ready for Consolidated Standards of Reporting Trials (CONSORT)–like reporting guidelines. The consensus was that, given the plethora of existing guidelines and reporting criteria,11 it was premature to propose a specific set of guidelines until more was known about whether existing guidelines have D&I-related gaps.

Participants decided that, to advance the field and state of knowledge, a reasonable and important first step was to construct a framework that could serve as a guide to researchers to enhance D&I evaluation and reporting relevant to stakeholders. Such a framework is not intended as a formal theory or another model of D&I research; currently, more than 60 such models exist, with many overlapping constructs.12 Rather, the purposes of the proposed framework are to (1) focus attention on needs and opportunities to increase the value and usefulness of research for end users, and (2) identify key needs for evaluation, before issuing formal reporting guidelines for D&I research. Whereas previously published reviews of D&I models have developed strategies to select or use models for research or practice,12–15 here we provide a comprehensive framework to guide researchers across the different phases of research.

The purposes of this article are threefold: (1) to present and discuss implications of the framework, organized by different steps in the research process; (2) to highlight areas that are underreported but would substantially enhance the value of research for end users, with the end goal of improving population health; and (3) to compare concepts in existing reporting guidelines with our framework.

The target audience for this article includes D&I and comparative effectiveness researchers16 as well as users of D&I evidence, who might consider asking the questions identified here when reviewing research reports or considering adoption of programs and policies. In addition, researchers at earlier stages of the translation cycle (efficacy researchers) could benefit from attention to these issues if their goal is for the products of their research to advance to policy or practice. We recognize that efficacy research is quite different, and we are not implying that these issues need to be addressed at that stage. We do think, however, that it is never too early to begin considering translation and “designing for dissemination.”17,18 If the products of efficacy or effectiveness research are substantially misaligned with the conditions, resources, and policies that affect real-world public health and health care delivery, such interventions or guidelines are very unlikely ever to be adopted or, if adopted, to be implemented with quality or sustained.19 Therefore, the issues in this article should be relevant both to D&I and comparative effectiveness researchers and to those seeking to develop, select, or make decisions about real-world use of interventions, programs, and guidelines.

FRAMEWORK FOR DISSEMINATION AND IMPLEMENTATION REPORTING

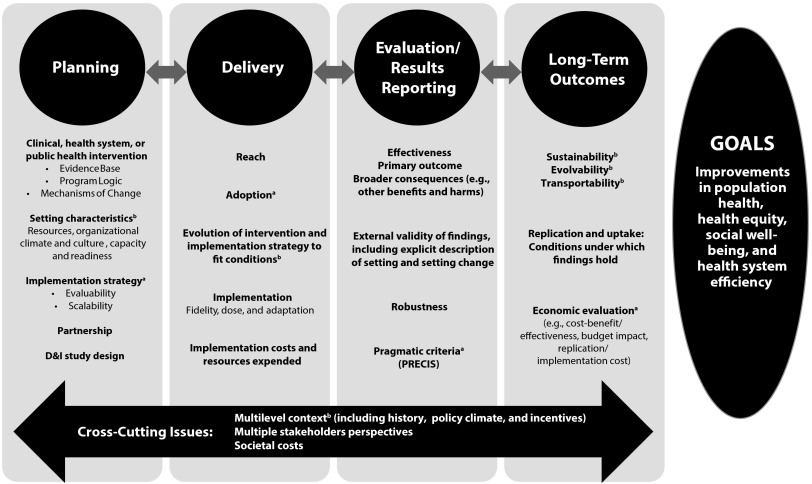

The meeting planning group (R. E. G., R. C. B., C. R. C., B. A. R., G. N.) developed an initial framework based on their expertise and on input from all expert working group members, gathered through structured premeeting telephone conversations whose objective was to identify essential elements and recognized gaps in existing D&I models and frameworks in the area of measures and reporting. This early version of the framework was presented at the start of the meeting and strongly endorsed by the participants. Following this introductory session, participants discussed the framework at length and reached consensus on its key components and subcomponents (Figure 1).

FIGURE 1—

Framework for enhancing the value of research for dissemination and implementation.

Note. D&I = dissemination and implementation; PRECIS = Pragmatic Explanatory Indicator Summary. For a glossary of terms, please see Rabin et al.20 as well as the appendix of additional definitions (available as a supplement to the online version of this article at http://www.ajph.org).

aUnderused or underreported elements despite published assessment tools.

bEmerging areas of interest with limited or no published assessment tools.

Figure 1 represents the various interrelated phases of an efficacy, effectiveness, or D&I research project in the columns, identifies key reporting elements in each phase, and highlights crosscutting issues (i.e., multilevel context, stakeholder perspectives, and costs). Detailed definitions of framework components are included in an appendix (available as a supplement to the online version of this article at http://www.ajph.org). As indicated by the title and the “Goals” column, the ultimate goal is to increase the usefulness of research for end users to enhance equitable, cost-efficient population health. In the next sections we highlight some crosscutting themes and important elements within each phase. We recognize that it may not be feasible to incorporate all of these elements in every study, and a programmatic approach may be more appropriate. But most, if not all, of these elements should be considered to ensure that the results of research efforts not only accelerate the use of a specific intervention but also advance the field in general and provide information to improve translation.

Crosscutting Themes

Several crosscutting themes apply to most or all phases of research, as indicated at the bottom of the figure. For example, applied research is almost always multilevel and crosses social–ecological levels.21,22 Unfortunately, it is not always conducted or reported that way and, in particular, information related to multilevel context, such as historical context, policy climate, and incentives, as well as organizational settings and persons delivering and receiving interventions, is often lacking or missing important elements.23,24 As indicated in multiple columns, the issue of “fit” or alignment (or lack of fit) between an intervention program or policy and its context is one of the key elements of our framework; thus, reporting on context is essential for end users to understand the potential fit for a given intervention.

Context is one of the most important and least often reported elements in research.25,26 As conceptualized here, context is multilevel and cuts across domains including economic, political, social, and temporal factors. At present, there is no agreed-upon method for assessing context. The most frequent approach is to capture basic structural characteristics of context, such as study population size and demographics, but these are seldom the most relevant underlying causes of important outcomes.27 Moreover, structural characteristics fail to capture other important contextual factors that may greatly influence adoption, implementation, and sustainment, such as organizational capacity for change, presence of an opinion leader, communication and feedback strategies, and the nature of the provider–end user relationship.

Other contextual factors include how clearly health information is exchanged between provider and end user in light of the patient’s personal goals of care, including sufficient explanation of acceptable alternatives and of best-case and worst-case scenarios. Characteristics that differ across specialties, professions, or individual professionals, such as training and credentialing, risk aversion, or professional society guidelines, also influence how quickly and how deeply evidence penetrates practice. In present-day health care, context can change rapidly; thus, context should be assessed longitudinally, not just at the beginning of a study. There are ongoing efforts to specify organizational characteristics related to setting context, and several instruments have been drawn from the management and business literature,28 but many such instruments are so lengthy as to be impractical or so general as to be unhelpful for analyses. At present, we strongly recommend using mixed-methods approaches to report on context.29–32

A second crosscutting issue concerns the importance of considering and reporting information relevant to multiple stakeholder perspectives. A large body of literature calls for making research more relevant to stakeholders, including practitioners and other end users of research (e.g., patients, community members).1,33–37 Less frequently addressed, but also critical, are the perspectives of policymakers and administrators who must make difficult investment decisions. In particular, and as indicated as a third crosscutting issue, information on the costs and resources required to deliver an intervention is essential and is often the first question asked by potential decision-makers. All too often, there are no data to answer this question,38 despite existing methods,38,39 and, when cost-of-intervention information is available, it is often nonstandardized and does not take the perspective of a potential adopting organization.40

Planning

Information from the planning phases of research needs to be reported more frequently. By planning, we mean activities that precede actual piloting or project activities that involve delivery of services. Planning ideally involves a series of interactive meetings with stakeholders to understand and address the elements listed in the “Planning” column of Figure 1. It is often stated that one needs to plan for dissemination (or sustainability, or scale-up) from the beginning,17 but, despite these entreaties, it is usually not at all apparent how, if at all, research and intervention planners took such issues into consideration in the planning phase. Systematic approaches such as intervention mapping, a process for developing theory- and evidence-based interventions, take the planner through an explicit process to plan implementation interventions during the initial intervention development phase. Planners are guided to consider the context and the players responsible for program adoption, implementation, and sustainability; to consider what has to be done as well as the factors influencing specific adoption, implementation, and maintenance behaviors; and to select change methods and strategies to increase use.41 We also recommend specifying the intervention theory or logic model that describes how a program is intended to work.42,43 It is important for end users and other researchers to understand how a program developer thinks change will come about. Less often reported, even in D&I research, is the implementation strategy employed to deliver the intervention. Proctor et al. recently published proposed guidelines for reporting elements of implementation strategies.44 They suggested

naming, defining, and operationalizing implementation strategies in terms of seven dimensions: actor, the action, action targets, temporality, dose, implementation outcomes addressed, and theoretical justification.44(p1)
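Because Proctor et al.’s dimensions are discrete, they lend themselves to a structured record. The sketch below assumes a Python dataclass whose field names (`actor`, `action_target`, `dose`, and so on) are our own illustrative choices, not part of Proctor et al.’s guidance; it pairs the name and definition of a strategy with one field per dimension so that blank dimensions can be flagged before a report is written. The example strategy and all of its values are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class ImplementationStrategySpec:
    """Hypothetical record: a strategy's name and definition plus 7 dimensions."""
    name: str
    definition: str
    actor: str                   # who enacts the strategy
    action: str                  # what is done
    action_target: str           # what or whom the action aims to change
    temporality: str             # when, or in what order, it is used
    dose: str                    # frequency or intensity
    implementation_outcome: str  # implementation outcome(s) addressed
    justification: str          # theoretical justification

    def missing_dimensions(self) -> list[str]:
        """Return the names of fields left blank, to flag incomplete reporting."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Hypothetical example with two dimensions left unreported.
spec = ImplementationStrategySpec(
    name="Audit and feedback",
    definition="Periodic summaries of clinical performance shared with clinicians",
    actor="Quality improvement staff",
    action="Distribute clinic-level screening rates",
    action_target="Primary care clinicians",
    temporality="Monthly, starting after training",
    dose="",
    implementation_outcome="Fidelity; penetration",
    justification="",
)
print(spec.missing_dimensions())  # ['dose', 'justification']
```

A checklist-style record like this only shows whether each dimension was reported, not whether the description is adequate; that judgment stays with authors and reviewers.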

Among the setting characteristics relevant in this phase are organizational readiness to change, setting capacity, and resource constraints. Investigators purporting to use community-engaged, stakeholder-engaged, or patient-centered research methods should report on the specific partnership strategies used, including the frequency and structure of meetings and the amount of decision-making and budget authority that patient and community partners have. Finally, costs and resources can be considered in the planning phase through evaluability assessment,45 a pre-evaluation activity designed to maximize the chances that any subsequent evaluation will produce useful information.46 Such an assessment can help determine whether an intervention has even a remote chance of being adopted or successfully implemented in real-world settings, even if effective.

Delivery

In the delivery or implementation phase, some of the same issues are important, but in slightly different ways. Of paramount importance in the delivery phase is reporting on the evolution of both intervention components and the implementation strategy. It is rare for either to be implemented exactly as planned, and transparent reporting on how these strategies evolved and how the evidence-based program was adapted to fit local contexts is crucial for understanding implementation.45,47,48 Recent publications on how to report on the balance between fidelity and adaptation49,50 provide helpful discussions and reporting guides. Historically, failure to report on adaptations has been a weakness in D&I research, possibly because unwavering fidelity to an intervention protocol was considered paramount to good science,51 and, hence, researchers may not report adaptations out of concern that doing so would undermine the credibility of (and ability to publish) their study. Yet data on adaptations and local customization are the type of information that end users and potential adopting organizations most need. Over time, information on which elements of an intervention are essential (core) and which can be modified to fit local context should accumulate and guide future adaptation efforts.52
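One way to make adaptations reportable is to log each change as it happens, along with when, why, and to which component it was made. The minimal sketch below assumes a hypothetical `Adaptation` record with a `core` flag marking components designated as essential; the fields, the example entries, and the choice to list core-component changes separately are illustrative assumptions, not a published adaptation-reporting standard.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Adaptation:
    """One change to an intervention component or implementation strategy."""
    component: str
    changed_on: date
    description: str
    reason: str
    core: bool  # True if the component was designated essential (core)


def split_by_core(log):
    """Separate adaptations to core components from those to other components."""
    core = [a for a in log if a.core]
    other = [a for a in log if not a.core]
    return core, other


# Hypothetical log entries for illustration only.
log = [
    Adaptation("Session length", date(2014, 3, 1),
               "Shortened from 60 to 30 minutes", "Clinic scheduling", core=False),
    Adaptation("Goal-setting module", date(2014, 5, 12),
               "Delivered by telephone", "Transportation barriers", core=True),
]

core_changes, other_changes = split_by_core(log)
for a in core_changes:
    print(a.changed_on.isoformat(), a.component, "-", a.reason)
```

Keeping core and other changes apart makes it easier to report both what was adapted and whether the changes touched elements believed to be essential.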

The costs of intervention delivery38 are also important to report. We recommend using standard procedures to estimate costs and reporting them from the perspective(s) of the organization delivering the program and of the patient.40 In some cases, it may also be important to report costs from the societal perspective for large-scale public programs, or to estimate cost–benefit or downstream costs. Costs that are frequently excluded yet important to know include recruitment or promotion costs and replication costs.38 Researchers often omit recruitment expenses because they think of recruitment as a package that includes application of research inclusion and exclusion criteria and informed consent procedures. Although some of these are research costs that would not be incurred in standard care (e.g., informed consent), it is usually necessary to advertise and enroll participants into a real-world, nonresearch program, and these often substantial costs can be separated from research costs.
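The separation of delivery costs from research-only costs can be kept explicit at the line-item level. In the sketch below, the `CostItem` fields, the two perspective labels, and all dollar amounts are hypothetical; the function simply sums the non-research costs borne by a chosen perspective, so recruitment and promotion stay in while informed consent drops out.

```python
from dataclasses import dataclass


@dataclass
class CostItem:
    label: str
    amount: float
    borne_by: str        # "organization" or "patient" (assumed categories)
    research_only: bool  # True if incurred only because of the study


def delivery_costs(items, perspective):
    """Sum non-research costs borne by the given perspective."""
    return sum(i.amount for i in items
               if i.borne_by == perspective and not i.research_only)


# Hypothetical line items for illustration only.
items = [
    CostItem("Staff time delivering sessions", 12000.0, "organization", False),
    CostItem("Recruitment mailings and promotion", 3000.0, "organization", False),
    CostItem("Informed consent procedures", 1500.0, "organization", True),
    CostItem("Patient travel", 800.0, "patient", False),
]

print(delivery_costs(items, "organization"))  # 15000.0
print(delivery_costs(items, "patient"))       # 800.0
```

A societal perspective could be added as a further `borne_by` category; standard costing procedures would still be needed to value staff time and other resources consistently.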

Finally, both reach and adoption need to be reported more consistently.53 Recent reviews of publications and grant applications reveal that reach among individual participants (patients) is increasingly reported as the percentage of persons approached or invited who participate, but the representativeness of these participants is reported far less often.53,54 Adoption among potential settings and staff is reported much less often, and the characteristics of those taking part versus those not taking part are reported even less often.53,54
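Reach and adoption are both proportions, and representativeness can be summarized by comparing those who take part with those who do not. The sketch below uses made-up ages and setting counts and a simple difference in means; both the data and the choice of age as the comparison variable are assumptions for illustration.

```python
from statistics import mean


def percent(numerator, denominator):
    """Percentage of those approached or eligible who take part."""
    return 100.0 * numerator / denominator


# Hypothetical ages of invited individuals, split by whether they participated.
participant_ages = [52, 61, 58, 47, 66]
nonparticipant_ages = [71, 69, 55, 74, 62, 68, 59, 78]

# Reach: participants as a share of everyone approached or invited.
reach = percent(len(participant_ages),
                len(participant_ages) + len(nonparticipant_ages))

# A simple representativeness check: difference in mean age.
age_gap = mean(participant_ages) - mean(nonparticipant_ages)

# Adoption applies the same calculation to settings (hypothetical counts).
adoption = percent(6, 20)

print(f"Reach: {reach:.1f}%")                       # Reach: 38.5%
print(f"Mean age difference: {age_gap:.1f} years")  # -10.2 years
print(f"Adoption: {adoption:.1f}%")                 # Adoption: 30.0%
```

In this made-up example, participants are about 10 years younger on average than nonparticipants, which is the kind of difference that a reach percentage alone would hide.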

Results Reporting

The information in the results (and also the methods or appendix) section of an article is what is traditionally thought of as evaluation, process, and outcomes reporting. We recommend considering the outcomes of a program more broadly, including both intended and unintended consequences. This is compatible with requirements to identify and report on a primary outcome a priori, but recognizes that programs have more than a single impact. Findings over the past few decades have clearly established that interventions have intended outcomes and usually also other corollary, often unintended, consequences (which can be positive or negative).55–58 From the perspective of practitioners and policymakers who must choose among interventions for very diverse conditions, it is also helpful to report impact on broader or more general outcomes such as health-related quality of life.59,60

As indicated in the “Results Reporting” column of Figure 1, this broader focus includes reporting on both the robustness of outcomes (e.g., across different subgroups of patients, staff, settings, and time) and considering external validity and generalizability as well as internal validity issues.24 To address generalizability, researchers need to describe the intervention and contextual factors in sufficient detail to allow local decision-makers to make their own judgments about the applicability of the findings to their own settings and context. These questions (e.g., Will this intervention work in settings like mine? With patients like mine? With resources available to me?) are the types of issues most important to practitioners, decision-makers, and ultimately patients.9 This contextual perspective is aligned with the goals of both the precision or personalized medicine movements in the United States61–63 and realist perspectives internationally.64

Possibly the reporting change most ready for implementation now is use of the Pragmatic Explanatory Indicator Summary (PRECIS) criteria, which could serve as one component of a future D&I reporting guideline.65 Developed by the CONSORT consortium to complement the CONSORT reporting criteria for pragmatic trials, the current PRECIS criteria describe the extent to which a given study is pragmatic versus “explanatory” (or efficacy-oriented) on each of 10 dimensions (e.g., participant eligibility criteria, experimental intervention flexibility, experimental intervention practitioner expertise).
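A PRECIS-style profile can be recorded dimension by dimension. The sketch includes only the 3 dimensions named above (the remaining 7 would be added the same way) and assumes a hypothetical 1 to 5 rating, where 1 is most explanatory and 5 most pragmatic; that numeric scale and the threshold used to flag dimensions are our assumptions, not part of the PRECIS criteria as described here.

```python
# Hypothetical PRECIS-style profile. Only the 3 dimensions named in the text
# are shown; the 1-5 scale (1 = most explanatory, 5 = most pragmatic) is an
# assumption made for illustration.
precis_profile = {
    "participant eligibility criteria": 5,
    "experimental intervention flexibility": 4,
    "experimental intervention practitioner expertise": 2,
}


def validate(profile, low=1, high=5):
    """Raise if any rating falls outside the assumed scale."""
    for dimension, score in profile.items():
        if not low <= score <= high:
            raise ValueError(f"{dimension}: rating {score} outside {low}-{high}")


def explanatory_leaning(profile, threshold=2):
    """Return dimensions rated at or below the threshold, sorted by name."""
    return sorted(d for d, score in profile.items() if score <= threshold)


validate(precis_profile)
print(explanatory_leaning(precis_profile))
# ['experimental intervention practitioner expertise']
```

Reporting the full profile, rather than a single pragmatic-or-explanatory label, lets readers see on which dimensions a study departs from the conditions of their own settings.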

Long-Term Outcomes

One of the least reported outcomes across almost all health literatures is the extent to which an intervention or policy is maintained.53 There are multiple reasons for this, including lack of planning for sustainability, publication challenges, and limited funding periods, but in many ways the fundamental evaluation question from a stakeholder perspective is “Can this program be sustained over time (and under what conditions)?” Emerging literature also documents that an intervention almost never remains the same as it was during initial implementation, but changes over time.66,67 The irony is that to maintain or sustain results, an intervention may well need to change over time, given that many aspects of context change. This recognition is starting to appear in the literature and has variously been termed evolvability or dynamic sustainability66 and, when applied to different settings, transportability.68–70

Related to the theme of external validity,24 there is a need to report on replication of a program in different settings. Failure to replicate has been identified as a critical issue at all phases of research from basic discovery to dissemination,71,72 and understanding the context and conditions under which results are replicable or not is one of the most important tasks of D&I research.33

Reporting data on longer-term costs and benefits of programs and policies, and especially the cost–benefit value of different variations of interventions, is an important ultimate outcome, as indicated at the bottom of the “Long-Term Outcomes” column in Figure 1.

Summary of Figure and Framework

The issues identified in the framework are all important. In Figure 1, the expert working group used footnotes to highlight the issues whose reporting most needs attention to advance the value of research for D&I. Footnote “a” denotes elements that are underreported but for which published assessment tools exist (e.g., cost of intervention, implementation strategy, adoption).39,43,44,73–78 Footnote “b” denotes emerging areas of interest for which there are currently few or no published assessment procedures and little agreement on how the issue should be reported (e.g., context, sustainability, evolution).

A final point is that these issues are not independent but interrelated. Many reflect a needed shift in perspective from a reductionistic focus on a single factor at a time, holding everything else constant, to studying events and interventions in context and understanding interactive and contextual effects.6,7,31 Significantly advancing the field requires more consistent reporting using standard methods, and these issues need to be reported transparently and in connection with each other. Reporting on any 1 or 2 elements or issues in the framework will not substantially improve our knowledge or help end users. A concerted effort to address most of these issues together, while transparently discussing issues such as adaptations made and lessons learned, would substantially increase the value of D&I research.

EXPLORING THE NEED FOR REPORTING GUIDELINES

Although its underpinnings date to the 1970s,79 D&I research has been formally recognized as a field in the United States for only about a decade. As noted by Chambers,80 the first NIH program announcement for D&I research was published in 2002, attendance at the annual NIH conference on D&I research quadrupled from 2007 to 2011, and the volume and quality of D&I research have increased considerably. The framework described in this article is intended as one important step toward considering the need for a set of reporting guidelines for D&I research.

Part of the next stage in the evolution of D&I research involves reporting guidelines, which provide methodological structure for the design, conduct, and reporting of research. The Enhancing the Quality and Transparency of Health Research (EQUATOR) network was established in 2008 to raise awareness of existing reporting guidelines and to serve as a central repository and general resource for scientific authors, peer reviewers, and journal editors.81,82 Its process includes an assessment of existing guidelines for a research domain and of the overall quality of published articles that lack a directly applicable guideline. The guidelines in EQUATOR provide checklists to frame the conduct and reporting of research. For example, guidelines exist for randomized controlled trials,83 diagnostic studies,84 observational studies,85 quality improvement,86 cost-effectiveness,87 case reports,88 and systematic reviews.89,90 Furthermore, some guidelines have multiple extensions, including the CONSORT recommendations for harms,91 noninferiority,92 cluster designs,93 pragmatic trials,94 herbal interventions,95 and nonpharmacological treatment interventions.96 These reporting guidelines often enhance the quality of research reporting,97 but they have been slow to penetrate individual journals’ author instructions.98 One pragmatic obstacle to widespread dissemination and use of existing reporting guidelines is the continually expanding number of these recommendations, which creates an atmosphere of “guideline fatigue.”

Some D&I researchers have highlighted the need for explicit scientific methods to study and report complex interventions.42 In related work, the Workgroup for Intervention Development and Evaluation Research checklist was developed and tested to evaluate the quality of reporting in knowledge translation systematic reviews.99 A structured reporting guidelines framework for D&I research derived by experts with widespread endorsement by D&I researchers, publishers, and policymakers may be needed to improve the quality and transparency in reporting of D&I research. However, our working group decided that proceeding to a set of D&I-specific reporting guidelines was premature without a systematic evaluation of existing guidelines to evaluate their overlap with our framework. Therefore, we reviewed the existing guidelines with the most likely overlap germane to D&I research, as judged by our consensus work group.

To identify existing reporting guidelines with potential relevance or overlap with D&I research, we used 2 approaches. First, one author (C. R. C.) contacted the EQUATOR network in January 2013 to simultaneously report our plans to organize a consensus conference to formulate D&I reporting guidelines and to ascertain whether any other groups were working on similar projects. This author also reviewed all of the published and in-development guidelines archived on the EQUATOR network Web site. The EQUATOR network subsequently connected us with the workgroup for the Standards for Reporting Phase IV Implementation Studies initiative in the United Kingdom. We consulted with the workgroup’s principal investigators to identify overlap and unique attributes of the 2 projects, as well as deficiencies in existing reporting guidelines. The second strategy that we used to identify existing reporting guidelines with potential relevance was to confer with participants of our NIH work group to solicit their awareness of pertinent, potentially overlapping reporting guidelines. One participant and coauthor (J. M. G.) listed the 6 published guidelines that we assessed. These existing reporting guidelines were CONSORT cluster randomized trial,93 CONSORT pragmatic trial,94 CONSORT nonpharmacological,96 PRISMA-health equity extension,100 Standards for Quality Improvement Reporting Excellence,86 and the complex interventions in health care (CReDECI) checklist.101

The results of our preliminary review of the overlap between existing reporting guidelines and our D&I framework are summarized in Table 1. This table was produced by one author’s (C. R. C.) review of the existing reporting guidelines’ recommendations within the context of the framework. Notably, none of the existing guidelines incorporates all of the key domains of our D&I framework. The CReDECI checklist clearly incorporates 5 of 9 domains, tying with the Standards for Quality Improvement Reporting Excellence (SQUIRE) for the most elements of our framework, but it does not provide recommendations for scalability, pragmatic assessment, evolvability, or replication. None of the existing publications recommends assessing pragmatic versus explanatory design with an instrument such as the PRECIS tool.102 The CReDECI is the only guideline that recommends a description of the theoretical basis for the intervention tested.12 None recommends a description of the conceptual basis of the implementation strategies employed. SQUIRE is the only guideline to advocate a description of scalability, but this element is vaguely described as

paying particular attention to components of the intervention and context factors that helped determine the intervention’s effectiveness (or lack thereof), and types of settings in which this intervention is most likely to be effective.86

TABLE 1—

Overlap Between Dissemination and Implementation Framework and Existing Reporting Guidelines

| Guideline | Program Logic | Context | Scalability | Adoption | Robustness | Pragmatic Criteria | Sustainability or Evolvability | Replication | Economic Evaluation |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Criteria for Reporting the Development and Evaluation of Complex Interventions (CReDECI)101 | + | + | − | + | + | − | − | − | + |
| Consolidated Standards of Reporting Trials (CONSORT) cluster93 | − | ? | − | + | − | − | − | + | − |
| CONSORT nonpharmacological96 | − | ? | − | ? | − | − | − | + | − |
| CONSORT pragmatic94 | − | − | − | − | − | − | − | + | − |
| Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA)-EQUITY100 | − | + | − | ? | − | − | − | − | − |
| Standards for Quality Improvement Reporting Excellence (SQUIRE)86 | − | + | ? | + | − | − | + | + | + |

Note. + = clear description suggesting that authors use guideline to incorporate this domain into their research report; ? = equivocal in that the guideline does not explicitly advise authors to incorporate this domain into their research report; − = no clear description suggesting that authors use guideline to incorporate this domain into their research report.
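Table 1 can also be tallied programmatically as a check on the counts discussed in the text. This sketch transcribes each row as a 9-character string of “+”, “?”, and “−” ratings (written with the ASCII hyphen `-`) in the table’s column order and counts clear (+) ratings per guideline; treating “?” as not addressed is our assumption. It also lists the domains that no guideline clearly addresses.

```python
# Column order follows Table 1.
DOMAINS = [
    "Program Logic", "Context", "Scalability", "Adoption", "Robustness",
    "Pragmatic Criteria", "Sustainability or Evolvability", "Replication",
    "Economic Evaluation",
]

# Ratings transcribed from Table 1: "+" clear, "?" equivocal, "-" none.
TABLE_1 = {
    "CReDECI": "++-++---+",
    "CONSORT cluster": "-?-+---+-",
    "CONSORT nonpharmacological": "-?-?---+-",
    "CONSORT pragmatic": "-------+-",
    "PRISMA-EQUITY": "-+-?-----",
    "SQUIRE": "-+?+--+++",
}

for guideline, ratings in TABLE_1.items():
    if len(ratings) != len(DOMAINS):
        raise ValueError(f"{guideline}: expected {len(DOMAINS)} ratings")
    # Only "+" counts as addressed; "?" is treated as not addressed.
    print(f"{guideline}: {ratings.count('+')} of {len(DOMAINS)} domains")

# Domains that no guideline clearly addresses.
uncovered = [
    domain for i, domain in enumerate(DOMAINS)
    if all(ratings[i] != "+" for ratings in TABLE_1.values())
]
print(uncovered)  # ['Scalability', 'Pragmatic Criteria']
```

The tally shows CReDECI and SQUIRE each clearly covering 5 of 9 domains, and no guideline clearly covering scalability or pragmatic criteria.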

Our conclusion is that existing reporting guidelines most applicable to D&I researchers and peer reviewers do not address or lack precision around several essential D&I elements of our framework, so further exploration of D&I-specific reporting guidelines with EQUATOR methodology is warranted.

The next step in evaluating the value of distinct D&I reporting guidelines will be to contact the author groups for the existing guidelines summarized in Table 1 to ascertain plans for future or ongoing extensions to their guidelines into the realm of D&I research, with the assistance of the EQUATOR network. In addition, several other groups are already working on additional reporting guidelines that may be applicable to D&I research. The Standards for Reporting Phase IV Implementation Studies initiative in the United Kingdom seeks to improve the quality of reporting of implementation studies in real-life settings.103 In addition, the Reporting Manualised Interventions for Dissemination and Evaluation statement,104 Template for Intervention Description and Replication,105 and CONSORT extension for social and psychosocial interventions106 are all in development. These author groups will be contacted to assess the degree of overlap between their reporting guidelines and our D&I framework.

One limitation of our approach is that the expert panel consisted mainly of US-based scientists. We have therefore likely missed some key points from knowledge translation, knowledge exchange, and D&I research in a global context. In addition, the “underused” and “lacking” labels are based on expert judgment rather than on a systematic review of the literature.

Whether to proceed with developing distinct D&I reporting guidelines based on our framework depends on a more in-depth assessment of the benefits of a separate guideline weighed against the pragmatic threat of “guideline fatigue.” A major benefit of distinct D&I reporting guidelines is the opportunity to define the key elements of D&I research, along with their associated measures, explicitly and in 1 article. The major barrier to reporting these measures is the lack of practical, validated, well-accepted instruments and analytic approaches. However, 2 steps should enhance the scope and value of D&I research: highlighting the need for such tools, and strengthening guidelines so that they require future D&I studies to attempt to assess these elements.

Acknowledgments

This work was partially funded by the National Cancer Institute, project 1P20 CA137219 (B. R.). J. M. Grimshaw holds a Canada Research Chair in Health Knowledge Transfer and Uptake.

We would like to thank David A. Bergman, MD, Melissa C. Brouwers, PhD, Amanda Cash, DrPH, David Chambers, DPhil, Robert M. Kaplan, PhD, Cara C. Lewis, PhD, Robert McNellis, MPH, Brian Mittman, PhD, Mary Northridge, PhD, Wynne E. Norton, PhD, Marcel Salive, MD, Kurt C. Stange, MD, PhD, Pimjai Sudsawad, ScD, Jonathan Tobin, PhD, Cynthia Vinson, PhD, and Nina Wallerstein, DrPH, who were all members of the expert working group on reporting and measurement issues in D&I research, for their contribution to the development of this framework. Their expertise, perspective, and collegiality were invaluable for formulating the basis of this article.

Human Participant Protection

Institutional review board approval was not needed because there were no human participants.

References

- 1. Riley WT, Glasgow RE, Etheredge L, Abernethy AP. Rapid, responsive, relevant (R3) research: a call for a rapid learning health research enterprise. Clin Transl Med. 2013;2(1):10. doi: 10.1186/2001-1326-2-10.

- 2. Balas EA, Boren SA. Managing Clinical Knowledge for Health Care Improvement: Yearbook of Medical Informatics. Stuttgart, Germany: Schattauer; 2000.

- 3. Glasgow RE, Emmons KM. How can we increase translation of research into practice? Types of evidence needed. Annu Rev Public Health. 2007;28:413–433. doi: 10.1146/annurev.publhealth.28.021406.144145.

- 4. How far have we come in reducing health disparities? Progress since 2000: workshop summary. Washington, DC: Institute of Medicine; 2012.

- 5. Peters DH, Adam T, Alonge O, Agyepong IA, Tran N. Implementation research: what it is and how to do it. BMJ. 2013;347:f6753. doi: 10.1136/bmj.f6753.

- 6. Brownson RC, Colditz GA, Proctor EK. Dissemination and Implementation Research in Health: Translating Science to Practice. 1st ed. New York, NY: Oxford University Press; 2012.

- 7. Glasgow RE, Vinson C, Chambers D, Khoury MJ, Kaplan RM, Hunter C. National Institutes of Health approaches to dissemination and implementation science: current and future directions. Am J Public Health. 2012;102(7):1274–1281. doi: 10.2105/AJPH.2012.300755.

- 8. Kolko DJ, Hoagwood KE, Springgate B. Treatment research for children and youth exposed to traumatic events: moving beyond efficacy to amp up public health impact. Gen Hosp Psychiatry. 2010;32(5):465–476. doi: 10.1016/j.genhosppsych.2010.05.003.

- 9. Rothwell PM. External validity of randomised controlled trials: “to whom do the results of this trial apply?” Lancet. 2005;365(9453):82–93. doi: 10.1016/S0140-6736(04)17670-8.

- 10. Pinnock H, Epiphaniou E, Taylor SJ. Phase IV implementation studies. The forgotten finale to the complex intervention methodology framework. Ann Am Thorac Soc. 2014;11(suppl 2):S118–S122. doi: 10.1513/AnnalsATS.201308-259RM.

- 11. EQUATOR Network: Enhancing the QUAlity and Transparency Of health Research. 2013. Available at: http://www.equator-network.org. Accessed January 30, 2014.

- 12. Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43(3):337–350. doi: 10.1016/j.amepre.2012.05.024.

- 13. Wilson PM, Petticrew M, Calnan MW, Nazareth I. Disseminating research findings: what should researchers do? A systematic scoping review of conceptual frameworks. Implement Sci. 2010;5:91. doi: 10.1186/1748-5908-5-91.

- 14. Sales A, Smith J, Curran G, Kochevar L. Models, strategies, and tools. Theory in implementing evidence-based findings into health care practice. J Gen Intern Med. 2006;21(suppl 2):S43–S49. doi: 10.1111/j.1525-1497.2006.00362.x.

- 15. Mitchell SA, Fisher CA, Hastings CE, Silverman LB, Wallen GR. A thematic analysis of theoretical models for translational science in nursing: mapping the field. Nurs Outlook. 2010;58(6):287–300. doi: 10.1016/j.outlook.2010.07.001.

- 16. Khoury MJ, Gwinn M, Ioannidis JP. The emergence of translational epidemiology: from scientific discovery to population health impact. Am J Epidemiol. 2010;172(5):517–524. doi: 10.1093/aje/kwq211.

- 17. Klesges LM, Estabrooks PA, Dzewaltowski DA, Bull SS, Glasgow RE. Beginning with the application in mind: designing and planning health behavior change interventions to enhance dissemination. Ann Behav Med. 2005;29(suppl):66–75. doi: 10.1207/s15324796abm2902s_10.

- 18. Brownson RC, Jacobs JA, Tabak RG, Hoehner CM, Stamatakis KA. Designing for dissemination among public health researchers: findings from a national survey in the United States. Am J Public Health. 2013;103(9):1693–1699. doi: 10.2105/AJPH.2012.301165.

- 19. Rogers EM. Diffusion of Innovations. 5th ed. New York, NY: Free Press; 2003.

- 20. Rabin BA, Brownson RC, Haire-Joshu D, Kreuter MW, Weaver NL. A glossary for dissemination and implementation research in health. J Public Health Manag Pract. 2008;14(2):117–123. doi: 10.1097/01.PHH.0000311888.06252.bb.

- 21. McLeroy KR, Bibeau D, Steckler A, Glanz K. An ecological perspective on health promotion programs. Health Educ Q. 1988;15(4):351–377. doi: 10.1177/109019818801500401.

- 22. Stokols D. Social ecology and behavioral medicine: implications for training, practice, and policy. Behav Med. 2000;26(3):129–138. doi: 10.1080/08964280009595760.

- 23. Glasgow RE, Green LW, Klesges LM et al. External validity: we need to do more. Ann Behav Med. 2006;31(2):105–108. doi: 10.1207/s15324796abm3102_1.

- 24. Green LW, Glasgow RE. Evaluating the relevance, generalization, and applicability of research: issues in external validation and translation methodology. Eval Health Prof. 2006;29(1):126–153. doi: 10.1177/0163278705284445.

- 25. Stange KC, Breslau ES, Dietrich AJ, Glasgow RE. State-of-the-art and future directions in multilevel interventions across the cancer control continuum. J Natl Cancer Inst Monogr. 2012;2012(44):20–31. doi: 10.1093/jncimonographs/lgs006.

- 26. McDonald KM. Considering context in quality improvement interventions and implementation: concepts, frameworks, and application. Acad Pediatr. 2013;13(6, suppl):S45–S53. doi: 10.1016/j.acap.2013.04.013.

- 27. McKinlay JB. Paradigmatic obstacles to improving the health of populations—implications for health policy. Salud Publica Mex. 1998;40(4):369–379. doi: 10.1590/s0036-36341998000400010.

- 28. Weiner BJ, Belden CM, Bergmire DM, Johnston M. The meaning and measurement of implementation climate. Implement Sci. 2011;6:78. doi: 10.1186/1748-5908-6-78.

- 29. Tomoaia-Cotisel A, Scammon DL, Waitzman NJ et al. Context matters: the experience of 14 research teams in systematically reporting contextual factors important for practice change. Ann Fam Med. 2013;11(suppl 1):S115–S123. doi: 10.1370/afm.1549.

- 30. Stange KC, Glasgow RE. Considering and reporting important contextual factors in research on the patient-centered medical home. Rockville, MD: Agency for Healthcare Research and Quality; 2013.

- 31. Bayliss EA, Bonds DE, Boyd CM et al. Understanding the context of health for people with multiple chronic conditions: moving from what is the matter to what matters. Ann Fam Med. 2014;12(3):260–269. doi: 10.1370/afm.1643.

- 32. Klassen AC, Creswell J, Plano Clark VL, Smith KC, Meissner HI. Best practices in mixed methods for quality of life research. Qual Life Res. 2012;21(3):377–380. doi: 10.1007/s11136-012-0122-x.

- 33. Glasgow RE, Chambers D. Developing robust, sustainable, implementation systems using rigorous, rapid and relevant science. Clin Transl Sci. 2012;5(1):48–55. doi: 10.1111/j.1752-8062.2011.00383.x.

- 34. Fleurence R, Selby JV, Odom-Walker K et al. How the Patient-Centered Outcomes Research Institute is engaging patients and others in shaping its research agenda. Health Aff (Millwood). 2013;32(2):393–400. doi: 10.1377/hlthaff.2012.1176.

- 35. Krumholz HM, Selby JV; Patient-Centered Outcomes Research Institute. Seeing through the eyes of patients: the Patient-Centered Outcomes Research Institute funding announcements. Ann Intern Med. 2012;157(6):446–447. doi: 10.7326/0003-4819-157-6-201209180-00519.

- 36. Selby JV. The Patient-Centered Outcomes Research Institute: a 2013 agenda for “research done differently.” Popul Health Manag. 2013;16(2):69–70. doi: 10.1089/pop.2013.1621.

- 37. Selby JV, Beal AC, Frank L. The Patient-Centered Outcomes Research Institute (PCORI) national priorities for research and initial research agenda. JAMA. 2012;307(15):1583–1584. doi: 10.1001/jama.2012.500.

- 38. Ritzwoller DP, Sukhanova A, Gaglio B, Glasgow RE. Costing behavioral interventions: a practical guide to enhance translation. Ann Behav Med. 2009;37(2):218–227. doi: 10.1007/s12160-009-9088-5.

- 39. O’Keeffe-Rosetti MC, Hornbrook MC, Fishman PA et al. A standardized relative resource cost model for medical care: application to cancer control programs. J Natl Cancer Inst Monogr. 2013;2013(46):106–116. doi: 10.1093/jncimonographs/lgt002.

- 40. Gold MR, Siegel JE, Russell LB, Weinstein MC. Cost-Effectiveness in Health and Medicine. New York, NY: Oxford University Press; 2003.

- 41. Bartholomew LK, Parcel GS, Kok G, Gottlieb NH, Fernández ME. Planning Health Promotion Programs: An Intervention Mapping Approach. 3rd ed. San Francisco, CA: Jossey-Bass; 2011.

- 42. Michie S, Fixsen D, Grimshaw JM, Eccles MP. Specifying and reporting complex behaviour change interventions: the need for a scientific method. Implement Sci. 2009;4:40. doi: 10.1186/1748-5908-4-40.

- 43. French SD, Green SE, O’Connor DA et al. Developing theory-informed behaviour change interventions to implement evidence into practice: a systematic approach using the Theoretical Domains Framework. Implement Sci. 2012;7:38. doi: 10.1186/1748-5908-7-38.

- 44. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139. doi: 10.1186/1748-5908-8-139.

- 45. Leviton LC, Khan LK, Rog D, Dawkins N, Cotton D. Evaluability assessment to improve public health policies, programs, and practices. Annu Rev Public Health. 2010;31:213–233. doi: 10.1146/annurev.publhealth.012809.103625.

- 46. Wholey J. Assessing the feasibility and likely usefulness of evaluation. In: Wholey J, Hatry H, Newcomer K, editors. Handbook of Practical Program Evaluation. 2nd ed. San Francisco, CA: Jossey-Bass; 2004. pp. 33–62.

- 47. Lomas J. Diffusion, dissemination, and implementation: who should do what? Ann N Y Acad Sci. 1993;703:226–235; discussion 235–237. doi: 10.1111/j.1749-6632.1993.tb26351.x.

- 48. Hawe P, Shiell A, Riley T. Complex interventions: how “out of control” can a randomised controlled trial be? BMJ. 2004;328(7455):1561–1563. doi: 10.1136/bmj.328.7455.1561.

- 49. Allen JD, Linnan LA, Emmons KM. Fidelity and its relationship to implementation effectiveness, adaptation, and dissemination. In: Brownson RC, Colditz G, Proctor E, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York, NY: Oxford University Press; 2012. pp. 281–304.

- 50. Stirman SW, Miller CJ, Toder K, Calloway A. Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implement Sci. 2013;8:65. doi: 10.1186/1748-5908-8-65.

- 51. Bellg AJ, Borrelli B, Resnick B et al. Enhancing treatment fidelity in health behavior change studies: best practices and recommendations from the NIH Behavior Change Consortium. Health Psychol. 2004;23(5):443–451. doi: 10.1037/0278-6133.23.5.443.

- 52. Squires JE, Valentine JC, Grimshaw JM. Systematic reviews of complex interventions: framing the review question. J Clin Epidemiol. 2013;66(11):1215–1222. doi: 10.1016/j.jclinepi.2013.05.013.

- 53. Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. 2013;103(6):e38–e46. doi: 10.2105/AJPH.2013.301299.

- 54. Kessler RS, Purcell EP, Glasgow RE, Klesges LM, Benkeser RM, Peek CJ. What does it mean to “employ” the RE-AIM model? Eval Health Prof. 2013;36(1):44–66. doi: 10.1177/0163278712446066.

- 55. Powell AA, White KM, Partin MR et al. Unintended consequences of implementing a national performance measurement system into local practice. J Gen Intern Med. 2012;27(4):405–412. doi: 10.1007/s11606-011-1906-3.

- 56. Naylor MD, Kurtzman ET, Grabowski DC, Harrington C, McClellan M, Reinhard SC. Unintended consequences of steps to cut readmissions and reform payment may threaten care of vulnerable older adults. Health Aff (Millwood). 2012;31(7):1623–1632. doi: 10.1377/hlthaff.2012.0110.

- 57. Peters E, Klein W, Kaufman A, Meilleur L, Dixon A. More is not always better: intuitions about effective public policy can lead to unintended consequences. Soc Issues Policy Rev. 2013;7(1):114–148. doi: 10.1111/j.1751-2409.2012.01045.x.

- 58. Lehmann U, Gilson L. Actor interfaces and practices of power in a community health worker programme: a South African study of unintended policy outcomes. Health Policy Plan. 2013;28(4):358–366. doi: 10.1093/heapol/czs066.

- 59. Kaplan RM, Bush JW. Health-related quality of life measurement for evaluation research and policy analysis. Health Psychol. 1982;1(1):61–80.

- 60. Kaplan RM. Quality of life measurement. In: Karoly P, editor. Measurement Strategies in Health Psychology. New York, NY: Wiley & Sons; 1985.

- 61. Collins CD, Purohit S, Podolsky RH et al. The application of genomic and proteomic technologies in predictive, preventive and personalized medicine. Vascul Pharmacol. 2006;45(5):258–267. doi: 10.1016/j.vph.2006.08.003.

- 62. Kaiser J. Francis Collins interview. Departing U.S. Genome Institute director takes stock of personalized medicine. Science. 2008;320(5881):1272. doi: 10.1126/science.320.5881.1272.

- 63. Hamburg MA, Collins FS. The path to personalized medicine. N Engl J Med. 2010;363(4):301–304. doi: 10.1056/NEJMp1006304.

- 64. Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review—a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy. 2005;10(suppl 1):21–34. doi: 10.1258/1355819054308530.

- 65. Thorpe KE, Zwarenstein M, Oxman AD et al. A Pragmatic-Explanatory Continuum Indicator Summary (PRECIS): a tool to help trial designers. CMAJ. 2009;180(10):E47–E57. doi: 10.1503/cmaj.090523.

- 66. Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci. 2013;8:117. doi: 10.1186/1748-5908-8-117.

- 67. Scheirer MA, Dearing JW. An agenda for research on the sustainability of public health programs. Am J Public Health. 2011;101(11):2059–2067. doi: 10.2105/AJPH.2011.300193.

- 68. Hartzler B, Lash SJ, Roll JM. Contingency management in substance abuse treatment: a structured review of the evidence for its transportability. Drug Alcohol Depend. 2012;122(1-2):1–10. doi: 10.1016/j.drugalcdep.2011.11.011.

- 69. Bernstein E, Topp D, Shaw E et al. A preliminary report of knowledge translation: lessons from taking screening and brief intervention techniques from the research setting into regional systems of care. Acad Emerg Med. 2009;16(11):1225–1233. doi: 10.1111/j.1553-2712.2009.00516.x.

- 70. Schoenwald SK, Sheidow AJ, Letourneau EJ, Liao JG. Transportability of multisystemic therapy: evidence for multilevel influences. Ment Health Serv Res. 2003;5(4):223–239. doi: 10.1023/a:1026229102151.

- 71. Ioannidis JP. Why most published research findings are false. PLoS Med. 2005;2(8):e124. doi: 10.1371/journal.pmed.0020124.

- 72. Prasad V, Vandross A, Toomey C et al. A decade of reversal: an analysis of 146 contradicted medical practices. Mayo Clin Proc. 2013;88(8):790–798. doi: 10.1016/j.mayocp.2013.05.012.

- 73. Murray E, Treweek S, Pope C et al. Normalisation process theory: a framework for developing, evaluating and implementing complex interventions. BMC Med. 2010;8:63. doi: 10.1186/1741-7015-8-63.

- 74. Proctor E, Silmere H, Raghavan R et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7.

- 75. Glasgow RE, Klesges LM, Dzewaltowski DA, Estabrooks PA, Vogt TM. Evaluating the impact of health promotion programs: using the RE-AIM framework to form summary measures for decision making involving complex issues. Health Educ Res. 2006;21(5):688–694. doi: 10.1093/her/cyl081.

- 76. Haug NA, Shopshire M, Tajima B, Gruber V, Guydish J. Adoption of evidence-based practices among substance abuse treatment providers. J Drug Educ. 2008;38(2):181–192. doi: 10.2190/DE.38.2.f.

- 77. Henggeler SW, Chapman JE, Rowland MD et al. Statewide adoption and initial implementation of contingency management for substance-abusing adolescents. J Consult Clin Psychol. 2008;76(4):556–567. doi: 10.1037/0022-006X.76.4.556.

- 78. Greenhalgh T. Role of routines in collaborative work in healthcare organisations. BMJ. 2008;337:a2448. doi: 10.1136/bmj.a2448.

- 79. Cochrane A. Effectiveness and Efficiency: Random Reflections on Health Services. London, England: Nuffield Provincial Hospital Trust; 1972.

- 80. Chambers D. Foreword. In: Brownson R, Colditz G, Proctor E, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York, NY: Oxford University Press; 2012. pp. vii–x.

- 81. Altman DG, Simera I, Hoey J, Moher D, Schulz K. EQUATOR: reporting guidelines for health research. Open Med. 2008;2(2):e49–e50.

- 82. Moher D, Simera I, Schulz KF, Hoey J, Altman DG. Helping editors, peer reviewers and authors improve the clarity, completeness and transparency of reporting health research. BMC Med. 2008;6:13. doi: 10.1186/1741-7015-6-13.

- 83. Schulz KF, Altman DG, Moher D; CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMC Med. 2010;8:18. doi: 10.1186/1741-7015-8-18.

- 84. Bossuyt PM, Reitsma JB, Bruns DE et al. The STARD statement for reporting studies of diagnostic accuracy: explanation and elaboration. Ann Intern Med. 2003;138(1):W1–W12. doi: 10.7326/0003-4819-138-1-200301070-00012-w1.

- 85. von Elm E, Altman DG, Egger M et al. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. Ann Intern Med. 2007;147(8):573–577. doi: 10.7326/0003-4819-147-8-200710160-00010.

- 86. Davidoff F, Batalden P, Stevens D, Ogrinc G, Mooney S; SQUIRE Development Group. Publication guidelines for improvement studies in health care: evolution of the SQUIRE Project. Ann Intern Med. 2008;149(9):670–676. doi: 10.7326/0003-4819-149-9-200811040-00009.

- 87. Husereau D, Drummond M, Petrou S et al. Consolidated Health Economic Evaluation Reporting Standards (CHEERS) statement. BMJ. 2013;346:f1049. doi: 10.1136/bmj.f1049.

- 88. Gagnier JJ, Kienle G, Altman DG et al. The CARE guidelines: consensus-based clinical case report guideline development. J Clin Epidemiol. 2014;67(1):46–51. doi: 10.1016/j.jclinepi.2013.08.003.

- 89. Stroup DF, Berlin JA, Morton SC et al. Meta-analysis of observational studies in epidemiology: a proposal for reporting. Meta-analysis Of Observational Studies in Epidemiology (MOOSE) group. JAMA. 2000;283(15):2008–2012. doi: 10.1001/jama.283.15.2008.

- 90. Moher D, Liberati A, Tetzlaff J, Altman DG; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–269, W264. doi: 10.7326/0003-4819-151-4-200908180-00135.

- 91. Ioannidis JP, Evans SJ, Gotzsche PC et al. Better reporting of harms in randomized trials: an extension of the CONSORT statement. Ann Intern Med. 2004;141(10):781–788. doi: 10.7326/0003-4819-141-10-200411160-00009.

- 92. Piaggio G, Elbourne DR, Altman DG, Pocock SJ, Evans SJ; CONSORT Group. Reporting of noninferiority and equivalence randomized trials: an extension of the CONSORT statement. JAMA. 2006;295(10):1152–1160. doi: 10.1001/jama.295.10.1152.

- 93. Campbell MK, Elbourne DR, Altman DG; CONSORT Group. CONSORT statement: extension to cluster randomised trials. BMJ. 2004;328(7441):702–708. doi: 10.1136/bmj.328.7441.702.

- 94. Zwarenstein M, Treweek S, Gagnier JJ et al. Improving the reporting of pragmatic trials: an extension of the CONSORT statement. BMJ. 2008;337:a2390. doi: 10.1136/bmj.a2390.

- 95. Gagnier JJ, Boon H, Rochon P et al. Reporting randomized, controlled trials of herbal interventions: an elaborated CONSORT statement. Ann Intern Med. 2006;144(5):364–367. doi: 10.7326/0003-4819-144-5-200603070-00013.

- 96. Boutron I, Moher D, Altman DG, Schulz KF, Ravaud P; CONSORT Group. Methods and processes of the CONSORT Group: example of an extension for trials assessing nonpharmacologic treatments. Ann Intern Med. 2008;148(4):W60–W66.

- 97. Simera I, Moher D, Hirst A, Hoey J, Schulz KF, Altman DG. Transparent and accurate reporting increases reliability, utility, and impact of your research: reporting guidelines and the EQUATOR Network. BMC Med. 2010;8:24. doi: 10.1186/1741-7015-8-24.

- 98. Tao KM, Li XQ, Zhou QH, Moher D, Ling CQ, Yu WF. From QUOROM to PRISMA: a survey of high-impact medical journals’ instructions to authors and a review of systematic reviews in anesthesia literature. PLoS ONE. 2011;6(11):e27611. doi: 10.1371/journal.pone.0027611.

- 99. Albrecht L, Archibald M, Arseneau D, Scott SD. Development of a checklist to assess the quality of reporting of knowledge translation interventions using the Workgroup for Intervention Development and Evaluation Research (WIDER) recommendations. Implement Sci. 2013;8:52. doi: 10.1186/1748-5908-8-52.

- 100. Welch V, Petticrew M, Tugwell P et al. PRISMA-Equity 2012 extension: reporting guidelines for systematic reviews with a focus on health equity. PLoS Med. 2012;9(10):e1001333. doi: 10.1371/journal.pmed.1001333.

- 101. Möhler R, Bartoszek G, Meyer G. Quality of reporting of complex healthcare interventions and applicability of the CReDECI list—a survey of publications indexed in PubMed. BMC Med Res Methodol. 2013;13(1):125. doi: 10.1186/1471-2288-13-125.

- 102. Loudon K, Zwarenstein M, Sullivan F, Donnan P, Treweek S. Making clinical trials more relevant: improving and validating the PRECIS tool for matching trial design decisions to trial purpose. Trials. 2013;14:115. doi: 10.1186/1745-6215-14-115.

- 103. Pinnock H, Taylor S, Epiphaniou E. Developing standards for reporting phase IV implementation studies. 2013. Available at: http://www.equator-network.org/library/reporting-guidelines-under-development/#17. Accessed December 13, 2013.

- 104. Slade M. REporting Manualised INterventions for Dissemination and Evaluation (REMINDE) Statement. 2013. Available at: http://www.equator-network.org/library/reporting-guidelines-under-development/#6. Accessed December 13, 2013.

- 105. Hoffmann TC, Glasziou PP, Boutron I et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687. doi: 10.1136/bmj.g1687.

- 106. Montgomery P, Grant S, Hopewell S et al. Protocol for CONSORT-SPI: an extension for social and psychological interventions. Implement Sci. 2013;8(1):99. doi: 10.1186/1748-5908-8-99.