Abstract

Abnormal reinforcement learning and representations of reward value are present in schizophrenia, and these impairments can manifest as deficits in risk/reward decision making. These abnormalities may be due in part to dopaminergic dysfunction within cortico-limbic-striatal circuitry. Evidence from studies with laboratory animals has revealed that normal dopamine (DA) activity within different nodes of these circuits is critical for mediating dissociable processes that refine decision biases. Moreover, phasic and tonic DA transmission appear to play separate yet complementary roles in these processes. Tonic DA release within the prefrontal cortex and nucleus accumbens appears to serve as a “running rate-meter” of reward and reflects contextual information such as reward uncertainty and overt choice behavior. On the other hand, manipulations of outcome-related phasic DA bursts and dips suggest that these signals provide rapid feedback that allows choice to be adjusted quickly as reward contingencies change. The lateral habenula, a key input to the DA system that regulates phasic DA signals, is necessary for expressing subjective decision biases; suppression of activity within this nucleus leads to catastrophic impairments in decision making and random patterns of choice. Because schizophrenia is characterized by impairments in using positive and negative feedback to guide decision making appropriately, these findings suggest that such deficits may be mediated, at least in part, by abnormalities in both tonic and phasic DA transmission.

Key words: dopamine, decision making, phasic, tonic, habenula

Introduction

Despite the common portrayal of schizophrenia as a disorder marked primarily by hallucinations and delusions, these positive symptoms are often accompanied by debilitating negative symptoms and cognitive deficits.1 Among the numerous domains of cognition, affect, and motivation perturbed in this disease, there has been growing interest in impairments in reinforcement learning and representations of reward value that may manifest as an inability to make appropriate cost/benefit decisions involving uncertainty or risk.2–4 For example, patients with schizophrenia perform worse than healthy controls on laboratory tests of probabilistic decision making.4–6 These deficits are accompanied by altered patterns of activation in distributed systems known to regulate these functions, including prefrontal and orbitofrontal cortical regions, the nucleus accumbens (NAc), and the amygdala. Although pathophysiological changes within these regions likely contribute to impairments in value representation and decision making, it is notable that all of them receive dense dopamine (DA) innervation.7–12 As such, clarifying how different nodes within the dopaminergic system contribute to different aspects of risk/reward decision making may provide insight into how dysfunction within these circuits relates to the abnormalities in reward processing and decision making observed in this disorder.

A Distributed DA Circuit Mediates Risk/Reward Decision Making

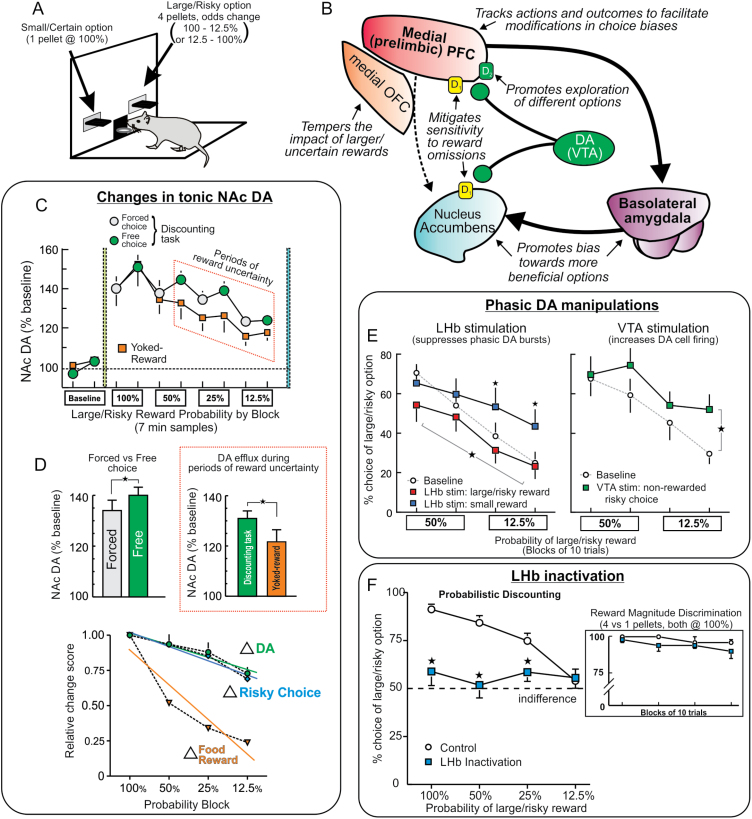

Early indications that alterations in DA transmission may interfere with risk/reward decision making came from clinical reports of pathological gambling emerging in patients receiving dopamine agonist therapy for Parkinson’s disease and restless legs syndrome.13,14 This effect may be related to the ability of these treatments to increase sensitivity to positive feedback at the expense of learning from negative outcomes.15 Similarly, Rogers et al.16 found that individuals who abused amphetamine displayed impairments on a laboratory-based gambling task. Nearly a decade later, preclinical studies began to characterize the role of DA in risky decision making in more detail, exploiting the greater experimental control afforded by animal models. A number of assays have been developed to assess risk/reward decision making in rodents, and work by our group has employed a probabilistic discounting procedure wherein rats choose between a small/certain reward (1 pellet) and a large/risky reward (4 pellets) (figure 1A). During each daily session, the probability of obtaining the larger reward changes systematically over blocks of free-choice trials (from 100% to 12.5%, or vice versa), and rats must keep track of their choices and outcomes to determine when it is more profitable to choose the risky option and when to choose more conservatively.
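Because the large/risky option pays 4 pellets with probability p while the small/certain option always pays 1 pellet, the expected value of the risky option is 4p, so the two options break even at p = 0.25. The Python sketch below makes that arithmetic explicit. It assumes intermediate block probabilities of 50% and 25% between the 100% and 12.5% end points named above; these values and the function name `expected_values` are illustrative assumptions, not a description of the authors' analysis code.

```python
# Illustrative parameters. The 1- and 4-pellet magnitudes and the 100%/12.5%
# end points come from the text; the 50% and 25% blocks are assumptions.
SMALL_CERTAIN = 1.0  # pellets, delivered on every choice
LARGE_RISKY = 4.0    # pellets, delivered with probability p
BLOCK_PROBS = [1.0, 0.5, 0.25, 0.125]


def expected_values(probs, small=SMALL_CERTAIN, large=LARGE_RISKY):
    """Return (p, EV_risky, EV_certain, better_option) for each block."""
    rows = []
    for p in probs:
        ev_risky = p * large   # expected pellets per risky choice
        ev_certain = small     # certain option always pays out
        if ev_risky > ev_certain:
            better = "risky"
        elif ev_risky < ev_certain:
            better = "certain"
        else:
            better = "equal"
        rows.append((p, ev_risky, ev_certain, better))
    return rows


for p, ev_r, ev_c, better in expected_values(BLOCK_PROBS):
    print(f"p={p:.3f}  EV risky={ev_r:.2f}  EV certain={ev_c:.2f}  -> {better}")
```

With these assumed values, the risky option is advantageous in the 100% and 50% blocks, neither option is favored at 25%, and the certain option is better at 12.5%, which is why choosing profitably requires tracking the changing odds across the session.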

Fig. 1.

(A) Depiction of the probabilistic discounting task used to assess risk/reward decision making in rodents. (B) Summary of the dissociable functions of cortical, limbic, and striatal nodes within DA circuitry in regulating probabilistic discounting, as inferred from inactivation and circuit disconnection studies. (C) Fluctuations in tonic levels of NAc DA (measured with microdialysis) associated with risk/reward decision making. Gray and green circles correspond to DA levels obtained during the forced- and free-choice portions of each probability block, and squares represent data from animals that received rewards delivered passively on a yoked schedule. The highlighted region indicates the portion of the discounting task associated with the greatest reward uncertainty. Adapted from St. Onge et al.30 (D) Tonic DA within the NAc was higher during free-choice vs forced-choice periods of the task (left), and during periods of greater uncertainty during decision making relative to rats receiving food on a yoked schedule (right). Changes in tonic NAc DA also corresponded closely to changes in choice behavior, but not to reward availability (bottom). (E) Suppression of outcome-related phasic DA signals via LHb stimulation altered choice biases. LHb stimulation during receipt of larger or smaller rewards (left) shifted bias toward the alternative option. Conversely, inducing phasic bursts in DA activity after non-rewarded risky choices (via VTA stimulation, right) increased risky choice. Adapted from Stopper et al.43 (F) Inactivation of the LHb induced a massive disruption in decision making, causing rats to be indifferent between the options irrespective of their relative value. However, this manipulation did not affect simpler decisions between smaller vs larger certain rewards of equal cost (inset). Adapted from Stopper and Floresco.44 In all panels, stars denote significant differences at P < 0.05.

Initial studies using systemic treatment with dopaminergic drugs revealed a key role for DA transmission in refining choice between uncertain and certain rewards.17 Increasing DA activity with amphetamine impaired the updating of decision biases when reward probabilities changed. Amphetamine-treated rats chose the risky option more often when the odds of obtaining the larger reward were initially high and then decreased, and showed the opposite effect (less risky choice) when reward probabilities were initially low and increased over the session. Thus, abnormal increases in DA activity appear to “lock in” choice biases, impairing the use of information about whether recent actions were rewarded (or not) to estimate changes in the relative profitability of different options and to modify decision biases accordingly. Conversely, blockade of either D1 or D2 receptors decreased risky choice, in keeping with a considerable literature indicating that normal DA tone promotes choice of larger, more costly rewards.17–19

Subsequent studies using discrete inactivation of different brain nuclei revealed that different component processes of risk/reward decision making are handled by distributed circuits (summarized in figure 1B). Amygdala inputs to the NAc promote choice of larger, uncertain rewards.7,9,10 However, these drives are tempered by different regions of the orbitofrontal cortex (OFC) and the medial prelimbic prefrontal cortex (PFC; homologous to anterior cingulate area 32), which act as a brake on these impulses and refine choice biases when reward probabilities change.7–10,20–22

DA within forebrain terminal regions further refines action selection during risk/reward decision making. D1 receptors in the medial PFC and NAc mitigate sensitivity to non-rewarded actions: their blockade reduces risky choice by increasing lose-shift behavior (switching to the certain option after a non-rewarded risky choice).11,12 Moreover, stimulation of D1 receptors in the NAc optimized decision making, increasing risky choice when it was advantageous and shifting bias toward the small/certain option as the utility of the large/uncertain option declined. Thus, activity at these receptors promotes exploitation of potentially more profitable options despite their uncertainty and helps a decision maker keep its “eye on the prize,” maintaining choice biases even when choices do not always yield reward. In comparison, PFC D2 receptors appear to promote exploration of different options in response to changes in reward probabilities, as blockade or stimulation of these receptors led to more static patterns of choice. Interestingly, D2 receptor manipulation within the NAc did not alter choice behavior on this task. This lack of effect may be related to findings that D2-expressing striatal neurons appear to mediate avoidance behaviors.23,24 Given that the task used in our studies did not employ any explicit punishments, it is possible that NAc D2 receptors play a more prominent role in refining decision biases in situations where certain actions yield more preferred rewards but also deliver aversive consequences.25–27
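Lose-shift behavior is typically quantified trial by trial from the sequence of free choices and their outcomes. The Python sketch below shows one common way to compute win-stay and lose-shift ratios; the function name and the decision to score only risky choices are illustrative assumptions, not the authors' analysis pipeline. In this framing, the increase in lose-shift behavior after D1 blockade corresponds to a larger fraction of non-rewarded risky choices being followed by a switch to the certain option.

```python
from typing import Sequence, Tuple


def win_stay_lose_shift(choices: Sequence[str],
                        rewarded: Sequence[bool]) -> Tuple[float, float]:
    """Return (win-stay, lose-shift) ratios for risky choices.

    choices[t] is "risky" or "certain"; rewarded[t] says whether trial t paid out.
    win-stay   = P(risky on t+1 | risky and rewarded on t)
    lose-shift = P(certain on t+1 | risky and not rewarded on t)
    """
    if len(choices) != len(rewarded):
        raise ValueError("choices and rewarded must have the same length")
    wins = stays = losses = shifts = 0
    for t in range(len(choices) - 1):
        if choices[t] != "risky":
            continue  # only risky choices are scored in this sketch
        if rewarded[t]:
            wins += 1
            stays += choices[t + 1] == "risky"      # bool counts as 0 or 1
        else:
            losses += 1
            shifts += choices[t + 1] == "certain"
    win_stay = stays / wins if wins else float("nan")
    lose_shift = shifts / losses if losses else float("nan")
    return win_stay, lose_shift


# Hypothetical six-trial sequence
choices = ["risky", "risky", "certain", "risky", "risky", "certain"]
rewarded = [True, False, True, False, True, True]
print(win_stay_lose_shift(choices, rewarded))
```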

Tonic and Phasic Dopamine Transmission

DA signaling (at least within striatal regions) is compartmentalized into distinct modes of transmission. Relatively low concentrations of extrasynaptic or “tonic” DA fluctuate on a slower timescale (seconds to minutes), whereas rapid “phasic” signals are driven by burst firing of DA neurons.28,29 Our psychopharmacological manipulations indiscriminately target both modes of DA transmission, making it difficult to ascertain the respective contribution of each signal to choice behavior. Although means to selectively disrupt tonic DA signals are not currently available, recent neurochemical studies have provided insight into their potential role in these processes. Using in vivo microdialysis, St. Onge et al.30 measured changes in tonic DA in rats performing a probabilistic discounting task. Within the medial PFC (mPFC), tonic DA levels were higher during periods when animals received greater amounts of reward. This profile was observed both in rats that earned their rewards during the course of decision making and in rats that received rewards delivered passively on a yoked schedule. Thus, dynamic fluctuations in mesocortical DA efflux convey information about changes in the relative amount of reward available and may serve as a “running rate-meter” of reward that the PFC could use to direct choice accordingly. In comparison, changes in tonic NAc DA during decision making were more complex (figure 1C). NAc DA varied as a function of the amount of reward received over time, yet also appeared to encode other key factors. These included whether animals had to make a choice or merely respond to obtain reward, how often they chose the risky option, and the relative amount of uncertainty associated with those choices (figure 1D). Thus, fluctuations in tonic NAc DA signals seem to reflect an integration of various types of information used to guide decision making.
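One simple way to formalize a “running rate-meter” is a leaky integrator, that is, an exponentially weighted moving average of reward per trial. The Python sketch below is a hypothetical illustration, not a model fitted to the microdialysis data: the time constant `tau`, the function name, and the reward sequence are assumptions. It shows how such a signal rises and falls across blocks with different reward densities, while any single outcome shifts the estimate by only a fraction (1/`tau`) of the discrepancy between that outcome and the current estimate.

```python
from typing import Iterable, List


def running_reward_rate(rewards: Iterable[float], tau: float = 10.0) -> List[float]:
    """Leaky-integrator ("running rate-meter") estimate of reward per trial.

    tau is a hypothetical time constant in trials; larger values average
    over a longer reward history.
    """
    alpha = 1.0 / tau
    rate = 0.0
    trace = []
    for r in rewards:
        # Move the estimate a fraction alpha of the way toward the latest outcome.
        rate += alpha * (r - rate)
        trace.append(rate)
    return trace


# Hypothetical session: 20 trials paying 4 pellets, then 20 trials paying nothing.
trace = running_reward_rate([4.0] * 20 + [0.0] * 20)
print(round(trace[19], 2), round(trace[39], 2))
```

Because the estimate integrates over many trials, a single unexpected outcome has a modest effect, in line with the idea that tonic signals provide a longer-term accounting of reward history.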

It is well established that spatially restricted phasic DA signals encode reward prediction errors during reward-related associative learning. DA neurons show phasic bursts of activity in response to unexpected rewards or the cues that predict them, and display a brief suppression in firing (“dips”) when expected rewards are not received.31 More recently, fast-scan cyclic voltammetry measurements of subsecond changes in DA concentration have shown that phasic DA release in the NAc also encodes outcome-related information in more complex situations involving choices between certain and uncertain rewards.32 These studies revealed that receiving reward after a particular choice elicited phasic increases in NAc DA that were greater in magnitude when the reward was larger and uncertain. In contrast, non-rewarded risky choices caused phasic dips in NAc DA. In addition, increases in phasic DA occurred prior to a choice, with these signals encoding the expected availability of a larger or more preferred reward.
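In its textbook form, the prediction error is simply the obtained reward minus the expected reward. The Python sketch below applies that definition to the discounting task under the simplifying assumption that expected reward equals probability × magnitude; the function names and probabilities are illustrative. It is a simplification that, for instance, assigns no error at all to a fully predicted certain reward, but it captures the sign pattern described above: positive errors after unexpected risky wins and negative errors (“dips”) after risky losses.

```python
def expected_value(prob: float, magnitude: float) -> float:
    """Expected reward under the simplifying assumption EV = p * magnitude."""
    return prob * magnitude


def prediction_error(reward: float, expected: float) -> float:
    """Reward prediction error: obtained minus expected reward."""
    return reward - expected


# Small/certain option: 1 pellet with probability 1, so delivery is fully predicted.
print("certain, rewarded:", prediction_error(1.0, expected_value(1.0, 1.0)))

# Large/risky option: 4 pellets at two hypothetical reward probabilities.
for p in (0.5, 0.25):
    v = expected_value(p, 4.0)
    print(f"risky p={p}: rewarded {prediction_error(4.0, v):+.1f}, "
          f"omitted {prediction_error(0.0, v):+.1f}")
```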

It has been known for some time that phasic bursts of DA neuron activity are driven by excitatory inputs to the midbrain arising from regions such as the pedunculopontine nucleus and prefrontal cortex.33–35 More recent work has identified an inhibitory circuit, incorporating the lateral habenula (LHb) and the recently discovered rostromedial tegmental nucleus (RMTg), that drives phasic dips in DA neuron firing.36–38 Stimulation of the LHb induces brief, γ-aminobutyric acid (GABA)-mediated inhibition of midbrain DA neuron firing,39,40 an effect subsequently found to be relayed through a disynaptic circuit via the RMTg. The LHb sends glutamatergic projections to the RMTg, which in turn sends dense GABAergic projections onto DA neurons in the ventral tegmental area (VTA).41,42 LHb and RMTg neurons encode reward prediction errors in a manner opposite to that of DA neurons, showing increased activity in response to punishment or reward omission and reduced firing in response to reward or reward-predictive cues.36–38

The finding that LHb stimulation suppresses DA neuron activity inspired us to use this approach as a tool to manipulate and override outcome-related and pre-choice phasic DA signals, and thereby ascertain their influence over risk/reward decision making.43 We observed that brief (200–400 ms) outcome-contingent stimulation of the LHb markedly influenced the direction of choice. Temporally specific LHb stimulation, delivered precisely when a risky or certain choice was rewarded (ie, periods associated with increased phasic DA), caused animals to behave as if they had not received those rewards, redirecting their bias toward the other option (figure 1E, left). Conversely, activation of DA neurons via VTA stimulation when a risky choice was not rewarded (an outcome that normally causes phasic dips in DA firing) increased bias for the risky option by decreasing sensitivity to negative feedback (figure 1E, right). Suppression of pre-choice DA signals also affected behavior. LHb stimulation prior to action selection appeared to reduce the incentive salience of the reward-associated levers, as evidenced by increased response latencies. More intriguingly, it also reduced selection of the more preferred of the two options, most prominently when rats normally preferred the larger reward. Together with the microdialysis data described above, these findings suggest that phasic and tonic DA signaling play separate yet complementary roles that may form a system of reward checks and balances. Outcome-related phasic DA signals provide rapid feedback on whether or not recent actions were beneficial, increasing or decreasing the likelihood that those options are selected again. In turn, pre-choice DA signals promote the expression of preferences for more desirable options. On the other hand, slower fluctuations in tonic DA may provide a longer-term accounting of reward histories and average expected utility so that individual outcomes are not overemphasized, ensuring that ongoing decision making proceeds in an efficient, adaptive, and more rational manner.
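The logic of these outcome-contingent manipulations can be illustrated with a toy prediction-error learner. This Python sketch is not the authors' model: the learning rate `alpha`, the inverse temperature `beta`, the fixed override values (`stim_delta`), the hypothetical `stim_on` switch, and the alternating outcome sequence are all assumptions, and only rewards from the risky option are targeted (the experiments also stimulated after rewarded certain choices). Replacing the positive error after rewarded risky choices with a negative one drives the risky option's value below that of the certain option, whereas replacing the negative error after omissions with a positive one inflates it.

```python
import math
from typing import Optional, Sequence


def p_choose_risky(q_risky: float, q_certain: float, beta: float = 1.0) -> float:
    """Logistic (two-option softmax) choice rule."""
    return 1.0 / (1.0 + math.exp(-beta * (q_risky - q_certain)))


def learn_risky_value(outcomes: Sequence[float], q0: float = 2.0, alpha: float = 0.2,
                      stim_on: Optional[str] = None, stim_delta: float = 0.0) -> float:
    """Update the risky option's value with a prediction-error rule.

    stim_on is a hypothetical switch: "reward" replaces the error on rewarded
    trials (a stand-in for outcome-contingent LHb stimulation); "omission"
    replaces it on non-rewarded trials (a stand-in for VTA stimulation).
    """
    q = q0
    for r in outcomes:
        delta = r - q
        if (stim_on == "reward" and r > 0) or (stim_on == "omission" and r == 0):
            delta = stim_delta  # override the natural teaching signal
        q += alpha * delta
    return q


outcomes = [4.0, 0.0] * 10  # hypothetical 50% block, alternating for determinism
Q_CERTAIN = 1.0             # value of the 1-pellet certain option
conditions = [
    ("control", {}),
    ("LHb-like on rewards", {"stim_on": "reward", "stim_delta": -1.0}),
    ("VTA-like on omissions", {"stim_on": "omission", "stim_delta": 1.0}),
]
for label, kwargs in conditions:
    q = learn_risky_value(outcomes, **kwargs)
    print(f"{label:>22}: Q_risky={q:.2f}  P(risky)={p_choose_risky(q, Q_CERTAIN):.2f}")
```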

The pronounced influence that LHb stimulation exerted over action selection prompted us to investigate the normal contribution of this nucleus to these processes by assessing how its inactivation affected various forms of cost/benefit decision making.44 Inactivation caused a catastrophic disruption in the ability of animals to make a decision, rendering them indifferent as to which option was preferable and inducing unbiased, random patterns of choice, but only when larger rewards were associated with some form of cost (figure 1F). This massive effect suggests that differential LHb signals, encoding the expectation or occurrence of negative/positive events, are crucial for helping an organism make up its mind when faced with ambiguous decisions about the costs and benefits of different actions. Activity within this nucleus aids in biasing behavior away from a point of indifference toward committing to choices that may yield outcomes perceived as more beneficial. Integration of differential LHb reward/aversion signals by downstream targets such as the RMTg and DA neurons may set a tone that is crucial for the expression of preferences for one course of action over another. In turn, suppression of these signals would be expected to leave phasic (and tonic) DA signaling in disarray, rendering a decision maker incapable of determining which option may be “better,” and highlighting the importance of these signals in guiding ongoing reward seeking.
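One way to picture the indifference produced by LHb inactivation is through a logistic choice rule in which an inverse-temperature parameter `beta` sets how strongly the value difference between options biases choice. In the hypothetical Python sketch below (block probabilities, `beta` values, and the function name are assumptions), setting `beta` to 0 flattens the choice curve to 50% in every block regardless of relative value, qualitatively resembling figure 1F. This is an analogy rather than a claim about mechanism, and it does not capture the finding that simpler decisions between certain rewards were spared.

```python
import math
from typing import List

BLOCK_PROBS = [1.0, 0.5, 0.25, 0.125]  # assumed block probabilities
LARGE, SMALL = 4.0, 1.0                # pellets


def choice_curve(beta: float) -> List[float]:
    """P(choose large/risky) in each block under a logistic choice rule.

    beta scales how strongly the value difference biases choice; beta = 0 is
    a hypothetical stand-in for losing the signal that differentiates options.
    """
    curve = []
    for p in BLOCK_PROBS:
        value_diff = p * LARGE - SMALL  # expected-value advantage of the risky option
        curve.append(1.0 / (1.0 + math.exp(-beta * value_diff)))
    return curve


print("intact:     ", [round(x, 2) for x in choice_curve(beta=2.0)])
print("indifferent:", [round(x, 2) for x in choice_curve(beta=0.0)])
```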

Implications for Schizophrenia

Dysfunction in DA transmission is a central feature of schizophrenia, yet its precise contribution to different aspects of the disease symptomatology is often debated. Psychotic symptoms appear to arise from a hyperdopaminergic state, likely driven by increased phasic activity in subcortical regions.1,28 Conversely, cognitive symptoms appear to be correlated with perturbations in prefrontal functioning that include reduced dopaminergic activity.1 Our findings may provide some insight into how aberrant DA signaling contributes to the impairments in reinforcement learning and decision making that are pervasive in the disorder. For example, patients with schizophrenia show deficits in learning from both positive and negative feedback and in using this information to adapt choices on a trial-by-trial basis.3,45–47 Our findings that manipulation of phasic DA signaling interferes with action selection during risk/reward decision making demonstrate that these signals play a crucial role in updating ongoing reward-seeking behavior. Disruptions in phasic DA signaling (as may occur in schizophrenia)28 would therefore be expected to lead to aberrant reinforcement learning. Hyperactivity within the DA system may attenuate the dips in firing associated with non-rewarded events and impair learning from negative feedback. Conversely, persistent increases in DA tone could also diminish the impact that reward-related phasic bursts exert over downstream targets of this system, which in turn may reduce the influence that rewarded actions exert on subsequent action selection.
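A common way to formalize separate learning from positive and negative feedback in computational reinforcement learning is to give positive and negative prediction errors distinct learning rates. The Python sketch below is a hypothetical illustration of the first scenario above: lowering the loss learning rate (`alpha_loss`) stands in for attenuated dips. The parameter values, the function name, and the fixed outcome order are assumptions. With blunted learning from omissions, the learned value of a 4-pellet option rewarded 12.5% of the time (expected value 0.5 pellets) ends up above the 1-pellet certain option, whereas intact learning keeps it below.

```python
from typing import Sequence


def learn_value(outcomes: Sequence[float], q0: float = 1.0,
                alpha_gain: float = 0.2, alpha_loss: float = 0.2) -> float:
    """Prediction-error learning with separate rates for positive and negative errors."""
    q = q0
    for r in outcomes:
        delta = r - q
        alpha = alpha_gain if delta > 0 else alpha_loss
        q += alpha * delta
    return q


# Hypothetical 12.5% block for the 4-pellet option, presented in a fixed order:
# one reward followed by seven omissions, repeated five times.
outcomes = ([4.0] + [0.0] * 7) * 5
q_intact = learn_value(outcomes)
q_blunted_dips = learn_value(outcomes, alpha_loss=0.05)  # weaker learning from losses
print(round(q_intact, 2), round(q_blunted_dips, 2))
```

The second scenario (blunted impact of reward-related bursts) can be explored with the same function by lowering `alpha_gain` instead.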

The finding that the LHb regulates phasic DA activity and plays a crucial role in mediating subjective decision biases may also be relevant to schizophrenia. Although information on how habenular pathology may relate to schizophrenia is sparse, one post-mortem study revealed increased calcification in this nucleus in the brains of patients with schizophrenia48 (but see reference 49). In addition, lesions of the LHb in animals disrupt attention, cognition, and social behaviors in a manner that resembles what is observed in the disease.50,51 In a preliminary neuroimaging study, increased activation of this nucleus was observed in healthy controls following receipt of unexpected negative feedback or the absence of expected positive feedback.52 However, patients with schizophrenia did not display normal feedback-related modulation of habenular activity, suggesting that dysfunction in this nucleus may also contribute to aberrant reinforcement learning and disorganized patterns of phasic DA activity.

Our focus on DA transmission is not meant to imply that perturbations in this system are the singular or even primary pathophysiology underlying the impairments in reinforcement learning and decision making observed in schizophrenia. For example, schizophrenia has long been associated with reductions in both excitatory glutamatergic and, in particular, inhibitory GABAergic transmission within the PFC.53–57 These neurochemical abnormalities would be expected to produce a cortical cacophony of abnormal activity within the frontal lobes. Impairments in certain prefrontal functions (such as working memory) arising from these abnormalities may contribute to the reinforcement learning impairments observed in schizophrenia.58 On the other hand, the PFC sends direct and indirect projections to the DA system.59,60 As such, haphazard signal outflow from the PFC to DA neurons may be another driving force behind aberrant phasic DA activity. Indeed, reducing PFC GABA transmission increases phasic DA activity, similar to what is observed in the disorder.53 Thus, one challenge for future studies exploring the mechanisms underlying impairments in reinforcement learning and decision making in schizophrenia is to isolate how abnormal phasic signaling contributes to these impairments, and to determine whether it is a fundamental cause or a reflection of abnormal patterns of activity in regions upstream of DA neurons.

Acknowledgments

The authors have declared that there are no conflicts of interest in relation to the subject of this study.

Funding

Canadian Institutes of Health Research (MOP 89861) to S.B.F.

References

- 1. Abi-Dargham A. Do we still believe in the dopamine hypothesis? New data bring new evidence. Int J Neuropsychopharmacol. 2004;7:S1–S5.

- 2. Gold JM, Waltz JA, Prentice KJ, Morris SE, Heerey EA. Reward processing in schizophrenia: a deficit in the representation of value. Schizophr Bull. 2008;34:835–847.

- 3. Cicero DC, Martin EA, Becker TM, Kerns JG. Reinforcement learning deficits in people with schizophrenia persist after extended trials. Psychiatry Res. Published online ahead of print August 15, 2014. pii: S0165-1781(14)00709-4. doi:10.1016/j.psychres.2014.08.013.

- 4. Strauss GP, Waltz JA, Gold JM. A review of reward processing and motivational impairment in schizophrenia. Schizophr Bull. 2014;40:S107–S116.

- 5. Rausch F, Mier D, Eifler S, et al. Reduced activation in ventral striatum and ventral tegmental area during probabilistic decision-making in schizophrenia. Schizophr Res. 2014;156:143–149.

- 6. Krug A, Cabanis M, Pyka M, et al. Attenuated prefrontal activation during decision-making under uncertainty in schizophrenia: a multi-center fMRI study. Schizophr Res. 2014;152:176–183.

- 7. Stopper CM, Floresco SB. Contributions of the nucleus accumbens and its subregions to different aspects of risk-based decision making. Cogn Affect Behav Neurosci. 2011;11:97–112.

- 8. St Onge JR, Floresco SB. Prefrontal cortical contribution to risk-based decision making. Cereb Cortex. 2010;20:1816–1828.

- 9. Ghods-Sharifi S, St Onge JR, Floresco SB. Fundamental contribution by the basolateral amygdala to different forms of decision making. J Neurosci. 2009;29:5251–5259.

- 10. St Onge JR, Stopper CM, Zahm DS, Floresco SB. Separate prefrontal-subcortical circuits mediate different components of risk-based decision making. J Neurosci. 2012;32:2886–2899.

- 11. St Onge JR, Abhari H, Floresco SB. Dissociable contributions by prefrontal D1 and D2 receptors to risk-based decision making. J Neurosci. 2011;31:8625–8633.

- 12. Stopper CM, Khayambashi S, Floresco SB. Receptor-specific modulation of risk-based decision making by nucleus accumbens dopamine. Neuropsychopharmacology. 2013;38:715–728.

- 13. Gallagher DA, O’Sullivan SS, Evans AH, Lees AJ, Schrag A. Pathological gambling in Parkinson’s disease: risk factors and differences from dopamine dysregulation. An analysis of published case series. Mov Disord. 2007;22:1757–1763.

- 14. Quickfall J, Suchowersky O. Pathological gambling associated with dopamine agonist use in restless legs syndrome. Parkinsonism Relat Disord. 2007;13:535–536.

- 15. Frank MJ, Seeberger LC, O’Reilly RC. By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science. 2004;306:1940–1943.

- 16. Rogers RD, Everitt BJ, Baldacchino A, et al. Dissociable deficits in the decision-making cognition of chronic amphetamine abusers, opiate abusers, patients with focal damage to prefrontal cortex, and tryptophan-depleted normal volunteers: evidence for monoaminergic mechanisms. Neuropsychopharmacology. 1999;20:322–339.

- 17. St Onge JR, Floresco SB. Dopaminergic modulation of risk-based decision making. Neuropsychopharmacology. 2009;34:681–697.

- 18. Salamone JD, Correa M, Farrar A, Mingote SM. Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits. Psychopharmacology (Berl). 2007;191:461–482.

- 19. Floresco SB, St Onge JR, Ghods-Sharifi S, Winstanley CA. Cortico-limbic-striatal circuits subserving different forms of cost-benefit decision making. Cogn Affect Behav Neurosci. 2008;8:375–389.

- 20. Stopper CM, Green EB, Floresco SB. Selective involvement by the medial orbitofrontal cortex in biasing risky, but not impulsive, choice. Cereb Cortex. 2014;24:154–162.

- 21. Kuhnen CM, Knutson B. The neural basis of financial risk taking. Neuron. 2005;47:763–770.

- 22. Bechara A, Damasio AR, Damasio H, Anderson SW. Insensitivity to future consequences following damage to human prefrontal cortex. Cognition. 1994;50:7–15.

- 23. Kravitz AV, Tye LD, Kreitzer AC. Distinct roles for direct and indirect pathway striatal neurons in reinforcement. Nat Neurosci. 2012;15:816–818.

- 24. Lobo DS, Souza RP, Tong RP, et al. Association of functional variants in the dopamine D2-like receptors with risk for gambling behaviour in healthy Caucasian subjects. Biol Psychol. 2010;85:33–37.

- 25. Simon NW, Montgomery KS, Beas BS, et al. Dopaminergic modulation of risky decision-making. J Neurosci. 2011;31:17460–17470.

- 26. Simon NW, Beas BS, Montgomery KS, Haberman RP, Bizon JL, Setlow B. Prefrontal cortical-striatal dopamine receptor mRNA expression predicts distinct forms of impulsivity. Eur J Neurosci. 2013;37:1779–1788.

- 27. Mitchell MR, Weiss VG, Beas BS, Morgan D, Bizon JL, Setlow B. Adolescent risk taking, cocaine self-administration, and striatal dopamine signaling. Neuropsychopharmacology. 2014;39:955–962.

- 28. Grace AA, Floresco SB, Goto Y, Lodge DJ. Regulation of firing of dopaminergic neurons and control of goal-directed behaviors. Trends Neurosci. 2007;30:220–227.

- 29. Floresco SB. Dopaminergic regulation of limbic-striatal interplay. J Psychiatry Neurosci. 2007;32:400–411.

- 30. St Onge JR, Ahn S, Phillips AG, Floresco SB. Dynamic fluctuations in dopamine efflux in the prefrontal cortex and nucleus accumbens during risk-based decision making. J Neurosci. 2012;32:16880–16891.

- 31. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599.

- 32. Sugam JA, Day JJ, Wightman RM, Carelli RM. Phasic nucleus accumbens dopamine encodes risk-based decision-making behavior. Biol Psychiatry. 2012;71:199–205.

- 33. Floresco SB, West AR, Ash B, Moore H, Grace AA. Afferent modulation of dopamine neuron firing differentially regulates tonic and phasic dopamine transmission. Nat Neurosci. 2003;6:968–973.

- 34. Lodge DJ, Grace AA. The laterodorsal tegmentum is essential for burst firing of ventral tegmental area dopamine neurons. Proc Natl Acad Sci U S A. 2006;103:5167–5172.

- 35. Murase S, Grenhoff J, Chouvet G, Gonon FG, Svensson TH. Prefrontal cortex regulates burst firing and transmitter release in rat mesolimbic dopamine neurons studied in vivo. Neurosci Lett. 1993;157:53–56.

- 36. Matsumoto M, Hikosaka O. Lateral habenula as a source of negative reward signals in dopamine neurons. Nature. 2007;447:1111–1115.

- 37. Matsumoto M, Hikosaka O. Representation of negative motivational value in the primate lateral habenula. Nat Neurosci. 2009;12:77–84.

- 38. Hong S, Jhou TC, Smith M, Saleem KS, Hikosaka O. Negative reward signals from the lateral habenula to dopamine neurons are mediated by rostromedial tegmental nucleus in primates. J Neurosci. 2011;31:11457–11471.

- 39. Christoph GR, Leonzio RJ, Wilcox KS. Stimulation of the lateral habenula inhibits dopamine-containing neurons in the substantia nigra and ventral tegmental area of the rat. J Neurosci. 1986;6:613–619.

- 40. Ji H, Shepard PD. Lateral habenula stimulation inhibits rat midbrain dopamine neurons through a GABA(A) receptor-mediated mechanism. J Neurosci. 2007;27:6923–6930.

- 41. Jhou TC, Fields HL, Baxter MG, Saper CB, Holland PC. The rostromedial tegmental nucleus (RMTg), a GABAergic afferent to midbrain dopamine neurons, encodes aversive stimuli and inhibits motor responses. Neuron. 2009;61:786–800.

- 42. Jhou TC, Geisler S, Marinelli M, Degarmo BA, Zahm DS. The mesopontine rostromedial tegmental nucleus: a structure targeted by the lateral habenula that projects to the ventral tegmental area of Tsai and substantia nigra compacta. J Comp Neurol. 2009;513:566–596.

- 43. Stopper CM, Tse MT, Montes DR, Wiedman CR, Floresco SB. Overriding phasic dopamine signals redirects action selection during risk/reward decision making. Neuron. 2014;84:177–189.

- 44. Stopper CM, Floresco SB. What’s better for me? Fundamental role for lateral habenula in promoting subjective decision biases. Nat Neurosci. 2014;17:33–35.

- 45. Vogel SJ, Strauss GP, Allen DN. Using negative feedback to guide behavior: impairments on the first 4 cards of the Wisconsin Card Sorting Test predict negative symptoms of schizophrenia. Schizophr Res. 2013;151:97–101.

- 46. Frank MJ. Schizophrenia: a computational reinforcement learning perspective. Schizophr Bull. 2008;34:1008–1011.

- 47. Waltz JA, Frank MJ, Robinson BM, Gold JM. Selective reinforcement learning deficits in schizophrenia support predictions from computational models of striatal-cortical dysfunction. Biol Psychiatry. 2007;62:756–764.

- 48. Sandyk R. Pineal and habenula calcification in schizophrenia. Int J Neurosci. 1992;67:19–30.

- 49. Ranft K, Dobrowolny H, Krell D, Bielau H, Bogerts B, Bernstein HG. Evidence for structural abnormalities of the human habenular complex in affective disorders but not in schizophrenia. Psychol Med. 2010;40:557–567.

- 50. Lecourtier L, Neijt HC, Kelly PH. Habenula lesions cause impaired cognitive performance in rats: implications for schizophrenia. Eur J Neurosci. 2004;19:2551–2560.

- 51. van Kerkhof LW, Damsteegt R, Trezza V, Voorn P, Vanderschuren LJ. Functional integrity of the habenula is necessary for social play behaviour in rats. Eur J Neurosci. 2013;38:3465–3475.

- 52. Shepard PD, Holcomb HH, Gold JM. Schizophrenia in translation: the presence of absence: habenular regulation of dopamine neurons and the encoding of negative outcomes. Schizophr Bull. 2006;32:417–421.

- 53. Enomoto T, Tse MT, Floresco SB. Reducing prefrontal gamma-aminobutyric acid activity induces cognitive, behavioral, and dopaminergic abnormalities that resemble schizophrenia. Biol Psychiatry. 2011;69:432–441.

- 54. Lewis DA, Volk DW, Hashimoto T. Selective alterations in prefrontal cortical GABA neurotransmission in schizophrenia: a novel target for the treatment of working memory dysfunction. Psychopharmacology (Berl). 2004;174:143–150.

- 55. Benes FM, Berretta S. GABAergic interneurons: implications for understanding schizophrenia and bipolar disorder. Neuropsychopharmacology. 2001;25:1–27.

- 56. Coyle JT. Glutamate and schizophrenia: beyond the dopamine hypothesis. Cell Mol Neurobiol. 2006;26:365–384.

- 57. Javitt DC. Glutamate and schizophrenia: phencyclidine, N-methyl-D-aspartate receptors, and dopamine-glutamate interactions. Int Rev Neurobiol. 2007;78:69–108.

- 58. Collins AG, Brown JK, Gold JM, Waltz JA, Frank MJ. Working memory contributions to reinforcement learning impairments in schizophrenia. J Neurosci. 2014;34:13747–13756.

- 59. Carr DB, Sesack SR. Projections from the rat prefrontal cortex to the ventral tegmental area: target specificity in the synaptic associations with mesoaccumbens and mesocortical neurons. J Neurosci. 2000;20:3864–3873.

- 60. Omelchenko N, Sesack SR. Glutamate synaptic inputs to ventral tegmental area neurons in the rat derive primarily from subcortical sources. Neuroscience. 2007;146:1259–1274.