Abstract

Purpose

We examined whether school-age children with a history of specific language impairment (H-SLI), their typically developing (TD) peers, and adults differ in sensitivity to audiovisual temporal asynchrony and whether any such difference stems from the sensory encoding of audiovisual information.

Method

Fifteen H-SLI children, 15 TD children, and 15 adults judged whether a flashed explosion-shaped figure and a 2 kHz pure tone occurred simultaneously. The stimuli were presented at temporal offsets of 0, 100, 200, 300, 400, and 500 ms. This task was combined with EEG recordings.

Results

H-SLI children were profoundly less sensitive to temporal separations between auditory and visual modalities than their TD peers. Those H-SLI children who performed better at simultaneity judgment also had higher language aptitude. TD children were less accurate than adults, revealing a remarkably prolonged developmental course of audiovisual temporal discrimination. Analysis of early ERP components suggested that poor sensory encoding was not a key factor in H-SLI children’s reduced sensitivity to audiovisual asynchrony.

Conclusions

Audiovisual temporal discrimination is impaired in H-SLI children and is still immature during mid-childhood in TD children. The present findings highlight the need for further evaluation of the role of atypical audiovisual processing in the development of SLI.

1. Introduction

A growing body of literature suggests that visual speech cues play an important role in typical language acquisition and speech perception. For example, the presence of a talker’s face has been shown to enhance infants’ ability to learn phoneme boundaries (Teinonen, Aslin, Alku, & Csibra, 2008) and new words (Hollich, Newman, & Jusczyk, 2005). It also significantly improves the understanding of speech-in-noise in both children and adults (Barutchu et al., 2010; Sumby & Pollack, 1954). The facilitative effect of visual cues on auditory perception is especially strong in individuals with hearing impairment. Thus, prelingually deaf children with cochlear implants understand speech better when it is audiovisual as compared to auditory only (Bergeson, Pisoni, & Davis, 2005). The degree of such benefit depends on the age of implantation, underlining the significance of audiovisual stimulation during early childhood (Schorr, Fox, van Wassenhove, & Knudsen, 2005).

The importance of visual speech cues during language acquisition is further bolstered by the findings that infants learn to pay attention to the mouth of the speaker during the first year of life. For example, 4–8 month old infants fixate on the mouth, rather than on the eyes, of a talker, presumably to benefit from redundant visual cues while learning the phonemes of their native language (Lewkowicz & Hansen-Tift, 2012). In fact, infants appear to be so acutely sensitive to articulatory movements that they are able to tell two different languages apart based on visual cues alone (Weikum et al., 2007). Paying attention to visual speech cues continues to be important during adulthood. A recent study by Yi and colleagues (Yi, Wong, & Eizenman, 2013) showed that as speech-to-noise ratio decreases, adults fixate their gaze significantly more on the speaker’s mouth in order to maintain good speech perception.

Several behavioral and electrophysiological studies have employed the so-called McGurk illusion in order to investigate how early in development visual information can influence auditory speech perception (Burnham & Dodd, 2004; Kushnerenko, Teinonen, Volein, & Csibra, 2008; Rosenblum, Schmuckler, & Johnson, 1997). The McGurk illusion is perceived when visual and auditory cues to phoneme identity are in conflict with each other. Although the exact stimuli used vary from study to study, it is common to dub the auditory “pa” onto the visual articulation of “ka,” with the resulting perception being that of “ta” if the merger of auditory and visual cues takes place. Such studies have demonstrated that infants as young as 4.5–5 months of age are in fact influenced by visual information and perceive the McGurk illusion. A study by Bristow and colleagues, using a different paradigm, examined audiovisual processing in even younger infants – just 10 weeks of age – and showed that infants integrate speech-related auditory and visual information during early stages of perception, similar to adults (Bristow et al., 2008).

Although a substantial amount of audiovisual processing is present early in development, as is evidenced by the above studies, the ability to fully integrate different senses and thus to gain the most benefit from multisensory information may have a protracted developmental course. For example, multiple studies have reported a reduced susceptibility to the McGurk illusion in children compared to adults and suggested that children are overall less influenced by visual information during auditory perception (e.g., Massaro, 1984; Massaro, Thompson, Barron, & Lauren, 1986; McGurk & MacDonald, 1976). In agreement with these behavioral reports, a neuroimaging study by Dick and colleagues has shown that the functional connectivity between brain regions involved in audiovisual speech perception differs significantly between school-age children and adults, indicating that audiovisual integration may not yet be mature by mid-childhood (Dick, Solodkin, & Small, 2010). This conclusion is also supported by an electrophysiological study by Brandwein and colleagues, who reported that multisensory facilitation on a simple reaction time task and the accompanying reduction in the amplitude of the N1 ERP component to multisensory stimuli do not reach adult levels until approximately 14 years of age (Brandwein et al., 2011).

Despite the accumulating evidence for the importance of audiovisual processing in language acquisition, very little is known about a relationship between audiovisual processing and language aptitude. If visual speech cues facilitate language acquisition, then we would expect that those children who are good audiovisual integrators should also be effective language learners. On the other hand, a breakdown in efficient audiovisual processing may be expected to delay or disrupt at least some aspects of language acquisition and thus, hypothetically, contribute to the development of language disorders. A few studies have examined this issue by focusing on children with specific language impairment (SLI) (Boliek, Keintz, Norrix, & Obrzut, 2010; Hayes, Tiippana, Nicol, Sams, & Kraus, 2003; Meronen, Tiippana, Westerholm, & Ahonen, 2013; Norrix, Plante, & Vance, 2006; Norrix, Plante, Vance, & Boliek, 2007). SLI is a language disorder that is associated with significant linguistic difficulties in the absence of hearing impairment, frank neurological disorders, or low non-verbal intelligence (Leonard, 2014). Although it is typically diagnosed in early childhood, many school-age children with a history of SLI who no longer make overt grammatical errors continue to lag behind their peers in language aptitude (e.g., Hesketh & Conti-Ramsden, 2013), revealing the long-term nature of this disorder.

In all studies of audiovisual processing in SLI to date, the McGurk paradigm was used, and the authors reported the amount of fused McGurk perceptions and visual captures (e.g., hearing “ka” when the stimulus consisted of a visual “ka” combined with an auditory “pa”) in SLI children compared to their TD peers. Although as a whole these studies point to significant differences in the processing of audiovisual speech between SLI and TD children, specific findings and their interpretations vary from study to study. Most studies report a reduced number of fused McGurk percepts in children with language impairment (e.g., Boliek et al., 2010; Hayes et al., 2003; Norrix et al., 2007), with one exception being the study by Meronen and colleagues (Meronen et al., 2013). Findings on the amount of visual capture also differ. Hayes and colleagues reported that compared to TD children, children with language-learning difficulties showed significantly more instances of visual capture at low signal-to-noise ratios, which suggested that they relied primarily on visual information when responding (Hayes et al., 2003). On the other hand, a recent study by Meronen and colleagues showed the opposite effect, with a lower rate of visual capture in SLI compared to TD children under the most difficult listening conditions, concluding that children with SLI have weak lip-reading skills (Meronen et al., 2013).

1.1 Audiovisual Temporal Discrimination

The reason for differences in the processing of audiovisual speech between SLI and TD children remains unclear. One possibility is that the McGurk stimulus, with its conflicting auditory and visual information about the identity of the phoneme, may present a challenge to the SLI population whose phonological awareness is thought to be weaker than that of TD children (e.g., Catts, 1993). However, the problem may also stem from difficulty in detecting temporal correspondences between auditory and visual information. Extensive research in the area of audiovisual integration shows that a temporal alignment between different sensory modalities is one of the key factors determining a successful merger of the senses (e.g., Meredith, Nemitz, & Stein, 1987). A reduced sensitivity to temporal discrepancies in audiovisual stimuli might, therefore, be a factor in poor audiovisual integration in SLI.

Individuals perceive audiovisual stimuli as synchronous not only when the two modalities are perfectly aligned in time, but also when they are separated by relatively short temporal periods of several tens to several hundreds of milliseconds (Bushara, Grafman, & Hallett, 2001; Dixon & Spitz, 1980; Grant, van Wassenhove, & Poeppel, 2004; McGrath & Summerfield, 1985; Petrini, Dahl, et al., 2009; Petrini, Halt, & Pollick, 2010; Petrini, Russell, & Pollick, 2009; Van Wassenhove, Grant, & Poeppel, 2007; Vatakis & Spence, 2006; for a review, see also Vroomen & Keetels, 2010; Vroomen & Stekelenburg, 2009; Vroomen & Stekelenburg, 2011). This temporal window of integration is significantly larger in infants but shortens with development (Lewkowicz, 1996, 2003, 2010; Pons, Lewkowicz, Soto-Faraco, & Sebastián-Gallés, 2009). However, the majority of studies on temporal audiovisual processing have been conducted with adults, and its maturational course is yet to be fully mapped. In two recent pioneering studies, Hillock-Dunn and colleagues examined audiovisual temporal discrimination in several groups of school-age children and in adults using a simultaneity judgment task (Hillock-Dunn & Wallace, 2012; Hillock, Powers, & Wallace, 2011). They reported that both children (6–11 years of age) and adolescents (12–17 years of age) were significantly more likely than adults to identify audiovisual stimuli separated by medium to large offsets as synchronous, pointing to a protracted developmental course of the audiovisual temporal function.

Temporal perception in children with SLI has been studied in great detail, and a deficit in the temporal discrimination mechanism has even been suggested as one of the main causes of this disorder (e.g., Tallal, Stark, & Mellits, 1985). However, almost all studies in this area used a single modality (mostly auditory) and focused primarily on the effect of stimulus brevity and the speed of presentation on auditory perception. To the best of our knowledge, only a few studies examined multimodal temporal discrimination in SLI. Tallal and colleagues (Tallal et al., 1985) reported that performance on sequencing cross-modal non-verbal stimuli with short inter-stimulus intervals (ISI) was one of six variables that, when taken together, correctly identified 98% of children with SLI in their sample as language-impaired. Short ISIs ranged from 8 ms to 305 ms (Tallal, 1980), and whether all were equally predictive of group membership is unknown. Because in Tallal’s studies children were asked to reproduce the order in which stimuli appeared, it is difficult to determine whether children were unable to perceive the correct sequence of stimuli or unable to maintain it in their working memory. A study by Grondin and colleagues (Grondin et al., 2007) tested 6–9 year old children with SLI and their age-matched TD peers on two temporal order judgment tests. In one, children had to report which of two flashes, on the left or on the right, appeared first. In another, children indicated whether the flash or the tone appeared first. The authors found that children with SLI performed significantly worse than their TD peers on both tasks; however, they did especially poorly on the bimodal task. Here, the SLI group’s accuracy remained close to chance level, even when separations between the onsets of the flash and the tone were as large as 420 ms. However, the stimuli used by Grondin and colleagues were only 5 ms in duration. 
Because children with SLI are known to have difficulty with very short stimuli (Leonard, McGregor, & Allen, 1992), their performance might have reflected the struggle to efficiently process brief sounds and flashes, rather than a failure to determine their temporal alignment.

Lastly, a recent study by Pons and colleagues (Pons, Andreu, Sanz-Torrent, Buil-Legaz, & Lewkowicz, 2013) compared the ability of 4–7 year old Spanish-speaking children with and without SLI to detect audiovisual temporal asynchrony in speech by evaluating how long children fixated on each of two simultaneously presented video clips. In one video clip, the audio and video portions were always synchronized, while in another they were offset by either 366 ms or 666 ms and presented with either the audio leading the video (the AV condition) or the video leading the audio (the VA condition). The authors assumed that if children can tell the difference between synchronous and asynchronous videos, they would prefer to fixate on the synchronous one. They found that neither group of children was able to detect speech asynchrony at the 366 ms offset. Further, while TD children detected the asynchrony at the 666 ms offset regardless of whether the audio preceded or followed the video, the SLI children detected temporal asynchrony only in the AV condition thus displaying a reduced ability to perceive audiovisual temporal asynchrony in speech.

1.2 Current Study

Taken together, the above studies suggest that children with language learning difficulties may in fact be impaired at detecting temporal correspondences between auditory and visual information; however, a more definitive evaluation with stimuli that do not place significant demands on either sensory or cognitive processing is needed. Additionally, the reason behind potentially worse audiovisual temporal discrimination in SLI remains elusive. Such measures as reaction time and accuracy represent the end result of multiple sensory and cognitive stages of processing and thus cannot identify the mechanism or mechanisms responsible for atypical audiovisual temporal function in SLI. One possibility is that children with SLI are impaired at detecting onsets of auditory and visual stimuli and encoding their early sensory properties when such stimuli occur in close temporal proximity. However, the difficulty may also stem from later cognitive processes, such as making an overt judgment about the temporal coincidence of two modalities. In order to understand the nature of neural processes that differ between TD and SLI children during audiovisual temporal discrimination, we combined a simultaneity judgment task (SJT) with event-related potential (ERP) recordings. ERPs are produced by large assemblies of post-synaptic neurons that fire in synchrony in response to a stimulus and are recorded on the scalp surface. This technique has exquisite temporal resolution, which allows one to track brain responses on a millisecond (ms) scale. Importantly, ERPs recorded within approximately 200 ms post-stimulus onset in either auditory or visual modalities are thought to reflect primarily sensory encoding of a stimulus and thus allow one to examine sensory processing somewhat independently from later cognitive analyses. This early temporal window typically includes a positive deflection called P1 and a negative deflection called N1.

In the visual modality, the P1 and N1 components are sensitive to physical properties of visual stimuli, such as luminance or pattern change. Infant studies show that the visual P1 to flash stimuli appears during the first 15 weeks of life (McCulloch, 2007). Its latency slowly shortens and its amplitude decreases during development. The early part of P1 is thought to originate in the dorsal extrastriate cortex of the middle occipital gyrus, while the later part is thought to originate in the ventral extrastriate cortex of the fusiform gyrus (Di Russo, Martínez, Sereno, Pitzalis, & Hillyard, 2001). The visual P1 component is followed by N1. Surface-recorded visual N1 is thought to be the result of activity in the lateral extrastriate cortex and inferior occipital-temporal cortex (Pratt, 2011). The visual P1 and N1 components have an occipital distribution in an ERP waveform.

In the auditory modality, the P1 and N1 components are thought to reflect the early sensory encoding of physical properties of sound, such as frequency, complexity, and intensity, among others (Näätänen & Picton, 1987). P1 is one of the dominant features of children’s ERPs, and its amplitude diminishes with age (Čeponienė, Rinne, & Näätänen, 2002). P1 is uniquely sensitive to sound onsets and is often absent for sound offsets. This component is likely the result of multiple neural generators, which include the primary auditory cortex, but also the hippocampus, the planum temporale, and the lateral temporal cortex (Pratt, 2011). The auditory N1 appears in children’s ERP recordings during the first few years of life. Developmental studies of early auditory ERPs sometimes disagree on the nature of the ERP components present during middle childhood, with some studies reporting immature ERPs in this age group (e.g., Sharma, Kraus, McGee, & Nicol, 1997; Sussman, Steinschneider, Gumenyuk, Grushko, & Lawson, 2008) and others showing an adult-like sequence of P1, N1, and P2 components (e.g., Čeponienė et al., 2002; Pang & Taylor, 2000). At least some of these disagreements are likely due to differences in the rate of stimulus presentation across studies, with more adult-like ERPs elicited by stimuli presented at longer inter-stimulus intervals. Näätänen and Picton (1987) provide evidence for multiple N1 sub-components, which, together, may contribute to its parameters in the average waveform. At least one of these sub-components is believed to originate from the supratemporal plane of the brain (Näätänen & Picton, 1987; Scherg, Vajsar, & Picton, 1989), which houses the primary auditory cortex. The auditory P1 and N1 components have a fronto-central distribution in an ERP waveform.

Previous research suggests that some of these early auditory and visual ERP components may be atypical at least in a subset of children with SLI. The majority of studies on early processing in SLI focused on the auditory modality and examined the N1 peak amplitude and latency to either brief sounds or sounds that were presented in quick succession. For example, Neville and colleagues (Neville, Coffey, Holcomb, & Tallal, 1993) reported that N1 to standard tones was reduced at short ISIs over the right hemisphere sites in school-age language-impaired children. A similar finding was also reported for adolescents with SLI (Weber-Fox, Leonard, Hampton Wray, & Tomblin, 2010). Similarly, McArthur and Bishop (Bishop & McArthur, 2004; McArthur & Bishop, 2005) showed that children and young adults with SLI have age-inappropriate ERP components (including N1) to a range of sounds, not just pure tones. Stevens and colleagues suggested that the auditory N1 reduction in children with SLI is due to the attenuated auditory recovery cycle (Stevens, Paulsen, Yasen, Mitsunaga, & Neville, 2012). Studies that specifically examined visual ERPs in children with SLI are very few. In a seminal paper, Neville and colleagues (Neville et al., 1993) reported a reduction in the amplitude of visual P1 in school-age children with language impairment. However, Neville and colleagues’ participants had severe reading difficulties indicative of developmental dyslexia; therefore, whether this finding would extend to children with SLI without dyslexia is unclear. In sum, although a number of studies examined early sensory processing of auditory and visual modalities in SLI, most of such studies looked at one modality at a time. As a result, little is known about sensory processing in SLI under cross-modal conditions.

In this study, we compared the performance of school-age children with a history of SLI, their TD peers, and adults on a simultaneity judgment task (SJT) that was presented as a game and contained stimuli that do not pose sensory or cognitive processing difficulty. In order to avoid the influence of language skills on the SJT performance, we used non-speech stimuli – a pure tone and a flash of light – whose pairing was made meaningful through the context of the game. These stimuli were presented either synchronously or separated by temporal intervals that ranged from 100 to 500 ms, in 100 ms increments. The goal of behavioral analysis was to compare the accuracy with which children and adults could detect temporal asynchrony as offsets between modalities increased. The goal of our ERP analyses was to determine whether children with language learning difficulties differed in early neural response to auditory and visual stimuli as a function of the temporal separation between the two modalities. If found, such a difference would point to sensory encoding as a contributing factor to audiovisual temporal discrimination difficulty in SLI. Its absence, on the other hand, would suggest that group differences likely lie in later, cognitive stages of processing of temporal relationships. Lastly, in order to better understand the role of multisensory temporal discrimination in language skills, we performed linear regressions between children’s performance on the SJT and their language aptitude as measured by standardized tests.
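The offset conditions described above can be illustrated with a minimal Python sketch that scores simultaneity judgments by offset. This is only an assumption-laden illustration: the function name `accuracy_by_offset`, the definition of a correct response (reporting "synchronous" at 0 ms and "asynchronous" at any other offset), and the example trials are hypothetical and are not taken from the study's actual scoring procedure or data.

```python
from collections import defaultdict

# Offsets named in the text: synchronous (0 ms) plus 100-500 ms in 100 ms steps.
OFFSETS_MS = list(range(0, 501, 100))


def accuracy_by_offset(trials):
    """Return the proportion of correct judgments at each offset.

    trials: iterable of (offset_ms, judged_synchronous) pairs.
    Assumption: a response counts as correct when "synchronous" is
    reported at 0 ms or "asynchronous" is reported at a non-zero offset.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for offset_ms, judged_synchronous in trials:
        total[offset_ms] += 1
        if judged_synchronous == (offset_ms == 0):
            correct[offset_ms] += 1
    # Report only offsets that actually occurred in the trial list.
    return {o: correct[o] / total[o] for o in OFFSETS_MS if total[o]}


# Made-up responses for illustration only (not data from the study).
example_trials = [
    (0, True), (0, True),
    (100, True), (100, False),
    (300, False),
    (500, False),
]
print(accuracy_by_offset(example_trials))
```

Under this assumed definition, a group that often judges offset stimuli as synchronous would show low accuracy at the non-zero offsets, which is the pattern the behavioral analysis is designed to detect.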

2. Method

2.1 Participants

Three separate groups of participants took part in the study: 15 children with a history of SLI (H-SLI) (3 female), 15 typically developing (TD) children (4 female), and 15 college-age adults (4 female). The average age of the H-SLI group was 9 years (range: 7 years 3 months to 11 years 0 months), the average age of the TD group was 9 years and 1 month (range: 7 years 4 months to 10 years 10 months), and the average age of the adult group was 23 years (range: 20–40 years). The two groups of children were age-matched within approximately 3 months. All participants were monolingual native speakers of American English. All gave their written consent or assent to participate in the experiment. Additionally, at least one parent of each child gave written consent to enroll their child in the study. The study was approved by the Institutional Review Board of Purdue University, and all study procedures conformed to The Code of Ethics of the World Medical Association (Declaration of Helsinki) (1964).

All children were administered the following battery of tests. Language skills were evaluated with the Clinical Evaluation of Language Fundamentals – 4th edition (CELF-4; Semel, Wiig, & Secord, 2003). Each child was tested on the four sub-tests that, together, provide the Core Language Score (CLS) as an overall measure of the child’s language ability. These sub-tests differed depending on the children’s age. All children were administered the Concepts and Following Directions, Recalling Sentences, and Formulated Sentences sub-tests. Additionally, 7–8 year olds (H-SLI n=8, TD n=7) were also tested on the Word Structure (WS) sub-test while 9–11 year olds (H-SLI n=7, TD n=8) were tested on the Word Classes-2 Total (WC) sub-test. Children’s verbal working memory was evaluated with the non-word repetition test (Dollaghan & Campbell, 1998) and the Number Memory Forward and Number Memory Reversed sub-tests of the Test of Auditory Processing Skills – 3rd edition (TAPS-3; Martin & Brownell, 2005). Non-verbal intelligence was assessed with the Test of Nonverbal Intelligence – 4th edition (TONI-4; Brown, Sherbenou, & Johnsen, 2010). Because the main task of the study required sustained attention and the ability to pay attention to two modalities presented at the same (or nearly the same) time, we examined children’s attention skills by administering the following sub-tests of the Test of Everyday Attention for Children (TEA-Ch; Manly, Robertson, Anderson, & Nimmo-Smith, 1999): Sky Search, Sky Search Dual Task, and Map Mission. The first two sub-tests required that children find and circle matching pairs of space ships surrounded by similar-looking distractor ships. During the Dual Task version of Sky Search, children also counted concurrently presented tone pips. Lastly, in Map Mission, children were asked to circle as many “knives-and-forks” symbols on a city map as possible during 1 minute. These tasks measured children’s ability to selectively focus or divide their attention.

The presence of Attention Deficit/Hyperactivity Disorder (ADHD) was evaluated with the help of the short version of the Parent Rating Scale of the Conners’ Rating Scales – Revised (Conners, 1997) and via a medical history form filled out by children’s parents. To rule out the presence of autism spectrum disorders, all children were evaluated with the Childhood Autism Rating Scale - 2nd edition (CARS-2; Schopler, Van Bourgondien, Wellman, & Love, 2010). We also recorded the level of mothers’ and fathers’ education as a measure of children’s socioeconomic status (SES). Data on adult participants’ non-verbal intelligence were collected with the help of the TONI-4. In all participants, handedness was assessed with an augmented version of the Edinburgh Handedness Questionnaire (Cohen, 2008; Oldfield, 1971).

Children in the H-SLI group were classified as having SLI when they were 4–5 years of age. These children were described in several studies of Leonard and colleagues (Leonard, Davis, & Deevy, 2007; Leonard & Deevy, 2011; Leonard, Deevy, et al., 2007). The gold standard for their evaluation at that time consisted of either the Structured Photographic Expressive Language Test – 2nd Edition (SPELT - II, Werner & Kresheck, 1983) or the Structured Photographic Expressive Language Test – Preschool 2 (Dawson, Eyer, & Fonkalsrud, 2005). Both tests have shown good sensitivity and specificity (Greenslade, Plante, & Vance, 2009; Plante & Vance, 1994). During diagnosis, all children fell below the 10th percentile on the above tests, with the majority falling below the 2nd percentile (range 1st – 8th percentile). During the years between the original diagnosis and participating in the current study, all but two H-SLI children received some form of speech therapy, which ranged in length from 2 to 8 years. Although at the time of this study, as a group, the H-SLI children scored within the normal range of language abilities based on the CLS of CELF-4, their CLS scores were nonetheless significantly lower than those of the TD group (F(1,29)=9.427, p=0.005) (see Table 1 for individual data and group means for CELF-4 subtests and CLS). Out of the four sub-tests of the CELF-4 that make up the composite CLS, the H-SLI group performed significantly worse on two – Formulated Sentences (F(1,29)=5.077, p=0.032) and Recalling Sentences (F(1,29)=16.413, p<0.001). Additionally, children in the H-SLI group performed much worse than their TD peers on the non-word repetition test. More specifically, the H-SLI group was less accurate at repeating three-syllable (F(1,29)=7.061, p=0.017) and four-syllable (F(1,29)=15.087, p=0.001) non-words, with a trend in the same direction for two-syllable non-words (F(1,29)=3.73, p=0.064). 
The Recalling Sentences sub-test of CELF and the non-word repetition test, which most clearly differentiated our participant groups, have been shown to have high sensitivity and specificity when identifying children with language disorders (Archibald & Gathercole, 2006; Conti-Ramsden, Botting, & Faragher, 2001; Dollaghan & Campbell, 1998; Ellis Weismer et al., 2000; Graf Estes, Evans, & Else-Quest, 2007; Hesketh & Conti-Ramsden, 2013). Furthermore, parents of 8 H-SLI children noted the presence of at least some language-related difficulties in their children during the time of testing. Four H-SLI children were still receiving speech therapy, with 3 more H-SLI children having stopped speech therapy approximately a year earlier. We take these results to indicate that, despite scoring within the normal range on CELF, our H-SLI children continued to lag behind their TD peers in linguistic competence. Indeed, earlier research shows that school-age children with a history of SLI often continue to exhibit subtle but clear linguistic difficulties (Conti-Ramsden et al., 2001; Hesketh & Conti-Ramsden, 2013; Rice, Hoffman, & Wexler, 2009). Such subtle differences in language competence may contribute to the development of learning disorders in other domains, most notably reading (Catts, Fey, Tomblin, & Zhang, 2002; Hesketh & Conti-Ramsden, 2013; Scarborough & Dobrich, 1990). Lastly, scores within the normal range on standardized tests in children with an established earlier diagnosis of SLI may not by themselves be sufficient to establish a true recovery because they may hide atypical cognitive strategies used by these children during testing (Karmiloff-Smith, 2009). Because audiovisual processing has a protracted developmental course, it is important to understand how children with documented language learning difficulties during early childhood compare to their TD peers during school-age years on the ability to detect audiovisual temporal asynchrony. Therefore, an older group of children was selected for this study.

Table 1.

CELF-4 scores of the H-SLI and TD children

| Sub | C&FD SS | C&FD %ile | RS SS | RS %ile | FS SS | FS %ile | WS SS | WS %ile | WC R-SS | WC R-%ile | WC E-SS | WC E-%ile | WC T-SS | WC T-%ile | CLS SS | CLS %ile |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| H-SLI | | | | | | | | | | | | | | | | |
| 1 | 10 | 50 | 9 | 37 | 14 | 91 | 9 | 37 | | | | | | | 102 | 55 |
| 2 | 13 | 84 | 8 | 25 | 11 | 63 | 13 | 84 | | | | | | | 108 | 70 |
| 3 | 11 | 63 | 6 | 9 | 8 | 25 | 10 | 50 | | | | | | | 93 | 32 |
| 4 | 8 | 25 | 8 | 25 | 6 | 9 | 11 | 63 | | | | | | | 90 | 25 |
| 5 | 14 | 91 | 9 | 37 | 12 | 75 | 12 | 75 | | | | | | | 111 | 77 |
| 6 | 15 | 95 | 13 | 84 | 10 | 50 | 14 | 91 | | | | | | | 118 | 88 |
| 7 | 8 | 25 | 5 | 5 | 7 | 16 | 7 | 16 | | | | | | | 81 | 10 |
| 8 | 11 | 63 | 8 | 25 | 13 | 84 | 6 | 9 | | | | | | | 97 | 42 |
| 9 | 11 | 63 | 12 | 75 | 13 | 84 | | | 13 | 84 | 16 | 98 | 15 | 95 | 117 | 87 |
| 10 | 12 | 75 | 7 | 16 | 11 | 63 | | | 10 | 50 | 7 | 16 | 8 | 29 | 97 | 42 |
| 11 | 12 | 75 | 9 | 37 | 15 | 95 | | | 14 | 91 | 11 | 63 | 13 | 84 | 114 | 82 |
| 12 | 7 | 16 | 2 | 0.4 | 6 | 9 | | | 8 | 25 | 3 | 5 | 6 | 9 | 72 | 3 |
| 13 | 9 | 37 | 7 | 16 | 12 | 75 | | | 8 | 25 | 7 | 16 | 7 | 16 | 93 | 32 |
| 14 | 13 | 84 | 6 | 9 | 10 | 50 | | | 11 | 63 | 10 | 50 | 10 | 50 | 98 | 45 |
| 15 | 10 | 50 | 8 | 25 | 9 | 37 | | | 13 | 84 | 13 | 84 | 13 | 84 | 99 | 47 |
| Mean | 10.9 | | 7.8 | | 10.5 | | 10.3 | | 10.7 | | 9.6 | | 10.1 | | 99.3 | |
| TD | | | | | | | | | | | | | | | | |
| 1 | 14 | 91 | 12 | 75 | 15 | 95 | 12 | 75 | | | | | | | 120 | 91 |
| 2 | 12 | 75 | 12 | 75 | 11 | 63 | 9 | 37 | | | | | | | 106 | 66 |
| 3 | 14 | 91 | 9 | 37 | 14 | 91 | 10 | 50 | | | | | | | 111 | 77 |
| 4 | 11 | 63 | 12 | 75 | 13 | 84 | 13 | 84 | | | | | | | 114 | 82 |
| 5 | 13 | 84 | 11 | 63 | 13 | 84 | 10 | 50 | | | | | | | 111 | 77 |
| 6 | 14 | 91 | 15 | 95 | 13 | 84 | 12 | 75 | | | | | | | 121 | 92 |
| 7 | 13 | 84 | 15 | 95 | 13 | 84 | 13 | 84 | | | | | | | 121 | 92 |
| 8 | 13 | 84 | 11 | 63 | 12 | 75 | | | 13 | 84 | 13 | 84 | 13 | 84 | 114 | 82 |
| 9 | 11 | 63 | 12 | 75 | 15 | 95 | | | 16 | 98 | 17 | 99 | 17 | 99 | 123 | 94 |
| 10 | 12 | 75 | 9 | 37 | 10 | 50 | | | 13 | 84 | 12 | 75 | 13 | 84 | 106 | 66 |
| 11 | 7 | 16 | 8 | 25 | 14 | 91 | | | 10 | 50 | 13 | 84 | 12 | 75 | 100 | 50 |
| 12 | 10 | 50 | 13 | 84 | 10 | 50 | | | 13 | 84 | 11 | 63 | 12 | 75 | 108 | 70 |
| 13 | 13 | 84 | 12 | 75 | 13 | 84 | | | 10 | 50 | 8 | 25 | 9 | 37 | 111 | 77 |
| 14 | 12 | 75 | 9 | 37 | 8 | 25 | | | 11 | 63 | 11 | 63 | 11 | 63 | 99 | 47 |
| 15 | 11 | 63 | 10 | 50 | 13 | 84 | | | 11 | 63 | 8 | 25 | 9 | 37 | 104 | 61 |
| Mean | 12.0 | | 11.3 | | 12.5 | | 11.3 | | 12.1 | | 11.6 | | 12.0 | | 111.3 | |
| p | .174 | | .000 | | .032 | | .407 | | .228 | | .295 | | .245 | | .005 | |

Note. C&FD = Concepts and Following Directions; RS = Recalling Sentences; FS = Formulated Sentences; WS = Word Structure; WC = Word Classes; R = Receptive; E = Expressive; T = Total; CLS = Core Language Score; SS = standardized score; %ile = percentile. P-values represent a group comparison based on a one-way ANOVA.

All participants were free of neurological disorders, passed a hearing screening at a level of 20 dB HL at 500, 1000, 2000, 3000, and 4000 Hz, reported to have normal or corrected-to-normal vision, and were not taking medications that may affect brain function (such as anti-depressants) at the time of study. All had normal non-verbal intelligence and showed no signs of autism spectrum disorders. One child in the H-SLI group had a diagnosis of ADHD, but was not taking medications at the time of study. Additionally, two more H-SLI children fell into the strongly atypical range based on the ADHD Index of the Parent Rating Scale of the Conners’ Rating Scales – Revised. The ADHD Index of the Conners’ Questionnaire is based on the set of questions that have been shown to be most effective at distinguishing children at risk for ADHD from their TD peers (Conners, 1997). Children with either a diagnosis of ADHD or a high ADHD index on the Conners’ Questionnaire were not excluded from the study because the diagnosis of SLI preceded that of ADHD and because recent studies suggest that linguistic problems associated with ADHD are qualitatively different from those observed in SLI (Redmond, 2005; Redmond, Thompson, & Goldstein, 2011). However, we included information on the status of attention in both groups of children, together with several other variables, as a predictor in a multiple regression analysis in order to understand its role in performance on the SJT. One child in the H-SLI group had a diagnosis of dyslexia, based on parental report. All children in the TD group had language skills within the normal range and were free of ADHD. None of the adult participants had a history of SLI or ADHD based on self-report.

Tables 2 and 3 provide group means and standard errors (SE) for the two groups of children for all aforementioned measures (except for the CELF-4 results, which are shown in Table 1). The H-SLI children did not differ from their TD peers in age, non-verbal intelligence, or SES as measured by mother’s years of education. Although significant, the difference in father’s years of education between the two groups was less than three years. As mentioned earlier, children in the H-SLI group performed significantly worse on the Formulated Sentences and Recalling Sentences sub-tests of CELF and on the non-word repetition test. In addition, this group of children scored significantly lower on both the Number Memory Forward (group, F(1,29)=8.885, p=0.006) and the Number Memory Reversed (group, F(1,29)=6.546, p=0.016) sub-tests of TAPS-3. Based on the Laterality Index of the Edinburgh Handedness Questionnaire, one child in the H-SLI group was left-handed. All other participants were right-handed.

Table 2.

Group means for age, TONI-4, CARS-2, ADHD Index, SES, and TAPS-3

| Group | Age (years;months) | TONI-4 | CARS-2 | ADHD Index^a | Mother’s Education (years)^b | Father’s Education (years)^b | TAPS-3 Number Memory Forward | TAPS-3 Number Memory Reversed |
|---|---|---|---|---|---|---|---|---|
| H-SLI | 9;0 (0.3) | 108.3 (2.4) | 15.4 (0.1) | 52.5 (2.8) | 16.6 (0.8) | 14.9 (0.4) | 7.8 (0.4) | 9.3 (0.7) |
| TD | 9;1 (0.3) | 107.6 (2.1) | 15.3 (0.2) | 48.0 (1.6) | 16.3 (0.8) | 17.5 (0.8) | 10.0 (0.6) | 11.7 (0.6) |
| p | 0.921 | 0.821 | 0.590 | 0.173 | 0.819 | 0.012 | 0.006 | 0.016 |

Note. Numbers for TONI-4, CARS-2, ADHD Index, and the TAPS-3 subtests represent standardized scores. Numbers in parentheses are standard errors of the mean. P-values reflect a group comparison. TONI–4 = Test of Nonverbal Intelligence—4th edition; CARS–2 = Childhood Autism Rating Scale—2nd edition; ADHD = attention deficit/hyperactivity disorder; TAPS–3 = Test of Auditory Processing Skills—3rd edition.

^a From the Parent Rating Scale of the Conners’ Rating Scales – Revised.

^b Measure of children’s SES.

Table 3.

Group means for nonword repetition and TEA-Ch

| Group | Nonword Repetition: 1 syllable | Nonword Repetition: 2 syllables | Nonword Repetition: 3 syllables | Nonword Repetition: 4 syllables | Nonword Repetition: Total | TEA-Ch: Sky Search | TEA-Ch: Sky Search Dual Task | TEA-Ch: Map Mission |
|---|---|---|---|---|---|---|---|---|
| H-SLI | 91.1 (1.9) | 95.3 (1.1) | 88.1 (3.2) | 64.0 (2.8) | 80.9 (1.7) | 9.5 (0.8) | 7.4 (0.7) | 7.9 (0.8) |
| TD | 92.3 (1.3) | 98.3 (1.1) | 96.9 (0.9) | 79.6 (2.9) | 90.1 (1.2) | 9.3 (0.4) | 8.8 (0.9) | 9.1 (0.7) |
| p | 0.619 | 0.064 | 0.017 | 0.001 | 0.000 | 0.882 | 0.220 | 0.284 |

Note. Numbers for TEA-Ch represent standardized scores. Numbers for Nonword Repetition are percent of correctly repeated phonemes. Numbers in parentheses are standard errors of the mean. P-values reflect a group comparison.

2.2 Stimuli and Experimental Design

All participants performed the same SJT, which was presented as a game. Prior to the start of the experiment, participants watched a video with instructions. They were told that they would see a dragon in the middle of the screen and pictures of a boy and a girl holding futuristic-looking weapons at the top left and right corners of the screen (see Figure 1). They were also told that these characters lived on the planet Cabula, where dragons often raid apple orchards and steal apples. Dragons can only be scared away by special weapons. One weapon shoots lights while another shoots sounds. In order for dragons to be scared away, the light and the sound need to occur at exactly the same time. If either the light or the sound is delayed or one of the children forgets to shoot (and hence there is only the light but no sound or there is only the sound but no light), dragons do not get scared away. Participants were then asked to indicate by a button press on a response box (RB-530, Cedrus Corporation) during each trial whether or not the dragon was scared away (i.e., whether the sound and the light occurred at the same time or not). The images of the girl and the boy with futuristic weapons were present during the instructions video and on the first screen of each block prior to the onset of test trials. To avoid a shift of attention away from the dragon, their images were not present while participants performed the task. Task instructions were kept identical for children and adults. The “light” stimulus was an explosion-shaped red figure that appeared over the dragon’s body. The “sound” stimulus was a 2 kHz pure tone. Both were 200 ms in duration. This combination of sound and light stimuli was presented at the following temporal offsets: 0, 100, 200, 300, 400, and 500 ms. In half of the asynchronous trials, the sound preceded the light (AV trials), while in the other half, the light preceded the sound (VA trials).
Additionally, there were visual only (VO) and auditory only (AO) trials, during which either only the explosion-shaped figure appeared without the accompanying tone or only the tone was presented without the accompanying explosion-shaped figure. Each experimental block contained 5 instances of each of the 13 types of trials (5 AV offsets, 5 VA offsets, synchronous presentation, AO, and VO trials), presented in a random order. Each participant completed 10 experimental blocks, which yielded 50 responses for each trial type. Presentation of trials was controlled by the Presentation software (www.neurobs.com). Participants were instructed to press one button for trials on which the dragon was scared away (synchronous stimulus presentations) and another for trials on which the dragon was not scared away (asynchronous and AO/VO trials). Responses were recorded within a 2200 ms response window time-locked to the appearance of the first stimulus in each pair. The response window was followed by an inter-trial interval varying randomly among 4 values: 350, 700, 1050, and 1400 ms. Hand-to-response-button mapping was counterbalanced across participants.
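The block structure described above can be sketched in a few lines of Python. This is only an illustration of the counts given in the text (13 trial types, 5 instances per block, 10 blocks, 4 inter-trial intervals); the study itself used the Presentation software, and the function names, trial labels, and random seed below are hypothetical.

```python
import random

OFFSETS_MS = [100, 200, 300, 400, 500]
INSTANCES_PER_BLOCK = 5
N_BLOCKS = 10
ITI_MS = [350, 700, 1050, 1400]


def trial_types():
    """Return the 13 trial types: 5 AV, 5 VA, synchronous, AO, and VO."""
    types = [f"AV{o}" for o in OFFSETS_MS]   # sound precedes the light
    types += [f"VA{o}" for o in OFFSETS_MS]  # light precedes the sound
    types += ["SYNC", "AO", "VO"]
    return types


def make_block(rng):
    """Build one randomized block of (trial_type, iti_ms) pairs."""
    trials = [t for t in trial_types() for _ in range(INSTANCES_PER_BLOCK)]
    rng.shuffle(trials)
    # Assumption: each inter-trial interval is drawn independently and uniformly.
    return [(t, rng.choice(ITI_MS)) for t in trials]


def make_session(seed=0):
    """Build all 10 blocks for one hypothetical participant."""
    rng = random.Random(seed)
    return [make_block(rng) for _ in range(N_BLOCKS)]


session = make_session()
per_type = {}
for block in session:
    for trial_type, _ in block:
        per_type[trial_type] = per_type.get(trial_type, 0) + 1

print(len(session[0]), per_type["AV100"])  # 65 trials per block, 50 per type
```

Each block therefore contains 65 trials, and every trial type accumulates 50 presentations over the session, matching the counts reported in the text.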

Figure 1. Stimuli.

During the instructions video and just prior to the onset of each experimental block, participants saw a dragon in the middle of the screen and a boy and a girl holding futuristic-looking weapons in the top right and left corners. To avoid a shift of attention away from the dragon (over which the visual stimuli were presented), only the dragon was present on the screen during all experimental trials. The visual stimulus was a red explosion-shaped figure that appeared over the dragon’s body. The auditory stimulus was a 2 kHz pure tone.

At the beginning of the session, participants were fitted with an EEG cap and seated in a dimly-lit sound-attenuating booth, approximately 4 feet from a computer monitor. Sounds were presented at 60 dB SPL via a sound bar located directly under the monitor. Participants were first shown the instructions video and then practiced the task until it was clear. In order to make sure that children understood the task, they were prompted with a set of questions to describe under which conditions they were to press each button. Each block lasted approximately 3 minutes. Children played several rounds of the board game of their choice after each block and had a longer break after half of all blocks were completed. Adult participants had short breaks after each block and a longer one in the middle. Together with game breaks, the testing session lasted approximately 1 hour.

2.3 ERP Recordings and Data Analysis

Electroencephalographic (EEG) data were recorded from the scalp at a sampling rate of 512 Hz using 32 active Ag-AgCl electrodes secured in an elastic cap (Electro-Cap International Inc., USA). Electrodes were positioned over homologous locations across the two hemispheres according to the criteria of the International 10-10 system (American Electroencephalographic Society, 1994). The specific locations were as follows: midline sites: FZ, CZ, PZ, and OZ; mid-lateral sites: FP1/FP2, AF3/AF4, F3/F4, FC1/FC2, C3/C4, CP1/CP2, P3/P4, PO3/PO4, and O1/O2; and lateral sites: F7/F8, FC5/FC6, T7/T8, CP5/CP6, and P7/P8; and left and right mastoids. EEG recordings were made with the Active-Two System (BioSemi Instrumentation, Netherlands), in which the Common Mode Sense (CMS) active electrode and the Driven Right Leg (DRL) passive electrode replace the traditional “ground” electrode (Metting van Rijn, Peper, & Grimbergen, 1990). During recording, data were displayed in relationship to the CMS electrode and then referenced offline to the average of the left and right mastoids (Luck, 2005). The Active-Two System allows EEG recording with high impedances by amplifying the signal directly at the electrode (BioSemi, 2013; Metting van Rijn, Kuiper, Dankers, & Grimbergen, 1996). In order to monitor for eye movement, additional electrodes were placed over the right and left outer canthi (horizontal eye movement) and below the left eye (vertical eye movement). Horizontal eye sensors were referenced to each other, while the sensor below the left eye was referenced to FP1 in order to create electro-oculograms. Prior to data analysis, EEG recordings were filtered between 0.1 and 30Hz. Individual EEG records were visually inspected to exclude trials containing excessive muscular and other non-ocular artifacts. Additionally, all audiovisually synchronous and VO trials in which blinks occurred during stimulus presentation were excluded. 
Ocular artifacts were corrected by applying a spatial filter (EMSE Data Editor, Source Signal Imaging Inc., USA) (Pflieger, 2001). Similar to independent component analysis (ICA), this method is able to separate ocular artifacts from brain activity without constructing a head model and without subtracting ocular activity from EEG channels. However, unlike ICA, it bypasses the need to subjectively determine which components need to be excluded by relying on a representative set of artifacts and clean data segments. ERPs were epoched starting at 200 ms pre-stimulus and ending at 1000 ms post-stimulus onset. On trials in which the auditory and visual stimuli were not completely synchronized, the stimulus onset was the onset of the first stimulus in a pair (for example, when the sound preceded the appearance of the explosion-shaped figure, ERPs were averaged to the onset of the sound). The 200 ms prior to stimulus onset served as a baseline.

The goal of the ERP analyses was to examine how auditory and visual neural responses differed depending on the group membership and offset. However, because offsets were short, auditory and visual ERPs partially overlapped. In order to better isolate ERPs unique to each sensory modality, the following procedure was used. ERPs elicited by AO trials were subtracted from ERPs elicited by AV stimulus pairs (in which the sound preceded the light) and from those elicited by synchronous pairs, thus revealing ERPs specific to visual stimulation at each offset. Similarly, ERPs elicited by VO trials were subtracted from ERPs elicited by VA stimulus pairs (in which the light preceded the sound) and from ERPs elicited by synchronous pairs, revealing ERPs specific to auditory stimulation at each offset. All ERP analyses were conducted on the resulting difference waves. For the sake of clarity, all difference waves are labeled by referring to the specific subtraction that was performed to produce them. For example, the difference wave that resulted from AO ERPs being subtracted from the ERPs elicited by AV stimuli with a 100 ms offset is labeled AV100-A. AV0 and VA0 labels both refer to the completely synchronous audiovisual presentation. This method for isolating modality-specific ERPs is similar to that used previously by Stekelenburg and Vroomen (2005).
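The subtraction procedure can be illustrated with a minimal NumPy sketch. The waveforms below are synthetic Gaussian deflections invented for the example, and the demonstration assumes that auditory and visual responses sum linearly, which is a simplification rather than a claim about the recorded data. The function and variable names are hypothetical.

```python
import numpy as np

SAMPLING_RATE_HZ = 512
# Epoch from 200 ms before to 1000 ms after the onset of the first stimulus.
N_SAMPLES = int(1.2 * SAMPLING_RATE_HZ)
times_ms = (np.arange(N_SAMPLES) / SAMPLING_RATE_HZ - 0.2) * 1000.0


def difference_wave(bimodal_erp, unimodal_erp):
    """Subtract a unimodal ERP from a bimodal ERP (e.g., AV100 minus AO)."""
    bimodal_erp = np.asarray(bimodal_erp, dtype=float)
    unimodal_erp = np.asarray(unimodal_erp, dtype=float)
    if bimodal_erp.shape != unimodal_erp.shape:
        raise ValueError("ERPs must have the same shape")
    return bimodal_erp - unimodal_erp


def synthetic_component(peak_ms, amplitude, width_ms=30.0):
    """Hypothetical Gaussian-shaped deflection used only for this demo."""
    return amplitude * np.exp(-((times_ms - peak_ms) / width_ms) ** 2)


# Auditory-only response peaking 100 ms after sound onset (made-up values).
ao_erp = synthetic_component(peak_ms=100.0, amplitude=-2.0)
# Visual response to a light that appears 100 ms after the sound.
visual_part = synthetic_component(peak_ms=100.0 + 120.0, amplitude=3.0)
# Assumption for the demo: the bimodal ERP is the sum of the two responses.
av100_erp = ao_erp + visual_part

av100_minus_a = difference_wave(av100_erp, ao_erp)  # labeled AV100-A
```

Under the additivity assumption, `av100_minus_a` recovers the visual deflection, now shifted by the 100 ms offset relative to the onset of the sound.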

Because the focus of the ERP analysis was on sensory processing of audiovisual stimuli, we measured only the P1 and N1 ERP components elicited by auditory and visual stimuli. For the ease of reference, auditory P1 and N1 are listed with an “a” preceding them (e.g., aP1 and aN1), while visual P1 and N1 are listed with a “v” preceding them (e.g., vP1 and vN1). Since difference waves have relatively lower signal-to-noise ratio compared to original waveforms, which can produce spurious peak latency measurements, we chose to analyze a 50 percent area latency measure and the corresponding amplitude measure for each of the above components (Luck, 2005). The 50 percent area latency measure refers to the point in the ERP waveform that divides the area under the waveform in half for a specified temporal window. The amplitude at such a point was used as a given component’s amplitude measure. Temporal windows for area peak and latency measurements were based on the visual inspection of AV0-A and VA0-V difference waves. Temporal windows for other offsets were calculated by adding 100–500 ms to the beginning and the end of each window. As an example, the visual P1 elicited in children was measured between 92 and 150 ms post-stimulus onset in the AV0-A condition, between 192 and 250 ms post-stimulus onset in the AV100-A condition, and between 292 and 350 ms post-stimulus onset in the AV200-A condition, etc., in order to properly capture the ERPs elicited by the incrementally delayed visual stimulus. In agreement with earlier reports, adults’ ERPs had noticeably shorter latencies. It was therefore impossible to keep the same temporal windows for measuring ERPs elicited in children and adults. Importantly, however, although temporal windows used for ERP measurements in adults were placed over an earlier portion of the waveform, their absolute width was kept identical for children and adults (see Table 4). 
Measurements from all 3 groups were included in the analyses of components’ amplitudes. However, due to differences in the temporal windows for measuring ERP components, latencies of P1 and N1 were compared only between the two groups of children.
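A minimal sketch of the 50 percent area latency measure and of the window shifting across offsets is given below. It assumes that the area is computed on the rectified (absolute-value) waveform and approximated by a cumulative sum over samples; the text does not specify these details, and the function names are hypothetical.

```python
import numpy as np


def shifted_window(sync_window_ms, offset_ms):
    """Shift a window defined for the synchronous condition by the offset."""
    start, end = sync_window_ms
    return (start + offset_ms, end + offset_ms)


def fifty_percent_area_latency(times_ms, wave, window_ms):
    """Return (latency_ms, amplitude) at the point that halves the area.

    Assumption: area is taken on the rectified waveform within the window.
    """
    times_ms = np.asarray(times_ms, dtype=float)
    wave = np.asarray(wave, dtype=float)
    start, end = window_ms
    mask = (times_ms >= start) & (times_ms <= end)
    t, w = times_ms[mask], wave[mask]
    cumulative_area = np.cumsum(np.abs(w))
    if cumulative_area[-1] == 0:
        raise ValueError("waveform is flat within the window")
    # First sample at which the running area reaches half of the total.
    idx = int(np.searchsorted(cumulative_area, cumulative_area[-1] / 2.0))
    return t[idx], w[idx]


# Children's vP1 window in the synchronous condition, moved to AV100-A.
print(shifted_window((92, 150), 100))  # (192, 250)

# Synthetic, symmetric deflection centered in the 92-150 ms window.
times = np.arange(-200, 1000, dtype=float)  # 1 ms steps, for the demo only
wave = np.exp(-((times - 121.0) / 15.0) ** 2)
latency, amplitude = fifty_percent_area_latency(times, wave, (92, 150))
```

For a deflection that is symmetric within the window, the measure falls on the midpoint of the deflection, which is why it is less sensitive to noise-driven local peaks than a peak latency.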

Table 4.

Measured components: sites and temporal windows

Temporal windows for visual components

| Group | Component | Sites | Sync-A | AV100-A | AV200-A | AV300-A | AV400-A | AV500-A |
|---|---|---|---|---|---|---|---|---|
| Children | vP1 | O1/2 | 92–150 | 192–250 | 292–350 | 392–450 | 492–550 | 592–650 |
| Children | vN1 | O1/2 | 152–208 | 252–308 | 352–408 | 452–508 | 552–608 | 652–708 |
| Adults | vP1 | O1/2 | 66–124 | 166–224 | 266–324 | 366–424 | 466–524 | 566–624 |
| Adults | vN1 | O1/2 | 134–190 | 234–290 | 334–390 | 434–490 | 534–590 | 634–690 |

Temporal windows for auditory components

| Group | Component | Sites | Sync-V | VA100-V | VA200-V | VA300-V | VA400-V | VA500-V |
|---|---|---|---|---|---|---|---|---|
| Children | aP1 | F3/4, FC1/2, C3/4, CP1/2, P3/4; FZ, CZ, PZ | 50–98 | 150–198 | 250–298 | 350–398 | 450–498 | 550–598 |
| Children | aN1 | F3/4, FC1/2, C3/4, CP1/2, P3/4; FZ, CZ, PZ | 100–152 | 200–252 | 300–352 | 400–452 | 500–552 | 600–652 |
| Adults | aP1 | F3/4, FC1/2, C3/4, CP1/2, P3/4; FZ, CZ, PZ | 26–74 | 126–174 | 226–274 | 326–374 | 426–474 | 526–574 |
| Adults | aN1 | F3/4, FC1/2, C3/4, CP1/2, P3/4; FZ, CZ, PZ | 100–152 | 200–252 | 300–352 | 400–452 | 500–552 | 600–652 |

Note. Difference waves from which measurements were taken are labeled with the specific subtraction used to produce them. For example, AV100-A is a difference wave that resulted from subtracting ERPs elicited by auditory only trials from ERPs elicited by an audiovisual stimulus in which the onset of the sound preceded the appearance of the explosion-shaped figure by 100 ms. All temporal windows are shown in milliseconds post-stimulus onset. Site abbreviations: F = frontal, FC = fronto-central, CP = centro-parietal, P = parietal, C = central, O = occipital. Odd numbers indicate electrodes positioned over the left hemisphere of the scalp, while even numbers indicate electrodes positioned over the right hemisphere of the scalp. FZ, CZ, and PZ are all midline sites.

Repeated-measures ANOVAs were used to evaluate behavioral and ERP results. For behavioral measures, ANOVAs included the factors of group (H-SLI and TD) and offset (100, 200, 300, 400, and 500). The following factors were used for ERP analyses over mid-lateral sites: group (H-SLI and TD), offset (0, 100, 200, 300, 400, and 500), hemisphere (O1 vs. O2 for visual components; F3, FC1, C3, CP1, P3 vs. F4, FC2, C4, CP2, P4 for auditory components), and site (for auditory components only since in visual analyses each hemisphere was represented by one site; F3 and F4 vs. FC1 and FC2 vs. C3 and C4 vs. CP1 and CP2 vs. P3 and P4). Auditory ERPs were also evaluated over midline sites in a separate analysis with the following factors: group (H-SLI and TD), offset (0, 100, 200, 300, 400, and 500), and site (FZ vs. CZ vs. PZ). Because previous research has consistently reported a difference in individuals’ ability to detect AV and VA asynchronies (Bushara et al., 2001; Dixon & Spitz, 1980; Grant et al., 2004; Lewkowicz, 1996; McGrath & Summerfield, 1985; Van Wassenhove et al., 2007), separate ANOVAs were conducted on behavioral and ERP measures to AV and VA offsets. Significant main effects with more than two levels were evaluated with a Bonferroni post-hoc analysis. In cases where the omnibus analysis produced a significant interaction, it was further evaluated with step-down ANOVAs or t-tests, with factors specific to any given interaction. For all repeated measures with greater than one degree of freedom in the numerator, the Huynh-Feldt (H-F) adjusted p-values were used to determine significance (Hays, 1994). Effect sizes, indexed by the partial eta squared statistic (ηp2), are reported for all significant repeated-measures ANOVA effects. 
Because the goal of statistical analyses was to identify the influence of group membership on behavioral and neural response to audiovisual stimuli, we report significant results only for the factor of group and its interactions with other factors.
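For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom through the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to F values reported in Section 3.1; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared computed from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Effect of offset, VA and AV analyses (F(4,168) in Section 3.1).
va_offset = partial_eta_squared(212.809, 4, 168)
av_offset = partial_eta_squared(183.222, 4, 168)

# Offset by group interaction, VA and AV analyses (F(8,168)).
va_interaction = partial_eta_squared(17.117, 8, 168)
av_interaction = partial_eta_squared(9.866, 8, 168)

print(round(va_offset, 3), round(av_offset, 3))            # 0.835 0.814
print(round(va_interaction, 3), round(av_interaction, 3))  # 0.449 0.32
```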

2.4 Regressions

In order to examine whether children’s audiovisual temporal discrimination abilities can predict language skills, we conducted separate linear regression analyses between performance on the SJT (as a predictor variable) and the following measures of linguistic aptitude (as response variables): the Concepts and Following Directions, Formulated Sentences, and Recalling Sentences subtests of CELF-4 as well as the CLS composite, and the 4-syllable nonword repetition. We performed a regression analysis not only on individual sub-tests of CELF-4 but also on the composite score because while the influence of audiovisual discrimination may not be strong enough to manifest itself in performance on individual tasks, it may nonetheless significantly predict a child’s overall language ability. Furthermore, the CLS composite incorporates performance on Word Classes-2 and Word Structure sub-tests, which could not be analyzed separately due to a small number of children to whom each test was administered. Group means differed significantly for several of the above language measures. As a result, we conducted regression analyses separately for the TD and H-SLI groups.

Additionally, because performance on the SJT has been shown to improve with age and requires sustained attention, we conducted a multiple regression analysis with participants’ age, group (TD or H-SLI), the ADHD index, and the three sub-tests of TEA-Ch as the predictors and the SJT accuracy as the response variable in order to gauge the degree to which these variables contributed to children’s performance on the SJT. A stepwise regression was performed to arrive at the final model. The SJT measure used for all regressions was the average percent of synchronous (i.e., erroneous) perceptions over the two longest offsets (400 and 500 ms). These offsets were selected because they showed the largest individual variability and the largest group differences. Regression analyses were conducted separately on AV and VA offsets.
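The SJT measure and a simple linear regression of a language score on it can be sketched as follows. The data in the example are invented solely to exercise the code, the fit uses ordinary least squares via `numpy.polyfit`, and the function names are hypothetical; the sketch does not reproduce the stepwise procedure used for the multiple regression.

```python
import numpy as np


def sjt_measure(percent_sync_by_offset):
    """Average percent of synchronous perceptions at the 400 and 500 ms offsets."""
    return (percent_sync_by_offset[400] + percent_sync_by_offset[500]) / 2.0


def simple_regression(predictor, response):
    """Ordinary least squares fit; returns (slope, intercept, r_squared)."""
    x = np.asarray(predictor, dtype=float)
    y = np.asarray(response, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    residuals = y - (intercept + slope * x)
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return slope, intercept, 1.0 - ss_res / ss_tot


# Hypothetical child: percent of "synchronous" responses at each AV offset.
child = {100: 90.0, 200: 70.0, 300: 50.0, 400: 40.0, 500: 20.0}
print(sjt_measure(child))  # 30.0

# Made-up SJT measures and language scores for five children.
sjt = [10.0, 20.0, 30.0, 40.0, 50.0]
language = [105.0, 100.0, 95.0, 90.0, 85.0]
slope, intercept, r_squared = simple_regression(sjt, language)
```

Because higher values of the SJT measure reflect more erroneous "synchronous" judgments, a negative slope in such a fit corresponds to better temporal discrimination going together with higher language scores.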

3. Results

3.1. Behavioral Results

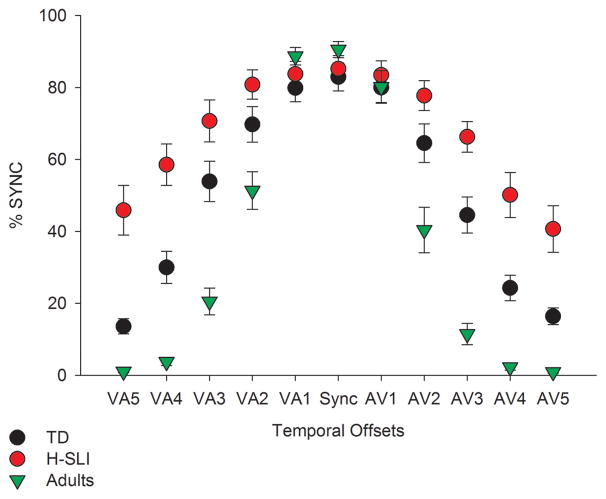

Behavioral results are shown in Figure 2. We evaluated children’s performance on the SJT by calculating the percent of trials at each offset for which stimuli were judged as synchronous, with an expectation that as the offset between visual and auditory modalities increased, the number of synchronous perceptions would decrease. Analyses of responses to VA and AV stimuli yielded nearly identical results. Both yielded a significant effect of offset (VA: F(4,168)=212.809, p<0.001, ηp2=0.835; AV: F(4,168)=183.222, p<0.001, ηp2=0.814), with participants perceiving significantly fewer synchronous presentations as the offset increased from 100 to 200 ms (VA, p<0.001; AV, p<0.001), from 200 to 300 ms (VA, p<0.001; AV, p<0.001), from 300 to 400 ms (VA, p<0.001; AV, p<0.001), and from 400 to 500 ms (VA, p<0.001; AV, p=0.001), based on Bonferroni pairwise comparisons. The effect of group was also significant (VA, F(1,42)=26.621, p<0.001; AV, F(1,42)=30.91, p<0.001), with adults being more accurate than either group of children and with the TD children being more accurate than the H-SLI children (all p-values <0.01). The effects of offset and group were further defined by an offset by group interaction (VA: F(8, 168)=17.117, p<0.001, ηp2=0.449; AV: F(8, 168)=9.866, p<0.001, ηp2=0.32). Follow-up tests revealed a significant effect of group at 200, 300, 400, and 500 ms offsets for both AV and VA stimuli (F(2,42)=9.729–43.809, all p-values <0.001). Specific group differences based on Bonferroni pairwise comparisons are shown in Table 5 (top panel). Children in the H-SLI group were significantly less accurate than their TD peers when making judgments about 300, 400, and 500 ms offsets in both AV and VA conditions (with the only exception being the VA300 stimulus, for which the group difference was not significant). The H-SLI children performed worse than adults on all but the shortest offsets (AV100 and VA100).
Just as importantly, TD children, while more accurate than their H-SLI counterparts, nonetheless had significantly more difficulty detecting audiovisual asynchrony than adults at all but three offsets (AV100, VA100, and VA500), revealing a prolonged developmental course for audiovisual temporal function.

Figure 2. Performance on the simultaneity judgment task.

The x axis displays all temporal offsets used in the study, with visual-auditory (VA) offsets in the left part of the graph and the audio-visual (AV) offsets in the right part of the graph. Numbers 1 through 5 correspond to temporal offsets of 100 ms to 500 ms, in 100 ms increments. For example, AV3 identifies a temporal offset in which the sound preceded the light by 300 ms. The y axis shows percent of synchronous perceptions. As offsets increased, percent of synchronous perceptions was expected to decrease. Error bars are standard errors of the mean.

Table 5.

Group differences on the simultaneity judgment task

n=15

| Comparison | VA500 | VA400 | VA300 | VA200 | VA100 | SYNC | AV100 | AV200 | AV300 | AV400 | AV500 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| H-SLI<TD | *** | *** | NS | NS | NS | NS | NS | NS | ** | *** | *** |
| TD<Adults | NS | *** | *** | * | NS | NS | NS | ** | *** | ** | * |
| H-SLI<Adults | *** | *** | *** | *** | NS | NS | NS | *** | *** | *** | *** |

n=12

| Comparison | VA500 | VA400 | VA300 | VA200 | VA100 | SYNC | AV100 | AV200 | AV300 | AV400 | AV500 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| H-SLI<TD | ** | * | NS | NS | NS | NS | NS | NS | NS | * | * |
| TD<Adults | NS | *** | *** | ** | NS | NS | NS | ** | *** | ** | * |
| H-SLI<Adults | *** | *** | *** | ** | NS | NS | NS | *** | *** | *** | *** |

Note. * p<0.05; ** p<0.01; *** p<0.001; NS = not significant.

The top panel lists group differences for the analysis that included all 15 participants in each group. The bottom panel lists group differences for the analyses that were conducted only on the H-SLI children without any known attention difficulties and corresponding TD children and adults.

Because three of the H-SLI children either had a diagnosis of ADHD or scored high on the Conners’ ADHD index, we wanted to make sure that these children’s performance on the SJT did not skew our group results. We therefore re-ran our analyses with 12 H-SLI children without known attention difficulties, 12 age-matched TD children, and 12 adults. As in the analysis with 15 participants in each group, the group by offset interaction was significant (VA, F(8,132)=13.61, p<0.001, ηp2=0.452; AV, F(8,132)=7.51, p<0.001, ηp2=0.313). Follow-up analyses showed that group differences still remained, albeit over a somewhat smaller range of offsets (see Table 5, bottom panel).

Groups did not differ in their responses to completely synchronous presentations. However, analyses of accuracy to AO and VO stimuli yielded a significant effect of group (AO, F(2,44)=7.291, p=0.002; VO, F(2,44)=12.197, p<0.001), with H-SLI children being less accurate than both TD children and adults, based on Bonferroni pairwise comparisons (AO, H-SLI vs. TD, p=0.051, H-SLI vs. adults, p=0.002; VO, H-SLI vs. TD, p=0.004, H-SLI vs. adults, p<0.001). TD children and adults did not differ from each other. Additionally, in order to make sure that the offset and group effects were due to errors in stimulus identification rather than due to the lack of responses, we also analyzed the number of misses in each group. The VA analysis yielded a significant group by offset interaction (F(8,168)=2.473, p=0.022, ηp2=0.105), with TD children making more misses than adults to the 200, 300, 400, and 500 ms offsets (in all cases, p<0.05). The TD group also had more misses than the H-SLI group to the 400 ms offset (p=0.01). The AV analysis showed the effect of group (F(2,42)=8.389, p=0.001, ηp2=0.285), with adults having fewer misses than either TD children (p=0.001) or H-SLI children (p=0.015). No difference between the two groups of children was found. Although groups differed significantly in some comparisons, such differences were on average between only 1 and 7 misses.

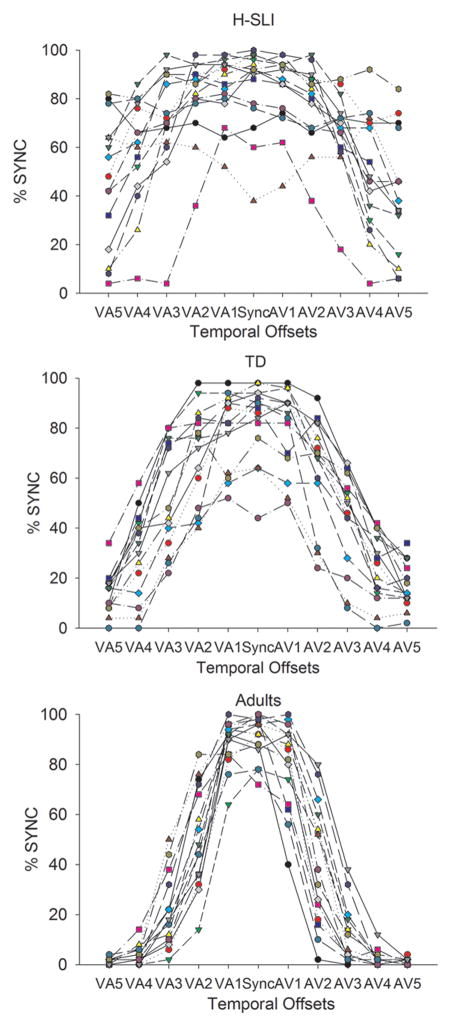

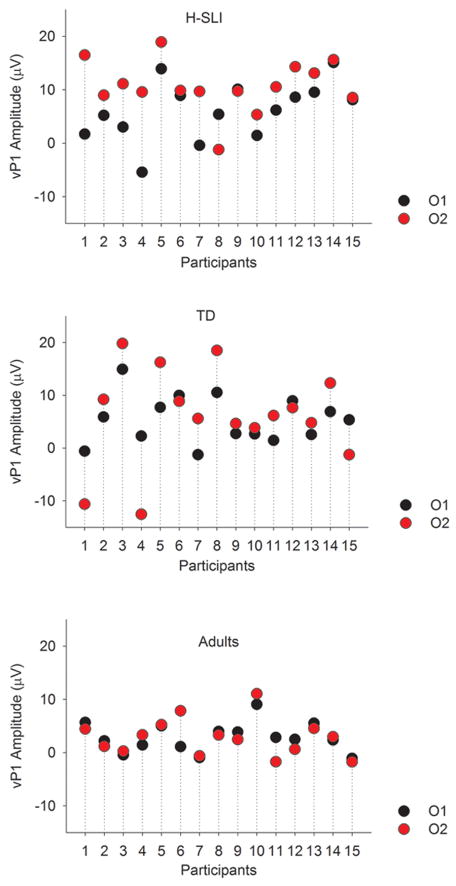

Visual inspection of behavioral SJT data revealed that there was much larger individual variability in the H-SLI group, with some children falling within the TD range on the SJT and some consistently unable to detect asynchrony even at the longest offsets. Individual variability in each group is shown in Figure 3.

Figure 3. Individual differences in performance on the simultaneity judgment task.

The x axis displays all temporal offsets used in the study, with VA offsets in the left part of the graph and the AV offsets in the right part of the graph. Numbers 1 through 5 correspond to temporal offsets of 100 to 500 ms, in 100 ms increments. For example, AV3 identifies a temporal offset in which the sound preceded the light by 300 ms. The y axis shows percent of synchronous perceptions. Each line represents a separate participant.

3.1.1. Regressions

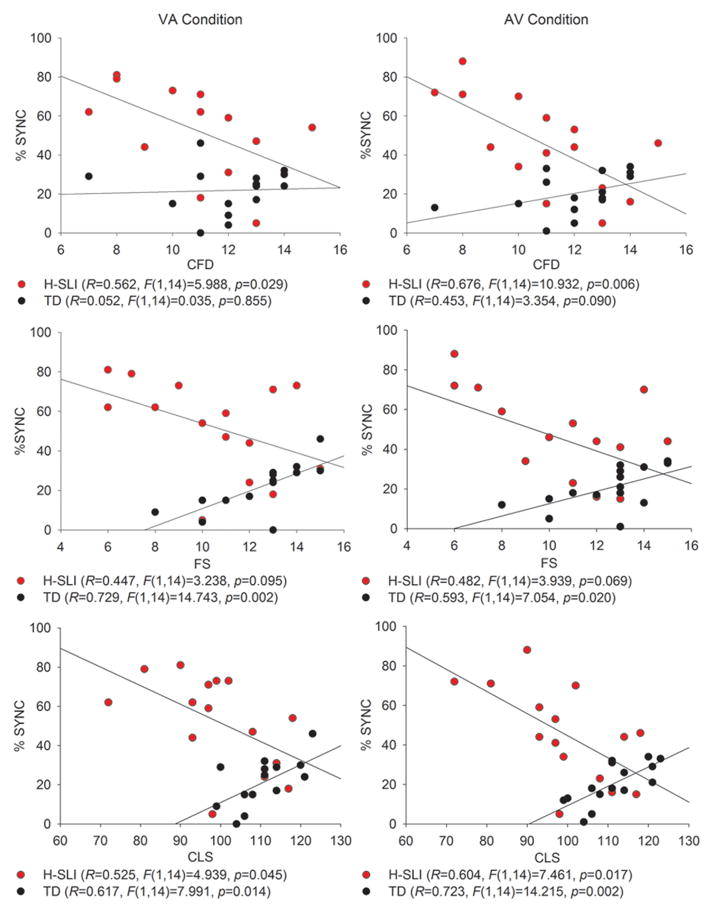

Figure 4 shows all significant regressions between the SJT and language measures, separately for the AV and VA conditions. In the H-SLI group, better performance on the SJT was associated with better scores on the C&FD subtest of CELF and with an overall higher CLS index, with a trend in the same direction for the FS subtest. This relationship held for both the AV and the VA offsets. In the TD group, better performance on the SJT appeared to be associated with worse scores on the FS subtest and the CLS index. However, further examination of the data strongly suggested that this paradoxical relationship arose because the younger children in the TD group had a higher CLS than the older children (there was a significant negative correlation between the CLS and age in the TD group: R=.561, F(1,14)=5.976, p=0.03) but had more difficulty with the SJT than their older counterparts. In a separate study in our laboratory, we tested seventeen 7–8 year-old and nineteen 10–11 year-old TD children on the same SJT (Kaganovich, in preparation). In neither group of children was worse performance on the SJT associated with better CELF-4 scores. These results suggest a relationship between audiovisual temporal function and language aptitude in H-SLI children. However, given the small number of participants in each group, a replication of these findings is needed.

Figure 4. Regressions between performance on the simultaneity judgment task and language tests.

The language measures that showed a significant relationship with the SJT were the Concepts and Following Directions (top panel) and the Formulated Sentences (middle panel) subtests of CELF-4, as well as the composite language score of CELF-4 (bottom panel). The y axis is the average percent of synchronous perceptions over the two longest offsets of 400 and 500 ms.

The results of the multiple regression analysis that aimed to predict performance on the SJT using group membership, age, the ADHD index, and the TEA-Ch scores are summarized in Table 6. Only group, age, and the ADHD index had p-values below .05 and were therefore included in the model. The final model accounted for approximately 80% of the variability (R2 = 0.799) in performance on the AV offsets of the SJT and for approximately 60% of the variability (R2 = 0.593) in performance on the VA offsets of the SJT. The order in which variables were selected for the model differed between the AV and VA analyses: the ADHD index entered before group membership for the AV offsets, whereas group membership entered before the ADHD index for the VA offsets. More importantly, the regression coefficients for the predictors were similar for the AV and VA analyses. The ADHD index had a positive coefficient, and age had a negative coefficient. Thus, higher ADHD index scores were associated with worse performance on the SJT, while older age was associated with better performance on the SJT. Importantly, in both analyses being a member of the H-SLI group was associated with worse performance on the SJT.

Table 6.

Multiple regression results

AV offset

| Model | Variable | B | SE | Beta | p | R2 | R2 change |
|---|---|---|---|---|---|---|---|
| 1 | Constant | −50.439 | 19.864 | | .017 | .416 | .416 |
| | ADHD index | 1.741 | | .645 | .000 | | |
| 2 | Constant | 3.666 | 19.617 | | .853 | .663 | .246 |
| | ADHD index | 1.386 | .312 | .514 | .000 | | |
| | Group | −24.207 | 5.45 | −.514 | .000 | | |
| 3 | Constant | 65.247 | 21.304 | | .005 | .799 | .136 |
| | ADHD index | 1.519 | .248 | .563 | .000 | | |
| | Group | −23.282 | 4.295 | −.494 | .000 | | |
| | Age | −7.662 | 1.827 | −.372 | .000 | | |

VA offset

| Model | Variable | B | SE | Beta | p | R2 | R2 change |
|---|---|---|---|---|---|---|---|
| 1 | Constant | 70.467 | 10.684 | | .000 | .33 | .33 |
| | Group | −25.067 | 6.757 | −.574 | .001 | | |
| 2 | Constant | 20.545 | 23.319 | | .386 | .445 | .115 |
| | Group | −21.15 | 6.479 | −.484 | .003 | | |
| | ADHD index | .877 | .371 | .351 | .025 | | |
| 3 | Constant | 80.023 | 28.085 | | .008 | .593 | .148 |
| | Group | −20.256 | 5.662 | −.464 | .001 | | |
| | ADHD index | 1.005 | .326 | .402 | .005 | | |
| | Age | −7.4 | 2.408 | −.388 | .005 | | |

B = regression coefficient, SE = Standard Error
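
The R2 change column is the gain in explained variance when each predictor enters the model. The following Python sketch illustrates that bookkeeping; it is not the authors' analysis. The data are synthetic, and the variable names (`adhd`, `group`, `age`), the 0/1 group coding, and the entry order are assumptions made for illustration. Ordinary least squares via NumPy stands in for whatever statistics package was actually used. The last lines check that successive differences of the R2 values reported for the AV offsets agree with the reported R2 change values to within rounding.

```python
import numpy as np

def r_squared(predictors, y):
    """R^2 of an ordinary least-squares fit that includes an intercept."""
    X = np.column_stack([np.ones(len(y)), predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ coef
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return 1.0 - ss_res / ss_tot

def r2_by_step(columns, y):
    """Cumulative R^2 as predictors are entered one at a time, in the given order."""
    return [r_squared(np.column_stack(columns[: k + 1]), y)
            for k in range(len(columns))]

# Synthetic stand-in data (hypothetical; NOT the study's data).
rng = np.random.default_rng(0)
n = 30
adhd = rng.normal(50, 8, n)             # hypothetical ADHD index scores
group = np.repeat([0.0, 1.0], n // 2)   # hypothetical group code (coding is an assumption)
age = rng.uniform(7, 11, n)             # hypothetical ages in years
y = 60 + 1.5 * adhd - 23 * group - 7.5 * age + rng.normal(0, 8, n)

steps = r2_by_step([adhd, group, age], y)   # R^2 after each predictor enters
changes = np.diff([0.0] + steps)            # R^2 change at each step

# Consistency check on the values reported in Table 6 (AV offsets).
reported_r2 = [0.416, 0.663, 0.799]
reported_change = [0.246, 0.136]
recomputed_change = np.diff(reported_r2)
```

Because each model nests the previous one, R2 cannot decrease from one step to the next, so every entry of `changes` is non-negative. The size of each change depends on the order of entry, which is why the AV and VA analyses can list different R2 change values for the same predictor.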

3.2 ERP Results

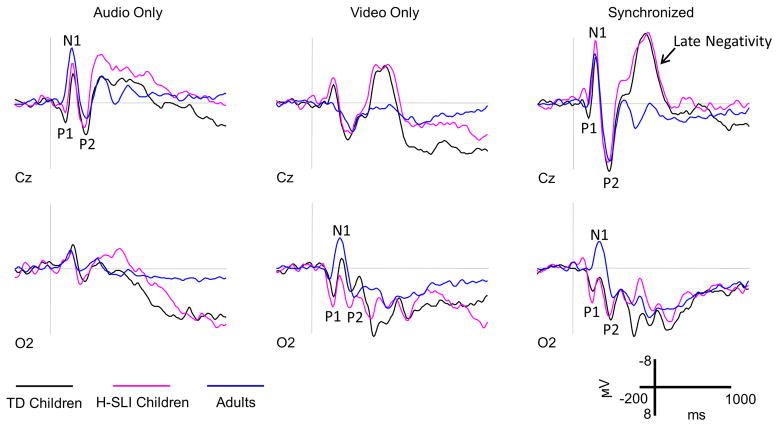

ERPs elicited by AO, VO, and audiovisually synchronous stimuli are shown in Figure 5. ERP waveforms elicited by these stimuli in children and adults differed in peak latency, amplitude, and overall morphology. Generally speaking, adults’ components peaked earlier than children’s. Adults’ P1 was generally smaller, and adults’ N1 larger, in amplitude than the corresponding components in children’s waveforms. Lastly, children’s responses to visual and audiovisual stimuli contained an anterior negativity at approximately 400 ms post-stimulus onset, which was absent in adults’ waveforms.

Figure 5. ERPs elicited by AO, VO, and synchronous stimuli.

ERPs elicited by AO, VO, and synchronous audiovisual stimuli are shown for the H-SLI, TD, and adult groups over two representative electrodes. Negative is plotted up.

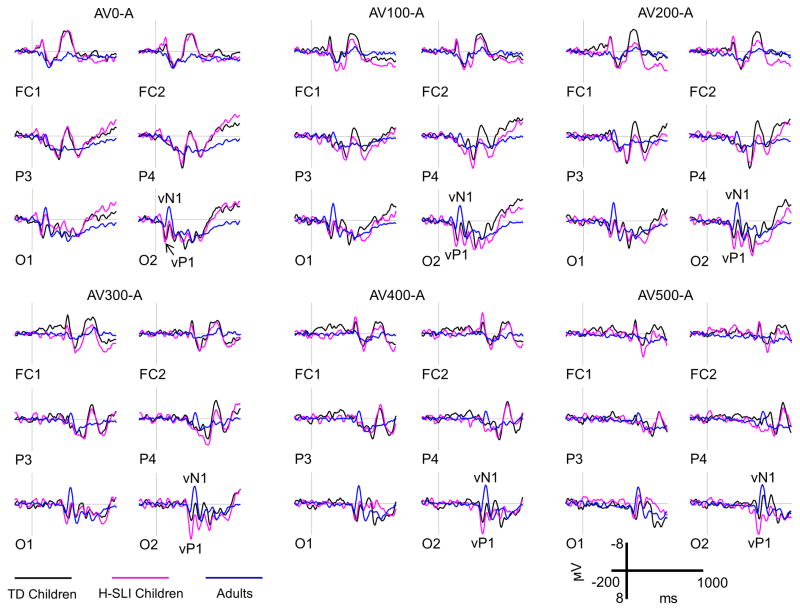

ERPs elicited by the visual part of audiovisual stimulation are shown in Figure 6, with measured components marked on the O2 site. Analysis of vP1 showed a larger amplitude of this component in the H-SLI children compared to adults at 200, 300, and 400 ms offsets over the right hemisphere (group by offset by hemisphere, F(10,210)=1.944, p=0.041, ηp2=0.085; H-SLI vs. adults, all p<0.05 based on Bonferroni pairwise comparison). A similar finding for the 500 ms offset fell just short of significance (H-SLI vs. adults, p=0.053). The vP1 amplitude was larger in H-SLI than in TD children only at the 300 ms offset (p=0.034). Additionally, in the H-SLI group vP1 amplitude was significantly larger over the right compared to the left occipital site for 0, 300, 400, and 500 ms offsets (in all cases, p<0.05), with a trend in the same direction for the 200 ms offset (p=0.085). This hemispheric effect was absent in TD children and adults (see Figure 7). Analysis of the vN1 amplitude indicated that it was significantly smaller over the right than over the left occipital site only in the H-SLI group (group by hemisphere, F(2,42)=9.498, p<0.001, ηp2=0.311; hemisphere in H-SLI, F(1,14)=15.218, p=0.002, ηp2=0.521). This effect did not interact with offset. Analyses of vP1 and vN1 latencies did not yield any significant group differences between TD and H-SLI children.
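
For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom as ηp2 = (F × df1) / (F × df1 + df2). The short Python sketch below applies this standard identity to F values reported in this section. The function name is hypothetical and chosen only for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F values and degrees of freedom as reported in the text above.
vp1_three_way = partial_eta_squared(1.944, 10, 210)     # group by offset by hemisphere
vn1_group_by_hemi = partial_eta_squared(9.498, 2, 42)   # group by hemisphere
vn1_hemi_hsli = partial_eta_squared(15.218, 1, 14)      # hemisphere within H-SLI
```

Rounded to three decimals, these reproduce the ηp2 values given in the text (0.085, 0.311, and 0.521).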

Figure 6. Visual ERPs.

Visual ERPs elicited by the appearance of the explosion-shaped figure are shown for the H-SLI, TD, and adult groups over a representative set of electrodes. All ERPs are difference waves that were created by subtracting auditory only trials from AV stimuli with different offsets in order to reveal brain activity specific to visual stimulation. Difference waves are labeled based on the subtraction that was used to create them. For example, the AV500-A panel shows visual ERPs that were revealed by subtracting auditory only trials from ERPs elicited by audiovisual stimuli with a 500 ms offset. Measured components are marked on the O2 site. Negative is plotted up.
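
The subtraction described in this caption can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' processing pipeline. The data are synthetic and single-channel, and the sampling step, trial counts, component shapes, and time-locking to sound onset are all made up. The function name `difference_wave` is hypothetical. The sketch also assumes a purely additive model in which the auditory response is the same in audiovisual and auditory-only trials.

```python
import numpy as np

def difference_wave(bimodal_epochs, unimodal_epochs):
    """Average each condition over trials, then subtract the unimodal average.

    Both inputs have shape (n_trials, n_samples) and share one time axis.
    """
    return bimodal_epochs.mean(axis=0) - unimodal_epochs.mean(axis=0)

# Synthetic single-channel data (hypothetical values throughout).
rng = np.random.default_rng(1)
times = np.arange(-100, 1000, 4)                         # ms relative to sound onset
auditory = -2.0 * np.exp(-((times - 100) / 30.0) ** 2)   # response to the tone
visual = 3.0 * np.exp(-((times - 600) / 40.0) ** 2)      # response to a flash shown at 500 ms

n_trials = 200
av500 = auditory + visual + rng.normal(0.0, 1.0, (n_trials, times.size))
a_only = auditory + rng.normal(0.0, 1.0, (n_trials, times.size))

# "AV500-A": activity attributable to the visual stimulus.
av500_minus_a = difference_wave(av500, a_only)
peak_time = times[np.argmax(av500_minus_a)]   # latency of the largest positive deflection
```

Under the additive assumption, the auditory contribution cancels and the remaining waveform approximates the visual response, so component amplitudes and latencies can then be measured on the difference wave.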

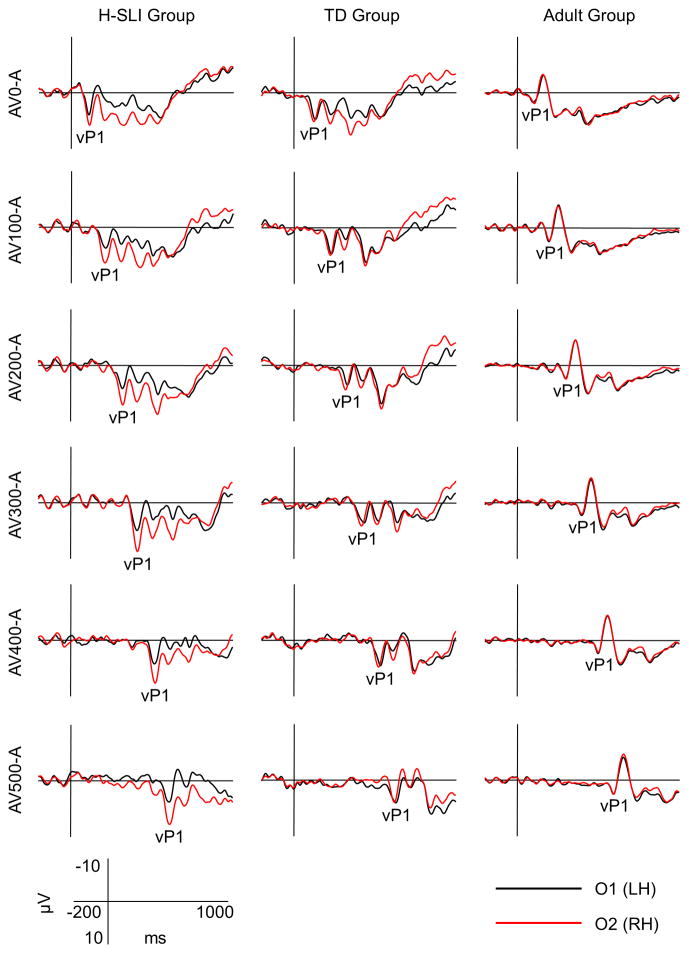

Figure 7. Hemispheric asymmetry of visual ERPs.

Visual ERPs elicited over the left hemisphere (LH) and right hemisphere (RH) occipital sites are overlaid for the three groups of participants and for each of the six offsets used in the study. The sustained positivity over the RH occipital site in the H-SLI group started at the onset of the vP1 component and continued for approximately 500 ms. Other visual components are not marked.

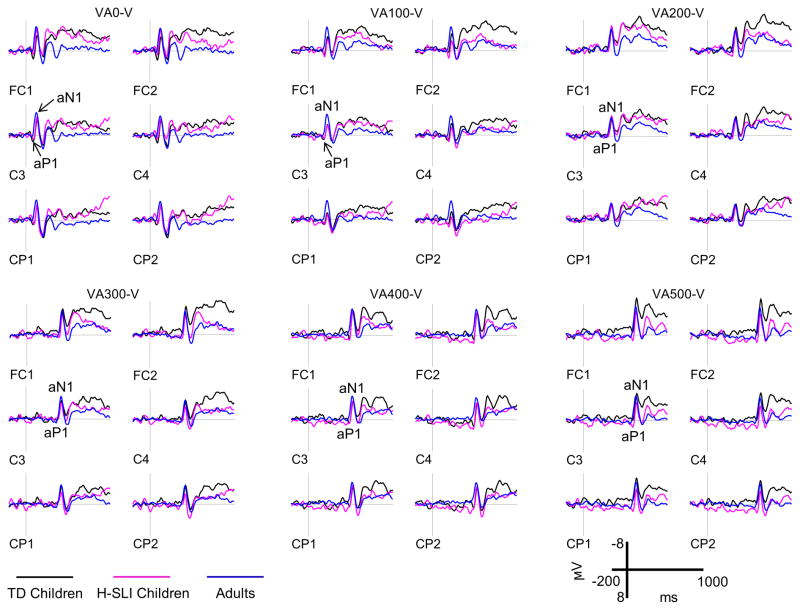

ERPs elicited by the auditory part of audiovisual stimulation are shown in Figure 8, with measured components marked on the C3 site. Several earlier reports showed that an adult-like aN1 may not appear in children’s ERPs until late childhood when sounds are presented at short inter-stimulus intervals (e.g., Sharma et al., 1997; Sussman et al., 2008). However, because stimuli presented at short offsets in our study were from different modalities, both groups of children exhibited clear aP1 and aN1 components to sound onsets. Analysis of aP1 amplitude yielded no significant results. The aP1 latency, however, was overall shorter in the H-SLI group compared to TD children (mid-lateral sites: group, F(1,28)=21.278, p<0.001, ηp2=0.432; midline sites: group, F(1,28)=14.626, p=0.001, ηp2=0.343). This effect interacted with offset (mid-lateral sites: group by offset, F(5,140)=34.947, p<0.001, ηp2=0.555; midline sites, F(5,140)=19.546, p<0.001, ηp2=0.411), with shorter latency in the H-SLI group for the 400 ms offset (mid-lateral sites: F(1,28)=199.758, p<0.001, ηp2=0.877; midline sites, F(1,28)=176.532, p<0.001, ηp2=0.863) and a trend in the same direction for the 500 ms offset (mid-lateral sites, F(1,28)=2.757, p=0.108, ηp2=0.09; midline sites, F(1,28)=3.864, p=0.059, ηp2=0.121). Analysis of aN1 amplitude revealed that it was significantly enlarged in adults compared to H-SLI children and marginally enlarged compared to TD children at the 100 ms offset (mid-lateral: group by offset, F(10,210)=2.359, p=0.014, ηp2=0.101, group, F(2,42)=6.504, ηp2=0.236; H-SLI vs. adults, p=0.003, TD vs. adults, p=0.064; midline: group by offset, F(10,210)=2.202, p=0.023, ηp2=0.095; group, F(2,42)=7.911, p=0.001, ηp2=0.274; H-SLI vs. adults, p=0.001, TD vs. adults, p=0.058) and that it was larger in TD children than in H-SLI children at the 500 ms offset (mid-lateral: group, F(2,42)=3.242, p=0.049; H-SLI vs. TD, p=0.047; midline: group, F(2,42)=2.843, p=0.069, ηp2=0.119; H-SLI vs. TD, p=0.068). Finally, analysis of the aN1 latency showed that the H-SLI group had marginally shorter latencies compared to their TD peers over the midline sites only (F(1,28)=3.910, p=0.058, ηp2=0.123).

Figure 8. Auditory ERPs.

Auditory ERPs elicited by the 2 kHz pure tone are shown for the H-SLI, TD, and adult groups over a representative set of electrodes. All ERPs are difference waves that were created by subtracting visual only trials from VA stimuli with different offsets in order to reveal brain activity specific to auditory stimulation. Difference waves are labeled based on the subtraction that was used to create them. For example, the VA500-V panel shows auditory ERPs that were revealed by subtracting visual only trials from ERPs elicited by visual-auditory stimuli with a 500 ms offset. Measured components are marked on the C3 site. Negative is plotted up.

4. Discussion

This study examined sensitivity to audiovisual temporal asynchrony in TD and H-SLI school-age children and adults. We found that children in the H-SLI group were profoundly less sensitive to temporal separations between auditory and visual modalities than their TD peers and adults, both when the sound preceded the visual stimulus and when the visual stimulus preceded the sound. These group differences persisted even when separations between modalities reached 400 and 500 ms. Just as importantly, TD children were less sensitive to audiovisual asynchrony than young adults, revealing a protracted developmental course of audiovisual temporal function. Using a multiple regression analysis, we also showed that, for children, group membership (H-SLI or TD), age, and Conners’ ADHD index together accounted for 60% (the VA stimuli) to 80% (the AV stimuli) of variability in SJT performance, with H-SLI status, a higher ADHD index (even in the absence of an official ADHD diagnosis), and younger age being predictive of poor audiovisual temporal function.

A separate analysis of the relationship between SJT performance and language aptitude revealed that those H-SLI children who did better on the SJT also had higher scores on the C&FD subtest of CELF-4 and the overall language aptitude composite score, thus suggesting a relationship between audiovisual temporal discrimination and language proficiency in this group. This relationship was absent in the TD children. Given the relatively small number of participants in each group, this result requires replication. The importance of audiovisual processing for early phonological and lexical development has been well established. However, one might hypothesize that once these linguistic systems reach a certain level of maturity, visual information, and audiovisual temporal discrimination in particular, may play a smaller role. While language skills are relatively mature by mid-childhood in TD children, they often remain vulnerable well into adolescence and even adulthood in individuals with a history of SLI (Hesketh & Conti-Ramsden, 2013). Therefore, in the H-SLI group, a greater ability to use visual cues is likely to translate into better linguistic outcomes even during mid-childhood, the age range of our participants. Such prolonged dependence on visual information in children with SLI has also been reported by Botting and colleagues (Botting, Riches, Gaynor, & Morgan, 2010), who showed that children with SLI rely on gesture for speech comprehension significantly longer than their TD peers, likely due to weaker auditory speech comprehension skills in this group.

Alternatively, a correlation between the SJT and language aptitude in the H-SLI group may be due to some other cognitive function that plays a prominent role in both tasks. Previous research has demonstrated that children with SLI have difficulty with engaging, sustaining, and selectively focusing their attention (Dispaldro et al., in press; Finneran, Francis, & Leonard, 2009; Spaulding, Plante, & Vance, 2008; Stevens, Sanders, & Neville, 2006); therefore, a difference in attentional skills might have contributed to differences in group performance on both the SJT and CELF-4. To examine this possibility, we evaluated attention skills in both groups via two separate measures. The ADHD Index of the Conners’ Rating Scales – Revised was used to identify children who may be at risk for developing ADHD, while the TEA-Ch test directly examined children’s ability to sustain and divide attention. The two groups of children did not differ in either the ADHD index or the TEA-Ch scores; however, based on the multiple regression analysis, the ADHD index accounted for a significant amount of variability in performance on the SJT. Nevertheless, after removing the two H-SLI children with the ADHD index in the highly atypical range and one H-SLI child with an official diagnosis of ADHD from the SJT analysis, the group difference was still significant in both the AV and VA conditions, albeit over a somewhat smaller range of audiovisual offsets. In sum, attention does appear to have played some role in children’s performance on the SJT; however, group differences in the audiovisual temporal function cannot be fully accounted for by differences in attention skills.