Abstract

Classic psychological and economic studies argue that punishment is the standard response to violations of fairness norms. Typically, individuals are given only two options: punish the transgressor or do nothing. However, such a narrow choice set may fail to capture stronger preferences for alternative ways of restoring justice. Here we show, in contrast to the majority of findings on social punishment, that other forms of justice restoration (e.g., compensating the victim) are strongly preferred to punitive measures. Furthermore, these preferences depend on the perspective of the deciding agent. When people are the recipient of an unfair offer, they prefer to compensate themselves without seeking retribution, even when punishment is free. Yet when people observe a fairness violation targeted at another, they switch to the most punitive option. Together these findings indicate that humans prefer alternative forms of justice restoration to punishment alone.

Introduction

Social norms, such as fairness concerns, provide prescribed standards for behavior that promote social efficiency and cooperation1-3. How humans resolve fairness transgressions has been extensively studied in the context of simple, constrained interactions4. Traditionally, people are presented with two options: engage in punitive behavior or do nothing. In this context, people typically respond to fairness violations with punishment5,6. However, such a narrow range of options may fail to capture alternative, preferred strategies for restoring justice that are frequently observed in everyday life. Here, we test alternative preferences for justice restoration by broadening the decision-making space to include compensatory measures in addition to punishment. Since impartiality is a core principle of many legal systems and is believed to influence judicial decision-making, we further test whether these preferences are differentially deployed depending on the perspective of the deciding agent. That is, do unaffected third parties sanction fairness violations differently than personally affected second parties?

Demonstrations of how intensely humans endorse punishment as a means of ensuring fair and equitable outcomes2 suggest that punishment is the standard response to violations of justice. Hundreds of studies using the Ultimatum Game illustrate that people are willing to incur personal monetary costs in order to punish fairness violations. In the Ultimatum Game, two players must agree on how to split a sum of money. First, the Proposer makes an offer of how to divide the money. The Responder can then either accept the offer, in which case the money is split as proposed, or reject the offer, in which case neither player receives any money7. It is well established that Responders will forgo even large monetary benefits by rejecting the offer in order to punish the Proposer for offering an unfair split8,9. In fact, extremely unfair offers are rejected around 70% of the time10.

In the real world, however, punishment is rarely the only option for restoring justice. There is a broad range of alternative responses, reflecting the idea that the transgressor and the victim can each be valued differently depending on one’s social preferences and conceptual sense of justice. For instance, some people may prefer to compensate the victim11, or to punish the transgressor such that the penalty is proportionate to the harm committed12; such preferences may play powerful roles in motivating the restoration of justice. Although alternative forms of justice restoration date back at least four millennia13, no research that we are aware of has examined these alternatives alongside the prototypical punitive options.

The question of justice restoration is important because most legal systems are largely based on the principle that social order depends on punishment. For much of modern civilization, formal systems (such as judges and juries14,15) have been structured to mete out justice. The underlying assumption is that people judge a fairness violation differently depending on whether it is directed at another individual or at the self. Given the distinct asymmetries between the way people perceive themselves and their peers16, it is thought that unaffected, putatively dispassionate third parties sanction transgressors in a less egocentric and more deliberate manner than victims do17. Indeed, theorists suggest that people experience psychologically close events (e.g., those experienced personally) in a detailed, concrete manner, whereas socially distant objects are construed in terms of high-level, abstract characteristics and principles18,19. Psychological distance from a transgression may therefore bias how people evaluate fairness violations and influence their subsequent preferences for restoring justice. Accordingly, we theorized that individuals would endorse different routes to justice restoration depending on whether they are the direct recipient of a fairness violation or merely observe it.

To examine alternative motivations for restoring justice and to test whether individuals navigate fairness violations differently for themselves and for another, we developed a novel economic game that broadens the choice space to include a range of punitive and compensatory options for restoring justice that are absent from classic experimental games. To model alternative options for justice restoration frequently observed in the real world, we not only presented participants with the opportunity to accept or reject the proposed split (as in the Ultimatum Game) but also offered novel options that reflect a range of other-regarding preferences.

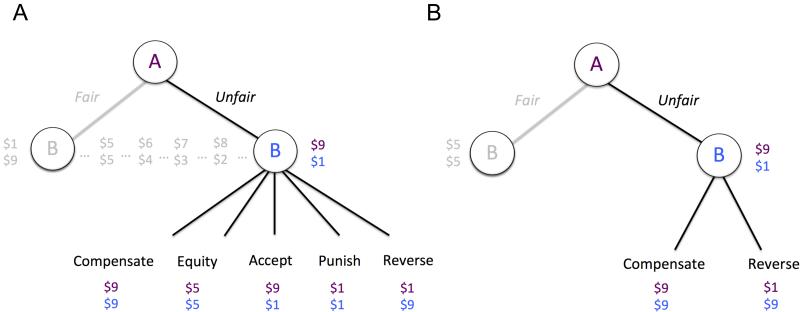

In our task, Player A moves first and proposes a division of a $10 pie with Player B (Player A: $10 - x, Player B: x; Fig 1A). Player B can then reapportion the money by choosing from the following five options: 1) accept: agree to the proposed split ($10 - x, x)7; 2) punish: reduce Player A’s payout to the amount originally offered to Player B (x, x)20; 3) equity: split the pie equally so that both players receive half of the initial endowment ($5, $5)4; 4) compensate: increase Player B’s own payout to equal Player A’s payout, enlarging the pie to maximize both players’ monetary outcomes ($10 - x, $10 - x)21; and 5) reverse: swap the proposed split, reflecting a ‘just deserts’ motive in which the perpetrator deserves punishment proportionate to the wrong committed12, so that Player A is punished and Player B is compensated (x, $10 - x)22,23. See the supplementary discussion for in-depth explanations of each option. As in many classic experimental economics games that explore trade-offs between discrete choice pairs7,24, participants were presented with only two options on any given trial. Each option (‘compensate’, ‘equity’, ‘accept’, ‘punish’, ‘reverse’) was randomly paired with one other option per trial, so that every possible pair appeared, for a total of 10 unique pairs (Fig 1B). When making their offers, Player A did not know which two options would be available to Player B on a given trial.
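The five options can be written as simple payoff rules in the offer x. The Python sketch below is our own illustration, not the task software used in the experiments; the `payoffs` function and the `PIE` constant are hypothetical names, and amounts are whole dollars as in Experiment 1. It also enumerates the option pairs with `itertools.combinations`, which yields exactly the 10 unique pairs shown across trials.

```python
from itertools import combinations

PIE = 10  # Experiment 1: Player A splits $10 in whole dollars (hypothetical constant name)
OPTIONS = ["compensate", "equity", "accept", "punish", "reverse"]


def payoffs(option: str, x: int, pie: int = PIE) -> tuple:
    """Return (Player A payout, Player B payout) after Player B picks `option`,
    given that Player A kept pie - x and offered x to Player B."""
    kept = pie - x
    rules = {
        "accept": (kept, x),             # proposed split stands
        "punish": (x, x),                # A cut down to the amount offered to B
        "equity": (pie // 2, pie // 2),  # even split (assumes an even pie)
        "compensate": (kept, kept),      # B raised to A's payout; pie grows
        "reverse": (x, kept),            # payouts swapped ("just deserts")
    }
    return rules[option]


# Every unordered pair of options; each trial shows exactly one pair.
PAIRS = list(combinations(OPTIONS, 2))

if __name__ == "__main__":
    for option in OPTIONS:
        print(option, payoffs(option, x=1))
    print(len(PAIRS), "option pairs")
```

For an offer of $1 (x = 1), ‘compensate’ returns (9, 9) and ‘reverse’ returns (1, 9): Player B receives $9 either way, and only Player A’s payout differs.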

Figure 1. Game Structure.

A) The sequential game. Player A can make any offer to Player B. Here we illustrate all the options that Player B has to reapportion the money after being offered a split of $9/$1. On each round, however, Player B is presented with a forced choice between two options (e.g. compensate v equity, compensate v accept, compensate v punish, etc) for a total of 10 pairwise comparisons. Options were randomly paired and presented across the experiment. We focused our analysis on unfair offers, splits of $6/$4 through $9/$1. B) An example of a round where Player A offers Player B $1. In this case Player B is then presented with the option to either increase their own payout without decreasing Player A’s payout (compensate), or reverse the payouts such that Player A receives $1 and Player B receives $9 (reverse).

Although decades of research demonstrate that individuals consistently retaliate against those who behave unfairly, we find that when alternative options for dealing with fairness violations are available, punishment is not the preferred response: participants favor non-punitive options over it. However, when making the same decision on behalf of someone else who has experienced a fairness violation, individuals modify their responses and apply the harshest form of punishment to the transgressor. Together these results challenge our current understanding of social preferences and the emphasis placed on punitive behavior.

RESULTS

Preferences for justice restoration extend beyond punishment

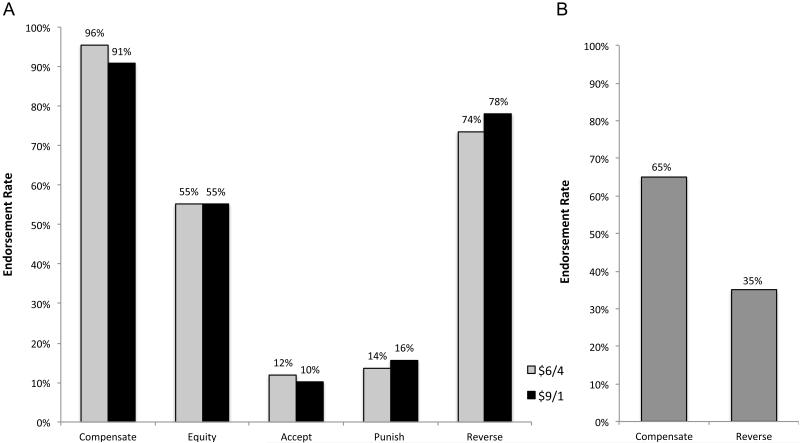

Fig 2A shows choice behavior (N=112; 42 males, mean age 20.8±2.11) for moderately and highly unfair offers in Experiment 1. We computed each option’s endorsement rate as the number of times it was selected divided by the number of times it was presented during the experiment, so that each option’s rate ranges from 0 to 100%. For example, we counted how often ‘accept’ was chosen across its pairings with every other option, and did the same for ‘punish’, ‘compensate’, ‘equity’, and ‘reverse’. Strikingly, across all offer types, participants chose ‘accept’ and ‘punish’ least often (10% and 16% endorsement rates, respectively; Supplementary Table 1), the two options most similar to those in the traditional Ultimatum Game. Instead, participants most preferred ‘compensate’, increasing their own payout and applying no punishment to Player A (92% endorsement rate; Supplementary Table 1). This preference remained robust even when participants were offered a highly unfair split of $9/$1 (Fig 2A).
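A minimal sketch of this endorsement-rate calculation, assuming each trial is recorded as the pair of options shown plus the option chosen (a hypothetical data layout; the study’s actual data format is not described here). Because each option’s rate is computed over its own presentations, the rates across options need not sum to 100%, which is how ‘compensate’ can reach 92% while ‘accept’ and ‘punish’ sit at 10% and 16%.

```python
from collections import Counter


def endorsement_rates(trials):
    """Endorsement rate per option: times chosen / times presented.

    `trials` is an iterable of (pair, choice) tuples, where `pair` holds the
    options shown on that trial and `choice` is the one the participant picked.
    """
    presented = Counter()
    chosen = Counter()
    for pair, choice in trials:
        if choice not in pair:
            raise ValueError(f"choice {choice!r} was not offered in {pair}")
        presented.update(pair)  # each shown option counts as one presentation
        chosen[choice] += 1
    return {option: chosen[option] / presented[option] for option in presented}


if __name__ == "__main__":
    # Hypothetical trials, not study data.
    example = [
        (("compensate", "punish"), "compensate"),
        (("compensate", "reverse"), "reverse"),
        (("accept", "punish"), "punish"),
        (("accept", "equity"), "equity"),
    ]
    print(endorsement_rates(example))
```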

Figure 2. Choice behavior for restoring justice.

We computed endorsement rates as the frequency with which an option was selected out of all trials on which it was available, such that each option’s endorsement rate ranges from 0 to 100%. A) Results (n=112) reveal that compensation is the most preferred choice, even for highly unfair splits. B) The choice pair compensate v reverse (game structure illustrated in Figure 1B) gives Player B the same payoff under both options, such that Player B can both compensate themselves and punish Player A at no cost. Even when punishment is free, participants significantly prefer to compensate themselves and apply no punishment to Player A; Pearson’s χ²=9, 1df, p=0.003, φ=0.15.

Because the choice pair ‘compensate’ versus ‘reverse’ holds Player B’s monetary benefit constant (after receiving a highly unfair split of $9/$1, choosing either compensate or reverse yields the same $9 payout to Player B), we can use this pair to test other-regarding preferences directly while controlling for Player B’s fiscal efficiency. Results reveal that when responding to unfair offers, participants prefer to compensate rather than reverse, even though punishment is free (Pearson’s χ²=9, 1df, p=0.003, φ=0.15; Fig 2B). In other words, despite having the option to maximize their payout while simultaneously punishing Player A (selecting ‘reverse’), participants preferred to maximize their payoff without punishing Player A. While most previous research has focused on punishment3 as the primary method of restoring justice, these findings illustrate that, when possible, people actually prefer compensation to punishment.
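The text reports Pearson’s χ² with 1 df and φ but does not spell out the computation. One common reading, assumed here, is a goodness-of-fit test of the compensate and reverse counts against an even 50/50 split, with φ = √(χ²/N). The sketch uses only the standard library: for 1 df, the χ² tail probability equals `math.erfc(math.sqrt(chi2 / 2))`. The function name `binary_choice_test` and the example counts are hypothetical, not the study’s data.

```python
import math


def binary_choice_test(n_a: int, n_b: int):
    """Pearson chi-square goodness-of-fit of two choice counts against a 50/50 split.

    Returns (chi2, p_value, phi) for 1 degree of freedom.
    """
    n = n_a + n_b
    if n == 0:
        raise ValueError("need at least one observation")
    expected = n / 2
    chi2 = ((n_a - expected) ** 2 + (n_b - expected) ** 2) / expected
    # For 1 df, P(chi-square > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    phi = math.sqrt(chi2 / n)  # effect size for a 1-df test
    return chi2, p_value, phi


if __name__ == "__main__":
    # Hypothetical counts: 60 'compensate' vs 40 'reverse' choices.
    print(binary_choice_test(60, 40))
```

For 60 versus 40 choices this gives χ² = 4, p ≈ 0.0455, and φ = 0.2. Plugging the reported χ² = 9 into the same tail formula gives p ≈ 0.003, consistent with the value in the text.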

In a second experiment, Player Bs were offered varying splits of a $1 endowment from Player A, ranging from moderately unfair to highly unfair ($.60/$.40 to $.90/$.10) in 10-cent increments. As in Experiment 1, participants (N=97, Experiment 2a) did not favor the traditional Ultimatum Game options of accepting the offer or punishing Player A for proposing an unfair split; instead, their strongest preference was to compensate (84% endorsement rate of ‘compensate’ across all offer types; Supplementary Table 2a). Again, for unfair offers, the choice pair compensate v. reverse reveals that even when punishment is free, individuals still prefer to compensate and refrain from punishing Player A (Pearson’s χ²=7.7, 1df, p=0.005, φ=0.14). Together, these findings indicate that when alternative forms of justice restoration are available, compensating the victim is strongly preferred to punishing the transgressor.

Second and third party preferences for justice restoration

To test whether being directly affected by a fairness violation influences decisions to restore justice, we also examined participants’ behavior when they acted as a non-vested third party (Player C) observing interactions between Players A and B (N=261, Experiment 2b). That is, participants made decisions on behalf of others, such that payoffs went to Players A and B and not to themselves. Unlike in the ‘Self’ (second-party) condition, in which participants played the game as Player B (Experiments 1 and 2a), these ‘Other’ (third-party) decisions were neither costly nor beneficial to the decider. As in the Self condition, Player Cs (Other condition) showed little preference to ‘accept’ the offer or to ‘punish’ Player A for proposing an unfair split to Player B (Supplementary Table 2b).

While individuals chose to compensate at similar rates for themselves and for another when the offer was relatively fair (McNemar’s χ²=1.2, 1df, p=0.27), when responding to unfair offers Player Cs selected ‘reverse’ (the option that both compensates Player B and punishes Player A) significantly more often than Player Bs did for themselves (choice pair compensate/reverse: McNemar’s χ²=13.5, 1df, p<0.001, φ=0.14; Supplementary Fig 2). In other words, although participants showed no preference for punishing Player A when directly affected by a fairness violation (i.e., as a second party), when observing a fairness violation targeted at another (i.e., as a third party), they significantly increased their retributive responding.

Since one motive for exploring justice restoration was to investigate whether broadening the decision-making space (to include a plurality of options) affects choice behavior, we ran four additional experiments (analyzed together, see supplementary materials) where all five options were available on every trial. In these studies, participants were offered splits of $1 and made decisions both for themselves and on behalf of others in a within-subjects design. That is, participants made decisions both when they were personally affected by a fairness violation (as Player B; Self condition), and also on behalf of another player who was affected by a fairness violation (as Player C; Other condition).

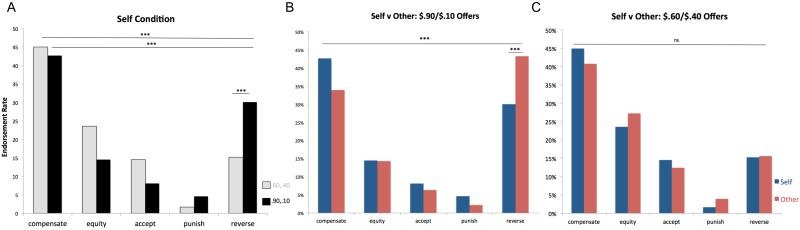

As in our previous experiments, participants (N=540) showed strong preferences to ‘compensate’ when deciding for themselves (42% endorsement rate across all offer types; Supplementary Fig 2A) and did not preferentially choose to ‘accept’ the offer or ‘punish’ Player A (10% and 3% endorsement rates, respectively). However, as the split became increasingly unfair, participants were more likely to incorporate punitive measures17, nearly doubling their endorsement of the ‘reverse’ option, in which they simultaneously compensated themselves and punished Player A (15% endorsement of ‘reverse’ for relatively fair offers, compared with 30% for highly unfair offers; Cochran’s Q χ²=234, 3df, p<0.001; Fig 3A; analyses across all four experiments25). Despite this, even when offered a highly unfair split ($.90/$.10), participants still preferred the least punitive and most compensatory option, ‘compensate’ (43% endorsement rate; Cochran’s Q χ²=562.2, 4df, p<0.001; Fig 3A).
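Cochran’s Q compares a binary outcome (for example, whether ‘reverse’ was chosen) across k related conditions measured on the same participants, and is referred to a χ² distribution with k − 1 df: 3 df for the four offer types and 4 df for the five options. The sketch below implements the textbook statistic Q = (k − 1)[k Σ C_j² − N²] / (k N − Σ R_i²), where C_j are condition totals, R_i participant totals, and N the grand total. It assumes one 0/1 value per participant per condition; how the study aggregated repeated trials is not stated here, and `cochrans_q` is a hypothetical name. The toy matrix is illustrative only.

```python
def cochrans_q(matrix):
    """Cochran's Q for an n-participants x k-conditions matrix of 0/1 outcomes.

    Returns (Q, degrees_of_freedom); Q is compared with a chi-square on k - 1 df.
    """
    k = len(matrix[0])
    if k < 2 or any(len(row) != k for row in matrix):
        raise ValueError("need at least two conditions and equal-length rows")
    col_totals = [sum(row[j] for row in matrix) for j in range(k)]  # C_j
    row_totals = [sum(row) for row in matrix]                        # R_i
    grand = sum(row_totals)                                          # N
    numerator = (k - 1) * (k * sum(c * c for c in col_totals) - grand ** 2)
    denominator = k * grand - sum(r * r for r in row_totals)
    if denominator == 0:
        raise ValueError("Q is undefined when every row is all 0s or all 1s")
    return numerator / denominator, k - 1


if __name__ == "__main__":
    # Toy data: 1 = chose 'reverse' at each of four offer levels (fair -> unfair).
    toy = [
        [0, 0, 1, 1],
        [0, 0, 0, 1],
        [0, 1, 1, 1],
        [0, 0, 0, 1],
    ]
    print(cochrans_q(toy))
```

With one-hot rows across the five options (each trial picks exactly one option), the same function gives the 4-df version of the test.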

Figure 3. Self v Other choice behavior.

A) Overall choice preferences (n=540) for relatively fair offers ($.60, $.40) compared with highly unfair offers ($.90, $.10) in the Self condition: participants exhibit strong preferences for the option to compensate on both fair and unfair trials; χ²=562.2, 4df, p<0.001. However, preferences for retributive action become stronger when the offer is highly unfair; χ²=234, 3df, p<0.001. B) Unfair offers ($.90/$.10 split) reveal that participants have significantly stronger preferences for retributive behavior (reverse option) when making decisions for another than for the self; χ²=20.2, 1df, p<0.001, φ=.13. C) Fair offers ($.60/$.40 split) reveal similar choice preferences in the Self and Other conditions; all χ²s<1.16, all ps>0.3, except for punish (χ²=4.67, 1df, p=0.03). ***p<0.001

The participants’ perspective (Self versus Other condition) shifted their preferences only when the offer was highly unfair. In the Other condition, participants chose to ‘reverse’ the players’ payouts significantly more often than any other option (43% endorsement rate; Cochran’s Q χ²=622.2, 4df, p<0.001; Fig 3B; see Supplementary Fig 3B for details), and significantly more often than they did in the Self condition (McNemar χ²=20.2, 1df, p<0.001, φ=.13; Fig 3B). This result replicates Experiment 2, but here the same participants made decisions both as Player B and as Player C (a within-subject design). Individuals who did not endorse punitive measures when deciding for themselves switched to the most retributive option after observing a fairness violation targeted at another. In contrast, there were no significant differences between choices for relatively fair offers in the Self and Other conditions (all χ²s<1.16, all ps>0.3, except for punish: χ²=4.67, 1df, p=0.03; Fig 3C).
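Because Experiments 3-6 used a within-subject design, each participant contributes a paired Self/Other observation, which is the situation McNemar’s test is built for. Only the discordant pairs matter: b participants who chose ‘reverse’ for another but not for themselves, and c participants with the opposite pattern. The sketch below computes χ² = (b − c)² / (b + c) with an optional continuity correction; whether the reported statistics used the correction is not stated, so that flag is an assumption, as are the function name `mcnemar` and the example counts.

```python
import math


def mcnemar(b: int, c: int, continuity: bool = False):
    """McNemar's test from the two discordant counts of a paired 2x2 table.

    b: participants who chose the option in condition 1 only (e.g. Other only).
    c: participants who chose it in condition 2 only (e.g. Self only).
    Returns (chi2, p_value) with 1 degree of freedom.
    """
    if b + c == 0:
        raise ValueError("no discordant pairs; the test is undefined")
    diff = abs(b - c)
    if continuity:
        diff = max(diff - 1, 0)  # Edwards' continuity correction
    chi2 = diff ** 2 / (b + c)
    p_value = math.erfc(math.sqrt(chi2 / 2))  # chi-square tail for 1 df
    return chi2, p_value


if __name__ == "__main__":
    # Hypothetical counts: 25 chose 'reverse' only for another, 5 only for themselves.
    print(mcnemar(25, 5))
    print(mcnemar(25, 5, continuity=True))
```

Participants who made the same choice in both conditions do not enter the statistic at all, which is why a paired design can detect a Self/Other shift even when many people never change their answer.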

DISCUSSION

Traditionally, research has focused on punishment as the preferred response to a perceived injustice, leading to the specious assumption that people prefer to punish when righting a wrong3,14,24,26,27. While these studies conclude that punishment is the standard response to fairness violations, it appears that these preferences to punish may be due to a limited choice set where participants do not have the option to select from non-punitive alternatives that satisfy other preferences (e.g. for equity). Here we demonstrate that when given the option to respond non-punitively to fairness violations, people derive greater utility from responding in a positive manner than they do in a punitive manner. That is, people prefer alternative forms of justice restoration, choosing compensation over punitive or retributive options. These findings fit within an emerging body of research exploring how prosocial options—like rewarding cooperation28 so long as punishment remains a viable option29—can be more effective in sustaining cooperation than punishment alone.

It is possible that participants chose to compensate and not punish because they prefer to maximize their own payment (rather than decrease the transgressor’s payment) and because they are averse to inequality. While these are both important motivations for justice restoration, they may not necessarily be mutually exclusive. An important next question is whether people still choose to compensate even if compensation does not match Player A’s payout (i.e. partial compensation). Future work designed to qualitatively identify relative preferences between compensation and equality will help decipher how—and when—people trade off compensation for equality.

There are, of course, instances when punishment becomes a more attractive response than non-punitive options. Depending on the options against which punishment is juxtaposed, deciding to punish may provide the greatest utility. For example, when offered alongside the option to accept an unfair offer, punishment (i.e., equalizing both players’ payoffs by reducing the payoff of the transgressor) is the most preferred option, both in our experiments and in the abundant research employing the Ultimatum Game. Together with prior research on punishment, our findings demonstrate that punishment is valued differently depending on the landscape of available options. Punishment, compensation, equity, and other routes to justice restoration may all provide varying degrees of utility depending on the available alternatives and on the severity of the fairness violation. However, the evidence that people exhibit strong preferences to compensate when responding to fairness violations suggests that the current emphasis on punishment fails to capture other important routes to justice restoration.

Interestingly however, when responding to a fairness violation on behalf of another, individuals shift their preferences for restoring justice to include the most punitive and retributive measures. That individuals prefer more punitive options when deciding on behalf of another but not for the self illustrates that context can dramatically alter the attractiveness of punishment as a measure of justice restoration. One possible explanation for the observed differences in choice behavior between self and other is that deciding for another entails greater psychological distance. Increasing psychological distance—including social distance—emphasizes higher-level, abstract characteristics in the perception, experience, and evaluation of situations or objects19,30. When deciding on behalf of another, people may be attending to schematic representations of justice—abstract ideological values such as ‘justice as fairness’31—which emphasizes the application of known social norms to right a perceived injustice. In this case, punitive responding increases because people can easily rely on the straightforward prescriptions of punishing as a means to restore justice. On the other hand, when making decisions for the self, events may be construed in terms of low-level, concrete, and essential features, including the possibility of monetary gain. When directly experiencing a fairness violation, people may be ignoring the straightforward prescriptions of justice (to punish), instead concretely evaluating each option and its consequences. Thus, the focus is less on punishing the transgressor and more on compensating the self.

Here we illustrate that when presented with alternative options for restoring justice, people do not prefer to punish. We also demonstrate that people respond more punitively on behalf of others than they do for themselves. The findings that victims prefer compensation over punishment could inform how the legal system approaches the punishment of transgressors. How to restore justice is a complex question, and while this research is only an initial step, it highlights the myopia of our understanding to date, and the critical importance of considering alternative means of making what was wrong, right.

METHODS

Experiment 1

Experiment 1 was run at the laboratory of the Center for Experimental Social Science (CESS) at New York University. One hundred twelve participants, drawn from the general undergraduate population and recruited through email solicitations, took part. Each experimental session lasted approximately 1 hour. All experiments were approved by New York University’s Committee on Activities Involving Human Subjects and all participants completed a consent form before starting the experiment.

We used a pairwise comparison design in which (as in the Ultimatum Game) Player B chose between two options on each trial, allowing us to directly contrast every choice pair (Fig 1B). We recruited up to 22 participants per session, randomly assigning half of the participants to play as Player A and the other half to play as Player B for the entire experiment. All participants were paid a $10 show-up fee and an additional bonus depending on their choices (ranging from $1 to $9), which falls within the traditional monetary incentive structure for Ultimatum Games32. The instructions were read aloud so that all participants were collectively made aware of the rules. Full instructions can be found in the supplementary materials. On each trial, participants were randomly and anonymously paired with other participants in the room, resulting in 70 one-shot games. On every trial, each Player A was endowed with $10 and told to split it with Player B however he or she saw fit, in whole-dollar increments. Player B was then presented with options to reapportion the money. There were five options altogether, but only two were presented on any given trial (Fig 1B). Participants were made aware that the options to reapportion the money would be randomly paired on each trial. Furthermore, participants were told that one trial would be randomly selected for payment, and that half of the time that trial would be paid out according to Player A’s split (like a dictator game) and half of the time according to Player B’s decision to reapportion the money (see supplementary information for more task details). Although Player A could split the money however they saw fit, our aim was to understand social preferences for restoring justice, so we restricted our analysis to unfair splits of $10, ranging from moderately unfair ($6/$4) to highly unfair ($9/$1).
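The incentive rule can be sketched as a small function: draw one game at random, then flip a fair coin to pay either Player A’s proposed split or the outcome of Player B’s choice. This is our own illustration under stated assumptions (a $10 pie, each game stored as the offer x plus the (A, B) payout produced by Player B’s choice); `select_payout` is a hypothetical name, and the lab’s randomization procedure is not described beyond the rule above.

```python
import random

PIE = 10  # Experiment 1 endowment in dollars


def select_payout(games, rng=None):
    """Pick the paid game and the payment rule at random.

    `games` is a list of (x, b_outcome) tuples: x is the amount Player A offered
    Player B, and b_outcome is the (A, B) payout produced by Player B's choice.
    Returns the (A, B) payout that would actually be paid.
    """
    if rng is None:
        rng = random.Random()
    x, b_outcome = rng.choice(games)  # one game selected at random
    if rng.random() < 0.5:
        return (PIE - x, x)           # paid as Player A proposed (dictator-style)
    return b_outcome                  # paid according to Player B's reapportioning


if __name__ == "__main__":
    # Hypothetical record: $1 offer answered with 'compensate', $3 offer with 'reverse'.
    games = [(1, (9, 9)), (3, (3, 7))]
    print(select_payout(games, random.Random(42)))
```

Paying only one randomly chosen game means every decision could matter, while keeping the total bonus within a single game’s range.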

Experiments 2-6

Participants were recruited from the United States using the online labor market Amazon Mechanical Turk (AMT)33-36. Participants played anonymously over the Internet and were not allowed to participate in more than one experimental session. On each trial, participants (Player B) were paid an initial participation fee of $.50 and an additional bonus depending on their choices (ranging from $.10 to $.90). Across all experiments, participants were first presented with a standard digital consent form, which explained the general procedure, known risks (none), confidentiality, compensation, and their rights. They could take part in the study only after agreeing to the consent form.

To ensure task comprehension, participants had to correctly complete a quiz following the instructions before they could begin the task. Participants were then told to place their fingers on the following keys: S, D, F, H, J, and a timer counted down from five before the task started. On each trial, the options ‘compensate’, ‘equity’, ‘accept’, ‘punish’, and ‘reverse’ (labeled as such in the analyses and here, but not for participants; see Supplementary Figure 4) were displayed in a different order. After completing the task, participants were explicitly asked about their strategies when the offer was relatively fair ($.60/$.40) and when it was highly unfair ($.90/$.10), for both the Self and Other conditions. For example, participants were asked “in your own words please describe your strategy for a scenario when Player A kept $.60 and offered $.40 to you”. See supplementary materials for a sampling of participants’ strategies.

Unlike in the laboratory experiment, in the experiments run through AMT we restricted offers from Player A (in reality, predetermined offers from a computer) to varying levels of unfairness, ranging from moderately unfair ($.60/$.40) to highly unfair ($.90/$.10) in $.10 increments. This was done primarily because we were interested in how people resolve fairness transgressions.

Differences in Task Structure for Experiments 2 - 6

Experiment 2 used a pairwise comparison of each choice pair (Fig 1B). Participants (N=358) played the task either as Player B (Self condition; N=97) or as Player C (Other condition; N=261), a between-subjects design. Participants were instructed only about their own condition: the instructions explained either that participants were to make decisions for themselves and Player A (Self condition) or that they were to make decisions on behalf of two other players (Other condition). Participants in the Self condition could earn an additional bonus based on their choices. Participants in the Other condition did not earn an additional bonus but were paid for the time taken to complete the task.

As in Experiment 1, participants were presented with only two options on each trial. For example, after being offered an unfair split, Player B saw only two options (e.g. compensate v equity, compensate v accept, compensate v punish, compensate v reverse, equity v accept, equity v punish, etc.). Thus, for every offer type ($.60/$.40 to $.90/$.10), participants saw all possible pairwise comparisons: 10 pairs for each of four offer types, for 40 anonymous, one-shot games in total. Trials were presented in random order.
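A sketch of how such a 40-trial schedule could be generated: cross the four offers (Player B’s share, in cents) with the 10 option pairs and shuffle the result. The offer list, the cents representation, and the `build_schedule` name are our assumptions; the text states only that every pair appeared once per offer type and that trials were randomly ordered.

```python
import random
from itertools import combinations

OPTIONS = ["compensate", "equity", "accept", "punish", "reverse"]
OFFERS_TO_B_CENTS = [40, 30, 20, 10]  # Player B's share of $1, from $.60/$.40 to $.90/$.10


def build_schedule(seed=None):
    """Cross every offer with every option pair (4 x 10 = 40 one-shot games)
    and return the trials in random order."""
    rng = random.Random(seed)
    schedule = [
        (offer, pair)
        for offer in OFFERS_TO_B_CENTS
        for pair in combinations(OPTIONS, 2)
    ]
    rng.shuffle(schedule)
    return schedule


if __name__ == "__main__":
    for offer, pair in build_schedule(seed=1)[:3]:
        print(f"B offered {offer} cents; choose {pair[0]} or {pair[1]}")
```

A fixed seed gives every participant the same order; omitting it draws a new order for each participant.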

In Experiments 3-6, participants played the task as both Player B and Player C. This within-subject design allowed us to explore each individual’s choices across the Self and Other conditions. Although Experiments 3-6 were quite similar, there were small differences between the tasks, which are enumerated here. In Experiment 3, Self and Other trials were presented in discrete blocks, with the Self condition always presented first and the Other condition second. To ensure that there were no order effects and that participants were not anchoring their decisions to those made in the first block (Self condition), Experiments 4-6 randomly interleaved Self and Other trials across the experiment. In Experiment 3, reaction times were collected with a mouse, whereas in Experiments 4-6 they were collected using the keyboard (button presses). Reaction time data were similar regardless of whether participants used a mouse or a keyboard: across all four experiments, participants were faster to decide for another than for themselves (see reaction time data in supplementary materials). In Experiment 4, each participant was presented with a random ordering of trials, so the order of offer types differed across participants. In Experiment 5, all participants were presented with the same randomized set of trials; that is, AMT presented the same order of trials (previously determined by an algorithm that randomly interleaved offer types and conditions) to all participants. Experiment 6 followed the same structure as Experiment 5, the only difference being that blank profile pictures were added to the instructions to further delineate the roles of all the players.

Supplementary Material

ACKNOWLEDGMENTS

We are grateful to Dean Mobbs and Tim Dalgleish for their early help and support. This research is supported by a grant from the National Institute on Aging.

Footnotes

CONTRIBUTIONS

OFH and EAP designed the experiments, OFH carried out the experiments and ran the statistical analyses, and OFH, PSH, JVB and EAP wrote the paper.

The authors declare no competing financial interests.

REFERENCES

- 1. Falk A, Fehr E, Fischbacher U. Testing theories of fairness: Intentions matter. Games and Economic Behavior. 2008;62:287–303. doi:10.1016/j.geb.2007.06.001.

- 2. Fehr E, Fischbacher U. Why social preferences matter: The impact of non-selfish motives on competition, cooperation and incentives. Econ J. 2002;112:C1–C33. doi:10.1111/1468-0297.00027.

- 3. Fehr E, Fischbacher U, Gachter S. Strong reciprocity, human cooperation, and the enforcement of social norms. Hum Nat. 2002;13:1–25. doi:10.1007/s12110-002-1012-7.

- 4. Fehr E, Schmidt KM. A theory of fairness, competition, and cooperation. Q J Econ. 1999;114:817–868. doi:10.1162/003355399556151.

- 5. Herrmann B, Thoni C, Gachter S. Antisocial punishment across societies. Science. 2008;319:1362–1367. doi:10.1126/science.1153808.

- 6. Fowler JH. Altruistic punishment and the origin of cooperation. Proceedings of the National Academy of Sciences of the United States of America. 2005;102:7047–7049. doi:10.1073/pnas.0500938102.

- 7. Guth W, Schmittberger R, Schwarze B. An experimental analysis of ultimatum bargaining. J Econ Behav Organ. 1982;3:367–388. doi:10.1016/0167-2681(82)90011-7.

- 8. Cameron LA. Raising the stakes in the ultimatum game: Experimental evidence from Indonesia. Econ Inq. 1999;37:47–59.

- 9. Slonim R, Roth AE. Learning in high stakes ultimatum games: An experiment in the Slovak Republic. Econometrica. 1998;66:569–596. doi:10.2307/2998575.

- 10. Camerer C. Behavioral game theory: Experiments in strategic interaction. Russell Sage Foundation; Princeton University Press; 2003.

- 11. Weitekamp E. Reparative justice. European Journal of Criminal Policy and Research. 1993;1:70–93.

- 12. Carlsmith KM, Darley JM, Robinson PH. Why do we punish? Deterrence and just deserts as motives for punishment. Journal of Personality and Social Psychology. 2002;83:284–299. doi:10.1037/0022-3514.83.2.284.

- 13. Gurney OR, Kramer SN. University of Chicago Press; 1965.

- 14. Fehr E, Fischbacher U. Third-party punishment and social norms. Evol Hum Behav. 2004;25:63–87. doi:10.1016/S1090-5138(04)00005-4.

- 15. Smith A. The theory of moral sentiments. London: A. Millar; 1759.

- 16. Nisbett RE, Legant P, Marecek J. Behavior as seen by actor and as seen by observer. Journal of Personality and Social Psychology. 1973;27:154–164. doi:10.1037/h0034779.

- 17. Fehr E, Fischbacher U. Social norms and human cooperation. Trends Cogn Sci. 2004;8:185–190. doi:10.1016/j.tics.2004.02.007.

- 18. Ledgerwood A, Trope Y, Liberman N. Flexibility and consistency in evaluative responding: The function of construal level. Adv Exp Soc Psychol. 2010;43:257–295. doi:10.1016/S0065-2601(10)43006-3.

- 19. Trope Y, Liberman N. Construal-level theory of psychological distance. Psychol Rev. 2010;117:440–463. doi:10.1037/a0018963.

- 20. Bolton GE, Zwick R. Anonymity versus punishment in ultimatum bargaining. Games and Economic Behavior. 1995;10:95–121. doi:10.1006/game.1995.1026.

- 21. Lotz S, Okimoto TG, Schlosser T, Fetchenhauer D. Punitive versus compensatory reactions to injustice: Emotional antecedents to third-party interventions. Journal of Experimental Social Psychology. 2011;47:477–480. doi:10.1016/j.jesp.2010.10.004.

- 22. Pillutla MM, Murnighan JK. Unfairness, anger, and spite: Emotional rejections of ultimatum offers. Organ Behav Hum Dec. 1996;68:208–224. doi:10.1006/obhd.1996.0100.

- 23. Straub PG, Murnighan JK. An experimental investigation of ultimatum games: Information, fairness, expectations, and lowest acceptable offers. J Econ Behav Organ. 1995;27:345–364. doi:10.1016/0167-2681(94)00072-M.

- 24. Fehr E, Gachter S. Cooperation and punishment in public goods experiments. Am Econ Rev. 2000;90:980–994. doi:10.1257/aer.90.4.980.

- 25. Schimmack U. The ironic effect of significant results on the credibility of multiple-study articles. Psychol Methods. 2012;17:551–566. doi:10.1037/a0029487.

- 26. Henrich J, et al. “Economic man” in cross-cultural perspective: Behavioral experiments in 15 small-scale societies. Behav Brain Sci. 2005;28:795. doi:10.1017/S0140525X05000142.

- 27. Rand DG, Nowak MA. The evolution of antisocial punishment in optional public goods games. Nat Commun. 2011;2:434. doi:10.1038/ncomms1442.

- 28. Rand DG, Dreber A, Ellingsen T, Fudenberg D, Nowak MA. Positive interactions promote public cooperation. Science. 2009;325:1272–1275. doi:10.1126/science.1177418.

- 29. Andreoni J, Harbaugh W, Vesterlund L. The carrot or the stick: Rewards, punishments, and cooperation. Am Econ Rev. 2003;93:893–902. doi:10.1257/000282803322157142.

- 30. Ledgerwood A, Trope Y, Chaiken S. Flexibility now, consistency later: Psychological distance and construal shape evaluative responding. Journal of Personality and Social Psychology. 2010;99:32–51. doi:10.1037/a0019843.

- 31. Rawls J. Theory of justice. Vop Filos. 1994:38–52.

- 32. Camerer C, Thaler RH. Anomalies: Ultimatums, dictators and manners. Journal of Economic Perspectives. 1995;9:209–219.

- 33. Mason W, Suri S. Conducting behavioral research on Amazon’s Mechanical Turk. Behavior Research Methods. 2012;44:1–23. doi:10.3758/s13428-011-0124-6.

- 34. Horton JJ, Rand DG, Zeckhauser RJ. The online laboratory: Conducting experiments in a real labor market. Exp Econ. 2011;14:399–425. doi:10.1007/s10683-011-9273-9.

- 35. Paolacci G, Chandler J, Ipeirotis PG. Running experiments on Amazon Mechanical Turk. Judgm Decis Mak. 2010;5:411–419.

- 36. Buhrmester M, Kwang T, Gosling SD. Amazon’s Mechanical Turk: A new source of inexpensive, yet high-quality, data? Perspect Psychol Sci. 2011;6:3–5. doi:10.1177/1745691610393980.
