Abstract

Categorical perception (CP) refers to how similar things look different depending on whether they are classified in the same category. Many studies demonstrate that adult humans show CP for human emotional faces. It is widely debated whether the effect can be accounted for solely by perceptual differences (structural differences among emotional faces) or whether additional perceiver-based conceptual knowledge is required. In this review, I discuss the phenomenon of CP and key studies showing CP for emotional faces. I then discuss a new model of emotion which highlights how perceptual and conceptual knowledge interact to explain how people see discrete emotions in others’ faces. In doing so, I discuss how language (emotion words included in the paradigm) contributes to CP.

Keywords: categorical perception, emotional faces, language

Categorical perception (CP) occurs when an individual perceives a stimulus which varies continually along some dimension as one of two discrete categories. The most commonly cited and perhaps most easily explained example is color. Adults do not perceive continuous changes along the visible light spectrum, but rather discrete colors (Bornstein, Kessen, & Weiskopf, 1976). This is not to say that adults cannot distinguish between different wavelengths, but rather that some changes are more meaningful (e.g., red, orange) than others (e.g., shades of red). As a result, continually varying stimuli are perceived as belonging to distinct categories marked by a sharp boundary (Harnad, 1987). The point at which perception shifts from one category to the other is known as the categorical boundary. A defining feature of CP is enhanced performance (i.e., accuracy, reaction time, discriminability) on discriminating stimuli which span the categorical boundary compared to stimuli which do not cross the boundary, when the stimuli are separated by the same physical distance. Such enhanced performance between stimuli belonging to different categories (i.e., “between-category” trials) compared to performance between stimuli from one category (i.e., “within-category” trials) is known as the between-category advantage (Goldstone, 1994).

Categorical perception was first observed for speech sounds (e.g., Liberman, Harris, Hoffman, & Griffin, 1957), but has since been found for an array of stimuli from a variety of modalities, including complex stimuli such as facial identity (e.g., Angeli, Davidoff, & Valentine, 2008; Beale & Keil, 1995; Kikutani, Roberson, & Hanley, 2008; Stevenage, 1998; Viviani, Binda, & Borsato, 2007) and facial expression (e.g., Calder, Young, Perrett, Etcoff, & Rowland, 1996; Etcoff & Magee, 1992; Roberson & Davidoff, 2000; Young et al., 1997). Figure 1 displays isometrically morphed (blended) emotional faces. Rather than seeing individual variations among the faces, normal participants perceive faces up to a certain point as one emotion, and thereafter as a second emotion.

Figure 1.

Morphs from anger to fear created at 10% intervals. Participants who perceive these faces in a categorical fashion identify morphs in two discrete groups with consistency. Participants typically identify morphs 1–5 as angry and morphs 6–10 as fearful (given the two emotion words). Given such identification, the categorical boundary would be between morphs 5 and 6.

Categorical Perception—The Measurement

Categorical perception is traditionally tested with two experimental paradigms, the second of which is dependent upon the results of the first paradigm. The first paradigm, identification (sometimes also called classification), establishes the location of the categorical boundary, whereas the second, discrimination, tests for the between-category advantage. Although the discrimination analysis depends on the identification results, the identification task is performed after the discrimination task to prevent the explicit use of category anchors (i.e., words which label the two categories, such as angry and sad). In the traditional identification task, a perceiver is presented with an array of stimuli that either exist naturally (e.g., color) or can be created to vary by using computerized “morphing” software. The perceiver is asked to identify each stimulus with one of two category words. If participants show CP, then they should identify all stimuli containing some level of content (up to some measurable point) as belonging to one category and all other stimuli containing more than this level of content as belonging to the other category. That is, they should show discrete sorting of stimuli into groups. When participants’ identifications of stimuli as belonging to one category are plotted against the incrementally changing stimuli, the resulting curve should assume a sigmoid shape in which the categorical boundary can be inferred from the slope of the line tangent to the steepest portion of the curve (see McKone, Martini, & Nakayama, 2001).
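As an illustration of how a boundary might be read off identification data, the following minimal sketch fits a two-parameter logistic function by coarse grid search. The logistic form, the grid ranges, and the identification proportions are all assumptions made for the example; they are not data or procedures from any of the studies reviewed here. For a logistic curve, the steepest point coincides with the 50% crossover, so the fitted midpoint `x0` serves as the estimated categorical boundary.

```python
import math

def logistic(x, x0, k):
    """Predicted proportion of 'second category' responses at morph position x."""
    return 1.0 / (1.0 + math.exp(-k * (x - x0)))

def fit_logistic(xs, ps):
    """Coarse grid search for the midpoint x0 and steepness k that
    minimize squared error against the observed proportions."""
    lo, hi = min(xs), max(xs)
    best = None
    for i in range(201):                      # candidate boundaries across the continuum
        x0 = lo + (hi - lo) * i / 200
        for j in range(1, 101):               # candidate steepness values 0.1 .. 10.0
            k = j * 0.1
            err = sum((logistic(x, x0, k) - p) ** 2 for x, p in zip(xs, ps))
            if best is None or err < best[0]:
                best = (err, x0, k)
    return best[1], best[2]

# Hypothetical identification data: morphs 1-10 on an anger-to-fear continuum,
# with the proportion of trials on which each morph was labeled "fearful".
morphs = list(range(1, 11))
prop_fear = [0.02, 0.03, 0.05, 0.10, 0.30, 0.72, 0.90, 0.96, 0.98, 0.99]

boundary, slope = fit_logistic(morphs, prop_fear)
print(f"estimated boundary: morph {boundary:.2f}; steepness k = {slope:.1f}")
```

With these invented proportions the estimated boundary falls between morphs 5 and 6, matching the pattern described for Figure 1. A dedicated curve-fitting routine would normally replace the grid search.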

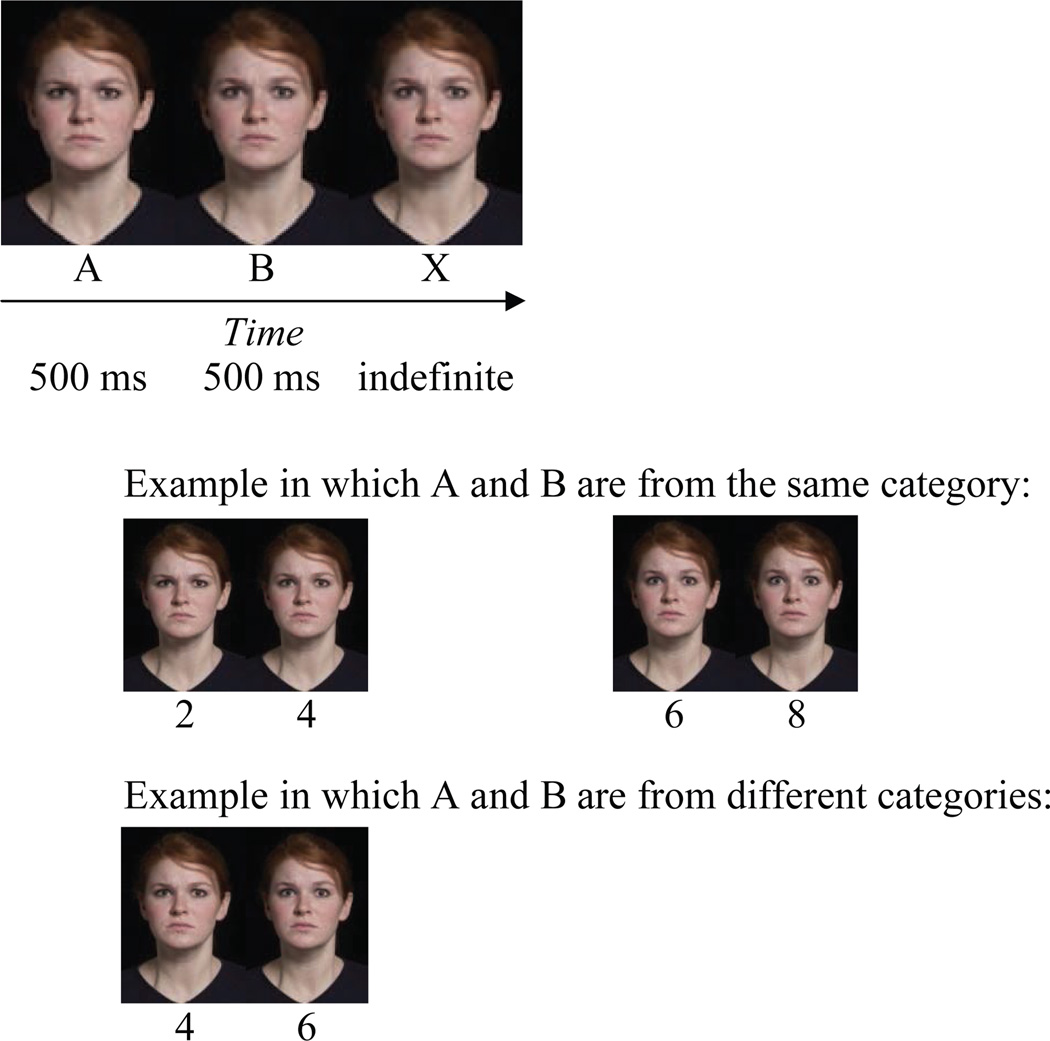

Any time a stimulus is identified as belonging to one category or another, one may get discrete identification of stimuli into groups. Therefore the presence of nonlinear identifications by itself is not sufficient for CP. Rather, CP also requires using a discrimination test to assess the between-category advantage. There exist many types of discrimination tasks whose utility and limitations have been previously discussed in depth (see McKone et al., 2001, for a good review). Most commonly, a two-choice AB-X task is employed. Participants see two morphs sequentially (A and B), followed by a target stimulus (X). Participants respond whether X is A or B (see Figure 2). Critically, the physical difference between A and B in any pair must remain consistent across trials. If participants show CP, then they should also show enhanced performance for the between-category trials compared to the within-category trials (in addition to the discrete sorting in the identification task). It is also possible to mathematically determine the point at which enhanced performance should occur by calculating the derivative of the sigmoid which fits a participant’s identification data. This approach is a more precise way of determining the between-category advantage (e.g., Fugate, Gouzoules, & Barrett, 2010; McKone et al., 2001). In this case, participants show CP if their performance on the discrimination task is significantly correlated with the derivative of the sigmoid calculated from their identification data (in addition to discrete sorting in the identification task). In practice, however, CP is often claimed when the sigmoid derivative function predicts more of the variance in a participant’s discrimination data than does a straight line.
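The derivative-based criterion can be sketched as follows. The example assumes a logistic identification function with already-fitted parameters (`x0`, `k`), evaluates its derivative at the midpoint of each AB pair, and compares how well that prediction and a straight line over pair position each correlate with discrimination accuracy. The parameter values and the accuracy scores are hypothetical, and evaluating the derivative at the pair midpoint is one simple choice among several.

```python
import math

def logistic(x, x0, k):
    return 1.0 / (1.0 + math.exp(-k * (x - x0)))

def logistic_derivative(x, x0, k):
    """Slope of the logistic at x; it peaks at the boundary x0."""
    p = logistic(x, x0, k)
    return k * p * (1.0 - p)

def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

# Assumed fit from a participant's identification data (hypothetical values).
x0, k = 5.5, 1.8

# Two-step AB pairs on a 10-morph continuum: (1,3), (2,4), ..., (8,10).
pairs = [(a, a + 2) for a in range(1, 9)]
midpoints = [(a + b) / 2 for a, b in pairs]

# Hypothetical AB-X accuracy for each pair, in the same order as `pairs`.
observed_acc = [0.58, 0.60, 0.66, 0.81, 0.83, 0.68, 0.61, 0.57]

predicted = [logistic_derivative(m, x0, k) for m in midpoints]
r_sigmoid = pearson_r(predicted, observed_acc)   # derivative-based prediction
r_linear = pearson_r(midpoints, observed_acc)    # straight line over pair position

print(f"variance explained by sigmoid derivative: {r_sigmoid ** 2:.2f}")
print(f"variance explained by straight line:      {r_linear ** 2:.2f}")
```

In this invented data set accuracy peaks for the pairs that straddle the boundary, so the derivative accounts for far more variance than the straight line, which is the pattern that would be taken as evidence of CP.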

Figure 2.

Example of an AB-X discrimination trial. Participants see morph A, then morph B, then are asked to indicate whether morph X is either A or B. Here X is B.
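A simple accuracy-based check of the between-category advantage for AB-X data might look like the sketch below. It builds AB pairs with a constant step size, labels each pair as between- or within-category relative to an assumed boundary, and compares mean accuracy. The boundary location, step size, and accuracy values are hypothetical.

```python
def make_pairs(n_morphs, step):
    """All AB pairs separated by the same physical distance (step)."""
    return [(a, a + step) for a in range(1, n_morphs - step + 1)]

def crosses_boundary(pair, boundary):
    """True when A and B fall on opposite sides of the categorical boundary."""
    a, b = pair
    return a < boundary < b

def mean(values):
    return sum(values) / len(values)

boundary = 5.5                      # assumed: between morphs 5 and 6
pairs = make_pairs(n_morphs=10, step=2)

# Hypothetical proportion correct on AB-X trials for each pair.
accuracy = dict(zip(pairs, [0.58, 0.60, 0.66, 0.81, 0.83, 0.68, 0.61, 0.57]))

between_pairs = [p for p in pairs if crosses_boundary(p, boundary)]
within_pairs = [p for p in pairs if not crosses_boundary(p, boundary)]

advantage = mean([accuracy[p] for p in between_pairs]) - mean(
    [accuracy[p] for p in within_pairs]
)
print("between-category pairs:", between_pairs)
print(f"between-category advantage: {advantage:.3f}")
```

Because every pair uses the same step, any difference in accuracy cannot be attributed to physical distance. A real analysis would test the difference statistically rather than simply inspect the means.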

Whether or not CP reflects shifts in the structural configuration of the stimuli themselves (e.g., how the stimuli are actually perceived) or cognitive influences (e.g., how the stimuli are classified) is highly debated (see Goldstone, 1994; Livingston, Andrews, & Harnad, 1998). Said another way, is CP really perceptual or conceptual (see Pilling, Wiggett, Ozgen, & Davies, 2003)? In the following sections, I discuss studies showing CP for emotional faces and how some models of emotion make perceptual claims for the effect. As part of that discussion, I raise challenges to claiming a purely perceptual effect given the paradigm. Finally, I end with a new model of emotion which emphasizes how cognitive influences augment underlying structural differences to explain why people see emotional faces in a categorical fashion.

Categorical Perception of Emotional Faces—Two Models of Emotion

Human perceivers see emotional faces as discrete categories. Perceivers quickly and often effortlessly look at another person’s face and make a judgment about what he or she is feeling (e.g., “he is angry” or “she is sad”). According to a basic view of emotion (Ekman, 1992; Izard, 1971; Tomkins, 1962), faces, voices, body postures (or some combination thereof) encode discrete emotional meaning and are evolutionarily conserved among species (homologous), innate (biologically-derived), and culturally similar (universal) among humans (see Ortony & Turner, 1990). According to the basic view of emotion, the structural information in the face is sufficient for discrete emotion perception to occur. For example, a perceiver should be able to look at a scowling face and know with a high level of certainty that the face is angry (and not disgusted, sad, etc.), without the use of any other information (i.e., context or conceptual influences). In general, studies report categorical perception of emotional faces as evidence for a basic view of emotion. The logic is that each emotion (at least those which have been defined as “basic”) is distinct from any other basic emotion. Therefore people should not perceive intermediates of morphed emotional faces that reflect the structural differences of the stimuli.

According to a dimensional model of emotion, however, emotions do not belong to discrete categories, but rather reflect dimensions of some continually varying properties (e.g., arousal and valence; see Russell, 1980). If this is the case, then people should be sensitive to continuous changes along a dimension as one face morphs into another. Specifically, when intermediate morphs are created from emotion categories which differ only along one dimension, people should perceive them as neutral because they pass through a zero point on the dimension (Woodworth & Schlosberg, 1954; see Calder et al., 1996, for a diagram). On the other hand, people should perceive intermediate morphs created from emotion categories which differ along both dimensions as a new (third) emotion.

Several studies have tested whether CP reflects the use of category (consistent with a basic model) or dimensional information of emotion (e.g., Fujimura, Matsuda, Katahira, Okada, & Okanoya, 2011; Young et al., 1997). For example, Young and colleagues report two studies consistent with a category approach to CP, while in two additional studies they find range effects and decreased performance for nonprototypical members (more consistent with a dimensional model) (see details in next section). Fujimura and colleagues (2011) show that participants use both category and dimensional information, and suggest that a hybrid view of CP might be necessary (see also Christie & Friedman, 2004; Russell, 2003).

Studies Showing CP for Emotional Faces

Etcoff and Magee (1992) were the first to show CP for emotional faces. The authors created pairwise morphs of several emotional faces (e.g., anger, sadness, fear, disgust, happiness, surprise, and neutral as a control) based on line drawings of photos depicting one individual in the Ekman and Friesen face set (1976). Only eight of the possible 20 combinations of morphed faces were tested, however, and a participant only completed the experiment on one of the eight continua. Participants pressed one of two keys labeled with an emotion word to identify the face (e.g., anger or fear). Participants’ identification data on each continuum were tested against a linear and nonlinear function. Identifications on each continuum were significantly different from that predicted by a straight line and significantly correlated with a sigmoid-like function. Participants also completed a sequential AB-X task on the continuum they identified. Between-category trials were more accurately discriminated than within-category trials for six of the eight continua. Those continua containing the emotion surprise did not show a between-category advantage (e.g., surprise–happy and surprise–fear).

Calder and colleagues (1996) created pairwise morphs of three emotional faces (anger, fear, happiness) from photos of one individual in the Ekman and Friesen (1976) face set. In a second experiment, additional identities were used to create facial morphs of the same content. No formal analyses were conducted on the participants’ identification data, but the general pattern of sorting suggested that participants were identifying morphs into two discrete categories for both experiments. Participants also completed a sequential AB-X task. Participants’ discrimination data were then correlated with their identification-derived data. For experiment 1, there was a significant correlation between the actual and predicted (identification-derived) data for the happy–sad and sad–anger continua, but not for the anger–fear continuum. For experiment 2, there was a significant correlation between participants’ actual and predicted data for the anger–fear and sad–anger continua, but not for the happy–sad continuum. The between-category advantage was also assessed based on accuracy to discriminate the between- and within-trial pairs. In both experiments, participants were more accurate overall at discriminating the between- versus within-category trials. In experiment 2, however, there was also a significant interaction with continuum, in which participants were more accurate for the between-category trials (compared to the within-category trials) for the anger–fear and the sad–anger continua than for the sad–happy continuum. In neither experiment, however, was the accuracy for the between- and within-category trials tested individually by continuum.

In two successive experiments (experiments 3 and 4), Calder and colleagues (1996) used the same faces as in experiment 1 to create continual morphs among fear, anger, and happiness. The effect was a single continuum of morphed faces from fear through happiness through anger and then back to fear. A continual array of morphs rules out anchor and range effects that can occur when participants view the endpoints of a continuum. Using a continual array of morphs also allowed the number of times each morph was seen in the discrimination experiment to be equal. Participants identified each morph as one of three emotion words (e.g., anger, sad, or fear). Participants sorted morphs into discrete categories. In experiment 3, participants also performed a sequential AB-X task. Participants’ actual data were significantly correlated with their identification-derived data across the continual morphing array. In addition, accuracy was higher in the between- versus within-category trials, although the effect was smaller for the anger–fear continuum.

In experiment 4, participants also performed a same/different task (rather than an AB-X task). Same/different tasks rule out memory demands associated with AB-X tasks. Participants’ actual data were significantly correlated with their identification-derived data across the array. In addition, participants showed an overall increase in discrimination (rather than accuracy) for morphs spanning the categorical boundaries, but the effect was less for the happiness–anger “section”.

Young and colleagues (1997) created all pairwise morphs of six emotional faces (e.g., anger, fear, disgust, sadness, happiness, and surprise) from photos of one individual in the Ekman and Friesen (1976) set. Experiments 1 and 2 were meant to specifically test the claims laid out by a dimensional versus a basic view of emotion (as described earlier) to see whether, given multiple emotion word choices in the identification task, participants would identify more ambiguous (intermediate) morphs as a third emotion (experiment 1) or as a neutral emotion (experiment 2). Participants showed discrete sorting patterns (no use of intermediate emotions or neutral) except in two cases. Participants identified intermediate fear–anger morphs as “surprise” and intermediate disgust–anger morphs as “neutral.” In experiment 3, a new group of participants completed a sequential AB-X discrimination task, in which A and B could be from different morphing continua. Participants were more accurate on the between- versus within-category trials, yet the results are difficult to interpret because the distance between morphs created from different endpoints inherently varies. Due to space limitations, I refer the reader to the original article in which this criticism is discussed more fully.

To summarize, there have been several studies using emotional faces to test for CP. The common conclusion drawn from these studies is that the structural information in the face is sufficient for the phenomenon to occur. Careful scrutiny of the data, however, suggests that the results are somewhat inconsistent, even when the same type of paradigm (AB-X) is used. In addition to the inconsistencies, there are a number of larger concerns in drawing such conclusions. First, all the stimuli were created from very caricatured, prototypical faces, whose artificial nature many have noted (e.g., Barrett, 2006a). Second, morphs created from anger, fear, surprise, and disgust faces show the least evidence for CP, consistent with the idea that emotions which share affective (i.e., arousal and valence) information do not contain sufficient structural information. Finally, and most importantly, all of these studies require that participants identify the morph as one of two (or three, in the case of a continual array) emotion words. As a result, none of these studies can rule out the possibility that participants are using the emotion words to help anchor the categories and refine category membership. That is, emotion words included as part of the task might “fill in the gaps” of the structural information.

Testing a Psychological Construction View of Emotion

According to a psychological constructionist view (described in more detail in this issue; see Lindquist & Gendron, 2013, pp. 66–71), adult humans show CP for human emotional faces because they have emotion words like “anger,” “sadness,” and “fear” which provide an internal context to constrain the continuous and highly variable array of facial movements made by people in everyday life (see Barrett, 2006a, 2006b; Barrett, Lindquist, & Gendron, 2007; Fugate et al., 2010). Whereas a basic view of emotion requires that CP arises solely from the structural information in the face, a psychological constructionist view holds that CP occurs when people use words which help impose structural specificity. In this view, people need not be aware of using such words; in fact, people often categorize using words implicitly (Barrett, 2006a, 2006b). A psychological constructionist view thus highlights the addition of conceptual processing in the categorical perception of emotional faces.

It is possible, even within a psychological constructionist view, that perceivers might show CP for faces which differ in affective information (i.e., arousal and valence). In these cases, emotion words would most likely still have an effect on category membership, but they might refine the category boundaries rather than create them de novo. In this view, it would be fair to say that language can exploit what is given by nature, but it can also (and perhaps most remarkably) create new categories: language not only carves nature at its joints, but also carves joints into nature (see Lupyan, 2006). This would be consistent with the fact that evidence for CP tends to be stronger for faces which differ in affective information (e.g., happy–sad) compared to faces which share affective information (e.g., anger–fear, anger–disgust, surprise–fear). Theoretically, then, if one could remove (or at least limit) conceptual knowledge, including the activation of emotion words, perceivers should show CP for morphed emotional faces when the two faces differ in affective information (e.g., happy, sad). In the absence of emotion words, however, perceivers should not show CP for emotional faces which share affective information (e.g., disgust, anger, fear, surprise).

My colleagues and I have tried to address these claims directly (Fugate et al., 2010). Because all human adults have familiarity with human emotional faces (especially the caricatured ones shown in most experiments), it is impossible to determine with human faces whether CP is the product of the faces themselves (the structural information) or whether additionally activated conceptual knowledge, including emotion words, affects CP. We (Fugate et al., 2010) therefore used the facial actions of an evolutionarily related species whose faces are structurally quite similar to humans’, but with which people neither have familiarity nor readily associate emotion words. In experiment 1, we tested human participants who had either extensive training with nonhuman primate facial expressions or no familiarity with them, to see whether they showed CP for four categories of chimpanzee expressions. Neither the nonhuman primate “experts” nor the “novices” showed the between-category advantage when all continua were analyzed together, although experts and novices both showed a between-category advantage for one shared continuum. In experiment 2, we first trained naïve human perceivers to learn categories of chimpanzee facial expressions with either labeled or unlabeled pictures. Participants only showed CP for chimpanzee faces after learning categories of expressions with labels (even though the labels were not repeated in the task; participants identified the morphs into two categories by pictures). Those participants who learned the same categories without a label did not show CP, despite being equally good at identifying the morphs into discrete categories. Thus having previously learned a label (which acted like a word in this case) was enough to drive CP.

Other direct evidence for the role of language in CP of emotional faces comes from a study in which participants were first placed under verbal load. In that study, participants no longer showed CP for the faces when access to language was blocked (Roberson & Davidoff, 2000; see also Roberson, Damjanovic, & Pilling, 2007).

The idea that language, specifically emotion words, contributes to CP of emotional faces is additionally supported by many studies that do not directly test CP, but require participants to make either discrete categorizations or category judgments. For a good detailed summary of this work, I refer the reader to the article by Lindquist and Gendron (2013; see also Barrett et al., 2007).

Does Language Create or Augment CP for Emotional Faces?

The extent to which language affects CP is still largely debated; some argue for the direct role of language (e.g., language-caused effects), whereas others argue that the role is more indirect (e.g., language-mediated effects). It is possible that language exploits perceptual (i.e., structural) differences in stimuli, perhaps making fine-grained adjustments in the category boundary, or it is possible that language creates new categories. Consistent with the former idea, “category adjustment” models suggest CP arises from language, but only indirectly (Huttenlocher, Hedges, & Vevea, 2000; Roberson et al., 2007). The idea is that naming a target at encoding activates a representation of the category prototype. As memory of the target fades over time, participants’ estimations are biased by conceptual memory (e.g., words). The result is a consistent shift in recognition toward the category center (Roberson et al., 2007). The idea is that CP will only occur when there are poor (non-central) exemplars for a category to which between-category exemplars are compared. In this case, language has an effect because poor exemplars of within-category members are likely to be named inconsistently between encoding and time of testing. Targets and distracters can easily be distinguished when they cross the category boundary because they differ at both the conceptual and perceptual levels (Hanley & Roberson, 2011). Indeed, when only good within-category trials (i.e., central exemplars) from multiple studies were analyzed, accuracy was at the level of between-category trials (Hanley & Roberson, 2011). Such a model is also consistent with the dual code model originally proposed by Pisoni and colleagues for CP of speech (Pisoni & Lazarus, 1974; Pisoni & Tash, 1974), in which the conceptual code (e.g., words) contains less information but is easier to retain than the perceptual code (e.g., structural differences among the faces), unless verbal interference occurs during encoding.
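The shift toward the category center described by category adjustment accounts can be illustrated with a weighted average of the fading memory trace and the prototype of whichever category the target was named as. This is a deliberately simplified sketch: the prototype positions, the boundary, and the memory weight `lam` are hypothetical, and naming is assumed to follow the boundary deterministically, which ignores the inconsistent naming of poor exemplars that the account also relies on.

```python
def remembered_position(x, boundary, proto_a, proto_b, lam):
    """Blend the perceptual trace (weight lam) with the prototype of the
    category the stimulus was named as (weight 1 - lam)."""
    prototype = proto_a if x < boundary else proto_b
    return lam * x + (1.0 - lam) * prototype

def remembered_distance(a, b, boundary, proto_a, proto_b, lam):
    ra = remembered_position(a, boundary, proto_a, proto_b, lam)
    rb = remembered_position(b, boundary, proto_a, proto_b, lam)
    return abs(rb - ra)

# Hypothetical 10-morph continuum with prototypes near each category center.
boundary, proto_a, proto_b = 5.5, 3.0, 8.0
lam = 0.6   # assumed weight on the perceptual trace after a delay

within_dist = remembered_distance(2, 4, boundary, proto_a, proto_b, lam)
between_dist = remembered_distance(4, 6, boundary, proto_a, proto_b, lam)

print(f"within-category pair (2, 4):  remembered distance {within_dist:.2f}")
print(f"between-category pair (4, 6): remembered distance {between_dist:.2f}")
```

Both pairs are two morph steps apart physically, yet under these assumptions the within-category pair is remembered as closer together and the between-category pair as farther apart, which would make between-category trials easier without any change in perception itself.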

Conclusion

It is difficult for us, as adult humans, to imagine what categorization would be like without our years of experience and our constant assessment of faces, whether into categories of gender, race, age, or emotion. The fact is, words—even when we are not aware of using them or we don’t need them to solve the task—provide us with a means to make these categorizations rapidly and easily. To this end, emotion perception studies (including CP studies) speak to how people perceive emotional faces in the context of words.

In order to achieve a fuller and better understanding of emotion perception, we should stop asking categorical questions (e.g., is CP perceptual or conceptual?) and start asking questions about the extent to which conceptual influences (such as language) affect perception and how early in processing such effects occur.

Acknowledgments

This manuscript was supported by a National Research Service Award (F32MH083455) from the NIMH to Jennifer Fugate. The content does not necessarily represent the official views of the NIH. The author would like to thank Lisa Feldman Barrett for her inspiration and discussion about this work, as well as Kristen Lindquist, Maria Gendron, and two anonymous reviewers for comments on the original manuscript.

References

- Angeli A, Davidoff J, Valentine T. Face familiarity, distinctiveness, and categorical perception. Quarterly Journal of Experimental Psychology. 2008;61:690–707. doi: 10.1080/17470210701399305.

- Barrett LF. Emotions as natural kinds? Perspectives on Psychological Science. 2006a;1:28–58. doi: 10.1111/j.1745-6916.2006.00003.x.

- Barrett LF. Solving the emotion paradox: Categorization and the experience of emotion. Personality and Social Psychology Review. 2006b;10:20–46. doi: 10.1207/s15327957pspr1001_2.

- Barrett LF, Lindquist K, Gendron M. Language as context for the perception of emotion. Trends in Cognitive Sciences. 2007;11:327–332. doi: 10.1016/j.tics.2007.06.003.

- Beale JM, Keil FC. Categorical effects in the perception of faces. Cognition. 1995;57:217–239. doi: 10.1016/0010-0277(95)00669-x.

- Bornstein MH, Kessen W, Weiskopf S. Color vision and hue categorization in young human infants. Journal of Experimental Psychology: Human Perception and Performance. 1976;2:115–129. doi: 10.1037//0096-1523.2.1.115.

- Calder AJ, Young AW, Perrett DI, Etcoff NL, Rowland D. Categorical perception of morphed facial expressions. Visual Cognition. 1996;3:81–117.

- Christie IC, Friedman BH. Autonomic specificity of discrete emotion and dimensions of affective space: A multivariate approach. International Journal of Psychophysiology. 2004;51:143–153. doi: 10.1016/j.ijpsycho.2003.08.002.

- Ekman P. Are there basic emotions? Psychological Review. 1992;99:550–553. doi: 10.1037/0033-295x.99.3.550.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Etcoff NL, Magee JJ. Categorical perception of facial expressions. Cognition. 1992;44:227–240. doi: 10.1016/0010-0277(92)90002-y.

- Fugate JMB, Gouzoules H, Barrett LF. Reading chimpanzee faces: A test of the structural and conceptual hypotheses. Emotion. 2010;10:544–554. doi: 10.1037/a0019017.

- Fujimura T, Matsuda Y-T, Katahira K, Okada M, Okanoya K. Categorical and dimensional perceptions in decoding emotional facial expressions. Cognition & Emotion. 2011;26:587–601. doi: 10.1080/02699931.2011.595391.

- Goldstone R. Influences of categorization on perceptual discrimination. Journal of Experimental Psychology: General. 1994;123:178–200. doi: 10.1037//0096-3445.123.2.178.

- Hanley JR, Roberson D. Categorical perception effects reflect differences in typicality on within-category trials. Psychonomic Bulletin & Review. 2011;18:355–363. doi: 10.3758/s13423-010-0043-z.

- Harnad S. Categorical perception. Cambridge, UK: Cambridge University Press; 1987.

- Huttenlocher J, Hedges LV, Vevea JL. Why do categories affect stimulus judgment? Journal of Experimental Psychology: General. 2000;129:220–241. doi: 10.1037//0096-3445.129.2.220.

- Izard CE. The face of emotion. New York, NY: Appleton-Century-Crofts; 1971.

- Kikutani M, Roberson D, Hanley JR. What’s in a name? Categorical perception for unfamiliar faces can occur through labeling. Psychonomic Bulletin & Review. 2008;15:787–794. doi: 10.3758/pbr.15.4.787.

- Liberman AM, Harris KS, Hoffman HS, Griffin BC. The discrimination of speech sounds within and across phoneme boundaries. Journal of Experimental Psychology. 1957;54:358–368. doi: 10.1037/h0044417.

- Lindquist KA, Gendron M. What’s in a word? Language constructs emotion perception. Emotion Review. 2013;5:66–71.

- Livingston KR, Andrews JK, Harnad S. Categorical perception effects induced by category learning. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1998;24:732–753. doi: 10.1037//0278-7393.24.3.732.

- Lupyan G. Labels facilitate learning of novel categories. In: Cangelosi A, Smith ADM, Smith KR, editors. The evolution of language: Proceedings of the 6th international conference; Singapore. World Scientific; 2006. pp. 190–197.

- McKone E, Martini P, Nakayama K. Categorical perception of face identity in noise isolates configural processing. Journal of Experimental Psychology: Human Perception and Performance. 2001;27:573–599. doi: 10.1037//0096-1523.27.3.573.

- Ortony A, Turner TJ. What’s basic about basic emotions? Psychological Review. 1990;97:315–331. doi: 10.1037/0033-295x.97.3.315.

- Pilling M, Wiggett A, Ozgen E, Davies IRL. Is color “categorical perception” really perceptual? Memory & Cognition. 2003;31:538–551. doi: 10.3758/bf03196095.

- Pisoni DB, Lazarus JH. Categorical and noncategorical modes of speech perception along the voicing continuum. Journal of the Acoustical Society of America. 1974;55:328–333. doi: 10.1121/1.1914506.

- Pisoni DB, Tash J. Reaction times to comparisons within and across phonetic categories. Perception and Psychophysics. 1974;15:285–290. doi: 10.3758/bf03213946.

- Roberson D, Damjanovic L, Pilling M. Categorical perception of facial expressions: Evidence for a “category adjustment” model. Memory & Cognition. 2007;35:1814–1829. doi: 10.3758/bf03193512.

- Roberson D, Davidoff J. The categorical perception of color and facial expressions: The effect of verbal interference. Memory & Cognition. 2000;28:977–986. doi: 10.3758/bf03209345.

- Russell JA. A circumplex model of affect. Journal of Personality and Social Psychology. 1980;39:1161–1178.

- Russell JA. Core affect and the psychological construction of emotion. Psychological Review. 2003;110:145–172. doi: 10.1037/0033-295x.110.1.145.

- Stevenage SV. Which twin are you? A demonstration of induced categorical perception of identical twin faces. British Journal of Psychology. 1998;89:39–57.

- Tomkins SS. Affect, imagery, consciousness. Vol. 1: The positive affects. New York, NY: Springer; 1962.

- Viviani P, Binda P, Borsato T. Categorical perception of newly learned faces. Visual Cognition. 2007;15:420–467.

- Woodworth RS, Schlosberg H. Experimental psychology. New York, NY: Henry Holt; 1954. (Revised ed.).

- Young AW, Rowland D, Calder AJ, Etcoff NL, Seth A, Perrett DI. Facial expression megamix: Tests of dimensional and category accounts of emotion recognition. Cognition. 1997;63:271–313. doi: 10.1016/s0010-0277(97)00003-6.