Abstract

The National Heart, Lung, and Blood Institute (NHLBI) of the National Institutes of Health (NIH) convened a working group in June 2011 to examine alternative institutional review board (IRB) models. The working group was held in response to proposed changes in the regulations for government-supported research and the proliferation of multicenter clinical trials where multiple individual reviews may be inefficient. Group members included experts in heart, lung, and blood research, research oversight, bioethics, health economics, regulations, and information technology (IT). The group discussed alternative IRB models, ethical concerns, metrics for evaluating IRBs, IT needs, and economic considerations. Participants noted research gaps in IRB best practices and in metrics. The group arrived at recommendations for process changes, such as defining specific IRB performance requirements in funding announcements, requiring funded researchers to use more efficient alternative IRB models, and developing IT systems to facilitate information sharing and collaboration among IRBs. Despite the success of the National Cancer Institute's central IRB (CIRB), the working group, concerned about the creation costs and unknown cost efficiency of a new CIRB, and about the risk of shifting the burden of dealing with multiple IRBs from sponsors to research institutions, did not recommend the creation of an NHLBI-funded CIRB.

For over 45 years, federal agencies have required institutional review boards (IRBs) to oversee human subjects research. In 1966, when the precursors to the current regulations were implemented as federal grant administration policy for the Department of Health, Education, and Welfare,1,2 clinical trials were few, small, and usually performed within single institutions. Since then, randomized clinical trials have proliferated as they have become central to evidence-based medicine. They have also grown larger as the sample sizes necessary to demonstrate incremental benefits against a pool of existing effective therapies have increased. Clinical trials may now require more than 100 U.S. sites. This has given rise to a concern that the existing paradigm of local reviews, which can be duplicative, inefficient, and costly, is outmoded.3 This concern is apparent from the increasing interest in alternative IRB models, as demonstrated by the 2005 and 2006 workshops sponsored by agencies of the Department of Health and Human Services, the Association of American Medical Colleges, and others,4,5 and by the increasing use of central IRBs (CIRBs), both commercial and, more recently, federally funded.

The Office for Human Research Protections (OHRP) has proposed changes to the “Common Rule,” which would mandate that a multicenter study use a single IRB of record for all of its U.S. sites. Reasons behind this proposal include the fact that very little evidence exists to demonstrate that multiple IRB reviews better protect human subjects, that they are often duplicative and inefficient, and that they may actually weaken protections by creating an “authority vacuum.”6,7 OHRP has also recognized and is now evaluating one barrier to the use of alternative IRBs: institutions' concerns that they will face regulatory liability for decisions made by the external IRBs upon which they rely.8 The National Cancer Institute, too, is involved with alternative IRBs; its federally funded adult and pediatric CIRBs have enjoyed a decade of success.9

Recognizing this changing environment, the National Heart, Lung, and Blood Institute (NHLBI) of the National Institutes of Health (NIH) convened a working group to explore alternative IRB models and to discuss how to optimize the review process for federally funded research. The working group (listed at the end of this article) comprised experts in clinical research and its oversight, bioethics, health economics, regulations, and information technology (IT). They discussed IRB oversight in general and explored specific existing models, including multiple IRBs, reliance agreements with designated IRBs, regional IRBs, and commercial and federally funded CIRBs (all of which have been previously described5). In accordance with NHLBI policy, the working group has publicly posted an executive summary of the meeting.10

IRB oversight is but one component of a larger framework that protects study subjects. Regulatory agencies, data and safety monitoring boards, and even the free press all have their roles in ensuring the safety of clinical research. And at the heart of this protective framework, of course, is the individual investigator, who must conduct the trial with integrity. IRB reviews, then, are necessary but not sufficient to protect human subjects. Nonetheless, the working group recognized the importance of IRBs and explicitly called on IRBs to strive to ensure safe, ethically conducted trials, and not to become risk management boards that work primarily to limit their institutions' liability.11

Metrics of Efficiency and Performance

Measuring the effects that IRBs have on clinical research is difficult. When starting up a multicenter trial, researchers face many hurdles that slow down the process, and IRB review (often compounded by investigators' slow responses to IRB queries) is but one. Time spent awaiting IRB approval is often overshadowed by the lengthy, multilayered, complicated approvals involved in securing site contracts. One retrospective study at a large cancer center12 found that contractual negotiations and sign-offs were the largest contributors to study initiation delays, and that IRB reviews accounted for less than 25% of the total start-up time. But even those researchers whose retrospective analyses focus on the IRB process disagree on what timeline benchmarks to include to enable comparisons between various models. Working group members suggested that any such comparisons of efficiency must consider the type of study (observational versus interventional, single versus multiple sites), the level of risk to subjects, and the vulnerability of the patient population.

Prospectively collected comparative data have not yet been published, but two group members presented unpublished data from their own experiences of starting up a multicenter study. One member, whose institution—a large academic center—uses both central and local IRB reviews, witnessed far shorter approval times for clinical protocols (across the spectrum of cardiovascular and diabetes trials) when using CIRBs as compared with local IRBs. Factors that seemed to enhance the CIRBs' efficiency included more frequent meetings, better technologic resources and management, and the centralized review of amendments and advertising materials.

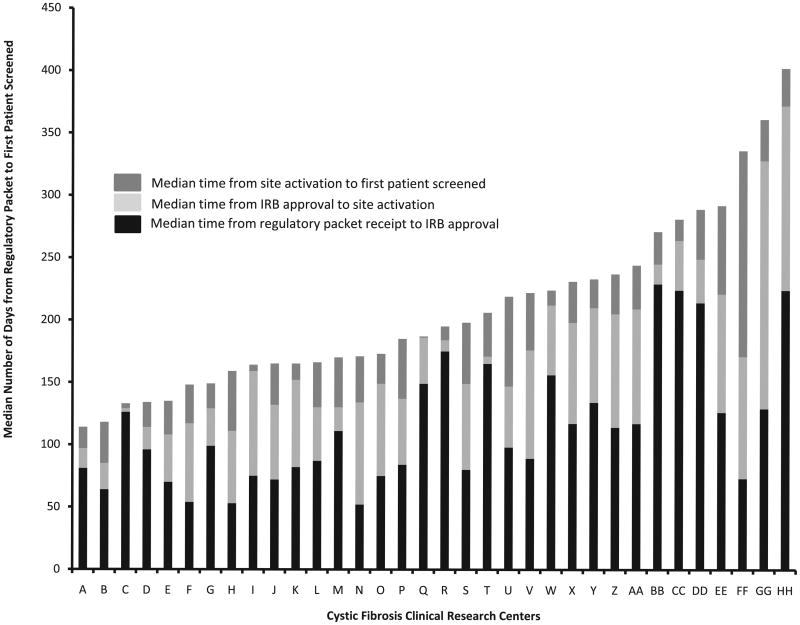

The other working group member presented prospective data from the Cystic Fibrosis Therapeutics Development Network, which showed great variability in the start-up times for 34 study centers that sought local IRB approval.13 IRB approval was only one of the factors that increased the time between submitting the completed regulatory packet and the screening of the first patient (Figure 1). The 34 sites in the study were supported by NIH Clinical and Translational Science Awards, but they did not initiate studies faster than sites without that support. The factors that did correlate with faster start-up times were higher institutional study volume, continuous process improvement, and transparent processes.

Figure 1.

Variability in clinical study start-up time. The chart shows the median number of days it took for studies at 34 cystic fibrosis clinical research sites supported by National Institutes of Health Clinical and Translational Science Awards to move from receipt of the regulatory packet to the first patient enrolled. The data cover October 2008 through March 2011, during which time each site began an average of eight studies.

The best ways to measure an IRB model's positive or negative impact have not been defined or widely accepted.14 The most common metric has been efficiency (i.e., how quickly a decision is made), and retrospective, survey-based reports on the variability of IRB decisions in multicenter trials have been published.15–17 But it is harder to measure the consistency and reliability of IRB decisions across time and study type, and much more difficult yet to assess the effect that IRB decisions have on protecting research subjects. Because quality metrics are so difficult to construct, the working group felt that a good first step in keeping IRBs focused on protecting human subjects would be simply sharing the records (such as meeting minutes or transcripts) of the decision making processes. Well-considered, thoughtful reviews could, in turn, serve as exemplars and educational tools. Secure IT would be required, of course, to safely and confidentially share these documents.

Facilitating “Shared Review”

Sophisticated IT would have the additional benefit of improving communications between IRBs. The Clinical and Translational Science Awards Consortium, currently funded by the NIH National Center for Advancing Translational Sciences, has undertaken one effort to improve IT systems. Still in an early pilot phase, IRBShare18 is a collaborative review model and electronic sharing resource designed to streamline the initiation of multicenter studies and trials. IRBShare enables institutions to share documents and data used in review decisions (e.g., minutes, protocols, investigators' brochures, approved consent documents) with other institutions participating in the same multicenter study. IRB chairs would then have the option of conducting a full IRB review locally or performing a “shared review,” with an appropriate portion focused on the local context. The “shared review” model does not require traditional reliance agreements, and all IRBs maintain “IRB of Record” status. The program will automatically limit accessibility, protect confidentiality, log all partner usage, and provide evaluation dashboards so that adopting sites can review and test efficiency gains.

Local Context Considerations

Similar to “shared review,” “facilitated review” is a model in which, after a CIRB reviews and approves a multicenter study, individual IRBs conduct a review focused on local issues. This model is currently in use by the NCI, and the NCI CIRB, at the suggestion of the Association for the Accreditation of Human Research Protection Programs, is undertaking a pilot at 25 sites in which the CIRB will conduct the full review to avoid dividing oversight responsibility between two IRBs. An external committee will evaluate the pilot late in 2012.

The working group specifically discussed the changing regulations on the concept of local context. OHRP policies have historically considered an understanding of the local research context integral to fulfilling the mandate of protecting human subjects.19 Recent OHRP public announcements20 and an editorial by its director21 appear to deemphasize that requirement. An IOM report22 on the NCI CIRB experience suggested that, in our ever-flatter world, the importance of local context has diminished; local context is rarely considered when interpreting the results of a clinical trial. One author of the IOM report even called the distinct review of local context an “unnecessary sacred cow.” A review conducted by one member of the working group found that local IRBs infrequently focused on truly local constraints and that many “local” issues were in fact common to many communities (such as low education level, poor comprehension of English, or poverty). Many in the group felt that CIRBs can adequately address these issues, but must maintain a sensitivity towards local IRBs.

Conflicts of Interest

Managing conflicts of interest (financial, scientific, and personal) is a complex endeavor regardless of whether an IRB is local, remote, or centralized. One working group member felt that having a CIRB oversee hundreds of sites creates the risk that a board member with a conflict of interest would have the power to inappropriately stymie research at all those sites. Others felt it likelier that conflicts of interest would skew the decisions of local IRBs, where board members might have long-standing personal relationships with investigators or where their institutions might benefit financially from study participation. Notably, a survey of IRB members at individual academic institutions found that more than one-third had recent relationships with industry, and more than half were unaware of the formal process for disclosing relationships.22

The working group summarized five points to be considered when establishing conflict-of-interest policies for CIRBs. The policy must (1) balance conflicts of interest against expertise, given the cutting-edge nature of protocols; (2) apply to all members, staff, and consultants; (3) define “financial conflicts” to cover employment, consultancies, strategic advisory roles, and patent ownerships by the board members, their institutions, and their immediate families (extending, perhaps, to include adult children, parents, and siblings); (4) prohibit members with conflicts of interest from participating in any aspects of review (including initial, continuing, amending, and auditing) or writing policy that is related to those conflicts; and (5) establish a separate oversight body with knowledge and experience in the identification and management of conflicts of interest.

The Economics of IRBs

The final major consideration discussed by the working group was the economics of IRBs. Studies23–25 have demonstrated economies of scale for IRBs, both local and central, with the cost per review decreasing as the number of protocols increases. One study found that small IRBs have a particularly steep cost curve, which starts to level out around 140 reviews per year.26
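The economies of scale described above can be illustrated with a minimal sketch. It assumes a simple cost structure in which an IRB has a fixed annual cost plus a constant cost per review; this functional form and the dollar figures are hypothetical illustrations, not the model or data from the cited studies.

```python
def cost_per_review(n_reviews, fixed_cost, variable_cost):
    """Average cost per review under an assumed fixed-plus-variable cost model.

    n_reviews: number of protocols reviewed per year (must be positive)
    fixed_cost: hypothetical annual cost incurred regardless of volume
    variable_cost: hypothetical incremental cost of each additional review
    """
    if n_reviews <= 0:
        raise ValueError("n_reviews must be positive")
    return fixed_cost / n_reviews + variable_cost


if __name__ == "__main__":
    # Hypothetical figures chosen only to show the shape of the curve.
    FIXED = 100_000
    VARIABLE = 500
    for n in (10, 50, 140, 500):
        print(n, round(cost_per_review(n, FIXED, VARIABLE), 2))
```

Under this assumption, the average cost falls steeply at low volumes and flattens as the fixed cost is spread over more reviews, which is the general pattern the text describes for small IRBs.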

Economies of scale may take longer to kick in for newly created CIRBs because of the costs of putting new processes in place and climbing potentially steep learning curves. For federal agencies thinking about creating new CIRBs, considerations to determine cost-effectiveness will include federal contracting regulations (which may add costly steps) and the availability of existing infrastructure (such as IT support and personnel, which may or may not be sufficient).

All of these variables mean that the economic efficiency of the NCI CIRB may not be easily replicated. And, in any case, replication of this centralized, disease-specific model may not always be desirable. Copying the NCI CIRB model many times over could shift the burden of dealing with multiple IRBs from federal and other funders onto the research institutions, which would have to deal with different CIRBs, each with its own submission processes, for studies of different diseases.

Recommendations

On the whole, the working group agreed with others who have found that multiple IRB reviews can be inefficient and that the duplication does not necessarily enhance the protection of human subjects. The group made the following recommendations.

To define IRB best practices. Research in the area of best practices, such as how best to train board members, would help create a template for excellence. This would not only improve the review process, but would also allow IRBs to see the steps that other IRBs have taken in their reviews, which, in turn, would help ensure that IRBs could trust each other's decisions.

To develop metrics to assess the quality and substance of IRB reviews and decisions. These might include whether the IRB's reasons for its decision are documented and valid; midstudy metrics to monitor safety; and feedback from investigators, IRB members, funders, and subjects.

To make specific IRB review requirements part of funding opportunity announcements (FOAs). As an example, an FOA for a multicenter clinical trial could require that 90% of the sites be able to process IRB review within 60 days. Investigators could be required to document adherence to the timeline.

To require the use of the most efficient IRB models. The development of metrics to compare the efficiency and appropriateness of alternative IRB decision-making models would facilitate such a requirement.

To develop systems, such as IRBShare, to permit multicenter study sites to share an individual IRB's process for decision making. The utility of this prototype, still under development, remains to be seen, but theoretically, the system could enhance the substance and efficiency of subsequent reviews and further the development of best practices.
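The example requirement in the funding-announcement recommendation above (90% of sites able to process IRB review within 60 days) is straightforward to check once per-site review times are recorded. The following is a minimal sketch; the function name, parameters, and site data are hypothetical and serve only to show how adherence to such a timeline might be documented.

```python
def meets_foa_timeline(review_days, max_days=60, required_fraction=0.90):
    """Check whether enough sites completed IRB review within the limit.

    review_days: per-site IRB review durations in days (hypothetical input)
    Returns a tuple (passes, fraction_on_time).
    """
    if not review_days:
        raise ValueError("at least one site is required")
    on_time = sum(1 for days in review_days if days <= max_days)
    fraction = on_time / len(review_days)
    return fraction >= required_fraction, fraction


if __name__ == "__main__":
    # Hypothetical review durations for ten sites; not real study data.
    example_days = [21, 35, 40, 44, 52, 58, 60, 61, 47, 30]
    passes, fraction = meets_foa_timeline(example_days)
    print(passes, fraction)
```

The thresholds are parameters so that a different announcement could set its own limits without changing the check itself.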

The working group discussed, but did not recommend, the creation of a separate NHLBI-funded CIRB. The concerns were the creation costs and unknown cost-efficiency of such a CIRB, and the risk that it would shift the burden of dealing with multiple IRBs from sponsors to research institutions.

The system of multiple local IRB reviews for large clinical trials has been in place for decades, but it is inefficient and has not been shown to be the best model for protecting human subjects. Research on IRB processes, the sharing of best practices, and the recommendations outlined here may be expected to improve on the current paradigm.

Acknowledgments

The authors gratefully acknowledge the contributions of Gail Weinmann, MD, Deputy Director, Division of Lung Diseases, NHLBI, NIH, for her guidance in the planning of the meeting's scope and agenda and her input on the organization of the manuscript.

Funding/Support: The meeting and travel support for invited participants were funded by the National Heart, Lung, and Blood Institute.

Members of the National Heart, Lung, and Blood Institute's Working Group on Optimizing the IRB Process

Gordon R. Bernard, MD, Vanderbilt University School of Medicine (chair); C. Noel Bairey Merz, MD, Cedars-Sinai Heart Institute; Angela Bowen, MD, Angela J. Bowen & Associates, LLC; Nancy Neveloff Dubler, LLB, Montefiore Einstein Center for Bioethics; Daniel E. Ford, MD, MPH, Johns Hopkins University School of Medicine; Timothy J. Gardner, MD, Christiana Care Health System; Christine Grady, RN, PhD, NIH Clinical Center (planning committee member); Jacquelyn Goldberg, JD, NCI, NIH; Jesse A. Goldner, JD, St. Louis University Schools of Law and Medicine; Robert A. Harrington, MD, Stanford University School of Medicine; Paul A. Harris, PhD, Vanderbilt University; Vita J. Land, MD, MBA, Children's Memorial Hospital, Chicago (planning committee member); Kerry L. Lee, PhD, Duke Clinical Research Institute; Fernando J. Martinez, MD, University of Michigan Health Systems; Karen J. Maschke, PhD, The Hastings Center; Jerry Menikoff, MD, JD, Office for Human Research Protections; Diane Nugent, MD, Children's Hospital of Orange County, California; Thomas A. Pearson, MD, MPH, PhD, University of Rochester (planning committee member); Bonnie Ramsey, MD, University of Washington (planning committee member); Sherrill J. Slichter, MD, Puget Sound Blood Center; B. Taylor Thompson, MD, Massachusetts General Hospital/Harvard Medical School; David Tribble, MD, DrPH, Uniformed Services University of Health Sciences; Suresh Vedantham, MD, Washington University School of Medicine; and Todd H. Wagner, PhD, VA Palo Alto Health Care System/Stanford University.

Footnotes

Other disclosures: None.

Ethical approval: Ethics approval was not required for this work.

Previous presentations: A brief summary of this meeting was posted publicly in accordance with National Institutes of Health policy.

Disclaimer: The opinions expressed are those of the individual authors and do not represent a statement of the National Heart, Lung, and Blood Institute, National Institutes of Health, or Department of Health and Human Services.

Contributor Information

Dr. Alice M. Mascette, Division of Cardiovascular Sciences, National Heart, Lung, and Blood Institute, National Institutes of Health, Bethesda, Maryland.

Dr. Gordon R. Bernard, Vanderbilt University Medical Center, Nashville, Tennessee.

Dr. Donna DiMichele, Division of Blood Diseases and Resources, National Heart, Lung, and Blood Institute, National Institutes of Health, Bethesda, Maryland.

Mr. Jesse A. Goldner, Saint Louis University Schools of Law and Medicine, St. Louis, Missouri.

Dr. Robert Harrington, Department of Medicine, Stanford University, Stanford, California; at the time of the working group meeting, he led the Duke Clinical Research Institute and was the Richard S. Stack MD Distinguished Professor, Division of Cardiology, Duke University School of Medicine, Durham, North Carolina.

Dr. Paul A. Harris, Vanderbilt University Medical Center, Nashville, Tennessee.

Ms. Hilary S. Leeds, Office of Science and Technology, National Heart, Lung, and Blood Institute, National Institutes of Health, Bethesda, Maryland.

Dr. Thomas A. Pearson, University of Rochester School of Medicine and Dentistry, Rochester, New York.

Dr. Bonnie Ramsey, Cystic Fibrosis Therapeutics Development Network Coordinating Center, and professor of pediatrics, University of Washington School of Medicine, Seattle, Washington.

Dr. Todd H. Wagner, VA Palo Alto Health Care System, Menlo Park, California, and consulting associate professor in health research and policy, Center for Health Policy/Center for Primary Care and Outcomes Research, Stanford University, Stanford, California.

References

- 1. Department of Health, Education, and Welfare. Protection of Human Subjects. Federal Register. 1974;39:18914–18920.

- 2. National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. Staff Report: The Protection of Human Subjects in Research Conducted or Supported by Federal Agencies. 1978 Feb 10. http://www.archive.org/stream/protectionofhuma00unit/protectionofhuma00unit_djvu.txt. Accessed August 13, 2012.

- 3. Department of Health and Human Services, Office of the Inspector General. Institutional Review Boards: A Time for Reform. Report no. OEI-01-97-00193. http://www.oig.hhs.gov/oei/reports/oei-01-97-00193.pdf. Accessed July 5, 2012.

- 4. Department of Health and Human Services. Alternative Models of IRB Review Workshop Summary Report. Washington, DC: Department of Health and Human Services; 2005.

- 5. Department of Health and Human Services. National Conference on Alternative IRB Models: Optimizing Human Subject Protection. Washington, DC: Association of American Medical Colleges; 2006.

- 6. Menikoff J. The paradoxical problem with multiple-IRB review. N Engl J Med. 2010;363:1591–1593. doi: 10.1056/NEJMp1005101.

- 7. Department of Health and Human Services Office of the Secretary 45 CFR Parts 46, 160, and 164; Food and Drug Administration 21 CFR Parts 50 and 56; human subjects research protections: Enhancing protections for research subjects and reducing burden, delay and ambiguity for investigators; advance notice of proposed rulemaking. Fed Regist. 2011;76:44512–44531.

- 8. Department of Health and Human Services. 45 CFR Part 46; Office for Human Research Protections; institutional review boards; advance notice of proposed rulemaking; request for comments. Fed Regist. 2009;74:9578–9583.

- 9. Christian MC, Goldberg JL, Killen J, et al. A central institutional review board for multi-institutional trials. N Engl J Med. 2002;346:1405–1408. doi: 10.1056/NEJM200205023461814.

- 10. National Heart, Lung, and Blood Institute. Facilitating NHLBI clinical trials through optimization of the IRB process: Are central IRBs the solution? http://www.nhlbi.nih.gov/meetings/workshops/irb.htm. Accessed July 5, 2012.

- 11. Fost N, Levine RJ. The dysregulation of human subjects research. JAMA. 2007;298:2196–2198. doi: 10.1001/jama.298.18.2196.

- 12. Dilts DM, Sandler AB. Invisible barriers to clinical trials: the impact of structural, infrastructural, and procedural barriers to opening oncology clinical trials. J Clin Oncol. 2006;24:4545–4552. doi: 10.1200/JCO.2005.05.0104.

- 13. Van Dalfsen J, Ramsey B. Clinical Research Benchmarking Project. Clinical and Translational Science Awards Consortium. 2012. https://www.ctsacentral.org/documents/bahdocs/Van%20Dalfsen.pdf. Accessed July 5, 2012.

- 14. Grady C. Do IRBs protect human research participants? JAMA. 2010;304:1122–1123. doi: 10.1001/jama.2010.1304.

- 15. Hirshon JM, Krugman SD, Witting MD, et al. Variability in institutional review board assessment of minimal-risk research. Acad Emerg Med. 2002;9:1417–1420. doi: 10.1111/j.1553-2712.2002.tb01612.x.

- 16. McWilliams R, Hoover-Fong J, Hamosh A, Beck S, Beaty T, Cutting G. Problematic variation in local institutional review of a multicenter genetic epidemiology study. JAMA. 2003;290:360–366. doi: 10.1001/jama.290.3.360.

- 17. Silverman H, Hull SC, Sugarman J. Variability among institutional review boards' decisions within the context of a multicenter trial. Crit Care Med. 2001;29:235–241. doi: 10.1097/00003246-200102000-00002.

- 18. IRBShare. CTSA Consortium Coordinating Center. https://www.irbshare.org/about.php. Accessed July 5, 2012.

- 19. Department of Health and Human Services. OHRP. IRB Knowledge of Local Research Context. 1998 Aug 27 [Updated July 21, 2000]. http://www.hhs.gov/ohrp/policy/local.html. Accessed July 5, 2012.

- 20. Department of Health and Human Services. OHRP. OHRP Archives Two Guidance Documents on Multicenter Clinical Trials. 2010 Jan 5. http://www.hhs.gov/ohrp/newsroom/announcements. Accessed 2012.

- 21. Institute of Medicine. Multi-Center Phase III Clinical Trials and NCI Cooperative Groups: Workshop Summary. Washington, DC: National Academies Press; 2009.

- 22. Campbell EG, Weissman JS, Vogeli C, et al. Financial relationships between institutional review board members and industry. N Engl J Med. 2006;355:2321–2329. doi: 10.1056/NEJMsa061457.

- 23. Speckman JL, Byrne MM, Gerson J, et al. Determining the costs of institutional review boards. IRB. 2007;29:7–13.

- 24. Sugarman J, Getz K, Speckman JL, Byrne MM, Gerson J, Emanuel EJ; Consortium to Evaluate Clinical Research Ethics. The cost of institutional review boards in academic medical centers. N Engl J Med. 2005;352:1825–1827. doi: 10.1056/NEJM200504283521723.

- 25. Wagner TH, Murray C, Goldberg J, Adler JM, Abrams J. Costs and benefits of the National Cancer Institute central institutional review board. J Clin Oncol. 2010;28:662–666. doi: 10.1200/JCO.2009.23.2470.

- 26. Wagner TH, Cruz AM, Chadwick GL. Economies of scale in institutional review boards. Med Care. 2004;42:817–823. doi: 10.1097/01.mlr.0000132395.32967.d4.