Abstract

Over the next two decades, a dramatic shift in the demographics of society will take place, with a rapid growth in the population of older adults. One of the most common complaints with healthy aging is a decreased ability to successfully perceive speech, particularly in noisy environments. In such noisy environments, the presence of visual speech cues (i.e., lip movements) provides striking benefits for speech perception and comprehension, but previous research suggests that older adults gain less from such audiovisual integration than their younger peers. To determine at what processing level these behavioral differences arise in healthy-aging populations, we administered a speech-in-noise task to younger and older adults. We compared the perceptual benefits of having speech information available in both the auditory and visual modalities and examined both phoneme and whole-word recognition across varying levels of signal-to-noise ratio (SNR). For whole-word recognition, older relative to younger adults showed greater multisensory gains at intermediate SNRs, but reduced benefit at low SNRs. By contrast, at the phoneme level both younger and older adults showed approximately equivalent increases in multisensory gain as SNR decreased. Collectively, the results provide important insights into both the similarities and differences in how older and younger adults integrate auditory and visual speech cues in noisy environments, and help explain some of the conflicting findings in previous studies of multisensory speech perception in healthy aging. These novel findings suggest that audiovisual processing is intact at more elementary levels of speech perception in healthy-aging populations, and that deficits begin to emerge only at the more complex, word-recognition level of speech signals.

Keywords: Speech perception, Multisensory, Aging, Multisensory integration, Inverse effectiveness

Introduction

Visual cues are known to significantly impact speech perception; when one can both hear a speaker’s utterance and concurrently see the articulation of that utterance (lip reading), speech comprehension is more accurate (Ross, et al., 2011, Ross, et al., 2007a, Sommers, et al., 2005b, Stevenson and James, 2009, Sumby and Pollack, 1954) and less effortful (Fraser, et al., 2010) than when only auditory information is available. The behavioral gain observed when processing information via multiple sensory modalities is governed by a number of factors, with one of the more important being the relative effectiveness of the stimuli that are paired. As a general rule, greater benefits are observed from pairing stimuli that, on their own, are each weakly effective, compared to pairing stimuli that are both strongly effective when presented in isolation (Bishop and Miller, 2009, James, et al., 2012, Meredith and Stein, 1983, Meredith and Stein, 1986, Nath and Beauchamp, 2011, Stevenson, et al., 2012, Stevenson, et al., 2007, Stevenson and James, 2009, Stevenson, et al., 2009, Wallace, et al., 1996, Werner and Noppeney, 2009). This concept of “inverse effectiveness” implies that the primary benefits of multisensory integration take place when the individual stimuli provide weak or ambiguous information. For example, the addition of a visual speech signal provides the greatest gain when the auditory speech is noisy (Sumby and Pollack, 1954). Once an individual stimulus is sufficiently salient, the need for multisensory-mediated benefits substantially declines.

In the context of real-world multisensory stimuli, these changes in effectiveness can be mediated not only by changes in the external characteristics of the stimuli (for example the loudness of an auditory stimulus), but also by changes in internal events governing the processing of that information. The declines in visual and auditory acuity associated with normal aging probably result not only from decreases in internal signal strength attributable to changes in transduction and encoding processes, but also from additional internal noise (i.e., variability) in those processes. The loss of visual and auditory acuity is seen for both simple and more complex stimuli, and is particularly prevalent for speech signals, most notably in the presence of external noise (Dubno, et al., 1984, Gosselin and Gagne, 2011, Humes, 1996, Martin and Jerger, 2005, Sommers, et al., 2005a). Although these age-related declines in speech perception and comprehension have been widely interpreted to be a result of changes in auditory acuity (Liu and Yan, 2007) and diminished ability to filter task-irrelevant auditory information (Hugenschmidt, et al., 2009), declines in visual acuity may play an important and underappreciated role. Some evidence suggests that older adults may rely on visual information to a greater extent than their younger counterparts (Freiherr, et al., 2013, Laurienti, et al., 2006), which may reflect the use of multisensory integration as a compensatory mechanism for declining unisensory abilities.

In this prior work, multisensory gain increased with age for the integration of simple audiovisual stimuli such as flashes and tones, a finding consistent with the principle of inverse effectiveness given the age-related declines in unisensory processing acuity (Laurienti, et al., 2006). However, for speech-related stimuli, the picture is more complex and provides only partial support for the concept of inverse effectiveness. Whereas older and younger adults showed equivalent levels of audiovisual gain for high (i.e., easier) SNR trials, older adults showed less gain than younger adults on low (i.e., more difficult) SNR trials (Tye-Murray, et al., 2010). One potential explanation for these disparate findings is that in this latter study participants were required to complete or repeat whole sentences, which may introduce variability due to other cognitive factors. For example, verbal memory is known to decline in non-demented aging (Park, et al., 2002), a finding that may impair the ability of older participants to recall whole sentences. Thus, the reduced multisensory gain observed on low SNR trials in older adults relative to younger adults may in fact reflect memory impairments for the full sentences, rather than deficits in the integration of auditory and visual cues.

Here, we conducted a novel study designed to address these conflicting observations, specifically structured to examine how aging affects multisensory-mediated gains in speech perception under noisy conditions. Critically, we examined these gains at the level of more elementary (i.e., phonemic) and more complex (i.e., whole word) components of speech, providing the first systematic investigation of how multisensory integration at different levels of processing is affected by aging. We presented younger and older healthy adults with a standard audiovisual speech-in-noise task in which participants reported the perceived word. We used single word presentations to limit the impact of higher-order cognitive changes known to occur with aging, such as changes in memory or context. Importantly, the task was scored both at the whole-word level and the phoneme level, allowing us to pinpoint whether changes in multisensory gain across the lifespan differed depending upon the level of processing necessary for accurate comprehension. Our results provide evidence that older adults show largely intact multisensory processing at lower (i.e., phonemic) levels of speech perception, but begin to show deficits at higher level processing with whole-word recognition.

Materials and Methods

Participants

Thirty-four participants (20 female, mean age = 39.0, sd = 18.4, range = 19–67) completed a behavioral speech-in-noise paradigm. Experimental protocols were approved by Vanderbilt University Medical Center’s institutional review board. Participants were divided into two age groups based upon the median age (39 years) reported in Ross et al.’s (2007a) publication investigating inverse effectiveness in single-word recognition. The younger group included participants 39 years of age or younger (18 in total, 8 female, mean age = 22.8 years, sd = 4.7, range = 19–38) and the older group consisted of participants 40 years of age or older (16 in total, 12 female, mean age = 57.3 years, sd = 6.9, range = 45–67). For demographic information, see Table 1. All individuals were screened for normal visual acuity with a tumbling E visual chart and were not hearing-aid users. Additionally, Mini-mental state examinations were conducted on all participants, with a score greater than or equal to 27 used as an exclusionary cutoff, though no participants were excluded. Additionally, participants reported no neurological impairments. Participants were recruited via flyer at Vanderbilt University Medical Center.

Table 1.

Participant Demographics

| Group | N | Mean age (S.D.) | Age range | % female | % male |
|---|---|---|---|---|---|
| Younger | 18 | 22.8 (4.7) | 19–38 | 44% | 56% |
| Older | 16 | 57.3 (6.9) | 45–67 | 75% | 25% |

Stimuli

Stimuli included dynamic, audiovisual (AV) recordings of a female speaker saying 216 triphonemic nouns. Stimuli were selected from a previously published stimulus set, The Hoosier Audiovisual Multi-talker Database (Sheffert et al., 1996). All stimuli were spoken by speaker F1. The stimuli selected were monosyllabic English words that were matched across sets for accuracy on both visual-only and audio-only recognition (Lachs and Hernandez, 1998), and were also matched across sets in lexical neighborhood density (Luce and Pisoni, 1998; Sheffert et al., 1996). This set of single-word tokens has been used successfully in previous studies of multisensory integration (Stevenson et al., 2011; Stevenson et al., 2010; Stevenson and James, 2009; Stevenson et al., 2009). Audio signal levels were measured as root mean square (RMS) contrast and equated across all tokens.

All stimuli throughout the study were presented using MATLAB 2012b (MATHWORKS Inc., Natick, MA) software with the Psychophysics Toolbox extensions (Brainard, 1997; Pelli, 1997). Visual stimuli were 200×200 pixels and subtended 10×10° of visual angle. Audio stimuli were presented through two aligned speakers on each side of the monitor. All tokens lasted two seconds and included all pre-articulatory gestures.

In the visual-only condition, the visual component of each stimulus, or viseme, was presented. Auditory stimuli were all overlaid with 8-channel multitalker babble at 72 dB SPL. The auditory babble began 500 ms prior to the beginning of the stimulus token and ended 500 ms following token offset. The RMS of the auditory babble was linearly ramped up and down during the pre- and post-stimulus 500 ms periods, respectively, during which the first and last frames of the visual token were displayed. Auditory stimuli were presented at four separate sound levels relative to the auditory noise. The resulting SNRs were 0, −6, −12, and −18 dB SPL.
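The level manipulation described above can be sketched in a few lines. This is only an illustration, not the authors' MATLAB code: it assumes SNR is defined on amplitude RMS as 20·log10(RMS_signal / RMS_noise), and the function names (`rms`, `scale_to_snr`, `babble_envelope`) and the toy sine-wave "signal" and "babble" are hypothetical. The 3000 ms envelope duration follows from the 2 s token plus the 500 ms of babble before and after it.

```python
import math

def rms(samples):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def scale_to_snr(signal, noise, snr_db):
    """Scale `signal` so that 20*log10(rms(signal)/rms(noise)) equals snr_db.

    Assumption: SNR is an amplitude-RMS ratio; the noise level is left fixed.
    """
    factor = (rms(noise) / rms(signal)) * 10 ** (snr_db / 20)
    return [x * factor for x in signal]

def babble_envelope(t_ms, total_ms=3000.0, ramp_ms=500.0):
    """Linear on/off ramp for the babble: 0 -> 1 over the first ramp_ms,
    1 -> 0 over the last ramp_ms, and 1 in between."""
    if t_ms < 0 or t_ms > total_ms:
        return 0.0
    return min(1.0, t_ms / ramp_ms, (total_ms - t_ms) / ramp_ms)

# Toy stand-ins for a speech token and multitalker babble (hypothetical data).
signal = [math.sin(2 * math.pi * 5 * i / 1000) for i in range(1000)]
noise = [0.5 * math.sin(2 * math.pi * 37 * i / 1000 + 1.0) for i in range(1000)]

scaled = scale_to_snr(signal, noise, -12)
achieved_snr_db = 20 * math.log10(rms(scaled) / rms(noise))
```

Scaling the token rather than the babble mirrors the description of speech being presented at four levels relative to a fixed noise level.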

Procedures

Participants sat in a sound- and light-attenuating WhisperRoom™ (Model SE 2000; Whisper Room Inc.) approximately 60 cm from the monitor. Participants were presented with nine separate runs of 24 single-word presentations each: four audiovisual runs (one at each SNR), four auditory-only runs (one at each SNR), and one visual-only run (with auditory multitalker babble). During auditory-only presentations, the first frame of the associated video was presented and remained static throughout the presentations. Run orders were randomized across participants. Within participants, word lists were randomized across runs with no words repeated. Word lists were also counterbalanced between individuals, so words were presented in different modalities and SNRs for each individual.
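As a rough sketch of this design, the nine runs can be generated by assigning nine 24-word lists to the nine conditions and then shuffling the run order. The code below uses simple seeded randomization, which is an assumption: the exact randomization and counterbalancing scheme is not specified here. The function name `build_session` and the placeholder word tokens are hypothetical.

```python
import random

SNRS_DB = [0, -6, -12, -18]
# Nine conditions: four audiovisual, four auditory-only, one visual-only.
CONDITIONS = (
    [("AV", snr) for snr in SNRS_DB]
    + [("A", snr) for snr in SNRS_DB]
    + [("V", None)]
)

def build_session(word_lists, seed):
    """Assign each word list to one condition, then randomize run order.

    Assumption: plain randomization per participant (seeded for repeatability),
    standing in for whatever counterbalancing scheme was actually used.
    """
    if len(word_lists) != len(CONDITIONS):
        raise ValueError("need exactly one word list per condition")
    rng = random.Random(seed)
    lists = [list(words) for words in word_lists]
    rng.shuffle(lists)  # which list is paired with which condition
    runs = [
        {"modality": modality, "snr_db": snr, "words": words}
        for (modality, snr), words in zip(CONDITIONS, lists)
    ]
    rng.shuffle(runs)  # presentation order of the runs
    return runs

# Placeholder tokens standing in for the 216 words (9 lists of 24).
word_lists = [[f"word{l:d}_{i:02d}" for i in range(24)] for l in range(9)]
session = build_session(word_lists, seed=1)
```

Because each list is used exactly once per participant, no word is repeated within a session, and nine lists of 24 words account for all 216 tokens.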

Experimental procedures were identical for all runs. Participants were instructed to attend to the speaker at all times, and to report the word they perceived by typing the word (on a keyboard placed in front of them). After each trial the experimenter confirmed the participant’s report to correct for spelling errors, and then the next word was presented. No time limit was given for participant responses. Each run lasted approximately 5 minutes, and all run orders were counterbalanced.

Analysis

Responses were scored in two ways, at the whole-word level and at the phoneme level. Word-recognition accuracy was scored as correct if and only if the entire word reported was correctly perceived. Phoneme accuracy allowed for participants to be scored as correctly reporting 0–3 phonemes per word. Mean word accuracy and phoneme accuracy were then calculated for each participant and for each run.
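The two scoring schemes can be illustrated with a short sketch. It assumes that each word is represented as a sequence of three phoneme labels and that phonemes are compared position by position; how responses were transcribed and aligned is not stated, so both the representation and the function names (`score_trial`, `run_accuracy`) are hypothetical.

```python
def score_trial(target, response):
    """Score one trial at both levels.

    target, response: sequences of three phoneme labels (assumed representation).
    Returns (word_correct, n_phonemes_correct), with n_phonemes_correct in 0..3.
    """
    if len(target) != 3 or len(response) != 3:
        raise ValueError("expected triphonemic target and response")
    n_correct = sum(t == r for t, r in zip(target, response))
    return n_correct == 3, n_correct

def run_accuracy(trials):
    """Mean word and phoneme accuracy (as proportions) over one run."""
    scores = [score_trial(target, response) for target, response in trials]
    word_acc = sum(word for word, _ in scores) / len(scores)
    phoneme_acc = sum(n for _, n in scores) / (3 * len(scores))
    return word_acc, phoneme_acc

# Hypothetical run of three trials: one exact match, one near miss, one miss.
trials = [
    (("k", "ae", "t"), ("k", "ae", "t")),  # "cat" reported as "cat"
    (("k", "ae", "t"), ("k", "ae", "b")),  # "cat" reported as "cab"
    (("d", "ao", "g"), ("s", "ih", "p")),  # no phonemes shared
]
word_acc, phoneme_acc = run_accuracy(trials)
```

The near miss counts as fully wrong at the word level but contributes two of three phonemes at the phoneme level, which is why the two measures can diverge.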

The expected multisensory accuracy predicted by the individual unisensory responses was calculated by:

pAV = p(A)+p(V)−[p(A)*p(V)],

where pAV represents a null hypothesis characterizing what the response will be to audiovisual presentations if the auditory and visual information are processed independently (Stevenson, et al., 2014a), and where p(A) and p(V) represent the individual’s response accuracy to auditory- and visual-only presentations, respectively. Each participant’s responses to unisensory presentations were used to calculate their individual predicted pAV values for each SNR level. These predicted values were then used as a null hypothesis from which we measured multisensory interactions, namely multisensory gain, which we define here as an increase in performance above and beyond that predicted by non-integrative statistical facilitation (Raab, 1962).
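A minimal sketch of this calculation follows, with accuracies expressed as proportions and gain taken as the difference between observed and predicted audiovisual accuracy (AV minus pAV). The function names are hypothetical. The inputs are the younger group's whole-word means at −18 dB from Table 2, used only for illustration; in the study, pAV was computed separately for each participant.

```python
def predicted_av(p_a, p_v):
    """Audiovisual accuracy expected if the auditory and visual channels are
    processed independently: p(A) + p(V) - p(A) * p(V)."""
    for p in (p_a, p_v):
        if not 0.0 <= p <= 1.0:
            raise ValueError("accuracies must be proportions in [0, 1]")
    return p_a + p_v - p_a * p_v

def multisensory_gain(observed_av, p_a, p_v):
    """Observed audiovisual accuracy minus the independence prediction."""
    return observed_av - predicted_av(p_a, p_v)

# Illustrative inputs: group means (younger adults, whole words, -18 dB SNR).
p_a, p_v, observed_av = 0.072, 0.141, 0.456
p_av = predicted_av(p_a, p_v)
gain = multisensory_gain(observed_av, p_a, p_v)
```

With these group means the predicted accuracy is about 0.20 and the gain about 0.25, in line with the 25.5% mean gain tabulated for that condition; an exact match is not expected because the tabulated gain is an average of per-participant values.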

To assess unisensory auditory processing levels, a two (age group: younger vs older) × four (SNR: 0, −6, −12, −18 dB SPL) mixed-model ANOVA was conducted at both the word- and phoneme-recognition levels for auditory-only word presentations. To assess unisensory visual processing levels (i.e., lip reading), a between-subject t-test was performed across age groups. To assess multisensory benefits, a two (age group: younger vs older) × four (SNR: 0, −6, −12, −18 dB SPL) × two (AV-measure: observed AV vs predicted AV) mixed-model ANOVA was conducted for both word and phoneme accuracy scores. Finally, to directly compare age-related differences in multisensory gain for word versus phoneme recognition, the percent multisensory gain data were subjected to a two (age group: younger vs older) × four (SNR: 0, −6, −12, −18 dB SPL) × two (measure: whole-word vs phoneme) mixed-model ANOVA.

Results

All analyses were run separately for each of the two levels of data scoring: whole-word accuracy and phoneme accuracy. Mean accuracies and standard deviations were calculated for each group, sensory modality, and SNR level (Table 2).

Table 2.

Mean whole-word and phoneme recognition accuracy rates

| Modality | SNR | Word: younger | Word: older | Word: p | Word: t | Word: d | Phoneme: younger | Phoneme: older | Phoneme: p | Phoneme: t | Phoneme: d |
|---|---|---|---|---|---|---|---|---|---|---|---|
| V | N/A | 14.1±8.7 | 9.4±7.4 | .104 | 1.67 | .59 | 43.1±10.6 | 37.5±12.3 | .135 | 1.54 | .49 |
| A | 0 | 86.1±9.4 | 71.8±15.7 | .002 | 3.45 | 1.11 | 94.2±4.2 | 56.9±9.8 | .005 | 3.00 | .97 |
| A | −6 | 73.4±9.2 | 52.6±15.1 | <.001 | 4.42 | 1.67 | 88.1±4.7 | 75.3±10.5 | <.001 | 4.86 | 1.57 |
| A | −12 | 58.1±7.5 | 38.5±14.8 | <.001 | 4.113 | 1.67 | 76.7±6.0 | 62.6±12.2 | <.001 | 4.39 | 1.47 |
| A | −18 | 7.2±6.7 | 2.9±4.7 | .062 | 1.94 | 0.74 | 23.7±10.0 | 14.6±9.1 | .008 | 2.86 | .95 |
| AV | 0 | 92.8±7.5 | 80.7±23.5 | .084 | 1.79 | 0.69 | 97.1±2.9 | 89.2±23.4 | .154 | 1.46 | .48 |
| AV | −6 | 86.8±8.2 | 77.1±13.8 | .048 | 2.06 | 0.86 | 95.1±3.5 | 90.4±6.1 | .008 | 2.85 | .95 |
| AV | −12 | 78.0±12.0 | 63.5±16.3 | .001 | 3.54 | 1.01 | 89.2±7.5 | 83.0±8.5 | .022 | 2.41 | .77 |
| AV | −18 | 45.6±13.0 | 27.1±12.4 | <.001 | 4.80 | 1.46 | 68.7±8.2 | 57.4±11.9 | .002 | 3.39 | 1.10 |
| Multisensory Gain* | 0 | 5.2±11.2 | 6.90±15.9 | .447 | 0.77 | 0.12 | 0.6±3.9 | −2.0±17.7 | .548 | 0.61 | 0.20 |
| Multisensory Gain* | −6 | 9.9±8.9 | 20.0±12.2 | .006 | 2.90 | 0.94 | 2.0±3.7 | 6.1±6.5 | .021 | 2.43 | 0.78 |
| Multisensory Gain* | −12 | 14.3±11.1 | 19.2±14.3 | .818 | 0.23 | 0.39 | 2.7±7.1 | 7.2±7.6 | .098 | 1.71 | 0.62 |
| Multisensory Gain* | −18 | 25.5±10.8 | 15.0±10.4 | .001 | 3.56 | 0.99 | 12.4±9.4 | 10.7±12.4 | .635 | 0.48 | 0.16 |

p, t, and d values describe a comparison of accuracies between younger and older groups

Values given for multisensory gain are percent changes between observed and predicted recognition accuracy rates.
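For readers who want to relate the group summaries in Table 2 to the reported t and d values, the sketch below computes an independent-samples t statistic and Cohen's d from means, standard deviations, and group sizes. It assumes a pooled-variance Student's t-test and a pooled-SD Cohen's d; the exact formulas used for the table are not stated, and the inputs are rounded summary values, so the outputs should be close to, but not necessarily identical to, the tabulated figures. Function names are hypothetical.

```python
import math

def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))

def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference using the pooled SD (assumed definition)."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)

def student_t(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance independent-samples t statistic (assumed test)."""
    standard_error = pooled_sd(sd1, n1, sd2, n2) * math.sqrt(1 / n1 + 1 / n2)
    return (m1 - m2) / standard_error

# Visual-only whole-word accuracy: younger 14.1 ± 8.7 (n = 18), older 9.4 ± 7.4 (n = 16).
d = cohens_d(14.1, 8.7, 18, 9.4, 7.4, 16)
t = student_t(14.1, 8.7, 18, 9.4, 7.4, 16)
```

For this row the sketch gives d of roughly 0.58 and t of roughly 1.69, against tabulated values of .59 and 1.67.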

Unisensory Performance

Visual-only whole-word and viseme recognition

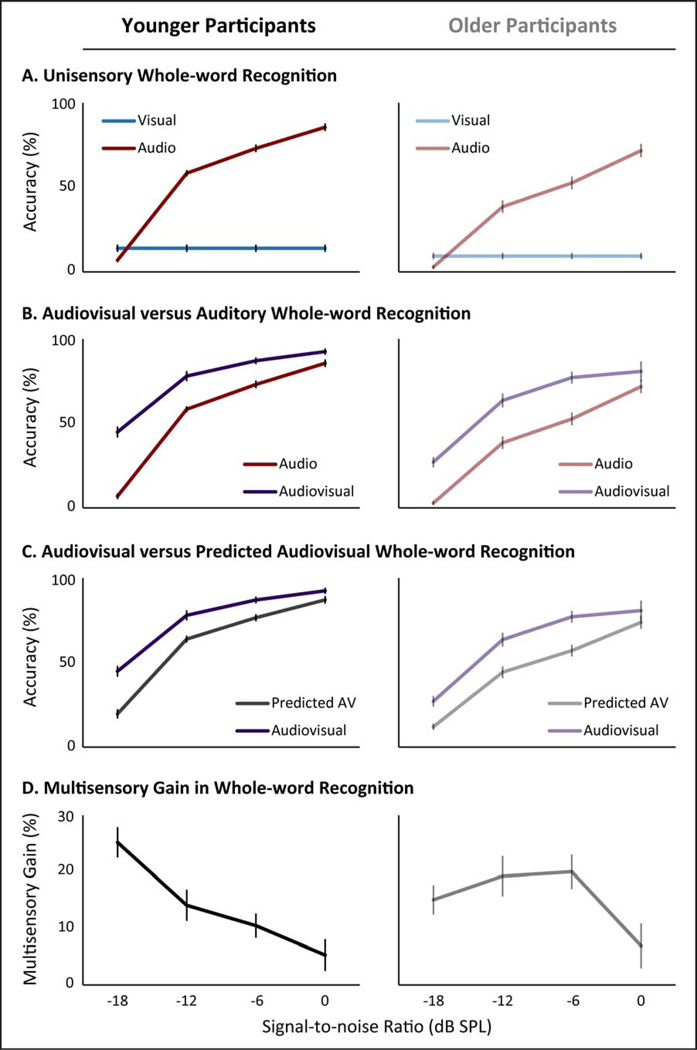

A between-subject t-test was used to compare younger and older groups’ visual-only speech perception accuracies at both the whole-word and viseme recognition levels. In both cases, younger individuals showed higher rates of visual-only accuracy. These differences approached significance at the whole-word recognition level (Figure 1A) and at the viseme recognition level (Figure 2A). For statistical results, see Table 2.

Figure 1. Word Recognition.

For all panels, younger adults are depicted on the left and older adults on the right. Panel A depicts word-recognition accuracies with auditory-only across signal-to-noise ratios (SNR) and visual-only presentations. Panel B compares similar accuracies with audiovisual presentations to the respective auditory-only conditions. Panel C compares the accuracies to audiovisual presentations with the predicted accuracy level based on the unisensory responses with the assumption that there are no interactions between auditory and visual processing. Finally, Panel D shows the level of behavioral multisensory gain when stimuli are presented concurrently through two stimulus modalities instead of the level predicted by independent presentations of the same stimuli calculated as AV – p(AV). Error bars depict standard error.

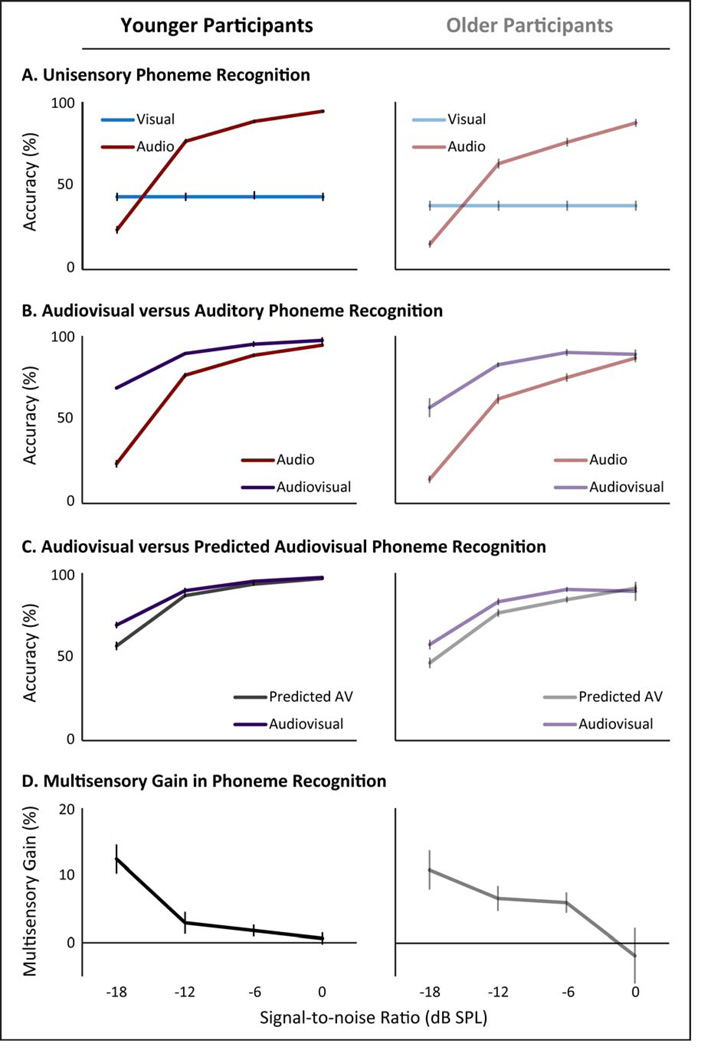

Figure 2. Phoneme Recognition.

For all panels, younger adults are depicted on the left and older adults on the right. Panel A depicts phoneme-recognition accuracies with auditory-only and visual-only presentations across signal-to-noise ratios (SNR). Panel B compares similar accuracies with audiovisual presentations to the respective auditory-only conditions. Panel C compares the accuracies to audiovisual presentations with the predicted accuracy level based on the unisensory responses with the assumption that there are no interactions between auditory and visual processing. Finally, Panel D shows the level of behavioral multisensory gain when stimuli are presented concurrently through two stimulus modalities instead of the level predicted by independent presentations of the same stimuli calculated as AV – p(AV). Error bars depict standard error.

Auditory-only whole-word recognition

To analyze accuracies with auditory-only presentations, a two-way, mixed-model, repeated-measures ANOVA was conducted at both the whole-word and phoneme recognition levels with age group as a between-subjects factor and SNR as a within-subjects factor. For whole-word recognition (Figure 1B), a main effect of age group was seen, with younger adults showing higher accuracy than older adults (p < 0.001, F(1,33) = 27.86, partial-η2 = 0.47). A main effect of SNR was also observed, with higher SNRs resulting in higher accuracy rates (p < 0.001, F(3,30) = 552.12, partial-η2 = 0.98). Finally, a significant interaction between age group and SNR levels was observed, with older adults showing decreases in accuracy at higher SNR levels as compared to younger adults (p = 0.001, F(3,30) = 7.80, partial-η2 = 0.44). For statistical results, see Table 2.

Auditory-only phoneme recognition

For phoneme recognition with auditory-only presentations (Figure 2B), a main effect of age group was also seen, with younger adults showing higher accuracy than older adults (p < 0.001, F(1,33) = 24.83, partial-η2 = 0.43). A main effect of SNR was also seen for phoneme recognition, with higher SNRs leading to higher accuracy rates (p < 0.001, F(3,30) = 558.70, partial-η2 = 0.98). Finally, no interaction between age group and SNR levels was observed (p = 0.16, F(3,31) = 1.87, partial-η2 = 0.15). For statistical results, see Table 2.

Multisensory Performance

Multisensory whole-word recognition

For the analysis of the effects of combined congruent audiovisual presentations, comparisons were made between the observed audiovisual response (AV) and the predicted audiovisual (pAV) response based on a given individual’s unisensory accuracies. A three-way, repeated-measures ANOVA was conducted on whole-word recognition scores with age group as a between-subject factor, and SNR level and observed vs. predicted multisensory accuracy (AV and pAV) as within-subjects factors. A significant main effect of age group was observed with the younger adults showing higher whole-word recognition accuracy (Figure 1C, p < 0.001, F(1) = 21.50, partial-η2 = 0.40). A significant main effect of SNR was also observed, with higher SNRs associated with more accurate whole-word recognition responses (Figure 1C, p < 0.001, F(3) = 392.55, partial-η2 = 0.98).

Additionally, a main effect of observed accuracy vs. predicted accuracy for these multisensory conditions was observed, with observed audiovisual responses being more accurate than predicted audiovisual whole-word recognition accuracy levels (Figure 1C, p < 0.001, F(1) = 171.18, partial-η2 = 0.84). No two-way interactions were observed between age group and observed vs. predicted multisensory accuracy (p = 0.49, F(1,33) = 0.49, partial-η2 = 0.02) or between age group and SNR level (p = 0.58, F(3,30) = 0.66, partial-η2 = 0.06). However, a significant interaction was observed between observed vs. predicted multisensory accuracy and SNR, where a larger difference was seen between observed audiovisual response accuracy (AV) and predicted (pAV) response accuracy at lower SNRs (Figure 1D, p < 0.001, F(3,30) = 7.91, partial-η2 = 0.44). This finding is concordant with the principle of inverse effectiveness, in which greater multisensory gain is seen with decreasing SNR. Finally, a significant three-way interaction was observed, such that the increasing difference between observed audiovisual response accuracy and predicted accuracy (multisensory gain) was more prominent in the younger group relative to the older group (Figure 1D, p = 0.001, F(3,30) = 6.90, partial-η2 = 0.41).

Multisensory phoneme recognition

A three-way, repeated-measures ANOVA was also conducted for phoneme recognition with age group as a between-subject factor, and SNR level and observed vs. predicted multisensory accuracy (AV and pAV) as within-subjects factors. A significant main effect of age group was observed with the younger adults showing higher phoneme recognition accuracy (Figure 2B, p = 0.001, F(1) = 12.56, partial-η2 = 0.28). A significant main effect of SNR was also observed, with higher SNRs associated with more accurate phoneme-recognition responses (p < 0.001, F(3) = 315.02, partial-η2 = 0.97). For statistical results, see Table 2.

Additionally, a main effect of observed vs. predicted multisensory accuracy was observed, with observed audiovisual responses being more accurate than predicted audiovisual phoneme-recognition accuracy responses (Figure 2C, p < 0.001, F(1) = 62.55, partial-η2 = 0.66). A significant interaction was observed between observed vs. predicted multisensory accuracy and SNR, where a larger difference was seen between audiovisual (AV) and predicted audiovisual (pAV) phoneme-recognition accuracies at lower SNRs, again in support of inverse effectiveness (Figure 2C, p = 0.002, F(3,31) = 6.50, partial-η2 = 0.39). No two-way interactions were observed between age group and observed vs. predicted multisensory performance (p = 0.44, F(1,33) = 0.49, partial-η2 = 0.02) or between age group and SNR level (p = 0.43, F(3,31) = 0.95, partial-η2 = 0.09). In contrast to whole-word recognition, no significant two-way interaction was observed between multisensory gain (the difference between AV and pAV phoneme-recognition accuracies at each SNR level) and age group (Figure 2D, p = 0.22, F(3,31) = 1.58, partial-η2 = 0.13).

Age differences in multisensory gain for whole-word and phoneme recognition

As reported above, measurements of multisensory gain revealed a significant two-way interaction between age group and SNR for word recognition but not for phoneme recognition. To directly compare age-related multisensory gains in word versus phoneme recognition, we conducted a 2 (age group: younger vs older) × 4 (SNR: 0, −6, −12, −18 dB SPL) × 2 (measure: whole-word vs phoneme) ANOVA on the % multisensory gain data shown in Figures 1D and 2D. Of primary importance, we observed a significant three-way interaction (p = 0.002, F(3,96) = 1.58, partial-η2 = 0.14), indicating that older and younger adults showed significantly different patterns of inverse effectiveness across whole-word and phoneme accuracies. Follow-up t-tests were conducted to examine the driving factor in this interaction (for detailed statistics, see Table 2). In brief, these tests revealed that older adults showed significantly more gain at the −6 dB SPL SNR for both whole-word and phoneme recognition. By contrast, at the −18 dB SPL SNR older adults showed significantly reduced gain for whole-word, but not for phoneme, recognition. Thus, only at the level of whole-word recognition did older adults show decreased multisensory gain. Secondarily, the expected within-subject main effect of SNR was found (p < 0.001, F(1,96) = 20.02, partial-η2 = 0.39), with greater gain seen at lower SNRs. Similarly, a main effect of measure was seen (p < 0.001, F(1,96) = 152.51, partial-η2 = 0.83), with greater gain seen when calculating whole-word recognition. When collapsed across whole-word and phoneme recognition, no significant between-subjects effect of age was observed (p = 0.63, F(1,96) = 0.43, partial-η2 = 0.02). No significant two-way interactions were found between SNR and measure (p = 0.417, F(1,32) = 0.68, partial-η2 = 0.02), between SNR and age (p = 0.292, F(1,32) = 1.15, partial-η2 = 0.02), or between age and measure (p = 0.745, F(1,32) = 0.11, partial-η2 < 0.01).

Discussion

One of the most common complaints of older adults is difficulty in understanding speech in noisy environments. In such environments, seeing a speaker’s mouth can have dramatic behavioral benefits (Sumby and Pollack, 1954). Despite well-documented increases in multisensory integration associated with the pairing of simple, non-speech stimuli in older adults (Freiherr, et al., 2013, Laurienti, et al., 2006), there have also been reports of decreases in behavioral gain associated with audiovisual speech integration in an aging cohort (Tye-Murray, et al., 2010). The current results provide novel evidence to resolve this important conflict. Here, we show that older adults do in fact benefit from audiovisual speech integration in a similar manner to younger adults when tasked with phoneme recognition. However, at the level of whole-word recognition, older and younger adults showed different patterns of multisensory gain. Whereas younger adults’ performance was consistent with the concept of inverse effectiveness (i.e., increasing gains in multisensory recognition as SNR decreased), older adults did not show more multisensory gain as SNR decreased beyond −6 dB SPL.

While changes in perception and cognition are frequently reported in normal aging, sensory processing also declines in healthy aging. These changes include a reduction in visual acuity and auditory sensitivity due to changes in transduction processes in the retina and cochlea (Baltes and Lindenberger, 1997, Lindenberger and Baltes, 1997, Lindenberger and Ghisletta, 2009), as well as age-related changes along the sensory processing hierarchies (Cliff, et al., 2013, Hugenschmidt, et al., 2009, Nagamatsu, et al., 2011). In the current study we saw such effects very clearly, with a significant main effect of age group for both the auditory-only and visual-only conditions in which younger adults performed better than older adults. Our interests extended beyond these changes in unisensory function to include how multisensory audiovisual abilities change with advancing age.

One hypothesis for the current study was that older individuals would benefit more from, or rely more on, multisensory integration in order to compensate for declines in unisensory acuity. This hypothesis is founded in the concept of inverse effectiveness, in which greater multisensory gains are seen as the information from the individual senses becomes weaker or more ambiguous (Laurienti, et al., 2006, Peiffer, et al., 2007, Stevenson, et al., 2014a, Stevenson, et al., 2014c). For example, despite being poorer lip readers (Sommers, et al., 2005b), older individuals attend to the speaker’s mouth to a higher degree, presumably as a strategy to increase the available visual information. An alternative hypothesis contends that upon reaching a threshold auditory SNR, there is a point at which individuals can no longer extract meaningful information from a given (or set of given) sensory input(s), and subsequently fail to exhibit any behavioral benefit from a second sensory input. For example, Gordon and Allen (2009) presented unisensory and multisensory sentences to both older and younger adults and varied the saliency of the visual speech signal. Older adults showed reduced performance under all conditions relative to younger individuals, but with non-degraded visual information, their multisensory gain remained similar. However, upon degrading the visual input, older adults reached a critical threshold in which they failed to benefit from the visual signal (i.e., showed no multisensory gain), while the younger group continued to show improvements over the unisensory presentations. While our current study did not actively manipulate the visual signal, the marginally worse performance of the older adults relative to the younger adults may have pushed these individuals closer to such a threshold.

The whole-word recognition data reported here do not unequivocally rule out one hypothesis over another. When looking at intermediate SNR levels (e.g., −6 dB), older adults show increased multisensory performance compared to younger adults, as measured by the difference between observed performance and predicted levels of accuracy based on pooled unisensory performance. This is consistent with prior work that has found the −6 dB SNR level to be a “sweet spot” for multisensory-mediated gains in speech intelligibility (Ma, et al., 2009, Ross, et al., 2011, Ross, et al., 2007a, Ross, et al., 2007b). The pattern of differences in gain between older and younger adults supports the idea that older adults differentially benefit from multisensory integration relative to younger adults, but only within a limited range of SNRs. For other SNRs, such as −18 dB, older adults showed substantially less gain when compared with younger adults. In contrast to the “inverted U” function seen for older adults with whole-word recognition, changes in multisensory gain as a function of SNR in younger adults for whole-word stimuli showed a declining monotonic function much more in keeping with inverse effectiveness. These data, taken in isolation, support Gordon and Allen’s hypothesis (2009) that when older adults reach a critical threshold, the benefit that can be gained from perceiving speech through multiple sensory modalities is reduced.

Although the whole-word recognition data suggest that there is an age-related decrease in multisensory gain at lower SNRs, phoneme recognition shows a different pattern. In contrast to whole-word recognition, there was no effect of age on multisensory gain during phoneme recognition. In fact, despite significantly poorer absolute levels of performance across SNRs and sensory modalities relative to younger adults, older adults showed strikingly similar levels of multisensory gain, even down to the lowest SNR levels. This finding suggests that older adults are integrating the basic auditory (i.e., phonemes) and visual (i.e., visemes) building blocks of speech information similarly to younger individuals, yet are failing to reach a critical information threshold at which they can correctly identify the whole word. One possible cause of these seemingly conflicting results is rooted in the behavioral measures used. During whole-word recognition, the listeners may still be integrating the phonemic and visemic information, yet fall short of recognizing the entire word. For example, a listener may be presented with the word “cat” in a unisensory (i.e., auditory alone, visual alone) context, and not perceive any portion of the word correctly. However, when presented with the word in an audiovisual context, the same listener may report perceiving “cab.” While “cab” is incorrect on the word level, such a response still represents an increase in the information perceived, since the participant was able to correctly perceive the initial two phonemes/visemes. Thus, scoring whole-word recognition may miss important elements of the perceptual improvement, particularly at low SNRs in which it is more difficult to correctly identify entire words.

These results underscore the importance of how a given response is measured when characterizing the presence and magnitude of multisensory integration (Mégevand, et al., 2013, Stevenson and Wallace, 2013). Analogous results have been seen in the neurophysiological realm, in which early studies of multisensory integration in individual neurons were based on spiking (i.e., action potential) responses (Meredith and Stein, 1983, Meredith and Stein, 1985). In the absence of clear changes in spiking under multisensory conditions, a neuron was said not to integrate its different inputs. However, more recent work, which has focused not only on spiking but also on changes in the local field potential (LFP; a measure of summed synaptic activity), has illustrated the presence of multisensory interactions in the LFP response in the absence of clear changes in neuronal spiking (Ghazanfar and Maier, 2009, Ghazanfar, et al., 2005, Ghose and Wallace, 2014, Kayser, et al., 2009, Sarko, et al., 2013).

The dissociation between whole-word and phoneme recognition performance in the current study illustrates that, at least for older adults, multisensory-mediated gains in intelligibility at the phonemic level do not necessarily translate to comparable gains at the whole-word level (as they appear to in the younger cohort). This finding suggests that additional processes, most notably those involved in the transformation of phonemic representations into word-based representations, may be preferentially impacted in the aging process. Weaknesses in this transformation process may reflect more generalized cognitive declines that impact domains such as memory, executive function and attention (for review, see Freiherr, et al., 2013), each of which likely contributes to the assembly of larger functional speech units (e.g., words, sentences, etc.). Conversely, changes in these cognitive processes may be, at least in part, due to less efficient processing within sensory and multisensory representations, given that these representations serve as the foundation for perceptual and cognitive representations.

The concept of lower-level sensory processing changes in healthy aging cascading into higher-level perceptual and cognitive difficulties is not unique to speech perception. Indeed, other areas of common complaint in older adults, such as memory, can be at least in part the result of changes in sensory processing (Baltes and Lindenberger, 1997, Burke, et al., 2012, Lindenberger and Ghisletta, 2009, Lovden, et al., 2005). For example, in a finding from the visual domain analogous to that reported here, older adults performed equivalently to younger adults on visual perceptual discriminations requiring processing of only a single feature (such as color). However, when the visual discrimination task required binding, or integrating, multiple features to create a cohesive representation of the whole object, older adults were impaired (Ryan, et al., 2012). Indeed, it has been argued that deficits in apparently distinct memory and perceptual functions may in fact arise from common representations and computational mechanisms (Barense, et al., 2012). To inform these questions, future work should focus on establishing the nature of the relationships between (multi)sensory and cognitive representations, as well as describing how these relationships change with both normal and pathological aging.

Despite the different patterns of multisensory gain across SNRs at the whole-word and phoneme levels, it can be argued that whole-word recognition is a more relevant and meaningful measure of speech perception. In a typical speech comprehension setting, successful recognition at the word level will produce a significant behavioral benefit, whereas increased information processing at the phonemic level may be, in isolation, less behaviorally consequential. These results suggest that inverse effectiveness appears to govern the integration of the basic building blocks of language (i.e., phonemes and visemes). From a more practical perspective, however, there appears to be a “sweet spot” at intermediate SNRs at which behaviorally relevant levels of multisensory gain are greatest, at least in aging populations.

While this study examined age-related changes in multisensory perception of speech across SNR levels, the effectiveness of a speech signal is only one of a number of factors influencing sensory integration. In addition to effectiveness, the temporal and spatial relationships between sensory signals are also very important. The more spatially congruent and temporally aligned two inputs are, the more likely they are to be integrated (Bertelson and Radeau, 1981, Conrey and Pisoni, 2006, Dixon and Spitz, 1980, Massaro, et al., 1996, van Atteveldt, et al., 2007, van Wassenhove, et al., 2007). Thus, changes in spatial and/or temporal processing seen in healthy aging populations may also impact audiovisual speech perception (Hay-McCutcheon, et al., 2009). This is perhaps most germane with respect to temporal processing, as higher-acuity multisensory temporal processing has been linked to increases in integration of audiovisual speech in healthy individuals (R.A. Stevenson, et al., 2012). Furthermore, clinical groups that show impaired multisensory temporal processing also show decreased integration of audiovisual speech (Stevenson, et al., 2014b). This, coupled with strong evidence that declines in auditory temporal processing are related to auditory speech perception in older adults (Gordon-Salant and Fitzgibbons, 1993, Pichora-Fuller, 2003, Schneider and Pichora-Fuller, 2001), suggests that possible age-related declines in multisensory temporal processing may likewise be related to age-related declines in audiovisual speech perception abilities.

Conclusion

Here we report results from an audiovisual speech recognition task using varying levels of noise with younger and older adult participants. We found that for whole-word recognition, older adults showed greater multisensory gains at intermediate SNRs compared to younger adults. On the other hand, at the phoneme level a different pattern emerged whereby both younger and older adults showed similar increases in multisensory gain as SNR decreased, consistent with the concept of inverse effectiveness. Collectively, the results provide important insights into both the similarities and differences in how older and younger adults integrate auditory and visual speech cues in noisy environments, and help explain some of the conflicting findings from previous studies of multisensory speech perception in healthy aging.

Research Highlights

Perceiving speech in noisy environments is a primary complaint with healthy aging

Both older and younger adults benefit from seeing the visual articulation of speech

Older and younger adults show different patterns of multisensory benefit across levels of noise

With aging, audiovisual processing is intact at more elementary levels of speech perception

Age-related deficits begin to emerge only at the more complex level of speech perception

Acknowledgements

Funding for this work was provided by a Banting Postdoctoral Fellowship administered by the Government of Canada, a University of Toronto Department of Psychology Postdoctoral Fellowship Grant, the Autism Research Training Program, National Institutes of Health F32 DC011993, National Institutes of Health R34 DC010927, National Institutes of Health R21 CA1834892, a Simons Foundation research grant, a Vanderbilt Institute for Clinical and Translational Research grant VR7263, a Vanderbilt Kennedy Center MARI/Hobbs Award, the Vanderbilt Brain Institute, and the Vanderbilt University Kennedy Center.

References

- Baltes PB, Lindenberger U. Emergence of a powerful connection between sensory and cognitive functions across the adult life span: a new window to the study of cognitive aging? Psychology and aging. 1997;12(1):12–21. doi: 10.1037//0882-7974.12.1.12.

- Barense MD, Groen II, Lee AC, Yeung L-K, Brady SM, Gregori M, Kapur N, Bussey TJ, Saksida LM, Henson RN. Intact memory for irrelevant information impairs perception in amnesia. Neuron. 2012;75(1):157–167. doi: 10.1016/j.neuron.2012.05.014.

- Bertelson P, Radeau M. Cross-modal bias and perceptual fusion with auditory-visual spatial discordance. Perception & psychophysics. 1981;29(6):578–584. doi: 10.3758/bf03207374.

- Bishop CW, Miller LM. A multisensory cortical network for understanding speech in noise. Journal of cognitive neuroscience. 2009;21(9):1790–1805. doi: 10.1162/jocn.2009.21118.

- Burke SN, Ryan L, Barnes CA. Characterizing cognitive aging of recognition memory and related processes in animal models and in humans. Frontiers in aging neuroscience. 2012:4. doi: 10.3389/fnagi.2012.00015.

- Cliff M, Joyce DW, Lamar M, Dannhauser T, Tracy DK, Shergill SS. Aging effects on functional auditory and visual processing using fMRI with variable sensory loading. Cortex; a journal devoted to the study of the nervous system and behavior. 2013;49(5):1304–1313. doi: 10.1016/j.cortex.2012.04.003.

- Conrey B, Pisoni DB. Auditory-visual speech perception and synchrony detection for speech and nonspeech signals. The Journal of the Acoustical Society of America. 2006;119(6):4065–4073. doi: 10.1121/1.2195091.

- Dixon NF, Spitz L. The detection of auditory visual desynchrony. Perception. 1980;9(6):719–721. doi: 10.1068/p090719.

- Dubno JR, Dirks DD, Morgan DE. Effects of age and mild hearing loss on speech recognition in noise. J Acoust Soc Am. 1984;76(1):87–96. doi: 10.1121/1.391011.

- Fraser S, Gagne JP, Alepins M, Dubois P. Evaluating the effort expended to understand speech in noise using a dual-task paradigm: the effects of providing visual speech cues. Journal of speech, language, and hearing research : JSLHR. 2010;53(1):18–33. doi: 10.1044/1092-4388(2009/08-0140).

- Freiherr J, Lundström JN, Habel U, Reetz K. Multisensory integration mechanisms during aging. Front Hum Neurosci. 2013;7. doi: 10.3389/fnhum.2013.00863.

- Ghazanfar AA, Maier JX. Rhesus monkeys (Macaca mulatta) hear rising frequency sounds as looming. Behavioral neuroscience. 2009;123(4):822–827. doi: 10.1037/a0016391.

- Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J Neurosci. 2005;25(20):5004–5012. doi: 10.1523/JNEUROSCI.0799-05.2005.

- Ghose D, Wallace M. Heterogeneity in the spatial receptive field architecture of multisensory neurons of the superior colliculus and its effects on multisensory integration. Neuroscience. 2014;256:147–162. doi: 10.1016/j.neuroscience.2013.10.044.

- Gordon-Salant S, Fitzgibbons PJ. Temporal factors and speech recognition performance in young and elderly listeners. Journal of Speech, Language, and Hearing Research. 1993;36(6):1276–1285. doi: 10.1044/jshr.3606.1276.

- Gordon MS, Allen S. Audiovisual speech in older and younger adults: integrating a distorted visual signal with speech in noise. Experimental aging research. 2009;35(2):202–219. doi: 10.1080/03610730902720398.

- Gosselin PA, Gagne JP. Older adults expend more listening effort than young adults recognizing audiovisual speech in noise. Int J Audiol. 2011;50(11):786–792. doi: 10.3109/14992027.2011.599870.

- Hay-McCutcheon MJ, Pisoni DB, Hunt KK. Audiovisual asynchrony detection and speech perception in hearing-impaired listeners with cochlear implants: a preliminary analysis. Int J Audiol. 2009;48(6):321–333. doi: 10.1080/14992020802644871.

- Hugenschmidt CE, Mozolic JL, Tan H, Kraft RA, Laurienti PJ. Age-related increase in cross-sensory noise in resting and steady-state cerebral perfusion. Brain Topography. 2009;21(3–4):241–251. doi: 10.1007/s10548-009-0098-1.

- Humes LE. Speech understanding in the elderly. J Am Acad Audiol. 1996;7(3):161–167.

- James TW, Stevenson RA, Kim S. Inverse effectiveness in multisensory processing. In: Stein BE, editor. The New Handbook of Multisensory Processes. Cambridge, MA: MIT Press; 2012.

- Kayser C, Petkov CI, Logothetis NK. Multisensory interactions in primate auditory cortex: fMRI and electrophysiology. Hearing research. 2009;258(1–2):80–88. doi: 10.1016/j.heares.2009.02.011.

- Laurienti PJ, Burdette JH, Maldjian JA, Wallace MT. Enhanced multisensory integration in older adults. Neurobiology of Aging. 2006;27(8):1155–1163. doi: 10.1016/j.neurobiolaging.2005.05.024.

- Lindenberger U, Baltes PB. Intellectual functioning in old and very old age: cross-sectional results from the Berlin Aging Study. Psychology and aging. 1997;12(3):410–432. doi: 10.1037//0882-7974.12.3.410.

- Lindenberger U, Ghisletta P. Cognitive and sensory declines in old age: gauging the evidence for a common cause. Psychology and aging. 2009;24(1):1–16. doi: 10.1037/a0014986.

- Liu XZ, Yan D. Ageing and hearing loss. The Journal of pathology. 2007;211(2):188–197. doi: 10.1002/path.2102.

- Lovden M, Ghisletta P, Lindenberger U. Social participation attenuates decline in perceptual speed in old and very old age. Psychology and aging. 2005;20(3):423–434. doi: 10.1037/0882-7974.20.3.423.

- Ma WJ, Zhou X, Ross LA, Foxe JJ, Parra LC. Lip-reading aids word recognition most in moderate noise: a Bayesian explanation using high-dimensional feature space. PLoS One. 2009;4(3):e4638. doi: 10.1371/journal.pone.0004638.

- Martin JS, Jerger JF. Some effects of aging on central auditory processing. J Rehabil Res Dev. 2005;42(4) Suppl 2:25–44. doi: 10.1682/jrrd.2004.12.0164.

- Massaro DW, Cohen MM, Smeele PM. Perception of asynchronous and conflicting visual and auditory speech. The Journal of the Acoustical Society of America. 1996;100(3):1777–1786. doi: 10.1121/1.417342.

- Mégevand P, Molholm S, Nayak A, Foxe JJ. Recalibration of the multisensory temporal window of integration results from changing task demands. PLoS One. 2013;8(8):e71608. doi: 10.1371/journal.pone.0071608.

- Meredith MA, Stein BE. Interactions among converging sensory inputs in the superior colliculus. Science (New York, NY). 1983;221(4608):389–391. doi: 10.1126/science.6867718.

- Meredith MA, Stein BE. Descending efferents from the superior colliculus relay integrated multisensory information. Science (New York, NY). 1985;227(4687):657–659. doi: 10.1126/science.3969558.

- Meredith MA, Stein BE. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. Journal of neurophysiology. 1986;56(3):640–662. doi: 10.1152/jn.1986.56.3.640.

- Nagamatsu LS, Carolan P, Liu-Ambrose TY, Handy TC. Age-related changes in the attentional control of visual cortex: A selective problem in the left visual hemifield. Neuropsychologia. 2011;49(7):1670–1678. doi: 10.1016/j.neuropsychologia.2011.02.040.

- Nath AR, Beauchamp MS. Dynamic changes in superior temporal sulcus connectivity during perception of noisy audiovisual speech. J Neurosci. 2011;31(5):1704–1714. doi: 10.1523/JNEUROSCI.4853-10.2011.

- Park DC, Lautenschlager G, Hedden T, Davidson NS, Smith AD, Smith PK. Models of visuospatial and verbal memory across the adult life span. Psychol Aging. 2002;17(2):299–320.

- Peiffer AM, Mozolic JL, Hugenschmidt CE, Laurienti PJ. Age-related multisensory enhancement in a simple audiovisual detection task. Neuroreport. 2007;18(10):1077–1081. doi: 10.1097/WNR.0b013e3281e72ae7.

- Pichora-Fuller MK. Processing speed and timing in aging adults: psychoacoustics, speech perception, and comprehension. International Journal of Audiology. 2003;42:S59–S67. doi: 10.3109/14992020309074625.

- Raab DH. Statistical facilitation of simple reaction times. Transactions of the New York Academy of Sciences. 1962;24:574–590. doi: 10.1111/j.2164-0947.1962.tb01433.x.

- Ross LA, Molholm S, Blanco D, Gomez-Ramirez M, Saint-Amour D, Foxe JJ. The development of multisensory speech perception continues into the late childhood years. The European journal of neuroscience. 2011;33(12):2329–2337. doi: 10.1111/j.1460-9568.2011.07685.x.

- Ross LA, Saint-Amour D, Leavitt VM, Javitt DC, Foxe JJ. Do you see what I am saying? Exploring visual enhancement of speech comprehension in noisy environments. Cereb Cortex. 2007a;17(5):1147–1153. doi: 10.1093/cercor/bhl024.

- Ross LA, Saint-Amour D, Leavitt VM, Molholm S, Javitt DC, Foxe JJ. Impaired multisensory processing in schizophrenia: deficits in the visual enhancement of speech comprehension under noisy environmental conditions. Schizophrenia research. 2007b;97(1–3):173–183. doi: 10.1016/j.schres.2007.08.008.

- Ryan L, Cardoza J, Barense M, Kawa K, Wallentin-Flores J, Arnold W, Alexander G. Age-related impairment in a complex object discrimination task that engages perirhinal cortex. Hippocampus. 2012;22(10):1978–1989. doi: 10.1002/hipo.22069.

- Sarko DK, Ghose D, Wallace MT. Convergent approaches toward the study of multisensory perception. Frontiers in systems neuroscience. 2013:7. doi: 10.3389/fnsys.2013.00081.

- Schneider BA, Pichora-Fuller MK. Age-related changes in temporal processing: implications for speech perception. Seminars in hearing. 2001:227–240.

- Sommers MS, Tye-Murray N, Spehar B. Auditory-visual speech perception and auditory-visual enhancement in normal-hearing younger and older adults. Ear Hear. 2005a;26(3):263–275. doi: 10.1097/00003446-200506000-00003.

- Sommers MS, Tye-Murray N, Spehar B. Auditory-visual speech perception and auditory-visual enhancement in normal-hearing younger and older adults. Ear and hearing. 2005b;26(3):263–275. doi: 10.1097/00003446-200506000-00003.

- Stevenson R, Bushmakin M, Kim S, Wallace M, Puce A, James T. Inverse Effectiveness and Multisensory Interactions in Visual Event-Related Potentials with Audiovisual Speech. Brain Topography. 2012:1–19. doi: 10.1007/s10548-012-0220-7.

- Stevenson RA, Geoghegan ML, James TW. Superadditive BOLD activation in superior temporal sulcus with threshold non-speech objects. Experimental Brain Research. 2007;179(1):85–95. doi: 10.1007/s00221-006-0770-6.

- Stevenson RA, Ghose D, Fister JK, Sarko DK, Altieri NA, Nidiffer AR, Kurela LR, Siemann JK, James TW, Wallace MT. Identifying and Quantifying Multisensory Integration: A Tutorial Review. Brain Topogr. 2014a. doi: 10.1007/s10548-014-0365-7.

- Stevenson RA, James TW. Audiovisual integration in human superior temporal sulcus: Inverse effectiveness and the neural processing of speech and object recognition. NeuroImage. 2009;44(3):1210–1223. doi: 10.1016/j.neuroimage.2008.09.034.

- Stevenson RA, Kim S, James TW. An additive-factors design to disambiguate neuronal and areal convergence: measuring multisensory interactions between audio, visual, and haptic sensory streams using fMRI. Experimental Brain Research. 2009;198(2–3):183–194. doi: 10.1007/s00221-009-1783-8.

- Stevenson RA, Siemann JK, Schneider BC, Eberly HE, Woynaroski TG, Camarata SM, Wallace MT. Multisensory temporal integration in autism spectrum disorders. J Neurosci. 2014b;34(3):691–697. doi: 10.1523/JNEUROSCI.3615-13.2014.

- Stevenson RA, Wallace MT. Multisensory temporal integration: task and stimulus dependencies. Experimental Brain Research. 2013;227(2):249–261. doi: 10.1007/s00221-013-3507-3.

- Stevenson RA, Wallace MT, Altieri N. The interaction between stimulus factors and cognitive factors during multisensory integration of audiovisual speech. Front Psychol. 2014c;5:352. doi: 10.3389/fpsyg.2014.00352.

- Stevenson RA, Zemtsov RK, Wallace MT. Individual differences in the multisensory temporal binding window predict susceptibility to audiovisual illusions. J Exp Psychol Hum Percept Perform. 2012;38(6):1517–1529. doi: 10.1037/a0027339.

- Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26:212–215.

- Tye-Murray N, Sommers M, Spehar B, Myerson J, Hale S. Aging, audiovisual integration, and the principle of inverse effectiveness. Ear and hearing. 2010;31(5):636–644. doi: 10.1097/AUD.0b013e3181ddf7ff.

- van Atteveldt NM, Formisano E, Blomert L, Goebel R. The effect of temporal asynchrony on the multisensory integration of letters and speech sounds. Cereb Cortex. 2007;17(4):962–974. doi: 10.1093/cercor/bhl007.

- van Wassenhove V, Grant KW, Poeppel D. Temporal window of integration in auditory-visual speech perception. Neuropsychologia. 2007;45(3):598–607. doi: 10.1016/j.neuropsychologia.2006.01.001.

- Wallace MT, Wilkinson LK, Stein BE. Representation and integration of multiple sensory inputs in primate superior colliculus. Journal of neurophysiology. 1996;76(2):1246–1266. doi: 10.1152/jn.1996.76.2.1246.

- Werner S, Noppeney U. Superadditive Responses in Superior Temporal Sulcus Predict Audiovisual Benefits in Object Categorization. Cereb Cortex. 2009. doi: 10.1093/cercor/bhp248.