Abstract

Background

Preference for speech and music processed with nonlinear frequency compression and two controls (restricted and extended bandwidth hearing-aid processing) was examined in adults and children with hearing loss.

Purpose

Determine if stimulus type (music, sentences), age (children, adults) and degree of hearing loss influence listener preference for nonlinear frequency compression, restricted bandwidth and extended bandwidth.

Research Design

Within-subject, quasi-experimental study. Using a round-robin procedure, participants listened to amplified stimuli that were 1) frequency-lowered using nonlinear frequency compression, 2) low-pass filtered at 5 kHz to simulate the restricted bandwidth of conventional hearing aid processing, or 3) low-pass filtered at 11 kHz to simulate extended bandwidth amplification. The examiner and participants were blinded to the type of processing. Using a two-alternative forced-choice task, participants selected the preferred music or sentence passage.

Study Sample

Sixteen children (8–16 years) and 16 adults (19–65 years) with mild-to-severe sensorineural hearing loss.

Intervention

All subjects listened to speech and music processed using a hearing-aid simulator fit to the Desired Sensation Level algorithm v.5.0a (Scollie et al, 2005).

Results

Children and adults did not differ in their preferences. For speech, participants preferred extended bandwidth to both nonlinear frequency compression and restricted bandwidth. Participants also preferred nonlinear frequency compression to restricted bandwidth. Preference was not related to degree of hearing loss. For music, listeners did not show a preference. However, participants with greater hearing loss preferred nonlinear frequency compression to restricted bandwidth more than participants with less hearing loss. Conversely, participants with greater hearing loss were less likely to prefer extended bandwidth to restricted bandwidth.

Conclusion

Both age groups preferred access to high frequency sounds, as demonstrated by their preference for either the extended bandwidth or nonlinear frequency compression conditions over the restricted bandwidth condition. Preference for extended bandwidth can be limited for those with greater degrees of hearing loss, but participants with greater hearing loss may be more likely to prefer nonlinear frequency compression. Further investigation using participants with more severe hearing loss may be warranted.

Keywords: Hearing aids and assistive listening devices, Auditory rehabilitation, Pediatric Audiology

Sound quality is based on a judgment of the accuracy, appreciation, or intelligibility of audio output from an electronic device, such as a hearing aid. The sound quality of hearing aids has been identified as an important factor in hearing-aid users’ satisfaction with amplification (Humes, 1999; Kochkin, 2005). Findings suggest that sound quality may be related to speech recognition, but involves separate processes. While some listeners rate conditions with the highest speech intelligibility as also having the best sound quality (van Buuren et al, 1999), this does not seem to be the norm (Harford & Fox, 1978; Plyler et al, 2005; Rosengard et al, 2005).

Since the satisfaction of hearing-aid users is related, at least in part, to sound quality, there is an interest in the effect of different hearing-aid parameters on sound quality. In this paper, the maximum audible frequency (bandwidth) with amplification is defined as the highest frequency at which the listener can hear conversational speech with amplification. The maximum audible frequency is determined by measuring the hearing-aid output for conversational speech and then determining the point at which the root mean square level crosses the listener’s hearing threshold to become inaudible. The bandwidth with amplification is one factor that has been shown to influence both sound quality and speech recognition (Ricketts et al, 2008; Füllgrabe et al, 2010). Increasing the bandwidth has been found to improve objective measures of speech recognition for both children (Stelmachowicz et al, 2001, 2004) and adults (Ching et al, 1998; Hornsby et al, 2011), with children requiring greater bandwidth than adults in order to achieve equivalent performance. Adult listeners also indicate a subjective preference for increased bandwidth (Moore & Tan, 2003; Ricketts et al, 2008; Füllgrabe et al, 2010), but that preference is influenced by the stimuli used and degree of hearing loss. Specifically, adults with normal hearing prefer a wider bandwidth for music passages than for speech stimuli (Moore & Tan, 2003). Ricketts et al. (2008) found that listeners with less high-frequency hearing loss, as measured by the slope of the hearing loss, more consistently preferred speech and music with wideband processing (5.5 kHz vs 9 kHz) than listeners with more high-frequency hearing loss. Similar observations have been made for speech recognition, wherein listeners with less hearing loss are more likely to demonstrate benefit with increases in bandwidth than listeners with greater hearing loss (Ching et al, 1998; Hogan & Turner, 1998; Turner & Cummings, 1999; Ching et al, 2001).

However, extending the bandwidth with a hearing aid using conventional amplification, hereafter referred to as extended bandwidth (EBW), can be difficult to achieve in practice. Bandwidth can be restricted in the high frequencies due to the degree of hearing loss, the upper frequency limit of amplification, or both (Moore et al, 2008). The bandwidth traditionally available with hearing-aid amplification, 5–6 kHz (Dillon, 2001), is hereafter referred to as restricted bandwidth (RBW). A recent advance in hearing-aid signal processing, frequency lowering, has made it possible to provide information about speech over a greater bandwidth than is traditionally available to hearing-aid users (see Alexander, 2013 for a review of frequency-lowering approaches). By shifting high-frequency sounds to lower frequencies, frequency lowering potentially increases the audibility of information originating from the higher frequencies. One approach to frequency lowering is nonlinear frequency compression (NFC). With NFC, the input signal is filtered into a low-frequency and a high-frequency band. The crossover point between the two bands is referred to as the start frequency. Below the start frequency, the signal is amplified without frequency compression, while above the start frequency the signal is compressed in frequency. The amount of frequency compression applied is specified by the compression ratio.

While NFC has the potential to increase high-frequency audibility, the resulting spectral distortion in the lower-frequency regions to which the information is moved could have detrimental effects on speech recognition. Studies of NFC do not consistently demonstrate improved speech recognition when compared to RBW (Simpson et al, 2005, 2006; Glista et al, 2009; Wolfe et al, 2010, 2011; Souza et al, 2013). However, as noted by Alexander (2013), differences in participant populations, hearing-aid technology, stimuli, and fitting methods across studies may have contributed to the variability in outcomes. For example, while Simpson et al. (2005) found improved consonant-vowel-consonant recognition with NFC, a follow-up study by Simpson et al. (2006) did not. Although participants in both studies had severe-to-profound hearing loss, participants in the 2005 study had less hearing loss than participants in the 2006 study. The participants in the 2006 study therefore may not have shown the benefit observed in the 2005 study because the compressed portion of the signal would have been less audible to them. Because real-ear measures were not performed, it is difficult to determine how audibility in each study affected speech recognition. Another factor that may have influenced differences between the studies is the start frequency. Start frequencies were lower in the 2006 study (1.25 or 1.60 kHz) than in the 2005 study (≥ 2.0 kHz, with a few exceptions), which may have adversely affected the perception of low-frequency information such as fundamental frequency and formant ratios.

Some differences in outcomes across studies also may be related to the age of the participants. Stelmachowicz and colleagues (2001, 2004) demonstrated that children require greater bandwidth than adults in order to maximize speech perception, due to a greater reliance on acoustic-phonetic cues. Consistent with that finding, speech recognition results with children indicate that they may benefit more than adults from the provision of NFC when compared to RBW (Glista et al, 2009). While studies by Wolfe et al. (2010, 2011) did not include adult listeners, they did demonstrate that children consistently showed improved speech recognition from NFC. Findings with adults in the aforementioned NFC studies were not as consistently positive.

As with objective measures of speech recognition, outcomes of studies investigating subjective preference for NFC or RBW vary. Simpson et al. (2006) compared NFC and RBW using the Abbreviated Profile of Hearing Aid Benefit (APHAB; Cox & Alexander, 1995), but included only adult listeners. Although Simpson et al. did not report a statistical analysis of the APHAB ratings, Cox and Alexander (1995) provide confidence intervals for interpreting changes in APHAB scores. None of the changes in ratings described by Simpson et al. exceeded the 90% confidence intervals, suggesting that their adult listeners did not perceive differences in benefit between NFC and RBW when compared to the normative sample for the APHAB.

The settings used for NFC are another factor that might influence preference relative to RBW. Studies in which listeners preferred NFC (Glista et al, 2009; Wolfe et al, 2010, 2011) used the minimum settings (highest start frequency and/or lowest compression ratio) that simultaneously achieved audibility of the compressed portion of speech and avoided spectral overlap of /s/ and /ʃ/. Studies in which listeners preferred RBW to NFC (Parsa et al, 2013; Souza et al, 2013) systematically adjusted the start frequency and compression ratio to determine the extent to which listeners would tolerate the distortion caused by NFC, and audibility of the compressed portion was not measured. It is therefore possible that some of the settings selected (those with low start frequencies and high compression ratios) produced greater spectral distortion without additional gains in audibility of the compressed portion of the signal. Caution is warranted in comparing findings from Souza et al. to those obtained with clinical hearing aids with NFC, because Souza et al. lowered only the most intense frequency components, whereas current commercial implementations of NFC lower all frequency components above the start frequency.

Age also may have impacted preference differences across studies. While few studies have compared adults and children, the majority of the children in Glista et al. (2009) had a preference for NFC, whereas the adults did not have a preference for one or the other (NFC or RBW). In contrast, Parsa et al. (2013) found that both adults and children had a preference for RBW but, as mentioned previously, the settings used may have resulted in increased distortion without increased audibility. Together these studies suggest that selecting the minimum NFC settings necessary to achieve audibility of the compressed portion of speech may be more likely to lead to a preference for NFC than NFC settings that use lower start frequencies and higher compression ratios.

While improvements in speech recognition with either NFC or EBW over RBW amplification have been demonstrated for some participant populations (Ching et al, 1998; Stelmachowicz et al, 2001, 2004; Simpson et al, 2005; Glista et al, 2009; Hornsby et al, 2011; Wolfe et al, 2010, 2011), there have been no direct comparisons of sound quality between EBW and NFC for either music or speech. Because NFC distorts the frequency spectrum of the input signal, we predicted that listeners would prefer EBW to NFC. Because differences in preference for EBW have been observed previously between speech and music (e.g., Moore & Tan, 2003), both were included. Lastly, while it is well known that children require a greater bandwidth than adults to achieve equivalent speech understanding, no studies have compared preference for EBW, NFC, and RBW in children and adults. Knowing which technology is preferred would help clinicians determine candidacy and counsel patients.

The purpose of the present study was to compare preferences for hearing-aid processing using EBW, NFC, and RBW in children and adults. Adults and children with primarily mild-to-severe hearing loss were included because 1) previous NFC studies have demonstrated speech recognition benefit for listeners with milder degrees of hearing loss (e.g., Wolfe et al, 2010, 2011) and 2) this allowed for a hearing-aid simulator and headphone arrangement to achieve audibility through 8 kHz for the EBW condition. Music passages and speech-in-quiet were included to ascertain the effect of the processing type on listeners’ preferences. Paired comparisons were selected to determine the direction of the preference.

METHOD

Participants

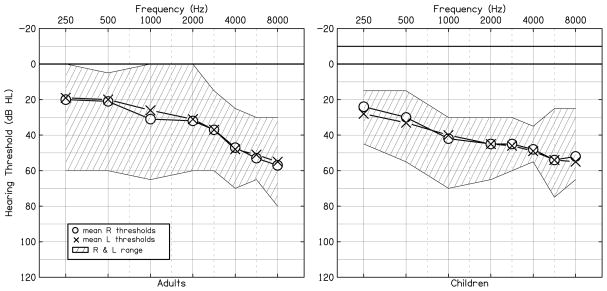

Because the method of fitting the simulated hearing aid was based on audibility, participants were included only if higher audibility could be achieved with EBW and NFC than with RBW for at least one ear. Hearing thresholds were measured for all participants at octave frequencies from 0.25 to 8 kHz and at 6 kHz using an audiometer (GSI-61) with insert earphones (ER3A) or supra-aural earphones (TDH-50P; see note i). Hearing thresholds were also measured at interoctave frequencies when thresholds at consecutive octave frequencies differed by ≥ 20 dB. Participants were excluded if they had a high-frequency (2, 4, 6 kHz) pure-tone average (PTA) ≤ 25 dB HL in either ear, asymmetric hearing loss (PTA difference ≥ 15 dB), or bandwidth differences between ears that would have required asymmetric NFC settings. Three of the children were tested monaurally because higher audibility could not be achieved with either EBW or NFC than with RBW in the non-test ear. One child was excluded due to an error during data collection. The remaining participants consisted of 16 children (mean age 12 years, range 8–16 years) and 16 adults (mean age 54 years, range 19–65 years) with mild-to-severe hearing loss. Figure 1 shows the hearing thresholds for the participants. Tables 1 and 2 show participant demographics for adults and children, respectively. Of the participants who wore hearing aids, all except participant 9A (adult) and participant 15C (child) wore binaural hearing aids.

Figure 1.

Mean hearing thresholds for the left ear (X) and the right ear (O) for the adults (left) and children (right). The shaded area represents the range of hearing thresholds.

Table 1.

Subject demographics, Adults.

| ID | Age (years) | Age ID (years) | Amp (years) | Type | Music exp | Right MAF (RBW) (Hz) | Right SF (Hz) | Right CR | Right MAF (NFC) (Hz) | Left MAF (RBW) (Hz) | Left SF (Hz) | Left CR | Left MAF (NFC) (Hz) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1A | 63 | 53 | NA | None | 2 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 2A | 24 | 0 | 1 | NFC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 3A | 54 | 21 | 47 | NFC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 4A | 56 | 53 | NA | None | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 5A | 64 | 58 | NA | None | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 6A | 38 | 8 | 9 | WDRC | 2 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 7A | 57 | 56 | NA | None | 2 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 8A | 64 | 45 | NA | None | 1 | 4000 | 2700 | 2.3 | 6960 | 4000 | 2700 | 2.3 | 6960 |
| 9A | 59 | 9 | 35 | WDRC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 10A | 60 | 45 | NA | None | 2 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 11A | 56 | 25 | NA | None | 2 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 12A | 61 | 61 | NA | None | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 13A | 65 | 48 | 55 | WDRC | 2 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 14A | 62 | 62 | NA | None | 2 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 15A | 58 | 58 | NA | None | 2 | 5000 | 3800 | 2.6 | 5899 | 5000 | 3800 | 2.6 | 8240 |
| 16A | 19 | 10 | NA | None | 1 | 4000 | 2700 | 2.3 | 6960 | 4000 | 2700 | 2.3 | 6960 |

Age ID = age that hearing loss was identified. Amp = age at which amplification was provided. For music experience 1 = 0–4 years of music lessons, 2 = 5–10 years of music lessons, 3 = greater than 10 years of music lessons or in a music ensemble, 4 = music degree or employment as a musician. CR = frequency compression ratio; exp = experience; MAF = maximum audible frequency; NA = not applicable (in the Amp column this indicates participants who do not wear hearing aids), NK = not known; NT = ear not tested; NFC = nonlinear frequency compression with WDRC; SF = start frequency; WDRC = wide dynamic range compression.

Table 2.

Subject demographics, Children. See the Table 1 caption for abbreviations.

| ID | Age (years) | Age ID (years) | Amp (years) | Type | Music exp | Right MAF (RBW) (Hz) | Right SF (Hz) | Right CR | Right MAF (NFC) (Hz) | Left MAF (RBW) (Hz) | Left SF (Hz) | Left CR | Left MAF (NFC) (Hz) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1C | 16 | 1 | NA | None | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 2C | 15 | 0 | 3 | NFC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 3C | 11 | 4 | 4 | NFC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 4C | 10 | 0 | 2 | NFC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 5C | 16 | NK | NA | None | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 6C | 9 | NK | NA | NFC | 1 | NT | NT | NT | NT | 5000 | 3800 | 2.6 | 8240 |
| 7C | 12 | 3 | 3 | WDRC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 8C | 8 | 0 | 7 | NFC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 9C | 11 | 9 | 8 | WDRC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 10C | 12 | 5 | 5 | NFC | 1 | NT | NT | NT | NT | 5000 | 3800 | 2.6 | 8240 |
| 11C | 9 | 4 | 4 | WDRC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 12C | 9 | 4 | 4 | WDRC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 13C | 15 | 0 | 0 | WDRC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 14C | 11 | 0 | 3 | WDRC | 1 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |
| 15C | 14 | 5 | 12 | WDRC | 1 | NT | NT | NT | NT | 5000 | 3800 | 2.6 | 8240 |
| 16C | 12 | 4 | 5 | WDRC | 2 | 5000 | 3800 | 2.6 | 8240 | 5000 | 3800 | 2.6 | 8240 |

Stimuli

Speech stimuli were fifteen sentences, spoken by an adult female, each containing at least three fricatives (see Appendix). Sentence duration averaged 3.8 s (range 2.9 to 4.4 s). The sentences were recorded digitally using a Shure Beta 53 microphone with the standard filter cap and were presented in quiet. Eight different music passages were used (see Appendix). The passages were extracted from a compact disc using Adobe Audition 3.0. Music passage duration averaged 8 s (range 7 to 11 s). Stimuli were presented at 60 dB SPL to the input of the hearing-aid simulator, described next.

Amplification

Signal processing

Stimuli were processed using a hearing-aid simulator implemented in MATLAB (R2009b) with a sampling rate of 22.05 kHz (see McCreery et al, 2013; Alexander & Masterson, submitted [accepted pending final revision]). Hearing-aid amplification was simulated in order to maintain greater control over the NFC parameters and audibility. The stages in the program included a broadband input limiter circuit, a filter bank, NFC (when appropriate), multichannel wide dynamic range compression (WDRC), and a broadband output limiter. Table 3 describes the compression characteristics for the hearing-aid simulator. All compression characteristics are referenced to the ANSI (2009) standard. The gain control circuit was implemented using equation 8.1 of Kates (2008):

$$d(n) = \begin{cases} \alpha\, d(n-1) + (1-\alpha)\,|x(n)|, & |x(n)| \ge d(n-1) \\ \beta\, d(n-1), & |x(n)| < d(n-1) \end{cases}$$

Table 3.

Hearing-aid simulator settings.

| Circuit | Settings |
|---|---|
| Input Limiter | 1 ms attack time, 50 ms release time, 10:1 CR, 105 dB SPL CT |
| Filterbank | 8 overlapping channels with center frequencies and, in parentheses, cutoff frequencies (−3 dB) of 0.25 (0, 0.3), 0.4 (0.33, 0.5), 0.63 (0.52, 0.74), 1 (0.85, 1.16), 1.6 (1.31, 1.92), 2.5 (2.07, 3.09), 4 (3.24, 4.95), and 6.3 (5.10, Nyquist) kHz |
| WDRC | 5 ms attack time, 50 ms release time, CRs and CTs as prescribed by DSL, linear amplification provided below the CTs |
| BOLT/Output Limiter | 1 ms attack time, 50 ms release time, 10:1 CR, CTs as prescribed by DSL |

CR = compression ratio; CT = compression threshold; BOLT = broadband output limiting threshold; WDRC = wide dynamic range compression, DSL=Desired Sensation Level algorithm.

where n is the sampling time point, x(n) is the acoustic input signal, d(n) is the local mean level used to generate the gain signal which was applied to x(n) to form the output signal, α is a constant derived from the attack time, and β is a constant derived from the release time. Gain decreased when the signal level increased and increased when the signal level decreased.
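As an illustration of how such a gain control circuit behaves, the Python sketch below tracks the local level d(n) with separate attack (α) and release (β) branches and feeds a static WDRC rule. It is a minimal sketch under stated assumptions, not the simulator's MATLAB code: the piecewise detector form is inferred from the definitions above, the mapping from a time in milliseconds to α or β (`smoothing_coef`, a one-pole exp(−1/(f_s·τ)) relation) is hypothetical and differs from the ANSI attack and release definitions, and `wdrc_gain_db` assumes linear gain below the compression threshold with (1 − 1/CR) dB of gain reduction per dB above it.

```python
import math


def smoothing_coef(time_ms: float, fs_hz: float) -> float:
    """Hypothetical one-pole coefficient for a time constant (not the ANSI definition)."""
    return math.exp(-1.0 / (fs_hz * time_ms / 1000.0))


def peak_detector(x, alpha: float, beta: float):
    """Track the local level d(n) of x(n): smooth rises with alpha, decay with beta."""
    d_prev = 0.0
    d = []
    for sample in x:
        mag = abs(sample)
        if mag >= d_prev:
            d_cur = alpha * d_prev + (1.0 - alpha) * mag  # attack branch
        else:
            d_cur = beta * d_prev  # release branch: exponential decay
        d.append(d_cur)
        d_prev = d_cur
    return d


def wdrc_gain_db(level_db: float, linear_gain_db: float, ct_db: float, cr: float) -> float:
    """Static WDRC rule: constant gain below CT, then (1 - 1/CR) dB less gain per dB above CT."""
    if level_db <= ct_db:
        return linear_gain_db
    return linear_gain_db - (level_db - ct_db) * (1.0 - 1.0 / cr)


if __name__ == "__main__":
    fs = 22050.0
    alpha = smoothing_coef(5.0, fs)   # 5 ms WDRC attack time (Table 3)
    beta = smoothing_coef(50.0, fs)   # 50 ms WDRC release time (Table 3)
    step = [1.0] * 500 + [0.1] * 500  # loud segment followed by a soft one
    level = peak_detector(step, alpha, beta)
    print(round(level[499], 3), round(level[999], 3))
    print(wdrc_gain_db(50.0, 20.0, 50.0, 2.0), wdrc_gain_db(70.0, 20.0, 50.0, 2.0))
```

In use, d(n) would be converted to dB (20·log10) before being passed to the gain rule. Because the attack coefficient is smaller than the release coefficient, the tracked level rises quickly and falls slowly, and the gain rule returns less gain at the higher input level.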

When NFC was used, two signals were formed by separately applying low-pass and high-pass filters to the input, with the cutoff frequency equal to the start frequency. The high-pass signal was processed using overlapping blocks of 256 samples. Blocks were windowed in the time domain using the product of a Hamming and a sinc function. Magnitude and phase information at 32-sample intervals was obtained by submitting this 256-point window to the spectrogram function in MATLAB with 224 points of overlap (7/8) and 128 points used to calculate the discrete Fourier transforms. The instantaneous frequency, phase, and magnitude of each frequency bin above the start frequency, spanning a ~4.5 kHz region, were used to modulate sine-wave carriers using the following equation adopted from Simpson et al. (2005):

$$F_{out} = SF^{\,1 - 1/FCR} \times F_{in}^{\,1/FCR}$$

where Fout = output frequency, SF = start frequency, FCR = frequency-compression ratio, and Fin = instantaneous input frequency.
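The frequency mapping can be sketched in Python. This assumes the log-frequency form F_out = SF^(1−1/FCR) · F_in^(1/FCR) for inputs above the start frequency (our reading of the Simpson et al., 2005 equation), with frequencies at or below SF passed unchanged; the function names are hypothetical. An inverse is included because the fitting procedure described later asks which input frequency is lowered onto a given output frequency.

```python
def nfc_output_freq(f_in_hz: float, sf_hz: float, fcr: float) -> float:
    """Map an input frequency to its output frequency (log-domain compression above SF)."""
    if f_in_hz <= sf_hz:
        return f_in_hz  # at or below the start frequency: unchanged
    return sf_hz ** (1.0 - 1.0 / fcr) * f_in_hz ** (1.0 / fcr)


def nfc_input_for_output(f_out_hz: float, sf_hz: float, fcr: float) -> float:
    """Inverse mapping: the input frequency that lands at f_out_hz."""
    if f_out_hz <= sf_hz:
        return f_out_hz
    return sf_hz * (f_out_hz / sf_hz) ** fcr


if __name__ == "__main__":
    sf, fcr = 3800.0, 2.6  # the most common setting in Tables 1 and 2
    for f_in in (3000.0, 3800.0, 6000.0, 8000.0):
        print(f_in, round(nfc_output_freq(f_in, sf, fcr)))
```

Taking logarithms shows the design: log(F_out/SF) = log(F_in/SF)/FCR, so distances above the start frequency shrink by the factor FCR on a log-frequency axis. Because this is an idealized formula, it need not reproduce the maximum audible frequencies with NFC in Tables 1 and 2, which were estimated from measurements with actual hearing aids.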

DSL settings

For each participant, the Desired Sensation Level algorithm v.5.0a (DSL: Scollie et al, 2005) generated the prescribed real-ear aided response for average conversational speech (65 dB SPL), compression threshold, compression ratio, and maximum power output, which were used to program the simulator. Absolute threshold in dB SPL at the tympanic membrane for each listener was estimated using a transfer function of the TDH 50 earphones (used for the audiometric testing) on an IEC 711 Zwislocki Coupler and KEMAR (Knowles Electronic Manikin for Acoustic Research, Burkhard & Sachs, 1975). These thresholds were subsequently entered into the DSL program. DSL settings were generated for a BTE hearing-aid style with 8-channel WDRC, no venting, and no binaural correction. The recommended real-ear aided response targets for each age group were used; these were lower for the adult prescription than for the pediatric prescription. Because DSL does not provide a target sensation level (SL) at 8 kHz, the target SL at 6 kHz was used for 8 kHz. To prevent an unusually steep frequency response, the resulting 8-kHz target level was limited to the 6000-Hz target level plus 10 dB.
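The 8-kHz target rule amounts to a short calculation, sketched below. The function name is hypothetical, and the sketch assumes thresholds and targets are expressed on the same dB SPL scale, so that a target SL is simply the target level minus the threshold.

```python
def target_8k_db_spl(threshold_8k: float, threshold_6k: float, target_6k: float,
                     cap_above_6k_db: float = 10.0) -> float:
    """Reuse the 6-kHz target SL at 8 kHz, capped at the 6-kHz target plus 10 dB."""
    target_sl_6k = target_6k - threshold_6k      # prescribed sensation level at 6 kHz
    provisional = threshold_8k + target_sl_6k    # same SL applied at 8 kHz
    return min(provisional, target_6k + cap_above_6k_db)


if __name__ == "__main__":
    # Hypothetical listener: 60 dB SPL threshold and 80 dB SPL target at 6 kHz.
    for thr_8k in (50.0, 70.0, 75.0):
        print(thr_8k, target_8k_db_spl(thr_8k, 60.0, 80.0))
```

The cap only takes effect when the 8-kHz threshold is more than 10 dB above the 6-kHz threshold, which is the steeply sloping case the rule is meant to guard against.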

Simulator

The output for each participant was adjusted using two iterations of the following steps. Gain for each channel was automatically tuned to targets using the “Carrot Passage” from Audioscan® (Dorchester, ON). Specifically, the 12.8-s speech signal was analyzed using 1/3-octave filters specified by ANSI S1.11 (2004). A transfer function for the headphones used in this study (Sennheiser HD-25, Ireland) on an IEC 711 Zwislocki Coupler and KEMAR was used to estimate the real-ear aided response. The resulting long-term average speech spectrum (LTASS) was then compared to the prescribed DSL m(I/O) v5.0a targets for a 60 dB SPL presentation level. The 1/3-octave levels in dB SPL were grouped according to the WDRC channel they overlapped. The average difference for each group was computed and used to adjust the gain in the corresponding channel (note ii). Maximum gain was limited to 65 dB and minimum gain to 0 dB (after accounting for the headphone transfer function). Because of filter overlap and subsequent summation in the low-frequency channels, gain for channels centered below 500 Hz was decreased by 9 dB.
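One pass of the channel-gain tuning can be sketched as follows. The grouping rule (a 1/3-octave band belongs to every channel whose cutoff range contains its center frequency, so bands in overlapping regions count toward both channels), the function names, and the example values are assumptions for illustration; measured levels are taken to already include the headphone transfer function. Running the pass twice, re-measuring in between, would mirror the two iterations described above.

```python
from statistics import mean


def tune_channel_gains(gains_db, channel_edges_hz, band_centers_hz, measured_db, target_db,
                       min_gain_db=0.0, max_gain_db=65.0):
    """One tuning pass: shift each channel's gain by the mean target-minus-measured difference."""
    new_gains = []
    for gain, (lo_hz, hi_hz) in zip(gains_db, channel_edges_hz):
        # 1/3-octave bands whose center falls inside this channel (assumed grouping rule)
        diffs = [t - m for f, m, t in zip(band_centers_hz, measured_db, target_db)
                 if lo_hz <= f < hi_hz]
        step = mean(diffs) if diffs else 0.0
        # Clamp to the 0-65 dB gain limits
        new_gains.append(min(max(gain + step, min_gain_db), max_gain_db))
    return new_gains


def apply_low_channel_offset(gains_db, channel_centers_hz, below_hz=500.0, offset_db=9.0):
    """Reduce gain in channels centered below 500 Hz to offset summation from filter overlap."""
    return [g - offset_db if fc < below_hz else g
            for g, fc in zip(gains_db, channel_centers_hz)]


if __name__ == "__main__":
    gains = tune_channel_gains([20.0, 30.0], [(0, 1000), (1000, 8000)],
                               [500, 800, 2000, 4000], [60, 62, 55, 50], [64, 64, 55, 56])
    print(gains)
```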

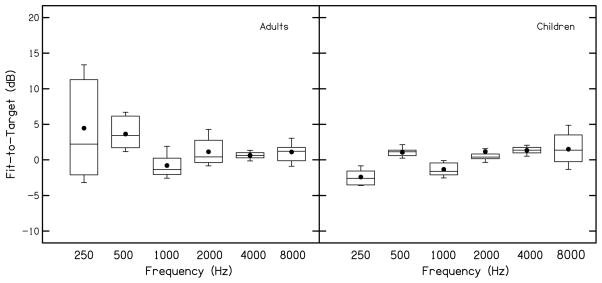

Figure 2 depicts the fit-to-target data. These data were derived by computing the output level for the “carrot passage” for each frequency band using 1/3 octave-wide filters based on the ANSI (2004) standard and then subtracting the DSL target level from the level of the carrot passage. The values for the left and the right ears were averaged, except for those fitted monaurally. For adults the mean deviations from target were 3.6, −0.8, 1.2, and 0.6 dB at .5, 1, 2, and 4 kHz, respectively. For children the mean deviations from target were 1.0, −1.3, 1.2, and 1.3 dB at .5, 1, 2, and 4 kHz, respectively. The greatest variability occurred at 250 Hz with the adults. This occurred because DSL sometimes prescribed gain less than zero dB when hearing thresholds were normal for the adults and gain in the hearing-aid simulator was prevented from going below zero dB, which occasionally resulted in a poor fit-to-target value.
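The fit-to-target summary reduces to a difference and an ear average. This minimal sketch uses hypothetical function names and marks an untested ear (NT in Tables 1 and 2) with None, so monaurally fitted participants contribute only their tested ear.

```python
from statistics import mean
from typing import Optional


def fit_to_target_db(output_db: float, target_db: float) -> float:
    """Positive values mean the output exceeded the DSL target."""
    return output_db - target_db


def ear_averaged(right_db: Optional[float], left_db: Optional[float]) -> float:
    """Average the two ears, or return the single tested ear (None marks an untested ear)."""
    values = [v for v in (right_db, left_db) if v is not None]
    return mean(values)


if __name__ == "__main__":
    binaural = ear_averaged(fit_to_target_db(68.0, 65.0), fit_to_target_db(64.0, 65.0))
    monaural = ear_averaged(None, fit_to_target_db(66.5, 65.0))
    print(binaural, monaural)
```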

Figure 2.

Fit-to-target (difference between hearing aid output and Desired Sensation Level (DSL) target level for adults (left panel) and children (right panel)). Positive numbers indicate that the output was higher than the target level. For this and remaining box and whisker plots, boxes represent the interquartile range and whiskers represent the 10th and 90th percentiles. For each box, lines represent the median and filled circles represent the mean scores.

RBW, EBW, and NFC settings

The simulator was programmed for three different conditions: RBW, EBW, and NFC. For the RBW and NFC conditions, 0 dB gain was applied above 5 kHz, and a 1024-tap low-pass filter with a 5000-Hz cutoff reduced the output by 80–100 dB at 5500 Hz. For the EBW condition, the anti-aliasing filter limited the highest frequency to approximately the Nyquist frequency (11.025 kHz). The maximum input frequency with NFC was set individually and was limited in the simulator to that available in the Phonak Naída SP (10 kHz or 4.5 kHz above the start frequency, whichever was lower). As with the commercial implementation of NFC, the start frequency and compression ratio were selected from a predetermined set of combinations provided by the iPFG v2.0 (Phonak LLC, Chicago, IL) fitting software. The combinations consisted of those available in the fitting software and two interpolated combinations. The start frequency and compression ratio combination selected for each participant was based on a method described in Alexander (2013) and McCreery et al. (2013), which gives highest priority to maximizing the bandwidth following NFC. Two steps were used to estimate audibility with NFC.

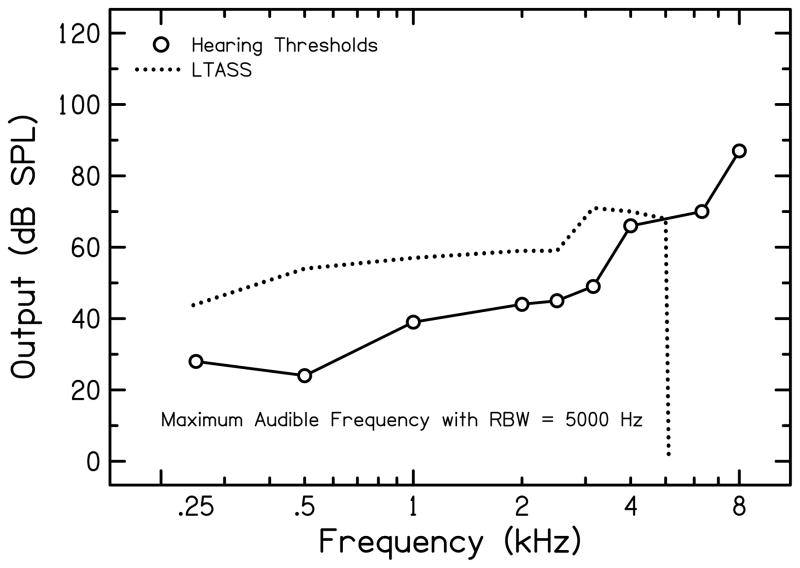

The first step determined the highest frequency that the hearing aid simulator could make audible to the participant without NFC (maximum audible frequency with RBW). This was operationally defined as the frequency where the participant’s threshold intersected the amplified LTASS (shown in Figure 3 for a representative participant). One-third octave filters were used to analyze the LTASS.
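The threshold-crossing definition of the maximum audible frequency can be sketched in Python. Two choices here are assumptions, not details from the text: the crossing is interpolated linearly on a log-frequency axis between adjacent 1/3-octave bands, and the amplified LTASS and thresholds are assumed to share one dB SPL reference. If several crossings occur, the highest is returned; the function name is hypothetical.

```python
import math


def max_audible_frequency(freqs_hz, ltass_db, thresholds_db):
    """Highest frequency at which the amplified LTASS falls below threshold.

    Returns the top analyzed frequency if speech stays audible throughout,
    or None if no audible-to-inaudible transition exists.
    """
    margins = [s - t for s, t in zip(ltass_db, thresholds_db)]
    if margins[-1] >= 0:
        return freqs_hz[-1]
    maf = None
    for i in range(len(freqs_hz) - 1):
        m0, m1 = margins[i], margins[i + 1]
        if m0 >= 0 > m1:  # audible -> inaudible between bands i and i + 1
            x0, x1 = math.log2(freqs_hz[i]), math.log2(freqs_hz[i + 1])
            maf = 2.0 ** (x0 + (x1 - x0) * m0 / (m0 - m1))
    return maf


if __name__ == "__main__":
    # Hypothetical band levels and thresholds in dB SPL
    print(max_audible_frequency([2000.0, 8000.0], [50.0, 40.0], [40.0, 50.0]))
```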

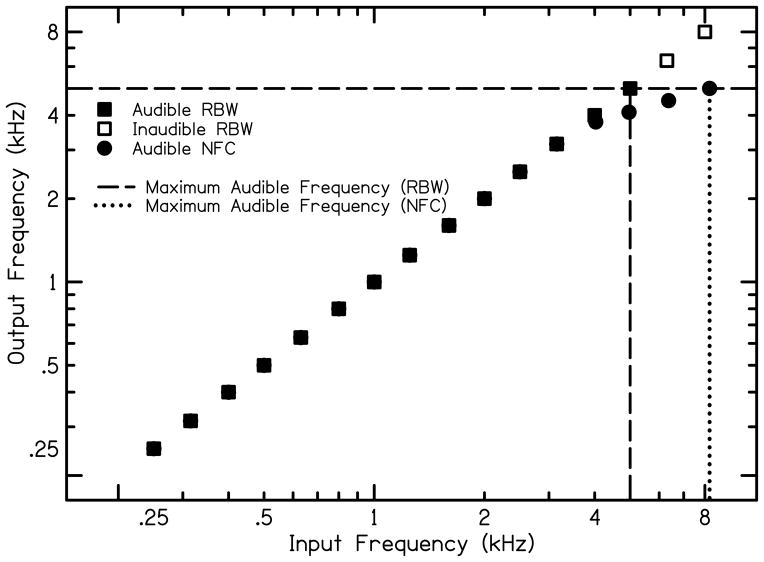

The second step determined the highest frequency that the hearing aid simulator could make audible to the participant with NFC (maximum audible frequency with NFC). This was accomplished by using the Sound Recover Fitting Assistant v1.10 (Joshua Alexander, Purdue University, IN). The Sound Recover Fitting Assistant estimates the maximum audible frequency with NFC by calculating the input frequency that would be lowered to the same frequency as the maximum audible frequency with RBW. That estimate is based on measurements that were taken with actual hearing aids, including the Naída SP (the same hearing aid that was used to select the available NFC settings for the current study). The program also plots a frequency input-output function, as depicted in Figure 4 for the same representative participant used for Figure 3. The horizontal dashed line at 5000 Hz represents the participant’s maximum audible frequency. Open symbols above that line represent frequencies that are inaudible while the filled symbols below it represent audible frequencies. The circles and squares represent the output frequency as a function of the input frequency for the selected RBW and NFC settings, respectively. The vertical dashed lines represent the maximum audible frequency for RBW (5000 Hz) and NFC (8240 Hz) conditions. For each participant, frequency input-output functions for different combinations of start frequencies and compression ratios were plotted. To avoid “strong” NFC settings, combinations that restricted the output frequency to a frequency that was less than 7% of that participant’s maximum audible frequency with RBW (determined in step 1) were not considered. The combination that resulted in the highest maximum audible frequency with NFC was then selected. In the rare case that multiple combinations fit these criteria, the combination with the highest start frequency was used in order to minimize potential spectral distortion of vowel formants. 
This procedure allowed us to document the highest frequency that was audible with NFC, prevent excessive spectral distortion, and place the output with NFC within each listener’s bandwidth.
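The selection rules can be combined into one function. This is a hedged sketch, not the Sound Recover Fitting Assistant: it substitutes an idealized log-frequency NFC mapping for the fitting assistant's measured hearing-aid data, caps the input range at 10 kHz or 4.5 kHz above the start frequency (whichever is lower), and reads the 7% criterion as excluding settings whose highest output frequency falls more than 7% below the maximum audible frequency with RBW. The candidate list and function names are hypothetical, apart from the two start-frequency/compression-ratio pairs that appear in Tables 1 and 2.

```python
def nfc_output_freq(f_in, sf, cr):
    """Idealized NFC mapping (assumed log-frequency form)."""
    return f_in if f_in <= sf else sf ** (1.0 - 1.0 / cr) * f_in ** (1.0 / cr)


def nfc_input_for_output(f_out, sf, cr):
    """Input frequency lowered onto f_out under the idealized mapping."""
    return f_out if f_out <= sf else sf * (f_out / sf) ** cr


def select_nfc_setting(candidates, maf_rbw_hz, tolerance=0.07,
                       input_ceiling_hz=10000.0, input_span_hz=4500.0):
    """Return (SF, CR, estimated MAF with NFC) for the preferred candidate, or None."""
    best_key, best = None, None
    for sf, cr in candidates:
        max_input = min(input_ceiling_hz, sf + input_span_hz)
        max_output = nfc_output_freq(max_input, sf, cr)
        if max_output < (1.0 - tolerance) * maf_rbw_hz:
            continue  # lowered band ends well inside the audible range: too "strong"
        maf_nfc = min(nfc_input_for_output(maf_rbw_hz, sf, cr), max_input)
        key = (maf_nfc, sf)  # highest MAF first; ties go to the higher start frequency
        if best_key is None or key > best_key:
            best_key, best = key, (sf, cr, maf_nfc)
    return best


if __name__ == "__main__":
    candidates = [(3800.0, 2.6), (2700.0, 2.3), (5000.0, 2.0)]  # hypothetical list
    print(select_nfc_setting(candidates, maf_rbw_hz=5000.0))
```

Ranking by the tuple (MAF with NFC, start frequency) applies the tie-break toward the higher start frequency directly, which is the rule the text uses to limit distortion of vowel formants.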

Figure 3.

Amplified long-term average speech spectrum (LTASS) for a representative participant. The dashed line represents the amplified LTASS and the circles represent behavioral thresholds. The frequency at which the thresholds intersect the LTASS is the maximum audible frequency (MAF) for restricted bandwidth (RBW: for this participant 5000 Hz).

Figure 4.

Frequency input/output function for the same representative participant as for Figure 3. The maximum audible frequency with restricted bandwidth (RBW) was 5000 Hz. The output frequency as a function of the input frequency with nonlinear frequency compression (NFC) is displayed for a 3.8 kHz start frequency and a 2.6 compression ratio. The maximum audible frequency with NFC for this listener was 8240 Hz.

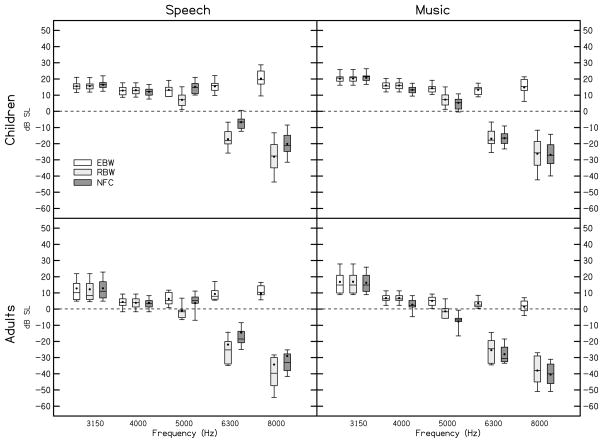

Audibility for frequencies ≥ 3150 Hz with EBW, RBW, and NFC is shown in Figure 5. Results for speech and music are in the left and right columns, respectively, and results for children and adults are in the top and bottom rows, respectively. To calculate the SL, first the SPL in 1/3-octave-wide frequency bands (ANSI, 2004) was computed for each stimulus. Second, each participant’s absolute thresholds in dB HL were interpolated to the center frequencies of the 1/3-octave filters (Pittman & Stelmachowicz, 2000). Third, these thresholds were converted to dB SPL (Bentler & Pavlovic, 1989), adjusted to account for the internal noise spectrum (ANSI, 1997), and transformed to 1/3-octave band levels (Pavlovic, 1987). Fourth, the SL in dB was computed for each stimulus by subtracting threshold from the stimulus level, and the mean SL across stimuli was then computed.
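The interpolation and subtraction steps can be sketched as follows. The sketch omits the dB HL to dB SPL conversion, the internal-noise adjustment, and the band-level transformation, which depend on the cited standards and tables, so it assumes thresholds are already expressed as 1/3-octave band levels in dB SPL. The function names are hypothetical, and interpolation is assumed to be linear in log frequency.

```python
import math


def interp_log_freq(freqs_hz, values_db, f_hz):
    """Linear interpolation on a log-frequency axis; holds the end values outside the range."""
    if f_hz <= freqs_hz[0]:
        return values_db[0]
    if f_hz >= freqs_hz[-1]:
        return values_db[-1]
    for f0, f1, v0, v1 in zip(freqs_hz, freqs_hz[1:], values_db, values_db[1:]):
        if f0 <= f_hz <= f1:
            w = (math.log(f_hz) - math.log(f0)) / (math.log(f1) - math.log(f0))
            return v0 + w * (v1 - v0)
    raise ValueError("frequencies must be sorted in ascending order")


def mean_sensation_level(band_levels_db, threshold_db):
    """Mean SL for one band: each stimulus level minus threshold, averaged across stimuli."""
    return sum(level - threshold_db for level in band_levels_db) / len(band_levels_db)


if __name__ == "__main__":
    thr = interp_log_freq([2000.0, 4000.0], [40.0, 60.0], 3150.0)
    print(round(thr, 1), mean_sensation_level([62.0, 66.0, 70.0], thr))
```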

Figure 5.

Sensation level (SL) in dB for children (top row) and adults (bottom row). The left column shows SL for speech and the right column depicts SL for music. Sensation level with nonlinear frequency compression (NFC) was computed based on the output frequency. To conserve space, the SLs for frequencies below 3150 Hz were omitted because the SL was identical across the three types of processing. EBW, extended bandwidth; RBW, restricted bandwidth.

Procedure

Sound files from the output of the hearing-aid simulator were presented using custom software on a personal computer. They were converted from a digital to an analog signal using a Lynx Studio Technology Lynx Two B sound card (Costa Mesa, CA), routed using a MiniMon Mon 800 monitor matrix mixer (Behringer, Germany), amplified with a PreSonus HP4 headphone pre-amplifier (Baton Rouge, LA), and delivered to the participants via Sennheiser HD-25 headphones. The participants were seated in an audiometric sound booth in front of a touchscreen monitor, which each participant used to indicate the preferred condition. Participants were instructed to select the interval that contained the clearest speech or the interval that contained the best sounding music. To help the children understand the term “clarity,” a visual demonstration was provided on the touchscreen monitor to all participants. The demonstration consisted of 8 pairs of pictures; in each pair, one picture had higher resolution and the other lower resolution. Each participant was instructed to point to the clearer picture.

Using a round-robin procedure, paired comparisons were made among the 3 processing conditions (EBW/RBW, NFC/RBW, EBW/NFC). On each trial, if the participant chose the condition that provided greater audibility (EBW over RBW, NFC over RBW, or EBW over NFC), the preference was recorded as 1; otherwise, it was recorded as 0. Participants completed a practice session for each of the music and speech conditions. The order of the three processing comparisons was counterbalanced across participants within a stimulus type. The stimuli were blocked by processing comparison, with the music condition run first, followed by the speech condition. Within a block, the order of the two types of processing (e.g., EBW/RBW or RBW/EBW) was randomized on each trial. Each participant listened to 2 trials of each of the 15 speech and 8 music passages for each processing comparison, for a total of 138 comparisons (2 trials × 3 processing comparisons × [15 speech + 8 music passages]).
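The trial structure and scoring rule can be sketched in Python. The sketch builds every trial for one stimulus type, blocked by processing comparison, randomizes which condition is presented first on each trial, and scores a choice as 1 when the listener picks the higher-audibility member of the pair. The counts (15 speech and 8 music passages, 2 trials, 3 comparisons) come from the text; the passage indexing, the example comparison order, and the function names are hypothetical.

```python
import random

# Pairs ordered as (higher-audibility condition, lower-audibility condition).
COMPARISONS = [("EBW", "RBW"), ("NFC", "RBW"), ("EBW", "NFC")]
N_PASSAGES = {"music": 8, "speech": 15}
N_REPEATS = 2


def build_trials(stimulus, comparison_order, rng):
    """Trials for one stimulus type, blocked by processing comparison.

    Within each block, the presentation order of the two conditions
    (e.g. EBW/RBW vs RBW/EBW) is randomized on every trial.
    """
    trials = []
    for comp_idx in comparison_order:
        high, low = COMPARISONS[comp_idx]
        for rep in range(N_REPEATS):
            for passage in range(N_PASSAGES[stimulus]):
                first, second = (high, low) if rng.random() < 0.5 else (low, high)
                trials.append({"stimulus": stimulus, "passage": passage, "rep": rep,
                               "pair": (high, low), "intervals": (first, second)})
    return trials


def score(trial, chosen_condition):
    """1 if the listener chose the higher-audibility condition, else 0."""
    return 1 if chosen_condition == trial["pair"][0] else 0


rng = random.Random(1)  # fixed seed so the example is reproducible
order = [2, 0, 1]       # one counterbalanced comparison order (hypothetical)
session = build_trials("music", order, rng) + build_trials("speech", order, rng)
print(len(session))  # 3 * 2 * (8 + 15) = 138
```

A participant's mean preference for a comparison is then the average of `score` over that comparison's trials.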

Analysis

Mean preference for each comparison was calculated for each participant by averaging preference across trials. The mean preference data were normally distributed. To determine whether preference differed with processing comparison, stimulus condition, or age, results were subjected to a mixed-model ANOVA in which the within-participant factors were processing comparison (EBW/RBW, NFC/RBW, EBW/NFC) and stimulus condition (music, speech) and the between-participants factor was age group (children, adults). Thus, the ANOVA indicated whether mean preference differed by processing comparison, stimulus condition, or age group. It did not indicate whether mean preference differed significantly from no preference (0.5) or how many participants showed a preference for one type of processing over another.

To determine which type of processing the participants preferred in each processing comparison, a priori one-sample t-tests were used to establish whether preference differed significantly from chance (0.5). The number of subjects exhibiting a preference was evaluated by assuming a binomial distribution (subjects could choose only one option per comparison). The standard deviation of the binomial distribution is $\sigma = \sqrt{pq/n}$, where p is the preference score, q is the preference for the other strategy (1 − p), and n is the number of comparisons. The confidence interval was calculated for each subject in each condition by multiplying this standard deviation by 1.96 (the 97.5th percentile of the standard normal distribution, which for this two-tailed test gives the 95% confidence interval), yielding $p \pm 1.96\sigma$. If the confidence interval did not overlap .5 (no preference), it was concluded that the subject had a reliable preference for one strategy over another.
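The per-listener criterion can be sketched in Python. It assumes each listener's score p is the proportion of n two-alternative trials on which the higher-audibility condition was chosen; with 2 trials of each passage, n would be 30 per comparison for speech and 16 for music. The function names and the three category labels are hypothetical.

```python
import math

Z_975 = 1.96  # 97.5th percentile of the standard normal -> two-tailed 95% CI


def preference_ci(p, n):
    """Normal-approximation 95% CI for a binomial preference proportion."""
    sd = math.sqrt(p * (1 - p) / n)
    return p - Z_975 * sd, p + Z_975 * sd


def classify(p, n):
    """'higher' or 'lower' if the CI excludes 0.5, otherwise 'none'."""
    lo, hi = preference_ci(p, n)
    if lo > 0.5:
        return "higher"  # reliably prefers the higher-audibility condition
    if hi < 0.5:
        return "lower"   # reliably prefers the other condition
    return "none"


print(classify(24 / 30, 30))  # speech, 24 of 30 trials: higher
print(classify(10 / 16, 16))  # music, 10 of 16 trials: none
```

Note that at p = 0 or p = 1 the normal approximation produces a zero-width interval, so such scores are always classified as a reliable preference.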

The consistency of listeners’ preferences across comparisons was assessed qualitatively by constructing bar plots and statistically by using Pearson correlations. The data also were analyzed to determine whether high-frequency hearing loss, quantified as the pure-tone average (PTA) of thresholds at 4, 6, and 8 kHz, predicted preference ratings; Pearson correlations determined whether PTA was associated with preference ratings.
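The hearing-loss predictor and the correlation can be sketched with the Python standard library. The high-frequency PTA is taken as the simple mean of the 4, 6, and 8 kHz thresholds, and Pearson's r is computed from its definition; the function names are hypothetical.

```python
import math


def hf_pta(thresholds_db_hl):
    """High-frequency PTA: mean of thresholds at 4, 6 and 8 kHz (dict keyed by Hz)."""
    return sum(thresholds_db_hl[f] for f in (4000, 6000, 8000)) / 3


def pearson_r(x, y):
    """Pearson product-moment correlation computed from its definition."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

On Python 3.10 or later, `statistics.correlation(x, y)` gives the same coefficient. Because higher PTA means poorer hearing, a negative r between PTA and preference for EBW indicates that listeners with better hearing chose EBW more often.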

RESULTS

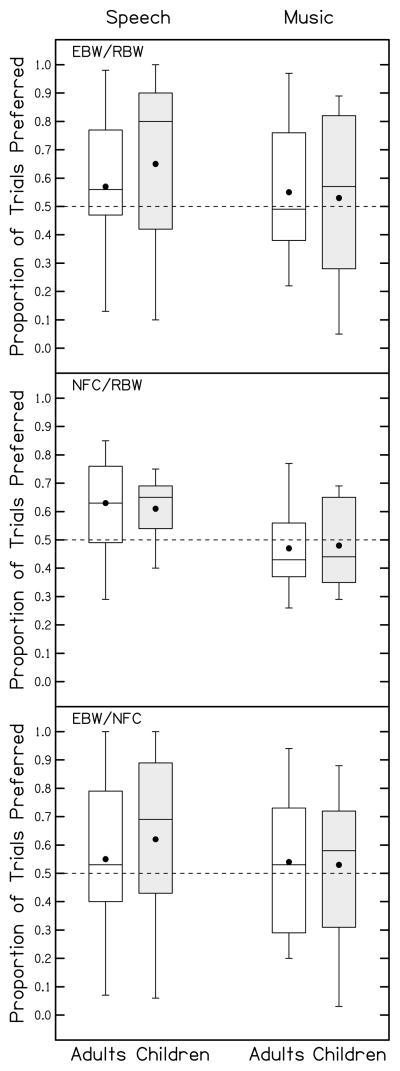

Figure 6 depicts the proportion of times participants preferred EBW to RBW, NFC to RBW, and EBW to NFC (top, middle and bottom panels, respectively). For the comparisons with EBW, a proportion greater than .5 means that participants preferred EBW more often than the other type of processing (RBW or NFC). Similarly, for the comparison of NFC to RBW, a proportion greater than .5 indicates that NFC was preferred more often than RBW. The between-participants factor of age group was not statistically significant, F(1, 30) = 0.091, p = .765, ηp² = .003. The main effect of stimulus condition was significant, F(1, 30) = 8.543, p = .007, ηp² = .222, with the mean proportion higher for speech (M = .61, SD = .26) than for music (M = .52, SD = .24). The main effect of processing comparison was not significant, F(2, 60) = 0.265, p = .768, ηp² = .009. None of the interactions were significant: stimulus condition × age group, F(1, 30) = 0.691, p = .412, ηp² = .023; processing comparison × age group, F(2, 60) = 0.107, p = .899, ηp² = .009; stimulus condition × processing comparison, F(2, 60) = 1.489, p = .234, ηp² = .047; and stimulus condition × age group × processing comparison, F(2, 60) = 0.879, p = .420, ηp² = .028. These results demonstrate that, on average, the strength of preference did not differ among the three comparisons (EBW vs. NFC, EBW vs. RBW, and NFC vs. RBW). Children and adults had equivalent preferences. For all three comparisons, participants preferred the higher-audibility condition more frequently for speech than for music.
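Each partial eta squared can be checked against its F ratio and degrees of freedom using the identity ηp² = (F · df_effect) / (F · df_effect + df_error). The short Python check below applies that identity to reported values; it is a consistency check, not a re-analysis of the data, and the function name is hypothetical.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


# Main effect of stimulus condition, F(1, 30) = 8.543
print(round(partial_eta_squared(8.543, 1, 30), 3))  # 0.222
```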

Figure 6.

Proportion of trials preferred for EBW vs. RBW (top panel), NFC vs. RBW (middle panel) and EBW vs. NFC (bottom panel). Speech is shown in the left column and music is shown in the right. The shaded boxes depict results for the adults and the unshaded boxes depict results for the children. EBW, extended bandwidth; RBW, restricted bandwidth; NFC, nonlinear frequency compression.

One-sample t-tests were used to determine whether participants significantly preferred EBW to NFC, EBW to RBW, and NFC to RBW for speech or music. Because the effect of age on preference was not significant, data were collapsed across age groups. For speech, participants significantly preferred EBW to RBW, t(31) = 2.106, p = .043, and NFC to RBW, t(31) = 4.694, p < .001, but not EBW to NFC, t(31) = 1.654, p = .108. For music, participants did not show a preference for EBW to RBW, t(31) = .862, p = .395, NFC to RBW, t(31) = −.904, p = .373, or EBW to NFC, t(31) = .671, p = .507. These results demonstrate that, for speech, participants preferred greater access to high-frequency information (EBW or NFC over RBW). Otherwise, participants did not demonstrate a preference.
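Each one-sample test compares the 32 per-participant mean preference scores with 0.5 (no preference), so df = 32 − 1 = 31. A standard-library sketch of the t statistic follows; the function name is hypothetical, and a p-value would additionally require the t distribution (for example, `scipy.stats.ttest_1samp(scores, 0.5)`).

```python
import math
import statistics


def one_sample_t(scores, mu0=0.5):
    """t statistic and degrees of freedom for H0: mean preference == mu0."""
    n = len(scores)
    se = statistics.stdev(scores) / math.sqrt(n)  # sample SD uses an n - 1 denominator
    return (statistics.mean(scores) - mu0) / se, n - 1
```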

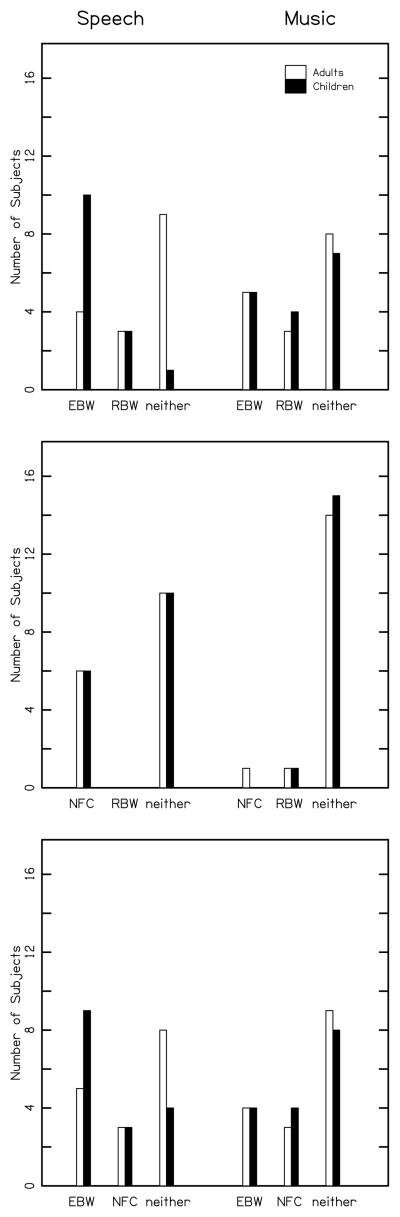

The number of participants who showed a preference is depicted in Figure 7. More participants showed a preference with speech than with music. Although more participants preferred greater access to high-frequency sounds (i.e., preferred EBW to RBW, NFC to RBW, and EBW to NFC), some participants showed the opposite preference or no preference.

Figure 7.

Number of participants with a preferred processing condition when comparing EBW vs. RBW (top panel), NFC vs. RBW (middle panel) and EBW vs. NFC (bottom panel). Speech is shown in the left column and music is shown in the right.

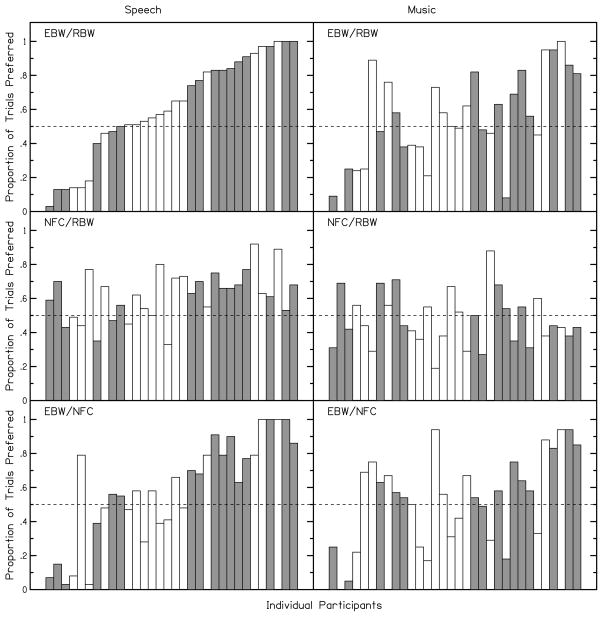

The consistency of preferences was assessed by constructing bar plots (Figure 8). The order of the participants is the same in each bar plot and was arranged based on their preference score for EBW compared to RBW for speech (upper left panel). Listeners who preferred EBW to RBW for speech also tended to prefer EBW to RBW for music, r(32) = .613, p < .001. These same listeners also tended to prefer EBW to NFC for both speech, r(32) = .864, p < .001, and music, r(32) = .489, p = .004. A listener’s preference for EBW to RBW for speech predicted their preference for NFC to RBW for speech, r(32) = .425, p = .015, but not for music, r(32) = −.013, p = .944.

Figure 8.

Preference ratings. Participants are rank ordered by preference for EBW vs. RBW with speech in the top-left panel. Results for speech and music conditions are shown in the left and right columns, respectively. The same order of participants was maintained for the remaining plotted comparisons. Unshaded bars represent adult data and shaded bars represent child data. EBW, extended bandwidth; RBW, restricted bandwidth; NFC, nonlinear frequency compression.

PTA was associated with preference for comparisons with music. Specifically, listeners with better (lower) PTA preferred EBW to RBW, r(32) = −.411, p = .019, and EBW to NFC, r(32) = −.410, p = .020, whereas listeners with poorer PTA preferred NFC to RBW, r(32) = .357, p = .045. PTA did not predict preference for speech (−.209 ≤ r ≤ −.147, .250 ≤ p ≤ .421). These results demonstrate that 1) listeners were reasonably consistent in their preference for (or against) audibility of the high frequencies with EBW and 2) degree of high-frequency hearing loss was associated with preference for music.

DISCUSSION

Participants preferred EBW and NFC to RBW for speech but otherwise did not show clear preferences overall. This is consistent with the hypothesis that participants would prefer the condition with the widest bandwidth. The present findings are also compatible with previous studies of adults with hearing loss, which found that listeners prefer EBW to RBW (Ricketts et al, 2008; Füllgrabe et al, 2010) when they have sufficient audibility of the signal. The results of this study are also consistent with previous research finding that listeners with better hearing in the high frequencies, as measured by the slope of the audiogram, were more likely to prefer EBW to RBW (Ricketts et al, 2008; Moore et al, 2011). Previous work also showed that preference for EBW over RBW can increase for some participants when gain is decreased, with the caveat that audibility must be maintained (Moore et al, 2011). This suggests that participants in this study might have expressed a stronger preference for EBW if less gain than that prescribed by DSL had been used.

In the present study, listeners also showed a slight preference for NFC over RBW, despite the potential for spectral distortion caused by NFC. This is consistent with other studies of preference and speech perception that selected the minimum NFC settings necessary to achieve audibility (Glista et al, 2009; Wolfe et al, 2010, 2011; Glista et al, 2012). Studies that found a preference for RBW over NFC (Simpson et al, 2006; Parsa et al, 2013; Souza et al, 2013) did not quantify audibility of the compressed portion. They may therefore have selected NFC settings that did not increase audibility, or stronger NFC settings (lower start frequencies, higher compression ratios) that added spectral distortion without providing more audibility than weaker settings. Using speech recognition instead of preference as the outcome measure, Souza et al. (2013) found that listeners with higher (poorer) PTA were more likely to show improved speech recognition with NFC. Together, the findings of the current study and Souza et al. suggest that listeners with greater high-frequency hearing loss are more likely to benefit from NFC.

Preference between EBW and NFC has not been reported previously in the literature. It was expected that the capacity for additional distortion by NFC would cause listeners to prefer EBW to NFC. Instead, this study found that listeners expressed equivalent preference for EBW and NFC. Listeners with less hearing loss, as measured by lower PTA, were more likely to prefer EBW to NFC. This, combined with the findings of Souza et al. (2013), suggests the increased distortion caused by NFC is offset by the increased audibility for listeners with greater hearing loss.

We also found that more listeners expressed a preference for EBW over RBW for speech than for music. This finding contrasts with other work in this area, which found that listeners with normal hearing preferred a wider bandwidth for music (17 kHz) than for speech (11 kHz; Moore & Tan, 2003). If the present study had used a bandwidth wider than 10 kHz, the participants might have preferred a wider bandwidth for music than for speech. It is also possible that the different instructions for the two stimulus types (“best sounding” for music and “clearest” for speech) influenced the results. Füllgrabe et al. (2010) found that their listeners with hearing loss preferred RBW to EBW for pleasantness but preferred EBW for clarity. The direction of our finding is consistent with that of Füllgrabe et al., in that ratings of clarity for speech were higher than ratings of “best sounding” for music. Because the music condition was followed by the speech condition, these results cannot inform us about whether presentation order influenced the findings. If there was an order effect, the listeners would be expected to have been more likely to show a preference as they gained more experience. Instead, listeners expressed a preference for speech, which was the first stimulus condition, but not for music, which was the second stimulus condition. The present study also used a wide range of music samples, which may have increased across-participant variability in preference for music, relative to speech, beyond what a more limited set of music samples would have produced.

Age did not influence preference in this study, despite research indicating that children require greater access to high-frequency sounds than adults to maintain equivalent speech understanding (Stelmachowicz et al, 2001). In this study, consistent with clinical practice, children were fit using a prescriptive method that resulted in a greater SL than the method used to fit the adults. Providing the children with the higher SL that they require may have resulted in equivalent judgments for the two age groups. Our findings contrast with those of Glista et al. (2009), who found that children preferred NFC to RBW more often than adults did, despite also using separate DSL prescriptive approaches for children and adults. One possible explanation for this difference is that the present study maintained similar bandwidth with NFC across the two age groups, whereas the bandwidth is unknown for the listeners who participated in the Glista et al. study. Our results suggest that differences in preference between adults and children might be eliminated when children are provided with greater audibility and similar bandwidth.

Although our data indicate that listeners, on average, prefer greater bandwidth with minimal distortion, these results may not generalize to all hearing-aid users. First, the pattern of results might not extend to listeners with greater degrees of hearing loss, who would require lower start frequencies and higher frequency-compression ratios or who cannot realistically achieve high-frequency audibility with EBW. Second, this study asked listeners to select the conditions that they felt were clearer for speech or sounded better for music. Measuring other dimensions, such as “sharpness,” might have revealed differences in sound quality that were not captured here. Lastly, because strength of preference was not measured, it is not known how strongly our participants preferred EBW and NFC to RBW.

Clinically, the type of processing chosen should be the one that achieves the widest audible bandwidth while minimizing spectral distortion. Specifically, EBW should be used if it improves the audible bandwidth compared to RBW. Otherwise, NFC could be used to improve audibility of higher frequencies. When fitting NFC, steps should be taken to ensure audibility of the compressed portion while minimizing potential spectral distortion. As in the present study, this can be achieved by selecting a higher start frequency or lower compression ratio when doing so does not reduce the audible bandwidth with NFC. However, the large variability observed in the present study suggests that some listeners have no preference or may prefer a narrower bandwidth (see Figures 6 and 7). Some of this variability was explained by PTA, suggesting that listeners with greater hearing loss are more likely to prefer NFC and those with less hearing loss are more likely to prefer EBW.

Conclusions

These data suggest a preference for access to high-frequency sounds, based on the observation that listeners preferred EBW and NFC to RBW. This preference occurred for speech but not for music. For music, participants with less high-frequency hearing loss were more likely to prefer EBW, while participants with greater high-frequency hearing loss were more likely to prefer NFC. One limitation of this study is that the listeners were restricted to those with mild-to-severe hearing loss. Therefore, the results should not be generalized to individuals with greater degrees of hearing loss, who may require different NFC settings than those used in this study.

Acknowledgments

Support: NIH: R01 DC04300, P30 DC-4662, T32 DC00013, F32 DC012709, R03 DC012635

The authors would like to thank Kanae Nishi for providing scripts used during stimulus development and discussions on the study design, Geoffrey Utter and Jody Spalding for running participants, Prasanna Aryal for computer programming support, Nick Smith and Kendra Schmid for assistance with the statistical analysis, and Brianna Byllesby and Evan Cordrey for creating the figures.

Abbreviations

- APHAB: Abbreviated Profile of Hearing Aid Benefit
- ANOVA: analysis of variance
- DSL: Desired Sensation Level
- EBW: extended bandwidth
- HL: hearing level
- KEMAR: Knowles Electronic Manikin for Acoustic Research
- NFC: nonlinear frequency compression
- PTA: pure-tone average
- RBW: restricted bandwidth
- SL: sensation level
- SPL: sound pressure level
- WDRC: wide dynamic range compression

Footnotes

If a conductive component was present when testing with TDH-50P earphones, thresholds were re-measured with insert earphones to ensure that it was not related to a collapsed ear canal.

Levels (in dB), rather than power, were averaged to more closely mimic what would be done in the clinic, where the clinician may effectively average levels when “eyeballing” the match to target.

This work was presented at the 2012 Annual Meeting of the American Auditory Society, Scottsdale, AZ.

References

- Alexander JM. Individual variability in recognition of frequency-lowered speech. Semin Hear. 2013;34:86–109.

- Alexander JM, Masterson K. Effects of WDRC release time and number of channels on output SNR and speech recognition. Ear Hear. doi: 10.1097/AUD.0000000000000115. (submitted)

- ANSI. ANSI S3.5-1997. 1997. American National Standard Methods for Calculation of the Speech Intelligibility Index.

- ANSI. ANSI S1.11-2004. 2004. Specification for Octave-Band and Fractional-Octave-Band Analog and Digital Filters.

- ANSI. ANSI S3.22-2009. 2009. American National Standard Specification of Hearing Aid Characteristics.

- Bentler RA, Pavlovic CV. Transfer functions and correction factors used in hearing aid evaluation and research. Ear Hear. 1989;10:58–63. doi: 10.1097/00003446-198902000-00010.

- Burkhard MD, Sachs RM. Anthropometric manikin for acoustic research. J Acoust Soc Am. 1975;58:214–222. doi: 10.1121/1.380648.

- Ching TYC, Dillon H, Byrne D. Speech recognition of hearing-impaired listeners: predictions from audibility and the limited role of high-frequency amplification. J Acoust Soc Am. 1998;103:1128–1140. doi: 10.1121/1.421224.

- Ching TYC, Dillon H, Katsch R, Byrne D. Maximizing effective audibility in hearing aid fitting. Ear Hear. 2001;22:212–224. doi: 10.1097/00003446-200106000-00005.

- Cox RM, Alexander GC. The abbreviated profile of hearing aid benefit. Ear Hear. 1995;16:176–186. doi: 10.1097/00003446-199504000-00005.

- Dillon H. Hearing Aids. New York: Thieme; 2001.

- Füllgrabe C, Baer T, Stone MA, Moore BCJ. Preliminary evaluation of a method for fitting hearing aids with extended bandwidth. Int J Audiol. 2010;49:741–753. doi: 10.3109/14992027.2010.495084.

- Glista D, Scollie S, Bagatto M, Seewald R, Parsa V, Johnson A. Evaluation of nonlinear frequency compression: clinical outcomes. Int J Audiol. 2009;48:632–644. doi: 10.1080/14992020902971349.

- Glista D, Scollie S, Sulkers J. Perceptual acclimatization post nonlinear frequency compression hearing aid fitting in older children. J Speech Lang Hear Res. 2012;55:1765–1787. doi: 10.1044/1092-4388(2012/11-0163).

- Harford ER, Fox J. The use of high-pass amplification for broad-frequency sensorineural hearing loss. Audiology. 1978;17:1–26.

- Hogan CA, Turner CW. High-frequency audibility: benefits for hearing-impaired listeners. J Acoust Soc Am. 1998;104:432–441. doi: 10.1121/1.423247.

- Hornsby BWY, Johnson EE, Picou E. Effects of degree and configuration of hearing loss on the contribution of high- and low-frequency speech information to bilateral speech understanding. Ear Hear. 2011;32:543–555. doi: 10.1097/AUD.0b013e31820e5028.

- Humes LE. Dimensions of hearing aid outcome. J Am Acad Audiol. 1999;10:26–39.

- Kates JM. Digital Hearing Aids. San Diego: Plural Publishing; 2008.

- Kochkin S. MarkeTrak VII: customer satisfaction with hearing instruments in the digital age. Hear J. 2005;58:30–39.

- McCreery R, Brennan M, Hoover B, Kopun J, Stelmachowicz PG. Maximizing audibility and speech recognition with nonlinear frequency compression by estimating audible bandwidth. Ear Hear. 2013;34:e24–27. doi: 10.1097/AUD.0b013e31826d0beb.

- Moore BCJ, Füllgrabe C, Stone MA. Determination of preferred parameters for multichannel compression using individually fitted simulated hearing aids and paired comparisons. Ear Hear. 2011;32:556–568. doi: 10.1097/AUD.0b013e31820b5f4c.

- Moore BCJ, Stone MA, Füllgrabe C, Glasberg BR, Puria S. Spectro-temporal characteristics of speech at high frequencies, and the potential for restoration of audibility to people with mild-to-moderate hearing loss. Ear Hear. 2008;29:907–922. doi: 10.1097/AUD.0b013e31818246f6.

- Moore BCJ, Tan CT. Perceived naturalness of spectrally distorted speech and music. J Acoust Soc Am. 2003;114:408–419. doi: 10.1121/1.1577552.

- Parsa V, Scollie S, Glista D, Seelisch A. Nonlinear frequency compression: effects on sound quality ratings of speech and music. Trends Amplif. 2013;17:54–68. doi: 10.1177/1084713813480856.

- Pavlovic CV. Derivation of primary parameters and procedures for use in speech intelligibility predictions. J Acoust Soc Am. 1987;82:413–422. doi: 10.1121/1.395442.

- Pittman AL, Stelmachowicz PG. Perception of voiceless fricatives by normal-hearing and hearing-impaired children and adults. J Speech Lang Hear Res. 2000;43:1389–1401. doi: 10.1044/jslhr.4306.1389.

- Plyler PN, Hill AB, Trine TD. The effects of expansion on the objective and subjective performance of hearing instrument users. J Am Acad Audiol. 2005;16:101–113. doi: 10.3766/jaaa.16.2.5.

- Ricketts TA, Dittberner AB, Johnson EE. High-frequency amplification and sound quality in listeners with normal through moderate hearing loss. J Speech Lang Hear Res. 2008;51:160–172. doi: 10.1044/1092-4388(2008/012).

- Rosengard PS, Payton KL, Braida LD. Effect of slow-acting wide dynamic range compression on measures of intelligibility and ratings of speech quality in simulated-loss listeners. J Speech Lang Hear Res. 2005;48:702–714. doi: 10.1044/1092-4388(2005/048).

- Scollie S, Seewald R, Cornelisse L, Moodie S, Bagatto M, Laurnagaray D, Beaulac S, Pumford J. The desired sensation level multistage input/output algorithm. Trends Amplif. 2005;9:159–197. doi: 10.1177/108471380500900403.

- Simpson A, Hersbach AA, McDermott HJ. Improvements in speech perception with an experimental nonlinear frequency compression hearing device. Int J Audiol. 2005;44:281–292. doi: 10.1080/14992020500060636.

- Simpson A, Hersbach AA, McDermott HJ. Frequency-compression outcomes in listeners with steeply sloping audiograms. Int J Audiol. 2006;45:619–629. doi: 10.1080/14992020600825508.

- SoundRecover Fitting Assistant [Computer Program]. Version 1.10. West Lafayette, IN: Joshua Alexander; 2010.

- Souza PE, Arehart KH, Kates JM, Croghan NBH, Gehani N. Exploring the limits of frequency lowering. J Speech Lang Hear Res. 2013;56:1349–1363. doi: 10.1044/1092-4388(2013/12-0151).

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Effect of stimulus bandwidth on the perception of /s/ in normal- and hearing-impaired children and adults. J Acoust Soc Am. 2001;110:2183–2190. doi: 10.1121/1.1400757.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE, Moeller MP. The importance of high-frequency audibility in the speech and language development of children with hearing loss. Arch Otolaryngol Head Neck Surg. 2004;130:556–562. doi: 10.1001/archotol.130.5.556.

- Turner CW, Cummings KJ. Speech audibility for listeners with high-frequency hearing loss. Am J Audiol. 1999;8:47–56. doi: 10.1044/1059-0889(1999/002).

- Van Buuren RA, Festen JM, Houtgast T. Compression and expansion of the temporal envelope: evaluation of speech intelligibility and sound quality. J Acoust Soc Am. 1999;105:2903–2913. doi: 10.1121/1.426943.

- Wolfe J, John A, Schafer E, Nyffeler M, Boretzki M, Caraway T. Evaluation of nonlinear frequency compression for school-age children with moderate to moderately severe hearing loss. J Am Acad Audiol. 2010;21:618–628. doi: 10.3766/jaaa.21.10.2.

- Wolfe J, John A, Schafer E, Nyffeler M, Boretzki M, Caraway T, Hudson M. Long-term effects of non-linear frequency compression for children with moderate hearing loss. Int J Audiol. 2011;50:396–404. doi: 10.3109/14992027.2010.551788.
