Abstract

The manipulation of objects commonly involves motion between object and skin. In this review, we discuss the neural basis of tactile motion perception and its similarities with its visual counterpart. First, much like in vision, the perception of tactile motion relies on the processing of spatiotemporal patterns of activation across populations of sensory receptors. Second, many neurons in primary somatosensory cortex are highly sensitive to motion direction, and the response properties of these neurons draw strong analogies to those of direction-selective neurons in visual cortex. Third, tactile speed may be encoded in the strength of the response of cutaneous mechanoreceptive afferents and of a subpopulation of speed-sensitive neurons in cortex. However, both afferent and cortical responses are strongly dependent on texture as well, so it is unclear how texture and speed signals are disambiguated. Fourth, motion signals from multiple fingers must often be integrated during the exploration of objects, but the way these signals are combined is complex and remains to be elucidated. Finally, visual and tactile motion perception interact powerfully, an integration process that is likely mediated by visual association cortex.

Keywords: aperture problem, cortex, integration, peripheral nerve, somatosensory cortex

Object manipulation commonly involves motion between the object and the skin surface, as does haptic exploration. Indeed, to make out the shape of an object, we follow its contours; to make out its texture, we run our fingers across its surface (Lederman and Klatzky 1987). Perceptual experiments with human subjects demonstrate that information about both the direction (Dreyer et al. 1978; Essick et al. 1988; Gardner and Sklar 1994; Keyson and Houtsma 1995; Norrsell and Olausson 1992) and the speed (Bensmaia et al. 2006b; Depeault et al. 2008; Essick et al. 1988) of objects scanned across the skin is perceptually available. In this review, we discuss the neural mechanisms that mediate our ability to perceive tactile motion.

Motion Signal at the Somatosensory Periphery

The processing of tactile motion is thought to rely on two sources of information: the spatial-temporal pattern of activation of mechanoreceptive afferents (caused by the displacement of object contours over time) and skin stretch caused by friction between skin and object (Olausson and Norrsell 1993). At the somatosensory periphery, slowly adapting type 1 (SA1) afferents, which densely innervate the glabrous skin (Johansson and Vallbo 1979) and convey the most acute spatial information (Bensmaia et al. 2006a; Friedman et al. 2002; Phillips and Johnson 1981), play an important role in the spatial-temporal component of tactile motion processing. Rapidly adapting (RA) afferents (Kirman 1974) also play a major role in this aspect of motion processing, as evidenced by the fact that clear percepts of tactile motion can be induced when vibrating pins are sequentially activated with an optical-to-tactile converter display (OPTACON), which activates RA but not SA1 afferents (Gardner and Palmer 1989a, 1989b). In contrast, slowly adapting type 2 (SA2) afferents encode skin stretch and carry a motion signal by virtue of the fact that motion in different directions stretches the skin in different ways (Olausson et al. 2000).

SA1 and RA afferents encode stimulus motion primarily isomorphically. That is, the spatial-temporal pattern of skin indentation is processed in a pixelwise manner by populations of afferents (Pei et al. 2010; Whitsel et al. 1972): As a stimulus moves across the skin, it sequentially activates adjacent populations of afferent fibers. While individual SA1 and RA afferents exhibit little sensitivity to motion direction in the absence of tangential forces, they do exhibit some modulation by motion direction when such forces are present (Birznieks et al. 2010; Wheat et al. 2010), as do SA2 fibers.

Direction Signal in Primary Somatosensory Cortex

In contrast to their peripheral counterparts, the responses of neurons in primary somatosensory cortex (S1) exhibit strong tuning for the direction of tactile motion. Indeed, the strength of the response of neurons in areas 3b and 1 to brushes swept across the skin is strongly dependent on the direction of these sweeps (Werner and Whitsel 1970; Whitsel et al. 1972). This direction tuning extends to many different types of tactile motion stimuli, including bars, edges, rotating cylinders, and blunt probes (Costanzo and Gardner 1980; Ruiz et al. 1995; Warren et al. 1986), and the direction preference is consistent across scanning speeds (Ruiz et al. 1995). Modeling work suggests that the direction selectivity of S1 responses might be produced by excitatory and inhibitory subregions that respond asymmetrically to scanning directions (Gardner and Costanzo 1980).

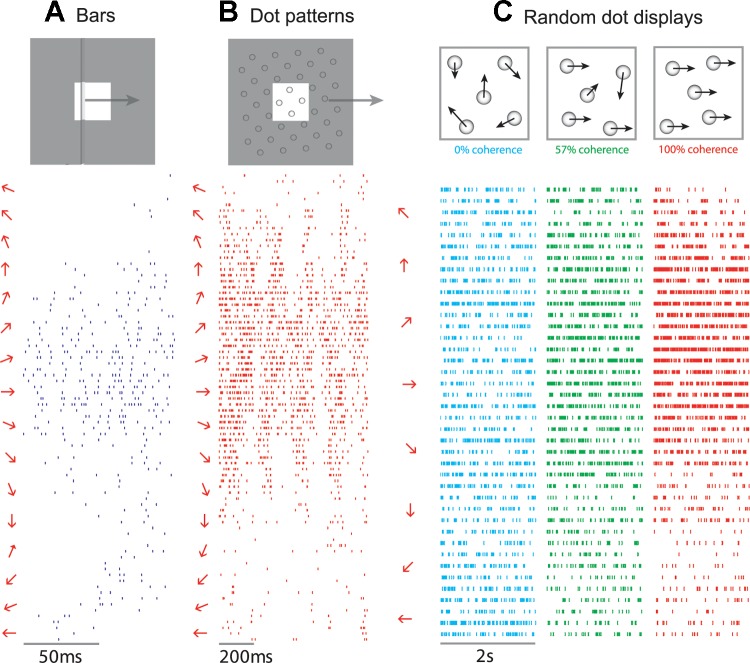

To elucidate the mechanisms of motion selectivity, a series of paired neurophysiological and psychophysical experiments was carried out in which S1 responses to a variety of motion stimuli (bars, Braille-like dot patterns, and random dot displays) were recorded from rhesus macaques and judgments of the perceived direction of these same stimuli were obtained from human observers. Stimuli were delivered with a stimulator consisting of a 20 × 20 array of probes that could be indented in spatiotemporal sequences that produced verisimilar percepts of motion (Killebrew et al. 2007). Moving bars and dots were used to reproduce the types of stimuli that have been used in other studies of tactile motion processing. The random dot displays were the tactile analogs of stimuli that have been widely used in studies of visual motion (Britten et al. 1993; Newsome and Pare 1988), in which the strength of the motion signal can be systematically adjusted by varying the degree to which the dots move in a common direction.

Experiments with scanned bars revealed that direction tuning emerges at the earliest stage of cortical processing, namely, in area 3b (Pei et al. 2010). Indeed, 30% of neurons in this area are significantly direction selective. Direction tuning is even more prevalent in areas 1 (57%) and 2 (54%). Similarly, responses to scanned dot patterns exhibit significant direction tuning in areas 3b (44%), 1 (59%), and 2 (18%), as do those to random dot displays at 100% coherence (19%, 42%, and 15% in areas 3b, 1, and 2, respectively). Finally, direction tuning increases systematically with motion coherence, particularly in area 1.

Overall, area 1 exhibits the strongest and most consistent motion tuning of the three modules of S1 that respond to tactile stimulation. Importantly, the preferred direction (PD) of a subpopulation of neurons in area 1 (30%) is consistent across stimulus types (Fig. 1) and remains stable across stimulus amplitudes and scanning speeds. The sensitivity of these neurons to motion direction accounts for the ability of human subjects to discriminate motion direction across stimulus types (Pei et al. 2010).

Fig. 1.

Responses of a neuron in area 1 to bars (A), dot patterns (B), and random dot displays (C) presented to the monkey's fingertip. Stimuli are illustrated as insets at top: for bars and dot patterns, the white square shows the 1-cm × 1-cm area over which the stimuli were presented and the gray region illustrates the stimulus extending outside of the stimulation area. To the left of each raster is the direction of motion of the stimulus. This neuron produced the most robust response to stimuli moving at ∼20°, regardless of whether the stimuli were bars, dot patterns, or random dot displays. For random dot displays, direction tuning strengthened as motion coherence increased. Adapted from Pei et al. (2010).
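The preferred direction of a neuron like the one in Fig. 1 can be estimated from its tuning curve with a vector sum, and its consistency across stimulus types can be checked by comparing those estimates. The Python sketch below does this on hypothetical, noise-free von Mises-shaped tuning curves (not recorded data); the function names, the sampling of 8 directions, and the 30° consistency tolerance are illustrative assumptions rather than the procedure used by Pei et al. (2010).

```python
import math


def preferred_direction(directions_deg, rates):
    """Vector-sum estimate of preferred direction, in degrees within [0, 360).

    Each firing rate is treated as the length of a vector pointing in the
    corresponding motion direction; the angle of the resultant is the PD.
    """
    x = sum(r * math.cos(math.radians(d)) for d, r in zip(directions_deg, rates))
    y = sum(r * math.sin(math.radians(d)) for d, r in zip(directions_deg, rates))
    return math.degrees(math.atan2(y, x)) % 360.0


def angular_difference(a_deg, b_deg):
    """Smallest absolute difference between two directions, in degrees (0 to 180)."""
    d = abs(a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def tuning_curve(directions_deg, pd_deg, gain, baseline, kappa=2.0):
    """Hypothetical von Mises-shaped tuning curve (impulses/s), for illustration only."""
    return [baseline + gain * math.exp(kappa * (math.cos(math.radians(d - pd_deg)) - 1.0))
            for d in directions_deg]


directions = list(range(0, 360, 45))  # 8 equally spaced motion directions
# Hypothetical responses of one area 1 neuron to three stimulus types (not recorded data).
responses = {
    "bars": tuning_curve(directions, pd_deg=20, gain=40, baseline=5),
    "dot patterns": tuning_curve(directions, pd_deg=20, gain=25, baseline=8),
    "random dots": tuning_curve(directions, pd_deg=20, gain=10, baseline=3),
}
pds = {name: preferred_direction(directions, rates) for name, rates in responses.items()}
max_spread = max(angular_difference(pds[a], pds[b]) for a in pds for b in pds)
is_consistent = max_spread < 30.0  # arbitrary illustrative tolerance

for name, pd in pds.items():
    print(f"{name}: PD = {pd:.1f} deg")
print("PD consistent across stimulus types:", is_consistent)
```

Because the baseline rate contributes equally in every direction, it cancels in the vector sum when the tested directions are equally spaced, so differences in overall response strength across stimulus types do not by themselves shift the estimated PD.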

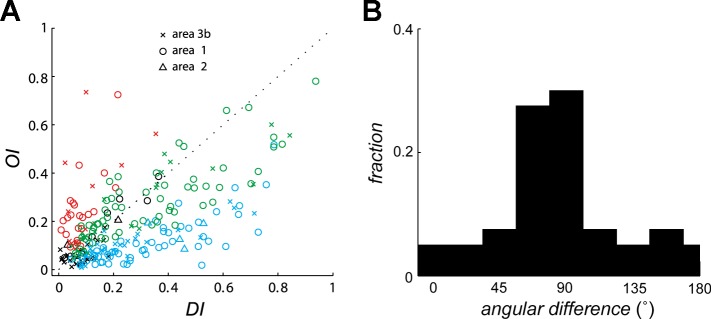

Relationship Between Direction and Orientation Tuning in Somatosensory Cortex

As is the case with motion-sensitive neurons in primary visual (Hubel and Wiesel 1968) and middle temporal (MT) cortices (Albright 1984), motion-sensitive neurons in S1 are sensitive to both direction of motion and stimulus orientation. Indeed, these neurons exhibit a wide variety of tuning properties to direction and orientation, ranging from pure orientation selectivity with no direction tuning (15%) to pure direction tuning with no orientation tuning (36%), with many neurons exhibiting both orientation and direction tuning (32%) (Fig. 2A). As is the case for MT neurons (Albright 1984), the PD tends to be perpendicular to the preferred orientation (Fig. 2B).

Fig. 2.

Orientation sensitivity and direction sensitivity in populations of neurons in S1. A: orientation selectivity index (OI) vs. direction selectivity index (DI) for neurons in areas 3b, 1, and 2. OI and DI measure the tuning strength to orientation and direction, respectively, each of which ranges from 0 to 1. Red symbols, neurons that yielded significant OIs; blue symbols, neurons that yielded significant DIs; green symbols, neurons for which both indexes were significant; black symbols, neurons for which neither index was significant. B: distribution of the angular difference between the preferred direction (measured from dot patterns) and the preferred orientation (measured from indented bars) of neurons in area 1. For orientation, 90° and 270° denote bars parallel to the long axis of the finger and 0° and 180° denote bars orthogonal to the long axis of the finger. Most neurons that are sensitive to both orientation and direction of motion respond best to contours moving in a direction perpendicular to their orientation. Adapted from Pei et al. (2010).
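Fig. 2 summarizes tuning strength with an orientation index (OI) and a direction selectivity index (DI), each ranging from 0 to 1. One common way to compute such indices is the normalized vector strength of the tuning curve, using doubled angles for orientation so that a bar at θ and one at θ + 180° count as the same orientation. The Python sketch below assumes that definition (Pei et al. 2010 may have computed the indices differently, e.g., after baseline subtraction) and uses hypothetical responses; it also measures the angle between the preferred direction and the preferred orientation axis, the quantity plotted in Fig. 2B.

```python
import cmath
import math


def selectivity_index(angles_deg, rates, harmonic):
    """Vector-strength selectivity index in [0, 1] and the preferred angle.

    harmonic=1: direction tuning (angles span 0-360 deg).
    harmonic=2: orientation tuning (angles are doubled so that 0 and 180 deg,
    which describe the same bar orientation, map onto the same vector).
    Rates must be non-negative with a positive sum.
    """
    total = sum(rates)
    resultant = sum(r * cmath.exp(1j * harmonic * math.radians(a))
                    for a, r in zip(angles_deg, rates))
    index = abs(resultant) / total
    preferred = (math.degrees(cmath.phase(resultant)) / harmonic) % (360.0 / harmonic)
    return index, preferred


def direction_orientation_offset(pd_deg, po_deg):
    """Angle (0 to 90 deg) between a preferred direction and a preferred orientation axis."""
    d = abs(pd_deg - po_deg) % 180.0
    return min(d, 180.0 - d)


# Hypothetical responses (impulses/s), not recorded data.
directions = list(range(0, 360, 45))          # scanned dot patterns
dir_rates = [5 + 30 * math.exp(2 * (math.cos(math.radians(d - 120)) - 1)) for d in directions]
orientations = [22.5 * k for k in range(8)]   # indented bars, 0 to 157.5 deg
ori_rates = [4 + 20 * math.exp(2 * (math.cos(math.radians(2 * (o - 30))) - 1))
             for o in orientations]

di, pd = selectivity_index(directions, dir_rates, harmonic=1)
oi, po = selectivity_index(orientations, ori_rates, harmonic=2)
offset = direction_orientation_offset(pd, po)
print(f"DI = {di:.2f}, PD = {pd:.1f} deg; OI = {oi:.2f}, PO = {po:.1f} deg; "
      f"offset = {offset:.1f} deg")
```

In this made-up example the neuron prefers motion toward 120° and bars oriented at 30°, so the offset is about 90°, the perpendicular relationship that dominates Fig. 2B.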

Motion Integration in Primary Somatosensory Cortex

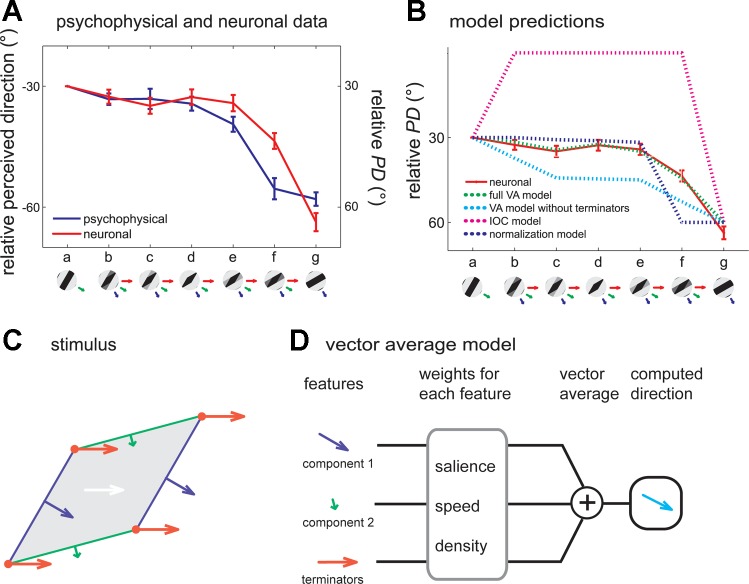

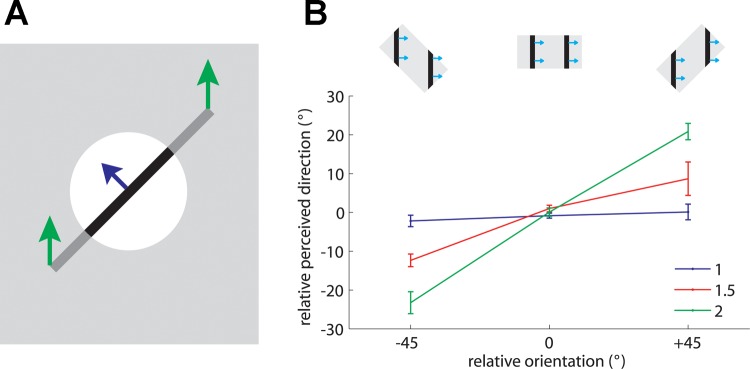

A fundamental question in motion processing is how local motion information is integrated to yield a global motion percept. Indeed, the velocity (speed and direction) of one-dimensional edges is ambiguous because information about the motion component parallel to their orientation is not available, an ambiguity known as the aperture problem (Wallach 1935) (Fig. 3A). Hence, to recover the veridical direction of an object, it is necessary to integrate motion information emanating from stimulus contours that differ in their orientations (Rust et al. 2006; Simoncelli and Heeger 1998) or rely on motion signals emanating from terminators (i.e., corners and intersections) whose velocity is unambiguous (Pack et al. 2003; Shimojo et al. 1989). Neurons at the lowest processing levels are subject to the aperture problem, as these neurons have spatially restricted receptive fields and thus only have access to local motion information. Motion integration involves resolving this ambiguity so that neurons at higher processing levels can extract and encode the global direction of a moving object.

Fig. 3.

A: geometry of the aperture problem. Green arrows show the actual motion of the bar; blue arrow shows the motion of the bar as observed through the circular aperture (white circle). When an edge is observed through a circular aperture, the only information available about its direction of motion is along the axis perpendicular to its orientation. In other words, no time-varying information is conveyed along the parallel axis. Adapted from Pei et al. (2008) [Copyright (2008) National Academy of Sciences, U.S.A.]. B: effect of aspect ratio on the barber pole effect. The relative orientation is the angular difference between the aperture's orientation and the direction orthogonal to the grating's orientation (as shown in insets). The magnitude of the barber pole illusion is measured by the relative perceived direction defined by the degree to which the perceived direction is biased from the direction orthogonal to the grating's orientation. The strength of the barber pole illusion increases as the aspect ratio increases. Error bars denote means ± SE. Adapted from Pei et al. (2008) [Copyright (2008) National Academy of Sciences, U.S.A.].
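The geometry in Fig. 3A can be stated compactly: a detector viewing an edge through a small aperture recovers only the projection of the edge's velocity onto the edge normal. The minimal Python sketch below (the function name is ours) shows two different true velocities that yield identical local signals.

```python
import math


def visible_velocity(velocity, edge_orientation_deg):
    """Velocity of a straight edge as seen through a small aperture.

    Only the component along the edge normal changes what the aperture sees;
    the component parallel to the edge is invisible (the aperture problem).
    """
    theta = math.radians(edge_orientation_deg + 90.0)  # unit normal to the edge
    nx, ny = math.cos(theta), math.sin(theta)
    s = velocity[0] * nx + velocity[1] * ny             # signed normal speed
    return (s * nx, s * ny)


# Two different true velocities of a vertical edge (orientation 90 deg) ...
v_a = visible_velocity((1.0, 0.0), 90.0)
v_b = visible_velocity((1.0, 0.7), 90.0)
# ... both come out as approximately (1.0, 0.0): the upward component of the
# second velocity, which runs along the edge, is lost.
```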

Three models have been hypothesized to account for visual motion integration: the intersection of constraints (IOC) model (Adelson and Movshon 1982; Fennema 1979; Movshon et al. 1983; Simoncelli and Heeger 1998), the vector average (VA) model (Movshon et al. 1983; Treue et al. 2000; Weiss et al. 2002), and the terminator model (Pack et al. 2003, 2004; Shimojo et al. 1989).

In visual motion research, superimposed gratings, which yield so-called plaids, have been used to contrast the different theories of motion integration. According to the IOC model, the motion of a grating is consistent with a family of velocities that lies along a line (in velocity space) parallel to the orientation of the grating. When two moving gratings are superimposed to yield a plaid, the velocity of the resulting plaid is given by the intersection of the two constraint lines, one provided by each component grating (Adelson and Movshon 1982). According to the VA model, the perceived direction is simply predicted by a weighted average of the normal velocities of the two component gratings. Finally, according to the terminator model, the perceived direction is predominantly determined by the direction of motion of the terminators. In other words, the terminator motion vector is heavily weighted in the computation of the global direction of motion (Pack et al. 2001, 2003, 2004; Shimojo et al. 1989).
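The IOC and VA rules can each be written in a few lines. In the Python sketch below, each component grating is described by the direction and speed of its normal motion; IOC solves the two constraint equations v · n_i = s_i jointly, whereas VA averages the normal velocity vectors (optionally with weights). The function names and the equal-speed example plaid are illustrative assumptions.

```python
import math


def unit(deg):
    r = math.radians(deg)
    return math.cos(r), math.sin(r)


def direction_and_speed(v):
    return math.degrees(math.atan2(v[1], v[0])), math.hypot(v[0], v[1])


def intersection_of_constraints(components):
    """IOC velocity for two gratings.

    components: two (normal_direction_deg, normal_speed) pairs. Each grating only
    constrains the plaid velocity v to satisfy v . n_i = s_i (a line in velocity
    space); the IOC solution is where the two lines cross (Cramer's rule).
    """
    (d1, s1), (d2, s2) = components
    (a, b), (c, d) = unit(d1), unit(d2)
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("parallel gratings: constraint lines do not intersect")
    return (s1 * d - b * s2) / det, (a * s2 - c * s1) / det


def vector_average(components, weights=None):
    """Weighted average of the components' normal velocity vectors."""
    weights = weights or [1.0] * len(components)
    total = sum(weights)
    vx = sum(w * s * unit(dd)[0] for w, (dd, s) in zip(weights, components)) / total
    vy = sum(w * s * unit(dd)[1] for w, (dd, s) in zip(weights, components)) / total
    return vx, vy


# Hypothetical type 1 plaid: equal-speed components at -60 and +60 deg (120 deg apart).
plaid = [(-60.0, 1.0), (60.0, 1.0)]
ioc_dir, ioc_speed = direction_and_speed(intersection_of_constraints(plaid))
va_dir, va_speed = direction_and_speed(vector_average(plaid))
print(f"IOC: {ioc_dir:.1f} deg at speed {ioc_speed:.2f}; "
      f"VA: {va_dir:.1f} deg at speed {va_speed:.2f}")
```

For this symmetric plaid both rules predict motion toward 0° and differ only in speed (2.0 vs. 0.5 in these units), which is why plaids whose components move at different speeds are needed to tell the direction predictions apart.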

While the mechanisms of visual motion integration have received much experimental attention, their tactile counterparts have only recently begun to be characterized, with stimuli whose perceptual properties are well-established in vision, including barber poles (Guilford 1929; Wallach 1935, 1996) and superimposed gratings (plaids) (Adelson and Movshon 1982).

The Barber Pole Illusion

In the barber pole paradigm, a moving grating is presented through a rectangular aperture. The perceived direction of motion of a visual barber pole is biased toward the long axis of the aperture, suggesting that the terminators (the intersections between the grating and the aperture) influence the perceived direction of motion (Kooi 1993; Wallach 1935). Specifically, perceived direction is biased toward the long axis of the aperture because the long sides of the aperture carry more terminators than the short sides, and terminators generally convey unambiguous information about stimulus velocity. As is the case with visual barber poles, the orientation of the aperture in tactile barber poles exerts a strong effect on the perceived direction of motion of the grating (Fig. 3B), and the effect grows stronger as the aspect ratio increases (Bicchi et al. 2008; Pei et al. 2008). Furthermore, the mean angular deviations between the perceived direction and the direction orthogonal to grating orientation are close to those predicted by the terminator-average model proposed by Pack et al. (2004). The perceived direction of barber poles thus seems to be determined by the motion of the terminators.
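The terminator account of the barber pole can be illustrated with a deliberately simplified calculation. In the Python sketch below, terminators on each side of the aperture slide along that side, and each side's terminator velocity is weighted by how many grating bars it crosses. This counting-and-averaging scheme is our assumption for illustration; it is not the exact terminator-average model of Pack et al. (2004) or the analysis of Pei et al. (2008), and it ignores skin mechanics. It reproduces only the qualitative trend (a bias toward the long axis that grows with aspect ratio), not the measured magnitudes in Fig. 3B.

```python
import math


def unit(deg):
    r = math.radians(deg)
    return math.cos(r), math.sin(r)


def barber_pole_direction(grating_normal_deg, aspect_ratio, normal_speed=1.0):
    """Toy terminator-average prediction of barber pole direction (degrees).

    The aperture's long axis lies along 0 deg (length = aspect_ratio) and its
    short axis along 90 deg (length = 1); opposite sides behave identically,
    so each pair is counted once. A terminator on a side with unit direction u
    slides along u at speed normal_speed / (u . n), so that its motion along
    the grating normal n matches the grating's. Each side's terminators are
    weighted by how many bars the side crosses, proportional to
    length * |u . n| (the bar spacing is a common factor and drops out).
    """
    n = unit(grating_normal_deg)
    sx = sy = 0.0
    for edge_deg, length in ((0.0, float(aspect_ratio)), (90.0, 1.0)):
        u = unit(edge_deg)
        u_dot_n = u[0] * n[0] + u[1] * n[1]
        if abs(u_dot_n) < 1e-12:
            continue  # side parallel to the bars: it carries no terminators
        count = length * abs(u_dot_n)
        speed_along_edge = normal_speed / u_dot_n  # signed
        sx += count * speed_along_edge * u[0]
        sy += count * speed_along_edge * u[1]
    return math.degrees(math.atan2(sy, sx))


# Grating drifting toward 45 deg (bars oriented at 135 deg) behind apertures of growing aspect ratio.
for ratio in (1, 2, 4):
    d = barber_pole_direction(45.0, ratio)
    print(f"aspect ratio {ratio}: predicted {d:.1f} deg, bias toward long axis {45.0 - d:.1f} deg")
```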

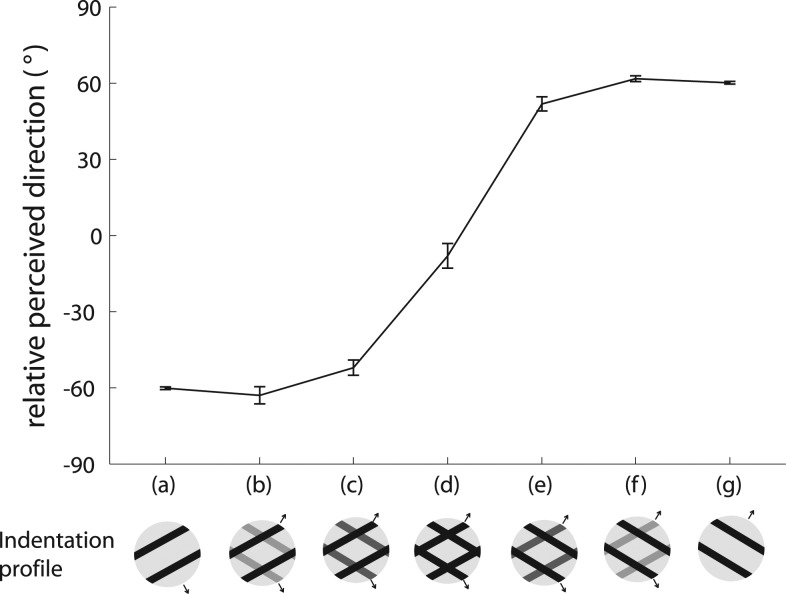

Type 1 Plaids

Another stimulus that has proven fruitful in elucidating the neural mechanisms of visual motion integration is the plaid, constructed by superimposing two periodic component gratings (Adelson and Movshon 1982). As is the case in vision, tactile gratings are perceived as moving in the direction orthogonal to their orientation (Fig. 4, stimuli a and g). When a simple grating gradually morphs into a plaid pattern consisting of two gratings moving at the same speed in directions separated by 120°, the perceived direction of motion shifts dramatically to the bisector of the two directions of motion (Fig. 4, stimulus d). That is, when one component is dominant, the perceived direction is dominated by its motion; when both gratings are of equal amplitude, the perceived direction lies midway between the two directions of motion.

Fig. 4.

Perceived direction, averaged across subjects, of 2 superimposed moving gratings that formed a plaid. When 1 component was dominant, the perceived direction of the stimulus was determined by the direction of that component; when the intensities of the 2 gratings were similar, the stimulus was perceived as a plaid moving in the direction that bisected the 2 components' directions. Relative perceived direction = perceived direction − plaid direction. Adapted from Pei et al. (2008) [Copyright (2008) National Academy of Sciences, U.S.A.].

Direction-selective neurons in S1 exhibit a wide variety of responses to plaids. At one extreme, “component” neurons yield bimodal patterns of responses to plaid stimuli, with the two modes separated by 120° (Fig. 5A). Such neurons thus respond whenever one of the component gratings moves in their PD. At the other extreme, “pattern” neurons exhibit unimodal response patterns to plaids: These neurons are most responsive when the plaid (or a pure grating) moves in their PD, a tuning property analogous to that found in “pattern” neurons in MT (Movshon et al. 1983; Movshon and Newsome 1996). While most S1 neurons exhibited responses intermediate between “component” and “pattern,” pure pattern neurons were only found in area 1 (Fig. 5B), which also contained component neurons. Overall, the direction selectivity of component and pattern neurons in S1 tended to be much weaker than that of their counterparts in V1 or MT. Again, the responses of S1 neurons can account for the perceived direction of the grating/plaid morphs (Fig. 5C), suggesting that these neurons belong to the processing chain that culminates in a tactile percept of global motion.

Fig. 5.

Integration properties of neurons in S1 measured based on neural responses to type 1 plaids. A: responses of a typical component neuron (c) and pattern neuron (p). The angular coordinate denotes the direction of motion of the stimulus (in °) and the radial coordinate the response (in impulses/s). Blue and green traces are the neural responses to component gratings, and red trace shows the neural response to plaids. Component predictions (dashed cyan traces) are constructed by summing the responses to the component gratings and correcting for baseline firing rate. Pattern predictions (dashed magenta traces) are tuning curves measured using simple gratings. B: each neuron's integration properties are indexed by characterizing the degree to which each of its responses to plaids matches the corresponding responses of an idealized component or pattern neuron. Zc and Zp are the Fisher's z-transforms of the partial correlation between the measured responses and the responses of an idealized component neuron and pattern neuron, respectively (Movshon and Newsome 1996). Data points in top left quadrant indicate pattern tuning, whereas points in bottom right quadrant indicate component tuning. Open symbols correspond to the example neurons shown in A. C: preferred direction (PD) of a population of pattern neurons in area 1 (red trace) and direction perceived by human observers (green trace, reproduced from Fig. 4). Adapted from Pei et al. (2011) with permission.
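The pattern and component indices in Fig. 5B rest on partial correlations between a neuron's plaid responses and the two predictions described in panel A. The Python sketch below follows that recipe on hypothetical tuning curves. The von Mises tuning shape, the small deterministic perturbation standing in for response noise, and the scaling of the Fisher z-scores by sqrt(n − 3) (a common convention) are our assumptions, not details reported in this review; the function names are illustrative.

```python
import math


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def _clip(r, limit=0.999999):
    # Keep correlations strictly inside (-1, 1) so the formulas stay finite.
    return max(-limit, min(limit, r))


def pattern_component_z(plaid, pattern_pred, component_pred):
    """Return (Zp, Zc): Fisher z-transformed partial correlations."""
    rp = _clip(pearson(plaid, pattern_pred))
    rc = _clip(pearson(plaid, component_pred))
    rpc = _clip(pearson(pattern_pred, component_pred))
    partial_p = (rp - rc * rpc) / math.sqrt((1 - rc ** 2) * (1 - rpc ** 2))
    partial_c = (rc - rp * rpc) / math.sqrt((1 - rp ** 2) * (1 - rpc ** 2))
    scale = math.sqrt(len(plaid) - 3)  # divide by the z standard error, 1/sqrt(n - 3)
    return (math.atanh(_clip(partial_p)) * scale,
            math.atanh(_clip(partial_c)) * scale)


directions = list(range(0, 360, 15))  # 24 plaid directions
BASELINE, GAIN, KAPPA = 5.0, 30.0, 2.0


def grating_response(d):
    """Hypothetical grating tuning curve with PD = 0 deg (impulses/s)."""
    return BASELINE + GAIN * math.exp(KAPPA * (math.cos(math.radians(d)) - 1.0))


# Pattern prediction: the grating tuning curve itself.
pattern_pred = [grating_response(d) for d in directions]
# Component prediction: a plaid moving toward d contains gratings moving toward
# d - 60 and d + 60; sum those responses and remove one copy of the baseline.
component_pred = [grating_response(d - 60) + grating_response(d + 60) - BASELINE
                  for d in directions]
wobble = [math.sin(math.radians(5 * d)) for d in directions]  # small perturbation
pattern_cell = [p + w for p, w in zip(pattern_pred, wobble)]
component_cell = [c + w for c, w in zip(component_pred, wobble)]

for label, cell in (("pattern-like", pattern_cell), ("component-like", component_cell)):
    zp, zc = pattern_component_z(cell, pattern_pred, component_pred)
    print(f"{label} cell: Zp = {zp:.2f}, Zc = {zc:.2f}")
```

A pattern-like cell lands in the upper left of a Zc/Zp plot like Fig. 5B (large Zp, Zc near 0) and a component-like cell in the lower right; partialling out the correlation between the two predictions is what keeps broadly tuned cells from scoring high on both.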

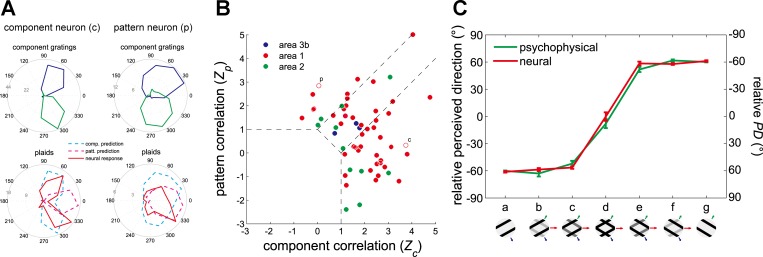

Models of Motion Integration: VA and IOC

One shortcoming of type 1 plaids is that the VA and IOC models make similar predictions for them. One way to disambiguate the two models is to assess the perception of, and neuronal responses to, type 2 plaids, in which the two component gratings move at different speeds and both component directions lie on the same side of the plaid's veridical direction. As a result, the veridical direction of these patterns falls outside the range delimited by the component directions and so cannot be predicted by a vector average of the component motions. Again, the responses of neurons in area 1 to type 2 plaids account for the perceived direction of these stimuli (Fig. 6A), further supporting the conclusion that these neurons play a major role in tactile motion perception. Importantly, the perceived motion direction of tactile type 2 plaids deviates from the predictions made by the IOC model (Fig. 6B, magenta trace) and instead seems to be determined predominantly by the direction of the faster component, with some influence from the slower one.

Fig. 6.

A: motion direction (blue trace) perceived by human observers and mean PD (red trace) of all significantly direction-tuned area 1 neurons for each grating/plaid morph (with component gratings at −30° and −60° resulting in a type 2 plaid with a veridical direction of 0°). Twenty-six of forty-five area 1 neurons were sensitive to the direction of motion of the type 2 plaid. B: comparison of the PDs (as shown in A) and those predicted by 4 candidate models for type 2 plaids. The full vector average (VA) model (green dashed traces) that includes motion signals from both local contours and terminators yielded the best predictions, better than a VA model that includes only the directions of local contours (dashed cyan traces). The intersection of constraints (IOC) model (dashed magenta traces) failed to explain the neuronal responses to type 2 plaids. C: breakdown of the stimulus features that are the inputs to the VA model. Green and blue contours and arrows correspond to the component edges and their respective directions of motion; red vertices and arrows correspond to the terminators and their direction of motion. D: computation of the stimulus direction based on the velocity of its components. Each feature is weighted according to its density (length of the edges, density of the terminators), its amplitude, and its speed. Weighted unit direction vectors are then summed to compute the perceived direction. Adapted from Pei et al. (2011) with permission.
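The type 2 plaid in panel A can be checked with a short calculation, assuming for illustration that the plaid translates rigidly at speed V toward its veridical direction of 0°. Each component's normal speed is the projection of that velocity onto the component's direction of motion:

$$
s_1 = V\cos 30^\circ = \tfrac{\sqrt{3}}{2}\,V \approx 0.87\,V \quad (\text{component at } -30^\circ), \qquad
s_2 = V\cos 60^\circ = 0.5\,V \quad (\text{component at } -60^\circ),
$$

so the −30° component is the faster one. The IOC solution is the velocity $\mathbf{v}$ satisfying $\mathbf{v}\cdot\hat{\mathbf{n}}_i = s_i$ for both components, which returns $\mathbf{v} = (V, 0)$, i.e., 0°. Summing (or averaging) the two normal velocities instead gives

$$
s_1\hat{\mathbf{n}}_1 + s_2\hat{\mathbf{n}}_2
= V\left(\cos^2 30^\circ + \cos^2 60^\circ,\; -\cos 30^\circ\sin 30^\circ - \cos 60^\circ\sin 60^\circ\right)
= V\left(1,\; -\tfrac{\sqrt{3}}{2}\right),
$$

whose direction, $-\arctan(\sqrt{3}/2) \approx -40.9^\circ$, lies between the two component directions and therefore cannot reach the veridical 0°.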

A Model of Tactile Motion Integration

One possibility is that the neuronal PD and resulting perceived direction of motion are determined by a VA mechanism, with the faster component weighted more heavily than the slower component. However, predictions from a VA model with fixed weights for the components (Fig. 6B, cyan trace) or with weights derived from a population normalization model (Fig. 6B, blue trace) were not borne out in the data (Busse et al. 2009; Pei et al. 2011). Thus the PD and perceived direction cannot be computed based solely on the components' directions.

Another possibility is that signals from the terminators also contribute to the computation of motion direction. It turns out that the perceived directions and neuronal responses can be predicted by a model that includes the motion vectors of the two components and the terminators, each weighted according to their speeds and amplitudes (Fig. 6, B–D). First, the patterns of strain produced in the skin by the various gratings and plaids are computed with a model of skin mechanics (Sripati et al. 2006), which accounts for the fact that certain stimulus features (e.g., corners) are enhanced whereas others are suppressed simply because of skin mechanics. Second, edges and terminators are identified from each strain profile. Third, perceived direction (or neuronal PD) is computed as a vector average of edge and terminator velocities. The only free parameter in the model is the weight assigned to the terminators relative to the edges (which were ascribed a weight of 1); all other factors, including speed and amplitude weighting, are computed from the stimulus and from known response properties of mechanoreceptive afferents. As expected, pattern neurons tended to yield higher terminator weights than their component counterparts. The model predicted that the perceived direction would shift gradually as the stimulus morphed from a pure component grating to the plaid pattern, a prediction that was borne out in the data.
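The final vector-average step (Fig. 6D) can be sketched as follows. This is a simplified illustration, not the published model: the skin-mechanics stage is skipped, the features are supplied by hand, combining density, amplitude, and speed by multiplication is our assumption, and the terminators are assumed to move with the rigidly translating plaid. The `Feature` class and `predicted_direction` function are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Feature:
    direction_deg: float  # direction of motion of the edge or terminator
    density: float        # edge length or terminator density (arbitrary units)
    amplitude: float      # indentation amplitude (arbitrary units)
    speed: float          # speed of the feature
    is_terminator: bool


def predicted_direction(features, terminator_weight):
    """Direction (deg) of the sum of weighted unit direction vectors.

    Edges carry a relative weight of 1; terminators carry terminator_weight,
    the single free parameter.
    """
    x = y = 0.0
    for f in features:
        w = f.density * f.amplitude * f.speed
        if f.is_terminator:
            w *= terminator_weight
        x += w * math.cos(math.radians(f.direction_deg))
        y += w * math.sin(math.radians(f.direction_deg))
    return math.degrees(math.atan2(y, x))


# Hypothetical type 2 plaid modeled on Fig. 6A: edges moving toward -30 and -60 deg
# at their normal speeds, terminators moving with the whole pattern toward 0 deg.
type2_plaid = [
    Feature(-30.0, 1.0, 1.0, math.cos(math.radians(30)), False),
    Feature(-60.0, 1.0, 1.0, math.cos(math.radians(60)), False),
    Feature(0.0, 1.0, 1.0, 1.0, True),
]
for tw in (0.0, 1.0, 10.0):
    print(f"terminator weight {tw}: {predicted_direction(type2_plaid, tw):.1f} deg")
```

With a terminator weight of 0 the sketch reduces to an edge-only vector average (about −40.9° for this plaid); raising the weight pulls the prediction toward the veridical 0°, in line with the observation that pattern neurons tended to yield higher terminator weights.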

That terminators contribute to motion perception implies that a population of local motion detectors in somatosensory cortex encodes terminator motion, analogously to end-stopped neurons in primary visual cortex (Gilbert 1977; Orban et al. 1979; Pack et al. 2003; Rose 1979; Yazdanbakhsh and Livingstone 2006). Indeed, in the visual system, it is hypothesized that terminator information is first computed in the primary visual cortex and then propagates to MT cortex (Pack et al. 2001). As of the writing of this review, end-stopped neurons have not been found or indeed sought out in S1.

In conclusion, then, tactile motion integration bears many similarities to its visual counterpart. A subpopulation of neurons in area 1 implements a terminator-based vector average mechanism to compute global tactile motion and can account for our ability to discern the direction of tactile motion.

Neural Representation of Tactile Speed

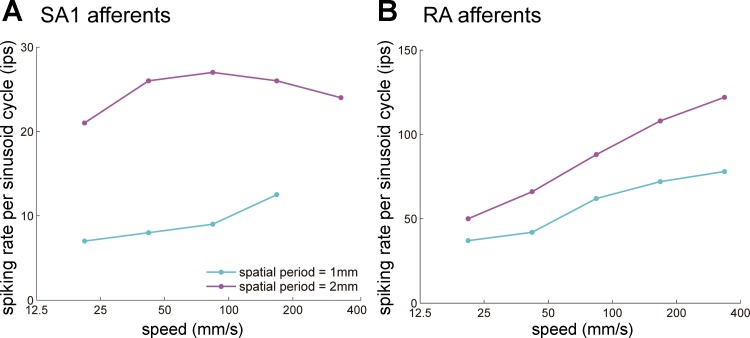

Information about the speed of objects scanned across the skin is perceptually available and relatively precise (Bensmaia et al. 2006b; Essick et al. 1988) unless the moving surface is completely smooth or comprises only sparse features (Depeault et al. 2008). The firing rates of mechanoreceptive afferents tend to increase as the speed of objects scanned across the skin increases (Cascio and Sathian 2001; Goodwin and Morley 1987; Pei et al. 2011) (Fig. 7). However, afferent responses are also highly dependent on surface texture (Connor and Johnson 1992; Weber et al. 2013), so it remains to be conclusively elucidated how information about texture and speed can be independently extracted from the peripheral signal. This disambiguation most likely involves integrating signals from multiple cutaneous submodalities (SA1, RA, and Pacinian) (Cascio and Sathian 2001; Goodwin et al. 1989; Sathian et al. 1989). In cortex, the confound between speed and texture is also observed (Depeault et al. 2013). However, a subpopulation of neurons in areas 1 and 2 increases its firing rate with increasing speed in a way that may account for tactile speed perception (Depeault et al. 2013), suggesting that the ambiguity of the peripheral speed signal may be resolved in S1.
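To make the disambiguation problem concrete, the toy Python sketch below shows how a texture-dependent gain can make one afferent class ambiguous about speed, and how combining two classes can in principle cancel that gain. Every number, the linear speed dependence, and the assumption that texture scales both classes by the same factor are hypothetical choices for illustration; they are not fitted to the afferent data cited above, and real afferent responses depend on texture and speed in more complex ways.

```python
import math

# Toy assumptions (NOT measured values): each texture scales SA1 and RA firing
# by the same texture-specific gain; SA1 rates ignore speed, RA rates grow
# linearly with speed.
TEXTURE_GAIN = {"coarse": 3.0, "fine": 1.5}  # hypothetical textures and gains
RA_PER_MM_S = 0.8                            # hypothetical RA slope per unit gain


def afferent_rates(texture, speed_mm_s):
    """Toy (RA, SA1) firing rates for a texture scanned at a given speed."""
    gain = TEXTURE_GAIN[texture]
    return RA_PER_MM_S * gain * speed_mm_s, gain


def decode_speed(ra_rate, sa1_rate):
    """Speed estimate from the RA/SA1 ratio, which cancels the shared texture gain."""
    return ra_rate / (RA_PER_MM_S * sa1_rate)


ra1, sa1_1 = afferent_rates("coarse", 40.0)
ra2, sa1_2 = afferent_rates("fine", 80.0)
# Same RA rate for two different speeds: RA firing alone cannot signal speed here.
print(ra1, ra2)
print(decode_speed(ra1, sa1_1), decode_speed(ra2, sa1_2))
```

The ratio recovers speed only because the toy assumes a shared texture gain; if texture affected the two classes differently, the ratio would again be confounded, which is one reason the question remains open.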

Fig. 7.

Coding of speed in cutaneous mechanoreceptive afferents: responses of slowly adapting type 1 (SA1; A) and rapidly adapting (RA; B) afferents to 2 gratings scanned across their receptive fields at different speeds. RA responses increase monotonically with increases in speed, whereas SA1 responses do not. Adapted from Goodwin and Morley (1987) with permission.

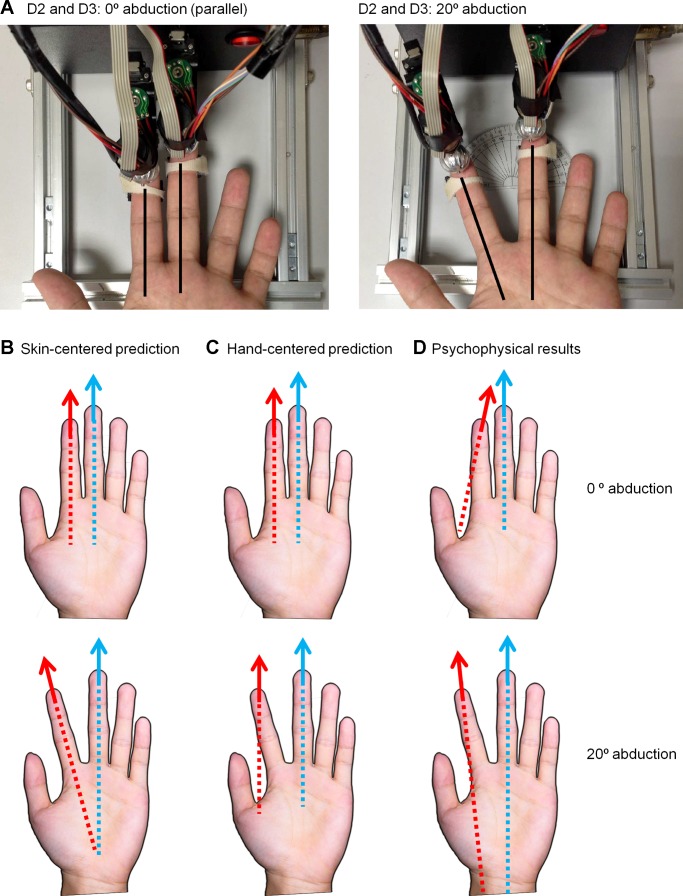

Multidigit Motion Integration

When grasping or manipulating an object, multiple fingers come into contact with it, so cutaneous signals originating from multiple fingerpads must be integrated with proprioceptive information about hand conformation to yield a holistic object percept (Berryman et al. 2006; Davidson 1972; Lederman and Klatzky 1987; Pont et al. 1997). When the object moves across the skin, motion signals from each contact point must also be combined to perceive how the object is moving with respect to the hand. This is a nontrivial process because tactile motion signals must be interpreted in the context of the position of the contact points with respect to one another. Rinker and Craig found that motion delivered to the thumb and index fingers was perceived as unitary only when the digits were parallel (Rinker and Craig 1994), demonstrating that hand conformation influences the interpretation of cutaneous motion signals. However, the reference frame within which multidigit motion information is integrated is unknown: Neither a skin-centered (somatotopic) reference frame nor a hand-centered (Cartesian) one can account for judgments of relative direction when motion stimuli are applied to different fingers (Fig. 8) (Pei et al. 2014).

Fig. 8.

Frame of reference for multidigit motion integration. A: subjects judged the parallelism of motion stimuli that were presented to the left index (D2) and middle (D3) fingers with a rotating motion stimulator. The angle between the 2 fingers was either 0° or 20°. B and C: predicted perceived direction of the motion stimulus delivered on D2 (red arrow) that would be perceived as parallel to the motion stimulus delivered to D3 (blue arrow) based on skin-centered (B) or hand-centered (C) reference frames. D: in human psychophysical experiments, the perceived direction depended on relative finger orientations but did not conform to the predictions made by either the skin-centered or hand-centered reference frames.
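The two reference-frame predictions in Fig. 8, B and C, amount to a simple coordinate transformation. The Python sketch below encodes them under assumed conventions (angles in degrees, counterclockwise, each skin direction measured from its finger's long axis, finger axes given in hand coordinates); the function name and sign convention are ours, not taken from Pei et al. (2014).

```python
def parallel_direction_on_d2(d3_skin_deg, d2_axis_deg, d3_axis_deg, frame):
    """Predicted D2 stimulus direction (D2 skin coordinates, degrees) that
    should feel parallel to a D3 stimulus at d3_skin_deg (D3 skin coordinates).

    Finger axes are given in hand coordinates. Under a skin-centered frame,
    directions match relative to each finger; under a hand-centered frame,
    they match after rotating each into hand coordinates.
    """
    if frame == "skin":
        return d3_skin_deg % 360.0
    if frame == "hand":
        # hand direction = skin direction + finger axis angle, for each finger
        return (d3_skin_deg + d3_axis_deg - d2_axis_deg) % 360.0
    raise ValueError("frame must be 'skin' or 'hand'")


for spread in (0.0, 20.0):  # D3 axis rotated relative to D2 by 0 or 20 deg
    skin = parallel_direction_on_d2(90.0, 0.0, spread, "skin")
    hand = parallel_direction_on_d2(90.0, 0.0, spread, "hand")
    print(f"finger angle {spread:.0f} deg: skin-centered {skin:.0f}, hand-centered {hand:.0f}")
```

With the fingers parallel, the two frames make identical predictions, so only the 20° condition can distinguish them; the psychophysical data in panel D match neither.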

The neural mechanisms underlying multidigit motion integration are also unknown. Apparent motion delivered to multiple digits was found to induce increased activity in areas 3b and 1, but this activity was not modulated by direction of motion (Friedman et al. 2011). Coherent motion across digits activates the anterior intraparietal and inferior parietal areas in the contralateral hemisphere (Kitada et al. 2003), but the functional significance of this activation remains to be elucidated. Multidigit motion integration might also occur in secondary somatosensory cortex, as neurons in this area exhibit both proprioceptive and cutaneous responses and have receptive fields that span multiple digits (Fitzgerald et al. 2006a, 2006b), but this has not been tested.

Integrating Visual and Tactile Motion

We are often confronted with information about the environment that originates from multiple modalities, and multisensory percepts are more reliable than unisensory ones (Beauchamp 2005; Ernst and Bulthoff 2004; Stein and Stanford 2008). Motion information is conveyed both visually and tactually, and these two streams of motion information interact reciprocally. For example, the perceived speed of a moving tactile grating is influenced by the concurrent presentation of a moving visual grating (Bensmaia et al. 2006b), and the perceived direction of a visual motion stimulus can be biased by a concurrently presented tactile motion stimulus (Pei et al. 2013). Saccade direction influences the perceived direction of tactile motion (Carter et al. 2008), motion aftereffects transfer between touch and vision (Konkle et al. 2009), and ambiguous visual motion can be disambiguated by touch (Blake et al. 2004; Pei et al. 2013).

Tactile motion elicits blood oxygen level-dependent (BOLD) responses in extrastriate visual areas, including MT (Blake et al. 2004; Hagen et al. 2002), and vibrotactile stimuli elicit BOLD responses in medial superior temporal cortex (Beauchamp et al. 2007) in humans, suggesting that these areas might be involved in visuotactile motion integration. Furthermore, disruptive transcranial magnetic stimulation (TMS) of area MT interferes with tactile speed perception (Basso et al. 2012). However, visuotactile motion integration can also be suppressed by disruptive TMS delivered to the junction between postcentral parietal and anterior intraparietal areas, indicating that posterior parietal cortex may also be involved in the integration process (Pasalar et al. 2010).

While MT and posterior parietal cortex both respond to visual and tactile motion, regions of visual and tactile activation only partially overlap, indicating that the neural substrates underlying visual and tactile processing are not identical (Summers et al. 2009). Interestingly, the portion of area MT that responds to touch differs in sighted and blind subjects, suggesting that visual experience somehow guides the formation of tactile representations in visual association cortex (Ricciardi et al. 2007). Finally, the activation of visual association cortex by tactile motion may be in part driven by visual imagery, as has been shown to be the case for the activation of these areas by tactile shape (Lacey et al. 2010; Lacey and Sathian 2011).

Conclusions

Tactile motion is computed based on a spatiotemporal pattern of skin deformations as well as shear forces exerted on the skin. Motion signals in peripheral afferents culminate in motion signals in cortex that are invariant with respect to other stimulus properties. Indeed, a majority of cutaneous neurons in S1 are direction selective, reflecting the importance of tactile motion in object manipulation and exploration. A subpopulation of S1 neurons integrates local motion signals emanating from object contours and terminators to achieve a percept of global motion based on a vector average mechanism. The remarkable parallels between visual and tactile motion processing suggest that these two perceptual systems have evolved similar mechanisms to extract motion information from a two-dimensional sheet of receptors in the retina and in the skin. The similarity in these motion representations is reflected in powerful interactions between visual and tactile motion perception, the neural basis of which has yet to be definitively identified.

GRANTS

This work was supported by National Institute of Neurological Disorders and Stroke Grant NS-082865, National Science Foundation Grant IOS-1150209, National Health Research Institutes (NHRI) Grant NHRI-EX101-10113EC, and Chang Gung Memorial Foundation Grants CMRPG350963, CMRPG590023, and CMRPG3C0461.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

Author contributions: Y.-C.P. prepared figures; Y.-C.P. and S.J.B. drafted manuscript; Y.-C.P. and S.J.B. edited and revised manuscript; Y.-C.P. and S.J.B. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank Tsung-Chi Lee for his artwork.

REFERENCES

- Adelson EH, Movshon JA. Phenomenal coherence of moving visual patterns. Nature 300: 523–525, 1982.

- Albright TD. Direction and orientation selectivity of neurons in visual area MT of the macaque. J Neurophysiol 52: 1106–1130, 1984.

- Basso D, Pavan A, Ricciardi E, Fagioli S, Vecchi T, Miniussi C, Pietrini P. Touching motion: rTMS on the human middle temporal complex interferes with tactile speed perception. Brain Topogr 25: 389–398, 2012.

- Beauchamp MS. See me, hear me, touch me: multisensory integration in lateral occipital-temporal cortex. Curr Opin Neurobiol 15: 145–153, 2005.

- Beauchamp MS, Yasar NE, Kishan N, Ro T. Human MST but not MT responds to tactile stimulation. J Neurosci 27: 8261–8267, 2007.

- Bensmaia SJ, Craig JC, Yoshioka T, Johnson KO. SA1 and RA afferent responses to static and vibrating gratings. J Neurophysiol 95: 1771–1782, 2006a.

- Bensmaia SJ, Killebrew JH, Craig JC. Influence of visual motion on tactile motion perception. J Neurophysiol 96: 1625–1637, 2006b.

- Berryman LJ, Yau JM, Hsiao SS. Representation of object size in the somatosensory system. J Neurophysiol 96: 27–39, 2006.

- Bicchi A, Scilingo EP, Ricciardi E, Pietrini P. Tactile flow explains haptic counterparts of common visual illusions. Brain Res Bull 75: 737–741, 2008.

- Birznieks I, Wheat HE, Redmond SJ, Salo LM, Lovell NH, Goodwin AW. Encoding of tangential torque in responses of tactile afferent fibres innervating the fingerpad of the monkey. J Physiol 588: 1057–1072, 2010.

- Blake R, Sobel KV, James TW. Neural synergy between kinetic vision and touch. Psychol Sci 15: 397–402, 2004.

- Britten KH, Shadlen MN, Newsome WT, Movshon JA. Responses of neurons in macaque MT to stochastic motion signals. Vis Neurosci 10: 1157–1169, 1993.

- Busse L, Wade AR, Carandini M. Representation of concurrent stimuli by population activity in visual cortex. Neuron 64: 931–942, 2009.

- Carter O, Konkle T, Wang Q, Hayward V, Moore C. Tactile rivalry demonstrated with an ambiguous apparent-motion quartet. Curr Biol 18: 1050–1054, 2008.

- Cascio CJ, Sathian K. Temporal cues contribute to tactile perception of roughness. J Neurosci 21: 5289–5296, 2001.

- Connor CE, Johnson KO. Neural coding of tactile texture: comparison of spatial and temporal mechanisms for roughness perception. J Neurosci 12: 3414–3426, 1992.

- Costanzo RM, Gardner EP. A quantitative analysis of responses of direction-sensitive neurons in somatosensory cortex of awake monkeys. J Neurophysiol 43: 1319–1341, 1980.

- Davidson PW. Haptic judgments of curvature by blind and sighted humans. J Exp Psychol 93: 43–55, 1972.

- Depeault A, Meftah EM, Chapman CE. Tactile speed scaling: contributions of time and space. J Neurophysiol 99: 1422–1434, 2008.

- Depeault A, Meftah EM, Chapman CE. Neuronal correlates of tactile speed in primary somatosensory cortex. J Neurophysiol 110: 1554–1566, 2013.

- Dreyer DA, Hollins M, Whitsel BL. Factors influencing cutaneous directional sensitivity. Sens Processes 2: 71–79, 1978.

- Ernst MO, Bulthoff HH. Merging the senses into a robust percept. Trends Cogn Sci 8: 162–169, 2004.

- Essick GK, Franzen O, Whitsel BL. Discrimination and scaling of velocity of stimulus motion across the skin. Somatosens Motor Res 6: 21–40, 1988.

- Fennema C, Thompson W. Velocity determination in scenes containing several moving objects. Computer Graphics Image Processing 9: 301–315, 1979.

- Fitzgerald PJ, Lane JW, Thakur PH, Hsiao SS. Receptive field (RF) properties of the macaque second somatosensory cortex: RF size, shape, and somatotopic organization. J Neurosci 26: 6485–6495, 2006a.

- Fitzgerald PJ, Lane JW, Thakur PH, Hsiao SS. Receptive field properties of the macaque second somatosensory cortex: representation of orientation on different finger pads. J Neurosci 26: 6473–6484, 2006b.

- Friedman RM, Dillenburger BC, Wang F, Avison MJ, Gore JC, Roe AW, Chen LM. Methods for fine scale functional imaging of tactile motion in human and nonhuman primates. Open Neuroimag J 5: 160–171, 2011.

- Friedman RM, Khalsa PS, Greenquist KW, LaMotte RH. Neural coding of the location and direction of a moving object by a spatially distributed population of mechanoreceptors. J Neurosci 22: 9556–9566, 2002.

- Gardner EP, Costanzo RM. Neuronal mechanisms underlying direction sensitivity of somatosensory cortical neurons in awake monkeys. J Neurophysiol 43: 1342–1354, 1980.

- Gardner EP, Palmer CI. Simulation of motion on the skin. I. Receptive fields and temporal frequency coding by cutaneous mechanoreceptors of OPTACON pulses delivered to the hand. J Neurophysiol 62: 1410–1436, 1989a.

- Gardner EP, Palmer CI. Simulation of motion on the skin. II. Cutaneous mechanoreceptor coding of the width and texture of bar patterns displaced across the OPTACON. J Neurophysiol 62: 1437–1460, 1989b.

- Gardner EP, Sklar BF. Discrimination of the direction of motion on the human hand: a psychophysical study of stimulation parameters. J Neurophysiol 71: 2414–2429, 1994.

- Gilbert CD. Laminar differences in receptive field properties of cells in cat primary visual cortex. J Physiol 268: 391–421, 1977.

- Goodwin AW, John KT, Sathian K, Darian-Smith I. Spatial and temporal factors determining afferent fiber responses to a grating moving sinusoidally over the monkey's fingerpad. J Neurosci 9: 1280–1293, 1989.

- Goodwin AW, Morley JW. Sinusoidal movement of a grating across the monkey's fingerpad: representation of grating and movement features in afferent fiber responses. J Neurosci 7: 2168–2180, 1987.

- Guilford JP. Illusory movement from a rotating barber pole. Am J Psychol 41: 686–687, 1929.

- Hagen MC, Franzen O, McGlone F, Essick G, Dancer C, Pardo JV. Tactile motion activates the human middle temporal/V5 (MT/V5) complex. Eur J Neurosci 16: 957–964, 2002.

- Hubel DH, Wiesel TN. Receptive fields and functional architecture of monkey striate cortex. J Physiol 195: 215–243, 1968.

- Johansson RS, Vallbo AB. Tactile sensibility in the human hand: relative and absolute densities of four types of mechanoreceptive units in glabrous skin. J Physiol 286: 283–300, 1979.

- Keyson DV, Houtsma AJ. Directional sensitivity to a tactile point stimulus moving across the fingerpad. Percept Psychophys 57: 738–744, 1995.

- Killebrew JH, Bensmaia SJ, Dammann JF, Denchev P, Hsiao SS, Craig JC, Johnson KO. A dense array stimulator to generate arbitrary spatio-temporal tactile stimuli. J Neurosci Methods 161: 62–74, 2007.

- Kirman JH. Tactile apparent movement: the effects of number of stimulators. J Exp Psychol 103: 1175–1180, 1974.

- Kitada R, Kochiyama T, Hashimoto T, Naito E, Matsumura M. Moving tactile stimuli of fingers are integrated in the intraparietal and inferior parietal cortices. Neuroreport 14: 719–724, 2003.

- Konkle T, Wang Q, Hayward V, Moore CI. Motion aftereffects transfer between touch and vision. Curr Biol 19: 745–750, 2009.

- Kooi FL. Local direction of edge motion causes and abolishes the barberpole illusion. Vision Res 33: 2347–2351, 1993.

- Lacey S, Flueckiger P, Stilla R, Lava M, Sathian K. Object familiarity modulates the relationship between visual object imagery and haptic shape perception. Neuroimage 49: 1977–1990, 2010.

- Lacey S, Sathian K. Multisensory object representation: insights from studies of vision and touch. Prog Brain Res 191: 165–176, 2011.

- Lederman SJ, Klatzky RL. Hand movements: a window into haptic object recognition. Cogn Psychol 19: 342–368, 1987.

- Movshon JA, Newsome WT. Visual response properties of striate cortical neurons projecting to area MT in macaque monkeys. J Neurosci 16: 7733–7741, 1996.

- Movshon JA, Adelson EH, Gizzi MS, Newsome WT. The analysis of moving visual patterns. Pontificiae Academiae Scientiarvm Scripta Varia 54: 117–151, 1983.

- Newsome WT, Pare EB. A selective impairment of motion perception following lesions of the middle temporal visual area (MT). J Neurosci 8: 2201–2211, 1988.

- Norrsell U, Olausson H. Human, tactile, directional sensibility and its peripheral origins. Acta Physiol Scand 144: 155–161, 1992.

- Olausson H, Norrsell U. Observations on human tactile directional sensibility. J Physiol 464: 545–559, 1993.

- Olausson H, Wessberg J, Kakuda N. Tactile directional sensibility: peripheral neural mechanisms in man. Brain Res 866: 178–187, 2000.

- Orban GA, Kato H, Bishop PO. End-zone region in receptive fields of hypercomplex and other striate neurons in the cat. J Neurophysiol 42: 818–832, 1979.

- Pack CC, Berezovskii VK, Born RT. Dynamic properties of neurons in cortical area MT in alert and anaesthetized macaque monkeys. Nature 414: 905–908, 2001.

- Pack CC, Gartland AJ, Born RT. Integration of contour and terminator signals in visual area MT of alert macaque. J Neurosci 24: 3268–3280, 2004.

- Pack CC, Livingstone MS, Duffy KR, Born RT. End-stopping and the aperture problem: two-dimensional motion signals in macaque V1. Neuron 39: 671–680, 2003.

- Pasalar S, Ro T, Beauchamp MS. TMS of posterior parietal cortex disrupts visual tactile multisensory integration. Eur J Neurosci 31: 1783–1790, 2010.

- Pei YC, Chang TY, Lee TC, Saha S, Lai HY, Gomez-Ramirez M, Chou SW, Wong AM. Cross-modal sensory integration of visual-tactile motion information: instrument design and human psychophysics. Sensors (Basel) 13: 7212–7223, 2013.

- Pei YC, Hsiao SS, Bensmaia SJ. The tactile integration of local motion cues is analogous to its visual counterpart. Proc Natl Acad Sci USA 105: 8130–8135, 2008.

- Pei YC, Hsiao SS, Craig JC, Bensmaia SJ. Shape invariant coding of motion direction in somatosensory cortex. PLoS Biol 8: e1000305, 2010.

- Pei YC, Hsiao SS, Craig JC, Bensmaia SJ. Neural mechanisms of tactile motion integration in somatosensory cortex. Neuron 69: 536–547, 2011.

- Pei YC, Lee TC, Chang TY, Ruffatto D, 3rd, Spenko M, Bensmaia S. A multi-digit tactile motion stimulator. J Neurosci Methods 226: 80–87, 2014.

- Phillips JR, Johnson KO. Tactile spatial resolution. II. Neural representation of bars, edges, and gratings in monkey primary afferents. J Neurophysiol 46: 1192–1203, 1981.

- Pont SC, Kappers AM, Koenderink JJ. Haptic curvature discrimination at several regions of the hand. Percept Psychophys 59: 1225–1240, 1997.

- Ricciardi E, Vanello N, Sani L, Gentili C, Scilingo EP, Landini L, Guazzelli M, Bicchi A, Haxby JV, Pietrini P. The effect of visual experience on the development of functional architecture in hMT+. Cereb Cortex 17: 2933–2939, 2007.

- Rinker MA, Craig JC. The effect of spatial orientation on the perception of moving tactile stimuli. Percept Psychophys 56: 356–362, 1994.

- Rose D. Mechanisms underlying the receptive field properties of neurons in cat visual cortex. Vision Res 19: 533–544, 1979.

- Ruiz S, Crespo P, Romo R. Representation of moving tactile stimuli in the somatic sensory cortex of awake monkeys. J Neurophysiol 73: 525–537, 1995.

- Rust NC, Mante V, Simoncelli EP, Movshon JA. How MT cells analyze the motion of visual patterns. Nat Neurosci 9: 1421–1431, 2006.

- Sathian K, Goodwin AW, John KT, Darian-Smith I. Perceived roughness of a grating: correlation with responses of mechanoreceptive afferents innervating the monkey's fingerpad. J Neurosci 9: 1273–1279, 1989.

- Shimojo S, Silverman GH, Nakayama K. Occlusion and the solution to the aperture problem for motion. Vision Res 29: 619–626, 1989.

- Simoncelli EP, Heeger DJ. A model of neuronal responses in visual area MT. Vision Res 38: 743–761, 1998.

- Sripati AP, Bensmaia SJ, Johnson KO. A continuum mechanical model of mechanoreceptive afferent responses to indented spatial patterns. J Neurophysiol 95: 3852–3864, 2006.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci 9: 255–266, 2008.

- Summers IR, Francis ST, Bowtell RW, McGlone FP, Clemence M. A functional-magnetic-resonance-imaging investigation of cortical activation from moving vibrotactile stimuli on the fingertip. J Acoust Soc Am 125: 1033–1039, 2009.

- Treue S, Hol K, Rauber HJ. Seeing multiple directions of motion-physiology and psychophysics. Nat Neurosci 3: 270–276, 2000.

- Wallach H. Ueber visuell wahrgenommene Bewegungsrichtung. Psychol Forsch 20: 325–380, 1935.

- Wallach H. On the visually perceived direction of motion (reprinted from Psychol Forsch 20: 325–380, 1935). Perception 25: 1319–1367, 1996.

- Warren S, Hamalainen HA, Gardner EP. Objective classification of motion- and direction-sensitive neurons in primary somatosensory cortex of awake monkeys. J Neurophysiol 56: 598–622, 1986.

- Weber AI, Saal HP, Lieber JD, Cheng JW, Manfredi LR, Dammann JF, 3rd, Bensmaia SJ. Spatial and temporal codes mediate the tactile perception of natural textures. Proc Natl Acad Sci USA 110: 17107–17112, 2013.

- Weiss Y, Simoncelli EP, Adelson EH. Motion illusions as optimal percepts. Nat Neurosci 5: 598–604, 2002.

- Werner G, Whitsel BL. Stimulus feature detection by neurons in somatosensory areas I and II of primates. IEEE Trans Man-Machine Syst 11: 36–38, 1970.

- Wheat HE, Salo LM, Goodwin AW. Cutaneous afferents from the monkeys fingers: responses to tangential and normal forces. J Neurophysiol 103: 950–961, 2010.

- Whitsel BL, Roppolo JR, Werner G. Cortical information processing of stimulus motion on primate skin. J Neurophysiol 35: 691–717, 1972.

- Yazdanbakhsh A, Livingstone MS. End stopping in V1 is sensitive to contrast. Nat Neurosci 9: 697–702, 2006.