Abstract

Background

Translational research is increasingly important as academic health centers transform themselves to meet new requirements of NIH funding. Most attention has focused on T1 translation studies (bench to bedside) with considerable uncertainty about how to enhance T2 (effectiveness trials) and especially T3 (implementation studies).

Objective

To describe an innovative example of a T3 study, conducted as partnership research with the leaders of a major natural experiment in Minnesota to improve the primary care of depression.

Methods

All health plans in the state have agreed on a new payment model to support clinics that implement the well-evidenced collaborative care model for depression in the DIAMOND Initiative (Depression Improvement Across Minnesota: Offering a New Direction). The DIAMOND Study was developed in an ongoing partnership with Initiative leaders from seven health plans, 85 clinics, and a regional quality improvement collaborative to evaluate the implementation and its impacts on patients and other stakeholders. We agreed upon a staggered implementation, multiple baseline research design, utilizing the concepts of practical clinical trials and engaged scholarship and have collaborated on all aspects of conducting the study, including joint identification of patient and clinic survey recipients.

Results

Complex study methods have worked well through 20 months because of the commitment of all stakeholders to both the Initiative and study. Over 1,500 patient subjects have been recruited from health plan information delivered weekly and 99.7% of 316 physicians and administrators from all participating clinical organizations have completed Study surveys.

Conclusions

Partnership research can greatly facilitate translational research studies.

The U.S. is increasingly engaged in an effort to transform the research enterprise, driven by the widely denounced “disconnection between the promise of basic science and the delivery of better health,” with the goal of overcoming the translational blocks that cause that disconnection.(1–3) Thus far, most attention and funding have been devoted to T1, the block to translating the lessons of basic science into new clinical science methods. However, if we are to improve human health, much greater support and attention must be devoted to T2 (the block to translating new clinical knowledge into effective care) and T3 (the block to widely implementing best practice care through clinical practice and policy changes).(4)

Nearly all public attention to the gap between clinical evidence and care has focused on the slow and incomplete translation (implementation) of research evidence into practice, but there may be a greater problem with the evidence itself. Not only is evidence lacking for most medical care decisions, but the evidence that is present is often inadequate or irrelevant for the untidy realities of decision makers charged with implementing effective health care programs.(5–7) Green has summarized this situation as "if we want more evidence-based practice, we need more practice-based evidence."(5)

Even this formulation may be incomplete, however. Besides the evidence of efficacy usually cited, decision makers also need evidence of effectiveness, external validity, and implementation (both organizational factors and specific strategies). Tunis similarly concluded that “the widespread gaps in evidence-based knowledge suggest that systematic flaws exist in the production of scientific evidence, in part because there is no consistent effort to conduct clinical trials designed to meet the needs of decision makers.”(8) He called for more pragmatic or practical clinical trials (PCTs), where the hypothesis and study design are developed specifically to meet those needs. Glasgow et al followed up with recommendations and examples of how PCTs can be conducted and the results reported to enhance external validity without sacrificing internal validity.(9)

The purpose of this paper is to illustrate the concepts and methods useful for implementation research by describing an NIH-funded PCT to evaluate a large natural experiment to improve primary care of depression throughout an entire state.

Partnership Research and Engaged Scholarship

In order to create the types of evidence needed, it is important that decision makers be actively involved in the research process itself. Van de Ven and others have called this “engaged scholarship.”(10–13) They note that the research-practice gaps so apparent in health care are also present in a wide variety of industries and disciplines as researchers and decision-makers become increasingly disconnected. According to Van de Ven, this research-practice gap is usually considered either as a knowledge transfer problem or as a basic incompatibility problem, whereas he concludes that the gap is largely a knowledge production problem, which calls for a different approach: knowledge co-production between scholars and practitioners. He defines the engaged scholarship approach as “a participative form of research for obtaining the different perspectives of key stakeholders (researchers, users, clients, sponsors, and practitioners) in studying complex problems.”(12) One advantage of engaged scholarship is that the research is designed to address problems that are grounded in reality, increasing the likelihood that results will not only be disseminated, but actually implemented to improve health.

Our term for engaged scholarship is “partnership research,” and the idea of partnerships for effective transfer and exchange of knowledge seems to be gaining greater currency among health services researchers.(14) Carried to the most desirable extreme, partnership research includes everything from joint creation of the research questions, intervention, research design, and proposal to collaborative conduct of the actual study, analysis of data, development of conclusions and recommendations, and finally implementation and spread of relevant findings.

There are certainly many barriers to partnerships between scientists and decision makers, but if their differences are recognized and accommodated, these parties can work together.(15, 16) For example, because decision makers have limited time and research expertise, they generally play a more limited role during a research project; as findings emerge, however, the roles reverse, since researchers have little time or power to actually implement anything based on those findings. The partnership approach is similar to what happens in some practice-based or integrated delivery system research networks or in the Veterans Health Administration’s QUERI program (Quality Enhancement Research Initiative).(17–20) It is also similar to the longer history of community-based participatory research and to its more recent expansion into implementation networks.(21, 22)

A Natural Experiment – the DIAMOND Initiative

In this time of great ferment in health care, there are many innovations being developed for financing or delivery of care at the local, state, and national levels. These innovations, initiated by health plans, medical provider organizations, governmental units, and others, can be thought of as natural experiments.(23) Wikipedia defines a natural or quasi-experiment as “a naturally occurring instance of observable phenomena which approximates or duplicates the properties of a controlled experiment.”(http://en.wikipedia.org/wiki) It cites the example of Helena, Montana, where a smoking ban was in effect in all public spaces for a seven-month period in 2002; during that time, heart attack admissions to the city’s only hospital dropped by 60%, before rising again when the ban ended.(24) Such natural experiments provide important opportunities to study and learn, and partnerships between leaders of the innovation and researchers are particularly good vehicles for doing so. One of the most important recent natural experiments in health care is being implemented in Minnesota as a new approach to the primary care of depression in adults.(25, 26) This initiative, called DIAMOND for Depression Improvement Across Minnesota: Offering a New Direction, is an attempt to change the rules for depression care delivery and reimbursement so that the collaborative care model, proven in over 35 randomized controlled trials, is actually implemented and maintained.(27, 28) A key reason that evidence-based depression care has not been implemented, even in clinics that participated in the trials that proved its effectiveness, is that there has been no payment for the services involved under traditional coverage models.(29)

In order to solve that problem, ICSI (Institute for Clinical Systems Improvement), a nationally prominent regional quality improvement collaborative, brought together the major stakeholders in 2006, including patients, clinicians, payers, and employers. After reviewing evidence-based approaches to the care delivery and financing problem, the DIAMOND Steering Committee came up with a bundled payment recommendation, which all the payers in the state agreed to provide. This payment would cover care that follows ICSI’s treatment guidelines for depression (30) and includes all six key components of the evidence-based collaborative care management program:(27, 28)

Consistent use of a validated instrument for assessing and monitoring depression (the PHQ9 was chosen).(31, 32)

Systematic patient follow-up tracking and monitoring with a registry

Evidence-based stepped-care for treatment intensification

Relapse prevention planning

Care manager in the practice to educate, monitor, and coordinate care in collaboration with the primary care physician

Scheduled weekly psychiatric caseload consultation to supervise the care manager and provide treatment recommendations to the primary care clinician

In order to be eligible for the new payment, each primary care clinic had to include all adult primary care clinicians in the program, participate in a six-month training and implementation facilitation program managed by ICSI, provide ICSI with specified measurement information at regular intervals after implementation, and be certified by ICSI as meeting all of the above criteria. Twenty-four medical groups with 85 separate clinics and 553 FTE adult primary care physicians agreed to participate in a staged implementation over two years, with the first sequence implementing the care management program in March 2008. The DIAMOND Steering Committee, with representatives of each health plan, the Minnesota Department of Human Services, medical groups, employers, and patients, meets monthly to monitor progress and make changes with the help of several committees set up for specific issues (e.g., measurement).

Patient involvement in this group has been extremely helpful to both the Initiative and the Study.

Because DIAMOND would be implemented at different times by different clinics, this natural experiment became an ideal opportunity for parallel research to evaluate the impact of this change in care delivery and reimbursement.

Partnership Research Example – the DIAMOND Study

The first author (LS) had been involved in the early deliberations leading to establishment of the Initiative. When it became clear that the Initiative was likely to become a reality, he discussed with the DIAMOND Steering Committee their interest in a parallel study that could provide a detailed evaluation of this unique initiative. Both the questions of particular interest to the stakeholders and the sequential approach to implementation were developed collaboratively. A research proposal was submitted to NIH in September 2006 in response to an open RFA for dissemination and implementation research, and it was funded in September 2007.

Implementation in five staggered sequences over three years was favored by ICSI in order to make it more feasible to train so many people and sites, while also allowing time to revise the approach from experience. Since natural experiments do not lend themselves to randomized controlled trials, we proposed to take advantage of this staggered rollout with a quasi-experimental design: a staggered-implementation time series with multiple baseline measures across settings, as described by Speroff and O’Connor.(33) This design allows each site to serve as its own pre/post control, while the staggered implementation and repeated measures enhance the ability to control for secular trends and to document the replicability, robustness across settings, and time course of intervention effects. Speroff had suggested that this design was a particularly appropriate way to reduce validity problems in quality improvement research, and Glasgow recommended it as an excellent way to conduct a practical clinical trial.(34)
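To make the logic of this staggered, multiple-baseline design concrete, the Python sketch below fits one common analysis for such designs (a segmented regression with sequence fixed effects, a linear secular trend, and a post-implementation indicator) to noise-free simulated data. The implementation months follow the Table 2 schedule; the outcome level, trend, sequence offsets, and effect size are hypothetical, and the model is an illustration rather than the Study's analysis plan. Because the sequences switch to post-implementation at different months, the secular trend and the program effect are not collinear and can be estimated separately.

```python
import numpy as np

# Month index of implementation for each sequence, with month 0 = February
# 2008, following the Table 2 schedule (3/08, 9/08, 3/09, 9/09, 3/10).
START_MONTH = {1: 1, 2: 7, 3: 13, 4: 19, 5: 25}
N_MONTHS = 37  # patient recruitment runs from 2/08 through 2/11


def simulate_outcomes(baseline, trend, effect, seq_offsets):
    """Noise-free hypothetical outcomes for every sequence-month cell.

    All parameter values are illustrative assumptions, not study data.
    """
    rows = []
    for seq, start in START_MONTH.items():
        for month in range(N_MONTHS):
            post = 1 if month >= start else 0
            y = baseline + seq_offsets[seq] + trend * month + effect * post
            rows.append((seq, month, post, y))
    return rows


def estimate_effect(rows):
    """OLS with an intercept, a linear secular trend, a post-implementation
    indicator, and sequence dummies; returns the post-implementation effect."""
    other_seqs = sorted(START_MONTH)[1:]  # sequence 1 is the reference group
    X, y = [], []
    for seq, month, post, outcome in rows:
        # Sequence dummies let each sequence act as its own pre/post control.
        dummies = [1.0 if seq == s else 0.0 for s in other_seqs]
        X.append([1.0, float(month), float(post)] + dummies)
        y.append(outcome)
    coef, *_ = np.linalg.lstsq(np.array(X), np.array(y), rcond=None)
    return coef[2]  # coefficient on the post-implementation indicator


if __name__ == "__main__":
    rows = simulate_outcomes(baseline=15.0, trend=-0.05, effect=-3.0,
                             seq_offsets={1: 0.0, 2: 0.5, 3: -0.5, 4: 1.0, 5: 0.0})
    print(round(estimate_effect(rows), 6))  # prints -3.0
```

With these made-up, noise-free inputs the fit recovers the simulated effect exactly; with real patient data, sampling noise and the clustering of patients within clinics would also have to be modeled.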

The implementation plan agreed upon between Initiative and Study leaders was that each sequence would include 10–20 clinics with up to 120 FTE adult primary care clinicians, with six months of training and practice preparation followed by implementation and eligibility for the new payment for depressed patients who met established criteria. Large medical groups usually had clinics in multiple sequences. The Study began recruiting depressed subjects weekly, in a completely separate process, across all 85 clinics that were planning to participate (regardless of their sequence) and continued doing so over a 37-month period. Thus, Study patients would be recruited for only 1 month pre-implementation for clinics in sequence #1, but for 25 months for sequence #5, while the opposite was true for post-implementation patients (see Table 2). Since the number of Study patients from any individual clinic will be small, it will only be possible to draw conclusions about the overall impact of the program.

Table 2.

Timing of Implementation and Patient Data Collection, with Patients Identified and Recruited Weekly for Each Sequence of Clinics throughout 37 Months

| Sequence | Clinics | 2/08 | 3/08–8/08 | 9/08–2/09 | 3/09–8/09 | 9/09–2/10 | 3/10–2/11 |
|---|---|---|---|---|---|---|---|
| 1 | 10 | A | B | B | B | B | B |
| 2 | 15 | A | A | B | B | B | B |
| 3 | 20 | A | A | A | B | B | B |
| 4 | 15 | A | A | A | A | B | B |
| 5 | 25 | A | A | A | A | A | B |

A = Pre-Implementation patient subjects recruited

B = Post-Implementation patient subjects recruited
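The recruitment windows in Table 2 can be checked with a few lines of arithmetic. In this minimal sketch, each column of the table is converted to its length in months (2/08 is one month, the next four windows are six months each, and 3/10–2/11 is twelve months), and sequence k is treated as recruiting pre-implementation (A) patients in the first k columns and post-implementation (B) patients in the rest, as the table shows. The variable and function names are illustrative.

```python
# Length in months of each Table 2 column: 2/08, 3/08-8/08, 9/08-2/09,
# 3/09-8/09, 9/09-2/10, and 3/10-2/11.
WINDOW_MONTHS = [1, 6, 6, 6, 6, 12]

# Clinics implementing in each sequence, from Table 2.
CLINICS_PER_SEQUENCE = {1: 10, 2: 15, 3: 20, 4: 15, 5: 25}


def pre_post_months(sequence: int) -> tuple[int, int]:
    """Return (pre, post) months of patient recruitment for a sequence.

    Sequence k is pre-implementation (A) for the first k columns of
    Table 2 and post-implementation (B) for the remaining columns.
    """
    pre = sum(WINDOW_MONTHS[:sequence])
    post = sum(WINDOW_MONTHS[sequence:])
    return pre, post


if __name__ == "__main__":
    print("total clinics:", sum(CLINICS_PER_SEQUENCE.values()))  # 85
    for seq in CLINICS_PER_SEQUENCE:
        print(seq, pre_post_months(seq))
    # 1 (1, 36)
    # 2 (7, 30)
    # 3 (13, 24)
    # 4 (19, 18)
    # 5 (25, 12)
```

Every sequence contributes 37 months in total; only the split between pre- and post-implementation recruitment shifts, which is what provides the multiple baselines.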

With design and research questions developed by the partnership, researchers proposed specific aims that met the needs of the Initiative leaders:

To test the effects of changed reimbursement and facilitated organizational change on the use of evidence-based care processes for patients with depression.

To test the effect of the care process changes on changes in depression symptoms, healthcare costs, and work productivity.

To identify organizational factors that affect the implementation and effects of the care changes.

To describe the reach, adverse outcomes, adoption, implementation, and spread of the intervention in order to evaluate its potential for broader scale dissemination.(34)

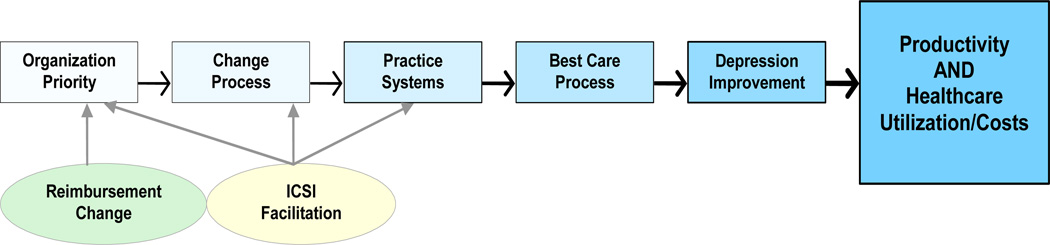

Fundable research must also include a conceptual framework that fits the intervention, outcomes, and design. Figure 1 was adapted from a framework for quality improvement of care that identifies three main factors predicting improvement: organizational priority for the specific improvement, an effective change process, and identification of appropriate practice system changes.(35) The DIAMOND Initiative intervention appeared likely to affect all three factors through the payment change and ICSI facilitation of care system changes. The details of each step in this Implementation Chain are described in Table 1.

Figure 1.

The Implementation Chain

Table 1.

Relation of the Conceptual Framework to the DIAMOND Initiative and Study

| Domain | Elements | Data Source* |
|---|---|---|
| Priority | Priority for depression care improvement relative to all other organizational priorities | 0–10 scale on PPC-RD |
| Change Process | Leadership support, development of new systems, orientation of staff, engagement of physicians, hiring of care manager, contracting for psychiatry consultation | CPCQ + ICSI documentation |
| Practice Systems | Coding changes, routine use of PHQ9 at onset and 6/12 months of care, systematic evaluation of co-morbidities, registry, tracking and monitoring, care coordination, self-management support, follow-up, treatment intensification, relapse prevention, performance measurement and reporting, quality improvement, standing orders | PPC-RD |
| Best Care Process | Shared decision-making, personalized care plan, assessed side effects, connected to community programs, assessed depression severity frequently, assessed alcohol use and suicidal thoughts, provided written information, provided with care manager, called to check on progress; antidepressant fills, refills, changes, follow-up visits | Patient survey; payer data |
| Depression Improvement | Change in PHQ9 score from baseline at 6 & 12 months (response and remission rates) | PHQ9 score changes |
| Productivity, Healthcare Utilization, and Costs | Change in absenteeism and presenteeism; total, inpatient, and outpatient costs, use of mental health specialists and | Patient survey; payer claims data |
| Payment Change | Use of special DIAMOND claims code | Payer data |
| ICSI Facilitation | Specification of changes needed, training, certification, measurement, consultation, and improvement collaborative | Documentation of each step |

PPC-RD = Survey of clinical leaders for priority and practice systems for depression

CPCQ = Survey of administrative leaders for change process capability

ICSI = Institute for Clinical Systems Improvement
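The Depression Improvement row of Table 1 rests on PHQ9 scores and on response and remission rates. As a hedged sketch, the functions below total the nine PHQ9 items (each scored 0–3, giving 0–27) and classify change from baseline. The thresholds used here (response as at least a 50% drop from baseline, remission as a follow-up score below 5) are commonly used definitions assumed for illustration; the text does not state the Study's exact cut-points, and the function names are hypothetical.

```python
def phq9_total(items):
    """Total PHQ9 score: the sum of nine items, each scored 0-3 (range 0-27)."""
    if len(items) != 9 or any(score not in (0, 1, 2, 3) for score in items):
        raise ValueError("PHQ9 requires nine item scores, each 0-3")
    return sum(items)


def classify_change(baseline, follow_up, response_drop=0.5, remission_below=5):
    """Change from baseline plus response/remission flags.

    Assumed definitions (not stated in the paper): response = a drop of at
    least 50% from the baseline score; remission = a follow-up score below 5.
    """
    change = follow_up - baseline
    response = baseline > 0 and (baseline - follow_up) / baseline >= response_drop
    remission = follow_up < remission_below
    return {"change": change, "response": response, "remission": remission}


if __name__ == "__main__":
    baseline = phq9_total([2, 2, 2, 2, 2, 2, 2, 1, 1])   # 16
    six_months = phq9_total([1, 1, 1, 1, 1, 1, 1, 0, 0])  # 7
    print(classify_change(baseline, six_months))
    # {'change': -9, 'response': True, 'remission': False}
```

In this made-up example the patient has responded (a 56% drop) but has not yet remitted, the kind of distinction the 6- and 12-month comparisons are meant to capture.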

Surveys of independently identified patients provide the information on relevant care processes and depression outcomes needed for aims 1 and 2 (see Table 3). This survey was modified from the PACIC (Patient Assessment of Chronic Illness Care) survey to include items specific to depression and to the collaborative care model being implemented.(36, 37) It also includes the PHQ9 questions, widely accepted as a measure of depression severity, and questions about work productivity from the WPAI (Work Productivity and Activity Impairment Questionnaire).(38, 39)

Each week, all member patients newly started on antidepressant medications at one of the 85 participating clinics are identified by the payers and contacted by study staff as potential study participants. Identifying these potential subjects involved another critical aspect of the partnership: each payer agreed to follow a common algorithm to identify appropriate subjects from its pharmacy claims data. To satisfy IRB (Institutional Review Board) requirements for human subjects protection, each payer then needed to send each patient a letter about the study, providing a one-week opportunity to opt out before the lists could be sent to the research survey center for calling.

Despite receiving no reimbursement for these costs, all but one of the payers have continued to submit names and contact information every 1–2 weeks, with nearly 17,000 submitted through the first 21 months of the study. Fully 40% of these subjects could not be contacted, another 20% refused or were unable to participate, 15% were not being treated for depression at a participating clinic, and another 15% did not have a PHQ9 score >6, so only 1,570 depressed subjects have completed the baseline survey. Patients completing the baseline survey were also asked for permission to resurvey them six months later (or, for the subsample needed to measure productivity changes, at three, six, and twelve months).
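The identification and recruitment steps described above can be sketched in a few functions. This is a minimal illustration under stated assumptions, not the payers' actual algorithm: the `Fill` record and its fields are hypothetical, the 180-day lookback that defines a "new start" is an assumed value (the text says only that payers shared a common algorithm), and the recruitment shares are read as fractions of all submitted names rather than of each preceding step. Read that way, the reported percentages leave roughly one name in ten, broadly consistent with 1,570 completers out of nearly 17,000 names once rounding of the percentages is allowed for.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical lookback: a fill counts as a new start only if the member had
# no antidepressant fill in the preceding 180 days. Assumes the claims
# extract covers the full lookback period for every member.
LOOKBACK_DAYS = 180
OPT_OUT_DAYS = 7  # one-week opt-out period after the payer's letter


@dataclass
class Fill:
    """One pharmacy claim (hypothetical fields)."""
    member_id: str
    fill_date: date
    is_antidepressant: bool
    prescriber_clinic: str


def new_starts(fills, participating_clinics, lookback_days=LOOKBACK_DAYS):
    """Return antidepressant fills that look like new starts at participating clinics."""
    last_fill = {}  # member_id -> date of that member's latest antidepressant fill
    starts = []
    for fill in sorted(fills, key=lambda f: f.fill_date):
        if not fill.is_antidepressant:
            continue
        prior = last_fill.get(fill.member_id)
        is_new = prior is None or (fill.fill_date - prior).days > lookback_days
        if is_new and fill.prescriber_clinic in participating_clinics:
            starts.append(fill)
        last_fill[fill.member_id] = fill.fill_date
    return starts


def earliest_release_date(letter_date):
    """First date a member's contact details may go to the survey center."""
    return letter_date + timedelta(days=OPT_OUT_DAYS)


# Shares of all submitted names lost at each step, as reported in the text.
EXCLUSIONS = {
    "could not be contacted": 0.40,
    "refused or unable": 0.20,
    "not treated at a participating clinic": 0.15,
    "PHQ9 score not > 6": 0.15,
}


def completing_share(exclusions=EXCLUSIONS):
    """Share of submitted names left to complete the baseline survey."""
    return 1.0 - sum(exclusions.values())
```

For example, a letter mailed on March 10, 2008 gives `earliest_release_date(date(2008, 3, 10))` of March 17, 2008, and `completing_share()` evaluates to about 0.10.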

Table 3.

Study Data Sources in Relation to Specific Aims

| Data Source | Administration | Instrument* | Variables | Specific Aims |
|---|---|---|---|---|
| Patients starting new antidepressants for depression | Weekly samples identified by payers are phoned initially & at 6 months (at 3, 6, & 12 months for a productivity subsample) | PACIC survey, modified (includes the PHQ9 and WPAI) | Care received | 1 |
| | | | Depression severity & improvement | 2 |
| | | | Work productivity | 2 |
| Medical group and clinic leaders (MD & administrative) | Mail survey at baseline | Organizational survey | Descriptive data | 3 & 4 |
| | E-mail pre-implementation + 12 & 24 months post-implementation | PPC-RD survey | Practice systems & priority for change | 3 & 4 |
| | Same schedule as PPC-RD survey | CPCQ survey | Change process factors & strategies | 3 |
| | Mail survey after implementation | Cost templates | Implementation & operating costs | 2 |
| All payers participating in the Initiative | From lists of consenting subjects completing PACIC survey | Claims data aggregated at patient level | Healthcare utilization & costs | 2 & 4 |
| | Mail survey | Cost template | Implementation costs | 2 |
| ICSI | Mail survey | Cost template | Implementation costs | 2 |

The payers have also agreed to send the study team the health care utilization and cost data on consented subjects needed for aims 2 and 4. They clearly consider the study findings important, since they are willing to send these data to a research foundation affiliated with a competing health plan.

Data for Aims 3 and 4 largely depend on the completion of multiple surveys by medical group and clinic leaders (see Table 3). The PPC-RD survey (similar to the instrument used to certify practices as medical homes) measures the presence of practice systems relevant for consistent depression management.(40, 41) The CPCQ (Change Process Capability Questionnaire) measures a clinic or medical group’s readiness and approach to improving quality.(42) At 21 months, we have obtained completed surveys from 99.7% of the 316 physician and administrator leaders surveyed. In addition, we have collected implementation cost estimates from medical groups, payers, and ICSI, as well as operating cost estimates from the medical groups, in order to better describe the financial costs of such an undertaking.

The chief requirement of a practical clinical trial is that it be designed to help health care decision makers solve their real-life problems. Tunis et al and Glasgow et al have described the most distinctive features of PCTs as:(8, 9)

Enrollment of a diverse study population that represents the range and distribution of patients faced by decision makers. DIAMOND accomplishes this by offering the DIAMOND program to all patients with depression meeting clinical eligibility.

Recruitment from a variety of practice settings. DIAMOND accomplishes this by including all adult primary care clinicians within participating clinics: twenty-four medical groups with 85 separate clinics and 553 FTE adult primary care physicians.

Comparison of clinically relevant alternatives. Existing usual care, which includes guidelines for depression care but not reimbursement for the six elements of collaborative care identified above, is the comparator against which the alternative of enhanced reimbursement for the collaborative care model components will be evaluated.

Measurement of a broad range of relevant health outcomes. DIAMOND includes a wide range of outcomes relevant to decision makers, including measures of reach, depression, cost, implementation, and organizational factors related to success. The inclusion of both individual- and organizational-level outcomes is a unique contribution of the DIAMOND Study.

Lessons for Partnership Practical Clinical Trials

Although the DIAMOND Study is only 35% complete, it has demonstrated that it is possible to design and obtain funding for a large practical clinical trial of an even larger natural experiment. This has required very close relationships, trust, and good communication among many partners who are competitors in real life, both for proposal development and for operation of the study. Some of the factors that appear to have contributed to these relationships so far include:

Pre-existing collaborative relationships for quality improvement among the stakeholders

A neutral convening and facilitating organization like ICSI

Pre-existing trusting relationships of payer, medical group, and ICSI leaders with the principal investigator (LS)

Involvement of the principal investigator in the earliest phases of development of the Initiative, and a parallel involvement of ICSI non-research colleagues in the earliest phases of Study development

Mutually respectful pre-existing personal relationships among the individual leaders

An external expert consultant (JU) with credibility in depression improvement research who was willing to support both the Initiative and the Study

Involvement of experts who also understand and support partnership research from around the country as co-investigators and consultants

Deference by the researchers to the operational needs and specific concerns of Initiative leaders and their organizations

Cross membership of Study leadership on the Initiative Steering and other committees and comparable Initiative leadership on the Study Coordinating and Analysis Teams.

Willingness of the Study team to add research efforts to newly identified needs of the Initiative. For example, the Study added the collection of medical group operating cost estimates when it became clear that reimbursement coverage of such costs was a major threat to the Initiative.

Presence of an appropriate and available funding vehicle and a willing funder.

The above list of factors would not surprise those who have conducted community-based research or worked in practice-based research networks.(21, 22, 43–45) However, few of those examples have involved the study of such a large natural experiment in changing medical care payment and delivery that was not created for the purpose of the research. We did have some advantages in Minnesota with respect to the first two factors (pre-existing collaborative relationships and a neutral convening organization like ICSI), but the others are relevant for researchers elsewhere who are willing to make a long-term commitment to partnership research. The key to finding such opportunities is to develop personal relationships with leaders of local organizations involved in changing care delivery, by being willing to devote time and energy to helping them evaluate those changes. Initially that help may not take the form of formal research projects, but once trust is established, it can quickly progress to the point where the next change effort can even be structured to facilitate research.

Our partnership research team has also encountered challenges:

There is an ongoing need to balance the need of Initiative clinical participants to provide efficient and effective depression care to their patients against the Study’s need to collect information about organizational and patient factors related to the depression care process.

The Initiative and the Study have separate needs for information from the participating clinics, which can be confusing to clinical personnel.

There is a natural tension between the Study protocol and the Initiative leaders who want to learn results from the Study in ‘real time’ in order to make mid-course corrections, justify the value of the program, or address other needs and questions that arise. The result can be more like a formative evaluation than the summative evaluation that the Study will need to produce.

Since the Study’s patient sampling frame has no direct relationship to the patients activated into DIAMOND by the Initiative clinics, and since only about 10% of all eligible patients end up being activated by the Initiative, the study sample risks including mostly patients who are not being treated by the DIAMOND care management program. This could leave too few study subjects receiving DIAMOND care to determine the effectiveness of the Initiative, so we added a supplementary sample of patients who have had health plan claims for DIAMOND care.
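The sampling concern in this last challenge comes down to simple arithmetic. As a hedged sketch, assuming that activation is independent of Study sampling and occurs at the roughly 10% rate mentioned above, the expected number of Study subjects receiving DIAMOND care is the number sampled times 0.10, with an approximate 95% range from a normal approximation to the binomial. The function name is hypothetical, and the independence assumption is a simplification.

```python
import math

ACTIVATION_RATE = 0.10  # "only about 10% of all eligible patients" are activated


def expected_diamond_subjects(n_sampled, activation_rate=ACTIVATION_RATE):
    """Expected number of sampled subjects receiving DIAMOND care, with an
    approximate 95% range (normal approximation to the binomial).

    Assumes each sampled subject is activated independently with the same
    probability, which is a simplification.
    """
    mean = n_sampled * activation_rate
    sd = math.sqrt(n_sampled * activation_rate * (1 - activation_rate))
    return mean, (mean - 1.96 * sd, mean + 1.96 * sd)


if __name__ == "__main__":
    # Generous upper bound: treat all 1,570 baseline completers as
    # post-implementation, although many were recruited before their
    # clinic implemented DIAMOND.
    mean, (low, high) = expected_diamond_subjects(1570)
    print(round(mean), round(low), round(high))  # prints 157 134 180
```

Even under this generous bound, only about 157 Study subjects would be expected to receive DIAMOND care, and fewer in practice because pre-implementation subjects cannot be activated.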

So far, these challenges have been managed because there is a strong sense among the research and Initiative partners that the initial consensus on shared goals and the ongoing dialogue between the Study and the Initiative have been integral to the quality of both sides of this partnership project.

Conclusions

As summarized by Berwick, “Improving the U.S. health care system requires simultaneous pursuit of three aims: improving the experience of care, improving the health of populations, and reducing per capita costs of health care.”(46) The enormous challenge of this “Triple Aim” will require transformation of both the care system and the research system. These players can continue to operate largely on their own, or they can learn to work in partnership to form the kind of learning community needed to solve our problems. If more choose the partnership route, research can produce the evidence decision makers require to achieve Berwick’s aims.

ACKNOWLEDGEMENTS

This research would not have been possible without the active support of many leaders and staff at the Institute for Clinical Systems Improvement, payers (Blue Cross and Blue Shield of Minnesota, First Plan, HealthPartners, Medica, Minnesota Dept. of Human Services, Preferred One, and U Care), and twenty-four medical groups. Patient subject recruitment and surveys have been sustained by Colleen King and her wonderful staff at the HPRF Data Collection Center, and many other investigators and staff at HPRF have contributed extensively to the development and operations of this very complex study. We also thank Andy Van de Ven for his review and comments.

This research was funded by grant #5R01MH080692 from the National Institute of Mental Health.

REFERENCES

1. Zerhouni EA. Translational and clinical science--time for a new vision. N Engl J Med. 2005;353:1621–1623. doi: 10.1056/NEJMsb053723.

2. Zerhouni EA. US biomedical research: basic, translational, and clinical sciences. JAMA. 2005;294:1352–1358. doi: 10.1001/jama.294.11.1352.

3. Sung NS, Crowley WF, Jr, Genel M, et al. Central challenges facing the national clinical research enterprise. JAMA. 2003;289:1278–1287. doi: 10.1001/jama.289.10.1278.

4. Woolf SH. The meaning of translational research and why it matters. JAMA. 2008;299:211–213. doi: 10.1001/jama.2007.26.

5. Green LW, Glasgow RE. Evaluating the relevance, generalization, and applicability of research: issues in external validation and translation methodology. Eval Health Prof. 2006;29:126–153. doi: 10.1177/0163278705284445.

6. Kottke TE, Solberg LI, Nelson AF, et al. Optimizing practice through research: a new perspective to solve an old problem. Ann Fam Med. 2008;6:459–462. doi: 10.1370/afm.862.

7. Moyer VA, Butler M. Gaps in the evidence for well-child care: a challenge to our profession. Pediatrics. 2004;114:1511–1521. doi: 10.1542/peds.2004-1076.

8. Tunis SR, Stryer DB, Clancy CM. Practical clinical trials: increasing the value of clinical research for decision making in clinical and health policy. JAMA. 2003;290:1624–1632. doi: 10.1001/jama.290.12.1624.

9. Glasgow RE, Magid DJ, Beck A, et al. Practical clinical trials for translating research to practice: design and measurement recommendations. Med Care. 2005;43:551–557. doi: 10.1097/01.mlr.0000163645.41407.09.

10. Van de Ven A, Johnson PE. Knowledge for theory and practice. Acad Mgmt Rev. 2006;31

11. Van de Ven A, Zlotkowski E. Toward a scholarship of engagement: a dialogue between Andy Van de Ven and Edward Zlotkowski. Acad Mgmt Learning Education. 2005;4:355–362.

12. Van de Ven AH. Engaged scholarship: a guide for organizational and social research. New York: Oxford University Press; 2007.

- 13.Boyer EL. Engaged scholarship: a guide for organizational and social research. San Francisco: Jossey-Bass; 1990. [Google Scholar]

- 14.Mitchell P, Pirkis J, Hall J, et al. Partnerships for knowledge exchange in health services research, policy and practice. J Health Serv Res Policy. 2009;14:104–111. doi: 10.1258/jhsrp.2008.008091. [DOI] [PubMed] [Google Scholar]

- 15.Choi BC, Pang T, Lin V, et al. Can scientists and policy makers work together? J Epidemiol Community Health. 2005;59:632–637. doi: 10.1136/jech.2004.031765. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Solberg LI, Elward KS, Phillips WR, et al. How can primary care cross the quality chasm? Ann Fam Med. 2009;7:164–169. doi: 10.1370/afm.951. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Gold M, Taylor EF. Moving research into practice: lessons from the US Agency for Healthcare Research and Quality's IDSRN program. Implement Sci. 2007;2:9. doi: 10.1186/1748-5908-2-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Stetler CB, McQueen L, Demakis J, et al. An organizational framework and strategic implementation for system-level change to enhance research-based practice: QUERI Series. Implement Sci. 2008;3:30. doi: 10.1186/1748-5908-3-30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Green LA, Hickner J. A short history of primary care practice-based research networks: from concept to essential research laboratories. J Am Board Fam Med. 2006;19:1–10. doi: 10.3122/jabfm.19.1.1. [DOI] [PubMed] [Google Scholar]

- 20.Francis J, Perlin JB. Improving performance through knowledge translation in the Veterans Health Administration. J Contin Educ Health Prof. 2006;26:63–71. doi: 10.1002/chp.52. [DOI] [PubMed] [Google Scholar]

- 21.Lindamer LA, Lebowitz B, Hough RL, et al. Establishing an implementation network: lessons learned from community-based participatory research. Implement Sci. 2009;4:17. doi: 10.1186/1748-5908-4-17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Horowitz CR, Robinson M, Seifer S. Community-based participatory research from the margin to the mainstream: are researchers prepared? Circulation. 2009;119:2633–2642. doi: 10.1161/CIRCULATIONAHA.107.729863. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Shadish WR, Cook TD, Campbell DT. Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Boston: Houghton Mifflin; 2002. [Google Scholar]

- 24.Sargent RP, Shepard RM, Glantz SA. Reduced incidence of admissions for myocardial infarction associated with public smoking ban: before and after study. BMJ. 2004;328:977–980. doi: 10.1136/bmj.38055.715683.55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Korsen N, Pietruszewski P. Translating evidence to practice: two stories from the field. J Clin Psychol Med Settings. 2009;16:47–57. doi: 10.1007/s10880-009-9150-2. [DOI] [PubMed] [Google Scholar]

- 26.Jaeckels N. Early DIAMOND adopters offer insights. Minnesota Physician. 2009:28–29, 34.

- 27.Gilbody S, Bower P, Fletcher J, et al. Collaborative care for depression: a cumulative meta-analysis and review of longer-term outcomes. Arch Intern Med. 2006;166:2314–2321. doi: 10.1001/archinte.166.21.2314.

- 28.Williams JW, Jr, Gerrity M, Holsinger T, et al. Systematic review of multifaceted interventions to improve depression care. Gen Hosp Psychiatry. 2007;29:91–116. doi: 10.1016/j.genhosppsych.2006.12.003.

- 29.Pincus HA, Pechura CM, Elinson L, et al. Depression in primary care: linking clinical and systems strategies. Gen Hosp Psychiatry. 2001;23:311–318. doi: 10.1016/s0163-8343(01)00165-7.

- 30.Depression, Major, in Adults in Primary Care (Guideline). ICSI web site. 2009. Available at: http://www.icsi.org/guidelines_and_more/gl_os_prot/behavioral_health/depression_5/depression__major__in_adults_in_primary_care_4.html. Accessed Aug 6, 2009.

- 31.Kroenke K, Spitzer RL. The PHQ-9: A new depression and diagnostic severity measure. Psychiatric Annals. 2002;32:509–521.

- 32.Löwe B, Kroenke K, Herzog W, et al. Measuring depression outcome with a brief self-report instrument: sensitivity to change of the Patient Health Questionnaire (PHQ-9). J Affect Disord. 2004;81:61–66. doi: 10.1016/S0165-0327(03)00198-8.

- 33.Speroff T, O'Connor GT. Study designs for PDSA quality improvement research. Qual Manag Health Care. 2004;13:17–32. doi: 10.1097/00019514-200401000-00002.

- 34.Glasgow RE. RE-AIMing research for application: ways to improve evidence for family medicine. J Am Board Fam Med. 2006;19:11–19. doi: 10.3122/jabfm.19.1.11.

- 35.Solberg LI. Improving Medical Practice: A Conceptual Framework. Ann Fam Med. 2007;5:251–256. doi: 10.1370/afm.666.

- 36.Glasgow RE, Wagner EH, Schaefer J, et al. Development and validation of the Patient Assessment of Chronic Illness Care (PACIC). Med Care. 2005;43:436–444. doi: 10.1097/01.mlr.0000160375.47920.8c.

- 37.Glasgow RE, Whitesides H, Nelson CC, et al. Use of the Patient Assessment of Chronic Illness Care (PACIC) With Diabetic Patients: Relationship to patient characteristics, receipt of care, and self-management. Diabetes Care. 2005;28:2655–2661. doi: 10.2337/diacare.28.11.2655.

- 38.Gilbody S, Richards D, Brealey S, et al. Screening for depression in medical settings with the Patient Health Questionnaire (PHQ): a diagnostic meta-analysis. J Gen Intern Med. 2007;22:1596–1602. doi: 10.1007/s11606-007-0333-y.

- 39.Mattke S, Balakrishnan A, Bergamo G, et al. A review of methods to measure health-related productivity loss. Am J Manag Care. 2007;13:211–217.

- 40.Scholle SH, Pawlson LG, Solberg LI, et al. Measuring practice systems for chronic illness care: accuracy of self-reports from clinical personnel. Jt Comm J Qual Patient Saf. 2008;34:407–416. doi: 10.1016/s1553-7250(08)34051-3.

- 41.Solberg LI, Asche SE, Pawlson LG, et al. Practice systems are associated with high-quality care for diabetes. Am J Manag Care. 2008;14:85–92.

- 42.Solberg LI, Asche SE, Margolis KL, et al. Measuring an Organization's Ability to Manage Change: The Change Process Capability Questionnaire and Its Use for Improving Depression Care. Am J Med Qual. 2008;23:193–200. doi: 10.1177/1062860608314942.

- 43.Tierney WM, Oppenheimer CC, Hudson BL, et al. A national survey of primary care practice-based research networks. Ann Fam Med. 2007;5:242–250. doi: 10.1370/afm.699.

- 44.Mold JW, Peterson KA. Primary care practice-based research networks: working at the interface between research and quality improvement. Ann Fam Med. 2005;3(Suppl 1):S12–S20. doi: 10.1370/afm.303.

- 45.Jones L, Wells K. Strategies for academic and clinician engagement in community-participatory partnered research. JAMA. 2007;297:407–410. doi: 10.1001/jama.297.4.407.

- 46.Berwick DM, Nolan TW, Whittington J. The triple aim: care, health, and cost. Health Aff (Millwood). 2008;27:759–769. doi: 10.1377/hlthaff.27.3.759.