Abstract

The orbitofrontal cortex (OFC) has long been associated with the flexible control of behavior and concepts such as behavioral inhibition, self-control and emotional regulation. These ideas emphasize the suppression of behaviors and emotions, but OFC’s affirmative functions have remained enigmatic. Here we review recent work that has advanced our understanding of this prefrontal area and how its functions are shaped through interaction with subcortical structures such as the amygdala. Recent findings have overturned theories emphasizing behavioral inhibition as OFC’s fundamental function. Instead, new findings indicate that OFC provides predictions about specific outcomes associated with stimuli, choices and actions, especially their moment-to-moment value based on current internal states. OFC function thereby encompasses a broad representation or model of an individual’s sensory milieu and potential actions, along with their relationship to likely behavioral outcomes.

Keywords: affect, amygdala, behavioral inhibition, choice, common currency, decision making, depression, emotion, emotional regulation, medial frontal cortex, obsessive-compulsive disorder, orbital prefrontal cortex, orbitofrontal cortex, outcome, reinforcement learning, reward, substance abuse, valuation, ventromedial frontal cortex

Introduction

Over a century ago, a team of divers discovered an ancient mechanical device in the wreck of a Roman-era ship. The device, now called the Antikythera mechanism, remained a mystery for decades. The use of new imaging techniques, together with a painstaking analysis of the device’s inner workings, revealed that this collection of bronze gears can calculate the future locations of the earth, moon and sun. As a result, the Antikythera mechanism accurately predicted eclipses and other celestial events (Freeth et al., 2008). In ancient times, these prognostications probably contributed to planning important cultural activities, and it is noteworthy that the ancients entrusted these prophecies to an analog computer rather than to their oracles, auguries or authorities.

Like the chance discovery of the Antikythera mechanism, the first knowledge about orbitofrontal cortex (OFC) function stemmed from a fortuitous event. As the most ventral part of the prefrontal cortex (PFC) (Fig. 1), OFC was directly in harm's way when an accidental explosion led to “the passage of an iron rod through the head” of a now-famous railroad worker named Phineas Gage (Harlow, 1848). His subsequent lack of self-control, coupled with otherwise fairly normal cognitive capacities, had an enduring influence on ideas about OFC, along with PFC more generally. Based on patients like Phineas Gage and animals with experimental lesions of OFC, many theories hold that its functions primarily involve behavioral inhibition, inhibitory self-control and emotional regulation (Dias et al., 1996; Mishkin, 1964; Roberts and Wallis, 2000; Rolls, 2000).

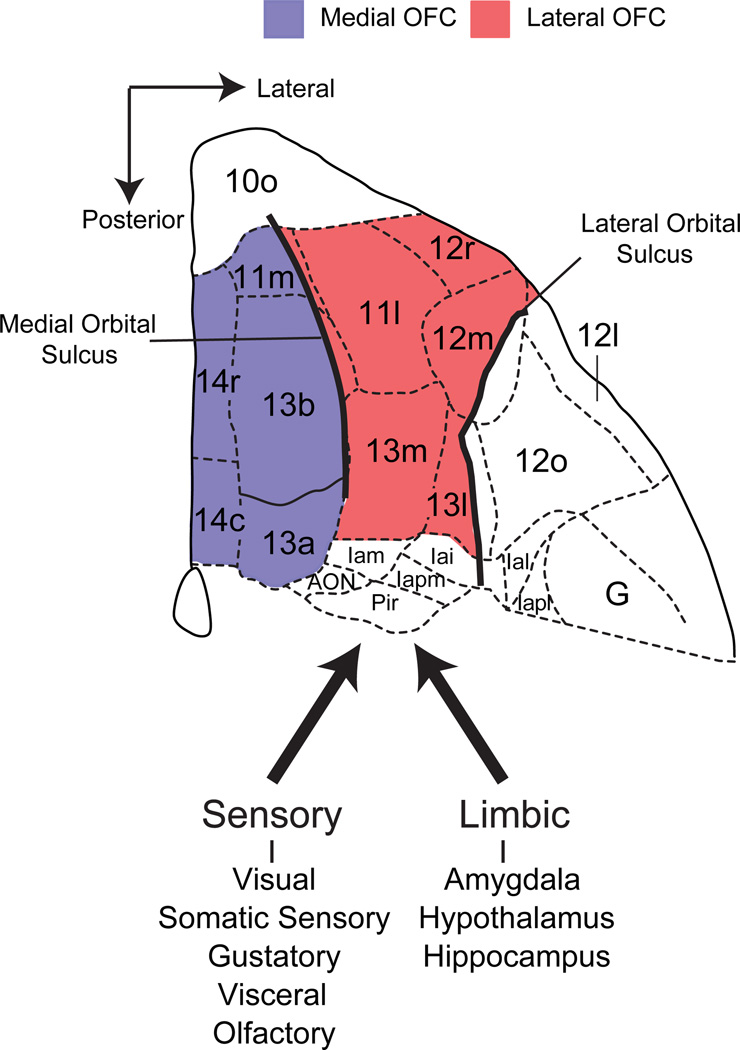

Figure 1.

Carmichael and Price’s (1994) parcellation of the ventral surface of the frontal lobe of a macaque brain based on variation in chemo- and cytoarchitecture. Red and purple shaded regions correspond to the lateral and medial subdivisions of OFC, respectively. These two subdivisions are often used in neuropsychological and neurophysiological investigations in primates to grossly subdivide OFC. The medial and lateral orbital sulci are marked by thick black lines. Thick dark arrows indicate connections, based on Carmichael and Price (1995a,b).

Recent research has overturned this view and points to a more affirmative function for OFC. Rather than primarily suppressing unwanted behaviors and emotions, OFC contributes to both by predicting the specific outcomes that should follow either sensory events or behavioral choices and by representing an updated valuation of these outcomes. These signals play a particularly important role at the time of a choice, and they underlie both good choices and bad ones.

Evidence from monkeys indicates that OFC encodes the predicted value of objects that might serve as goals, perhaps in an abstract “common currency” (Padoa-Schioppa, 2011). In contrast, results from rodents emphasize specific outcomes more than a general outcome like a common currency. These findings suggest that OFC represents the sensory qualities of available outcomes that have become associated with conditioned stimuli (Schoenbaum et al., 2011) and may map the entire sensory milieu in terms of potentially reinforcing outcomes (Wilson et al., 2014). Although delineating differences in OFC among mammalian species remains an active area of research, an emerging consensus recognizes that OFC in all mammals plays a critical role in signaling predictions about the future outcomes of behavior, including both appetitive and aversive ones.

Researchers deciphered the Antikythera device through an interplay between detailed analysis of its inner workings and hypotheses about its function, and we would like to do something similar for OFC. Accordingly, this review characterizes the necessary functions of OFC and then delves into its mechanisms. We discuss the information encoded by OFC neurons, the source of these signals, and how distinct parts of OFC contribute to its functions. In doing so, we draw primarily on insights gained from studies of monkeys and rodents, partly because they provide a unique opportunity to examine the causal contribution of OFC to behavior, and partly because they provide insight into its information processing at the single-neuron level. We pose three questions: What does OFC do? What information does OFC process? How does OFC influence behavior?

What does OFC do?

Prediction of specific outcomes

Our strongest evidence regarding OFC’s function comes from studying animals and humans without an OFC. Observations of patients (like the aforementioned Phineas Gage) and monkeys with damage to OFC have led to the idea that OFC is important for inhibitory control, specifically for suppressing prepotent responses in the face of changing circumstances (Mishkin, 1964). This “inhibitory control” hypothesis was based in part on the effects of aspiration lesions of OFC on a task called object reversal learning. When humans, monkeys, cats and rodents with OFC damage face this task, they cannot rapidly reverse their choices after changes in stimulus-reward contingencies (Butter, 1969; Chudasama and Robbins, 2003; Dias et al., 1996; Mishkin, 1964; Schoenbaum et al., 2003a; Teitelbaum, 1964). Over time, and with repeated findings of this kind, the deficits in object reversal learning became accepted as a pathognomonic sign of OFC damage. The inhibitory control hypothesis of OFC function has had a wide-ranging impact on neuropsychology, neurology and psychiatry (Blair, 2010; Clark et al., 2004; Fineberg et al., 2009; Roberts and Wallis, 2000) and has been used to account for the extreme changes in emotional behavior that often follow OFC damage or dysfunction (Rolls, 2000).

The link between OFC function, reversal learning and inhibitory control was based largely on the effects of aspiration lesions of OFC (Iversen and Mishkin, 1970; Jones and Mishkin, 1972; cf. Dias et al., 1996), lesions that not only remove the cortex but also may damage fibers of passage—axons coursing nearby or through OFC. In a study of macaque monkeys, we recently found that complete, excitotoxic, fiber-sparing lesions of OFC (Fig. 1, Fig. 2a) had no effect on either object reversal learning (Fig. 2b) or emotional responses to artificial snakes (Fig. 2c) (Rudebeck et al., 2013b), both of which are severely disrupted by aspiration lesions of OFC (Butter, 1969; Butter et al., 1970). This surprising result indicates that, contrary to prevailing expert opinion, OFC is not necessary for choosing objects flexibly in the face of changing stimulus-reward contingencies, at least in this classic task. Thus the pathognomonic “OFC task” does not depend on OFC at all, at least not when the anatomical term refers to gray matter rather than to white matter and fiber tracts.

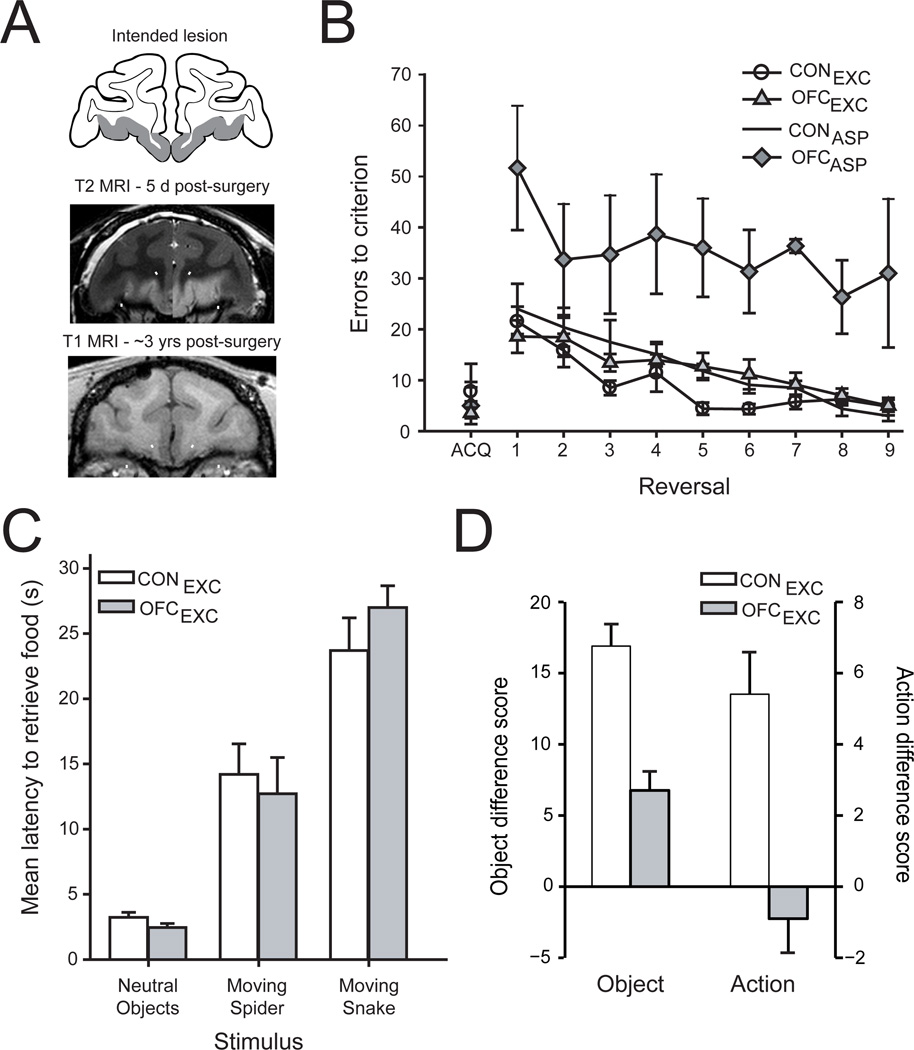

Figure 2.

The effect of excitotoxic lesions of OFC on reward-guided behavior. A) Excitotoxic OFC lesions; location and extent of the intended lesion shown on drawing of a coronal section through the frontal lobe (Intended lesion, top), representative case with an excitotoxic lesion of OFC (T2-weighted MRI, taken 5 days after the injection of excitotoxins, middle), and representative case with an excitotoxic lesion of OFC (T1-weighted MRI, taken ~3 years after surgery, bottom). B) Serial object reversal learning. Mean (±SEM) number of errors for unoperated controls (CONEXC and CONASP, unfilled circles and squares respectively), macaques with excitotoxic OFC lesions (OFCEXC, shaded triangles) and macaques with aspiration lesions of OFC (OFCASP, shaded diamonds). Unlike monkeys with aspiration lesions of OFC, monkeys with excitotoxic lesions of OFC do not differ from controls in their performance on this task. C) Emotional responses to neutral and fear-inducing objects. Mean (±SEM) latency of unoperated controls (unfilled bars) and monkeys with excitotoxic OFC lesions (gray bar) to retrieve a desired food reward in the presence of different objects. Monkeys with excitotoxic lesions of OFC do not differ from controls. D) Devaluation task. Mean (±SEM) difference scores for unoperated controls (unfilled bars) and macaques with excitotoxic lesions of OFC (gray bars) in the object-based (left) and action-based (right) devaluation tasks. Same labels as in (C). Monkeys with excitotoxic lesions of OFC are still undergoing behavioral testing; the estimated extents of the OFC lesions as determined from postoperative T2-weighted MR scans ranged from 64 to 96% complete, and later T1-weighted (structural) MR scans are consistent with this picture. There was no correlation between lesion extent and scores on any behavioral assessment. Adapted from Rudebeck et al. (2013b) and Rhodes and Murray (2013).

These new results also raise doubts about the links between OFC, emotional regulation and behavioral flexibility—for both monkeys and humans (Butter et al., 1970; Damasio et al., 1990; Izquierdo et al., 2005). The locations of white-matter bundles traveling into the frontal lobes (Croxson et al., 2005; Jbabdi et al., 2013) suggest that humans with damage to OFC—whether through strokes, blunt force trauma, tumor excisions or aneurysms—have damage not only to the gray matter of OFC, but also to white matter traveling to frontal regions outside OFC. The close correspondence between macaque and human OFC (Petrides and Pandya, 1994) extends this conclusion to experimental lesions of OFC in monkeys.

Two obvious questions follow from our new results: i) which white matter pathways are damaged by aspiration of OFC; and ii) why do excitotoxic lesions in rodents and marmosets cause deficits in reversal learning (Chudasama and Robbins, 2003; Dias et al., 1996; Izquierdo et al., 2013; Schoenbaum et al., 2003a)?

In answer to the first question, aspiration lesions of medial OFC alone do not affect emotional responses to snakes (Noonan et al., 2010a) or cause deficits in object reversal learning (Noonan et al., 2010b). Recall that, in our recent experiment, the selective excitotoxic lesion eliminated both medial and lateral OFC (Fig. 1, Fig. 2a). Taken together, these findings suggest that white matter tracts adjacent to the lateral, but not medial, OFC might be the critical pathways. Anatomical tract tracing studies also show that connections from the medial temporal lobe to the lateral convexity of PFC course near the fundus of the lateral orbital sulcus, close to where cortex is typically removed by aspiration lesions of OFC (Lehman et al., 2011). Alternatively, the severe impairments in reversal learning seen in the older studies might have resulted from a more widespread disconnection, one involving axons located near both medial and lateral OFC.

There are a number of potential answers to the second question. One is that different species, by virtue of their different foraging niches, solve reversal learning tasks using different strategies. This idea could account for the observation that deficits after excitotoxic lesions of OFC in rats and marmosets differ qualitatively from those caused by aspiration lesions in macaques. Specifically, deficits in rodents and marmosets are usually confined to the first few reversals (Clarke et al., 2008) whereas those in macaques emerge only after a number of reversals (Izquierdo et al., 2004). Alternatively, the enlargement of PFC during primate evolution (Passingham and Wise, 2012; Preuss, 1995) could mean that other parts of PFC, beyond the OFC areas involved in our lesions, can guide choices in reversal learning. Once we identify the cortical areas underlying reversal learning in macaques, we should be in a position to decide among these possibilities.

The results discussed so far show something about what OFC does not do, but not much about what it does. In contrast to the negative results obtained for the object reversal learning (Fig. 2b, triangles) and emotional regulation (Fig. 2c) tasks, results from the devaluation task show that OFC plays a critical role in choosing objects based on the expected value of specific outcomes (Fig. 2d, left). This impairment, alone among those observed in the tasks we used, did not depend on disrupting fiber pathways near or passing through OFC.

In devaluation tasks, animals need to update the value of specific outcomes and to use that information to guide choices. Here, when we say “specific outcome”, we mean a representation of the constellation of sensory properties that together comprise the food or fluid that is produced by a particular choice. For a food outcome, for example, this would translate to its taste, smell, visual attributes such as color, shape and texture, and its feel in the mouth. Importantly, whereas object reversal learning examines the ability of animals to respond flexibly to changes in stimulus–reward contingencies when food value is fixed and a single type of reward is available, devaluation tasks examine the ability of animals to respond flexibly to changes in the value of different foods, based on current internal states such as selective satiation. Put another way, reversal learning relates to the likelihood of resource availability given some choice, whereas devaluation tasks probe what a particular resource is worth at the moment.
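To make the distinction concrete, here is a minimal toy sketch in Python. It rests on a simplifying assumption of ours, not the authors': the value of choosing an object is the probability that its outcome follows, multiplied by that specific outcome's current worth. The names `Option`, `expected_value` and `choose`, along with the foods and numbers, are hypothetical. A reversal changes only the likelihood term, with a single food type; a devaluation changes only the worth of one specific food, so solving it requires knowing which food each object predicts.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Option:
    """A choice object in a hypothetical toy model."""
    name: str
    outcome: str      # identity of the specific food the object predicts
    p_reward: float   # probability that the food follows a choice of this object


def expected_value(option: Option, food_values: dict[str, float]) -> float:
    # Toy assumption: value = likelihood of the outcome x current worth of that specific food.
    return option.p_reward * food_values[option.outcome]


def choose(options: list[Option], food_values: dict[str, float]) -> str:
    # Pick the object with the highest expected value.
    return max(options, key=lambda o: expected_value(o, food_values)).name


# Reversal-style change: one food type, only the likelihood term flips.
peanut_only = {"peanut": 1.0}
before_reversal = [Option("A", "peanut", 1.0), Option("B", "peanut", 0.0)]
after_reversal = [Option("A", "peanut", 0.0), Option("B", "peanut", 1.0)]

# Devaluation-style change: two foods, likelihoods fixed; selective satiation
# lowers the current worth of one food only.
two_foods = [Option("A", "peanut", 1.0), Option("B", "raisin", 1.0)]
baseline_values = {"peanut": 1.0, "raisin": 0.9}
sated_on_peanut = {"peanut": 0.2, "raisin": 0.9}

print(choose(before_reversal, peanut_only))   # A
print(choose(after_reversal, peanut_only))    # B
print(choose(two_foods, baseline_values))     # A
print(choose(two_foods, sated_on_peanut))     # B
```

In the devaluation case the likelihoods never change, so in this toy a system that tracked only whether reward was likely, without the identity of the food, could not shift its choice.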

Given the finding that fiber-sparing lesions of OFC disrupted the choice between two objects based on current valuations (Fig. 2d, left), we conclude that OFC is necessary for updating the value of specific object-outcome associations, not simply tracking the presence, absence or likelihood of a reward. Of course, OFC operates in concert with other brain regions to perform this function, including the amygdala and mediodorsal nucleus of the thalamus (Murray and Rudebeck, 2013), a point taken up later.

In a separate devaluation experiment, monkeys with selective excitotoxic lesions of OFC also had deficits in updating the valuation of expected outcomes in an action-outcome task (Rhodes and Murray, 2013; Fig. 2d, right). In fact, these results came from some of the same monkeys tested on the object-outcome task (Fig. 2d, left). This result is surprising given that previous results in both rodents (Ostlund and Balleine, 2007) and macaque monkeys (Rudebeck et al., 2008) suggested that OFC was required only for Pavlovian (stimulus-outcome) or object-reward processes, not for action-outcome or instrumental responses. These data indicate that, in primates, OFC may be necessary for all computations in which the sensory-specific properties of the outcome have to be considered. On this view, OFC functions in evaluating such objects both as choice items (in the object devaluation task) and as object outcomes (e.g., food rewards in both object and action devaluation tasks).

Taken together, the results from devaluation studies suggest that OFC encodes a unified representation of outcomes, including their specific sensory properties and current biological value, which could enter into a variety of associations. For example, outcomes could be associated not only with objects and actions, but also with abstract concepts, behavioral rules and strategies. Indeed, one study in macaques that employed an analog of the Wisconsin Card Sorting Test implicated OFC in the selection of behavior-guiding rules after rule changes (Buckley et al., 2009).

Our results appear to fit with data from rodents suggesting that OFC is critical for representing the sensory qualities of specific outcomes associated with actions as well as objects (Burke et al., 2008; Gremel and Costa, 2013; Keiflin et al., 2013) and for model-based, as opposed to model-free, reinforcement learning (McDannald et al., 2011, 2014). Model-based learning draws on an animal’s detailed knowledge of the world, in contrast to model-free reinforcement learning, which accounts for behaviors based solely on experience with stimulus–response–outcome associations. Primates and rodents diverged long ago, but we assume that as OFC evolved in both lineages, the parts inherited from their common ancestor retained a conserved function and new parts (Passingham and Wise, 2012; Preuss, 1995) performed related ones. As a result, we are now in a position to answer the question posed by the title of this section.
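The contrast between the two learning schemes can be sketched with a standard textbook-style toy example in Python; it illustrates the general distinction and is not a model from the studies cited above. The function names, learning rate and values are assumptions. A model-free learner caches a value for each choice from experienced reward, so an outcome devalued outside the task leaves its cached values untouched until the outcome is experienced again. A model-based learner stores which outcome each choice produces and recomputes values from the outcome's current worth, so its preference shifts immediately.

```python
from __future__ import annotations

ALPHA = 0.2  # learning rate for the model-free delta rule (illustrative value)


def train_model_free(experience: list[tuple[str, float]], n_passes: int = 50) -> dict[str, float]:
    """Model-free: cache one value per choice, updated only from experienced reward."""
    q: dict[str, float] = {}
    for _ in range(n_passes):
        for choice, reward in experience:
            old = q.get(choice, 0.0)
            q[choice] = old + ALPHA * (reward - old)  # delta-rule (prediction-error) update
    return q


def model_based_values(
    transitions: dict[str, dict[str, float]], outcome_values: dict[str, float]
) -> dict[str, float]:
    """Model-based: recompute each choice's value from a learned choice->outcome model."""
    return {
        choice: sum(p * outcome_values[outcome] for outcome, p in outcomes.items())
        for choice, outcomes in transitions.items()
    }


# Training: choice_A yields food_X (worth 1.0), choice_B yields food_Y (worth 0.8).
experience = [("choice_A", 1.0), ("choice_B", 0.8)]
transitions = {"choice_A": {"food_X": 1.0}, "choice_B": {"food_Y": 1.0}}

# Selective satiation on food_X, with no further experience of the choices.
devalued = {"food_X": 0.1, "food_Y": 0.8}

q_cached = train_model_free(experience)
q_model = model_based_values(transitions, devalued)

print(max(q_cached, key=q_cached.get))  # choice_A: cached values ignore the devaluation
print(max(q_model, key=q_model.get))    # choice_B: the model uses the outcome's current worth
```

The design choice that matters here is where outcome identity lives: only the model-based learner keeps a record of which specific food follows each choice, which is the kind of information the devaluation results attribute to OFC.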

Summary: What does OFC do?

Impairments in object reversal learning (Fig. 2b) and emotional responding (Fig. 2c) in macaques previously ascribed to OFC appear to be due to interrupting fiber tracts running near (or through) OFC. Accordingly, the available evidence now argues against the idea that OFC per se plays an important role in behavioral inhibition, inhibitory self-control or emotional regulation. Instead, OFC, acting together with the amygdala and mediodorsal thalamus, represents the specific identity of predicted outcomes and their up-to-the-moment value, taking into account an animal’s or human’s current state (Gottfried and Zelano, 2011; Holland and Gallagher, 2004; Passingham and Wise, 2012; Schoenbaum et al., 2009). In this way, OFC plays a crucial role in making beneficial choices among either objects or outcomes (Fig. 2d), and perhaps concepts, rules and strategies, as well. In addition, the outcome representations housed in OFC presumably mediate associations that promote certain Pavlovian responses (e.g., licking).

What information does OFC process?

Signals related to attributes of future outcomes

Now that we can say what OFC does, we can explore its mechanisms more fully. Physiological recording studies have demonstrated that many neurons in OFC signal information related to reinforcers that have become associated with stimuli, choices and actions, specifically foods, fluids and mildly aversive outcomes. The first neurophysiological studies of OFC showed that its cells encoded stimuli that predicted impending fluid rewards (Niki et al., 1972; Rosenkilde et al., 1981; Thorpe et al., 1983), and a myriad of additional outcome-related signals have been reported since then (for reviews, see Abe et al., 2011; Morrison and Salzman, 2011; Padoa-Schioppa and Cai, 2011; Pearson et al., 2014; Schultz et al., 2011; Simmons et al., 2007; Wallis and Kennerley, 2010).

One way to synthesize the diverse array of signals in OFC depends on the idea that its neurons signal predicted outcomes as well as their receipt. Specifically, a substantial proportion of OFC neurons encode the potential for, sensory attributes of and subjective value of outcomes associated with external stimuli. Other OFC neurons signal similar information when the predicted outcomes actually occur (for example, Padoa-Schioppa and Assad, 2006). It is reasonable to think that the stimulus-evoked signals convey the subjective value of biologically significant outcomes because, unlike neurons in other parts of the brain, neurons in OFC distinguish between appetitive and aversive outcomes. In contrast, signals elsewhere appear to code motivational salience, which does not depend as much on whether the outcome is detrimental or beneficial (Morrison and Salzman, 2009; Roesch and Olson, 2004). These neurophysiological attributes, combined with connections from limbic structures and a variety of sensory modalities (Fig. 1; Carmichael and Price, 1995a, b; Ghashghaei et al., 2007), place OFC in a unique position. Indeed, OFC is one of the few places in the brain where visual, gustatory, olfactory and visceral sensory inputs converge (Price, 2005). Thus, neurons in this part of PFC have access to the information essential for evaluating and choosing advantageously among options.

Functional brain imaging (fMRI) investigations of humans similarly suggest that OFC signals outcome-related attributes (Gottfried and Zelano, 2011; O'Doherty, 2007). One difference between human and animal studies is that the former rarely use primary reinforcement, such as fluid reward or chocolate (cf. Small et al., 2001), whereas studies involving animals almost always do. Instead, human participants usually play games or perform other tasks to earn money or other secondary reinforcers. This difference complicates the comparison of results from different species and raises the possibility that separable parts of OFC encode primary and secondary reinforcement.

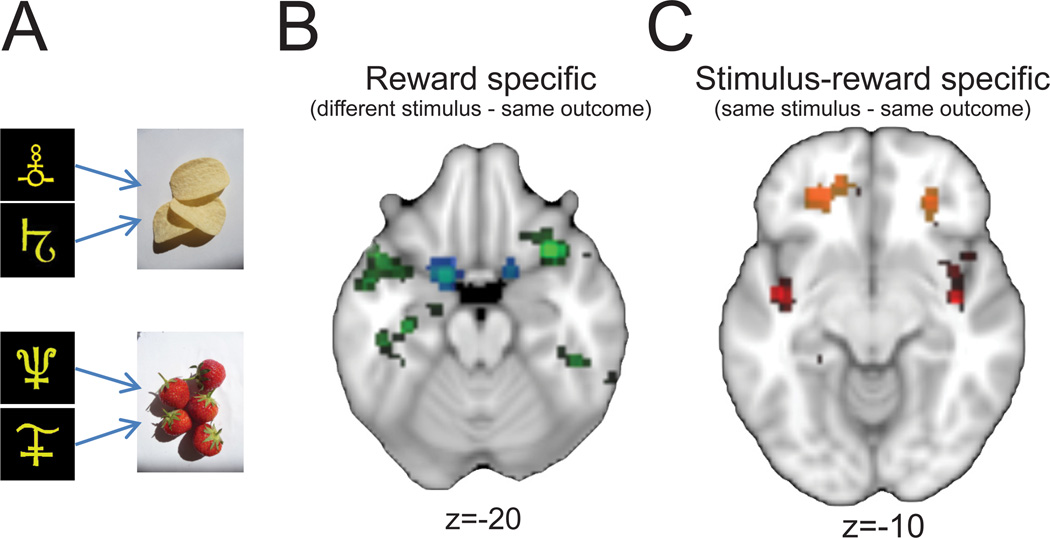

Two neuroimaging studies indicate that primary and secondary reinforcers may be encoded by separate parts of OFC in humans. A posterior to anterior dissociation in signaling primary and secondary reinforcement, respectively, was first reported in an experiment comparing OFC activation for sexually explicit images and money (Sescousse et al., 2010). The images used in that study were more complicated than suggested by terms like primary and secondary reinforcers, however, and many of them probably operated as both.

A recent study provided clearer evidence on this issue (Klein-Flugge et al., 2013). Using an experimental design that relied on repetition-suppression effects to map reward-related representations in OFC, the authors showed that representations in anterior OFC are specific to secondary reinforcers, whereas those in the most posterior part of OFC are specific to primary reinforcers. Subjects were taught that two pairs of stimuli predicted specific but different outcomes: for instance, two stimuli predicted strawberries and two other stimuli predicted potato crisps (Fig. 3a). To unmask parts of OFC that encoded primary rewards, such as strawberries, the authors looked for areas showing a decrease in activation when a different stimulus predicted the same primary reinforcer as on the previous trial (i.e., different stimulus, same outcome; Fig. 3b). In this condition, parts of posterior OFC as well as agranular insular cortex showed reduced activation. For secondary reinforcement, the authors searched for decreased activations after repeated presentations of the same stimulus-outcome combination (i.e., same stimulus, same outcome; Fig. 3c). In this condition, parts of anterior OFC showed reduced activation. Thus, primary and secondary reinforcers appear to be signaled in different parts of the human OFC, especially for distinct stimuli associated with specific reinforcers. Future research on nonhuman primates could determine whether these signals contribute causally to goal selection and outcome evaluation. In addition, fMRI studies in humans could provide further detail by investigating the generality of the anterior-posterior dissociation and whether different types of primary and secondary reinforcers map onto distinct portions of OFC.

Figure 3.

Encoding of primary and secondary reinforcers in OFC. A) Two stimuli were paired arbitrarily with each of two different foods, equally valued by participants. Stimuli appeared sequentially for 700 ms, separated by a 400-ms intertrial interval. B) Brain regions showing a decrement in BOLD response (green-blue shading) following presentation of two different stimuli that predicted the same food reward (different stimulus, same outcome). This measure is thought to represent primary reinforcers. C) Brain regions showing a decrement in BOLD response (red-yellow shading) following the sequential presentation of the same stimulus-reward pairing (same stimulus, same outcome). This measure is thought to represent secondary reinforcers. Z coordinates denote dorsal-ventral level. Adapted from Klein-Flugge et al. (2013).
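The logic of the repetition-suppression design described above can be sketched as a labeling of consecutive trials. This Python fragment is a hypothetical illustration: the stimulus labels are invented, and it covers only how each pair of successive stimuli falls into a condition, not the imaging analysis itself. Pairs in which the predicted food changes get their own label; treating them as a comparison condition is our assumption for illustration.

```python
from __future__ import annotations

# Hypothetical stimulus labels: two arbitrary stimuli predict each food,
# mirroring the design described in the text.
STIMULUS_TO_FOOD = {
    "S1": "strawberry", "S2": "strawberry",
    "S3": "crisps", "S4": "crisps",
}


def classify_transition(prev: str, curr: str) -> str:
    """Label a pair of consecutive stimuli by what was repeated."""
    if prev == curr:
        # Stimulus repeated, so the predicted outcome is repeated too.
        return "same stimulus, same outcome"
    if STIMULUS_TO_FOOD[prev] == STIMULUS_TO_FOOD[curr]:
        # New stimulus, but it predicts the same food as the previous one.
        return "different stimulus, same outcome"
    return "different outcome"


def label_sequence(stimuli: list[str]) -> list[str]:
    """Label every consecutive pair in a stimulus sequence."""
    return [classify_transition(p, c) for p, c in zip(stimuli, stimuli[1:])]


for label in label_sequence(["S1", "S2", "S2", "S3", "S1"]):
    print(label)
# different stimulus, same outcome
# same stimulus, same outcome
# different outcome
# different outcome
```

Note that a "same stimulus" repeat necessarily repeats the outcome as well; only the "different stimulus, same outcome" pairs isolate a repeated outcome with a new stimulus.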

Outcome signals in OFC: beyond sensory attributes and subjective value

Although some neurons in OFC signal the identity and subjective value of reward (Morrison and Salzman, 2009; Tremblay and Schultz, 1999), OFC neurons might represent other aspects of reward, as well. For example, OFC is thought to play a role in the hedonic experience of reward through its role in valuation (as reviewed by Kringelbach, 2005). If so, then OFC neurons might signal reward irrespective of its context, attributes, or meaning, somewhat similar to theories suggesting that the OFC is important for computing general economic value (Padoa-Schioppa, 2007).

A recent series of experiments by Tsujimoto and colleagues set out to tackle this question. Their results indicate that a proportion of neural responses in OFC convey the sensory or informational aspects of rewards, rather than being directly related to either their identity or hedonic aspects (Tsujimoto et al., 2011, 2012). Macaques were trained to implement different behavioral response strategies based on instructional cues. In one condition, the orientation of a bar presented on a monitor screen instructed macaques whether to make the same response as on the previous trial or to make a different one (i.e., stay or switch). In another, the delivery of one drop or two half-drops of fluid served as the instruction cues, again indicating whether to stay or switch (Fig. 4a). Correct performance in either condition was rewarded with the same fluid reward, delivered in the same way. On trials in which fluid served both as an instructional cue and a reward, it was possible to determine whether neurons in OFC encoded reward for its instructional meaning, as feedback for a correct response, or irrespective of its meaning. If OFC neurons always had the last property, it would indicate that this cortical area signals reward for its pleasurable or hedonic aspects.

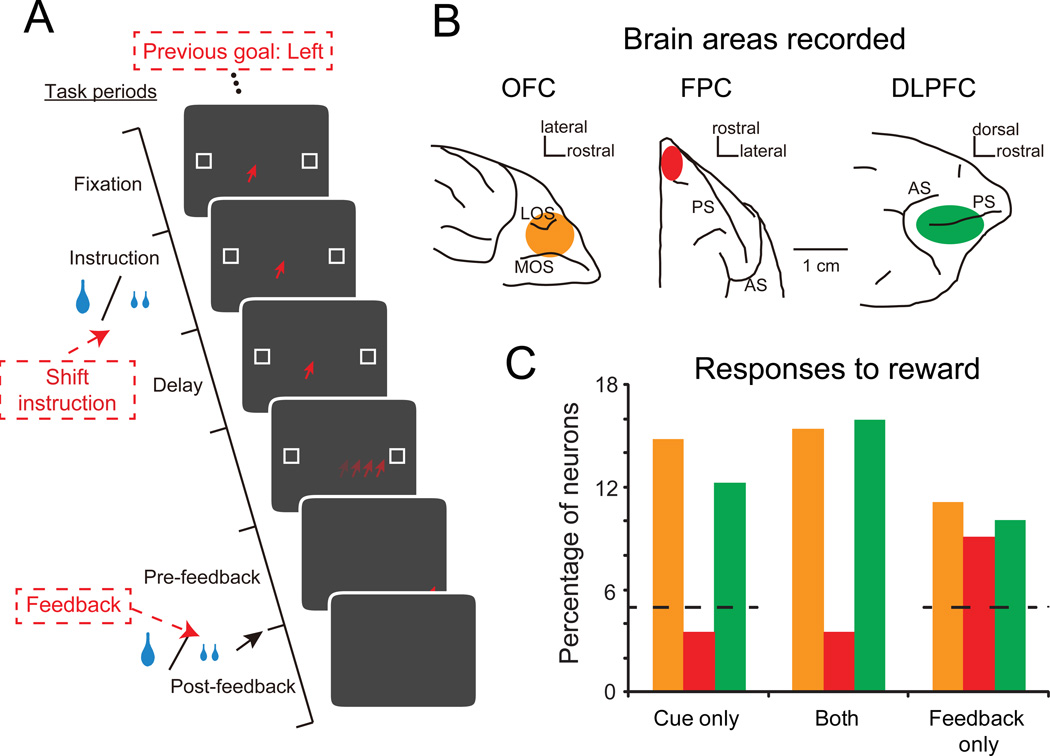

Figure 4.

OFC activity related to rewards delivered as instructional cues or feedback. A) Trial sequence. Trials started with the presentation of an instructional cue, either a single drop of fluid or two smaller drops. These cues signaled to the monkey whether to stay with the response from the previous trial (left or right) or switch to the alternative option. Once the monkey made a saccade to one of the two potential targets, both targets became solid white, whether or not the trial was performed correctly. Successful performance led to the delivery of additional fluid reward as feedback (reinforcement). Red type indicates sequence on example trial. B) Brain regions studied: OFC (orange on ventral view of macaque frontal lobe); frontal polar cortex (FPC, red on dorsal view of macaque frontal lobe); dorsolateral prefrontal cortex (DLPFC, green on lateral view of macaque frontal lobe). C) Percentage of neurons in OFC, FPC and DLPFC encoding the reward when delivered as an instructional cue (cue only), as feedback (feedback only) or both. Color scheme as in (B). Dashed line indicates noise/chance level for cue and feedback responses. Adapted from Tsujimoto et al. (2012).

A proportion of the neurons in OFC encoded the reward only when it was presented as an instruction cue; these same neurons did not respond to the reward delivered for correctly performing the instructed strategy (cue only, Fig. 4c). A separate population of OFC neurons signaled reward only when it was delivered for a correctly performed trial, and yet another population signaled rewards irrespective of when they were delivered (feedback only and both, respectively; Fig. 4c). Within OFC, roughly equally sized populations of neurons—about 15 percent of the task-related sample—fell into each of these three categories (Fig. 4c). Interestingly, a similar pattern of results was obtained in dorsolateral PFC, whereas the cortex of the frontal pole signaled reward only as feedback (Fig. 4c).

The presence of separable populations of OFC neurons signaling the occurrence of fluid reward delivery in these different ways is intriguing. Some neurons in OFC clearly signal the delivery of reward irrespective of its context, suggesting that this population might convey the subjective value of reward or perhaps its specific identity. Neurons encoding reward only as feedback could serve to reinforce or extinguish previously learned associations, a process critical for updating associational knowledge and models of the environment. There is also evidence suggesting that reward-as-feedback encoding in OFC is task specific (Luk and Wallis, 2013).

Finally, neurons in OFC that signal instructional—as opposed to reward-related—information likely encode representations in addition to the “reward as instruction” signals described above. Encoding of task-related signals in OFC unrelated to reinforcement, such as the specific identity of sensory stimuli or the current abstract behavior-guiding rule, has also been reported in a number of studies in humans, macaques and rodents, but these signals have often received little emphasis (for example, Schoenbaum and Eichenbaum, 1995; Wallis et al., 2001; Wallis and Miller, 2003; Zelano et al., 2011). Instead, studies and reviews (including our own) have concentrated on the reward-related attributes of OFC activity (Morrison and Salzman, 2011; Rudebeck and Murray, 2011a). This emphasis stemmed partly from efforts to link the data to neuropsychological results and theories concerning the role of OFC in emotion (Rolls, 2000). The existence of non-reinforcement-related signals in OFC is potentially important because it suggests a richer representation of the environment beyond reward. It adds weight to the hypothesis that OFC does not simply signal the attributes of a particular reinforcer or stimulus-reinforcer association but instead provides a map of all relevant attributes, related to reinforcement or not, needed to parse the environment (Wilson et al., 2014). It is worth noting that both task-relevant and task-irrelevant signals have been reported in other parts of PFC in macaques, suggesting that encoding of the whole environment may be a property of PFC areas in general, and not specific to OFC (Genovesio et al., 2014; Mann et al., 1988).

Despite its intuitive appeal, one issue with the idea that OFC represents all relevant attributes of the environment is that it does not readily provide an explanation of how certain sensory features become behaviorally relevant and therefore encoded by neurons in OFC. One possibility is that, in novel settings, all sensory aspects of the task are represented in OFC. Through learning, these representations are pruned until only those that have led to the desired outcomes will be encoded, an idea that has already gained some attention (Huys et al., 2012).

The origin of outcome-related signals

Neurophysiological and neuroimaging studies indicate the diverse types of neural signals present in OFC, but they do not indicate how these signals arise. Understanding the source of these signals could prove to be important for future attempts to influence OFC activity therapeutically. OFC receives connections from parts of the limbic system as well as from many of the different sensory modalities (Fig. 1, Price, 2005). Of all of the areas that interact with OFC, perhaps the amygdala has received the most attention. Interaction between these two structures is thought to be central to emotion and reward-guided behaviors (Gottfried et al., 2003; Holland and Gallagher, 2004; Murray and Wise, 2010; Salzman and Fusi, 2010; Schoenbaum and Roesch, 2005). Exactly how the amygdala contributes to neural signals in OFC remains unclear. The two available studies that have assessed the impact of amygdala damage on OFC outcome-related activity—one in humans and one in rats—offer differing perspectives, potentially due to species differences (Hampton et al., 2007; Schoenbaum et al., 2003b). In addition, studies that recorded from both OFC and the amygdala in primates have emphasized opposing roles for these two structures in encoding stimulus–outcome associations for rewards and punishments (Morrison et al., 2011), with OFC encoding appetitive outcomes earlier than the amygdala, and the amygdala encoding aversive outcomes earlier than OFC.

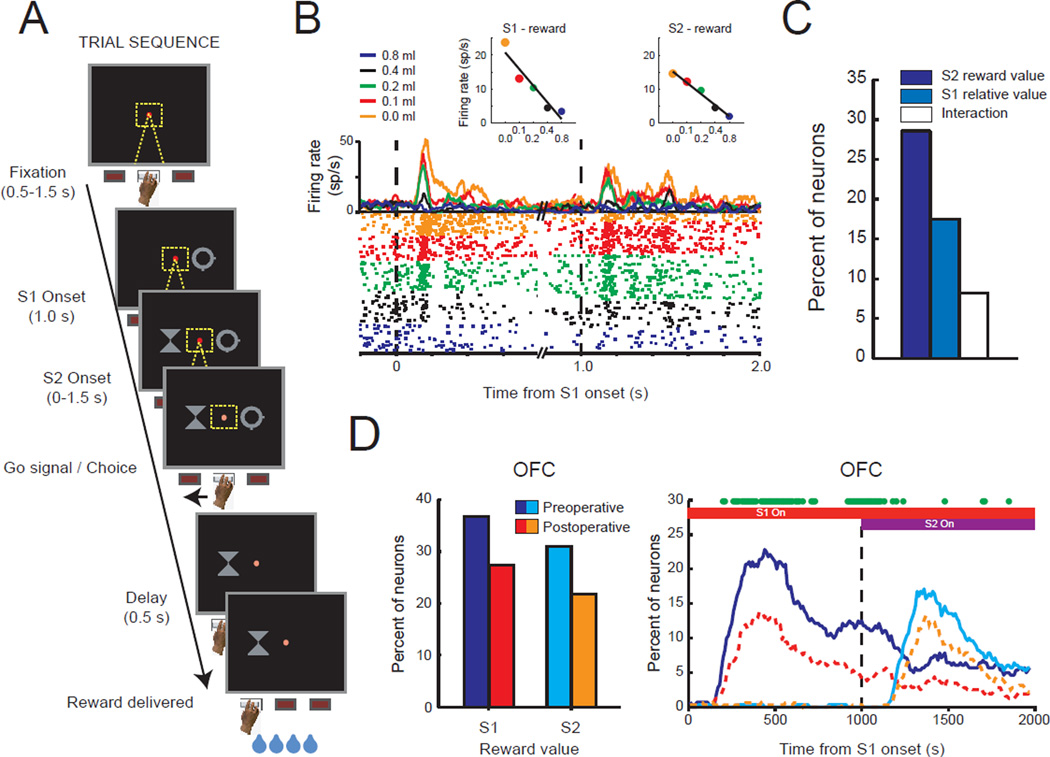

To examine the amygdala’s contribution to outcome encoding, we recorded neural activity in lateral OFC (areas 11 and 13; Fig. 1, red shaded area) and in a restricted part of medial prefrontal cortex (MFC; dorsal bank of the cingulate sulcus, corresponding to areas 9v and 24c) of macaques while they performed a choice task for fluid rewards (Fig. 5a; Rudebeck et al., 2013a). Here, different amounts of fluid reward, which we take to be encoded as an attribute of the outcome, were assigned to the different stimuli available for choice. Recordings were made before and after amygdala lesions. When the amygdala was intact, similar to several previous reports (for example, Wallis and Miller, 2003), we found that many neurons in both OFC and MFC signaled reward or outcome-related aspects of the task, including the value of anticipated outcomes associated with external stimuli (S1 and S2, Fig. 5b), as well as the value of received outcomes (not illustrated). Neural activity associated with reward was, however, more prevalent in OFC than in MFC. For instance, relative to MFC, more neurons in OFC signaled the amount of reward associated with the visual cues that the macaques chose, and they did so earlier.

Figure 5.

The effect of amygdala lesions on reward-value encoding in OFC. A) Trial sequence. On each trial, monkeys were sequentially presented with two stimuli (S1 and S2), each associated with a different amount of fluid reward, and were instructed to choose between them. Monkeys nearly always chose the stimulus associated with the larger amount. B) Spike density and raster plots illustrating the activity of one neuron in OFC that exhibited its highest firing rate to stimuli associated with the smallest amount of reward. Each dot in the raster plot indicates a time at which this neuron discharged. The colors of the curves and of the dots in the raster plot correspond to the amount of reward associated with each stimulus, as noted in the key. Insets show the relationship between the neuron’s firing rate and reward value within the periods after S1 and S2 presentation, respectively. C) The proportion of OFC neurons encoding the absolute value of S2, the relative value of S1 (either higher or lower than S2), or the interaction between these factors after the presentation of the second stimulus. D) Effect of amygdala lesions. Left: proportion of OFC neurons preoperatively (blue/turquoise) and postoperatively (red/orange) encoding the reward value associated with S1 and S2. Right: time course of stimulus-reward value encoding. Green dots indicate significant reductions in reward encoding after amygdala lesions. Red bar: duration of S1 presentation. Purple bar: duration of S2 presentation. Adapted from Rudebeck et al. (2013a).

Input from the amygdala to frontal cortex was removed by making complete excitotoxic lesions of the amygdala in both hemispheres. This led to a decrease in the proportion of neurons in OFC encoding outcome-related aspects of the task (Fig. 5d). It also altered the latency at which OFC neurons signaled the amount of reward associated with different options. By contrast, outcome-related encoding in MFC was largely unchanged. Thus, despite projecting to both MFC and OFC, the amygdala has a greater influence on outcome encoding in OFC.

Importantly, removing input from the amygdala did not abolish outcome-related activity in OFC; it led only to an approximately one-third reduction. Based on the work of Tsujimoto and colleagues, discussed previously, it could be that amygdala input is critical for the cells that signal reward irrespective of its behavioral context, a possibility that could be tested empirically. These data also indicate that the amygdala is not the sole source of the outcome or reward information signaled by neurons in OFC. Although the specific task parameters and experimental design could have attenuated the decrement that we observed (for example, our monkeys were highly familiar with the choice options), it seems likely that other parts of the brain play an integral role in OFC outcome signals. The hypothalamus, inferotemporal cortex, perirhinal cortex, dopaminergic neurons in the midbrain and parts of the thalamus all send projections to OFC (Goldman-Rakic and Porrino, 1985; Ongur et al., 1998; Saleem et al., 2008; Williams and Goldman-Rakic, 1998), and each could provide information related to potential reinforcement or outcomes.

Projections from the basal forebrain also target OFC (Kitt et al., 1987). Like input from the amygdala, inputs from the basal forebrain may influence outcome-related signals and, ultimately, the encoding of specific goals for action in OFC. In rats, bursting activity in basal forebrain neurons in response to behaviorally relevant stimuli is time-locked to task-related changes in local field potentials (LFPs) in OFC (Nguyen and Lin, 2014). In addition, electrical stimulation of the basal forebrain alters these LFPs, suggesting that neurons in the basal forebrain directly contribute to OFC encoding of behaviorally relevant stimuli. Although input from basal forebrain neurons is unlikely to directly influence the encoding of specific goals or outcomes in OFC (Croxson et al., 2011), temporally discrete signals from this region might serve to heighten the salience of specific options during evaluation and goal selection in OFC. Alternatively, it may support plasticity for behaviorally relevant associations, including stimulus-reward associations, during learning.

These findings highlight that many brain structures, including the amygdala, contribute to encoding specific outcomes in OFC. Determining the contribution of each of OFC’s inputs will undoubtedly be important for gaining a mechanistic, systems-level understanding of this area’s function. We think this knowledge has the potential to guide therapeutic interventions aimed at altering OFC processing.

Comparison and choice signals

When making a choice, the costs and benefits of a number of alternatives must be weighed before a final selection can be made. In some situations, the presence of alternative options can influence the valuation of the option currently under consideration (Tversky and Itamar, 1993). Given this, we would like to know whether neural signals in OFC are influenced by the relative value of alternative options presented for choice as well as the current option. If only the latter, then OFC’s role in choice would be strictly evaluative. If both, then its role would include relative and evaluative functions.

As already indicated, neurons in lateral OFC (Fig. 1, red shaded area) encode the predicted subjective value of external stimuli (Kennerley et al., 2009; Padoa-Schioppa and Assad, 2006; Roesch and Olson, 2005; Tremblay and Schultz, 1999). In many studies of macaque OFC in choice behavior, two or more choice options are revealed to the subject at the same time. This aspect of the experimental design makes it difficult to disentangle neuronal responses related to the valuation of each individual option. To get around this problem, a few studies have either held the value of one option stable while varying the other (Padoa-Schioppa and Assad, 2008) or they have sequentially presented the options for choice, allowing option values to be dissociated temporally (Luk and Wallis, 2013; Rudebeck et al., 2013a; Wallis and Miller, 2003).

In one of these studies, we sequentially presented macaques with two rewarded options, each associated with a different amount of fluid reward (Fig. 5a; Rudebeck et al., 2013a), while recording neural activity in lateral OFC. To determine whether reward-related signals in lateral OFC were influenced by the context in which they were presented, we examined whether encoding of the reward value of the second option (S2) was influenced by that of the first (S1). Although a small population of neurons in lateral OFC (about 8 percent) signaled the reward associated with S2 in a manner that depended on S1, a much larger proportion (about 25 percent) signaled the reward value of S2 independently of S1 (Fig. 5c). Thus, neurons in lateral OFC predominantly provide an assessment of each individual option independently of the other options available.

If OFC signals are critical for making choices, then variations in the activity of OFC neurons should be correlated with choice behavior. Padoa-Schioppa (2013) has examined this issue for alternatives of similar value. Previously, he had characterized three types of neurons in lateral OFC: “offer-value” neurons that encode the value of the offers presented, “chosen-value” neurons that signal the value of the best or chosen option, and “chosen-juice” neurons that signal the juice type (or juice identity) associated with the chosen option. Of these, chosen-juice neurons seem to be most specifically related to the outcome of the trial, as opposed to the evaluation of alternatives. Surprisingly, Padoa-Schioppa reported that slight fluctuations in either the pre-trial or within-trial activity of chosen-juice neurons, but not of offer-value or chosen-value neurons, correlated with small changes in choice behavior. We return later to the question of why it is chosen-juice neurons that show this correlation. For now, we simply note that this study provides evidence that neurons in lateral OFC participate in the process of choosing among options.

Evidence from human neuroimaging, macaque lesion and neurophysiology studies suggests a different view of OFC functional organization, one that questions the role of lateral OFC in choices per se, at least for the part of choice that involves comparisons and final selections. Several studies suggest that whereas lateral parts of OFC are important for evaluating options (Rudebeck and Murray, 2011b; Walton et al., 2010), its more medial parts are engaged when options have to be compared and selected (Boorman et al., 2009; FitzGerald et al., 2009; Hunt et al., 2012; Noonan et al., 2010b; Rudebeck and Murray, 2011b). Note that by medial OFC, we refer to the areas shaded in purple in Fig. 1, and not to the areas called MFC above.

The results of a recent neurophysiology study by Strait et al. (2014) add weight to the idea that value comparison occurs in medial OFC. In their study, monkeys chose between two sequentially presented options, each associated with a different amount of reward and a different probability that the reward would be delivered. Neurons in medial OFC signaled the value of the two offers as they were presented, using a “common currency” value scale that integrated reward size and probability. Notably, after the presentation of the second option, neurons encoded the values of the two options using an inversely correlated encoding scheme. Opposing encoding schemes for the two options suggest that the representations of the two offers may be mutually inhibiting each other. Furthermore, after the presentation of the second option, neurons rapidly transitioned to signal the value of the option that would subsequently be chosen. This type of inversely correlated encoding contrasts with the signals seen in lateral OFC, where options are encoded largely independently of each other (Fig. 5c) and with correlated encoding schemes (unpublished observations). Although these findings are intriguing, we note that only a small proportion of neurons in medial OFC (about 10 percent) were found to have these properties, and that neurons in other brain areas were not examined using the same methods (Strait et al., 2014).
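To make the idea of a “common currency” concrete, the Python sketch below collapses reward size and probability into a single scalar and then compares two offers on that scale. It is a minimal illustration under our own assumptions, not the analysis used by Strait et al. (2014): the functional form (probability × magnitude^alpha), the parameter alpha, and the names Offer, common_currency_value, value_difference and choose are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """A hypothetical option: a reward of some magnitude delivered with some probability."""
    magnitude: float    # e.g. volume of juice, in arbitrary units
    probability: float  # chance that the reward is delivered, in [0, 1]


def common_currency_value(offer, alpha=1.0):
    """Collapse magnitude and probability into one scalar value.

    Assumed form: probability * magnitude ** alpha. alpha = 1 gives risk-neutral
    expected value; alpha < 1 gives diminishing sensitivity to magnitude.
    """
    if not 0.0 <= offer.probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    return offer.probability * offer.magnitude ** alpha


def value_difference(first, second, alpha=1.0):
    """Signed comparison signal: positive favors the first offer, negative the second.

    A unit tracking this quantity responds with opposite sign to the two offers,
    which is one simple way to picture inversely correlated encoding.
    """
    return common_currency_value(first, alpha) - common_currency_value(second, alpha)


def choose(first, second, alpha=1.0):
    """Return 0 to select the first offer, 1 to select the second (ties go to the first)."""
    return 0 if value_difference(first, second, alpha) >= 0 else 1


risky_large = Offer(magnitude=0.3, probability=0.5)
safe_small = Offer(magnitude=0.2, probability=1.0)
print(choose(risky_large, safe_small))
```

Here the risky offer is worth 0.5 × 0.3 = 0.15 on the assumed scale and the certain offer 0.2, so choose returns 1 (the second offer). The single signed difference is the simplest comparison signal we could write down; it is meant only to show how two offers with different attributes can be placed on one scale before selection.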

In addition to providing evidence for a role for medial OFC in guiding choice, the findings of Strait et al. are consistent with the idea that there are two functionally distinct modules in OFC, one for identifying and signaling the specific characteristics of different outcomes associated with objects or actions and one for comparing these options to select a goal for action (Rudebeck and Murray, 2011a; Rushworth et al., 2011). How the comparison process in medial OFC influences action selection, the next step in the process of attaining one’s goals, is an issue we take up later.

Even if we accept the idea that medial OFC plays the larger role in choices and lateral OFC the larger role in evaluations, the question remains why the activity of chosen-juice neurons in lateral OFC varied with choice behavior whereas the activity of chosen-value neurons did not. One possibility is that fluctuations in the activity of these neurons reflect feedback from medial OFC about the result of the comparison process. Indeed, Padoa-Schioppa (2013) proposed this explanation and, as he noted, it would help to explain why pre-trial activity predicted the subsequent choice. One way to test this idea would be to record the activity of chosen-juice neurons in the absence of input from medial OFC, using temporary interference methods, such as pharmacogenetic inactivation, to specifically suppress the activity of medial OFC neurons that project to lateral OFC.

Summary: What information does OFC process?

It is commonly accepted that OFC processes information related to reward value in some way. Although this idea has contributed importantly to understanding OFC, recent results point to the need for more specific concepts. OFC neurons signal reward in multiple ways, which depend on the level of association (Fig. 3), the specific sensory properties of the reward, including its instantaneous subjective value (Fig. 2d, 5b, 5d), and the instructional information it conveys (Fig. 4). These signals are not homogeneously distributed within OFC (Fig. 3), which could account for some of the discrepancies in the literature. Converging lines of evidence point to a role for lateral OFC in evaluating options, fairly independently of each other, and medial OFC in comparing and contrasting them in order to make a choice.

How does OFC influence behavior?

Outcome signals: dopaminergic prediction errors

For any organism to learn, a prediction of the outcome associated with an action has to be compared to the actual outcome obtained from making that action (Sutton and Barto, 1998). The difference or “prediction error” can then be used to update expectations and drive learning. Dopaminergic neurons in the ventral tegmental area (VTA) and substantia nigra pars compacta are thought to provide one such prediction-error signal for reward-guided learning (Schultz et al., 1997), but where do the dopamine neurons get information about predicted outcomes?
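The prediction-error logic can be written as a simple delta rule, which is what a temporal-difference update reduces to when a trial ends immediately after the outcome (Sutton and Barto, 1998). In the Python sketch below, the learning rate alpha = 0.2, the reward schedule and the function name run_block are illustrative assumptions; the point is only that the error δ = r − V is large just after the reward contingencies change and shrinks as the expectation V is updated through experience.

```python
def run_block(rewards, value=0.0, alpha=0.2):
    """Delta-rule learning over one block of trials.

    On each trial the prediction error is delta = r - V, and the expectation V is
    nudged toward the obtained reward by alpha * delta. Returns the list of
    prediction errors and the expectation at the end of the block.
    """
    errors = []
    for reward in rewards:
        delta = reward - value   # obtained outcome minus predicted outcome
        value += alpha * delta   # update the expectation
        errors.append(delta)
    return errors, value


# Hypothetical schedule: reward (1.0) on every trial, then a block switch to omission (0.0).
block1_errors, learned_value = run_block([1.0] * 30)
block2_errors, _ = run_block([0.0] * 30, value=learned_value)

# Positive errors early in block 1 and negative errors early in block 2 both shrink with experience.
print("block 1, first vs last error:", round(block1_errors[0], 3), round(block1_errors[-1], 3))
print("block 2, first vs last error:", round(block2_errors[0], 3), round(block2_errors[-1], 3))
```

In this toy version, unexpected reward produces a positive error and unexpected omission a negative one, and both decline as trials accumulate after the switch, loosely mirroring the comparison of early and late trials in a block described next.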

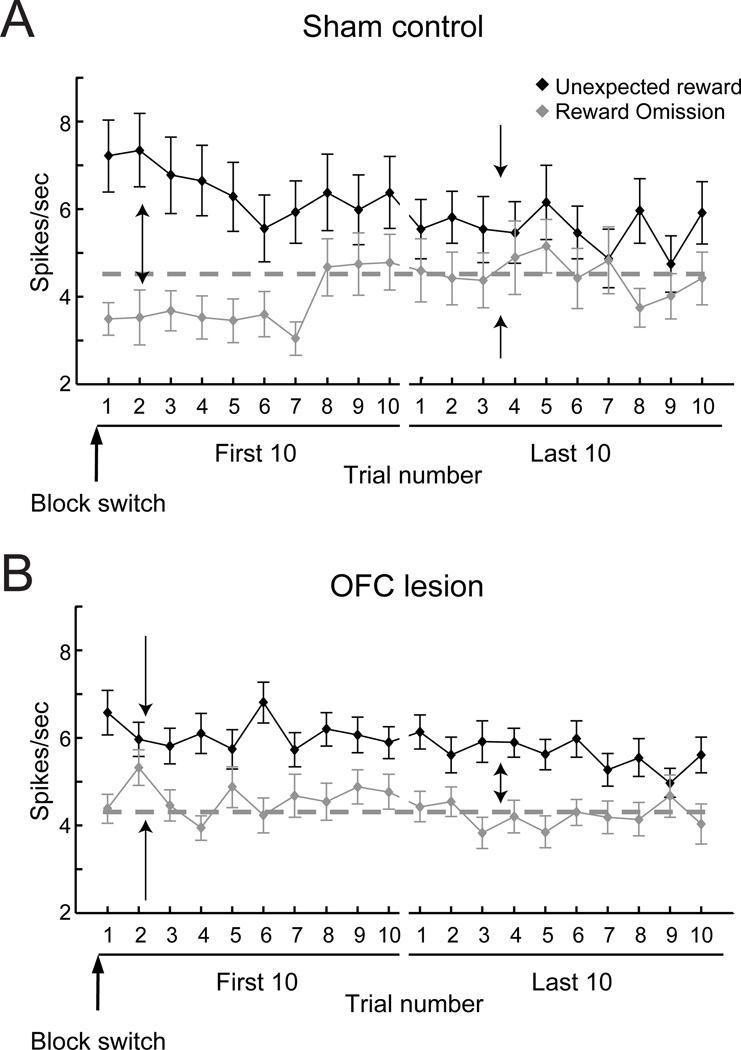

To address this issue, Takahashi and colleagues investigated whether OFC serves as a source of the prediction signal for VTA neurons (Takahashi et al., 2011). Recordings were made from putative dopaminergic projection neurons in the VTA of rats with ipsilateral OFC lesions (i.e., unilateral OFC lesions in the same hemisphere as the VTA recordings) while the rats performed a behavioral task designed to generate reward-related error signals (Fig. 6a). This task had previously been shown to depend on both OFC and VTA (Takahashi et al., 2009). Without input from OFC, dopaminergic neurons in the VTA did not exhibit the usual pattern of error-related firing when rats’ expectations about potential rewards were violated. Specifically, the initial increase or decrease in the activity of dopamine neurons following unexpected reward delivery or omission, respectively, was attenuated in rats with OFC lesions relative to controls (compare Fig. 6a and b). Furthermore, unlike in controls, the increases and decreases in activity to unexpected changes in reward did not change through repeated experience in rats without an OFC. This can be appreciated by comparing activity on trials immediately after a block switch with activity later in the block (Fig. 6a, First 10 vs. Last 10). These data indicate that without input from OFC, prediction-error signals conveyed by the firing of VTA neurons are not updated through experience, a key requirement for any associative learning error signal and for model updating in model-based reinforcement learning. Moreover, the contribution of OFC to VTA signals appears to be distinct from that of other frontal areas: inactivation of MFC in rats produces a different pattern of effects on VTA dopaminergic activity (Jo et al., 2013).

Figure 6.

The effect of OFC lesions on reward-prediction errors in dopaminergic neurons of the ventral tegmental area (VTA). Mean (±SEM) firing rate (spikes/s) of reward-responsive VTA neurons in sham-operated (A) and OFC-lesioned (B) rats following unexpected reward delivery or omission, immediately (First 10 trials) and later (Last 10 trials) after a change in the reward contingencies of the task (Block switch). Without input from OFC, VTA neurons do not display the normal patterns of “prediction error” signaling. Dark solid lines represent responses to unexpected delivery of reward. Gray solid lines represent responses to unexpected omission of reward. Gray dashed lines represent baseline firing. Arrows highlight differences in the VTA neuronal responses in the first and last 10 trials after block switches in the sham-operated and lesioned rats. Adapted from Takahashi et al. (2011).

In the same study by Takahashi and colleagues, computational modeling of neural activity of VTA neurons in rats that lacked an OFC was consistent with the idea that OFC provides VTA neurons with a specific prediction of the expected outcomes of potential choices, which the authors called a state. Specifically, models where the OFC signaled the current identity of the outcomes or states, not the value of an outcome, most closely matched the firing rate of dopamine neurons after OFC lesions. Thus, the data suggest that signals regarding the current set of specific, predicted outcomes from OFC are used by the dopamine neurons to compute reward prediction errors, which, in turn, are used to promote learning in other brain areas. Indeed, through feedback connections from the VTA to the frontal cortex, dopaminergic error signals likely affect the updating of outcome-related and other model-based signals in OFC, as well as in other parts of the frontal cortex that, like OFC, provide predictions about the future state of the internal or external environment.
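The contrast between knowing which state one is in and knowing only an average value can be illustrated with a toy simulation. The Python sketch below is a deliberately simplified caricature, not the model fitted by Takahashi et al. (2011): a state-aware learner keeps a separate expectation for each cue, whereas a “state-blind” learner, a crude hypothetical stand-in for the loss of OFC input, pools every trial into one shared expectation. The cue labels, reward values, learning rate and function names are all assumptions.

```python
from collections import defaultdict


def prediction_errors(trials, alpha=0.2, state_aware=True):
    """Return the delta-rule prediction error on each (state, reward) trial.

    A state-aware learner keeps one expectation per state; with state_aware=False
    every trial is pooled into a single shared expectation (a hypothetical
    stand-in for losing state information from OFC).
    """
    values = defaultdict(float)  # expectation per state, starting at 0
    errors = []
    for state, reward in trials:
        key = state if state_aware else "shared"
        delta = reward - values[key]
        values[key] += alpha * delta
        errors.append(delta)
    return errors


def mean_abs(xs):
    """Average magnitude of a list of prediction errors."""
    return sum(abs(x) for x in xs) / len(xs)


# Hypothetical task: cue "A" always yields reward 1.0, cue "B" yields 0.0; the cues alternate.
trials = [("A", 1.0), ("B", 0.0)] * 50
intact = prediction_errors(trials, state_aware=True)
blind = prediction_errors(trials, state_aware=False)

print("mean |error|, first 10 trials:", mean_abs(intact[:10]), mean_abs(blind[:10]))
print("mean |error|, last 10 trials: ", mean_abs(intact[-10:]), mean_abs(blind[-10:]))
```

With the two cues interleaved, the state-aware learner's errors decay toward zero, while the state-blind learner settles on an intermediate expectation and keeps generating errors of about 0.56 in magnitude that no longer shrink with experience. This only loosely resembles the failure of VTA error signals to change across a block after OFC lesions; it is meant to show why information about state identity, rather than value alone, matters for computing useful prediction errors.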

Choice signals: action selection, lateral frontal cortex and striatum

While potential alternatives for choice are being evaluated and compared in OFC, how might these signals influence the selection of actions in order to achieve the desired goal? Recent neurophysiological evidence suggests that one way OFC might affect processing in brain structures that control action planning, for example premotor cortex, is through a mechanism similar to top–down attention and biased competition (Pastor-Bernier and Cisek, 2011). In primates, an influence of OFC on premotor cortex could be mediated by connections between OFC and ventral, medial and dorsolateral PFC (Barbas and Pandya, 1989; Carmichael and Price, 1996; Saleem et al., 2013), which contribute multisynaptic pathways to dorsal premotor areas (Takahara et al., 2012).

A systematic analysis of the neurophysiological signals along the pathway(s) from OFC to premotor cortex has yet to be conducted. However, recordings of neural activity in OFC and lateral PFC appear to support the idea that predicted-outcome signals from OFC influence attentional and action processing in ventral and dorsolateral PFC (Cai and Padoa-Schioppa, 2014; Kennerley and Wallis, 2009a, b). For example, in the study by Tsujimoto et al. (2011), activity in OFC specified the strategy to be used on any given trial significantly earlier than did activity in dorsolateral PFC. Yet neurons in dorsolateral PFC, but not OFC, encoded the location of the upcoming response (left or right). Thus, the timing of these signals suggests that outcome predictions generated in OFC may guide action selection in premotor cortex via dorsolateral PFC.

Direct projections to the striatum are another route by which OFC might bias the selection of actions. OFC densely innervates the ventral and medial striatum (Haber et al., 1995), and interaction between OFC and distinct parts of the striatum regulates habitual vs. goal-directed behavior in mice (Gremel and Costa, 2013). Taking a mechanistic approach, two optogenetic studies in mice have recently shown that stimulation of OFC projections to the striatum can bias action selection in different ways. In the first study, Ahmari et al. (2013) used virally expressed channelrhodopsin to stimulate glutamatergic neurons in medial OFC. Selectively enhancing the firing rate of OFC neurons projecting to the striatum in one hemisphere induced repetitive behaviors. Interestingly, the increase in repetitive behaviors was observed only after repeated stimulation over days, suggesting that medial OFC’s influence on action control through the striatum is indirect or requires temporally slow adaptations in striatal microcircuits.

In the second study, Burguiere et al. (2013) revealed that selectively enhancing the activity of lateral OFC projections to the striatum in a genetic mouse model of obsessive-compulsive disorder (OCD) alleviated compulsive grooming. Careful inspection of the relevant neural circuits revealed that OFC projections influenced action selection by altering the balance of inhibition and excitation in striatal microcircuits.

Taken together, these studies reveal that OFC can influence action selection by altering processing in the striatum. In addition to taking a mechanistic approach to understanding the influence of OFC, these studies indicate that anatomically distinct parts of OFC play different roles in biasing actions. Whether OFC-striatal interactions in primates influence action in a similar manner is an exciting avenue for future investigation.

Summary: How does OFC influence behavior?

In primates, at least, OFC is not connected to structures that directly affect movements, such as motor or premotor areas, so it must influence behavior through interactions with other areas. A circuit-level understanding of OFC function helps elucidate its contribution to behavioral choice. Recent work has highlighted some of the routes via which OFC influences behavior, including its interactions with the basal forebrain, dopaminergic neurons in the VTA and the striatum.

Summary and conclusions

Despite the complicated design of the Antikythera mechanism, a product of thousands of years of astronomy and engineering, its inner workings and function were deciphered once the tools to do so became available, about a century after its discovery. Likewise, OFC is the product of millions of years of evolution that have made it an astonishingly complicated gizmo. We are only beginning to understand its functions, but we believe that using new tools, along with older ones, to discern its inner workings will help to unravel them. Here we adopted this mechanistic approach, one emphasizing the activity of individual neurons in OFC and an understanding of their causal contributions to behavior.

As we have seen, OFC, especially lateral OFC, signals the outcomes that are likely to occur after the choice of an object or an action. Importantly, these outcomes are not encoded in a featureless manner in OFC. Instead, the constellation of sensory features associated with potential outcomes is encoded as a representation of outcome identity, along with its biological value at any given moment. Thus, the function of OFC resembles that of the Antikythera mechanism in that both devices predict the future, which is why our title refers to OFC as an oracle of sorts.

Such outcome-predictive signals in lateral OFC result from a wide array of inputs, only some of which depend on input from the amygdala. Available evidence from primates indicates that once lateral OFC evaluates potential alternatives, medial OFC then compares and contrasts them using a common currency of universal value. While it seems likely that comparison signals in medial OFC contribute to the selection of actions, lateral OFC may also contribute to this process, especially when a choice is aimed at outcomes with particular features, specific spatial locations or particular contexts, either alone or in combination.

A mechanistic approach to elucidating OFC function aims to characterize how its specific patterns of neural activity result from distinct inputs, especially those related to the updated valuation of specific reinforcers and outcomes. We think that this type of knowledge is important for at least two reasons, both of which involve understanding the function of specific brain circuits, including both areas and pathways. First, it addresses not only how specific pathways produce predictive signals in OFC, but also how, in turn, OFC influences downstream structures to bias the choice of action and compare predicted signals with those that actually occur. Second, this type of knowledge will be critical for understanding disorders that involve dysfunction of OFC, such as major depressive disorder, OCD and substance abuse (Murray et al., 2011).

ACKNOWLEDGEMENTS

This work was supported, in part, by the Intramural Research Program of the National Institute of Mental Health and an internal grant from the Icahn School of Medicine at Mount Sinai. We are indebted to Steven P. Wise for comments on an earlier version of the manuscript and Andrew Rudebeck for his contagious excitement for obscure artifacts of antiquity.

Footnotes

CONFLICT OF INTEREST: None

References

- Abe H, Seo H, Lee D. The prefrontal cortex and hybrid learning during iterative competitive games. Ann N Y Acad Sci. 2011;1239:100–108. doi: 10.1111/j.1749-6632.2011.06223.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ahmari SE, Spellman T, Douglass NL, Kheirbek MA, Simpson HB, Deisseroth K, Gordon JA, Hen R. Repeated cortico-striatal stimulation generates persistent OCD-like behavior. Science. 2013;340:1234–1239. doi: 10.1126/science.1234733. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barbas H, Pandya DN. Architecture and intrinsic connections of the prefrontal cortex in the rhesus monkey. Journal of Comparative Neurology. 1989;286:353–375. doi: 10.1002/cne.902860306. [DOI] [PubMed] [Google Scholar]

- Blair RJ. Psychopathy, frustration, and reactive aggression: the role of ventromedial prefrontal cortex. Br J Psychol. 2010;101:383–399. doi: 10.1348/000712609X418480. [DOI] [PubMed] [Google Scholar]

- Boorman ED, Behrens TE, Woolrich MW, Rushworth MF. How green is the grass on the other side? Frontopolar cortex and the evidence in favor of alternative courses of action. Neuron. 2009;62:733–743. doi: 10.1016/j.neuron.2009.05.014. [DOI] [PubMed] [Google Scholar]

- Buckley MJ, Mansouri FA, Hoda H, Mahboubi M, Browning PG, Kwok SC, Phillips A, Tanaka K. Dissociable components of rule-guided behavior depend on distinct medial and prefrontal regions. Science. 2009;325:52–58. doi: 10.1126/science.1172377. [DOI] [PubMed] [Google Scholar]

- Burguiere E, Monteiro P, Feng G, Graybiel AM. Optogenetic stimulation of lateral orbitofronto-striatal pathway suppresses compulsive behaviors. Science. 2013;340:1243–1246. doi: 10.1126/science.1232380. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Burke KA, Franz TM, Miller DN, Schoenbaum G. The role of the orbitofrontal cortex in the pursuit of happiness and more specific rewards. Nature. 2008;454:340–344. doi: 10.1038/nature06993. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Butter CM. Perseveration in extinction and in discrimination reversal tasks following selective frontal ablations in Macaca mulatta. Physiol and Behav. 1969;4:163–171. [Google Scholar]

- Butter CM, Snyder DR, McDonald JA. Effects of orbital frontal lesions on aversive and aggressive behaviors in rhesus monkeys. J Comp Physiol and Psychol. 1970;72:132–144. doi: 10.1037/h0029303. [DOI] [PubMed] [Google Scholar]

- Cai X, Padoa-Schioppa C. Contributions of Orbitofrontal and Lateral Prefrontal Cortices to Economic Choice and the Good-to-Action Transformation. Neuron. 2014;81:1140–1151. doi: 10.1016/j.neuron.2014.01.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carmichael ST, Price JL. Limbic connections of the orbital and medial prefrontal cortex in macaque monkeys. J Comp Neurol. 1995a;363:615–641. doi: 10.1002/cne.903630408. [DOI] [PubMed] [Google Scholar]

- Carmichael ST, Price JL. Sensory and premotor connections of the orbital and medial prefrontal cortex of macaque monkeys. J Comp Neurol. 1995b;363:642–664. doi: 10.1002/cne.903630409. [DOI] [PubMed] [Google Scholar]

- Carmichael ST, Price JL. Connectional networks within the orbital and medial prefrontal cortex of macaque monkeys. J Comp Neurol. 1996;371:179–207. doi: 10.1002/(SICI)1096-9861(19960722)371:2<179::AID-CNE1>3.0.CO;2-#. [DOI] [PubMed] [Google Scholar]

- Chudasama Y, Robbins TW. Dissociable contributions of the orbitofrontal and infralimbic cortex to pavlovian autoshaping and discrimination reversal learning: further evidence for the functional heterogeneity of the rodent frontal cortex. J Neurosci. 2003;23:8771–8780. doi: 10.1523/JNEUROSCI.23-25-08771.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clark L, Cools R, Robbins TW. The neuropsychology of ventral prefrontal cortex: decision-making and reversal learning. Brain and Cognition. 2004;55:41–53. doi: 10.1016/S0278-2626(03)00284-7. [DOI] [PubMed] [Google Scholar]

- Clarke HF, Robbins TW, Roberts AC. Lesions of the medial striatum in monkeys produce perseverative impairments during reversal learning similar to those produced by lesions of the orbitofrontal cortex. J Neurosci. 2008;28:10972–10982. doi: 10.1523/JNEUROSCI.1521-08.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Croxson PL, Johansen-Berg H, Behrens TE, Robson MD, Pinsk MA, Gross CG, Richter W, Richter MC, Kastner S, Rushworth MF. Quantitative investigation of connections of the prefrontal cortex in the human and macaque using probabilistic diffusion tractography. J Neurosci. 2005;25:8854–8866. doi: 10.1523/JNEUROSCI.1311-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Croxson PL, Kyriazis DA, Baxter MG. Cholinergic modulation of a specific memory function of prefrontal cortex. Nat Neurosci. 2011;14:1510–1512. doi: 10.1038/nn.2971. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Damasio AR, Tranel D, Damasio H. Individuals with sociopathic behavior caused by frontal damage fail to respond autonomically to social stimuli. Behavl Brain Res. 1990;41:81–94. doi: 10.1016/0166-4328(90)90144-4. [DOI] [PubMed] [Google Scholar]

- Dias R, Robbins TW, Roberts AC. Dissociation in prefrontal cortex of affective and attentional shifts. Nature. 1996;380:69–72. doi: 10.1038/380069a0. [DOI] [PubMed] [Google Scholar]

- Fineberg NA, Potenza MN, Chamberlain SR, Berlin HA, Menzies L, Bechara A, Sahakian BJ, Robbins TW, Bullmore ET, Hollander E. Probing compulsive and impulsive behaviors, from animal models to endophenotypes: a narrative review. Neuropsychopharm. 2009;35:591–604. doi: 10.1038/npp.2009.185. [DOI] [PMC free article] [PubMed] [Google Scholar]

- FitzGerald TH, Seymour B, Dolan RJ. The role of human orbitofrontal cortex in value comparison for incommensurable objects. J Neurosci. 2009;29:8388–8395. doi: 10.1523/JNEUROSCI.0717-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Freeth T, Jones A, Steele JM, Bitsakis Y. Calendars with Olympiad display and eclipse prediction on the Antikythera Mechanism. Nature. 2008;454:614–617. doi: 10.1038/nature07130.

- Genovesio A, Tsujimoto S, Navarra G, Falcone R, Wise SP. Autonomous encoding of irrelevant goals and outcomes by prefrontal cortex neurons. J Neurosci. 2014;34:1970–1978. doi: 10.1523/JNEUROSCI.3228-13.2014.

- Ghashghaei HT, Hilgetag CC, Barbas H. Sequence of information processing for emotions based on the anatomic dialogue between prefrontal cortex and amygdala. Neuroimage. 2007;34:905–923. doi: 10.1016/j.neuroimage.2006.09.046.

- Goldman-Rakic PS, Porrino LJ. The primate mediodorsal (MD) nucleus and its projection to the frontal lobe. J Comp Neurol. 1985;242:535–560. doi: 10.1002/cne.902420406.

- Gottfried JA, O'Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- Gottfried JA, Zelano C. The value of identity: olfactory notes on orbitofrontal cortex function. Ann N Y Acad Sci. 2011;1239:138–148. doi: 10.1111/j.1749-6632.2011.06268.x.

- Gremel CM, Costa RM. Orbitofrontal and striatal circuits dynamically encode the shift between goal-directed and habitual actions. Nat Commun. 2013;4:2264. doi: 10.1038/ncomms3264.

- Haber SN, Kunishio K, Mizobuchi M, Lynd-Balta E. The orbital and medial prefrontal circuit through the primate basal ganglia. J Neurosci. 1995;15:4851–4867. doi: 10.1523/JNEUROSCI.15-07-04851.1995.

- Hampton AN, Adolphs R, Tyszka MJ, O'Doherty JP. Contributions of the amygdala to reward expectancy and choice signals in human prefrontal cortex. Neuron. 2007;55:545–555. doi: 10.1016/j.neuron.2007.07.022.

- Harlow J. Passage of an iron rod through the head. Boston Med Surg J. 1848;39:389–393.

- Holland PC, Gallagher M. Amygdala-frontal interactions and reward expectancy. Curr Opin Neurobiol. 2004;14:148–155. doi: 10.1016/j.conb.2004.03.007.

- Hunt LT, Kolling N, Soltani A, Woolrich MW, Rushworth MF, Behrens TE. Mechanisms underlying cortical activity during value-guided choice. Nat Neurosci. 2012;15:470–476, S471–S473. doi: 10.1038/nn.3017.

- Huys QJ, Eshel N, O'Nions E, Sheridan L, Dayan P, Roiser JP. Bonsai trees in your head: how the pavlovian system sculpts goal-directed choices by pruning decision trees. PLoS Comput Biol. 2012;8:e1002410. doi: 10.1371/journal.pcbi.1002410.

- Iversen SD, Mishkin M. Perseverative interference in monkeys following selective lesions of the inferior prefrontal convexity. Exp Brain Res. 1970;11:376–386. doi: 10.1007/BF00237911.

- Izquierdo A, Darling C, Manos N, Pozos H, Kim C, Ostrander S, Cazares V, Stepp H, Rudebeck PH. Basolateral amygdala lesions facilitate reward choices after negative feedback in rats. J Neurosci. 2013;33:4105–4109. doi: 10.1523/JNEUROSCI.4942-12.2013.

- Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci. 2004;24:7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004.

- Izquierdo A, Suda RK, Murray EA. Comparison of the effects of bilateral orbital prefrontal cortex lesions and amygdala lesions on emotional responses in rhesus monkeys. J Neurosci. 2005;25:8534–8542. doi: 10.1523/JNEUROSCI.1232-05.2005.

- Jbabdi S, Lehman JF, Haber SN, Behrens TE. Human and monkey ventral prefrontal fibers use the same organizational principles to reach their targets: tracing versus tractography. J Neurosci. 2013;33:3190–3201. doi: 10.1523/JNEUROSCI.2457-12.2013.

- Jo YS, Lee J, Mizumori SJ. Effects of prefrontal cortical inactivation on neural activity in the ventral tegmental area. J Neurosci. 2013;33:8159–8171. doi: 10.1523/JNEUROSCI.0118-13.2013.

- Jones B, Mishkin M. Limbic lesions and the problem of stimulus-reinforcement associations. Exp Neurol. 1972;36:362–377. doi: 10.1016/0014-4886(72)90030-1.

- Keiflin R, Reese RM, Woods CA, Janak PH. The orbitofrontal cortex as part of a hierarchical neural system mediating choice between two good options. J Neurosci. 2013;33:15989–15998. doi: 10.1523/JNEUROSCI.0026-13.2013.

- Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci. 2009;21:1162–1178. doi: 10.1162/jocn.2009.21100.

- Kennerley SW, Wallis JD. Encoding of reward and space during a working memory task in the orbitofrontal cortex and anterior cingulate sulcus. J Neurophysiol. 2009a;102:3352–3364. doi: 10.1152/jn.00273.2009.

- Kennerley SW, Wallis JD. Reward-dependent modulation of working memory in lateral prefrontal cortex. J Neurosci. 2009b;29:3259–3270. doi: 10.1523/JNEUROSCI.5353-08.2009.

- Kitt CA, Mitchell SJ, DeLong MR, Wainer BH, Price DL. Fiber pathways of basal forebrain cholinergic neurons in monkeys. Brain Res. 1987;406:192–206. doi: 10.1016/0006-8993(87)90783-9.

- Klein-Flügge MC, Barron HC, Brodersen KH, Dolan RJ, Behrens TE. Segregated encoding of reward-identity and stimulus-reward associations in human orbitofrontal cortex. J Neurosci. 2013;33:3202–3211. doi: 10.1523/JNEUROSCI.2532-12.2013.

- Kringelbach ML. The human orbitofrontal cortex: linking reward to hedonic experience. Nat Rev Neurosci. 2005;6:691–702. doi: 10.1038/nrn1747.

- Lehman JF, Greenberg BD, McIntyre CC, Rasmussen SA, Haber SN. Rules ventral prefrontal cortical axons use to reach their targets: implications for diffusion tensor imaging tractography and deep brain stimulation for psychiatric illness. J Neurosci. 2011;31:10392–10402. doi: 10.1523/JNEUROSCI.0595-11.2011.

- Luk CH, Wallis JD. Choice coding in frontal cortex during stimulus-guided or action-guided decision-making. J Neurosci. 2013;33:1864–1871. doi: 10.1523/JNEUROSCI.4920-12.2013.

- Mann SE, Thau R, Schiller PH. Conditional task-related responses in monkey dorsomedial frontal cortex. Exp Brain Res. 1988;69:460–468. doi: 10.1007/BF00247300.

- McDannald MA, Esber GR, Wegener MA, Wied HM, Liu TL, Stalnaker TA, Jones JL, Trageser J, Schoenbaum G. Orbitofrontal neurons acquire responses to 'valueless' Pavlovian cues during unblocking. eLife. 2014;3:e02653. doi: 10.7554/eLife.02653.

- McDannald MA, Lucantonio F, Burke KA, Niv Y, Schoenbaum G. Ventral striatum and orbitofrontal cortex are both required for model-based, but not model-free, reinforcement learning. J Neurosci. 2011;31:2700–2705. doi: 10.1523/JNEUROSCI.5499-10.2011.

- Mishkin M. Perseveration of central sets after frontal lesions in monkeys. In: Warren JM, Akert K, editors. The Frontal Granular Cortex and Behavior. New York: McGraw-Hill; 1964. pp. 219–241.

- Morrison SE, Saez A, Lau B, Salzman CD. Different time courses for learning-related changes in amygdala and orbitofrontal cortex. Neuron. 2011;71:1127–1140. doi: 10.1016/j.neuron.2011.07.016.

- Morrison SE, Salzman CD. The convergence of information about rewarding and aversive stimuli in single neurons. J Neurosci. 2009;29:11471–11483. doi: 10.1523/JNEUROSCI.1815-09.2009.

- Morrison SE, Salzman CD. Representations of appetitive and aversive information in the primate orbitofrontal cortex. Ann N Y Acad Sci. 2011;1239:59–70. doi: 10.1111/j.1749-6632.2011.06255.x.

- Murray EA, Rudebeck PH. The drive to strive: goal generation based on current needs. Front Neurosci. 2013;7:112. doi: 10.3389/fnins.2013.00112.

- Murray EA, Wise SP. Interactions between orbital prefrontal cortex and amygdala: advanced cognition, learned responses and instinctive behaviors. Curr Opin Neurobiol. 2010;20:212–220. doi: 10.1016/j.conb.2010.02.001.

- Murray EA, Wise SP, Drevets WC. Localization of dysfunction in major depressive disorder: prefrontal cortex and amygdala. Biol Psychiatry. 2011;69:e43–e54. doi: 10.1016/j.biopsych.2010.09.041.

- Nguyen DP, Lin SC. A frontal cortex event-related potential driven by the basal forebrain. eLife. 2014;3:e02148. doi: 10.7554/eLife.02148.

- Niki H, Sakai M, Kubota K. Delayed alternation performance and unit activity of the caudate head and medial orbitofrontal gyrus in the monkey. Brain Res. 1972;38:343–353. doi: 10.1016/0006-8993(72)90717-2.

- Noonan MP, Sallet J, Rudebeck PH, Buckley MJ, Rushworth MF. Does the medial orbitofrontal cortex have a role in social valuation? Eur J Neurosci. 2010a;31:2341–2351. doi: 10.1111/j.1460-9568.2010.07271.x.

- Noonan MP, Walton ME, Behrens TE, Sallet J, Buckley MJ, Rushworth MF. Separate value comparison and learning mechanisms in macaque medial and lateral orbitofrontal cortex. Proc Natl Acad Sci USA. 2010b;107:20547–20552. doi: 10.1073/pnas.1012246107.

- O'Doherty JP. Lights, camembert, action! The role of human orbitofrontal cortex in encoding stimuli, rewards, and choices. Ann N Y Acad Sci. 2007;1121:254–272. doi: 10.1196/annals.1401.036.

- Öngür D, An X, Price JL. Prefrontal cortical projections to the hypothalamus in macaque monkeys. J Comp Neurol. 1998;401:480–505.

- Ostlund SB, Balleine BW. Orbitofrontal cortex mediates outcome encoding in Pavlovian but not instrumental conditioning. J Neurosci. 2007;27:4819–4825. doi: 10.1523/JNEUROSCI.5443-06.2007.

- Padoa-Schioppa C. Orbitofrontal cortex and the computation of economic value. Ann N Y Acad Sci. 2007;1121:232–253. doi: 10.1196/annals.1401.011.

- Padoa-Schioppa C. Neurobiology of economic choice: a good-based model. Annu Rev Neurosci. 2011;34:333–359. doi: 10.1146/annurev-neuro-061010-113648.

- Padoa-Schioppa C. Neuronal origins of choice variability in economic decisions. Neuron. 2013;80:1322–1336. doi: 10.1016/j.neuron.2013.09.013.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Padoa-Schioppa C, Assad JA. The representation of economic value in the orbitofrontal cortex is invariant for changes of menu. Nat Neurosci. 2008;11:95–102. doi: 10.1038/nn2020.

- Padoa-Schioppa C, Cai X. The orbitofrontal cortex and the computation of subjective value: consolidated concepts and new perspectives. Ann N Y Acad Sci. 2011;1239:130–137. doi: 10.1111/j.1749-6632.2011.06262.x.

- Passingham RE, Wise SP. The Neurobiology of the Prefrontal Cortex: Anatomy, Evolution, and the Origin of Insight, 1 edn. USA: Oxford University Press; 2012.

- Pastor-Bernier A, Cisek P. Neural correlates of biased competition in premotor cortex. J Neurosci. 2011;31:7083–7088. doi: 10.1523/JNEUROSCI.5681-10.2011.

- Pearson JM, Watson KK, Platt ML. Decision making: the neuroethological turn. Neuron. 2014;82:950–965. doi: 10.1016/j.neuron.2014.04.037.

- Petrides M, Pandya DN. Comparative architectonic analysis of the human and macaque frontal cortex. In: Boller F, Grafman J, editors. Handbook of Neuropsychology. Amsterdam: Elsevier Science Publishers; 1994. pp. 17–58.

- Preuss T. Do rats have a prefrontal cortex? The Rose-Woolsey-Akert program reconsidered. J Cogn Neurosci. 1995;7:1–24. doi: 10.1162/jocn.1995.7.1.1.

- Price JL. Free will versus survival: brain systems that underlie intrinsic constraints on behavior. J Comp Neurol. 2005;493:132–139. doi: 10.1002/cne.20750.

- Rhodes SE, Murray EA. Differential effects of amygdala, orbital prefrontal cortex, and prelimbic cortex lesions on goal-directed behavior in rhesus macaques. J Neurosci. 2013;33:3380–3389. doi: 10.1523/JNEUROSCI.4374-12.2013.

- Roberts AC, Wallis JD. Inhibitory control and affective processing in the prefrontal cortex: neuropsychological studies in the common marmoset. Cereb Cortex. 2000;10:252–262. doi: 10.1093/cercor/10.3.252.